1. Introduction

Diffuse optical imaging (DOI) is a non-invasive computational image retrieval technique employing near-infrared (NIR) light to explore thick scattering media like biological tissues or fog [

1,

2,

3,

4,

5]. NIR light can penetrate several centimeters into soft tissues like the breast and brain [

6]. Because of this capability, DOI has diverse medical applications, including tumor detection [

7,

8], brain studies [

9,

10,

11,

12], and chemotherapy treatment monitoring [

13,

14,

15]. Its safety and ability to provide detailed tissue information make DOI a promising tool for diagnosing diseases and guiding treatment strategies [

16,

17].

Numerous specialized mathematical and computational algorithms have been developed for reconstructing anomalies within diffuse media with diverse geometrical and physical properties over recent decades [

17,

18,

19,

20]. However, image reconstruction in this domain poses significant challenges, primarily due to the inherent complexity of the inverse problem [

21]. The inverse problem is nonlinear, ill-posed, and ill-conditioned [

17,

22]. Furthermore, while camera measurements of light transmission through diffuse media offer notable imaging contrast, the reconstructed images often exhibit subpar spatial resolution [

23,

24]. Additionally, many classical methods rely on assumptions of complete measurements or strong linearization, usually based on precisely known boundary conditions, a scenario seldom achievable in practical settings. This limitation arises from the nature of light propagation within diffuse media, where the light takes a convoluted and unpredictable path influenced by multiple scattering events [

25].

In recent years, addressing this challenge has spurred active research into employing various deep neural network (DNN) architectures for image reconstruction in diffuse optical imaging [

13,

26,

27,

28,

29]. These algorithms offer several advantages for image reconstruction in DOI. They excel at learning complex mappings between input (camera measurement) and output (reconstructed anomalies) spaces, allowing for more accurate and robust reconstruction even in the presence of noise and uncertainties [

30,

31,

32]. Additionally, deep neural networks can capture intricate patterns and relationships within the data, enabling enhanced spatial resolution and finer detail in the reconstructed images compared to traditional methods [

16,

26]. Moreover, deep learning approaches have the flexibility to adapt to different imaging scenarios and can be trained on diverse datasets, making them highly versatile for DOI applications across various tissue types and conditions [

13].

However, despite their promise, deep learning methods for DOI image reconstruction have certain limitations. These include the need for large training data, which may be challenging to acquire, especially for rare or specialized imaging scenarios. Additionally, deep neural networks can be computationally intensive, requiring substantial resources for training and inference, which may limit their practical implementation in real-time imaging systems. Moreover, the “black-box” nature of several deep learning models can make it difficult to interpret the reasoning behind their decisions, raising concerns about the reliability and trustworthiness of the reconstructed images in clinical settings [

16,

17,

27,

33].

Some of the above limitations associated with learning-based approaches like DNNs can be mitigated through evolution-based methods such as Genetic Programming (GP) [

34]. Genetic programming is a powerful computational technique inspired by biological evolution that automatically generates tree-like recursive computer programs to solve complex problems [

35]. By mimicking the process of natural selection, GP evolves a population of candidate solutions over successive generations, repeatedly applying genetic operators such as mutation, crossover, and selection to facilitate evolutionary search [

34,

36]. This continual optimization process allows GP to effectively search large solution spaces and discover high-performing solutions. Modern science and medicine increasingly require transparent and interpretable AI models rather than opaque black-box systems. Genetic Programming (GP) naturally produces symbolic expressions, explicit rules, and fully interpretable computational pipelines, offering transparency by design. Unlike deep neural networks—which often depend on post hoc explanation techniques—GP provides built-in interpretability. This capability is especially relevant today, as regulatory and high-stakes domains such as the FDA, EMA, and medical imaging now mandate explainable and auditable AI systems. GP aligns directly with these requirements, making it a strong and compliant alternative to black-box approaches. Moreover, many real-world “AI for science” problems operate in low-data, noisy, or incomplete-data environments, where traditional deep learning struggles. GP excels under these conditions, delivering robust performance with limited samples while enabling flexible, globally optimized, and physically grounded model discovery. Together, these strengths—interpretability, flexibility, global optimization, symbolic discovery, and strong low-data performance—explain why GP is increasingly relevant in modern scientific and medical AI. To summarize, GP presents several advantages. Firstly, because the programs evolved by GP may contain functions tailored to the specific problem domain, the process often demands less training data since it leverages existing domain knowledge rather than constructing models from scratch. This attribute translates into shorter training times, particularly suitable for applications requiring swift deployment [

37]. Furthermore, GP trees are comprehensible to human programmers in certain conditions, offering a distinct advantage in interpretability compared to the often opaque neural networks. Such transparency is crucial, especially in fields like medical imaging, where comprehension and trust in the reconstruction process are paramount [

38]. Additionally, GP’s adaptability in defining and refining the cost function enables customization to the specific requirements and constraints of the task, unlike the more rigid loss functions typical in learning algorithms [

39]. These characteristics allow GP to find utility and relevance in various applications and industries [

37,

38,

40].

GP presents a unique approach to image reconstruction, focusing on evolving executable structures rather than specific parameter values [

37]. GP’s adaptability in representing and evolving executable code enables it to discern complex relationships within image datasets, thereby enhancing reconstruction accuracy. Moreover, GP’s interpretability and transparent modeling approach render it suitable for applications necessitating explainable AI, such as forensic imaging and industrial quality control [

41,

42,

43]. These recent advancements highlight GP’s growing significance in image reconstruction research and its potential for addressing real-world challenges across various domains [

37,

40,

44,

45].

We introduce

Diffuse optical

Imaging using

Genetic

Programming (DI-GP), a novel GP algorithm tailored to efficiently and accurately reconstruct 2D objects in strongly scattering media. Unlike recent machine learning (ML) approaches, which often require extensive computational resources, large datasets, and lengthy training times to achieve desired outcomes, DI-GP focuses on minimizing training duration, reducing dependence on extensive datasets, and effectively transferring domain knowledge to real-world experiments. DI-GP achieves this by directly integrating the principles of the diffusion equation into its evolutionary process, allowing for the development of optimized solutions with greater precision and efficiency [

25].

In addition to the advantages over DNNs outlined earlier, the proposed approach also presents several notable benefits compared to traditional analytical methods. These include expedited inference times and superior performance across multiple qualitative and quantitative metrics, such as a mean squared error of 0.0240 ± 0.0121, a structural similarity index of 0.8108 ± 0.0498, and a Pearson correlation coefficient of 0.7919 ± 0.0866. Furthermore, our algorithm can retrieve objects at imaging depths corresponding to several centimeters of human tissue [

46]. Overall, our findings underscore the potential of DI-GP as a valuable tool for rapid and accurate reconstruction in DOI applications as a proof of concept.

The following sections detail the image reconstruction algorithm, elaborate on the experimental methodology, and present the results obtained from our study.

2. Theory, Methods and Experiments

This section details the methodology of computational imaging in the diffuse regime. We start by discussing the forward and inverse problems associated with this imaging technique. Following this, we explore the methods for simulating light transport and collecting training data for algorithm development. Then, we introduce DI-GP, a novel approach that utilizes Genetic Programming for computational diffuse optical imaging. Finally, we provide a detailed overview of the experimental setup and procedures employed to validate the effectiveness of our proposed methodology.

2.1. Forward and Inverse Problems in Diffuse Optical Imaging

The forward problem in computational diffuse optical imaging involves predicting light distribution within the diffuse media based on known optical properties and boundary conditions. We conducted experiments using a melamine sponge with a thickness of 3 cm, where the transport mean free path (

) is more than 25 times smaller (at λ = 639 nm) than the total thickness of the material. This configuration places the light in the strongly diffuse regime, enabling us to utilize a diffusion approximation to model the propagation of photons within the diffuse medium [

24,

25]. The diffusion equation can be expressed as:

Here, represents the photon flux at a position r, is the absorption coefficient, is the source power density, and is the diffuse coefficient given by . Here, is the reduced scattering coefficient and is given by , where denotes the scattering coefficient and g denotes the anisotropy factor, given by the mean cosine of the scattering angle. The reduced scattering coefficient captures the effective rate of directional randomization in anisotropic scattering media.

When photon propagation is modeled using Equation (1), it follows the steepest descent of the scalar gradient weighted by the diffusivity (dominated by scattering

in strongly diffuse media), accompanied by an additional loss effect due to photon absorption. For highly localized sources, Equation (1) has an analytical solution given by [

25]:

Here, is the photon flux measurement at distance r, is the source amplitude term, and c is the speed of light in the medium.

Solving the forward problem is crucial for comprehending light interactions within diffuse media and serves as the foundation for image reconstruction in diffuse optical imaging [

17,

19,

47]. In computational diffuse optical imaging, the inverse problem revolves around deducing the characteristics of hidden structures within a medium based on measurements obtained from the camera. This problem is particularly pertinent in scenarios where the goal is to reconstruct the shape, contrast, or other relevant parameters of objects embedded within biological tissue or other scattering media [

23,

48].

In scenarios where anomalies are present within an otherwise homogeneous background, the anomaly can be reconstructed by inverting a linearized forward model given by:

Here,

is the measurement obtained at the camera,

is the optical perturbation, and

is the Jacobian matrix which is given by

, and can be obtained from Equation (2) [

25].

Initially, we compute the forward model using Equation (2) to simulate how light travels from the input plane to the object plane. Then, we use simple estimates to mask the propagation from the object plane in the diffuse medium to the measurement matrix. Next, we compare this numerical solution to the actual measurement by checking a cost function. This function helps adjust the shape of the estimated object, refining it through an iterative process of solving Equation (3) with the adjusted guess. Therefore, computational image reconstruction in diffuse optical imaging is typically framed as an optimization task aimed at minimizing the objective function given by:

Here,

represents the regularization term, frequently derived from specific assumptions regarding the statistical properties of optical images [

16,

29,

49]. Similarly, in this article, we propose a GP algorithm that retrieves anomalies (

) inside a strongly diffuse medium by optimizing a modified Equation (4) given by:

Here,

is the retrieved image containing the anomaly distribution,

is the predicted outcome for each individual of the GP algorithm and

is the thresholding mask, which works by minimizing Equation (5) only over pixels where the signal-to-noise ratio in the recorded data

exceeds a certain threshold [

23]. The binary mask defined from a per-pixel signal-to-noise ratio map

, where

denotes the standard deviation of background noise estimated from reference measurements acquired without the anomaly. Pixels satisfying

are retained, whereas the remaining pixels are excluded from the minimization to prevent noise-dominated measurements from influencing the solution. In this work,

denotes the threshold SNR which is set at

.

The details of the algorithm are presented in

Section 2.3. However, before delving into the image reconstruction algorithm, we look at the light transport simulations and the dataset used to evolve the GP algorithm.

2.2. Light Transport Simulations and Training Data

Applying evolution-based methods for DOI reconstruction requires physical knowledge about the background sample and the embedded anomalies, contributing to the contrast observed in DOI measurements. This necessitates the availability of a dataset. Although GP algorithms require datasets that are several orders of magnitude smaller than DNNs, acquiring such datasets in the context of DOI remains challenging [

13,

37,

40]. Therefore, simulating light propagation through digital phantoms offers a viable approach to generating datasets for algorithm training. Furthermore, light transport simulations offer another advantage: they allow for the assessment of experimental design considerations through computer simulations, helping to avoid the costly and wasteful construction of clinical prototype systems that may possess inherent design flaws [

8,

18].

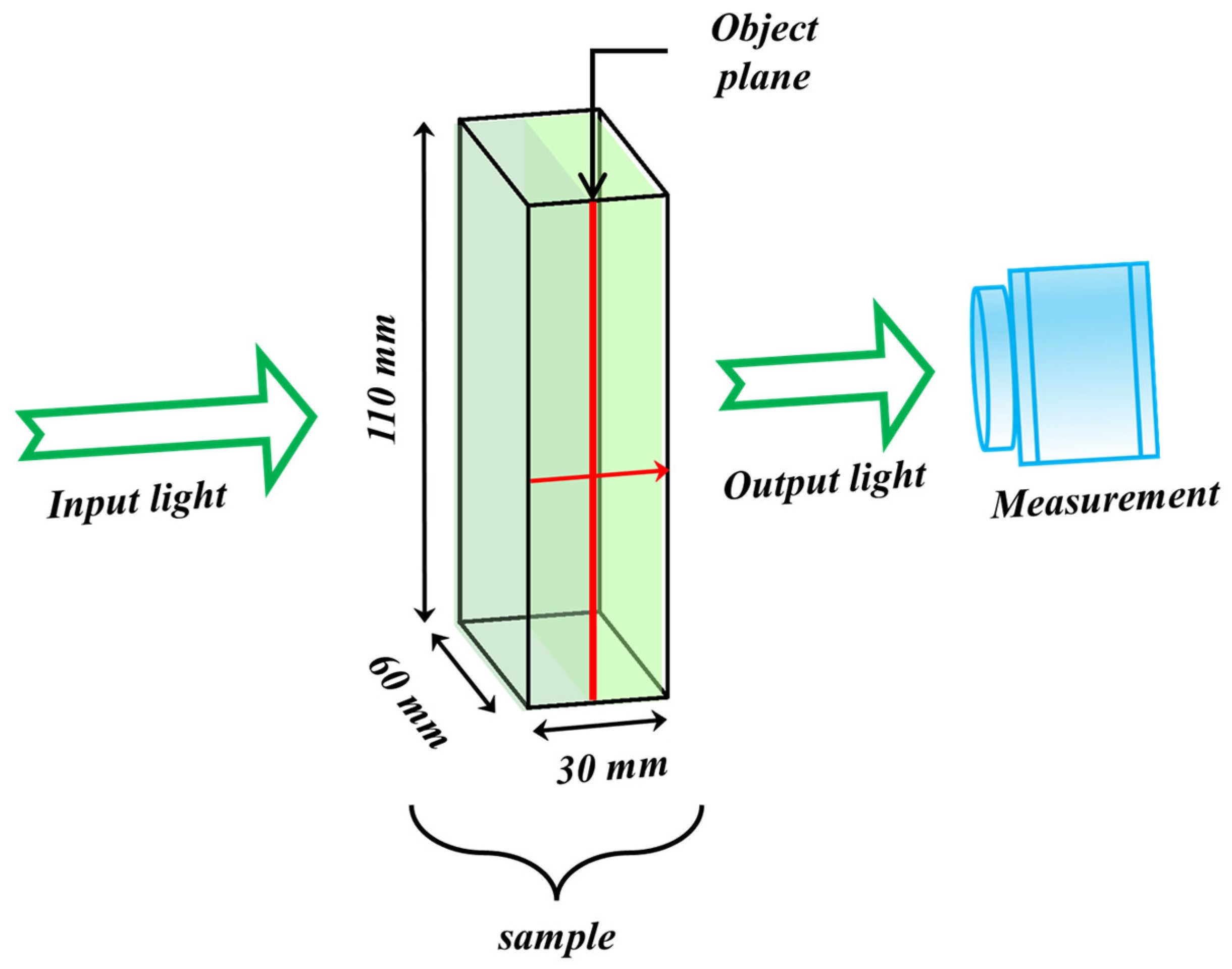

In this study, simulations are performed using a cuboid volume measuring 110 × 60 × 30

(height × width × thickness), as depicted in

Figure 1. The volumetric mesh is generated using a MATLAB-based meshing tool called iso2mesh [

50]. The sample’s imaging thickness is 30 mm, with absorption and scattering coefficients of 0.0035

and 0.8090

, respectively. These parameters were derived by fitting experimental measurements to the diffusion equation [

23,

51,

52].

A continuous wave Gaussian light source operating at a wavelength of 639 nm and a spot size of radius 1.5 cm is employed to illuminate the sample, with flux measurements taken at the opposite boundary. Anomalies are introduced at the central depth of the sample (15 mm), comprising hand-drawn shapes placed within the field of view of the imaging system. These anomalies are characterized by absorption coefficients ranging from 0.5 to 0.72 , representing the contrast between the anomalies and background samples in the experimental material.

The light transport simulations were executed using MCX [

53,

54]. This MATLAB-based software employs Monte Carlo algorithms to model light transport in diffuse media. The simulations were conducted on 30 distinct meshes, each featuring a unique hand-drawn anomaly shape. For each shape, both the shape itself and the corresponding measurement data were saved, resulting in a dataset comprising 30 measurement vectors as inputs and the corresponding anomaly distributions as ground truths. Additionally, the Jacobian at the imaging plane and the reference measurements were obtained by conducting simulations without the anomalies. A 2% Gaussian noise is added to the measurements to simulate any experimental noise [

8,

55]. The dataset is utilized to train the DI-GP, which evolves an optimal solution for retrieving hidden anomalies within diffuse media. The algorithm is described in the next section.

2.3. Training the DI-GP Algorithm: Diffuse Optical Imaging Using Genetic Programming

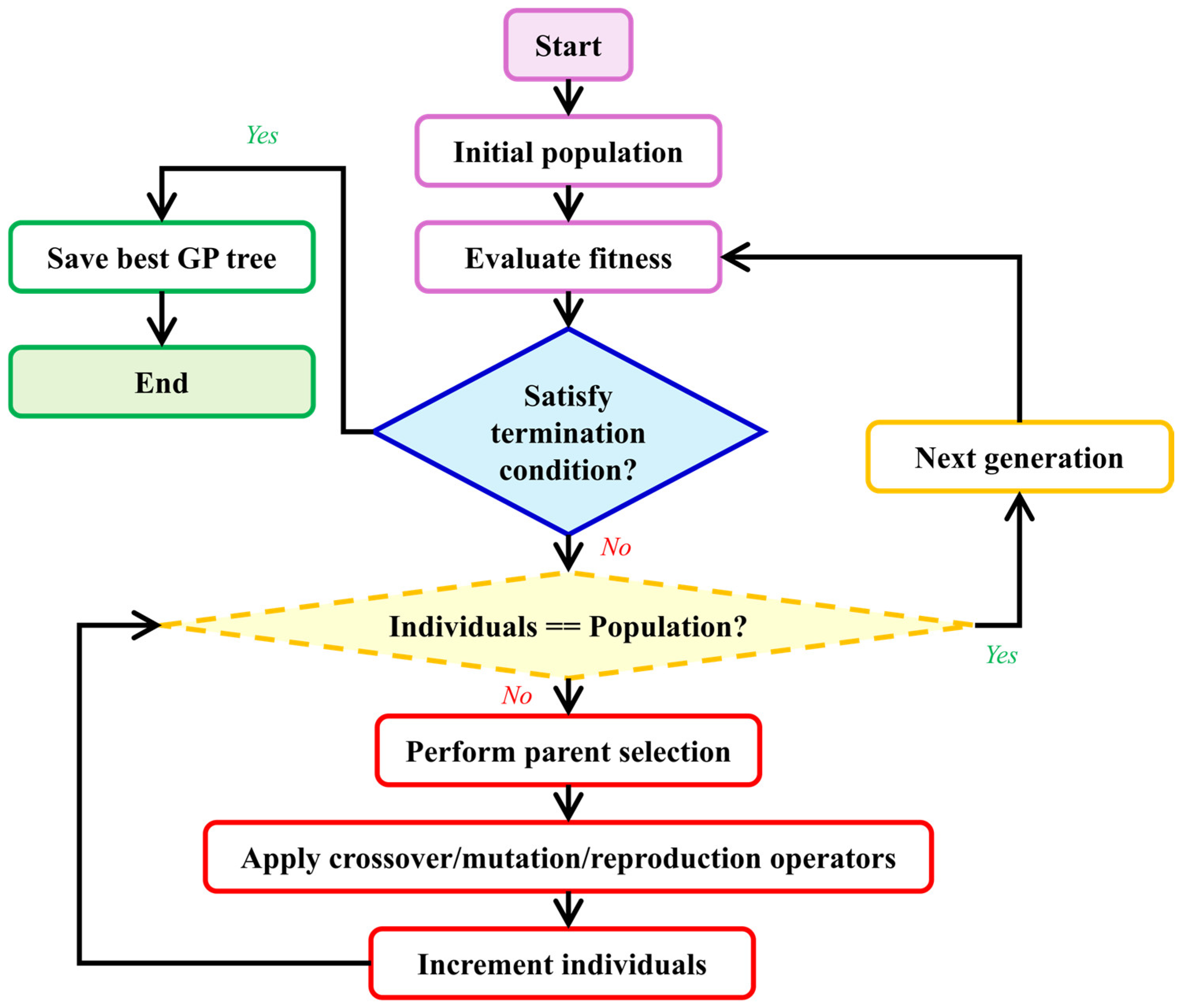

Genetic Programming is a nature-inspired hyper-heuristic search algorithm that generates and evolves computer programs representing solutions to a given problem. GP begins with a set of basic building blocks, a high-level objective, and a method for evaluating solution quality. As illustrated in

Figure 2, it automatically generates a group (or population) of random initial solutions (or individuals). Individuals are computer programs structured as trees. This structure allows for a hierarchical organization of operations and data, resembling branches and leaves, where each node represents a computational step or decision point. Individuals are evaluated using a predefined fitness function specifically tailored to the task at hand [

35,

56]. Better individuals are given more favorable fitness scores.

GP then constructs the next population of individuals (or the next generation) by using a method analogous to natural selection: better individuals (i.e., those with better fitness scores) are selected with a higher probability for the application of genetic operators, such as crossover, mutation, and gene duplication [

35]. Through fitness-based selections and genetic operators applied over time, genetic algorithms iteratively optimize solutions during simulated evolution.

Each genetic operator yields one or more individuals, which are inserted into the population. In contrast, individuals who were not selected are discarded, adhering to the survival of the fittest principle. The process then reiterates until one of the termination conditions is met, typically after a fixed number of generations has passed. Thus, over successive generations, through fitness-based selections and genetic operators applied repeatedly over time, genetic algorithms refine and optimize solutions to the specified problem. Further details regarding the image reconstruction are provided in the following sub-sections.

2.3.1. Image Reconstruction Using Genetic Programming and Structure of the GP Individuals

In this study, we utilize a Koza-style GP [

34] within the DEAP Python environment [

57]. In this framework, each GP individual in our algorithm embodies a function of the form

, where it processes the inputs represent a neighborhood of

(N = 5 in our case) pixels from the measurement image (

) and produces a single pixel at a corresponding location in the predicted image (

). This is repeated for all pixels in

where the

environment is fully contained in the image, while pixels with inadequate environments, in the margins or corners, are skipped. Consequently, the image is reconstructed pixel by pixel, leveraging local pixel data of the measurements. The pseudocode in

Table 1 represents the generation of a predicted image where

M is the input image,

GP_individual is the individual currently being evaluated,

N is the width of the environment (the environment is

pixels).

GP individuals, serving as candidate solutions for reconstructing a pixel using a local NxN environment of pixels in M, embody functions of the form

as stated above. Each GP individual (or GP tree) resembles a recursive LISP expression, consisting of inner functions that accept arguments and terminals which serve as leaf nodes without any arguments [

58]. Trees are initially constructed at random, adhering to specific depth constraints (see below). The leaf nodes are either input pixels, marked as

,

, …,

, or random constants in the range [0, 1]. Inner tree-nodes are functions with varying arities, with each being applied to the appropriate number of inputs, which can be outputs of other tree-nodes or values from terminals. All functions and terminals output numeric values. The final values GP individuals return are clipped to the range [0, 1].

Table 2 provides a comprehensive list of inner functions and terminals utilized by GP.

2.3.2. Parameters for Evolution

The algorithm’s parameters were empirically defined within specific ranges to optimize performance. The population size, representing the number of individuals in each generation, varied between 150 and 250, which is relatively small but still provides enough diversity. Generation count, indicating the number of iterations or generations, ranged from 20 to 40 since evolution typically converged to good solutions relatively fast. The crossover probability was set at 0.6 and the mutation probability at 0.4, which is relatively high for mutation, to avoid local optima. Tree depth, specifying the maximum depth of GP individuals, ranged from 3 to 5, balancing complexity and computational efficiency. Lastly, we used tournament selection [

59], which is currently one of the most widely used methods for selection [

60], with a tournament size of 4.

The genetic programming hyperparameters were determined empirically across several experiments, following standard practice in tree-based genetic programming and symbolic regression, with the goal of stable convergence and controlled expression growth. These values regulate the evolutionary search dynamics, including exploration intensity, selection pressure, and bloat control, and do not modify the underlying inverse problem, which is defined by the forward model and the physics-guided fitness functions. Consequently, reasonable variations in these hyperparameters mainly affect convergence rate, computational cost, and the complexity of the evolved expressions, rather than the physical objective being optimized [

61,

62].

2.3.3. Evaluation of the GP Individuals

The evaluation of GP individuals begins with reference-subtracted simulated measurements, serving as inputs for the GP optimization process, referred to as evolution or training. During this process, explained in the previous subsections, the model undergoes optimization through selection, where various candidate solutions compete over multiple generations, and the best-performing individuals are selected for further refinement. This iterative process enhances the model’s accuracy and robustness. Once the best individual is identified, it is tested on real measurement data to validate its performance. This real-world evaluation ensures that the model’s predictions are accurate and reliable. One important thing to note is that the algorithm leverages ground truth data solely during the training phase. This data is instrumental in optimizing the solution by assessing predictions using the Mean Squared Error (MSE) loss [

26], which aids in identifying the distribution of hidden anomalies, and the Dice loss, which enhances edge detection capabilities [

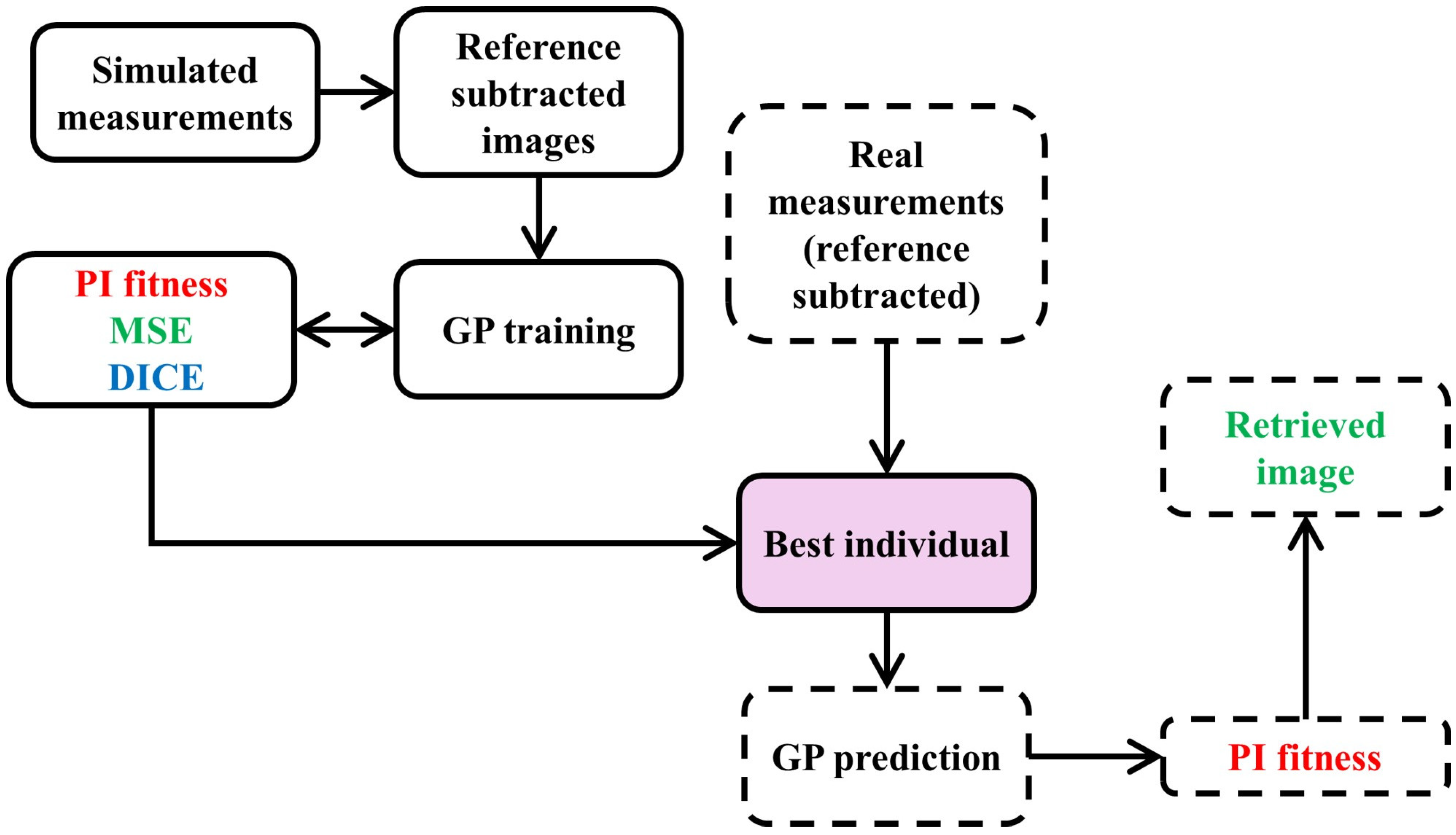

63]. The schematic of the DI-GP workflow to evaluate the best individual is shown in

Figure 3. All simulations and genetic programming experiments were executed on a workstation-class laptop, namely an HP OMEN by HP Laptop 16 c0004nj, running Microsoft Windows 11 64-bit, equipped with an AMD Ryzen 7 5800H central processing unit with 8 cores and 16 logical processors at a base frequency of 3.2 GHz, and 32 GB of random-access memory. Under this configuration, the full genetic programming evolution required approximately 15 min of wall clock time, and inference for a single reconstruction required less than 1 s.

As can be seen in

Figure 3, the fitness function plays a significant role in determining the best individual. It quantifies the accuracy and effectiveness of an individual model in solving the problem at hand by incorporating various performance metrics to provide a comprehensive assessment of the model’s quality. By assigning a fitness score to each candidate, the fitness function guides the selection process, ensuring that the most promising solutions are identified and iteratively refined, ultimately leading to the optimal solution. The fitness functions used in DI-GP are described in the next section.

2.3.4. Fitness Function Used by DI-GP

The cost of the fitness function used by DI-GP to retrieve hidden anomalies in diffuse media is given by:

Here,

is the overall cost of evaluating an optimal solution,

is the cost of the fitness function that is used to optimize Equation (5) and is given by:

where

is the measurement obtained by the DI-GP prediction and

is the measurement obtained at the camera. By constantly updating

using the latest

values predicted by the GP function, we incorporate domain knowledge regarding diffuse optical imaging into the algorithm.

is the MSE loss given by:

where N is the total number of samples, and

and

are predictions and the labels, respectively. Similarly,

is the Dice loss given by:

,

and

are constant weights used to vary the influence of each fitness function. For the training process, the optimal values for

,

and

in this experiment are 0.7, 0.25, and 0.15, respectively. However, when the algorithm is tested using unseen experimental data,

and

are 0, and

becomes 1, reducing Equation (6) to

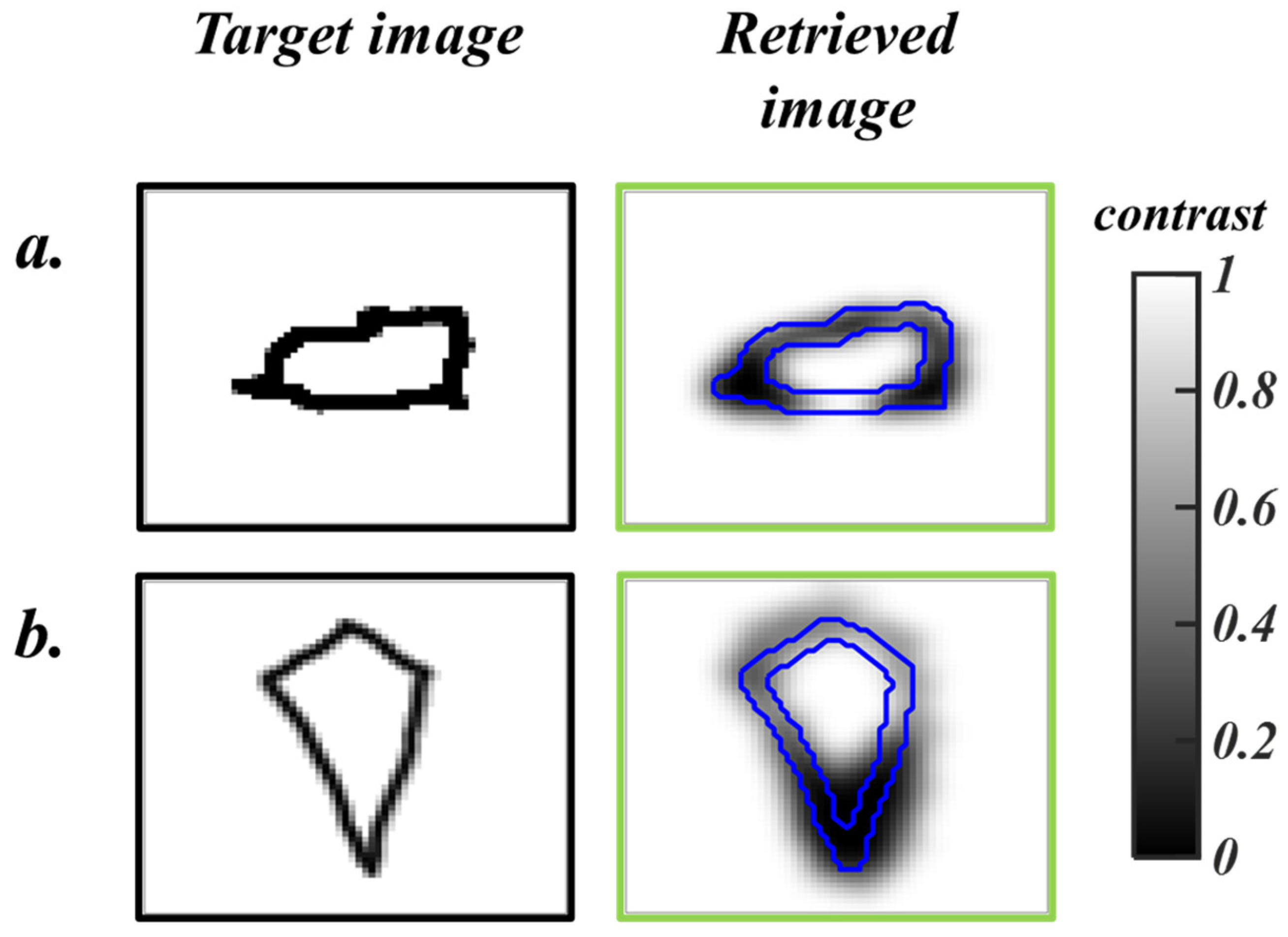

. When subjected to unseen simulated data, the DI-GP algorithm yields reconstructions with a mean squared error of 0.029 ± 0.0142. The reconstructed images are shown in

Figure 4.

The next sections detail the experimental setup used to validate the DI-GP algorithm and discuss the results.

2.4. Experimental Setup to Validate DI-GP

This study aims to computationally reconstruct anomalies embedded within the diffuse media using continuous wave (CW) measurements. To achieve this, we build an experimental setup, as illustrated in

Figure 5. We use a CW laser source (THORLABS PL252) with a wavelength of 639 nm and an output power of 4.5 mW. The beam is expanded to a spot with a radius of 15 mm using a beam expander, after which it is incident on the melamine foam of 3 cm thickness, containing the anomaly at a depth of 1.5 cm.

As discussed in

Section 2.2, the melamine foam is a highly diffuse medium where the measured optical properties correspond to a transport mean free path of 1230 μm, which is more than one order of magnitude smaller than the sample thickness

transport mean free paths. The hidden objects are placed at the central depth of the sample and are random shapes created using black tape.

The light passes through the material, with a portion absorbed by the concealed anomaly. Subsequently, the transmitted light is captured by a CMOS camera (uEYE UI-2210-C), which has a resolution of 640 × 480 pixels and a pixel size of 10 µm. Throughout the image acquisition process, we selectively utilize a resolution of 300 × 300 pixels, enabling the collection of transmitted light from a single source. A noteworthy aspect of these measurement images is the inability to visually discern the presence or absence of an object embedded inside the medium.

The acquired measurements are subsequently resized to 64 × 64 pixels and fed into the DI-GP algorithm, which reconstructs the location and distribution of the hidden object with precision and efficiency. To gauge the efficacy, the performance of our proposed algorithm is compared to that of the conjugate gradient descent algorithm (CGD), a well-established method for image reconstruction and widely used for comparison [

13,

27,

28,

29]. The results are detailed in the next section.

3. Results and Discussion

Figure 6 showcases the reconstructions obtained through the DI-GP algorithm when tested with experimental measurements. By incorporating knowledge specific to diffuse optical imaging into the fitness evaluation process, the DI-GP algorithm benefits from insights derived from the underlying principles of this field. This comprehensive evaluation approach enhances the robustness and reliability of the evolved solutions, as they are evaluated based on both empirical performance metrics and adherence to known principles of diffuse optical imaging. As depicted in

Figure 6, the experimental sample contains a variety of hidden anomalies, including a plus sign, two circles, an arrow, a diagonal line, and a trapezoid. The DI GP algorithm recovers the dominant location and contrast patterns of the concealed objects without prior information regarding their shapes. Fine features and disconnected components can be attenuated when their measurement signatures approach the effective resolution and noise limits of the experimental configuration. This effect is visible in Sample 4, where one of the two circles exhibits reduced recovery, and in Sample 5, where the thin arrow shaft is suppressed while the higher contrast arrow head is retained. Notably, the inference time for this task is less than 1 s.

The effectiveness of the proposed DI-GP algorithm lies in its optimization process, which minimizes the objective function described in Equation (5). Furthermore, by leveraging the fitness function detailed in Equation (6), with parameters and set to 0 for experimental evaluation, DI-GP achieves optimal solutions for reconstructing hidden anomalies within the diffuse media. This approach allows the algorithm to adaptively evolve and refine its predictions, leading to accurate reconstructions with high fidelity.

Notably, DI-GP requires only a small dataset for training as it incorporates domain-specific knowledge about DOI, significantly reducing the computational memory and training time needed. This efficient utilization of data resources streamlines the training process. It enhances the algorithm’s scalability and applicability to diverse imaging scenarios.

The performance evaluation of the proposed DI-GP algorithm involves using several key performance metrics to assess the quality and accuracy of the reconstructed images [

13,

27]. These metrics include Mean Squared Error (MSE), Structural Similarity Index (SSIM), and Pearson Correlation Coefficient (PCC). MSE measures the average squared difference between the pixel values of the reconstructed image and the ground truth image. SSIM quantifies the similarity between two images by comparing the luminance, contrast, and structure. It ranges from −1 to 1, where 1 indicates perfect similarity. Finally, the PCC measures the linear correlation between the pixel values of the reconstructed and ground truth images. The performance achieved by the DI-GP algorithm is 0.0240 ± 0.0121 in terms of MSE, 0.8108 ± 0.0498 in terms of SSIM, and 0.7919 ± 0.0866 in terms of PCC. The low MSE value indicates minimal average reconstruction error, suggesting that the DI-GP algorithm accurately captures the details of the hidden anomalies. The high SSIM and PCC values also reflect strong similarity in contrast, structure, and directional correlation between the reconstructed and ground truth images, affirming the algorithm’s effectiveness in reproducing the true anomalies. The performance of the DI-GP algorithm is compared to that of an Analytical (CGD), and the results are detailed in

Figure 7.

The performance enhancement obtained by DI-GP with respect to the analytical solution is given by an improvement ratio defined as:

Here, is the improvement ratio. and are the performance evaluation values obtained using the performance metrics mentioned above for the DI-GP and the CGD approaches, respectively.

The significant improvement ratios obtained for MSE, SSIM, and PCC metrics, namely 0.5138, 1.2015, and 1.2198, respectively, underscore the superior performance of the DI-GP algorithm compared to the analytical solution. These ratios indicate that DI-GP achieves notably lower errors and higher structural similarity and pixel-wise correlation with ground truth images, reflecting its ability to capture better the true characteristics of the hidden anomalies within the diffuse media.

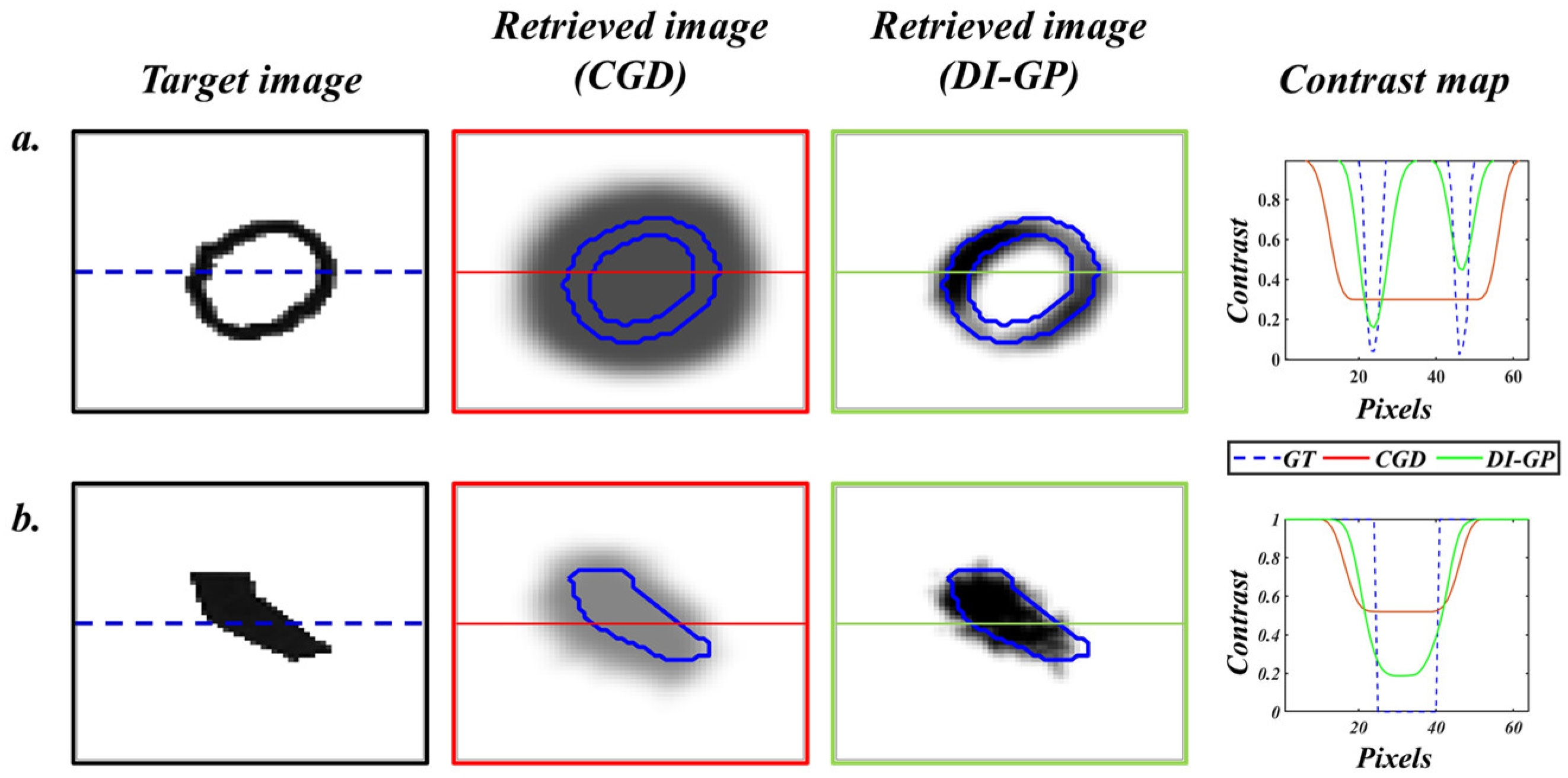

Figure 8 compares the reconstructions of the DI-GP and the analytical solution.

While the performance metrics demonstrate that DI GP outperforms conventional analytical reconstructions, it is important to emphasize that deep learning has also delivered impressive advances in diffuse optical imaging when large and diverse training datasets are available. The present work is positioned to complement, rather than compete with, those data-rich approaches by addressing a regime that is frequently encountered in practice, namely, limited training data. Consequently, a quantitative deep learning comparison is not included because the available dataset comprises only 30 samples, which is not sufficient to train, validate, and tune deep neural networks in a manner that would be both reliable and fair. Instead, the manuscript demonstrates that high reconstruction quality can be achieved in small-data settings using a physics-guided genetic programming framework that yields an explicit and interpretable expression. This transparency enables direct examination of the learned reconstruction logic. It supports confidence in deployment, while maintaining competitive performance against established analytical methods in scenarios where data are scarce, and interpretability is a priority.

Figure 9 presents a specific GP individual evolved by the DI-GP algorithm, depicted as a tree-structured expression that transforms DOI measurements into clear reconstructions of hidden objects. This evolved expression can be interpreted as a nonlinear contrast-enhancing filter emphasizing sharp transitions and suppressing background noise. It operates by examining local pixel neighborhoods and applying a sequence of adaptive transformations, allowing it to isolate regions likely to correspond to embedded objects within the diffuse media while disregarding more homogeneous background areas.

The GP individual begins with reference-subtracted measurements as inputs, labeled “Pixel #,” corresponding to intensities at different positions. Numeric constants like 0.5 or 0.75 serve as thresholds or scaling factors. These form the tree’s leaf nodes, supplying the raw data needed to generate the reconstruction. Operators, shown as pink rectangles in

Figure 9, perform key transformations. Initially, functions like “min3” compute minimum values across several groups of pixels to identify the darkest regions within a local patch. These are combined with inverted signals from key pixels via the “Compl” (Complement) operator to form a dynamic threshold. This result is then inverted again to amplify areas that stand out from their surroundings. Operators such as “sqr” (Square) emphasize stronger signals and suppress weaker ones, “pDiv” (Protected Division) normalizes while avoiding divide-by-zero errors, and “max9” amplifies key variations. Additional arithmetic functions mix, scale, or reshape pixel values.

At the root of the tree, the “if.then.else” node manages adaptive branching, applying different operations based on internal conditions. This design introduces dynamic gating that fine-tunes the reconstruction depending on signal levels. Together, these operators define a nonlinear mapping from measurements to reconstructed pixels, enabling the function to scan and integrate signals across the image. This process untangles scattering effects, converting noisy input into a clearer, high-contrast output.

Rather than acting as a generic filter, the evolved expression encodes a specific heuristic that adaptively responds to spatial intensity patterns typical of hidden anomalies. It behaves similarly to a learned segmentation operator, identifying and boosting subtle signal variations based on their spatial configuration. This level of detailed, functional interpretability reveals how each operator contributes to reconstruction, offering a clear explanation of how predictions are computed, often missing in black-box models. While the evolved expressions can be complex, and further validation on heterogeneous samples is needed, this approach paves the way for DI-GP’s use in clinical imaging. Overall, our study sets an optimistic foundation for the future development of GP in computational diffuse optical imaging.

However, several constraints of the present study limit the current scope and motivate the next research steps. The demonstrated results focus on two-dimensional reconstructions under a fixed acquisition geometry and within the range of optical properties represented in the simulations and phantom experiments. The modelling framework relies on a diffusion-based description of photon transport, which is well suited to highly scattering media but can lose accuracy when diffusion assumptions are weakened, including in strongly heterogeneous tissues. Reconstruction fidelity can also be affected by forward-model mismatch arising from variability in optical parameters, source and detector alignment, and measurement noise statistics relative to those used during training. These considerations motivate future work that expands training and validation across broader distributions of optical properties, geometries, and noise conditions, and that evaluates performance in heterogeneous and anatomically realistic phantoms. Robustness to mismatch can be strengthened through physics-guided fitness designs that incorporate explicit noise models and through data generation protocols that intentionally span experimentally relevant variability. Extension to three-dimensional volumetric imaging is followed by formulating the forward problem on a three-dimensional domain using diffusion-based or Monte Carlo-based light transport, yielding volumetric sensitivities, namely Jacobian operators, that map voxel-wise absorption perturbations to measurement changes. In this regime, genetic programming can be trained to estimate voxel-wise contrast using local volumetric neighborhoods, together with measurement features acquired under multiple illuminations, multiple source-detector separations, or multiple views. The evolutionary procedure remains unchanged, whereas feature construction, target representation, and physics-guided fitness are defined on a volumetric reconstruction grid. The dominant practical challenge is the computational scaling of volumetric forward evaluations and sensitivity operations, which motivates parallel implementations and efficient reduced-order representations.