4.1. Dataset

VisDrone2019: This dataset comprises 10,209 aerial images captured by UAVs under diverse weather conditions and from various viewing angles. It covers ten predefined categories: pedestrian, people, bicycle, car, van, truck, tricycle, awning-tricycle, bus, and motor. The partitioning into training, validation, and test sets is presented in Table 1.

UAVVaste [33]: This is a low-altitude, small-object dataset for garbage detection. It contains 772 UAV aerial images with a total of 3,718 annotated boxes, all labeled as a single category: “rubbish”.

UAVDT [34]: This is a challenging aerial dataset characterized by complex scenarios, comprising 40,735 images with a primary focus on vehicle targets. In this study, we use it as a supplementary benchmark to validate the generalization capability of SCA-DEIM in traffic surveillance scenarios.

To analyze the data distribution of VisDrone2019, we visualized the proportions of objects by class and by size. According to Figure 7, small objects constitute the majority of the dataset, accounting for 62.4% of all instances. Within individual classes, small objects make up 84.1% of pedestrians, 88.6% of people, and 76.3% of motorcycles, reflecting that distant targets appear small from a UAV perspective. Medium-sized objects account for 32.7% of all instances and are concentrated in vehicle-related classes such as cars (43.5%), vans (46.2%), trucks (52.5%), and buses (55.8%). Large objects are relatively scarce, comprising only 4.9% of all instances, although they make up a higher proportion of the truck (16.5%) and bus (17.1%) categories.
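A size breakdown like the one above can be computed directly from box annotations. The sketch below is a minimal illustration that assumes COCO-style area thresholds (small: area below 32², medium: below 96², large: otherwise), which the text does not state explicitly. The function names and toy boxes are hypothetical.

```python
from collections import Counter, defaultdict

# Assumed COCO-style area thresholds in pixels^2 (not stated in the text).
SMALL_MAX_AREA = 32 ** 2
MEDIUM_MAX_AREA = 96 ** 2


def size_bucket(width: float, height: float) -> str:
    """Classify a box as 'small', 'medium', or 'large' by its pixel area."""
    area = width * height
    if area < SMALL_MAX_AREA:
        return "small"
    if area < MEDIUM_MAX_AREA:
        return "medium"
    return "large"


def size_distribution(boxes):
    """Share of each size bucket, overall and within each class.

    boxes: iterable of (class_name, width, height) tuples.
    """
    overall = Counter()
    per_class = defaultdict(Counter)
    for cls, w, h in boxes:
        bucket = size_bucket(w, h)
        overall[bucket] += 1
        per_class[cls][bucket] += 1
    total = sum(overall.values())
    overall_share = {b: n / total for b, n in overall.items()}
    per_class_share = {
        cls: {b: n / sum(counts.values()) for b, n in counts.items()}
        for cls, counts in per_class.items()
    }
    return overall_share, per_class_share


# Toy annotations (hypothetical), not VisDrone data.
overall, per_class = size_distribution(
    [("pedestrian", 10, 20), ("pedestrian", 20, 40), ("car", 40, 30), ("bus", 120, 90)]
)
```

The per-class shares answer a different question from the overall shares. For example, "84.1% of pedestrians are small" is a within-class share, whereas "62.4% of all instances are small" is an overall share.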

This dataset is characterized by several challenging attributes: an extremely high proportion of small objects, complex background scenes, severe occlusions, and a high degree of overlap among different object classes. These attributes render the dataset particularly suitable for research focused on small object detection.

4.2. Evaluation Metrics

To assess detection performance, we employ the following metrics: Average Precision (AP), $AP_{50}$, Average Precision for small, medium, and large objects ($AP_S$, $AP_M$, $AP_L$), Average Recall (AR), detection speed (FPS), parameter count, and computational complexity (GFLOPs). These metrics are defined below.

The accuracy and completeness of the detection outcomes are quantified by Precision and Recall, respectively, which are formulated as:

$$
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}
$$

Here, $TP$ (True Positives) denotes the number of samples correctly predicted as positive, $FP$ (False Positives) denotes the number of samples incorrectly predicted as positive (i.e., predicted as positive but actually negative), and $FN$ (False Negatives) denotes the number of samples incorrectly predicted as negative (i.e., predicted as negative but actually positive). Mean Average Precision (mAP) represents the mean of the AP scores computed over all object categories and serves as an indicator of a model's overall detection capability:

$$
\mathrm{mAP} = \frac{1}{N} \sum_{n=1}^{N} AP_n
$$

Here, $N$ is the number of categories and $AP_n$ is the Average Precision for the $n$-th class.
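The sketch below implements these definitions. It computes Precision and Recall from counts, computes a per-class AP at a single IoU threshold, and averages the per-class values to obtain mAP. For AP it assumes all-point interpolation of the precision-recall curve, which is a VOC-style choice. The COCO-style protocol instead also averages over IoU thresholds from 0.50 to 0.95 and interpolates at 101 recall points. All helper names are hypothetical.

```python
import numpy as np


def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Precision = TP / (TP + FP); Recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    return precision, recall


def average_precision(scores, is_tp, num_gt: int) -> float:
    """All-point interpolated AP for one class at a fixed IoU threshold.

    scores: detection confidences; is_tp: 1 if a detection was matched to a
    ground-truth box, else 0; num_gt: number of ground-truth boxes.
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    hits = np.asarray(is_tp, dtype=float)[order]
    cum_tp = np.cumsum(hits)
    cum_fp = np.cumsum(1.0 - hits)
    recall = cum_tp / max(num_gt, 1)
    precision = cum_tp / (cum_tp + cum_fp)
    # Pad the curve, then make precision non-increasing in recall (envelope).
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum rectangle areas wherever recall increases.
    steps = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))


def mean_average_precision(ap_per_class) -> float:
    """mAP = (1 / N) * sum of AP_n over the N classes."""
    return float(np.mean(ap_per_class))


# Toy example: three detections of one class, two ground-truth boxes.
ap = average_precision([0.9, 0.8, 0.7], [1, 0, 1], num_gt=2)
```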

$AP_{50}$ denotes the AP when the Intersection over Union (IoU) threshold is set to 0.5. $AP_S$, $AP_M$, and $AP_L$ represent the AP for small, medium, and large objects, respectively. Average Recall (AR) represents the average of recall values across different IoU thresholds, defined as:

$$
AR = \frac{1}{K} \sum_{k=1}^{K} \mathrm{Recall}_k
$$

where $K$ is the number of different IoU thresholds selected for the calculation and $\mathrm{Recall}_k$ is the recall at the $k$-th threshold.
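The sketch below makes the AR definition concrete. It computes recall at each of K IoU thresholds and averages the results. It rests on several assumptions: K = 10 thresholds evenly spaced from 0.50 to 0.95 (the COCO convention), greedy one-to-one matching of score-sorted detections to ground-truth boxes, and at least one ground-truth box in the image. The function names are hypothetical.

```python
import numpy as np


def box_iou(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise IoU between (M, 4) and (N, 4) boxes in (x1, y1, x2, y2) format."""
    x1 = np.maximum(a[:, None, 0], b[None, :, 0])
    y1 = np.maximum(a[:, None, 1], b[None, :, 1])
    x2 = np.minimum(a[:, None, 2], b[None, :, 2])
    y2 = np.minimum(a[:, None, 3], b[None, :, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_a = (a[:, 2] - a[:, 0]) * (a[:, 3] - a[:, 1])
    area_b = (b[:, 2] - b[:, 0]) * (b[:, 3] - b[:, 1])
    return inter / (area_a[:, None] + area_b[None, :] - inter)


def recall_at(dets, scores, gts, thr: float) -> float:
    """Recall at one IoU threshold with greedy one-to-one matching by score."""
    iou = box_iou(dets, gts)
    matched = np.zeros(len(gts), dtype=bool)
    for d in np.argsort(-scores):
        # Unmatched ground truths that this detection overlaps enough.
        cands = np.where(~matched & (iou[d] >= thr))[0]
        if cands.size > 0:
            matched[cands[np.argmax(iou[d, cands])]] = True
    return float(matched.mean())


def average_recall(dets, scores, gts, num_thresholds: int = 10) -> float:
    """AR = (1 / K) * sum_k Recall_k over K IoU thresholds in [0.50, 0.95]."""
    thresholds = np.linspace(0.5, 0.95, num_thresholds)
    return float(np.mean([recall_at(dets, scores, gts, t) for t in thresholds]))
```

A detection with IoU 0.78 against its ground truth counts as a hit only for thresholds up to 0.75. It therefore contributes a recall of 1 at six of the ten thresholds, and AR is 0.6.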

4.4. Comparison of Different Attentions in the Backbone Network Feature Extraction Stage

To evaluate the impact of different attention mechanisms on feature extraction, we conducted a comparative analysis of four modules: ECA, CBAM, SCSA, and our proposed ASCSA. Each module was integrated into all four stages (Stages 1–4) of the feature extraction backbone. Building on this, we further compared ASCSA integrated into all backbone stages (Stages 1–4) against ASCSA integrated only into the high-level semantic feature extraction stages (Stages 3 and 4). The experimental results are presented in Table 3.

The experimental results indicate that the ESE attention mechanism [35] employed in the baseline outperforms ECA and CBAM on this task. A likely reason is that ECA models only channel attention, without any spatial modeling, whereas CBAM applies channel and spatial attention in series. This serial configuration may fragment information and impede the extraction of fine-grained details, resulting in suboptimal small object detection.

The results also show that ASCSA performs marginally better than SCSA. We attribute this to ASCSA's ability, in this task, to maintain non-linear expressiveness while providing smoother gradient variations. This property enhances its feature modeling, particularly for small objects and against complex backgrounds.

Comparing the two integration strategies, integrating ASCSA into all stages (Stages 1–4) yields a slight improvement over integrating it into only Stages 3 and 4, but it nearly doubles the total parameter count. This implies a significant increase in both computational and storage overhead. We hypothesize that the primary objective of the low-level feature extraction stages (Stages 1 and 2) is to preserve feature integrity and local stability. Elaborate attention mechanisms such as SCSA and ASCSA may disrupt these fundamental patterns. Furthermore, the substantial increase in parameters is out of proportion to the modest accuracy gain.
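The structural difference discussed above can be illustrated on a single (C, H, W) feature map: ECA computes channel-only attention, whereas CBAM applies channel attention and then spatial attention in series. The NumPy sketch below is a simplified illustration, not the implementation used in these experiments. It takes the weights as explicit arguments. Its CBAM-style spatial gate also differs from the original design: it uses a per-pixel linear combination of the channel-mean and channel-max maps instead of CBAM's 7×7 convolution.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def eca(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """ECA-style channel attention on a (C, H, W) map.

    Global average pooling yields one descriptor per channel, and a 1D
    convolution over neighbouring channels produces the channel weights.
    No spatial attention map is computed.
    """
    desc = x.mean(axis=(1, 2))                        # (C,)
    logits = np.convolve(desc, kernel, mode="same")   # local cross-channel interaction
    return x * sigmoid(logits)[:, None, None]


def cbam_like(x: np.ndarray, w1: np.ndarray, w2: np.ndarray,
              spatial_w: np.ndarray) -> np.ndarray:
    """Serial channel-then-spatial attention in the spirit of CBAM.

    w1: (C_r, C) and w2: (C, C_r) form the shared channel MLP. spatial_w: (2,)
    weights that mix the channel-mean and channel-max maps (a simplification
    of CBAM's 7x7 convolution).
    """
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)

    # Step 1: channel gate from average- and max-pooled descriptors.
    ch = sigmoid(mlp(x.mean(axis=(1, 2))) + mlp(x.max(axis=(1, 2))))
    x = x * ch[:, None, None]
    # Step 2: spatial gate computed only from the already channel-reweighted map.
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])        # (2, H, W)
    sp = sigmoid(np.tensordot(spatial_w, pooled, axes=1))     # (H, W)
    return x * sp[None, :, :]
```

In `eca`, every position in a channel is scaled by the same factor. In `cbam_like`, the spatial gate sees only the output of the channel gate, which is the serial dependency referred to above.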

We conclude that applying ASCSA to all stages does not yield a desirable balance between accuracy improvement and computational overhead. Therefore, the module is integrated solely into Stages 3 and 4, which enhances performance without compromising efficiency, preserving the model’s lightweight characteristics.

To further validate the rationale behind our architectural design, we conducted comparative experiments on the activation functions within the ASCSA module. The goal was to verify that SiLU outperforms the Sigmoid function in facilitating spatial-channel synergy for small object detection. Specifically, we initialized the model with pre-trained weights and fine-tuned it for 50 epochs.

As presented in Table 4, replacing the standard Sigmoid (used in the original SCSA) with SiLU yields a significant performance boost, particularly for small objects ($AP_S$). Sigmoid saturates at 1.0, whereas SiLU is unbounded above. This allows the network to amplify the response of weak UAV targets approximately linearly while preserving gradient flow in deep layers. The change turns the mechanism from passive masking into active feature recalibration.
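The saturation argument can be checked numerically. The sketch below compares Sigmoid and SiLU (x · sigmoid(x)) and their derivatives at a few inputs. It illustrates only the activation functions themselves, not how ASCSA applies them.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def silu(x: float) -> float:
    """SiLU (Swish): x * sigmoid(x); unbounded above."""
    return x * sigmoid(x)


def d_sigmoid(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)


def d_silu(x: float) -> float:
    # d/dx [x * s(x)] = s + x * s * (1 - s)
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))


for x in (0.0, 2.0, 6.0):
    print(f"x={x:3.1f}  sigmoid={sigmoid(x):.4f}  silu={silu(x):.4f}  "
          f"sigmoid'={d_sigmoid(x):.4f}  silu'={d_silu(x):.4f}")
```

For large positive inputs, Sigmoid approaches 1 and its derivative approaches 0. SiLU instead grows roughly like x, and its derivative approaches 1.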

4.5. Performance of Different Convolutional Modules in Feature Fusion

To further demonstrate the efficacy of the CSP-SPMConv module, we conducted comparative experiments evaluating the impact of different convolution types and various asymmetric kernel sizes on overall performance, as shown in Table 5 and Table 6.

First, the comparison of convolutional variants clearly shows the superiority of the CSP-SPMConv module. The baseline model has the lowest parameter count and computational complexity, but it also delivers the poorest small-object detection performance ($AP_S$ of 9.0%). Introducing PConv increases both the parameter count and the computational cost, and this trade-off yields gains in AP and $AP_S$. In contrast, SPMConv equipped with the Shift Channel Mix achieves a far more notable improvement, with AP rising to 18.3% and $AP_S$ to 9.8%. We attribute this gain to the mechanism's enhanced ability to capture fine-grained semantics. When further combined with the CSP architecture, the model reaches the best balance. It attains the highest $AP_S$ of 10.1% while significantly reducing computational complexity, because the CSP structure eliminates redundant computation.
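The claim that the CSP structure removes redundant computation can be illustrated with a rough multiply-accumulate (MAC) count. The sketch assumes a hypothetical, simplified CSP block. In this block, only half of the channels pass through a 3×3 convolution, and a 1×1 convolution then fuses the concatenated halves. The feature-map size and channel count are illustrative and are not taken from SCA-DEIM.

```python
def conv_macs(h: int, w: int, k_h: int, k_w: int, c_in: int, c_out: int) -> int:
    """Multiply-accumulates of a stride-1, 'same'-padded convolution (no bias)."""
    return h * w * k_h * k_w * c_in * c_out


def plain_block_macs(h: int, w: int, c: int, k: int = 3) -> int:
    """A k x k convolution applied to all c channels."""
    return conv_macs(h, w, k, k, c, c)


def csp_block_macs(h: int, w: int, c: int, k: int = 3, split: float = 0.5) -> int:
    """Simplified CSP block: only a fraction `split` of channels is convolved."""
    c_part = int(c * split)
    body = conv_macs(h, w, k, k, c_part, c_part)  # processed partition
    fuse = conv_macs(h, w, 1, 1, c, c)            # 1x1 fusion after concatenation
    return body + fuse


# Illustrative sizes only (an 80 x 80 map with 128 channels).
plain = plain_block_macs(80, 80, 128)
csp = csp_block_macs(80, 80, 128)
print(f"plain: {plain:,} MACs  CSP: {csp:,} MACs  ratio: {csp / plain:.2f}")
```

With these sizes, the CSP variant needs roughly 36% of the MACs of the full-width convolution. The saving shrinks as the split ratio grows.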

Second, we investigated the impact of the asymmetric kernel size $k$ on AP. As shown in Table 6, $k = 3$ is the optimal setting. When $k < 3$, the receptive field may be too small to fully capture target information, leading to a decline in AP. Conversely, an excessively large $k$ expands the receptive field but risks introducing background noise that distracts from the target, and it also increases parameters and computational complexity. Thus, a kernel size of 3 achieves the best balance between receptive field expansion and parameter efficiency.
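The trade-off around $k$ can be made concrete with a parameter count. For illustration only, the sketch assumes that each asymmetric branch consists of a 1×k convolution followed by a k×1 convolution, which is a hypothetical simplification of SPMConv. Under this assumption, the weights grow linearly in k, while the stacked receptive field grows to k×k. Larger kernels therefore cover more background pixels around a small target.

```python
def asymmetric_pair_params(k: int, c: int) -> int:
    """Weights (no bias) of a 1 x k conv followed by a k x 1 conv, c channels each."""
    return 2 * k * c * c


def square_params(k: int, c: int) -> int:
    """Weights (no bias) of a single k x k conv with c input and output channels."""
    return k * k * c * c


def receptive_field(kernels) -> tuple[int, int]:
    """Receptive field (height, width) of stacked stride-1, undilated convolutions."""
    rf_h = 1 + sum(kh - 1 for kh, _ in kernels)
    rf_w = 1 + sum(kw - 1 for _, kw in kernels)
    return rf_h, rf_w


for k in (1, 3, 5, 7):
    rf = receptive_field([(1, k), (k, 1)])
    print(f"k={k}: asym params={asymmetric_pair_params(k, 64):,}  "
          f"square params={square_params(k, 64):,}  receptive field={rf}")
```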

4.6. Performance Comparison Experiment of Mainstream Methods

To demonstrate the effectiveness of SCA-DEIM, we selected several recent mainstream models for comparative analysis. In the category of Real-time Object Detectors, we included the S and M versions of YOLOv11 [36], the S and M versions of YOLOv12 [37], and the S version of YOLOv13 [38]. For Object Detectors for UAV Imagery, we selected the S and M versions of FBRT-YOLO [39]. In the domain of End-to-end Object Detectors, our comparison included RT-DETR-R18, the N and S versions of D-Fine, and the N and S versions of the baseline. Furthermore, to enable a comprehensive comparison across model scales, we also trained an SCA-DEIM-S version.

Table 7 presents the comparative results between our method and current mainstream approaches on the VisDrone2019 dataset.

To visually evaluate the efficiency of the proposed method, we compare the trade-off between parameter count (Params) and detection accuracy (AP) in Figure 8. As illustrated, the proposed SCA-DEIM series (marked in red) lies in the upper-left corner of the plot, indicating a superior balance between model complexity and performance. Compared with the baseline and recent real-time detectors such as YOLOv11 and YOLOv12, SCA-DEIM achieves significantly higher AP while maintaining a compact model size, supporting its suitability for resource-constrained UAV applications.

As shown in Table 7, YOLOv12-M exhibits particularly strong performance within the YOLO series, yet SCA-DEIM-N surpasses it. Compared with YOLOv12-M, SCA-DEIM-N improves average precision (AP) by only 0.3%. However, it obtains significant gains of 1.9% in small-object AP ($AP_S$) and 1.0% in large-object AP ($AP_L$) while using only 20% of the parameters of YOLOv12-M. Compared with FBRT-YOLO-M, SCA-DEIM-S achieves increases of 3.8% in AP and 4.5% in , with GFLOPs at only 42% of those of FBRT-YOLO-M. Compared with RT-DETR-R18, SCA-DEIM-S improves AP by 5.1% and $AP_{50}$ by 5.3%, while remaining on par for . Most notably, SCA-DEIM-S has only half the parameter count of RT-DETR-R18 and significantly lower computational complexity. Compared with the D-Fine and DEIM-D-Fine series, SCA-DEIM demonstrates significant improvements in both AP and .

Compared with the baseline, SCA-DEIM-N achieves increases of 1.8% in AP, 1.7% in , 2.3% in , and 2.0% in . SCA-DEIM-S achieves increases of 1.9% in AP and 1.7% in , and our method also reduces computational complexity by 0.6 GFLOPs compared with the baseline. These results show that our method is well suited to small object detection and achieves an effective balance between accuracy and lightweight design. They also support the effectiveness of the proposed modules in cross-scale feature modeling and information interaction.

We selected the UAVVaste and UAVDT datasets to further verify the robustness of our approach in different urban environments, as shown in Table 8 and Table 9. The experimental results indicate that SCA-DEIM-N achieves the highest AP and the best small object detection performance ($AP_S$) in both sparse and dense scenarios. This supports the generalization ability and robustness of our method and its adaptability to diverse detection tasks.

To evaluate the deployment feasibility of SCA-DEIM on high-performance GPU hardware, we conducted a comprehensive inference speed analysis on an NVIDIA GeForce RTX 3080 Ti GPU. All models were accelerated using TensorRT 10.11.0 with FP16 precision and a batch size of 1.

Figure 9 illustrates the speed–accuracy trade-off, where the bubble size corresponds to the parameter count. As observed, the proposed SCA-DEIM-S (marked in red) establishes a new Pareto frontier within the high-accuracy regime. Specifically, compared to the baseline, our method achieves a substantial accuracy improvement of +1.9% AP while maintaining a rapid inference speed of approximately 295 FPS, effectively balancing computational cost and detection performance. While the YOLO series exhibits higher inference throughput, SCA-DEIM-S demonstrates superior capability in capturing small and occluded targets. This justifies a marginal compromise in speed for significant precision gains, which are paramount for safety-critical missions.
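A Pareto frontier of this kind can be computed directly from (FPS, AP) pairs. A model lies on the frontier if no other model is at least as fast and at least as accurate while being strictly better on one of the two axes. The sketch below uses hypothetical toy values, not measurements from this paper.

```python
from typing import NamedTuple


class ModelPoint(NamedTuple):
    name: str
    fps: float  # throughput, higher is better
    ap: float   # accuracy, higher is better


def dominates(q: ModelPoint, p: ModelPoint) -> bool:
    """q dominates p if it is no worse on both axes and strictly better on one."""
    return q.fps >= p.fps and q.ap >= p.ap and (q.fps > p.fps or q.ap > p.ap)


def pareto_front(points: list[ModelPoint]) -> list[ModelPoint]:
    """Non-dominated points, sorted by increasing FPS."""
    front = [p for p in points if not any(dominates(q, p) for q in points)]
    return sorted(front, key=lambda p: p.fps)


# Hypothetical toy values, not measurements from this paper.
toy = [
    ModelPoint("model_a", 500.0, 15.0),
    ModelPoint("model_b", 300.0, 20.0),
    ModelPoint("model_c", 250.0, 18.0),
    ModelPoint("model_d", 400.0, 14.0),
]
print([p.name for p in pareto_front(toy)])
```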

Complementing the server-side analysis, we further extended our benchmark to the NVIDIA Jetson Orin Nano (4 GB) to rigorously validate the practical deployment capabilities of SCA-DEIM in embedded UAV scenarios. Serving as a representative resource-constrained edge platform, this device imposes significantly stricter latency and memory constraints than server-based environments. To address these challenges, all models were exported to ONNX format and optimized via TensorRT 8.6.2 in FP16 mode. Inference performance was measured with a batch size of 1 to accurately simulate real-time processing conditions.

Table 10 details the inference efficiency on the NVIDIA Jetson Orin Nano. SCA-DEIM-N achieves a high throughput of 54.6 FPS with an ultra-low latency of 18.3 ms, satisfying the strict real-time requirement (<33 ms) for UAV navigation. Compared to YOLOv11-S, our model substantially reduces computational overhead, cutting parameters by 58.9% and GFLOPs by 66.8%, while maintaining comparable responsiveness. Furthermore, relative to the baseline, SCA-DEIM-N reduces computational complexity and improves inference speed despite a negligible increase in parameters, demonstrating a superior trade-off between efficiency and accuracy.
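At batch size 1, the reported throughput and latency are consistent under the relation latency (ms) = 1000 / FPS, since 1000 / 54.6 ≈ 18.3 ms. The 33 ms budget corresponds to roughly 30 FPS. The sketch below shows this conversion, a helper for percentage reductions, and a generic timing loop. Here, `run_once` is a hypothetical placeholder for one synchronous inference call. The warm-up and iteration counts are assumptions, not the measurement protocol used in this study.

```python
import time
from typing import Callable


def fps_to_latency_ms(fps: float) -> float:
    """Per-image latency in milliseconds at batch size 1."""
    return 1000.0 / fps


def relative_reduction(ours: float, reference: float) -> float:
    """Percentage by which `ours` is smaller than `reference`."""
    return (1.0 - ours / reference) * 100.0


def benchmark(run_once: Callable[[], None], warmup: int = 50,
              iters: int = 500) -> tuple[float, float]:
    """Mean latency (ms) and FPS of a synchronous batch-size-1 inference call.

    `run_once` is a hypothetical placeholder: it must block until the result
    is ready (for a GPU engine, include the device synchronisation).
    """
    for _ in range(warmup):   # exclude start-up effects such as lazy initialisation
        run_once()
    start = time.perf_counter()
    for _ in range(iters):
        run_once()
    latency_ms = (time.perf_counter() - start) * 1000.0 / iters
    return latency_ms, 1000.0 / latency_ms


print(f"{fps_to_latency_ms(54.6):.1f} ms")  # the reported 54.6 FPS
print(f"{fps_to_latency_ms(30.0):.1f} ms")  # ~30 FPS real-time budget
```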

The above experimental results demonstrate that the proposed method is more suitable for small object detection tasks, achieving an effective balance among detection accuracy, lightweight design, and inference speed. Moreover, these results further validate the effectiveness of the proposed modules in cross-scale feature modeling and information interaction, offering a novel perspective for the design of UAV-based object detection models.

4.7. Ablation Experiment

To validate the effectiveness of ASCSA and CSP-SPMConv, a series of ablation experiments were carried out on the VisDrone dataset with four model configurations: A, B, C, and D. Here, A serves as the baseline; B represents the baseline augmented with ASCSA (Baseline + ASCSA); C represents the baseline augmented with CSP-SPMConv (Baseline + CSP-SPMConv); and D is the complete SCA-DEIM model.

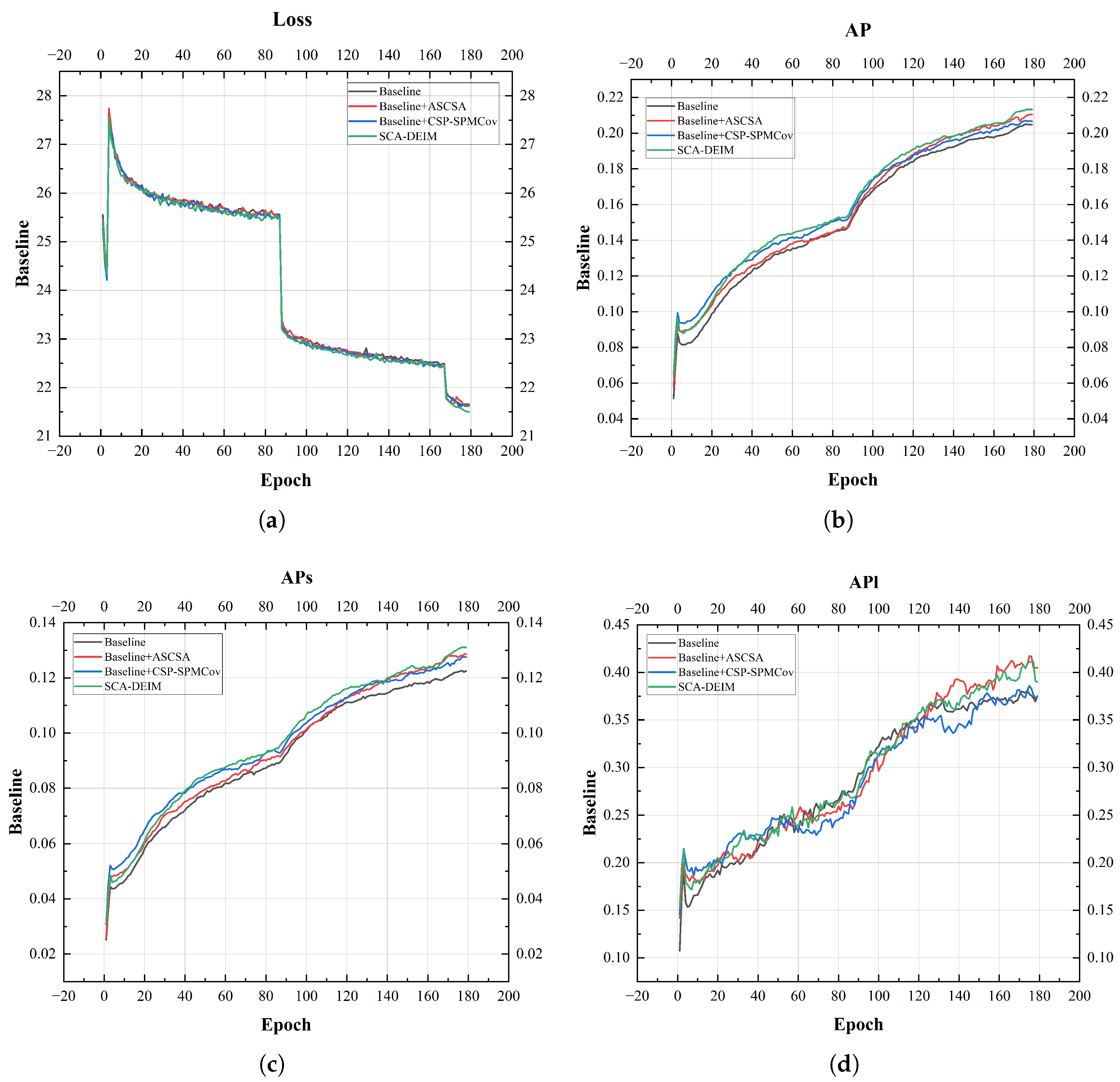

Table 11 presents the comparative results of this ablation study, and Figure 10 displays the corresponding training curves.

As depicted in Figure 10, the AP metrics experience a rapid increase by the 88th epoch, which marks the end of the second stage. This is precisely the intended outcome of enabling the Mosaic and Mixup techniques during this phase. Mosaic operates by randomly stitching four different training images into a new, composite image, applying random scaling, cropping, and arrangement during the process. Mixup linearly interpolates two images at a specific ratio, while simultaneously mixing their corresponding labels (i.e., bounding boxes) and class probabilities at the same ratio. The synergy of these two methods significantly enhances sample diversity, thereby improving the detection model’s generalization performance, convergence stability, and robustness.
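Simplified versions of the two augmentations can be sketched in NumPy. These are illustrations under stated assumptions, not the DEIM data pipeline. The Mosaic sketch places four images in the quadrants around a random centre and crops them to fit, without random rescaling. The detection Mixup sketch keeps both sets of boxes and attaches the mixing ratio to each box as a label weight, rather than interpolating box coordinates. This is a common convention for detection. All function names are hypothetical.

```python
import numpy as np


def mixup(img_a, boxes_a, img_b, boxes_b, lam: float):
    """Blend two same-sized images and keep both box sets.

    boxes_*: (N, 5) arrays of (x1, y1, x2, y2, class_id). Each output box gets
    a sixth column holding its label weight: lam for image A, 1 - lam for B.
    """
    mixed = lam * img_a.astype(np.float32) + (1.0 - lam) * img_b.astype(np.float32)
    wa = np.full((len(boxes_a), 1), lam)
    wb = np.full((len(boxes_b), 1), 1.0 - lam)
    boxes = np.concatenate([np.hstack([boxes_a, wa]), np.hstack([boxes_b, wb])])
    return mixed, boxes


def mosaic(images, boxes_list, out_size: int, rng: np.random.Generator):
    """Stitch four (H, W, 3) images into one canvas around a random centre.

    Simplification: each image is pasted at the top-left corner of its
    quadrant and cropped to fit; no random rescaling is applied.
    """
    canvas = np.zeros((out_size, out_size, 3), dtype=images[0].dtype)
    cx, cy = (int(v) for v in rng.integers(out_size // 4, 3 * out_size // 4, size=2))
    regions = [(0, 0, cx, cy), (cx, 0, out_size, cy),
               (0, cy, cx, out_size), (cx, cy, out_size, out_size)]
    kept = []
    for img, boxes, (x1, y1, x2, y2) in zip(images, boxes_list, regions):
        w = min(x2 - x1, img.shape[1])
        h = min(y2 - y1, img.shape[0])
        canvas[y1:y1 + h, x1:x1 + w] = img[:h, :w]
        b = boxes.astype(np.float64)  # astype returns a copy
        # Shift boxes into canvas coordinates and clip them to the pasted area.
        b[:, [0, 2]] = np.clip(b[:, [0, 2]] + x1, x1, x1 + w)
        b[:, [1, 3]] = np.clip(b[:, [1, 3]] + y1, y1, y1 + h)
        # Drop boxes that were (almost) entirely cropped away.
        keep = (b[:, 2] - b[:, 0] > 1) & (b[:, 3] - b[:, 1] > 1)
        kept.append(b[keep])
    return canvas, np.concatenate(kept)
```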

Table 11 and Figure 10 show that integrating the ASCSA module improves detection performance, yielding increases of 1.1% in AP, 1.2% in , and 1.2% in over the baseline. This indicates that ASCSA effectively enhances semantic feature extraction through its spatial-channel synergistic attention mechanism. Likewise, introducing the CSP-SPMConv module increased AP by 0.3% and by 1.1%, validating the effectiveness of its multi-scale feature fusion and partial-feature-sharing design for fine-grained feature extraction. When both modules were integrated, all performance metrics reached their peak values. This demonstrates that ASCSA and CSP-SPMConv act synergistically in semantic feature extraction and multi-scale feature fusion, jointly enhancing the model's detection accuracy both overall and for small objects.

Figure 11 illustrates the normalized confusion matrices from the ablation study on the VisDrone2019 validation set. The diagonal elements represent the classification accuracy for each category, while the off-diagonal elements indicate the misclassification rates. It can be observed that with the individual introduction of the ASCSA and CSP-SPMConv modules, the values along the diagonal generally increase, whereas the off-diagonal values decrease. Notably, when these two modules function synergistically, the detection accuracy for targets such as “pedestrian”, “bicycle”, and “car” is significantly enhanced, effectively reducing their corresponding misclassification rates.
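Row normalization is what allows the diagonal to be read as per-class accuracy. The sketch below builds a confusion matrix from matched (true class, predicted class) pairs and divides each row by the number of true instances of that class. It omits the extra background row and column that detection toolkits often add for missed and spurious detections. The function name is hypothetical.

```python
import numpy as np


def normalized_confusion(true_ids, pred_ids, num_classes: int) -> np.ndarray:
    """Row-normalized confusion matrix (rows: true class, columns: predicted class).

    Each non-empty row sums to 1, so the diagonal is the fraction of a class's
    instances assigned to the correct class and off-diagonal entries are
    misclassification rates.
    """
    cm = np.zeros((num_classes, num_classes))
    np.add.at(cm, (np.asarray(true_ids), np.asarray(pred_ids)), 1.0)
    row_sums = cm.sum(axis=1, keepdims=True)
    # Leave rows of classes with no instances at zero instead of dividing by 0.
    return np.divide(cm, row_sums, out=np.zeros_like(cm), where=row_sums > 0)
```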

Furthermore, we observed that when trained for 180 epochs under the same strategy, the complete SCA-DEIM model achieved a lower training loss compared to the other configurations. This suggests that the fusion of the ASCSA and CSP-SPMConv modules not only enhances detection accuracy but also facilitates a more stable and efficient optimization process. The lower loss value further validates that the proposed modules can effectively guide the network toward faster convergence and superior feature learning capabilities.