Abstract

In grinding machining, monitoring grinding wheel wear is essential for ensuring process quality wear and reducing production costs. This paper presents a hybrid CBiGRUPE model to predict grinding wheel wear, which integrates the advantages of convolutional neural networks (CNNs), bidirectional gated recurrent unit (BiGRU), and the Performer encoder. Time-domain features are extracted from the spindle motor current signals of a surface grinding machine. The structure and hyperparameters of CBiGRUPE are optimized using Bayesian optimization. Experimental validation of the model demonstrates superior performance, with mean absolute error (MAE), root mean square error (RMSE), and coefficient of determination (R2) values of 3.041, 3.927, and 0.920, respectively. Compared to models like CNN, BiGRU, and Transformer, the CBiGRUPE model offers more accurate and stable wear predictions. This paper also discusses the advantages and limitations of various models for estimating grinding wheel wear, emphasizing the effectiveness of the proposed approach. This study establishes a foundation for compensating wheel wear and accurately determining the optimal dressing time.

1. Introduction

Grinding wheel wear seriously affects processing quality. Therefore, monitoring grinding wheel wear online is essential to ensuring workpiece processing quality, improving processing efficiency, and maintaining production safety [,,].

Currently, grinding wheel wear detection methods are mainly classified into two types: direct and indirect []. The direct approach involves using advanced instruments such as a scanning electron microscope [,], white light interferometer [,], optical microscope [,], and optical profilometer [,]. While these methods offer high accuracy and comprehensive information, they are costly, require significant downtime, and are limited by the need for a controlled environment, restricting their applicability in real-time production environments. In contrast, the indirect approach evaluates wheel wear by analyzing the characteristics of signals, such as acoustic emission [,,], grinding force [,,], spindle current or power [,], and vibration [,,] during the grinding process. This approach supports real-time monitoring, making it suitable for online measurements and significantly improving processing efficiency. As a result, the indirect approach has gained widespread attention in industrial applications due to its ability to monitor grinding wheel wear intelligently.

While the indirect approach enables online monitoring without disrupting production, it still relies on manual feature extraction and struggles to address complex nonlinear relationships in the data effectively. Machine learning and deep learning algorithms have shown promise in predicting grinding wheel wear []. Liao et al. [] proposed a discrete wavelet decomposition-based method to extract the energy features of each raw acoustic emission signal segment and classify the grinding wheel wear state using an adaptive genetic clustering algorithm. Mahata et al. [] introduced a time-frequency analysis method based on the Hilbert–Huang Transform combined with a support vector machine to monitor the wear state during cylindrical grinding by analyzing vibration and power signals. Shen [] classified the grinding wheel wear state by analyzing the AE signals generated during the surface grinding of carbon steel workpieces using a support vector machine (SVM) algorithm with a linear kernel. Zhang et al. [] extracted features from power, acceleration, and acoustic emission signals generated during cylindrical grinding after preprocessing. Then, four features that correlate with grinding wheel wear were selected. Finally, the grinding wheel wear with various process parameters was predicted utilizing an interval two-type fuzzy basis function network (FBFN). Wan et al. [] employed the whale optimization algorithm to optimize the variational mode decomposition parameters of the AE, vibration, and force signals. Then, the INFO-SVM model was utilized to identify the grinding wheel wear state during surface grinding.

Deep learning models have more robust learning capabilities and better scalability performance than traditional machine learning methods, allowing them to mine valuable information from data. Chen et al. [] introduced a high-precision identification of grinding wheel wear states in robust noise environments using a combination of the IENEMD noise reduction method, the Hilbert transform, and the one-dimensional convolutional neural network. Guo et al. [] obtained many features of grinding force, acceleration, and acoustic emission signals by analyzing them during surface grinding, and a two-stage feature selection approach was introduced to obtain the optimal subset of features. In addition, a long short-term memory (LSTM) model was employed to predict grinding wheel wear. Xu et al. [] presented an approach utilizing variable modal decomposition to reduce noise in acoustic emission signals produced during cylindrical grinding. A neural network model called AO-CNN-LSTM was subsequently employed to assess the wear state. Si et al. [] proposed a hybrid model called TransBiGRU, which combines the advantages of the transformer encoder and the BiGRU model by analyzing 18 statistical features of current signals to predict wheel wear.

While deep learning models such as CNN, BiGRU, and Transformer perform impressively in various tasks, they still have some limitations. CNNs excel at extracting local features but struggle to capture long-range dependencies within sequences []. RNN-based models, like BiGRU, mitigate the vanishing gradient problem but still face challenges with very long sequences and require significant computational resources []. Transformer models are highly effective at capturing global dependencies, yet they suffer from memory bottlenecks and high computational complexity, particularly with long sequences []. These limitations underscore the necessity for a hybrid approach that combines the strengths of these models while addressing their weaknesses [,].

A hybrid model, CBiGRUPE, is proposed to address these limitations, integrating CNN, BiGRU, and the Performer encoder for grinding wheel wear prediction. By combining the strengths of these models, CBiGRUPE captures both local and global features, making it well suited for real-time industrial applications. Compared to other models, such as CNN, BiGRU, and Transformer, the CBiGRUPE model demonstrates superior prediction accuracy and processing efficiency, as shown by lower MAE and RMSE and higher R2 values in experimental validation. This paper also discusses the benefits and drawbacks of various models, highlighting the effectiveness of the CBiGRUPE approach in predicting grinding wheel wear.

2. Theoretical Foundation

2.1. Convolutional Neural Network

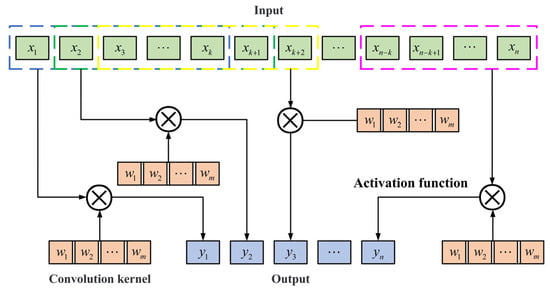

Grinding wheel wear is a complex process, and a Convolutional Neural Network (CNN) can better extract the information and features related to wheel wear prediction. By moving the convolutional kernel across several input sequence locations, the CNN can effectively capture the sequence data’s varying local patterns and features. As illustrated in Figure 1, the convolutional layer captures local features from the time series data by using a sliding window.

Figure 1.

CNN structure diagram.

2.2. Bidirectional Gated Recurrent Unit

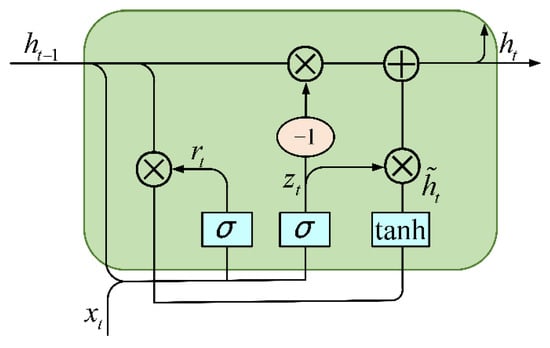

Although the Recurrent Neural Network (RNN) has the advantage of being able to extract contextually relevant information, it can access a minimal range of contextual information and suffers from the problem of long-term dependency. Therefore, it is essential to introduce a threshold mechanism into the RNN structure to preserve the necessary information. It can not only be an effective solution to the issues of gradient vanishing and gradient explosion but also can partially address the shortcomings of the difficulty of transmitting information over long distances. The more commonly used RNN structures with gate mechanisms are Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) architectures, which have temporal solid processing capability. However, because GRU does not need to retain memory, the network’s internal structure has only two gates, which saves memory space, reduces the training parameters, and results in faster training speed. Figure 2 illustrates the basic structure of a GRU model, and the forward GRU computational formula is as follows:

where represents the input vector of the current time step; , , and represent the weight matrices; and , , and denote the bias vectors.

Figure 2.

The basic structure of a GRU model.2.3. Performer.

The BiGRU model can learn both past and the future information. It can learn the input sequence information more comprehensively to avoid missing information when processing long sequences. and denote the corresponding outputs for the Forward GRU layer and Backward GRU layer at moment t, respectively, displayed as follows:

The output of BiGRU is displayed as follows:

where and denote the weight matrices for the forward and backward GRU structures, respectively, while denotes the bias vector of the output layer.

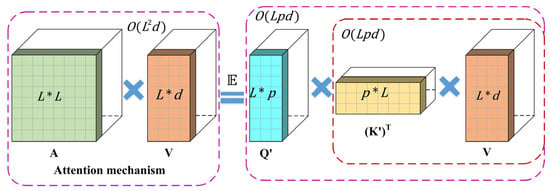

Performer [] introduces a linear generalized attention mechanism, which achieves near-linear growth in both space and time complexity by employing the Fast Attention Via Positive Orthogonal Random Features (FAVOR+) algorithm. This approach significantly reduces the training time, particularly when handling long sequences.

The FAVOR+ algorithm defines the attention matrix as follows:

where and represents the and row vectors of the query Q and key K, respectively. The kernel K is introduced as follows:

where denotes a mapping function.

When , their row vectors are represented as and , respectively.

The effective attention, based on the kernel definition, can be expressed as follows:

where and . Here, denotes the approximate attention, while the brackets indicate the computational order.

In terms of complexity, the FAVOR+ mechanism achieves a space complexity of and a time complexity of . In comparison, the conventional attention mechanism has a space complexity of and a time complexity of . Figure 3 illustrates the approximate regular attention mechanism.

Figure 3.

The illustration shows the mechanism of approximate regular attention.

The mapping function Φ of the kernel technique can be defined as follows:

where are different mapping functions, and the random samples are drawn from a distribution D. The distribution D is isotropic, typically Gaussian.

The softmax kernel of the attention matrix A is defined as follows:

If we let , the equation below can be obtained:

Due to the introduction of sin and cos functions in Equation (13), negative values may arise during the computation. If these negative values emerge after the model has been trained for a particular duration, the resulting variance may become more pronounced, adversely affecting the model’s training process. As a result, the weights needed should ensure that they are all positive to reduce the estimated contrast. The unbiased softmax approximation is achieved by employing the positive random features (PRFs) approach. It ensures that all results are positive, as depicted in Equation (14).

where, represents a Gaussian distribution, and denotes the hyperbolic cosine function.

3. Proposed Method

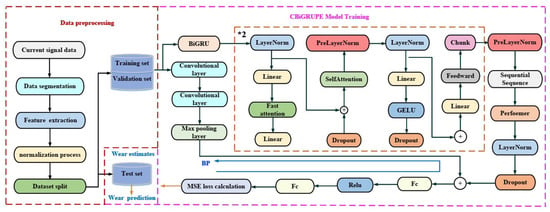

Figure 4 illustrates the structure of the CBiGRUPE model, which involves three main parts: data processing, model training, and wear prediction. The *2 symbol indicates that the model contains two identical modules, both enclosed in yellow boxes, which are the same in structure and function.

Figure 4.

The architecture of the CBiGRUPE model.

Data processing: The data processing stage includes noise reduction, feature extraction, and normalization. The initial data are filtered using a moving average filter to identify better patterns associated with wheel wear. This filtering approach removes periodic signals and white noise from the measurement signal. Subsequently, the measurement data are sliced into 200 segments using the upward rounding slicing method. Ten statistical features of the current signals are calculated simultaneously, and these features are normalized.

CBiGRUPE model training and wear prediction: The CBiGRUPE model mainly comprises a CNN module, a Performer encoder module, a BiGRU, a dropout layer, and two fully connected output layers, Fc. The input data first go through the CNN and BiGRU modules simultaneously. The CNN module, in turn, contains convolutional layers, activation functions, and the Maximum Pooling Layer. The CNN model can extract the local features of the data, which can effectively deal with high-dimensional data. The Performer encoder processes the output data generated by the BiGRU model. The multi-layer BiGRU module has better long-term dependency performance and powerful timing processing capability. The Performer encoder contains multiple attentional mechanisms that capture long-distance dependencies in sequence data, enhancing the ability to capture positional correlations in sequences. Skip connections connect the Performer encoder’s output to the CNN module’s output. These additional connections allow for a continuous flow of information, prevent the loss of essential data, and help the model better adjust to the training data, ultimately improving the model’s performance. Dropout reduces the over-reliance of neural networks on training data, thereby improving the model’s generalization capability and reducing the possibility of overfitting. The leave-one-out cross-validation approach is a widely employed method for model evaluation that enhances model reliability, particularly for datasets with limited samples. Moreover, it mitigates the instability caused by random training-validation splits, ensuring more stable evaluation results. The loss function for the CBiGRUPE model is a mean squared error (MSE), allowing for effective quantification of prediction errors. Finally, the trained model is used to predict wheel wear, thus facilitating real-time monitoring.

4. Experimental Research

4.1. Introduction to the Datasets

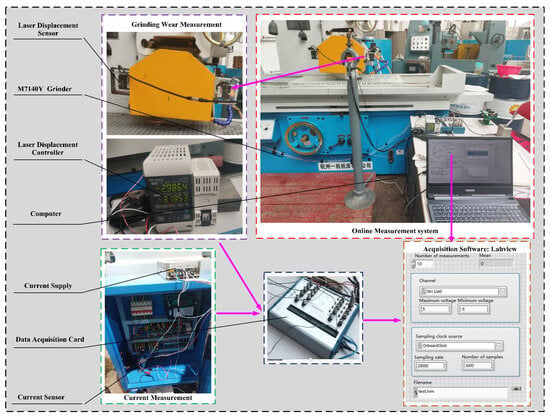

This study introduces an approach to measure the grinding wheel wear and current signals of the machine tool spindle motor in real time, as depicted in Figure 5. The experiment was finished on surface grinder M7140Y. The selected grinding wheel is a white corundum wheel with a grain size of 46#, which meets the definition of medium grain size according to ISO 6106:2013 [], balancing material removal rate and surface quality. This choice is consistent with the recommendations for the coarse grinding of carbon steel in the Machinery’s Handbook []. Its outer diameter is 350 mm, its inner diameter is 127 mm, and the width is 40 mm, a configuration that effectively balances the need for accurate wear measurement with the operational requirements of the grinding process. The workpiece material used is unquenched No. 45 steel, known for its good machinability and moderate mechanical strength, making it a popular choice for manufacturing mechanical parts. The dimensions of the workpiece are 100 18 35 mm. The laser displacement sensor records the grinding wheel wear within a linear range of 10 mm and a resolution of 5 μm. This ensures accurate detection of even minor wear changes, suitable for high-precision grinding monitoring. A current sensor records the current signals during grinding. Its input range is 0–10 A AC, and the output range is 0–3 V DC, capable of handling current fluctuations during grinding and providing reliable signals for wear analysis. A 16-bit data acquisition card with a maximum sampling frequency of 500 kS/s was selected to fulfill the experiment’s need for high-frequency data acquisition. NI LabVIEW 2019 was used for data collection. Given the fast fluctuations in signals during the grinding process, the high sampling frequency ensures precise capture of fine details, ensuring data accuracy and timeliness, particularly for dynamic wear prediction.

Figure 5.

Online measurement system for grinding wheel wear and grinding machine spindle motor current signals.

Because of the unevenness, the grinding wheel must be statically balanced two times before installation to minimize imbalance and reduce vibration that could affect the accuracy of the wear measurements. According to the DIN 12413 [] grinding wheel safety standards, the grinding wheel speed is 1500 r/min, which falls within the safe operating range for the wheel’s diameter (typically 20–35 m/s). This speed ensures both grinding efficiency and heat management, preventing excessive heat accumulation due to excessively high speeds. The depth of cut is 0.015 mm, providing effective material removal while minimizing excessive wheel wear. This is within the recommended range for coarse grinding in ISO 16089 []. The table speed is 50 mm/s, which, in conjunction with the wheel speed and feed rate, ensures smooth and consistent grinding conditions. By appropriately selecting the grinding wheel speed, grinding feed rate, table feed speed, and sensor parameters, the changes in wear can be effectively captured.

After the system setup is completed, since the actual output of the laser displacement sensor may have nonlinear errors, it is necessary to carry out online linear calibration of the laser displacement sensor to ensure that the output of the sensor in the measurement range is linear with the actual distance before carrying out the online detection of the grinding wheel wear amount, to improve the detection accuracy.

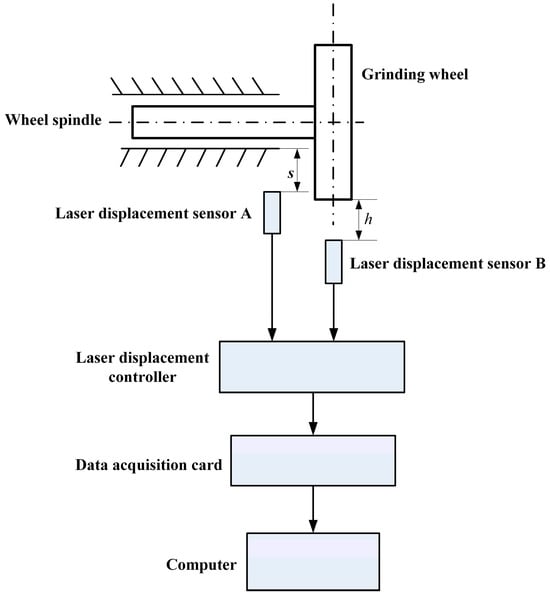

The measurement principle of the online detection system is shown in Figure 6. As can be seen from the figure, the position of sensor A is facing a fixed point on the grinding wheel spindle, and its distance to the grinding wheel spindle is s. Sensor B is installed in the position passing through the centerline of the grinding wheel, and its distance to the centerline of the grinding wheel is h. During the grinding process, with the continuous abrasion of the grinding wheel, the gap between the grinding wheel and the laser sensor increases. Therefore, in the calibration experiment, the grinding wheel wear can be simulated by the upward movement of the grinding wheel bracket. The calibration process is as follows:

Figure 6.

Calibration principle of the online detection system.

(1) As shown in Figure 6, adjust sensor A and sensor B so that the distance s and h are both equal to fixed constant values.

(2) Start the grinding wheel. The number of sampling points for one cycle of grinding wheel rotation is N. When the grinding wheel rotates stably, the data are collected by sensor B, and the average value of the N sampling points is calculated and recorded.

(3) Stop the grinding wheel and manually adjust the grinding wheel bracket upward to increase the reading value of sensor A by , which indicates the amount of wear. At this time, the distance between the grinding wheel and sensor A is .

(4) Restart the grinding wheel. When the grinding wheel rotates stably, sensor B collects data following the method of step (2), and the average value of N sampling points is calculated and recorded.

(5) Repeat steps (3) and (4), and when the distance between the grinding wheel and sensor A is , the corresponding measured value is obtained, and the experiment is continued until the end.

(6) The online calibration process detects grinding wheel wear by analyzing and calculating the sampled data.

To eliminate the effect of simple harmonic vibration caused by the unevenness of the grinding wheel, the product of the number of sampling points and the sampling interval time should be a multiple of the whole cycle of the grinding wheel rotation. The speed of the grinding wheel is , measured by a tachymeter. The period T of the grinding wheel is as follows:

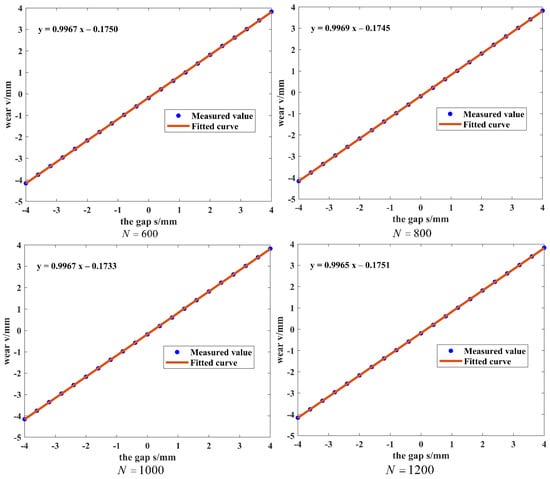

The sampling time is set to 10T to reduce the influence of random errors on the measurement results. The number of sampling points N is selected to be 600, 800, 1000, and 1200 points. The wear amount of each sampling point is represented by the average value of N sampling points, and the average value v of the measurement results of 10T represents the wear amount of this experiment. The measurement results are shown in Table 1, and the fitting curve is shown in Figure 7.

Table 1.

Wear measured at different number of sampling points.

Figure 7.

Fitted curves at different sampling points.

Based on the measured data in Table 1, the linearity of the system can be calculated when different sampling points are used:

From the above calculations and the fitting equations in Figure 7, it can be seen that the linearity of the fitted curves is 0.28%, 0.21%, 0.20%, and 0.20% and the sensitivities are 0.9967 V/mm, 0.9969 V/mm, 0.9967 V/mm, and 0.9964 V/mm when the number of sampling points is 600, 800, 1000, and 1200, respectively. All are close to 1. This indicates that the system has a good linear relationship between the output and the input and high sensitivity. Therefore, when detecting the wear amount of the grinding wheel, choosing 1000 sampling points can ensure that the system has high linearity and high sensitivity but also has good stability and can obtain a relatively accurate wear amount.

After the linear calibration was completed, to effectively suppress the measurement error caused by mechanical vibration, a special fixture with sealing all around was designed to rigidly fix the laser displacement sensor on the left side of the grinding wheel cover body, away from the coolant nozzle. Further sealing was carried out at the possible gaps in the sensor fixture to prevent the influence of water vapor generated by the high-speed rotation of the grinding wheel on the measurement results. At the same time, to prevent the adverse effects of debris, dust, and coolant splashing generated during the grinding process on the detection accuracy of the laser displacement sensor, a slot was designed between the fixture and the grinding wheel housing, in which a well-elasticized baffle plate was inserted.

When detecting the grinding wheel wear amount online, the number of sampling points is set to 1000, and the sampling time is 10T. The specific operation flow is as follows: insert the stopper after feeding and idle for 10 s after the grinding process is completed. Then, remove the stopper and use the LabVIEW program to carry out online detection of grinding wheel wear. Finally, the stopper is inserted again for grinding processing, and the above operation is repeated to realize the online detection of the grinding wheel wear amount.

The experimental steps after the installation of the grinding wheel are as follows:

(1) Turn on the machine and use a single-point diamond tool to dress the grinding wheel. The total amount of dressing is 0.06 mm.

(2) Each feed is followed by reciprocating grinding three times to complete the grinding process. During each grinding stroke, when the grinding wheel touches the workpiece, the current signal is collected for the first two seconds.

(3) Before each subsequent feed, the change in grinding wheel wear is captured by a laser displacement sensor after the completion of each grinding stroke.

(4) Measure and draw the wear curve of the grinding wheel during grinding; according to the drawn curve, the wear can be more easily understood.

(5) Repeat steps (2), (3), and (4) until the grinding wheel is determined to be dull due to a significant change in wear. Then, gather the current signal and grinding wheel wear data to ensure comprehensive dataset coverage. Current signals and the actual wear can be obtained from the dressing to dulling process.

(6) Repeat steps (1), (2), (3), (4), and (5) to obtain three independent repetitions of the experimental datasets N1, N2, and N3. This approach ensures the reproducibility and reliability of the results, providing more robust data for model predictions.

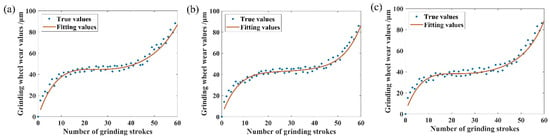

Wear curves are derived by fitting a fifth-order polynomial during grinding, as illustrated in Figure 8. This polynomial fitting provides a smooth representation of the wear progression over time. In Figure 8, the sharp abrasive grains on the surface come into contact with the surface of the workpiece for the first time in 0–11 grinding strokes, resulting in more abrasive grains falling off, a high wear rate, and the grinding wheel being in the early stage of wear. During 11–45 grinding strokes, the grinding wheel enters into a relatively stable working state, the wear rate is relatively stable and low, and the grinding wheel is in the normal wear stage. During 45–60 grinding strokes, the abrasive grains of the grinding wheel gradually become blunted, resulting in a significant decrease in cutting ability, indicating that the grinding wheel has entered the normal wear stage. Blunting and reduced cutting ability result in a significantly higher wear rate, causing the grinding wheel to fail and gradually enter the sharp wear stage. A worn wheel may cause vibration marks and damage to the surface of the workpiece due to continuous friction and the heat generated by the friction, as shown in Figure 9.

Figure 8.

Wear fitting curves: (a) fitted curve for N1; (b) fitted curve for N2; (c) fitted curve for N3.

Figure 9.

The surface condition of the workpiece at the end of grinding.

4.2. Feature Extraction

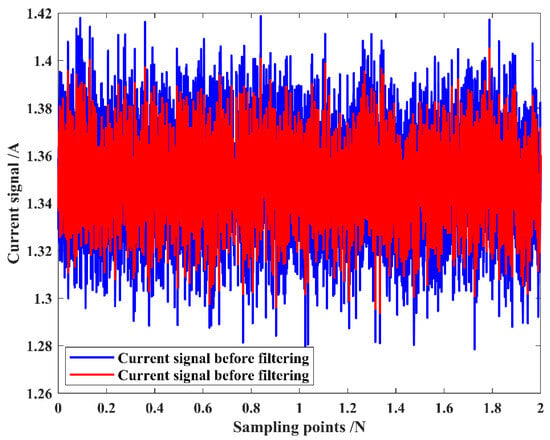

Python 3.11 was used for various stages of data processing, including signal filtering, feature extraction, modeling, and wear prediction. The moving average filtering method filters the collected current signal with a window size , which can better filter out the periodic interference and noise contained in the current signal. The window length of 15 for moving average filtering is chosen for the following reasons: (a) Random noise in the grinding signal usually has a short-term correlation, so choosing an appropriate window length can effectively smooth the noise. Specifically, the window length needs to be larger than the correlation time of the noise to ensure that the filter can effectively remove the high-frequency components of the noise. Setting the window time as too small may result in high-frequency noise not being filtered out, while setting the window time as too large may smooth out the practical feature information in the signal. Therefore, ensuring that the window length covers the correlation time of the noise while avoiding excessive smoothing is a key consideration when choosing the window length. (b) The larger the window length of the moving average filter, the larger the delay, which may affect the real-time performance. Therefore, a window length of 15 is chosen to effectively smooth the noise without introducing too much delay and maintain the signal’s effective characteristics. (c) To further validate the choice of window length, experiments with different window lengths were conducted to compare the signal-to-noise ratio with the correlation time of the noise signal. The results are shown in Table 2. From the table, it can be seen that with the increase in the window length, the signal-to-noise ratio gradually decreases while the correlation time gradually increases. According to the experimental data, when the window length is 15, the signal-to-noise ratio reaches the maximum value of 45.11 dB. Considering the signal-to-noise ratio and the delay together, we choose to obtain the optimal noise-filtering effect and signal retention ability. Selecting the window length of 15 for moving average filtering to filter the acquired current signal can better filter out the periodic interference and noise contained in the current signal. As displayed in Figure 10, the moving average filtering effectively handles the redundant information in the current signal and makes the data smoother.

Table 2.

Signal-to-noise ratio and correlation time at different window lengths.

Figure 10.

Comparison of current change before and after filtering.

After signal preprocessing, feature extraction is performed on the current signals. Initially, the segmented current data are divided into 200 non-overlapping segments using an upward rounding slicing method. Ten time-domain features are computed for each segment, as displayed in Table 3. Calculating these time-domain features provides a comprehensive understanding of the distribution, degree of dispersion, concentration trends, and presence of outliers within the data. The extracted features are then normalized.

Table 3.

Feature extraction.

4.3. Optimization of the CBiGRUPE Model

Adam was selected as the optimizer for the proposed model. The Mean Squared Error (MSE) is commonly used to evaluate the model’s fitting performance, with a smaller MSE indicating better model fitting. Hence, MSE is typically utilized as a loss function in a training process and is calculated as follows:

where n represents the sample size, denotes the actual values, and indicates the predicted values generated by the model.

Hyperparameter optimization is a critical step in training machine learning and deep learning models, aiming to find the optimal combination of hyperparameters to improve the model’s prediction accuracy. The objective function of the optimization process is the minimum of the average value of the loss function on the validation set:

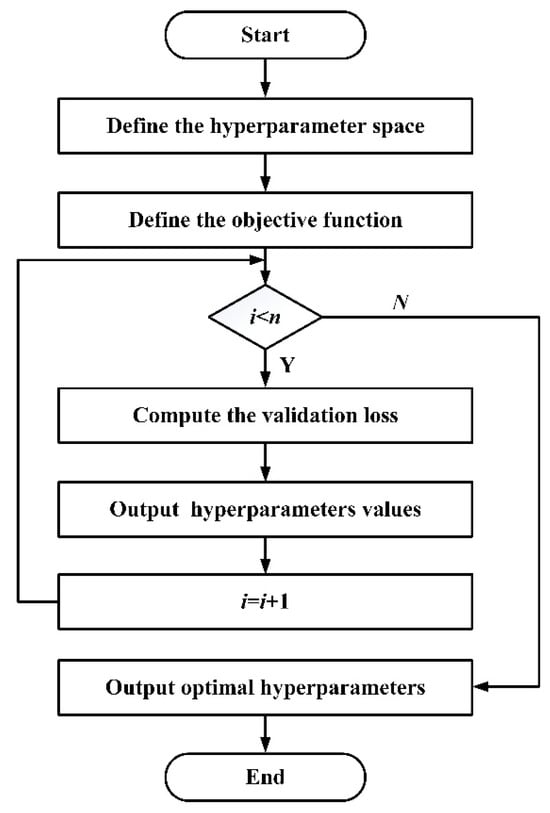

where represents the structural parameters and hyperparameters of the PMSCNN model, k denotes the number of samples, and represents the validation set data. The parameter search space for Bayesian optimization is shown in Table 4. The structure and hyperparameters of CBiGRUPE are optimized using Bayesian optimization, as presented in Figure 11.

Table 4.

The parameter search space for Bayesian optimization.

Figure 11.

Flowchart of CBiGRUPE model optimization.

A total of 50 trials were conducted, with an early stopping mechanism implemented to prevent overfitting and save time and computational resources. Table 5 provides a detailed structure of the CBiGRUPE model, while Table 6 lists the optimal hyperparameters.

Table 5.

Relevant parameters for the CBiGRUPE model.

Table 6.

Hyperparameters of the CBiGRUPE model.

4.4. Evaluation Metrics

MAE and RMSE are utilized as metrics to assess the performance of predictive models. Additionally, R2 provides a critical metric for comprehensively evaluating the model’s goodness of fit, providing insights into the proportion of variance the model explains.

where n represents the sample size, denotes the actual values, and indicates the predicted values generated by the model.

4.5. Discussion

The leave-one-out cross-validation method [] can fully use the limited data resources, can improve the model’s generalization ability and assessment accuracy, and is particularly suitable for predicting grinding wheel wear. This study adopted a model integration approach [] to enhance prediction performance. Integrating the advantages of different models can reduce the bias and overfitting problems in a single model, improving the overall model performance and generalization ability. The structure and hyperparameters of CBiGRUPE are optimized using Bayesian optimization within a specified search space, yielding the optimal hyperparameters.

- Comparison of prediction results based on empirical and optimized parameters:

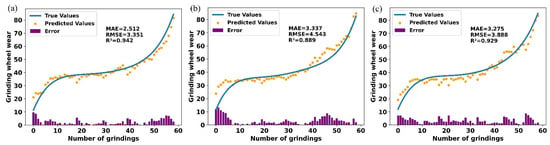

A Bayesian optimization approach was used to tune the structure and hyperparameters of the CBiGRUPE model within a specified search space. Table 7 and Table 8 present the evaluation metrics for the prediction results using empirical and optimization parameters on each dataset. As shown in Table 7 and Table 8, the model with optimized parameters outperforms the one with empirical parameters in both MAE and RMSE metrics. Specifically, in dataset N1, the MAE of the optimized model decreases by 0.078 (from 2.590 to 2.512), and the RMSE decreases by 0.0101 (from 3.361 to 3.351). Similarly, the N2 and N3 datasets show a similar trend, with the optimized model demonstrating better performance in terms of error reduction. This improvement in prediction accuracy indicates that hyperparameter optimization effectively reduces prediction error and enhances the model’s practical application accuracy.

Table 7.

Evaluation metrics of the CBiGRUPE model with empirical parameters.

Table 8.

Evaluation metrics of the CBiGRUPE model with optimized parameters.

Regarding model fit, the optimized model generally yields better R2 values. On the N1 dataset, the R2 of the optimized model increases slightly from 0.940 to 0.942. However, the most notable improvement is observed in the N2 dataset, where R2 increases from 0.841 to 0.889. This indicates that the optimized hyperparameters enhance the model’s explanatory power, enabling it to better capture intricate relationships within the datasets.

Overall, the average metrics across all datasets were improved after optimization: MAE decreased by 0.251 (from 3.292 to 3.041), RMSE decreased by 0.516 (from 4.443 to 3.927), and R2 increased by 0.025 (from 0.895 to 0.920).

In conclusion, the CBiGRUPE model with optimized parameters achieves a higher prediction accuracy using empirical parameters in all performance indicators. Hyperparameter optimization reduces prediction error, improves model fit, and enhances generalization. It is crucial for enhancing model performance during machine learning development. Future research should explore advanced optimization strategies to improve prediction model efficiency and accuracy.

Figure 12 shows the prediction results of the CBiGRUPE model after hyperparameter optimization on various datasets, further verifying the model’s reliable fit and robustness. As shown in Figure 12, the prediction errors remain within a small range, indicating excellent prediction accuracy and confirming the model’s robustness across different datasets.

Figure 12.

Prediction results for different test sets: (a) prediction results for N1; (b) prediction results for N2; (c) prediction results for N3.

- Comparison of predictions from different models:

Analyzing the predictive performance of different models provides insights into their strengths and weaknesses in predicting grinding wheel wear. By leveraging feature selection, different models were optimized for both structure and hyperparameters. Their performance in predicting wheel wear was assessed using the optimized hyperparameters. Table 9, Table 10 and Table 11 demonstrate the evaluation metrics for the prediction results of different models on different datasets.

Table 9.

Evaluation metrics for the MAE of the different models.

Table 10.

Evaluation metrics for the RMSE of the different models.

Table 11.

Evaluation metrics for the R2 of the different models.

Statistical significance analysis: The CBiGRUPE model significantly outperforms the CNN model on MAE and RMSE () and surpasses the CNN and Performer encoder models in R2 metrics (), as confirmed by an independent sample t-test (significance level ). The comprehensive comparison results show that the CBiGRUPE model exhibits superior prediction accuracy in the wheel wear prediction task. Especially in the R2 metrics, CBiGRUPE demonstrates significant advantages over the CNN and Performer encoder. While the differences in MAE and RMSE are not always significant, they are still improved. The stability and accuracy of the overall performance make it highly useful in wear prediction tasks. Therefore, the CBiGRUPE model has a wide range of application prospects in practical industrial applications, especially in wear monitoring and real-time prediction, which can provide accurate and efficient solutions.

CNN model: The CNN model has strong local feature extraction capability, but it is deficient in capturing global dependencies, especially on long time series data, which leads to its low prediction accuracy. This is manifested by , , and . On the N2 dataset, CNN’s prediction performance is poor, which may be related to its limited local feature extraction capability and insufficient modeling of global dependencies.

BiGRU model: The BiGRU model has the advantage of capturing forward and backward dependencies but lacks global modeling capability, which makes its performance degraded when dealing with long time series, as shown by , , and . However, the BiGRU model exhibits limited global modeling capability, struggling to capture long-range dependencies or global context. Additionally, its sequential nature restricts parallel processing, reducing computational efficiency in large-scale applications.

Transformer model: The transformer model, with its global modeling capability, can capture dependencies in long time series and exhibits high prediction accuracy (, , and ). However, Transformer has a high computational complexity, the training process requires a large amount of computational resources, the training speed is slow, and the training cost is high in industrial real-time applications. Therefore, in practical applications, Transformer may face the dual challenges of performance and computational resources, especially for the demand of real-time prediction.

Performer encoder model: The Performer model can efficiently capture global dependencies in long-sequence tasks, which can be beneficial for wear prediction. However, its limited focus on local features might affect performance in tasks where local details are crucial (, , and ).

CBiGRUPE model: The CBiGRUPE model combines the advantages of CNN, BiGRU, and Performer encoder to capture local features and deal with global dependencies in a long time series. The MAE (3.041), RMSE (3.927), and R2 (0.920) on all datasets demonstrate superior performance to the other models; especially on the N2 and N3 datasets, the CBiGRUPE model significantly reduces the prediction error and exhibits excellent stability and accuracy. Through this fusion, CBiGRUPE can provide higher accuracy in complex wear prediction tasks, showing significant potential for practical industrial applications and providing a more efficient and accurate solution for wear monitoring in grinding processes.

5. Conclusions

This paper proposes an advanced method for predicting grinding wheel wear by analyzing spindle motor current signals, utilizing an optimized CBiGRUPE model. The contributions are listed below:

(1) A laser displacement sensor is employed to measure grinding wheel wear in real time. It provides an efficient and cost-effective solution for online wear monitoring, serving as an alternative to traditional methods.

(2) The CBiGRUPE model, which integrates CNN, BiGRU, and Performer encoders, significantly enhances prediction accuracy by leveraging the strengths of each model, thus improving the overall prediction performance for grinding wheel wear.

(3) The structure and hyperparameters of CBiGRUPE are optimized using Bayesian optimization. This optimization enables the automatic identification of the optimal parameter set, improving the model’s robustness and performance.

(4) The optimized CBiGRUPE model was experimentally validated using spindle motor current signals, achieving impressive average evaluation metrics: , , and . These results demonstrate the model’s superior prediction accuracy in predicting grinding wheel wear. A detailed comparative analysis of various models highlights their respective advantages and limitations, offering valuable insights for future optimization.

While this study lays the groundwork for determining wheel dressing time and wheel wear compensation, further research is needed. Future work could integrate force, acceleration, and acoustic emission (AE) sensors to build a more comprehensive dataset, thereby significantly enhancing prediction accuracy. Additionally, the robustness of the CBiGRUPE model under varying grinding parameters and environmental noise should be investigated to further validate its applicability in real-world industrial settings.

Author Contributions

Conceptualization, S.S. and D.M.; methodology, S.S. and D.M.; validation, S.S. and H.T.; formal analysis, S.S.; investigation, S.S. and H.T.; resources, S.S. and D.M.; data curation, H.T.; writing—original draft preparation, S.S.; writing—review and editing, S.S. and D.M.; visualization, H.T.; supervision, D.M.; project administration, D.M.; funding acquisition, D.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Department of Science and Technology of Jilin Province (20200401119GX).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used during the current study are available from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lee, J.; Ni, J.; Singh, J.; Jiang, B.; Azamfar, M.; Feng, J. Intelligent maintenance systems and predictive manufacturing. J. Manuf. Sci. Eng. 2020, 142, 110805. [Google Scholar] [CrossRef]

- Tang, J.; Qiu, Z.; Li, T. A novel measurement method and application for grinding wheel surface topography based on shape from focus. Measurement 2019, 133, 495–507. [Google Scholar] [CrossRef]

- Xiao, Y.; Wang, S.; Dong, J.; Wang, S.; Xia, C. Online monitoring method of non-cylindrical wheel wear for gear grinding based on dynamic force model. Mech. Syst. Signal Process. 2024, 206, 110915. [Google Scholar] [CrossRef]

- Liao, T.W.; Tang, F.; Qu, J.; Blau, P.J. Grinding wheel condition monitoring with boosted minimum distance classifiers. Mech. Syst. Signal Process. 2008, 22, 217–232. [Google Scholar] [CrossRef]

- Wang, Q.; Zhao, W.; Liang, Z.; Wang, X.; Zhou, T.; Wu, Y.; Jiao, L. Investigation of diamond wheel topography in Elliptical Ultrasonic Assisted Grinding (EUAG) of monocrystal sapphire using fractal analysis method. Ultrasonics 2018, 84, 87–95. [Google Scholar] [CrossRef]

- Chen, J.; Cui, C.; Huang, G.; Xu, X. A new strategy for measuring the grain height uniformity of a grinding wheel. Measurement 2020, 151, 107250. [Google Scholar] [CrossRef]

- Yan, L.; Rong, Y.M.; Jiang, F.; Zhou, Z.X. Three-dimension surface characterization of grinding wheel using white light interferometer. Int. J. Adv. Manuf. Technol. 2011, 55, 133–141. [Google Scholar] [CrossRef]

- Darafon, A.; Warkentin, A.; Bauer, R. Characterization of grinding wheel topography using a white chromatic sensor. Int. J. Mach. Tools Manuf. 2013, 70, 22–31. [Google Scholar] [CrossRef]

- Li, C.; Sun, L.; Yang, S.; Zhang, L.; Wu, C.; Jiang, Z. Three-dimensional characterization and modeling of diamond electroplated grinding wheels. Int. J. Mech. Sci. 2018, 144, 553–563. [Google Scholar] [CrossRef]

- Lu, A.; Jin, T.; Liu, Q.; Guo, Z.; Qu, M.; Luo, H.; Han, M. Modeling and prediction of surface topography and surface roughness in dual-axis wheel polishing of optical glass. Int. J. Mach. Tools Manuf. 2019, 137, 13–29. [Google Scholar] [CrossRef]

- Ding, W.; Dai, C.; Yu, T.; Xu, J.; Fu, Y. Grinding performance of textured monolayer CBN wheels: Undeformed chip thickness nonuniformity modeling and ground surface topography prediction. Int. J. Mach. Tools Manuf. 2017, 122, 66–80. [Google Scholar] [CrossRef]

- Kang, M.; Zhang, L.; Tang, W. Study on three-dimensional topography modeling of the grinding wheel with image processing techniques. Int. J. Mech. Sci. 2020, 167, 105241. [Google Scholar] [CrossRef]

- Boaron, A.; Weingaertner, W.L. Dynamic in-process characterization method based on acoustic emission for topographic assessment of conventional grinding wheels. Wear 2018, 406, 218–229. [Google Scholar] [CrossRef]

- Lopes, W.N.; Junior, P.O.C.; Aguiar, P.R.; Alexandre, F.A.; Dotto, F.R.L.; da Silva, P.S.; Bianchi, E.C. An efficient short-time Fourier transform algorithm for grinding wheel condition monitoring through acoustic emission. Int. J. Adv. Manuf. Technol. 2021, 113, 585–603. [Google Scholar] [CrossRef]

- Wan, L.; Zhang, X.; Zhou, Q.; Wen, D.; Ran, X. Acoustic emission identification of wheel wear states in engineering ceramic grinding based on parameter-adaptive VMD. Ceram. Int. 2023, 49, 13618–13630. [Google Scholar] [CrossRef]

- DuyTrinh, N.; Shaohui, Y.; Nhat Tan, N.; Son, P.X.; Duc, L.A. A new method for online monitoring when grinding Ti-6Al-4V alloy. Mater. Manuf. Process. 2019, 34, 39–53. [Google Scholar] [CrossRef]

- Ren, X.; Chai, Z.; Xu, J.; Zhang, X.; He, Y.; Chen, H.; Chen, X. A new method to achieve dynamic heat input monitoring in robotic belt grinding of Inconel 718. J. Manuf. Process. 2020, 57, 575–588. [Google Scholar] [CrossRef]

- Caraguay, S.J.; Boaron, A.; Weingaertner, W.L.; Bordin, F.; Xavier, F. Wear assessment of microcrystalline and electrofused aluminum oxide grinding wheels by multi-sensor monitoring technique. J. Manuf. Process. 2022, 80, 141–151. [Google Scholar] [CrossRef]

- Nakai, M.E.; Aguiar, P.R.; Guillardi, H., Jr.; Bianchi, E.C.; Spatti, D.H.; D’Addona, D.M. Evaluation of neural models applied to the estimation of tool wear in the grinding of advanced ceramics. Expert Syst. Appl. 2015, 42, 7026–7035. [Google Scholar] [CrossRef]

- Mahata, S.; Shakya, P.; Babu, N.R. A robust condition monitoring methodology for grinding wheel wear identification using Hilbert Huang transform. Precis. Eng. 2021, 70, 77–91. [Google Scholar] [CrossRef]

- Sinha, M.K.; Setti, D.; Ghosh, S.; Rao, P.V. An investigation on surface burn during grinding of Inconel 718. J. Manuf. Process. 2016, 21, 124–133. [Google Scholar] [CrossRef]

- Yin, C.; Wang, Y.; Lee, H.P.; He, J.; He, Y.; Sun, Y. Robust wheel wear detection for solid carbide grinding under strong noise interference: A new approach based on improved ensemble noise-reconstructed empirical mode decomposition. Wear 2021, 486, 204112. [Google Scholar] [CrossRef]

- Korotkov, A.; Korotkov, V. Increase of grinding wheel durability. In IOP Conference Series: Materials Science and Engineering; IPO Publishing: Bristol, UK, 2020; Volume 709, p. 022020. [Google Scholar]

- González, D.; Alvarez, J.; Sánchez, J.A.; Godino, L.; Pombo, I. Deep learning-based feature extraction of acoustic emission signals for monitoring wear of grinding wheels. Sensors 2022, 22, 6911. [Google Scholar] [CrossRef]

- Liao, T.W.; Ting, C.F.; Qu, J.; Blau, P.J. A wavelet-based methodology for grinding wheel condition monitoring. Int. J. Mach. Tools Manuf. 2007, 47, 580–592. [Google Scholar] [CrossRef]

- Shen, C.H. Acoustic emission based grinding wheel wear monitoring: Signal processing and feature extraction. Appl. Acoust. 2022, 196, 108863. [Google Scholar] [CrossRef]

- Zhang, B.; Katinas, C.; Shin, Y.C. Robust wheel wear monitoring system for cylindrical traverse grinding. IEEE/ASME Trans. Mechatron. 2020, 25, 2220–2229. [Google Scholar] [CrossRef]

- Wan, L.; Chen, Z.; Zhang, X.; Wen, D.; Ran, X. A multi-sensor monitoring methodology for grinding wheel wear evaluation based on INFO-SVM. Mech. Syst. Signal Process. 2024, 208, 111003. [Google Scholar] [CrossRef]

- Guo, W.; Li, B.; Zhou, Q. An intelligent monitoring system of grinding wheel wear based on two-stage feature selection and Long Short-Term Memory network. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2019, 233, 2436–2446. [Google Scholar] [CrossRef]

- Xu, K.; Feng, D. A method for identifying the wear state of grinding wheels based on VMD denoising and AO-CNN-LSTM. Appl. Sci. 2024, 14, 3554. [Google Scholar] [CrossRef]

- Si, Z.; Si, S.; Mu, D. Precision forecasting of grinding wheel wear: A TransBiGRU model for advanced industrial predictive maintenance. Measurement 2024, 234, 114859. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008. [Google Scholar]

- Xu, X.; Tao, Z.; Ming, W.; An, Q.; Chen, M. Intelligent monitoring and diagnostics using a novel integrated model based on deep learning and multi-sensor feature fusion. Measurement 2020, 165, 108086. [Google Scholar] [CrossRef]

- Choromanski, K.; Likhosherstov, V.; Dohan, D.; Song, X.; Gane, A.; Sarlos, T.; Hawkins, P.; Davis, J.; Mohiuddin, A.; Kaiser, L.; et al. Rethinking attention with performers. arXiv 2020, arXiv:2009.14794. [Google Scholar]

- ISO 6106:2013; Medium Grain Size for Grinding Wheels. International Organization for Standardization: Geneva, Switzerland, 2013.

- Oberg, E.; Jones, F.D.; Horton, H.L.; Ryffel, H.H. Machinery’s Handbook; Coarse Grinding of Carbon Steel Recommendations; Industrial Press: New York, USA, 2010. [Google Scholar]

- DIN 12413; Grinding Wheel Safety Standards. Deutsches Institut für Normung: Berlin, Germany, 2017.

- ISO 16089; Safety Requirements for Grinding Machines. International Organization for Standardization: Geneva, Switzerland, 2015.

- Dong, M.; Wang, N. Adaptive network-based fuzzy inference system with leave-one-out cross-validation approach for prediction of surface roughness. Appl. Math. Modell. 2011, 35, 1024–1035. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).