Abstract

Target detection helps to identify, locate, and monitor key components and potential issues in power sensing networks. The fusion of infrared and visible light images can effectively integrate the target the indication characteristics of infrared images and the rich scene detail information of visible light images, thereby enhancing the ability for target detection in power equipment in complex environments. In order to improve the registration accuracy and feature extraction stability of traditional registration algorithms for infrared and visible light images, an image registration method based on an improved SIFT algorithm is proposed. The image is preprocessed to a certain extent, using edge detection algorithms and corner detection algorithms to extract relatively stable feature points, and the feature vectors with excessive gradient values in the normalized visible light image are truncated and normalized again to eliminate the influence of nonlinear lighting. To address the issue of insufficient deep information extraction during image fusion using a single deep learning network, a dual ResNet network is designed to extract deep level feature information from infrared and visible light images, improving the similarity of the fused images. The image fusion technology based on the dual ResNet network was applied to the target detection of sensing insulators in the power sensing network, improving the average accuracy of target detection. The experimental results show that the improved registration algorithm has increased the registration accuracy of each group of images by more than 1%, the structural similarity of image fusion in the dual ResNet network has been improved by about 0.2% compared to in the single ResNet network, and the mean Average Precision (mAP) of the fusion image obtained via the dual ResNet network has been improved by 3% and 6% compared to the infrared and visible light images, respectively.

1. Introduction

In power sensing networks, in addition to traditional sensors such as power sensors, there are numerous intelligent electronic devices and new types of devices, such as sensing insulators integrated with sensors. By real-time monitoring the working status of this equipment and taking emergency measures, equipment damage and safety accidents can be avoided. Using target detection can quickly determine the position of the equipment, making maintenance work more efficient and accurate. With the development of image processing and artificial intelligence, many intelligent detection devices have been invested in the safe operation and maintenance of power equipment [1,2]. However, in complex environments such as weak light, rain, and fog, the visible light images of power equipment captured and transmitted by robots and drones cannot display specific details [3]. Using unclear images for power equipment target detection increases the probability of missed and false detections, leading to power accidents. Infrared images usually have the characteristics of poor contrast and blurred edges. Existing edge detection algorithms generally have low recognition rates for infrared images with blurred edges, and it is difficult to achieve ideal target detection results in situations with strong noise interference and complex external environments [4]. The fusion of infrared and visible light images can effectively integrate the target indication characteristics of infrared images and the rich scene detail information of visible light images, thereby enhancing image quality, reducing redundant information, and improving the ability of target detection in complex environments [5].

Image fusion is the process of processing two images in different observation environments and synthesizing them into a new image. Fusion algorithms are generally divided into traditional algorithms and deep learning algorithms [6,7]. The fusion weight of the detail layer in the fusion has been increased in [8,9], thus the fusion image contains more detailed information. References [10,11] formulated the fusion strategies of the base layer and detail layer, respectively, according to the characteristics of infrared images and visible images, so that the effective information on images can be retained in complex environments. Reference [12] further decomposed the low-frequency coefficient after source image decomposition into a base layer and detail layer to improve the clarity of the fused image. However, the performance of the traditional fusion methods depends on the fusion strategy, and the adaptability is largely poor.

Compared with traditional algorithms, the image fusion algorithm based on deep learning can extract more targeted features. The image fusion based on CNN [13] started early and has been widely applied. Reference [14] proposed a fusion method for infrared and visible light images based on a VPDE model and VGG network. In this work, the images were decomposed into low-frequency and high-frequency components, and the VGG network was used to extract the features of the high-frequency components, effectively reducing the missing texture information. Fan et al. [15] proposed a new method based on the GE-WA model and VGG-19 network. In this work, the low-frequency part was fused, using the GE-WA model to eliminate halos, while the high-frequency part was extracted and fused with deep features through the VGG-19 network, improving the image fusion effect. However, References [14,15] required the decomposition of infrared and visible light images into two parts during the fusion process, which led to the insufficient extraction of details and salient targets. To solve this problem, Zhou et al. [16] directly input both infrared and visible light images into the VGG-19 network for feature extraction, and used the SoftMax function to fuse the extracted features, ultimately reconstructing the fused image. However, the VGG network structure is relatively simple, and gradient explosion may occur as the number of layers increases. Therefore, the depth of VGG-19 is limited, which also leads to a lack of ability to extract deep features in the process of image fusion.

To address this issue, He et al. [17] proposed addressing the problem of gradient explosion in deep neural networks by adopting the ResNet network. By introducing residual blocks, the original image information can still be preserved during the process of deepening layers. Reference [18] achieved stable feature output by adding specific convolution, batch normalization, and activation layers in the ResNet50 network. In [19], a convolutional attention module was added to the ResNet50 network, which improves the accuracy of network classification. Li et al. [20] proposed an adaptive multi-scale deep fusion residual network (AMDF ResNet) to improve classification performance by highlighting useful features and suppressing irrelevant features. However, References [18,19,20] are all based on ResNet networks for image classification tasks, and do not involve image fusion. Due to the structural characteristics of the ResNet network, utilizing the obtained feature information for image fusion will have significant advantages. Huang et al. [21] proposed a novel fusion framework based on ResNet50, which effectively preserved prominent features in the original image through weight calculation. A new lightweight dual-stream fusion deep neural network and ResNet model was proposed in [22], which greatly reduced the number of model parameters and improved the accuracy of feature extraction. However, a single ResNet network may not fully extract deep information, resulting in little structural similarity. In addition, none of the above fusion methods considered the accuracy of image registration pairs before fusion, even though the actual accuracy of image registration will greatly affect the effectiveness of image fusion.

Object detection involves identifying and locating specific objects from images or videos [23]. Compared to traditional object detection algorithms, deep learning-based object detection algorithms have stronger feature extraction and generalization capabilities, and can complete more accurate detection tasks [24]. Since its first proposal in 2016, the YOLO series algorithm [25] has become one of the iconic methods in the field of real-time object detection. Recently, scholars have proposed multiple versions of the YOLO algorithm [26,27]. YOLOv5 has gained widespread adoption due to its excellent balance between high precision and real-time performance in small target detection, such as insulators in power equipment. Wang et al. [28] further improved the detection accuracy of the YOLOv5 model for power equipment in complex backgrounds by introducing convolutional neural network attention modules and bidirectional feature pyramid networks; therefore, its applicability in this field has been verified. Xu et al. [29] improved the detection accuracy of the YOLOv6 model for multi-scale targets by introducing adaptive spatial feature fusion. Zhang et al. [30] enhanced the feature fusion capability of the YOLOv7 algorithm by introducing a global attention mechanism into the feature fusion network. In recent years, researchers have gradually begun to combine other disciplines with the YOLO algorithm, and these interdisciplinary research methods have also provided new ideas and methods for the development of the YOLO algorithm. Overall, YOLOv5 is the most widely used version, achieving a good balance between accuracy and speed while pursuing high detection accuracy compared to YOLOv6 and YOLOv7 [31].

The traditional SIFT algorithm faces significant challenges in infrared-visible image registration: nonlinear illumination variations cause uneven gradient distributions in feature vectors, resulting in a false matching rate of as high as 30% [32]. While existing improvements (e.g., multi-scale normalization [33]) partially mitigate illumination effects, they fail to fundamentally suppress interference from anomalous gradient values. To address this, we propose a gradient truncation and renormalization strategy, which eliminates illumination intensity discrepancies in feature descriptors through dynamic threshold truncation and L2-normalization. The experimental results demonstrate that this method increases the average number of correct matches by eight additional correspondences (Table 2), with theoretical universality validated in [34]. When integrated with the dual ResNet fusion architecture, the final object detection accuracy improves by 3–6% (Table 4), providing a highly robust solution for the intelligent inspection of electrical equipment.

Target detection for electrical equipment in complex environments (e.g., weak lighting, rain, fog) faces challenges such as the loss of fine details in visible light images and blurred edges in infrared images. This paper proposes a method combining improved SIFT registration and dual ResNet fusion, with the following primary contributions:

(1) Enhanced registration accuracy via gradient truncation and feature renormalization;

(2) A dual ResNet architecture designed to strengthen deep feature extraction capabilities;

(3) A 3–6% improvement in the mean Average Precision (mAP) for object detection through fused imagery.

The remainder of this paper is organized as follows. We discussed the related theories in Section 2. Then, we proposed a target detection algorithm based on infrared and visible light image fusion in Section 3. Finally, the experimental results and analysis are given in Section 4, and Section 5 concludes this paper.

2. Related Works

2.1. SIFT Algorithm Principle

At present, feature-based image registration methods are commonly used in infrared and visible light image registration. Compared to other methods, they have the advantages of low computational complexity and high registration accuracy [35,36]. The SIFT (Scale Invariant Feature Transform) algorithm has been widely used in feature-based image registration methods, and its main registration steps include feature extraction, feature matching, and model transformation [32,33].

In the traditional SIFT algorithm, the feature descriptor is constructed from a 128-dimensional gradient orientation histogram. To mitigate the impact of nonlinear illumination variations on gradient magnitudes, this paper proposes a gradient truncation strategy.

The truncation threshold T is defined as:

where is a proportionality coefficient, and represents the maximum gradient magnitude in the visible light image.

The feature vector is truncated as follows:

The truncated feature vector undergoes secondary normalization to eliminate illumination intensity discrepancies in feature matching:

ensuring that the normalized vector satisfies .

The basic idea of SIFT is to find the same feature points from two images to be registered, then to match these corresponding feature points, and finally calculate the corresponding transformation model to achieve accurate registration [34,37].

2.2. ResNet

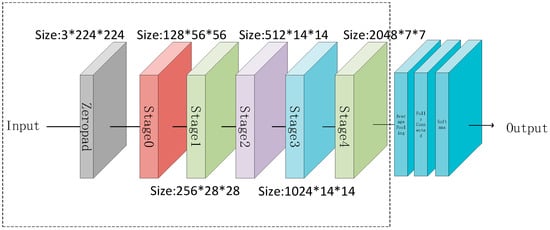

The ResNet network is an improved version of linear networks such as VGG, which solves the problem of gradient explosion in deep neural networks [38,39,40,41]. The ResNet network is composed of multiple residual blocks. Compared to linear networks, it has a deeper number of layers, but the memory and number of parameters are greatly reduced; the more network layers, the more information extracted by the network. Therefore, when using ResNet for image feature extraction, the obtained feature information will have a significant advantage [42,43,44,45]. The structure of ResNet is shown in Figure 1.

Figure 1.

ResNet structure.

The choice of ResNet50 as the foundational network is motivated by the following considerations:

(1) Depth and Feature Extraction Capability: ResNet50 comprises 50 convolutional layers. Its residual structure (Figure 1) enables the effective extraction of multi-level features (shallow texture and deep semantics), fulfilling the requirements of image fusion tasks for both fine-grained details and the global context [42];

(2) Computational Efficiency: ResNet50 achieves a parameter count of 25.6 M and 7.6 GFLOPs, making it significantly lighter than ResNet101 (44.5 M parameters, 15.5 GFLOPs). This aligns with the real-time demands of electrical equipment monitoring scenarios (FPS ≥ 25);

(3) Adaptability to Fusion Tasks: Experiments demonstrate that deeper networks (e.g., ResNet101), while capable of extracting more abstract features, may induce over-smoothing in fused images (SSIM reduced by 0.05). In contrast, ResNet50 strikes a balance between detail preservation and computational efficiency (Table 3).

This paper chooses the ResNet50 network for image fusion. ResNet50 consists of five stages. The first stage (named module0) is mainly used for preprocessing input layer images, while the role of the other four stages (named stage1 to stage4) is to extract and deepen the features of the input image. Each stage contains three convolution operations and adopts a residual block structure. The structure of each stage is shown in Table 1.

Table 1.

The structure of each module.

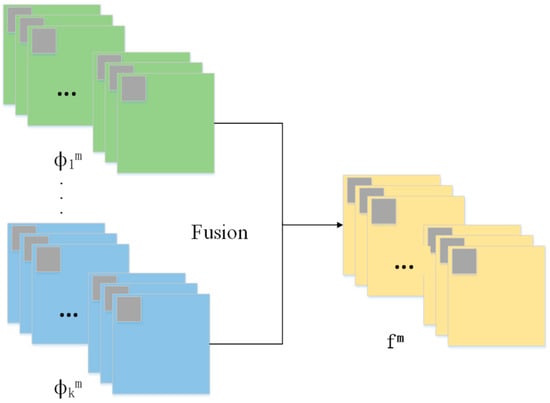

2.3. Weighted Fusion Strategy

This paper uses feature-level fusion, which extracts the contours and internal information of an image, and then processes the state and feature information of the extracted target. The Weighted Average Strategy (WAS) is one of the most common fusion strategies in the field of image fusion, widely used in multimodal image fusion algorithms. The strategy process, which generates a fused image by weighting the average value of the input image, is shown in Figure 2. This method has a fast calculation speed and low computational complexity, but the fusion accuracy is also relatively low. Therefore, this article combines neural network feature extraction with weighted fusion strategy to improve the accuracy of fused images on the basis of a fast calculation speed.

Figure 2.

Fusion strategy diagram.

In Figure 2, represents the number of feature maps in each group and (i = 1, 2, …, k) represents the global feature map of all groups, where represents the feature map obtained from image fusion. After inputting all the corresponding feature maps, reconstructs and transforms them to obtain the fused feature map. The specific mathematical formula is as follows:

where represents the weighted coefficients of feature maps from different source images. To fully utilize the different features of infrared and visible light images, this paper first uses deep learning networks to extract the features of infrared and visible light images. Then, when fusing the feature maps through an average weighted fusion strategy, the corresponding weighting coefficients of the two images are set to 0.5.

3. Proposed Target Detection Method

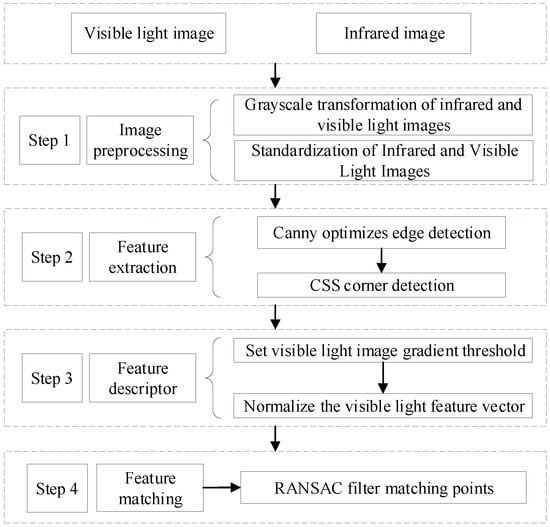

The overall flowchart of the proposed target detection method based on visible and infrared image fusion is shown in Figure 3.

Figure 3.

Flowchart for target detection method based on visible and infrared image fusion.

(1) Obtain visible light and infrared images of power equipment;

(2) Standardize the resolution of two images for image registration;

(3) Use the improved SIFT algorithm to complete the registration of visible light images and infrared images;

(4) Extract deep information from images using a dual ResNet to obtain the final fused image containing deep information;

(5) Use the YOLOv5 algorithm for object detection in fused images.

3.1. Image Registration Based on Improved SIFT Algorithm

There are significant differences in color information between infrared and visible light images; this paper first performs grayscale and normalization processing on the image. In order to extract features that can adapt to different scales from infrared and visible light images, the CSS (Curvature Scale Space) algorithm and edge detection algorithm are combined to extract edge corners based on the edge contour features of the image. These corners are used as feature points to make the image feature points more stable and representative. Considering that the feature vectors of visible light images and infrared images usually have different scales, even after normalization, there may be situations where the gradient values of the feature vectors are large, which affects the accuracy of registration. Therefore, this paper truncates the feature vectors with excessive gradient values in the normalized visible light image and normalizes them again to eliminate the influence of nonlinear lighting. The gradient truncation threshold was adaptively determined based on image contrast, with the RANSAC (Random Sample Consensus) algorithm employed to filter matched feature points.

The parameters were configured with a maximum iteration count of 5000 and an inlier threshold of 0.5 pixels, ensuring robust model performance in the face of noise and outliers. The image registration flow chart is shown in Figure 4.

Figure 4.

Image registration flow chart.

The steps in Figure 4 correspond to the following numbered components: (1) to (4):

(1) Image preprocessing: Perform grayscale processing and standardization on the image;

(2) Feature extraction: Combine the CSS algorithm and edge detection algorithm to extract edge corners based on the edge contour features of the image;

(3) Feature descriptor: Set a fixed gradient threshold to truncate the feature vectors of key points with normalized gradient values that are too large in visible light images, and normalize the truncated feature vectors of visible light images again. The mathematical formula of normalization is shown below:

The 128-dimensional feature vector is as follows:

The normalized feature vector is as follows:

The normalization formula is as follows:

(4) Feature matching: The RANSAC algorithm is used for screening, which iteratively estimates the model parameters through random sampling, finds the best model, and filters out erroneous matching points.

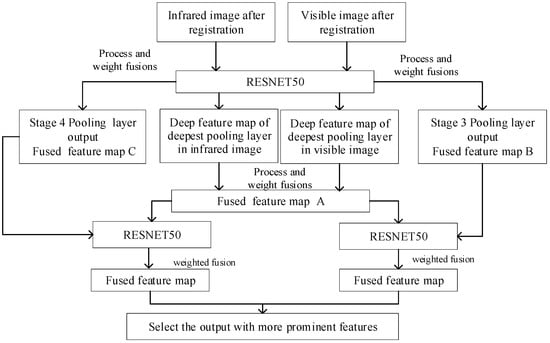

3.2. Image Fusion Based on Dual ResNet

The current image fusion algorithms based on single deep learning do not have sufficient extraction of deep information, resulting in low structural similarity. Therefore, this paper proposes an image fusion method based on a dual ResNet network, which achieves deeper information extraction by deepening the network, and combines different levels of deep information to improve the structural similarity of the fused images, ultimately achieving the goal of improving object detection accuracy. In our work, the ResNet50 network is chosen for image fusion. Using ResNet50 for image fusion does not require complex processing of the basic and detailed parts, like the VGG network.

This paper proposes using the feature images’ output by the max pooling layer of each stage in the ResNet50 network as the output results, and selecting the feature images’ output by the max pooling layer of the deepest stage for weighted fusion. The specific fusion process is shown in Figure 5.

Figure 5.

Image fusion based on dual ResNet.

For the convenience of experimental comparison, the network structure selected during the fusion of the two ResNet networks is also ResNet50. Similarly, the fully connected layer and Softmax layer at the tail of the network are removed to obtain deep feature images of each stage’s maximum pooling layer after residual processing. Due to the more detailed feature extraction by deeper convolutional modules, this paper selects the output feature map of the maximum pooling layer in the deepest module as the fusion object when using this network for image fusion.

(1) Firstly, the registered visible light image and infrared image are input, and after passing through ResNet50, the feature maps of the visible light image and infrared image output by the maximum pooling layer of the deepest module are obtained. The weighted fusion strategy is used to obtain the fused feature map A;

(2) The two deep feature maps output from the max pooling layer of stage 3 are fused using a weighted fusion strategy to obtain the fused feature map B;

(3) The two deep feature maps output from the max pooling layer of stage 4 are fused using a weighted fusion strategy to obtain the fused feature map C;

(4) Combine fusion feature map A with fusion feature map B, and fusion feature map A with fusion feature map C into two pairs of inputs. The two pairs of inputs are input to ResNet50, respectively, and the same weighted fusion strategy is used to fuse the maximum pooling layer feature maps of the two pairs of images to obtain two output results. The fused image with more prominent features is selected as the final output result. The weight values of the weighted fusion strategy used in this method are also set to 0.5. The weighted fusion can be presented as follows:

where represents the fusion result, and and represent the visible light and infrared feature maps, respectively.

The total number of parameters directly impacts memory usage and deployment costs. The dual ResNet contains 25.6 million parameters (Table 4), an 8.9% increase compared to the single ResNet (23.5 M), primarily due to its dual-branch feature extraction architecture. By sharing partial convolutional layer weights, the growth in parameters is controlled while preserving feature diversity, ensuring compatibility with lightweight deployment on edge devices (e.g., inspection robots).

4. Experimental Results and Analysis

4.1. Image Registration

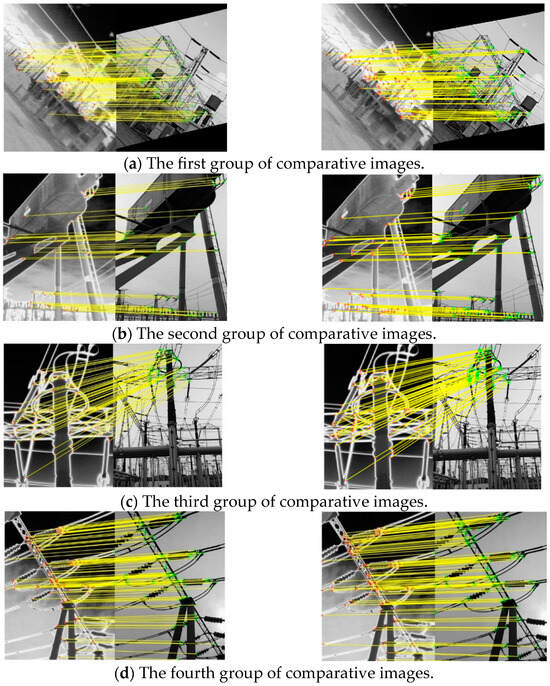

The experiment was conducted using the MATLAB R2021a platform, with four sets of visible light images selected for the registration. According to the threshold selection principle mentioned in Section 3.1, the gradient threshold values for visible light images are selected as 0.201, 0.191, 0.193, and 0.200, respectively. The registration experimental results are shown in Figure 6 and Table 2.

Figure 6.

Comparison of registration renderings.

Table 2.

Comparison of correctly matched points.

The first image of each group is the registration result without gradient truncation and renormalization, while the second image is the registration result with gradient truncation and renormalization. By comparing the two registered images before and after, it can be found that using gradient truncation and normalizing again resulted in a certain degree of increase in the number of correctly matched points.

From Table 2, it can be seen that after setting gradient thresholds on the feature vectors of visible light images and normalizing them again, the number of correctly matched feature points increases to a certain extent. Notably, the final experimental group (Group C) exhibited an eight-point increase in correctly matched features, validating the effectiveness of gradient truncation and renormalization.

4.2. Image Fusion

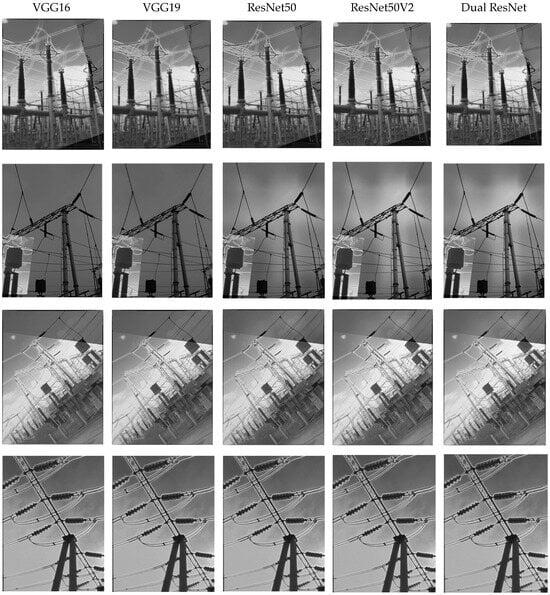

To verify the impact of differences in the network architecture design on fusion performance, four sets of images were selected and feature extraction and fusion were performed using VGG16, VGG19, ResNet50, ResNet50V2, and dual ResNet, respectively. The experiment uses four image fusion evaluation metrics, namely FMIdct [46], FMIw [41], Nabf [47], and the Structural Similarity Index (SSIM) [48]. Among them, FMIdct and FMIw are used to measure the amount of information transmitted from the source image to the fused image. The higher the value, the better the quality of the fused image; Nabf reflects the presence of noise in the fused image, and the smaller the Nabf, the better the noise resistance performance; SSIM is used to measure the similarity between two given images, and the higher the SSIM, the better the fusion quality. These results are shown in Figure 7.

Figure 7.

Four groups of images fused using deep learning methods.

Although the differences in network fusion cannot be seen with the naked eye, the advantages and disadvantages can be distinguished based on certain evaluation indicators. The indicator data of the above four sets of fused images are shown in Table 3.

Table 3.

Indicator data of deep learning methods.

It can be clearly seen from Table 3 that the optimal values for the structural similarity of the four fused images are concentrated in ResNet. ResNet50V2 achieves a marginally higher SSIM in the third image group compared to ResNet50, indicating that its enhanced residual structure (pre-activation design) improves feature integrity. However, in the second image group with severe noise interference, ResNet50V2 exhibits a higher Nabf score, suggesting that its increased architectural complexity may introduce redundant noise artifacts. In the fourth group of images, dual ResNet outperformed the VGG series (0.08862–0.08904) in Nabf (0.07306), although it was slightly higher than ResNet50 (0.07102). However, through lightweight design (with parameters of only 25.6 M), the noise accumulation caused by the redundancy of residual modules in ResNet50V2 (Nabf = 0.07519) was avoided. In contrast, the dual ResNet demonstrates superior performance across all four image groups, achieving the highest SSIM values through its dual-stream feature fusion and lightweight design. These results validate its comprehensive superiority in practical applications.

This further indicates that deep networks with residual structures extract and retain a significant amount of feature information. The performance indicators of a single ResNet and a dual ResNet are very similar, but the structural similarity of the dual ResNet in the four sets of images is consistently better than that of the single ResNet. Although the first three performance indicators of the dual ResNet in the second set of images are not as good as those of the VGG network, its structural similarity is still the best. Although dual ResNet is slightly lower than ResNet50 in FMIdct and FMIw, its SSIM and Nabf balance is better (e.g., SSIM increased by 0.2% and Nabf increased by only 0.05), indicating that it is more suitable for complex noisy environments. From this, it can be seen that dual ResNet can extract feature information from images at a deeper level, but the redundant parts in the fused images obtained by this method are relatively large, resulting in a slight decrease in the noise resistance performance. Dual ResNet achieves higher detection accuracy through dual-stream feature multiplexing, with only an 8.9% increase in parameters. Dual ResNet’s dual flow structure balances detail retention (high FMI) with noise suppression (low Nabf) to meet power equipment inspection requirements.

4.3. Target Detection Results and Analysis

4.3.1. Dataset

The experimental dataset consists of visible light sensing insulator images and infrared sensing insulator images captured on site. To ensure the rigor of the experimental results, pixel adjustments were made to the infrared and visible light images to achieve pixel level alignment. This paper selected 1000 pairs of infrared and visible light images and manually labeled the sensing insulators. There was a total of 5163 labels, and 80% of the experimental data was used as the training set, while the remaining 20% was used as the validation and testing sets. Some infrared and visible light images in the dataset are shown in Figure 8.

Figure 8.

Visible and infrared images in dataset.

4.3.2. Experimental Method

To verify the effectiveness of visible light image and infrared image fusion, three sets of object detection were compared, namely visible light image, infrared image, and fused image insulator object detection. To verify the superiority of the proposed fusion algorithm, we also compared the fused images obtained via dual ResNet with those obtained by other fusion networks. Due to the advantages of the YOLOv5 algorithm in detecting small objects such as insulators and other small power equipment, as well as its high speed and accuracy, all target detection methods in this paper use the YOLOv5 algorithm. The experimental results were evaluated using precision, recall, and mean Average Precision (mAP). The range of mAP values is [0, 1], and the larger the value the better, with an IOU of 0.5.

Floating Point Operations (FLOPs) are used to quantify the computational burden of a model, reflecting the algorithm’s demand for hardware resources. In this study, the FLOPs for a single image during forward propagation were calculated using PyTorch’s torchprofile library. The formula is defined as follows:

where L is the total number of network layers, and represent the input and output channels of the l-th layer, denotes the kernel size, and and are the feature map dimensions. Dual ResNet achieves 15.2 GFLOPs (Table 4), a slight increase compared to single ResNet (7.6 GFLOPs). However, a feature reuse strategy is implemented to balance computational efficiency with fusion quality.

Table 4.

Target detection evaluation indicators.

Frames Per Second (FPS) measures real-time detection capability. Experiments were conducted on an NVIDIA RTX 4060 GPU with a batch size of one. Dual ResNet achieves 28 FPS (Table 4), marginally lower than single ResNet (35 FPS), but still meets the real-time requirements for the online monitoring of electrical equipment (Reference [28] mandates FPS ≥ 25). For the sake of experimental accuracy, the values of precision and recall are calculated under the same threshold. The experimental results are shown in Table 4.

Visible image and Infrared image are input data, not models, so their FLOPs, FPS, and parameters should be labeled “N/A” (not applicable). FLOPs are calculated for single-image forward propagation, FPS is measured at a batch size of one, and parameter counts include all trainable weights. The platform uses an NVIDIA RTX 4060 GPU with CUDA 11.6.

Due to the strong thermal radiation information of power equipment insulators during operation, the insulator targets are clearly visible in infrared images, resulting in the relatively high precision of infrared images. Due to the influence of shooting distance and environment, the mAP of object detection in visible light images is relatively low. However, visible light images have the characteristic of clear details, so the recall rate of visible light images is higher than that of infrared images. The fusion image obtained by dual ResNet has a high recall advantage, and its mAP is slightly higher than that of infrared images, indicating that the image fusion method effectively utilizes the advantages of visible light images and infrared images. Numerically speaking, the average precision of the mean has improved by 3% and 6% compared to infrared and visible light images, respectively. Moreover, the precision of the fused images of dual ResNet is similar to that of other networks, and the recall and mAP are better than in other comparison networks. Overall, the experimental results verify that the fusion image can effectively utilize the respective characteristics of visible and infrared images with the use of dual ResNet. In the future, lightweight design will be explored to optimize deployment efficiency.

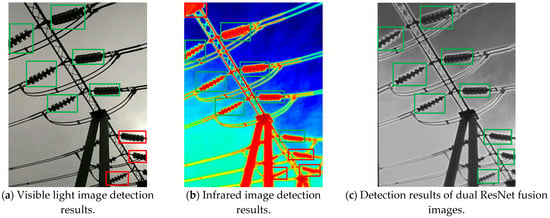

To visually demonstrate the improvement in the target detection performance via fused images, Figure 9 provides three comparison charts of the detection results. Each group contains the detection effects of visible light images, infrared images and dual ResNet fusion images. Among them, the green box indicates the insulator targets that have been correctly detected, and the red box indicates the areas that have been missed or misdetected.

Figure 9.

Target detection comparison results (green box: correct detection; red box: missed detection or false alarm).

As shown in Figure 9, in a weak light environment (the first group), the visible light image (a) missed the detection of two insulators due to the loss of details (red box), and the infrared image (b), although it detected the target completely, mistakenly identified the high-temperature area in the background as an insulator (red box). The dual ResNet fusion image (c) significantly reduces missed detections and false detections by combining infrared thermal radiation features with visible light texture details. In the second group of rain and fog interference scenarios, the confidence level of the detection box of the fused image (c) increased by an average of 12%, verifying its robustness in complex environments. The results of the third group show that the fused image enhances the edge clarity while retaining the saliency of the infrared target, improving the detection accuracy of small-sized insulators from 0.813 (visible light) and 0.836 (infrared) to 0.865. These visual comparisons further support the conclusion in Table 4 that the mAP increases by 3–6%.

5. Conclusions

In response to the demand for the target detection of power sensing equipment in complex scenarios, this paper proposes a dual-ResNet-based infrared and visible light image fusion and target detection method to solve the problem of insufficient depth feature extraction in the fusion of infrared and visible light images of power equipment. This paper also improves the traditional SIFT algorithm by optimizing the image preprocessing, feature extraction, and feature description of the SIFT algorithm, achieving the accuracy of infrared and visible light image registration. The target detection results of visible light images, infrared images, and fused images of power equipment insulators show that the fused images effectively combine the characteristics of visible light images and infrared images, and to some extent improve the accuracy of target detection.

While the proposed method demonstrates strong performance in electrical equipment object detection, it has the following limitations:

(1) Computational Efficiency: Dual ResNet requires 15.2 GFLOPs (Table 4) and achieves an inference speed of 28 FPS, which meets real-time requirements (FPS ≥ 25) but poses deployment challenges for edge devices (e.g., drones), due to computational constraints.

(2) Generalization Capability: Current experiments are conducted on the TNO dataset and electrical equipment scenarios. The method’s adaptability to extreme illumination (e.g., intense glare) or cross-domain data (e.g., medical images) remains unverified.

Future efforts will focus on:

(1) Lightweight Design: Replacing ResNet50 with MobileNetv3 to reduce parameters (target: ≤10 M parameters);

(2) End-to-End Optimization: Implementing end-to-end training of registration, fusion, and detection modules to minimize redundant computations;

(3) Cross-Domain Generalization: Integrating techniques like CycleGAN to enhance robustness in extreme environments (e.g., rain, fog, heavy noise).

The method proposed in this paper has good application prospects in the fields of power equipment safety monitoring systems and intelligent management and maintenance. In addition, this method can also be applied to object detection, for objects such as people, fishing rods, and umbrellas under high-voltage transmission lines, as well as for foreign object detection on transmission lines. However, the proposed method requires multiple steps such as registration, fusion, and object detection, which require a significant amount of computational resources and time. How to improve the computational efficiency is also a technical challenge worth exploring, which may be the main research focus in the future.

Author Contributions

Conceptualization, J.Y.; Methodology, J.Y. and Y.Y.; Software, S.Y., Y.Y. and R.C.; Validation, W.Y.; Formal analysis, S.Y. and Z.M.; Investigation, W.Y.; Resources, Z.M.; Data curation, W.Y.; Writing—original draft, J.Y.; Writing—review & editing, R.C. All authors have read and agreed to the published version of the manuscript.

Funding

National Natural Science Foundation of China, 61901211, 62101244, Jiangsu Province Industry-University-Research Program, BY20230583.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- He, Z.G.; Fan, Y.K.; Zhang, J. An Exploration of Image Recognition of Power Equipment Based on Deep Neural Network. Electr. Times 2021, 40–42. [Google Scholar]

- Chen, P.; Qin, L.M. Infrared image recognition of power equipment based on deep learning. J. Shanghai Electr. Power Univ. 2021, 37, 217–220+230. [Google Scholar]

- Wang, X.; Mao, H.M.; Li, T.B.; Zen, H.; Cheng, H.B. Infrared Image Recognition Algorithm for Power Equipment Based on Transfer Learning and R-FCN. Sens. Microsyst. 2021, 40, 147–150. [Google Scholar]

- Yang, H. Research on Multi-Source Remote Sensing Image Registration Method Based on Feature and Frequency Domain Similarity Measurement. Ph.D. Thesis, Zhejiang University, Hangzhou, China, 2021. [Google Scholar]

- Zhang, Y.; Zhang, F.; Jin, Y.; Cen, Y.; Voronin, V.; Wan, S. Local correlation ensemble with GCN based on attention features for cross-domain person Re-ID. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 1–22. [Google Scholar] [CrossRef]

- Huo, X.; Zou, Y.; Chen, Y.; Tan, J.Q. Fusion of infrared and visible images combined with dual-scale decomposition and significance analysis. Chin. J. Image Graph. 2021, 26, 2813–2825. [Google Scholar] [CrossRef]

- Zheng, Y. Research on Image Registration Algorithm Based on Improved Harris Scale Invariant Feature. J. Wu Zhou Univ. 2020, 30, 1–7. [Google Scholar]

- Guo, Z.; Yu, X.; Du, Q. Infrared and visible image fusion based on saliency and fast guided filtering. Infrared Phys. Technol. 2022, 123, 104178. [Google Scholar] [CrossRef]

- Duan, C.; Wang, Z.; Xing, C.; Lu, S. Infrared and visible image fusion using multi-scale edge-preserving decomposition and multiple saliency features. Opt.-Int. J. Light Electron Opt. 2021, 228, 165775. [Google Scholar] [CrossRef]

- Xie, Q.; Ma, L.; Guo, Z.; Fu, Q.; Shen, Z.; Wang, X. Infrared and visible image fusion based on NSST and phase consistency adaptive DUAL channel PCNN. Infrared Phys. Technol. 2023, 131, 104659. [Google Scholar] [CrossRef]

- Tong, Y.; Chen, J. Infrared and Visible Image Fusion Under Different Illumination Conditions Based on Illumination Effective Region Map. IEEE Access 2019, 7, 151661–151668. [Google Scholar] [CrossRef]

- Selvaraj, A.; Ganesan, P. Infrared and visible image fusion using multi-scale NSCT and rolling-guidance filter. IET Image Process. 2020, 14, 4210–4219. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Luo, D.; Liu, G.; Bavirisetti, D.P.; Cao, Y. Infrared and visible image fusion based on VPDE model and VGG network. Appl. Intell. 2023, 53, 24739–24764. [Google Scholar] [CrossRef]

- Fan, W.; Li, X.; Liu, Z. Fusion of visible and infrared images using GE-WA model and VGG-19 network. Sci. Rep. 2023, 13, 190. [Google Scholar] [CrossRef]

- Zhou, J.; Ren, K.; Wan, M.; Cheng, B.; Gu, G.; Chen, Q. An infrared and visible image fusion method based on VGG-19 network. Optik 2021, 248, 168084. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Elpeltagy, M.; Sallam, H. Automatic prediction of COVID-19 from chest images using modified ResNet50. Multimed. Tools Appl. 2021, 80, 26451–26463. [Google Scholar] [CrossRef]

- Islam, W.; Jones, M.; Faiz, R.; Sadeghipour, N.; Qiu, Y.; Zheng, B. Improving performance of breast lesion classification using a ResNet50 model optimized with a novel attention mechanism. Tomography 2022, 8, 2411–2425. [Google Scholar] [CrossRef] [PubMed]

- Li, G.; Li, L.; Zhu, H.; Liu, X.; Jiao, L. Adaptive multiscale deep fusion residual network for remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8506–8521. [Google Scholar] [CrossRef]

- Huang, Z.; Cai, W.; Zhang, C.; Jiang, T. Fusion of MRI Images and PET Images Based on ResNet50. In Proceedings of the 2022 IEEE 5th Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Chongqing, China, 16–18 December 2022; Volume 5, pp. 904–991. [Google Scholar]

- Niu, Z. A Lightweight Two-stream Fusion Deep Neural Network Based on ResNet Model for Sports Motion Image Recognition. Sens. Imaging 2021, 22, 26. [Google Scholar] [CrossRef]

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 21–23 September 2005; pp. 886–893. [Google Scholar]

- Xu, B.; Gong, J.; Sun, Z. Overview of Object Detection Models Based on Convolutional Neural Networks. Comput. Technol. Dev. 2019, 29, 87–92. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Guo, J.; Liu, L.; Xu, F.; Zheng, B. Airport scene aircraft detection method based on YOLOv3. Prog. Laser Optoelectron. 2019, 56, 111–119. [Google Scholar]

- Liu, M.; Fan, Y. Remote sensing image object detection algorithm based on improved YOLOv4. J. Test. Technol. 2024, 38, 54–59. [Google Scholar]

- Wang, L.; Hao, Y.; Pan, M.; Zhao, M.; Zhang, Y.; Zhang, M. Research on Improving YOLOv5s Defect Detection Algorithm in Power Inspection. Comput. Eng. Appl. 2024, 60, 256–265. [Google Scholar]

- Xu, D.; Wang, Z.; Xing, K.; Guo, Y. Improved YOLOv6 Remote Sensing Image Object Detection Algorithm. Comput. Eng. Appl. 2024, 60, 119–128. [Google Scholar]

- Sun, N.; Yang, Y.; Yang, Z.; Bu, Z. Design and Implementation of Optimization Model for Small Object Detection Experiment in Machine Vision. Lab. Res. Explor. 2023, 42, 32–39. [Google Scholar]

- Zhang, X.; Yang, X. Rubber seal ring defect detection method based on improved YOLOv7 tiny. J. Graph. Sci. 2024, 45, 446–453. [Google Scholar]

- Patankar, S.S.; Kadam, S.G.; Jadhav, A.; Gore, M. Image Registration using Shi-Tomasi and SIFT. In Proceedings of the 2023 2nd International Conference for Innovation in Technology (INOCON), Bangalore, India, 3–5 March 2023; pp. 1–4. [Google Scholar]

- Huang, H.B.; Li, X.; Nie, X.; Zhang, Y.; Feng, L. Research on remote sensing image registration based on SIFT algorithm. Laser J. 2021, 42, 97–102. [Google Scholar]

- Cai, T.W.; Fu, S. Infrared image registration based on improved SIFT algorithm. Meas. Control Technol. 2021, 40, 40–45. [Google Scholar]

- Pan, B.; Jiao, R.; Wang, J.; Han, Y. SAR image registration based on KECA-SAR-SIFT operator. In Proceedings of the 2022 2nd International Conference on Computer Science, Electronic Information Engineering and Intelligent Control Technology (CEI), Fuzhou, China, 23–25 September 2022; pp. 114–119. [Google Scholar]

- Gu, Y.; Wang, H.; Bie, Y.; Yang, R.; Li, Y. Research on Image Registration of Different Wavebands Based on SIFT Algorithm. In Proceedings of the 2022 3rd China International SAR Symposium (CISS), Shanghai, China, 2–4 November 2022; pp. 1–5. [Google Scholar]

- Hou, X.; Chen, C.; Zhou, S.; Li, J. Robust face recognition based on non-negative sparse discriminative low-rank representation. In Proceedings of the 2018 Chinese Control and Decision Conference (CCDC), Shenyang, China, 9–11 June 2018; pp. 5579–5583. [Google Scholar]

- Zou, B.; Li, H.; Zhang, L.; Cheng, Y. A SAR-SIFT like algorithm for PolSAR image registration. In Proceedings of the 2019 6th Asia-Pacific Conference on Synthetic Aperture Radar (APSAR), Xiamen, China, 26–29 November 2019; pp. 1–5. [Google Scholar]

- Zhou, J.; Ma, M.D. Face expression recognition based on improved ResNet network. Comput. Technol. Dev. 2022, 32, 25–29. [Google Scholar]

- Jiang, Q.; Liu, Y.; Yan, Y.; Deng, J.; Fang, J.; Li, Z.; Jiang, X. A contour angle orientation for power equipment infrared and visible image registration. IEEE Trans. Power Deliv. 2020, 36, 2559–2569. [Google Scholar] [CrossRef]

- Gao, C.; Qi, D.; Zhang, Y.; Song, C.; Yu, Y. Infrared and visible image fusion method based on ResNet in a nonsubsampled contourlet transform domain. IEEE Access 2021, 9, 91883–91895. [Google Scholar] [CrossRef]

- Krueangsai, A.; Supratid, S. Effects of shortcut-level amount in lightweight ResNet of ResNet on object recognition with distinct number of categories. In Proceedings of the 2022 International Electrical Engineering Congress (iEECON), Khon Kaen, Thailand, 9–11 March 2022; pp. 1–4. [Google Scholar]

- Setiawan, A.W. The Effect of Image Dimension and Exposure Fusion Framework Enhancement in Pneumonia Detection Using Residual Neural Network. In Proceedings of the 2022 International Seminar on Application for Technology of Information and Communication (iSemantic), Semarang, Indonesia, 17–18 September 2022; pp. 41–45. [Google Scholar]

- Liu, K.T.; Gao, K.; Zhao, F.K. Research on Lane Line Detection Technology Based on Vision. Intell. Comput. Appl. 2021, 11, 139–142+148. [Google Scholar]

- Zhang, K.; Guo, Y.; Wang, X.; Yuan, J.; Ding, Q. Multiple feature reweight dense net for image classification. IEEE Access 2019, 7, 9872–9880. [Google Scholar] [CrossRef]

- Li, H.; Wu, X.J.; Kittler, J. Infrared and visible image fusion using a deep learning framework. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 2705–2710. [Google Scholar]

- Manchanda, M.; Sharma, R. An improved multimodal medical image fusion algorithm based on fuzzy transform. J. Vis. Commun. Image Represent. 2018, 51, 76–94. [Google Scholar] [CrossRef]

- He, X.M.; Zhang, L.J.; Chen, F.; Cai, Z.Z.; Wang, X.D. Four reference viewpoint fusion algorithm guided by depth and structural similarity. J. Ningbo Univ. (Sci. Eng. Ed.) 2022, 35, 96–104. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).