Measurement of Maize Leaf Phenotypic Parameters Based on 3D Point Cloud

Abstract

1. Introduction

2. Materials and Methods

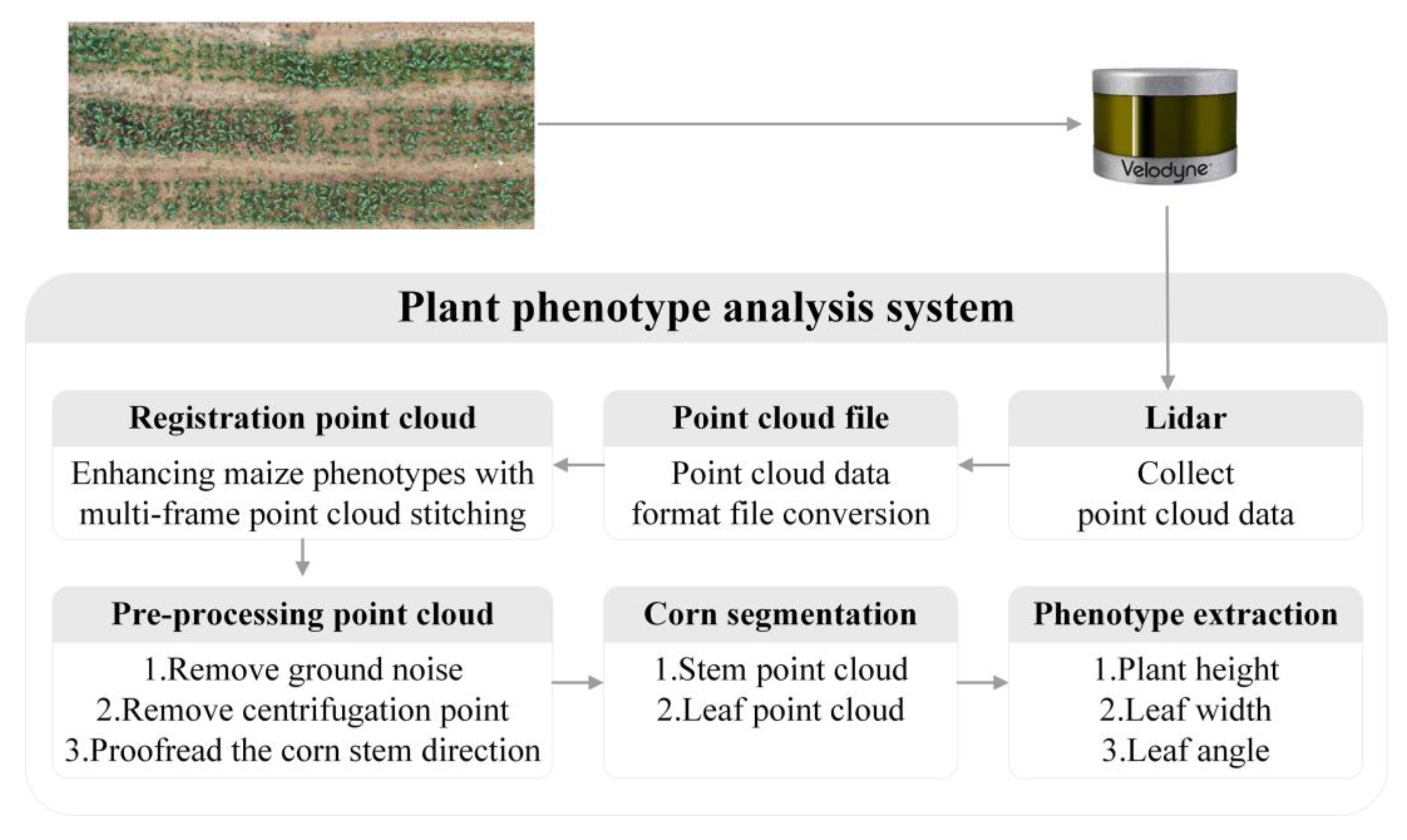

2.1. Architecture of the System

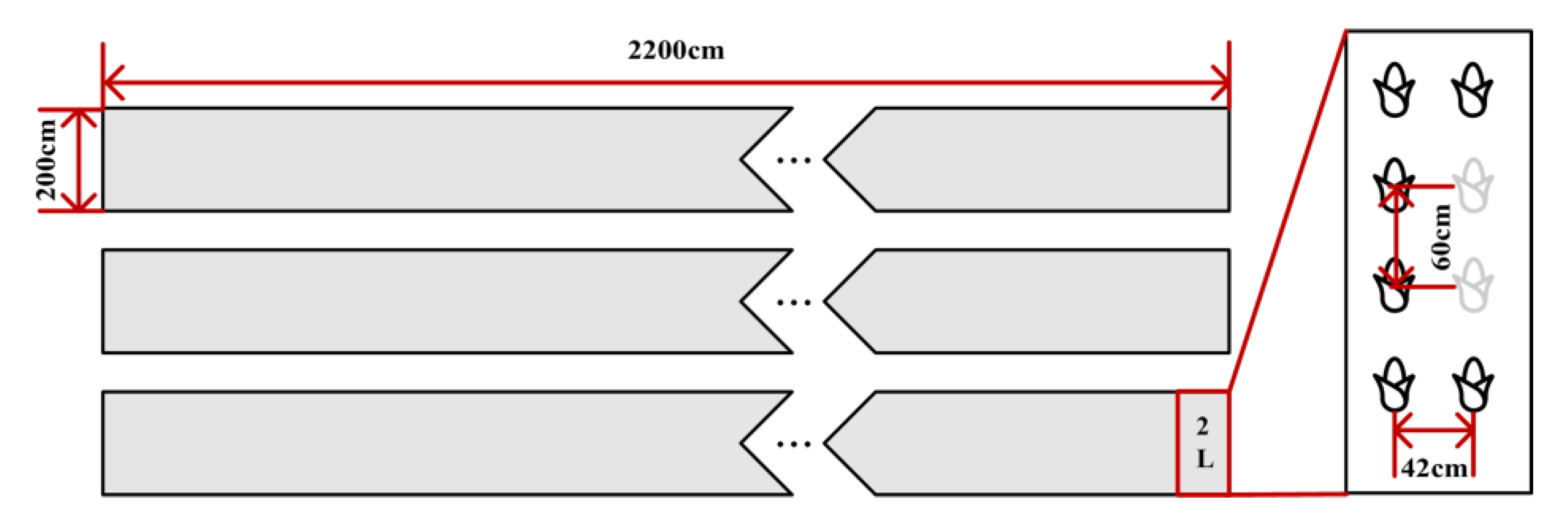

2.2. Data Collection

2.3. Three-Dimensional Point Cloud Data Processing

2.3.1. Data Preprocessing

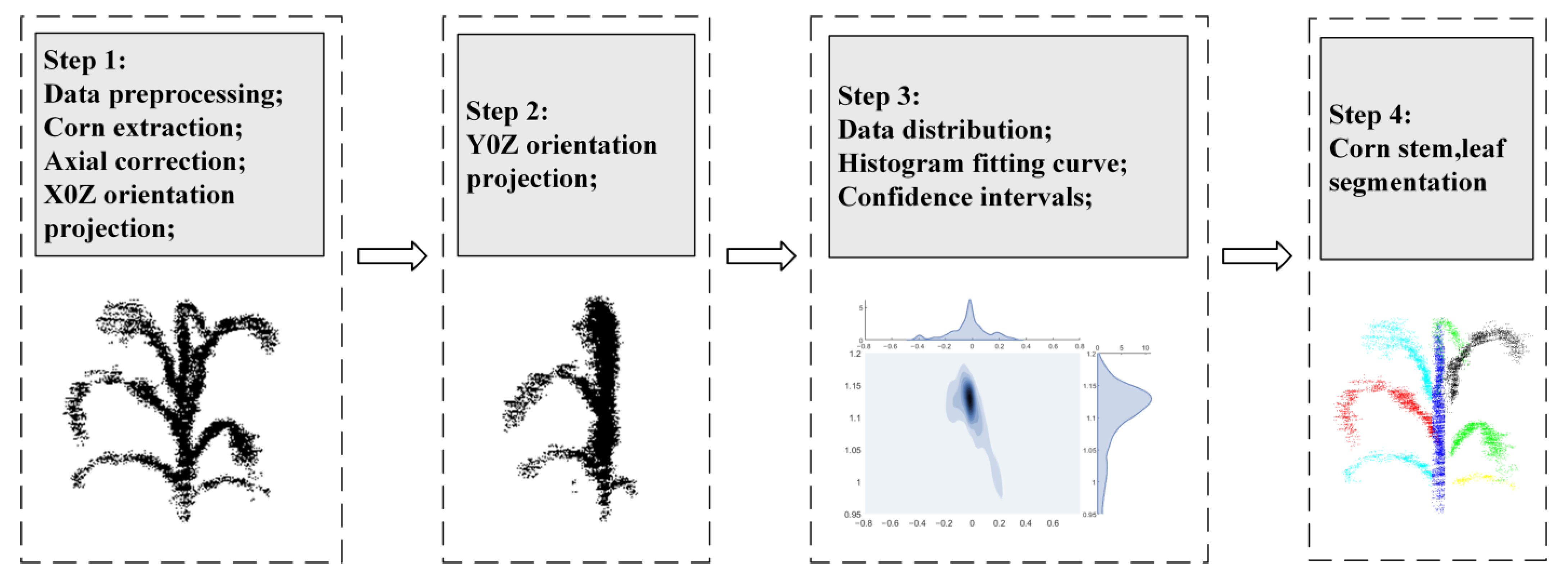

2.3.2. Maize Point Cloud Segmentation

Algorithm 1. Point cloud spatial projection method for stem–leaf segmentation

| Step | Description |
|---|---|
| Input | Point cloud of a single maize plant. |
| Output | Point clouds of the maize stem and of each leaf, rendered in different colors. |
| Step 1 | Project the maize point cloud onto the XOZ and YOZ planes to obtain the point cloud distribution histogram for each plane. |
| Step 2 | Set a confidence interval to obtain the estimated interval of the stem. |
| Step 3 | Compare the point cloud in the stem region with the original point cloud to obtain the leaf point cloud. |
| Step 4 | Set the cluster tolerance, minimum cluster size, and maximum cluster size to obtain the point clouds of the individual leaves. |
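The four steps of Algorithm 1 can be illustrated with a minimal, standard-library Python sketch. It is not the authors' implementation: the function names (`stem_interval`, `split_stem_leaves`, `euclidean_clusters`, `synthetic_plant`), the bin width, the z-value of 1.96, and the choice to estimate the interval from points near the histogram peak are all assumptions made for illustration. The brute-force clustering stands in for a KD-tree-based Euclidean cluster extraction, which would be preferable for real scans.

```python
import math
from collections import Counter, deque
from statistics import mean, pstdev


def stem_interval(values, bin_width=0.02, z=1.96):
    """Steps 1-2: histogram one projected coordinate, then build an interval
    (mean +/- z * std) from the points in and next to the peak bin.
    The peak-neighbourhood rule is an assumption of this sketch."""
    hist = Counter(math.floor(v / bin_width) for v in values)
    peak = max(hist, key=hist.get)
    near = [v for v in values if abs(math.floor(v / bin_width) - peak) <= 1]
    mu, sigma = mean(near), pstdev(near)
    return mu - z * sigma, mu + z * sigma


def split_stem_leaves(points, bin_width=0.02, z=1.96):
    """Step 3: points inside both stem intervals are stem, the rest are leaves."""
    x_lo, x_hi = stem_interval([p[0] for p in points], bin_width, z)
    y_lo, y_hi = stem_interval([p[1] for p in points], bin_width, z)
    stem, leaves = [], []
    for p in points:
        inside = x_lo <= p[0] <= x_hi and y_lo <= p[1] <= y_hi
        (stem if inside else leaves).append(p)
    return stem, leaves


def euclidean_clusters(points, tolerance, min_size, max_size):
    """Step 4: region growing. Points closer than `tolerance` join the same
    cluster; clusters outside [min_size, max_size] are discarded."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue, members = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            near = [j for j in unvisited
                    if math.dist(points[i], points[j]) <= tolerance]
            for j in near:
                unvisited.remove(j)
            queue.extend(near)
            members.extend(near)
        if min_size <= len(members) <= max_size:
            clusters.append([points[k] for k in members])
    return clusters


def synthetic_plant():
    """Hypothetical test data: a thin vertical stem and two straight leaves."""
    stem = [(0.005 * ((i % 3) - 1), 0.0025 * ((i % 5) - 2), 0.02 * i)
            for i in range(50)]
    leaf_a = [(0.1 + 0.015 * i, 0.05, 0.5) for i in range(20)]
    leaf_b = [(-0.1 - 0.015 * i, -0.05, 0.8) for i in range(20)]
    return stem + leaf_a + leaf_b


if __name__ == "__main__":
    stem, leaves = split_stem_leaves(synthetic_plant())
    clusters = euclidean_clusters(leaves, tolerance=0.03, min_size=5, max_size=1000)
    print(len(stem), "stem points;", len(clusters), "leaf clusters")
```

In this sketch the minimum cluster size filters out stray points that fall just outside the stem interval, while the cluster tolerance must exceed the point spacing within a leaf but stay below the gap between neighbouring leaves.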

2.4. Calculation of PH, LW, and LA of Maize

2.4.1. Calculation of the PH

2.4.2. Calculation of the LW

2.4.3. Calculation of the LA

2.5. Evaluation Methodology for LW

2.6. Evaluating Metrics

3. Results and Analysis

3.1. Results of Stem–Leaf Segmentation

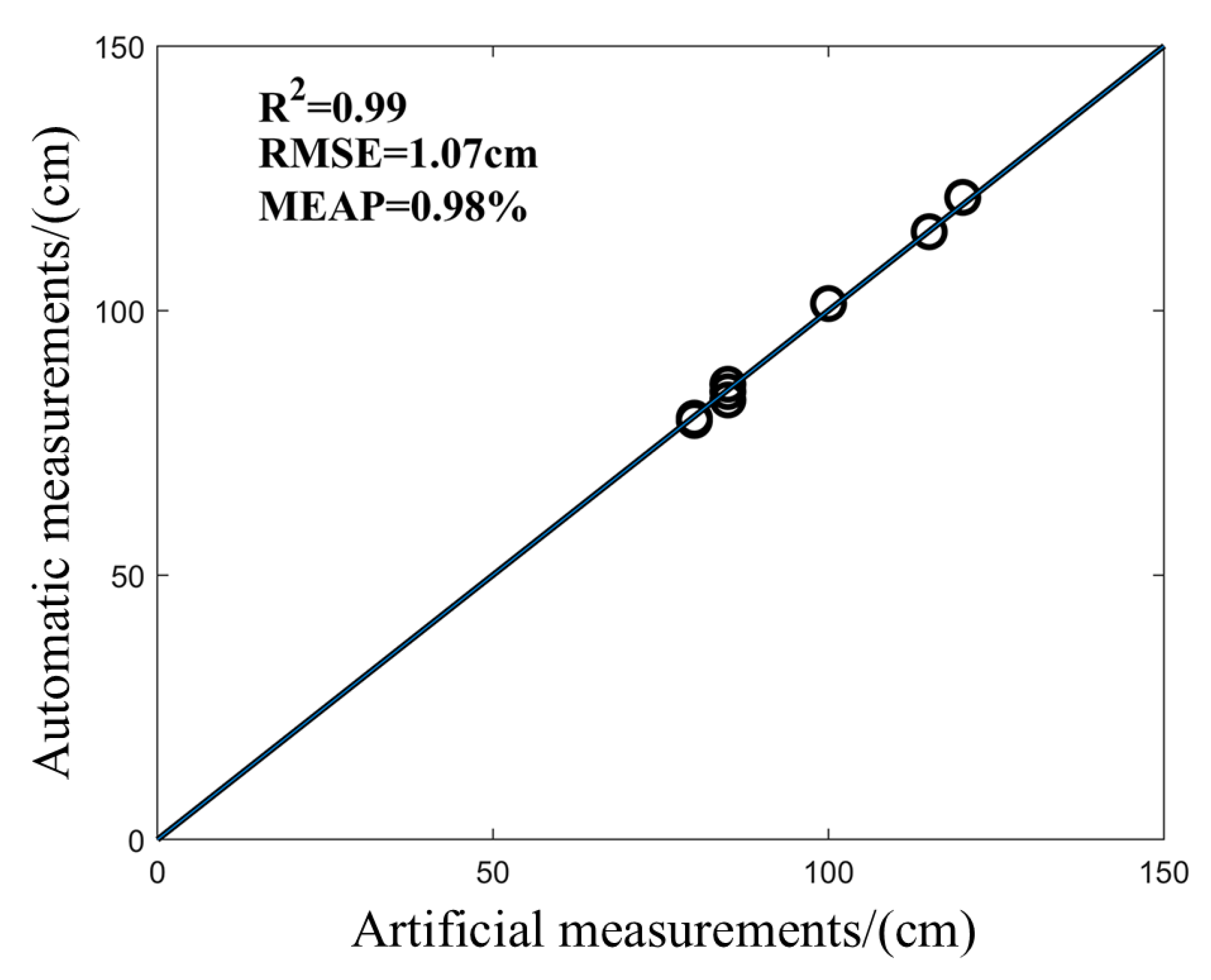

3.2. Evaluation Performance of the PH Value

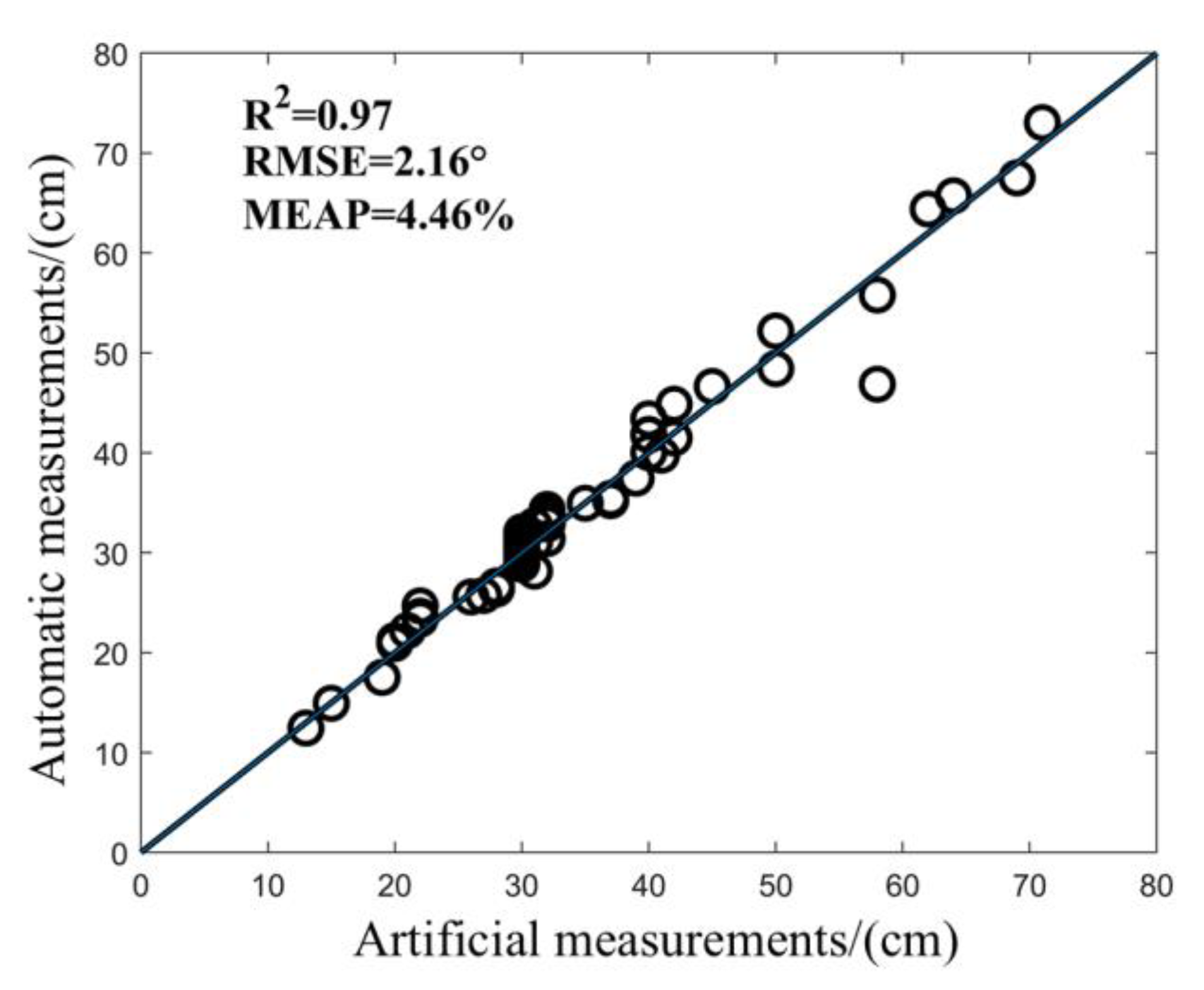

3.3. Evaluation of the LW Value

3.4. Evaluation of the LA Value

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Specifications | Parameters |
|---|---|
| Number of laser lines | 16 |
| Measurement range | 0.5–100 m |
| Measurement accuracy | ±3 cm |
| Horizontal field of view | 360° |
| Vertical field of view | 30° (−15° to +15°) |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Su, Y.; Li, R.; Wang, M.; Li, C.; Ou, M.; Liu, S.; Hou, W.; Wang, Y.; Liu, L. Measurement of Maize Leaf Phenotypic Parameters Based on 3D Point Cloud. Sensors 2025, 25, 2854. https://doi.org/10.3390/s25092854