Multi-Scale Fusion Underwater Image Enhancement Based on HSV Color Space Equalization

Abstract

1. Introduction

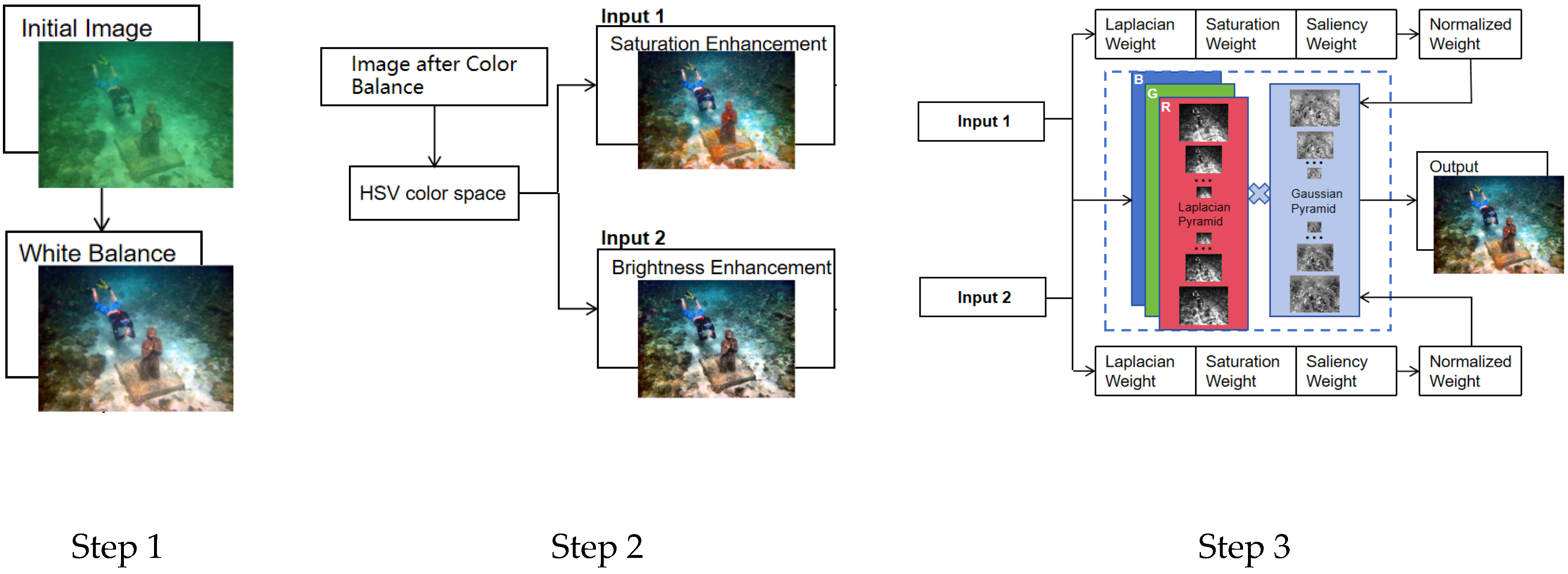

2. Materials and Methods

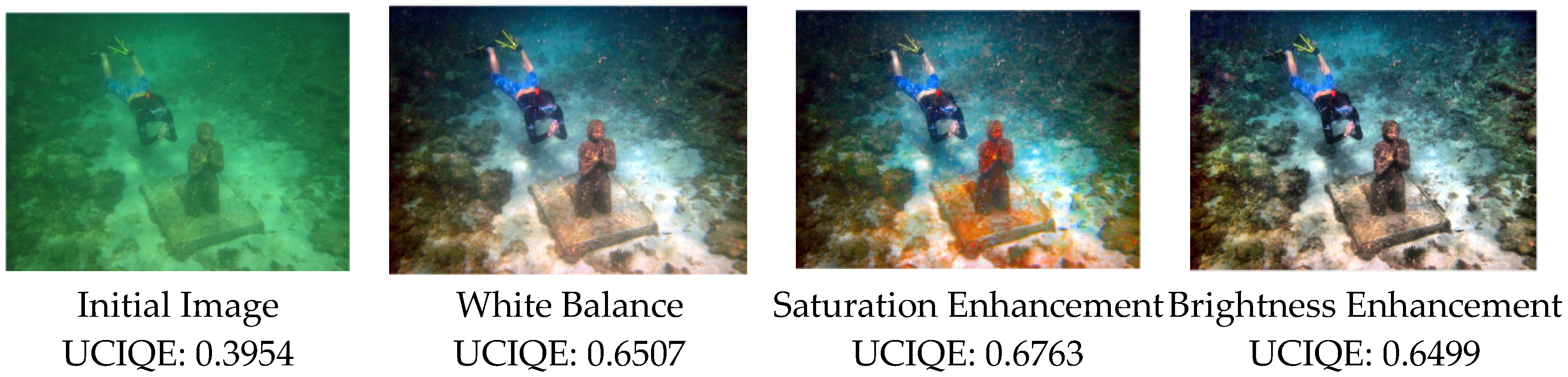

- Color balance using improved white balance

- Brightness and saturation enhancement in HSV color space

- Multi-scale fusion of enhanced images

2.1. Color Balance
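Many color-balance steps for underwater images build on the classical gray-world white balance, which is sketched below for reference. This is a minimal Python/NumPy sketch of that standard technique, not the improved white balance used in this work. The function name and the assumption that pixel values lie in [0, 1] are illustrative.

```python
import numpy as np


def gray_world_white_balance(img: np.ndarray) -> np.ndarray:
    """Classical gray-world white balance (not the improved variant).

    Assumes an H x W x 3 RGB image with values in [0, 1]. Each channel is
    scaled so that its mean equals the mean of the three channel means.
    """
    img = np.asarray(img, dtype=np.float64)
    channel_means = img.reshape(-1, 3).mean(axis=0)       # mean R, G, B
    gray_level = channel_means.mean()                      # common target level
    gains = gray_level / np.maximum(channel_means, 1e-6)   # per-channel gains
    return np.clip(img * gains, 0.0, 1.0)                  # keep values in range
```

Applied directly to underwater images, gray-world tends to over-amplify the weak and noisy red channel. This is why variants such as the red-channel compensation of Ancuti et al. adjust that channel before balancing.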

2.2. Saturation and Brightness Enhancement Based on HSV Color Space

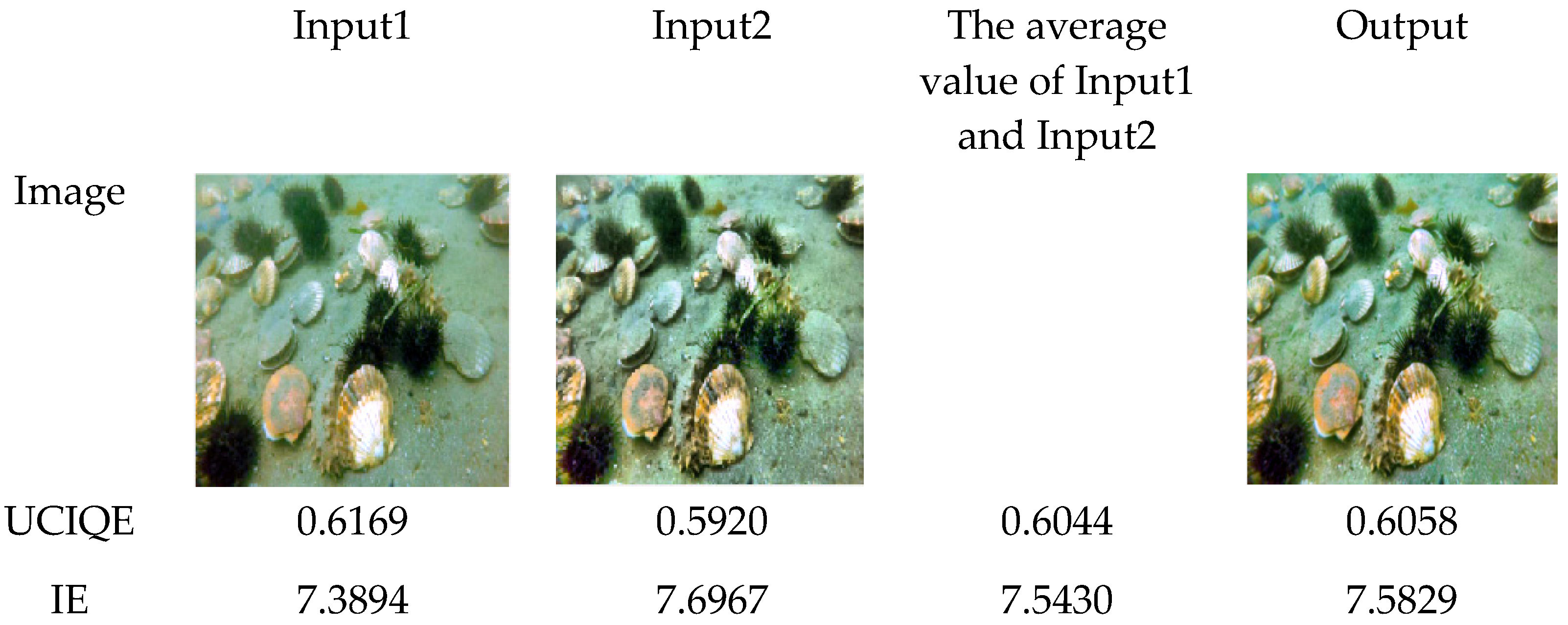
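Brightness and saturation enhancement in HSV space can be illustrated with histogram equalization of the S and V channels, leaving hue untouched. The sketch below is written under that assumption: fully equalizing both channels is a choice made for illustration, and the enhancement used in this work may differ. The helper names are hypothetical. The per-pixel conversion uses Python's standard `colorsys` module, which is simple but slow on large images.

```python
import colorsys

import numpy as np


def equalize_channel(ch: np.ndarray, levels: int = 256) -> np.ndarray:
    """Histogram-equalize a channel with values in [0, 1]; returns values in [0, 1]."""
    q = np.clip(np.round(ch * (levels - 1)), 0, levels - 1).astype(np.int64)
    hist = np.bincount(q.ravel(), minlength=levels)
    cdf = np.cumsum(hist).astype(np.float64)
    cdf_min = cdf[cdf > 0][0]                # count of the lowest occupied level
    denom = cdf[-1] - cdf_min
    if denom == 0:                            # constant channel: nothing to stretch
        return np.asarray(ch, dtype=np.float64).copy()
    lut = (cdf - cdf_min) / denom             # classic equalization mapping
    return lut[q]


def hsv_equalize(img: np.ndarray) -> np.ndarray:
    """Equalize saturation (S) and brightness (V) of an RGB image in [0, 1], keeping hue."""
    rgb = np.asarray(img, dtype=np.float64)
    to_hsv = np.vectorize(colorsys.rgb_to_hsv)   # per pixel, returns (h, s, v) arrays
    to_rgb = np.vectorize(colorsys.hsv_to_rgb)
    h, s, v = to_hsv(rgb[..., 0], rgb[..., 1], rgb[..., 2])
    r, g, b = to_rgb(h, equalize_channel(s), equalize_channel(v))
    return np.stack([r, g, b], axis=-1)
```

Keeping H fixed means the hue relationships established by the preceding color-balance step are not disturbed; only the spread of saturation and brightness values is stretched.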

2.3. Multi-Scale Fusion Technology

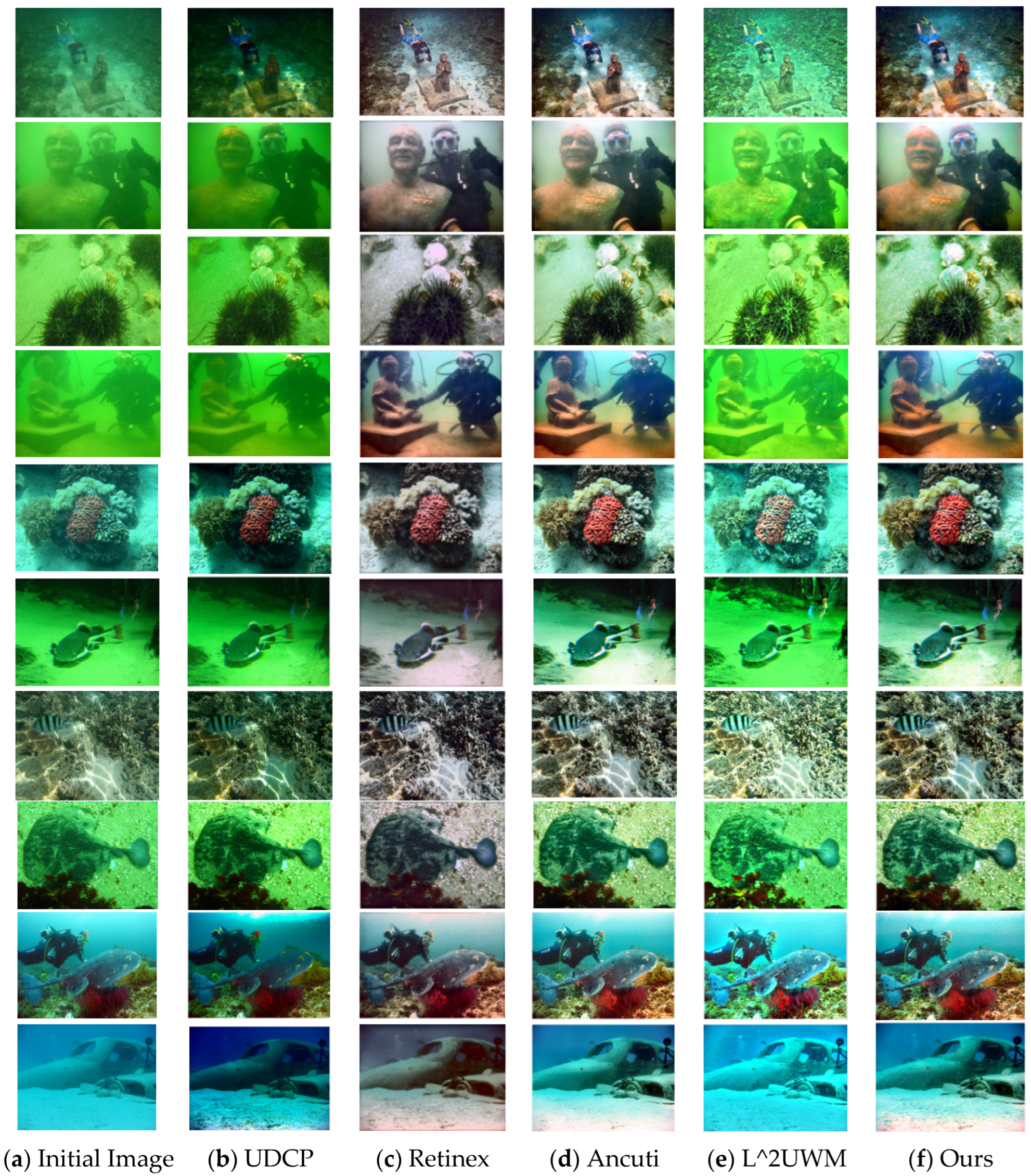
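Multi-scale fusion is commonly implemented as Laplacian-pyramid blending, as in the fusion framework of Ancuti et al. Each input image is decomposed into a Laplacian pyramid and its normalized weight map into a Gaussian pyramid. The weighted levels are then summed and the result is collapsed back into one image. The NumPy-only sketch below follows that scheme. The 5-tap binomial kernel, the nearest-neighbour upsampling and the hypothetical `simple_weight` map (local detail plus channel spread as a saturation proxy) are assumptions, not the weights used in this work.

```python
import numpy as np

# 5-tap binomial kernel (assumed); it sums to 1, so blurring preserves constants.
_KERNEL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0


def _blur(x):
    """Separable 5-tap blur over the two spatial axes with edge padding."""
    h, wd = x.shape[:2]
    p = np.pad(x, [(2, 2), (2, 2)] + [(0, 0)] * (x.ndim - 2), mode="edge")
    rows = sum(_KERNEL[k] * p[k:k + h] for k in range(5))          # vertical pass
    return sum(_KERNEL[k] * rows[:, k:k + wd] for k in range(5))   # horizontal pass


def _down(x):
    return _blur(x)[::2, ::2]


def _up(x, shape):
    """Nearest-neighbour upsampling to shape[:2], followed by a blur."""
    up = np.repeat(np.repeat(x, 2, axis=0), 2, axis=1)[: shape[0], : shape[1]]
    return _blur(up)


def gaussian_pyramid(x, levels):
    pyr = [x]
    for _ in range(levels - 1):
        pyr.append(_down(pyr[-1]))
    return pyr


def laplacian_pyramid(x, levels):
    g = gaussian_pyramid(x, levels)
    return [g[i] - _up(g[i + 1], g[i].shape) for i in range(levels - 1)] + [g[-1]]


def collapse(pyr):
    """Invert laplacian_pyramid: upsample from the coarsest level and add details."""
    out = pyr[-1]
    for lap in reversed(pyr[:-1]):
        out = lap + _up(out, lap.shape)
    return out


def simple_weight(img):
    """Hypothetical weight map: local detail plus channel spread (saturation proxy)."""
    lum = img.mean(axis=2)
    return np.abs(lum - _blur(lum)) + img.std(axis=2)


def multiscale_fusion(images, weights, levels=4):
    """Fuse H x W x 3 images in [0, 1] using H x W non-negative weight maps."""
    w = np.stack(weights).astype(np.float64) + 1e-12
    w /= w.sum(axis=0)                        # weights sum to 1 at every pixel
    fused = None
    for img, wk in zip(images, w):
        lap = laplacian_pyramid(np.asarray(img, dtype=np.float64), levels)
        gw = gaussian_pyramid(wk, levels)
        terms = [lv * g[..., None] for lv, g in zip(lap, gw)]
        fused = terms if fused is None else [f + t for f, t in zip(fused, terms)]
    return np.clip(collapse(fused), 0.0, 1.0)
```

Because the normalized weights sum to one at every pyramid level, fusing an image with itself returns it unchanged. This is a useful sanity check. In the pipeline described above, the inputs would be the enhanced versions of the same underwater image.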

3. Results

3.1. Objective Evaluation Without Reference

- Underwater Color Image Quality Evaluation (UCIQE): UCIQE is a no-reference image quality metric designed specifically for underwater images, proposed by Yang and Sowmya. Unlike full-reference metrics, it does not require a reference image for comparison. It assesses underwater image quality in terms of chroma, contrast, and saturation. The metric is a linear combination of three independent components, computed in the CIELab color space: the standard deviation of chroma, the contrast of luminance, and the average of saturation. The index was initially developed for images from underwater pipeline inspections, and the weights of the three components were obtained by fitting. UCIQE has been widely adopted because it reflects the color casts, blur, and low contrast commonly encountered in underwater imaging.

- Underwater Image Quality Measure (UIQM): UIQM is another no-reference quality metric for underwater images, proposed by Panetta et al. and inspired by the human visual system. It evaluates image quality along three aspects: colorfulness, sharpness, and contrast. Specifically, UIQM comprises a colorfulness measure (UICM) that captures how far the image colors depart from the bluish tint typical of underwater images; a sharpness measure (UISM) that quantifies the amount of discernible edge detail; and a contrast measure (UIConM) that evaluates visibility. The three components are combined linearly into a single score.

- Image Entropy (IE): Image entropy is a statistical measure of the information content of an image, computed from the distribution of its gray levels. In underwater imagery, a high entropy value typically indicates rich detail and texture, suggesting that the image carries a substantial amount of information. Conversely, a low entropy value usually signifies a smooth image lacking detail, which may result from blur, low contrast, or color degradation. Although IE is a general-purpose metric not designed specifically for underwater images, it indicates how much detail is preserved during enhancement.
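The UCIQE metric described above can be sketched in Python with NumPy. The weights 0.4680, 0.2745 and 0.2576 are the coefficients commonly quoted from Yang and Sowmya. Several other choices are assumptions:

- the sRGB-to-CIELab conversion (D65 white);
- luminance contrast taken as the 99th minus the 1st percentile of lightness;
- saturation taken as chroma divided by lightness;
- division of L*, a* and b* by 100.

Public implementations differ in exactly these choices, so absolute scores from this sketch need not match published values.

```python
import numpy as np

# sRGB (D65) -> XYZ matrix and D65 reference white.
_RGB2XYZ = np.array([[0.4124564, 0.3575761, 0.1804375],
                     [0.2126729, 0.7151522, 0.0721750],
                     [0.0193339, 0.1191920, 0.9503041]])
_WHITE_D65 = np.array([0.95047, 1.0, 1.08883])


def srgb_to_lab(img):
    """Convert an H x W x 3 sRGB image in [0, 1] to CIELab (L in [0, 100])."""
    c = np.asarray(img, dtype=np.float64)
    lin = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)
    t = (lin @ _RGB2XYZ.T) / _WHITE_D65          # XYZ relative to the white point
    d = 6.0 / 29.0
    f = np.where(t > d ** 3, np.cbrt(t), t / (3.0 * d * d) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return L, a, b


def uciqe(img, coeffs=(0.4680, 0.2745, 0.2576)):
    """UCIQE sketch: weighted sum of chroma std, luminance contrast, mean saturation."""
    L, a, b = srgb_to_lab(img)
    L, a, b = L / 100.0, a / 100.0, b / 100.0     # assumed normalization
    chroma = np.hypot(a, b)
    sigma_c = chroma.std()                                    # std of chroma
    con_l = np.percentile(L, 99) - np.percentile(L, 1)        # 99th minus 1st percentile
    sat = np.divide(chroma, L, out=np.zeros_like(chroma), where=L > 0)
    mu_s = sat.mean()                                         # mean saturation
    return coeffs[0] * sigma_c + coeffs[1] * con_l + coeffs[2] * mu_s
```

A uniform gray image scores close to zero, because all three components vanish. Defining saturation as chroma over lightness makes very dark pixels weigh heavily, which is one reason implementations disagree.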
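UIQM is harder to reproduce compactly, because its sharpness (UISM) and contrast (UIConM) terms rely on block-wise EME and logAMEE measures. The sketch below therefore implements only two parts. The first is the colorfulness term UICM, which uses alpha-trimmed statistics of the RG and YB opponent channels, trimming 10% at each end. The second is the final linear combination, with UISM and UIConM passed in as precomputed numbers. The coefficients (−0.0268 and 0.1586 inside UICM; 0.0282, 0.2953 and 3.5753 in UIQM) are those commonly quoted from Panetta et al. Treat them, and the 0-255 input scale, as assumptions to check against the original paper.

```python
import numpy as np


def _trimmed_mean(x, alpha_l=0.1, alpha_r=0.1):
    """Alpha-trimmed mean: drop the lowest alpha_l and highest alpha_r fractions."""
    x = np.sort(np.ravel(x))
    lo = int(np.ceil(alpha_l * x.size))
    hi = x.size - int(np.floor(alpha_r * x.size))
    return x[lo:hi].mean() if hi > lo else x.mean()   # fall back for tiny inputs


def uicm(img):
    """Colorfulness term of UIQM for an H x W x 3 RGB image on a 0-255 scale."""
    rgb = np.asarray(img, dtype=np.float64)
    rg = rgb[..., 0] - rgb[..., 1]                        # red-green opponent channel
    yb = (rgb[..., 0] + rgb[..., 1]) / 2.0 - rgb[..., 2]  # yellow-blue opponent channel
    mu_rg, mu_yb = _trimmed_mean(rg), _trimmed_mean(yb)
    var_rg = np.mean((rg - mu_rg) ** 2)                   # spread about the trimmed mean
    var_yb = np.mean((yb - mu_yb) ** 2)
    return -0.0268 * np.hypot(mu_rg, mu_yb) + 0.1586 * np.sqrt(var_rg + var_yb)


def uiqm(uicm_val, uism_val, uiconm_val, coeffs=(0.0282, 0.2953, 3.5753)):
    """Linear combination of the three UIQM components (UISM, UIConM precomputed)."""
    return coeffs[0] * uicm_val + coeffs[1] * uism_val + coeffs[2] * uiconm_val
```

The negative weight on the mean opponent-channel offset penalizes a uniform color cast, while the positive weight on their spread rewards varied colors. A strongly tinted image can therefore receive a negative UICM.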
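Image entropy is usually taken as the Shannon entropy of the gray-level histogram, H = −Σ p_i·log2(p_i), where p_i is the fraction of pixels at gray level i. For an 8-bit image, H ranges from 0 bits (a constant image) to 8 bits (all 256 levels equally frequent). A minimal NumPy sketch follows. Computing IE on a BT.601 luma image is an assumption, since the grayscale conversion behind the reported values is not stated.

```python
import numpy as np


def image_entropy(gray):
    """Shannon entropy in bits of an image with integer gray levels 0-255."""
    g = np.asarray(gray, dtype=np.uint8)        # assumes values already in 0..255
    hist = np.bincount(g.ravel(), minlength=256)
    p = hist[hist > 0] / g.size                  # probabilities of occupied levels
    return float(-(p * np.log2(p)).sum())


def rgb_to_gray_u8(img):
    """BT.601 luma of an H x W x 3 RGB image on a 0-255 scale (assumed conversion)."""
    y = np.asarray(img, dtype=np.float64) @ np.array([0.299, 0.587, 0.114])
    return np.clip(np.round(y), 0, 255).astype(np.uint8)
```

Only occupied histogram bins enter the sum, which avoids evaluating log2(0).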

3.2. SIFT

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Li, C.Y.; Guo, J.C.; Cong, R.M.; Pang, Y.W.; Wang, B. Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 2016, 25, 5664–5677.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 1397–1409.

- Drews, P.; Nascimento, E.; Moraes, F.; Botelho, S.; Campos, M. Transmission estimation in underwater single images. In Proceedings of the 2013 IEEE International Conference on Computer Vision Workshops, Sydney, NSW, Australia, 2–8 December 2013.

- Iqbal, K.; Odetayo, M.; James, A.; Salam, R.A.; Talib, A.Z.H. Enhancing the low quality images using unsupervised colour correction method. In Proceedings of the 2010 IEEE International Conference on Systems, Man and Cybernetics, Istanbul, Turkey, 10–13 October 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 1703–1709.

- Zhang, S.; Wang, T.; Dong, J.; Yu, H. Underwater image enhancement via extended multi-scale Retinex. Neurocomputing 2017, 245, 1–9.

- Garg, D.; Garg, N.K.; Kumar, M. Underwater image enhancement using blending of CLAHE and percentile methodologies. Multimed. Tools Appl. 2018, 77, 26545–26561.

- Ma, X.; Chen, Z.; Feng, Z. Underwater image restoration through a combination of improved dark channel prior and gray world algorithms. J. Electron. Imaging 2019, 28, 053033.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

- Wang, Y.; Zhang, J.; Cao, Y.; Wang, Z. A deep CNN method for underwater image enhancement. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1382–1386.

- Ancuti, C.; Ancuti, C.O.; Haber, T.; Bekaert, P. Enhancing underwater images and videos by fusion. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 81–88.

- Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Bekaert, P. Color balance and fusion for underwater image enhancement. IEEE Trans. Image Process. 2017, 27, 379–393.

- Gao, F.; Wang, K.; Yang, Z.; Wang, Y.; Zhang, Q. Underwater image enhancement based on local contrast correction and multi-scale fusion. J. Mar. Sci. Eng. 2021, 9, 225.

- Wu, J.; Liu, X.; Lu, Q.; Lin, Z.; Qin, N.; Shi, Q. FW-GAN: Underwater image enhancement using generative adversarial network with multi-scale fusion. Signal Process. Image Commun. 2022, 109, 116855.

- Liao, K.; Peng, X. Underwater image enhancement using multi-task fusion. PLoS ONE 2024, 19, e0299110.

- Miao, M.; Wang, S. PA-ColorNet: Progressive attention network based on RGB and HSV color spaces to improve the visual quality of underwater images. Signal Image Video Process. 2023, 17, 3405–3413.

- Chen, Q.; Zhang, Z.; Li, G. Underwater image enhancement based on color balance and multi-scale fusion. IEEE Photonics J. 2022, 14, 3963010.

- Yang, M.; Sowmya, A. An underwater color image quality evaluation metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2015, 41, 541–551.

- Marques, T.P.; Albu, A.B. L2uwe: A framework for the efficient enhancement of low-light underwater images using local contrast and multi-scale fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 538–539.

- Ke, Y.; Sukthankar, R. PCA-SIFT: A more distinctive representation for local image descriptors. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2004. CVPR 2004, Washington, DC, USA, 27 June–2 July 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 2, p. II.

| Image | Initial Image | UDCP | Retinex | Ancuti | L^2UWM | Ours |
|---|---|---|---|---|---|---|
| Image1 | 0.4355 | 0.2589 | 0.5730 | 0.6421 | 0.2589 | 0.6670 |
| Image2 | 0.4364 | 0.2589 | 0.5723 | 0.6278 | 0.2593 | 0.6499 |
| Image3 | 0.5240 | 0.2587 | 0.5395 | 0.5720 | 0.2591 | 0.5913 |
| Image4 | 0.3954 | 0.2596 | 0.6431 | 0.6842 | 0.5328 | 0.6941 |
| Image5 | 0.5133 | 0.2587 | 0.5871 | 0.6366 | 0.2587 | 0.6551 |
| Image6 | 0.5667 | 0.2587 | 0.5612 | 0.5949 | 0.2590 | 0.6071 |
| Image7 | 0.5403 | 0.2587 | 0.5624 | 0.5950 | 0.2587 | 0.6247 |
| Image8 | 0.5679 | 0.2587 | 0.5825 | 0.6224 | 0.2598 | 0.6370 |
| Image9 | 0.6482 | 0.2603 | 0.6337 | 0.6738 | 0.2595 | 0.6808 |
| Image10 | 0.4420 | 0.2587 | 0.5878 | 0.6237 | 0.2587 | 0.6298 |
| Avg | 0.50697 | 0.25899 | 0.58426 | 0.62725 | 0.28645 | 0.64368 |

| Image | Initial Image | UDCP | Retinex | Ancuti | L^2UWM | Ours |
|---|---|---|---|---|---|---|
| Image1 | 1.5692 | 0.6144 | 4.6181 | 4.3992 | 3.3578 | 4.4556 |
| Image2 | 0.8171 | 0.9112 | 4.3584 | 4.1792 | 1.1468 | 4.1768 |
| Image3 | 2.4891 | 1.5223 | 5.1402 | 3.7155 | 3.9046 | 3.5338 |
| Image4 | 0.0086 | 0.5537 | 4.5593 | 4.2175 | 0.6246 | 4.2209 |
| Image5 | 2.7350 | 2.9442 | 5.3241 | 4.5343 | 4.3154 | 4.0058 |
| Image6 | 1.6510 | 1.4530 | 4.7705 | 3.3892 | 1.7610 | 2.9623 |
| Image7 | 4.1433 | −0.00016569 | 4.8622 | 4.6475 | 0.0282 | 4.3389 |
| Image8 | 2.7437 | 3.2210 | 5.5676 | 3.8919 | 5.5341 | 3.6389 |
| Image9 | 2.7501 | 2.8431 | 4.7506 | 3.6544 | 11.2107 | 3.5938 |
| Image10 | −0.6786 | 1.0072 | 3.8712 | 1.8699 | 0.5997 | 1.5851 |
| Avg | 1.82285 | 1.50699 | 4.78222 | 3.84986 | 3.24829 | 3.65119 |

| Image | Initial Image | UDCP | Retinex | Ancuti | L^2UWM | Ours |
|---|---|---|---|---|---|---|
| Image1 | 6.7556 | 0.4050 | 7.6409 | 7.7450 | 0.7084 | 7.7318 |
| Image2 | 7.0016 | 0.9997 | 7.6775 | 7.8622 | 0.3147 | 7.8698 |
| Image3 | 7.2909 | 0.9197 | 7.6827 | 7.7164 | 0.7028 | 7.8050 |
| Image4 | 6.3964 | 0.7417 | 7.6231 | 7.6266 | 0.0134 | 7.6443 |
| Image5 | 7.3428 | 0.8465 | 7.6257 | 7.8335 | 0.9118 | 7.8600 |
| Image6 | 7.3689 | 0.9980 | 7.5956 | 7.8161 | 0.8324 | 7.9088 |
| Image7 | 7.4718 | 0.6450 | 7.6876 | 7.8332 | 0.9098 | 7.8382 |
| Image8 | 7.6236 | 0.9818 | 7.6648 | 7.8708 | 0.8515 | 7.9285 |
| Image9 | 7.6105 | 0.7244 | 7.6817 | 7.7538 | 0.8963 | 7.7984 |
| Image10 | 6.5511 | 0.9238 | 7.6498 | 7.6057 | 0.4505 | 7.6562 |
| Avg | 7.14132 | 0.81856 | 7.65294 | 7.76633 | 0.65916 | 7.80410 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Su, H.; Zhang, T.; Tian, H.; Fan, B. Multi-Scale Fusion Underwater Image Enhancement Based on HSV Color Space Equalization. Sensors 2025, 25, 2850. https://doi.org/10.3390/s25092850


Chicago/Turabian Style: Zhang, Jialiang, Haibing Su, Tao Zhang, Hu Tian, and Bin Fan. 2025. "Multi-Scale Fusion Underwater Image Enhancement Based on HSV Color Space Equalization" Sensors 25, no. 9: 2850. https://doi.org/10.3390/s25092850
