Abstract

Circle detection remains a critical yet difficult task in computer vision, particularly under complex imaging conditions, where existing methods struggle with parameter configuration and noise resilience. This paper presents a novel circle detection algorithm built on two perceptually grounded parameters: the perceptual length difference resolution λ, derived from human cognitive models, and the minimum distinguishable distance threshold K, determined through empirical observation. The algorithm combines a local stochastic sampling strategy with a bottom-up circular search mechanism, and all of its critical parameters are derived adaptively from λ and K, eliminating repeated hyperparameter searches. Experiments show that our method achieves an Fscore of 85.5% on a public circle detection dataset, surpassing state-of-the-art approaches by approximately 7.3%. Notably, the framework retains an Fscore of 85% under heavy Gaussian noise (σ = 50%), outperforming the comparative methods. The adaptive parameterization strategy offers insights for developing vision systems that combine computational efficiency with the robustness of human perception.

1. Introduction

In computer vision, circle detection algorithms play an important role in diverse areas such as traffic and road safety [1], industrial automation [2,3], medical image processing [4], and scientific research [5]. Accurate detection and precise measurement of circles are essential for numerous vision-based automated tasks.

Many circle detection algorithms use the Canny edge detector or its adaptive variants to extract image boundaries [6,7]. The Canny detector depends on carefully selected hyperparameters, namely the standard deviation σ of the Gaussian filter and the high and low thresholds, and these parameters often have to be tuned for each task to achieve optimal performance. Guo et al. introduced a bidirectionally connected Canny algorithm to refine edge detection [8], but it does not address the limitations imposed by hyperparameter selection. Yang et al. used Otsu's method to compute the Canny thresholds adaptively [9], but this approach is insensitive to subtle intensity variations and performs poorly on complex images. Meng et al. used a locally adaptive Canny algorithm for edge detection [10]; however, it can produce discontinuous edges, potentially compromising detection accuracy. Another frequently used edge detector is the Edge Drawing Parameter-Free algorithm (EDPF) [11,12,13,14]. EDPF filters the image with a 5 × 5 Gaussian kernel, detects potential edge points based on gradient magnitude and direction, constructs an initial edge map, and finally eliminates spurious edges using the Helmholtz principle [15]. EDPF is more robust to noise than Canny and operates reliably without hyperparameter tuning. This study therefore uses EDPF as the preliminary edge extractor.

Although the circle Hough transform (CHT) is widely used for circle detection [16,17,18], it is computationally expensive and memory-intensive. Furthermore, its accuracy is highly sensitive to numerous hyperparameters, including the accumulator threshold, radius range, accumulator resolution, and voting scheme, all of which directly affect result quality [19,20]. Memiş et al. combined the CHT with the integro-differential operator to improve circle matching accuracy [21], but their approach did not fundamentally address the high computational cost and hyperparameter dependence inherent in the CHT. Kim et al. proposed a two-stage Hough transform that first uses a 2D accumulator matrix to locate circle centers and then a 1D histogram to determine the radius [22]. This method reduces computation time and memory consumption, but the challenge of hyperparameter selection remains.

Random sample consensus (RANSAC)-based algorithms [23,24,25,26] are efficient on conventional images, but their performance degrades in the presence of noise or in complex scenes. Moreover, several key hyperparameters of these algorithms, including the number of sampled points, the accumulation threshold, the required fitting precision, and the iteration count, strongly influence the final output [27].

Many algorithms have been investigated to improve circle detection accuracy. Jiang et al. [28] implemented a refined RANSAC approach that increases the sampling density within a restricted region to obtain more precise circle parameter estimates. Zhao et al. [14] proposed a method that optimizes candidate circle parameters by verifying inscribed triangles, aiming to connect as many circular arc segments as possible into a whole circle. Other researchers have investigated diverse optimization algorithms to further improve the accuracy of circle parameter estimation [29,30,31]. However, these algorithms often introduce additional hyperparameters, compounding the difficulty of hyperparameter optimization. Moreover, they assemble circular arcs with a greedy strategy, which shrinks the vast search space but makes them prone to converging to local optima.

Researchers have also applied deep learning to circle detection. Mekhalfi et al. compared three deep learning models (YOLOv5, Transformer, and EfficientDet) for detecting circular agricultural patterns in desert regions [32], constructing two datasets and evaluating the models on each. Yue et al. employed an enhanced YOLO algorithm for dish detection [33], which included circular object recognition. Gai et al. proposed a cherry detection algorithm based on the YOLO framework [34], replacing traditional anchor boxes with circular bounding boxes that better match the fruit's morphology, thereby improving detection accuracy. Similarly, Liu et al. introduced the YOLO-Tomato model [35], whose circular bounding boxes (C-Bboxes) conform better to tomato shapes, improving both detection precision and the accuracy of IoU computation in non-maximum suppression. These approaches mainly use deep localization models to identify circular objects in images and have yielded promising results. However, they are tailored to circular objects in particular contexts rather than serving as general-purpose circle detectors. Moreover, they output bounding boxes rather than precise circle parameters. In scenarios that demand accurate geometric measurements, traditional circle detection algorithms therefore remain indispensable for determining the parameters of the circles within these bounding regions.

This study uses the EDPF algorithm for preliminary edge detection. A novel patch-based random sample consensus algorithm (pRC) then detects line segments and circular arcs in the image, and a hierarchical search algorithm (HS) combines these geometric primitives into candidate circles. Based on these candidates, a circle parameter synthesis algorithm (CPS) searches, aggregates, and refines the circle parameters. Finally, the arc length ratio is calculated to filter out spurious detections while preserving true circle parameters.

The pRC algorithm partitions the entire image into patches and applies RANSAC within each patch to extract primitive geometric structures. This divide-and-conquer approach addresses the hyperparameter selection difficulties commonly encountered with traditional RANSAC. The HS algorithm uses a bottom-up search strategy to iteratively merge circular arcs, converging to the globally optimal combination. The CPS searches for arc segments with similar geometric parameters and aggregates them in a bottom-up hierarchical manner to identify the optimal circle parameters. Starting from edge points, these three algorithms iteratively aggregate candidate circle parameters to produce reliable circle estimates. The search strategy incorporated at each step yields a globally optimal solution for that step. In contrast to algorithms that rely on a greedy strategy, which often leads to local optima, our method is more robust against noise.

2. Methodology

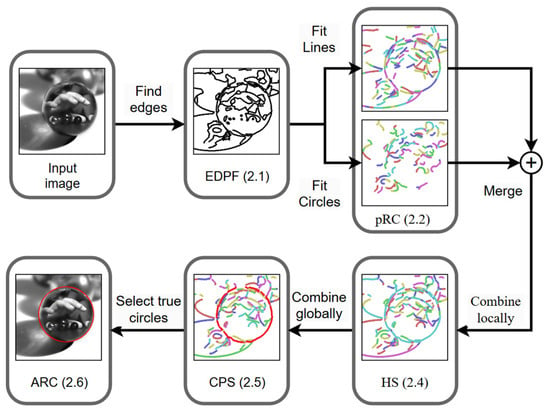

The flowchart of the algorithm is shown in Figure 1. For an input image, EDPF first extracts the edge points. The pRC algorithm then extracts line segments and circular arcs as preliminary structural elements, and the HS algorithm integrates adjacent structural elements into candidate circles. Next, the CPS globally combines and optimizes the circle parameters. Finally, the true circles are selected using the arc length ratio criteria (ARC).

Figure 1.

The flowchart of the algorithm; distinct colors denote different segments.

2.1. Edge Point Detection

This work uses the EDPF algorithm for edge point detection, followed by a thinning algorithm [36] that ensures the detected edges have a consistent width of one pixel. EDPF filters the image with a 5 × 5 Gaussian kernel, identifies pixels with significant gradient magnitudes and directions as potential edge points, connects them to form an initial edge map, and finally removes spurious edges using the Helmholtz principle [15]. Compared with the Canny algorithm, EDPF is more robust to noise, delivers connected edges rather than discrete points, and, as its name suggests, performs reliably without hyperparameter tuning.

2.2. The pRC Algorithm

The pRC is a modified RANSAC approach for uncovering preliminary structures within images. The image is partitioned into patches (see Section 2.2.1), an optimized RANSAC is applied within each patch to detect structural elements (see Section 2.2.2), and non-maximum suppression then eliminates redundant detections (see Section 2.2.3). Because circular arcs with large radii may approximate straight line segments within a local patch, both line segments and arcs must be detected. This patch-based strategy enables parallel processing, which increases computational speed, and facilitates adaptive parameter estimation, which improves versatility across diverse images.

2.2.1. Edge Points Partitioning

We divide the input image into square patches of 32 × 32 pixels with a stride of 16 pixels and extract the edge points within each patch. The patch size and stride remain fixed regardless of image dimensions, and patches along the image border that lack sufficient pixels are discarded. This configuration gives a 50% overlap between adjacent patches, which guarantees that segments crossing patch boundaries are also detected. The derivation of the patch size and stride is detailed in Appendix A.
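The partitioning step can be sketched in Python as follows. This is a minimal illustration, not the authors' Rust implementation: it assumes edge points are integer (x, y) pixel coordinates, uses the 32-pixel patch and 16-pixel stride from Table A1, and keeps only patches that fit entirely inside the image. The function names are hypothetical.

```python
from collections import defaultdict

PATCH = 32   # patch side length in pixels (Table A1)
STRIDE = 16  # sliding stride in pixels (Table A1); PATCH / STRIDE = 2 gives 50% overlap


def patch_origins(width, height, patch=PATCH, stride=STRIDE):
    """Top-left corners of all complete patches; partial border patches are dropped."""
    xs = range(0, width - patch + 1, stride)
    ys = range(0, height - patch + 1, stride)
    return [(x, y) for y in ys for x in xs]


def partition_edge_points(points, width, height, patch=PATCH, stride=STRIDE):
    """Map each patch origin to the edge points (x, y) it contains.

    Hypothetical helper: a point near a patch boundary belongs to several
    overlapping patches. Brute force over patches for clarity; empty patches
    are omitted from the result.
    """
    groups = defaultdict(list)
    for x0, y0 in patch_origins(width, height, patch, stride):
        for x, y in points:
            if x0 <= x < x0 + patch and y0 <= y < y0 + patch:
                groups[(x0, y0)].append((x, y))
    return dict(groups)
```

Because of the 50% overlap, a point in the interior of the image is assigned to up to four patches, which is what allows a segment cut by one patch boundary to appear whole in a neighbouring patch.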

2.2.2. RANSAC on Patches

RANSAC is then applied to each patch to identify line segments and circular arcs, with its critical parameter, the iteration count N, calculated adaptively. Let V denote the set of all edge points in the patch; the other two quantities involved are the desired probability of detecting a geometric structure and the minimum number of points required to form a structure (the minimum segment length; see Appendix A). The problem can then be posed probabilistically: given the edge point set V, how many repetitions N are required to detect, with the desired probability, any structure whose length is no less than the minimum segment length? N is calculated using Equations (1) and (2).

where |·| denotes the number of elements in a set, and t is the number of support points sampled to fit a structural entity: t = 2 for a line segment and t = 3 for a circle. ⌈·⌉ denotes the ceiling function, which rounds a number up to the nearest integer. The minimum segment length is derived in Appendix A.
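Equations (1) and (2) are not reproduced in this text. Assuming they follow the standard RANSAC bound, in which the inlier ratio w of the smallest admissible structure is its minimum length divided by |V|, the iteration count can be sketched as below. The function name and the default detection probability p = 0.99 are illustrative assumptions, not values from the paper.

```python
import math


def ransac_iterations(num_points, min_len, t, p=0.99):
    """Samplings needed so that, with probability p, at least one t-point sample
    is drawn entirely from a structure of min_len points among num_points.

    Assumed form of Eqs. (1)-(2), the standard RANSAC bound:
        N = ceil(log(1 - p) / log(1 - w**t)),  w = min_len / num_points.
    t = 2 for a line segment, t = 3 for a circular arc.
    """
    if num_points < t:
        return 0  # not even one hypothesis can be sampled
    w = min_len / num_points
    if w >= 1.0:
        return 1  # every sample already comes from the structure
    return math.ceil(math.log(1.0 - p) / math.log(1.0 - w ** t))


print(ransac_iterations(64, 16, t=2), ransac_iterations(64, 16, t=3))
```

With 64 edge points in a patch and a 16-point minimum length, a circle (t = 3) needs roughly four times as many samplings as a line (t = 2); computing N per patch keeps this count small where a patch contains few edge points.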

To maximize structure detection, we modified RANSAC's random sampling process into an iterative extraction strategy. In each round, the algorithm performs N random samplings and parameter estimations. At each sampling, t support points are randomly selected from V, and the structural parameters are estimated by least squares. The algorithm then identifies the subset of points in V that satisfy the distance constraint with respect to the estimated parameters, that is, the points lying on the estimated line or arc. When the number of such points exceeds a predefined threshold, the current parameters and their point set are recorded. After N samplings, the algorithm outputs the best of the recorded parameters and removes the corresponding points from V. This process repeats until no qualifying point set can be found or the number of points remaining in V falls below the threshold (see Algorithm 1).

| Algorithm 1: The pRC algorithm | |

| Input: V, all edge points in the patch | |

| 1 | Calculate the loop count N using (1–2) |

| 2 | |

| 3 | |

| 4 | //for line segments, t = 2; for circular arcs, t = 3 |

| 5 | , THEN CONTINUE |

| 6 | |

| 7 | |

| 8 | |

| 9 | |

| 10 | THEN GOTO line 15 |

| 11 | |

| 12 | |

| 13 | |

| 14 | THEN GOTO line 1 |

| 15 | |

A point is considered to lie on the structure if its distance to the line or curve is less than one pixel; therefore, the distance threshold in line 7 is set to 1 pixel. The empirical hyperparameter K is set to 3 pixels, the minimal perceptible distance (see Appendix A).
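The iterative extraction loop of Section 2.2.2 can be sketched for the line-segment case (t = 2) as below. This is a hypothetical simplification, not the authors' code: two sampled points define the line exactly, points within the 1-pixel tolerance count as support, `min_support` stands in for the predefined acceptance threshold, and the best hypothesis of each round is the one with the most support. Circular arcs would follow the same loop with three-point samples and a circle fit.

```python
import random


def fit_line(p, q):
    """Line through two points as (a, b, c) with a*x + b*y + c = 0 and a^2 + b^2 = 1."""
    (x1, y1), (x2, y2) = p, q
    a, b = y2 - y1, x1 - x2
    norm = (a * a + b * b) ** 0.5
    if norm == 0:
        return None  # coincident points define no line
    a, b = a / norm, b / norm
    return a, b, -(a * x1 + b * y1)


def extract_lines(points, n_iter, min_support, tol=1.0, seed=0):
    """Hypothetical sketch of iterative RANSAC extraction inside one patch
    (line segments only, t = 2).

    Each round draws n_iter two-point samples from the remaining points, keeps
    the line with the largest support (points closer than tol), records it if
    the support reaches min_support (assumed >= 2), and removes its points.
    """
    rng = random.Random(seed)
    remaining = list(points)
    found = []
    while len(remaining) >= min_support:
        best = []
        for _ in range(n_iter):
            line = fit_line(*rng.sample(remaining, 2))
            if line is None:
                continue
            a, b, c = line
            support = [q for q in remaining if abs(a * q[0] + b * q[1] + c) < tol]
            if len(support) >= min_support and len(support) > len(best):
                best = support
        if not best:
            break  # no qualifying point set: stop extracting
        found.append(best)
        taken = set(best)
        remaining = [q for q in remaining if q not in taken]
    return found
```

Removing each accepted structure's points before the next round means a long structure cannot be re-detected, while shorter structures that were outvoted in earlier rounds get their turn.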

2.2.3. Non-Maximum Suppression (NMS)

Because each patch overlaps its neighbors, the same structural element may be detected more than once. A non-maximum suppression algorithm is therefore used to eliminate redundant line segment and circular arc detections. Starting from the initial set of all segments, the algorithm iteratively moves the longest remaining segment into the result set and removes every structure whose overlap with it exceeds K. This process repeats until all segments have been processed, as formalized in Equations (3)–(5):

where each segment is represented by the set of points lying on it, set difference is used to remove processed segments from the initial set, and |·| denotes the cardinality of a set.
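A greedy suppression of the kind described above can be sketched as follows, assuming that the overlap between two segments is measured as the number of edge points they share and that K = 3 is the overlap tolerance. The function name is hypothetical.

```python
def segment_nms(segments, k=3):
    """Greedy NMS over segments given as collections of (x, y) points.

    Assumption: overlap = number of shared edge points. The longest remaining
    segment is kept, every other segment sharing more than k points with it is
    discarded, and the process repeats until nothing remains.
    """
    pending = sorted((frozenset(s) for s in segments), key=len, reverse=True)
    kept = []
    while pending:
        best = pending.pop(0)
        kept.append(best)
        pending = [s for s in pending if len(s & best) <= k]
    return kept
```

Sorting once by length is enough because removing segments never changes the length of the ones that remain.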

2.3. Distance Metric

We use a distance metric to evaluate the error of the circle parameters fitted by least squares. Given a segment, its distance to a circle (characterized by its center coordinates and radius) is defined as the maximum distance from any point of the segment to the circle, as shown in Equation (6).

If this distance is less than a distance limit, the segment is considered to meet the distance criterion; the limit is calculated using Equation (7):

where λ = 0.05 is a further hyperparameter representing the minimum length difference discrimination ratio between line segments (see Appendix A).
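The least-squares fit and the distance of Equation (6) can be sketched as below. The fit shown is the algebraic (Kåsa) least-squares circle fit, chosen here as one common least-squares formulation; the paper does not state which variant it uses. Because Equation (7) is not reproduced in this text, the distance limit is passed in as a plain parameter. All names are hypothetical.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_circle(points):
    """Algebraic (Kasa) least-squares circle fit; returns (a, b, r) or None.

    Kasa fit chosen for illustration; the paper's least-squares variant is not
    stated. Minimises sum (x^2 + y^2 + D*x + E*y + F)^2, then a = -D/2,
    b = -E/2, r = sqrt(a^2 + b^2 - F).
    """
    if len(points) < 3:
        return None
    sxx = sxy = syy = sx = sy = sz = sxz = syz = 0.0
    for x, y in points:
        z = x * x + y * y
        sxx += x * x
        sxy += x * y
        syy += y * y
        sx += x
        sy += y
        sz += z
        sxz += x * z
        syz += y * z
    m = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(points))]]
    rhs = [-sxz, -syz, -sz]
    det = _det3(m)
    if abs(det) < 1e-9:
        return None  # (near-)collinear points: no unique circle
    sol = []
    for col in range(3):  # Cramer's rule for the normal equations
        mc = [row[:] for row in m]
        for i in range(3):
            mc[i][col] = rhs[i]
        sol.append(_det3(mc) / det)
    d, e, f = sol
    a, b = -d / 2.0, -e / 2.0
    r2 = a * a + b * b - f
    return (a, b, math.sqrt(r2)) if r2 > 0 else None


def segment_circle_distance(points, circle):
    """Distance of Eq. (6) as described in the text: max over points of | ||p - c|| - r |."""
    a, b, r = circle
    return max(abs(math.hypot(x - a, y - b) - r) for x, y in points)


def meets_distance_criterion(points, limit):
    """Least-squares fit, accepted only if the Eq. (6) distance is below `limit`.
    `limit` stands in for the value of Eq. (7), which is not reproduced here."""
    circle = fit_circle(points)
    if circle is None or segment_circle_distance(points, circle) >= limit:
        return None
    return circle
```

Using the maximum rather than the mean deviation means a single stray point is enough to reject a fit, which matches the "maximum distance" wording of Equation (6).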

2.4. The HS Algorithm

After obtaining the point sets of line segments and arc segments (collectively termed “segments”), we add all segments to the segment library. A bottom-up hierarchical search (HS) algorithm then incrementally constructs candidate arcs and their parameters. The algorithm first fits circle parameters to each segment by least squares. It then iteratively identifies and merges the most suitable adjacent segments, continuing until no further beneficial merge is possible (see Algorithm 2).

| Algorithm 2: Calculate candidate circle parameters | |

| Input: all segments derived from Section 2.2 | |

| : | |

| 1 | //: A function that fits circular parameters via least squares, returns parameters if its distance criterion is met, see Equations (6) and (7); otherwise, returns None. |

| 2 | |

| 3 | |

| 4 | |

| 5 | |

| 6 | |

| 7 | Q |

| 8 | |

| 9 | |

| 10 | < |

| 11 | End FOR EACH |

| 12 | THEN: |

| 13 | |

| 14 | |

| 15 | |

| 16 | THEN: |

| 17 | End FOR EACH |

| 18 | THEN: |

| 19 | |

| 20 | GOTO line 2 |

| 21 | End IF |

| 22 | |

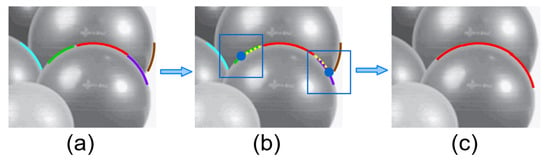

In line 4, a search function identifies potential candidate segments for combination by examining square search regions that extend beyond both endpoints of the current segment within the segment library. The square regions have a fixed side length, and their positions are determined as follows: a segment without circle parameters is extended outward along its line direction by a fixed distance, whereas a segment with circle parameters is extended along its arc by a fixed arc length. A segment is identified as a candidate when any of its points fall within a search region. Figure 2 illustrates the procedure: (a) the image with the identified arcs; (b) the central arc (red) is extended in both directions along its fitted circle (yellow dashed lines), the extension points (blue dots) serve as the centers of square search regions (blue squares), and candidate segments are found within these regions (cyan and green on the left; purple and brown on the right); (c) the optimal combination is sought, merging the relevant segments into a unified arc (red, green, and purple combined).
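The computation of the search-region centers can be sketched as below. The extension distance `dist` and square side `side` are hypothetical parameters, since their values are not given in this section, and the arc case assumes the arc runs counter-clockwise from its first to its last endpoint. All function names are hypothetical.

```python
import math


def line_extension_points(p_start, p_end, dist):
    """Points `dist` beyond each endpoint of a straight segment, along its direction."""
    (x1, y1), (x2, y2) = p_start, p_end
    length = math.hypot(x2 - x1, y2 - y1)
    ux, uy = (x2 - x1) / length, (y2 - y1) / length
    return (x1 - dist * ux, y1 - dist * uy), (x2 + dist * ux, y2 + dist * uy)


def arc_extension_points(p_start, p_end, circle, dist):
    """Points an arc length `dist` beyond each endpoint of an arc on circle (a, b, r).

    Assumption: the arc runs counter-clockwise (math convention) from p_start to p_end.
    """
    a, b, r = circle
    dtheta = dist / r  # arc length -> central angle
    t0 = math.atan2(p_start[1] - b, p_start[0] - a) - dtheta
    t1 = math.atan2(p_end[1] - b, p_end[0] - a) + dtheta
    return ((a + r * math.cos(t0), b + r * math.sin(t0)),
            (a + r * math.cos(t1), b + r * math.sin(t1)))


def in_square(point, center, side):
    """True if `point` lies in the axis-aligned square of side `side` centred at `center`."""
    half = side / 2.0
    return abs(point[0] - center[0]) <= half and abs(point[1] - center[1]) <= half


def find_candidates(endpoints, others, dist, side, circle=None):
    """Indices of segments in `others` (lists of points) with any point inside
    one of the two search squares around the extension points.
    dist and side are hypothetical; their values are not given in the text."""
    if circle is None:
        centers = line_extension_points(*endpoints, dist)
    else:
        centers = arc_extension_points(*endpoints, circle, dist)
    return [i for i, pts in enumerate(others)
            if any(in_square(p, c, side) for p in pts for c in centers)]
```

Extending along the fitted circle rather than the chord lets the search follow the curvature of a short arc, so the next arc of the same circle falls inside a small search square.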

Figure 2.

Schematic representation of extended segment search, using circular arcs as an illustrative example. Various colors signify distinct arcs, while dashed lines denote outward-expanding trajectories, and the blue bounding box delineates the search area, with blue dots marking extension points. Arrows illustrate the algorithmic flow.

2.5. The CPS Algorithm

Section 2.4 yields candidate circle parameters together with their corresponding point sets, which are collectively referred to as the circle parameter library; each entry pairs the edge points of a circle with its parameters (center coordinates and radius). We introduce the circle parameter synthesis (CPS) algorithm to search, aggregate, and refine these parameters. For each circle in the library, the algorithm identifies the five nearest candidate circles in the 3D parameter space, merges their arc points to form potential new circles, and selects the combination with the minimum distance. This procedure repeats until convergence and outputs the refined parameter collection (see Algorithm 3). To accelerate the retrieval of similar circles, we use a kd-tree [37].
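One pass of the CPS merging loop can be sketched as below. This is a simplified, hypothetical rendering of Algorithm 3, not the authors' implementation: the five-nearest-neighbour query is done by brute force as a stand-in for the kd-tree, each circle is merged with at most one neighbour per pass, and the least-squares fit, the Equation (6) distance, and the distance limit are passed in as callables and a number so that the sketch stays self-contained.

```python
import math


def nearest_circles(params, idx, k=5):
    """Indices of the k circles nearest to params[idx] in (a, b, r) space.
    Brute-force stand-in for the kd-tree query used in the paper."""
    ranked = sorted((math.dist(params[idx], p), j)
                    for j, p in enumerate(params) if j != idx)
    return [j for _, j in ranked[:k]]


def cps_round(library, fit, distance, limit, k=5):
    """One hypothetical merge pass over `library`, a list of (points, (a, b, r)).

    Each unused circle tries merging its points with each unused neighbour,
    refits, and keeps the merge with the smallest distance below `limit`.
    Returns the new library and whether any merge happened.
    """
    params = [c for _, c in library]
    used, out, merged = set(), [], False
    for i, (pts_i, _) in enumerate(library):
        if i in used:
            continue
        used.add(i)
        best = None
        for j in nearest_circles(params, i, k):
            if j in used:
                continue
            pts = list(set(pts_i) | set(library[j][0]))
            circle = fit(pts)
            if circle is None:
                continue
            d = distance(pts, circle)
            if d < limit and (best is None or d < best[0]):
                best = (d, j, pts, circle)
        if best is None:
            out.append(library[i])
        else:
            _, j, pts, circle = best
            used.add(j)
            out.append((pts, circle))
            merged = True
    return out, merged


def cps(library, fit, distance, limit, k=5):
    """Repeat merge passes until no pair can be merged (each merge shrinks the library)."""
    merged = True
    while merged:
        library, merged = cps_round(library, fit, distance, limit, k)
    return library
```

In practice `fit` and `distance` would be the least-squares circle fit and the Equation (6) metric; the loop terminates because every successful merge reduces the number of circles by one.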

| Algorithm 3: Circle Space Refinement | |

| Input: circle parameter library | |

| Output: refined circles | |

| 1 | |

| 2 | FOR EACH in : |

| 3 | |

| 4 | |

| 5 | //Select the circle minimizing the distance metric defined in Equation (6). |

| 6 | IF THEN CONTINUE |

| 7 | |

| 8 | |

| 9 | END FOR EACH |

| 10 | |

| 11 | IF THEN |

| 12 | |

| 13 | GOTO line 1 |

| 14 | END IF |

| 15 | RETURN |

2.6. Arc Length Ratio Criteria

After the circle parameters are refined, each circle n is described by the X- and Y-coordinates of its center and its radius, together with the coordinates of the points assigned to it. The arc completion of each circle is then computed and used to select the final circle parameters with Equations (8) and (9), as follows:

where n ranges over all circles detected in Section 2.5. The empirical parameter α is the minimum arc completion threshold. Each circle n has its own completion threshold, and arcs exceeding it are accepted as valid circles. This threshold ranges from α to 0.9 depending on the circle radius, with smaller radii requiring higher completion. As demonstrated in Appendix A, η = 0.9 is the ratio of the digitized to the ideal circle circumference. The output of this step is the set of parameters of the finally detected circles.
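Equations (8) and (9) are not reproduced in this text. A plausible reading, sketched below as an assumption, is that a circle's completion is the number of its edge points divided by the expected number of boundary pixels η·2πr, and that the circle is kept when this ratio exceeds its threshold. The radius-dependent threshold schedule between α and 0.9 is not specified here, so it is left as a caller-supplied function that defaults to the constant α. Function names are hypothetical.

```python
import math

ETA = 0.9    # digitised / ideal circumference ratio (Appendix A)
ALPHA = 0.3  # minimum arc completion (Appendix A)


def arc_completion(num_points, radius, eta=ETA):
    """Assumed form of Eq. (8): detected boundary pixels / expected count eta * 2 * pi * r."""
    return num_points / (eta * 2.0 * math.pi * radius)


def filter_circles(circles, threshold=lambda r: ALPHA, eta=ETA):
    """Keep (points, (a, b, r)) entries whose completion exceeds threshold(r).

    `threshold` is a placeholder: the paper's radius-dependent schedule between
    ALPHA and 0.9 is not reproduced here, so the default is the constant ALPHA.
    """
    return [(pts, c) for pts, c in circles
            if arc_completion(len(pts), c[2], eta) > threshold(c[2])]
```

Dividing by η·2πr rather than 2πr means a fully detected digital circle scores about 1.0 instead of about 0.9, so α can be read directly as a fraction of the circle.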

3. Experiments and Results

3.1. Datasets

We employed the dataset collected by Zhao et al. [14], which comprises four distinct components:

- Dataset-Mini: A benchmark test set containing 10 low-resolution images with fixed pixel dimensions, presenting specular reflection and occlusion challenges, originally established by Akinlar and Topal [11].

- Dataset-GH: A complex real-world collection of 258 grayscale images from diverse environments, exhibiting blurred boundaries, partial occlusions, and substantial radius variations.

- Dataset-PCB: An industrial dataset of 100 printed circuit board (PCB) images with concentric circular structures, containing significant noise and edge blurring that make detection challenging.

- Dataset-MY: A newly compiled dataset of 111 smartphone-captured images featuring multiple circular targets per frame, characterized by cluttered backgrounds, perspective distortions, and elliptical deformations caused by angular perspectives.

These datasets are widely used in circle detection studies [6,7,12], and all images are manually annotated with ground truth.

3.2. Environment

The hardware environment comprised an Intel(R) Core(TM) i7-10700 CPU @ 2.90 GHz (8 cores, 16 threads) and 32 GB of RAM. The software environment was Ubuntu 22.04.4 LTS; the algorithm was implemented in the Rust programming language, using the rayon crate for parallel processing in pRC, HS, and CPS.

3.3. Comparison Metrics

In this study, we pooled the four datasets and evaluated each algorithm's overall performance across all images, using Precision, Recall, and Fscore as defined in Equations (10)–(12).

A detected circle was classified as a true positive (TP) if its intersection-over-union (IoU) with any ground truth label exceeded 0.8; otherwise, it was classified as a false positive (FP). An IoU threshold of 0.8 is commonly adopted in circle detection studies [6,7,14]. Ground truth labels without a corresponding detection were counted as false negatives (FN).
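The matching rule and metrics can be sketched as follows, assuming Equations (10)–(12) are the standard definitions Precision = TP/(TP + FP), Recall = TP/(TP + FN), and Fscore as their harmonic mean. The circle IoU uses the closed-form area of the lens formed by two overlapping discs. In this sketch a detection is matched against any ground truth label without enforcing a one-to-one assignment; the function names are hypothetical.

```python
import math


def circle_iou(c1, c2):
    """Intersection over union of two discs given as (a, b, r)."""
    (x1, y1, r1), (x2, y2, r2) = c1, c2
    d = math.hypot(x2 - x1, y2 - y1)
    area1, area2 = math.pi * r1 * r1, math.pi * r2 * r2
    if d >= r1 + r2:
        inter = 0.0                      # disjoint discs
    elif d <= abs(r1 - r2):
        inter = min(area1, area2)        # one disc contains the other
    else:
        # lens = two circular segments with half-angles t1 and t2
        t1 = math.acos(max(-1.0, min(1.0, (d * d + r1 * r1 - r2 * r2) / (2 * d * r1))))
        t2 = math.acos(max(-1.0, min(1.0, (d * d + r2 * r2 - r1 * r1) / (2 * d * r2))))
        inter = r1 * r1 * (t1 - math.sin(2 * t1) / 2) + r2 * r2 * (t2 - math.sin(2 * t2) / 2)
    return inter / (area1 + area2 - inter)


def evaluate(detections, ground_truth, iou_thr=0.8):
    """Precision, Recall and Fscore with the IoU > iou_thr matching rule.
    Assumes standard definitions for Eqs. (10)-(12); matching is not one-to-one."""
    tp = sum(any(circle_iou(d, g) > iou_thr for g in ground_truth) for d in detections)
    fp = len(detections) - tp
    fn = sum(not any(circle_iou(d, g) > iou_thr for d in detections) for g in ground_truth)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    fscore = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, fscore
```

For concentric circles the IoU reduces to the ratio of the squared radii, so with the 0.8 threshold a detection concentric with a label must have a radius within roughly 10% of the labelled one.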

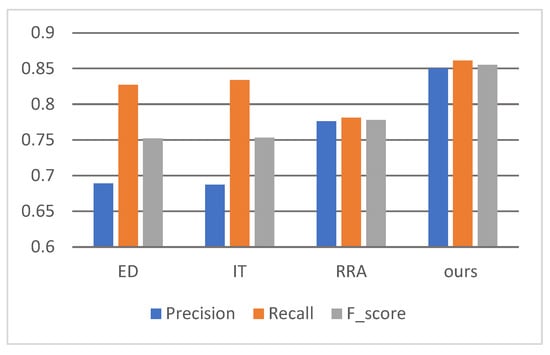

3.4. Performance

To evaluate our method's performance, we compared it with three established circle detection methods: the EDCircles algorithm (ED) [12], the inscribed triangles method (IT) [14], and the regionalized radius assistance technique (RRA) [7]. Their source code is publicly available; ED and IT are implemented in C++, and RRA in MATLAB. The C++ code was compiled with GCC 11.4.0, and the MATLAB scripts were run in MATLAB 2018b. All comparative methods have performed well in prior circle detection research, and the hyperparameters of each were set to the optimal values specified in the respective original papers. The performance of each algorithm is summarized in Table 1 and plotted as a bar chart in Figure 3. Our algorithm outperformed the comparative methods on all metrics, surpassing the second-best algorithm by 7.2% in Fscore. Our approach also showed more consistent values across the three metrics than the other methods, suggesting lower sensitivity to variations in data distribution and, in turn, broader applicability across practical scenarios. The fifth column of Table 1 presents the average execution time of each algorithm across all images. Our algorithm requires more computation time than the real-time circle detectors ED and IT; nevertheless, with an average runtime under 0.8 s, it remains practical for many applications.

Table 1.

The performance comparison of the algorithms.

Figure 3.

The performance of all algorithms using a bar chart.

Representative experimental results are shown in Figure 4. ED misses circles in some images while producing more false positives in others. IT generally produces more false positives. RRA shows balanced performance but yields inferior results compared with our method.

Figure 4.

The circle detection samples, arranged from left to right as follows: the raw image, ground truth, and the detection results of ED, IT, RRA, and ours. All algorithms exhibited some false or missed detections, but our method performed better overall. The red circles shown in the figures indicate the circular shapes detected by the algorithms.

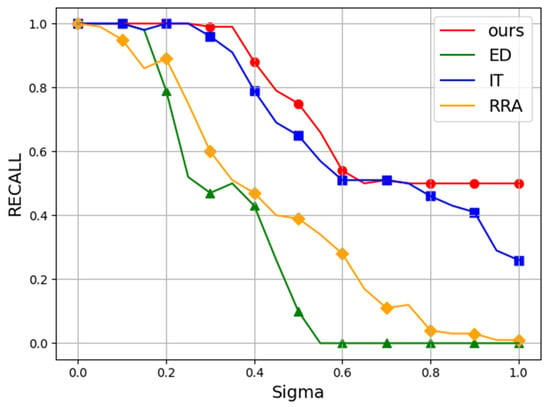

3.5. Noise Test

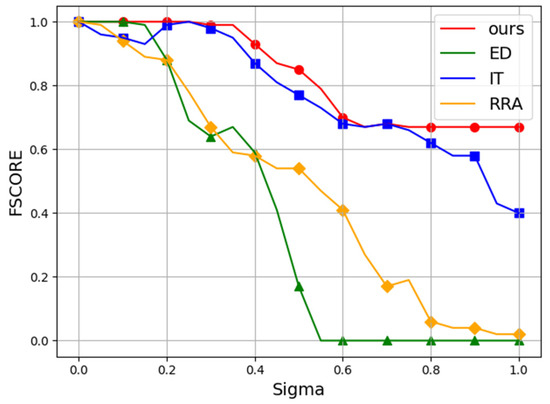

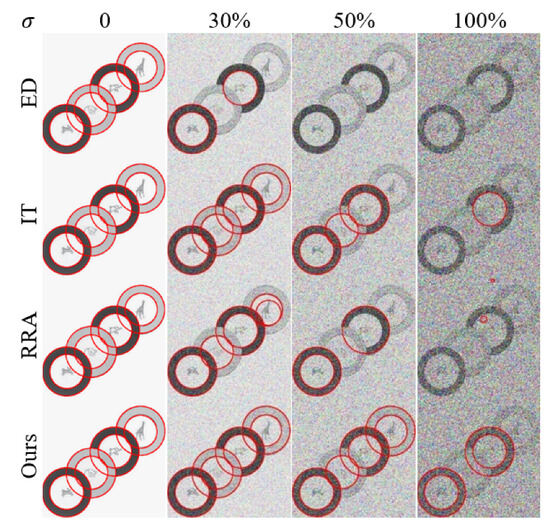

We also evaluated the robustness of each algorithm against Gaussian noise. Following conventional practice, we used the 400 × 300 “plates” image from Dataset-Mini. Starting from a Gaussian noise level of σ = 0%, we increased the noise intensity in 5% increments up to σ = 100%. The noise was added to the original image, and pixel values were then clipped to the valid intensity range. Experiments were repeated 10 times at each noise level, and the Recall and Fscore results were averaged. The averaged results are plotted as line charts in Figure 5 and Figure 6, and Figure 7 shows representative detection outputs of the algorithms at different noise levels.
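The noise protocol can be sketched as below, assuming an 8-bit grayscale image stored as a list of rows and interpreting a noise level of σ% as a standard deviation of σ% of the 255 intensity range; both are assumptions, as the text does not state the intensity scale. The function name is hypothetical.

```python
import random


def add_gaussian_noise(image, sigma_percent, seed=None):
    """Add zero-mean Gaussian noise to an 8-bit grayscale image (list of rows).

    Assumption: sigma is given as a percentage of the 255 intensity range;
    results are rounded and clipped to [0, 255].
    """
    rng = random.Random(seed)
    sigma = sigma_percent / 100.0 * 255.0
    return [[min(255, max(0, round(v + rng.gauss(0.0, sigma)))) for v in row]
            for row in image]


# Noise levels used in the experiment: 0%, 5%, ..., 100% (21 levels).
noise_levels = list(range(0, 101, 5))
```

Averaging over 10 repetitions per level, as described above, would amount to calling the function with 10 different seeds and averaging the resulting Recall and Fscore values.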

Figure 5.

The Recall performance of all algorithms under noise interference. Red, green, blue, and yellow represent our method, ED, IT, and RRA, respectively. The vertical axis represents Recall, and the horizontal axis denotes the standard deviation (σ) of the applied Gaussian noise, ranging from 0% to 100%.

Figure 6.

The Fscore performance of all algorithms under noise interference. The vertical axis represents Fscore, and the horizontal axis denotes the standard deviation (σ) of the applied Gaussian noise, ranging from 0% to 100%.

Figure 7.

The outcomes of each algorithm on images with increasing noise (σ = 0–100%). Each row represents a different algorithm, while columns indicate noise levels. The red circles shown in the figures indicate the circular shapes detected by the algorithms.

As Figure 7 shows, our algorithm consistently avoided false positives even under high noise, where some other algorithms falter. Table 2 and Table 3 list the Recall and Fscore of each method at each noise level. Our method outperforms the competing algorithms, achieving an Fscore of 0.85 even at a noise level of 50%.

Table 2.

The Recall score of all methods on Gaussian noise perturbations.

Table 3.

The Fscore of all methods on Gaussian noise perturbations.

4. Conclusions

This study proposes an automatic circle detection framework that requires no manual parameter tuning. Following the divide-and-conquer principle and a bottom-up search, the algorithm detects circles in four stages. First, the EDPF algorithm extracts continuous boundary points, eliminating the dependence on the Gaussian filter and threshold settings of the Canny operator. Second, pRC divides the image into local areas for adaptive detection of line segments and arcs. Third, HS and CPS dynamically merge adjacent geometric structures to approximate the true circle parameters. Finally, the arc length ratio criteria filter out pseudo-circles. The contributions are threefold: (1) a region-based local parameter adaptation mechanism converts global hyperparameters into dynamic regional computations, removing the burden of parameter tuning in complex scenarios; (2) intuitive parameters aligned with human perception improve algorithmic transparency; and (3) geometry-constrained hierarchical structure aggregation effectively resolves arc discontinuities caused by occlusion. Experiments demonstrated an Fscore of 85.5% on industrial and natural scene datasets, a 7.3% improvement over the second-best method. Owing to parameter adaptation and hierarchical optimization, the framework is also more robust than existing algorithms. Its core design could be extended to related tasks such as ellipse detection, offering generalizable solutions for industrial quality inspection and medical image analysis.

Author Contributions

Conceptualization, L.H. and K.C.; data curation, L.H., Y.X. and G.L.; formal analysis, L.H., Y.X. and G.L.; funding acquisition, Y.Z., K.C. and J.L.; methodology, L.H. and K.C.; project administration, Y.Z., G.Y. and J.L.; resources, K.C.; software, L.H.; supervision, Y.Z., G.Y. and J.L.; validation, Y.Z., K.C., G.Y. and J.L.; writing—original draft, L.H.; writing—review and editing, Y.Z. and J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Natural Science Foundation of China (No. 62406211), the Natural Science Foundation of Sichuan Province (No. 2024NSFSC0654 and No. 2023NSFSC0636), and the Enterprise Commissioned Technology Development Project of Sichuan University (No. 18H0832).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this study was obtained from the GitHub repository at https://github.com/zikai1/CircleDetection, accessed on 30 November 2024.

Acknowledgments

The authors extend their sincere gratitude to collaborators from Sichuan Testing Center of Medical Devices (Chengdu, China) for their professional guidance, and to the Sichuan University Biomedical Engineering Experimental Teaching Center (Chengdu, China) for its invaluable support and the provision of advanced facilities.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| EDPF | Edge Drawing Parameter-Free algorithm |
| CHT | Circle Hough Transform |
| RANSAC | Random sample consensus |
| pRC | Patch-based random sample consensus algorithm |
| HS | Hierarchical search algorithm |
| CPS | Circle parameter synthesis algorithm |

Appendix A. Parameters and Their Determination Methodologies

The parameters used throughout this study, their assigned values, and the rationale for each are listed in Table A1.

Table A1.

Parameters, their values, and explanations.


| Parameter | Value | Explanation |
|---|---|---|
| K | 3 px | Minimum perceptible distance discernible by human vision. |
| λ | 0.05 | Minimum length difference discrimination ratio between line segments. |
| α | 0.3 | Empirical minimum arc completion of a genuine circle. |
| η | 0.9 | Ratio of digitized to ideal circle circumference. |
|  | 16 px | Minimum length of a valid line segment. |
|  | 32 px | Side length of the square patches used to detect local segments. |
|  | 16 px | Sliding stride of the square patches used to detect local segments. |

1. Value of K

K represents the minimum distinguishable pixel distance: pixels separated by less than this threshold are considered perceptually connected. Its actual value depends on the angular resolution of human vision, the viewing distance, and the display resolution, which makes theoretical determination prohibitively difficult. In this study, we followed similar research works [38,39] and assigned K a value of 3 pixels.

2. Value of λ

The parameter λ is the discrimination threshold for the lengths of two non-adjacent line segments, corresponding to the Weber fraction for length perception. Ono's experiments [40] showed that, under non-simultaneous viewing conditions, the human eye's response threshold to length stimuli varied between 2.16% and 5.12% across experimental configurations. Subsequent research by Wang et al. [41] established a discrimination threshold range of 3.10% to 5.18% for object size variation. In this study, we set λ = 0.05 to accommodate a broad range of application scenarios.

3. Value of α

The parameter α is the arc completion threshold used during the screening stage to identify true circles. During circle parameter fitting, some local noise may be incorrectly combined into circular shapes, although such combinations generally do not form extensive arcs. An appropriately chosen α filters these false positives while preserving valid detections, whereas an excessively large α may eliminate genuine circles whose arcs are incomplete because of detection gaps or occlusion. Through empirical observation, we found that when an appropriately sized arc exceeded 30% completion, humans consistently perceived it as a complete circle. We therefore set α to 0.3.

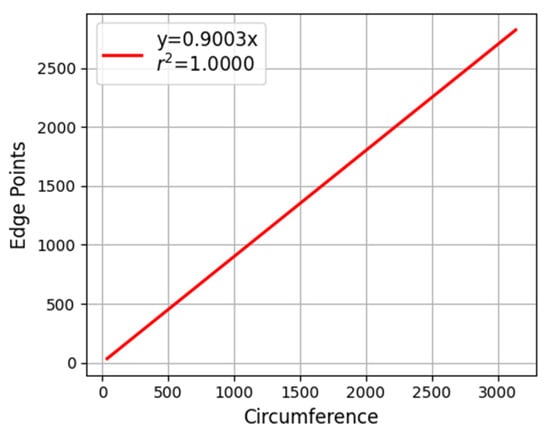

4. Value of the boundary-pixel ratio

Mathematically, a circle of radius $r$ has circumference $2\pi r$. In a digital image, however, what matters is the number of boundary pixels of the rasterized circle. To relate the boundary pixel count to the theoretical circumference, we used the Bresenham algorithm [42] to generate circles with radii from 6 to 500 pixels, counted their boundary pixels, and compared the counts with the corresponding theoretical circumferences. The results are shown in Figure A1: the boundary pixel count is strongly linearly correlated with the theoretical circumference, and their ratio is approximately 0.9.

Figure A1. Linear relationship between the theoretical circumference of circles of different radii and the number of their boundary pixels.
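The ratio can be reproduced approximately with a short experiment. The sketch below uses the integer midpoint circle algorithm, a Bresenham-style circle rasterizer; the exact rasterizer and counting procedure used in the study may differ. For an 8-connected digital circle, each octant contributes roughly r/√2 pixels, so the count approaches 4√2·r and its ratio to 2πr approaches 2√2/π ≈ 0.900, in agreement with the value above.

```python
import math

def midpoint_circle(r):
    """Return the set of pixels of a digital circle of radius r centred at the origin."""
    pixels = set()
    x, y = r, 0
    d = 1 - r  # decision variable of the midpoint circle algorithm
    while y <= x:
        # Mirror the first-octant pixel into all eight octants (a set removes duplicates).
        for px, py in ((x, y), (y, x), (-y, x), (-x, y),
                       (-x, -y), (-y, -x), (y, -x), (x, -y)):
            pixels.add((px, py))
        y += 1
        if d <= 0:
            d += 2 * y + 1
        else:
            x -= 1
            d += 2 * (y - x) + 1
    return pixels

radii = range(6, 501)
counts = [len(midpoint_circle(r)) for r in radii]
circ = [2 * math.pi * r for r in radii]
# Least-squares slope of pixel count versus circumference, fitted through the origin.
slope = sum(n * c for n, c in zip(counts, circ)) / sum(c * c for c in circ)
print(f"fitted ratio: {slope:.3f}")  # asymptotic value: 2*sqrt(2)/pi, about 0.900
```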

5. Values of the minimum segment length, patch size, and sliding stride

Using the boundary-pixel ratio of approximately 0.9 obtained above, let $r_{\min}$ denote the radius of the smallest detectable circle; the boundary of this circle then contains approximately $0.9 \times 2\pi r_{\min}$ pixels. For convenience of calculation, this length was rounded down to 16 pixels and adopted as the minimum length of a valid line segment, which is consistent with the work of Jia et al. [43] and Zhao et al. [14]. The patch size follows from this minimum length. For a circle with a large radius, the arc within a patch is approximately a straight line, so ideally a patch whose side equals the minimum segment length would suffice to capture all arc points. Because the extracted boundaries are often discontinuous, however, the patch must be enlarged; we therefore set the patch side to 32 pixels, twice the minimum segment length. An overly large patch increases the computational burden and the false-detection rate, whereas an overly small patch produces an excessive number of detection results. Finally, we set the sliding stride to 16 pixels, half the patch side, so that adjacent patches overlap by half their side and a line segment crossing the boundary of one patch can still be fully detected in an adjacent patch.
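The derivation above can be sketched as follows. The radius r_min = 3 pixels is an assumption made for illustration: it is the only integer radius for which 0.9 × 2π r_min (≈ 16.96) rounds down to 16. The function names and the rule of adding a final window flush with the image border are likewise hypothetical.

```python
import math

BOUNDARY_RATIO = 0.9  # boundary pixels per unit circumference (item 4)

def derive_patch_params(r_min=3):
    """Return (minimum segment length, patch side, sliding stride) in pixels."""
    l_min = math.floor(BOUNDARY_RATIO * 2 * math.pi * r_min)  # 16.96 -> 16 for r_min = 3
    patch = 2 * l_min   # enlarged to tolerate discontinuous boundaries
    stride = l_min      # half-patch overlap between neighbouring windows
    return l_min, patch, stride

def patch_origins(width, height, patch, stride):
    """Top-left corners of square sliding windows covering a width x height image."""
    def starts(extent):
        s = list(range(0, max(extent - patch, 0) + 1, stride))
        if s[-1] + patch < extent:  # cover the remaining strip at the border
            s.append(extent - patch)
        return s
    return [(x, y) for y in starts(height) for x in starts(width)]

l_min, patch, stride = derive_patch_params()
print(l_min, patch, stride)  # 16 32 16
print(len(patch_origins(100, 64, patch, stride)))  # 18
```

With a stride of half the patch side, any segment whose horizontal and vertical extents are at most 16 pixels fits entirely inside at least one window, which is the property the half-overlap is meant to provide.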

References

1. Berkaya, S.K.; Gunduz, H.; Ozsen, O.; Akinlar, C.; Gunal, S. On Circular Traffic Sign Detection and Recognition. Expert Syst. Appl. 2016, 48, 67–75.

2. Jovančević, I.; Larnier, S.; Orteu, J.-J.; Sentenac, T. Automated Exterior Inspection of an Aircraft with a Pan-Tilt-Zoom Camera Mounted on a Mobile Robot. J. Electron. Imaging 2015, 24, 061110.

3. Xu, R.; Zhao, X.; Liu, F.; Tao, B. High-Precision Monocular Vision Guided Robotic Assembly Based on Local Pose Invariance. IEEE Trans. Instrum. Meas. 2023, 72, 1–12.

4. Alomari, Y.M.; Sheikh Abdullah, S.N.H.; Zaharatul Azma, R.; Omar, K. Automatic Detection and Quantification of WBCs and RBCs Using Iterative Structured Circle Detection Algorithm. Comput. Math. Methods Med. 2014, 2014, 979302.

5. Puente, J.M.R.; Garnica, J.M.R.; Isáis, C.A.G. Measuring Hardness System Based on Image Processing. In Proceedings of the 2024 21st International Conference on Electrical Engineering, Computing Science and Automatic Control (CCE), Mexico City, Mexico, 23–25 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–5.

6. Ou, Y.; Deng, H.; Liu, Y.; Zhang, Z.; Lan, X. An Anti-Noise Fast Circle Detection Method Using Five-Quadrant Segmentation. Sensors 2023, 23, 2732.

7. Xu, X.; Yang, R.; Wang, N. A Robust Circle Detector with Regionalized Radius Aid. Pattern Recognit. 2024, 149, 110256.

8. Guo, J.; Yang, Y.; Xiong, X.; Yang, Y.; Shao, M. Brake Disc Positioning and Defect Detection Method Based on Improved Canny Operator. IET Image Process. 2024, 18, 1283–1295.

9. Yang, K.; Yu, L.; Xia, M.; Xu, T.; Li, W. Nonlinear RANSAC with Crossline Correction: An Algorithm for Vision-Based Curved Cable Detection System. Opt. Lasers Eng. 2021, 141, 106417.

10. Meng, Y.; Zhang, Z.; Yin, H.; Ma, T. Automatic Detection of Particle Size Distribution by Image Analysis Based on Local Adaptive Canny Edge Detection and Modified Circular Hough Transform. Micron 2018, 106, 34–41.

11. Akinlar, C.; Topal, C. EDPF: A real-time parameter-free edge segment detector with a false detection control. Int. J. Patt. Recogn. Artif. Intell. 2012, 26, 1255002.

12. Akinlar, C.; Topal, C. EDCircles: A Real-Time Circle Detector with a False Detection Control. Pattern Recognit. 2013, 46, 725–740.

13. Liu, W.; Yang, X.; Sun, H.; Yang, X.; Yu, X.; Gao, H. A Novel Subpixel Circle Detection Method Based on the Blurred Edge Model. IEEE Trans. Instrum. Meas. 2021, 71, 1–11.

14. Zhao, M.; Jia, X.; Yan, D.-M. An Occlusion-Resistant Circle Detector Using Inscribed Triangles. Pattern Recognit. 2021, 109, 107588.

15. Desolneux, A.; Moisan, L.; Morel, J.-M. From Gestalt Theory to Image Analysis: A Probabilistic Approach; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2007; Volume 34.

16. Yao, Z.; Yi, W. Curvature Aided Hough Transform for Circle Detection. Expert Syst. Appl. 2016, 51, 26–33.

17. Adem, K. Exudate Detection for Diabetic Retinopathy with Circular Hough Transformation and Convolutional Neural Networks. Expert Syst. Appl. 2018, 114, 289–295.

18. An, Y.; Cao, Y.; Sun, Y.; Su, S.; Wang, F. Modified Hough Transform Based Registration Method for Heavy-Haul Railway Rail Profile with Intensive Environmental Noise. IEEE Trans. Instrum. Meas. 2025, early access.

19. Seifozzakerini, S.; Yau, W.-Y.; Mao, K.; Nejati, H. Hough Transform Implementation for Event-Based Systems: Concepts and Challenges. Front. Comput. Neurosci. 2018, 12, 103.

20. Smereka, M.; Dulęba, I. Circular Object Detection Using a Modified Hough Transform. Int. J. Appl. Math. Comput. Sci. 2008, 18, 85–91.

21. Memiş, A.; Albayrak, S.; Bilgili, F. A New Scheme for Automatic 2D Detection of Spheric and Aspheric Femoral Heads: A Case Study on Coronal MR Images of Bilateral Hip Joints of Patients with Legg-Calve-Perthes Disease. Comput. Methods Programs Biomed. 2019, 175, 83–93.

22. Kim, H.-S.; Kim, J.-H. A Two-Step Circle Detection Algorithm from the Intersecting Chords. Pattern Recognit. Lett. 2001, 22, 787–798.

23. Scitovski, R.; Majstorović, S.; Sabo, K. A Combination of RANSAC and DBSCAN Methods for Solving the Multiple Geometrical Object Detection Problem. J. Glob. Optim. 2021, 79, 669–686.

24. Ma, S.; Guo, P.; You, H.; He, P.; Li, G.; Li, H. An Image Matching Optimization Algorithm Based on Pixel Shift Clustering RANSAC. Inf. Sci. 2021, 562, 452–474.

25. Barath, D.; Matas, J. Graph-Cut RANSAC: Local Optimization on Spatially Coherent Structures. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 4961–4974.

26. Li, Z.; Shan, J. RANSAC-Based Multi Primitive Building Reconstruction from 3D Point Clouds. ISPRS J. Photogramm. Remote Sens. 2022, 185, 247–260.

27. Khan, R.U.; Almakdi, S.; Alshehri, M.; Haq, A.U.; Ullah, A.; Kumar, R. An Intelligent Neural Network Model to Detect Red Blood Cells for Various Blood Structure Classification in Microscopic Medical Images. Heliyon 2024, 10, e26149.

28. Jiang, L. A Fast and Accurate Circle Detection Algorithm Based on Random Sampling. Future Gener. Comput. Syst. 2021, 123, 245–256.

29. Chiu, S.-H.; Lin, K.-H.; Wen, C.-Y.; Lee, J.-H.; Chen, H.-M. A Fast Randomized Method for Efficient Circle/Arc Detection. Int. J. Innov. Comput. Inf. Control 2012, 8, 151–166.

30. Lopez-Martinez, A.; Cuevas, F.J. Automatic Circle Detection on Images Using the Teaching Learning Based Optimization Algorithm and Gradient Analysis. Appl. Intell. 2019, 49, 2001–2016.

31. Ayala-Ramirez, V.; Garcia-Capulin, C.H.; Perez-Garcia, A.; Sanchez-Yanez, R.E. Circle Detection on Images Using Genetic Algorithms. Pattern Recognit. Lett. 2006, 27, 652–657.

32. Mekhalfi, M.L.; Nicolo, C.; Bazi, Y.; Rahhal, M.M.A.; Alsharif, N.A.; Maghayreh, E.A. Contrasting YOLOv5, Transformer, and EfficientDet Detectors for Crop Circle Detection in Desert. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

33. Yue, X.; Li, H.; Shimizu, M.; Kawamura, S.; Meng, L. YOLO-GD: A Deep Learning-Based Object Detection Algorithm for Empty-Dish Recycling Robots. Machines 2022, 10, 294.

34. Gai, R.; Chen, N.; Yuan, H. A Detection Algorithm for Cherry Fruits Based on the Improved YOLO-v4 Model. Neural Comput. Appl. 2023, 35, 13895–13906.

35. Liu, G.; Nouaze, J.C.; Touko Mbouembe, P.L.; Kim, J.H. YOLO-Tomato: A Robust Algorithm for Tomato Detection Based on YOLOv3. Sensors 2020, 20, 2145.

36. Zhang, T.Y.; Suen, C.Y. A Fast Parallel Algorithm for Thinning Digital Patterns. Commun. ACM 1984, 27, 236–239.

37. Bentley, J.L. Multidimensional Binary Search Trees Used for Associative Searching. Commun. ACM 1975, 18, 509–517.

38. Yuan, H.; Hao, Y.-G.; Liu, J.-M. Research on Multi-Sensor Image Matching Algorithm Based on Improved Line Segments Feature. In Proceedings of the ITM Web of Conferences; EDP Sciences: Les Ulis, France, 2017; Volume 11, p. 05001.

39. Bachiller-Burgos, P.; Manso, L.J.; Bustos, P. A Variant of the Hough Transform for the Combined Detection of Corners, Segments, and Polylines. J. Image Video Process. 2017, 2017, 32.

40. Ono, H. Difference Threshold for Stimulus Length under Simultaneous and Nonsimultaneous Viewing Conditions. Atten. Percept. Psychophys. 1967, 2, 201–207.

41. Wang, L.; Sandor, C. Can You Perceive the Size Change? Discrimination Thresholds for Size Changes in Augmented Reality. In Proceedings of the International Conference on Virtual Reality and Mixed Reality, Milan, Italy, 24–26 November 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 25–36.

42. Bresenham, J.E. Algorithm for Computer Control of a Digital Plotter. In Seminal Graphics; ACM: New York, NY, USA, 1998; pp. 1–6. ISBN 978-1-58113-052-2.

43. Jia, Q.; Fan, X.; Luo, Z.; Song, L.; Qiu, T. A Fast Ellipse Detector Using Projective Invariant Pruning. IEEE Trans. Image Process. 2017, 26, 3665–3679.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).