Abstract

To bridge the modality gap between camera images and LiDAR point clouds in autonomous driving systems, a critical challenge made worse by the inability of current fusion methods to integrate cross-modal features effectively, we propose the Cross-Modal Fusion (CMF) framework. This attention-driven architecture performs hierarchical multi-sensor data fusion and achieves state-of-the-art performance in semantic segmentation. The CMF framework first projects point clouds into the camera coordinate system via perspective projection, which provides spatial depth information for the RGB images. A two-stream feature extraction network then extracts features from the two modalities separately, and a residual cross-modal fusion (RCF) module based on cross-modal attention fuses them at multiple levels. Finally, we design a perceptual alignment loss that integrates cross-entropy with feature matching terms, reducing the semantic discrepancy between the camera and LiDAR representations during fusion. Experiments on the SemanticKITTI and nuScenes benchmark datasets show that CMF achieves mean intersection over union (mIoU) scores of 64.2% and 79.3%, respectively, outperforming existing state-of-the-art methods in accuracy and showing greater robustness in complex scenarios. Ablation studies further confirm that cross-modal attention and the perceptually guided cross-entropy loss (Pgce) strengthen feature interaction and fusion in the segmentation model, improving both segmentation accuracy and robustness.

Keywords:

cross-modal fusion; deep learning; semantic segmentation; point cloud; image; multi-sensor

1. Introduction

With the development of deep learning, intelligent perception of semantic scenes has been applied in many fields, including autonomous driving, smart cities, robot navigation, and industrial control [1,2,3,4]. Among these, semantic scene understanding plays a crucial role in autonomous driving, where semantic segmentation is the key technology for perceiving the surrounding environment. Deep learning-based semantic segmentation methods recognize the environment pixel by pixel or point by point, identifying important targets around the road and classifying them into semantic categories. This helps autonomous vehicles plan driving routes more accurately and make more intelligent decisions [5,6].

Currently, in-vehicle mobile platforms mainly collect two kinds of data: images and point clouds [7,8]. Images provide rich texture and boundary features, but they are sensitive to changes in lighting conditions and lack spatial structure information, which may lead to inaccurate semantic segmentation of the scene [9,10]. In comparison, point clouds accurately reflect the spatial structure and provide accurate 3D coordinates of the surrounding environment, which increases the accuracy and robustness of semantic segmentation and provides more comprehensive semantic information [11,12,13]. However, point cloud-based semantic segmentation methods face challenges because in-vehicle point clouds are sparse and provide no texture or color information [14,15]. It is therefore important to study how images and point clouds can be fused effectively for in-vehicle point cloud semantic segmentation, so that the strengths of each modality compensate for the weaknesses of the other. Despite emerging research efforts on multimodal learning for autonomous driving [16,17], current approaches exhibit several critical limitations: oversimplified feature fusion strategies, insufficient capability to handle dynamic objects and sparse point distributions, and excessive dependence on pre-trained models or multi-stage optimization frameworks. Consequently, developing in-vehicle point cloud semantic segmentation methods based on optimized multimodal fusion remains both technologically significant and scientifically challenging.

Based on the aforementioned issues, we leverage the complementary advantages of point cloud and image data to achieve deep cross-modal feature interaction through cross-modal attention, and propose a cross-modal attention-based multi-sensor feature fusion method (CMF). Unlike existing methods [18,19], we emphasize the importance of fusing RGB image appearance information with point cloud spatial depth information during semantic segmentation. Building on this concept, we propose a perception-oriented multi-sensor fusion scheme that coordinates the fusion of perceptual information from the two data modalities in three respects. First, we use perspective projection to project the point cloud onto the camera coordinate system, thereby obtaining the spatial depth information corresponding to the RGB image and spatially aligning the data. Second, we construct a dual-stream feature extractor (SDNet), consisting of a camera stream and a LiDAR stream, which extracts multi-dimensional perceptual features from the multimodal sensors. Considering the instability of image information in outdoor environments, we design a residual cross-modal fusion (RCF) module based on cross-modal attention, which injects the fused features into the LiDAR stream. This not only combines the complementary strengths of point cloud and image data, but also keeps the fusion between modalities effective under dynamic changes. Finally, we propose a perception-guided cross-entropy loss function that measures the perceptual differences between the two data modalities, improving the reliability of perceptual information fusion.

Our contributions are summarized as follows: (1) We propose a cross-modal attention-driven multi-sensor fusion semantic segmentation method (CMF) that effectively aligns RGB image appearance information with point cloud spatial depth information. (2) We design a dual-stream feature extraction network and achieve multi-level fusion of the two streams through a residual fusion module based on cross-modal attention, enhancing the interaction and fusion of point cloud and image features. (3) We design a perception-guided cross-entropy loss function that measures the perceptual differences between the two modalities, improving the reliability of the fusion results. (4) Experiments on the SemanticKITTI and nuScenes benchmark datasets demonstrate the effectiveness of the proposed method.

2. Related Work

According to the sensors used, the existing semantic segmentation methods can be broadly divided into three categories: camera-based methods, LiDAR-based methods, and multi-sensor fusion-based methods.

2.1. Camera-Based Methods

Camera images are rich in texture, color, and other appearance information. Camera-based methods perform semantic segmentation by assigning a category label to each pixel of the input image. The Fully Convolutional Network (FCN) [20], a landmark network, was the first to extend convolutional neural networks to pixel-level semantic segmentation, and it surpassed traditional segmentation methods in accuracy and efficiency by introducing a fully convolutional architecture and multi-scale feature fusion. Building on FCN, many classical semantic segmentation models have been proposed, such as U-Net [21], SegNet [22], and the DeepLab series [23,24]. Among semantic segmentation methods for in-vehicle images, DDRNet [25] strengthens the information exchange between its context and detail branches through bilateral connections, balancing accuracy and efficiency. More recently, PIDNet [26] introduced the idea of a proportional–integral–derivative (PID) controller into convolutional networks, fusing detail and context branches through a boundary attention mechanism, which markedly improves segmentation accuracy at some cost in efficiency. Transformer-based methods, such as SETR [27] and SegFormer [28], model global context through self-attention but have high computational complexity. Self-supervised methods such as DenseCL [29] improve feature representations through contrastive learning, reducing the reliance on labeled data. However, camera-only methods are strongly affected by illumination and viewing angle and lack stereo (3D) information and reliability, which may lead to incomplete segmentation results.

2.2. LiDAR-Based Methods

The 3D point clouds acquired by LiDAR contain rich spatial structure and geometric information and are more robust in complex scenes. LiDAR-based methods can be categorized into direct and indirect methods: direct methods take the original point clouds as input, whereas indirect methods take structurally transformed point clouds as input. PointNet [30], a representative direct method, was the first to use multi-layer perceptrons (MLPs) to process point clouds, but it ignores the importance of local features. PointNet++ [31] builds on PointNet and improves point cloud segmentation by extracting local features through repeated sampling and grouping. RandLA-Net [32] uses random sampling to improve computational efficiency and a local feature aggregation module to capture local context, enabling efficient segmentation of massive point clouds. Cylinder3D [33] is designed for outdoor point cloud segmentation; it uses cylindrical partitioning and an asymmetric 3D convolutional network to preserve the 3D geometric structure and properties. In addition, it includes a point-wise refinement module that reduces the interference of lossy voxel-based label encoding, which yields excellent segmentation results. SphereFormer [34] aggregates information from dense nearby points to sparse distant points and designs radial window self-attention to enlarge the receptive field, which significantly improves the segmentation accuracy of outdoor point clouds. However, direct methods usually have high computational complexity, which limits their application in autonomous driving. Indirect methods convert 3D point clouds into 2D grids through projection. This 2D representation allows researchers to build efficient network architectures on existing 2D convolutional networks [35,36].
RangeNet++ [37] is an early representative of the indirect methods; it applies 2D convolutional networks to spherical projections of the point cloud. SalsaNext [38] improves the representation and processing of point clouds by taking range view (RV) maps generated by spherical projection as input. More recently, RangeFormer [39] converts point clouds into 2D range view representations, models long-range dependencies, and trains on low-resolution 2D range images using a scalable range view training strategy (STR) to achieve efficient 3D point cloud segmentation. The Point Transformer family [40,41,42] introduces transformer layers designed specifically for point clouds, using self-attention to extract features directly from unordered point clouds without converting them into voxels or grids. However, indirect methods that rely only on point clouds still struggle to segment sparse point clouds that lack texture information.

2.3. Multi-Sensor Fusion-Based Methods

Multi-sensor fusion-based methods improve the accuracy and robustness of 3D point cloud semantic segmentation by integrating camera images and LiDAR point clouds to exploit the complementary advantages of both [43,44]. RGBAL [45] converts RGB images into a polar grid mapping representation and introduces early and mid-level fusion strategies. PointPainting [46] projects image semantic information onto point clouds, enhancing the model's ability to perceive semantics and thus improving the accuracy of point cloud semantic segmentation. In PMF [47], point clouds are converted into distance images so that point cloud and image features can be fused at multiple levels in 2D space via residual-based attention. Moreover, 2DPASS [16] enhances the representation learning of 3D semantic segmentation networks by distilling multimodal knowledge into a single point cloud modality. 2D3DNet [17] leverages labeled 2D image data to generate reliable 3D pseudo-labels: using a multi-view fusion strategy, it back-projects the 2D predictions onto 3D point clouds, enabling supervised training of a 3D semantic segmentation model without expensive 3D annotations. Although these methods have advanced multi-sensor fusion research, they still suffer from insufficient cross-modal feature interaction and rely on a single fusion strategy. Like PMF, the CMF framework fuses the multimodal data collaboratively in the camera coordinate system. By integrating camera images and LiDAR point clouds through a residual fusion module based on cross-modal attention, it achieves thorough interaction and deep fusion of the two modal features, thereby improving the accuracy and robustness of point cloud semantic segmentation.

3. Network Principle and Design

In this paper, we propose a cross-modal attention-driven multi-sensor fusion method for point cloud semantic segmentation (CMF), aimed at effectively fusing the perceptual information from RGB camera images and LiDAR point clouds. The CMF framework consists of four main parts: (1) data preprocessing, i.e., perspective projection of the point cloud; (2) feature extraction, i.e., a two-stream feature extraction module with a LiDAR stream and a camera stream; (3) feature fusion, i.e., a residual fusion module based on cross-modal attention; and (4) a combined loss function that includes the proposed perception-guided cross-entropy loss. The CMF pipeline proceeds as follows. First, the point cloud is projected onto the camera coordinate system via perspective projection. Then, a dual-stream feature extractor extracts perceptual features from both modalities, and the fused cross-modal features are injected into the LiDAR stream. Finally, the perception-guided cross-entropy loss is incorporated into network optimization.

3.1. Dimensional Unification Based on Perspective Projection

Existing methods for unifying the dimensions of 3D point clouds and 2D images fall mainly into two categories: spherical projection and perspective projection. Spherical projection projects the 2D images onto the LiDAR coordinates; however, because point clouds are sparse, much of the image information is lost in this process. To avoid this problem, we use perspective projection to unify the dimensions of the 3D point clouds and the 2D images, projecting the sparse 3D point clouds onto the camera coordinates to retain as much of the image pixel information as possible. Dimension unification proceeds as follows: first, the extrinsic matrix transforms the point cloud from the LiDAR coordinate system to the camera coordinate system; then, the point cloud is projected onto the camera's normalized image plane; finally, the intrinsic matrix converts the normalized coordinates into pixel coordinates.

Let $\{P, X, \mathbf{y}\}$ be one of the training samples from a given dataset, where $P \in \mathbb{R}^{N \times 4}$ represents a point cloud from LiDAR, $N$ represents the number of points, and 4 represents the four features $(x_i, y_i, z_i, r_i)$ of each point in $P$, i.e., its 3D coordinates and a reflectance value $r_i$. Let $X \in \mathbb{R}^{H \times W \times 3}$ be an image from the RGB camera, where $H$ and $W$ represent the height and width of the image, respectively, and let $\mathbf{y} \in \mathbb{R}^{N}$ be the set of semantic labels for point cloud $P$.

In perspective projection, we aim to project the point cloud $P$ from the LiDAR coordinate system into the camera coordinate system to obtain the 2D LiDAR features $\tilde{X} \in \mathbb{R}^{H \times W \times C}$, where $C$ is the number of channels of the projected point cloud. To match the shape of the transformation matrices, a fourth component equal to 1 is appended to the coordinates of each point, giving the homogeneous vector $\hat{p}_i = (x_i, y_i, z_i, 1)^{\top}$, and the projected coordinates $\tilde{p}_i = (\tilde{x}_i, \tilde{y}_i, \tilde{z}_i)^{\top}$ of each point in the camera coordinate system are computed as

$$\tilde{p}_i = T R \hat{p}_i \tag{1}$$

where $T \in \mathbb{R}^{3 \times 4}$ is the projection matrix from the LiDAR coordinate system to the camera coordinate system, and $R \in \mathbb{R}^{4 \times 4}$ is obtained by zero-padding the rotation matrix $R^{(0)} \in \mathbb{R}^{3 \times 3}$ with a fourth row and column and setting $R(4,4) = 1$. The coordinates of the projected point on the image are calculated as follows:

$$u_i = \frac{\tilde{x}_i}{\tilde{z}_i} \tag{2}$$

$$v_i = \frac{\tilde{y}_i}{\tilde{z}_i} \tag{3}$$

where $\tilde{x}_i$ and $\tilde{y}_i$ are the horizontal and vertical coordinates of point $i$ in the camera coordinate system, and $\tilde{z}_i$ is its coordinate in the depth direction, i.e., the distance from the point to the camera. Dividing by $\tilde{z}_i$ in Equations (2) and (3) takes the depth of each point into account, so that the perspective effect of near and far objects is correctly rendered on the image. The resulting normalized coordinates $(u_i, v_i)$ are then mapped to the pixel coordinates of the image to determine where the point lies in the image.
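The three projection steps described above (extrinsic transform, perspective division, intrinsic mapping) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the 4×4 LiDAR-to-camera extrinsic matrix, the 3×3 intrinsic matrix, the flooring of pixel coordinates, and the rule of keeping the nearest point when several points fall on the same pixel are all assumptions, and the helper names (`project_point`, `project_to_feature_map`) are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def mat_vec(m: Matrix, v: Sequence[float]) -> List[float]:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


def project_point(
    point: Sequence[float],  # (x, y, z, r) in LiDAR coordinates
    extrinsic: Matrix,       # assumed 4x4 LiDAR-to-camera transform
    intrinsic: Matrix,       # assumed 3x3 camera matrix K
    height: int,
    width: int,
) -> Optional[Tuple[int, int, float]]:
    """Return (row, col, depth) of the projected point, or None if not visible."""
    x, y, z, _reflectance = point
    # Step 1: homogeneous coordinates, then LiDAR -> camera coordinates.
    xc, yc, zc, _ = mat_vec(extrinsic, [x, y, z, 1.0])
    if zc <= 0.0:
        return None  # the point lies behind the camera
    # Step 2: perspective division onto the normalized image plane.
    xn, yn = xc / zc, yc / zc
    # Step 3: the intrinsic matrix maps normalized coordinates to pixels.
    u, v, _ = mat_vec(intrinsic, [xn, yn, 1.0])
    col, row = math.floor(u), math.floor(v)
    if 0 <= row < height and 0 <= col < width:
        return row, col, zc
    return None  # outside the camera's field of view


def project_to_feature_map(points, extrinsic, intrinsic, height, width):
    """Scatter the 4 point features into an H x W x 4 map (nearest point wins)."""
    fmap = [[[0.0] * 4 for _ in range(width)] for _ in range(height)]
    depth = [[math.inf] * width for _ in range(height)]
    for p in points:
        hit = project_point(p, extrinsic, intrinsic, height, width)
        if hit is None:
            continue
        row, col, zc = hit
        if zc < depth[row][col]:
            depth[row][col] = zc
            fmap[row][col] = list(p)
    return fmap
```

In practice the matrices would come from the dataset calibration files (KITTI, for example, ships a rectification matrix and per-camera projection matrices), and the per-point loop would be vectorized on the GPU.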

3.2. CMF Network Structure

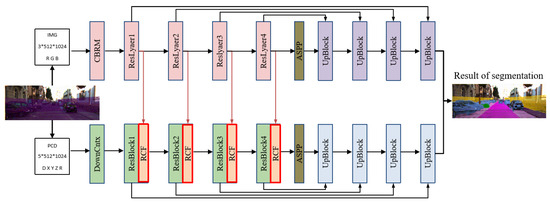

Since images and point clouds are data of different modalities, it is difficult for a single network to handle the features of both. Inspired by related methods [40,48], we propose a cross-modal fusion-based point cloud segmentation network (CMFNet), whose structure is shown in Figure 1. The network contains a two-stream feature extractor that processes camera images and LiDAR point clouds, respectively; a residual cross-modal attention fusion (RCF) module that combines the complementary strengths of image and point cloud features; and a pair of up-sampling modules with cross-layer (skip) connections that gradually restore the high-dimensional feature maps to the input resolution.

Figure 1.

Structure of the CMF network.

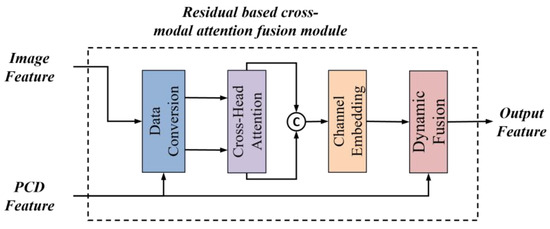

3.3. Residual Fusion Module Based on Cross-Modal Attention

Because image features capture the details of many objects in the scene while point cloud features retain more spatial geometric information, we designed a residual fusion module based on cross-modal attention, as shown in Figure 2. The module consists of four submodules: data conversion, the crosspath module, channel embedding, and dynamic fusion. Data conversion projects the features into a suitable representation space, the crosspath module integrates the different inputs, the channel embedding submodule performs further feature extraction, and dynamic fusion combines the resulting features. The overall process aims to generate more expressive features for downstream tasks such as classification, object detection, or semantic segmentation. This structure allows the model to better capture the relationships between different data sources when handling multimodal data.

Figure 2.

Structure of the fusion module.

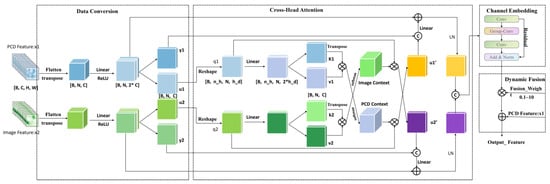

Data conversion consists of two identical channel projection modules that process the input point cloud features and image features, respectively, as shown in Figure 3. Channel projection maps the original channel features onto a lower-dimensional space to reduce computational complexity and enhance inter-channel correlation. The cross-attention submodule then performs cross-attention between the projected features, u1 and u2, so that each can attend to the other and gather information from it. The key steps of the cross-attention computation are as follows. First, u1 and u2 are linearly projected into queries (q) and keys/values (k, v). Then, the attention mechanism computes the weights between each query and its corresponding keys, enabling targeted information aggregation.

Figure 3.

Detailed structure of RCF.

Finally, these attention weights are used to compute a weighted sum of the values, producing the cross-attention output. The channel embedding submodule embeds the channels of the two attended features to further transform their channel representations. The dynamic fusion submodule assigns weights to the channel-embedded fused features and the original inputs and then fuses them by element-wise summation. A dynamic weight parameter controls the fusion weights, allowing the model to learn how to balance the embedded features against the original features.
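The channel projection, cross-attention, and dynamic fusion steps described above can be illustrated with a small pure-Python sketch of standard scaled dot-product cross-attention. It is an assumption-laden illustration rather than the RCF module itself: the bias-free linear projections, the 1/sqrt(d) scaling, and the residual form `original + alpha * embedded` for dynamic fusion are our assumptions, and all function names are hypothetical. Features are stored as a list of tokens (for example, pixels), each a list of channel values.

```python
import math
from typing import List

Mat = List[List[float]]  # rows are tokens (e.g. pixels), columns are channels


def linear(x: Mat, w: Mat) -> Mat:
    """Bias-free linear projection: (n x d_in) times (d_in x d_out)."""
    return [[sum(row[k] * w[k][j] for k in range(len(w))) for j in range(len(w[0]))]
            for row in x]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(u_q: Mat, u_kv: Mat, wq: Mat, wk: Mat, wv: Mat) -> Mat:
    """Tokens of one modality (queries) attend to tokens of the other (keys/values)."""
    q, k, v = linear(u_q, wq), linear(u_kv, wk), linear(u_kv, wv)
    scale = 1.0 / math.sqrt(len(k[0]))
    out = []
    for qi in q:
        # Attention weights between this query and every key.
        weights = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
        # Weighted sum of the values.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


def dynamic_fusion(embedded: Mat, original: Mat, alpha: float) -> Mat:
    """Assumed residual fusion: original + alpha * embedded, element-wise.

    In a trained network alpha would be a learnable parameter.
    """
    return [[o + alpha * e for e, o in zip(e_row, o_row)]
            for e_row, o_row in zip(embedded, original)]
```

For bidirectional exchange, one call would use the LiDAR features as queries and the image features as keys/values, and a second call would swap the roles; channel projection corresponds to calling `linear` with a weight matrix whose output width is smaller than its input width.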

3.4. Perceptual Guided Cross-Entropy Loss Function

Because point clouds are sparse, the LiDAR stream network tends to learn only local point features and ignores the shape of objects. In contrast, the camera stream can capture the shape, color, and texture of an object from the dense pixels of an image. As a result, the perceptual features obtained by the camera stream and the LiDAR stream differ substantially. To address this problem, we introduce a perceptual guided cross-entropy loss function that helps the fusion network focus on the fused features from the camera and LiDAR streams. The loss is designed to establish consistency and complementarity between the point cloud data and the camera data, enabling more accurate perception.

Here, the perceptual guided cross-entropy loss consists of two perceptual loss terms, defined on the probability maps of the LiDAR stream and the camera stream, respectively, together with an absolute difference loss term that measures the absolute difference between the point cloud and image outputs and is scaled by a dynamic weight. The perceptual loss term is computed as follows:

To measure the perceptual difference between the LiDAR data and the camera data, the cross-entropy loss is used to compute the difference between the two probability distributions, and guide weights are used to weight the point cloud data in the loss calculation according to their information importance and a threshold mask. Thus, when the confidence of the LiDAR data is high, the guide weights are larger and the LiDAR data contribute more significantly to the loss. To compute the guide weights, points whose information importance is greater than zero are weighted by the absolute value of their information importance and then further weighted by an adaptive weight function. Finally, the weights are normalized so that they sum to one.

The entropy is computed over all semantic categories from the probability distribution output by each branch. Entropy measures the uncertainty, or information content, of a probability distribution: in general, a larger entropy indicates higher uncertainty, i.e., a flatter and less stable distribution. We normalize the entropy, and the confidence level is then computed from the normalized entropy. For example, the camera stream tends to be more confident in the interior of an object but may be less confident at its edges. Therefore, a point cloud confidence mask is created by comparing the confidence with a predefined threshold. These masks determine which information is considered reliable in the subsequent calculations.
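The entropy-based confidence and threshold mask described above can be sketched as follows. Two details are assumptions because the exact formulas are not reproduced here: the entropy is normalized by dividing by log(C), where C is the number of categories, so that it lies in [0, 1], and confidence is taken as one minus the normalized entropy. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def normalized_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy divided by log(C): 1 for a uniform and 0 for a one-hot distribution."""
    num_classes = len(probs)
    h = -sum(p * math.log(p) for p in probs if p > 0.0)
    return h / math.log(num_classes)


def confidence(probs: Sequence[float]) -> float:
    """Assumed confidence: one minus the normalized entropy."""
    return 1.0 - normalized_entropy(probs)


def confidence_mask(prob_per_point: Sequence[Sequence[float]], threshold: float) -> List[bool]:
    """True where a point's confidence exceeds the predefined threshold."""
    return [confidence(p) > threshold for p in prob_per_point]
```

A one-hot prediction yields confidence 1 and a uniform prediction yields confidence 0, so the threshold directly controls how peaked a prediction must be before it is treated as reliable.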

Here, the information importance of the i-th data point is computed as the difference between the confidence levels of the LiDAR stream and the camera stream. A positive value indicates that the LiDAR stream is more reliable than the camera stream at that point; a negative value indicates the opposite.
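The information importance, guide weights, and weighted cross-entropy described in this subsection can be sketched as follows. This is a hedged reconstruction, not the paper's exact formula: we assume the LiDAR distribution acts as the target and the camera distribution as the prediction in the cross-entropy, and the adaptive weight function is not specified in the text, so it appears here as a hypothetical `adaptive` argument that defaults to the identity.

```python
import math
from typing import Callable, List, Sequence


def information_importance(conf_lidar: Sequence[float], conf_camera: Sequence[float]) -> List[float]:
    """Positive where the LiDAR stream is more confident than the camera stream."""
    return [cl - cc for cl, cc in zip(conf_lidar, conf_camera)]


def guide_weights(
    importance: Sequence[float],
    mask: Sequence[bool],
    adaptive: Callable[[float], float] = lambda s: s,  # hypothetical adaptive weight function
) -> List[float]:
    """Keep masked points with positive importance, weight by |importance|, normalize to sum 1."""
    raw = [adaptive(abs(s)) if (m and s > 0.0) else 0.0 for s, m in zip(importance, mask)]
    total = sum(raw)
    if total == 0.0:
        return [0.0] * len(raw)  # no point qualifies, so the term contributes nothing
    return [r / total for r in raw]


def guided_cross_entropy(lidar_probs, camera_probs, weights, eps: float = 1e-12) -> float:
    """Weighted sum over points of the cross-entropy H(p_lidar, p_camera)."""
    loss = 0.0
    for w, p_l, p_c in zip(weights, lidar_probs, camera_probs):
        ce = -sum(pl * math.log(max(pc, eps)) for pl, pc in zip(p_l, p_c))
        loss += w * ce
    return loss
```

Because the weights are normalized to sum to one, the result is a weighted average of per-point cross-entropies dominated by points where LiDAR is both confident and more reliable than the camera.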

The total loss combines the Focal Loss [49], the Lovász-Softmax loss [50], and the perceptual guided cross-entropy loss, with two hyperparameters, λ and γ, balancing the different losses.

In addition to the perceptual guided cross-entropy loss, we train the constructed network model using the Focal Loss, a loss function commonly used in existing segmentation work, as well as the Lovász-Softmax loss (Lov loss for short).
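The combined loss can be sketched as follows, with the Focal Loss and the Lovász-Softmax loss written out in plain Python. The Focal Loss follows its standard form, -alpha * (1 - p_t)^g * log(p_t), and the Lovász-Softmax routine follows the standard "present classes" variant; because the combined equation is not reproduced here, the assignment of λ to the Lovász term and γ to the perceptual term is an assumption. The focal focusing parameter is named `focusing` to avoid confusion with the loss weight γ, and all function names are hypothetical.

```python
import math
from typing import List, Sequence


def focal_loss(probs: Sequence[Sequence[float]], labels: Sequence[int],
               focusing: float = 2.0, alpha: float = 1.0, eps: float = 1e-12) -> float:
    """Mean focal loss -alpha * (1 - p_t)^focusing * log(p_t) over points."""
    total = 0.0
    for p, y in zip(probs, labels):
        pt = max(p[y], eps)
        total += -alpha * (1.0 - pt) ** focusing * math.log(pt)
    return total / len(labels)


def _lovasz_grad(gt_sorted: List[float]) -> List[float]:
    """Gradient of the Lovász extension of the Jaccard loss for sorted ground truth."""
    gts = sum(gt_sorted)
    jaccard, cum_fg, cum_bg = [], 0.0, 0.0
    for g in gt_sorted:
        cum_fg += g
        cum_bg += 1.0 - g
        jaccard.append(1.0 - (gts - cum_fg) / (gts + cum_bg))
    return [jaccard[0]] + [jaccard[i] - jaccard[i - 1] for i in range(1, len(jaccard))]


def lovasz_softmax(probs: Sequence[Sequence[float]], labels: Sequence[int], num_classes: int) -> float:
    """Lovász-Softmax loss averaged over the classes present in the labels."""
    losses = []
    for c in range(num_classes):
        fg = [1.0 if y == c else 0.0 for y in labels]
        if sum(fg) == 0.0:
            continue  # skip classes absent from the ground truth
        errors = [abs(f - p[c]) for f, p in zip(fg, probs)]
        order = sorted(range(len(errors)), key=lambda i: errors[i], reverse=True)
        grad = _lovasz_grad([fg[i] for i in order])
        losses.append(sum(errors[i] * g for i, g in zip(order, grad)))
    return sum(losses) / len(losses) if losses else 0.0


def total_loss(l_focal: float, l_lovasz: float, l_pgce: float,
               lam: float = 0.5, gamma: float = 0.5) -> float:
    """Assumed combination: focal + lam * Lovász + gamma * perceptual guided loss."""
    return l_focal + lam * l_lovasz + gamma * l_pgce
```

The Lovász-Softmax term directly optimizes a surrogate of per-class IoU, which complements the point-wise Focal Loss; the defaults of 0.5 mirror the λ and γ values reported in the implementation details.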

4. Experimental Results Processing and Analysis

In this section, we evaluate the performance of the CMF framework on the SemanticKITTI and nuScenes benchmark datasets. SemanticKITTI is a large-scale dataset based on the KITTI odometry benchmark, providing semantic annotations for 43,000 LiDAR scans, of which 21,000 scans (sequences 00–10) are available for training and validation. The dataset covers 19 semantic categories for evaluation. The nuScenes dataset contains 1000 driving scenes captured under diverse weather and lighting conditions. These scenes are divided into 28,130 training frames and 6019 validation frames, collected with a Velodyne HDL-32E sensor. Unlike SemanticKITTI, which only provides front-view camera images, nuScenes includes data from six cameras, providing multiple viewpoints that can be associated with the LiDAR data.

4.1. Implementation Details

Using the PyTorch 1.8.1 framework, we implement the proposed method and use ResNet-34 [50] and SalsaNext as the backbone networks for the camera stream and the LiDAR stream, respectively. For the LiDAR stream, we adjust SalsaNext so that the numbers of channels of the feature maps used in cross-modal fusion are (64, 128, 256, 512), matching those of ResNet-34. Since we process the point clouds in the camera coordinate system, an ASPP module is incorporated into the LiDAR stream network to adaptively adjust the receptive field. For the camera stream, we use an existing image classification backbone and initialize ResNet-34 with parameters pre-trained on ImageNet. During training, we use a hybrid optimization approach, i.e., the SGD [51] optimizer for the camera stream and the Adam [52] optimizer for the LiDAR stream. All experiments were conducted on a system equipped with a GeForce RTX 3090 GPU (24 GB VRAM). To reduce memory consumption and accelerate training, we used Automatic Mixed Precision (AMP). Based on extensive experiments, the hyperparameters λ and γ in the combined loss were both set to 0.5, which gave the best performance. For SemanticKITTI, we used a batch size of 4 and 40 training epochs, with the learning rate starting at 0.00025 and decaying to 0. For nuScenes, a larger batch size of 24 and 150 training epochs were adopted because of the sparser point clouds, with the learning rate initialized at 0.001 and likewise decayed to 0 using cosine scheduling [52].
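The per-dataset training settings listed above can be collected into a small configuration, paired with a cosine learning-rate decay to zero. This is an illustrative sketch: the `TrainConfig` class and `cosine_lr` helper are hypothetical, and stepping the schedule once per epoch (rather than per iteration) is an assumption. In PyTorch, `torch.optim.lr_scheduler.CosineAnnealingLR` with `T_max` set to the number of epochs and `eta_min=0` follows the same closed form when stepped once per epoch.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    batch_size: int
    epochs: int
    base_lr: float
    lam: float = 0.5    # loss weight λ
    gamma: float = 0.5  # loss weight γ


SEMANTIC_KITTI = TrainConfig(batch_size=4, epochs=40, base_lr=0.00025)
NUSCENES = TrainConfig(batch_size=24, epochs=150, base_lr=0.001)


def cosine_lr(cfg: TrainConfig, epoch: int) -> float:
    """Cosine decay from base_lr at epoch 0 down to 0 at epoch == cfg.epochs."""
    progress = min(max(epoch / cfg.epochs, 0.0), 1.0)
    return 0.5 * cfg.base_lr * (1.0 + math.cos(math.pi * progress))
```

For SemanticKITTI, this schedule gives half the initial rate at epoch 20 and reaches zero at epoch 40.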

4.2. Comparisons of Benchmark Datasets

4.2.1. Results Based on SemanticKITTI

To evaluate our method on SemanticKITTI, we compare the CMF framework with several well-established LiDAR-only methods, including SalsaNext, Cylinder3D, and others. Since SemanticKITTI only provides images from a front-facing camera, we project the point clouds onto the perspective view and retain only the points that fall within the images, constructing a subset of SemanticKITTI. Sequence 08 is used for validation, and the remaining sequences (00–07 and 09–10) are used for training.

As seen in Table 1, the CMF framework achieves the highest segmentation accuracy for the other-vehicle, building, fence, and pole categories, and the second-best accuracy for the motorcycle, road, parking, sidewalk, vegetation, trunk, terrain, traffic-sign, and other categories. Across the different scenes in SemanticKITTI, the CMF framework effectively segments various types of vehicles (e.g., bicycles, motorcycles, and trucks) and different types of roads (e.g., sidewalks and vehicular roads). It also accurately recognizes other elements, such as parking lots, traffic signs, pedestrians, buildings, and green belts. Compared with point cloud projection-based methods, the CMF framework outperforms RangeNet++, SalsaNext, and SqueezeSegV3 in segmentation accuracy by 13.3%, 5.1%, and 11.2%, respectively. It also has a significant advantage over the voxel-based SPVNAS and the point-based RandLA-Net. Among LiDAR-camera fusion networks for point cloud semantic segmentation, the CMF framework improves accuracy by 10.0%, 8.3%, and 1.3% over PointPainting, RGBAL, and PMF, respectively.

Table 1.

Comparison on the SemanticKITTI validation set. L denotes methods using only LiDAR; L + C denotes fusion-based methods. Bold numbers indicate the best results.

4.2.2. Results Based on nuScenes

To further validate the fusion strategy and point cloud semantic segmentation performance of the CMF framework, we trained and evaluated the model on the nuScenes dataset. The comparative results with other relevant models are presented in Table 2.

Table 2.

Comparison on the nuScenes validation set. L denotes methods using only LiDAR; L + C denotes fusion-based methods. Bold numbers indicate the best results.

To evaluate our method in more complex scenarios, we compared the CMF framework with state-of-the-art methods on the nuScenes LiDAR segmentation validation set; the results are shown in Table 2. Notably, the point clouds in nuScenes are sparser than those in SemanticKITTI (35k vs. 125k points per frame), which makes 3D segmentation more challenging. Under these conditions, the CMF framework achieved the best performance on the nuScenes validation set. Specifically, it outperformed the best LiDAR-only method, RangeFormer, by 1.2% mIoU (mean intersection over union) and PMF by 2.4% mIoU. These results indicate that the CMF framework can effectively handle challenging segmentation tasks involving extremely sparse point clouds, and they further validate the superiority of its feature interaction.

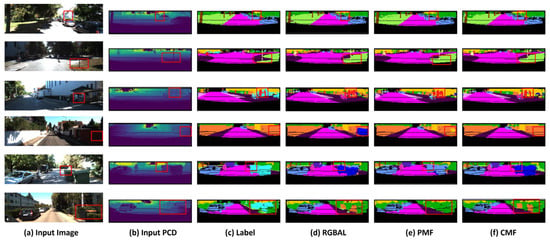

4.3. Qualitative Evaluation

To demonstrate the segmentation accuracy of CMF more intuitively, we visualized the predictions of CMF, RGBAL, and PMF on the SemanticKITTI dataset, using red rectangles to mark the regions of comparison. Figure 4 shows that CMF segments object edges better. In the fourth row, where a rider is on a bicycle, CMF separates the rider from the bicycle more accurately than RGBAL and PMF. Comparing Figure 4c,e, the cars, traffic signs, roads, and other objects segmented by CMF have more complete shapes, and the overall scene segmentation is noticeably better. Meanwhile, comparing Figure 4d,e, CMF outperforms PMF in segmenting distant targets. Therefore, CMF shows better segmentation performance than RGBAL and PMF for distant objects, cluttered (interference) regions, and small targets.

Figure 4.

Visualization of the RGBAL, PMF, and CMF prediction results on the SemanticKITTI dataset.

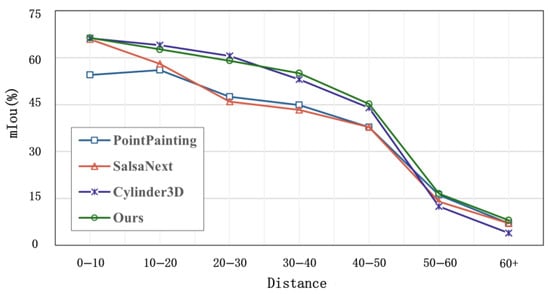

4.4. Distance-Based Evaluation

To evaluate segmentation performance at long range, which is important for autonomous driving safety, we conducted a distance-based evaluation. As shown in Figure 5, the segmentation accuracy of the CMF framework is slightly lower than that of Cylinder3D within 35 m, but exceeds that of Cylinder3D beyond 35 m.
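The distance-based protocol can be sketched as follows: points are grouped into range bins by their distance from the sensor, and mIoU is computed separately within each bin. This is a minimal sketch, not the evaluation code used for Figure 5; the helper names, the use of Euclidean distance from the LiDAR origin, the bin edges, and the convention of averaging only over classes present in a bin are all assumptions.

```python
import numpy as np

def miou(pred, gt, num_classes, ignore_index=0):
    """Mean IoU over classes present in gt or pred; points labeled ignore_index are skipped."""
    valid = gt != ignore_index
    p, g = pred[valid], gt[valid]
    # Confusion matrix: rows are ground-truth classes, columns are predicted classes.
    cm = np.bincount(g * num_classes + p, minlength=num_classes ** 2).reshape(num_classes, num_classes)
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    denom = tp + fp + fn
    present = denom > 0
    present[ignore_index] = False  # assumption: the ignore class never enters the average
    if not present.any():
        return float("nan")
    return float(np.mean(tp[present] / denom[present]))

def miou_by_distance(points, pred, gt, num_classes, edges=(0, 10, 20, 30, 40, 50, np.inf)):
    """Return [((lo, hi), mIoU), ...] for each range bin [lo, hi) in meters (hypothetical bins)."""
    dist = np.linalg.norm(points[:, :3], axis=1)  # Euclidean distance from the LiDAR origin
    results = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        mask = (dist >= lo) & (dist < hi)
        results.append(((lo, hi), miou(pred[mask], gt[mask], num_classes)))
    return results
```

For example, `miou_by_distance(points, pred, gt, 20, edges=(0, 35, np.inf))` would split a SemanticKITTI scan (19 classes plus the unlabeled class 0) at the 35 m threshold discussed above.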

Figure 5.

Distance-based semantic segmentation evaluation on the SemanticKITTI dataset. The point cloud becomes sparser as the distance increases.

We attribute this result to the increasing sparsity of the point clouds with distance: LiDAR-only methods degrade as the points thin out, whereas camera images still provide rich information about distant objects. Through its cross-modal attention mechanism, the CMF framework dynamically fuses high-resolution image texture (e.g., vehicle contours) with precise point cloud depth, so that in distant, sparse regions, image detail compensates for the missing LiDAR points. This further confirms that the CMF framework is well suited to semantic segmentation of sparse LiDAR data.
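To make the fusion idea concrete, the following is a generic scaled dot-product cross-attention step in which LiDAR tokens act as queries over camera tokens and the attended image context is added back through a residual connection. It is an illustrative sketch under stated assumptions, not the RCF module itself: the query/key/value roles, the single attention head, and the projection matrices `w_q`, `w_k`, and `w_v` (random here, learned in practice) are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the maximum for numerical stability before exponentiating.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention_fusion(lidar_feat, image_feat, w_q, w_k, w_v):
    """Hypothetical single-head cross-attention with a residual connection.

    lidar_feat: (N, C) LiDAR tokens; image_feat: (M, C) camera tokens.
    w_q, w_k: (C, D) query/key projections; w_v: (C, C) value projection.
    """
    q = lidar_feat @ w_q                       # (N, D) queries from the LiDAR stream
    k = image_feat @ w_k                       # (M, D) keys from the camera stream
    v = image_feat @ w_v                       # (M, C) values from the camera stream
    scores = q @ k.T / np.sqrt(q.shape[-1])    # (N, M) scaled dot products
    attn = softmax(scores, axis=-1)            # each LiDAR token's weights over camera tokens sum to 1
    return lidar_feat + attn @ v               # residual: LiDAR features plus attended image context

rng = np.random.default_rng(0)
N, M, C, D = 6, 10, 8, 4
lidar = rng.standard_normal((N, C))
image = rng.standard_normal((M, C))
fused = cross_attention_fusion(lidar, image, rng.standard_normal((C, D)),
                               rng.standard_normal((C, D)), rng.standard_normal((C, C)))
print(fused.shape)  # (6, 8)
```

The residual form means that if the value projection carries no information (e.g., `w_v` is all zeros), the output reduces exactly to the LiDAR features, so the image branch can only add context on top of the point cloud representation.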

4.5. Ablation Experiments

4.5.1. Impact of Network Components

We analyze how the individual components of the CMF network affect overall performance: perspective projection (PP), atrous spatial pyramid pooling (ASPP), the simple attention-based residual fusion module (RF), and the cross-attention-based residual fusion module (RCF). The results are detailed in Table 3. To ensure a rigorous comparison, we use a structurally fine-tuned SalsaNext (with spherical projection) as the baseline. Unless otherwise specified, the networks that perform cross-modal feature fusion in these ablation studies are trained with the perceptual difference loss group.
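Perspective projection, used here in place of SalsaNext's spherical projection, maps each LiDAR point into the camera image with a pinhole model. The sketch below assumes a 3x3 intrinsic matrix `K` and a LiDAR-to-camera rotation `R` and translation `t`; dataset-specific details (e.g., KITTI's rectification matrix) are omitted, and the function names are hypothetical. Where several points fall on the same pixel, the nearest one is kept.

```python
import numpy as np

def project_points(points, K, R, t, height, width):
    """Project LiDAR points (N, 3) into pixel coordinates of a pinhole camera.

    Returns integer pixel coordinates u, v, the camera-frame depth, and a mask of
    points that lie in front of the camera and inside the image.
    """
    cam = points @ R.T + t                     # LiDAR frame -> camera frame (R @ p + t per point)
    uvw = cam @ K.T                            # homogeneous pixel coordinates (K @ p per point)
    w = uvw[:, 2]
    in_front = w > 1e-6                        # drop points on or behind the image plane
    w_safe = np.where(in_front, w, 1.0)        # avoid dividing by zero for discarded points
    u = np.floor(uvw[:, 0] / w_safe).astype(int)
    v = np.floor(uvw[:, 1] / w_safe).astype(int)
    valid = in_front & (u >= 0) & (u < width) & (v >= 0) & (v < height)
    return u, v, cam[:, 2], valid

def sparse_depth_image(u, v, depth, valid, height, width):
    """Rasterize projected points into an (H, W) depth map; 0 marks pixels without a LiDAR return."""
    img = np.full((height, width), np.inf)
    # ufunc.at applies the minimum unbuffered, so repeated pixel indices keep the nearest depth.
    np.minimum.at(img, (v[valid], u[valid]), depth[valid])
    img[np.isinf(img)] = 0.0
    return img
```

The resulting depth map is pixel-aligned with the RGB image, which is what allows the two streams to be fused position by position.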

Table 3.

Ablation study of network components on the SemanticKITTI validation set. The symbol “✓” indicates that the module is used.

The results in Table 3 show that, compared with the spherical-projection baseline, using perspective projection improves the mIoU by only 0.4%, which is not a significant gain. However, the third row shows that combining perspective projection with atrous spatial pyramid pooling improves segmentation accuracy by 3% over the spherical-projection baseline, demonstrating the effectiveness of this combination for semantic segmentation of projected range images. Comparing the third and fifth rows, introducing the camera stream in our CMF network improves segmentation accuracy by a further 3.4% over the SalsaNext variant without a camera stream. In addition, comparing the fourth and fifth rows, the cross-attention-based residual fusion module improves accuracy by 0.8% over the simple attention-based residual fusion module. In summary, these results demonstrate the importance of introducing the camera stream for point cloud semantic segmentation and the positive role of the RCF module in cross-modal feature fusion.
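Atrous spatial pyramid pooling runs several dilated convolutions with different rates in parallel, so that the same feature map is seen at multiple receptive-field sizes. The single-channel NumPy sketch below shows only this core idea under simplifying assumptions: the rates (1, 6, 12, 18) are illustrative (DeepLabv3 uses a 1x1 branch plus rates 6, 12, and 18), and the image-pooling branch and the fusing 1x1 convolution of full ASPP designs are omitted.

```python
import numpy as np

def atrous_conv2d(x, kernel, rate):
    """'Same'-size atrous (dilated) cross-correlation of a 2D map with an odd-sized kernel."""
    kh, kw = kernel.shape
    pad_h, pad_w = rate * (kh // 2), rate * (kw // 2)
    xp = np.pad(x, ((pad_h, pad_h), (pad_w, pad_w)))  # zero padding keeps the output size
    h, w = x.shape
    out = np.zeros_like(x, dtype=float)
    for i in range(kh):
        for j in range(kw):
            # Kernel taps are spaced `rate` pixels apart: a larger receptive field with no extra weights.
            out += kernel[i, j] * xp[i * rate:i * rate + h, j * rate:j * rate + w]
    return out

def aspp(x, kernels, rates=(1, 6, 12, 18)):
    """Run one atrous branch per rate in parallel and stack the results as channels."""
    return np.stack([atrous_conv2d(x, k, r) for k, r in zip(kernels, rates)], axis=0)

x = np.random.default_rng(0).standard_normal((32, 32))
features = aspp(x, [np.ones((3, 3)) / 9] * 4)
print(features.shape)  # (4, 32, 32)
```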

4.5.2. Effect of the Loss Function

Beyond the network components, we also investigate how different combinations of loss functions affect model performance. Taking focal loss (Foc) and Lovász-softmax loss (Lov) as the base loss group, we compare the base, perceptual difference, and perceptual guidance groups on the CMF network, and validate that the perceptually guided cross-entropy (Pgce) loss facilitates cross-modal feature fusion.
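For reference, the standard multi-class focal loss, FL(p_t) = -alpha_t (1 - p_t)^gamma log(p_t), which we take to be the "Foc" term of the base group, can be sketched as follows. This is a minimal NumPy version under that assumption; the default `gamma=2.0`, the optional per-class `alpha`, and the `ignore_index` handling are illustrative choices, and the Lovász-softmax term is not shown.

```python
import numpy as np

def focal_loss(logits, labels, gamma=2.0, alpha=None, ignore_index=None):
    """Mean multi-class focal loss over valid points.

    logits: (N, C) raw class scores; labels: (N,) integer class ids.
    alpha: optional (C,) per-class weights; gamma=0 and alpha=None give plain cross-entropy.
    """
    z = logits - logits.max(axis=1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))  # numerically stable log-softmax
    if ignore_index is not None:
        keep = labels != ignore_index
        log_probs, labels = log_probs[keep], labels[keep]
    log_pt = log_probs[np.arange(len(labels)), labels]  # log-probability of the true class
    pt = np.exp(log_pt)
    loss = -((1.0 - pt) ** gamma) * log_pt              # (1 - p_t)^gamma down-weights easy points
    if alpha is not None:
        loss = loss * np.asarray(alpha)[labels]
    return float(loss.mean())
```

Setting `gamma=0` recovers cross-entropy, while larger values shift the training signal toward hard, misclassified points, which helps with the strong class imbalance of driving scenes.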

As Table 4 shows, the CMF framework trained with the base group (first row) reaches a segmentation accuracy of only 61.7% on the validation set, whereas with the perceptual difference group (second row) it reaches 63.6%. With the perceptual guidance group (third row), the CMF reaches 64.2% on the validation set, improving the segmentation accuracy by 2.8% and 0.9% over the base and perceptual difference groups, respectively. These results confirm that the proposed perceptually guided loss significantly improves cross-modal fusion.

Table 4.

Ablation study of the CMF loss function groups on the SemanticKITTI validation set. The symbol “✓” indicates that the corresponding loss function is used.

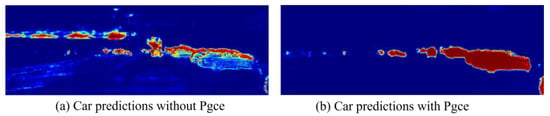

To further illustrate the effect of the perceptually guided cross-entropy (Pgce) loss, Figure 6 shows the predictions of the LiDAR branch with and without it. Taking cars as an example, the CMF model trained with the Pgce loss learns the complete shape of the car, whereas the model trained without it focuses mainly on local features. This further confirms that the Pgce loss helps the LiDAR branch better fuse information from the camera stream.

Figure 6.

Comparison of the prediction results of networks trained with and without the perceptually guided cross-entropy (Pgce) loss. For clarity, only car-related predictions are shown, in red; the redder the area, the higher the predicted probability that it belongs to the car class.

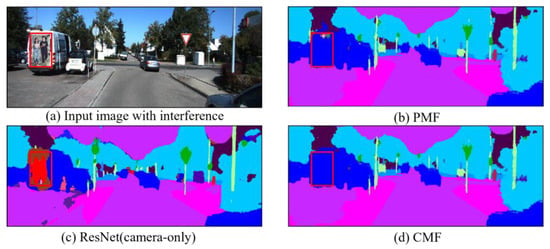

4.6. Adversarial Analysis

When fusing image features, the model is susceptible to interference from misleading visual information. To investigate the robustness of the CMF framework to such interfering samples, we insert additional objects into the image (e.g., the poster of people on the back of the vehicle in Figure 7) while keeping the point clouds unchanged. For comparison, we also run a camera-only method (ResNet) and a camera and LiDAR fusion method (PMF) on the same SemanticKITTI samples. Note that no adversarial training techniques are used during training.
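The perturbation itself is simple: only the image is modified, and the LiDAR scan is passed to the model unchanged. A hypothetical helper for pasting a distractor patch (such as the poster in Figure 7) might look like the sketch below; the patch source, its placement, and the optional alpha blending are assumptions, since the exact insertion procedure is not described.

```python
import numpy as np

def insert_distractor(image, patch, top, left, alpha=1.0):
    """Paste `patch` (h, w, 3) into a copy of `image` (H, W, 3) with its top-left corner at (top, left).

    alpha < 1 blends the patch with the underlying pixels. The point cloud is not touched.
    """
    h, w = patch.shape[:2]
    if top < 0 or left < 0 or top + h > image.shape[0] or left + w > image.shape[1]:
        raise ValueError("patch does not fit inside the image at the requested position")
    out = image.astype(float)  # astype returns a copy, so the original image is never modified
    region = out[top:top + h, left:left + w]
    out[top:top + h, left:left + w] = alpha * patch + (1.0 - alpha) * region
    if np.issubdtype(image.dtype, np.integer):
        out = np.rint(out)     # round before casting back to an integer pixel type
    return out.astype(image.dtype)
```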

Figure 7.

Comparison of CMF with ResNet and PMF in the adversarial experiment. ResNet uses only RGB images as input, while CMF and PMF use both images and point clouds. The inserted interfering objects are highlighted with red boxes. In the predictions, red pixels denote people and blue pixels denote cars.

As shown in Figure 7c, the image-only method is easily misled by distracting objects in the visual data, which biases its segmentation toward the semantic categories of the distractors. In Figure 7b, most of the PMF outputs in the red box match the dark blue labels of the “car” category, yet some erroneous segments clearly reflect interference from the distractor. The CMF framework addresses this limitation with the RCF module, which deepens cross-modal feature interaction through the attention mechanism and thereby integrates the reliable point cloud features. In addition, the Pgce loss further improves the flexibility of the CMF's feature fusion, reducing the impact of visual noise. Consequently, as shown in Figure 7d, the CMF framework correctly segments the car without misclassifying the human figures in the poster as real people.

Nevertheless, although the CMF framework is robust against common distractors (e.g., the poster of people in Figure 7), some limitations remain. Highly camouflaged distractors (e.g., objects with vehicle-like colors or textures) may still cause misclassifications, and in complex dynamic scenarios, the CMF framework is less stable in recognizing fast-moving objects because of LiDAR data sparsity and latency. To mitigate these issues, we plan to integrate dynamic filtering methods in future work to improve the CMF's adaptability to complex real-world environments.

4.7. Efficiency Analysis

In this section, we evaluate the computational efficiency of the CMF framework on an NVIDIA GeForce RTX 3090 GPU. We improve the CMF's efficiency with two strategies. First, since the camera stream's predictions are fused into the LiDAR stream, we prune the camera stream's decoder to accelerate inference. Second, both PMF and our CMF are built on 2D convolutions, which can be readily optimized with existing inference toolkits such as TensorRT.

By contrast, Cylinder3D relies on 3D sparse convolutions, which are inherently less amenable to TensorRT acceleration. Table 5 reports the inference times of the TensorRT-optimized models. The CMF framework achieves state-of-the-art performance on the SemanticKITTI dataset while running 3.1 times faster than Cylinder3D (20.1 ms vs. 62.5 ms) with fewer parameters. Compared with PMF, the CMF framework is about 1.1 times faster (20.1 ms vs. 22.3 ms) and improves the mIoU by 0.9%.
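Latency figures such as those in Table 5 are typically obtained by timing repeated forward passes after a warm-up phase. The sketch below is a framework-agnostic harness under that assumption; `infer` is a hypothetical callable, and for asynchronous GPU backends (including TensorRT engines) it must synchronize with the device before returning, otherwise the measured times would be too short.

```python
import statistics
import time

def measure_latency_ms(infer, sample, warmup=10, iters=100):
    """Median wall-clock latency of infer(sample), in milliseconds, measured after warm-up runs."""
    for _ in range(warmup):
        infer(sample)  # warm-up: lets caches, allocators, and kernel autotuning settle
    times = []
    for _ in range(iters):
        start = time.perf_counter()
        infer(sample)
        times.append((time.perf_counter() - start) * 1e3)
    return statistics.median(times)

def speedup(baseline_ms, candidate_ms):
    """How many times faster the candidate is than the baseline."""
    return baseline_ms / candidate_ms
```

With the reported latencies, `speedup(62.5, 20.1)` is about 3.11 and `speedup(22.3, 20.1)` about 1.11, consistent with the 3.1 times and 1.1 times figures above.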

Table 5.

Inference time of different methods on the SemanticKITTI dataset using TensorRT.

5. Conclusions

This study aims to improve the accuracy of semantic segmentation in autonomous driving road scenes by proposing a multi-sensor fusion semantic segmentation method based on cross-modal attention that fully leverages the complementary strengths of point clouds and images. Unlike the commonly used spherical projection, we employ perspective projection to align point clouds with the camera coordinate system. In addition, we design a cross-modal residual fusion module built around cross-attention, enabling multi-level feature fusion during feature extraction and effectively addressing the insufficient cross-modal feature integration of current methods.

Moreover, during training, we introduce a perceptually guided cross-entropy (Pgce) loss to further enhance cross-modal feature fusion. Experiments on the SemanticKITTI and nuScenes datasets verify the effectiveness of the cross-modal attention residual fusion module and the Pgce loss. The CMF framework achieved the best mIoU scores of 64.5% and 79.3% on the two datasets, respectively. The interference experiments further demonstrate the superiority and robustness of the CMF framework for semantic segmentation with in-vehicle sensor fusion. Although the CMF framework offers some improvements in inference speed and parameter efficiency over PMF, there is still room for further optimization. Additionally, the CMF framework depends on precise sensor calibration, and calibration errors in real-world applications may compromise its robustness.

In future research, we plan to address these limitations by exploring lightweight model designs for low-power devices and conducting further experiments to validate the CMF's adaptability to complex driving environments. Because most cross-modal fusion approaches assume ideal, perfect calibration, deep learning-based automatic sensor alignment and calibration is a promising direction. Furthermore, we intend to extend the ideas and techniques of this method to other tasks, such as object detection, to broaden its practical applications.

Author Contributions

Conceptualization, H.S., X.W., J.Z. and X.H.; methodology, X.W. and J.Z.; software, X.W.; data curation, Xin Wang; writing—original draft preparation, H.S., X.W. and J.Z.; writing—review and editing, X.W. and X.H.; supervision, H.S. and J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (41601409, 41971350, 42171416, 52478037); the National Key Research and Development Program of China (2018YFC0807806); the Open Fund Project of State Key Laboratory of Geoinformation Engineering (SKLGIE2019-Z-3-1); the Fundamental Research Funds for the Central Universities, Beijing University of Civil Engineering and Architecture (X18063); the Beijing Natural Science Foundation Project (8172016); the Open Research Fund of the State Key Laboratory of Surveying, Mapping and Remote Sensing Information Engineering, Wuhan University (19E01); the Open Research Fund Project of the Key Laboratory of Digital Surveying and Land Information Application, Ministry of Natural Resources (ZRZYBWD202102); the Software Science Research Project, Ministry of Housing and Urban-Rural Development (R2020287); the Major Decision-Making Consultation Project, Beijing Social Science Foundation (21JCA004); the National Key Research and Development Program of China during the 14th Five-Year Plan Period, Ministry of Science and Technology (2023YFC3807400).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study were derived from the following resources available in the public domain: [University of Bonn, www.semantic-kitti.org (accessed on 1 March 2025)].

Conflicts of Interest

Author Xin Wang was employed by Beiqi Foton Motor Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Zhang, H.; Li, Z.; Wang, W.; Hu, L.; Xu, J.; Yuan, M.; Wang, Z.; Ren, Y.; Ye, Y. Multi-supervised bidirectional fusion network for road-surface condition recognition. PeerJ Comput. Sci. 2023, 9, e1446.

- Sharma, A.; Sharma, A.; Nikashina, P.; Gavrilenko, V.; Tselykh, A.; Bozhenyuk, A.V.; Masud, M.; Meshref, H. A Graph Neural Network (GNN)-Based Approach for Real-Time Estimation of Traffic Speed in Sustainable Smart Cities. Sustainability 2023, 15, 11893.

- Kim, J.; Lee, C.; Chung, D.; Kim, J. Navigable Area Detection and Perception-Guided Model Predictive Control for Autonomous Navigation in Narrow Waterways. IEEE Robot. Autom. Lett. 2023, 8, 5456–5463.

- Meng, X.; Liu, Y.; Fan, L.; Fan, J. YOLOv5s-Fog: An Improved Model Based on YOLOv5s for Object Detection in Foggy Weather Scenarios. Sensors 2023, 23, 5321.

- Natan, O.; Miura, J. End-to-End Autonomous Driving with Semantic Depth Cloud Mapping and Multi-Agent. IEEE Trans. Intell. Veh. 2022, 8, 557–571.

- Yang, J.; Chen, G.; Huang, J.; Ma, D.; Liu, J.; Zhu, H. GLE-net: Global-local information enhancement for semantic segmentation of remote sensing images. Sci. Rep. 2024, 14, 25282.

- Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. SemanticKITTI: A Dataset for Semantic Scene Understanding of LiDAR Sequences. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9296–9306.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A Multimodal Dataset for Autonomous Driving. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11618–11628.

- Yang, R.; Zheng, C.; Wang, L.; Zhao, Y.; Fu, Z.; Dai, Q. MAE-BG: Dual-stream boundary optimization for remote sensing image semantic segmentation. Geocarto Int. 2023, 38, 2190622.

- Wang, P.; Zhu, J.; Zhu, M.; Xie, Y.; He, H.; Liu, Y.; Guo, L.; Lai, J.; Guo, Y.; You, J. Fast and accurate semantic segmentation of road crack video in a complex dynamic environment. Int. J. Pavement Eng. 2023, 24, 2219366.

- Liu, Y.; Liu, Y.; Duan, Y. MVG-Net: LiDAR Point Cloud Semantic Segmentation Network Integrating Multi-View Images. Remote Sens. 2024, 16, 2821.

- Park, J.; Kim, K.; Shim, H. Rethinking Data Augmentation for Robust LiDAR Semantic Segmentation in Adverse Weather. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024.

- Meyer, G.P.; Charland, J.; Hegde, D.; Laddha, A.; Vallespi-Gonzalez, C. Sensor Fusion for Joint 3d Object Detection and Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Xiao, A.; Zhang, X.; Shao, L.; Lu, S. A Survey of Label-Efficient Deep Learning for 3D Point Clouds. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 9139–9160.

- Vishnupriya Chowdhary, S.; Neelima, N. LiDAR Point Clouds in Autonomous Driving Integrated with Deep Learning: A Tech Prospect. In Proceedings of the 2024 Fourth International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT), Bhilai, India, 11–12 January 2024; pp. 1–5.

- Yan, X.; Gao, J.; Zheng, C.; Zheng, C.; Zhang, R.; Cui, S.; Li, Z. 2DPASS: 2D Priors Assisted Semantic Segmentation on LiDAR Point Clouds. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022.

- Genova, K.; Yin, X.; Kundu, A.; Pantofaru, C.; Cole, F.; Sud, A.; Brewington, B.; Shucker, B.; Funkhouser, T.A. Learning 3D Semantic Segmentation with only 2D Image Supervision. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; pp. 361–372.

- Li, J.; Dai, H.; Ding, Y. Self-Distillation for Robust LiDAR Semantic Segmentation in Autonomous Driving. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022.

- Strudel, R.; Garcia Pinel, R.; Laptev, I.; Schmid, C. Segmenter: Transformer for Semantic Segmentation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, BC, Canada, 11–17 October 2021; pp. 7242–7252.

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015, arXiv:1505.04597.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Chen, L.; Papandreou, G.; Kokkinos, I.; Murphy, K.P.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Chen, L.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv 2017, arXiv:1706.05587.

- Yan, S.; Wu, C.; Wang, L.; Xu, F.; An, L.; Guo, K.; Liu, Y. DDRNet: Depth Map Denoising and Refinement for Consumer Depth Cameras Using Cascaded CNNs. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

- Xu, J.; Xiong, Z.; Bhattacharyya, S. PIDNet: A Real-time Semantic Segmentation Network Inspired by PID Controllers. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 19529–19539.

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.; et al. Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 6877–6886.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Álvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

- Wang, X.; Zhang, R.; Shen, C.; Kong, T.; Li, L. Dense Contrastive Learning for Self-Supervised Visual Pre-Training. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 3023–3032.

- Qi, C.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation Supplementary Material. arXiv 2017, arXiv:1612.00593v2.

- Qi, C.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. arXiv 2017, arXiv:1706.02413.

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, A.; Markham, A. RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11105–11114.

- Zhou, H.; Zhu, X.; Song, X.; Ma, Y.; Wang, Z.; Li, H.; Lin, D. Cylinder3D: An Effective 3D Framework for Driving-scene LiDAR Semantic Segmentation. arXiv 2020, arXiv:2008.01550.

- Lai, X.; Chen, Y.; Lu, F.; Liu, J.; Jia, J. Spherical Transformer for LiDAR-Based 3D Recognition. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 17545–17555.

- Wu, B.; Wan, A.; Yue, X.; Keutzer, K. SqueezeSeg: Convolutional Neural Nets with Recurrent CRF for Real-Time Road-Object Segmentation from 3D LiDAR Point Cloud. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 1887–1893.

- Wu, B.; Zhou, X.; Zhao, S.; Yue, X.; Keutzer, K. SqueezeSegV2: Improved Model Structure and Unsupervised Domain Adaptation for Road-Object Segmentation from a LiDAR Point Cloud. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4376–4382.

- Milioto, A.; Vizzo, I.; Behley, J.; Stachniss, C. RangeNet++: Fast and Accurate LiDAR Semantic Segmentation. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 4213–4220.

- Cortinhal, T.; Tzelepis, G.; Aksoy, E.E. SalsaNext: Fast, Uncertainty-Aware Semantic Segmentation of LiDAR Point Clouds. In Proceedings of the International Symposium on Visual Computing, San Diego, CA, USA, 5–7 October 2020.

- Kong, L.; Liu, Y.; Chen, R.; Ma, Y.; Zhu, X.; Li, Y.; Hou, Y.; Qiao, Y.; Liu, Z. Rethinking range view representation for lidar segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 228–240.

- Engel, N.; Belagiannis, V.; Dietmayer, K.C. Point Transformer. IEEE Access 2021, 9, 134826–134840.

- Wu, X.; Lao, Y.; Jiang, L.; Liu, X.; Zhao, H. Point Transformer V2: Grouped Vector Attention and Partition-Based Pooling. arXiv 2022, arXiv:2210.05666.

- Wu, X.; Jiang, L.; Wang, P.; Liu, Z.; Liu, X.; Qiao, Y.; Ouyang, W.; He, T.; Zhao, H. Point Transformer V3: Simpler, Faster, Stronger. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 4840–4851.

- Li, J.; Dai, H.; Han, H.; Ding, Y. MSeg3D: Multi-Modal 3D Semantic Segmentation for Autonomous Driving. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 21694–21704.

- Yin, R.; Cheng, Y.; Wu, H.; Song, Y.; Yu, B.; Niu, R. FusionLane: Multi-Sensor Fusion for Lane Marking Semantic Segmentation Using Deep Neural Networks. IEEE Trans. Intell. Transp. Syst. 2022, 23, 1543–1553.

- Madawy, K.E.; Rashed, H.; Sallab, A.E.; Nasr, O.A.; Kamel, H.; Yogamani, S.K. RGB and LiDAR fusion based 3D Semantic Segmentation for Autonomous Driving. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 7–12.

- Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. PointPainting: Sequential Fusion for 3D Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4603–4611.

- Zhuang, Z.; Li, R.; Jia, K.; Wang, Q.; Li, Y.; Tan, M. Perception-Aware Multi-Sensor Fusion for 3d Lidar Semantic Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 16280–16290.

- Biswas, D.; Tešić, J. Domain Adaptation with Contrastive Learning for Object Detection in Satellite Imagery. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

- Berman, M.; Triki, A.; Blaschko, M.B. The Lovász-Softmax Loss: A Tractable Surrogate for the Optimization of the Intersection-Over-Union Measure in Neural Networks. arXiv 2018, arXiv:1705.08790.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Loshchilov, I.; Hutter, F. SGDR: Stochastic Gradient Descent with Warm Restarts. arXiv 2016, arXiv:1608.03983v5.

- Ioannou, G.; Tagaris, T.; Stafylopatis, A. AdaLip: An Adaptive Learning Rate Method per Layer for Stochastic Optimization. Neural Process. Lett. 2023, 55, 6311–6338.

- Xu, C.; Wu, B.; Wang, Z.; Zhan, W.; Vajda, P.; Keutzer, K.; Tomizuka, M. SqueezeSegV3: Spatially-Adaptive Convolution for Efficient Point-Cloud Segmentation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

- Tang, H.; Liu, Z.; Zhao, S.; Lin, Y.; Lin, J.; Wang, H.; Han, S. Searching Efficient 3D Architectures with Sparse Point-Voxel Convolution. arXiv 2020, arXiv:2007.16100.

- Zhang, Y.; Zhou, Z.; David, P.; Yue, X.; Xi, Z.; Foroosh, H. PolarNet: An Improved Grid Representation for Online LiDAR Point Clouds Semantic Segmentation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 9598–9607.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).