Abstract

The global aging trend is becoming increasingly severe, and the demand for daily-living assistance and medical rehabilitation among frail and disabled elderly people is growing. As a promising solution for assisting limb movement, guiding limb rehabilitation, and enhancing limb strength, exoskeleton robots are attracting attention from many sectors. This paper reviews the progress of research on upper-limb exoskeleton robots, surface electromyography (sEMG) technology, and intention recognition technology. It analyzes the literature using keyword clustering and discusses in detail the application of sEMG technology, deep learning methods, and machine learning methods to human movement intention recognition in exoskeleton robots. We argue that the focus of current research is to find algorithms with strong adaptability and high classification accuracy. Finally, traditional machine learning and deep learning algorithms are discussed, and future research directions are proposed, such as a deep learning algorithm based on multi-information fusion that fuses EEG signals, electromyographic signals, and basic reference signals. Such a model is expected to generalize better after training, thereby improving the accuracy of sEMG-based human movement intention recognition and providing important support for realizing human–machine fusion and embodied intelligence in exoskeleton robots.

1. Introduction

Population aging is a serious problem in today’s world. In developed countries, it has become an important factor restricting economic development, and it also poses huge challenges to elderly care, medical care, and rehabilitation. In the medical field in particular, the decline of motor function in the elderly brings great risks to personal health, may aggravate cardiovascular or nervous system diseases, and can even leave serious sequelae [1,2,3]. In addition, impaired physical function caused by illness or old age seriously affects patients’ ability to work independently and their quality of life, and can lead to serious psychological or mental illness.

Generally, life assistance measures for the frail population mainly include manual care, motion assistance devices, and exoskeleton assistance. Manual care is costly and labor-intensive, and its rehabilitation effectiveness is difficult to guarantee. Motion assistance devices, such as wheelchairs, crutches, and braces, are single-purpose and can only help patients complete basic movements; they are also inconvenient to carry and of limited help to disabled people in leading a normal life [4,5,6,7].

Because the structure of the human body and its joints is complex, traditional assistive products struggle to meet these needs, which makes the development of exoskeleton robots important and urgent. Exoskeleton robots are assistive machines worn on the outside of the human body to provide additional strength. As an emerging technology combined with advanced perception and control strategies, exoskeletons can provide precise and stable motion assistance. In heavy industry, manufacturing, and logistics, workers wearing waist exoskeletons can reduce lumbar spine injuries; in sports rehabilitation, injured people can use exoskeleton robots to assist limb rehabilitation; and in the military, firefighting, and mine safety, exoskeletons can greatly enhance the capabilities of individual soldiers and operators. Exoskeleton robots have therefore become a hot topic in robotics research [8].

Exoskeleton robots are generally divided into two categories: upper-limb exoskeletons and lower-limb exoskeletons. Upper-limb exoskeleton robots mainly target the upper limbs and their joints, including the shoulders, elbows, wrists, and fingers, while lower-limb exoskeleton robots mainly target the lower limbs and their joints, including the hips, knees, and ankles. The lower limbs mainly perform actions such as walking and squatting, whereas upper-limb movements are more complex and diverse, with posture changing according to the task at hand. Because the tasks and structural functions of the upper and lower limbs differ greatly, upper- and lower-limb exoskeleton robots also differ in structure, functional design, and control strategy [9,10,11]. This article focuses on upper-limb exoskeleton robots.

Upper-limb exoskeleton robots usually consist of sub-modules such as a perception system, a decision system, and a control system. The perception system measures human physiological or physical signals; the decision system processes and analyzes these signals to estimate the user’s continuous movement intention; and the control system then outputs appropriate control commands so that the actuators assist the user’s movement [10,12,13].

However, existing upper-limb exoskeleton robots face great challenges in both software and hardware. If the user’s movement intention cannot be fully predicted or identified, the control system cannot respond quickly and accurately, which lowers assistance efficiency, may even hinder the user, and greatly limits interactive control performance. Accurately capturing and interpreting movement intention is therefore crucial for upper-limb exoskeleton interactive control, and the control method must rely on an input signal that provides movement intention information accurately and with little delay. Movement intention signals fall into two categories: physical signals and human biological signals [14,15,16,17,18,19].

Physical signals include signals from angle sensors, force sensors, acceleration sensors, etc., and are usually measured with inertial measurement units (IMUs) [20,21,22,23,24,25], plantar pressure sensors [26,27,28,29], and similar devices. With the rapid development of sensor technology, these signals are accurate and reliable and reflect the user’s movement well, and the associated drive, control, and communication technologies are relatively mature and widely used in many control fields. However, these signals change only after a movement has begun, and after the additional delays of the perception, control, and drive systems, the command reaching the end controller may lag excessively.

Human biological signals include electromyographic (EMG), electroencephalographic (EEG) [30,31], and electrooculographic (EOG) [32,33] signals, among others. Bioelectric signals are potentials generated when neural signals carrying behavioral information are transmitted to the relevant tissues and organs, so they do not depend on the physical movement of the limbs. They have good real-time performance and strike a good balance between capturing the initial movement intention and remaining interpretable. By processing these signals, the current state and movement trend of the limb can be obtained. With the development of neuroscience, surface electromyographic (sEMG) signals have become the biological signals widely used for intention recognition, and more and more exoskeleton robots use EMG signals as inputs to collect and record the current human movement state.

Therefore, this paper reviews the research progress on exoskeleton robots, sEMG technology, and intention recognition technology, and then uses keyword clustering analysis to analyze the relevant literature comprehensively. This work aims to fill a gap in reviews of exoskeleton robots and sEMG-based human motion intention recognition, and to provide important support for realizing human–machine fusion and embodied intelligence in exoskeleton robots.

2. Upper Limb Exoskeleton

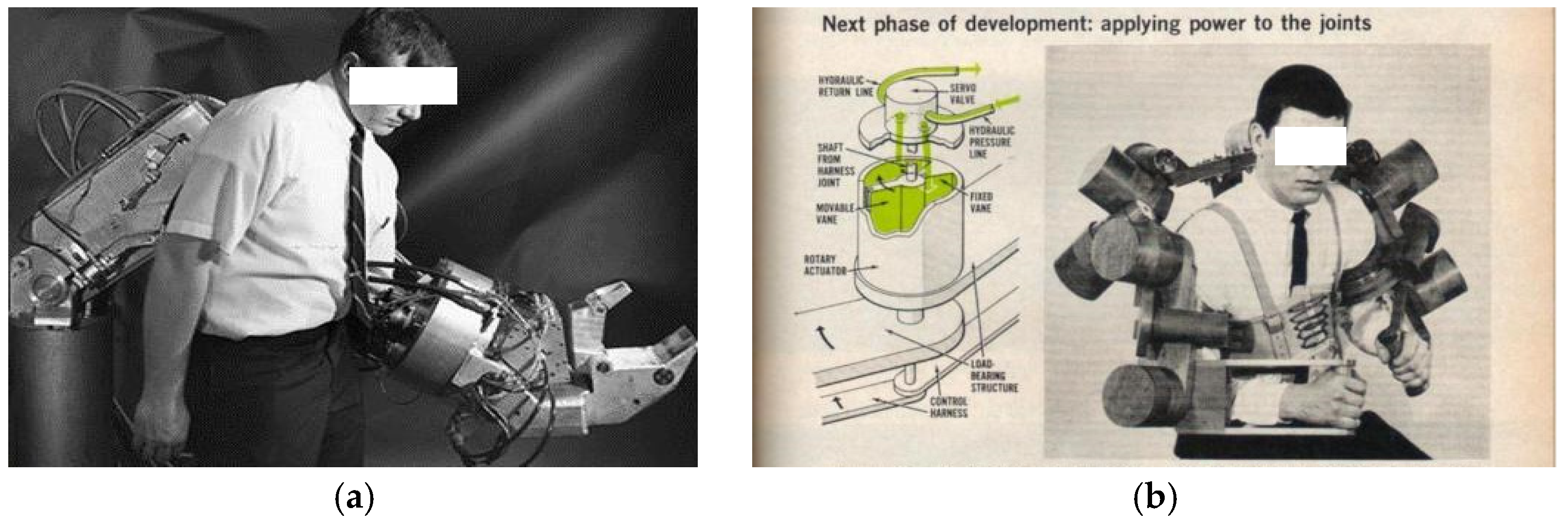

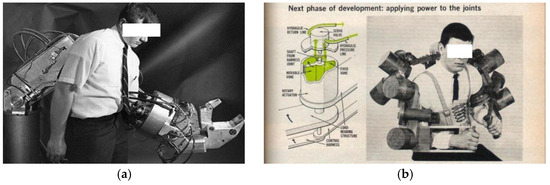

The development of upper limb exoskeleton robots can be divided into three stages: early exploration, technology accumulation, and rapid development. The early exploration stage (1960s–1980s) is represented by the Cornell Aeronautical Laboratory’s “Man Amplifier” and General Electric’s “Hardiman”. The “Hardiman” upper limb exoskeleton was developed by General Electric in the United States [34,35], as shown in Figure 1a. It was hydraulically and electrically powered and could amplify the wearer’s strength 25 times, and the project provided valuable experience for subsequent exoskeleton research and design. Around the same time, the Cornell Aeronautical Laboratory (CAL) in the United States developed the “Man Amplifier” upper limb exoskeleton, shown in Figure 1b, which was mainly electrically driven and aimed to enhance human upper limb strength and endurance through its mechanical structure [36].

Figure 1.

Schematic diagram of the ‘Hardiman’ exoskeleton (a) and ‘Man Amplifier’ exoskeleton (b).

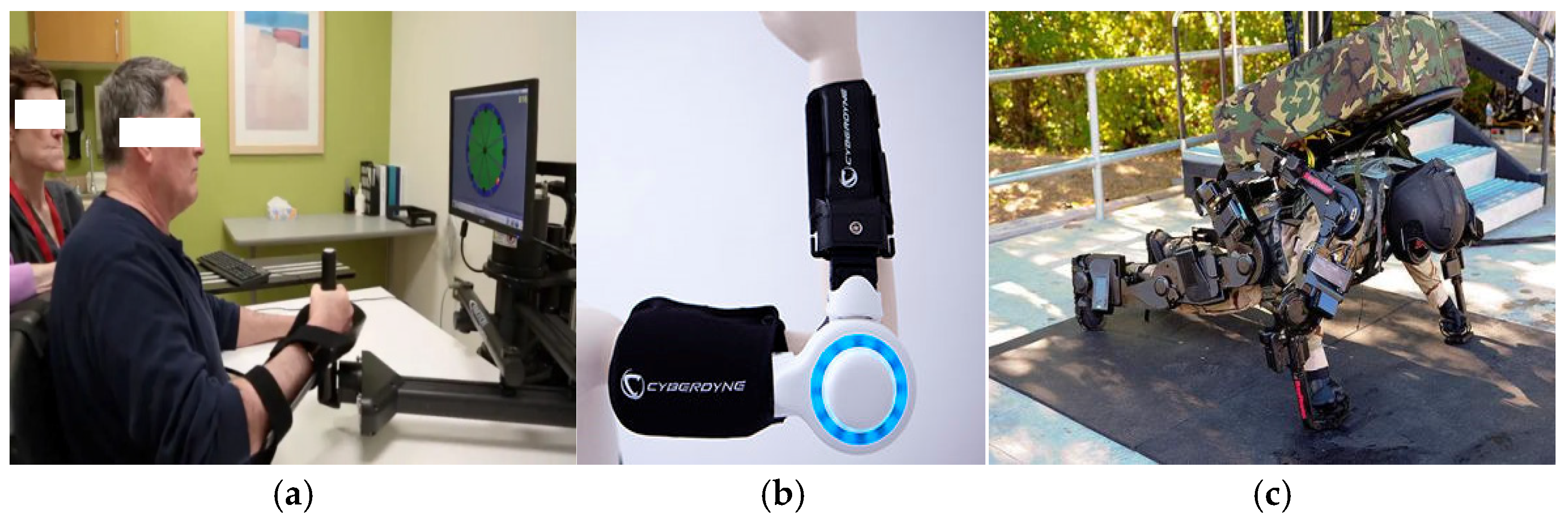

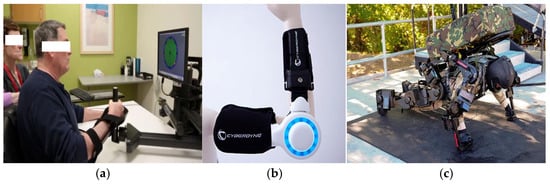

Technology accumulation stage (1990s–2000s): MIT-Manus is one of the earliest robots used for upper limb rehabilitation training. It interacts accurately with patients and provides support when they need it. It can also be combined with virtual reality technology to provide rich visual input, assist sensorimotor training, and make upper limb functional training more engaging and challenging [37], as shown in Figure 2a.

Figure 2.

Schematic diagram of the ‘MIT-Manus’ exoskeleton (a), ‘HAL’ exoskeleton (b), and ‘XOS’ exoskeleton (c).

The HAL (Hybrid Assistive Limb) exoskeleton developed by Cyberdyne in Japan mainly adopts a motor–reducer–exoskeleton mechanism design. It can automatically adjust the level of assistance according to the wearer’s movement intention, and it is mainly used for elderly care, assistance for disabled people, and dangerous operations such as firefighting and police work [38], as shown in Figure 2b.

The “XOS” exoskeleton developed by Sarcos in the United States is designed mainly for material handling. Through its innovative soft structure and ultra-light material design, it can significantly enhance soldiers’ strength and endurance, allowing them to carry heavy objects and perform high-intensity tasks [39], as shown in Figure 2c.

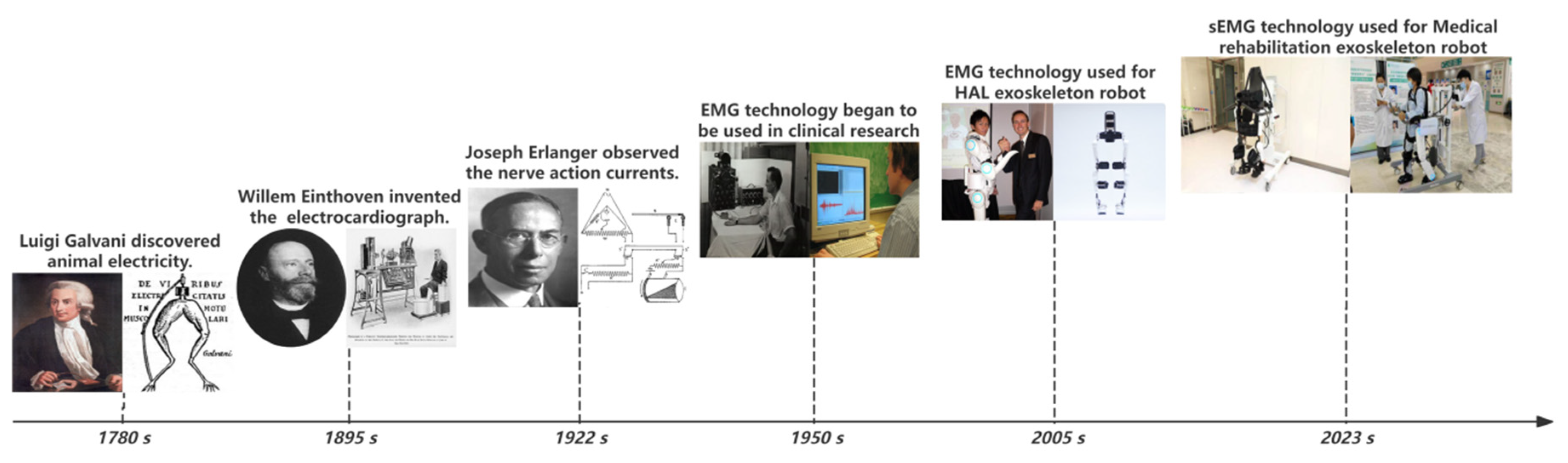

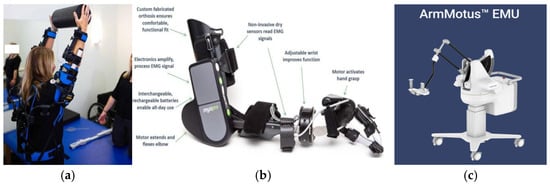

Rapid development stage (2010s–present): EksoUE is a wearable upper limb exoskeleton that uses motors, springs, and mechanical structures for flexible actuation. Its main function is to help patients with injured shoulders and arms perform guided, supported movements and training during rehabilitation [40], as shown in Figure 3a.

Figure 3.

Schematic diagram of the ‘EksoUE’ exoskeleton (a), ‘MyoPro’ exoskeleton (b), and ‘Fourier Arm’ exoskeleton (c).

MyoPro is a powered arm orthosis that uses sEMG sensors to read weak myoelectric signals on the skin surface, helping the wearer restore paralyzed or weakened upper limb function and operate independently in daily life [41], as shown in Figure 3b.

The “Fourier Arm”, developed by China’s Fourier Intelligence Company, is a lightweight end-traction upper limb rehabilitation exoskeleton robot that uses artificial intelligence and virtual reality technology to provide personalized rehabilitation training programs, as shown in Figure 3c.

In summary, upper limb exoskeleton robots have a wide range of applications. At the same time, with the rapid development of mechanical technology, sensor technology, material technology, and control technology, exoskeleton products are becoming increasingly mature and gradually moving towards practical applications. They are used to assist patients with muscular atrophy symptoms in their daily lives, for rehabilitation of stroke and hemiplegia patients, and for enhancing human functions.

3. Surface Electromyography

3.1. Evolution of Surface Electromyography Technology

Electromyography (EMG) records the electrical signals produced by skeletal muscle activity, which originate from the action potentials generated when motor units are activated. Surface electromyography (sEMG) records, through electrodes placed on the skin, the electrical activity of the muscles and nerves beneath the skin surface. The sEMG signal is the sum of a large number of asynchronous muscle fiber action potentials and therefore directly reflects muscle activity. Over a range of contraction levels, the force produced by a muscle and the amplitude of its electromyographic signal are approximately linearly related [42,43,44,45].

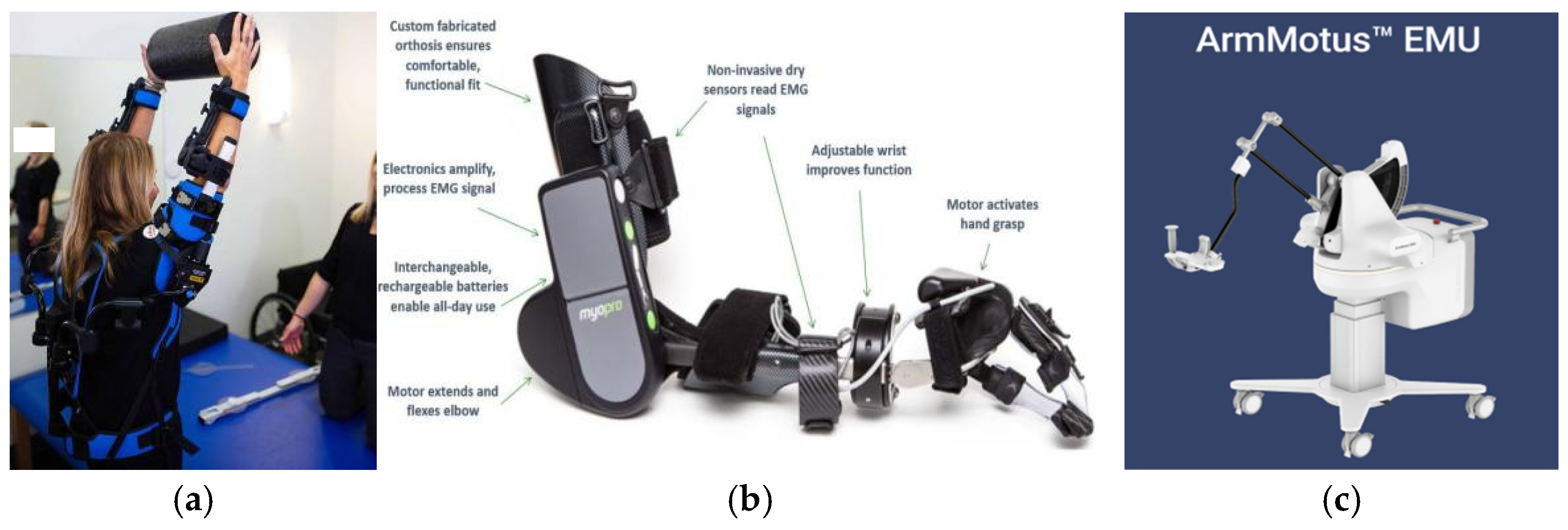

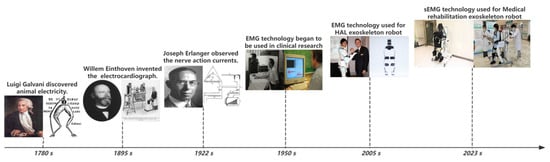

The development of surface electromyography (sEMG) technology can be traced back to the late 18th century, when Luigi Galvani observed electrical discharges in frog leg muscles and proposed the theory of “animal electricity”, showing that muscle contraction is closely linked to electrical activity. This opened a new chapter in the understanding of electromyography [46].

Willem Einthoven invented the string galvanometer in 1901 to record weak action potential signals. It was first used in clinical medicine to record and analyze electrocardiogram signals. This technology laid an experimental foundation for electrophysiology and pointed the way toward the later interpretation of electromyographic signals [47].

In 1922, Joseph Erlanger, working with Herbert Gasser, replaced the traditional galvanometer with a cathode ray oscilloscope and used it to record electromyographic signals for the first time. They accurately recorded the action potentials of nerve fibers, revealed that nerve fibers control muscle contraction through electrical signals, and provided a theoretical basis for the study of electromyographic signals [48].

Since the 1950s, EMG technology has been widely used in clinical diagnosis and in research on diseases such as muscle weakness and muscular atrophy, providing important reference information for doctors and patients. In 2005, the first HAL exoskeleton robot equipped with electromyography technology was launched in Japan, marking the beginning of the application of electromyography in human–computer interaction. With advances in integrated circuits, flexible circuit boards, and microelectronics, electromyography has gradually moved toward non-invasive surface electromyography that can monitor muscle activity in real time. Combining sEMG with exoskeleton rehabilitation robots enables real-time monitoring of, and feedback on, patients’ muscle activity, providing more accurate guidance for rehabilitation training. In 2023, medical rehabilitation exoskeleton robots equipped with sEMG were put into use in hospitals in Zhejiang, China, and have been well received by patients for their rehabilitation outcomes. The evolution of electromyography technology is shown in Figure 4 [49].

Figure 4.

Evolution diagram of surface electromyography signal technology and application.

3.2. Introduction to Surface EMG Signal Acquisition Technology

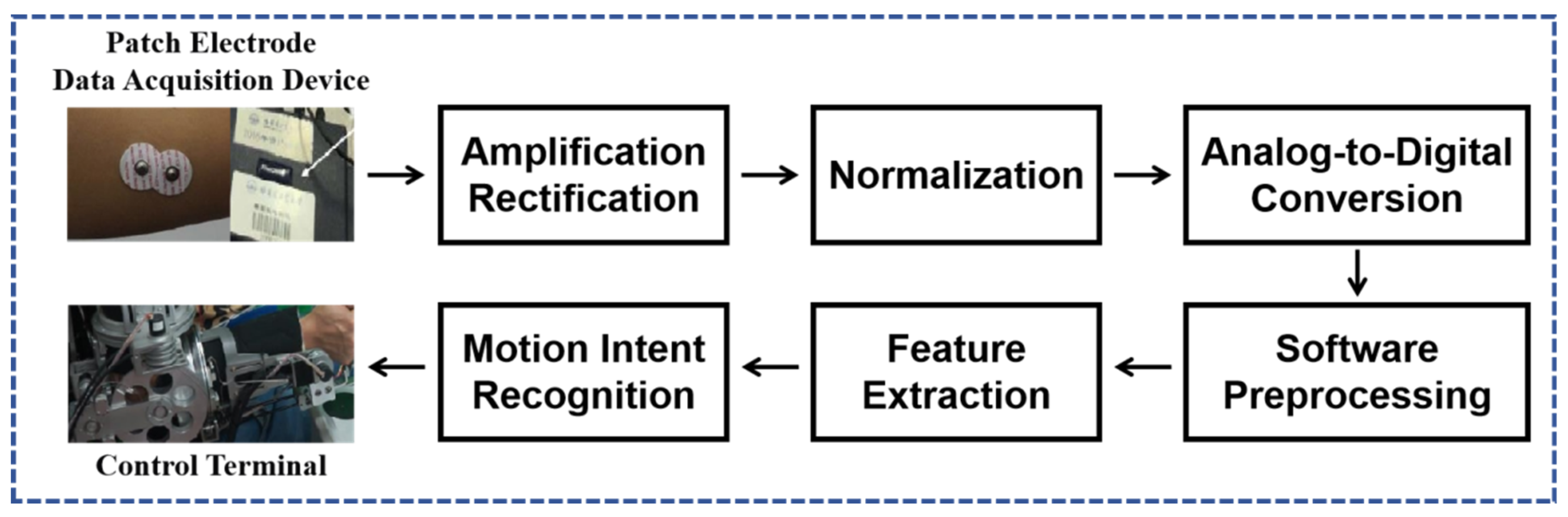

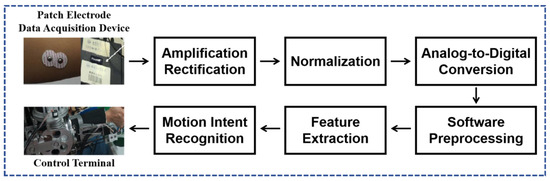

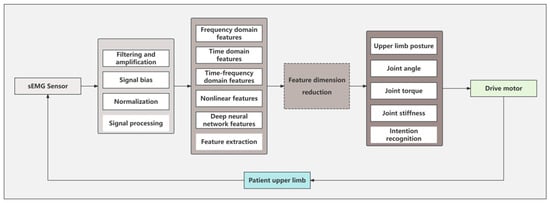

Myoelectric signals are weak and highly susceptible to external interference. The main noise sources include interference from other muscles active during upper limb movement, motion artifacts caused by relative movement between the electrode patch and the underlying skin and muscle, environmental noise, electrical noise, and ECG artifacts, so dedicated weak-signal processing methods are required [50]. The EMG signal acquisition and processing flow is shown in Figure 5.

Figure 5.

EMG signal acquisition and processing flowchart.

Generally speaking, surface electromyographic signals have the following characteristics:

- (1) Small amplitude, subtle variation, and susceptibility to interference: the amplitude is generally 100–2000 μV, with a maximum of no more than 5 mV and a root mean square value of 0–1.5 mV. Because such small signals must be acquired with high sensitivity, they are very susceptible to environmental noise, and data quality differs between hardware platforms, so the acquisition equipment must offer high performance and stability.

- (2) Low-frequency characteristics: the frequency content of sEMG signals lies mainly within 10–500 Hz, with the energy concentrated at 30–150 Hz. Even when muscle force changes, the frequency distribution of the electromyographic signal remains relatively stable.

- (3) Alternating amplitude: the sEMG signal takes both positive and negative values, and its absolute value is approximately proportional to muscle force.

- (4) Anticipatory nature: because movement commands travel from the central nervous system to the muscles before the limb moves, the electromyographic signal already reflects muscle activation before the arm moves. Changes in the electromyographic signal therefore precede changes in body movement [51].
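Because muscle activation is visible in the sEMG signal before the limb moves, detecting its onset is a natural first step for anticipating movement. A minimal onset-detection sketch follows, assuming a single-channel, already band-pass filtered signal whose first `baseline_s` seconds are recorded at rest. The threshold rule (mean plus `k` standard deviations of the rectified resting baseline, compared with a moving-average envelope) and all parameter values are illustrative assumptions, not the method of the cited studies.

```python
import numpy as np


def linear_envelope(emg, fs, window_s=0.05, offset=0.0):
    """Full-wave rectify the signal and smooth it with a causal moving average."""
    rectified = np.abs(np.asarray(emg, dtype=float) - offset)
    n = max(1, int(window_s * fs))                 # window length in samples
    kernel = np.ones(n) / n
    # 'full' convolution truncated to the input length = trailing (causal) average
    return np.convolve(rectified, kernel)[: len(rectified)]


def detect_onset(emg, fs, baseline_s=0.2, k=3.0, window_s=0.05):
    """Return the sample index where muscle activation starts, or None.

    Assumes the first `baseline_s` seconds of `emg` are recorded at rest.
    """
    emg = np.asarray(emg, dtype=float)
    nb = int(baseline_s * fs)
    offset = emg[:nb].mean()                       # DC offset estimated at rest
    rest = np.abs(emg[:nb] - offset)               # rectified resting baseline
    threshold = rest.mean() + k * rest.std()
    env = linear_envelope(emg, fs, window_s, offset=offset)
    above = np.flatnonzero(env[nb:] > threshold)   # search only after the baseline
    return None if above.size == 0 else int(above[0]) + nb
```

Because the moving average is causal, the detected onset lags the true onset by a few samples; a shorter window reduces this lag but makes false alarms more likely.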

These weak signals are amplified and filtered to suppress noise and interference, converted by analog-to-digital (A/D) conversion into discrete electromyographic signals that can be analyzed, and normalized. The collected signals reflect the activity status of the nerves and muscles [52]. Because noise is difficult to avoid during electromyographic acquisition, the raw signal must be preprocessed to remove noise, which lays the foundation for subsequent discrimination and control.

To remove noise outside the sEMG band, a Butterworth bandpass filter whose passband lies within the range where the signal energy is concentrated is usually applied. Software routines then remove erroneous or out-of-range values, after which feature extraction can be performed. Feature extraction maps (or transforms) the samples into a lower-dimensional space when the number of original features is large or the samples lie in a high-dimensional space [50].
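A zero-phase Butterworth bandpass stage followed by an out-of-range check can be sketched with SciPy as below. The 20–450 Hz passband, the 4th-order design, and the `limit` used to flag invalid samples are assumptions chosen for illustration (a common choice lying inside the 10–500 Hz band where sEMG energy is found); the sampling rate must exceed twice the upper cutoff.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass_semg(x, fs, low_hz=20.0, high_hz=450.0, order=4):
    """Zero-phase Butterworth bandpass filter applied along the last axis."""
    sos = butter(order, [low_hz, high_hz], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x, axis=-1)


def valid_sample_mask(x, limit):
    """True where |x| <= limit; larger samples (e.g. amplifier saturation) are flagged."""
    return np.abs(np.asarray(x, dtype=float)) <= limit
```

`sosfiltfilt` runs the filter forwards and backwards, so it needs the whole record and introduces no phase shift; an online exoskeleton controller would instead use a causal filter such as `scipy.signal.sosfilt`, at the cost of some phase delay.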

Time-domain features include the mean absolute value, root mean square, mean, integrated EMG, maximum and minimum values, variance, standard deviation, histogram, number of zero crossings, skewness, kurtosis, Willison amplitude, and time-series model parameters such as autoregressive (AR) model coefficients, which characterize the sEMG signal for motion estimation [53,54,55,56,57].
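Several of the time-domain features listed above can be computed from a single analysis window as in the sketch below. Definitions vary slightly across the literature; in particular, the zero-crossing and Willison-amplitude thresholds (`zc_thr`, `wamp_thr`) and the AR order are hypothetical defaults, and the AR coefficients are fitted here by ordinary least squares rather than by any specific method from the cited works. The window should be much longer than `ar_order` samples.

```python
import numpy as np


def time_domain_features(w, zc_thr=0.0, wamp_thr=0.01, ar_order=4):
    """Compute common time-domain sEMG features for one window `w`."""
    w = np.asarray(w, dtype=float)
    d = np.diff(w)
    feats = {
        "MAV": float(np.mean(np.abs(w))),            # mean absolute value
        "RMS": float(np.sqrt(np.mean(w ** 2))),      # root mean square
        "IEMG": float(np.sum(np.abs(w))),            # integrated EMG
        "VAR": float(np.var(w, ddof=1)),             # variance
        # zero crossings: sign change with a step of at least zc_thr
        "ZC": int(np.sum((w[:-1] * w[1:] < 0) & (np.abs(d) >= zc_thr))),
        # Willison amplitude: successive differences larger than wamp_thr
        "WAMP": int(np.sum(np.abs(d) > wamp_thr)),
    }
    # AR coefficients a_1..a_p by least squares: w[t] ~ sum_k a_k * w[t-k]
    p = ar_order
    X = np.column_stack([w[p - k: len(w) - k] for k in range(1, p + 1)])
    a, *_ = np.linalg.lstsq(X, w[p:], rcond=None)
    for k, coef in enumerate(a, start=1):
        feats[f"AR{k}"] = float(coef)
    return feats
```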

Common frequency-domain features include the Fourier-transform-based power spectrum, median frequency, mean frequency, cepstrum, and spectra estimated from parametric models. Common time–frequency features are derived from the short-time Fourier transform, wavelet transform, wavelet packet transform, higher-order spectral analysis, the Wigner–Ville distribution, etc. [58].
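Mean frequency (MNF) and median frequency (MDF), two of the frequency-domain features above, can be estimated from a periodogram as sketched here. This is a minimal version assuming a single window with no tapering or averaging; practical pipelines often use a smoother spectral estimate such as Welch’s method.

```python
import numpy as np


def spectral_features(w, fs):
    """Return (mean frequency, median frequency) in Hz for one sEMG window."""
    w = np.asarray(w, dtype=float)
    w = w - w.mean()                                  # remove DC so it does not bias MNF
    psd = np.abs(np.fft.rfft(w)) ** 2                 # unnormalised periodogram
    freqs = np.fft.rfftfreq(len(w), d=1.0 / fs)
    total = psd.sum()
    mnf = float(np.sum(freqs * psd) / total)          # power-weighted average frequency
    cumulative = np.cumsum(psd)
    mdf = float(freqs[np.searchsorted(cumulative, total / 2.0)])  # splits power in half
    return mnf, mdf
```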

After acquisition, preprocessing, and feature extraction, the resulting feature matrix is used for motion intention recognition. Different classification algorithms work best with different features, so feature combinations should be chosen flexibly according to the actual conditions.
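The feature matrix is typically built by sliding a window along the multichannel recording and computing features for each window and channel. The sketch below assumes a `(channels, samples)` array and uses only MAV and RMS for brevity; the window length and step (for example 200 and 50 samples, i.e. 200 ms and 50 ms at a hypothetical 1 kHz sampling rate) are illustrative choices, not values from the reviewed studies.

```python
import numpy as np


def sliding_windows(x, win, step):
    """Split a (channels, samples) array into (n_windows, channels, win) segments."""
    x = np.atleast_2d(np.asarray(x, dtype=float))
    starts = range(0, x.shape[1] - win + 1, step)
    return np.stack([x[:, s:s + win] for s in starts])


def feature_matrix(x, win, step):
    """One row per window: MAV of every channel followed by RMS of every channel."""
    segs = sliding_windows(x, win, step)
    mav = np.mean(np.abs(segs), axis=2)
    rms = np.sqrt(np.mean(segs ** 2, axis=2))
    return np.concatenate([mav, rms], axis=1)      # shape (n_windows, 2 * channels)
```

Each row of the result can then be paired with the label (or joint angle) observed in that window to form a training set.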

3.3. Keyword Cluster Analysis

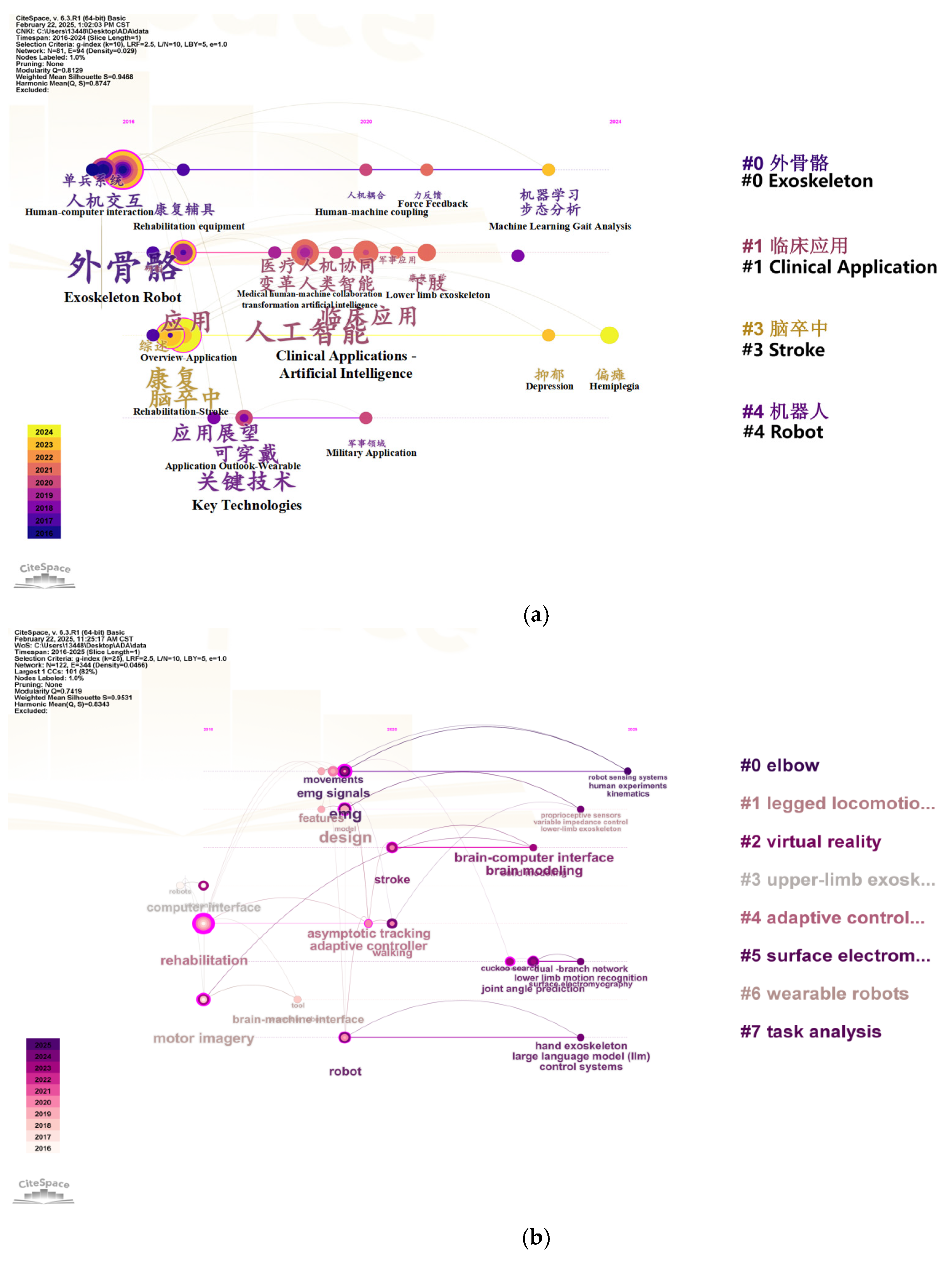

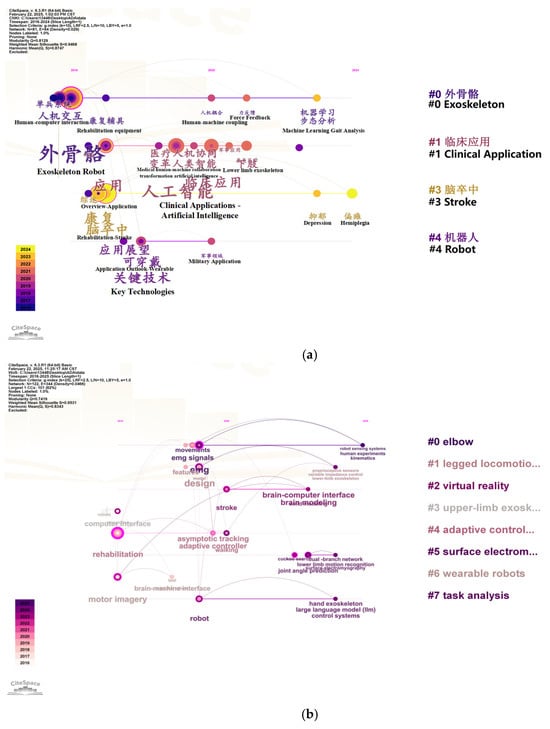

To grasp the application scenarios of sEMG technology, motion intention recognition technology, and exoskeleton robots more comprehensively and accurately, CiteSpace (version 6.3.R1, 64-bit, Basic) was used to analyze 128 Chinese and foreign research papers whose keywords focus on Motion Intention Recognition, Exoskeleton, and Scenario, as shown in Figure 6.

Figure 6.

Timelines plotted with CiteSpace using Motion Intention Recognition, Exoskeleton, and Scenario as keywords. (a) China National Knowledge Infrastructure (CNKI) literature mapping. (b) Web of Science literature mapping.

CiteSpace provides two indicators for evaluating a map based on its network structure and the clarity of its clusters: modularity Q (Q value) and mean silhouette (S value). The Q value generally lies in the interval [0, 1], and Q > 0.3 indicates a significant community structure. An S value above 0.5 indicates reasonable clustering, and an S value above 0.7 indicates that the clustering is efficient and convincing. As shown in Figure 6, the Q values of the two visualizations are 0.8129 and 0.7419, both greater than 0.3, and the S values are 0.9468 and 0.9531, both greater than 0.7. The clustering structure of the Chinese and English literature retrieved with the keywords intention recognition, exoskeleton, and application scenario is therefore significant and reasonable [59]. The literature was screened by selecting academic papers related to these keywords from the Web of Science and the official CNKI website, covering roughly the past ten years.
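For reference, the standard definitions behind the two indicators are given below: Newman’s modularity for a network with (weighted) adjacency matrix A_ij, node degrees k_i, total edge weight m, and cluster labels c_i (δ is 1 when two nodes share a cluster and 0 otherwise); and Rousseeuw’s silhouette, where a(i) is the mean distance from item i to the other members of its own cluster and b(i) is the mean distance to the members of the nearest other cluster. The exact similarity measure and any weighting that CiteSpace applies are not described in this paper, so these formulas indicate what the indices measure rather than exactly how the software computes them.

```latex
Q = \frac{1}{2m} \sum_{i,j} \left[ A_{ij} - \frac{k_i k_j}{2m} \right] \delta(c_i, c_j),
\qquad
s(i) = \frac{b(i) - a(i)}{\max\{a(i),\, b(i)\}},
\qquad
S = \frac{1}{N} \sum_{i=1}^{N} s(i)
```

Each s(i) lies in [-1, 1], so values of S close to 1 mean that items sit much closer to their own cluster than to any other.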

The clustering analysis of the Chinese and English literature retrieved with the keywords Motion Intention Recognition, Exoskeleton, and Scenario shows that exoskeleton robots using intention recognition technology have gradually become a research hotspot in recent years. In China, application scenarios are relatively narrow and concentrate on rehabilitation medicine, such as rehabilitation training for stroke patients; in intention recognition technology, however, Chinese research appears more advanced and was among the first to introduce artificial intelligence for recognizing human movement intentions. Outside China, application scenarios, intention recognition techniques, and application carriers are more diverse, with the main scenarios including virtual reality, rehabilitation medicine, adaptive training, wearable robots, human motion analysis, and upper-limb assistance, and intention recognition is often implemented innovatively through human–computer interfaces.

Table 1 summarizes recent innovations reported in other studies that apply intention recognition technology to exoskeleton robots, covering artificial intelligence, human–computer interface, and surface electromyography signal technologies, among others.

Table 1.

Innovative development of motion intention recognition techniques and applications in exoskeleton robots.

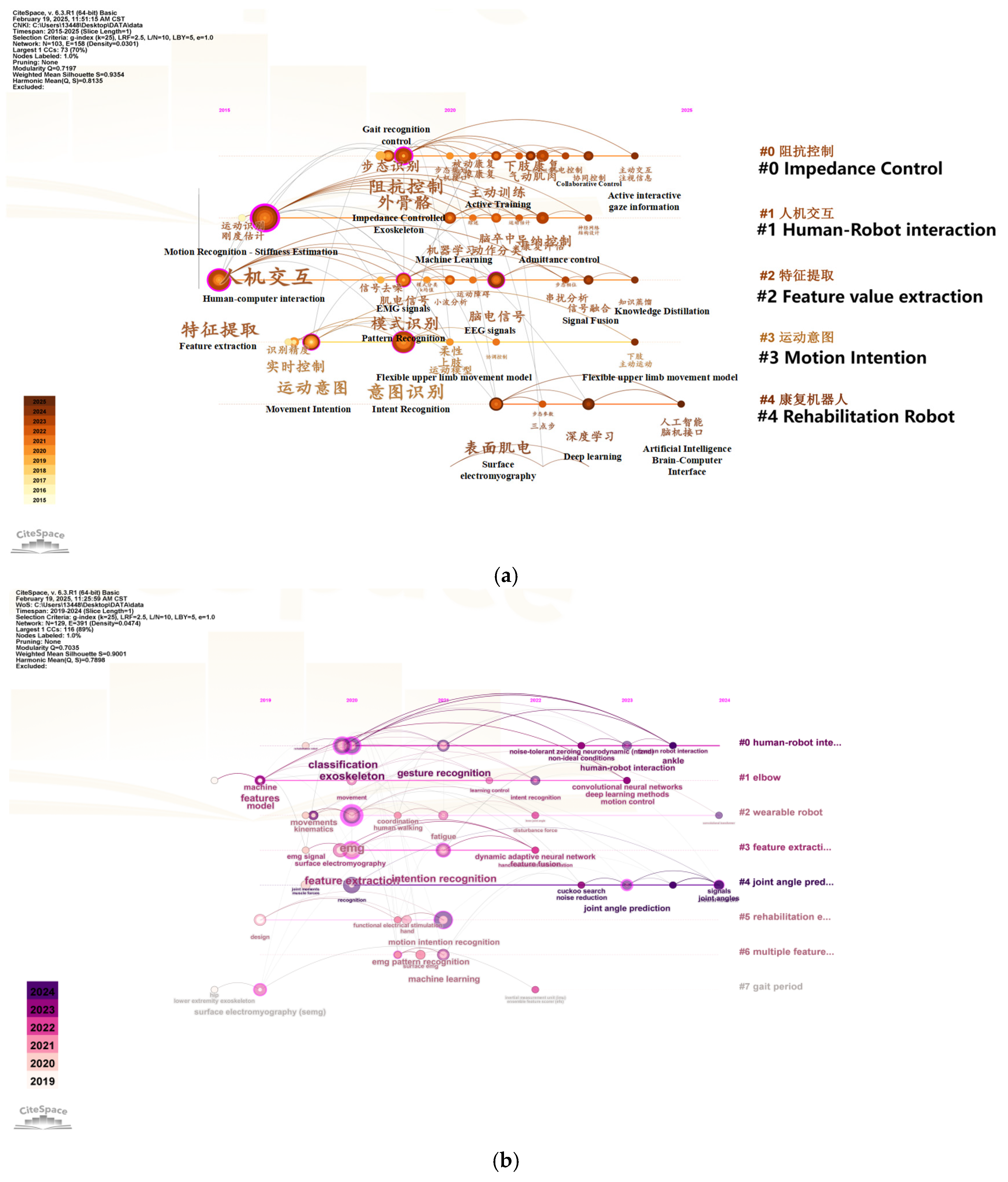

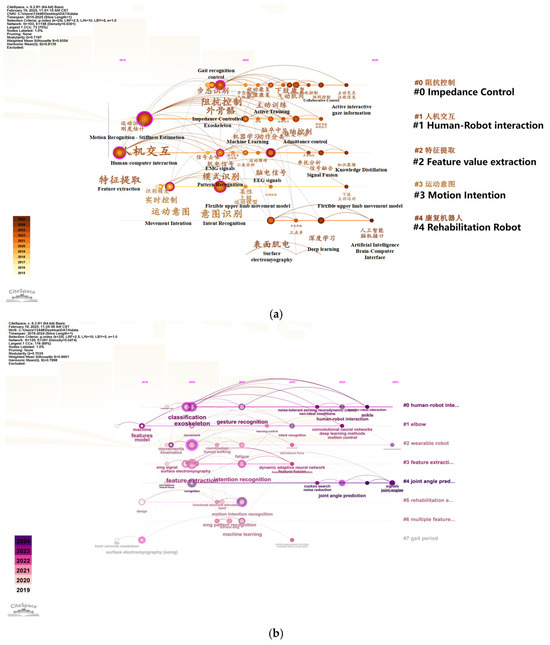

In order to further understand the importance, relevance and related algorithms of sEMG technology in the process of human motion intention recognition by exoskeleton robots, 321 Chinese and foreign scientific research papers were analyzed using CiteSpace software, with the keywords mainly focusing on sEMG, Motion Intention Recognition, and Exoskeleton, and the results are shown in Figure 7.

Figure 7.

CiteSpace analysis of 321 Chinese and foreign research papers whose keywords focus on sEMG, Motion Intention Recognition, and Exoskeleton. (a) China National Knowledge Infrastructure (CNKI) literature mapping. (b) Web of Science literature mapping.

The CiteSpace analysis yields a Q value of 0.7197 and an S value of 0.9354 for the CNKI visualization, and a Q value of 0.7035 and an S value of 0.9001 for the Web of Science visualization, so the clustering is significant and reasonable. The analysis indicates that sEMG technology is important to, and closely associated with, human motion intention recognition in exoskeleton robots, and that the algorithms applied are mainly machine learning and deep learning algorithms. Table 2 summarizes recent innovations reported in other articles that use sEMG technology for motion intention recognition.

Table 2.

Innovative development of exoskeleton robots based on surface electromyography signal technology.

4. Movement Intention Recognition Technology Based on Surface Electromyography Signal

Movement intention classification refers to recognizing the intention behind upper limb movement and usually covers three types of task: discrete action recognition, continuous action recognition, and continuous motion parameter recognition. Discrete and continuous action recognition mainly classify preset actions: based on the data and the prediction model, the most likely action, such as shoulder or elbow extension, is output as an independent prediction of the user’s upcoming action. Most of these classification methods are based on artificial intelligence. Features must be extracted before intention recognition; a classifier then uses the extracted features to recognize the movement intention, and the classification or regression results are converted into drive commands for the exoskeleton. The most commonly used classifiers include Bayesian classifiers [76], support vector machines [77], backpropagation (BP) neural networks [78], linear discriminant analysis [79], fuzzy logic [80], and random forests [81]. These methods learn decision rules from labeled training data, and the recognition accuracy of a classifier is the percentage of actions it outputs correctly [82].

Continuous motion parameter recognition keeps human and machine motion continuously matched. Compared with action recognition, it is more comprehensive and compensates for the incompleteness of a finite set of motion classes. The motion parameters, which include joint angle, torque, acceleration, and angular velocity, are usually estimated by regression. Compared with discrete intention classification, continuous motion intention recognition can adjust and update the trajectory online in real time, offers greater flexibility, can respond promptly to disturbances caused by unexpected events, and enables better human–machine interaction.
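Continuous parameter estimation is a regression problem rather than a classification problem. As a minimal illustration, the sketch below maps per-window sEMG feature vectors to a continuous target such as a joint angle with closed-form ridge (L2-regularized linear) regression. The linear model and the regularization weight `lam` are assumptions for illustration; the reviewed studies use a range of regressors, many of them nonlinear.

```python
import numpy as np


def fit_ridge(X, y, lam=1e-3):
    """Closed-form ridge regression with an unpenalised bias term.

    X: (n_windows, n_features) sEMG features; y: (n_windows,) target, e.g. joint angle.
    """
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])   # append a bias column
    reg = lam * np.eye(Xb.shape[1])
    reg[-1, -1] = 0.0                               # do not shrink the bias
    return np.linalg.solve(Xb.T @ Xb + reg, Xb.T @ y)


def predict_ridge(weights, X):
    """Predict the continuous target for each feature vector."""
    return np.hstack([X, np.ones((X.shape[0], 1))]) @ weights
```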

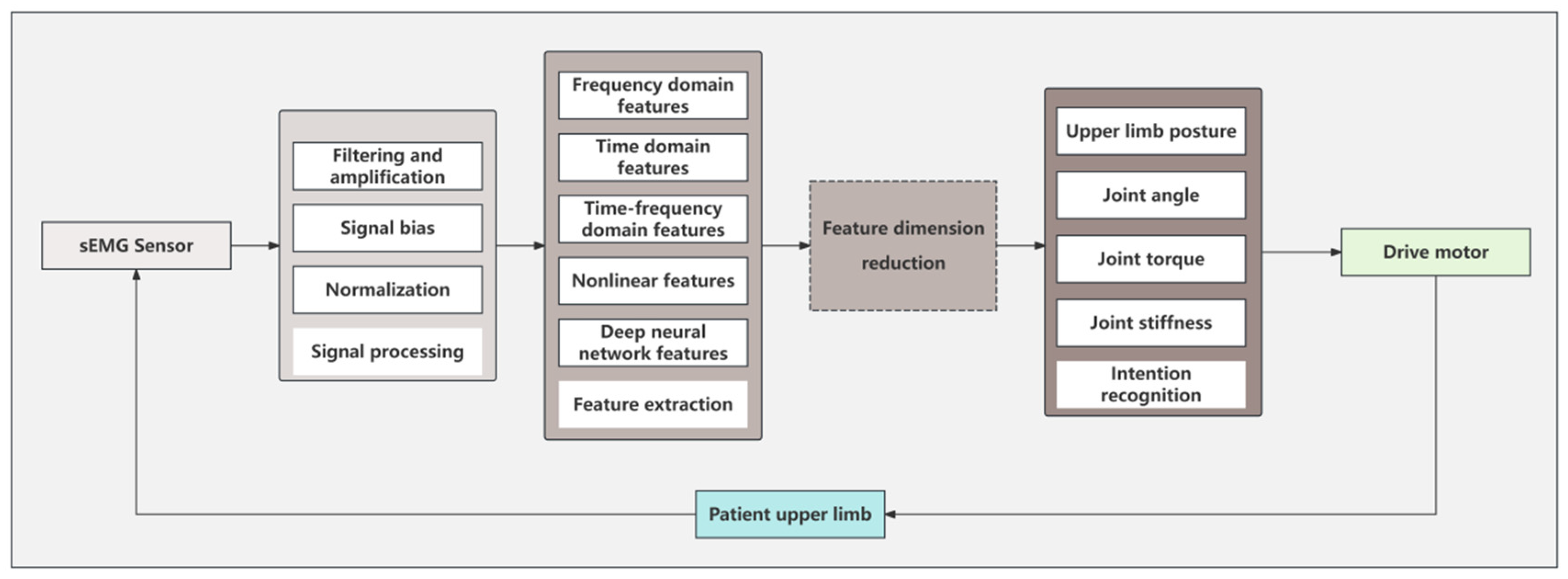

Because the surface electromyography signal has a low signal-to-noise ratio and a degree of randomness, the common approach is to preprocess the signal, extract features, and then recognize motion intention with artificial intelligence algorithms. The processing flow is shown in Figure 8. The algorithms used for the final intention discrimination include traditional machine learning and deep learning algorithms. Traditional machine learning needs less data and uses simple models with few parameters, whereas deep learning stacks many nonlinear layers to model complex relationships.

Figure 8.

Flowchart of motion intention recognition algorithm based on artificial intelligence algorithm.

By definition, deep learning is a subset and extension of machine learning. Different algorithms perform differently on different datasets, and there are many reasons for this. Classification accuracy is affected not only by the number of classes, the number of electromyographic channels, the acquisition duration, sensor quality and placement, and muscle fatigue, but also by the user’s health status, the posture of other body parts, the speed of limb movement, mutual interference and noise between electrodes, and even ambient temperature. These factors can seriously degrade classification, so finding algorithms with strong adaptability and high classification accuracy has become the focus of current research. To highlight the advantages and applicable conditions of different methods, traditional machine learning and deep learning algorithms are discussed separately.

5. Movement Intention Recognition Based on Traditional Machine Learning

Traditional machine learning models, such as linear discriminant analysis (LDA), k-nearest neighbors (kNN), support vector machines (SVM), random forests, and naive Bayes, are well established in sEMG-based action recognition. A model is learned from the training data and then used for prediction and decision-making. Trained models can achieve high classification accuracy and good generalization, but feature selection is largely ad hoc and relies mainly on manual experience, which limits adaptability and leaves the recognition results easily affected by changing conditions.

In recent years, to eliminate the adverse effects of environmental changes on recognition accuracy, many researchers have focused on improving the practicality of algorithms without reducing accuracy.

Duan et al. [83] designed a gesture recognition system that uses linear discriminant analysis (LDA) with time-domain features to classify nine gestures from three-channel sEMG signals. Using the root mean square ratio (RMSR) and autoregressive (AR) coefficients as key features effectively eliminated the influence of force variation, and the system achieved a high average accuracy of 91.7% on a dataset of eight subjects, demonstrating efficient and accurate gesture recognition. In addition, Qi et al. [84] combined LDA and an extreme learning machine (ELM) to reduce redundant information in sEMG signals and improve recognition efficiency and accuracy. They introduced a new feature, the characteristic graph slope (CMS), which strengthens the relationship between features across time and makes cross-time recognition more feasible. This recognition framework can improve the generalization of human–computer interaction in long-term use and simplify data collection before daily use of the device.
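To make the LDA classifier concrete, here is a minimal NumPy sketch of Gaussian LDA with a pooled covariance matrix Σ, which predicts the class k with the largest linear score xᵀΣ⁻¹μ_k − ½μ_kᵀΣ⁻¹μ_k + log π_k (μ_k: class mean, π_k: class prior). It is a generic textbook implementation, not the specific system of Duan et al. or Qi et al.; the small `shrinkage` term added to the covariance is an assumption that keeps the matrix invertible. Accuracy is computed as the fraction of correctly recognized windows.

```python
import numpy as np


class SimpleLDA:
    """Gaussian linear discriminant analysis with a pooled (shared) covariance."""

    def fit(self, X, y, shrinkage=1e-6):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        d = X.shape[1]
        self.means_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        cov = np.zeros((d, d))
        for c, mu in zip(self.classes_, self.means_):
            diff = X[y == c] - mu
            cov += diff.T @ diff                        # within-class scatter
        cov = cov / (len(X) - len(self.classes_)) + shrinkage * np.eye(d)
        priors = np.array([np.mean(y == c) for c in self.classes_])
        inv = np.linalg.inv(cov)
        self.coef_ = self.means_ @ inv                 # row k: mu_k^T Sigma^-1
        self.intercept_ = -0.5 * np.sum(self.coef_ * self.means_, axis=1) + np.log(priors)
        return self

    def predict(self, X):
        scores = np.asarray(X, dtype=float) @ self.coef_.T + self.intercept_
        return self.classes_[np.argmax(scores, axis=1)]


def accuracy(y_true, y_pred):
    """Recognition accuracy: fraction of outputs that match the true labels."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))
```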

Naik [85] proposed a method for classifying finger extension and flexion using a modified independent component analysis (ICA) weight matrix. The data were first processed by principal component analysis (PCA), and the ICA matrix ratio was customized for each individual to perform source separation. The extracted features were classified by LDA, achieving a classification accuracy of nearly 90%; the method accounts for individual differences and greatly improves the efficiency of the algorithm. Ali et al. [86] proposed a hierarchical sEMG-based control framework that analyzes sEMG signals in real time with machine learning methods to achieve active–passive exoskeleton control for human–machine collaboration. Applied to exoskeleton robots, it supports intention recognition, force regulation, and control mode adjustment.
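PCA is commonly applied before ICA to decorrelate the channels, reduce dimensionality, and scale each component to unit variance (whitening). The sketch below shows only this generic whitening step on a `(samples, channels)` matrix; the per-subject modification of the ICA weight matrix described by Naik is not reproduced, and `n_components` is a free parameter.

```python
import numpy as np


def pca_whiten(X, n_components):
    """Project centred data onto its leading principal axes with unit variance.

    X: (n_samples, n_channels). Returns (n_samples, n_components).
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)
    eigvals, eigvecs = np.linalg.eigh(np.cov(Xc, rowvar=False))  # ascending order
    order = np.argsort(eigvals)[::-1][:n_components]              # largest variance first
    W = eigvecs[:, order] / np.sqrt(eigvals[order])               # scale to unit variance
    return Xc @ W
```

After whitening, the components are uncorrelated with unit variance, so an ICA algorithm only has to search for a rotation rather than a general unmixing matrix.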

Because the decision boundaries for upper-limb action recognition are very complex, the non-parametric kNN classifier has shown good results. kNN is a supervised learning algorithm for classification and regression. It finds the k training samples closest to a given input and assigns the input to the majority class among these neighbors, so action recognition is achieved by measuring the similarity between new sEMG data and the samples in the training set.
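The kNN rule described above fits in a few lines of NumPy. This sketch uses Euclidean distance and a simple majority vote (on a tie, the label seen first among the distance-sorted neighbors wins); both are common defaults rather than choices taken from the cited studies, and features should normally be standardized first so that no single feature dominates the distance.

```python
import numpy as np
from collections import Counter


def knn_predict(X_train, y_train, X_query, k=3):
    """Label each query row by majority vote among its k nearest training samples."""
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train)
    preds = []
    for q in np.atleast_2d(np.asarray(X_query, dtype=float)):
        dist = np.linalg.norm(X_train - q, axis=1)   # distance to every training sample
        nearest = np.argsort(dist)[:k]
        votes = Counter(y_train[nearest].tolist())
        preds.append(votes.most_common(1)[0][0])
    return np.array(preds)
```

Each prediction computes a distance to every training sample, which is the source of the computational cost that limits kNN in real-time use.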

Benalcázar et al. [87] proposed a real-time gesture recognition model based on the Myo armband, which learns to recognize hand movements through training. sEMG signals are used in combination with the kNN algorithm for classification, and muscle activity detection is integrated to improve processing speed and recognition accuracy. It was tested on five predefined gestures of the Myo armband, and an accuracy of 89.5% was achieved.

The performance of kNN-based action recognition depends strongly on feature selection. Narayan [87] used a kNN classifier to classify sEMG signals with frequency-domain (FD) and time–frequency-domain (TFD) features, extracting the feature vectors by discrete wavelet transform. The experimental results show that combining the TFD feature vector with kNN reaches a classification accuracy of 95.5%, compared with 89% for the FD features. However, TFD features do not outperform FD features in every system; the choice must be adjusted to the system parameters and training data. Before kNN is used for action recognition, appropriate features should therefore be selected experimentally to improve the reliability of the algorithm [88].
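The wavelet-based time–frequency features mentioned above can be illustrated with a multi-level discrete wavelet transform. The sketch below assumes the orthonormal Haar wavelet, a four-level decomposition, and subband mean absolute value and energy as features; the cited work's wavelet family and exact feature definitions are not stated here, so these choices are illustrative only.

```python
import numpy as np

def haar_dwt(signal):
    """One level of the orthonormal Haar DWT: returns (approximation, detail)."""
    x = np.asarray(signal, dtype=float)
    if len(x) % 2:                     # pad odd-length input by repeating the last sample
        x = np.append(x, x[-1])
    even, odd = x[0::2], x[1::2]
    approx = (even + odd) / np.sqrt(2)
    detail = (even - odd) / np.sqrt(2)
    return approx, detail

def dwt_features(signal, levels=4):
    """Feature vector: [MAV, energy] of each detail subband, then of the final approximation."""
    feats = []
    approx = np.asarray(signal, dtype=float)
    for _ in range(levels):
        approx, detail = haar_dwt(approx)
        feats += [np.mean(np.abs(detail)), np.sum(detail ** 2)]
    feats += [np.mean(np.abs(approx)), np.sum(approx ** 2)]
    return np.array(feats)

rng = np.random.default_rng(1)
emg_window = rng.normal(size=256)      # stand-in for one 256-sample sEMG window
features = dwt_features(emg_window, levels=4)
```

Because the Haar transform is orthonormal, the subband energies add up to the energy of the original window, which makes them a natural way to describe how signal power is distributed over frequency bands.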

Beyond feature selection, environmental interference, gender, physical condition, and other factors also affect recognition accuracy. Bergil et al. [89] detected six basic hand movements with the kNN algorithm and focused on the impact of gender on classification performance. The system extracts signal features through a four-level symmetric wavelet transform, reduces their dimensionality with PCA, and then performs classification and clustering. In tests on six subjects (three females and three males), accuracies of 86.33% to 100% were achieved.

Xue et al. [90] considered the effect of the operating environment and proposed a tensor-decomposition-based algorithm for hand gesture recognition underwater. Seven subjects performed four hand gestures underwater; three-dimensional tensors were generated through the wavelet transform, and signal features were extracted by Tucker tensor decomposition. Using the kNN algorithm for recognition, the method achieved an accuracy of 96.43%.

The kNN model is simple and easy to use, and with appropriate features it achieves high recognition accuracy across different environments, genders, and other conditions. However, at prediction time it must compute the distance from each input to every training sample, which leads to high computational cost and poor real-time performance. In exoskeleton assistance, better real-time performance means that the controller can update its control output faster and respond more quickly. It is therefore necessary to explore models that can predict in real time. The support vector machine (SVM) provides a robust classification method that performs well on high-dimensional data and can achieve high classification accuracy even with limited training samples. It offers good real-time prediction and a new approach to recognizing complex actions.

The SVM handles nonlinear data by using kernel functions to map them into a high-dimensional space, where effective separation becomes possible. Tuning the hyperparameters, namely the regularization parameter and the kernel parameters, is therefore crucial. Chen et al. [91] proposed a digit gesture pattern recognition method based on a four-channel wireless sEMG system. They studied the offline recognition performance of three popular feature types (time-domain features (TD), autocorrelation and cross-correlation coefficients (ACCCs), and spectral power magnitudes (SPM)) and four popular classifiers (kNN, LDA, quadratic discriminant analysis (QDA), and SVM), and further proposed a multi-kernel learning SVM (MKL-SVM). The MKL-SVM combined with all three feature types achieved a maximum accuracy of 97.93%, suggesting that multi-kernel mapping can significantly improve the accuracy of the SVM algorithm.
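The multi-kernel idea can be illustrated by building one kernel per feature group and combining them as a weighted sum. In genuine MKL the weights are learned jointly with the SVM; in this sketch they are fixed inputs, and the RBF kernel, the `gamma` values, the weights, and the random feature groups (standing in for TD, ACCC, and SPM features) are all assumptions.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    """Gaussian kernel matrix K[i, j] = exp(-gamma * ||A_i - B_j||^2)."""
    sq = np.sum(A ** 2, axis=1)[:, None] + np.sum(B ** 2, axis=1)[None, :] - 2 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def combined_kernel(groups_a, groups_b, gammas, weights):
    """Weighted sum of one RBF kernel per feature group.

    In MKL the weights are learned together with the SVM; here they are fixed inputs.
    """
    weights = np.asarray(weights, dtype=float)
    if np.any(weights < 0) or not np.isclose(weights.sum(), 1.0):
        raise ValueError("kernel weights must be non-negative and sum to 1")
    K = np.zeros((groups_a[0].shape[0], groups_b[0].shape[0]))
    for Xa, Xb, g, w in zip(groups_a, groups_b, gammas, weights):
        K += w * rbf_kernel(Xa, Xb, g)
    return K

# Three hypothetical feature groups (e.g. TD, ACCC, SPM) for 20 sEMG windows.
rng = np.random.default_rng(2)
groups = [rng.normal(size=(20, d)) for d in (8, 12, 16)]
K = combined_kernel(groups, groups, gammas=[0.5, 0.1, 0.05], weights=[0.5, 0.3, 0.2])
```

A convex combination of valid kernels is itself a valid (positive semidefinite) kernel, so a matrix like `K` can be passed to an SVM that accepts precomputed kernels, such as scikit-learn's `SVC(kernel="precomputed")`.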

To better address the real-time requirements of exoskeleton control, Kong et al. [92] proposed a joint angle prediction model based on the least squares support vector machine (LSSVM) to obtain the patient's movement intention. Preprocessing and feature extraction are designed around the physiological characteristics of sEMG, and a time-difference compensation step is introduced into feature optimization to address the time delay in real-time processing. Experiments with sEMG data from six volunteers showed that the method can significantly improve the effect of rehabilitation training.
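Part of the appeal of LSSVM for real-time use is that training reduces to solving one linear system, [[0, 1ᵀ], [1, K + I/γ]] [b; α] = [0; y], instead of a quadratic program. The sketch below shows LSSVM regression with an RBF kernel; the hyperparameters and the sine-shaped stand-in for a joint angle trajectory are assumptions, and Kong et al.'s preprocessing and time-difference compensation are not reproduced.

```python
import numpy as np

def rbf(A, B, sigma):
    sq = np.sum(A ** 2, axis=1)[:, None] + np.sum(B ** 2, axis=1)[None, :] - 2 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2 * sigma ** 2))

class LSSVMRegressor:
    """Least squares SVM regression: training is a single linear solve."""

    def __init__(self, gamma=100.0, sigma=1.0):
        self.gamma, self.sigma = gamma, sigma      # regularization and RBF width

    def fit(self, X, y):
        n = len(X)
        A = np.zeros((n + 1, n + 1))
        A[0, 1:] = 1.0                             # constraint: sum of alphas = 0
        A[1:, 0] = 1.0
        A[1:, 1:] = rbf(X, X, self.sigma) + np.eye(n) / self.gamma
        sol = np.linalg.solve(A, np.concatenate([[0.0], y]))
        self.b, self.alpha, self.X = sol[0], sol[1:], X
        return self

    def predict(self, Xq):
        # f(x) = sum_i alpha_i K(x, x_i) + b
        return rbf(Xq, self.X, self.sigma) @ self.alpha + self.b

X = np.linspace(0, 2 * np.pi, 40)[:, None]   # stand-in for sEMG feature values
y = np.sin(X).ravel()                        # stand-in for a joint angle trajectory
model = LSSVMRegressor(gamma=100.0, sigma=1.0).fit(X, y)
max_train_error = np.max(np.abs(model.predict(X) - y))
```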

Pourmokhtari et al. developed a gesture recognition method based on single-channel EMG signals, using a kNN classifier to distinguish five finger movements. The study used time-domain features such as the maximum value (Max), minimum value (Min), and mean absolute value (MAV), together with the root mean square (RMS) and simple square integral (SSI). With the combination of MAV, Max, and Min, the classification accuracies obtained when each of the four channels was used on its own were 91.0%, 89.9%, 89.8%, and 96.0%, respectively, highlighting the simplicity, practicality, and cost-effectiveness of single-channel sEMG analysis [93].
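The time-domain features listed above have simple closed forms. The following sketch computes them for one window and assembles a feature combination such as MAV + Max + Min; the window length, step size, and function names are illustrative assumptions, and the resulting vectors could be passed to any classifier such as kNN.

```python
import numpy as np

def sliding_windows(signal, length, step):
    """Split a 1-D signal into (possibly overlapping) analysis windows."""
    return [signal[i:i + length] for i in range(0, len(signal) - length + 1, step)]

def td_features(window):
    """Time-domain features of one single-channel sEMG window."""
    x = np.asarray(window, dtype=float)
    return {
        "MAV": np.mean(np.abs(x)),        # mean absolute value
        "RMS": np.sqrt(np.mean(x ** 2)),  # root mean square
        "SSI": np.sum(x ** 2),            # simple square integral
        "Max": np.max(x),
        "Min": np.min(x),
    }

def feature_vector(window, names=("MAV", "Max", "Min")):
    """Select a feature combination, e.g. MAV + Max + Min, as a classifier input."""
    f = td_features(window)
    return np.array([f[n] for n in names])

feats = td_features([1.0, -2.0, 3.0, -4.0])
windows = sliding_windows(np.arange(10.0), length=4, step=2)
```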

Traditional machine learning algorithms have made great progress in motion intention recognition: with suitable feature combinations, they reach accuracies of about 90% or higher on their respective datasets. However, feature selection and parameter setting depend on expert experience and must be validated on real data, which limits their practical application. A comparison of different algorithms is shown in Table 3. Deep learning, in contrast, relies on powerful neural networks to learn features directly from raw data and minimizes human intervention.

Table 3.

Comparison of machine learning model algorithms.

6. Motion Intention Recognition Based on Deep Learning

Inspired by the working mechanism of the human brain, deep learning builds multi-layer neural networks to process complex patterns and data, especially nonlinear problems that are difficult for traditional machine learning methods. Deep learning can also extract and learn features automatically, optimize models, and process large-scale datasets, thereby improving prediction accuracy.

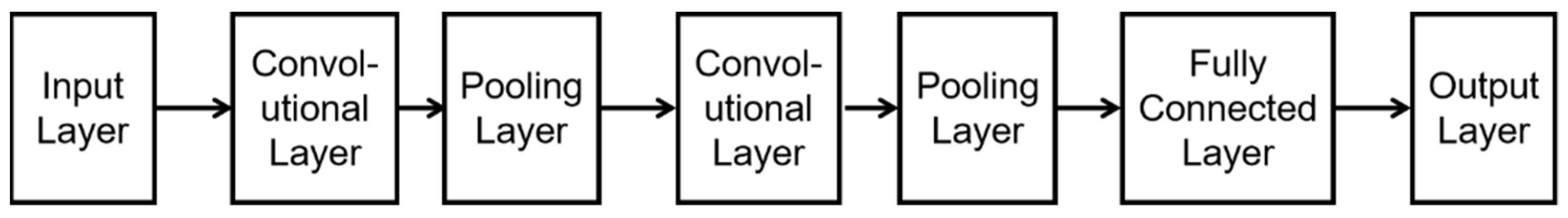

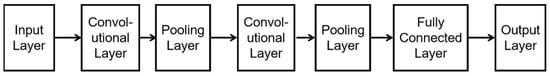

sEMG-based recognition systems face challenges related to data variability and repeatability, which deep learning can effectively address. With continuous improvements in deep learning algorithms and computing power, deep learning models have shown broad application prospects in motion recognition. A deep learning network consists of an input layer, multiple hidden layers, and an output layer, and it learns high-level features of the input sEMG data without manual feature selection. Common classifiers include the artificial neural network (ANN), the convolutional neural network (CNN) shown in Figure 9, the hidden Markov model (HMM), and long short-term memory (LSTM) networks.

Figure 9.

CNN architecture diagram.
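As a rough illustration of the input, hidden, and output layers of a CNN like the one in Figure 9, the following NumPy sketch runs a single forward pass over a multi-channel sEMG window: 1D convolution, ReLU, global max pooling, a dense layer, and softmax. The weights are random and untrained, and the sizes (8 channels, 200 samples, 16 filters, 6 gestures) are hypothetical; this is not the architecture of any cited study.

```python
import numpy as np

def conv1d(x, w, b):
    """Valid 1D convolution (cross-correlation, as in CNN libraries).

    x: (in_channels, T), w: (out_channels, in_channels, k), b: (out_channels,)
    returns: (out_channels, T - k + 1)
    """
    k = w.shape[2]
    T_out = x.shape[1] - k + 1
    windows = np.stack([x[:, t:t + k] for t in range(T_out)])  # (T_out, in_channels, k)
    return np.einsum("tck,ock->ot", windows, w) + b[:, None]

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def cnn_forward(x, params):
    """Input -> conv + ReLU -> global max pooling -> dense -> softmax."""
    h = np.maximum(conv1d(x, params["w_conv"], params["b_conv"]), 0.0)
    pooled = h.max(axis=1)
    return softmax(params["w_fc"] @ pooled + params["b_fc"])

rng = np.random.default_rng(3)
params = {
    "w_conv": rng.normal(scale=0.1, size=(16, 8, 5)),  # 16 filters over 8 channels, width 5
    "b_conv": np.zeros(16),
    "w_fc": rng.normal(scale=0.1, size=(6, 16)),       # 6 hypothetical gesture classes
    "b_fc": np.zeros(6),
}
emg_window = rng.normal(size=(8, 200))                 # 8 channels x 200 samples
probs = cnn_forward(emg_window, params)
```

In a trained network the convolution filters take over the role of hand-crafted features, which is why no explicit feature selection step appears.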

Among deep learning architectures, the CNN can automatically learn the characteristics of hand electromyographic signals from large amounts of data and classify them, making it one of the most widely used networks for hand movement recognition. Tam et al. proposed a real-time control strategy for prosthetic hands based on high-density electromyography and a CNN. The strategy uses deep learning and transfer learning to adapt to the unique electromyographic patterns of each user; transfer learning significantly reduces training time, simplifying system setup and calibration. In real-time tests on 12 healthy users, the system achieved an accuracy of up to 93.43% with a response time of less than 116 ms [94].

In exoskeleton applications, however, sEMG signals are easily attenuated by skin, fat, and other muscles and are corrupted by sweat, motion, ECG artifacts, and similar interference. As a result, sEMG signals have a low signal-to-noise ratio and a degree of randomness. Lin et al. [95] proposed a high-density EMG recognition framework that combines generative adversarial networks (GANs) and CNNs to deal with contact artifacts and loose-contact interference. The GANs generate synthetic signals that simulate disturbed recording conditions, and the CNNs are trained on these data to make the model more robust to real signal interference. Experimental results show that, under contact artifacts and loose-contact interference, the classification accuracy of this method improved from 64% to 99%.

Rehman et al. [96] fed raw EMG signals directly into a deep network and relied on the network's intrinsic feature extraction for classification. A Myo armband served as the wearable EMG sensor, and EMG data were collected from seven healthy subjects over 15 consecutive days. Such data-driven feature extraction may overcome the feature calibration and selection problems of myoelectric control. The algorithm showed lower classification error, better robustness, and a better ability to capture the time-varying nature of electromyographic signals.

Factors such as inter-individual differences and movement speed also have a significant impact on classification accuracy. Jiang et al. [97] used a CNN to process sEMG signals from shoulder and upper-limb muscles to identify upper-arm movement patterns, including rest, drinking, forward and backward movement, and abduction. Features of the EMG signal were extracted through the spatio-temporal convolution structure of the CNN, enabling high-accuracy recognition of shoulder movement patterns. At normal movement speed, the CNN model reached a movement pattern recognition accuracy of 97.57%.

Mohapatra et al. proposed a time–frequency domain deep neural network (TFDDNN) for automatic gesture recognition from multi-channel electromyography (MEMG) sensor data. The method first segments the MEMG signal into frames and averages all channels within each frame, then applies the continuous wavelet transform to obtain a time–frequency representation (TFR), and finally uses a deep representation learning network for gesture recognition, with overall accuracies of 92.73% and 80.33%, respectively [98].

To address key technical challenges such as non-intuitive control schemes, lack of sensory feedback, poor robustness, and reliance on a single sensor modality, Jiang et al. [99] proposed a biorobotic research approach to non-invasive myoelectric neural interfaces for upper-limb prosthesis control, combining deep learning with sEMG signal decomposition to improve the intuitiveness, naturalness, and user acceptance of prosthetic control.

Because the myoelectric signals of amputees differ from those of able-bodied people, accurately identifying multi-force gestures from amputees' myoelectric signals is of great significance. Triwiyanto et al. [100] proposed a deep neural network classification method that uses amputees' electromyographic signals to improve the recognition accuracy of multi-force gestures. It recognizes six gestures and remains robust across force levels, with an average accuracy of 92.0%. The method can support the development of prosthetic hands for amputees using modern high-speed embedded systems, and the algorithm can be applied to practical control.
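The preprocessing described for the TFDDNN of Mohapatra et al. [98] (framing, channel averaging, continuous wavelet transform) can be sketched as follows. The complex Morlet wavelet with `w0 = 6`, the frame length, and the scales are assumptions, since the wavelet and parameters used in that work are not given here, and the deep network that consumes the time–frequency images is omitted.

```python
import numpy as np

def frame_and_average(memg, frame_len):
    """(channels, samples) MEMG -> (n_frames, frame_len): non-overlapping frames,
    averaged across channels within each frame."""
    n_frames = memg.shape[1] // frame_len
    frames = memg[:, :n_frames * frame_len].reshape(memg.shape[0], n_frames, frame_len)
    return frames.mean(axis=0)

def morlet_cwt(x, scales, w0=6.0):
    """Magnitude scalogram |W(s, t)| of a 1-D signal using a complex Morlet wavelet.

    Scales are integer widths in samples; each wavelet must fit inside the signal.
    """
    x = np.asarray(x, dtype=float)
    out = np.empty((len(scales), len(x)))
    for i, s in enumerate(scales):
        if 8 * s + 1 > len(x):
            raise ValueError("scale too large for this frame length")
        t = np.arange(-4 * s, 4 * s + 1) / s            # wavelet support of +/- 4 scale units
        wavelet = np.pi ** -0.25 * np.exp(1j * w0 * t) * np.exp(-t ** 2 / 2)
        # Correlating with the wavelet = convolving with its reversed conjugate.
        coef = np.convolve(x, np.conj(wavelet[::-1]), mode="same") / np.sqrt(s)
        out[i] = np.abs(coef)
    return out

# Usage: 4 channels x 1024 samples of synthetic data, 256-sample frames.
rng = np.random.default_rng(4)
memg = rng.normal(size=(4, 1024))
frames = frame_and_average(memg, frame_len=256)
tfr = morlet_cwt(frames[0], scales=[2, 4, 8, 16])   # one time-frequency image per frame
```

Each row of `tfr` responds most strongly to frequencies near `w0 / (2π s)` cycles per sample, so stacking the rows yields an image-like input suitable for a convolutional network.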

Although many studies have investigated real-time control of upper-limb exoskeletons based on user intentions and specific motion trajectories, predicting body joint positions from myoelectric features remains less explored. Sedighi et al. [101] proposed a method that generates exoskeleton joint trajectories with a combined convolutional neural network and long short-term memory (CNN-LSTM) model to interpret the user's intention. By exploiting the natural delay between electromyographic activity and the resulting movement, the method predicts the user's intended position 450 ms in advance.
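Exploiting the delay between EMG activity and movement amounts to training the model on targets shifted into the future. The sketch below shows one way such training pairs might be built; the 100 Hz sampling rate, window length, and function name are hypothetical, the CNN-LSTM itself is not shown, and Sedighi et al.'s exact setup may differ.

```python
import numpy as np

def lookahead_pairs(emg_features, joint_angle, window, horizon):
    """Pair each window of past sEMG features with the joint angle `horizon`
    samples after the window's last sample.

    emg_features: (T, n_features) features on a uniform time grid.
    joint_angle:  (T,) measured joint position on the same grid.
    """
    X, y = [], []
    for t in range(window, len(joint_angle) - horizon + 1):
        X.append(emg_features[t - window:t])     # samples t-window .. t-1
        y.append(joint_angle[t - 1 + horizon])   # future target
    return np.array(X), np.array(y)

fs = 100                          # hypothetical sampling rate of the feature stream (Hz)
horizon = round(0.450 * fs)       # a 450 ms look-ahead expressed in samples
emg = np.arange(10.0).reshape(10, 1)
angle = np.arange(10.0) * 10.0
X, y = lookahead_pairs(emg, angle, window=3, horizon=2)
```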

Lv et al. proposed an algorithm combining a self-organizing map (SOM) and a radial basis function (RBF) network to identify hand movement intention from sEMG signals. The SOM performs feature selection and preliminary clustering, PCA then reduces dimensionality, and finally the RBF network classifies the patterns. The dataset consisted of sEMG signals for eight gestures from six volunteers, collected with Myo armband sensors. The key innovation is the combination of SOM and RBF, which improves recognition accuracy and efficiency; the method achieved a maximum recognition rate of 100%, an average recognition accuracy of 96.875%, and a response time of 0.437 s [102].
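The PCA and RBF stages of such a pipeline can be sketched in NumPy. In this simplified version the SOM is replaced by a plain stand-in: every fifth training point is taken as an RBF center. The Gaussian width, the least-squares output layer, and the synthetic data are also assumptions rather than details of Lv et al.'s method.

```python
import numpy as np

def pca_fit(X, n_components):
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]

def pca_transform(X, pca):
    mean, components = pca
    return (X - mean) @ components.T

class RBFNetwork:
    """Gaussian hidden layer around fixed centers + linear output layer (least squares)."""

    def __init__(self, centers, width):
        self.centers, self.width = centers, width

    def _hidden(self, X):
        d2 = ((X[:, None, :] - self.centers[None, :, :]) ** 2).sum(axis=-1)
        H = np.exp(-d2 / (2 * self.width ** 2))
        return np.hstack([H, np.ones((len(X), 1))])   # bias unit

    def fit(self, X, y, n_classes):
        T = np.eye(n_classes)[y]                      # one-hot targets
        self.W, *_ = np.linalg.lstsq(self._hidden(X), T, rcond=None)
        return self

    def predict(self, X):
        return np.argmax(self._hidden(X) @ self.W, axis=1)

rng = np.random.default_rng(5)
class_means = rng.normal(scale=3.0, size=(3, 8))      # 3 synthetic gestures, 8 features
X = np.vstack([m + rng.normal(scale=0.3, size=(30, 8)) for m in class_means])
y = np.repeat([0, 1, 2], 30)
pca = pca_fit(X, n_components=2)
Z = pca_transform(X, pca)
net = RBFNetwork(centers=Z[::5], width=2.0).fit(Z, y, n_classes=3)
accuracy = np.mean(net.predict(Z) == y)
```

Because only the output weights are fitted, and in closed form, training an RBF network is fast, which is consistent with the short response times such hybrid pipelines aim for.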

Rajapriya et al. proposed a deep learning algorithm based on a higher-order statistics–frequency domain (HOS-FD) feature set to improve the classification accuracy of hand movements in myoelectric control systems. Bispectral analysis was used to capture nonlinear characteristics of EMG signals, combined with a deep neural network (DNN) for pattern recognition, and EMG datasets recorded at different limb positions were used for training and testing. The main innovation is the HOS-FD feature set, which is translation- and scale-invariant and suited to classification under dynamic changes in limb position. When trained on data from all five limb positions, the DNN model achieved a classification accuracy of 99.16% ± 0.14 [103].
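The bispectrum underlying such higher-order features is B(f1, f2) = E[X(f1) X(f2) X*(f1 + f2)], which is large only when the phases at f1, f2, and f1 + f2 are coupled, a signature of nonlinear interaction. The sketch below is a direct FFT-based estimate averaged over segments; the segment length, Hann window, and test signal are assumptions, and the HOS-FD feature set itself is not reproduced.

```python
import numpy as np

def bispectrum(x, seg_len):
    """Direct (FFT-based) bispectrum estimate averaged over non-overlapping segments.

    Returns B[f1, f2] = mean_k X_k(f1) X_k(f2) conj(X_k(f1 + f2)) for f1, f2 < seg_len // 2.
    """
    n_seg = len(x) // seg_len
    segs = np.asarray(x[:n_seg * seg_len], dtype=float).reshape(n_seg, seg_len)
    segs = segs - segs.mean(axis=1, keepdims=True)        # remove each segment's mean
    X = np.fft.fft(segs * np.hanning(seg_len), axis=1)
    f = np.arange(seg_len // 2)
    f1, f2 = np.meshgrid(f, f, indexing="ij")
    B = X[:, f1] * X[:, f2] * np.conj(X[:, (f1 + f2) % seg_len])
    return B.mean(axis=0)

seg_len = 128
n = np.arange(seg_len * 8)
a, b = 10, 20                     # frequency bins of two phase-coupled components
coupled = (np.cos(2 * np.pi * a * n / seg_len) + np.cos(2 * np.pi * b * n / seg_len)
           + np.cos(2 * np.pi * (a + b) * n / seg_len))
B = bispectrum(coupled, seg_len)
```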

Simão et al. proposed an online EMG-based gesture classification algorithm implemented with recurrent neural networks (RNNs), in particular long short-term memory (LSTM) networks. EMG signals recorded from the forearm muscles were used, and the dynamic LSTM model improved online gesture classification. The UC2018 DualMyo and NinaPro DB5 datasets were used for evaluation, and a new performance indicator, gesture detection accuracy, was proposed to assess online classification. LSTM and gated recurrent unit (GRU) networks significantly outperformed the plain RNN and the feedforward neural network (FFNN) in training and inference time; in accuracy they were similar to the static FFNN model, with GRU and LSTM performing slightly better [104].
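What makes LSTMs suitable for online classification is that they carry a hidden and cell state from one time step to the next, so a label can be produced as each new sEMG frame arrives. The NumPy sketch below shows a single LSTM cell updated step by step; the weights are random and untrained, and the layer sizes and the linear classification head are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class LSTMCell:
    """Single LSTM cell updated one time step at a time (suitable for streaming sEMG)."""

    def __init__(self, n_in, n_hidden, rng):
        scale = 1.0 / np.sqrt(n_hidden)
        # Stacked weights for the input, forget, candidate and output gates.
        self.W = rng.uniform(-scale, scale, size=(4 * n_hidden, n_in + n_hidden))
        self.b = np.zeros(4 * n_hidden)
        self.n_hidden = n_hidden
        self.reset()

    def reset(self):
        self.h = np.zeros(self.n_hidden)
        self.c = np.zeros(self.n_hidden)

    def step(self, x):
        z = self.W @ np.concatenate([x, self.h]) + self.b
        i, f, g, o = np.split(z, 4)
        i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
        g = np.tanh(g)
        self.c = f * self.c + i * g          # cell state carries longer-term context
        self.h = o * np.tanh(self.c)         # hidden state fed to the classifier head
        return self.h

rng = np.random.default_rng(6)
cell = LSTMCell(n_in=8, n_hidden=16, rng=rng)
W_out = rng.normal(scale=0.1, size=(5, 16))  # hypothetical head for 5 gestures
stream = rng.normal(size=(50, 8))            # 50 incoming 8-channel feature frames
for frame in stream:
    h = cell.step(frame)
    gesture = int(np.argmax(W_out @ h))      # a label can be emitted at every step
```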

The use of reinforcement learning (RL) to classify EMG, however, is still a new and open research topic. RL-based methods offer good classification performance and can learn online from user experience. Caraguay et al. [105] proposed a gesture recognition system based on deep Q-networks (DQN) and double deep Q-networks (DDQN). A feedforward artificial neural network (ANN) served as the policy representation, and the effect of adding an LSTM layer was evaluated. The DQN model without LSTM performed best, reaching a classification accuracy of 90.37% and a recognition accuracy of 82.52%, demonstrating the effectiveness of RL for EMG-based classification and recognition. Table 4 gives a comprehensive comparison of deep learning model algorithms.

Table 4.

Comparison of deep learning model algorithms.
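The difference between the DQN and DDQN variants evaluated by Caraguay et al. [105] lies in how the bootstrapped learning target is formed. The sketch computes both targets for a small batch. Framing gesture recognition as RL (for example, state = an sEMG window, action = a gesture label, reward for a correct label) is an assumption for illustration, and the Q-networks are replaced by arrays of Q-values.

```python
import numpy as np

def dqn_targets(rewards, next_q_target, dones, gamma=0.99):
    """Standard DQN target: r + gamma * max_a Q_target(s', a)."""
    return rewards + gamma * (1.0 - dones) * next_q_target.max(axis=1)

def double_dqn_targets(rewards, next_q_online, next_q_target, dones, gamma=0.99):
    """Double DQN target: the online network selects the action and the
    target network evaluates it, which reduces overestimation bias."""
    best_actions = next_q_online.argmax(axis=1)
    evaluated = next_q_target[np.arange(len(rewards)), best_actions]
    return rewards + gamma * (1.0 - dones) * evaluated

rewards = np.array([1.0, 0.0])
dones = np.array([0.0, 1.0])                   # second transition ends the episode
next_q_online = np.array([[1.0, 3.0], [2.0, 0.0]])
next_q_target = np.array([[5.0, 2.0], [4.0, 4.0]])
y_dqn = dqn_targets(rewards, next_q_target, dones, gamma=0.9)
y_ddqn = double_dqn_targets(rewards, next_q_online, next_q_target, dones, gamma=0.9)
```

For the first transition, plain DQN takes the maximum of the target network's values (5.0), whereas Double DQN evaluates the action preferred by the online network (index 1, value 2.0), giving a more conservative target.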

In summary, deep-learning-based motion intention recognition for upper-limb exoskeletons has made great progress in recognition accuracy and generalization ability, but it also places greater demands on data and computing resources. Because of model complexity, the cost of updating and maintaining the algorithm architecture has also increased, and adapting different models with minimal overhead has become one of the current research focuses. The comparison of some model algorithms is shown in Table 4.

Although motion intention recognition based on electromyographic signals has made great progress, several practical problems still limit the performance of these algorithms in real applications. First, electromyographic signals are very weak and easily disturbed by the external environment, and they depend strongly on electrode placement and sensor model. Second, signal strength varies greatly between individuals, and the amplitude and frequency distribution of electromyographic signals differ between healthy people and patients. Because most models are trained on data from healthy people, their classification accuracy tends to be poor when they are applied to patients. Electromyographic signals are also affected by many other factors, such as age, fatigue, and exercise habits. Finally, although deep learning achieves high recognition accuracy, its models are computationally complex, require large amounts of data, and transfer poorly across users; when applied to sEMG-based human motion signal processing, real-time performance and individual differences remain obstacles.

Several improvements have therefore been proposed, such as transfer learning. Chen et al. proposed a transfer learning (TL) strategy [106] to improve the generalization of sEMG-based gesture recognition and reduce the training burden. A CNN is first trained to capture gesture features, which are then reused in two target networks: a pure CNN and a CNN + LSTM. On three different datasets, the TL strategy improved gesture recognition accuracy by 10–38%, significantly shortened training time, and maintained recognition accuracy above 90%, which is of great value for the development of myoelectric control systems. Côté-Allard et al. used transfer learning to enhance a CNN so that it could exploit inter-user data from the first dataset and reduce the data-generation burden on any single individual. On a dataset of 17 participants, the improved CNN achieved an average accuracy of 97.81% on seven hand/wrist gestures [107]. Table 5 gives a comprehensive comparison of machine learning, deep learning, and hybrid approaches.

Table 5.

Comparison of machine learning, deep learning, and hybrid approaches.
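The core of the transfer learning strategies above is to reuse a feature extractor trained on other users and to fit only a small part of the model to the new user's calibration data. The sketch illustrates this with a frozen random-projection ReLU layer standing in for a pretrained CNN and a ridge-regression output layer fitted on one-hot targets. The "pretrained" weights, the ridge head (used here instead of gradient-based fine-tuning), and the data are all hypothetical.

```python
import numpy as np

def frozen_features(X, W_pretrained):
    """Feature extractor whose weights stay fixed (stand-in for a pretrained CNN)."""
    return np.maximum(X @ W_pretrained, 0.0)

def fit_new_head(F, y, n_classes, lam=1e-2):
    """Fit only a linear output layer (ridge regression on one-hot targets)."""
    F1 = np.hstack([F, np.ones((len(F), 1))])          # append a bias column
    T = np.eye(n_classes)[y]
    A = F1.T @ F1 + lam * np.eye(F1.shape[1])
    return np.linalg.solve(A, F1.T @ T)

def predict(X, W_pretrained, head):
    F = frozen_features(X, W_pretrained)
    F1 = np.hstack([F, np.ones((len(F), 1))])
    return np.argmax(F1 @ head, axis=1)

rng = np.random.default_rng(7)
W_pretrained = rng.normal(size=(8, 32))   # hypothetical weights learned on source users
W_before = W_pretrained.copy()
# Small calibration set from a new (target) user: 3 gestures x 10 windows.
means = rng.normal(scale=2.0, size=(3, 8))
X_new = np.vstack([m + rng.normal(scale=0.3, size=(10, 8)) for m in means])
y_new = np.repeat([0, 1, 2], 10)
head = fit_new_head(frozen_features(X_new, W_pretrained), y_new, n_classes=3)
accuracy = np.mean(predict(X_new, W_pretrained, head) == y_new)
```

The accuracy here is measured on the calibration data itself; in practice it would be checked on held-out recordings from the new user.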

Multi-source information fusion can improve the adaptability of a model or algorithm, reduce the impact of noise, and improve classification accuracy [108,109,110]. EEG and sEMG signals can be used as model inputs, with deep multi-task learning then predicting the exoskeleton user's movement intentions and cognitive states. The trained model has stronger generalization capabilities, enabling “human–machine symbiosis” control and embodied intelligence more in line with human neural mechanisms.

Alongside algorithm optimization, improvements can also come from the exoskeleton's actuation method. Zhang et al. [111] proposed that parallel elastic actuators (PEAs) can reduce the joint impedance of an exoskeleton: a PEA stores energy in elastic elements and reduces the actuator's peak torque, mimicking the energy cycling of human tendons and thereby improving the flexibility of human–computer interaction. Luo et al. [112] proposed an exoskeleton design architecture based on multi-source fusion of biomechanical models, robot models, and data-driven signals; the physical models provide prior knowledge that can, to a certain extent, improve both the development efficiency and the machine learning efficiency of exoskeleton robots. Achilli et al. [113] proposed a multi-source information fusion design concept for a hand rehabilitation exoskeleton, which achieves precise human–machine adaptation by integrating the user's clinical injury data, hand characteristics, and the exoskeleton's technical characteristics (rigid, soft, or hybrid architecture). Geonea et al. [114] proposed an exoskeleton design approach based on multi-source data fusion that integrates mechanical design data, motion simulation data, and experimental data to support closed-loop optimization across virtual simulation and experimental verification, thereby reducing the iteration cost of physical prototypes. Tian et al. [115] proposed a shoulder exoskeleton design scheme based on multi-source data fusion that jointly analyzes the mechanical design data, controller design data, and experimental test data of the exoskeleton robot, providing a reference for assessing its applicability.
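The EEG and sEMG fusion with deep multi-task learning outlined above can be sketched as early (feature-level) fusion followed by a shared layer and two task heads, one for movement intention and one for cognitive state. This is a minimal untrained forward pass under stated assumptions: the feature sizes, the "basic reference signal" features, the single shared `tanh` layer, and the loss weight are all hypothetical and do not describe a specific published model.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def fused_multitask_forward(emg_feat, eeg_feat, ref_feat, params):
    """Early (feature-level) fusion followed by a shared layer and two task heads."""
    fused = np.concatenate([emg_feat, eeg_feat, ref_feat], axis=-1)
    shared = np.tanh(fused @ params["W_shared"] + params["b_shared"])
    intention = softmax(shared @ params["W_int"] + params["b_int"])   # movement intention
    cognitive = softmax(shared @ params["W_cog"] + params["b_cog"])   # cognitive state
    return intention, cognitive

def multitask_loss(intention, cognitive, y_int, y_cog, weight=0.5):
    """Weighted sum of the two cross-entropy losses; the weight is a tunable assumption."""
    n = len(y_int)
    ce_int = -np.mean(np.log(intention[np.arange(n), y_int] + 1e-12))
    ce_cog = -np.mean(np.log(cognitive[np.arange(n), y_cog] + 1e-12))
    return ce_int + weight * ce_cog

rng = np.random.default_rng(8)
dims = {"emg": 16, "eeg": 32, "ref": 4}        # hypothetical feature sizes per source
n_shared, n_int, n_cog = 24, 5, 3
params = {
    "W_shared": rng.normal(scale=0.1, size=(sum(dims.values()), n_shared)),
    "b_shared": np.zeros(n_shared),
    "W_int": rng.normal(scale=0.1, size=(n_shared, n_int)),
    "b_int": np.zeros(n_int),
    "W_cog": rng.normal(scale=0.1, size=(n_shared, n_cog)),
    "b_cog": np.zeros(n_cog),
}
batch = 4
p_int, p_cog = fused_multitask_forward(
    rng.normal(size=(batch, dims["emg"])),
    rng.normal(size=(batch, dims["eeg"])),
    rng.normal(size=(batch, dims["ref"])),
    params,
)
loss = multitask_loss(p_int, p_cog, y_int=np.array([0, 1, 2, 3]), y_cog=np.array([0, 1, 2, 0]))
```

Sharing the hidden layer between the two tasks is what allows information about the user's cognitive state to shape the representation used for intention recognition, and vice versa.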

7. Summary and Prospects

This paper introduces the research progress of upper-limb exoskeleton robots, sEMG technology, and intention recognition technology in detail, analyzes the literature using keyword clustering analysis, and comprehensively discusses the application of sEMG technology, deep learning methods, and machine learning methods in the process of human movement intention recognition by exoskeleton robots.

Because sEMG signals are inherently weak and susceptible to environmental interference, and their characteristics differ significantly between individuals, a comparative analysis of sEMG-based movement intention recognition techniques leads to the conclusion that recognizing human movement intentions from sEMG signals remains very challenging for exoskeleton robots. Current research therefore focuses on finding algorithms with strong adaptability and high classification accuracy. This paper further examines machine learning and deep learning methods. Traditional machine learning methods rely on manual feature extraction in electromyographic signal processing; they suit small datasets but have limited generalization ability and adaptability. Deep learning methods learn complex features automatically from raw data, perform especially well on large datasets, and generalize and adapt better. In practical applications, however, deep learning models must still cope with weak, interference-prone sEMG signals, significant inter-individual differences, and real-time requirements. Research on sEMG-based human motion intention recognition for exoskeleton robots therefore needs to further optimize algorithm models and fuse multi-source information. This paper proposes a deep learning algorithm based on multi-information fusion that fuses EEG signals, electromyographic signals, and basic reference signals. After training, a model with stronger generalization ability and better recognition of complex motion patterns is obtained, thereby improving the accuracy of sEMG-based human motion intention recognition and providing important support for the realization of human–machine fusion-embodied intelligence in exoskeleton robots.

In the context of the rapid development of artificial intelligence, sEMG-based human movement intention recognition is expected to advance in three areas: more accurate sEMG signal analysis, adaptive modeling of different individuals, and embodied intelligence for human–machine collaboration. Combining artificial intelligence with multi-source information fusion will bring new ideas to exoskeleton technology, improving its applicability across environments and individuals and enabling more natural control strategies with control accuracy better matched to the physiological characteristics of the human body.

Author Contributions

Conceptualization, X.Z., Y.Q. and X.X.; methodology, C.C. and X.X.; software, X.X. and C.C.; validation, Z.W., Y.Q. and C.C.; formal analysis, X.X.; investigation, G.Z. and X.X.; resources, G.Z., Y.Q., X.Z. and C.C.; data curation, X.X.; writing—original draft preparation, X.Z., Y.Q., C.C., X.X. and Z.W.; writing—review and editing, X.Z. and X.X.; visualization, X.X.; supervision, Y.Q. and G.Z.; project administration, G.Z.; funding acquisition, X.Z., Y.Q., X.X. and C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant number U23B2094), the Technology Innovation and Entrepreneurship Fund Special Project of CCTEG (grant number 2022-2-MS004), and the Science and Technology Innovation Project of Shendong Coal Company (project no. E210100556).

Acknowledgments

The authors would like to thank the China Coal Research Institute and China Energy Group.

Conflicts of Interest

Xu Zhang, Yonggang Qu, Gang Zhang, and Zhiqiang Wang were employed by Shendong Coal Group Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Daliri, M.; Ghorbani, M.; Akbarzadeh, A.; Negahban, H.; Ebrahimzadeh, M.H.; Rahmanipour, E.; Moradi, A. Powered single hip joint exoskeletons for gait rehabilitation: A systematic review and Meta-analysis. BMC Musculoskelet. Disord. 2024, 25, 80.

- Rangan, R.P.; Babu, S.R. Exo skeleton pertinence and control techniques: A state-of-the-art review. Proc. Inst. Mech. Eng. Part C-J. Mech. Eng. Sci. 2024, 238, 6751–6782.

- Yao, Y.; Shao, D.; Tarabini, M.; Moezi, S.A.; Li, K.; Saccomandi, P. Advancements in Sensor Technologies and Control Strategies for Lower-Limb Rehabilitation Exoskeletons: A Comprehensive Review. Micromachines 2024, 15, 489.

- Pitzalis, R.F.; Park, D.; Caldwell, D.G.; Berselli, G.; Ortiz, J. State of the Art in Wearable Wrist Exoskeletons Part II: A Review of Commercial and Research Devices. Machines 2024, 12, 21.

- Li, G.; Liang, X.; Lu, H.; Su, T.; Hou, Z.-G. Development and Validation of a Self-Aligning Knee Exoskeleton with Hip Rotation Capability. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 472–481.

- Ceccarelli, M.; Riabtsev, M.; Fort, A.; Russo, M.; Laribi, M.A.; Urizar, M. Design and Experimental Characterization of L-CADEL v2, an Assistive Device for Elbow Motion. Sensors 2021, 21, 5149.

- Haoming, Y.; Yu, J.; Li, Y. Standing Device for Assisting Elderly People Indoors. J. Phys. Conf. Ser. 2021, 1865, 032003.

- Maciejasz, P.; Eschweiler, J.; Gerlach-Hahn, K.; Jansen-Troy, A.; Leonhardt, S. A survey on robotic devices for upper limb rehabilitation. J. Neuroeng. Rehabil. 2014, 11, 1–29.

- Plaza, A.; Hernandez, M.; Puyuelo, G.; Garces, E.; Garcia, E. Lower-Limb Medical and Rehabilitation Exoskeletons: A Review of the Current Designs. IEEE Rev. Biomed. Eng. 2023, 16, 278–291.

- Narayan, J.; Auepanwiriyakul, C.; Jhunjhunwala, S.; Abbas, M.; Dwivedy, S.K. Hierarchical Classification of Subject-Cooperative Control Strategies for Lower Limb Exoskeletons in Gait Rehabilitation: A Systematic Review. Machines 2023, 11, 764.

- Nazari, F.; Mohajer, N.; Nahavandi, D.; Khosravi, A.; Nahavandi, S. Applied Exoskeleton Technology: A Comprehensive Review of Physical and Cognitive Human–Robot Interaction. IEEE Trans. Cogn. Dev. Syst. 2023, 15, 1102–1122.

- Rodrigues, J.C.; Menezes, P.; Restivo, M.T. An augmented reality interface to control a collaborative robot in rehab: A preliminary usability evaluation. Front. Digit. Health 2023, 5, 1078511.

- Deng, M.; Li, Z.; Kang, Y.; Chen, C.P.; Chu, X. A Learning-Based Hierarchical Control Scheme for an Exoskeleton Robot in Human-Robot Cooperative Manipulation. IEEE Trans. Cybern. 2020, 50, 112–125.

- Li, J.; Cao, Q.; Dong, M.; Zhang, C. Compatibility evaluation of a 4-DOF ergonomic exoskeleton for upper limb rehabilitation. Mech. Mach. Theory 2021, 156, 104146.

- Liu, B.; Li, N.; Yu, P.; Yang, T.; Chen, W.; Yang, Y.; Wang, W.; Yao, C. Research on the Control Methods of Upper Limb Rehabilitation Exoskeleton Robot. J. Univ. Electron. Sci. Technol. China 2020, 49, 643–651.

- Gopura, R.; Bandara, D.; Kiguchi, K.; Mann, G. Developments in hardware systems of active upper-limb exoskeleton robots: A review. Robot. Auton. Syst. 2016, 75, 203–220.

- Gull, M.A.; Thoegersen, M.; Bengtson, S.H.; Mohammadi, M.; Struijk, L.N.S.A.; Moeslund, T.B.; Bak, T.; Bai, S. A 4-DOF Upper Limb Exoskeleton for Physical Assistance: Design, Modeling, Control and Performance Evaluation. Appl. Sci. 2021, 11, 5865.

- Kapsalyamov, A.; Hussain, S.; Jamwal, P.K. State-of-the-Art Assistive Powered Upper Limb Exoskeletons for Elderly. IEEE Access 2020, 8, 178991–179001.

- Bauer, G.; Pan, Y.-J. Review of Control Methods for Upper Limb Telerehabilitation with Robotic Exoskeletons. IEEE Access 2020, 8, 203382–203397.

- Zhang, X.; Zhang, H.; Hu, J.; Deng, J.; Wang, Y. Motion Forecasting Network (MoFCNet): IMU-Based Human Motion Forecasting for Hip Assistive Exoskeleton. IEEE Robot. Autom. Lett. 2023, 8, 5783–5790.

- Kim, J.U.; Kim, G.E.; Ji, Y.B.; Lee, A.R.; Lee, H.J.; Tae, K.S. Development of a Knee Exoskeleton for Rehabilitation Based EMG and IMU Sensor Feedback. J. Biomed. Eng. Res. 2019, 40, 223–229.

- Arceo, J.C.; Yu, L.; Bai, S. Robust Sensor Fusion and Biomimetic Control of a Lower-Limb Exoskeleton with Multimodal Sensors. IEEE Trans. Autom. Sci. Eng. 2024, 22, 5425–5435.

- Goffredo, M.; Romano, P.; Infarinato, F.; Cioeta, M.; Franceschini, M.; Galafate, D.; Iacopini, R.; Pournajaf, S.; Ottaviani, M. Kinematic Analysis of Exoskeleton-Assisted Community Ambulation: An Observational Study in Outdoor Real-Life Scenarios. Sensors 2022, 22, 4533.

- Zhu, L.; Wang, Z.; Ning, Z.; Zhang, Y.; Liu, Y.; Cao, W.; Wu, X.; Chen, C. A Novel Motion Intention Recognition Approach for Soft Exoskeleton via IMU. Electronics 2020, 9, 2176.

- Jaramillo, I.E.; Jeong, J.G.; Lopez, P.R.; Lee, C.-H.; Kang, D.-Y.; Ha, T.-J.; Oh, J.-H.; Jung, H.; Lee, J.H.; Lee, W.H.; et al. Real-Time Human Activity Recognition with IMU and Encoder Sensors in Wearable Exoskeleton Robot via Deep Learning Networks. Sensors 2022, 22, 9690.

- Hao, D.; Hang, Y.; Zhu, S.; Yang, Y.; Xu, Q. Study on Foot Force Measuring System for Lower Limb Power-assisted Exoskeleton. Mech. Sci. Technol. Aerosp. Eng. 2017, 36, 919–924.

- Ramadan, A.; Roy, A.; Smela, E. Recognizing Hemiparetic Ankle Deficits Using Wearable Pressure Sensors. IEEE J. Transl. Eng. Health Med. 2019, 7, 2100403.

- Kim, J.-H.; Han, J.W.; Kim, D.Y.; Baek, Y.S. Design of a Walking Assistance Lower Limb Exoskeleton for Paraplegic Patients and Hardware Validation Using CoP. Int. J. Adv. Robot. Syst. 2013, 10, 113.

- Kim, J.-H.; Shim, M.; Ahn, D.H.; Son, B.J.; Kim, S.-Y.; Kim, D.Y.; Baek, Y.S.; Cho, B.-K. Design of a Knee Exoskeleton Using Foot Pressure and Knee Torque Sensors. Int. J. Adv. Robot. Syst. 2015, 12, 112.

- Wenlong, L.; Guoxin, X.; Zihao, W.; Bingqi, J.; Wenqing, G.; Yanjun, Z. Design of brain-controlled lower limb exoskeleton based on multiple environments. J. Environ. Prot. Ecol. 2020, 21, 738–746.

- Tang, Z.; Sun, S.; Zhang, K. Research on the Control Method of an Upper-limb Rehabilitation Exoskeleton Based on Classification of Motor Imagery EEG. J. Mech. Eng. 2017, 53, 60–69.

- Witkowski, M.; Cortese, M.; Cempini, M.; Mellinger, J.; Vitiello, N.; Soekadar, S.R. Enhancing brain-machine interface (BMI) control of a hand exoskeleton using electrooculography (EOG). J. Neuroeng. Rehabil. 2014, 11, 165.

- Cloud, W. Machines That Let You Carry a Ton. Pop. Sci. 1965, 12, 204.

- Guo, Q.; Hu, Z.; Fu, D. A review of upper limb power-assisted exoskeletons. Mech. Transm. 2023, 47, 141–155.

- Mosher, R.S. Handyman to Hardiman. SAE Trans. 1968, 588–597.

- Popular Science. Exoskeleton Man Amplifier. November 1965. Available online: https://cyberneticzoo.com/wp-content/uploads/2010/04/Exoskeleton-PopularScienceNov65.pdf (accessed on 29 March 2025).

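- Ranzani, R.; Lambercy, O.; Metzger, J.-C.; Califfi, A.; Regazzi, S.; Dinacci, D.; Petrillo, C.; Rossi, P.; Conti, F.M.; Gassert, R. Neurocognitive robot-assisted rehabilitation of hand function: A randomized control trial on motor recovery in subacute stroke. J. Neuroeng. Rehabil. 2020, 17, 115.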

- Zhang, X. New extension of HAL (hybrid assistive limb) exoskeleton functions. Med. Equip. 2014, 35, 76.

- Zhang, S.; Gu, H.; Hu, H.; Ming, H. Military portable mechanical energy generation technology. Natl. Def. Sci. Technol. 2024, 45, 34–42.

- Zhang, F.; Sun, Z. Structural design and kinematic analysis of terminal traction upper limb exoskeleton. Mod. Manuf. Eng. 2024, 120–127+158.

- Chang, S.; Hofland, N.; Chen, Z.; Tatsuoka, C.; Richards, L.; Bruestle, M.; Kovelman, H.; Naft, J. Myoelectric Orthosis Assists Functional Activities in Individuals with Upper Limb Impairment Post-Stroke: 3-Month Home Outcomes. Arch. Phys. Med. Rehabil. 2024, 105, e31–e32.

- Nazmi, N.; Rahman, M.A.A.; Yamamoto, S.-I.; Ahmad, S.A.; Zamzuri, H.; Mazlan, S.A. A Review of Classification Techniques of EMG Signals during Isotonic and Isometric Contractions. Sensors 2016, 16, 1304.

- Xie, P.; Xu, M.; Shen, T.; Chen, J.; Jiang, G.; Xiao, J.; Chen, X. A channel-fused gated temporal convolutional network for EMG-based gesture recognition. Biomed. Signal Process. Control 2024, 95, 106408.

- Li, Z.; Wei, D.; Feng, Y.; Yu, F.; Ma, Y. Research Progress of Surface Electromyography Hand Motion Recognition. Comput. Eng. Appl. 2024, 60, 29–43.

- Parsaei, H.; Stashuk, D.W.; Rasheed, S.; Farkas, C.; Hamilton-Wright, A. Intramuscular EMG signal decomposition. Crit. Rev. Biomed. Eng. 2010, 38, 435–465.

- Tang, G. Wearable Electronics for Surface and Needle Electromyography Measurements. In Proceedings of the 2nd International Conference on Biotechnology, Life Science and Medical Engineering (BLSME 2023), Jeju Island, Republic of Korea, 11–12 March 2023.

- Shi, X.; Yu, H.; Tang, Z.; Lu, S.; You, M.; Yin, H.; Chen, Q. Adhesive hydrogel interface for enhanced epidermal signal. Sci. China (Technol. Sci.) 2024, 67, 3136–3151.

- Breathnach, C.S.; Moynihan, J.B. Joseph Erlanger (1874–1965): The cardiovascular investigator who won a Nobel Prize in neurophysiology. J. Med. Biogr. 2014, 22, 228–232.

- Zhang, W.; Li, J.; Gao, F.; Yang, D.; Guo, Y. Research progress of wearable technology in rehabilitation medicine. Chin. J. Rehabil. Theory Pract. 2017, 23, 792–795.

- Chowdhury, R.H.; Reaz, M.B.I.; Ali, M.A.B.M.; Bakar, A.A.A.; Chellappan, K.; Chang, T.G. Surface electromyography signal processing and classification techniques. Sensors 2013, 13, 12431–12466.

- Fleischer, C.; Hommel, G. A human–exoskeleton interface utilizing electromyography. IEEE Trans. Robot. 2008, 24, 872–882.

- Lehman, G.J.; McGill, S.M. The importance of normalization in the interpretation of surface electromyography: A proof of principle. J. Manip. Physiol. Ther. 1999, 22, 444–446.

- Englehart, K.; Hudgins, B. A robust, real-time control scheme for multifunction myoelectric control. IEEE Trans. Biomed. Eng. 2003, 50, 848–854.

- Huang, H.-P.; Chen, C.-Y. Development of a myoelectric discrimination system for a multi-degree prosthetic hand. In Proceedings of the 1999 IEEE International Conference on Robotics and Automation (Cat. No.99CH36288C), Detroit, MI, USA, 10–15 May 1999; pp. 2392–2397.

- Phinyomark, A.; Limsakul, C.; Phukpattaranont, P. A novel feature extraction for robust EMG pattern recognition. arXiv 2009, arXiv:0912.3973.

- Phinyomark, A.; Phukpattaranont, P.; Limsakul, C. Feature reduction and selection for EMG signal classification. Expert Syst. Appl. 2012, 39, 7420–7431.

- Rechy-Ramirez, E.J.; Hu, H. Stages for Developing Control Systems Using EMG and EEG Signals: A Survey; School of Computer Science and Electronic Engineering, University of Essex: Colchester, UK, 2011; ISSN 1744-8050.

- Han, J.S.; Song, W.K.; Kim, J.S.; Bang, W.C.; Lee, H.; Bien, Z. New EMG pattern recognition based on soft computing techniques and its application to control of a rehabilitation robotic arm. In Proceedings of the 6th International Conference on Soft Computing (IIZUKA2000), Iizuka, Japan, 1–4 October 2000; pp. 890–897.

- Du, B.; Wang, X. Review and Prospect: 20 Years of Research on Classification of Chinese Universities—Analysis Based on CiteSpace Knowledge Graph. High. Archit. Educ. 2019, 28, 1–10.

- Zheng, Y.; Jing, X.; Li, G. Application of Human-Machine Intelligence Collaboration in Medical Rehabilitation Robotics. Chin. J. Instrum. 2017, 38, 2373–2380.

- Wei, L.; Yang, A.; Mao, L.; Li, W.; Liu, M.; Wang, C.; Jiang, B.; Wang, Y. Application of Exoskeleton Robot Based on Artificial Intelligence. Mech. Electr. Eng. Technol. 2024, 1–11.

- Zhu, X.; Wang, J.; Wang, X. Application of Nonlinear Iterative Learning Algorithm in Robotic Upper Limb Rehabilitation. Control Decis. 2016, 31, 1325–1329.

- Liu, Y.; Lu, Y.; Xu, X.; Song, Q. Review of Exoskeleton Robot Assistance Effectiveness Test Method and Application. Acta Armamentarii 2024, 45, 2497–2519.

- Song, Z.; Zhao, P.; Wu, X.; Yang, R.; Gao, X. An Active Control Method for a Lower Limb Rehabilitation Robot with Human Motion Intention Recognition. Sensors 2025, 25, 713.

- Wang, X.; Zhang, C.; Yu, Z.; Deng, C. Decoding of lower limb continuous movement intention from multi-channel sEMG and design of adaptive exoskeleton controller. Biomed. Signal Process. Control 2024, 94, 106245.

- Kyeong, S.; Feng, J.; Ryu, J.K.; Park, J.J.; Lee, K.H.; Kim, J. Surface Electromyography Characteristics for Motion Intention Recognition and Implementation Issues in Lower-limb Exoskeletons. Int. J. Control Autom. Syst. 2022, 20, 1018–1028.

- Xiao, F.; Gu, L.; Ma, W.; Zhu, Y.; Zhang, Z.; Wang, Y. Real time motion intention recognition method with limited number of surface electromyography sensors for A 7-DOF hand/wrist rehabilitation exoskeleton. Mechatronics 2021, 79, 102642.

- Jun, L.; Yin, X.; Hui, X. Application of sEMG measurement circuit in exoskeleton upper limb rehabilitation robot. J. Mech. Manuf. Autom. 2017, 46, 146–149+173.

- Niu, M.; Lei, F. Research on motion intention recognition algorithm for lower limb exoskeleton. J. Intell. Syst. 2025, 1–9.

- Zhang, S.; You, Y.; Lin, J.; Wang, H.; Qin, Y.; Han, J.; Yu, N. Intelligent rehabilitation technology based on surface electromyography signals. Artif. Intell. 2022, 34–43.

- Shang, C.; Cheng, Z. Research on gait switching intention recognition and control based on surface electromyography signal. Control Eng. 2021, 28, 1662–1668.

- Song, J.; Zhu, A.; Tu, Y.; Huang, H.; Arif, M.A.; Shen, Z.; Zhang, X.; Cao, G. Effects of Different Feature Parameters of sEMG on Human Motion Pattern Recognition Using Multilayer Perceptrons and LSTM Neural Networks. Appl. Sci. 2020, 10, 3358.

- Shi, X.; Qin, P.; Zhu, J.; Xu, S.; Shi, W. Lower Limb Motion Recognition Method Based on Improved Wavelet Packet Transform and Unscented Kalman Neural Network. Math. Probl. Eng. 2020, 2020, 5684812.

- Liu, Q.; Wang, S.; Dai, Y.; Wu, X.; Guo, S.; Su, W. Two-dimensional identification of lower limb gait features based on the variational modal decomposition of sEMG signal and convolutional neural network. Gait Posture 2025, 117, 191–203.

- Zhou, H.; Feng, R.; Peng, Y.; Jin, D.; Li, X.; Shou, D.; Li, G.; Wang, L. Integration of multiscale fusion of residual neural network with 2-D gramian angular fields for lower limb movement recognition based on multi-channel sEMG signals. Biomed. Signal Process. Control 2025, 99, 106807.

- Kang, I.; Molinaro, D.D.; Choi, G.; Young, A.J. Continuous locomotion mode classification using a robotic hip exoskeleton. In Proceedings of the 2020 8th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), New York, NY, USA, 29 November–1 December 2020; pp. 376–381.

- Li, K.; Li, Z.; Zeng, H.; Wei, N. Control of Newly-Designed Wearable Robotic Hand Exoskeleton Based on Surface Electromyographic Signals. Front. Neurorobot. 2021, 15, 121.

- Feng, Y.; Zhong, M.; Wang, X.; Lu, H.; Wang, H.; Liu, P.; Vladareanu, L. Active triggering control of pneumatic rehabilitation gloves based on surface electromyography sensors. PeerJ Comput. Sci. 2021, 7, e448.

- Zhang, Q.; Zhang, T.; Zhao, Z. Human motion modes recognition based on multi-sensors. Transducer Microsyst. Technol. 2019, 38, 73–76.

- Abdallah, I.B.; Bouteraa, Y. An Optimized Stimulation Control System for Upper Limb Exoskeleton Robot-Assisted Rehabilitation Using a Fuzzy Logic-Based Pain Detection Approach. Sensors 2024, 24, 1047.

- Zhang, R.; Zhang, X.; Guo, Y.; He, D.; Wang, R. Recognition and Analysis of Hand Gesture Based on sEMG Signals. J. Med. Biomech. 2022, 37, 818–825.

- Li, K.; Zhang, J.; Wang, L.; Zhang, M.; Li, J.; Bao, S. A review of the key technologies for sEMG-based human-robot interaction systems. Biomed. Signal Process. Control 2020, 62, 102074.

- Duan, F.; Ren, X.; Yang, Y. A gesture recognition system based on time domain features and linear discriminant analysis. IEEE Trans. Cogn. Dev. Syst. 2018, 13, 200–208.

- Qi, J.; Jiang, G.; Li, G.; Sun, Y.; Tao, B. Intelligent human-computer interaction based on surface EMG gesture recognition. IEEE Access 2019, 7, 61378–61387.

- Naik, G.R.; Acharyya, A.; Nguyen, H.T. Classification of finger extension and flexion of EMG and Cyberglove data with modified ICA weight matrix. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 3829–3832.

- Nasr, A.; Hunter, J.; Dickerson, C.R.; McPhee, J. Evaluation of a machine-learning-driven active–passive upper-limb exoskeleton robot: Experimental human-in-the-loop study. Wearable Technol. 2023, 4, e13.

- Benalcazar, M.E.; Motoche, C.; Zea, J.A.; Jaramillo, A.G.; Anchundia, C.E.; Zambrano, P.; Segura, M.; Palacios, F.B.; Perez, M. Real-time hand gesture recognition using the Myo armband and muscle activity detection. In Proceedings of the 2017 IEEE Second Ecuador Technical Chapters Meeting (ETCM), Salinas, Ecuador, 16–20 October 2017; pp. 1–6.

- Narayan, Y. sEMG signal classification using KNN classifier with FD and TFD features. Mater. Today Proc. 2021, 37, 3219–3225.

- Bergil, E.; Oral, C.; Ergul, E.U. Efficient hand movement detection using k-means clustering and k-nearest neighbor algorithms. J. Med. Biol. Eng. 2021, 41, 11–24.

- Xue, J.; Sun, Z.; Duan, F.; Caiafa, C.F.; Solé-Casals, J. Underwater sEMG-based recognition of hand gestures using tensor decomposition. Pattern Recognit. Lett. 2023, 165, 39–46.

- Chen, X.; Wang, Z.J. Pattern recognition of number gestures based on a wireless surface EMG system. Biomed. Signal Process. Control 2013, 8, 184–192.

- Kong, D.; Wang, W.; Guo, D.; Shi, Y. RBF sliding mode control method for an upper limb rehabilitation exoskeleton based on intent recognition. Appl. Sci. 2022, 12, 4993.

- Pourmokhtari, M.; Beigzadeh, B. Simple recognition of hand gestures using single-channel EMG signals. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2024, 238, 372–380.

- Tam, S.; Boukadoum, M.; Campeau-Lecours, A.; Gosselin, B. Intuitive real-time control strategy for high-density myoelectric hand prosthesis using deep and transfer learning. Sci. Rep. 2021, 11, 11275.

- Lin, Z.; Liang, P.; Zhang, X.; Qin, Z. Toward robust high-density EMG pattern recognition using generative adversarial network and convolutional neural network. In Proceedings of the 2023 11th International IEEE/EMBS Conference on Neural Engineering (NER), Baltimore, MD, USA, 24–27 April 2023; pp. 1–5.

- Rehman, M.Z.U.; Waris, A.; Gilani, S.O.; Jochumsen, M.; Niazi, I.K.; Jamil, M.; Farina, D.; Kamavuako, E.N. Multiday EMG-based classification of hand motions with deep learning techniques. Sensors 2018, 18, 2497.

- Jiang, Y.; Chen, C.; Zhang, X.; Chen, C.; Zhou, Y.; Ni, G.; Muh, S.; Lemos, S. Shoulder muscle activation pattern recognition based on sEMG and machine learning algorithms. Comput. Methods Programs Biomed. 2020, 197, 105721.

- Mohapatra, A.D.; Aggarwal, A.; Tripathy, R.K. Automated Recognition of Hand Gestures from Multichannel EMG Sensor Data Using Time–Frequency Domain Deep Learning for IoT Applications. IEEE Sens. Lett. 2024, 8, 7003204.

- Jiang, N.; Chen, C.; He, J.; Meng, J.; Pan, L.; Su, S.; Zhu, X. Bio-robotics research for non-invasive myoelectric neural interfaces for upper-limb prosthetic control: A 10-year perspective review. Natl. Sci. Rev. 2023, 10, nwad048.