Monitoring Opioid-Use-Disorder Treatment Adherence Using Smartwatch Gesture Recognition

Abstract

1. Introduction

2. Materials and Methods

- Simulated methadone-taking gesture;

- Simulated buprenorphine-taking gesture (sublingual strip form);

- Typical daily gestures unrelated to medication-taking.

- Data Collection: Sensor data were collected from individuals performing:

  - (a) Simulated methadone-taking (liquid ingestion);
  - (b) Simulated buprenorphine-taking (sublingual film ingestion);
  - (c) Daily living activities unrelated to medication-taking.

- Data Processing: Raw sensor data were filtered, segmented into fixed-length windows, and labeled for supervised learning.

- Model Training and Evaluation: Data were split into training, validation, and test sets. Models were trained to classify gestures, optimized using validation data, and evaluated on the test set using F1 score, precision, recall, and confusion matrices.

- Representation Analysis: Gesture embeddings were visualized using t-SNE to assess learned feature separability.

- Deployment Considerations: Model performance on unseen participants and feasibility for real-time smartwatch classification were assessed.
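The filtering, windowing, and labeling steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the study's pipeline: samples are assumed to be tri-axial (x, y, z) readings, a simple moving-average filter stands in for whatever filter was actually applied, each window takes the majority label of its samples, and the names `moving_average`, `segment`, `window`, and `step` are hypothetical.

```python
from collections import Counter
from typing import List, Sequence, Tuple

# Hypothetical sample format: one tri-axial sensor reading (x, y, z).
Sample = Tuple[float, float, float]


def moving_average(signal: Sequence[Sample], k: int) -> List[Sample]:
    """Causal moving-average low-pass filter over the last k samples (assumed filter)."""
    filtered: List[Sample] = []
    for i in range(len(signal)):
        chunk = signal[max(0, i - k + 1): i + 1]
        n = len(chunk)
        filtered.append(tuple(sum(s[axis] for s in chunk) / n for axis in range(3)))
    return filtered


def segment(
    signal: Sequence[Sample], labels: Sequence[str], window: int, step: int
) -> List[Tuple[List[Sample], str]]:
    """Cut the signal into fixed-length windows; label each by majority vote (assumed rule)."""
    if len(signal) != len(labels):
        raise ValueError("signal and labels must have the same length")
    windows = []
    # Start positions advance by `step`; step < window gives overlapping windows.
    for start in range(0, len(signal) - window + 1, step):
        seg = list(signal[start:start + window])
        label = Counter(labels[start:start + window]).most_common(1)[0][0]
        windows.append((seg, label))
    return windows
```

For example, `segment(moving_average(raw, k=3), labels, window=4, step=2)` yields overlapping four-sample windows; the study's actual window length and overlap are not stated in this section, so these values are placeholders.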

2.1. Participants

2.2. Equipment

2.3. Data Collection and Storage

- Natural MTE (nMTE): Participants performed medication-taking gestures naturally for one week (5 days).

- Scripted MTE (sMTE): Participants performed gestures following a scripted protocol for a second week (5 days).

2.4. Data Labeling

2.5. Data Preprocessing

2.6. Independence Considerations

- Participant Level: Ensuring that no data from the same participant appears in both training and testing sets would represent the most stringent definition of independence. However, this assumption may be overly strict if the system is intended for deployment within the same group of participants it was trained on.

- Recording Level: Each participant provided multiple recordings, captured at different times, which may exhibit variations (e.g., differences in how the smartwatch was worn). Splitting recordings into separate training and testing sets would partially enforce independence but may still allow for intra-participant correlations.

- Gesture Level: Within each recording, gestures often exhibit self-similarity due to consistent movement patterns. Splitting at the gesture level ensures that no individual gesture contributes samples to both training and testing sets, minimizing overlap and enforcing independence at the gesture level, although different gestures from the same recording or participant may still appear in both sets.
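The three levels above differ only in which identifier is held out as a unit. The Python sketch below assumes each window carries a group identifier (a participant ID, a recording ID, or a per-gesture ID) and assigns whole groups to the test set, so that no group contributes samples to both sets. The function `group_split` and its parameters are hypothetical illustrations, not the study's code.

```python
import random
from typing import Hashable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def group_split(
    items: Sequence[T],
    group_ids: Sequence[Hashable],
    test_fraction: float,
    seed: int = 0,
) -> Tuple[List[T], List[T]]:
    """Hold out whole groups so that no group contributes items to both sets.

    The choice of group ID sets the independence level: participant ID,
    recording ID, or a per-gesture ID (all hypothetical fields here).
    """
    if len(items) != len(group_ids):
        raise ValueError("items and group_ids must have the same length")
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    # Sort first so the shuffle is reproducible for a given seed.
    groups = sorted(set(group_ids), key=repr)
    random.Random(seed).shuffle(groups)
    n_test = max(1, round(len(groups) * test_fraction))
    test_groups = set(groups[:n_test])
    train = [x for x, g in zip(items, group_ids) if g not in test_groups]
    test = [x for x, g in zip(items, group_ids) if g in test_groups]
    return train, test
```

Passing participant IDs gives a participant-level split, recording IDs a recording-level split, and (participant, recording, gesture) tuples a gesture-level split; calling the function again on the training portion would carve out a validation set.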

2.7. Model Design

2.8. Optimization and Hardware Utilization

2.9. Evaluation Scenarios

2.9.1. Scenario 1

2.9.2. Scenario 2

2.9.3. Scenario 3

2.10. Evaluation Metrics
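The metrics named in the study overview (precision, recall, F1 score, and confusion matrices) can be computed from true and predicted window labels. The plain-Python sketch below builds a confusion matrix with rows as true classes and columns as predicted classes, derives one-vs-rest precision, recall, and F1 per class, and takes the macro average as the unweighted mean over classes. The function names are hypothetical, and computing macro F1 as the mean of per-class F1 scores (rather than from macro precision and recall) is an assumption about the averaging convention.

```python
from typing import Dict, List, Sequence


def confusion_matrix(
    y_true: Sequence[str], y_pred: Sequence[str], classes: Sequence[str]
) -> List[List[int]]:
    """Count predictions: rows are true classes, columns are predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    cm = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        cm[index[t]][index[p]] += 1
    return cm


def per_class_metrics(
    cm: List[List[int]], classes: Sequence[str]
) -> Dict[str, Dict[str, float]]:
    """One-vs-rest precision, recall, and F1 for each class."""
    k = len(classes)
    metrics = {}
    for i, c in enumerate(classes):
        tp = cm[i][i]
        fp = sum(cm[r][i] for r in range(k)) - tp  # predicted c, actually another class
        fn = sum(cm[i]) - tp  # actually c, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        metrics[c] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


def macro_average(metrics: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Unweighted mean of each metric over classes (assumed macro convention)."""
    return {
        name: sum(m[name] for m in metrics.values()) / len(metrics)
        for name in ("precision", "recall", "f1")
    }
```

Scenarios 1 and 3 are binary, so the same functions apply with a two-element `classes` list.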

3. Results

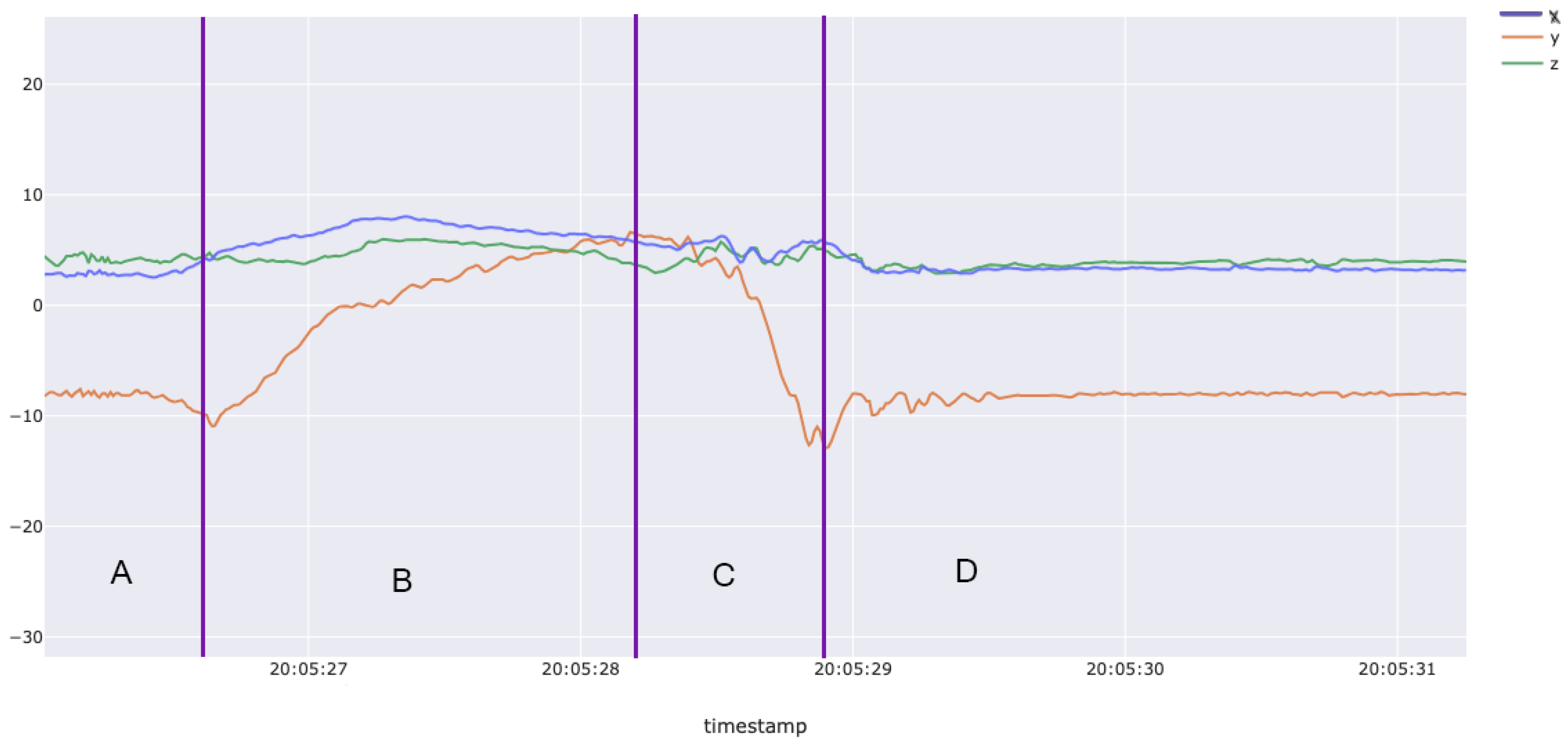

3.1. Dataset Qualitative Results

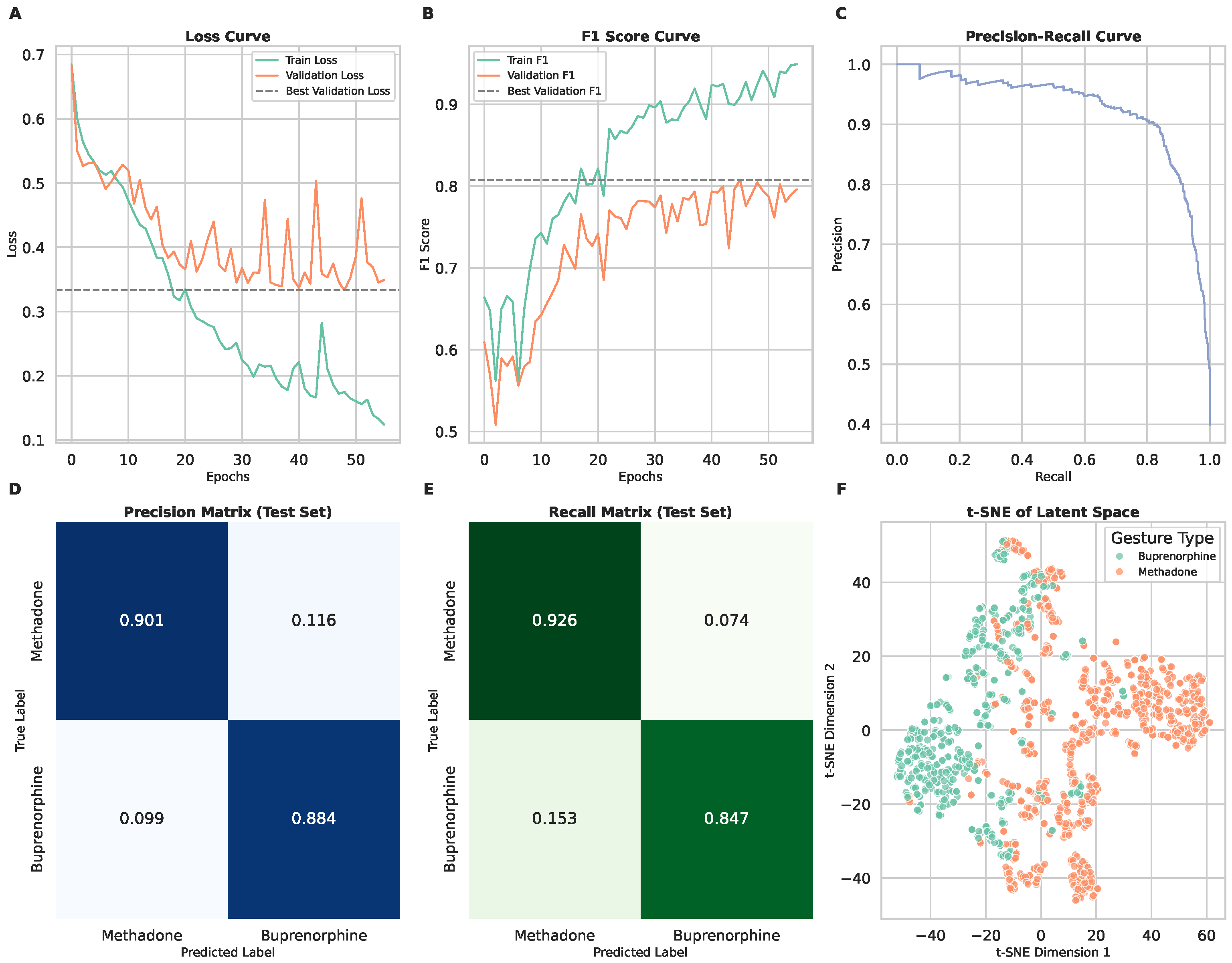

3.2. Scenario 1: Binary Classification of Medication Types

3.3. Scenario 2: Three-Class Classification (Including Daily Living Gestures)

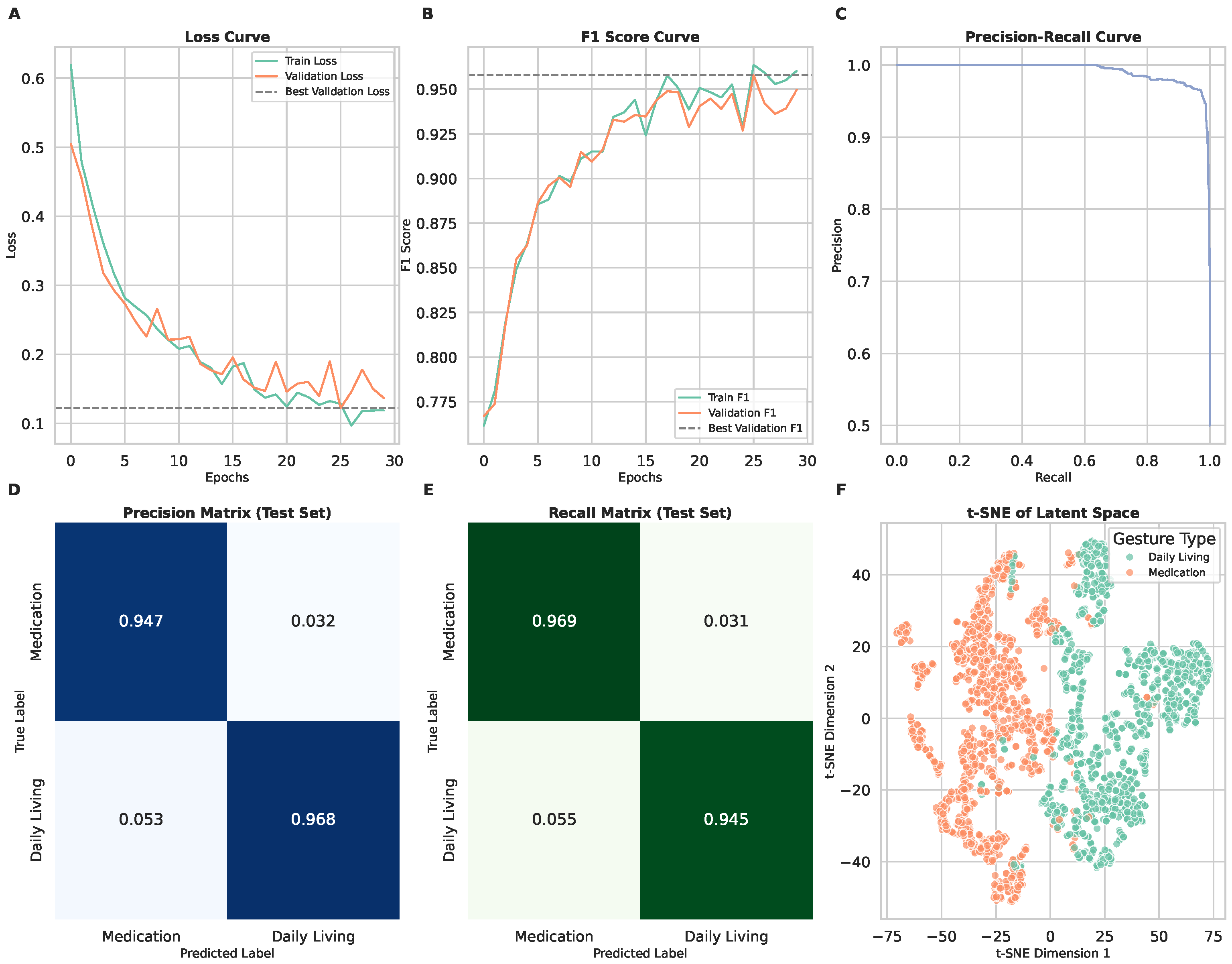

3.4. Scenario 3: Binary Classification of Medication-Taking vs. Daily Living

4. Conclusions

5. Discussion

6. Future Directions

- Clinical Validation: Conduct real-world studies with individuals actively undergoing OUD treatment to assess the practical effectiveness of the model.

- Feature Expansion: Incorporate additional smartwatch sensor data, such as pulse oximetry, heart rate, and temperature, together with contextual features such as time of day, to improve classification performance.

- User Adaptability: Develop personalized machine learning models that can adjust to individual variations in gesture performance over time.

- Integration with Treatment Programs: Explore integration with mobile health applications and digital therapeutic platforms to provide real-time feedback and adherence support.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MTE | Medication-Taking Event |
| OUD | Opioid Use Disorder |
| MOUD | Medications for Opioid Use Disorder |
| NOWS | Neonatal Opioid Withdrawal Syndrome |
| IRB | Institutional Review Board |
| ML | Machine Learning |
| AI | Artificial Intelligence |
| KDE | Kernel Density Estimation |
| CNN | Convolutional Neural Network |
| CSV | Comma-Separated Values |

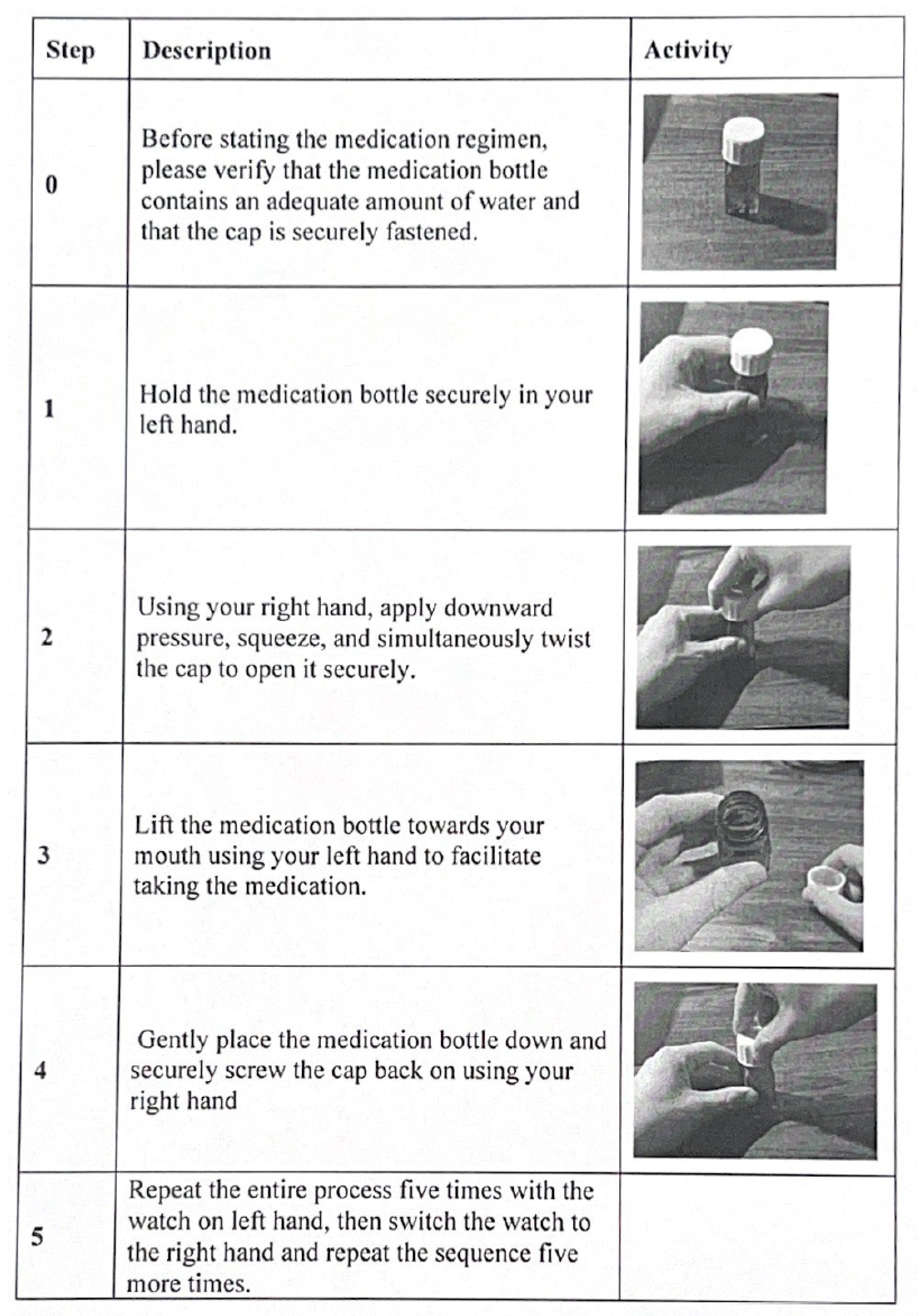

Appendix A. Standardized Medication-Taking Protocols

Appendix A.1. Medication Bottle Protocol

Appendix A.2. Sublingual Film/Packet Protocol


| Metric | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|
| Macro Performance | | | |
| F1 Score | 0.89 | 0.88 | 0.96 |
| Recall | 0.88 | 0.87 | 0.96 |
| Precision | 0.89 | 0.88 | 0.96 |
| Methadone Performance | | | |
| F1 Score | 0.91 | 0.87 | 0.96 |
| Recall | 0.92 | 0.85 | 0.97 |
| Precision | 0.90 | 0.90 | 0.95 |
| Buprenorphine Performance | | | |
| F1 Score | 0.86 | 0.80 | 0.96 |
| Recall | 0.84 | 0.79 | 0.97 |
| Precision | 0.88 | 0.81 | 0.95 |
| Daily Living Performance | | | |
| F1 Score | NA | 0.96 | 0.96 |
| Recall | NA | 0.99 | 0.94 |
| Precision | NA | 0.94 | 0.97 |
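Each per-class F1 score in the table is the harmonic mean of the corresponding precision $P$ and recall $R$. As a worked check on two entries (Methadone in Scenario 1 and Buprenorphine in Scenario 2), the reported values agree with this definition up to rounding:

$$
F_1 = \frac{2PR}{P + R}
$$

$$
F_1^{\text{methadone, S1}} = \frac{2(0.90)(0.92)}{0.90 + 0.92} = \frac{1.656}{1.82} \approx 0.91
$$

$$
F_1^{\text{buprenorphine, S2}} = \frac{2(0.81)(0.79)}{0.81 + 0.79} = \frac{1.2798}{1.60} \approx 0.80
$$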

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Smith, A.; Jerzmanowski, K.; Raynor, P.; Corbett, C.F.; Valafar, H. Monitoring Opioid-Use-Disorder Treatment Adherence Using Smartwatch Gesture Recognition. Sensors 2025, 25, 2443. https://doi.org/10.3390/s25082443
