Evaluating Sensor Fusion and Flight Parameters for Enhanced Plant Height Measurement in Dry Peas

Abstract

1. Introduction

2. Materials and Methods

2.1. Field Trial

2.2. Image Acquisition

2.3. Image Processing

2.4. Data Analyses

2.4.1. Dataset Creation and Cleaning

2.4.2. The Effect of Flight Configurations on the Estimation of Plant Height

2.4.3. Sensor Fusion

3. Results and Discussion

3.1. Selecting the Best Height Metric

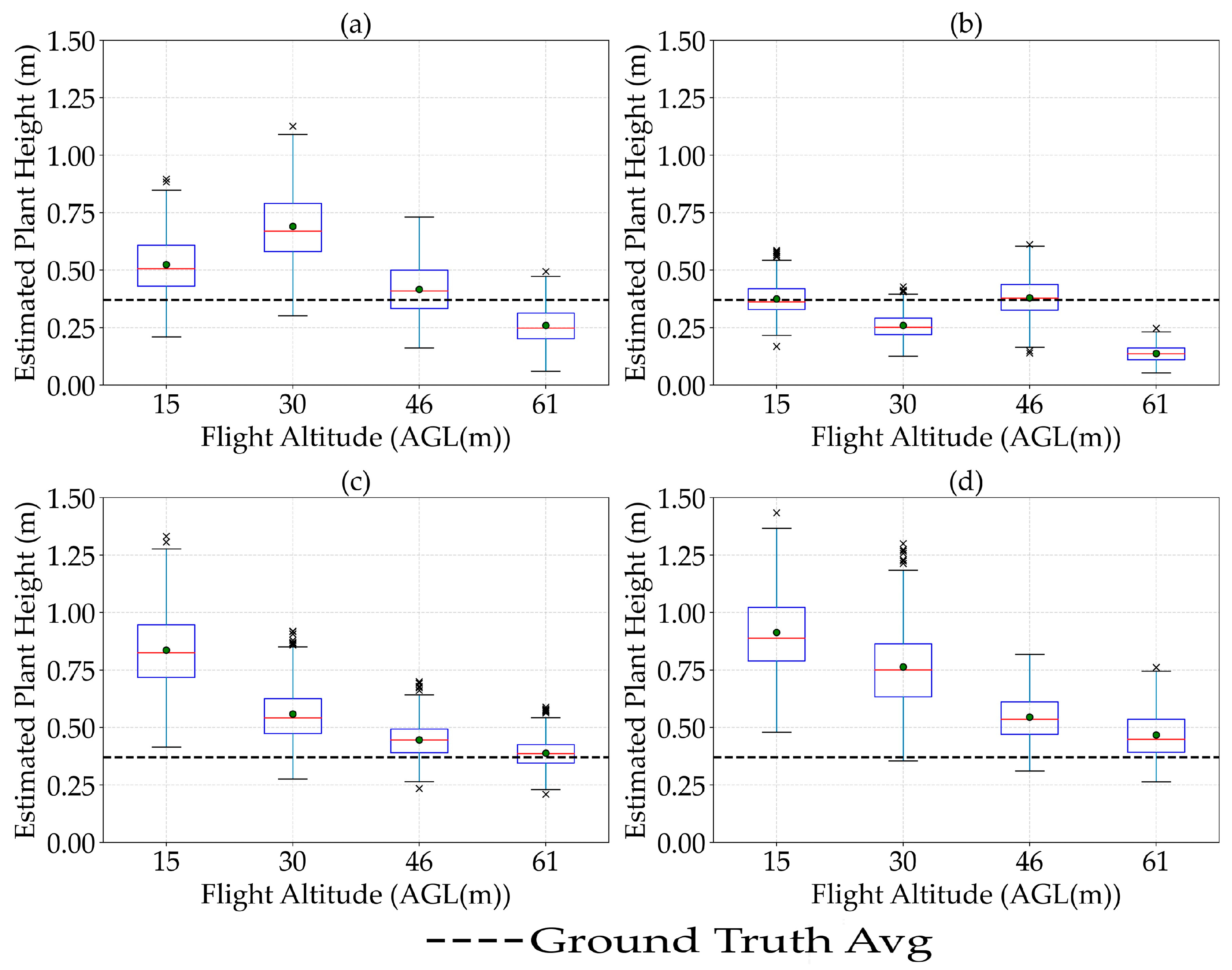

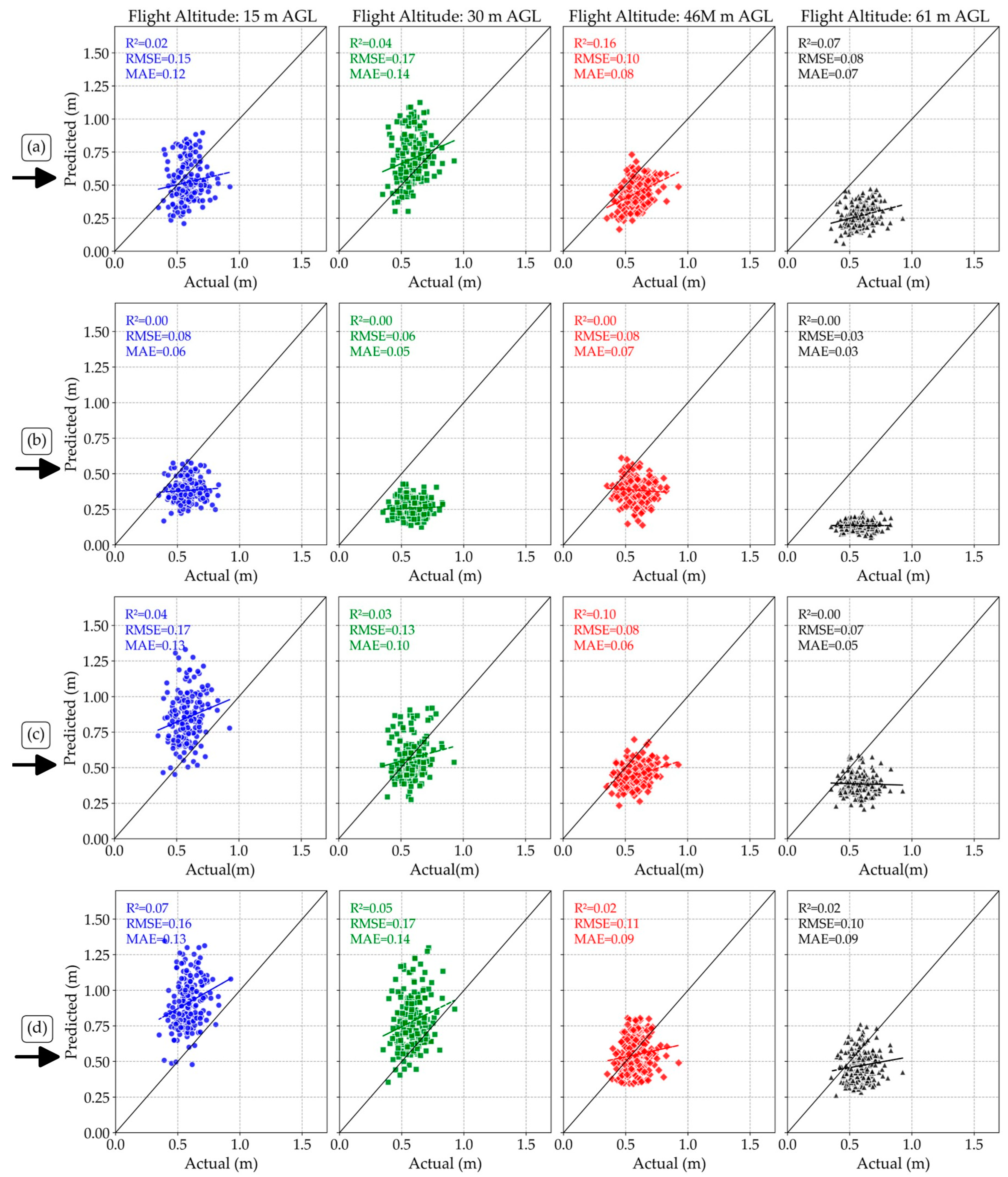

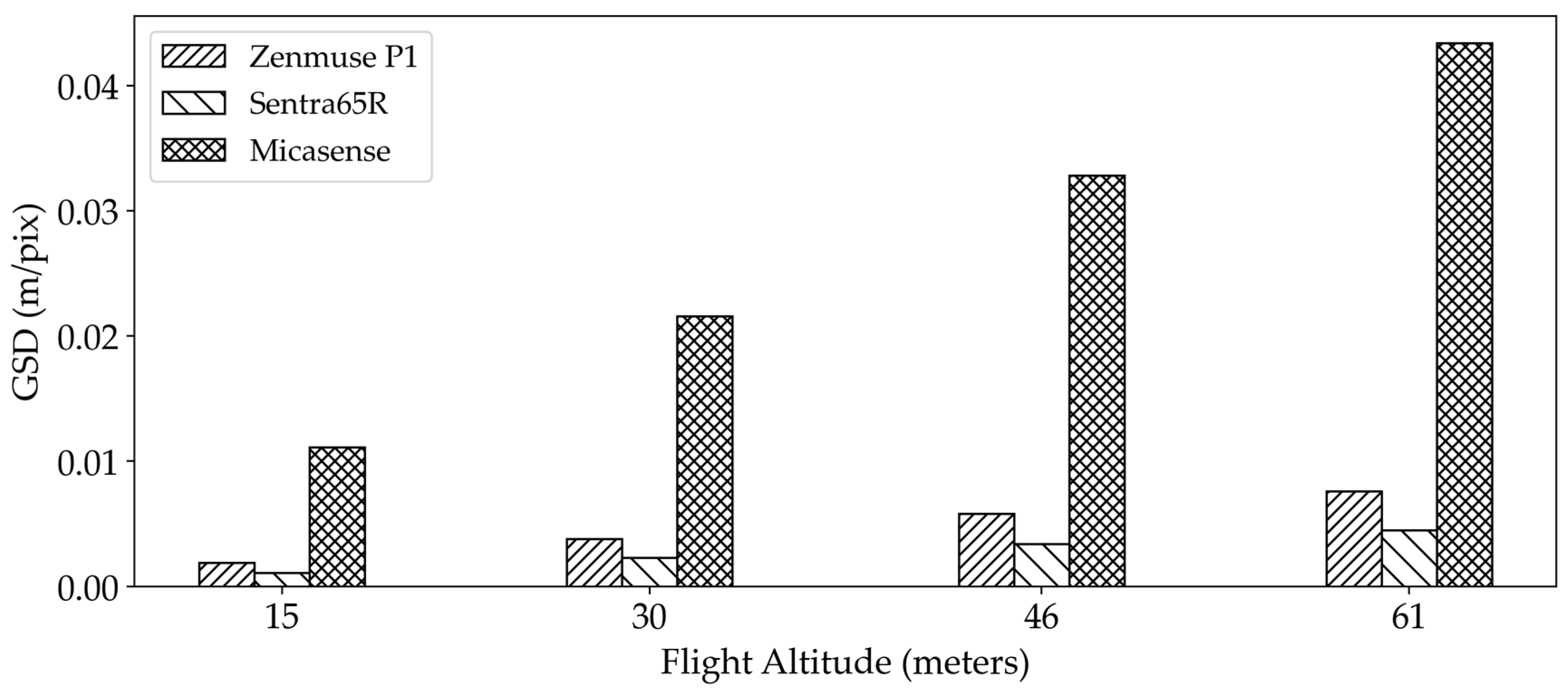

3.2. The Effect of Flight Altitude on the Estimation of Plant Height

3.3. The Effect of Flight Speed on the Estimation of Plant Height

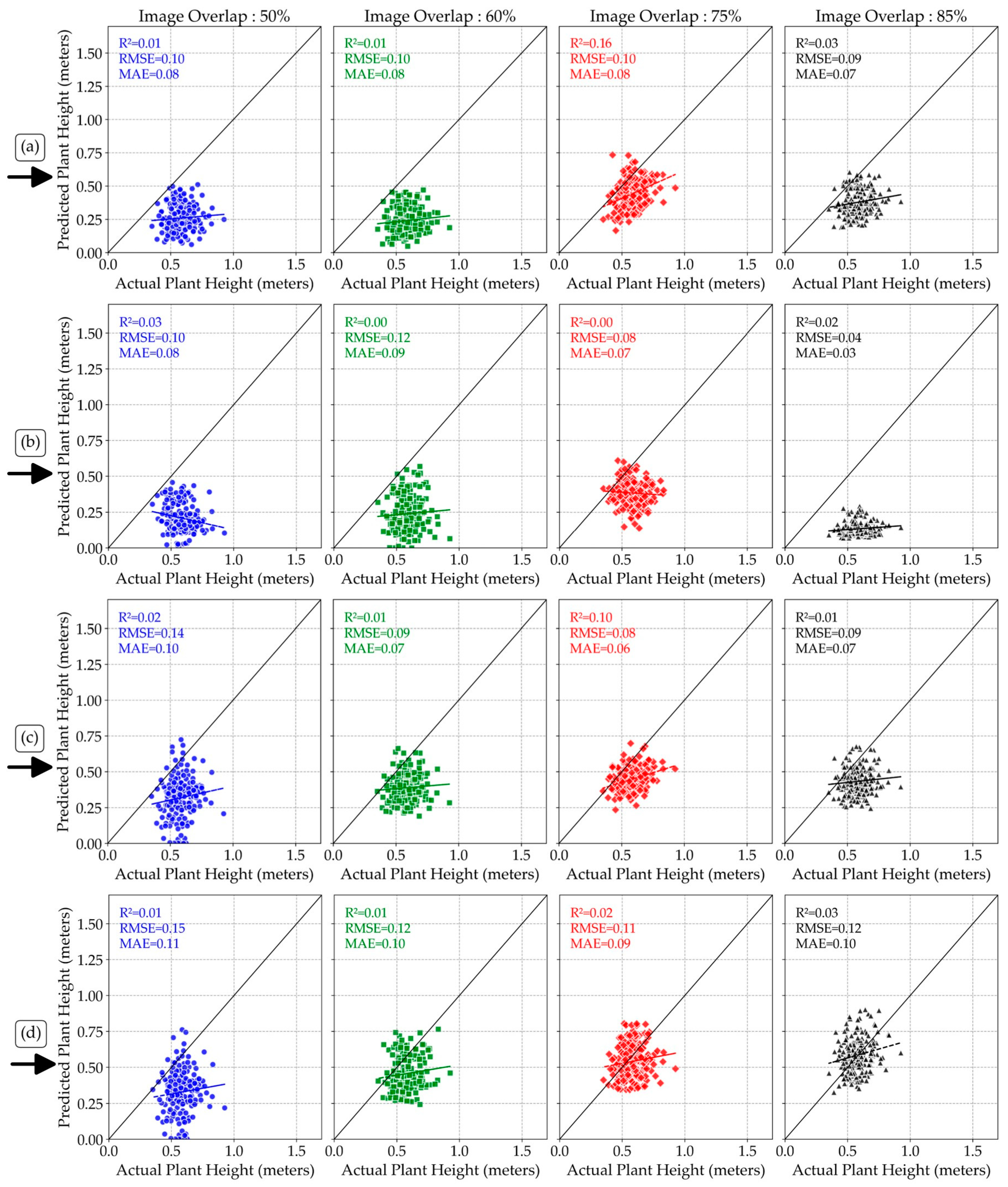

3.4. The Effect of Image Overlaps on Plant Height Estimation

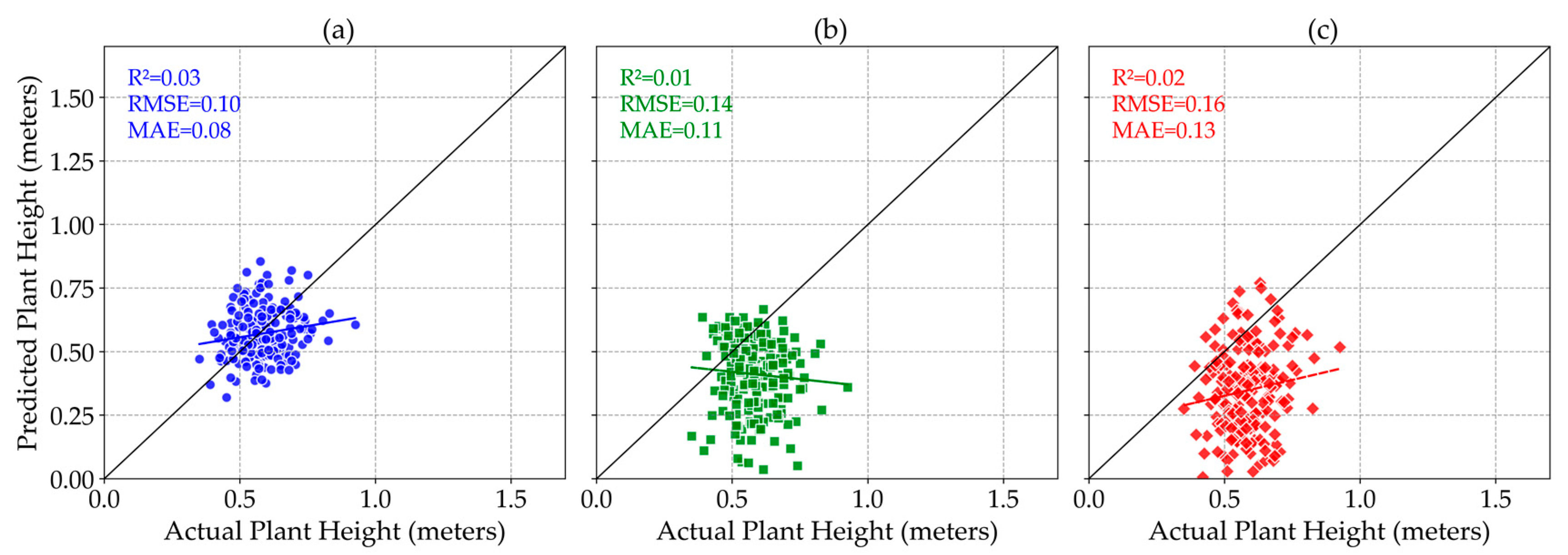

3.5. Effect of Sensor Fusion on Plant Height Estimation Accuracy

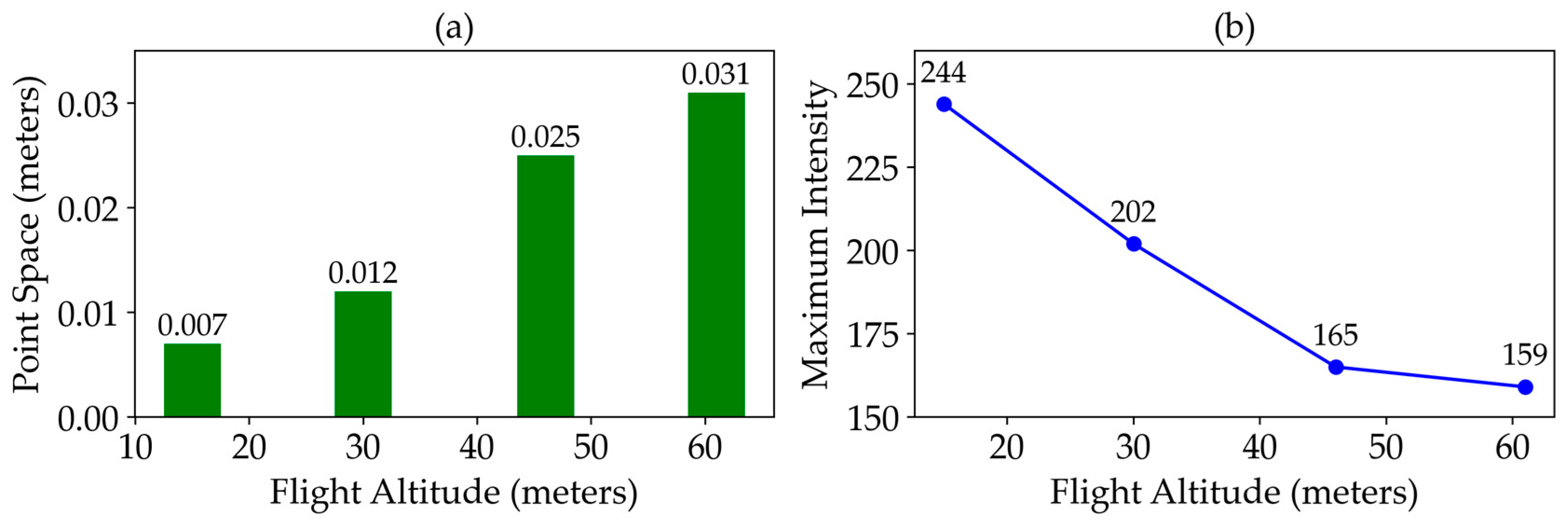

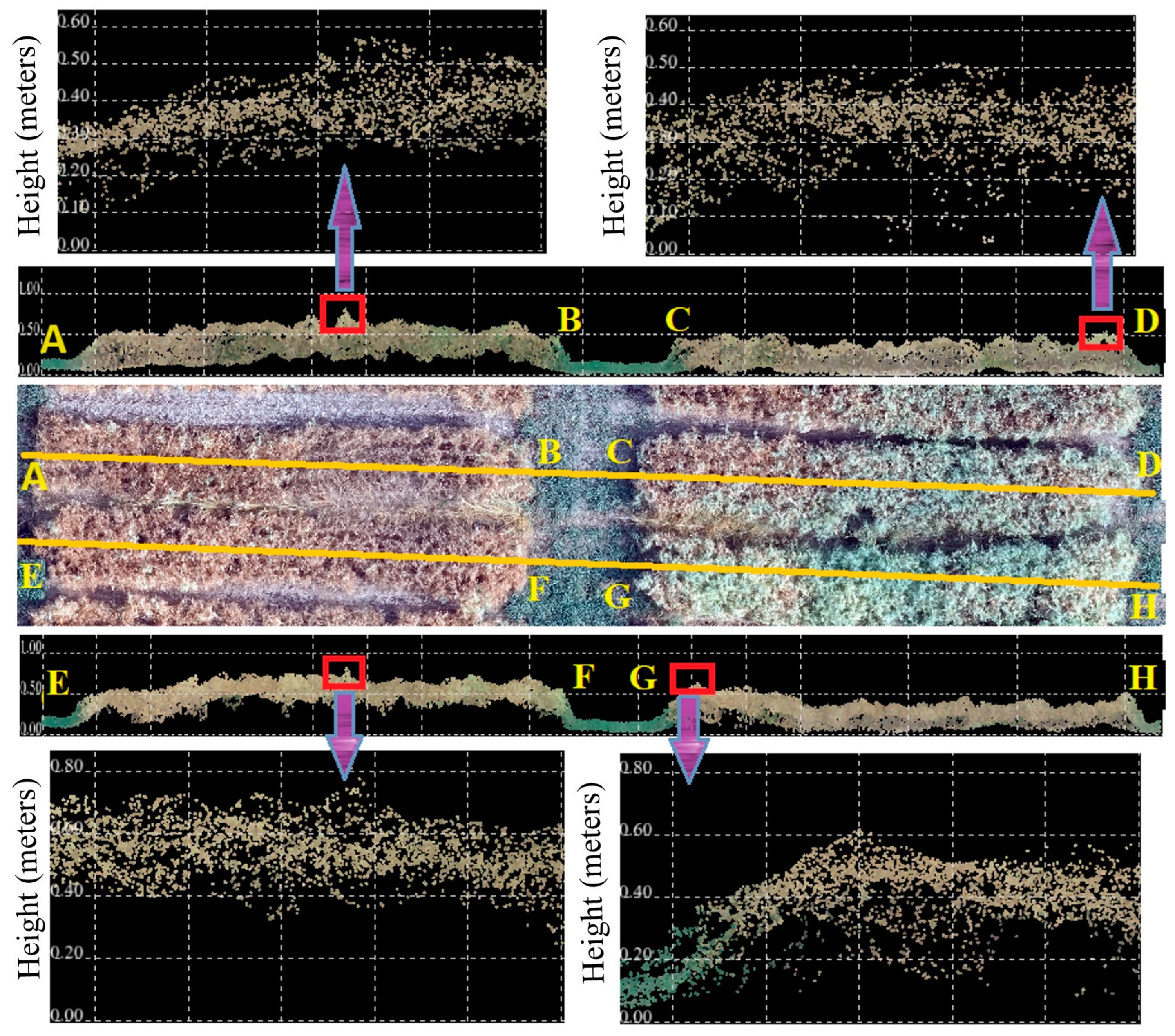

3.6. Limitations of Sensor Performance for Estimating Plant Height

3.7. Challenges and Future Works

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bazrafkan, A.; Worral, H.; Perdigon, C.; Oduor, P.G.; Bandillo, N.; Flores, P. Evaluating Sensor Fusion and Flight Parameters for Enhanced Plant Height Measurement in Dry Peas. Sensors 2025, 25, 2436. https://doi.org/10.3390/s25082436
