Energy-Aware Edge Infrastructure Traffic Management Using Programmable Data Planes in 5G and Beyond

Abstract

1. Introduction

2. Technological Background

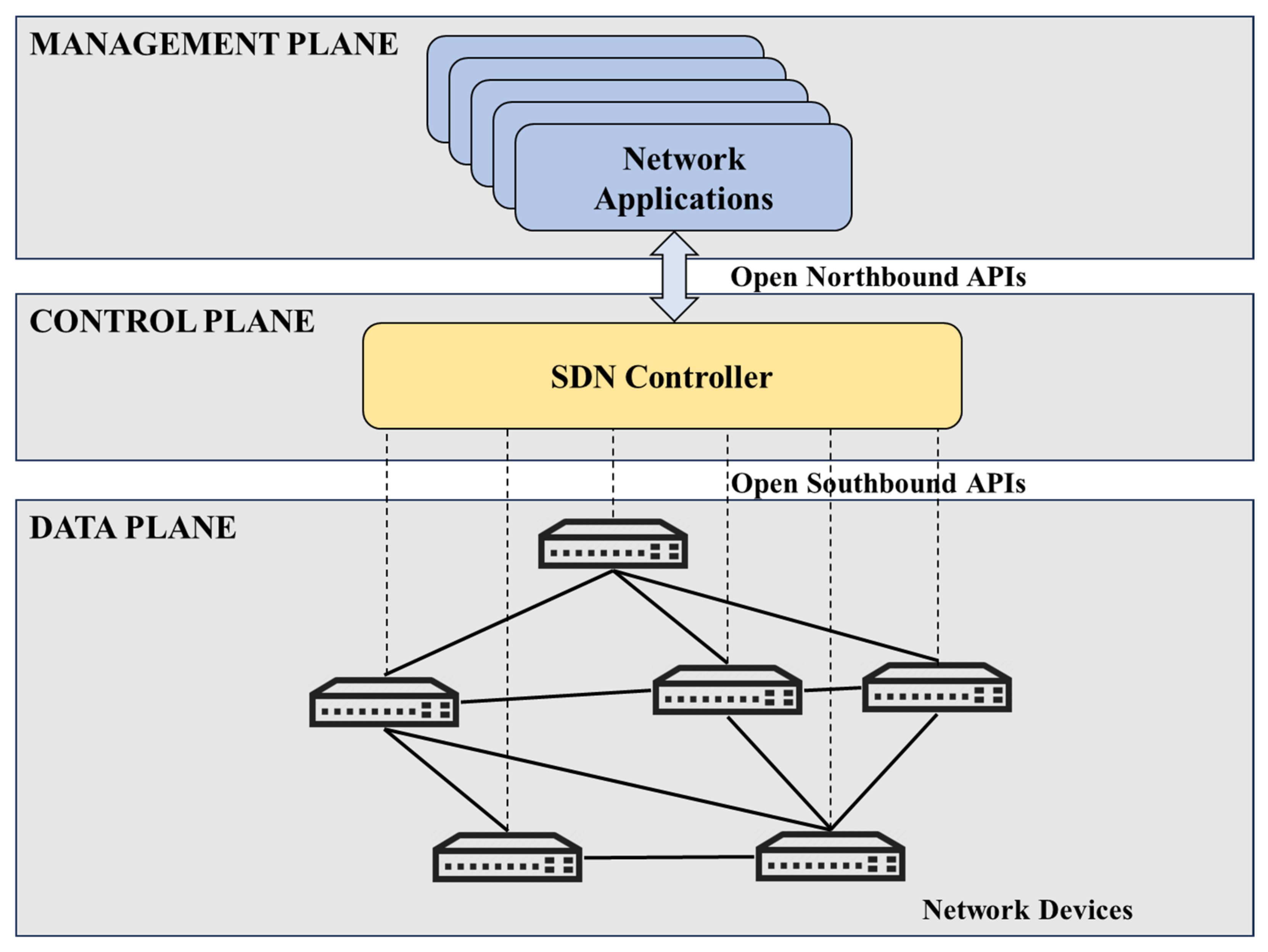

2.1. Software-Defined Networking

- Separation of control and data planes: The control plane, responsible for deciding how packets are forwarded, is separated from the data plane, which carries out the actual packet forwarding. This decoupling provides greater flexibility and scalability for managing the network.

- Centralized control: A logically centralized SDN controller holds the network's control logic and a global view of the network state, which supports better-informed forwarding decisions. This controller can run on commodity hardware, allowing for cost-effective network management.

- Programmability: SDN allows the network to be programmed through application programming interfaces (APIs) exposed by the SDN controller to the applications running on top of it. This makes it possible to automate and dynamically configure network functions.

- Network virtualization: SDN facilitates network virtualization, allowing multiple virtual networks to share the same physical infrastructure.

- Use of open standards: SDN relies on open standards and protocols, such as OpenFlow [17], to ensure interoperability between different vendors’ products, enabling a more open and innovative ecosystem.

2.2. Programmable Data Planes and P4

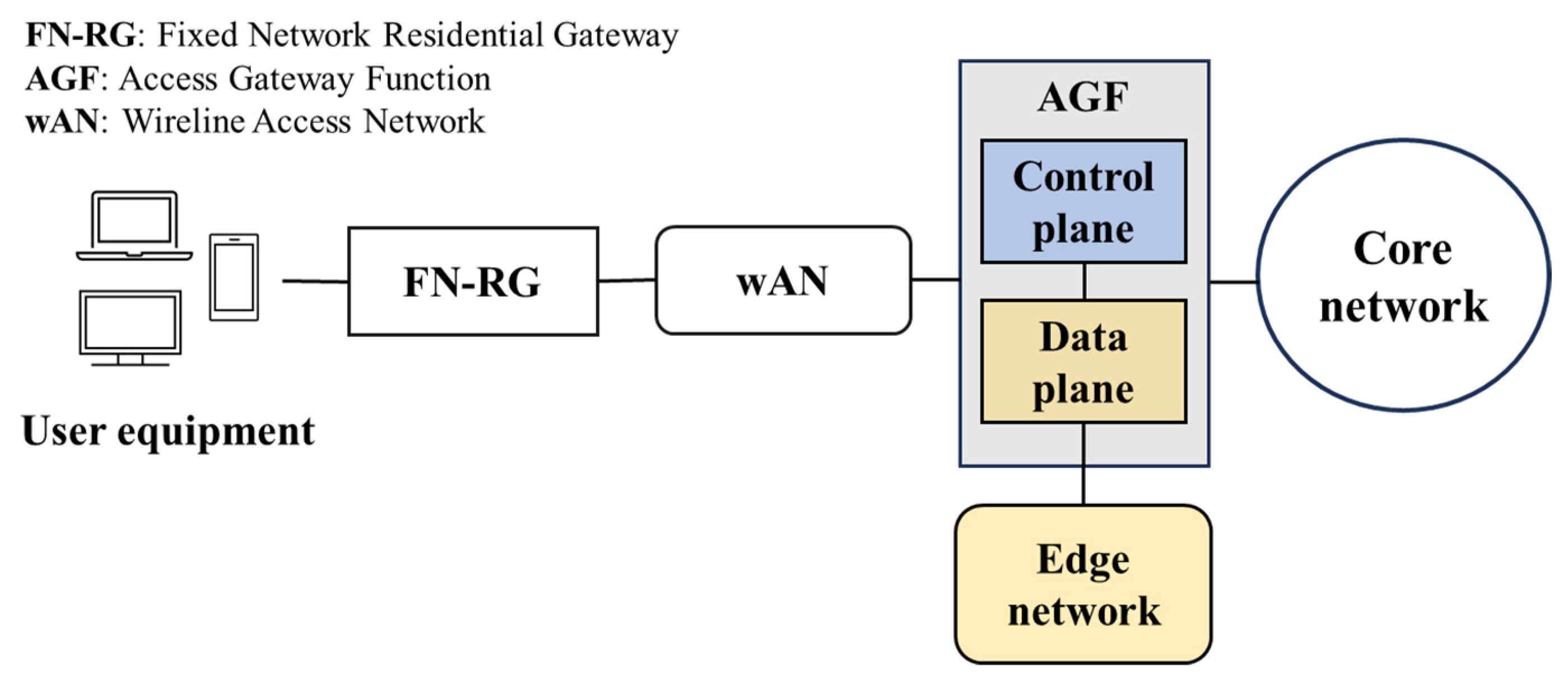

2.3. Fifth-Generation-and-Beyond Edge Technology

- Access Gateway Function (AGF): allows users from a wireline access network (wAN) to receive services provided by the same core network that supports mobile subscribers.

- User Plane Function (UPF): manages user data traffic by routing and forwarding packets. It also handles encapsulation and decapsulation, QoS management, and session statistics.

- Access and Mobility Management Function (AMF): handles signaling for authentication, connection and mobility.

- Session Management Function (SMF): oversees session management tasks, including the creation, modification, and termination of user data sessions.

- Authentication Server Function (AUSF): controls the authentication of a 3GPP or non-3GPP access.

- Network Repository Function (NRF): serves as a central registry of all NFs, allowing NFs to register and be discovered by other NFs.

- Policy Control Function (PCF): provides unified policy rules that govern control plane functions, covering areas such as mobility, roaming, and network slicing.

- Unified Data Management (UDM): stores subscriber data and user profiles for use by the core network.

3. Related Work

4. Motivation, Architecture, and Methodology

4.1. Motivation

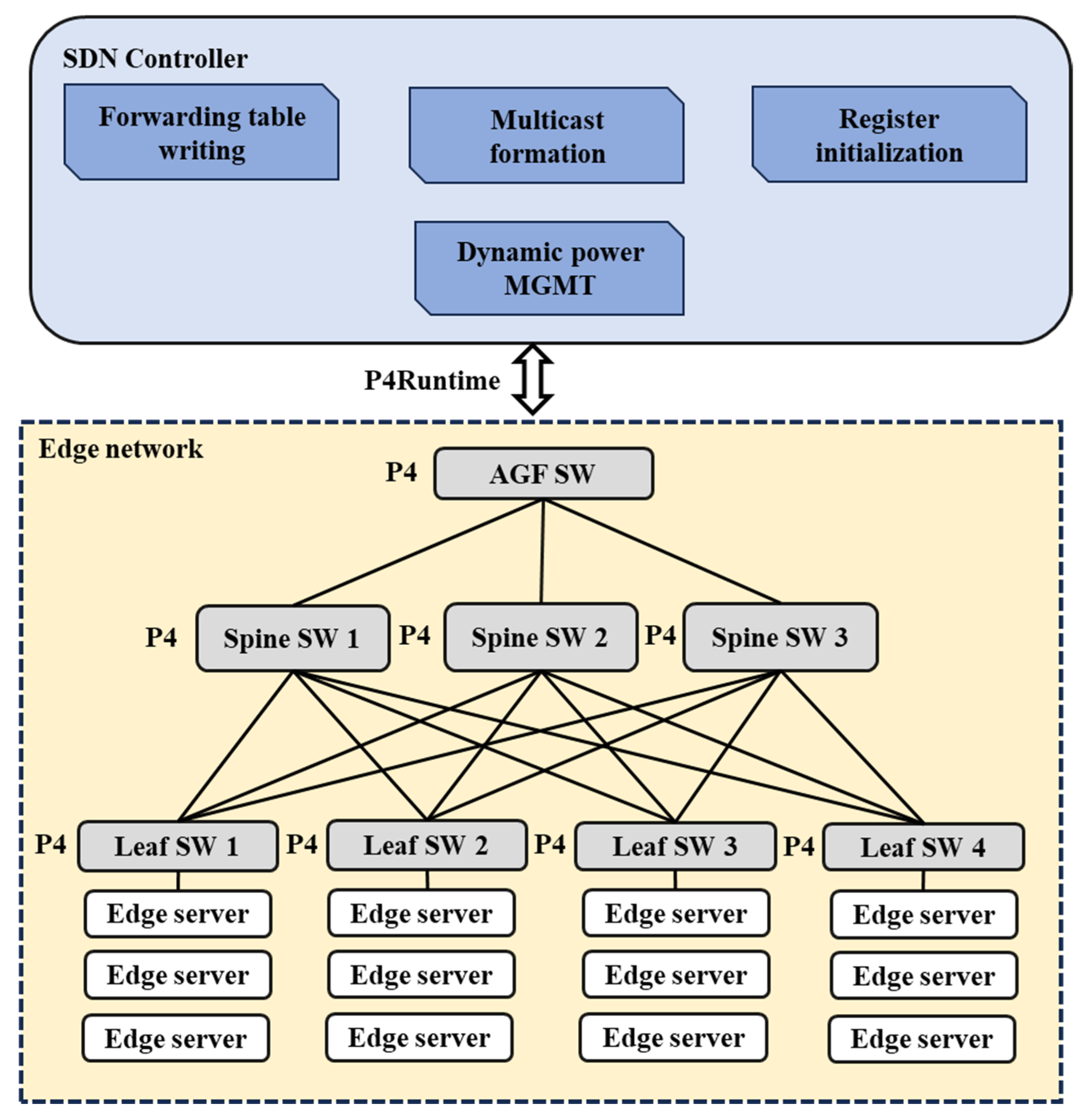

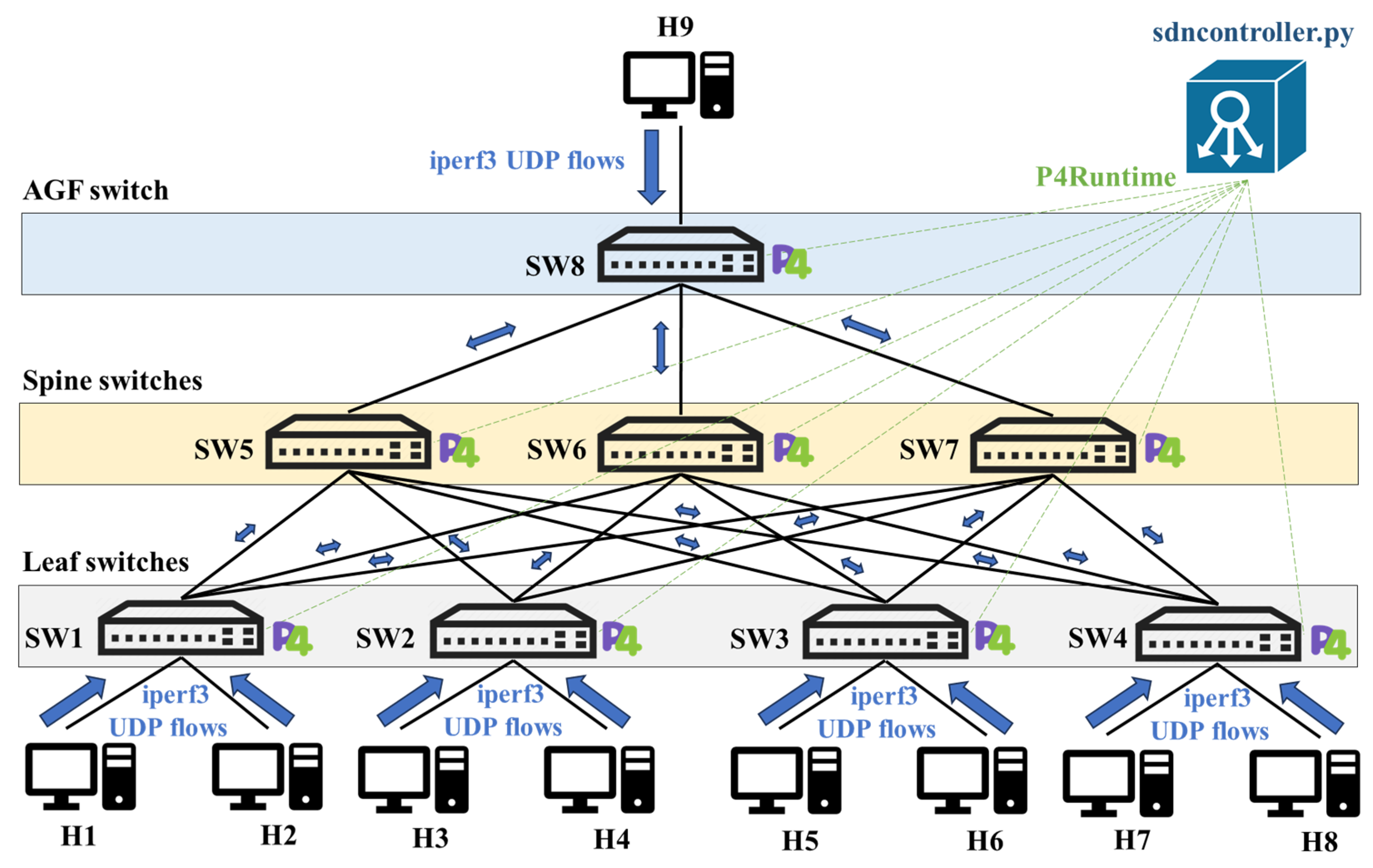

4.2. Architecture

- Leaf switches: these nodes connect edge servers to the network, acting as the access points for data traffic.

- Spine switches: responsible for aggregating traffic between the leaf switches and the AGF, these switches manage the flow of data across networks.

- AGF switch: positioned as the interface for user traffic to and from the AGF, this switch ensures seamless integration of the NF with the edge services.

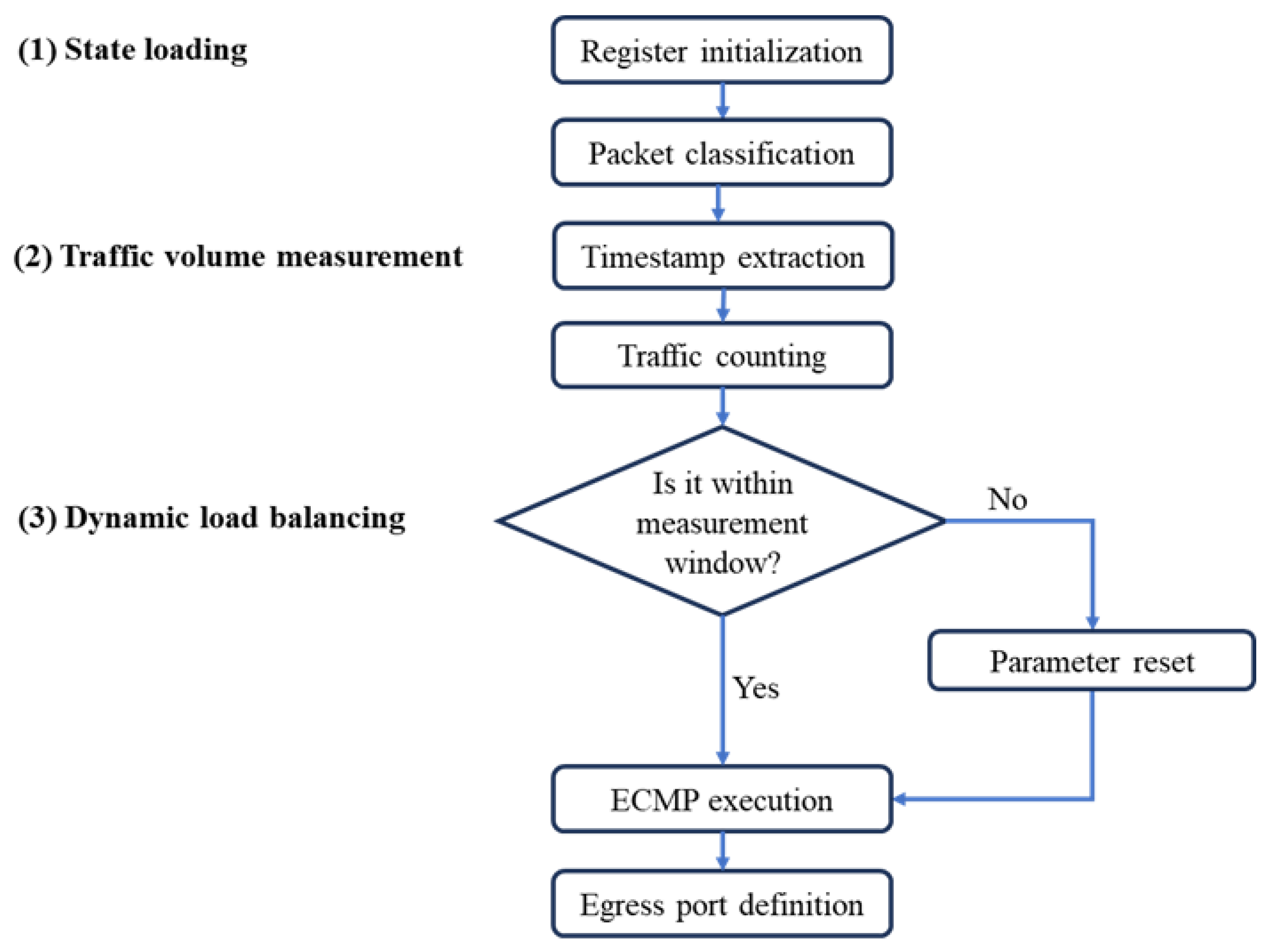

4.3. Methodology

4.3.1. Data Plane Load Balancing

- Parser: inspects Ethernet, ARP, IP, and TCP/UDP headers, extracting relevant fields such as source/destination IP addresses and transport ports.

- Ingress Pipeline: employs P4 registers, some of which are fully managed by the data plane ((a), (b), and (c)), while others are initialized by the controller ((d), (e), and (f)). This arrangement enables fast local decisions without constant control plane intervention. In more detail, we use the following:

- (a) Traffic Volume: tracks the cumulative amount of traffic (in bytes) observed at each switch.

- (b) Packet Timestamp: stores the time reference that marks the start of the current measurement window, facilitating traffic estimation within a specified time window.

- (c) Spine Switches: indicates the number of active spine switches needed to handle current traffic demands.

- (d) Traffic Thresholds: specifies the traffic volume limits that prompt the pipeline to mark more or fewer spine switches as needed.

- (e) Measurement Window: defines the duration of the interval over which traffic is counted before the traffic volume estimate in (a) is reset.

- (f) Switch Type: identifies whether a device is a spine, leaf, or AGF switch.

- Deparser: headers are reassembled, and the packet is transmitted out of the appropriate port.

- State loading: The data plane sets registers (a), (b), and (c), while the SDN controller initializes registers (d), (e), and (f). A classification process identifies flows directed toward spine switches.

- Traffic volume measurement: Each packet increments the traffic counter in (a) by its size, and the timestamp in (b) is used to determine whether the measurement window defined in (e) has expired. Once the window ends, the traffic stored in (a) is reset and the timestamp in (b) is updated.

- Dynamic load balancing: The Spine Switch Calculation action compares the observed traffic in (a) against the traffic thresholds from (d) to determine how many spine switches are required, and then writes that number into (c). It subsequently applies the ECMP action, which relies on a 5-tuple hash over the source and destination IP addresses, the IP protocol, and the TCP/UDP source and destination ports. This hash is bounded by the current number of spine switches in (c), which limits how many distinct paths are available. Moreover, a CRC-based hash is computed, and its outcome selects which port to use. As traffic fluctuates, the pipeline updates (c) on the fly, ensuring flows are automatically rehashed among the valid number of spine switches.
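The three steps above can be illustrated with a minimal Python sketch of the per-packet logic. It is not the authors' P4 code: the names `IngressState`, `spines_needed`, and `process_packet` are hypothetical, `zlib.crc32` stands in for the CRC-based hash, the threshold and window values are arbitrary, and the choice to recompute (c) only when a measurement window closes is an assumption, since the text does not state exactly when the comparison runs.

```python
import zlib
from dataclasses import dataclass


@dataclass
class IngressState:
    # Controller-initialized registers; example values are assumptions.
    thresholds: list        # (d) ascending byte limits per measurement window
    window_us: int          # (e) measurement window length in microseconds
    # Registers managed by the data plane.
    traffic_bytes: int = 0  # (a) bytes seen in the current window
    window_start_us: int = 0  # (b) start time of the current window
    active_spines: int = 1  # (c) number of spine switches in use


def spines_needed(traffic_bytes, thresholds):
    """Return one spine switch plus one more for every threshold exceeded."""
    return 1 + sum(traffic_bytes > t for t in thresholds)


def process_packet(state, now_us, size, five_tuple):
    """Update the registers for one packet and return the chosen spine index."""
    if now_us - state.window_start_us >= state.window_us:
        # Assumption: (c) is derived from the window that just finished,
        # after which (a) is reset and (b) is moved to the current time.
        state.active_spines = spines_needed(state.traffic_bytes, state.thresholds)
        state.traffic_bytes = 0
        state.window_start_us = now_us
    state.traffic_bytes += size
    # ECMP: hash the 5-tuple and bound the result by the active spine count.
    key = "|".join(map(str, five_tuple)).encode()
    return zlib.crc32(key) % state.active_spines


state = IngressState(thresholds=[1000, 2000], window_us=100)
flow = ("10.0.0.1", "10.0.0.2", 6, 1234, 80)
for t, size in [(0, 1500), (50, 1000), (100, 500)]:
    print(t, process_packet(state, t, size, flow), state.active_spines)
```

In this sketch every packet in the first window maps to index 0 because only one spine switch is active; once the 2500 bytes of that window exceed both thresholds, the count in (c) rises to three and the same 5-tuple is rehashed over three candidates.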

4.3.2. Control Plane Forwarding Table Writing

4.3.3. Control Plane Multicast Formation

4.3.4. Control Plane Register Initialization

4.3.5. Control Plane Dynamic Power Management

Algorithm 1. Dynamic Power Management

Inputs:

- switches[]: an array of all switches in the network

- spine_switch_type: an integer index that differentiates the spine switch role from the leaf and AGF types

- switch_interface_states: a dictionary mapping each switch to its current interface state (activated/deactivated)

- spine_start, spine_end: integer indices defining the range of spine switches

Procedure:

    for sw in switches:
        if readRegister(sw, "switch_type") == spine_switch_type:
            continue
        required_spine_switches = readRegister(sw, "spine_switches")
        if required_spine_switches == spine_end:
            for i in range(spine_start, spine_end):
                update_switch_interfaces(switches[i], On, switch_interface_states)
        else:
            for i in range(spine_start, spine_end):
                neededCount = spine_start + required_spine_switches
                if i >= neededCount:
                    update_switch_interfaces(switches[i], Off, switch_interface_states)
                else:
                    update_switch_interfaces(switches[i], On, switch_interface_states)
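Algorithm 1 can be approximated in runnable form as follows. This is a sketch under stated assumptions, not the authors' controller code: `read_register` and `update_switch_interfaces` are hypothetical stand-ins that read from and write to in-memory dictionaries instead of talking to real switches, and the two branches of the algorithm are merged into one comparison, which gives the same result because requesting every spine switch leaves no index at or above the cut-off.

```python
ON, OFF = "activated", "deactivated"


def read_register(sw, name):
    """Hypothetical stand-in for reading a register from a switch."""
    return sw["registers"][name]


def update_switch_interfaces(sw, state, switch_interface_states):
    """Hypothetical stand-in: record the target state instead of toggling ports."""
    switch_interface_states[sw["name"]] = state


def dynamic_power_management(switches, spine_switch_type,
                             switch_interface_states, spine_start, spine_end):
    for sw in switches:
        # Only leaf and AGF switches hold the required spine count.
        if read_register(sw, "switch_type") == spine_switch_type:
            continue
        required = read_register(sw, "spine_switches")
        needed_end = spine_start + required
        for i in range(spine_start, spine_end):
            # Spine switches below the cut-off stay on; the rest are turned off.
            state = ON if i < needed_end else OFF
            update_switch_interfaces(switches[i], state, switch_interface_states)


# Example: three spine switches (type 1) and one leaf that requests two of them.
switches = [{"name": f"spine{i}", "registers": {"switch_type": 1}} for i in range(3)]
switches.append({"name": "leaf0",
                 "registers": {"switch_type": 0, "spine_switches": 2}})
states = {}
dynamic_power_management(switches, 1, states, 0, 3)
print(states)
```

As in the algorithm as written, each non-spine switch is processed in turn, so when several of them report different counts the last one processed determines the final interface states.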

5. Experimental Setup

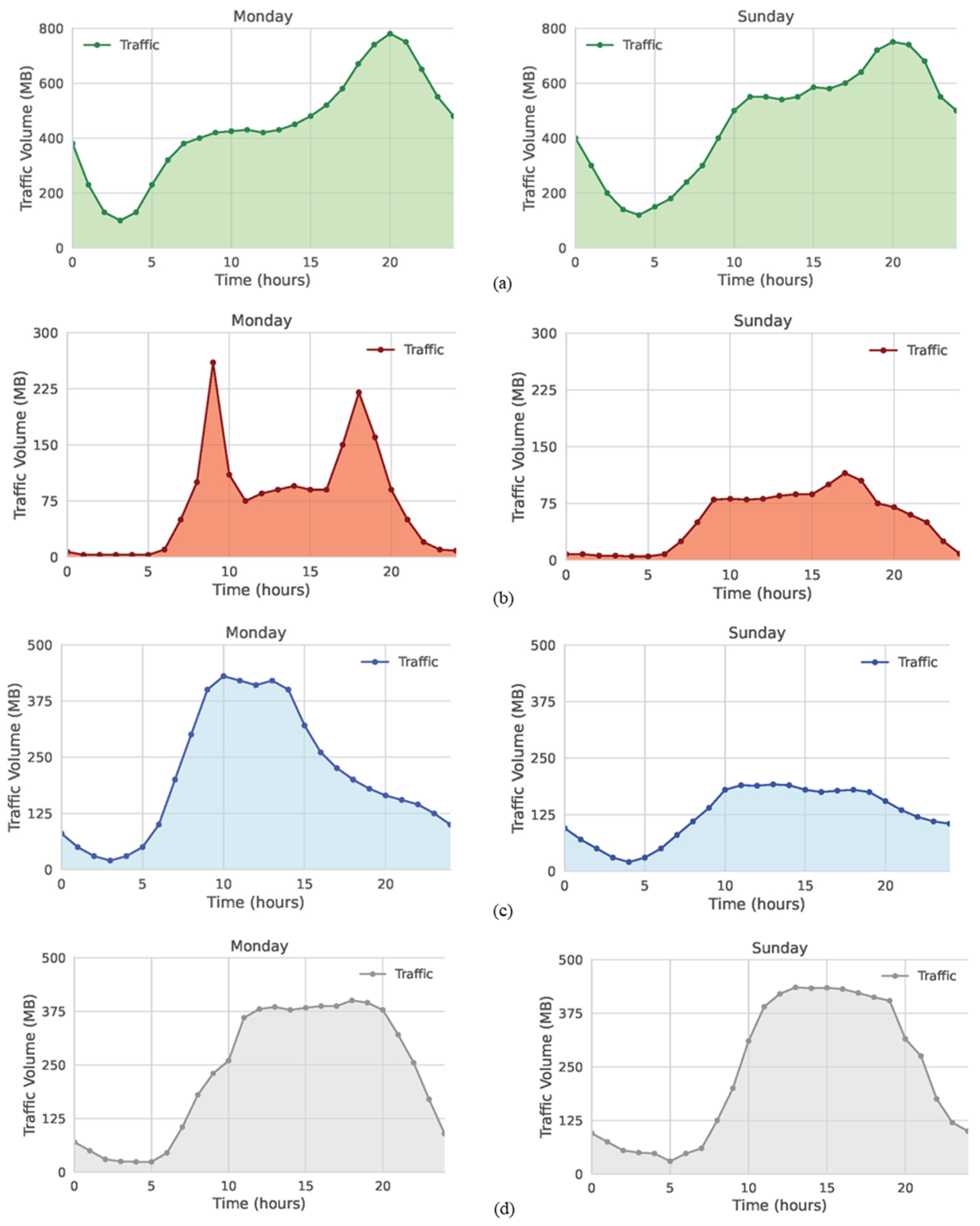

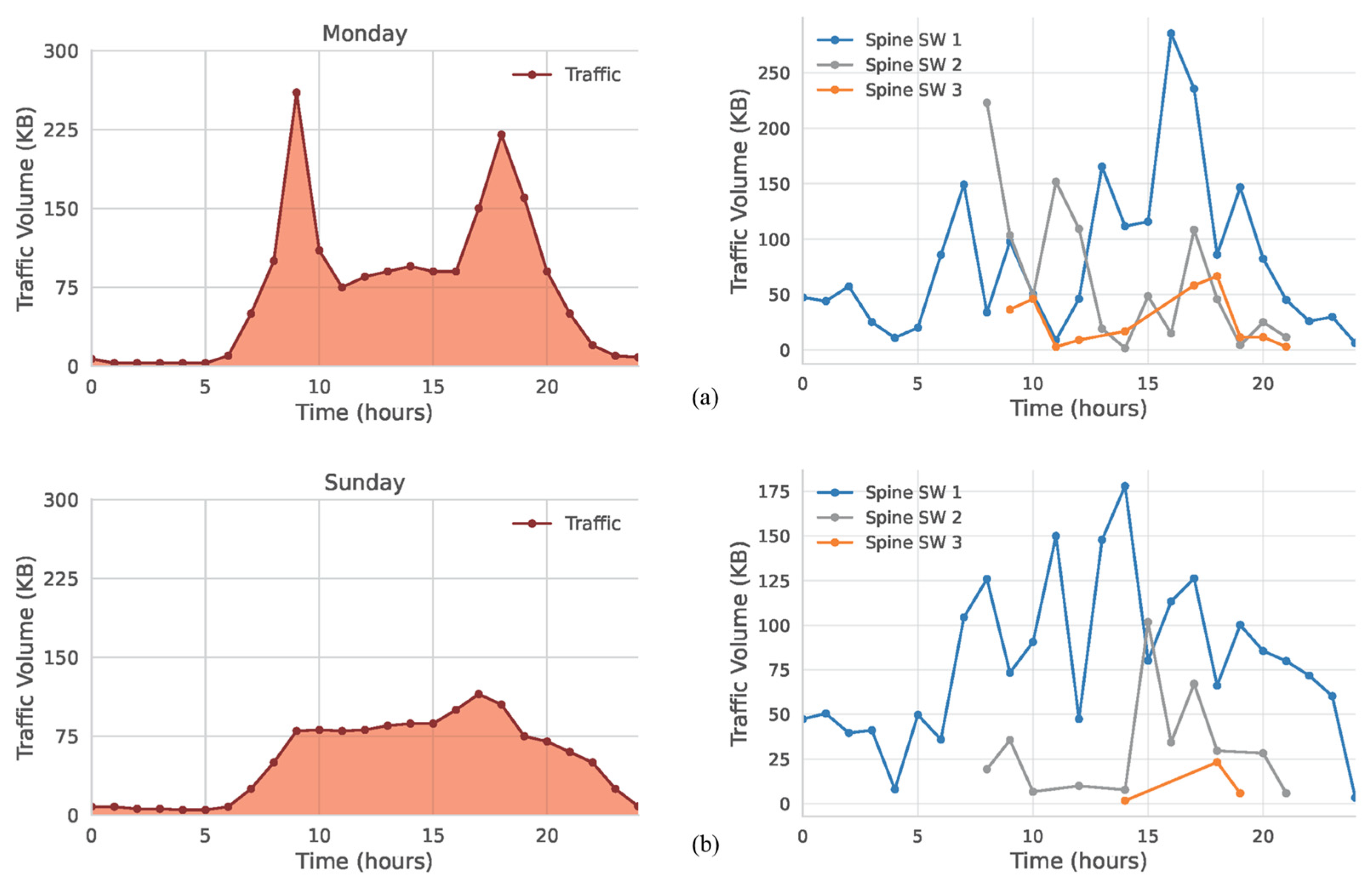

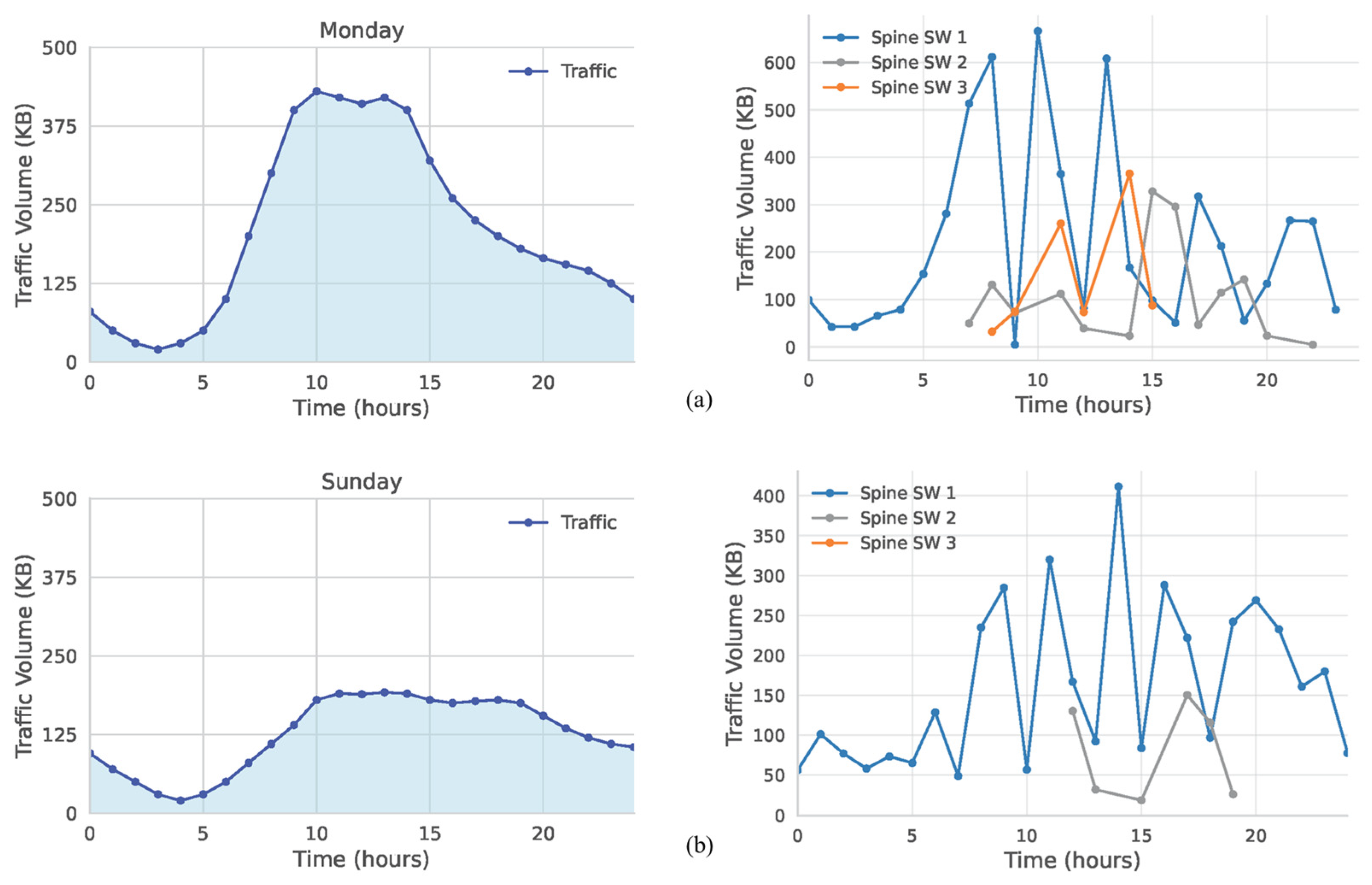

6. Evaluation

7. Projected Savings Analysis

8. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Hauser, F.; Häberle, M.; Merling, D.; Lindner, S.; Gurevich, V.; Zeiger, F.; Frank, R.; Menth, M. A survey on data plane programming with P4: Fundamentals, advances, and applied research. J. Netw. Comput. Appl. 2023, 212, 103561.

- Kfoury, E.F.; Crichigno, J.; Bou-Harb, E. An Exhaustive Survey on P4 Programmable Data Plane Switches: Taxonomy, Applications, Challenges, and Future Trends. IEEE Access 2021, 9, 87094–87155.

- Kianpisheh, S.; Taleb, T. A Survey on In-Network Computing: Programmable Data Plane and Technology Specific Applications. IEEE Commun. Surv. Tutor. 2023, 25, 701–761.

- Liatifis, A.; Sarigiannidis, P.; Argyriou, V.; Lagkas, T. Advancing SDN from OpenFlow to P4: A Survey. ACM Comput. Surv. 2023, 55, 186.

- Brito, J.A.; Moreno, J.I.; Contreras, L.M.; Alvarez-Campana, M.; Blanco Caamaño, M. Programmable Data Plane Applications in 5G and Beyond Architectures: A Systematic Review. Sensors 2023, 23, 6955.

- IMT Vision–Framework and Overall Objectives of the Future Development of IMT for 2020 and Beyond, Document ITU-R M.2083-0. September 2015. Available online: https://www.itu.int/dms_pubrec/itu-r/rec/m/R-REC-M.2083-0-201509-I!!PDF-E.pdf (accessed on 19 February 2025).

- 3GPP. Edge Computing. Available online: https://www.3gpp.org/technologies/edge-computing (accessed on 19 February 2025).

- Lorincz, J.; Capone, A.; Wu, J. Greener, Energy-Efficient and Sustainable Networks: State-of-the-Art and New Trends. Sensors 2019, 19, 4864.

- Mobile World Live. Global ICT Energy Efficiency Summit Paves Way for 5G. Available online: https://www.mobileworldlive.com/huawei/global-ict-energy-efficiency-summit-paves-way-for-5g/ (accessed on 19 February 2025).

- Ericsson. Breaking the Energy Curve: Why Service Providers Should Care About 5G Energy Efficiency. Available online: https://www.ericsson.com/en/blog/2019/2/breaking-the-energy-curve-5g-energy-efficiency (accessed on 19 February 2025).

- GSMA. Energy Efficiency: An Overview. Available online: https://www.gsma.com/solutions-and-impact/technologies/networks/gsma_resources/energy-efficiency-an-overview/ (accessed on 19 February 2025).

- LightReading. Bill Shock: Orange, China Telecom Fret About 5G Energy Costs. Available online: https://www.lightreading.com/5g/bill-shock-orange-china-telecom-fret-about-5g-energy-costs#close-modal (accessed on 19 February 2025).

- Vertiv. MWC19: Vertiv and 451 Research Survey Reveals More Than 90 Percent of Operators Fear Increasing Energy Costs for 5G and Edge. Available online: https://www.vertiv.com/en-emea/about/news-and-insights/news-releases/2019/mwc19-vertiv-and-451-research-survey-reveals-more-than-90-percent-of-operators-fear-increasing-energy-costs-for-5g-and-edge/ (accessed on 19 February 2025).

- Usama, M.; Erol-Kantarci, M. A Survey on Recent Trends and Open Issues in Energy Efficiency of 5G. Sensors 2019, 19, 3126.

- 3GPP. Energy Efficiency in 3GPP Technologies. Available online: https://www.3gpp.org/technologies/ee-article (accessed on 19 February 2025).

- Kreutz, D.; Ramos, F.M.V.; Veríssimo, P.E.; Rothenberg, C.E.; Azodolmolky, S.; Uhlig, S. Software-Defined Networking: A Comprehensive Survey. Proc. IEEE 2015, 103, 14–76.

- McKeown, N.; Anderson, T.; Balakrishnan, H.; Parulkar, G.; Peterson, L.; Rexford, J.; Shenker, S.; Turner, J. OpenFlow: Enabling innovation in campus networks. SIGCOMM Comput. Commun. Rev. 2008, 38, 69–74.

- Bosshart, P.; Daly, D.; Gibb, G.; Izzard, M.; McKeown, N.; Rexford, J.; Schlesinger, C.; Talayco, D.; Vahdat, A.; Varghese, G. P4: Programming protocol-independent packet processors. ACM SIGCOMM Comput. Commun. Rev. 2014, 44, 87–95.

- Sivaraman, A.; Cheung, A.; Budiu, M.; Kim, C.; Alizadeh, M.; Balakrishnan, H.; Varghese, G.; McKeown, N.; Licking, S. Packet transactions: High-level programming for line-rate switches. In Proceedings of the 2016 ACM SIGCOMM Conference, Florianopolis, Brazil, 22–26 August 2016; pp. 15–28.

- Sonchack, J.; Loehr, D.; Rexford, J.; Walker, D. Lucid: A language for control in the data plane. In Proceedings of the 2021 ACM SIGCOMM 2021 Conference (SIGCOMM '21), Virtual, 23–27 August 2021.

- Bosshart, P.; Gibb, G.; Kim, H.; Varghese, G.; McKeown, N.; Izzard, M.; Mujica, F.; Horowitz, M. Forwarding metamorphosis: Fast programmable match-action processing in hardware for SDN. In Proceedings of the ACM SIGCOMM 2013 Conference on SIGCOMM (SIGCOMM '13), Hong Kong, 12–16 August 2013; pp. 99–110.

- The P4 Language Consortium. Available online: https://p4.org/ (accessed on 19 February 2025).

- P4 Language Specification 14. Available online: https://p4.org/p4-spec/p4-14/v1.0.5/tex/p4.pdf (accessed on 19 February 2025).

- P4 Language Specification 16. Available online: https://p4.org/wp-content/uploads/2024/10/P4-16-spec-v1.2.5.pdf (accessed on 19 February 2025).

- P4Runtime API Specification. Available online: https://p4.org/wp-content/uploads/2024/10/P4Runtime-Spec-v1.4.1.pdf (accessed on 19 February 2025).

- 3GPP. 3GPP Specification Set: 5G. Available online: https://www.3gpp.org/specifications-technologies/releases (accessed on 19 February 2025).

- Minimum Requirements Related to Technical Performance for IMT-2020 Radio Interface(s), Document ITU-R M.2410-0. November 2017. Available online: https://www.itu.int/dms_pub/itu-r/opb/rep/R-REP-M.2410-2017-PDF-E.pdf (accessed on 19 February 2025).

- Bouras, C.; Kollia, A.; Papazois, A. SDN NFV in 5G: Advancements and challenges. In Proceedings of the 2017 20th Conference on Innovations in Clouds, Internet and Networks (ICIN), Paris, France, 7–9 March 2017; pp. 107–111.

- Foukas, X.; Patounas, G.; Elmokashfi, A.; Marina, M.K. Network Slicing in 5G: Survey and Challenges. IEEE Commun. Mag. 2017, 55, 94–100.

- ETSI. Multi-Access Edge Computing (MEC). Available online: https://www.etsi.org/technologies/multi-access-edge-computing (accessed on 19 February 2025).

- MEC in 5G Networks. ETSI White Paper No. 28. June 2018. Available online: https://www.etsi.org/images/files/ETSIWhitePapers/etsi_wp28_mec_in_5G_FINAL.pdf (accessed on 19 February 2025).

- System Architecture for the 5G System, Document TS 23.501, V17.5.0, 3GPP. Available online: https://www.etsi.org/deliver/etsi_ts/123500_123599/123501/17.05.00_60/ts_123501v170500p.pdf (accessed on 19 February 2025).

- David, K.; Berndt, H. 6G Vision and Requirements: Is There Any Need for Beyond 5G? IEEE Veh. Technol. Mag. 2018, 13, 72–80.

- Samsung Research. The Next Hyper Connected Experience for All. 2020. Available online: https://cdn.codeground.org/nsr/downloads/researchareas/20201201_6G_Vision_web.pdf (accessed on 19 February 2025).

- Viswanathan, H.; Mogensen, P.E. Communications in the 6G Era. IEEE Access 2020, 8, 57063–57074.

- Ziegler, V.; Viswanathan, H.; Flinck, H.; Hoffmann, M.; Räisänen, V.; Hätönen, K. 6G Architecture to Connect the Worlds. IEEE Access 2020, 8, 173508–173520.

- Hu, J.; He, Y.; Luo, W.; Huang, J.; Wang, J. Enhancing Load Balancing with In-Network Recirculation to Prevent Packet Reordering in Lossless Data Centers. IEEE/ACM Trans. Netw. 2024, 32, 4114–4127.

- Hu, J.; Shen, H.; Liu, X.; Wang, J. RDMA transports in datacenter networks: Survey. IEEE Netw. 2024, 38, 380–387.

- Hu, J.; Rao, S.; Zhu, M.; Huang, J.; Wang, J.; Wang, J. SRCC: Sub-RTT Congestion Control for Lossless Datacenter Networks. IEEE Trans. Ind. Inform. 2024, 21, 2799–2808.

- Katta, N.; Hira, M.; Kim, C.; Sivaraman, A.; Rexford, J. Hula: Scalable load balancing using programmable data planes. In Proceedings of the Symposium on SDN Research, Santa Clara, CA, USA, 14–15 March 2016; pp. 1–12.

- Hsu, K.F.; Tammana, P.; Beckett, R.; Chen, A.; Rexford, J.; Walker, D. Adaptive weighted traffic splitting in programmable data planes. In Proceedings of the SOSR 2020—Proceedings of the Symposium on SDN Research, San Jose, CA, USA, 3 March 2020; pp. 103–109.

- Sapio, A.; Abdelaziz, I.; Aldilaijan, A.; Canini, M.; Kalnis, P. In-Network Computation Is A Dumb Idea Whose Time Has Come. In Proceedings of the 16th ACM Workshop on Hot Topics in Networks, Palo Alto, CA, USA, 30 November–1 December 2017; pp. 150–156.

- Hu, N.; Tian, Z.; Du, X.; Guizani, M. An Energy-Efficient In-Network Computing Paradigm for 6G. IEEE Trans. Green Commun. Netw. 2021, 5, 1722–1733.

- Abdullaziz, O.I.; Capitani, M.; Casetti, C.E.; Chiasserini, C.F.; Chundrigar, S.B.; Landi, G.; Talat, S.T. Energy monitoring and management in 5G integrated fronthaul and backhaul. In Proceedings of the 2017 European Conference on Networks and Communications (EuCNC), Oulu, Finland, 12–15 June 2017; pp. 1–6.

- Fernández-Fernández, A.; Cervelló-Pastor, C.; Ochoa-Aday, L. Energy Efficiency and Network Performance: A Reality Check in SDN-Based 5G Systems. Energies 2017, 10, 2132.

- Heller, B.; Seetharaman, S.; Mahadevan, P.; Yiakoumis, Y.; Sharma, P.; Banerjee, S.; McKeown, N. ElasticTree: Saving energy in data center networks. In Proceedings of the NSDI’10, San Jose, CA, USA, 28–30 April 2010; pp. 249–264.

- Klapez, M.; Grazia, C.A.; Casoni, M. Energy Savings of Sleep Modes Enabled by 5G Software-Defined Heterogeneous Networks. In Proceedings of the 2018 IEEE 4th International Forum on Research and Technology for Society and Industry (RTSI), Palermo, Italy, 10–13 September 2018; pp. 1–6.

- Yazdinejad, A.; Parizi, R.M.; Dehghantanha, A.; Zhang, Q.; Choo, K.-K.R. An Energy-efficient SDN Controller Architecture for IoT Networks with Blockchain-based Security. IEEE Trans. Serv. Comput. 2020, 13, 625–638.

- Agrawal, A.; Kim, C. Intel Tofino2—A 12.9Tbps P4-Programmable Ethernet Switch. In Proceedings of the 2020 IEEE Hot Chips 32 Symposium (HCS), Palo Alto, CA, USA, 16–18 August 2020; pp. 1–32.

- Netdev 0x14. Creating an End-to-End Programming Model for Packet Forwarding. 2020. Available online: https://netdevconf.info/0x14/session.html?keynote-mckeown (accessed on 19 February 2025).

- Xing, J.; Hsu, K.F.; Kadosh, M.; Lo, A.; Piasetzky, Y.; Krishnamurthy, A.; Chen, A. Runtime programmable switches. In Proceedings of the 19th USENIX Symposium on Networked Systems Design and Implementation (NSDI 22), Renton, WA, USA, 4–6 April 2022; pp. 651–665.

- Moon, Y.; Han, Y.; Kim, S.; Sai, M.S.; Deshmukh, A.; Kim, D. Data Plane Acceleration Using Heterogeneous Programmable Network Devices Towards 6G. In Proceedings of the ICC 2024-IEEE International Conference on Communications, Denver, CO, USA, 9–13 June 2024; pp. 421–426.

- Liu, M.; Peter, S.; Krishnamurthy, A.; Phothilimthana, P.M. E3: Energy-Efficient Microservices on SmartNIC-Accelerated Servers. In Proceedings of the 2019 USENIX Annual Technical Conference (USENIX ATC 19), Renton, WA, USA, 10–12 July 2019; pp. 363–378.

- Röllin, L.; Jacob, R.; Vanbever, L. A Sleep Study for ISP Networks: Evaluating Link Sleeping on Real World Data. In Proceedings of the 3rd Workshop on Sustainable Computer Systems Design and Implementation (HotCarbon 2024), Santa Clara, CA, USA, 9 July 2024.

- Magnouche, Y.; Leguay, J.; Zeng, F. Safe Routing in Energy-aware IP networks. In Proceedings of the 19th International Conference on the Design of Reliable Communication Networks (DRCN), Vilanova, Spain, 17–20 April 2023; IEEE: New York, NY, USA; pp. 1–8.

- Pan, T.; Peng, X.; Shi, Q.; Bian, Z.; Lin, X.; Song, E.; Huang, T. GreenTE.ai: Power-Aware Traffic Engineering via Deep Reinforcement Learning. In Proceedings of the 2021 IEEE/ACM 29th International Symposium on Quality of Service (IWQOS), Tokyo, Japan, 25–28 June 2021; pp. 1–6.

- Grigoryan, G.; Liu, Y.; Kwon, M. iLoad: In-network load balancing with programmable data plane. In Proceedings of the 15th International Conference on emerging Networking EXperiments and Technologies, New York, NY, USA, 9–12 December 2019; pp. 17–19.

- Alonso, M.; Coll, S.; Martínez, J.; Santonja, V.; López, P. Power consumption management in fat-tree interconnection networks. Parallel Comput. 2015, 48, 59–80.

- Jacob, R.; Lim, J.; Vanbever, L. Does rate adaptation at daily timescales make sense? In Proceedings of the 2nd Workshop on Sustainable Computer Systems, Boston, MA, USA, 9 July 2023; pp. 1–7.

- Grigoryan, G.; Kwon, M. Towards Greener Data Centers via Programmable Data Plane. In Proceedings of the 2023 IEEE 24th International Conference on High Performance Switching and Routing (HPSR), Albuquerque, NM, USA, 5–7 June 2023; pp. 62–67.

- Brito, J.A.; Moreno, J.I.; Contreras, L.M.; Caamaño, M.B. Architecture and Methodology for Green MEC Services Using Programmable Data Planes in 5G and Beyond Networks. In Proceedings of the 2024 IFIP Networking Conference (IFIP Networking), Thessaloniki, Greece, 3–6 June 2024; pp. 738–743.

- Fröhlich, P.; Gelenbe, E.; Fiołka, J.; Chęciński, J.; Nowak, M.; Filus, Z. Smart SDN Management of Fog Services to Optimize QoS and Energy. Sensors 2021, 21, 3105.

- Han, H.; Terzenidis, N.; Syrivelis, D.; Beldachi, A.F.; Kanellos, G.T.; Demir, Y.; Hardavellas, N. Energy-Proportional Data Center Network Architecture Through OS, Switch and Laser Co-design. arXiv 2021, arXiv:2112.02083.

- BBF. TR-456: AGF Functional Requirements. Available online: https://www.broadband-forum.org/pdfs/tr-456-2-0-1.pdf (accessed on 19 February 2025).

- BBF. TR-470: 5G Wireless Wireline Convergence Architecture. Available online: https://www.broadband-forum.org/pdfs/tr-470-2-0-0.pdf (accessed on 19 February 2025).

- Peterson, L.; Cascone, C.; O’Connor, B.; Vachuska, T.; Davie, B. “Software-Defined Networks: A Systems Approach,” Systems Approach LLC. Chapter 2: Use Cases. 2022. Available online: https://sdn.systemsapproach.org/uses.html (accessed on 19 February 2025).

- Mininet. Available online: https://mininet.org/ (accessed on 19 February 2025).

- BMv2 Software Switch. Available online: https://github.com/p4lang/behavioral-model (accessed on 19 February 2025).

- iPerf—The Ultimate Speed Test Tool for TCP, UDP and SCTP. Available online: https://iperf.fr/ (accessed on 19 February 2025).

- Xu, F.; Li, Y.; Wang, H.; Zhang, P.; Jin, D. Understanding mobile traffic patterns of large scale cellular towers in urban environment. IEEE/ACM Trans. Netw. 2017, 25, 1147–1161.

- Edge-core Networks. EdgeCore Wedge 100BF-32Q Switch. Available online: https://www.edge-core.com/wp-content/uploads/2023/08/DCS801-Wedge100BF-32QS-DS-R05.pdf (accessed on 19 February 2025).

- Intel. Intel® Tofino™. Available online: https://www.intel.com/content/www/us/en/products/details/network-io/intelligent-fabric-processors/tofino.html (accessed on 19 February 2025).

- ETH Zurich Library. How Much Does It Burn? Profiling the Energy Model of a Tofino Switch. Available online: https://www.research-collection.ethz.ch/bitstream/handle/20.500.11850/618002/jackie_NDA.pdf?sequence=1&isAllowed=y (accessed on 19 February 2025).

- Franco, D.; Higuero, M.; Zaballa, E.O.; Unzilla, J.; Jacob, E. Quantitative measurement of link failure reaction time for devices with P4-programmable data planes. Telecommun. Syst. 2024, 85, 277–288.

- The Extreme Networks Federal Data Center Design Series. Volume 3: Evaluation of Reliability and Availability of Network Services in the Data Center Infrastructure. Available online: https://www.extremenetworks.com/resources/solution-brief/the-extreme-networks-federal-data-center-design-series-volume-3 (accessed on 19 February 2025).

| KPI | Key Use Case | Values |
|---|---|---|
| Peak Data Rate | eMBB | DL: 20 Gbps, UL: 10 Gbps |
| Peak Spectral Efficiency | eMBB | DL: 30 bps/Hz, UL: 15 bps/Hz |
| User-Experienced Data Rate | eMBB | DL: 100 Mbps, UL: 50 Mbps (Dense Urban) |
| 5th Percentile User Spectral Efficiency | eMBB | DL: 0.3 bps/Hz, UL: 0.21 bps/Hz (Indoor Hotspot); DL: 0.225 bps/Hz, UL: 0.15 bps/Hz (Dense Urban); DL: 0.12 bps/Hz, UL: 0.045 bps/Hz (Rural) |
| Average Spectral Efficiency | eMBB | DL: 9 bps/Hz/TRxP, UL: 6.75 bps/Hz/TRxP (Indoor Hotspot); DL: 7.8 bps/Hz/TRxP, UL: 5.4 bps/Hz/TRxP (Dense Urban); DL: 3.3 bps/Hz/TRxP, UL: 1.6 bps/Hz/TRxP (Rural) |
| Area Traffic Capacity | eMBB | DL: 10 Mbps/m² (Indoor Hotspot) |
| User Plane Latency | eMBB, uRLLC | 4 ms for eMBB and 1 ms for uRLLC |
| Control Plane Latency | eMBB, uRLLC | 20 ms for eMBB and uRLLC |
| Connection Density | mMTC | 1,000,000 devices/km² |
| Energy Efficiency | eMBB | Capability to support a high sleep ratio and long sleep duration to allow low energy consumption when there are no data |
| Reliability | uRLLC | 1 − 10⁻⁵ success probability of transmitting a layer 2 protocol data unit of 32 bytes within 1 ms in channel quality of coverage edge |
| Mobility | eMBB | Up to 500 km/h |
| Mobility Interruption Time | eMBB, uRLLC | 0 ms |
| Bandwidth | eMBB | At least 100 MHz; up to 1 GHz for operation in higher-frequency bands (e.g., above 6 GHz) |

| Area | Monday: Spine SW 1 | Monday: Spine SW 2 | Monday: Spine SW 3 | Sunday: Spine SW 1 | Sunday: Spine SW 2 | Sunday: Spine SW 3 |
|---|---|---|---|---|---|---|
| Residential | 100% | 64% | 28% | 100% | 57% | 39% |
| Public transportation | 100% | 56% | 40% | 100% | 44% | 12% |
| Business | 100% | 54% | 25% | 100% | 24% | 0% |
| Recreational | 100% | 56% | 24% | 100% | 40% | 32% |

| Area | Monday: One Spine SW | Monday: Two Spine SWs | Monday: Three Spine SWs | Sunday: One Spine SW | Sunday: Two Spine SWs | Sunday: Three Spine SWs |
|---|---|---|---|---|---|---|
| Residential | 36% | 36% | 28% | 39% | 26% | 35% |
| Public transportation | 44% | 16% | 40% | 52% | 40% | 8% |
| Business | 46% | 29% | 25% | 76% | 24% | 0% |
| Recreational | 44% | 32% | 24% | 56% | 16% | 28% |

| Area | Monday | Sunday |
|---|---|---|
| Residential | 3.77 kWh | 3.63 kWh |
| Public transportation | 3.63 kWh | 5.03 kWh |
| Business | 4.23 kWh | 6.15 kWh |
| Recreational | 4.19 kWh | 4.47 kWh |

| Area | Monthly | Yearly |
|---|---|---|
| Residential | 115.47 kWh | 1.36 MWh |
| Public transportation | 126.53 kWh | 1.47 MWh |
| Business | 150.33 kWh | 1.74 MWh |
| Recreational | 132.69 kWh | 1.56 MWh |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Brito, J.A.; Moreno, J.I.; Contreras, L.M. Energy-Aware Edge Infrastructure Traffic Management Using Programmable Data Planes in 5G and Beyond. Sensors 2025, 25, 2375. https://doi.org/10.3390/s25082375