Abstract

With global climate change and the deterioration of the ecological environment, the safety of hydraulic engineering faces severe challenges, among which soil-dwelling termite damage has become an issue that cannot be ignored. Reservoirs and embankments in China, primarily composed of earth and rocks, are often affected by soil-dwelling termites, such as Odontotermes formosanus and Macrotermes barneyi. Identifying soil-dwelling termite damage is crucial for implementing monitoring, early warning, and control strategies. This study developed an improved YOLOv8 model, named MCD-YOLOv8, for identifying traces of soil-dwelling termite activity, based on the Monte Carlo random sampling algorithm and a lightweight module. The Monte Carlo attention (MCA) module was introduced in the backbone to generate attention maps through random sampling pooling operations, addressing cross-scale issues and improving the recognition accuracy of small targets. A lightweight module, known as dimension-aware selective integration (DASI), was added in the neck to reduce computation time and memory consumption, enhancing detection accuracy and speed. The model was verified using a dataset of 2096 images from the termite damage survey in hydraulic engineering within Hubei Province in 2024, along with images captured by drone. The results showed that the improved YOLOv8 model outperformed four traditional or enhanced models in terms of precision and mean average precision for detecting soil-dwelling termite damage, while also exhibiting fewer parameters, reduced redundancy in detection boxes, and improved accuracy in detecting small targets. Specifically, the MCD-YOLOv8 model achieved increases in precision and mean average precision of 6.4 and 2.4 percentage points, respectively, compared to the YOLOv8 model, while simultaneously reducing the number of parameters by 105,320. The developed model is suitable for the intelligent identification of termite damage in complex environments, thereby enhancing the intelligent monitoring of termite activity and providing strong technical support for the development of termite control technologies.

1. Introduction

Termites, as ancient social insects distributed nearly globally, play an essential role in the Earth’s ecosystem [1]. However, their small size and extensive populations contribute to covert foraging behaviors that result in considerable economic damage, categorizing them as significant pests [2]. The intrusion of human activities into termite habitats, coupled with the effects of climate change, has intensified their impact, particularly in the Yangtze and Pearl River basins in China, where the damage caused by soil-dwelling termites is especially pronounced [3]. In China, the primary areas affected by termite damage include hydraulic engineering [4,5,6], buildings [7,8], and landscaping [9], with annual economic losses estimated at between 2 and 2.5 billion yuan [10]. The characteristics of termite underground activity complicate detection and control efforts. The damage inflicted by termites frequently demonstrates strong concealment and rapid spread [11,12]. Consequently, termite control in hydraulic engineering has emerged as a central focus of preventive strategies in China. In 2023, the Ministry of Water Resources in China underscored principles, such as “technological innovation” and “green and safety”. The Ministry advocated for innovation-driven methodologies, enhanced scientific research on termite control, and the accelerated adoption of cutting-edge technologies and equipment, and encouraged the use of environmentally sustainable practices in termite control.

Traditional methods for identifying termite damage rely on manual field surveys and visual observation to find the indicators of termite activity (such as mud tunnels, swarming holes, and plants that are eaten by moths), which are labor-intensive and inefficient [13]. Recently, with advancements in computer vision and deep learning, intelligent methods for identifying termite damage have emerged. Lopes et al. introduced convolutional neural networks (CNNs) to assess the severity of termite damage, employing data augmentation techniques to enhance the original dataset of 181 samples and evaluating the performance of four distinct models [14]. Their findings indicated that the AlexNet model exhibited commendable performance on this dataset, although the results may be influenced by the dataset’s limited size. In a separate study, Huang et al. developed a deep neural network model to intelligently identify termite species, achieving over 90% accuracy in identifying worker and soldier termites using 18,000 images of different species [15]. Additional researchers have investigated the potential of utilizing termite sounds [16], volatile pheromones [17], carbon dioxide concentrations [18], and infrared thermography [19] for identification purposes, but these methods are mostly in the experimental stage with limited practical applications. Overall, the current state of intelligent technologies for termite damage identification is nascent and presents several challenges. For instance, these technologies are infrequently employed in assessing termite risks in dam structures, and many are constrained by reliance on single-source data, inadequate data volume, and diverse identification targets [13]. This results in low integration and challenges in adapting to varying geographic and lighting conditions.

Additionally, soil-dwelling termite activity signs mainly include mud tunnels, mud coverings, and swarming holes constructed by termites using soil, showcasing unique morphological characteristics [13]. Indicators of soil-dwelling termite damage frequently manifest within intricate forested environments and are typically of a centimeter scale, categorizing them as small objects in the realm of image recognition. Currently, the accuracy of the YOLOv8 algorithm in detecting small objects requires enhancement, prompting various researchers to propose strategies for improvement [20,21,22,23]. For example, Dai proposed a scale-variant attention network (SvANet) for the precise segmentation of small objects in medical images [24]. Xu et al. developed a deep learning framework known as HCF-Net, which incorporates multiple practical modules to significantly advance infrared small object detection, effectively addressing challenges associated with the diminutive size of targets and the complexity of infrared image backgrounds [25]. To improve the detection of the indicators of termite damage and enhance accuracy for small objects, it is necessary to develop an image recognition model specifically designed to accommodate the unique characteristics of soil-dwelling termite activity signs.

Current methods often lack the necessary adaptability and precision for such niche scenarios, underscoring the need for a more tailored approach. Our study fills this gap by introducing MCD-YOLOv8, a model specifically designed to address the unique challenges of small target detection in soil-dwelling termite activity signs. The main innovations of this study are as follows:

- (1) The development of a termite damage feature dataset tailored for multi-target detection, which encompasses four distinct labels: deadwood, detector, hole, and mud;
- (2) The MCA module is introduced in the backbone part, improving the accuracy of small object detection. This module extracts local and contextual information through random sampling, thereby increasing the recognizability of small objects;
- (3) The DASI module is added in the neck part to reduce computation time and memory consumption, enhancing detection accuracy and speed, enabling effective multi-scale feature fusion.

This research contributes to the timely detection and localization of termite-related damage, thereby mitigating potential damage losses, and holds considerable theoretical and practical significance for improving the level of termite control technologies.

2. Related Work

2.1. Target Detection Methods

In recent years, artificial intelligence technology has advanced rapidly, with algorithms based on deep learning theory achieving significant breakthroughs in the field of target detection. These algorithms are mainly categorized into two types [26]: two-stage algorithms and one-stage algorithms. Two-stage algorithms, like R-CNN [27] and FPN [28], generate candidate regions before conducting classification and bounding box regression. Although this method achieves high precision, it is computationally complex and has limited real-time performance [26]. On the other hand, one-stage algorithms, like SSD [29] and the YOLO series [30], bypass candidate region generation, allowing for quick classification and localization, making them suitable for complex scenarios requiring instant response. Despite the accuracy advantage of two-stage algorithms, one-stage algorithms, especially the YOLO series, have demonstrated exceptional detection accuracy and efficient processing capabilities across numerous applications, which makes them well suited to the real-time detection of soil-dwelling termite activity traces in complex environments.

Serving as a paradigm of single-stage algorithms, YOLO excels in real-time applications with its outstanding detection speed and accuracy and is widely used in fields such as medicine, transportation, and agriculture [31]. Gu et al. innovatively introduced the YOLOv8-Skin, incorporating multi-scale features and edge enhancement, significantly improving the accuracy of melanoma segmentation [32]. Zhang et al. developed the RBT-YOLO, optimizing vehicle detection precision through multi-scale fusion and triplet attention, thus markedly enhancing performance while reducing computational demands [33]. Wang et al. optimized YOLOv8 using an adaptive color perception module, enhancing feature extraction capabilities in complex natural environments [13]. However, despite the YOLO model demonstrating exceptional performance across various fields, challenges remain in adapting to various scenarios and accurately locating small targets.

2.2. Small Object Detection

Small object detection, as a subfield of object detection, faces significant technical challenges. In recent years, YOLOv8 has made notable progress in this field. To address the issues of low accuracy and susceptibility to environmental factors in small object detection, Wang et al. employed WIoU regression loss and gradient allocation to enhance the model’s feature extraction capabilities [34]. Jiang et al. proposed the AEM-YOLOv8s algorithm by combining the strengths of alterable kernel convolution and efficient multi-scale attention within the C2f module and incorporating a dedicated small object detection layer and BiFPN structure, which significantly improves the performance of small object detection [35]. Li et al. tackled the difficulties in feature extraction in complex scenes and the issue of small object features being easily obscured by noise by proposing the self position module (SPM) attention mechanism, which enhanced detection accuracy [36]. Currently, research mainly focuses on improving the perception of small objects [37], using methods such as multi-scale feature fusion, feature pyramid networks, and attention mechanisms. These studies provide effective technical support for detecting traces of soil-dwelling termite activity, but further improving the accuracy of small object detection in complex backgrounds and varying lighting conditions remains a key challenge.

Despite these advancements, current methods still struggle with detecting small targets, such as soil-dwelling termite activity, particularly under complex backgrounds and varying lighting conditions. The existing algorithms often fail to balance accuracy and computational efficiency in such challenging scenarios, highlighting the need for more robust and specialized models. To address these challenges, we propose an improved YOLOv8 model (MCD-YOLOv8) that leverages multi-scale feature fusion and an adaptive attention mechanism. Unlike previous models, our approach specifically optimizes for small target detection in complex environments by enhancing feature extraction capabilities and improving robustness against background noise and lighting variations.

3. Data Collection and Processing

3.1. Data Sources

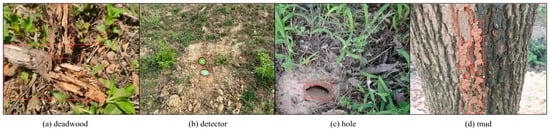

The dataset utilized in this study predominantly comprises images from termite damage surveys conducted in hydraulic engineering projects within Hubei Province in 2023, as well as images captured by a DJI Mavic 3E drone (Shenzhen Dajiang Innovation Technology Co., Ltd., Shenzhen, China). It encompasses the following four distinct categories: deadwood, detector, hole, and mud, as illustrated in Figure 1. The survey images were acquired by termite control technical personnel in and around reservoirs and embankments, employing various angles and lighting conditions, which resulted in complex scenes characterized by small targets and multiple obstructions. The drone images were captured along the dam axis directed towards the downstream slope, with parameters such as flight altitude and camera tilt angle set in accordance with the height and slope of the dam. A total of 1093 raw images were compiled, including 196 drone images. All images within the dataset were meticulously annotated using Labelme v5.6.0 software and subsequently divided into training, validation, and testing sets in a ratio of 7:2:1. In contrast to indoor experimental data, this research leveraged thousands of preprocessed field images depicting soil-dwelling termite damage, thereby enhancing both the quantity and quality of samples, as well as improving the representativeness and diversity of the training set.

Figure 1. The image dataset of soil-dwelling termite damage. The red square frame represents reference examples for each category.
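The 7:2:1 division into training, validation, and testing sets can be illustrated with a minimal sketch. The file names, the fixed seed, and the helper `split_dataset` are hypothetical and are not taken from the study; the sketch only shows one reproducible way to realize such a ratio.

```python
import random


def split_dataset(items, ratios=(7, 2, 1), seed=0):
    """Shuffle items reproducibly and split them by integer ratios."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    total = sum(ratios)
    n = len(shuffled)
    n_train = n * ratios[0] // total
    n_val = n * ratios[1] // total
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Hypothetical image names, used only to exercise the function.
names = [f"img_{i:04d}.jpg" for i in range(1000)]
train, val, test = split_dataset(names)
print(len(train), len(val), len(test))
```

When augmented copies of an image exist, it is generally safer to split by original image before augmenting, so that near-duplicates do not appear in both the training and testing sets.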

3.2. Data Augmentation

To reduce the risk of model overfitting and enhance the diversity and generalization ability of the training data, image augmentation techniques, such as mirroring, rotation, color transformation, and brightness adjustment, were applied to the original data [38,39,40]. These techniques replicate a range of lighting conditions and perspectives typically encountered in natural environments, thereby expanding the model’s applicability [41,42,43,44,45]. After data augmentation, a comprehensive dataset comprising 2096 images was generated. This enhancement improves the model’s robustness, equipping it to handle diverse and intricate natural environments. By introducing more environmental factors during model training, this approach not only increases the informational content of the training set but also enhances the model’s adaptability and stability in practical applications.
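The augmentation operations named above can be sketched on a tiny grayscale image stored as nested lists. This is an illustrative sketch only: the function names are hypothetical, the study's actual augmentation tooling and parameters are not stated, and the box helper assumes normalized (x_center, y_center, width, height) labels.

```python
def mirror(image):
    """Flip the image left to right."""
    return [row[::-1] for row in image]


def rotate90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]


def adjust_brightness(image, factor):
    """Scale pixel values and clip them to the 0..255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def mirror_box(box):
    """Mirror a normalized (x_center, y_center, w, h) box left to right."""
    x, y, w, h = box
    return (1.0 - x, y, w, h)


image = [[0, 100],
         [200, 255]]
print(mirror(image))
print(rotate90(image))
print(adjust_brightness(image, 1.5))
print(mirror_box((0.25, 0.5, 0.1, 0.2)))
```

For a detection dataset, every geometric transform has to be applied to the annotations as well as the pixels, which is why the box helper is included alongside the image operations.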

4. Improved YOLOv8 Model

4.1. YOLOv8 Model

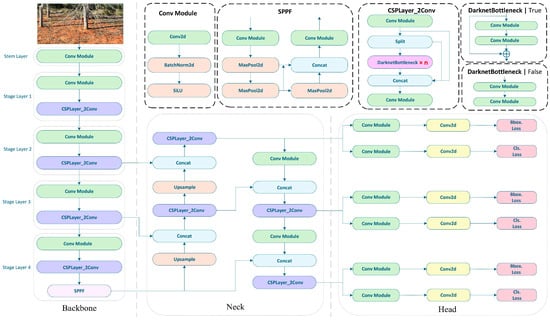

As shown in Figure 2, the architecture of the YOLOv8 network is an evolution of the YOLOv5 framework, comprising three fundamental components: the backbone for feature extraction, the neck for feature fusion, and the head for final recognition and detection [46,47]. In the backbone, the C3 module from YOLOv5 is replaced with the C2f module [48] to achieve further lightweighting of the model, while the spatial pyramid pooling-fast (SPPF) module used in YOLOv5 is retained. In contrast to YOLOv5, the neck of YOLOv8 eliminates the convolutional operations during the upsampling phase and likewise replaces the C3 module with the more efficient C2f module, thereby enhancing the model’s adaptability to objects of varying sizes and shapes. The detection head adopts a decoupled structure, separating the classification and bounding box regression branches, which results in a reduction in both parameter count and computational complexity, while simultaneously improving generalization and stability. YOLOv8 computes the bounding box loss via the Conv2d layer and departs from traditional anchor-based prediction methodologies in favor of an anchor-free approach, which directly predicts the center coordinates and aspect ratios of objects. This shift minimizes the reliance on anchor boxes and enhances both detection speed and accuracy. Collectively, these advancements enable YOLOv8 to preserve the strengths of YOLOv5, while implementing nuanced adjustments and optimizations, thereby improving performance across a variety of scenarios.

Figure 2. YOLOv8 model architecture.

4.2. MCA Module

The Monte Carlo attention (MCA) module is primarily designed to capture information at different scales, thereby improving the recognition of small objects [29]. Conventional techniques, such as squeeze-and-excitation (SE), produce a 1 × 1 output tensor via global average pooling to adjust inter-channel dependencies; however, they exhibit limitations in leveraging cross-scale correlations. To overcome these challenges, the MCA module creates attention maps of sizes 3 × 3, 2 × 2, and 1 × 1 through randomly sampled pooling operations, which enhances long-range semantic dependencies and effectively mitigates cross-scale issues. The implementation of the Monte Carlo sampling method facilitates the random selection of association probabilities, enabling the extraction of both local information (such as edges and colors) and contextual information (including overall image texture, spatial correlation, and color distribution). This approach significantly enhances the ability to discern the shape and precise location of small objects within images. For input tensor $x$, the output attention map $A_m(x)$ of the MCA module is calculated as follows:

$$A_m(x) = \sum_{i=1}^{n} P_1(x, i)\, f(x, i)$$

where $i$ represents the output size of the attention map, $f$ is the average pooling function, and $n$ is the number of randomly generated attention maps. The association probability $P_1(x, i)$ satisfies two conditions: (1) $\sum_{i=1}^{n} P_1(x, i) = 1$; (2) $\prod_{i=1}^{n} P_1(x, i) = 0$.
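The random-sampling pooling idea can be sketched for a single feature channel. The sketch assumes that the association probabilities are one-hot (exactly one pooling size is selected per call, so the probabilities sum to one and their product is zero) and that average pooling is adaptive to output sizes 1, 2, and 3. The function names are hypothetical, and the layers that turn the pooled tensor into channel weights are omitted.

```python
import random


def adaptive_avg_pool(channel, out_size):
    """Average-pool a 2D list (H x W) down to out_size x out_size."""
    h, w = len(channel), len(channel[0])
    pooled = []
    for i in range(out_size):
        r0 = (i * h) // out_size                       # floor of window start
        r1 = ((i + 1) * h + out_size - 1) // out_size  # ceil of window end
        row = []
        for j in range(out_size):
            c0 = (j * w) // out_size
            c1 = ((j + 1) * w + out_size - 1) // out_size
            cells = [channel[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        pooled.append(row)
    return pooled


def monte_carlo_attention(channel, sizes=(1, 2, 3), rng=random):
    """Sample one pooling size at random and return the pooled map.

    probs is a one-hot vector over sizes: it sums to 1 and its product is 0.
    """
    probs = [0.0] * len(sizes)
    probs[rng.randrange(len(sizes))] = 1.0
    chosen = sizes[probs.index(1.0)]
    return adaptive_avg_pool(channel, chosen), probs


grid = [[float(4 * r + c) for c in range(4)] for r in range(4)]
pooled, probs = monte_carlo_attention(grid, rng=random.Random(0))
print(len(pooled), probs)
```

Because a different scale may be drawn at each call, the network sees 1 × 1 (global), 2 × 2, and 3 × 3 (more local) summaries of the same feature map over the course of training, which is the cross-scale behavior described above.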

4.3. DASI Module

In the context of multiple downsampling stages for the detection of small objects, there is a risk that high-dimensional features may lose critical information pertaining to these objects, while low-dimensional features may lack sufficient contextual information. To address this challenge, the dimension-aware selective integration (DASI) method is utilized, which adaptively selects and integrates relevant features based on the size and specific requirements of the target objects [30]. Specifically, DASI aligns high-dimensional and low-dimensional features with the features of the current layer through operations, such as convolution and interpolation. This process involves partitioning the features into four equal segments along the channel dimension, thereby facilitating the effective fusion of features across different scales within the same spatial dimensions. The $h_i$, $l_i$, and $u_i$ represent the $i$-th ($i = 1, 2, 3, 4$) partition feature of high-dimensional features, low-dimensional features, and the current layer, respectively. These partitions are calculated using the following formulas:

$$\alpha = \mathrm{sigmoid}(u_i)$$

$$u_i' = \alpha\, l_i + (1 - \alpha)\, h_i$$

$$F_u' = [u_1', u_2', u_3', u_4']$$

$$\hat{F}_u = \delta\left(\mathcal{B}\left(\mathrm{Conv}(F_u')\right)\right)$$

where $\alpha$ is the value obtained by applying the activation function to $u_i$; $u_i'$ is the selective aggregation result of each partition. $F_u'$ is the result of merging $u_i'$ along the channel dimension; $\hat{F}_u$ is the result after convolution ($\mathrm{Conv}$), batch normalization ($\mathcal{B}$), and rectified linear unit ($\delta$). If $\alpha$ > 0.5, the model prioritizes fine-grained features, and if $\alpha$ < 0.5, it emphasizes contextual features.
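The selective aggregation step can be sketched with scalar channel values at a single spatial position. The sketch assumes the gate is a sigmoid of the current-layer feature and that it weights the low-dimensional (fine-grained) feature against the high-dimensional (contextual) one; the function names are hypothetical, the inputs are assumed to be already aligned, and the final convolution, batch normalization, and ReLU are left out.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def split_channels(features, parts=4):
    """Split a list of channel values into equal consecutive partitions."""
    if len(features) % parts:
        raise ValueError("channel count must be divisible by parts")
    size = len(features) // parts
    return [features[i * size:(i + 1) * size] for i in range(parts)]


def selective_integration(high, low, current, parts=4):
    """Fuse aligned features: alpha = sigmoid(u), u' = alpha*l + (1-alpha)*h."""
    fused = []
    partitions = zip(split_channels(high, parts),
                     split_channels(low, parts),
                     split_channels(current, parts))
    for h_part, l_part, u_part in partitions:
        for h, l, u in zip(h_part, l_part, u_part):
            alpha = sigmoid(u)
            fused.append(alpha * l + (1.0 - alpha) * h)
    return fused  # partitions concatenated back along the channel dimension


# alpha = 0.5 everywhere: an equal blend of low and high features.
print(selective_integration([1.0] * 4, [0.0] * 4, [0.0] * 4))
```

With a strongly positive current-layer response the gate approaches 1 and the output follows the low-dimensional feature; with a strongly negative response it follows the high-dimensional feature, matching the α > 0.5 and α < 0.5 cases described above.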

4.4. MCD-YOLOv8 Model

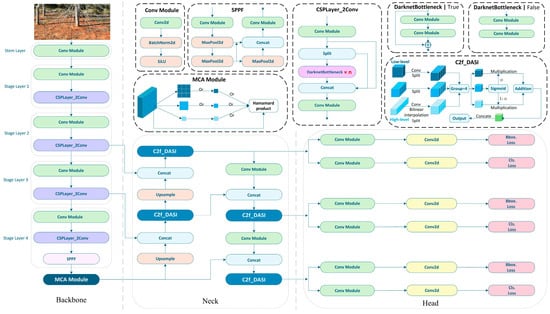

To facilitate the intelligent identification of the images of soil-dwelling termite damage, this study enhances the YOLOv8 model by developing a multi-objective detection dataset and integrating various modules to tackle challenges associated with the concealment of termite damage, the detection of small targets, and the complexity of image backgrounds. The architecture of the MCD-YOLOv8 model is illustrated in Figure 3. Although the overall framework of MCD-YOLOv8 retains the foundational structure of YOLOv8, consisting of the backbone, neck, and head components, it introduces the MCA module following the SPPF module within the backbone and replaces the C2f module in the neck with the DASI module. The enhancements made to the MCD-YOLOv8 model are particularly notable in two significant aspects:

- (1) The integration of the MCA module within the backbone, which facilitates the extraction of both local and contextual information through random sampling, thereby improving the model’s ability to recognize small objects.
- (2) The adoption of the DASI module in the neck, which substitutes the C2f module and adaptively enhances fine-grained or contextual features according to varying dimensional requirements, thereby further promoting multi-scale feature fusion.

Figure 3. The architecture of the MCD-YOLOv8 model.

4.5. Model Evaluation Metrics

In order to assess the efficiency and performance of the model, the study employs precision ($P$), recall ($R$), average precision ($AP$), and mean average precision ($mAP$) as evaluation metrics, utilizing the corresponding formulas, as follows:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(R)\, dR$$

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where $P$ represents the accuracy of recognition, indicating the proportion of predicted positive samples that are correct; $R$ represents the recall rate, indicating the proportion of actual positive samples that are correctly predicted; $TP$ is the number of true positive samples correctly predicted by the model; $FP$ is the number of false positive samples incorrectly predicted by the model; $FN$ is the number of positive samples not predicted by the model; $AP$ is the average precision, representing the area under the precision-recall curve; a higher value indicates better model performance in recognizing specific targets; $mAP$ is the mean average precision, representing the average of $AP$ across all categories; $N$ is the total number of categories.
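These metrics can be computed with a few short functions. This is a minimal sketch with hypothetical function names; it approximates the area under the precision-recall curve with all-point interpolation, which is one common convention and an assumption here, since the evaluation code used in the study is not described.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Fraction of actual positives that are detected."""
    return tp / (tp + fn) if tp + fn else 0.0


def average_precision(recalls, precisions):
    """Area under the P-R curve; recalls must be sorted in ascending order."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision monotonically non-increasing from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """Average of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# Toy numbers for illustration only, not results from the study.
print(precision(8, 2), recall(8, 2))
print(average_precision([0.5, 1.0], [1.0, 0.5]))
print(mean_average_precision([0.75, 0.25]))
```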

5. Results Analysis

5.1. Category Recognition Results

This study develops the MCD-YOLOv8 improved model by combining the MCA and DASI modules based on the YOLOv8 model. The model utilizes original images as input, supplemented by data augmentation techniques to increase the diversity of training samples. During model training, we resized all images to 640 × 640 to maintain consistency and reduce computational load caused by varying original sizes. The backbone part uses four layers of Conv and CSP modules for initial feature extraction, with convolution kernel sizes of 3 × 3 or 1 × 1 and the SiLU activation function. The neck part incorporates FPN and PANet to enhance feature information propagation and introduces a lightweight module. For the loss functions, classification loss primarily uses varifocal loss (VFL), while regression loss combines complete intersection over union (CIOU) and distribution focal loss (DFL). The training process is set for a total of 200 iterations, with a batch size of 16, making it suitable for environments with limited resources and the need for quick data processing. The initial learning rate is set at 0.01; if set too high, it may lead to instability, while too low a rate can slow down convergence.
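The CIoU term of the regression loss can be illustrated in isolation. The sketch below assumes the standard CIoU definition (IoU minus a normalized center-distance penalty minus an aspect-ratio consistency penalty) and boxes given as (x1, y1, x2, y2) corners; the function names are illustrative and are not the training framework's API.

```python
import math


def ciou(box_a, box_b, eps=1e-9):
    """Complete IoU between two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Intersection and union areas.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    wa, ha = ax2 - ax1, ay2 - ay1
    wb, hb = bx2 - bx1, by2 - by1
    iou = inter / (wa * ha + wb * hb - inter + eps)
    # Squared distance between box centers.
    rho2 = ((ax1 + ax2 - bx1 - bx2) ** 2 + (ay1 + ay2 - by1 - by2) ** 2) / 4.0
    # Squared diagonal of the smallest box enclosing both.
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect-ratio consistency term and its trade-off weight.
    v = (4.0 / math.pi ** 2) * (
        math.atan(wb / (hb + eps)) - math.atan(wa / (ha + eps))) ** 2
    alpha = v / (1.0 - iou + v + eps)
    return iou - rho2 / c2 - alpha * v


def ciou_loss(pred, target):
    """Regression loss term: zero for a perfect match, larger otherwise."""
    return 1.0 - ciou(pred, target)


print(ciou_loss((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0)))
```

Unlike plain IoU, the center-distance term still provides a training signal when the predicted and ground-truth boxes do not overlap, which matters for small targets where a slight offset can remove all overlap.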

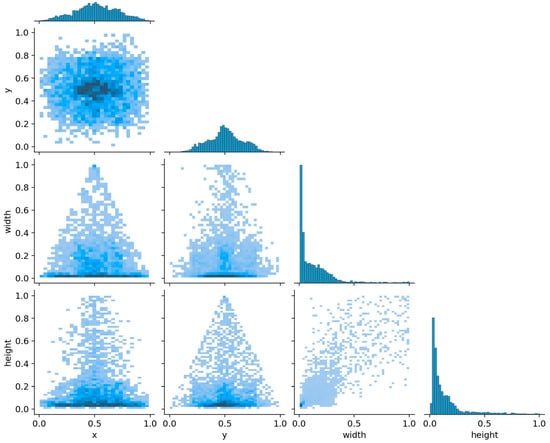

After the process of data augmentation, the dataset has been expanded to include a total of 2096 images, which are classified into the following four distinct categories: deadwood, detector, hole, and mud. The recognition outcomes for these categories, as presented in Table 1, indicate that the enhanced model achieved an overall accuracy of 0.88. Specifically, the deadwood category comprises 1211 instances with an accuracy of 0.891; the detector category includes 131 instances with an accuracy of 0.979; the hole category consists of 125 instances with an accuracy of 0.795; and the mud category contains 2689 instances with an accuracy of 0.857. Furthermore, as illustrated in Figure 4, the centers of the bounding boxes are predominantly located in the central region of the images. The majority of these bounding boxes exhibit widths and heights that are less than one-fifth of the overall image dimensions, with areas that are less than one twenty-fifth of the total image area. It is evident that most soil-dwelling termite activity signs are around 10% in size, classifying them as small targets. Compared to larger- or medium-sized targets, they possess less visual information, making it challenging to extract distinguishing features. Additionally, they are easily affected by environmental factors, making it difficult for detection models to accurately locate and identify these small targets.

Table 1. Recognition results for four categories with MCD-YOLOv8 model.

Figure 4. The statistical parameters associated with the labels. The x and y denote the coordinates of the center of the bounding box, with the top-left corner serving as the origin. The width and height parameters indicate the dimensions of the bounding box.
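The small-target observation can be checked on any label set with a short script. The sketch below assumes normalized (x_center, y_center, width, height) boxes and uses made-up values; the helper name and the one-fifth threshold parameter are illustrative.

```python
def small_box_fraction(boxes, side_limit=0.2):
    """Fraction of normalized (x, y, w, h) boxes whose sides are both
    shorter than side_limit of the image dimensions."""
    if not boxes:
        return 0.0
    small = [b for b in boxes if b[2] < side_limit and b[3] < side_limit]
    return len(small) / len(boxes)


# Hypothetical labels, for illustration only.
boxes = [
    (0.50, 0.50, 0.10, 0.10),
    (0.50, 0.50, 0.30, 0.10),
    (0.20, 0.20, 0.05, 0.15),
    (0.50, 0.50, 0.50, 0.50),
]
print(small_box_fraction(boxes))
```

A box whose width and height are both below one-fifth of the image necessarily covers less than 1/5 × 1/5 = 1/25 of the image area, which is why a single side threshold is enough to capture both conditions mentioned in the text.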

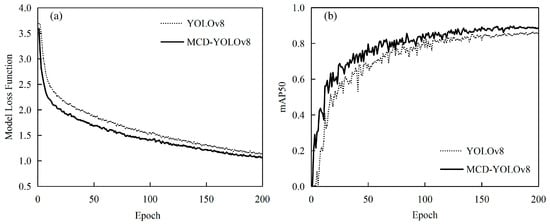

Figure 5 presents a comparative analysis of the loss function curve (Figure 5a) and the mean average precision (mAP) curve (Figure 5b) for the MCD-YOLOv8 and YOLOv8 models. Initially, the loss values for both models exhibit similarities; however, the MCD-YOLOv8 model demonstrates a more rapid and consistent decline in loss values, ultimately achieving a lower stabilization point. Specifically, the loss for MCD-YOLOv8 stabilizes at approximately 1.0, while the YOLOv8 model stabilizes at around 1.2. In terms of the mAP curve, after 50 iterations, the mAP for the MCD-YOLOv8 model increases to approximately 0.8, in contrast to YOLOv8, which reaches only about 0.6 and exhibits greater variability. Ultimately, the mAP for MCD-YOLOv8 stabilizes at approximately 0.886, whereas YOLOv8 stabilizes at 0.862. These findings indicate that MCD-YOLOv8 exhibits enhancements in both recognition accuracy and processing speed, thereby improving its efficiency in practical applications.

Figure 5. Comparison of loss function curve (a) and mAP curve (b).

5.2. Ablation Study

This research enhances the framework for small object detection, addressing challenges related to object occlusion and overlap, through the development of the MCD-YOLOv8 model. To verify the impact of the MCA and DASI modules, an ablation study was conducted based on the YOLOv8 model. In Table 2, “×” indicates “not used”, and “√” indicates “used”. The MCD-YOLOv8 model, incorporating the MCA and DASI modules, shows significant improvements in recognition accuracy and efficiency compared to the original model without any added modules. Compared to YOLOv8, the MCD-YOLOv8 model’s precision increased to 88.0%, up by 6.4 percentage points; the mean average precision (mAP) increased to 88.6%, up by 2.4 percentage points; and the number of parameters decreased to approximately 2.9 million, a reduction of 105,320. When used individually, the MCA and DASI modules both improve model accuracy, but they affect the parameter count differently. The DASI module effectively reduces model parameters, while the MCA module increases model complexity. Therefore, the MCD-YOLOv8 model, with both MCA and DASI modules, achieves higher recognition accuracy for small objects in complex environments and maintains a lightweight design.

Table 2. Ablation study results.
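The reported gains can be cross-checked with simple arithmetic. The sketch below uses only figures stated in the text: the YOLOv8 baseline (precision 0.816, mAP 0.862, 3,006,428 parameters), the MCD-YOLOv8 results (precision 0.880, mAP 0.886), and the parameter reduction of 105,320. The relative percentages it prints are derived values, not numbers reported by the study.

```python
baseline = {"precision": 0.816, "map": 0.862, "params": 3_006_428}
improved = {"precision": 0.880, "map": 0.886}
param_reduction = 105_320

# Absolute gains in percentage points.
pp_gain_precision = round((improved["precision"] - baseline["precision"]) * 100, 1)
pp_gain_map = round((improved["map"] - baseline["map"]) * 100, 1)

# Parameter count after the reduction, and the reduction in relative terms.
improved_params = baseline["params"] - param_reduction
relative_param_cut = round(param_reduction / baseline["params"] * 100, 2)

# Relative precision gain, which differs from the percentage-point gain.
relative_precision_gain = round(
    (improved["precision"] - baseline["precision"]) / baseline["precision"] * 100, 2)

print(pp_gain_precision, pp_gain_map)
print(improved_params, relative_param_cut)
print(relative_precision_gain)
```

The 6.4 and 2.4 figures are absolute differences in percentage points, and the resulting parameter count of 2,901,108 is consistent with the "approximately 2.9 million" stated above.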

5.3. Comparison of Different Model Recognition Effects

To further objectively evaluate the advantages of the MCD-YOLOv8 model, experiments compared the recognition effects of the YOLOv8 model and other improved models. All models were subjected to training utilizing an identical dataset and configuration parameters. Table 3 presents the evaluation metrics with a test dataset for the YOLOv8, NonLocal, PPA, DWR, and MCD-YOLOv8 models. According to the comparative experimental results in Table 3, YOLOv8 has a precision, recall, mAP, and parameter count of 0.816, 0.841, 0.862, and 3,006,428, respectively. Building on YOLOv8, the introduction of the NonLocal, PPA, and DWR modules improves both precision and mAP values but leads to a reduction in the recall rate. Additionally, apart from the DWR module, the other two modules increase the parameter count to some extent. However, the MCD-YOLOv8 model achieves a precision (P) of 0.880, recall (R) of 0.842, and mAP of 0.886, outperforming other models, while having fewer parameters. Thus, the MCD-YOLOv8 model excels in recognition accuracy and lightweight design.

Table 3. Comparison of validation results for different models. The bold numbers represent the best values for each parameter of the five models.

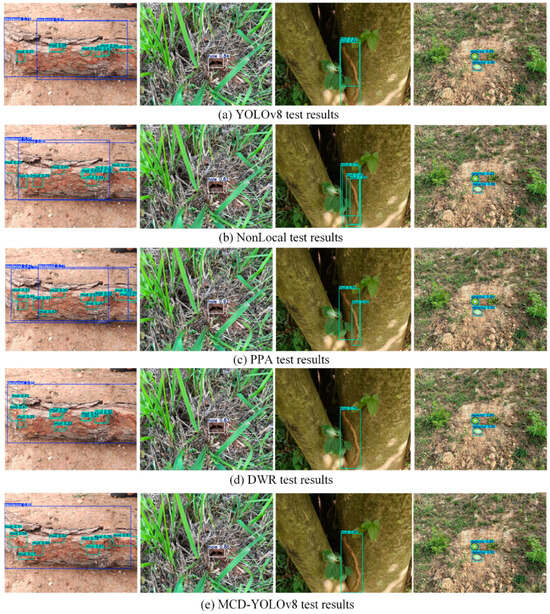

To assess the advantages of the enhanced model in detection capabilities, the following four distinct scenarios were evaluated: multi-category detection, small object recognition, partial occlusion, and complex background analysis. Different colored rectangles were utilized to denote the four category labels. The comparative results for the YOLOv8, NonLocal, PPA, DWR, and MCD-YOLOv8 models are illustrated in Figure 6. All models successfully identified the four categories; however, variations in the confidence levels were observed, particularly in the detection of deadwood and mud. The detection confidence for the YOLOv8, NonLocal, PPA, and DWR models ranged from 0.55 to 0.85 for deadwood and from 0.25 to 0.67 for mud. In contrast, the MCD-YOLOv8 model exhibited superior confidence levels, achieving 0.85 for deadwood and a range of 0.31 to 0.78 for mud, thereby facilitating the more accurate identification of soil-dwelling termite activity signs in the majority of instances.

Figure 6. Comparison of recognition results for different models.

Furthermore, the YOLOv8, NonLocal, and PPA models continue to encounter challenges related to object occlusion and overlap, which hampers their ability to accurately extract the specific features of objects. In contrast, the DWR and MCD-YOLOv8 models demonstrate proficiency in extracting object features and distinguishing occluded objects. However, the DWR model exhibits slightly lower recognition accuracy and precision compared to the MCD-YOLOv8 model. Notably, in the context of detecting small objects (such as mud), the MCD-YOLOv8 model not only effectively differentiates object features but also improves recognition accuracy. Consequently, this model proves to be efficient in isolating targets within a single image and in identifying multiple features of termite damage, demonstrating satisfactory adaptability, stability, and robustness.

6. Conclusions

In response to the escalating severity of soil-dwelling termite damage, coupled with the inadequacy of current recognition methodologies to fulfill practical engineering requirements, a novel approach for termite damage identification has been developed based on the YOLOv8 framework. This method incorporates Monte Carlo random sampling and lightweight modules. Specifically, the Monte Carlo attention (MCA) module has been integrated into the backbone to produce attention maps via random sampling pooling operations, thereby addressing cross-scale challenges and enhancing the accuracy of small object recognition. Additionally, a lightweight DASI module has been introduced in the neck to optimize computational efficiency and reduce memory usage, which in turn improves both detection accuracy and processing speed.

When compared to traditional or enhanced models, such as YOLOv8, NonLocal, PPA, and DWR, the MCD-YOLOv8 model demonstrates a reduction in parameter count while preserving adequate feature extraction capabilities and enhancing the accuracy of small object detection. The MCD-YOLOv8 model significantly improves the precision of identifying signs of termite damage in complex environments, minimizes redundant detection boxes, and increases overall detection efficiency. In the context of recognizing termite activity indicators, this model exhibits superior performance, facilitating continuous automated monitoring and markedly enhancing detection efficiency.

In practical applications, particularly for tasks that involve recognizing traces of termite activity under varying lighting conditions and intricate environments, the MCD-YOLOv8 model proficiently and effectively identifies small targets, such as features indicative of termite damage, by mitigating cross-scale effects and employing lightweight module designs. This model presents substantial practical advantages over manual visual inspection methods, which are often characterized by high workloads and low efficiency, thereby providing significant value in termite management. Furthermore, the model holds potential for integration into intelligent inspection technologies, such as drones and robotic dogs [42,49,50], in the future, effectively addressing potential oversights and inaccuracies associated with manual monitoring. This advancement enhances the intelligence of termite activity monitoring and supports effective termite control strategies.

This study introduced several advancements in termite damage detection through the development of the MCD-YOLOv8 model. However, notable limitations warrant further exploration. First, although the model detects small objects more accurately, its performance may still be affected by complex environmental conditions, such as extreme lighting variations or highly cluttered backgrounds. Second, drone-captured images, although beneficial for large-area coverage, may not always capture the fine-grained details required for precise detection in areas with dense vegetation or structural obstructions. Future work could focus on improving the model’s robustness under these conditions, integrating real-time data streams, and incorporating diverse data sources, including sensor-based and acoustic data, to further improve detection reliability. Moreover, evaluating the model across different geographic regions and termite species could broaden its applicability and effectiveness.

Author Contributions

Conceptualization, P.J. and L.J.; methodology, P.J. and L.J.; software, P.J.; validation, P.J. and L.J.; formal analysis, F.W., T.C. and M.W.; investigation, C.Z.; resources, L.J. and F.W.; data curation, P.J. and L.J.; writing—original draft preparation, P.J.; writing—review and editing, P.J., L.J. and M.W.; visualization, P.J.; supervision, P.J., L.J., F.W., T.C., M.W. and C.Z.; project administration, P.J. All authors have read and agreed to the published version of the manuscript.

Funding

This project was supported by the National Key Research and Development Program of China (No. 2024YFC3211500) and the National Natural Science Foundation of China (No. U2443229d).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Restrictions apply to the availability of these data. Data were obtained from Hubei Provincial Department of Water Resources and are available from the authors with the permission of Hubei Provincial Department of Water Resources.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Verma, M.; Sharma, S.; Prasad, R. Biological Alternatives for Termite Control: A Review. Int. Biodeterior. Biodegrad. 2009, 63, 959–972.

- Sun, P.D. Studies on Mechanism of Caste Differentiation and Nestmate Discrimination in Higher Termites. Ph.D. Thesis, Huazhong Agricultural University, Wuhan, China, 2019. (In Chinese)

- Li, H. Species of Termites Attacking Trees in China. Sociobiology 2010, 56, 109–120.

- Wang, J.Z.; Li, H.; Zheng, B.; Shao, Q. Application and Promotion of New Technologies for Termite Control in Zhanghe Reservoir. Insect Res. Cent. China 2012, 8, 264–267. (In Chinese)

- Zhang, S.T.; Qu, Z.B.; Cai, Q.X.; Shi, L.; Zhang, J.S. Application Analysis of Termite Prevention and Control Technology in Water Conservancy Project. Henan Water Resour. South-North Water Divers. 2022, 51, 83–85. (In Chinese)

- Zhao, C.; Xiao, X.; Luo, S.M. Application of IPM technology for termite monitoring and trapping in Luban Reservoir. Water Conserv. Constr. Manag. 2022, 42, 70–74. (In Chinese)

- Huang, G.M. Discussion on the method of eliminating termites in housing construction in Lu’an City and water conservancy construction and management. Chin. J. Hyg. Insectic. Equip. 2014, 20, 390–391. (In Chinese)

- Huang, K.; Wei, J.G.; Wei, J.L.; Yang, M.; Chen, H.X.; Huang, F.N.; Pan, G.S. Comprehensive control technology for termites in the ancient architectural complex of Bo’ai Town, Funing County. Yunnan Agric. Sci. Technol. 2018, S1, 103–105. (In Chinese)

- Zhang, W.T.; Zhang, X.; Yang, Z.L.; Wang, W.J.; Zhang, X.; Wang, J. Application of Intelligent Termite Monitoring and Control Technology in Termite Prevention and Control in Landscape Greening. Contemp. Hortic. 2024, 47, 82–84. (In Chinese)

- Song, C.G.; Ding, J.; Zhou, B.; Yan, J.M.; Deng, Z.G.; Xiao, W. Research Progress on Termite Hazards and Prevention in Chongqing City. Chin. J. Hyg. Insectic. Equip. 2020, 26, 480–485. (In Chinese)

- Xi, L.H.; Zhang, G.; Wang, X.F.; Fan, M. Research and Application of Termite Comprehensive Control Technology. Yunnan Water Power 2019, 35, 12–15. (In Chinese)

- Li, B. Guangdong Technology Center of Water Resources and Hydropower. History and Current Situation of Termite Control in Hydraulic Engineering of Guangdong Province. Guangdong Water Resour. Hydropower 2021, 12, 31–34+41. (In Chinese)

- Wang, Y.F.; Lu, W.P.; Yuan, T.; Chen, L.J.; Zhang, F. Identification of soil-dwelling termites activity signs based on ACP-YOLOv5s. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2025, 41, 387–396. (In Chinese)

- Lopes, D.J.V.; Burgreen, G.W.; dos Santos Bobadilha, G.; Barnes, H.M. Automated means to classify lab-scale termite damage. Comput. Electron. Agric. 2020, 168, 105105.

- Huang, J.H.; Liu, Y.T.; Ni, H.C.; Chen, B.Y.; Huang, S.Y.; Tsai, H.K.; Li, H.F. Termite Pest Identification Method Based on Deep Convolution Neural Networks. J. Econ. Entomol. 2021, 114, 2452–2459.

- Mankin, R.W.; Osbrink, W.L.; Oi, F.M.; Anderson, J.B. Acoustic Detection of Termite Infestations in Urban Trees. J. Econ. Entomol. 2002, 95, 981–988.

- Yuki, M.; Naoki, M.; Kenji, M. Multi-functional roles of a soldier-specific volatile as a worker arrestant, primer pheromone and an antimicrobial agent in a termite. Proc. Biol. Sci. 2017, 284, 20171134.

- Hu, Y.; Zhang, L.; Deng, B.; Liu, Y.; Liu, Q.; Zheng, X.; Zheng, L.; Kong, F.; Guo, X.; Siemann, E. The non-additive effects of temperature and nitrogen deposition on CO2 emissions, nitrification, and nitrogen mineralization in soils mixed with termite nests. Catena 2017, 154, 12–20.

- Jouquet, P.; Capowiez, Y.; Bottinelli, N.; Traoré, S. Potential of Near Infrared Reflectance Spectroscopy (NIRS) for identifying termite species. Eur. J. Soil Biol. 2014, 60, 49–52.

- Luo, F.; Bian, W.; Jie, B.; Dong, H.; Fu, X. ARBFPN-YOLOv8: Auxiliary Reversible Bidirectional Feature Pyramid Network for UAV Small Target Detection. Signal Image Video Process. 2024, 19, 63.

- Zhang, X.; Zuo, G. Small Target Detection in UAV View Based on Improved YOLOv8 Algorithm. Sci. Rep. 2025, 15, 421.

- Lv, Y.; Tian, B.; Guo, Q.; Zhang, D. A Lightweight Small Target Detection Algorithm for UAV Platforms. Appl. Sci. 2024, 15, 12.

- Sun, L.; Shen, Y. Intelligent Monitoring of Small Target Detection Using YOLOv8. Alex. Eng. J. 2025, 112, 701–710.

- Dai, W. SvANet: A Scale-variant Attention-based Network for Small Medical Object Segmentation. arXiv 2024, arXiv:2407.07720.

- Xu, S.B.; Zheng, S.C.; Xu, W.H.; Xu, R.; Wang, C.; Zhang, J.; Teng, X.; Li, A.; Guo, L. HCF-Net: Hierarchical Context Fusion Network for Infrared Small Object Detection. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME), Niagara Falls, ON, Canada, 15–19 July 2024.

- Hao, S.; Ren, K.; Li, J.; Ma, X. Transmission Line Defect Target Detection Method Based on GR-YOLOv8. Sensors 2024, 24, 6838.

- Girshick, R.; Donahue, J.; Darrell, T. Region-based convolutional networks for accurate object detection and segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 142–158.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision—ECCV 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Xu, Y.W.; Li, J.; Dong, Y.F.; Zhang, X.L. Survey of Development of YOLO Object Detection Algorithms. J. Front. Comput. Sci. Technol. 2024, 18, 2221–2239. (In Chinese)

- Gu, Q.; Sui, S.Y.; Wang, R.; Zhang, H.; Xu, T.P. Skin melanoma image segmentation algorithm based on improved YOLOv8. Comput. Eng. 2024. (In Chinese)

- Zhang, L.F.; Tian, Y. Improved YOLOv8 multi-scale and lightweight vehicle object detection algorithm. Comput. Eng. Appl. 2023, 60, 129–137. (In Chinese)

- Wang, G.; Chen, Y.; An, P.; Hong, H.; Hu, J.; Huang, T. UAV-YOLOv8: A Small-object-detection Model Based on Improved YOLOv8 for UAV Aerial Photography Scenarios. Sensors 2023, 23, 7190.

- Jiang, W.; Wang, W.H.; Yang, J.J. AEM-YOLOv8s: Small Target Detection Algorithm for UAV Aerial Images. Comput. Eng. Appl. 2024, 60, 191–202. (In Chinese)

- Li, Y.; Li, Q.; Pan, J.; Zhou, Y.; Zhu, H.; Wei, H.; Liu, C. SOD-YOLO: Small-object-detection Algorithm Based on Improved YOLOv8 for UAV Images. Remote Sens. 2024, 16, 3057.

- Huang, K.; Li, D.L. Improved YOLOv8-based Personnel Detection from a Drone’s Perspective. J. Chongqing Technol. Bus. Univ. (Nat. Sci. Ed.) 2025. Available online: https://link.cnki.net/urlid/50.1155.N.20250227.1711.006 (accessed on 20 January 2025). (In Chinese)

- Feng, S.; Huang, Y.; Zhang, N. An Improved YOLOv8 OBB Model for Ship Detection Through Stable Diffusion Data Augmentation. Sensors 2024, 24, 5850.

- Marullo, G.; Ulrich, L.; Antonaci, F.G.; Audisio, A.; Aprato, A.; Massè, A.; Vezzetti, E. Classification of AO/OTA 31A/B Femur Fractures in X-ray Images Using YOLOv8 and Advanced Data Augmentation Techniques. Bone Rep. 2024, 22, 101801.

- Chen, F.Q.; Deng, M.L.; Gao, H.; Yang, X.; Zhang, D. NHD-YOLO: Improved YOLOv8 Using Optimized Neck and Head for Product Surface Defect Detection with Data Augmentation. IET Image Process. 2024, 18, 1915–1926.

- Luo, W.; Yuan, S. Enhanced YOLOv8 for Small-object Detection in Multiscale UAV Imagery: Innovations in Detection Accuracy and Efficiency. Digit. Signal Process. 2025, 158, 104964.

- Yilmaz, B.; Kutbay, U. YOLOv8-based Drone Detection: Performance Analysis and Optimization. Computers 2024, 13, 234.

- Zhou, M.; Wan, X.; Yang, Y.; Zhang, J.; Li, S.; Zhou, S.; Jiang, X. EBR-YOLO: A Lightweight Detection Method for Non-motorized Vehicles Based on Drone Aerial Images. Sensors 2025, 25, 196.

- Alhawsawi, A.N.; Khan, S.D.; Rehman, F.U. Enhanced YOLOv8-based Model with Context Enrichment Module for Crowd Counting in Complex Drone Imagery. Remote Sens. 2024, 16, 4175.

- Chen, M.; Zheng, Z.; Sun, H.; Ma, D. YOLOv8-MDS: A YOLOv8-based Multi-distance Scale Drone Detection Network. J. Phys. Conf. Ser. 2024, 2891, 152008.

- Casas, E.; Ramos, L.; Romero, C.; Rivas-Echeverría, F. A Comparative Study of YOLOv5 and YOLOv8 for Corrosion Segmentation Tasks in Metal Surfaces. Array 2024, 22, 100351.

- Lou, H.; Duan, X.; Guo, J.; Liu, H.; Gu, J.; Bi, L.; Chen, H. DC-YOLOv8: Small-size Object Detection Algorithm Based on Camera Sensor. Electronics 2023, 12, 2323.

- Jin, Y.; Tian, X.; Zhang, Z.; Liu, P.; Tang, X. C2F: An effective coarse-to-fine network for video summarization. Image Vis. Comput. 2024, 144, 104962.

- Pan, J.; Zhang, Y. Small Object Detection in Aerial Drone Imagery Based on YOLOv8. IAENG Int. J. Comput. Sci. 2024, 51, 1346–1354.

- Sun, H.; Shen, Q.; Ke, H.; Duan, Z.; Tang, X. Power Transmission Lines Foreign Object Intrusion Detection Method for Drone Aerial Images Based on Improved YOLOv8 Network. Drones 2024, 8, 346.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).