Highlights

What are the main findings?

- The kknn method enables phenocamera-based determination of flowering time.

- The effectiveness of the developed methodology depends on the plant’s characteristics.

What are the implications of the main findings?

- The presented method enables the determination of the start, end, and duration of flowering.

- Phenocameras can enhance the efficiency of conventional phenological research.

Abstract

Digital repeat photography is currently applied mainly in geophysical studies of ecosystems. However, its role as a tool for conventional phenology, which tracks a plant's seasonal developmental cycle, is growing. The main goal of this study was to develop a novel, easy-to-reproduce, single-camera approach to determining the flowering phases of 12 deciduous woody plants of various species. Field observations served as calibration datasets with binary classes (flowering and non-flowering stages). All image RGB parameters, determined for each plant separately, were used as plant features for model parametrization. The training data were subjected to various transformations to achieve the best classifications with the weighted k-nearest neighbors algorithm. The developed models classified flowering onset with an absolute onset day shift of 0, 1, 2, 3, and 5 days for 2, 3, 3, 2, and 2 plants, respectively. For 9 plants, the method enabled estimation of flowering duration, a valuable yet rarely used parameter in conventional phenological studies. We found the method suitable for various plants, regardless of petal color and flower size, provided that there is a considerable change in crown color during the flowering stage.

1. Introduction

Plant phenology is the study of the timing of seasonal plant development and its relationship with environmental factors, both biotic and abiotic [1]. Studies of plant growth stages are applied in various scientific disciplines, e.g., bioclimatology, agronomy, and ecology [2]. Interest in phenological observations is growing [3], which is linked to ongoing climate change and the resulting possible shifts in plant growth stages [4]. Flowering is the easiest phase to spot, owing to both its visual distinctiveness and the rapid changes in plant shoots, which facilitates monitoring of the impact of climate change on plants. Anthesis is a key period in the reproductive cycle, ensuring the development of fruits and seeds, which are essential for wildlife and humans. Pollinators benefit from nectar and pollen in exchange for pollination, which enables many plants to exchange genes and reproduce. Because species differ in their sensitivity to climate, changes in flowering time can lead to mismatches between pollinators and plants [5]. Moreover, differing responses to climate change can increase the risk of alien species invasions: flowering determines the timing of the subsequent growth stages, which can enable alien species to complete their reproductive cycle earlier and change their distribution.

In the early days of modern phenological study, plants were observed by trained researchers who recorded the dates of phases such as leaf development, inflorescence emergence, flowering, or senescence [2]. They followed standardized guidelines and descriptions of the phases of interest within a network, but these usually vary between countries. Despite attempts to create a universal coding for phenophases (such as the BBCH scale [6]), even recent studies use various descriptors to analyze growth stages (compare [7,8]).

In recent decades, with the development of monitoring methods such as satellites [9] and phenocameras [10], plants can be observed remotely with little or no laborious fieldwork. The satellite approach covers a broader spatial scale than conventional field observations, which are performed by individuals in a limited area and thus require large human resources to obtain observations on a regional scale. Nevertheless, satellite observations are usually restricted to distinguishing phases such as the start, peak, and end of the season at the plant community level [11]. This arises from low temporal resolution, data contamination (caused by, for example, clouds or aerosols), or coarse spatial resolution. Researchers sometimes use satellite data to investigate more precise growth stages, such as flowering [7,12], but currently only for relatively homogeneous land cover areas.

Near-surface remote sensing (digital repeat photography) was developed to address the spatial and temporal resolution constraints of remote plant monitoring [10]. Phenocameras are used mainly at sites measuring matter and energy fluxes [3], where phenological characteristics such as the start or length of the season are required; however, depending on the distance between the camera and the observed vegetation, they enable more precise observations that take into account individual plants [10] and/or individual growth stages. Although an increasing amount of research focuses on phenocamera use, the typical 'landscape level' frame and specific growth stages are rarely considered together, which leads to general rather than precise phenological observations [13,14,15]. When studies aim to observe precise growth stages, as in traditional phenological studies, they are designed specifically for this purpose, with a camera near the observed plant [8,16]. This creates the need for more cameras to observe multiple plants, while phenological information for the plant canopy is missing. In an era of rapid machine learning (ML) expansion, numerous studies apply ML to facilitate image classification or recognition [17].

This study aimed to develop an easy and efficient way to identify the flowering of 12 deciduous woody plants in a typical (landscape-level) phenocamera image time series. To achieve this, a basic machine learning algorithm was trained on one year of images to create a separate model for each plant. Ground truth data obtained from periodic field observations of each woody plant were used as training instances. The models were then tested on a whole-year image time series from another year at the site, for which ground truth data were also available. The assessment of the models' performance was intended to answer several questions:

- (a) Can flowering be distinguished from other vegetation statuses based on changes in the RGB indices recorded by a monitoring camera with plant canopy-level (landscape) resolution?

- (b) How do the models' classification performances differ between plants with various flowering patterns?

- (c) What are the constraints of the developed method?

2. Materials and Methods

2.1. Study Site

This study was conducted in the Dendrological Garden of Poznań University of Life Sciences (PULS), located in the Greater Poland Voivodeship, Poland. The arboretum, founded in 1922, is located in a highly urbanized area of the city of Poznań. Still, as part of the green wedges designed in the 1930s, the garden is partly surrounded by other green spaces. It is situated on post-glacial sandy deposits and river accumulation sands (which at present fulfill the criteria of anthropogenic soils), at an elevation of 70–80 m above sea level [18].

The average annual air temperature (Ta) in the Poznań area is 9.4 °C, and the average annual precipitation sum (P) is 539 mm for the reference period 1991–2020. Compared with the reference period, both years analyzed in this study were warm (Ta = 10.9 °C in both 2022 and 2023), but they differed greatly in precipitation: 2022 can be assessed as dry, with P = 418.8 mm, and 2023 as humid, with P = 710.5 mm (precipitation data from the Institute of Meteorology and Water Management (IMGW) [19]; air temperature values from the meteorological station at the site).

Analyzed Plants

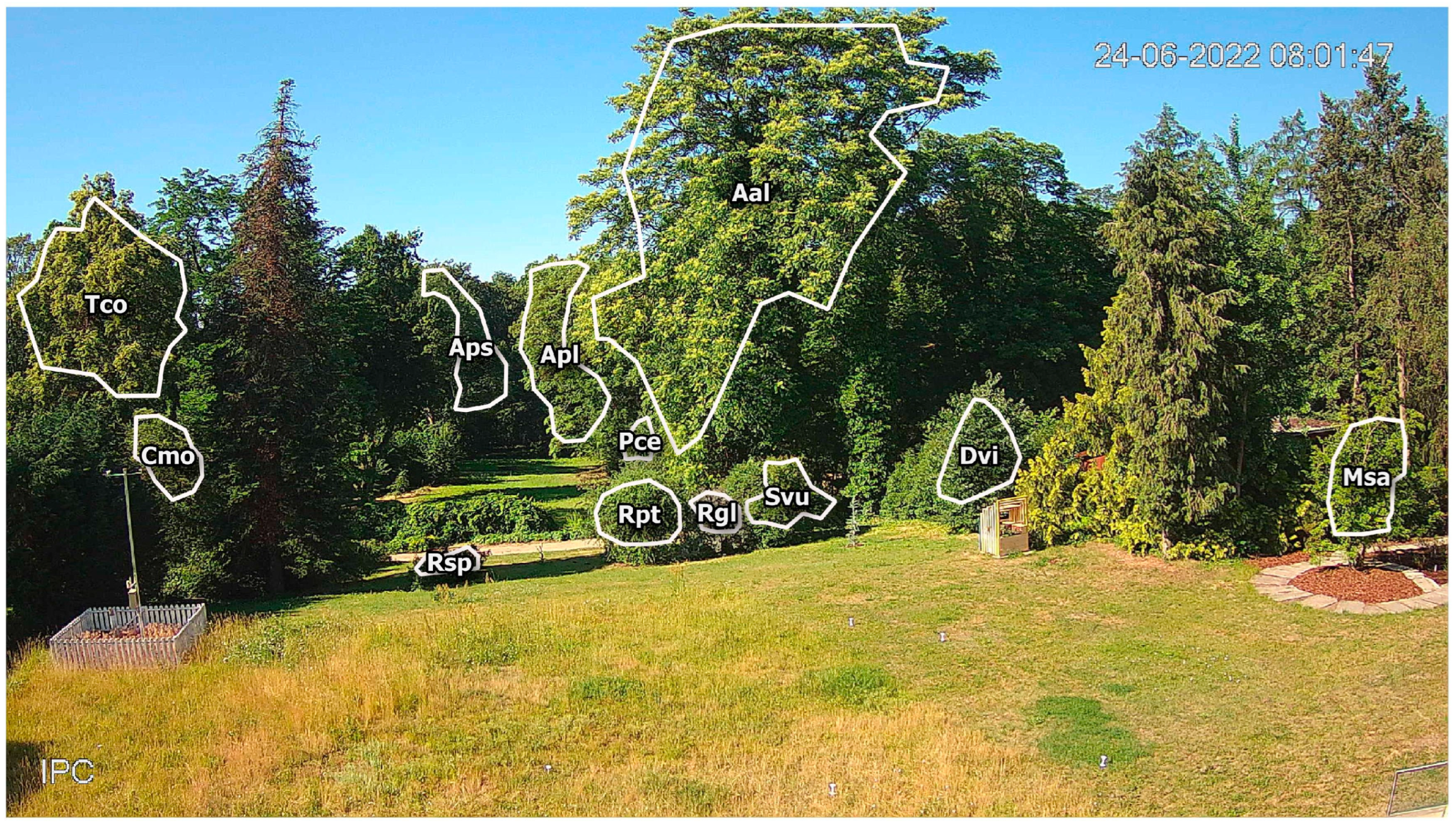

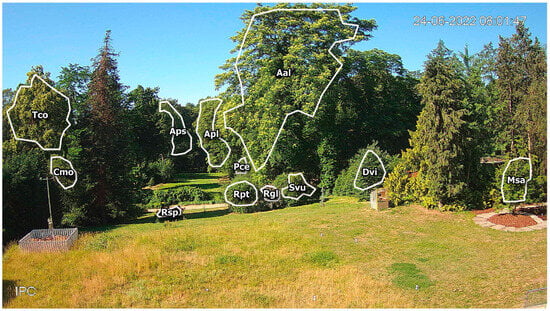

The camera frame covers one of the oldest parts of the garden, with a collection of both locally occurring and foreign woody plants (Figure 1). In the foreground, approximately 20 m from the camera, there is a lawn with one of the observed plants: Magnolia salicifolia (Msa). Most of the observed plants are located 20–50 m from the camera: three Rosa shrubs (Rosa glauca—Rgl, Rosa spinosissima—Rsp, and Rosa × pteragonis—Rpt), lilac Syringa vulgaris (Svu), small-leaved linden Tilia cordata (Tco), single-seeded hawthorn Crataegus monogyna (Cmo), cherry plum Prunus cerasifera (Pce), common persimmon Diospyros virginiana (Dvi), and the tree of heaven Ailanthus altissima (Aal). The other two plants observed in this study, Norway maple Acer platanoides (Apl) and sycamore Acer pseudoplatanus (Aps), are approximately 100 m from the camera. All these plants differ in flower color and size, flower location on the shoots, and the seasonal order of flowering and leaf development (Table 1).

Figure 1.

Sample camera frame image with outlined regions of interest (ROIs) with corresponding plant codes.

Table 1.

Plant and flowering characteristics for 12 analyzed woody plants in the camera frame.

2.2. Digital Repeat Photography

2.2.1. Camera

The phenocamera was installed on 4 December 2020 on the first floor of one of the PULS buildings (N 52.426749°, E 16.895685°). The location of the garden relative to the building forced the camera lens to face south, which is not a preferred direction for digital repeat photography because direct radiation influences the recorded colors [8,20].

The focal length of the 4 Mpx IP camera (Dahua Technology Co., Ltd., Hangzhou, China) was set to 2.7 mm, with a field of view of 104°. Images are taken continuously at 10-min intervals, 24 h a day, resulting in numerous monochromatic night images and color daytime images. Every image has a size of 2560 × 1440 pixels with a resolution of 300 dpi, and its timestamp is in Coordinated Universal Time (UTC). The camera frame is fixed, meaning that every image shows the same fragment of the Dendrological Garden.

2.2.2. Images

The camera collected images throughout 2022 and 2023, providing complete information on the state of the vegetation during the two analyzed years.

Regions of interest (ROIs) were defined for each of the 12 analyzed plants (Figure 1). Each ROI covered the largest possible image area within the analyzed plant, with attention paid to possible seasonal partial overlap by neighboring plants. Moreover, the ROI definitions accounted for possible shifts of branches and shoots due to weather conditions (wind, rainfall) and to increasing biomass during the season.

For the ROI areas within every image, the following color indices (features) were calculated:

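- digital numbers (dn) for red (R), green (G), and blue (B), with values ranging from 0 to 255,

- brightness (BRI), calculated as the sum of the RGB digital numbers (1),

$$\mathrm{BRI} = R_{dn} + G_{dn} + B_{dn} \tag{1}$$

- relative values of RGB (RI, GI, BI), ranging between 0 and 1 (2),

$$\mathrm{RI} = \frac{R_{dn}}{R_{dn} + G_{dn} + B_{dn}}, \quad \mathrm{GI} = \frac{G_{dn}}{R_{dn} + G_{dn} + B_{dn}}, \quad \mathrm{BI} = \frac{B_{dn}}{R_{dn} + G_{dn} + B_{dn}} \tag{2}$$

- green excess index (GEI) [21] (3),

$$\mathrm{GEI} = 2G_{dn} - (R_{dn} + B_{dn}) \tag{3}$$

- standard deviations for all of the above.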

As a result, 16 features describing each plant ROI throughout the time series were created.

The ROI definition and RGB indices were calculated using the ROI-averaged approach described in Filippa et al. [22] and the phenopix (version 2.4.4) package [23] in the R programming language (version 4.4.0) [24].
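The feature definitions above can be illustrated with a minimal Python sketch. It assumes that each index is computed for every ROI pixel and then summarized by its ROI mean and population standard deviation; the feature names (for example `gei.av`) are hypothetical labels modelled on the `gei.sd` name used in this paper, and the phenopix implementation may aggregate differently.

```python
from statistics import mean, pstdev


def roi_features(pixels):
    """Return 16 ROI features: 8 mean indices and their 8 standard deviations.

    pixels: list of (R, G, B) digital numbers (0-255) inside one ROI.
    Assumption: indices are computed per pixel, then averaged over the ROI.
    """
    per_index = {k: [] for k in ("r", "g", "b", "bri", "ri", "gi", "bi", "gei")}
    for r, g, b in pixels:
        bri = r + g + b  # brightness, Eq. (1)
        per_index["r"].append(r)
        per_index["g"].append(g)
        per_index["b"].append(b)
        per_index["bri"].append(bri)
        # relative (chromatic) values, Eq. (2); black pixels get 0 to avoid /0
        per_index["ri"].append(r / bri if bri else 0.0)
        per_index["gi"].append(g / bri if bri else 0.0)
        per_index["bi"].append(b / bri if bri else 0.0)
        per_index["gei"].append(2 * g - (r + b))  # green excess index, Eq. (3)

    features = {}
    for name, values in per_index.items():
        features[name + ".av"] = mean(values)  # ROI average
        features[name + ".sd"] = pstdev(values)  # population standard deviation
    return features
```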

2.3. Ground-Based Observations

Parallel to the phenocamera recording, conventional phenological observations were carried out in the following periods: 15 March–7 October 2022 (52 times) and 22 March–28 September 2023 (47 times). The frequency of the observations depended on the timing of the flowering phase of the observed plants. Each field visit consisted of visual observations supplemented with handheld photographs of the studied plants. Comparing these photographs allowed a more precise assessment of plant phases [25].

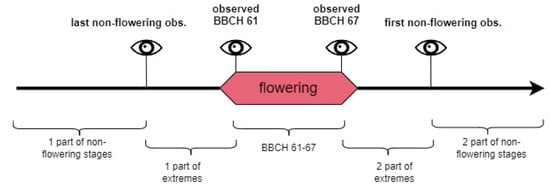

The plant flowering dates were determined from the field observations using the BBCH scale [26,27]. The BBCH scale was designed to be universal for various species, and for many crops and some genera there are specific versions of the scale [26,28]. Its two-digit code, created from the digits 0–9, indicates the principal growth stage (first digit) and its progress (secondary growth stage, second digit). Flowering is principal growth stage 6. The beginning of flowering is usually marked as 60 [2] or 61 [4,7], where the secondary digit 1 stands for 10% open flowers. Higher secondary digits refer to subsequent stages of development within the principal growth stage: 65 indicates full flowering (>50% open flowers), 67 means that most flowers have dry or fallen petals, and 69 describes the end of flowering with visible fruit structures [27].

To prepare the phenological data for the ML analysis, the flowering stages of the observed plants were marked between BBCH 61 and BBCH 67. Phase BBCH 61 is often used as the start of blooming instead of BBCH 60 [27] because such observations are more certain: spotting the first open flowers can be extremely difficult in large woody plants whose whole crown is not accessible. Phase BBCH 67 was selected as the end of the flowering stage because flowering overlaps with leaf development (Apl, Msa, Pce) or fruit development (Aps, Cmo, Rgl, Rpt, Rsp, Svu, Tco). Aal and Dvi are dioecious plants, and the individuals present in the camera frame are both male; thus, there is no overlap of growth stages (the plants have fully developed leaves before flowering). However, these plants lose their flowers immediately after pollination, which leaves bare panicles/stems that resemble non-flowering stages in the late flowering stage. Using the BBCH 61–67 flowering period also reduces uncertainties related to the frequency of field observations: since they were not carried out daily, early signs of the start and end of flowering could have been overlooked.
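The core of this BBCH-based labeling rule can be sketched in Python, assuming observations are stored as integer BBCH codes. The function names are hypothetical, and the extrapolation of missing observations used in treatment A (Section 2.4.3) is not covered.

```python
def split_bbch(code):
    """Split a two-digit BBCH code into (principal stage, secondary stage)."""
    if not 0 <= code <= 99:
        raise ValueError("BBCH codes have two digits (00-99)")
    return divmod(code, 10)


def flowering_label(code, start=61, end=67):
    """Return 1 (flowering) for BBCH start..end inclusive, otherwise 0.

    With the defaults, BBCH 60 and BBCH 68-69 are labelled non-flowering.
    """
    return int(start <= code <= end)
```

For example, `split_bbch(65)` gives `(6, 5)`: principal stage 6 (flowering) at secondary stage 5 (full flowering).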

2.4. Machine Learning

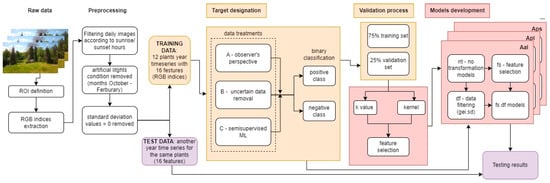

2.4.1. Preprocessing

Because of the night images and the garden's commercial activities, including evening light shows in the autumn–winter periods of the analyzed years, the color index data were preprocessed according to local sunrise and sunset times, attraction opening hours, and, lastly, standard deviations of the color indices equal to zero (a sign of a too-dark ROI). As the images from these periods had unfavorable exposure (too dark at night, and too bright with unnatural colors caused by artificial lights during commercial activities), they were removed from the datasets.
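The three exclusion rules can be expressed as a simple per-image filter. This is a sketch under stated assumptions: all times are in UTC, the light-show window is a hypothetical (start, end) pair that does not cross midnight, and an image is dropped if any of its ROI standard deviations equals zero (the text does not specify whether one or all must be zero).

```python
from datetime import datetime, time
from typing import Iterable, Optional, Tuple


def keep_image(
    taken: datetime,
    sunrise: datetime,
    sunset: datetime,
    show_hours: Optional[Tuple[time, time]],
    roi_sds: Iterable[float],
) -> bool:
    """Return True if an image's ROI indices should be kept for analysis.

    show_hours: hypothetical light-show window for that day, or None.
    roi_sds: standard deviations of the ROI color indices for this image.
    """
    # 1. Night images (monochromatic) fall outside the sunrise-sunset window.
    if not sunrise <= taken <= sunset:
        return False
    # 2. Images taken during light shows carry artificial colors.
    if show_hours is not None:
        start, end = show_hours
        if start <= taken.time() <= end:
            return False
    # 3. A zero standard deviation signals an ROI that is too dark.
    if any(sd == 0 for sd in roi_sds):
        return False
    return True
```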

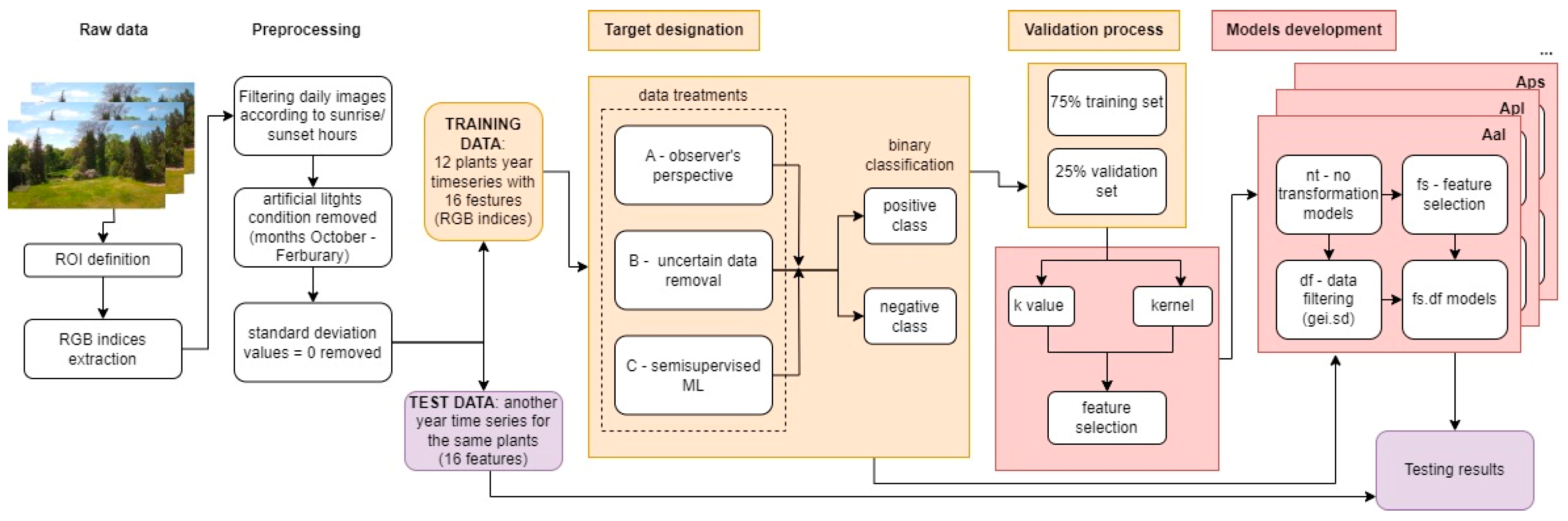

2.4.2. Algorithm Selection

The preprocessed data, containing 16 numeric features (indices based on the averaged RGB channels in the ROI; see Section 2.2.2), formed the basis for selecting the most adequate ML algorithm for flowering classification. The data were labeled with binary classes: positive (flowering period) and negative (non-flowering period). The positive (flowering) class was assigned to the RGB indices of every image within phases BBCH 61–67, according to the stages observed at the research site. A baseline model and five simple, computationally efficient classification algorithms were then chosen for benchmarking: a baseline algorithm that assigns the most frequent class to all images (featureless), weighted k-nearest neighbors (kknn), support vector machine (svm), random forest (ranger), naive Bayes, and classification tree (rpart), using mlr3verse (version 0.2.8), an R package dedicated to ML [29]. A subsampling cross-validation method was applied to evaluate the algorithms' performance, with a random 75% of the image indices from the one-year time series used as the training set and the remaining 25% as the test set. This procedure was repeated 10 times to assess the variability of the evaluation results. The subsampling method was also the basis for learner selection and for the further validation steps described below (k-value, kernel, and feature selection).
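Repeated random subsampling, as used here for benchmarking and tuning, can be sketched in a few lines of Python. The sketch assumes unstratified sampling and a fixed seed; mlr3 provides an analogous subsampling resampling strategy with `ratio` and `repeats` parameters, but the code below is only an illustration, not the package's implementation.

```python
import random


def subsampling_splits(n, repeats=10, train_ratio=0.75, seed=42):
    """Yield (train_idx, test_idx) pairs for repeated random subsampling.

    Each repetition draws a random 75% of the n image records for training
    and keeps the remaining 25% for testing (no stratification assumed).
    """
    rng = random.Random(seed)
    n_train = round(n * train_ratio)
    indices = list(range(n))
    for _ in range(repeats):
        rng.shuffle(indices)
        yield sorted(indices[:n_train]), sorted(indices[n_train:])
```

Each of the 10 splits is evaluated separately, and the spread of the resulting scores reflects the variability of the evaluation.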

Each algorithm's performance was evaluated using recall (REC), precision (PREC), and the Fβ score (Fβ), calculated from the confusion matrix. Recall is the share of true positives (TP) among all positive observations, i.e., true positives plus false negatives (FN) (4):

$$\mathrm{REC} = \frac{TP}{TP + FN} \tag{4}$$

It is an important measure for datasets with unbalanced classes [30], where the main aim of the model is to predict as much of the target class as possible and the cost of false positives is low.

Precision, on the other hand, is the share of true positives among all positive responses, i.e., true positives plus false positives (FP) (5):

$$\mathrm{PREC} = \frac{TP}{TP + FP} \tag{5}$$

Precision therefore focuses not on missing the target class but on identifying it correctly, which matters when false positives are costly. In an image time series covering the whole growing season, flowering is a relatively short phase with low representation in the dataset, so it was more important to exclude models that tended to miss the target class than to avoid false positives. Thus, REC was the main measure of model performance in this study, and PREC served as an additional measure.

However, because PREC tends to decrease as REC increases and vice versa, a trade-off between these measures was necessary for algorithm selection, especially since some algorithms reached high REC values with very poor PREC. As low PREC signaled that a model could not distinguish flowering from non-flowering phases, the Fβ score, which combines both measures in a single value, was calculated for benchmarking (6):

$$F_\beta = (1 + \beta^2)\,\frac{\mathrm{PREC} \cdot \mathrm{REC}}{\beta^2\,\mathrm{PREC} + \mathrm{REC}} \tag{6}$$

In all 12 datasets selected for training, the weighted k-nearest neighbors algorithm performed best (Table 2); thus, the further analysis applies to this classification method.
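The three measures follow directly from confusion-matrix counts; a minimal Python sketch is given below. The value of β used in the benchmark is not stated in this section, so `beta` defaults to 1 here purely as a placeholder (β > 1 weights recall more heavily than precision).

```python
def recall(tp, fn):
    """REC = TP / (TP + FN), Eq. (4)."""
    return tp / (tp + fn) if tp + fn else 0.0


def precision(tp, fp):
    """PREC = TP / (TP + FP), Eq. (5)."""
    return tp / (tp + fp) if tp + fp else 0.0


def f_beta(prec, rec, beta=1.0):
    """F-beta score, Eq. (6); beta = 1.0 is a placeholder, not the paper's value."""
    b2 = beta ** 2
    denom = b2 * prec + rec
    return (1 + b2) * prec * rec / denom if denom else 0.0
```

For example, 40 true positives, 10 false negatives, and 60 false positives give REC = 0.8 and PREC = 0.4, hence F1 ≈ 0.53 and F2 ≈ 0.67: the larger β rewards the high recall.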

Table 2.

Benchmarking Fβ score values for five classification learners (kknn, svm, ranger, naive Bayes, rpart).

2.4.3. Flowering Stage (Target) Designation for Training Purposes

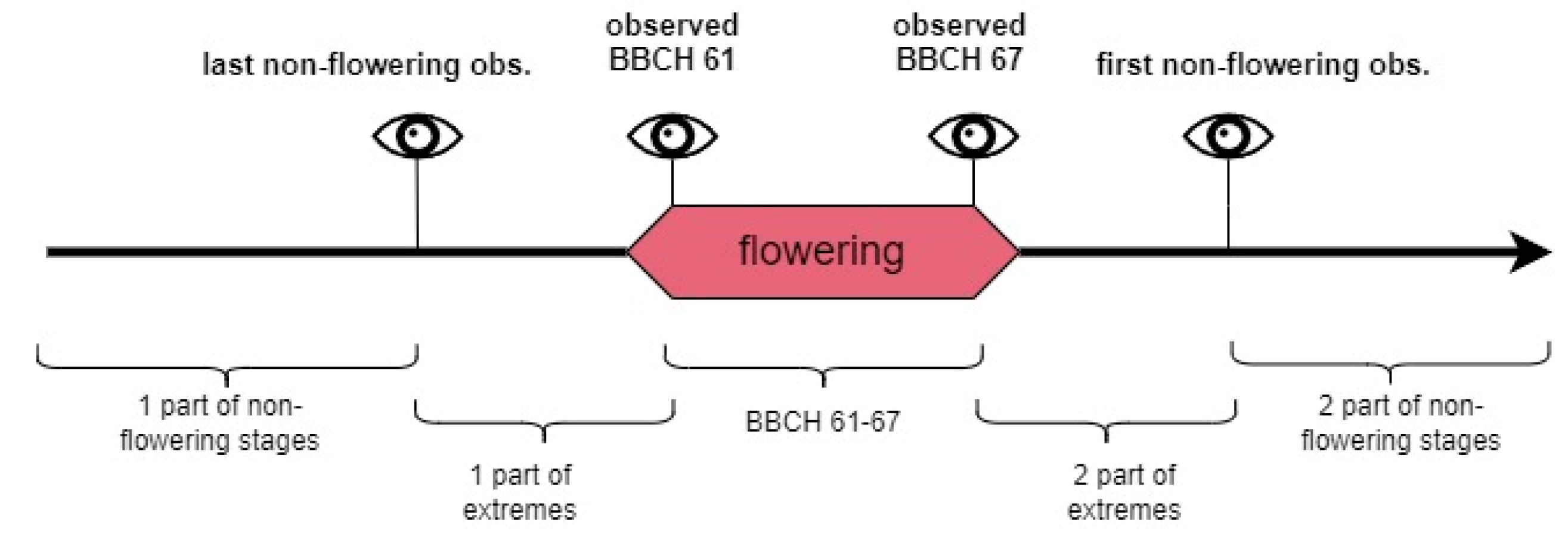

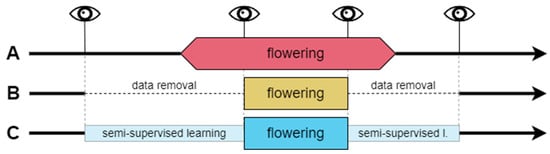

Phenological field observations are prone to error due to the observer's subjective decisions and experience [2]. Even relatively precise BBCH scale observations rely on a visual assessment of the percentage of stage development, which can be difficult to perform accurately for large perennials, such as trees, whose crowns are far from the observer. Taking these limitations into account, the following three data treatments were applied to assign the binary class labels (Figure 2, Table 3):

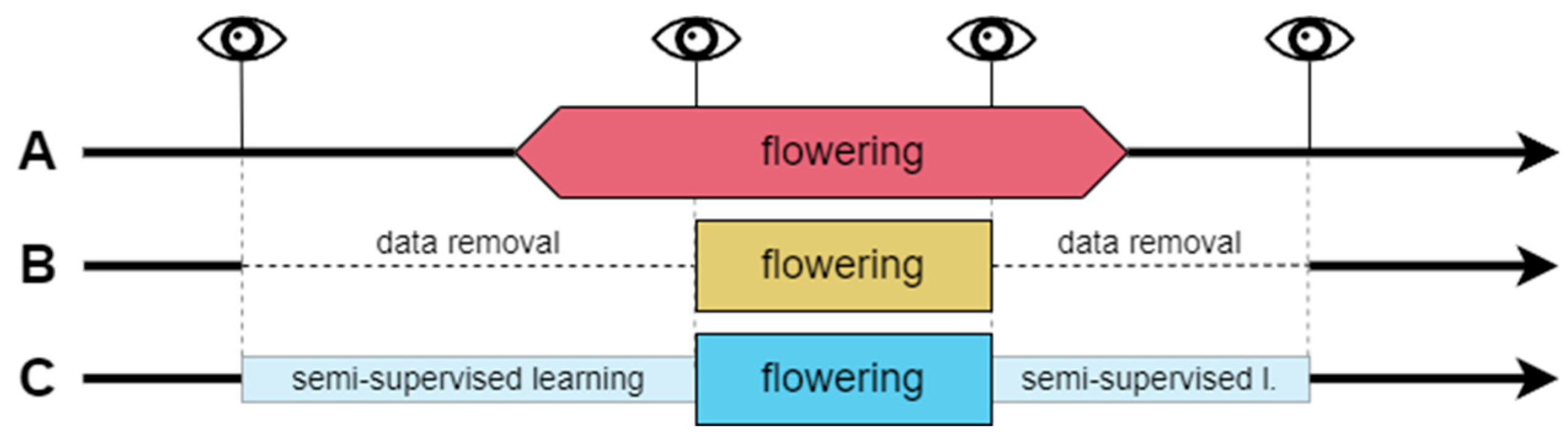

Figure 2.

Data treatments (A, B, C) applied to determine the binary classes (flowering and non-flowering phases). Thick black lines represent the whole year’s timeline with RGB parameters of non-flowering stages. Wider boxes (pink, yellow, and blue) indicate the flowering stage class in every data treatment. Eye symbols and vertical lines indicate field observations. Horizontal dashed lines represent the absence of data. Narrow boxes (light blue) represent part of the data used in semi-supervised flowering classifications.

Table 3.

Pros and cons of various data treatments applied to class determination.

A. Observer's perspective—based on the decision to classify stages between BBCH 61 and BBCH 67 as the flowering phase, while the transitional stages BBCH 60 and BBCH 68–69 were marked as non-flowering. When stages BBCH 61 or BBCH 67 were not observed, the flowering classes were extrapolated to complete the BBCH 61–67 flowering period, based on the number of days without observations between two known stages and the corresponding observations in other years with field observations.

B. Uncertain data removal—this approach attempted to cope with possible misinterpretation of the observed stages, which could deteriorate the classification results. Removing data from the days of year (DOYs) between the last non-flowering observation and the first observation within the BBCH 61–67 flowering range (thus also removing some BBCH 60 observations), and between the last observation within this range and the first non-flowering observation after blooming (thus excluding observations in the BBCH 68–69 range), leaves only the most certain observations. Consequently, the selected training datasets contained a reduced amount of ROI image color index data.

C. Semi-supervised learning for label determination—treatment B does not solve the problem of the extremes of the flowering stage, because it prevents the algorithm from 'learning' from the transitional stages; therefore, a third data treatment was applied. It used the kknn algorithm and small training datasets for each plant in semi-supervised learning. The dataset contained only the days of the flowering period and a range of 10 days before and after the first and last non-flowering stage observations, respectively. These 10-day periods preceding and following flowering were used as training sets for the non-flowering class, since their color indices were relatively similar to those at the extremes of the phase. Training datasets for the flowering class were formed from days covering phases 62–67 (when a plant's observations did not include some of these phases, the training data covered the period from the earliest to the latest observation within this range, e.g., 63–66). Stage 62 was chosen to test whether stage 61 is similar enough to the remaining stages in the training sets. The test sets included two subsets of data: from before and from after the flowering training sets. Learner hyperparameter selection, training, and prediction methods are described in the next section.

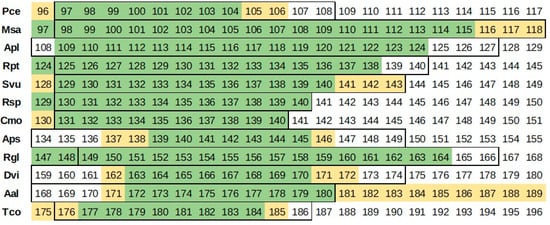

A comparison of the flowering DOYs determined with treatments A, B, and C is presented in Figure 3.

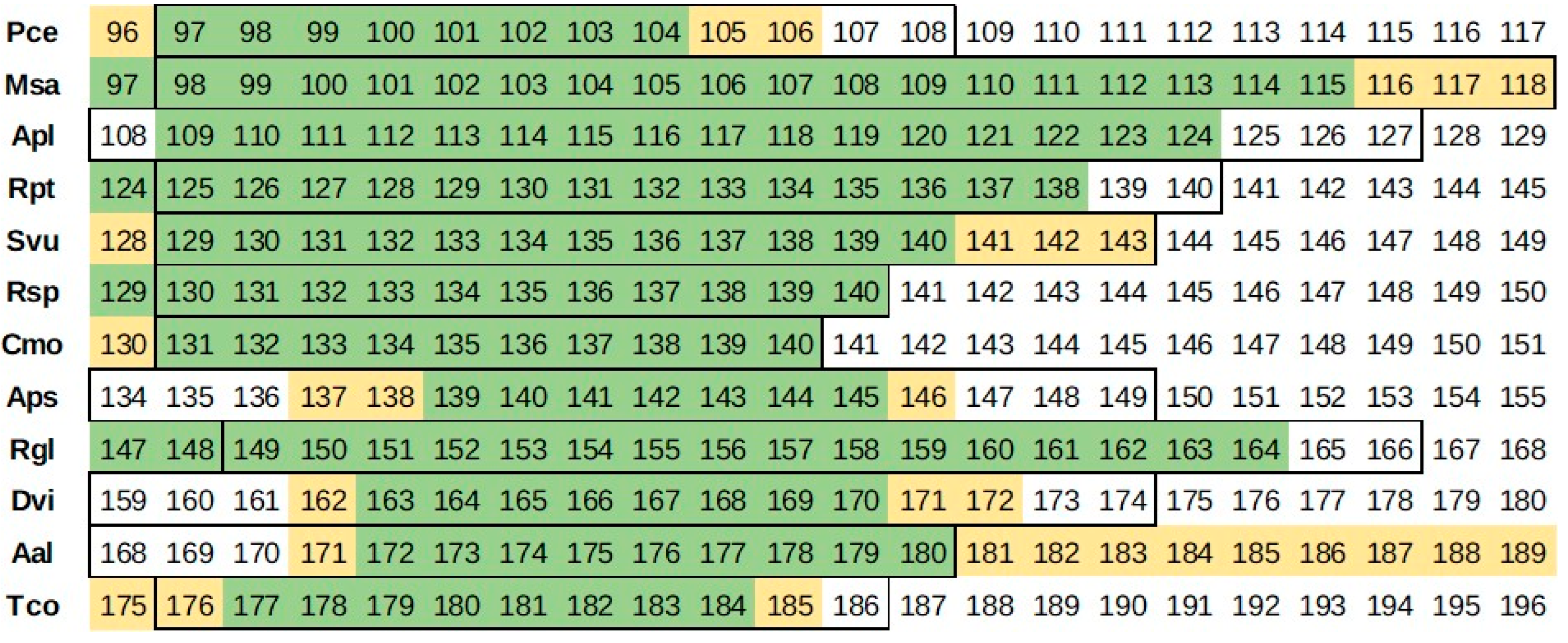

Figure 3.

Comparison of the flowering day of year (DOY) determination according to approach A (yellow/light), B (green/dark), and C (outlined).

2.4.4. Kernel (Weighted) k-Nearest Neighbors Method (kknn)

The kernel k-nearest neighbors algorithm (weighted k-nearest neighbors) is one of the simplest and best-known classification techniques in ML. It identifies the nearest neighbors of a point, that is, the closest points in the n-dimensional space according to a distance measure, and assigns to the point the class that prevails among those neighbors, with each neighbor's vote weighted by a kernel function of its distance [31,32]. It is a memory-based classifier, often called a 'lazy learning' technique, since the algorithm simply memorizes the training dataset and compares new data with it.
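The idea of a kernel-weighted vote can be sketched in plain Python. The sketch loosely follows the approach of the R package kknn, which standardizes neighbor distances by the distance to the (k+1)-th neighbor before applying the kernel, but it is an illustration, not the package's code. It assumes Euclidean distance, a triangular kernel, and features that have already been standardized.

```python
import math
from collections import defaultdict


def triangular(u):
    """Triangular kernel on a standardized distance u in [0, 1]."""
    return max(0.0, 1.0 - abs(u))


def kknn_predict(train_x, train_y, x, k=3, kernel=triangular):
    """Classify x by a kernel-weighted vote of its k nearest neighbors.

    Distances are divided by the distance to the (k+1)-th neighbor, so
    closer neighbors receive larger weights (requires len(train_x) > k).
    """
    if len(train_x) <= k:
        raise ValueError("need more than k training points")
    ranked = sorted((math.dist(xi, x), yi) for xi, yi in zip(train_x, train_y))
    d_ref = max(ranked[k][0], 1e-6)  # (k+1)-th distance, guarded against 0
    votes = defaultdict(float)
    for d, label in ranked[:k]:
        u = min(max(d / d_ref, 1e-6), 1 - 1e-6)  # keep weights strictly positive
        votes[label] += kernel(u)
    return max(votes, key=votes.get)
```

Because no model is fitted, all the work happens at prediction time, which is what makes the method 'lazy'.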

2.4.5. Training Datasets

The general strategy was to train the kknn algorithm on data from one year's time series and then test the obtained model on the other whole year of data, unknown to the algorithm. However, the field observations in 2022 allowed more precise determination of the transitional stages (especially flowering onset) for eight plants: Apl, Cmo, Msa, Pce, Rgl, Rpt, Rsp, and Svu, whereas for four plants (Aal, Aps, Dvi, Tco) those stages were identified more accurately in 2023. Thus, eight training sets came from 2022 and four from 2023. Each plant's training set was labeled using the three data treatments described above: A, B, and C. Date and DOY information was not used for algorithm training in any data treatment.

To tune kknn models, each of the previously prepared datasets was transformed using the following transformation categories:

- no transformations (nt)—none of the data were transformed, which means that all the data and all 16 features were used in the algorithm training,

- feature selection (fs)—individually calibrated modification in training sets to achieve the best set of features (color indices) for the flowering classification (Table 4),

Table 4. Feature selection results for all 12 plants (✓ stands for features that were most explanatory for the flowering classification and chosen for algorithm training).

- data filtering (df)—the training and test datasets were filtered to remove the 50% of each day's image RGB parameters whose green excess index standard deviation (gei.sd) was lower than its diel median. The gei.sd was chosen because this feature combines information from all three color (RGB) channels, and when an image is biased by unfavorable exposure conditions, e.g., shadowing, filtering out the lower diel gei.sd values usually improves the quality of the RGB dataset,

- both feature selection and data filtering (fs.df)—each plant dataset was transformed using both feature selection (fs) and data filtering (df).
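The data filtering (df) step can be sketched as a per-day median filter in Python. The record keys `doy` and `gei.sd` are hypothetical. Records equal to the daily median are kept here, so with an odd number of images per day slightly more than 50% remain; the exact tie handling in the study is not specified.

```python
from collections import defaultdict
from statistics import median


def filter_by_diel_gei_sd(records):
    """Keep, for each day, the records whose gei.sd is at or above that day's median.

    records: list of dicts with hypothetical keys "doy" and "gei.sd".
    """
    by_day = defaultdict(list)
    for rec in records:
        by_day[rec["doy"]].append(rec)

    kept = []
    for day_records in by_day.values():
        day_median = median(r["gei.sd"] for r in day_records)
        # drop the lower half of the day's images (less contrasted ROIs)
        kept.extend(r for r in day_records if r["gei.sd"] >= day_median)
    return kept
```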

As a result, 3 × 4 datasets (data treatments A, B, and C combined with transformations nt, fs, df, and fs.df) were prepared for each of the 12 woody plants analyzed in this study, yielding 144 training datasets for the subsequent models.

2.4.6. k-Value and Kernel Determination

For datasets A.nt, B.nt, and C.nt, the value of k (the number of 'neighbors', i.e., the closest points in the n-dimensional space, where n is the number of features, here 16) and the kernel (the method used to weight the 'closest' neighbors; 10 options are available, see [31]) were established using the subsampling method described earlier (Section 2.4.2). The k-value tuning range was set to 3–15, and only odd values were used to avoid ties in the classification results. The range limits followed standard practice: k = 1 is excluded from tuning to prevent a single closest neighbor from deciding the predicted class, and k = 15 is slightly higher than the square root of the total number of diel images. The kernel and k-value were tuned pairwise (7 k-values and 10 kernel methods) for each plant dataset using the mlr3verse package [33]. The tuning terminator was set to stop after 30 iterations without progress in recall (progress threshold = 0). The kernel and k-value were selected on the basis of recall since, with abundant data, the ability to predict the flowering class was more important than minimizing false positives. The kernel and k-value determined for A.nt, B.nt, and C.nt were then used in the models for every transformation type within the given data treatment (Figure 4).
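The tuning setup can be sketched as a grid search with early stopping. The kernel names below are the ten kernels offered by the R package kknn; `evaluate` is a hypothetical callable that would return the mean subsampled recall for one (k, kernel) pair. The stopping rule mirrors the stagnation criterion described above (30 evaluations without any recall improvement), but the deterministic evaluation order is an assumption and the actual mlr3 tuner may visit configurations differently.

```python
from itertools import product

KERNELS = ["rectangular", "triangular", "epanechnikov", "biweight", "triweight",
           "cos", "inv", "gaussian", "rank", "optimal"]
K_VALUES = list(range(3, 16, 2))  # odd k only: 3, 5, ..., 15


def tune_by_recall(evaluate, patience=30):
    """Grid search over (k, kernel), maximizing recall.

    Stops after `patience` consecutive evaluations without improvement
    (improvement threshold = 0). evaluate(k, kernel) -> recall (hypothetical).
    """
    best, best_recall, stale = None, float("-inf"), 0
    for k, kernel in product(K_VALUES, KERNELS):
        rec = evaluate(k, kernel)
        if rec > best_recall:
            best, best_recall, stale = (k, kernel), rec, 0
        else:
            stale += 1
            if stale >= patience:
                break
    return best, best_recall
```

The full grid has 7 × 10 = 70 configurations, so the stagnation rule can end the search before all pairs are evaluated.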

Figure 4.

Machine learning process step by step. Arrows describe transitions to the next stage of data analysis, and colors indicate individual stages of the machine learning process (training—yellow, model development—red, testing—purple).

2.4.7. Test Datasets

After the models were fitted to the training data by determining the best k-value and kernel, they were tested on test datasets, which were used neither in the validation process nor in model fitting. For Apl, Cmo, Msa, Pce, Rgl, Rpt, Rsp, and Svu, the test camera observations came from 2023, and for Aal, Aps, Dvi, and Tco, from 2022, which enabled assessment of how efficiently the developed models identified the flowering stage.

The models' predictions for the RGB indices of every image were then converted into diel classifications, since plant flowering stages are usually recorded at diel resolution [2]. The following equation, which applies a 50% threshold to the share of positively labeled images, was used to designate each DOY of the flowering stage (7):

where:

$CL_{DOY}$—flowering stage class (1—positive, flowering or 0—negative, non-flowering),

P—number of diel positive predictions,

N—number of diel negative predictions.
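The diel aggregation can be sketched in Python as follows. Whether a day with exactly 50% positive predictions counts as flowering is not stated here; this sketch assumes it does (P / (P + N) ≥ 0.5), and the function name is hypothetical.

```python
from collections import defaultdict


def diel_classes(predictions, threshold=0.5):
    """Aggregate per-image predictions into one class per day of year.

    predictions: iterable of (doy, label), label 1 = flowering, 0 = not.
    Returns {doy: CL_DOY}; assumes a day is flowering when P / (P + N) >= threshold.
    """
    counts = defaultdict(lambda: [0, 0])  # doy -> [P, N]
    for doy, label in predictions:
        counts[doy][0 if label == 1 else 1] += 1
    return {doy: int(p / (p + n) >= threshold) for doy, (p, n) in sorted(counts.items())}
```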

The following criteria were used to determine each plant’s best model performance:

- onset day shift (ODS):

$$\mathrm{ODS} = OD_{pred} - OD_{obs}$$

where:

$OD_{pred}$—predicted onset day [DOY],

$OD_{obs}$—observed onset day [DOY];

- the flowering days share (FDS):

$$\mathrm{FDS} = \frac{FD_{pred}}{FD_{obs}} \cdot 100\%$$

where:

$FD_{pred}$—number of days predicted as flowering within the given period,

$FD_{obs}$—total number of days in one of the three observed periods: BBCH 61–67, extremes of the flowering phase, and non-flowering stages (Figure 5).

Figure 5.

Three periods for flowering days share assessment.
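Both criteria can be computed from the diel classes with a short Python sketch. Two assumptions are made that the text does not state: the predicted onset is the first DOY classified as flowering, and days without any retained images count as non-flowering. The function names are hypothetical.

```python
def onset_day_shift(pred_classes, observed_onset):
    """ODS = OD_pred - OD_obs, with OD_pred taken as the first DOY classified as flowering."""
    flowering_days = [doy for doy, cl in sorted(pred_classes.items()) if cl == 1]
    if not flowering_days:
        return None  # no predicted flowering: shift undefined
    return flowering_days[0] - observed_onset


def flowering_days_share(pred_classes, period_days):
    """FDS in %: share of days in an observed period that are predicted as flowering."""
    period_days = list(period_days)
    predicted = sum(pred_classes.get(doy, 0) for doy in period_days)
    return 100.0 * predicted / len(period_days)
```

For instance, if 13 of the 15 days of a BBCH 61–67 period are predicted as flowering, FDS = 13/15 × 100% ≈ 86.7%.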

3. Results

3.1. Field Observations

Flowering stage observations for the analyzed plants are presented in Appendix A (summarized in Table 5). The mean interval between field observations was 3 days in 2022 and 3.1 days in 2023; however, the periods of more frequent observation fell in different months in the two years.

Table 5.

Dates of flowering stages BBCH61 and BBCH67 and the flowering duration for each plant in both years of observations.

Comparable thermal conditions in both years of field observations resulted in a very similar schedule of flowering onset for the observed plants. The earliest to bloom (April) were species that flower before leaf unfolding: Pce, Msa, and Apl. In May, flowering of Rpt, Svu, Rsp, Cmo, Aps, and Rgl started. The late-flowering plants in the camera frame were Dvi, Aal, and Tco.

Generally, the blooming period of the ornamental plants (Msa, Svu, Rpt, Rsp, Rgl) was longer than that of the other plants, except for Apl, whose flowering was similarly prolonged. The largest difference in blooming duration between the 2 years was observed in Msa, which had a noticeably longer flowering period in 2022 than in 2023 due to frost damage in the latter year. Additionally, secondary blooming of roses (Rgl, Rpt, and Rsp) was observed in autumn 2023. However, these blooming events visually resembled stages no more advanced than the extremes (BBCH 61 or BBCH 68–69) of the primary flowering earlier in the season.

Plants also differed in anthesis synchrony. Numerous Cmo flowers started to open at a similar time across the whole crown; thus, distinguishing phase BBCH 61 was hindered even with frequent observations. In contrast, Rgl's flowering pattern included a slow transition from the start to the peak of flowering (which occurred 2 weeks after blooming started) and a more rapid change from the peak to the end of flowering.

3.2. Classification Efficiency

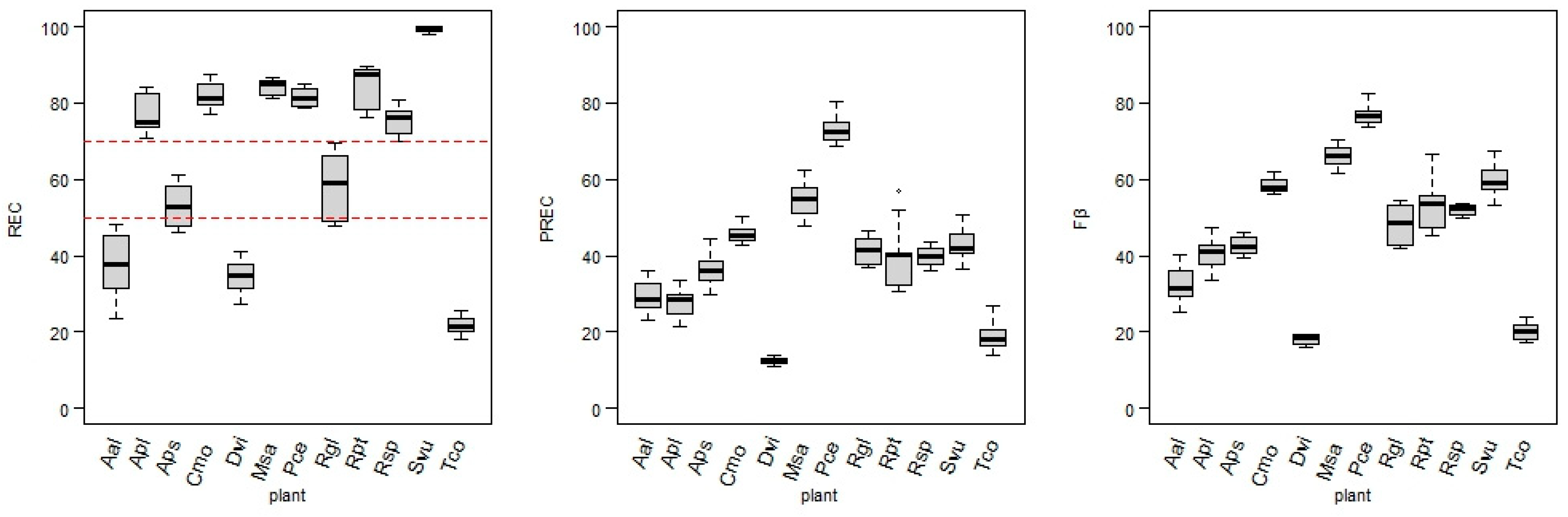

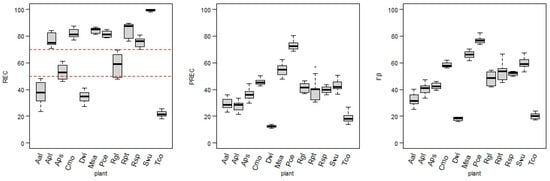

Target designation and data transformations yielded 144 versions of training datasets, which were the inputs for the kknn models. As described above, three measures were applied to assess the performance of each model: REC, PREC, and Fβ. REC was assumed to be the most adequate measure of model performance when the positive target class is considerably less numerous than the non-flowering class; thus, model fitting aimed at the highest REC value. However, Figure 6 also shows the precision and Fβ score values, as they are relevant to the assessment of model performance.

Figure 6.

Values of recall (REC) (red dashed lines dividing models into three groups), precision (PREC), and Fβ score (Fβ) obtained for the models of each plant.

There are significant differences between the REC values of each plant model. The results can be divided into three groups. The highest REC group (REC ≥ 70%) consists of the following plants: Svu (97.9–100%), Rpt (76.3–89.6%), Cmo (76.9–87.4%), Msa (81.3–86.8%), Pce (78.6–84.9%), Apl (70.8–84.1%), and Rsp (69.9–80.8%). In the second group, with medium recall values (REC 45–70%), the following plant models were ranked: Rgl (47.6–69.7%) and Aps (46.1–60.8%). The third group, where none of the models exceed REC = 50%, contains models for plants: Aal (23.7–48.3%), Dvi (27.1–41.1%), and Tco (18.2–25.6%).

The PREC values were considerably lower than the REC values in the majority of models. Although PREC was not the main focus when fitting the hyperparameters, values over 50% were reached by all Pce models (68.5–80.2%) and by some models of Msa, Rpt, Svu, and Cmo. The lowest precision values characterize the models for Aal, Apl, Tco, and Dvi; the precision of the Dvi models did not exceed 14% in any case.

The Fβ score, which combines precision and recall, took a wide range of values across the models: highest for Pce, Msa, Svu, Rpt, and Cmo (45.4–82.4%), medium for Rgl, Rsp, Apl, Aps, and Aal (25.0–54.6%), and again lowest for Dvi and Tco (below 25%).

3.3. Diel Classifications

While the REC and PREC values give a general idea of model performance, only classifications summarized by day show how accurately the models predicted the exact days of the flowering stage for the observed plants. The best-fitted models were selected based on the diel classification results (Supplementary Materials), and the following description refers only to them (Figure 7 and Table 6).
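Summarizing classifications by day means turning many image-level predictions into one class per DOY. A minimal sketch, assuming that a day is labeled as flowering when the share of its images classified as flowering reaches a threshold (the CLDOY threshold discussed in Section 4.1.4; the default of 50% used here is an assumption), could look like this. The image predictions are hypothetical.

```python
from collections import defaultdict


def daily_classes(predictions, threshold=0.5):
    """Aggregate image-level predictions into one class per day of year (DOY).

    predictions: iterable of (doy, is_flowering) pairs, one per image.
    threshold: minimum share of flowering images for a day to count as
        flowering (0.5 is an assumed default, not a value from the paper).
    Returns {doy: (share_of_flowering_images, day_is_flowering)}.
    """
    counts = defaultdict(lambda: [0, 0])  # doy -> [flowering images, all images]
    for doy, is_flowering in predictions:
        counts[doy][0] += int(is_flowering)
        counts[doy][1] += 1
    result = {}
    for doy in sorted(counts):
        positive, total = counts[doy]
        share = positive / total
        result[doy] = (share, share >= threshold)
    return result


# Hypothetical predictions for five images per day over three days.
preds = ([(120, f) for f in (0, 0, 1, 0, 0)]
         + [(121, f) for f in (0, 1, 0, 0, 1)]
         + [(122, f) for f in (1, 1, 1, 1, 0)])
for doy, (share, flag) in daily_classes(preds).items():
    print(doy, f"{share:.0%}", "flowering" if flag else "non-flowering")
```

With the default threshold, DOY 121 (40% of images classified as flowering) stays non-flowering; calling `daily_classes(preds, threshold=0.4)` turns it into a flowering day, which is the kind of threshold adjustment described for the Aal model in the Discussion.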

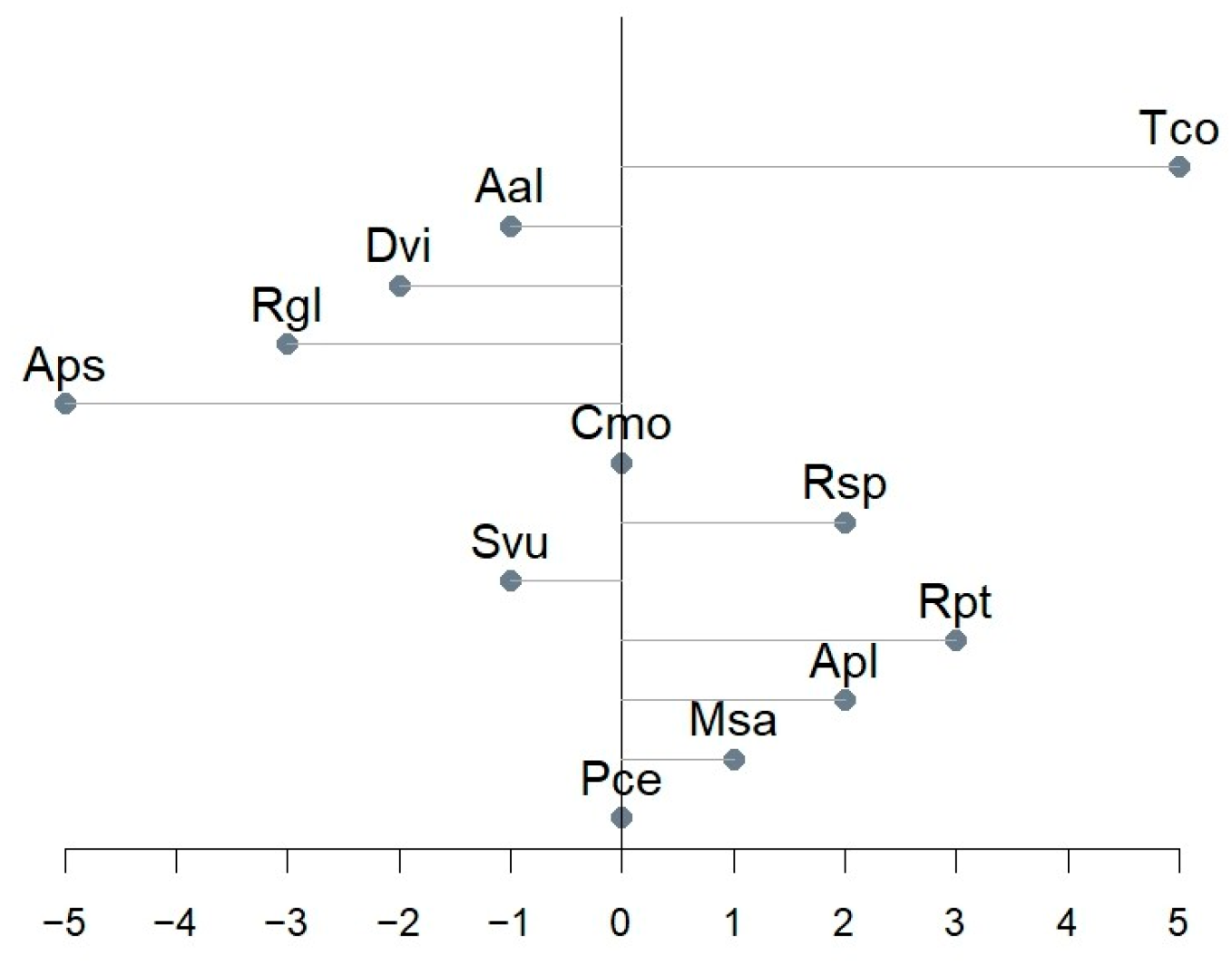

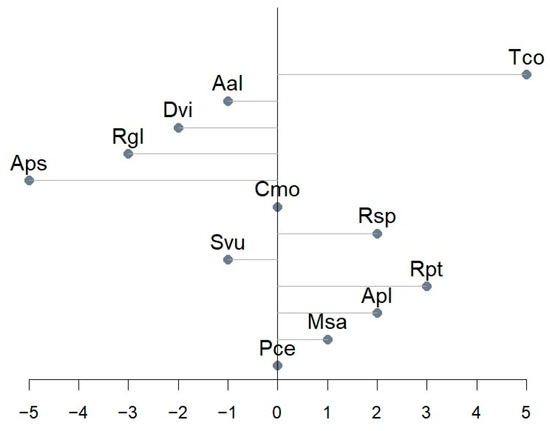

Figure 7.

Onset day shift between predicted and designated BBCH 61.

Table 6.

Diel classification results for the selected model of each plant.

The onset of a phase is the most commonly recorded phenological observation [34]. Figure 7 presents the predicted beginnings of flowering relative to the observed ones. The Cmo and Pce models showed no onset shift, while the Aps and Tco models showed the largest shift (5 days in absolute value).
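The onset day shift compares the first day a model classifies as flowering (ODpred) with the observed BBCH 61 date (ODobs). The sketch below assumes ODS = ODpred − ODobs (the sign convention is our assumption; the text reports absolute values) and adds a hypothetical search window, because a single spurious positive day far from the flowering season would otherwise be taken as the onset. The DOYs are hypothetical.

```python
def onset_day(daily_flags, window=None):
    """Return the first DOY classified as flowering, or None if there is none.

    daily_flags: {doy: bool}, one entry per day (e.g. after daily aggregation).
    window: optional (first_doy, last_doy) range to search; a hypothetical
        guard against isolated false positives far from the flowering season.
    """
    days = sorted(doy for doy, flag in daily_flags.items() if flag)
    if window is not None:
        days = [doy for doy in days if window[0] <= doy <= window[1]]
    return days[0] if days else None


def onset_day_shift(od_pred, od_obs):
    """ODS = ODpred - ODobs in days (sign convention assumed)."""
    return od_pred - od_obs


# Hypothetical daily classes: a spurious positive on DOY 60, flowering from DOY 131.
flags = {60: True, 127: False, 128: False, 131: True, 132: True, 140: False}
od_obs = 129  # hypothetical observed BBCH 61
od_pred = onset_day(flags, window=(100, 200))
print(od_pred, onset_day_shift(od_pred, od_obs))  # 131 2
print(onset_day(flags))  # 60: without a window, the spurious day wins
```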

Unlike field observations, the models make it possible to determine the duration of the flowering phase. Daily flowering predictions for Svu were the most accurate, with all observed flowering days (FDS = 100%) assigned to the positive class (Table 6). The Apl, Aps, Cmo, Msa, Pce, Rgl, and Rsp models reached an FDS between 86.7% and 100% (1–3 days misclassified). The plants with the poorest diel performance were Aal, Dvi, and Tco, for which the models predicted less than 50% of the flowering days.
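Following the Abbreviations list, FDS relates the number of days predicted as flowering (FDpred) to the total number of days in the evaluated period (FDobs). A minimal sketch, assuming FDS = FDpred / FDobs × 100 and counting only predicted days that fall inside the period, is shown below. The same function applies to the observed flowering period, the flowering extremes, or the non-flowering period. The DOYs are hypothetical, but the counts mirror the Aal example in Section 4.1.4 (5/12 and 9/12 days).

```python
def flowering_days_share(predicted_flowering_doys, period_doys):
    """Return FDS = FDpred / FDobs * 100 for one evaluation period.

    predicted_flowering_doys: DOYs the model labels as flowering.
    period_doys: all DOYs of the evaluated period (FDobs = their number).
    """
    period = set(period_doys)
    if not period:
        raise ValueError("the evaluated period contains no days")
    fd_pred = len(period & set(predicted_flowering_doys))
    return 100 * fd_pred / len(period)


# Hypothetical 12-day observed flowering period (DOY 160-171).
observed = range(160, 172)
print(round(flowering_days_share([163, 164, 165, 166, 167], observed), 1))  # 41.7
print(round(flowering_days_share(range(162, 171), observed), 1))            # 75.0
```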

Additionally, the models for Aal, Cmo, Pce, Rpt, Rsp, Svu, and Tco did not classify any non-flowering days as flowering (FDS = 0%). The Apl, Aps, Msa, and Rgl models assigned 2 or 3 days from this period to the flowering stage (FDS 0.7–1%), but the exact DOYs differed between plants. The Msa and Rgl models incorrectly classified images with snow cover as flowering (DOY 331 and 336–337 for Msa, and DOY 330–332 for Rgl), while the Apl model assigned days from early leaf discoloration (DOY 258 and 298) to the flowering class. The Aps misclassifications (DOY 131–133) were related to stage BBCH 5 (inflorescence emergence). The Dvi model misclassified 18 days of the non-flowering period (FDS 5.4%), some of them as late as 2 months after the designated flowering stage.

The periods between the last observation before flowering (including BBCH 60) and BBCH 61, and between BBCH 67 and the first observation after the last signs of flowering (including those of phases BBCH 68 and 69, Figure 5), served as additional criteria in the assessment of model performance (Table 6, flowering extremes). The Cmo, Msa, Rsp, and Tco models made no mistakes in classifying DOYs in these periods (FDS = 0%), and the Aal, Dvi, and Rpt models assigned one day from the extremes to the flowering class (FDS 5–11.1%, depending on the period length). The other models assigned the flowering class 3 times (Pce, Rgl; FDS = 10.7–25%), 5 times (Aps, Svu; FDS = 35.7%), or 7 times (Apl; FDS = 58.3%). The FDS of the flowering extremes gives a more detailed picture of model performance, as positive classifications within the phase extremes can be treated as correct, in contrast with, e.g., positive classifications of DOYs indicating fruit ripening or dormancy. For Svu and Apl, these positive classifications reflect the extremes of BBCH 6, whereas in the Aps model they are a continuation of stage BBCH 5 being classified as flowering.

4. Discussion

4.1. Models’ Performance

In the presented study, we used various data and model-tuning approaches to obtain predictions of flowering in 12 deciduous woody plants that most closely reflected conventional field observations.

4.1.1. Data Treatment

When no additional tuning was applied, none of the target designation methods (A, B, or C) yielded consistently higher REC values. According to REC, data treatment A was the most suitable for Aal and Tco and the least suitable for Apl, Aps, Dvi, Rgl, and Rpt (Table A3). Removing data from the start and end of flowering (data treatment B) was helpful for Cmo, Msa, Rgl, Rpt, Rsp, and Svu, but resulted in the lowest REC for Pce and Tco. The semi-supervised data treatment (C) resulted in the highest REC for Apl, Aps, Dvi, and Pce (for Aps, Dvi, and Pce, C.nt gave the best performance of all models), while it yielded the lowest REC for Aal, Cmo, Msa, Rsp, and Svu.

4.1.2. Model Tuning

Feature selection (fs) applied to each data treatment did not substantially influence the performance of most models. However, the Apl and Cmo models showed a slight increase in REC, while for the Aal, Dvi, Msa, and Svu models, feature selection reduced REC for all three data treatments. Data filtering (df) generally had a stronger impact on model performance than feature selection. Filtering improved REC for all treatments in Aal, Apl, Msa, Rgl, Svu, and Tco. In the case of Rgl, df raised REC by 14.5–17.0 percentage points (Table A3). For the Cmo and Rsp models, REC values dropped every time after gei.sd filtering.

The simultaneous application of feature selection and data filtering (fs.df) either enhanced or reduced the positive effect of a single tuning step (df or fs) in most cases. This combination increased REC for all three data treatments of Apl and Rgl and decreased REC in the Rsp models.

4.1.3. Joint Effect of Data Treatment and Model Tuning

The joint analysis of data treatment and model tuning indicates that treatment A was sufficient to reach the highest REC of all treatments in only two cases: Aal and Tco. However, both plants are characterized by low REC values in every model applied. Treatment B combined with model tuning gave the highest REC values for five plants: Cmo, Msa, Rgl, Rsp, and Svu. The semi-supervised treatment (C), with or without tuning, gave the highest REC in six plant models: Apl, Aps, Dvi, Pce, Rpt, and Svu. The Svu models B.df, B.fs.df, and C.fs.df reached REC = 100%.
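The comparison above amounts to finding, for each plant, the model variant with the highest REC in Table A3. The sketch below enumerates the 12 variants per plant (3 target designation methods × 4 tuning options, which with 12 plants gives the 144 training-set versions) and keeps ties, as in the Svu case. REC values for four plants are copied from Table A3; the helper names are hypothetical.

```python
from itertools import product

# 3 target designation methods x 4 tuning options = 12 model variants per plant.
TREATMENTS = ("A", "B", "C")
TUNINGS = ("nt", "fs", "df", "fs.df")
MODEL_NAMES = [f"{t}.{m}" for t, m in product(TREATMENTS, TUNINGS)]

# REC values copied from Table A3 for four plants, in MODEL_NAMES order.
TABLE_A3 = {
    "Aal": [0.388889, 0.368056, 0.483301, 0.449902, 0.343254, 0.323413,
            0.475442, 0.455796, 0.261905, 0.237103, 0.385069, 0.308448],
    "Apl": [0.707904, 0.726804, 0.742373, 0.820339, 0.728522, 0.80756,
            0.745763, 0.827119, 0.745704, 0.828179, 0.752542, 0.840678],
    "Svu": [0.988458, 0.987408, 0.997925, 0.995851, 0.990556, 0.987408,
            1.0, 1.0, 0.982162, 0.979014, 0.997925, 1.0],
    "Tco": [0.223077, 0.256044, 0.241304, 0.252174, 0.182418, 0.196703,
            0.21087, 0.217391, 0.208791, 0.194505, 0.232609, 0.215217],
}


def best_models(rec_values, tol=1e-9):
    """Return every model variant whose REC equals the plant's maximum (ties kept)."""
    best = max(rec_values)
    return [name for name, rec in zip(MODEL_NAMES, rec_values) if best - rec <= tol]


for plant, recs in TABLE_A3.items():
    print(plant, best_models(recs))
# Aal ['A.df']
# Apl ['C.fs.df']
# Svu ['B.df', 'B.fs.df', 'C.fs.df']
# Tco ['A.fs']
print(len(MODEL_NAMES) * 12)  # 144
```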

Given the differences in model performance related to the morphological characteristics of the analyzed plants, e.g., flower abundance, petal color, and inflorescence placement on the stems (Table 1), future applications of the method may require adapting the model separately to each plant.

4.1.4. Diel Classification Results

The effectiveness of the diel classifications, which can be compared with conventional observations, varies among the models. Given the usual frequency of field observations (once or twice a week), most models (Aal, Apl, Cmo, Dvi, Msa, Pce, Rgl, Rpt, Rsp, and Svu) showed onset shifts no larger than the error that this observation frequency can introduce.

The described methodology used two classes and was not designed to determine the peak of flowering, another phase commonly recorded in field phenological observations. However, the flowering duration is more informative than an additional single point in time, as it provides data useful for studying the food resources available to pollinators, a plant’s condition, or its plasticity in response to environmental factors [5,34]. In this study, the models for nine plants (Apl, Aps, Cmo, Msa, Pce, Rgl, Rpt, Rsp, and Svu) can predict the flowering duration from the actual beginning (0–3 day shift) to the end of flowering, which in some cases matched the observed BBCH 67 (Cmo, Msa, Rgl, Rpt, and Rsp models) and in others extended to late signs of flowering, i.e., phases BBCH 68–69 (Apl, Aps, Pce, and Svu models).

As climatic factors have changed rapidly in recent years, with a significant increase in air temperature, the main factor advancing plant development [5,35], multiple authors call for a deeper understanding of plant phenology at both the individual and population levels [5,34,36]. With the presented method, determining the flowering period (its onset, termination, and duration) is more feasible than with time-consuming field observations. In addition, these parameters reflect the plant response to climatic factors far more accurately than a single date marking the beginning of flowering, to which conventional phenology is often limited [34].

The Aal, Dvi, and Tco models performed worst in predicting flowering duration, capturing less than half (or even none) of the flowering DOYs. However, lowering the CLDOY threshold value to 40% improved the classification for the Aal model, raising FDS from 42% (5/12 days) to 75% (9/12 days). At the same time, the number of misclassified days rose by only one, and that day fell within the flowering extremes. Such threshold manipulation was not effective for the Dvi and Tco models because the percentage of correctly classified images was inconsistent even at the peak of flowering, and the rate of false positive predictions remained high long after the end of the flowering stage. Nevertheless, even when predictions are offset by a few days or miss some days, consistent, automatically obtained phenological information can still provide valuable data on long-term trends in flowering time, since the first increase in the number of images classified as flowering occurs during the observed flowering.

4.2. Limitations

4.2.1. Plant Characteristics and Location

Model performance appears to depend on the visual characteristics of the plant. The flowers or inflorescences of the ornamental plants (Cmo, Msa, Pce, Rosa sp., and Svu) are clearly visible owing to their distinctive petal color, size, and abundance; they contrast with the green foliage of the crown and cause a more pronounced change in the RGB parameters. In plants that flower before leaf unfolding (Apl, as well as Msa and Pce), the flowers contrast with the bare branches of the crown. For these plants, the models enable effective flowering predictions and may allow ground observations to be reduced or eliminated in the future, since the accuracy of automatic observations is comparable with the observer’s reports. The Aal, Dvi, and Tco models were much less effective in predicting flowering. These three plants have either flowers hidden among the leaves (Dvi) or small, greenish flowers (Aal, Tco) that provide no contrast with the crown color of the phases before and after flowering. Hence, more subtle flowering limits the effectiveness of this method.

The distance of a plant from the camera may affect the predictions only to a limited extent. The Cmo, Msa, Pce, Rpt, Rsp, and Svu plants, whose models gave good predictions, grow relatively close to the phenocamera (20–50 m). Yet, the Apl models gave good predictions even though this plant grows over 100 m from the camera and is partially obscured by vegetation closer to the camera. More distant plants are also usually partially covered by neighboring plants. Nevertheless, the plant fragment visible in the images was sufficient to predict the flowering of Apl, Cmo, and Pce.

4.2.2. Observer’s Bias

Supervised machine learning relies on observer-labeled data, from which algorithms “learn” to identify patterns that are difficult to distinguish using simple statistical analyses. Therefore, successful flowering classification in this study depended on field observations and on the observer’s correct identification of plant phenophases. However, field observations are known to be prone to bias depending on the observer’s experience or even preferences. The assessment of a growth stage is subjective and may differ between observers, most often for the extreme stages [25]. Some studies address this issue by having several observers assess the plant growth stages [37].

Field observation frequency is not strictly specified [2,25], and it varies between studies (e.g., compare [38] and [8]). In this study, observations carried out at 2–4-day intervals were sufficient to determine the dates of the beginning and end of flowering of individual plants. Less frequent observations could reduce the quality of the field phenological data, especially if an observation gap coincided with the opening of the first flowers (phases BBCH 60 and BBCH 61). This happened in the first year of observations (2022) for Aps, Dvi, Tco, and Aal. Therefore, the ground-based data collected in the following year (2023) were used to define more precise labels in the training sets for these four plants.

Field observations are also limited by the observer’s position relative to the plant. In studies of tall trees, where both distance and small flower size can bias the observations (Apl, Aps, and Tco in this paper), even binoculars may be insufficient for detecting the first signs of flowering (BBCH 60 and BBCH 61).

Traditional phenological observations are discrete in time, whereas plant phenological events are continuous processes, and the transition from one phase to another can occur within hours rather than days. Consequently, ground observations, even daily ones, may not capture the actual date of a phase. This concerns mainly the phase onset and the secondary growth stages (percentage of open flowers). In this study, Cmo and Rsp showed a rapid transition between successive flowering phases, with development from 10% of open flowers (BBCH 61) to full flowering (BBCH 65) taking less than 2 days. Estimating the share of open flowers is itself a difficult task for the observer. Therefore, field observations are often limited to the first signs of flowering [2,39].

Despite these limitations, conventional field phenological observations are important in phenology studies and provide ground truth data for any remote sensing method. Their robustness can be enhanced by handheld photography, which allows comparisons between secondary phases, and by binoculars, which help to spot flowers in hard-to-reach parts of the plants. Thanks to their continuity and long time series (reaching back centuries and even millennia), historical field observations provide valuable information about changes in plant responses to abiotic factors over time [2,36], at a level that newer and more advanced methods have yet to reach.

4.3. Phenological Methods Comparison

Digital repeat photography originated in modern color-based ecosystem phenology, where improvements in CO2 flux studies were needed. This application required phenological data on the start, peak, and end of the vegetation season. Satellite data had begun to be used for the same purpose earlier. Apart from that goal, there have been multiple attempts to go beyond vegetation indices with both satellites and RGB cameras. These concern the automation of phenological observations, including flowering.

Dixon et al. [12] detected the flowering of Corymbia calophylla trees in satellite data using a random forest regression algorithm and ground truth data derived from UAV images taken with an RGB camera. A satellite pixel resolution of 6 × 6 m allowed flowering predictions for trees with abundant, clearly visible flowers contrasting with the crown greenness, the aim being to predict the spatial proportion of flowering.

The research by Dixon et al. builds on earlier attempts at flowering recognition in Corymbia calophylla. Campbell and Fearns [40] used ground images of the plant to detect white flowers by applying a parallelepiped classifier to image pixels. This approach required a minimum resolution of 10 pixels per flower to reliably distinguish flowers from the background (leaves, stems, branches).

In the studies by Nagai et al. [14], Mann et al. [16], Li et al. [41], and Taylor and Browning [15], digital repeat photography from phenocameras was used for flowering analysis; however, their methodologies vary considerably. In the first, in which the tropical forest canopy was observed and individual crowns were analyzed, the phenological classification was not automated.

In Mann et al. [16] and Li et al. [41], deep learning methods (CNN and YOLO, respectively) and phenocamera close-ups were used to detect the flowers of small Dryas spp. subshrubs and of Camellia oleifera, respectively. The former study allowed a precise definition of the start, peak, and end of flowering; in the latter, yield estimation was based on the detection of particular growth stages of the plant.

Taylor and Browning [15] presented the most broadly applicable machine learning method, using data from various agricultural image time series of the PhenoCam network [20]. VGG16, a deep learning model, was trained to classify dominant cover types, crop types, and phenological status, including flowering. The training images were labeled by visual inspection of the images themselves, not based on field observations. Thus, some phenological stages had to be merged into one, including the development of tassels, flowers, and seeds in the cereal crop type, which correspond to stages BBCH 5, BBCH 6, and BBCH 7 of the BBCH scale, respectively.

A combination of ground truth data and digital imagery was the subject of research by Nezval et al. [8] and Guo et al. [42]. In both studies, vegetation curves calculated from RGB channels, for close-ups of three tree species in a floodplain forest [8] and for a summer maize field [42], were compared with the dates of growth stages recorded by observers at the sites. The former study used daily aggregated green chromatic coordinate data to predict phenology, whereas the latter considered all the color indices (also aggregated) and the relations between pixels.

The research mentioned above illustrates the many possibilities of using and combining various phenological tools. Owing to their resolution, satellites are more suitable for studying vegetation canopies than individual plants [12]. Satellite data can cover large areas, but at low resolution, and they suffer from noise caused by clouds and/or aerosols. RGB cameras, both fixed and movable, can provide information about the canopy [14,15] or about individual plants [8,14,16,39,40,41]. However, their cost, in terms of both funding and labor, can increase considerably when the research requires multiple cameras because of the zoomed view [16], or drones to carry them [12]. The data processing approach is also important, as techniques requiring large computing power [12,16,41] can reduce the practical usefulness of a method.

Considering all the research above, the method described here presents a novel approach to digital repeat photography analysis. It uses inputs from every single image of a whole-year time series while extracting the ROI-averaged data of the RGB channels. This is a characteristic simplification of the standard approach in digital repeat photography. The need to standardize the phenocamera technique has been signaled [3], and there are attempts to reduce the effect of illumination conditions on image time series [43] so that vegetation curves reflect canopy greenness more accurately. However, these undesired interferences do not prevent flowering detection, whereas smoothing noisy vegetation indices would blur the flowering information in the data. Applying a weighted k-nearest neighbors binary classification algorithm to simplified but unsmoothed color indices is computationally efficient compared with deep learning and some other machine learning techniques, allowing quick and easy phenological analysis.
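To illustrate why a weighted kNN classifier is computationally light, the sketch below implements one common formulation of kernel-weighted kNN voting in plain Python: features are standardized, the k + 1 nearest training images are found, distances to the k nearest are scaled by the (k + 1)-th distance and turned into weights with a triangular kernel, and the class with the largest summed weight wins. The kernel, k, and the toy feature values are assumptions for illustration; this is not the configuration or implementation fitted in the study.

```python
import math
from collections import defaultdict


def _standardize(train, query):
    """Scale every feature by the training mean and sample standard deviation."""
    n = len(train[0])
    means = [sum(row[j] for row in train) / len(train) for j in range(n)]
    sds = []
    for j in range(n):
        var = sum((row[j] - means[j]) ** 2 for row in train) / (len(train) - 1)
        sds.append(math.sqrt(var) or 1.0)  # guard against constant features

    def scale(row):
        return [(row[j] - means[j]) / sds[j] for j in range(n)]

    return [scale(row) for row in train], scale(query)


def weighted_knn_predict(train_x, train_y, query, k=3):
    """Classify one feature vector by triangular-kernel-weighted kNN voting."""
    if len(train_x) <= k:
        raise ValueError("need more than k training samples")
    x, q = _standardize(train_x, query)
    ranked = sorted((math.dist(row, q), label) for row, label in zip(x, train_y))
    d_scale = ranked[k][0] or 1e-12  # (k+1)-th distance scales the k nearest
    votes = defaultdict(float)
    for dist, label in ranked[:k]:
        votes[label] += max(0.0, 1.0 - dist / d_scale)  # triangular kernel weight
    return max(votes, key=votes.get)


# Hypothetical ROI features per image: [mean R, mean G, SD of G].
train_x = [
    [90, 120, 10], [92, 118, 11], [88, 122, 9],      # green crown, no flowers
    [140, 125, 30], [145, 128, 32], [138, 121, 28],  # crown with pale petals
]
train_y = ["non-flowering"] * 3 + ["flowering"] * 3
print(weighted_knn_predict(train_x, train_y, [142, 124, 29]))  # flowering
print(weighted_knn_predict(train_x, train_y, [91, 119, 10]))   # non-flowering
```

There is no training phase beyond storing the labeled images, so the cost lies in the distance computations at prediction time, which remain small for per-ROI feature vectors.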

4.4. Potential Applications

The presented combination of methods can easily be applied at sites where conventional observations are carried out, e.g., botanical gardens and arboreta, where many ornamental woody plants usually grow close to each other. Sites with a relatively high density of plants that fit in the camera frame should allow cost-effective comparisons, in one location, of plants from various habitats and with different sensitivities to changes in crucial climate factors [4,38,44]. Orchards and nurseries are other types of sites that could benefit from the presented method. It could allow comparisons between varieties and could further be used to study their adaptation and resilience to climatic factors. The method uses RGB parameters together with data representing the differences between image pixels during flowering, which arise from the contrast between the flowers and the background (leaves, branches) and are expressed by the standard deviation of the RGB features. Hence, the method is worth testing in other ecosystems, such as crops, grasslands, and shrub communities, especially those dominated by one species.
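The combination of averaged RGB parameters and their per-pixel standard deviation can be sketched as a function that summarizes one ROI. The index formulas below (bri = R + G + B, ri/gi/bi = channel / bri, gei = 2G − R − B) are common definitions assumed for illustration, and the lower-case naming only mirrors labels such as gei.sd in the text; the exact feature set is defined in the Methods. The pixel values are hypothetical.

```python
from statistics import mean, stdev

CHANNELS = ("r", "g", "b", "bri", "ri", "gi", "bi", "gei")


def roi_features(pixels):
    """Summarize one ROI, given as (R, G, B) digital numbers, into mean/sd features.

    Index formulas are illustrative assumptions:
    bri = R + G + B, ri/gi/bi = channel / bri, gei = 2G - R - B.
    """
    per_pixel = {name: [] for name in CHANNELS}
    for r, g, b in pixels:
        bri = r + g + b
        values = (r, g, b, bri,
                  r / bri if bri else 0.0,
                  g / bri if bri else 0.0,
                  b / bri if bri else 0.0,
                  2 * g - r - b)
        for name, value in zip(CHANNELS, values):
            per_pixel[name].append(value)
    features = {}
    for name, values in per_pixel.items():
        features[f"{name}.mean"] = mean(values)
        # Per-pixel spread captures the contrast between petals and background.
        features[f"{name}.sd"] = stdev(values) if len(values) > 1 else 0.0
    return features


# Hypothetical 4-pixel ROIs: an all-green crown and the same crown with white petals.
green = [(60, 110, 50), (62, 108, 52), (58, 112, 48), (61, 109, 51)]
flowering = [(60, 110, 50), (230, 225, 220), (62, 108, 52), (228, 230, 218)]
for label, roi in (("green", green), ("flowering", flowering)):
    f = roi_features(roi)
    print(label, round(f["gei.mean"], 1), round(f["gei.sd"], 1))
```

In this toy case, white petals lower the mean green excess only moderately, but they make its per-pixel standard deviation several times larger, which is the kind of signal the standard deviation features are meant to capture.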

Digital repeat photography makes it possible to treat plant development as a continuous process by recording changes in the visual characteristics of plants at high frequency, which is particularly desirable under dynamically changing environmental conditions.

Applying the presented method to previously collected image time series, calibrated with field-reported data, creates an opportunity to extend long-term flowering studies of the plants within the camera frame. Moreover, digital repeat photography can provide a backup dataset in case the observer is unavailable at the research site, and its value extends beyond simple growth stage determination. Applying this method could reduce the number of research visits and thus human labor costs, allowing more plant species to be studied.

5. Conclusions

This paper presents a novel approach to both species-specific phenological research and digital repeat photography. Applying the weighted k-nearest neighbors algorithm with proper target calibration and model tuning resulted in an innovative method for determining the flowering period without ground observations or excessive computing power. The method is suitable mainly for ornamental plants and any other plants whose flowers are distinguishable by color from the background (leaves or branches). For those plants, it provides information on flowering onset and phase duration comparable with that obtained in field observations. These properties not only facilitate conventional ground-based phenological research but also enrich it substantially. The methodology can easily be applied by institutions that already conduct phenological observations, as they often record the dates of plant growth stages needed for target calibration.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/s25072106/s1, Table S1: Details of diel results for 144 kknn models.

Author Contributions

Conceptualization, B.H.C. and M.A.R.; methodology, B.H.C. and M.A.R.; software, M.A.R.; validation, M.A.R.; formal analysis, B.H.C., K.M.H. and M.A.R.; investigation, B.H.C. and M.A.R.; resources, B.H.C., D.J. and M.A.R.; data curation, D.J. and M.A.R.; writing—original draft preparation, M.A.R.; writing—review and editing, B.H.C., D.J., K.M.H., M.A.R. and T.W.; visualization, M.A.R.; supervision, B.H.C. and T.W.; project administration, B.H.C.; funding acquisition, B.H.C. All authors have read and agreed to the published version of the manuscript.

Funding

The research was funded by the National Science Centre Poland within OPUS project Contract No. UMO-2017/27/B/ST10/02228.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

We thank the PULS Dendrological Garden, which provided meteorological data and access to the arboretum, as well as the Dean of the Faculty of Forestry and Wood Technology at Poznan University of Life Sciences for the purchase of the phenocamera.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| kknn | Kernel (weighted) k-nearest neighbors |
| ML | Machine learning |
| Ta | Air temperature |
| P | Precipitation |
| IMGW | Institute of Meteorology and Water Management |
| Aal | Ailanthus altissima |
| Apl | Acer platanoides |
| Aps | Acer pseudoplatanus |
| Cmo | Crataegus monogyna |
| Dvi | Diospyros virginiana |
| Msa | Magnolia salicifolia |
| Pce | Prunus cerasifera |
| Rgl | Rosa glauca |
| Rpt | Rosa pteragonis |
| Rsp | Rosa spinosissima |
| Svu | Syringa vulgaris |
| Tco | Tilia cordata |
| PULS | Poznań University of Life Sciences |
| UTC | Coordinated Universal Time |
| ROI | Region of interest |
| dn | Digital number |
| R | Red |
| G | Green |
| B | Blue |
| BRI | Brightness |
| RI | Red index |
| GI | Green index |
| BI | Blue index |
| GEI | Green excess index |
| REC | Recall |
| PREC | Precision |
| Fβ | F-beta score |
| TP | True positives |
| TN | True negatives |
| FP | False positives |
| FN | False negatives |
| svm | Support vector machine |
| Ranger | Random forest |
| Rpart | Classification tree |
| DOY | Day of year |
| nt | No transformation |
| fs | Feature selection |
| df | Data filtering |
| CLDOY | Flowering stage class |
| P | Number of diel positive predictions |
| N | Number of diel negative predictions |
| ODS | Onset day shift |
| ODpred | Predicted onset day |
| ODobs | Observed onset day |
| FDS | Flowering days share |
| FDpred | Predicted flowering days |
| FDobs | Total number of days in the period |

Appendix A

Field Flowering Observations

Table A1.

Field observations of flowering periods in the year 2022. Dark grey indicates the secondary flowering stages between BBCH 61 and BBCH 67, light grey indicates the extremes of the phase.

| Species Code → | Pce | Msa | Apl | Rpt | Svu | Rsp | Cmo | Aps | Rgl | Dvi | Aal | Tco | Month No. | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Date ↓ | Doy ↓ | |||||||||||||

| 15.03.2022 | 74 | 3 | ||||||||||||

| 22.03.2022 | 81 | |||||||||||||

| 25.03.2022 | 84 | |||||||||||||

| 28.03.2022 | 87 | |||||||||||||

| 1.04.2022 | 91 | 4 | ||||||||||||

| 5.04.2022 | 95 | 60 | ||||||||||||

| 7.04.2022 | 97 | 62 | 61 | |||||||||||

| 11.04.2022 | 101 | 64 | 62 | |||||||||||

| 14.04.2022 | 104 | 66 | 65 | |||||||||||

| 19.04.2022 | 109 | 68 | 66 | 61 | ||||||||||

| 21.04.2022 | 111 | 69 | 66 | 62 | ||||||||||

| 25.04.2022 | 115 | 67 | 65 | |||||||||||

| 28.04.2022 | 118 | 67 | 65 | |||||||||||

| 30.04.2022 | 120 | 68 | 65 | |||||||||||

| 2.05.2022 | 122 | 69 | 66 | 60 | 5 | |||||||||

| 4.05.2022 | 124 | 67 | 61 | |||||||||||

| 6.05.2022 | 126 | 68 | 63 | 60 | 59 | |||||||||

| 9.05.2022 | 129 | 69 | 65 | 62 | 61 | 60 | ||||||||

| 11.05.2022 | 131 | 65 | 64 | 63 | 64 | |||||||||

| 13.05.2022 | 133 | 66 | 65 | 65 | 65 | 60 | ||||||||

| 16.05.2022 | 136 | 66 | 65 | 66 | 65 | 61 | ||||||||

| 18.05.2022 | 138 | 67 | 65 | 66 | 66 | 62 | ||||||||

| 20.05.2022 | 140 | 68 | 66 | 67 | 67 | 65 | 60 | |||||||

| 24.05.2022 | 144 | 69 | 68 | 68 | 67 | 60 | ||||||||

| 26.05.2022 | 146 | 69 | 68 | 68 | 68 | 61 | ||||||||

| 27.05.2022 | 147 | 69 | 69 | 69 | - | 61 | ||||||||

| 30.05.2022 | 150 | 69 | 69 | 69 | 62 | |||||||||

| 3.06.2022 | 154 | 63 | 6 | |||||||||||

| 6.06.2022 | 157 | 65 | 59 | |||||||||||

| 13.06.2022 | 164 | 67 | 65 | |||||||||||

| 15.06.2022 | 166 | 68 | 66 | 59 | 59 | |||||||||

| 21.06.2022 | 172 | 67 | 63 | 60 | ||||||||||

| 27.06.2022 | 178 | 65 | 66 | |||||||||||

| 30.06.2022 | 181 | 67 | 67 | |||||||||||

| 4.07.2022 | 185 | 68 | 68 | 7 | ||||||||||

| 6.07.2022 | 187 | 69 | 69 | |||||||||||

| 8.07.2022 | 189 | |||||||||||||

| 12.07.2022 | 193 | |||||||||||||

| 15.07.2022 | 196 | |||||||||||||

| 18.07.2022 | 199 | |||||||||||||

| 22.07.2022 | 203 | |||||||||||||

| 29.07.2022 | 210 | |||||||||||||

| 1.08.2022 | 213 | 8 | ||||||||||||

| 3.08.2022 | 215 | |||||||||||||

| 8.08.2022 | 220 | |||||||||||||

| 12.08.2022 | 224 | |||||||||||||

| 19.08.2022 | 230 | |||||||||||||

| 24.08.2022 | 236 | |||||||||||||

| 2.09.2022 | 245 | 9 | ||||||||||||

| 9.09.2022 | 252 | |||||||||||||

| 20.09.2022 | 263 | |||||||||||||

| 7.10.2022 | 280 | 10 | ||||||||||||

Table A2.

Field observations of flowering periods in the year 2023. Dark grey indicates the secondary flowering stages between BBCH 61 and BBCH 67, light grey indicates the extremes of the phase.

| Species Code → | Pce | Msa | Apl | Rpt | Svu | Rsp | Cmo | Aps | Rgl | Dvi | Aal | Tco | Month No. | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Date ↓ | Doy ↓ | |||||||||||||

| 22.03.2023 | 81 | 3 | ||||||||||||

| 3.04.2023 | 93 | 61 | 59 | 4 | ||||||||||

| 13.04.2023 | 103 | 66 | 62 | |||||||||||

| 17.04.2023 | 107 | 67 | 65 | 59 | ||||||||||

| 20.04.2023 | 110 | 68 | 66 | 61 | ||||||||||

| 26.04.2023 | 116 | 69 | 68 | 65 | 59 | |||||||||

| 5.05.2023 | 125 | 67 | 62 | 60 | 5 | |||||||||

| 10.05.2023 | 130 | 69 | 64 | 62 | 60 | |||||||||

| 13.05.2023 | 133 | 65 | 59 | |||||||||||

| 16.05.2023 | 136 | 66 | 65 | 65 | 65 | 60 | ||||||||

| 19.05.2023 | 139 | 67 | 66 | 66 | 65 | 63 | ||||||||

| 22.05.2023 | 142 | 67 | 66 | 66 | 66 | 65 | ||||||||

| 25.05.2023 | 145 | 69 | 67 | 68 | 67 | 66 | 60 | |||||||

| 5.06.2023 | 156 | 65 | 6 | |||||||||||

| 7.06.2023 | 158 | 66 | ||||||||||||

| 9.06.2023 | 160 | 66 | 59 | |||||||||||

| 12.06.2023 | 163 | 67 | 62 | |||||||||||

| 14.06.2023 | 165 | 68 | 65 | |||||||||||

| 16.06.2023 | 167 | 69 | 66 | |||||||||||

| 19.06.2023 | 170 | 69 | 67 | |||||||||||

| 20.06.2023 | 171 | 69 | 67 | 60 | ||||||||||

| 21.06.2023 | 172 | 67 | 62 | 60 | ||||||||||

| 22.06.2023 | 173 | 63 | 60 | |||||||||||

| 23.06.2023 | 174 | 64 | 60 | |||||||||||

| 26.06.2023 | 177 | 65 | 62 | |||||||||||

| 28.06.2023 | 179 | 66 | 65 | |||||||||||

| 29.06.2023 | 180 | 66 | 65 | |||||||||||

| 30.06.2023 | 181 | 67 | 66 | |||||||||||

| 3.07.2023 | 184 | 67 | 66 | 7 | ||||||||||

| 4.07.2023 | 185 | 68 | 67 | |||||||||||

| 6.07.2023 | 187 | 68 | 68 | |||||||||||

| 10.07.2023 | 191 | 69 | 69 | |||||||||||

| 11.07.2023 | 192 | 69 | ||||||||||||

| 13.07.2023 | 194 | |||||||||||||

| 19.07.2023 | 200 | |||||||||||||

| 21.07.2023 | 202 | |||||||||||||

| 1.08.2023 | 213 | 8 | ||||||||||||

| 4.08.2023 | 216 | |||||||||||||

| 4.09.2023 | 247 | 9 | ||||||||||||

| 6.09.2023 | 249 | 6 | ||||||||||||

| 8.09.2023 | 251 | 6 | ||||||||||||

| 11.09.2023 | 254 | 6 | ||||||||||||

| 13.09.2023 | 256 | 6 | 6 | |||||||||||

| 15.09.2023 | 258 | |||||||||||||

| 18.09.2023 | 261 | 6 | 6 | 6 | ||||||||||

| 19.09.2023 | 262 | 6 | ||||||||||||

| 28.09.2023 | 271 | |||||||||||||
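The Doy column gives the day of the year for each observation date in the Date column. For example, 22.03.2023 is day 81 in the non-leap year 2023. The following is a minimal Python sketch of that conversion, assuming the d.mm.yyyy format used in the table. `day_of_year` is a hypothetical helper for illustration only; it is not part of the authors' R-based workflow.

```python
from datetime import datetime


def day_of_year(date_str: str) -> int:
    """Convert a 'd.mm.yyyy' date (as in the Date column) to day of year (1-366)."""
    # %d accepts both one- and two-digit days, e.g. "3.04.2023" and "22.03.2023"
    return datetime.strptime(date_str, "%d.%m.%Y").timetuple().tm_yday


for d in ["22.03.2023", "3.04.2023", "16.05.2023", "28.09.2023"]:
    print(d, day_of_year(d))
# 22.03.2023 81
# 3.04.2023 93
# 16.05.2023 136
# 28.09.2023 271
```

Because `tm_yday` follows the calendar, the same helper also handles leap years. In those years, dates after 28 February shift by one day.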

Appendix B

Table A3.

Recall values for every model of each plant.

| Species Code | A.nt | A.fs | A.df | A.fs.df | B.nt | B.fs | B.df | B.fs.df | C.nt | C.fs | C.df | C.fs.df |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Aal | 0.388889 | 0.368056 | 0.483301 | 0.449902 | 0.343254 | 0.323413 | 0.475442 | 0.455796 | 0.261905 | 0.237103 | 0.385069 | 0.308448 |
| Apl | 0.707904 | 0.726804 | 0.742373 | 0.820339 | 0.728522 | 0.80756 | 0.745763 | 0.827119 | 0.745704 | 0.828179 | 0.752542 | 0.840678 |
| Aps | 0.461447 | 0.46382 | 0.474057 | 0.478774 | 0.497034 | 0.510083 | 0.54717 | 0.551887 | 0.609727 | 0.577699 | 0.608491 | 0.589623 |
| Cmo | 0.81227 | 0.871166 | 0.769417 | 0.805825 | 0.834356 | 0.87362 | 0.786408 | 0.817961 | 0.811043 | 0.866258 | 0.769417 | 0.800971 |
| Dvi | 0.27952 | 0.271218 | 0.326007 | 0.309524 | 0.354244 | 0.318266 | 0.355311 | 0.346154 | 0.411439 | 0.363469 | 0.408425 | 0.391941 |
| Msa | 0.847575 | 0.840647 | 0.858447 | 0.849315 | 0.861432 | 0.852194 | 0.86758 | 0.853881 | 0.819861 | 0.817552 | 0.821918 | 0.812785 |
| Pce | 0.788235 | 0.790588 | 0.790698 | 0.804651 | 0.785882 | 0.790588 | 0.827907 | 0.846512 | 0.849412 | 0.832941 | 0.823256 | 0.837209 |
| Rgl | 0.479443 | 0.475958 | 0.627424 | 0.635734 | 0.55122 | 0.532404 | 0.696676 | 0.688366 | 0.490592 | 0.491986 | 0.660665 | 0.660665 |
| Rpt | 0.767557 | 0.762611 | 0.767123 | 0.798434 | 0.883284 | 0.892186 | 0.886497 | 0.880626 | 0.869436 | 0.896142 | 0.857143 | 0.886497 |
| Rsp | 0.775701 | 0.781041 | 0.707124 | 0.730871 | 0.801068 | 0.807744 | 0.741425 | 0.757256 | 0.769025 | 0.769025 | 0.699208 | 0.699208 |
| Svu | 0.988458 | 0.987408 | 0.997925 | 0.995851 | 0.990556 | 0.987408 | 1 | 1 | 0.982162 | 0.979014 | 0.997925 | 1 |
| Tco | 0.223077 | 0.256044 | 0.241304 | 0.252174 | 0.182418 | 0.196703 | 0.21087 | 0.217391 | 0.208791 | 0.194505 | 0.232609 | 0.215217 |
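Recall is the usual ratio TP / (TP + FN). It is the share of truly positive observations that a model labels as positive. The sketch below treats the flowering stage as the positive class, which is an assumption because the positive class is not restated here. It also shows how the table can be read row by row to find the model with the highest recall for a plant. The rows are copied from Table A3 for three plants. `recall`, `best_model`, `MODELS`, and `TABLE_A3` are hypothetical names, and picking by recall alone is only an illustration of reading the table. It is not the authors' model-selection procedure.

```python
# Model codes in the column order of Table A3
MODELS = ["A.nt", "A.fs", "A.df", "A.fs.df", "B.nt", "B.fs", "B.df", "B.fs.df",
          "C.nt", "C.fs", "C.df", "C.fs.df"]

# Subset of rows copied from Table A3 (plant code -> recall per model)
TABLE_A3 = {
    "Apl": [0.707904, 0.726804, 0.742373, 0.820339, 0.728522, 0.80756,
            0.745763, 0.827119, 0.745704, 0.828179, 0.752542, 0.840678],
    "Cmo": [0.81227, 0.871166, 0.769417, 0.805825, 0.834356, 0.87362,
            0.786408, 0.817961, 0.811043, 0.866258, 0.769417, 0.800971],
    "Tco": [0.223077, 0.256044, 0.241304, 0.252174, 0.182418, 0.196703,
            0.21087, 0.217391, 0.208791, 0.194505, 0.232609, 0.215217],
}


def recall(tp: int, fn: int) -> float:
    """Recall = TP / (TP + FN) for the positive (assumed: flowering) class."""
    return tp / (tp + fn)


def best_model(values):
    """Return (model code, recall) of the highest-recall model in one row."""
    # max() returns the first index if several models share the top recall
    i = max(range(len(values)), key=values.__getitem__)
    return MODELS[i], values[i]


print(recall(8, 2))  # 8 of 10 flowering days detected -> 0.8
for plant, values in TABLE_A3.items():
    print(plant, *best_model(values))
# Apl C.fs.df 0.840678
# Cmo B.fs 0.87362
# Tco A.fs 0.256044
```

Ties are common in this table. For example, Svu reaches a recall of 1 for three models. The first-maximum rule above is therefore an arbitrary choice.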

References

- Lieth, H. (Ed.) Phenology and Seasonality Modeling. In Ecological Studies; Springer: Berlin/Heidelberg, Germany, 1974; Volume 8.

- Koch, E.; Bruns, E.; Chmielewski, F.-M.; Defila, C.; Lipa, W.; Menzel, A. Guidelines for Plant Phenological Observations; World Meteorological Organization (WMO): Geneva, Switzerland, 2007.

- Tang, J.; Körner, C.; Muraoka, H.; Piao, S.; Shen, M.; Thackeray, S.J.; Yang, X. Emerging opportunities and challenges in phenology: A review. Ecosphere 2016, 7, e01436.

- Team, N.-P.; Templ, B.; Templ, M.; Filzmoser, P.; Lehoczky, A.; Bakšienè, E.; Fleck, S.; Gregow, H.; Hodzic, S.; Kalvane, G.; et al. Phenological patterns of flowering across biogeographical regions of Europe. Int. J. Biometeorol. 2017, 61, 1347–1358.

- Forrest, J.; Miller-Rushing, A.J. Toward a synthetic understanding of the role of phenology in ecology and evolution. Philos. Trans. R. Soc. Lond. B. Biol. Sci. 2010, 365, 3101–3112.

- Meier, U.; Bleiholder, H.; Buhr, L.; Feller, C.; Hack, H.; Heß, M.; Lancashire, P.D.; Schnock, U.; Stauß, R.; Van Den Boom, T.; et al. The BBCH system to coding the phenological growth stages of plants: History and publications. J. Kult. 2009, 61, 41–52.

- d’Andrimont, R.; Taymans, M.; Lemoine, G.; Ceglar, A.; Yordanov, M.; van der Velde, M. Detecting flowering phenology in oil seed rape parcels with Sentinel-1 and -2 time series. Remote Sens. Environ. 2020, 239, 111660.

- Nezval, O.; Krejza, J.; Světlík, J.; Šigut, L.; Horáček, P. Comparison of traditional ground-based observations and digital remote sensing of phenological transitions in a floodplain forest. Agric. For. Meteorol. 2020, 291, 108079.

- Zeng, L.; Wardlow, B.D.; Xiang, D.; Hu, S.; Li, D. A review of vegetation phenological metrics extraction using time-series, multispectral satellite data. Remote Sens. Environ. 2020, 237, 111511.

- Richardson, A.D. Tracking seasonal rhythms of plants in diverse ecosystems with digital camera imagery. New Phytol. 2019, 222, 1742–1750.

- Misra, G.; Cawkwell, F.; Wingler, A. Status of Phenological Research Using Sentinel-2 Data: A Review. Remote Sens. 2020, 12, 2760.

- Dixon, D.; Callow, J.; Duncan, J.; Setterfield, S.; Pauli, N. Satellite prediction of forest flowering phenology. Remote Sens. Environ. 2021, 255, 112197.

- Kosmala, M.; Crall, A.; Cheng, R.; Hufkens, K.; Henderson, S.; Richardson, A. Season Spotter: Using Citizen Science to Validate and Scale Plant Phenology from Near-Surface Remote Sensing. Remote Sens. 2016, 8, 726.

- Nagai, S.; Ichie, T.; Yoneyama, A.; Kobayashi, H.; Inoue, T.; Ishii, R.; Suzuki, R.; Itioka, T. Usability of time-lapse digital camera images to detect characteristics of tree phenology in a tropical rainforest. Ecol. Inform. 2016, 32, 91–106.

- Taylor, S.D.; Browning, D.M. Classification of Daily Crop Phenology in PhenoCams Using Deep Learning and Hidden Markov Models. Remote Sens. 2022, 14, 286.

- Mann, H.M.R.; Iosifidis, A.; Jepsen, J.U.; Welker, J.M.; Loonen, M.J.J.E.; Høye, T.T. Automatic flower detection and phenology monitoring using time-lapse cameras and deep learning. Remote Sens. Ecol. Conserv. 2022, 8, 765–777.

- Katal, N.; Rzanny, M.; Mäder, P.; Wäldchen, J. Deep Learning in Plant Phenological Research: A Systematic Literature Review. Front. Plant Sci. 2022, 13, 805738.

- Danielewicz, W.; Maliński, T. Drzewa i Krzewy Ogrodu Dendrologicznego Uniwersytetu Przyrodniczego w Poznaniu; Wydawnictwo Uniwersytetu Przyrodniczego: Poznań, Poland, 2011.

- IMGW-PIB. Instytut Meteorologii i Gospodarki Wodnej–Państwowy Instytut Badawczy. Dane Pomiarowo Obserwacyjne. Dane ze Stacji Synoptycznej Poznań Ławica (Nr Stacji: 352160330); IMGW-PIB: Warsaw, Poland, 2023.

- Richardson, A.D.; Hufkens, K.; Milliman, T.; Aubrecht, D.M.; Chen, M.; Gray, J.M.; Johnston, M.R.; Keenan, T.F.; Klosterman, S.T.; Kosmala, M.; et al. Tracking vegetation phenology across diverse North American biomes using PhenoCam imagery. Sci. Data 2018, 5, 180028.

- Mizunuma, T.; Wilkinson, M.; Eaton, E.L.; Mencuccini, M.; Morison, J.I.L.; Grace, J. The relationship between carbon dioxide uptake and canopy colour from two camera systems in a deciduous forest in southern England. Funct. Ecol. 2013, 27, 196–207.

- Filippa, G.; Cremonese, E.; Migliavacca, M.; Galvagno, M.; Forkel, M.; Wingate, L.; Tomelleri, E.; di Cella, U.M.; Richardson, A.D. Phenopix: A R package for image-based vegetation phenology. Agric. For. Meteorol. 2016, 220, 141–150.

- Filippa, G. Phenopix R Package Vignettes 1/3: Base Vignette. 2020. Available online: https://www.researchgate.net/publication/289374477_phenopix_R_package_vignettes_13_base_vignette (accessed on 20 January 2025).

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2023; Available online: https://www.R-project.org (accessed on 20 January 2025).

- Primack, R.B.; Gallinat, A.S.; Ellwood, E.R.; Crimmins, T.M.; Schwartz, M.D.; Staudinger, M.D.; Miller-Rushing, A.J. Ten best practices for effective phenological research. Int. J. Biometeorol. 2023, 67, 1509–1522.

- Meier, U. Growth Stages of Mono- and Dicotyledonous Plants; Federal Biological Research Centre for Agriculture and Forestry: Bonn, Germany, 2001.

- Finn, G.; Straszewski, A.; Peterson, V. A general growth stage key for describing trees and woody plants. Ann. Appl. Biol. 2007, 151, 127–131.

- Meier, U.; Bleiholder, H.; Brumme, H.; Bruns, E.; Mehring, B.; Proll, T.; Wiegand, J. Phenological growth stages of roses (Rosa sp.): Codification and description according to the BBCH scale. Ann. Appl. Biol. 2008, 154, 231–238.

- Casalicchio, G.; Burk, L. Evaluation and Benchmarking. In Applied Machine Learning Using mlr3 in R; CRC Press: Boca Raton, FL, USA, 2024; Available online: https://mlr3book.mlr-org.com/evaluation_and_benchmarking.html (accessed on 20 January 2025).

- Bischl, B.; Sonabend, R.; Kotthoff, L.; Lang, M. Applied Machine Learning Using mlr3 in R; CRC Press: Boca Raton, FL, USA, 2024; Available online: https://mlr3book.mlr-org.com (accessed on 20 January 2025).

- Hechenbichler, K.; Schliep, K. Weighted k-Nearest-Neighbor Techniques and Ordinal Classification. Available online: https://epub.ub.uni-muenchen.de/1769/ (accessed on 13 May 2023).

- Cunningham, P.; Delany, S.J. k-Nearest Neighbour Classifiers—A Tutorial. ACM Comput. Surv. 2021, 54, 128.

- Becker, M.; Schneider, L.; Fischer, S. Hyperparameter Optimization. In Applied Machine Learning Using mlr3 in R; CRC Press: Boca Raton, FL, USA, 2024.

- Inouye, B.; Ehrlén, J.; Underwood, N. Phenology as a process rather than an event: From individual reaction norms to community metrics. Ecol. Monogr. 2018, 89, e01352.

- Menzel, A.; Sparks, T.H.; Estrella, N.; Koch, E.; Aasa, A.; Ahas, R.; Alm-Kübler, K.; Bissolli, P.; Braslavská, O.; Briede, A.; et al. European phenological response to climate change matches the warming pattern. Glob. Change Biol. 2006, 12, 1969–1976.

- Sparks, T.; Menzel, A. Observed changes in seasons: An overview. Int. J. Climatol. 2002, 22, 1715–1725.

- Soudani, K.; Delpierre, N.; Berveiller, D.; Hmimina, G.; Pontailler, J.-Y.; Seureau, L.; Vincent, G.; Dufrêne, É. A survey of proximal methods for monitoring leaf phenology in temperate deciduous forests. Biogeosciences 2021, 18, 3391–3408.

- Miller-Rushing, A.J.; Inouye, D.W.; Primack, R.B. How well do first flowering dates measure plant responses to climate change? The effects of population size and sampling frequency. J. Ecol. 2008, 96, 1289–1296.

- Tooke, F.; Battey, N.H. Temperate flowering phenology. J. Exp. Bot. 2010, 61, 2853–2862.

- Campbell, T.; Fearns, P. Simple remote sensing detection of Corymbia calophylla flowers using common 3-band imaging sensors. Remote Sens. Appl. Soc. Environ. 2018, 11, 51–63.

- Li, H.; Yan, E.; Jiang, J.; Mo, D. Monitoring of key Camellia Oleifera phenology features using field cameras and deep learning. Comput. Electron. Agric. 2024, 219, 108748.

- Guo, Y.; Chen, S.; Fu, Y.H.; Xiao, Y.; Wu, W.; Wang, H.; de Beurs, K. Comparison of Multi-Methods for Identifying Maize Phenology Using PhenoCams. Remote Sens. 2022, 14, 244.

- Li, Q.; Shen, M.; Chen, X.; Wang, C.; Chen, J.; Cao, X.; Cui, X. Optimal Color Composition Method for Generating High-Quality Daily Photographic Time Series From PhenoCam. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6179–6193.

- Primack, R.B.; Ellwood, E.R.; Gallinat, A.S.; Miller-Rushing, A.J. The growing and vital role of botanical gardens in climate change research. New Phytol. 2021, 231, 917–932.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).