1. Introduction

In recent years, the field of drone technology has experienced remarkable advancements, drawing substantial attention from various industries. Due to their compact size and high maneuverability, drones have found widespread applications in areas such as aerial photography, logistics, communications, and environmental monitoring [

1,

2,

3]. Although these features enable numerous positive applications, they also raise concerns about potential misuse, with increasing reports of drones being used for malicious purposes. Incidents involving unauthorized drone operations near airports and attacks on public institutions or individuals have been documented [

4,

5]. Consequently, the development of effective anti-drone systems has become critical, both in civilian and military contexts, to prevent accidents and enable rapid response to such threats.

An anti-drone system functions by detecting unauthorized drones and tracking their locations to prevent malicious activities. Accurate localization of a drone is a key component in developing an effective anti-drone system, and numerous signal processing and localization techniques have been actively explored for this purpose. Prominent technologies include ultraviolet (UV) detection, thermal imaging, magnetic sensors, and acoustic source-based localization methods. UV detection is effective in identifying drones by detecting UV signatures, especially in low-light conditions. Thermal imaging is beneficial for detecting drones based on heat emissions, making it suitable for night-time operations or situations with visual obstructions. Magnetic sensors are used to detect the metallic components of drones, which is advantageous in environments where visual and acoustic signals are compromised. Acoustic source-based localization methods are based on the unique sounds produced by drone propellers, providing a resilient approach in dynamic environments with frequent visual obstructions. Each technique presents distinct advantages and limitations, making them suitable for different environments and operating conditions [

4,

5,

6]. Among these, acoustic source-based localization methods are particularly effective for drone detection as they are relatively resilient to environmental changes and provide high localization accuracy. Although acoustic signals may overlap with ambient noise, potentially leading to false detections, the characteristic sounds produced by drone engines or propellers offer a reliable means of tracking the sound source with precision. Specific filtering techniques, such as bandpass filtering, are typically employed to differentiate drone sounds from background noise, enhancing detection reliability. However, advanced methods such as spectral subtraction and adaptive noise cancellation were not specifically considered in this study and are potential areas for future exploration.

Acoustic source localization is traditionally performed using triangulation methods based on Time of Arrival (TOA), time difference of arrival (TDOA), and angle of arrival (AOA) measurements. These techniques are widely used in various localization applications due to their established high accuracy and robustness [

7,

8,

9,

10,

11,

12]. Among these approaches, GCC-PHAT, as described by Pourmohammad and Ahadi, coupled with array signal processing, enables efficient and precise localization [

12]. They proposed a real-time, high-accuracy 3D sound source localization technique using a simple four-microphone arrangement, which demonstrated the feasibility of achieving rapid localization with minimal hardware complexity. However, their method estimates AOA as azimuth, leading to a degree of inaccuracy because this simplification overlooks the intricate spatial variations necessary for accurately distinguishing three-dimensional positional attributes, especially in scenarios involving rapidly moving targets such as drones [

12]. For instance, when a drone rapidly changes altitude, the incorrect estimation of azimuth can lead to significant localization errors, making it difficult to accurately track the drone’s real-time position and potentially causing delays in response actions. Calculating AOA as azimuth results in angular errors since the AOA does not accurately represent the true azimuth angle. In conventional acoustic source localization, the difference between AOA and azimuth is relatively small and may not cause significant performance deterioration. However, in the case of drones, where the sound source is at a very high altitude, the discrepancy between AOA and azimuth becomes more pronounced, causing localization errors. Therefore, it is crucial to perform localization while considering these differences.

Array signal processing is critical for improving localization accuracy and is applied in numerous advanced research fields [

13,

14,

15,

16]. Although azimuth can be effectively estimated using array signal processing, determining both AOA and azimuth separately with a single set of microphones leads to increased signal processing complexity and higher error rates. To address these challenges, we employ two sets of microphone arrays to achieve both computational simplicity and enhanced accuracy.

The two sets of microphone arrays were positioned symmetrically on either side of the origin, each at a distance of 2 m. This distance was chosen to optimize the balance between spatial separation for effective triangulation and simplifying computational overhead, thereby enhancing localization performance in high-altitude scenarios. By adopting this configuration, we were able to develop a robust and efficient localization technique that effectively mitigates the computational burden while maintaining high accuracy, particularly for high-altitude drone localization.

In this study, we propose a localization system that estimates the position of a drone by calculating both azimuth and elevation angles using two distributed microphone arrays, combined with TDOA measurements obtained through the GCC-PHAT technique. Unlike existing methods that often estimate AOA as azimuth or rely on single-array setups, our approach uses dual arrays to achieve higher accuracy and reduce computational complexity. This configuration mitigates angular errors inherent in traditional approaches for tracking drones at high altitudes. The effectiveness of the proposed method is validated through a series of simulations and experimental evaluations.

2. Related Work

Sound source localization (SSL) has been widely studied in the literature, with TDOA and Generalized Cross-Correlation Phase Transform (GCC-PHAT) being the most commonly employed techniques [

1,

2]. These methods have been extensively utilized in various applications, including speech recognition, surveillance, and, more recently, drone detection.

One of the most notable studies in this field is the work by Ali Pourmohammad et al. [

12], who proposed a real-time high-accuracy 3D localization system based on a four-microphone array. Their method estimates the azimuth angle of a sound source using TDOA measurements, achieving reasonable localization accuracy in controlled environments. However, a major limitation of this approach is its inability to effectively capture elevation information, which is critical for applications involving airborne targets such as drones.

Several studies have attempted to address these limitations by improving microphone array configurations. Lee and Park [

17] introduced a phased microphone array mounted on a drone to enhance acoustic source localization. Their work demonstrated increased detection accuracy, particularly for sources positioned at varying altitudes. Similarly, Kim and Choi [

18] investigated a multi-microphone array system onboard UAVs, showing improvements in real-time tracking of nearby drones using advanced array signal processing techniques.

Beyond microphone configurations, recent research has also explored sensor fusion and machine learning techniques to further enhance localization accuracy. Wang and Chen [

19] proposed a drone detection system combining fiber-optic acoustic sensors with distributed microphone arrays, significantly improving robustness against background noise. Meanwhile, Smith and Brown [

20] applied deep learning-based 3D localization in urban environments, demonstrating that neural networks can effectively compensate for multi-path interference and noise.

Despite these advancements, accurate 3D localization in large-scale, real-world environments remains a challenge. Existing approaches either require a large number of microphones, which increases hardware complexity, or struggle with calibration issues in distributed setups. This highlights the need for a robust and cost-efficient localization method that can accurately estimate both azimuth and elevation angles.

2.1. Motivation and Contribution

In drone detection systems that require wide-area coverage, multiple microphone arrays must be deployed to enhance detection capabilities. The presence of multiple arrays enables performance improvements through inter-array calibration, while simultaneously ensuring that reducing the number of microphones per array remains economically advantageous.

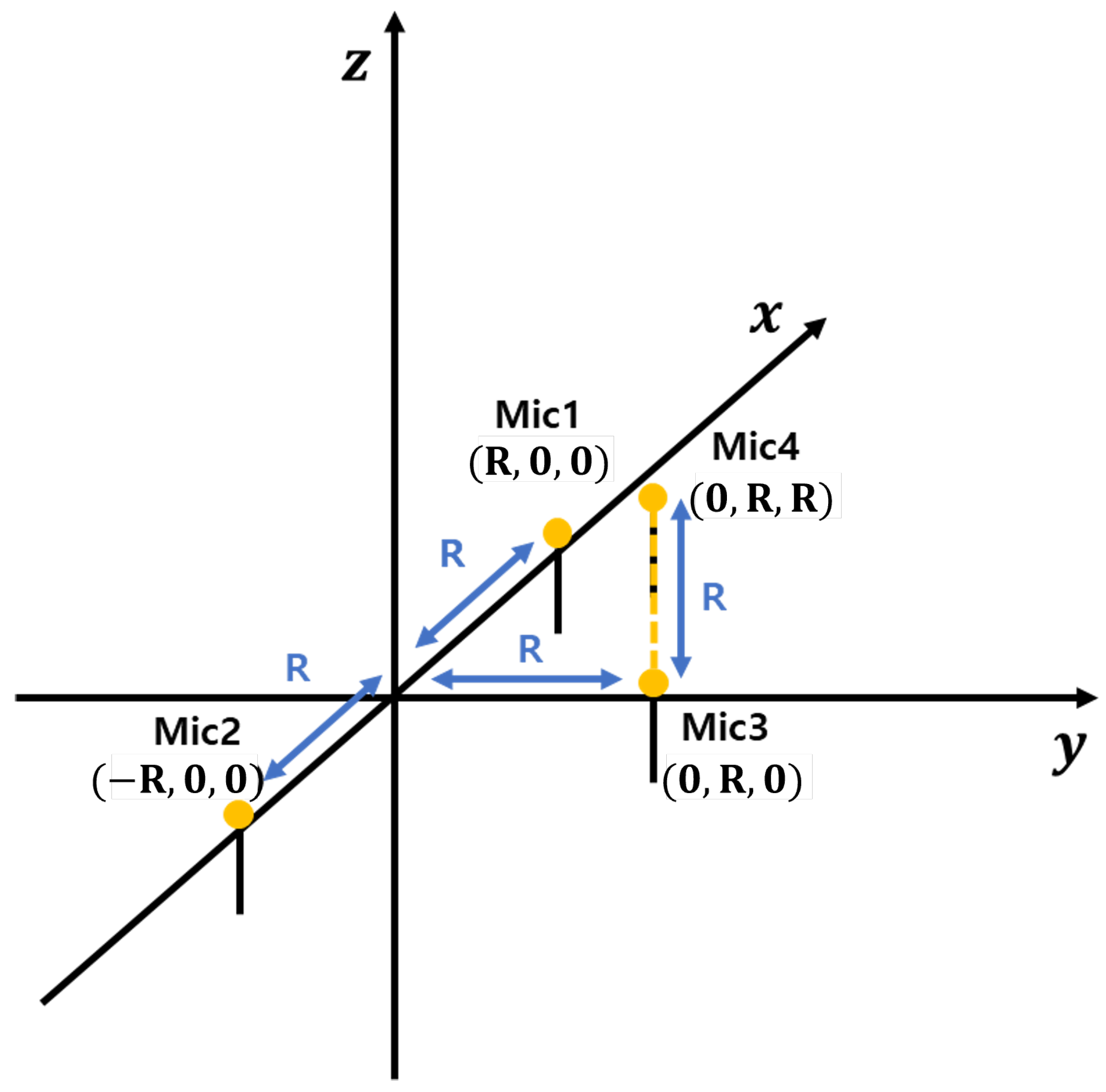

Based on these considerations, we selected the Ali method as a reference due to its low-complexity implementation and real-time processing capabilities. However, as mentioned earlier, the Ali method suffers from significant localization errors for high-altitude sound sources, such as drones. The microphone array used in this study is shown in

Figure 1. These errors can result in substantial inaccuracies in drone tracking and pose challenges in post-processing corrections.

To address these issues, we propose a novel approach that utilizes two distributed microphone arrays to enhance 3D localization accuracy. This method not only improves the localization performance of distributed sensor networks but also contributes to reducing hardware costs in large-scale detection systems.

Our key contributions are summarized as follows:

An extension of the GCC-PHAT-based TDOA framework to accurately compute both azimuth and elevation angles.

The development of a low-complexity hyperbolic intersection algorithm for real-time 3D localization.

Performance evaluation comparing our method with existing approaches, demonstrating improved accuracy and robustness in noisy environments.

The enhancement of distributed sensor networks, enabling more efficient deployment of microphone arrays for large-scale drone detection.

2.2. GCC-PHAT-Based TDOA Estimation

Acoustic source localization techniques primarily utilize GCC-PHAT with TDOA for high-precision positioning. These methods rely on correlation functions to assess the dependency between signal pairs, such as identifying time delays or phase relationships. Correlation quantifies the similarity between stochastic processes, revealing temporal or spectral dependencies within signals. It is categorized into auto-correlation and cross-correlation, depending on whether the reference signal matches the target signal.

The auto-correlation function measures a signal’s similarity to its time-shifted version, aiding in the detection of periodic or repetitive patterns. In contrast, cross-correlation evaluates the similarity between two distinct signals, enabling the analysis of their relationship in the time or frequency domain. This helps identify time delays and synchronization across signals. The mathematical formulation of the auto-correlation function in the time domain is given in Equation (

1).

In this case,

denotes the received signal,

represents its auto-correlation function, and

corresponds to the time delay. By leveraging the duality between time and frequency domains,

can also be expressed in the frequency domain, as shown in Equation (

2).

Here,

denotes the Fourier transform of

. Additionally, the cross-correlation function

between two signals

and

is defined as in Equation (

3).

If

is considered as the delayed version of

, the cross-correlation function reaches its maximum at the point where the time delay matches. This is similar to the concept where the auto-correlation function reaches its maximum when there is no time delay. Therefore, the time delay difference between

and

can be represented by Equation (

4).

By applying the cross-correlation method, the TDOA between microphones can be accurately measured. The TDOA is essential in drone localization, as it represents the relative propagation time differences of signals received at each microphone. These data are critical for accurately determining the drone’s position in three-dimensional space. However, for low-frequency signals, such as those emitted by acoustic sources, the performance of the conventional cross-correlation approach tends to deteriorate [

21]. This occurs because low-frequency signals have longer wavelengths, reducing spatial resolution and making it harder to distinguish between closely spaced sound sources, leading to increased interference and decreased accuracy. The long wavelengths associated with low-frequency signals also increase the likelihood of interference and distortion within the microphone array. These challenges are further exacerbated in environments with significant noise or multi-path propagation, where signal reflections and noise can heavily impact the accuracy of TDOA estimation.

To improve the accuracy of TDOA estimation, it is crucial to mitigate the influence of low-frequency components. The GCC-PHAT method is widely adopted to address this challenge. This approach determines time delay by utilizing the phase information of the signal in the frequency domain. By focusing on phase rather than amplitude, the method reduces the impact of low-frequency interference, enabling more accurate time delay estimation.

The application of the PHAT weighting function further refines this process by eliminating amplitude information, which enhances robustness against noise, and calculating the correlation solely based on phase. This technique ensures reliable delay estimation even in the presence of noise and multi-path effects. When the angular frequency is defined as

, the GCC-PHAT function is mathematically expressed as shown in Equation (

5).

Here,

is the PHAT weighting function, which is used to remove the amplitude component and can be expressed as in Equation (

6).

Furthermore, by utilizing the duality between the time and frequency domains, it can be expressed as Equation (

7).

This method simplifies the complex convolution operations involved in signal processing, enabling fast and accurate TDOA estimation. In this study, TDOA estimation was conducted using the GCC-PHAT technique. A system was designed and implemented to capture and analyze drone acoustic signals in an outdoor environment, employing GCC-PHAT alongside distributed microphone arrays.

2.3. Angle of Arrival Estimation and Hyperbolic Intersection-Based Localization

In this study, the TDOA is estimated between microphones using the GCC-PHAT method, which subsequently enables the calculation of the AOA. The AOA is given by

where

denotes the speed of sound in air and

represents the time delay obtained from the cross-correlation of two received signals. However, determining the exact source position requires additional information. The distance between the source and each microphone can be expressed as:

where

and

define the drone’s coordinates. The difference in these distances is given by:

Rewriting in terms of

x and

y instead of

and

, we obtain:

Since this equation contains two unknowns,

x and

y, additional constraints are required. Assuming both microphones are equidistant from the origin, with a separation distance

along the x-axis, the equation simplifies to:

Rearranging and simplifying the equation leads to:

where

y follows a hyperbolic geometric distribution relative to

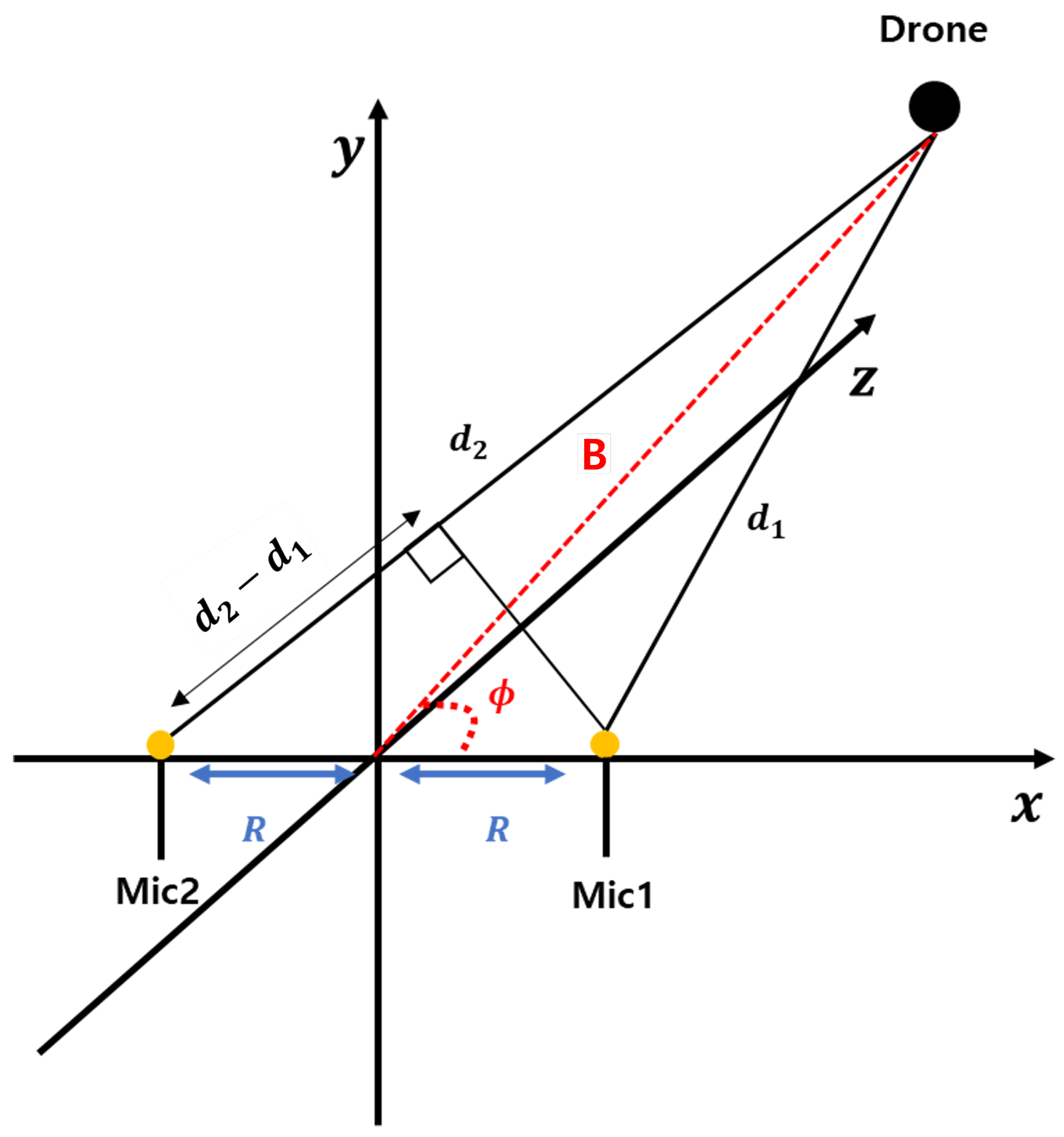

x, as depicted in

Figure 2.

To determine the exact coordinates

x and

y, a second equation is needed by incorporating a third microphone:

Since these are nonlinear equations, solving them requires a hyperbolic intersection approach, which typically involves numerical methods. Consequently, this increases computational complexity in localization, and there is a possibility that the solution may not converge.

2.4. Simplified Localization Calculations

Due to the complexity of solving Equation (

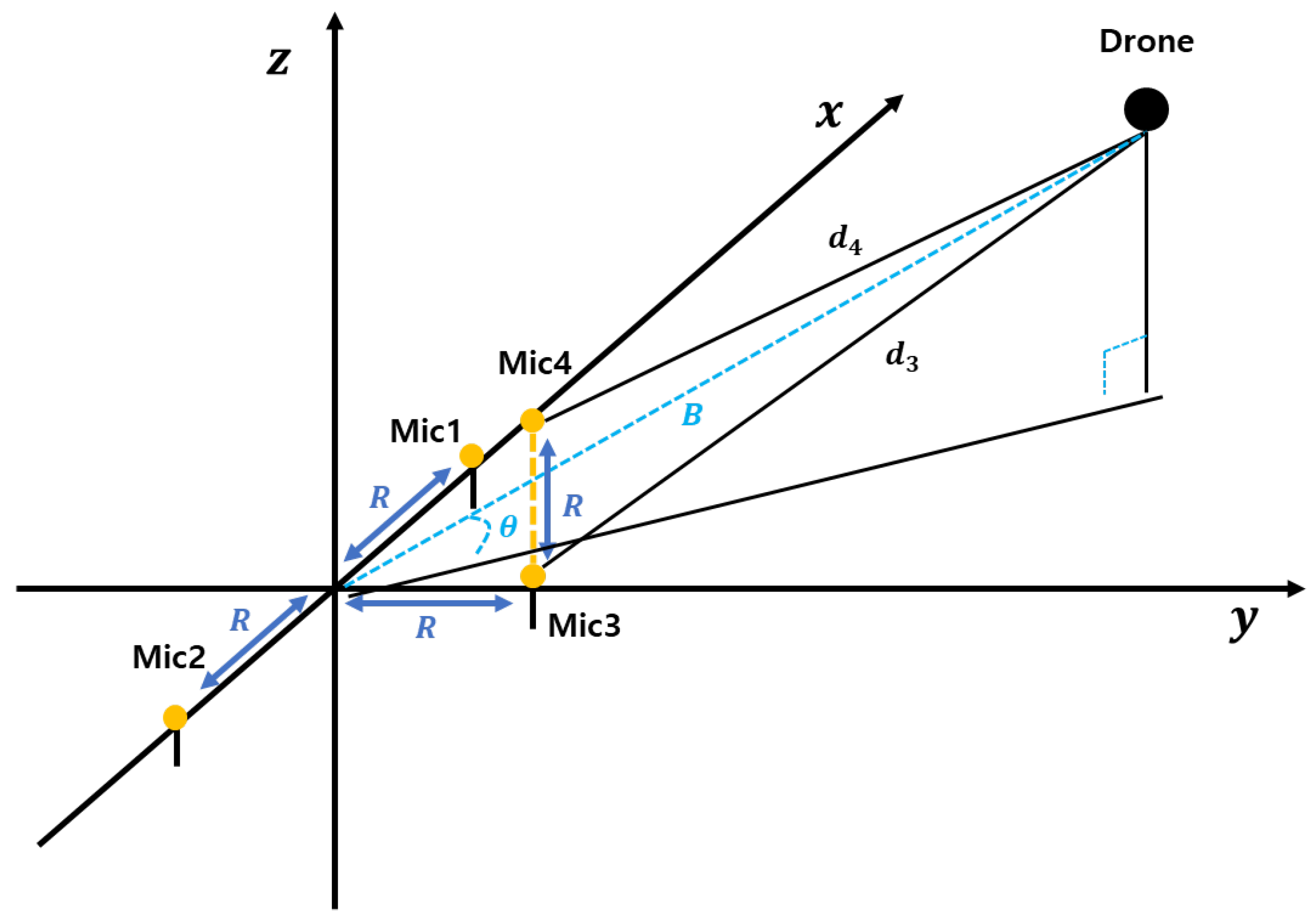

15), we adopt a more practical microphone placement configuration, as illustrated in

Figure 3. Based on this arrangement, we redefine the distance differences, leading to the following time delay expressions between microphone pairs:

Given Equations (17) and (18) with two unknowns,

x and

y, we can simplify the problem by leveraging the fact that for each of the three sources, either

x or

y is zero. This allows us to rewrite Equations (17) and (18) as:

Alternatively, solving Equations (16) and (17) with the same constraint (one coordinate being zero) allows us to rewrite them as:

Similarly, solving Equations (16) and (18) under the same assumption yields:

By replacing

a with

or

and similarly

b with

or

, the source position is constrained to the line

, as illustrated in

Figure 3. This allows us to employ four different approaches to determine the source location:

- 1.

Consider the incident line

and the line passing through the origin (line B in

Figure 2; see

Figure 3). The time delay between the second and first microphone signals,

and

, is denoted as

. Given that the angle of arrival is

, we can determine it at the origin using the following equation:

Assuming the source is at a distance

r from the origin, its coordinates can be expressed as:

Substituting these into the line equation

, we obtain:

which allows us to determine

x and

y.

- 2.

Combining the equation

with (16), we simplify (16) as follows:

Substituting

into (34) results in:

where

Solving this equation yields x, which can then be substituted into to determine y.

- 3.

Combining equation

with (17), we first simplify (17) as:

Substituting

into (37) and (38) results in:

Solving for x and substituting into gives y.

- 4.

Merging equation

with (18), we begin by simplifying (18):

Solving this equation provides x, which can then be substituted into to obtain y.

2.5. Extending Localization to Three Dimensions

For the 3D case, we consider a 2D plane that includes the source position and the

x-axis, forming an angle

with the

x-

y plane. We refer to this as the source-plane (

Figure 1). This plane also contains microphones 1 and 2. Under the far-field assumption, microphone 3 can be approximated as lying within this plane with negligible error. Based on these assumptions, and using Equation (

29), we can determine

, which represents the angle of arrival within the source-plane. This value is equivalent to the angle of arrival in the

x-

y plane.

Furthermore, using Equation (

32), we can calculate

r, which denotes the distance from the source to the origin. Alternatively, employing Equations (35), (36), or (39), we can derive the coordinates

x and

y within the source-plane, rather than the

x-

y plane, allowing us to compute

r. To refine this estimation, we introduce an additional microphone (mic4 in

Figure 1) positioned at

and

, which facilitates the calculation of

. Under the far-field assumption, microphones 3 and 4 can be approximated as being nearly aligned along the

z-axis. Consequently, using Equation (

29), we obtain

as

With the computed values of

r,

, and

, the three-dimensional coordinates

x,

y, and

z can be determined as

This approach enables the estimation of an obstacle’s three-dimensional position using a single microphone array. Since this technique calculates the angle of arrival based on the azimuth angle, it provides accurate localization when the obstacle is at a low elevation. However, at higher elevations, the accuracy of 3D localization declines. To improve localization performance, it is essential to separately estimate the azimuth and elevation angles.

3. Methods

3.1. Microphone Array Configuration for TDOA Estimation

The position of the sound source is determined by first calculating the TDOA using the spatial separation between microphones and then using the TDOA to estimate the angle information. The spatial separation between microphones allows for the calculation of the TDOA, which provides information on the time delays between received signals. These TDOA values are then used to determine the angle information, providing directional information about where the sound is coming from. Utilizing the microphone array configuration depicted in

Figure 1, the angular information of the drone can be extracted, which is crucial for accurately determining its position [

12]. Extracting angular information is crucial for accurately determining the drone’s direction and position, which is essential for effective localization. The coordinates of the sound source are represented as

, while the coordinates of the

n-th microphone are expressed as

. The Euclidean distance between these two points is defined by Equation (

44).

The time at which the sound is received by the

n-th microphone is denoted as

, while the reception time at the

m-th microphone is represented as

. The speed of sound, which plays a crucial role in determining the time delay, is approximated as 340 m/s in this study. While Equation (

45) provides a more detailed calculation of the speed of sound based on temperature, in this research, a constant value of 340 m/s was used for simplicity. Environmental factors such as humidity and altitude were not explicitly accounted for.

The time difference of arrival, denoted as

, and the distance difference

between the

n-th and

m-th microphones are related through the distance–velocity equation and can be expressed as follows:

The time delay differences between the microphones are represented by Equations (46) and (47) and are computed as detailed in Equations (48)–(51). Each of these equations serves a specific purpose: Equations (46) and (47) represent the initial time delay relationships, while Equations (48)–(51) provide detailed calculations for each microphone pair, allowing for accurate determination of angular and positional information.

3.2. Estimation of Angular Information

In this paper, the location of the drone is estimated using angle information, including AOA, azimuth, elevation, and a distributed microphone array. The preliminary estimation of angle information is critical, as it provides the basis for accurately determining the drone’s position. Without an initial estimate of angles such as AOA and elevation, subsequent calculations for precise localization would suffer from reduced accuracy. Elevation and AOA are derived from time difference values, with the required TDOA for each angle calculated using the GCC-PHAT method. The GCC-PHAT method is particularly suitable for this application because it emphasizes phase information, reducing the influence of noise and improving the accuracy of time delay estimation in challenging acoustic environments. Furthermore, the azimuth angle is derived by combining the earlier elevation and AOA. Specifically, the elevation provides information about the vertical orientation, while the AOA helps determine the direction of the sound source relative to the microphone array. By using both, the azimuth angle can be accurately calculated to pinpoint the drone’s location in three-dimensional space.

The arrival angle represents the direction at which the sound source reaches the microphone array, and by accurately estimating this angle, the location of the sound source can be determined with greater precision. In this study, the arrival angle

is estimated based on the TDOA between the microphones. As depicted in

Figure 2, with microphones 1 and 2 positioned, the channel environment is assumed to be in the far-field condition, allowing the arrival angle, which is defined as the angle between segment A and the x-axis, to be approximated. The far-field condition is assumed because it simplifies the calculation by allowing the sound waves to be treated as nearly parallel, reducing the complexity of the geometry involved in angle estimation. In this context, the far-field condition assumes that the distance between the sound source and the microphones is sufficiently large, such that the sound waves are nearly parallel when reaching the microphone array, satisfying the following condition.

Here,

represents the distance between the sound source and the origin of the microphone array,

denotes the maximum spacing between the microphones, and

refers to the wavelength of the signal. The condition for satisfying the far-field approximation is given as follows, which ensures that the sound waves can be considered nearly parallel, thereby simplifying the geometric calculations involved in determining angles.

In this context,

b refers to the angle formed between the y-axis and line A, while

D represents the separation between microphones 1 and 2. The arrival angle is calculated using Equations (55)–(59).

Elevation estimation involves calculating the

z-axis position of the drone. In a far-field condition, the elevation angle between the origin and the drone is determined using the vertical line

B, which bisects the distance between microphones 3 and 4. As illustrated in

Figure 3, microphone 3 is positioned at

and microphone 4 at

, with a vertical separation of

R between them. With this setup and the time difference of arrival

, the elevation angle can be determined. The elevation angle

is derived as follows:

The AOA and elevation angle of the drone were estimated using TDOA. Previous research approximated the AOA

as the azimuth angle; however,

refers to the angle between line A and the x-axis, and this approximation introduces increasing errors as the drone’s z-coordinate rises. Therefore, calculating the azimuth angle accurately becomes crucial. Based on the distance variables presented in

Figure 4, the AOA

, azimuth angle

, and elevation angle

are expressed as follows:

The procedure for deriving the azimuth angle using the equation above is outlined as follows.

By substituting Equations (65) and (66) into Equation (

63), Equation (

67) is obtained. Equation (

67) is significant as it enables the computation of the distance component in the spherical coordinate system, which is essential for accurately calculating the azimuth and elevation angles required for accurate localization.

As demonstrated above, all angular information in the spherical coordinate system can be computed using TDOA and the microphone array configuration.

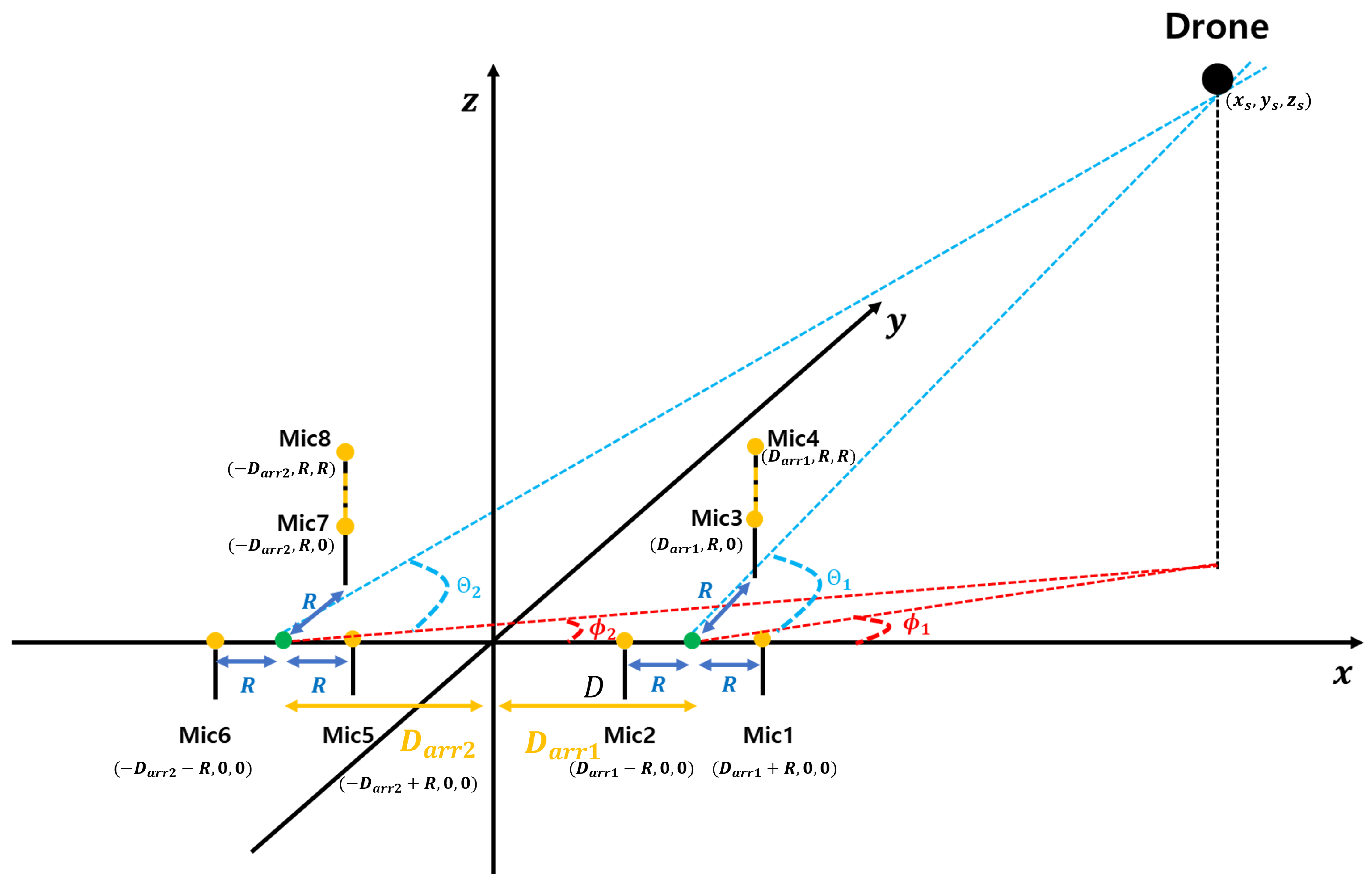

3.3. Localization Using Distributed Arrangement

Distributed arrangement is a technique used to more accurately estimate the position of the drone’s sound source. This approach reduces interference between the microphone arrays and provides better differentiation of signal arrival times, leading to improved localization accuracy. In this paper, as shown in

Figure 5, two microphone arrays were distributed, and the drone’s coordinates were estimated through the angle information obtained from each array. The following equation expresses

through the line connecting the reference point of each array and the xy coordinates of the drone.

Here,

and

are equal, representing the distance from the origin to the reference points of each array. Using Equations (68) and (69),

is expressed as shown in Equation (

70), where

and

denote the azimuth angles estimated from each microphone array.

By applying distributed placement as described above, the intersection of the two lines can be used to estimate

based on the speed of sound

and the TDOA

. This estimated

is then used to determine

. As illustrated in

Figure 5,

can be derived from the intersection of two lines connecting the origin of each microphone array and the drone, enabling the calculation of the drone’s elevation angle

. The estimation processes for

and

are independent of each other and can be calculated separately for each array. By averaging these estimates, as shown in the following equations, noise robustness is improved [

22]. Averaging helps to reduce random errors by smoothing out fluctuations, resulting in a more stable and accurate estimate of the coordinates.

At this stage,

and

represent measurement errors, and the estimated

, obtained by averaging the elevation angle estimates, is as follows.

Similarly, is calculated using the same approach, enabling the determination of the drone’s three-dimensional coordinates.

4. Performance Evaluation

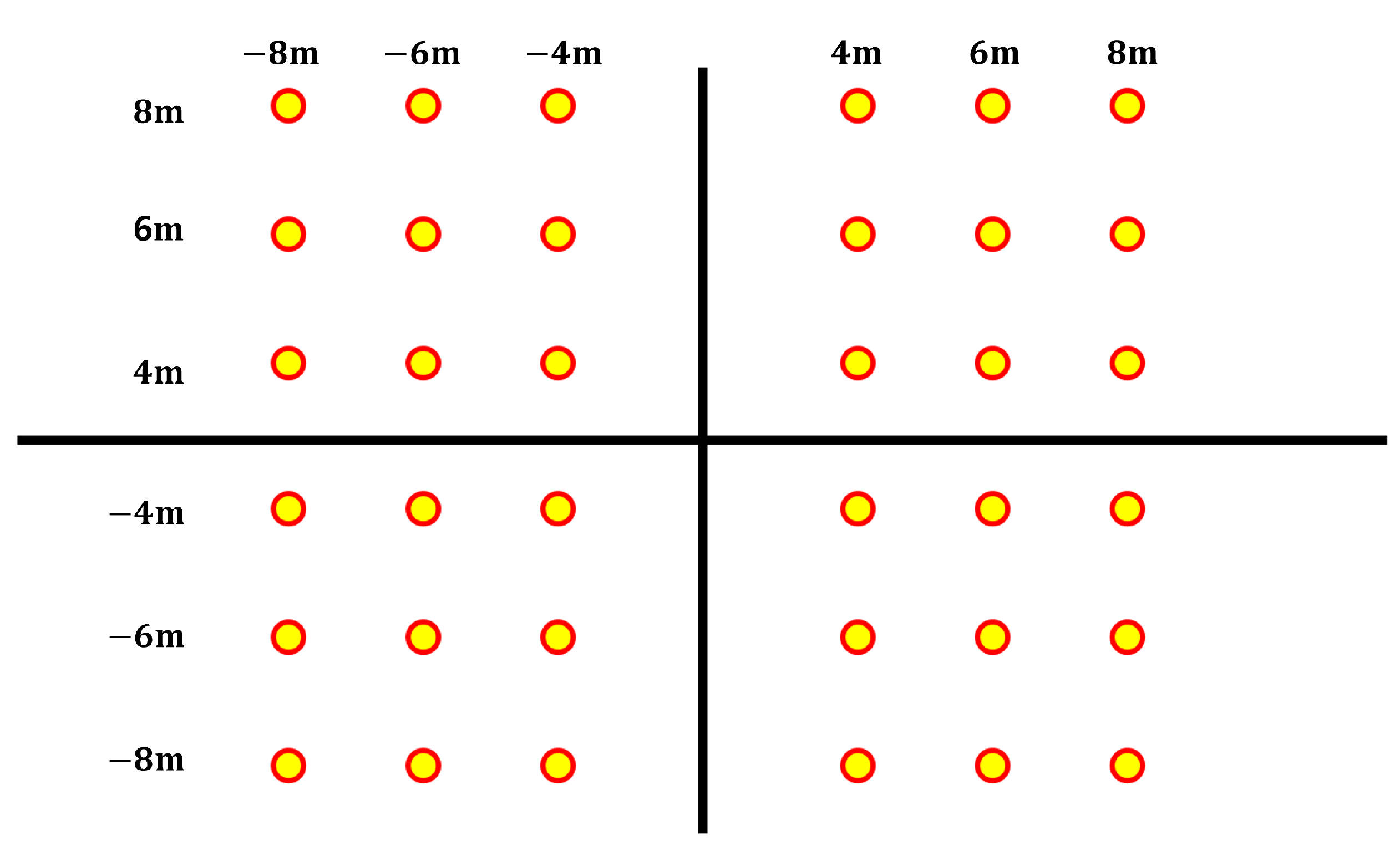

Before implementing the actual experiment, simulations were performed to validate the effectiveness of the algorithm. The simulations assessed the impact of noise, evaluated different drone positions, and tested the accuracy of angle estimation. These validations provided insights into the expected performance under varying conditions, helping to ensure the robustness of the approach. In the experiment, the xy coordinates of the drone were arranged according to the predefined experimental setup, and the z-axis coordinates were set to 4 m and 6 m, respectively, to evaluate performance under various conditions.

4.1. Simulation Results

In the simulation, a drone positioned 10 m above the origin was placed on a circular path with a radius of 10 m at intervals of approximately 10°, spanning from 0° to 180°. A circular path was chosen to simulate diverse drone positions relative to the microphones, ensuring a comprehensive evaluation of localization accuracy under varying angles. The microphone configuration was identical to that shown in

Figure 5, with the distance between the origin of each microphone array and the microphone in the xy-plane set to 1 m and the distance from the origin of the coordinate system to the origin of each microphone array set to 2 m. Additionally, it was assumed that acoustic data were collected under conditions simulating a real channel environment, focusing primarily on noise levels to evaluate localization performance realistically. The focus on noise levels was incorporated to create a realistic scenario for evaluating localization performance. The localization performance was evaluated by calculating the mean error over 1000 trials, defined as the distance between the estimated and actual coordinates. The number of trials was chosen to ensure statistical reliability and robustness of the results, reducing the impact of random variations in the measurements.

The channel environment was assumed to be a Line-of-Sight scenario, accounting for path loss, which was modeled using the Free Space Path Loss formula. This approach allowed for quantifying the reduction in signal strength over distance, providing a realistic evaluation of the channel conditions. To compare the performance of the proposed method with the baseline method, localization accuracy was evaluated in both Additive White Gaussian Noise (AWGN) and ideal channel conditions. These specific channel conditions were chosen to represent both realistic noise scenarios and an optimal environment without interference, providing a balanced comparison that highlights the strengths and limitations of each method under different conditions. The baseline method employed a localization technique that did not consider the azimuth angle, relying solely on elevation angle and TDOA measurements for positioning.

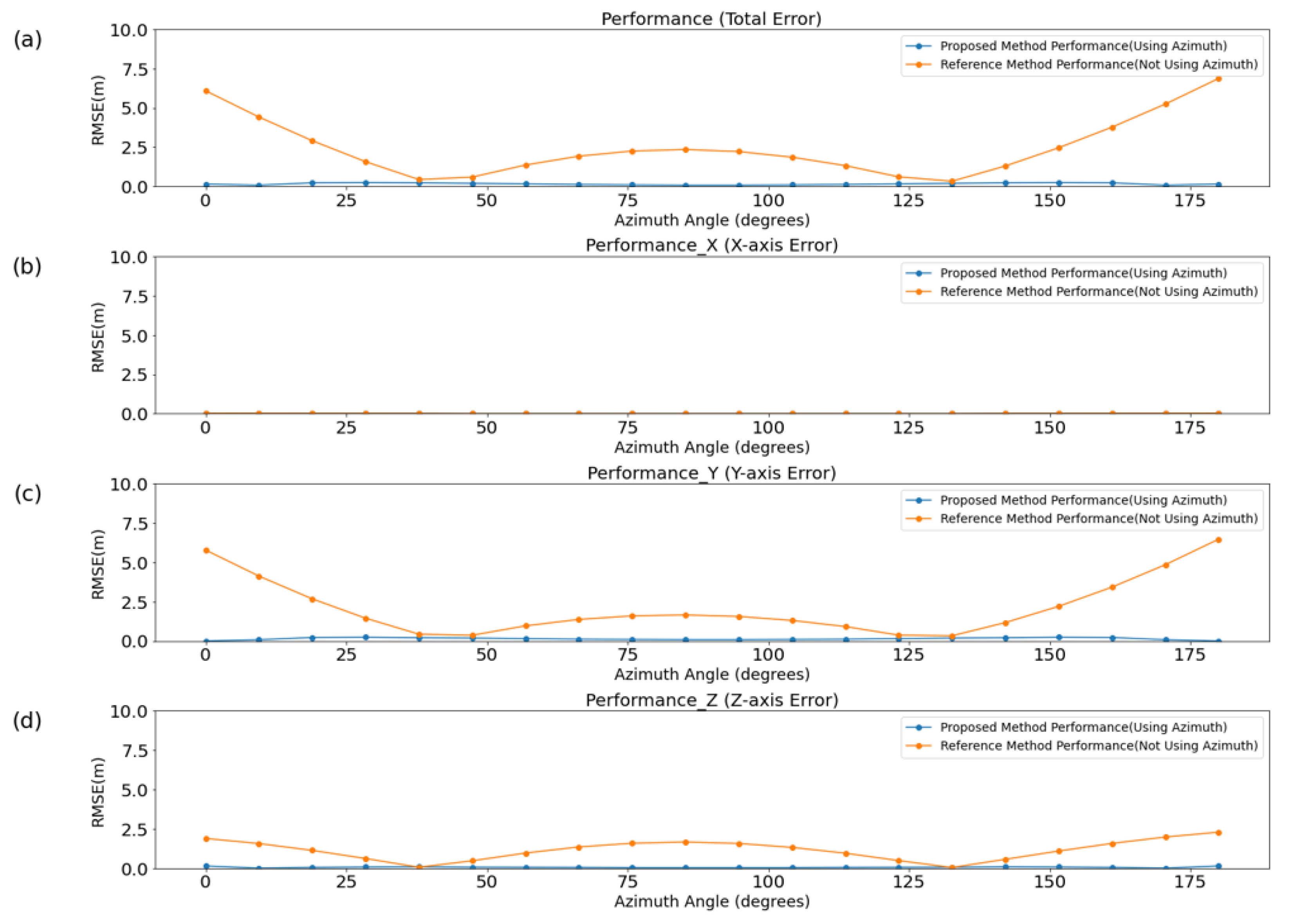

To evaluate the performance of each method in relation to the azimuth angle, simulations were conducted in an ideal channel, with the results presented in

Figure 6.

Table 1 and

Table 2 display the mean and variance of the overall error, as well as axis-specific errors, for each method. Even in an ideal environment, the difference between the AOA and azimuth, caused by the drone’s altitude, led to an increase in the mean and variance of errors across all axes except the x-axis. The x-axis did not experience an increase in error because the horizontal positioning remained relatively unaffected by changes in altitude, which primarily influenced the y- and z-axes.

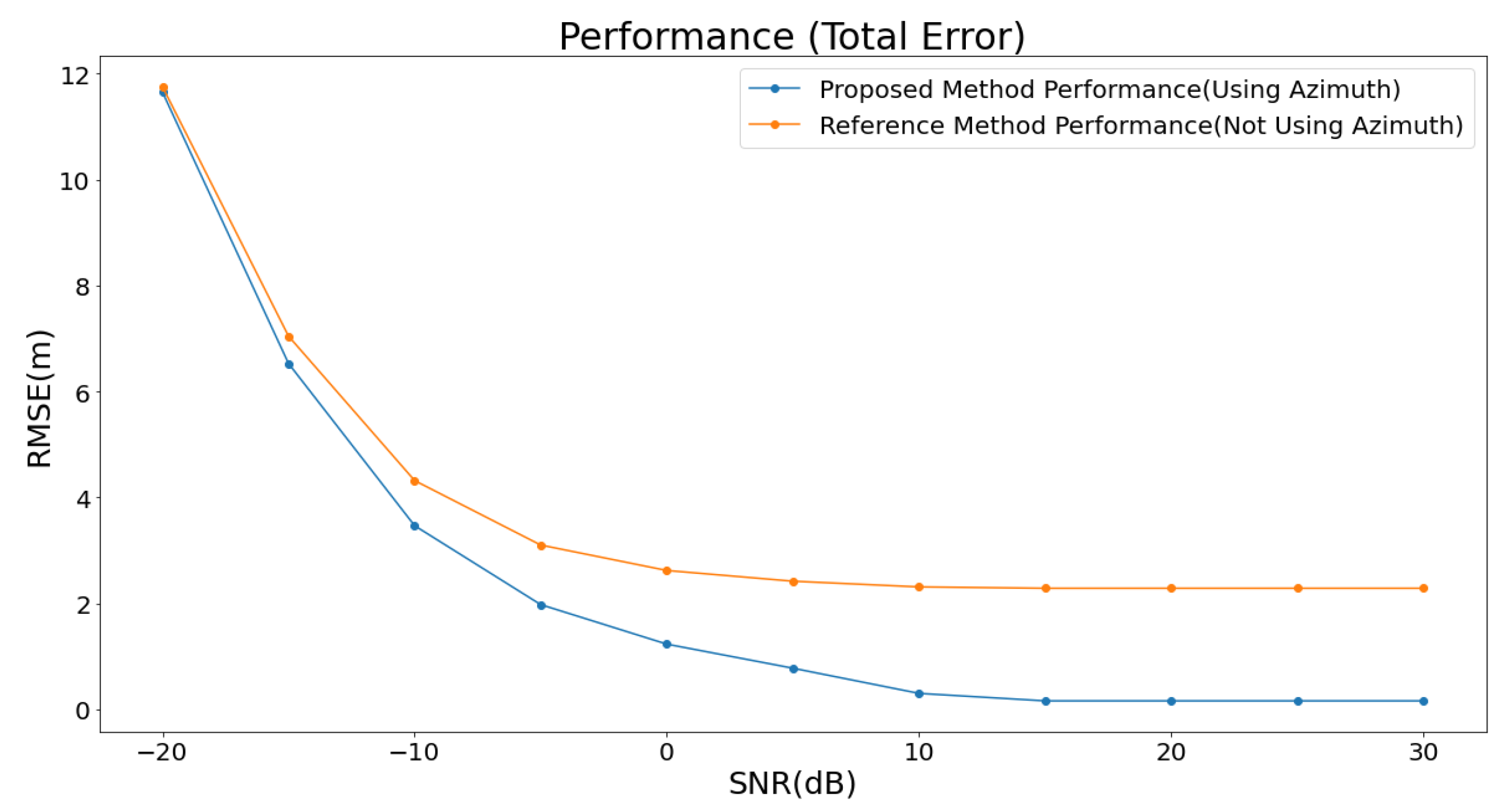

Additionally, the performance of each method was analyzed based on the SNR, as illustrated in

Figure 7.

The analysis was conducted by incrementally increasing the SNR in steps of 5 dB, ranging from −20 dB to 30 dB. For the proposed method, the average error decreased to under 3 m when the SNR exceeded 0 dB. It further dropped to below 0.3 m when the SNR surpassed 15 dB. At SNR levels above 20 dB, the average error converged to approximately 0.16 m. In contrast, the baseline method showed a tendency to stabilize at an average error of about 2.5 m, even when the SNR increased beyond 15 dB. When the SNR was below 0 dB, the performance difference between the two methods was less than 1 m. However, as the SNR exceeded 0 dB, a significant performance gap emerged, ranging from 1 m to a maximum of 2.34 m. This performance difference can be attributed to the proposed method’s effective utilization of angular information, which becomes increasingly beneficial as the signal quality improves with higher SNR.

4.2. Field Test

In this study, field tests were conducted with drones positioned at various locations to evaluate the performance of the proposed algorithm across different coordinates and verify its effectiveness in real-world conditions.

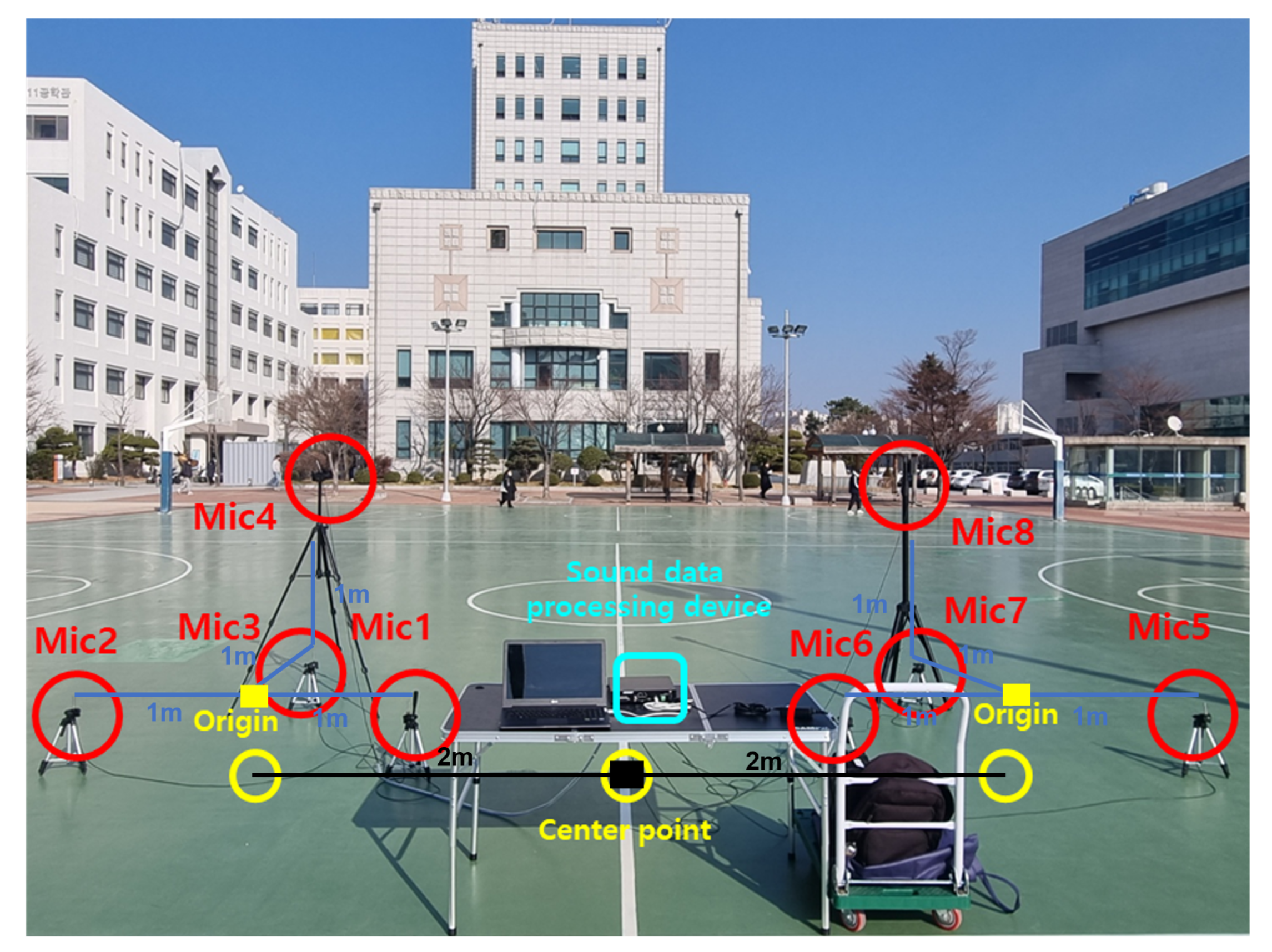

The acoustic signals generated by the drones were captured using an array of eight PCB Piezotronics 130F22 microphones (PCB Piezotronics, Depew, NY, USA), each with a 0.25-inch diaphragm, 45 mV/Pa sensitivity, and a frequency response of 10 Hz–20 kHz. These microphones were configured in a distributed array, as shown in

Figure 8, to enhance localization accuracy by leveraging the time difference of arrival and the GCC-PHAT techniques.

The microphone arrays were deployed in a structured configuration, ensuring optimal coverage for wide-area drone localization. The microphone configuration was identical to that shown in

Figure 8, with the distance between the center of each microphone array and the microphones in the xy-plane set to 1 m, while the distance from the coordinate system’s origin to the center of each microphone array was 2 m. This arrangement was designed to maximize localization performance while maintaining cost efficiency in large-scale deployments.

Each microphone was connected to a high-speed USB audio interface, and the signals were processed in real time by the NVIDIA Jetson AGX Xavier (NVIDIA Corporation, Santa Clara, CA, USA), which features an 8-core ARM CPU and a 512-core NVIDIA Volta GPU optimized for signal processing. The Jetson system performed real-time data acquisition and localization calculations, integrating the GCC-PHAT algorithm to estimate the drone’s position with high precision.

The localization error was defined as the distance between the actual drone coordinates and the estimated positions. The drones were positioned at the xy coordinates shown in

Figure 9, with altitudes of 4 m and 6 m, respectively. These altitudes were slightly reduced to ensure accurate evaluation, as the low signal strength of the drones used in the experiment posed measurement challenges.

Due to the low acoustic intensity of the drone’s emitted sound, the maximum experimental distance was limited to 10 m to ensure reliable detection. Despite this constraint, the proposed localization method demonstrated accurate performance within this range, validating its feasibility.

Furthermore, the planar wave approximation remains applicable in this setup, as the wavelength of the drone’s sound is relatively short compared to the microphone array’s spatial configuration, minimizing spherical wavefront distortions.

Following the placement of the drones, 10 measurements were taken at each location, and the average values were used to evaluate the localization performance. The results, as shown in

Table 3 and

Table 4, demonstrate the performance of the proposed algorithm in comparison to the baseline method.

As can be seen from the tables, the proposed method showed an average error reduction of approximately 1.7 m in all configurations of the real-world test environment. The variance was also reduced by approximately 0.9575 m2. These reductions demonstrate consistent localization performance across various angles, indicating the method’s effectiveness in minimizing errors even under different conditions.

While the baseline method outperformed its simulation results in real-world tests, the proposed method demonstrated slightly reduced performance under real-world conditions. This discrepancy can be explained by two key factors. First, significant background noise during the experiments led to a lower SNR, which negatively impacted the performance of the proposed method. Second, in the experimental setup, the drone was positioned 5 m lower than in the simulations due to difficulties in collecting sound signals at altitudes above 10 m. This adjustment reduced the angular difference between the azimuth and AOA. This reduction in angular disparity contributed to the improved performance of the baseline method in comparison to the simulation results.

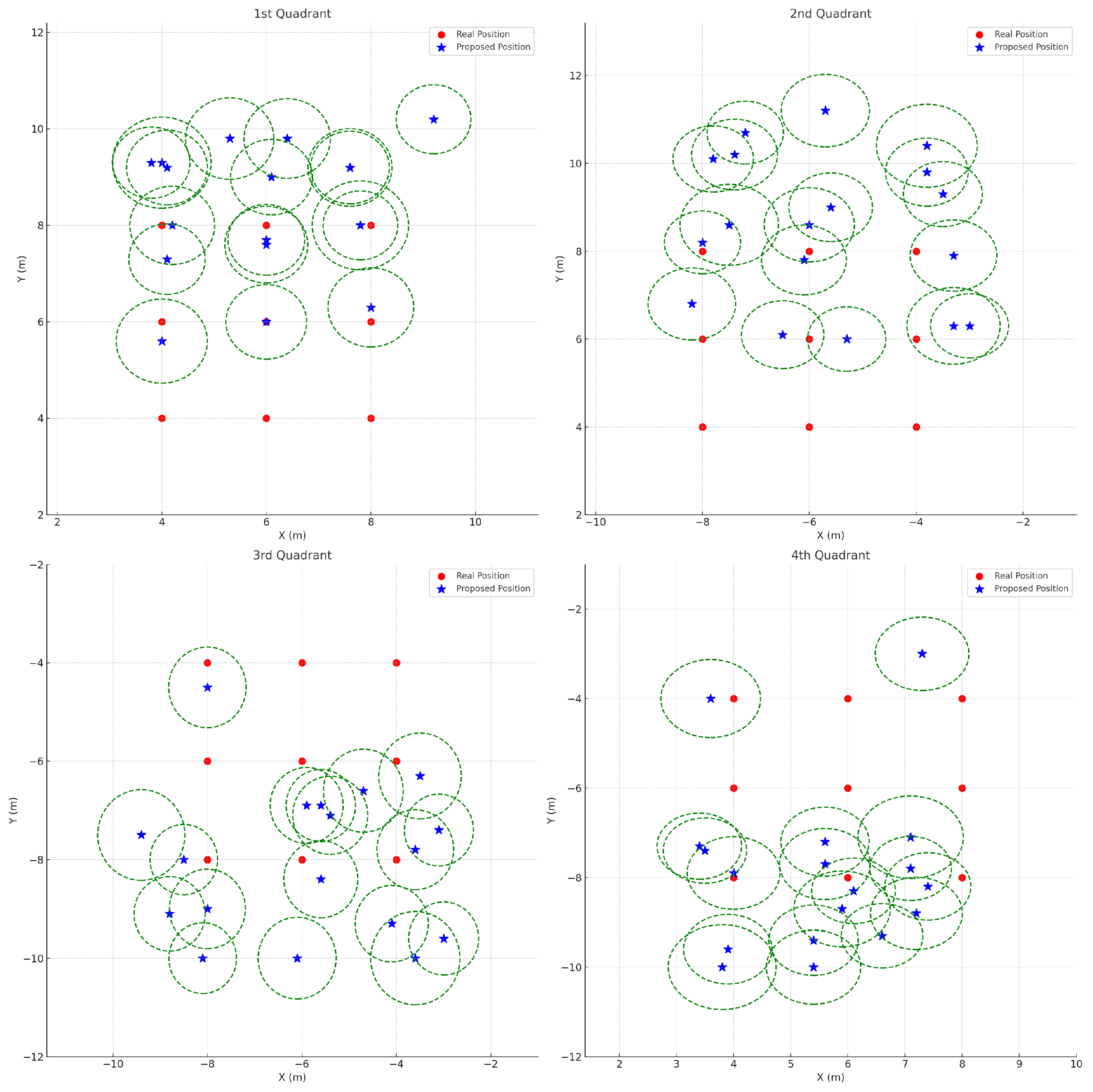

In

Figure 10, the error bars—depicted as green dashed circles—illustrate the 95% confidence intervals of the localization results obtained using the comparison method. These intervals were computed using the average standard deviations across all quadrants (

,

,

), a sample size of

, and a

t-value of 2.26. Notably, the comparison method exhibits relatively large localization errors in each quadrant, particularly along the y-axis in the second and fourth quadrants. This suggests that the baseline approach, which does not adequately account for the discrepancy between the azimuth and the angle of arrival (AOA), struggles to accurately estimate the true direction of the sound source, especially when the source is at a significant altitude. The resulting reliance solely on the AOA leads to increased errors, as evidenced by the wide error bars.

Moreover, because the estimation of the z-axis coordinate depends on the accuracy of the x- and y-axis estimates, the large variance in y-axis localization further degrades the performance of z-axis estimation. The overall large error bars in

Figure 10 serve as a clear indication of the increased uncertainty associated with this method.

In contrast,

Figure 11 shows the localization results achieved using the proposed method. Although the same confidence interval calculation approach is used, the average standard deviations here (

,

,

) differ, reflecting a refined estimation process. Consequently, the proposed method demonstrates reduced localization errors and lower variance—particularly along the y- and z-axes—as compared to the baseline method. The smaller error bars in

Figure 11 indicate that incorporating azimuth angle calculations into the algorithm substantially improves localization accuracy for sound sources at high altitudes.

Overall, the comparison between

Figure 10 and

Figure 11 demonstrates that the baseline (comparison) method suffers from significant localization uncertainty, whereas the proposed method offers superior performance by effectively integrating azimuth information. This enhancement is critical for applications such as drone detection, where precise 3D localization is essential.

5. Discussion

This paper proposed a localization technique that employs distributed placement and azimuth angle estimation to accurately determine the position of high-altitude drones. The effectiveness of the proposed method was validated through both simulations and real-world experiments.

The simulation results demonstrated that the localization error of the proposed method was significantly lower than that of the baseline method in an ideal channel. Additionally, the proposed method outperformed the baseline in noisy environments with SNRs ranging from −20 dB to 30 dB, with the performance gap widening as the SNR increased. Real-world test results further confirmed the superior localization accuracy of the proposed method across various locations. On average, the proposed method achieved a 1.7 m reduction in error compared to the baseline method, along with a variance reduction of 0.9575 m2. These findings indicate that incorporating azimuth angle estimation enhances both the accuracy and precision of drone localization systems.

While the proposed method has demonstrated significant improvements in drone localization accuracy, several areas remain for future exploration and enhancement.

One critical direction for future research is the extension of the proposed method to multiple drone scenarios (Drone Swarm Localization). The current approach focuses on localizing a single drone at a time; however, real-world applications often involve detecting and tracking multiple drones simultaneously. To address this, future studies could explore multi-source localization techniques, such as array signal processing for simultaneous TDOA estimation or machine learning-based source separation methods.

Another important research direction is expanding the operational range of the system. The experimental range was limited to 10 m due to the low acoustic intensity of the drone’s emitted sound, but real-world drone detection scenarios often require localization at distances exceeding 100 m. To extend this capability, future research could integrate high-sensitivity microphones, directional microphone arrays, or advanced signal processing techniques to improve detection at longer distances.

Furthermore, the proposed localization system could be integrated with emerging technologies such as 5G networks and edge computing to enable real-time, distributed processing. Leveraging 5G-enabled IoT infrastructure would allow for low-latency processing and network-based localization, making the system more scalable and efficient for real-world deployment.

Additionally, future research could explore the applicability of AI-driven localization models, which could dynamically adapt to environmental noise conditions and optimize localization performance in challenging urban or battlefield environments. Techniques such as deep learning-based denoising and adaptive filtering could further enhance the robustness of drone acoustic localization.

Lastly, this study focused on passive acoustic localization, but future research could investigate the integration of multi-modal sensor fusion, combining acoustic localization with RF-based positioning, computer vision, or LiDAR-based tracking to achieve higher reliability and accuracy in complex operational environments.

By addressing these challenges and integrating with next-generation technologies, the proposed method has the potential to significantly advance the capabilities of drone localization and surveillance systems for applications in aerospace security, smart cities, and autonomous monitoring systems.

6. Conclusions

This study introduced an innovative localization strategy that employs two sets of distributed microphone arrays in combination with the Generalized Cross-Correlation Phase Transform (GCC-PHAT) method to enhance anti-drone detection capabilities. Unlike traditional localization approaches, the proposed method achieves higher precision by accurately estimating the azimuth angle while leveraging the unique acoustic characteristics of drones. The key contribution of this study lies in the development of a robust localization framework that improves both accuracy and stability, even in the presence of noise and environmental uncertainties.

The effectiveness of the proposed system was validated through both simulation and field experiments, demonstrating substantial improvements in localization accuracy over existing techniques. In simulation environments, the proposed method significantly reduced both the mean and variance of localization errors compared to conventional approaches, leading to more precise positioning results. Additionally, in noisy conditions, the proposed system consistently outperformed baseline methods across a wide range of Signal-to-Noise Ratio (SNR) levels, achieving a peak localization improvement of 2.13 m when the SNR exceeded 0 dB. Notably, while traditional methods exhibited decreased accuracy along the y-axis and z-axis, the proposed approach maintained stable performance across all axes, highlighting its robustness in dynamic environments.

Field tests further reinforced the practical applicability and reliability of the proposed localization framework, as real-world experiments produced results highly consistent with simulation findings. By effectively differentiating between azimuth and elevation angles, this study demonstrates that the proposed method provides significant enhancements in localization accuracy, particularly in challenging and dynamic conditions where multi-path interference and environmental noise are prevalent.

In future work, the adaptability of the proposed method will be assessed across various drone types and broader environmental settings. Further comparisons with alternative localization techniques will be conducted to refine the algorithm and enhance real-time performance. Additionally, future research will explore the integration of advanced AI-driven models and 5G-enabled IoT networks to develop a scalable and efficient localization system for real-world deployment.

By addressing these challenges, this study contributes to advancing the field of acoustic drone localization and provides a foundation for future developments in aerospace security, smart surveillance, and autonomous monitoring systems.