1. Introduction

Oil spills pose threats to ecosystem balance and human health. They constitute emergencies in which the correct identification of the affected area and prompt mitigation measures are critical to avoid environmental damage and financial losses. At sea, the largest spills in terms of volume occur at oil extraction sites, where they can be dramatically larger than any spill involving tankers. For example, the explosion at the Deepwater Horizon mobile drilling rig on 20 April 2010 is estimated to have released around 700,000 tons of crude oil into the Gulf of Mexico [1,2]. A tanker spill is considered large if it exceeds 700 tons, as indicated in [3], where the authors also note that only one such spill occurred worldwide in 2023. Very large spills are thus low-frequency events, but their potential environmental damage is devastating: they harm marine birds, mammals, fish, and shellfish, among others, affecting not only the ecosystem but also parts of the food chain and human food resources [4]. In many cases, because of its lower density, the oil forms so-called oil slicks, i.e., continuous films on the water surface; however, heavy oils can also sink once the lighter compounds evaporate, putting organisms in all water layers and on the seabed at risk. In birds and mammals, oil impairs the insulating and water-repellent properties of fur and feathers, leaving the animals exposed to harsh elements and causing death by hypothermia [5]. Oil pollution affects the health and reproductive capacity of fish, birds (pelicans, gulls, and many others), sea turtles, and mammals (pinnipeds, cetaceans, manatees, sea otters, polar bears, dolphins, whales), and it also affects invertebrates and plants [5,6]. Apart from their dramatic impact on the ecosystem, oil spills also have economic implications, not only short-term ones related to cleanup activities but also medium- and long-term ones, e.g., by preventing the exploitation of fishery resources. The cost of cleaning up an oil spill is difficult to model and can vary significantly, as it depends on a multitude of factors, such as oil type (chemical composition), quantity, viscosity, tendency to emulsify, environmental conditions (temperature, wind, waves), weathering, and spill location; moreover, many cost models and algorithms are inherently limited because they are based on empirical studies [7]. Estimates for specific cases indicate that the financial losses can be very high: a ship that ran aground in New Zealand in 2011 released more than 350 tons of heavy fuel oil and other contaminants, with a conservative cleanup cost estimate of 60–70 million USD [8], and, according to its own reports, the Deepwater Horizon accident cost the operating company around 69 billion USD, of which approximately 14 billion USD was committed to response and cleanup activities alone [9].

Given the dramatic consequences of oil spills, intensive research efforts have been devoted to mitigating their effects. A critical part of such efforts is the detection and accurate delineation of the spill’s extent, which allows for rapid intervention to contain the spread and remove the spilled substances from the area. Remote sensing methods have been extensively investigated in recent years for observing and delimiting spills in open water. Both passive and active sensors are used to detect oil spills. Passive sensors measure radiation that is naturally reflected or emitted by the observed objects, whereas active sensors illuminate the observed surface with their own radiation (light) source and then measure the response of the observed object to this radiation. Optical imagery can help distinguish oil from other materials (algae, water). In the UV spectral region, one of the most widespread techniques for crude oil relies on laser fluorosensors, which are active systems that use a UV laser to excite oil compounds, which then emit radiation at longer wavelengths due to their fluorescent properties. The sensor can then measure the difference between the radiation received from polluted and clean areas [10,11,12,13], even at night. Because of the additional equipment (UV lasers), such systems are heavy and expensive. Some works have investigated passive UV sensors for crude oil spill detection, based on the contrast between the spill and water, using airborne hyperspectral sensors [14,15,16] and spaceborne multispectral sensors [17,18]. In the visible range, non-emulsified oil has no specific spectral features, which, together with the influence of other factors (illumination-view geometry, oil condition and thickness, weather), hinders its straightforward discrimination [19,20,21,22]. Multispectral sensors carried by satellite platforms such as Sentinel-2, MODIS, or Landsat have also been exploited for oil spill detection (see [19] and the references therein). Hyperspectral data (airborne, spaceborne, or point measurements) benefit from high spectral resolution and can therefore capture fine features in the short-wave infrared (SWIR) range, which can help distinguish between oil and other materials, and even between different types of oil [23,24,25,26,27,28]. However, they require intensive preprocessing and are more expensive to obtain than other imagery types, especially when real-time monitoring is targeted [29]. Thermal imagery in the long-wave infrared spectral region (8–14 µm) has proven useful for detecting thick oil layers (>500 µm), but not thinner ones [30,31]. A considerable number of studies address oil spills using Synthetic-Aperture Radar (SAR) images [32,33,34,35]. SAR offers several advantages over optical imagery: among others, it can operate day and night, it penetrates clouds and smoke, and it is not affected by sun glint on water areas. The principle behind detecting oil spills on water in SAR imagery is that the oil film attenuates the surface roughness and thus reduces the backscatter from the water; the affected area therefore appears darker than the surrounding water (see [36] and the references therein).
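The dark-spot principle described above can be illustrated with a deliberately simplified thresholding sketch. It assumes calibrated backscatter values in linear scale, converts them to decibels, and flags pixels lying more than `k` standard deviations below the scene mean. The function name `dark_spot_mask` and the default `k` are hypothetical choices, not taken from any of the cited methods; operational SAR pipelines typically use adaptive thresholds and must also reject look-alikes such as low-wind areas.

```python
import math
import statistics


def dark_spot_mask(sigma0_linear, k=1.5):
    """Flag candidate oil pixels in a SAR scene by global dark-spot thresholding.

    sigma0_linear: flat sequence of calibrated backscatter values (linear scale, > 0).
    k: hypothetical threshold factor, in standard deviations below the scene mean.
    """
    # Work in decibels, the scale on which backscatter contrasts are usually expressed.
    db = [10.0 * math.log10(v) for v in sigma0_linear]
    threshold = statistics.fmean(db) - k * statistics.pstdev(db)
    # Darker than the threshold -> candidate oil (or look-alike) pixel.
    return [value < threshold for value in db]


# Toy scene: eight "rough water" pixels and two pixels damped by 10 dB.
scene = [0.05] * 8 + [0.005] * 2
print(dark_spot_mask(scene))
```

In the toy scene, only the two damped pixels fall below the threshold. A single global threshold is used purely for readability; it would break down in scenes with strong wind or incidence-angle gradients.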

In this paper, a special case of oil spills is investigated: oil spills occurring in port environments. In such environments, the water surface may, at least locally, not be rough enough for the damping effect of an oil film to be detectable, which limits the effectiveness of SAR-based methods. Oil spills in ports have characteristics that differ from those of spills at sea or on shorelines, hindering the application of many methods developed for open-sea spills. First, the spilled oil is usually refined oil rather than the crude oil involved in spills from extraction platforms and large tankers. Second, the oil layer is thin, so methods based on thermal or SWIR data are not effective. Third, ports are crowded places containing fixed constructions and mobile objects, where spaceborne imagery, either optical or SAR, is less effective due to its relatively coarse spatial resolution. Another very important characteristic is that, unlike at sea, large numbers of people are usually present in ports (port employees, clients, visitors). Oil spills in ports also occur with relatively high frequency. Even small spills, e.g., leaks during the refueling of ship tanks, should be taken seriously, as their harmful effects on the environment are certain. Furthermore, a large number of studies show that prolonged exposure to oil compounds can induce a variety of diseases in humans, including an increased risk of cancer [37,38,39,40]. Spilled oil should therefore be cleaned up as soon as possible to minimize the exposure of port workers. Fast intervention is also needed from an economic point of view: when a spill occurs, financial losses are caused not only by the cleanup operations but also by the interruption or disturbance of the port’s activities. Ideally, a solution should be based on lightweight, low-cost sensors that can be operated in the port from mobile platforms (e.g., drones or vessels), with a very low risk of injury or physical damage in case of accidents.

As mentioned above, many of the available techniques are either not applicable to, or have not been tested for, the specific traits of spills occurring in port environments. However, for thin oil films, lightweight passive sensors in the ultraviolet (UV) spectral region, which are underexplored compared to other methods [14], could be useful in delimiting the spill. Specifically, the oil–water contrast is usually positive due to the higher refractive index of oil compared to water, such that the oil reflects more incoming radiation than the surrounding water (see seos-project.eu; last accessed: 5 September 2024). However, a positive contrast is not specific to oil: it may also be caused by cloud reflections. Moreover, a second physical phenomenon, linked to surface roughness, competes with the refractive index effect. If the oil dampens the surface roughness sufficiently, the signal scattered outside the specular direction is higher over water than over oil, resulting in a negative oil–water contrast. Such a negative contrast can also occur between wind slicks and water. Nevertheless, for ports, the refractive index effect, which leads to a positive oil–water contrast, is assumed to dominate.
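The refractive index argument can be made concrete with the Fresnel reflectance of a flat interface at normal incidence, R = ((n1 - n2) / (n1 + n2))^2, with n1 = 1 for air. The sketch below uses illustrative refractive indices of 1.34 for water and 1.45 for oil. These are assumptions, not values measured in this study, and the model ignores absorption, surface roughness, and thin-film interference, all of which matter for real thin oil films.

```python
def fresnel_normal_reflectance(n_medium, n_incident=1.0):
    """Specular reflectance of a flat interface at normal incidence.

    Simplification: semi-infinite, non-absorbing medium; no thin-film interference.
    """
    return ((n_incident - n_medium) / (n_incident + n_medium)) ** 2


# Assumed, illustrative refractive indices (not measured in this study).
N_WATER = 1.34
N_OIL = 1.45

r_water = fresnel_normal_reflectance(N_WATER)
r_oil = fresnel_normal_reflectance(N_OIL)
# Relative oil-water contrast: positive when oil reflects more than water.
contrast = (r_oil - r_water) / r_water
print(f"R_water={r_water:.4f}, R_oil={r_oil:.4f}, contrast={contrast:+.2f}")
```

With these assumed indices, the oil surface reflects roughly 60% more than water at normal incidence (R of about 0.034 versus 0.021), i.e., a positive oil–water contrast. The roughness effect described above is what this flat-surface model leaves out.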

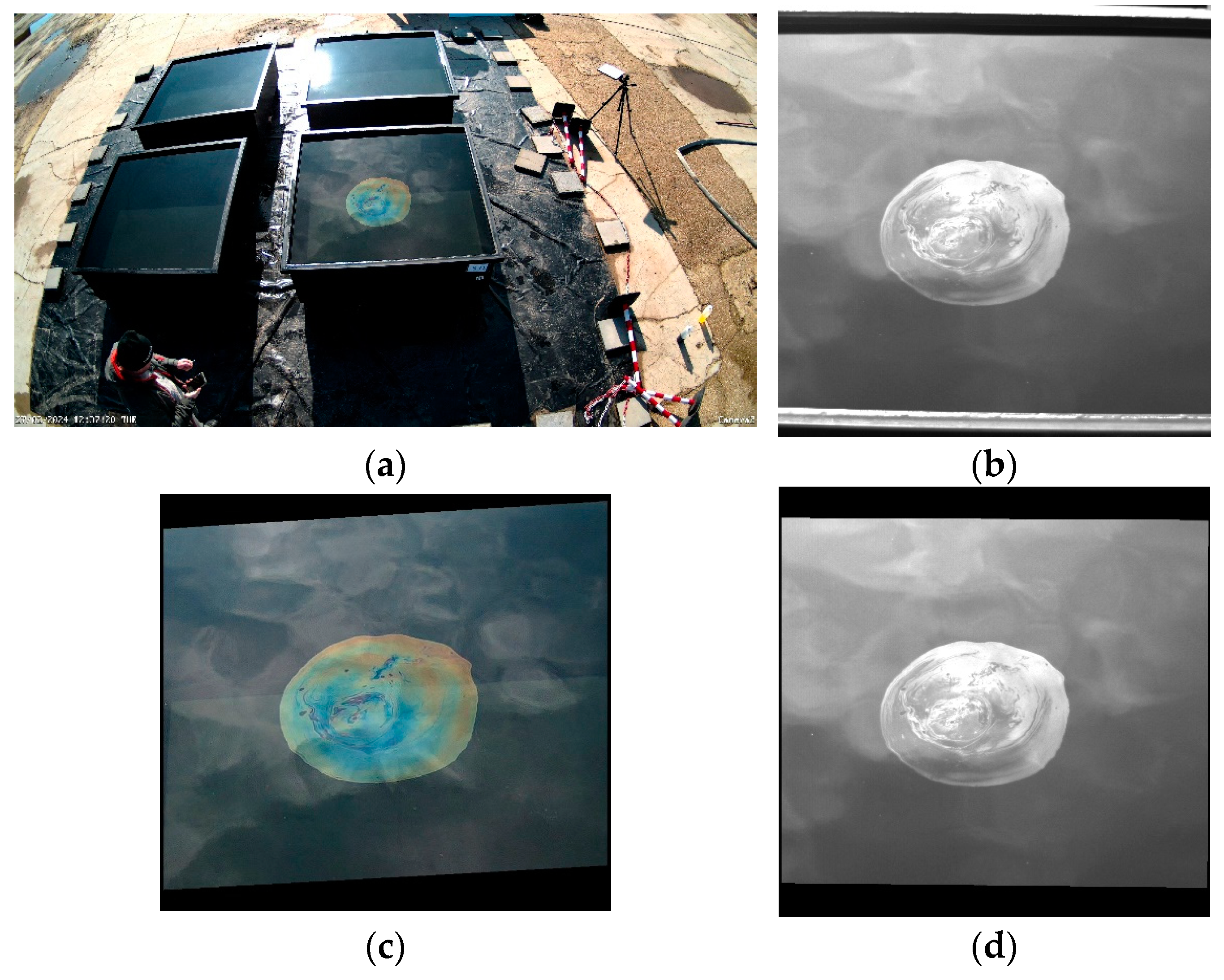

In this paper, the use of passive UV sensors for oil spill detection in port environments is investigated, motivated by the behavior expected from the underlying physical phenomena and by the identified lack of in-depth studies on this subject. Specifically, this study uses an experimental approach to shed light on the following fundamental questions: (i) Can passive UV sensors sense oil spills under the conditions occurring in ports? (ii) Under which viewing and environmental conditions is it possible to detect oil spills using passive UV sensors? (iii) What are the advantages of passive UV sensors over RGB sensors? (iv) Are the findings obtained in controlled environments applicable to real-world images? This study is the first to apply a twofold experimental approach to tackle these questions. First, experiments were conducted in a controlled environment to assess the capability of a passive UV sensor to observe oil spills under various viewing angles and environmental conditions. Statistical metrics were computed to analyze the separability between the oil and water areas under various experimental configurations. Second, the same camera was installed on a vessel in a real port environment to analyze whether the findings derived under controlled conditions would be confirmed by observations made from the vessel during real spill events.
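This passage does not name the separability metrics used in the study. As a hypothetical illustration only, the sketch below computes two common two-class measures for oil and water pixel samples of a single band: the Fisher ratio and the Bhattacharyya distance under a univariate Gaussian assumption. Larger values indicate better separability. The function names and the made-up sample values are illustrative and are not study data.

```python
import math
import statistics


def fisher_ratio(a, b):
    """(mean_a - mean_b)^2 / (var_a + var_b); larger means better separated."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    va, vb = statistics.pvariance(a), statistics.pvariance(b)
    return (ma - mb) ** 2 / (va + vb)


def bhattacharyya_gaussian(a, b):
    """Bhattacharyya distance between two classes modelled as 1-D Gaussians."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    va, vb = statistics.pvariance(a), statistics.pvariance(b)
    if va == 0 or vb == 0:
        raise ValueError("both classes need non-zero variance")
    mean_term = 0.25 * (ma - mb) ** 2 / (va + vb)
    var_term = 0.5 * math.log((va + vb) / (2.0 * math.sqrt(va * vb)))
    return mean_term + var_term


# Made-up digital numbers, chosen only to exercise the functions.
samples = {
    "band_A": ([120, 125, 130, 128, 122], [100, 98, 104, 101, 97]),
    "band_B": ([110, 118, 104, 115, 108], [105, 99, 112, 101, 107]),
}
for band, (oil, water) in samples.items():
    print(band, round(fisher_ratio(oil, water), 2),
          round(bhattacharyya_gaussian(oil, water), 2))
```

Computing the same metrics per band and per experimental configuration is one way to rank bands by discriminative power. The Gaussian assumption is convenient but may not hold for skewed pixel distributions, for example near glint.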

The rest of this paper is organized as follows: Section 2 describes the experimental setup. Section 3 presents the findings extracted from the performed experiments, followed by a discussion in Section 4. Finally, Section 5 draws the overall conclusions and includes pointers for future work.

4. Discussion

The experiments designed in this study addressed the particular case of oil spills in port environments. The need for such a study stems from the particularities of ports compared to open-sea environments, as well as from the difficulties in applying surveillance and detection methods established for the open sea. The limitations can be summarized as follows: (i) Ports are crowded environments where free satellite imagery, including the optical and SAR images provided by the Sentinel-2 and Sentinel-1 satellites, does not have a sufficiently high spatial resolution to resolve all objects; moreover, high-resolution satellite images offered by commercial companies incur additional costs and, like free-access data, do not provide continuous monitoring of the port. (ii) Active sensors, such as those exploiting the fluorescence of oil compounds, are relatively heavy and bulky, introducing additional safety and security risks if used in ports. (iii) The oil spilled in ports is usually refined and, therefore, different from the crude oil spilled in the open sea, so the applicability of established methods to ports needs to be assessed. (iv) Other sensing solutions, such as SWIR cameras, are more effective for thick oil layers than for the thin oil slicks typical of ports. (v) The risks to human health are higher in ports than in the open sea due to the presence of people in the area; thus, the intervention procedures are different, and continuous monitoring is needed. Therefore, lightweight systems that are easily deployable in operational setups are preferable.

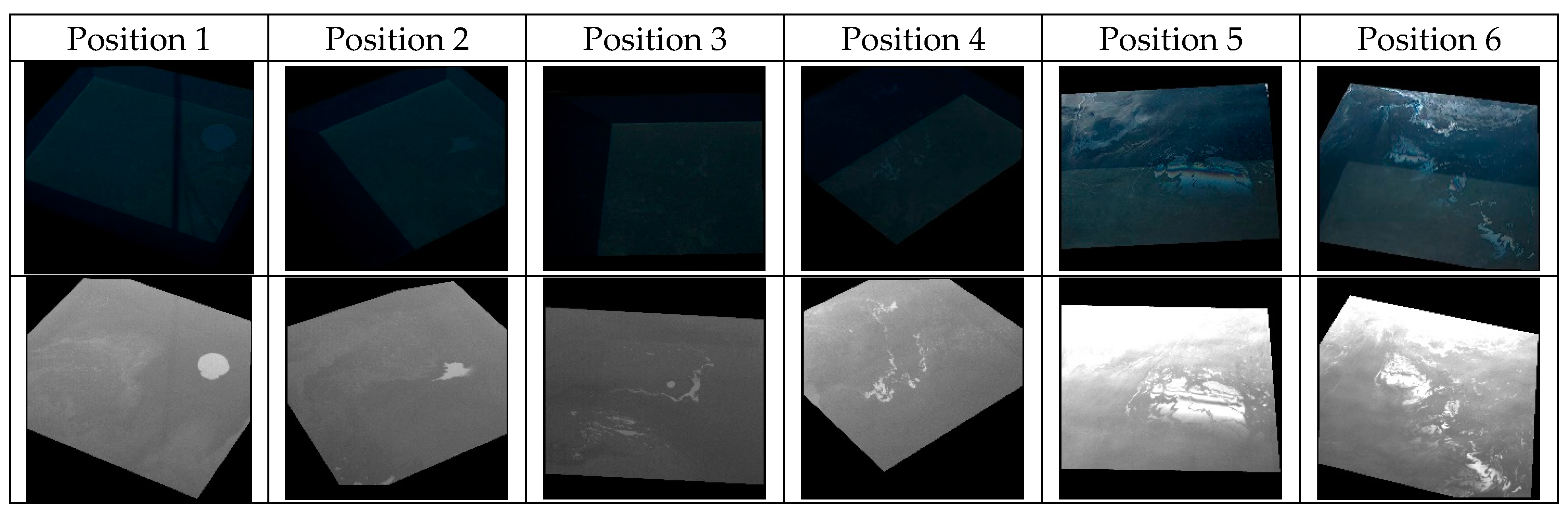

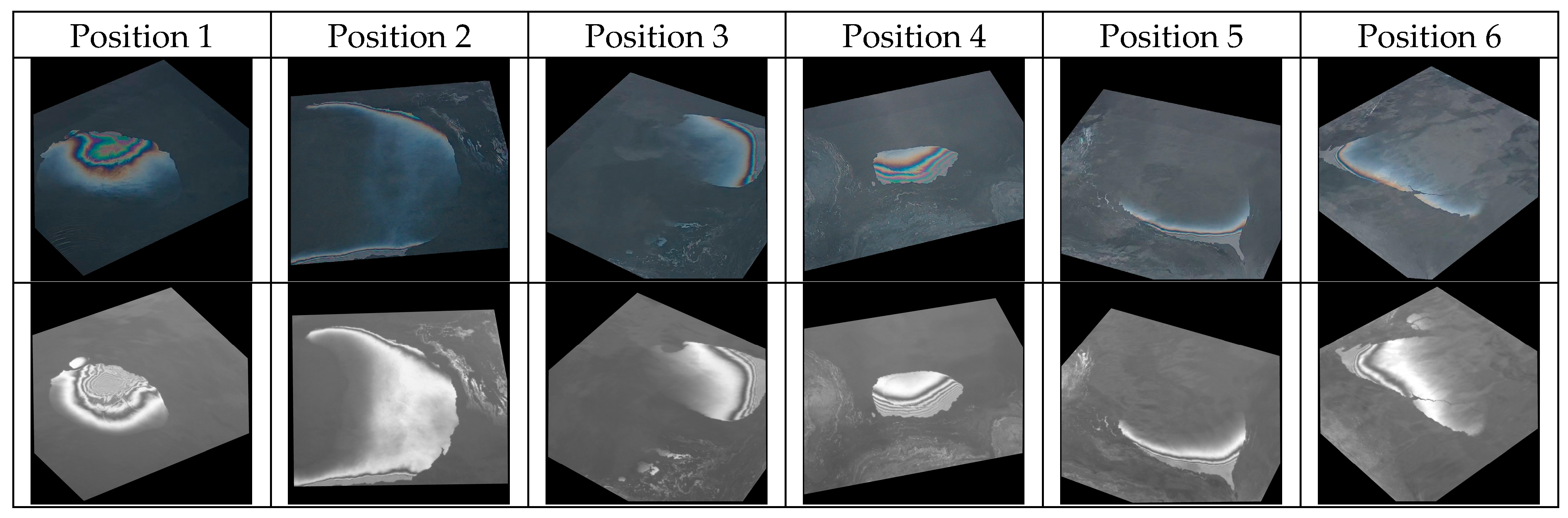

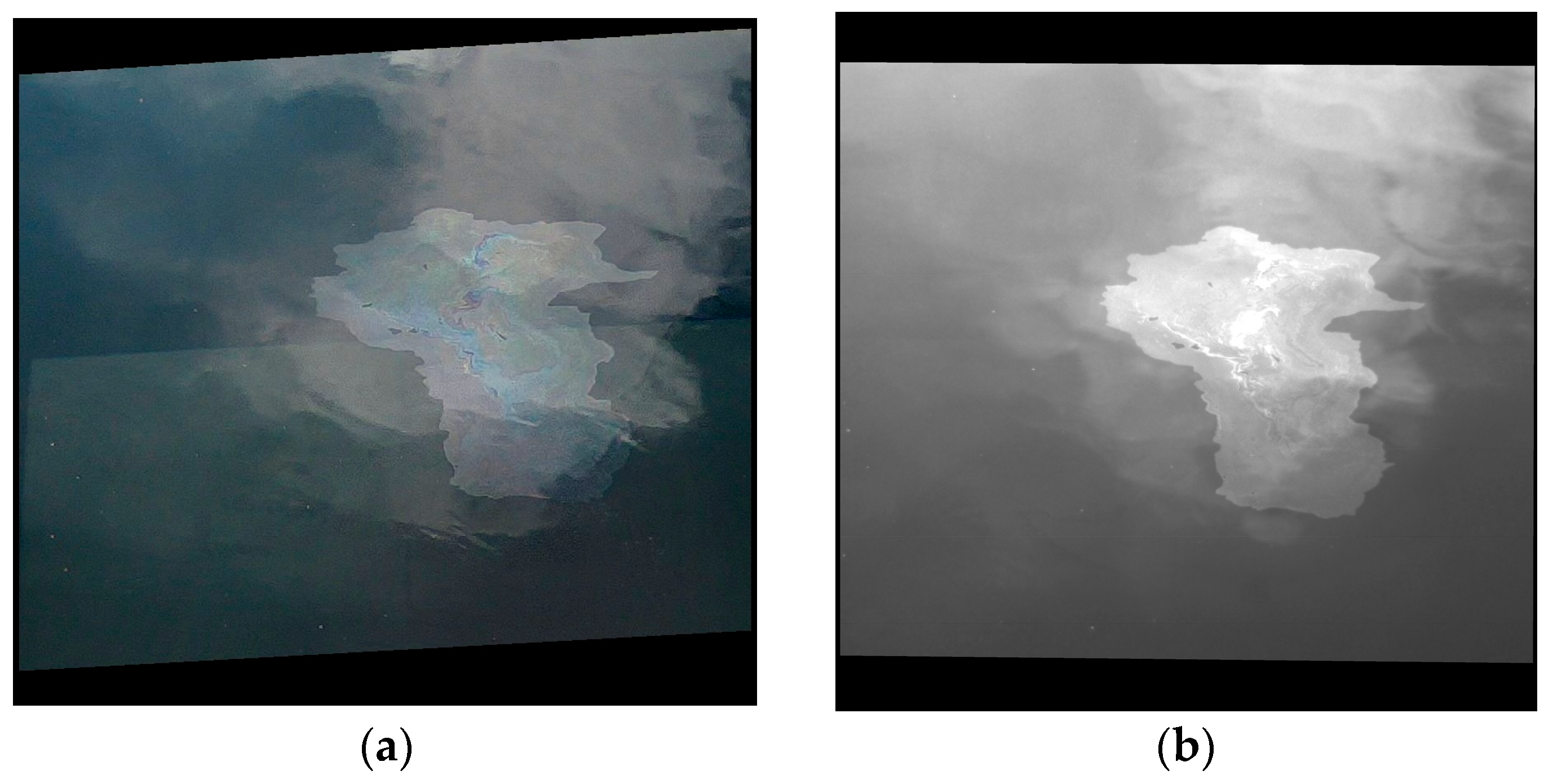

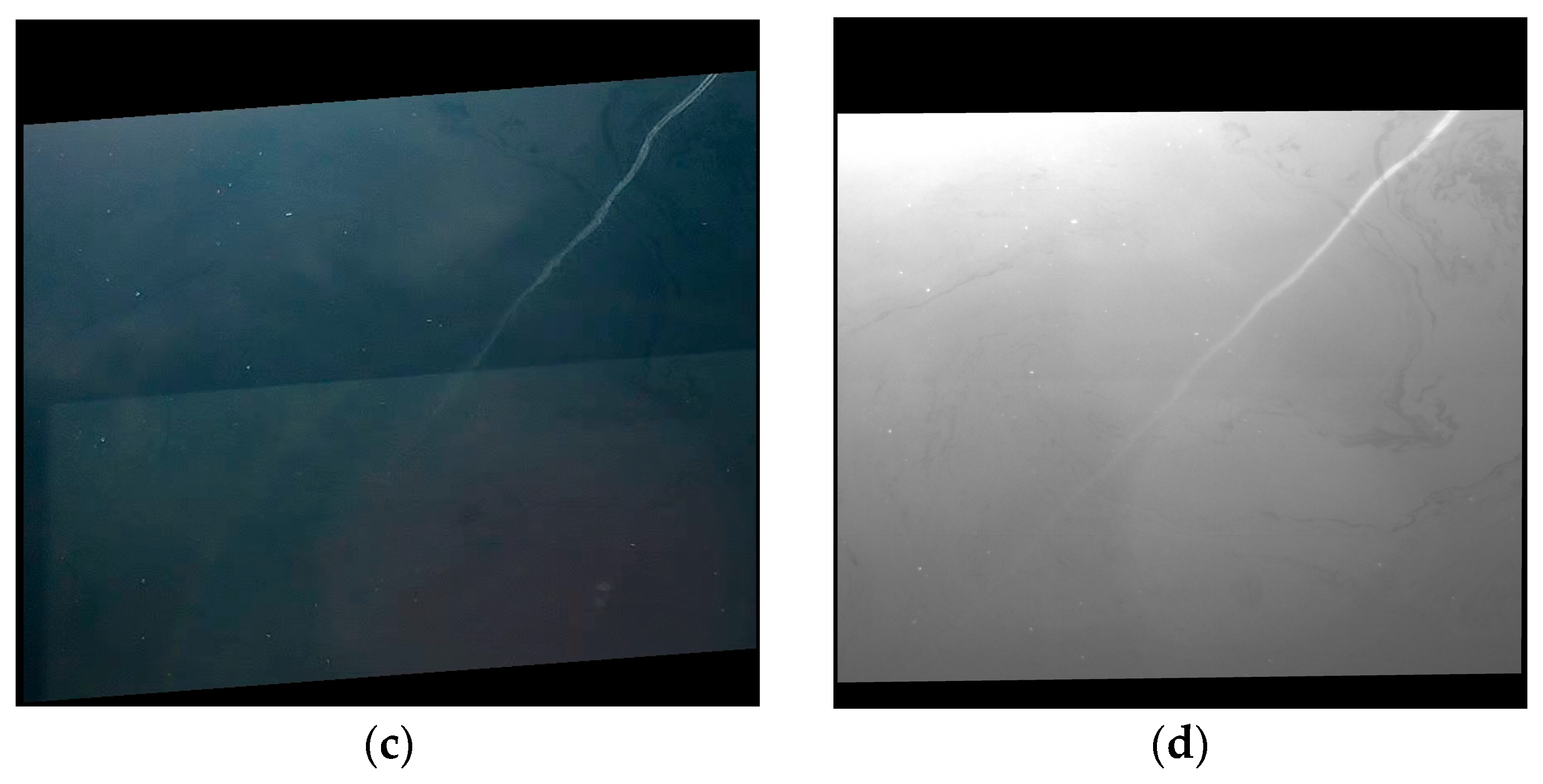

The controlled experiments designed in this study showed that the UV camera has higher discriminative power between the oil and water classes in almost all cases. As shown in Table 5, which expands upon Table 2, Table 3 and Table 4 by synthesizing the discriminative power of each band in terms of the various metrics, there is only one case where the UV band is inferior to another band: the near-nadir viewing of used oil. Unlike the other oil types, used oil may contain not only oil but also nano- or microparticles resulting from the movement of lubricated metallic parts, as well as other residues, e.g., from the combustion of fuel or oil. Thus, the fact that the red band outperforms the other bands in this case might be due to these non-oil components rather than to the oil itself. As an overall observation, however, near-nadir viewing appears to be the only case where the differences between bands are reduced for diesel and marine diesel. This viewing geometry is impractical in port environments because it covers a much smaller area than oblique viewing angles and is therefore not suited to the surveillance of large areas; the other viewing angles, where the UV camera outperforms the RGB camera, are thus more representative of operational setups. Equally importantly, the UV camera showed much lower sensitivity to bottom effects. This matters if tailored algorithms are designed for the automated delineation of oil spills and other classes are considered in the analysis, which was beyond the scope of the current study. For example, seagrass might be observed by an RGB camera but not by a UV camera, and the same holds for underwater plumes. A drawback that affects UV and RGB images equally is the presence of clouds and other undesired reflections, which are captured by both data types and can confuse automated algorithms by introducing ambiguity between classes. In images affected by sun glint, the oil can be spotted around the saturated areas in both UV and RGB images. However, unlike for satellite data, where sun glint has been shown to be advantageous because other physical phenomena prevail [18,51,52], glint is not necessarily beneficial for the UV camera, since the oil could be spotted from all tested positions. In practice, the data acquisition platforms (drones and/or ships) will scan the water surface while continuously changing position; thus, images affected by sun glint could be discarded from the analysis without significantly decreasing the chances of spotting the polluted area.
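Discarding glint-affected images, as suggested above, could be automated with a simple saturation test. In this minimal sketch, a frame is flagged when the fraction of saturated pixels exceeds a threshold. The function names, the saturation level (255, which assumes 8-bit data), and the 1% fraction are hypothetical and would have to be tuned for each camera, bit depth, and exposure setting.

```python
def is_glint_affected(pixels, saturation_level=255, max_saturated_fraction=0.01):
    """Return True if the share of saturated pixels exceeds the threshold.

    pixels: flat sequence of digital numbers for one frame.
    saturation_level, max_saturated_fraction: hypothetical defaults
    (255 assumes 8-bit data) that would need tuning per camera.
    """
    if not pixels:
        raise ValueError("empty frame")
    saturated = sum(1 for p in pixels if p >= saturation_level)
    return saturated / len(pixels) > max_saturated_fraction


def keep_usable_frames(frames, **thresholds):
    """Drop frames flagged as glint-affected, preserving the original order."""
    return [frame for frame in frames if not is_glint_affected(frame, **thresholds)]


clean = [100] * 100
glinty = [255] * 5 + [100] * 95  # 5% saturated pixels
print(len(keep_usable_frames([clean, glinty])))
```

Because the platform keeps moving, a conservative threshold that drops a few usable frames is likely acceptable. Saturation is only a proxy, however: weaker glint that does not saturate the sensor would pass this test.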

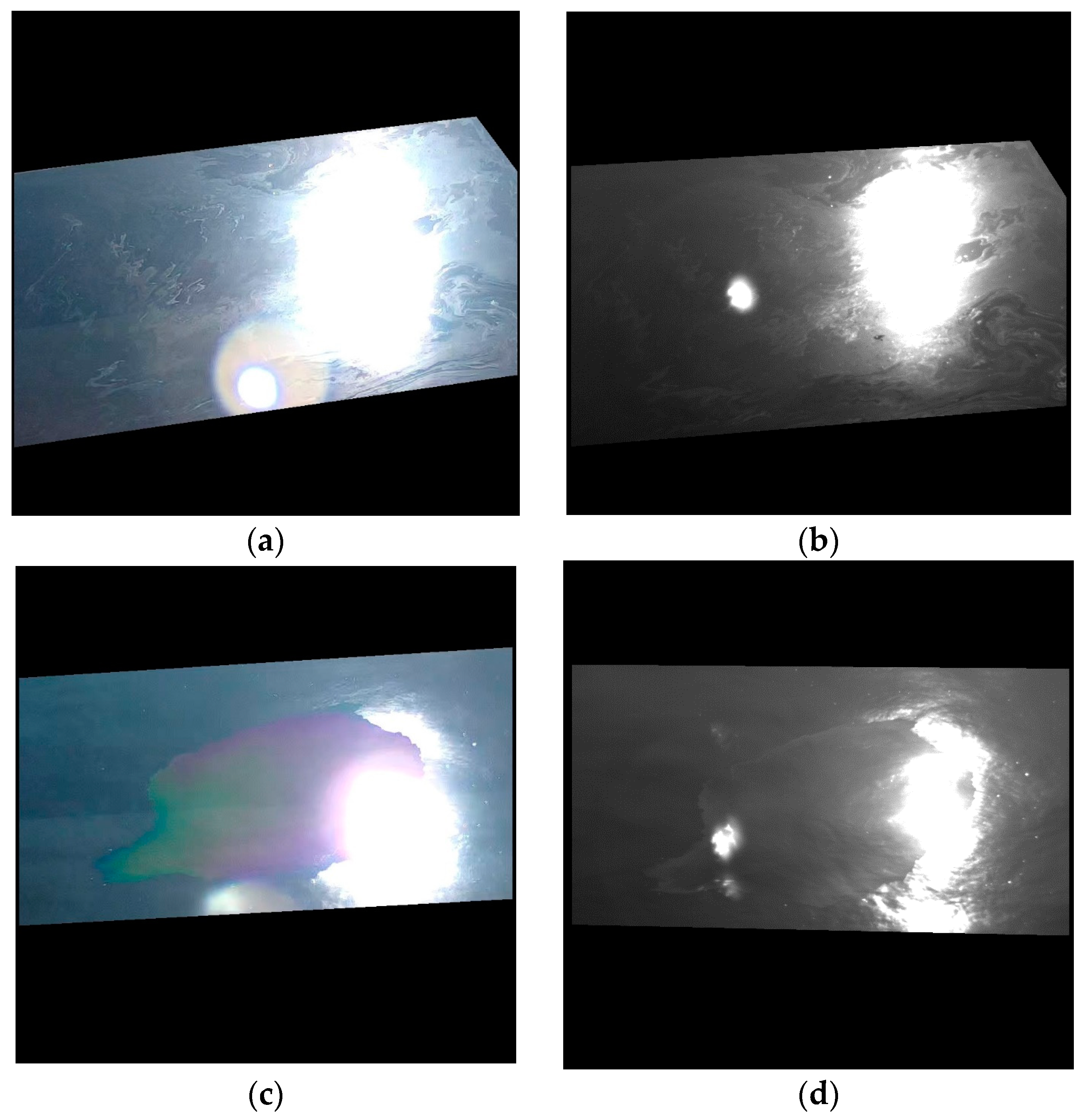

The two so-called rotation experiments, in which the cameras acquired images from a fixed viewing angle but with different relative viewing azimuth angles, showed that the UV camera does not depend significantly on illumination angles, sun glint cases excepted. In the reported experimental setup, environmental illumination had much less impact on the visibility of the spills in the UV imagery than in the RGB images, where the presence of the Sun strongly affected the visibility of the oil depending on the relative viewing azimuth, as shown in Figure 11 and Figure 12. In practical terms, the RGB camera is subject to stronger restrictions on observation angles than the UV camera if useful images of the spills are to be acquired, which is another advantage of the latter. However, before this observation can be claimed to be generally valid (irrespective of the acquisition setup), further investigations are needed, e.g., with automatic adjustment of camera settings enabled simultaneously on both systems.
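The relative viewing azimuth varied in the rotation experiments can be computed from the solar azimuth and the camera’s viewing azimuth. This sketch assumes both azimuths are given in degrees clockwise from north and that the viewing azimuth is the direction in which the camera points. Under this convention, 0° means the camera faces the Sun’s azimuth, where glint is most likely, and 180° means the Sun is behind the camera. Other remote sensing conventions differ by 180°, so the convention and the function name are assumptions.

```python
def relative_azimuth(sun_azimuth_deg, view_azimuth_deg):
    """Angular difference between Sun azimuth and camera viewing azimuth.

    Both inputs in degrees clockwise from north (assumed convention);
    the result is folded into [0, 180] degrees.
    """
    diff = abs(sun_azimuth_deg - view_azimuth_deg) % 360.0
    return 360.0 - diff if diff > 180.0 else diff


# Rotating the camera in 45-degree steps with the Sun at an azimuth of 135 degrees.
sweep = [relative_azimuth(135.0, a) for a in range(0, 360, 45)]
print(sweep)
```

Folding the angle into [0, 180] treats left and right of the solar plane as equivalent, which is reasonable for a flat water surface but discards which side of the Sun the camera was on.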

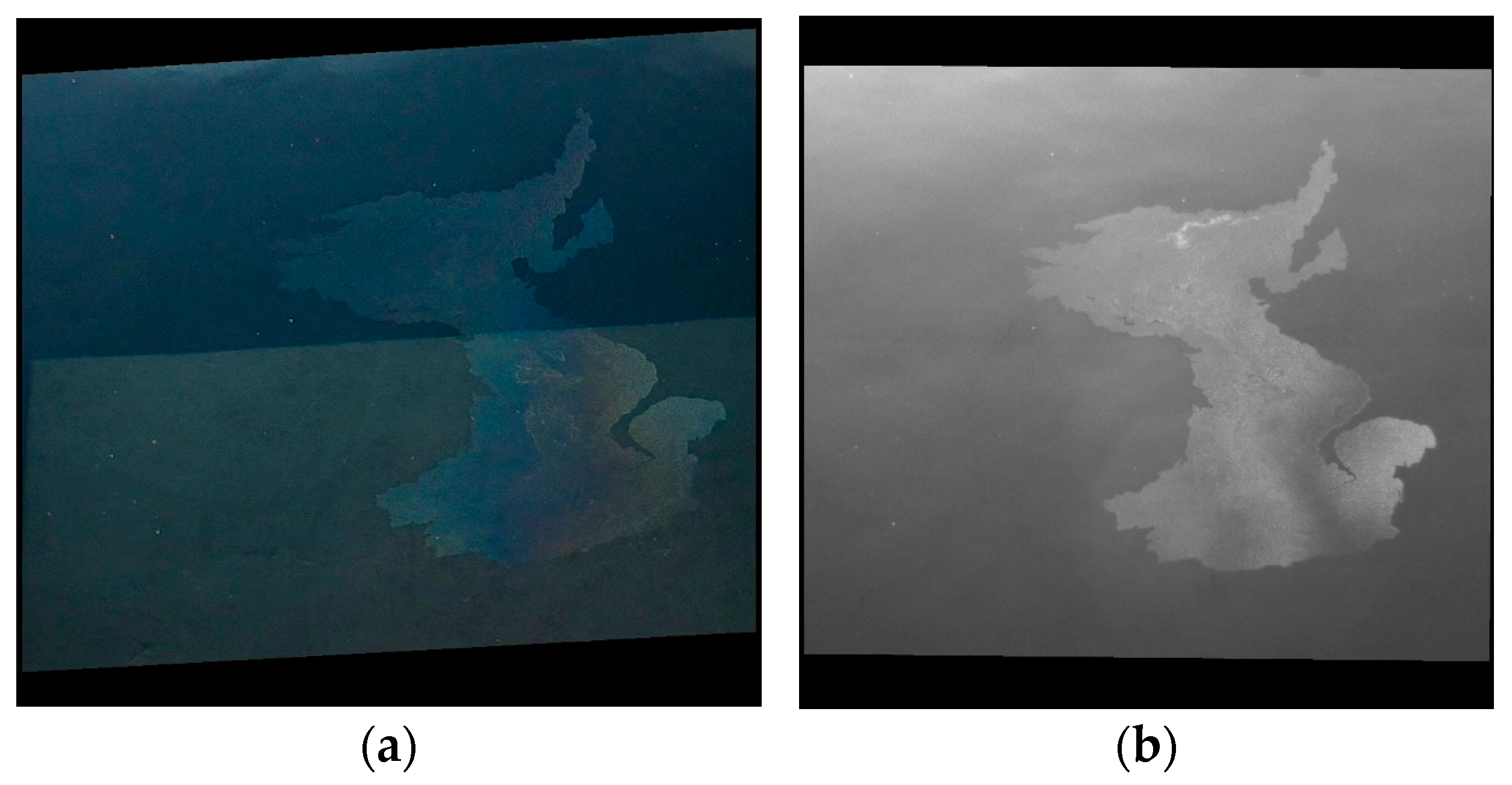

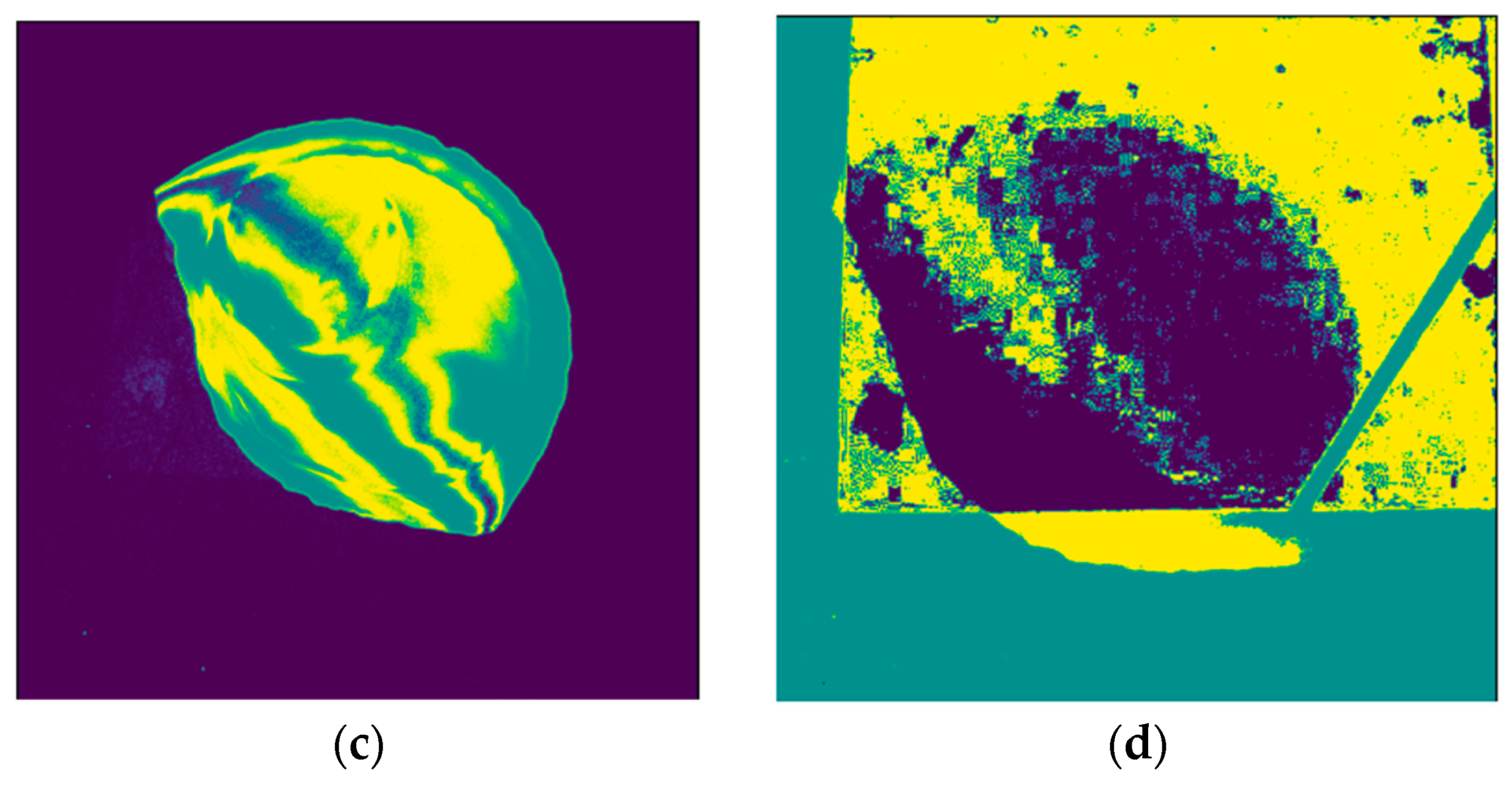

The development of automated oil spill delineation algorithms is beyond the scope of this paper, which focused on the fundamental capabilities of different spectral bands to discriminate between two classes of interest: clean water and water covered by oil. However, as shown in Figure 14, even a simple approach such as k-means clustering shows that undesired bottom effects can impede the correct delineation of oil-polluted areas, to a much greater extent in RGB images than in UV images.
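The clustering configuration behind Figure 14 is not detailed in this section. A minimal sketch could apply k-means (Lloyd’s algorithm) to the flattened pixel values of a single band, with k = 2 and centroids initialized evenly between the darkest and brightest values. The function names and the initialization are illustrative choices.

```python
import statistics


def _assign(values, centroids):
    # Assignment step: index of the nearest centroid for every value.
    return [min(range(len(centroids)), key=lambda c: abs(v - centroids[c]))
            for v in values]


def kmeans_1d(values, k=2, iterations=50):
    """Lloyd's k-means on scalar pixel values; returns (labels, centroids)."""
    if k < 2:
        raise ValueError("k must be at least 2")
    lo, hi = min(values), max(values)
    # Initialise centroids evenly between the darkest and brightest value.
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iterations):
        labels = _assign(values, centroids)
        # Update step: mean of each cluster; keep the old centroid if empty.
        new_centroids = []
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            new_centroids.append(statistics.fmean(members) if members else centroids[c])
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return _assign(values, centroids), centroids


labels, centroids = kmeans_1d([10, 11, 12, 50, 51, 52])
print(labels, centroids)
```

The labels can be reshaped back to the image grid to obtain a two-class map. Under the positive oil–water contrast assumed for ports, the brighter cluster would be the oil candidate. Any bottom feature whose brightness resembles that of oil would also end up in that cluster, which is the kind of confusion described above for RGB images.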

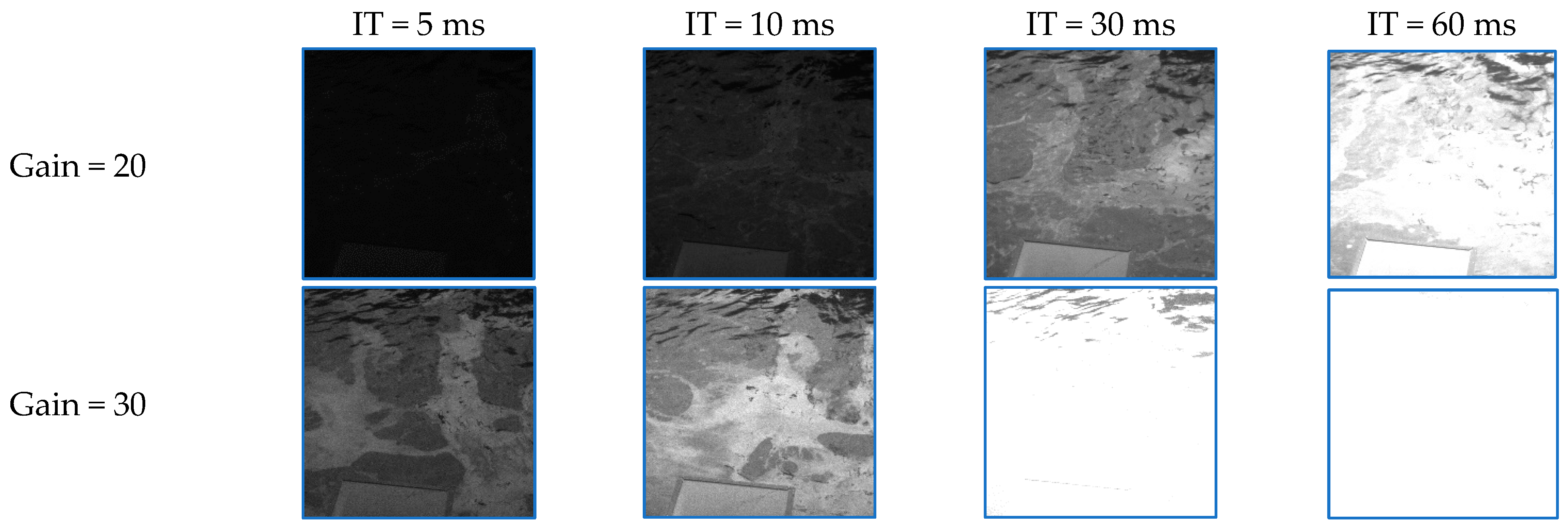

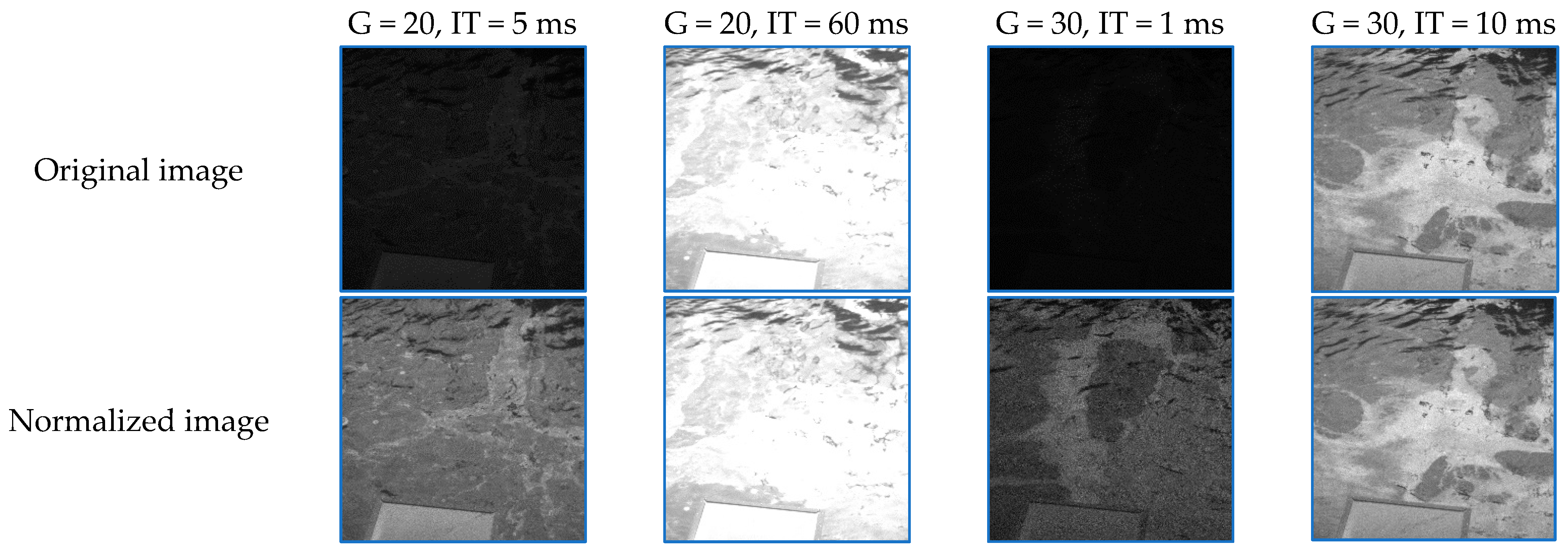

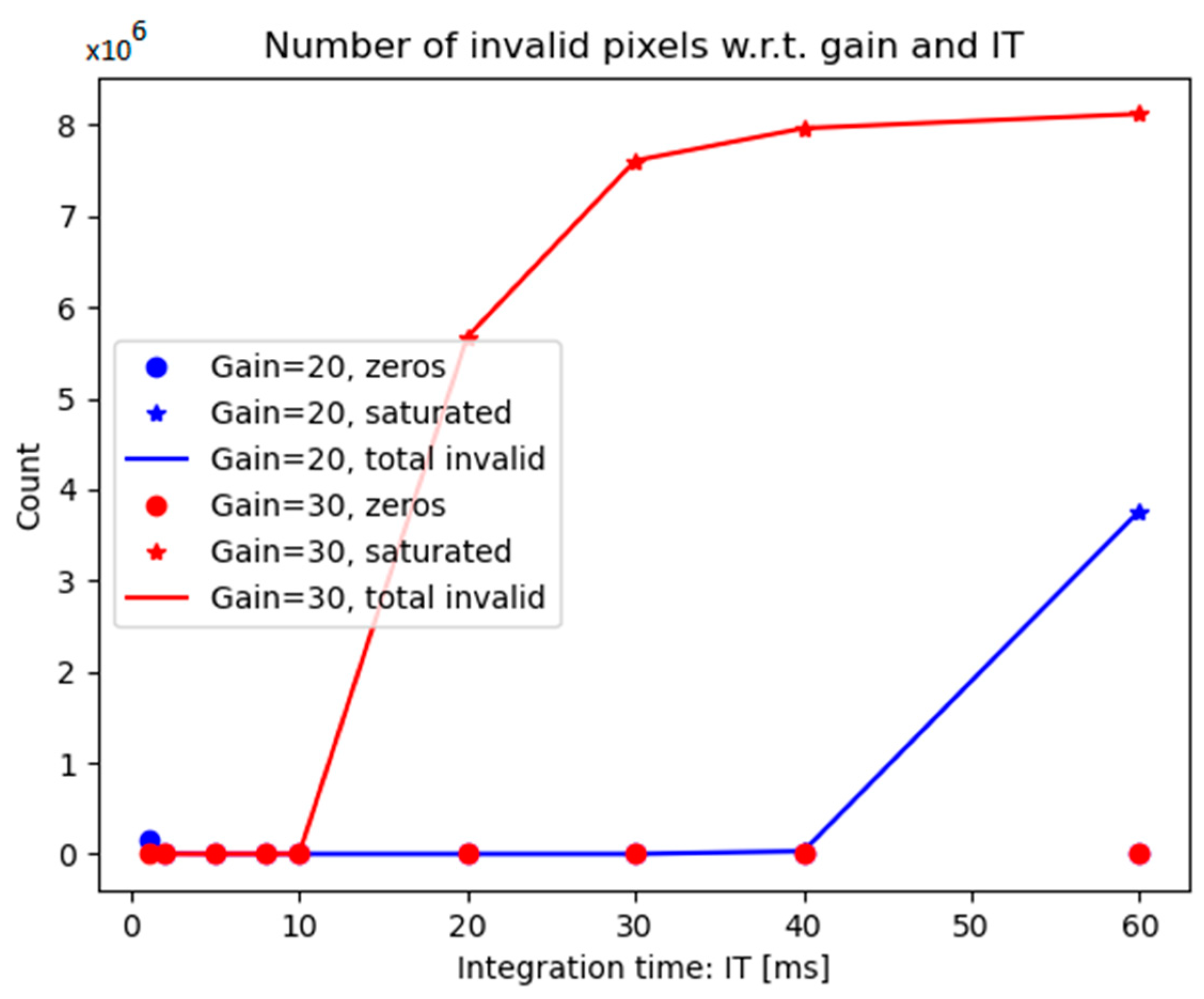

The data acquired in real-world conditions at the Port of Antwerp–Bruges confirmed the observations made in the controlled experiments. Notably, in the port imagery, the UV camera achieved the best performance with respect to all computed metrics. The data acquisition setup on the PROGRESS vessel made it possible to infer optimal camera settings, either fixed for all types of weather or tailored to instantaneous conditions using weather parameters recorded by dedicated sensors. In operational setups, this procedure can be progressively refined as more data become available. For oil spill detection and monitoring with drones, imaging a reference target when the drone leaves its parking area could also provide information for choosing suitable camera settings. With optimal camera settings during data acquisition, automated oil spill detection algorithms can ingest images with a good dynamic range for water and oil, thereby ensuring optimal efficiency. Overall, UV cameras appear to be a viable solution for detecting and monitoring oil spills in port environments. An interesting direction to explore is how the discriminative power of the UV camera changes with the UV spectral band, particularly at shorter wavelengths.
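One way to use a reference target for choosing camera settings is to rescale the exposure time so that the target’s mean digital number (DN) approaches a desired level. This sketch assumes a linear sensor response, a negligible dark offset, and an unsaturated reference patch. The function name, the target DN, and the exposure limits are hypothetical, and the procedure actually used on the PROGRESS vessel is not described in this section.

```python
def adjust_exposure(current_exposure_ms, measured_dn, target_dn,
                    min_exposure_ms=0.05, max_exposure_ms=100.0):
    """Propose a new exposure time from a reference-target measurement.

    Assumes DN scales linearly with exposure time (no dark offset, no
    saturation); the exposure limits are hypothetical camera bounds.
    """
    if measured_dn <= 0:
        raise ValueError("measured_dn must be positive")
    proposed = current_exposure_ms * target_dn / measured_dn
    # Clip to the range the (hypothetical) camera supports.
    return max(min_exposure_ms, min(max_exposure_ms, proposed))


# Reference target reads DN 50 at 10 ms; aim for DN 100.
print(adjust_exposure(10.0, 50.0, 100.0))
```

In practice, the update could be iterated over a few frames, because real sensors deviate from linearity near the ends of their range and illumination may change between shots.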

5. Conclusions

In this paper, the capabilities of UV cameras to detect and monitor oil spills in port environments were evaluated. This type of oil spill has not been studied extensively in the literature, despite its common occurrence. The specificities of oil spills in ports call for tailored data types and methods, as many of the established methods for crude oil spills in the open sea are not applicable (e.g., SWIR data have limited capabilities for thin oil films). Additional constraints in ports call for lightweight surveillance solutions, and this study can be considered one of the first to demonstrate the suitability of such solutions. Our study provides insights into the capabilities of different spectral bands to distinguish between water and oil surfaces by computing separability metrics for various refined oil types, in both controlled and real-world data acquisitions.

The experiments carried out in a controlled environment showed that the UV camera is superior to RGB cameras in terms of discriminative power between the two targeted classes: oil surfaces and clean water surfaces. These experiments were designed to cover a variety of settings, some controllable by the operators (camera settings, relative viewing azimuth angles and positions, oil type and quantity) and others not (weather conditions). The discriminative metrics computed for the UV and RGB cameras show that the UV camera is a strong alternative to RGB cameras in port environments. Furthermore, the analysis of real-world images acquired at the Port of Antwerp–Bruges from a mobile platform (a vessel) confirmed the conclusions drawn from the controlled experiments. In this setup, a methodology to infer optimal camera settings with respect to instantaneous weather conditions was also defined.

This work opens a wide range of future research opportunities. While our study shows that the UV camera has high discriminative power between water and oil areas, other classes of materials were not considered. In ports, various objects are expected to enter the cameras’ field of view frequently, so it is important to explore whether and how they can be separated from the classes of interest. The water’s constituents can also influence the discrimination power, and further research is needed to determine how other phenomena, such as turbidity plumes or phytoplankton blooms, affect class discrimination. Combining the UV data with at least one other data type could help achieve this goal. For example, technological advancements are expected to support continuous monitoring of the port basin by SAR sensors and other system types, such that the advantages of these systems, e.g., their insensitivity to clouds, could be exploited in conjunction with optical sensors similar to those used in our study to obtain an accurate representation of the port configuration at any given moment, including pollution events such as oil spills. Furthermore, automated algorithms are needed to delineate oil spills when they occur. When the cameras are operated from drones, flight parameters can affect data quality; it is therefore of interest to study how to operate these platforms optimally while meeting the highest safety standards for people in the area.

Our study provides a positive answer to a fundamental question: do UV cameras bring advantages over RGB cameras in terms of discrimination power between water and refined oil areas in ports? Thus, this study constitutes a first building block towards an operational monitoring system that includes UV cameras for oil spill detection in port environments, which will be a subject of our future work.