1. Introduction

Pipelines serve as critical infrastructure in urban and industrial environments, supporting essential industries like oil, gas, and water supply. However, as pipelines age and sustain damage, significant losses can occur, highlighting the need for regular inspections and maintenance to maintain safety and operational efficiency. Traditional inspection methods, such as manual techniques and closed-circuit television (CCTV) systems, face challenges due to the complex layouts, extensive lengths, and low-light conditions commonly found in pipelines [

1]. To address these challenges, autonomous in-pipe robots have emerged as a promising solution, enabling access to pipelines with intricate configurations and those buried underground, facilitating effective inspection and detection tasks [

2].

Pipelines are generally categorized by diameter: small (75–200 mm), medium (250–400 mm), and large (450–900 mm) [

3]. Sewer pipelines, typically measuring between 0.1 and 1 meter in diameter, pose substantial challenges for manual inspection, as their dimensions prevent direct human access [

4]. Miniature in-pipe robots have gained attention as a practical solution for navigating and inspecting these small-diameter pipelines [

5]. A key challenge for these robots is feature detection, which directly influences their ability to navigate, inspect, and operate autonomously in uncertain pipe environments. Feature detection methods for in-pipe robots are typically divided into range-based and vision-based approaches [

2].

Range-based methods rely on sensors to measure distances, detecting features such as elbows, joints, branches, or constrictions. For example, Nguyen et al. [

3] developed a miniature robot equipped with three time-of-flight (ToF) range sensors to operate in 75 mm diameter pipelines. The robot detected features by monitoring variations in distance measurements across the front, left, and right directions. Significant changes in sensor readings indicated the presence of features, enabling autonomous navigation and feature recognition. However, range-based sensors have inherent challenges, particularly instability caused by gear backlash. This instability becomes more pronounced when the misalignment of the wheel-legs induces vertical oscillations in the robot’s body, resulting in inconsistent measurements from the range sensors, thereby compromising the accuracy and reliability of the robot. Kim et al. [

6] introduced a weaving laser vision system (LVS) for autonomous mobile robots navigating pipelines, focusing on detecting T-junctions and elbow pipes. Using a 2D laser scanner with a weaving motor, the system creates selective 3D forward maps to identify pipeline features. Partial weaving is used for T-junction detection, while full weaving detects elbow angles and curvatures, modeled as sections of a torus. Experiments validated its effectiveness in controlled environments, but limitations include assumptions of empty pipelines and sensitivity to pitch and yaw.

Vision-based methods utilize cameras and illuminators to detect pipeline features. Ahrary et al. [

7] proposed a traditional computer vision-based navigation method for an autonomous sewer robot KANTARO. KANTARO is able to move in 200–300 mm diameter sewer pipes. By using stereo camera information, the approach extracts feature pixels from regions of interest (ROI) in stereo images and detects local features like manholes and pipe joints through edge detection. While computationally efficient, the approach lacks robustness to lighting changes and noise. Edwards et al. [

8] introduced a robust vision-based approach for pipe robots detecting features in feature-sparse sewer pipes, employing an electro-optical forward-facing camera. Pipe joints are detected using a bag-of-keypoint algorithm leveraging speeded-up robust features (SURFs), while manholes are identified with a linear support vector machine (SVM) classifier. The detection algorithms underwent unsupervised offline training and were tested on 860 frames from three real-world pipes with diameters of 600, 300, and 150 mm. The proposed detection method achieved 85% accuracy in joint detection, far outperforming the Hough Transform’s 25% accuracy. The accuracy of detecting a manhole is 98.5%. The inclusion of a windowing mechanism further enhanced detection robustness, ensuring improved reliability in challenging conditions.

Some researchers combined both range-based and vision-based methods into a single in-pipe robot. Li et al. [

9] introduced a real-time topological localization and mapping system for an autonomous in-pipe miniature robot that was developed from Nguyen et al. [

3]. It combines low-power distance sensors for navigation with vision-based localization, activating the camera only at junctions to save energy and reduce computation. Junctions are mapped as nodes and pipeline segments as edges, creating a compact, dynamically updated topological map. Using normalized cross-correlation for image matching, the system effectively identifies and maps junctions in a simulated pipe network, even under imperfect conditions. While the method excels in simulation, challenges like sensor limitations and environmental factors in real-world deployment remain. Lee et al. [

10,

11,

12] have performed a series of experiments using range and visual sensors for pipeline feature detection respectively. Additionally, they combined both range-based and vision-based methods into a single in-pipe robot MRINSPECT-V to detect natural gas pipeline (203 mm in diameter) features under different scenarios. The vision-based systems were equipped with 64 high-brightness LEDs and a camera that used distinct shadow patterns created by the robot’s illuminator to identify features, leveraging traditional image-processing techniques such as binarization, morphological operations, and Hu invariant moments for feature extraction. The range-based method utilizes a rotating line-laser beam to detect geometric features, offering enhanced robustness in contaminated environments. The experimental results demonstrate that, while the vision-based method is computationally efficient, it is sensitive to noise and contamination, whereas the range-based method is more resilient but computationally intensive, making both approaches complementary for different pipeline conditions [

10,

11,

12].

Vision-based methods offer notable advantages over range-based approaches by capturing detailed contextual information, including textures, colors, and patterns, making them well-suited for complex feature analysis, object recognition, and machine learning applications. These methods are versatile, cost-effective, and capable of detecting objects over longer distances under favorable lighting conditions. However, most current vision-based pipeline feature detection methods rely on traditional techniques, such as edge detection and geometric fitting. Although these approaches are computationally efficient and perform well in controlled settings, their reliance on handcrafted features such as manually defined edge patterns or geometric rules limits their robustness in scenarios with unpredictable noise, dynamic lighting, or intricate textures, commonly encountered in real-world environments.

In contrast, machine learning-based methods, particularly deep learning, automatically learn feature representations from data. As a class of deep learning model, CNN uses convolutional layers to efficiently handle high-dimensional image data without losing critical features, and it has proved its excellent ability in computer vision over the years. This enables higher accuracy, improved robustness to environmental variations, and adaptability to diverse pipeline conditions [

13]. However, deep learning models typically require significant computational and storage resources for training and deployment. Addressing this challenge, TinyML has emerged as a solution, compressing deep learning models to enable deployment on resource-constrained devices, such as internet of things (IoT) devices and microcontrollers. TinyML allows machine learning applications to operate within a few hundred kilobytes of memory and milliwatt-level power consumption [

14,

15,

16].

Recent studies demonstrated the potential of TinyML for object classification or pipeline feature detection in resource-constrained environments. Pleterski et al. [

17] applied TinyML into ultra-low resolution ToF sensors for a miniature mobile robot’s object classification, achieving 91.8% detection accuracy with high inference speed. Avellaneda et al. [

18] designed a TinyML assisted IoT method for indoor asset tracking and identification. The method can achieve a classification accuracy of 88%. Wang et al. [

19] demonstrated high accuracy, highlighting the potential of deep learning for pipeline feature detection in complex environments (120 mm in inner diameter). The authors utilized ResNet18 to classify four types of features: straight, curved, and T-shaped pipes. A dataset of 908 images captured under diverse conditions was used to train the model, achieving high accuracy and addressing the limitations of traditional methods. However, the study did not address the computational and memory constraints of miniature pipeline robots. Furthermore, the ResNet18 model was not deployed on the actual

-II robot, leaving critical challenges, such as onboard processing and real-world testing, unresolved.

The deployment of miniature mobile robots in pipeline environments remains a relatively unexplored area. Leveraging TinyML for feature detection in such constrained settings introduces a range of challenges. A primary limitation is the scarcity of comprehensive datasets that adequately represent the diversity of pipeline features across varying scenarios. Additionally, existing lightweight neural network architectures, such as the MobileNet series [

20,

21,

22], may not be optimized for the unique requirements of this application domain. Beyond accuracy, critical considerations such as inference time and computational efficiency are paramount when selecting models suitable for deployment on resource-constrained, cost-sensitive miniature robots.

This paper focuses on pipeline feature detection, specifically tailored for a miniature mobile robot operating in constrained environments. A specialized pipeline feature dataset was first collected to support model training and evaluation under diverse scenarios. To identify the most suitable learning-based approach, different CNN architectures were compared based on factors such as accuracy and resource constraints. The main contributions of this paper are fourfold: (1) Custom dataset development: A dedicated pipeline feature dataset, capturing various scenarios, is collected to support training and evaluation under diverse conditions. (2) Optimized CNN selection: A thorough comparison of CNN architectures is conducted, resulting in the selection of a custom CNN model optimized for resource-constrained environments by balancing key metrics such as inference time and accuracy. (3) High accuracy detection: Accurate detection (>90%) by a miniature mobile robot in small pipes is achieved using a camera and CNNs implemented on a low-end microcontroller (ESP32 with a 240 MHz CPU and 520 kB RAM). (4) Robust and real-time results: A sliding window data smoothing method is combined with the selected CNN model to enhance robustness and enable real-time performance. This method addresses the unique challenges of pipeline exploration by combining computational efficiency, robust detection, and adaptability to diverse environments, demonstrating its potential for real-world applications in autonomous robotic navigation.

The rest of the paper is structured as follows.

Section 2 outlines the methodology employed in this work.

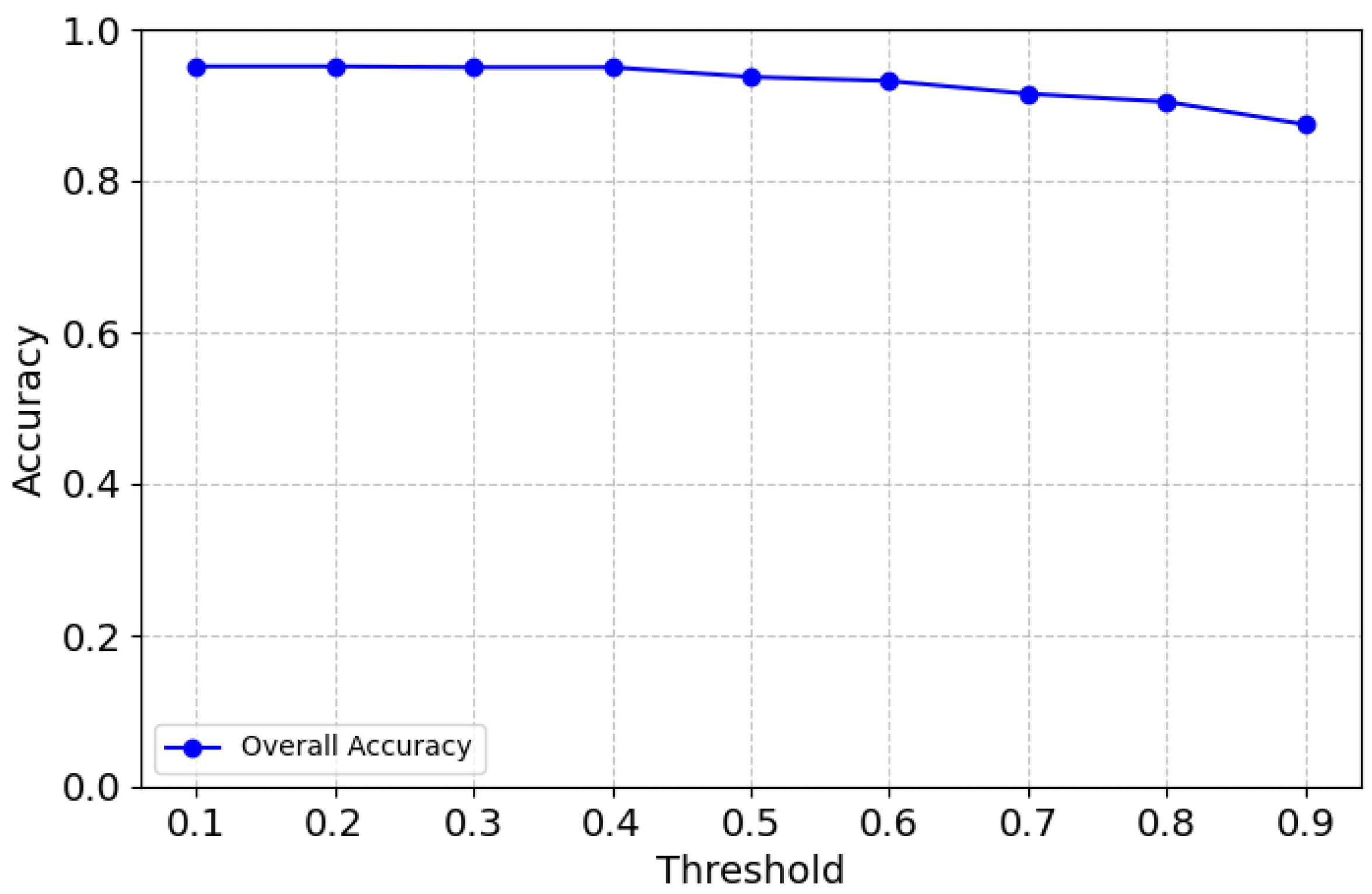

Section 3 presents the evaluation results of the CNN model and the data smoothing techniques.

Section 4 discusses the limitations of this study and suggests directions for future research. Finally,

Section 5 concludes the paper with a summary of the findings.

2. Materials and Methods

2.1. Dataset Collection

To enable effective navigation of unknown pipelines and ensure critical exploration opportunities are not missed, miniature robots require the ability to detect key pipeline features such as elbows, joints, and left turns. To support this, a custom dataset was specifically developed for training TinyML models.

This study employs a miniature in-pipe mobile robot named Joey, which originated from the Pipebots [

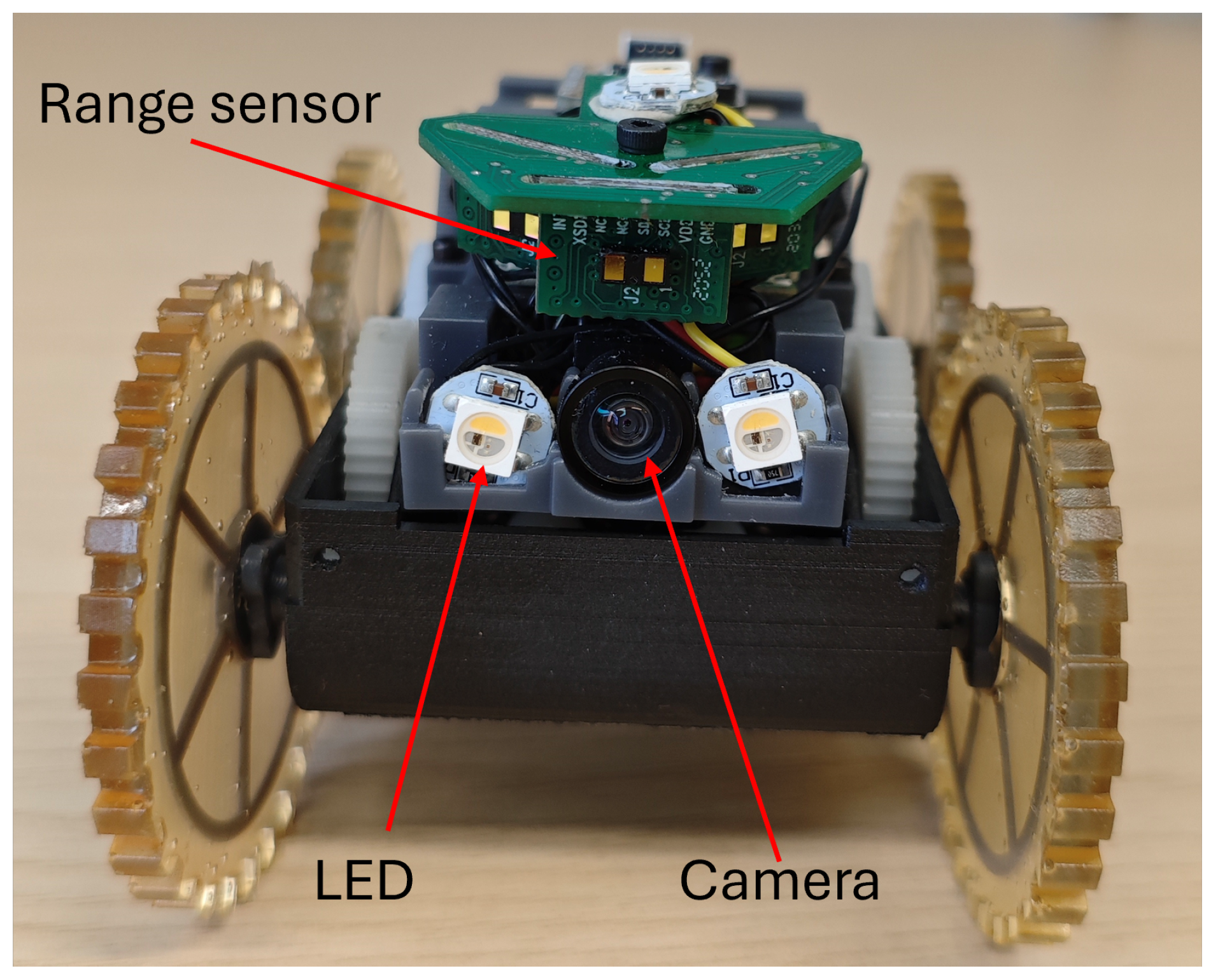

23] project. The Joey robot was designed to be carried and deployed by a larger robot for inspecting smaller sewer pipes. The robot used in this work builds upon the original Joey design, incorporating an upgraded frame and motor while retaining the same sensor setup. Joey is equipped with two LED lights and an OV2640 camera mounted at the front, as illustrated in

Figure 1.

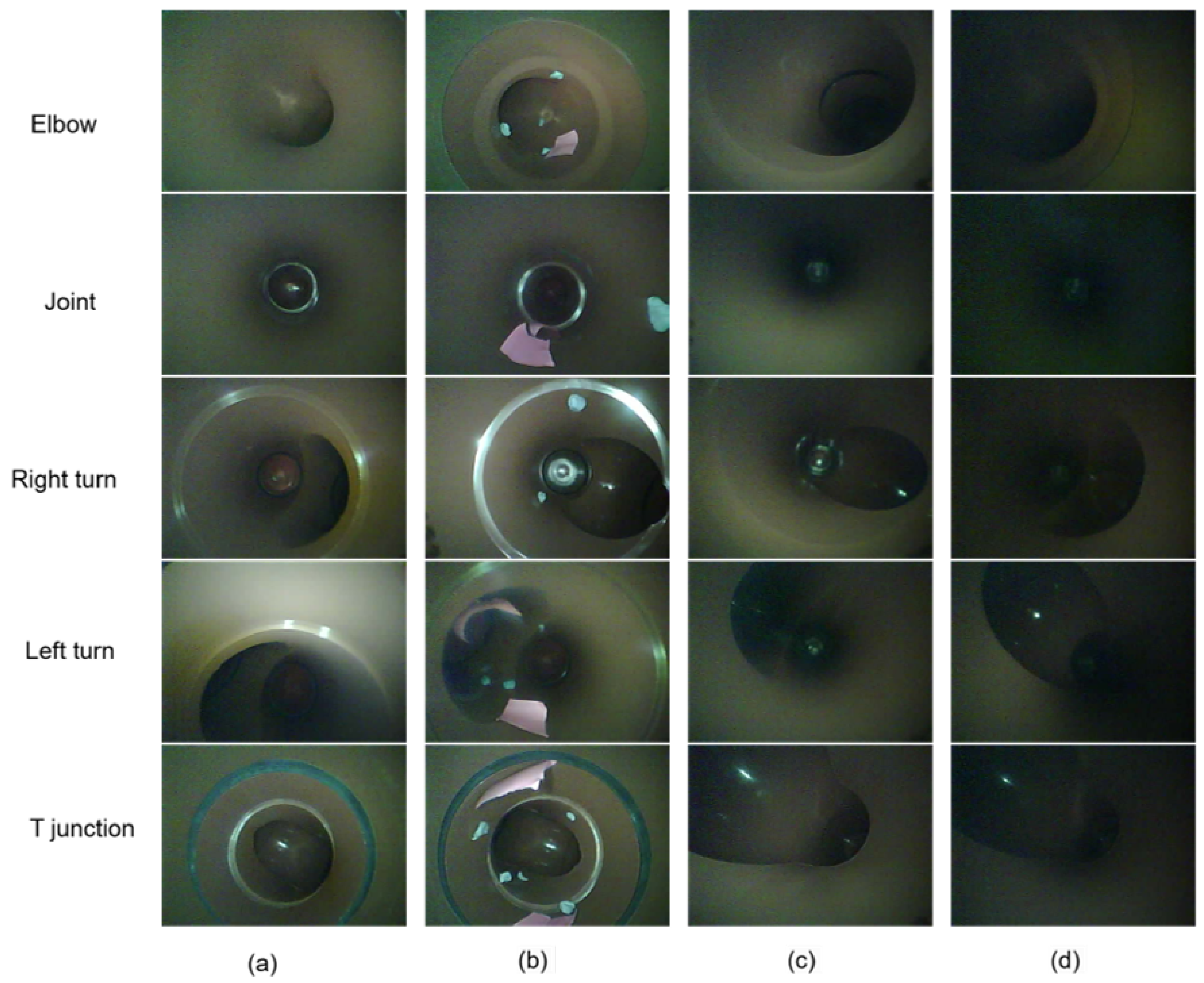

The dataset comprises 4629 unique images, collected under four distinct conditions to enhance the robustness and adaptability of the TinyML algorithm. These conditions include two pipe diameters (110 mm and 160 mm), low-light environments, and simulated interference. Interference within the pipeline was emulated by placing shredded paper and plasticine inside the pipe, mimicking realistic operational scenarios. Low-light conditions were achieved by deactivating one of the LED lights during image acquisition.

Figure 2 illustrates examples of images captured under these various conditions. This diverse dataset aims to improve the algorithm’s performance across varying real-world pipeline environments.

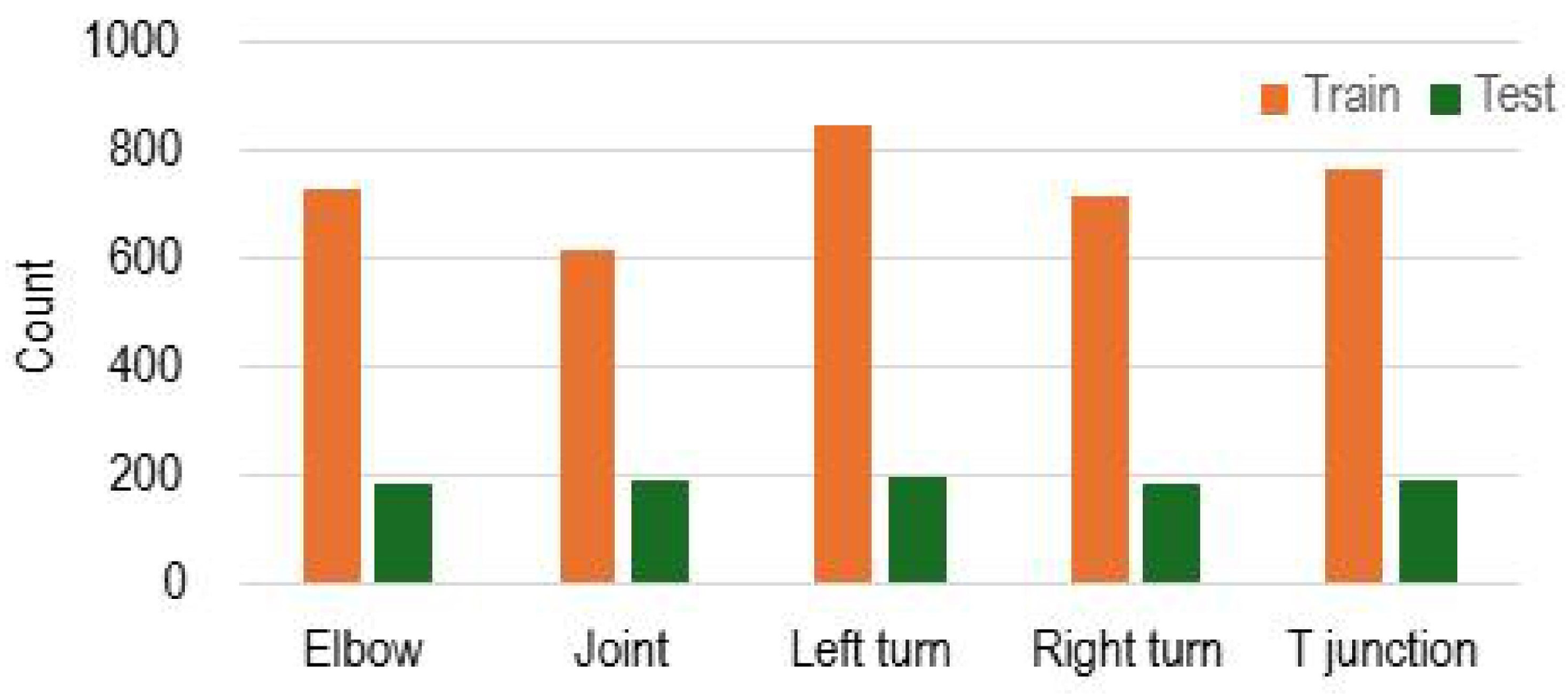

The distribution of the pipeline feature dataset is shown in

Figure 3. The dataset consists of five classes, which are elbow, joint, left turn, right turn, and T-junction. Images were labeled manually for pipeline feature recognition. To evaluate the machine learning model and escape over-fitting of the model, the dataset is approximately separated into 80% training images and 20% test images. Slight variations exist to ensure even test samples and to maintain integer sample counts during partitioning. These adjustments help provide a fair and reliable performance evaluation across all classes.

2.2. Training

To identify an optimal CNN architecture for pipeline feature detection, various neural network models were evaluated. These models were classified into two categories: training from scratch and transfer learning.

Training from scratch involves building a model entirely from the ground up, with randomly initialized weights. The model learns feature representations directly from the provided dataset without utilizing prior knowledge. This traditional approach requires significant computational resources and a sufficiently large dataset to achieve high performance. In contrast, transfer learning leverages knowledge from a pre-trained model, typically trained on a large, generic dataset, and applies it to a new, task-specific dataset. This method mitigates challenges associated with limited data availability and significantly reduces training time and computational cost.

The evaluation was conducted using Edge Impulse [

24], a platform that provides pre-trained MobileNetV2 models [

21] with various depth multipliers. The dataset described in

Section 2.1 was imported into the platform for training. To ensure efficient deployment on low-resource devices, 8-bit quantization was applied to the models. Quantization is a widely adopted model compression technique that reduces computational and storage requirements. Random seeds were set during training to control the inherent randomness across different stages of the training and evaluation processes, ensuring consistent and reproducible experimental results [

25]. Fixing random seeds is a commonly adopted practice in experimental research [

26]. The performance metrics, including accuracy, loss, inference time, peak RAM usage, and flash usage, were systematically compared, as presented in

Table 1. Unless specified otherwise, the default input image size was

.

A convolutional layer is a key part of CNNs that extracts features from data, like edges or patterns in images. It applies small filters across the input, detecting local details while sharing parameters to reduce complexity. This makes CNNs efficient and effective for image analysis. Models trained from scratch included architectures with varying depths (2, 3, 4, 5, and 6 convolutional layers (Conv)), models with larger input sizes (

), architectures incorporating DepthwiseConv2D and PointwiseConv2D (Conv+DW+PW+Conv), and models enhanced with attention mechanisms such as squeeze-and-excitation (SE) [

27] and efficient channel attention (ECA) [

28] (e.g., 3 Conv+SE and 3 Conv+ECA). DepthwiseConv2D (DW) combined with PointwiseConv2D (PW) forms a depthwise separable convolution, an efficient alternative to standard convolution that is frequently utilized in lightweight architectures like MobileNet [

22,

29].

Transfer learning utilized MobileNetV1 [

20] and MobileNetV2 [

21] architectures with different depth multipliers, capitalizing on their lightweight design and proven efficiency in resource-constrained environments.

The experimental results revealed that models trained from scratch generally achieved higher accuracy with deeper architectures. For example, the 5 Conv attained the highest accuracy (0.971) but at the expense of higher resource consumption, including flash usage (427.9 kB) and inference time (1693 ms). Interestingly, increasing the depth further (6 Conv) led to higher resource demands but lower accuracy (0.967). On the other hand, simpler architectures like the 2 Conv demonstrated greater resource efficiency but achieved a lower accuracy of 0.849.

Input size also played a significant role in resource utilization: models with larger input dimensions () required more storage and longer inference times compared to those with , making them less practical for low-end microcontroller deployment. Additionally, modifications such as attention mechanisms (SE and ECA) and depthwise separable convolutions (DW+PW), contrary to expectations, failed to improve performance. Instead, these adjustments resulted in reduced accuracy and increased inference times, highlighting the trade-offs involved in optimizing models for resource-constrained environments.

Transfer learning models, such as MobileNetV2, strike a balance between efficiency and performance. For example, MobileNetV2 with a width multiplier of 0.35 achieves an accuracy of 0.90 while maintaining moderate resource usage compared to other MobileNet variants. However, transfer learning models with significantly lower width multipliers, such as MobileNetV1 with a width multiplier of 0.1, exhibit substantial resource savings at the cost of reduced accuracy. In comparison, CNN models trained from scratch generally outperform MobileNet-based transfer learning models in terms of accuracy, albeit with higher resource requirements for deeper architectures.

The ESP32 board, with 520 kB of RAM and 4 MB of flash memory, imposes strict constraints on the selection of machine learning models for deployment. To meet these resource limitations while ensuring high performance, the 5 Conv model was selected as the final architecture for pipeline feature detection. This model strikes an effective balance between accuracy and resource efficiency, making it an optimal choice for the application.

The architecture of the selected model is illustrated in

Figure 4. It consists of five convolutional layers, each with a kernel size of 3, followed by max pooling layers with a stride of 2. The model also includes a flatten layer to prepare the data for subsequent dense layers, a dropout layer to prevent overfitting and a final dense layer for classification. The model is trained using categorical cross-entropy loss, which is standard for multi-class classification tasks. The functionality of each layer is detailed below.

Input Layer: The input to the model is a grayscale image of size , denoted as . The image represents the raw pipeline scene captured by the onboard camera.

Convolutional Layers: The model employs five convolutional layers, each followed by a max-pooling layer for spatial downsampling. Each convolutional operation is defined as follows:

where

is the output feature map;

is the input at location in channel c;

is the convolution kernel with k output channels;

is the bias term for the k-th filter;

is the activation function, in this case, ReLU:

Each convolutional layer has the following configuration:

Layer 1: 16 filters of size .

Layer 2: 32 filters of size .

Layer 3: 64 filters of size .

Layer 4: 128 filters of size .

Layer 5: 256 filters of size .

Pooling Layers: After each convolutional layer, a max-pooling operation is applied to reduce spatial dimensions. The max-pooling operation is defined as follows:

where

represents the pooling region. A pooling size of

with stride 2 is used.

Fully Connected Layer: The feature maps are flattened into a 1D vector and passed to a fully connected dense layer. This layer maps the features to

C output classes using the Softmax activation function:

where

is the output of the

k-th neuron before activation.

Dropout Regularization: To prevent overfitting, a dropout layer with a rate of is applied before the dense layer.

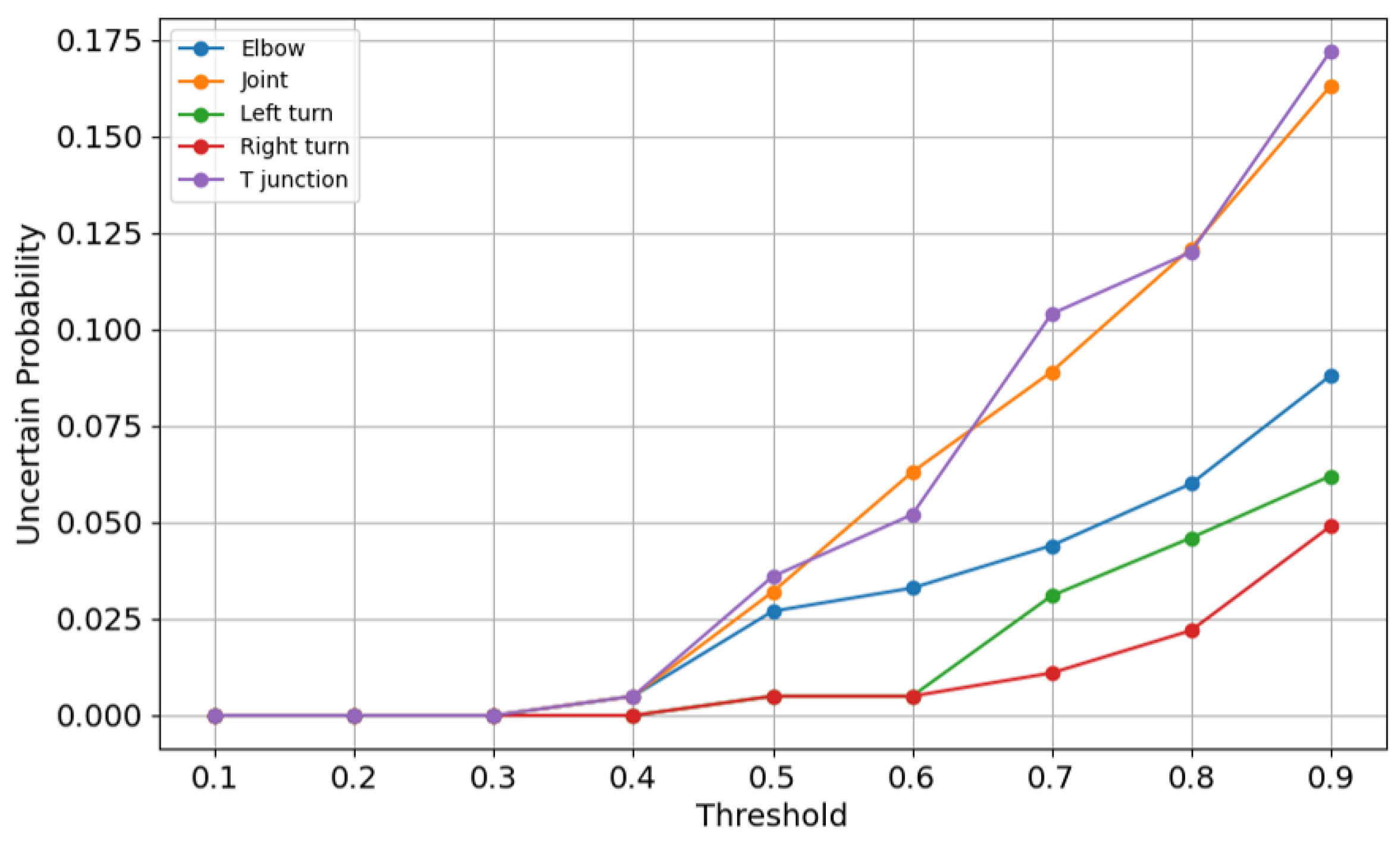

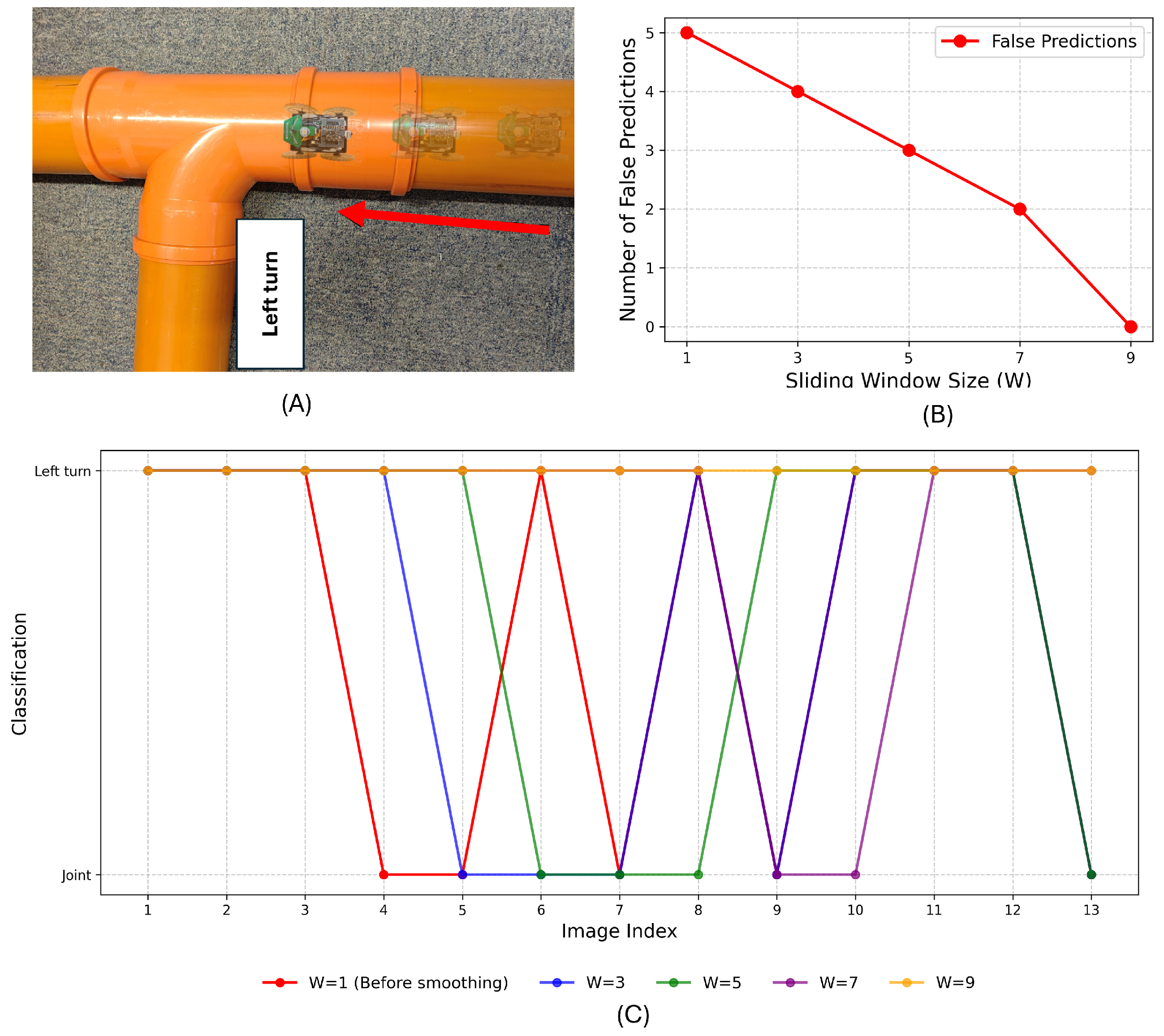

2.3. Data Smoothing

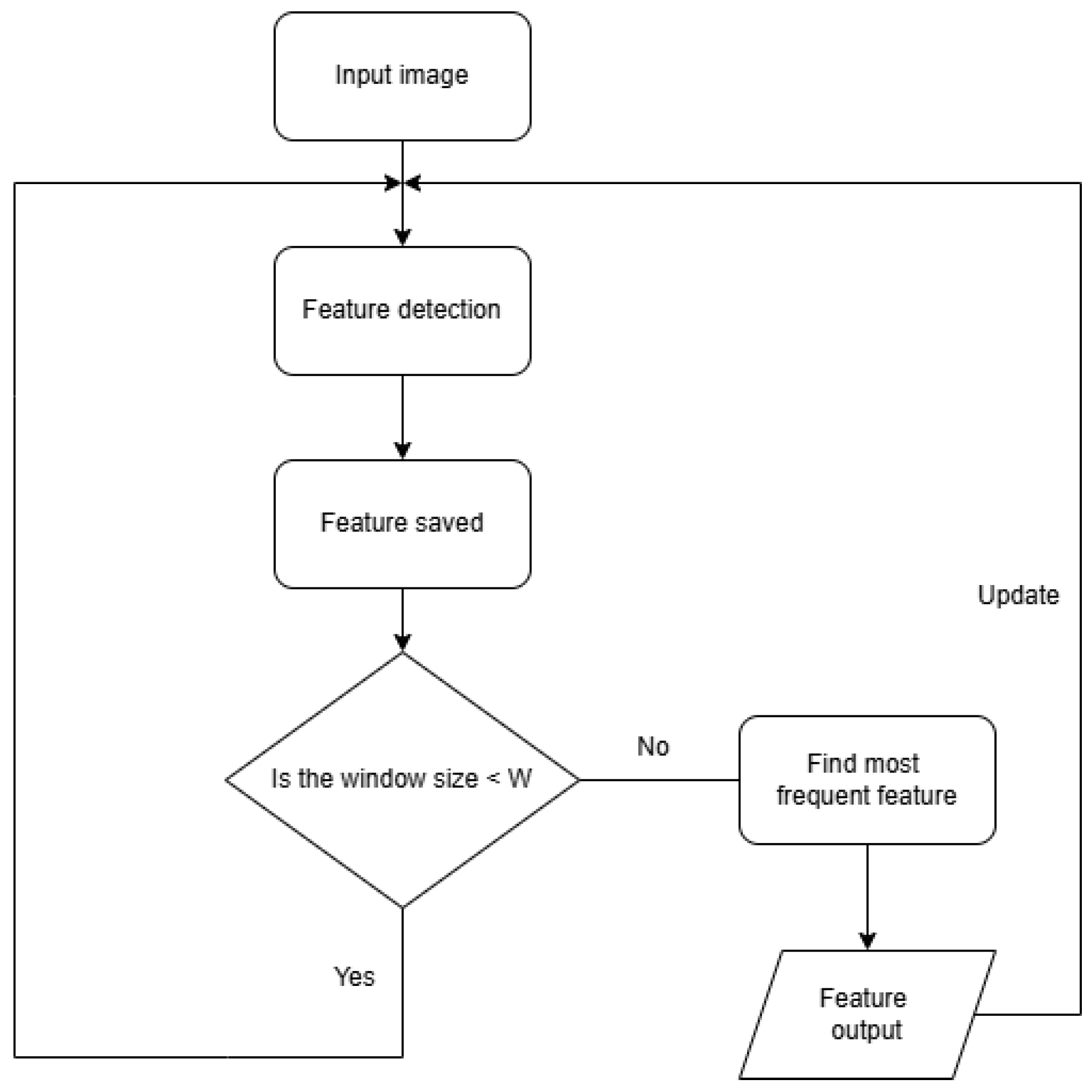

To minimize the impact of errors from single-frame detection and obtain a more reliable and stable detection result, a sliding window smoothing strategy is used. The flowchart of the strategy is shown in

Figure 5. The specific process is as follows:

Input image: The process begins with capturing an input image, which serves as the raw data for the pipeline feature detection method. This image is typically taken by the robot’s camera while it navigates the pipeline.

Feature detection: The method processes the image to identify specific pipeline features, such as elbows, right turns, or joints. The feature detection step relies on the model’s ability to classify features from the image.

Feature saved: Once a feature is detected, it is saved into a sliding window buffer. Let

represent the feature detected at time

t. A sliding window of size

W is defined, maintaining a sequence of the last

W detected features:

Find most frequent feature: The final detected feature

is determined using majority voting within the sliding window:

where:

Feature output: The most frequent feature from the sliding window is then outputted as the final detected feature. This ensures that the algorithm’s decision is not overly influenced by any single erroneous frame. After outputting the final feature, the algorithm goes back to capturing the next input image and repeats the process. As new features are detected, they replace the oldest feature in the sliding window, ensuring the size of the window remains constant at W.

The delay time for different

W values is analyzed in

Figure 6. The results show that a larger

W increases the initial delay, as more frames are needed to establish a stable prediction. However, once enough frames have been processed, the delay stabilizes since the time required to determine the most frequent feature remains nearly constant. Additionally,

W influences the smoothness of feature transitions. When a feature change occurs, a larger

W extends the transition period, requiring more frames and leading to a longer adjustment time.

Therefore, the choice of W is critical to balancing robustness and responsiveness:

A larger W increases stability by smoothing transient misclassifications but introduces latency in adapting to new features.

A smaller W reduces latency, enabling quicker responses to changes, but may result in less robust smoothing.

An optimal value of W is selected empirically to suit the pipeline detection task, ensuring reliable performance under diverse conditions.

By using this sliding window smoothing strategy, the impact of transient errors or noise in single frames can be minimized. The mechanism ensures that detections are consistent across multiple frames, providing more reliable results for navigation or decision-making in the pipeline environment.