Abstract

Object detection is essential for the perception systems of intelligent driving vehicles. RT-DETR has emerged as a prominent model. However, its direct application in intelligent driving vehicles still faces issues with the misdetection of occluded or small targets. To address these challenges, we propose a High-Precision Real-Time object detection algorithm (HPRT-DETR). We designed a Basic-iRMB-CGA (BIC) Block for a backbone network that efficiently extracts features and reduces the model’s parameters. We thus propose a Deformable Attention-based Intra-scale Feature Interaction (DAIFI) module by combining the Deformable Attention mechanism with the Intra-Scale Feature Interaction module. This enables the model to capture rich semantic features and enhance object detection accuracy in occlusion. The Local Feature Extraction Fusion (LFEF) block was created by integrating the local feature extraction module with the CNN-based Cross-scale Feature Fusion (CCFF) module. This integration expands the model’s receptive field and enhances feature extraction without adding learnable parameters or complex computations, effectively minimizing missed detections of small targets. Experiments on the KITTI dataset show that, compared to RT-DETR, HPRT-DETR improves mAP50 and FPS by 1.98% and 15.25%, respectively. Additionally, its generalization ability is assessed on the SODA 10M dataset, where HPRT-DETR outperforms RT-DETR in most evaluation metrics, confirming the model’s effectiveness.

1. Introduction

At present, artificial intelligence technology is developing rapidly. As an important branch of this field, intelligent driving vehicles are constantly breaking through technical bottlenecks and promoting the innovation of transportation modes. Object detection serves as a fundamental component of environmental perception in intelligent driving vehicles. Its primary objective is to accurately and rapidly locate and identify various traffic participants within complex traffic scenarios, thereby ensuring the safe driving of intelligent driving vehicles. In real-world traffic driving scenes, most of the detection targets are in complex backgrounds, and the scales of the targets change significantly, with mutual occlusion among the targets. Many object detection models, such as the YOLO series and DETR series, have achieved good detection results. However, when these models are directly applied to the object detection of intelligent driving vehicles, challenges arise. Specifically, if the targets are small or obstructed by other objects, these models may still experience missed or false detection. Therefore, it is essential to develop a high-precision real-time object detection algorithm that is suitable for intelligent driving vehicles.

To address the challenges associated with object detection, several models based on Convolutional Neural Networks (CNNs) have been developed, including Faster R-CNN [1], Mask R-CNN [2], Cascade R-CNN [3], SSD [4], and the YOLO series. In 2017, Vaswani et al. proposed the Transformer model, which effectively addressed the long-distance dependency problem by capturing global contextual relationships [5]. The remarkable success of this model in the field of Natural Language Processing (NLP) has prompted an increasing number of researchers to focus their attention on the Transformer model. In 2020, Facebook AI launched the Detection Transformer (DETR) model, which was the first end-to-end object detection model using the Transformer structure [6]. It can directly predict the position and category of objects without complex post-processing. In 2023, the RT-DETR model developed by Zhao et al. demonstrated superior performance in both detection accuracy and speed compared to concurrent YOLO models [7]. This achievement was made possible through Attention-based Intra-scale Feature Interaction (AIFI) and CNN-based Cross-scale Feature Fusion (CCFF), as well as the comprehensive utilization of image feature extraction methods provided by the backbone network. Despite the remarkable performance of the RT-DETR model, it still encounters several challenges when applied to real-world traffic scenarios. These challenges include the issues of missed detections and false detections when confronted with small targets and occlusion situations. In light of this, we develop a high-precision real-time object detection model, namely HPRT-DETR. This model not only reduces the complexity and computational load but also extracts multi-scale semantic features. Consequently, it significantly decreases the rates of missed detection and false detection. Our main contributions are summarized as follows.

- We propose an efficient and lightweight feature extraction module for the backbone network, namely the Basic-iRMB-CGA Block (BIC Block). The network architecture design of this module is inspired by the Inverted Residual Mobile Block (iRMB). By integrating convolution operations with a Cascaded Group Attention mechanism, the module can capture local features and global contextual information, thereby significantly enhancing the model’s feature representation capability. To effectively reduce the complexity of the model, this study also incorporates depthwise separable convolutions, enabling real-time inference capabilities while maintaining a high detection accuracy.

- We developed the Deformable Attention-based Intra-scale Feature Interaction (DAIFI) module, which is designed to effectively capture essential features. The Multi-head Attention in the AIFI module of RT-DETR is replaced by Deformable Attention, forming the DAIFI module. This module enhances the model’s capability to capture essential features and concentrate on critical areas in the image. As a result, it can accurately identify targets even in situations where they may be occluded.

- We employed Local Feature Extraction to enhance the Fusion Block module within the CNN-based Cross-scale Feature Fusion module, thereby constructing a Local Feature Extraction Fusion (LFEF) Block that efficiently extracts local features. The shift-convolution operation in this module can provide a larger receptive field than the 1 × 1 convolution without introducing additional learnable parameters or computational burden. As a result, it can extract the local features of images efficiently and reduce the missed detection rate of small objects.

The remainder of this paper is organized as follows. Section 2 outlines the current status and limitations of object detection, as well as the primary motivations behind the proposed algorithm. The model architecture of the HPRT-DETR is detailed in Section 3. In Section 4, we comprehensively evaluate the effectiveness of the proposed algorithm. Finally, Section 5 summarizes the findings of this study and offers insights into ongoing challenges and future research trends in this field.

2. Related Work

With the advancement of the deep learning theory, numerous distinct types of models have emerged in the domain of object detection. The R-CNN series, ranging from the R-CNN [8] to Mask R-CNN, has been constantly improving the approaches for generating candidate regions and extracting features, thereby enhancing the detection performance. The YOLO series, ranging from v1 to v10, is known for its efficiency of single-stage detection. The network architecture and detection mechanism are continuously refined, achieving an optimal balance between real-time performance and accuracy. SSD conducts detection by setting default boxes on multi-scale feature maps, yielding good detection effects for small objects and fast processing speed. DETR employs the Transformer architecture, simplifying the detection procedure. The CenterNet [9] series converts detection into a keypoint detection issue and CenterNet++ further improves performance, achieving high detection precision and efficiency. Swin-Transformer [10], which is based on the Transformer architecture, achieves high-accuracy detection through set prediction. EfficientDet [11] ensures high precision while facilitating real-time detection by employing an efficient backbone network and a well-designed detection head. These models have unique features and advantages in various applications and datasets, driving the ongoing progress of object detection.

In real-world traffic scenarios, intelligent driving object detection faces numerous challenges, including mutual occlusion among detection targets, variations in the scale of traffic targets, and complex background environments. Numerous researchers have developed relevant algorithms to address these challenges. In the study conducted by Li et al. [12], the traditional upsampling layer was replaced with a content-aware feature reconstruction module to enhance the fusion of image features. Additionally, the conventional convolutional structure was substituted with the SPD-Conv module, thereby improving the detection accuracy for low-resolution images and extremely small objects. Wang et al. [13] developed a multi-channel attention block to integrate features from various levels, thereby enhancing the foundational information for feature fusion within the model. They introduced an factor based on Intersection over Union (IoU) [14] to improve the regression accuracy of object detection. Zhang et al. [15] integrated the Receptive Field Block (RFB) [16] module into the feature fusion to enhance feature diversity and reduce computational costs, thereby improving the network’s detection efficiency for multi-scale traffic targets. Tang et al. [17] enhanced the feature fusion module of YOLO by introducing a Dual Fusion Gradual Pyramid structure (DFGPN). This approach effectively addresses the feature attenuation in complex traffic scenarios. Additionally, target information is reinforced through a feature channel weighting strategy and the Residual Multi-head self-Attention (RMA) module, thereby reducing background interference. Qiu et al. [18] proposed the IDOD-YOLOv7 framework, which enhances the Image-Dehazing Object Detection (IDOD) module by utilizing parallel convolution to compensate for information loss during the convolution process, thereby improving image defogging quality. Additionally, the Self-Adaption Image Processing (SAIP) is introduced to ensure that the images meet the requirements for object detection. Khan et al. [19] enhanced the Localized Semantic Feature Mixers (LSFM) [20] model by advancing it from single pedestrian detection to multi-class traffic object detection, meeting the needs for multi-object detection in complex autonomous driving environments. Furthermore, several hyperspectral target detection methods based on the Transformer architecture have provided novel approaches for addressing the issues explored in this study [21,22,23].

Although the aforementioned algorithms have demonstrated satisfactory detection performance, there are still certain limitations that need to be addressed. DETR demonstrates superior performance in complex scenarios due to its end-to-end training method and global context perception ability, but it also has significant limitations. For instance, it suffers from a slow convergence speed during training, requires a substantial amount of training time, and displays suboptimal detection performance for small targets. These limitations restrict its application in scenarios that demand higher real-time performance. Subsequently, RT-DETR, an enhanced version of DETR, eliminated the Non-Maximum Suppression (NMS) step, thereby achieving true real-time performance. The comprehensive structural optimization of RT-DETR significantly improved its inference speed while maintaining high detection accuracy. Therefore, we selected RT-DETR as the benchmark model for the study. Although RT-DETR has achieved remarkable results in the field of object detection, it still faces some urgent problems to be solved in real-world traffic driving scenarios. Firstly, RT-DETR utilizes on ResNet18 or ResNet50 as its backbone network for feature extraction, which limits its feature extraction capabilities. The model parameters of this backbone network are relatively large, which adversely affects the speed of object detection. Moreover, the AIFI module in RT-DETR still employs a traditional Multi-head attention mechanism. This approach demonstrates insufficient capability in capturing critical feature information when processing high-level feature layers, making it challenging to fully extract deep semantic information from these feature layers. In addition, within the CCFF module, shallow features contribute little to detection performance and may even introduce noise. This can lead to a low efficiency in merging information from different scale features, thereby hindering the advantages offered by the CCFF module. The aforementioned issues may result in RT-DETR experiencing missed detection or false detection, particularly in scenarios involving small objects and occlusions.

Based on the analysis above, our improvement strategies are as follows. Firstly, a lightweight feature extraction module BIC Block is employed to address the issues of limited feature extraction capability and excessive computational burden. Furthermore, a Deformable Attention mechanism is introduced in the high-level feature extraction layer to construct an efficient feature extraction module, namely the DAIFI module. This approach aims to address the inadequate capability of capturing critical feature information within the high-level feature layers. Finally, the feature fusion module with an extensive receptive field, termed the LFEF Block, is integrated into the CNN-based Cross-scale Feature Fusion network. This integration addresses the issue of inefficient feature information fusion between multi-scale features.

3. Methods

3.1. The HPRT-DETR Model Architecture

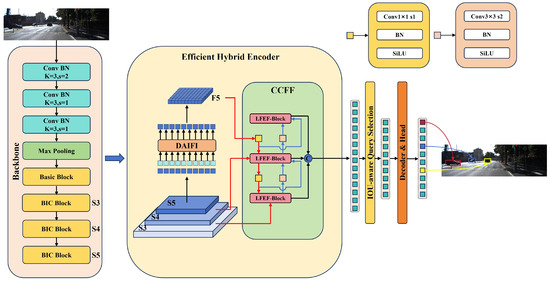

This paper proposes an efficient and lightweight HPRT-DETR model, the structure of which is shown in Figure 1. The model consists of four components: a backbone, an efficient hybrid encoder, a transformer decoder, and an auxiliary prediction head.

Figure 1.

HPRT-DETR model structure diagram.

Firstly, the input image is fed into the backbone network, where a lightweight feature extraction module known as the BIC Block is utilized to extract multi-scale features S3, S4, and S5. Secondly, the features from S5 are fed into the DAIFI module to extract essential characteristics for generating F5, which is then reshaped to match the dimensions of S5. Subsequently, the S3, S4, and F5 features are fed into the CCFF module, where the LFEF Block module is employed to achieve efficient fusion of cross-scale features. Afterwards, a fixed number of image features are selected as initial object queries from the sequence of image features generated by the Encoder through IoU-aware Query Selection. Then, these initial object queries are fed into the Transformer Decoder. Finally, the decoder iteratively optimizes the initial object queries through auxiliary prediction heads, thereby generating precise bounding boxes and reliable confidence scores.

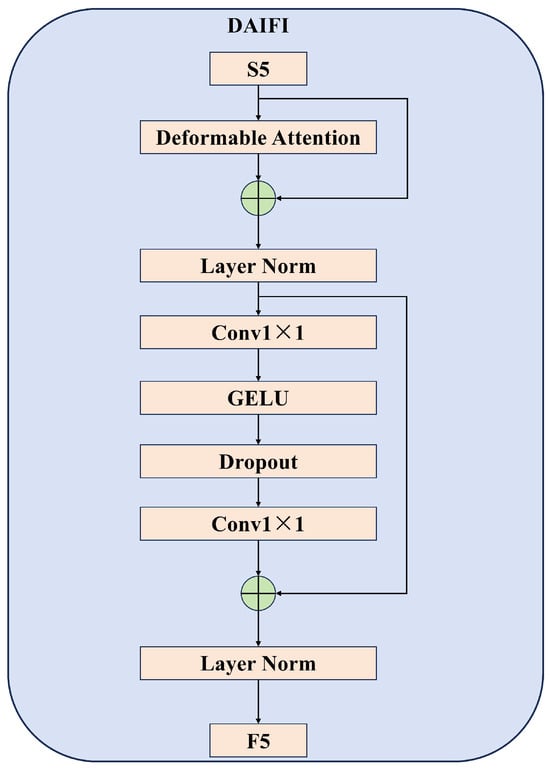

3.2. The Backbone Network Feature Extraction Module BIC Block

The RT-DETR model utilizes ResNet [24], which is based on a traditional CNN architecture, as the backbone network for feature extraction. Considering the trade-off between computational resource efficiency and model performance, this study selects the lightweight ResNet-18 as the foundational backbone network. ResNet-18 is mainly composed of multiple stacked Basic Block, the structure of which is shown in Figure 2.

Figure 2.

Basic Block structure diagram.

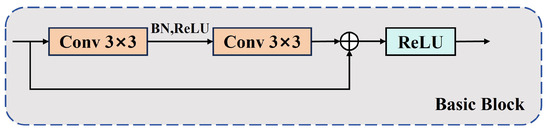

The BIC Block primarily focuses on feature extraction within the confines of local receptive fields during the feature extraction process. This limitation hinders its ability to establish global dependencies between cross-regional feature information. To address the aforementioned issues, we design an efficient and lightweight backbone network feature extraction module, namely the BIC Block (as shown in Figure 3b). It integrates convolution operations with a Cascaded Group Attention mechanism [25], facilitating the collaborative extraction of local features and global features. The design inspiration for the BIC Block is derived from the iRMB network architecture [26] (as shown in Figure 3a).

Figure 3.

Inverted Residual Mobile Block (iRMB) and Basic-iRMB-CGA (BIC) Block structure diagram. (a) The iRMB structure diagram; (b) the BIC Block structure diagram.

The process of the BIC Block feature extraction is as follows. Firstly, 1 × 1 convolution is employed to increase the dimensionality of the input image features, thereby enhancing their expressive capability. Then, these features are subjected to Batch Normalization and ReLU activation before being fed into the Cascaded Group Attention for deep feature extraction. Specifically, this attention mechanism divides the input features into non-overlapping sub-features, with each sub-feature being assigned a corresponding attention head to process the feature information. The proposed method is capable of constructing global dependency relationships between cross-regional feature information more efficiently. However, this mechanism necessitates the computation of correlations between each position in the input sequence and all other positions during the feature extraction process. This results in a computational complexity that grows exponentially with the length of the input sequence. To address the aforementioned issues, this study utilizes depthwise separable convolution [27] to reduce the model’s computational parameters and enhance its computational efficiency. Finally, the output of the extracted image features is obtained through the application of the ReLU activation function.

The depthwise separable convolution is composed of depthwise convolution and 1 × 1 pointwise convolution. The ratio of parameter calculations between depthwise separable convolution and standard convolution is illustrated in Equation (1).

where represents the size of the convolution kernel, and and represent the number of input and output channels, respectively. According to Equation (1), the incorporation of depthwise separable convolutions can significantly reduce the computational parameters of the model.

It is noteworthy that, in order to address the loss of inter-channel feature dependencies caused by the reduction of computational parameters in depthwise convolution, this study incorporates an SE attention [28] layer into the intermediate stage of depthwise separable convolutions. This approach enhances the model’s ability to comprehend the interdependencies among different channels by generating channel attention weights. At the same time, the BIC Block effectively alleviates the issue of vanishing gradients during the training process through the use of residual connections.

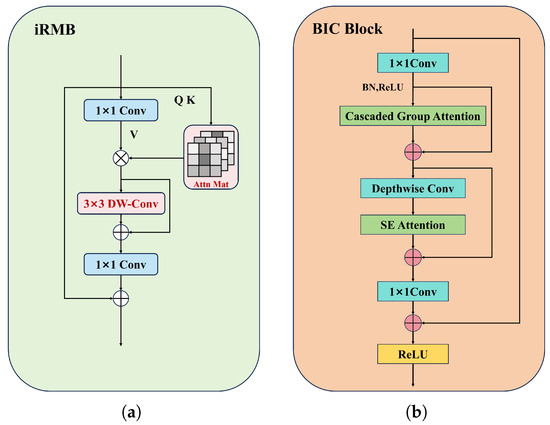

3.3. The DAIFI Module

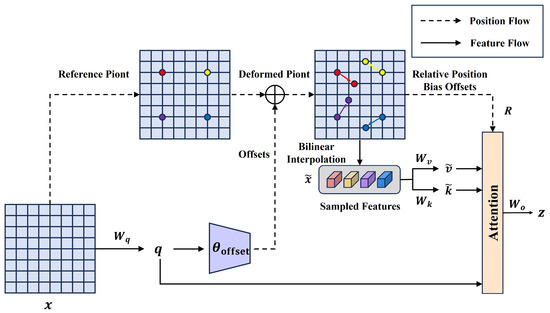

The AIFI module in the RT-DETR model employs a single-layer Multi-head Attention Transformer encoder to process deep features. This design exhibits significant limitations when addressing complex scenarios. Specifically, in scenarios where occluded targets are present, traditional Multi-head Attention mechanisms struggle to effectively focus on the local features of the occluded regions due to their reliance on a uniform global attention weight allocation strategy. This limitation adversely affects the model’s detection accuracy. To address the aforementioned issues, this study integrates a Deformable Attention mechanism [29] into the intra-scale feature interaction module, replacing the existing Multi-head Attention mechanism and thereby constructing the DAIFI module. The structure of this module is illustrated in Figure 4. Compared to the original AIFI module, the DAIFI module enhances the model’s ability to focus on the feature information of key target areas by incorporating a Deformable Attention mechanism.

Figure 4.

Deformable Attention-based Intra-scale Feature Interaction (DAIFI) module structure diagram.

As illustrated in Figure 5, the core idea of the Deformable Attention mechanism is to introduce a learnable offset network that dynamically adjusts the spatial distribution of feature sampling points. This approach enables adaptive focusing on key regions within the input feature map. Specifically, this mechanism generates uniformly distributed reference points based on the geometric characteristics of the input feature map. Simultaneously, it utilizes query features as input to predict spatial offsets through an offset network, enabling the reference points to adaptively move towards key feature regions. Subsequently, the features of the deformed sampled points are extracted through bilinear interpolation. These features serve as the and after deformation, which will be utilized in subsequent attention calculations. Furthermore, this mechanism captures the spatial dependencies between features by calculating relative positional offsets through interpolation operations. Finally, the deformable attention weights are computed by integrating the query features, the deformed key and value pairs, and the relative positional offsets. The final output features are obtained by performing a weighted sum of different attention weights, followed by a linear transformation. The detailed calculation process is as follows:

where denotes the computations involved in bilinear interpolation, , and represent the linear projection matrices for queries, keys, values, and output, m denotes the m-th head of attention, d represents the dimensionality of each head, and signifies the relative position bias.

Figure 5.

Deformable Attention mechanism structure diagram.

As illustrated in Figure 4, the output features processed by the Deformable Attention mechanism must sequentially undergo layer normalization, 1 × 1 convolution, and the GELU activation function for feature reorganization. This process provides dimensionally matched and reasonably distributed feature representations, facilitating subsequent cross-scale feature fusion.

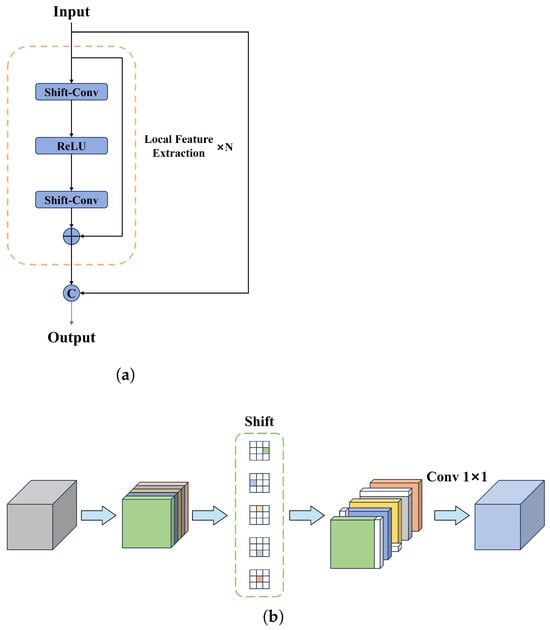

3.4. LFEF Block

In the CCFF module of the RT-DETR model, the Fusion Block processes features through two consecutive 1 × 1 convolutions. This design restricts its receptive field to 1 × 1. It results in a relatively limited contribution of shallow features to the overall detection performance. In some cases, shallow features can introduce noise, leading to missed detection of small targets. If we can efficiently fuse cross-scale features in images while simultaneously expanding the model’s receptive field, it will enhance the detection efficiency of small targets. Therefore, we utilize a Local Feature Extraction module (LFE) [30] to improve the Fusion Block within CCFF, thereby constructing a novel feature fusion module known as the LFEF Block. The LFEF block structure is illustrated in Figure 6a.

Figure 6.

Local Feature Extraction Fusion (LFEF) Block and Shift-convolution structure diagram. (a) the LFEF Block structure diagram; (b) the Shift-convolution structure diagram.

In Figure 6a, the LFEF Block consists of N groups of LFE modules. The final output is composed of the features obtained from the input characteristics, which are concatenated with the features extracted by N groups of local feature extraction modules through residual connections. The local feature extraction module contains shift-convolution. In the shift-convolution [31], we divide the input features into five equal groups. As shown in Figure 6b, the first four groups of features are shifted along different spatial dimensions (specifically left, right, up, and down), while the final group remains unchanged. This approach effectively leverages information from neighboring pixels. Without introducing additional learnable parameters and extensive computations, shift-convolution can offer a larger receptive field while maintaining computational complexity that is nearly identical to that of the original module. As a result, the LFEF Block can extract local features of images efficiently and reduce the missed detection rate of small objects.

4. Experiment

4.1. Datasets and Experimental Settings

In this study, the 2D object dataset from KITTI [32] is utilized to validate the efficiency of the proposed model. This dataset is one of the most commonly used evaluation datasets for computer vision algorithms in autonomous driving scenarios. It contains 7481 images collected from real-world traffic driving scenarios in urban areas, rural regions, and highways. The dataset comprises six categories of detection targets, including automobiles, trucks, vans, pedestrians, bicycles, and trams. In order to meet the requirements of the experiment, the dataset was segmented into training, validation, and testing subsets in a ratio of 7:2:1. At the same time, this study also employs the SODA 10M [33] dataset to evaluate the generalization capabilities of the model. This dataset comprises a total of 10,000 images. It includes six categories of detection targets: pedestrians, cars, trucks, trams, bicycles, and tricycles. The division ratio of this dataset is consistent with that of the KITTI dataset.

The experimental environment is based on the Windows operating system, Python 3.8.0, and PyTorch1.13.1. The hardware and model parameters are detailed in Table 1. The batch size was set to 4, with a training duration of 200 epochs, and the learning rate was set at 0.0001.

Table 1.

Hardware configuration and model parameters.

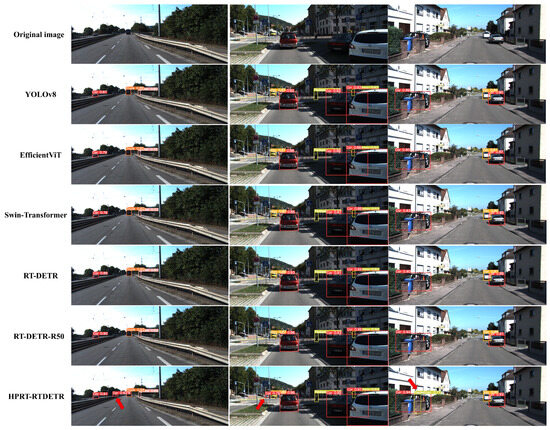

4.2. The Performance Comparison of Different Detection Models

To demonstrate the performance of the improved model in real-world traffic scenarios, we compared the proposed HPRT-DETR model with mainstream detection models including YOLOv8 [34], EfficientViT, Swin-Transformer, and the RT-DETR series. The comparison results are illustrated in Figure 7. The final row of Figure 7 presents the results of the HPRT-DETR model, achieving higher scores in multiple object detection categories than other models. The HPRT-DETR model demonstrates its capability to successfully identify small targets at long distances, vehicles obscured by traffic signs, and pedestrians obscured by walls (as indicated by the red arrows in the last row of Figure 7).

Figure 7.

Comparison of detection results from different models.

The results show that the HPRT-DETR model exhibits superior performance in addressing the challenges of small targets and obscured targets in real-world traffic scenarios. The reason for this is that the proposed DAIFI module and the LFEF Block module are capable of extracting high-level semantic features as well as multi-scale features.

To further highlight the advantages of the HPRT-DETR model, a comprehensive comparison of its performance metrics against those of other object detection models is conducted. The comparison results are presented in Table 2.

Table 2.

Comparison of evaluation metrics for different models.

As shown in Table 2, the HPRT-DETR model has achieved significant improvements in both mAP50 and mAP50:95. The improvement in detection accuracy can be primarily attributed to the introduction of the DAIFI module and the LFEF Block. The DAIFI module is capable of more efficiently extracting semantic feature information from deep feature layers, thereby enhancing the detection accuracy of the model. The LFEF Block enhances the efficiency of cross-scale feature fusion by expanding the model’s receptive field, thereby improving the model’s capability to detect small targets. Moreover, as indicated in the last column of Table 2, the FPS performance of the HPRT-DETR model surpasses that of other models. This suggests that the model we proposed possesses a high detection speed. This is primarily attributed to the BIC Block. The introduction of BIC Block significantly reduces the number of model parameters, thereby alleviating the computational burden. It significantly improves the operational speed of the model while maintaining detection accuracy.

In summary, the HPRT-DETR model demonstrates superior performance in both detection accuracy and real-time. The improved model can meet the performance requirements of object detection algorithms in real-world traffic driving environments.

4.3. Experimental Details and Discussions

4.3.1. Comparative Experiment of the Backbone Network

In real-world traffic driving environments, object detection algorithms must not only accurately locate pedestrians and vehicles but also possess excellent real-time performance. To enhance detection speed while maintaining detection accuracy, we optimized the backbone network of RT-DETR and introduced the BIC Block to reduce model parameters and computational load. We assessed the performance of the BIC Block by comparing it against mainstream backbone networks. These backbone networks primarily include the Basic Block [7], Star Block [35], WTConv Block [36], DualConv Block [37], AKConv Block [38], and DCNv2 Block [39]. This study utilizes the Basic Block derived from the backbone network ResNet-18 in RT-DETR. The comparative results are presented in Table 3.

Table 3.

Comparison of evaluation metrics for different backbone networks.

As shown in the first row of Table 3, the Basic Block achieves performance metrics of 81.58% for precision, 76.59% for recall, 78.87% for mAP50, 49.69% for mAP50:95, 19.97 M for parameters, and 57.3 for GFLOPs. The detection performance exhibits a relatively balanced level.The Star-Block and WTConv-Block exhibit remarkable performance in reducing model parameters and computational load. However, their mAP50 values decrease by 2.43% and 1.22%, respectively. This indicates that, although these models are lightweight, their detection accuracies may be somewhat compromised. The performance of the DualConv-Block significantly lags behind that of the Basic Block, failing to achieve superior results in all evaluated metrics. While the AKConv-Block achieves a significant reduction in model parameters and computational load, it also experiences a decrease in mAP50. This backbone network is unable to maintain stable detection performance while reducing the consumption of computing resources. The DCNv2 Block demonstrates a substantial improvement in precision, achieving a 5.31% increase compared to the Basic Block. However, this enhancement is accompanied by an increase in model parameters and computational requirements, which may limit its applicability in resource-constrained environments. As shown in the last row of Table 3, the proposed BIC Block not only achieves substantial reductions in both the overall parameter and computational load but also enhances accuracy. This indicates that the BIC Block achieves a relatively optimal balance between model optimization and performance enhancement.

In conclusion, the comparative experimental results presented in Table 3 clearly demonstrate the excellent performance of the BIC Block across various performance metrics, thereby validating its effectiveness comprehensively.

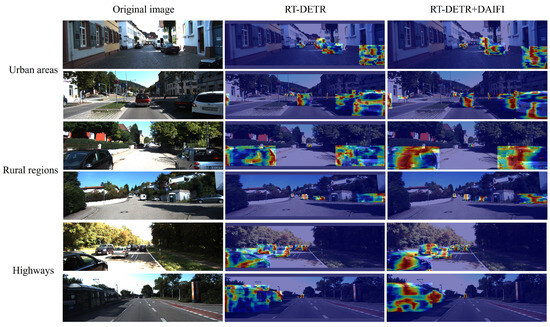

4.3.2. Experiment for Verifying the Effectiveness of the DAIFI Module

To validate the efficiency of the DAIFI module, we utilized GradCAM [40] to generate heatmaps both before and after the introduction of the Deformable Attention mechanism. Comparative experiments were carried out in various driving environments, including urban areas, rural regions, and highways. The comparative results of the heatmap are illustrated in Figure 8.

Figure 8.

Comparison of model heatmaps before and after the introduction of the DAIFI module.

As shown in the second column of Figure 8, the RT-DETR model without the DAIFI module, the red areas in the heatmap are relatively dispersed. This indicates that the model is unable to focus on key areas effectively. Compared to the heatmap in the second column, the heatmap in the third column exhibits a more concentrated red region around the detected target and is positioned closer to the center of that target. The introduction of the DA-AIFI module enhances the precision and focus of the model’s attention. This allows the model to more effectively concentrate on key detection targets within images, thereby enabling accurate localization of target positions even in complex and variable backgrounds. The results presented in these heatmaps clearly illustrate the superior performance of the DAIFI module. This advantage is attributed to the module’s capability to precisely and flexibly extract high-level semantic features while capturing intricate details within images.

4.3.3. The Ablation Experiments of Different Modules

To investigate the enhancement effects of the BIC Block, DAIFI module, and LFEF Block on the HPRT-DETR model, we conducted six sets of ablation studies. The experimental results are presented in Table 4.

Table 4.

Results of ablation experiments.

From the data in Method 2 of Table 4, introducing the BIC Block module improves mAP50 and mAP50:95 by 0.65% and 0.45%, respectively, while reducing the parameter count and GFLOPs by 23.08% and 17.63%. This indicates that the model exhibits superior feature extraction capabilities and achieves faster detection speeds. From the data presented in Method 3 of Table 4, it is evident that integrating the DAIFI module into the model results in the increase of mAP50 by 0.34% and MAP50:95 by 0.11%, respectively, along with a small improvement in FPS. From the data in Method 4 of Table 4, the application of the LFEF Block leads to improvements in mAP50 and mAP50:95 by 0.73% and 0.56%, respectively, while also resulting in a slight increase in FPS. It has been demonstrated that the LFEF Block achieves a superior feature fusion effect. Finally, after incorporating all the improvement strategies, the HPRT-DETR model achieved increases of 1.98%, 2.03%, and 15.25% in mAP50, mAP50:95, and FPS, respectively. The ablation experiments demonstrate that each of the proposed improvement strategies contributes to the detection performance of the HPRT-DETR model.

4.3.4. Model Generalization Ability Test

To further validate the efficiency of the models, we evaluated the generalization capabilities of both the RT-DETR and HPRT-DETR models using the SODA10M dataset. The results of the two models on the SODA10M dataset are presented in Table 5. As shown in Table 5, the performance metrics of HPRT-DETR consistently surpass those of RT-DETR.

Table 5.

The results of the generalization ability of the model on the SODA 10M dataset.

The visualization detection results of the RT-DETR and HPRT-DETR models on the SODA10M dataset are presented in Figure 9.

Figure 9.

The visualization results of the RT-DETR and HPRT-DETR models on SODA 10M.

From the first row of Figure 9, it is evident that the RT-DETR model mistakenly identifies the background as the target Tram, while the HPRT-DETR model avoids this misdetection. From the second and third images in the last column (the areas highlighted by the red arrows), the HPRT-DETR model successfully detects a cyclist and a truck, which are not detected by the original RT-DETR model. The experimental data on generalization capability and the comparison results of visualization detection indicate that the HPRT-DETR model demonstrates superior detection performance and accuracy on the SODA10M dataset. Furthermore, the improved model is able to significantly reduce false and missed detection.

5. Conclusions

This study proposes a high-precision, real-time object detection model known as HPRT-DETR, which is specifically designed for intelligent driving vehicles. The model effectively reduces missed and false detection for small targets and occlusions, improving detection accuracy. It also enhances efficiency to meet the real-time requirements of intelligent vehicle object detection. HPRT-DETR features three key enhancements: the BIC Block that efficiently extract feature and effectively reduces the model’s parameters, the DAIFI module that captures essential features and lowers missed and false detection rates in occluded scenarios, and the LFEF Block for feature fusion that addresses missed detection of small targets. The superiority of the HPRT-DETR model’s performance is validated on the KITTI dataset and the SODA10M dataset. The HPRT-DETR model shows superior detection accuracy and speed, but it needs further improvement in traffic scenarios under complex weather conditions. In future work, we aim to address these issues to improve the performance of the model.

Author Contributions

Conceptualization, X.S. and B.F.; methodology, B.F.; software, B.F. and H.L.; validation, L.W., X.S. and J.N.; formal analysis, X.S.; investigation, J.N.; resources, H.L. and X.S.; data curation, B.F. and X.S.; writing—original draft preparation, B.F.; writing—review and editing, X.S.; visualization, B.F.; supervision, L.W., X.S. and J.N.; project administration, H.L.; funding acquisition, X.S. and L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported financially by the National Natural Science Foundation of China (Grant No.: 61472444 and 61671470), the Scientific and Technological Project of Henan Province (NO. 232102211035, 232102240058), the Foreign Expert Project of Ministry of Science and Technology of the People’s Republic of China (G2023026004L), the Henan Province Key R&D Special Project (231111112700), the ZHONGYUAN Talent Program (ZYYCYU202012112), and the Water Conservancy Equipment and Intelligent Operation & Maintenance Engineering Technology Research Centre in Henan Province (Yukeshi2024-01).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37. [Google Scholar]

- Vaswani, A. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 5998–6008. [Google Scholar]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229. [Google Scholar]

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. Detrs beat yolos on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022. [Google Scholar]

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790. [Google Scholar]

- Li, A.; Sun, S.; Zhang, Z.; Feng, M.; Wu, C.; Li, W. A multi-scale traffic object detection algorithm for road scenes based on improved YOLOv5. Electronics 2023, 12, 878. [Google Scholar] [CrossRef]

- Wang, S.y.; Qu, Z.; Li, C.j.; Gao, L.y. BANet: Small and multi-object detection with a bidirectional attention network for traffic scenes. Eng. Appl. Artif. Intell. 2023, 117, 105504. [Google Scholar] [CrossRef]

- Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. Unitbox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520. [Google Scholar]

- Zhang, L.J.; Fang, J.J.; Liu, Y.X.; Le, H.F.; Rao, Z.Q.; Zhao, J.X. CR-YOLOv8: Multiscale object detection in traffic sign images. IEEE Access 2023, 12, 219–228. [Google Scholar] [CrossRef]

- Liu, S.; Huang, D. Receptive field block net for accurate and fast object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 385–400. [Google Scholar]

- Tang, L.; Yun, L.; Chen, Z.; Cheng, F. HRYNet: A highly robust YOLO network for complex road traffic object detection. Sensors 2024, 24, 642. [Google Scholar] [CrossRef] [PubMed]

- Qiu, Y.; Lu, Y.; Wang, Y.; Jiang, H. IDOD-YOLOV7: Image-dehazing YOLOV7 for object detection in low-light foggy traffic environments. Sensors 2023, 23, 1347. [Google Scholar] [CrossRef] [PubMed]

- Khan, A.H.; Rizvi, S.T.R.; Dengel, A. Real-time Traffic Object Detection for Autonomous Driving. arXiv 2024, arXiv:2402.00128. [Google Scholar]

- Khan, A.H.; Nawaz, M.S.; Dengel, A. Localized semantic feature mixers for efficient pedestrian detection in autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–23 June 2023; pp. 5476–5485. [Google Scholar]

- Wang, Y.; Chen, X.; Zhao, E.; Zhao, C.; Song, M.; Yu, C. An Unsupervised Momentum Contrastive Learning Based Transformer Network for Hyperspectral Target Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 9053–9068. [Google Scholar] [CrossRef]

- Chen, X.; Zhang, Y.; Dong, Y.; Du, B. Generative Self-Supervised Learning with Spectral-Spatial Masking for Hyperspectral Target Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5522713. [Google Scholar] [CrossRef]

- Feng, S.; Wang, X.; Feng, R.; Xiong, F.; Zhao, C.; Li, W.; Tao, R. Transformer-Based Cross-Domain Few-Shot Learning for Hyperspectral Target Detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5501716. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Liu, X.; Peng, H.; Zheng, N.; Yang, Y.; Hu, H.; Yuan, Y. Efficientvit: Memory efficient vision transformer with cascaded group attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14420–14430. [Google Scholar]

- Zhang, J.; Li, X.; Li, J.; Liu, L.; Xue, Z.; Zhang, B.; Jiang, Z.; Huang, T.; Wang, Y.; Wang, C. Rethinking mobile block for efficient attention-based models. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 1389–1400. [Google Scholar]

- Howard, A.G. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861. [Google Scholar]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141. [Google Scholar]

- Xia, Z.; Pan, X.; Song, S.; Li, L.E.; Huang, G. Vision transformer with deformable attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4794–4803. [Google Scholar]

- Zhang, X.; Zeng, H.; Guo, S.; Zhang, L. Efficient long-range attention network for image super-resolution. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 649–667. [Google Scholar]

- Wu, B.; Wan, A.; Yue, X.; Jin, P.; Zhao, S.; Golmant, N.; Gholaminejad, A.; Gonzalez, J.; Keutzer, K. Shift: A zero flop, zero parameter alternative to spatial convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9127–9135. [Google Scholar]

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The kitti vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361. [Google Scholar]

- Han, J.; Liang, X.; Xu, H.; Chen, K.; Hong, L.; Mao, J.; Ye, C.; Zhang, W.; Li, Z.; Liang, X.; et al. Soda10m: A large-scale 2D self/semi-supervised object detection dataset for autonomous driving. arXiv 2021, arXiv:2106.11118. [Google Scholar]

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6. [Google Scholar]

- Ma, X.; Dai, X.; Bai, Y.; Wang, Y.; Fu, Y. Rewrite the Stars. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5694–5703. [Google Scholar]

- Finder, S.E.; Amoyal, R.; Treister, E.; Freifeld, O. Wavelet convolutions for large receptive fields. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 363–380. [Google Scholar]

- Pan, J.; Liu, S.; Sun, D.; Zhang, J.; Liu, Y.; Ren, J.; Li, Z.; Tang, J.; Lu, H.; Tai, Y.W.; et al. Learning dual convolutional neural networks for low-level vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3070–3079. [Google Scholar]

- Zhang, X.; Song, Y.; Song, T.; Yang, D.; Ye, Y.; Zhou, J.; Zhang, L. AKConv: Convolutional kernel with arbitrary sampled shapes and arbitrary number of parameters. arXiv 2023, arXiv:2311.11587. [Google Scholar]

- Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable convnets v2: More deformable, better results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9308–9316. [Google Scholar]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).