Application of Cloud Simulation Techniques for Robotic Software Validation

Abstract

1. Introduction

State of the Art

2. Proposed Approach

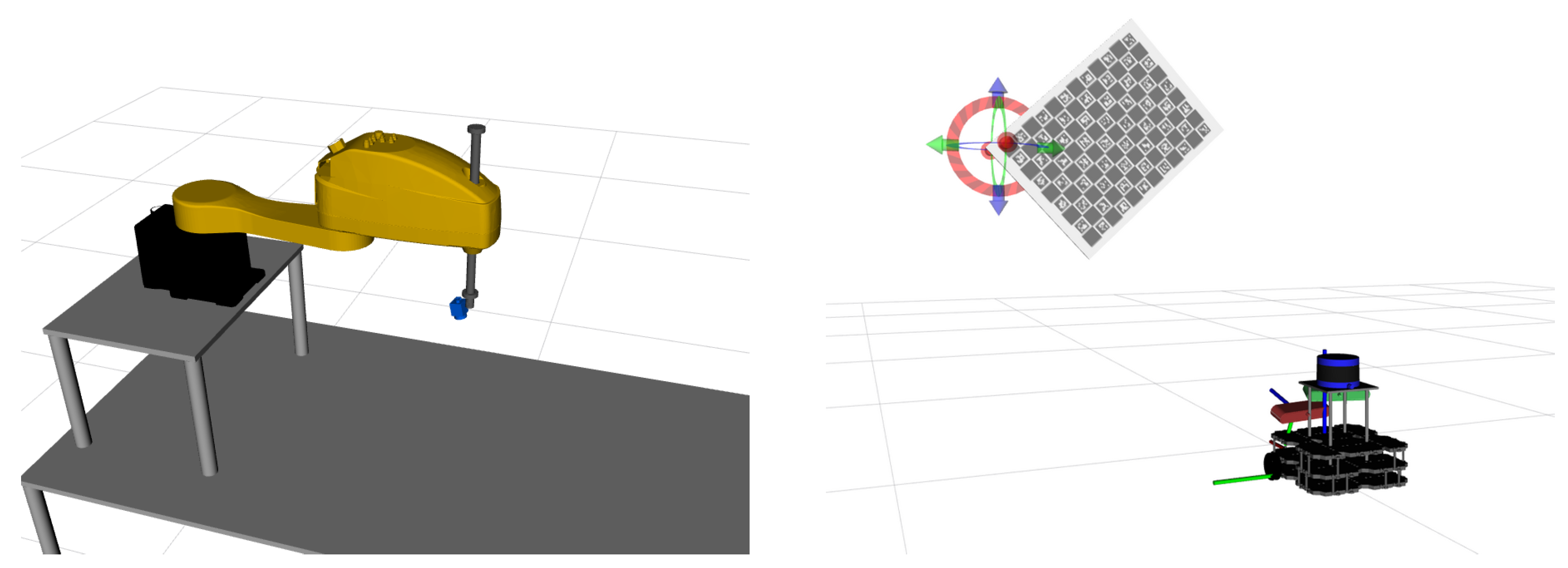

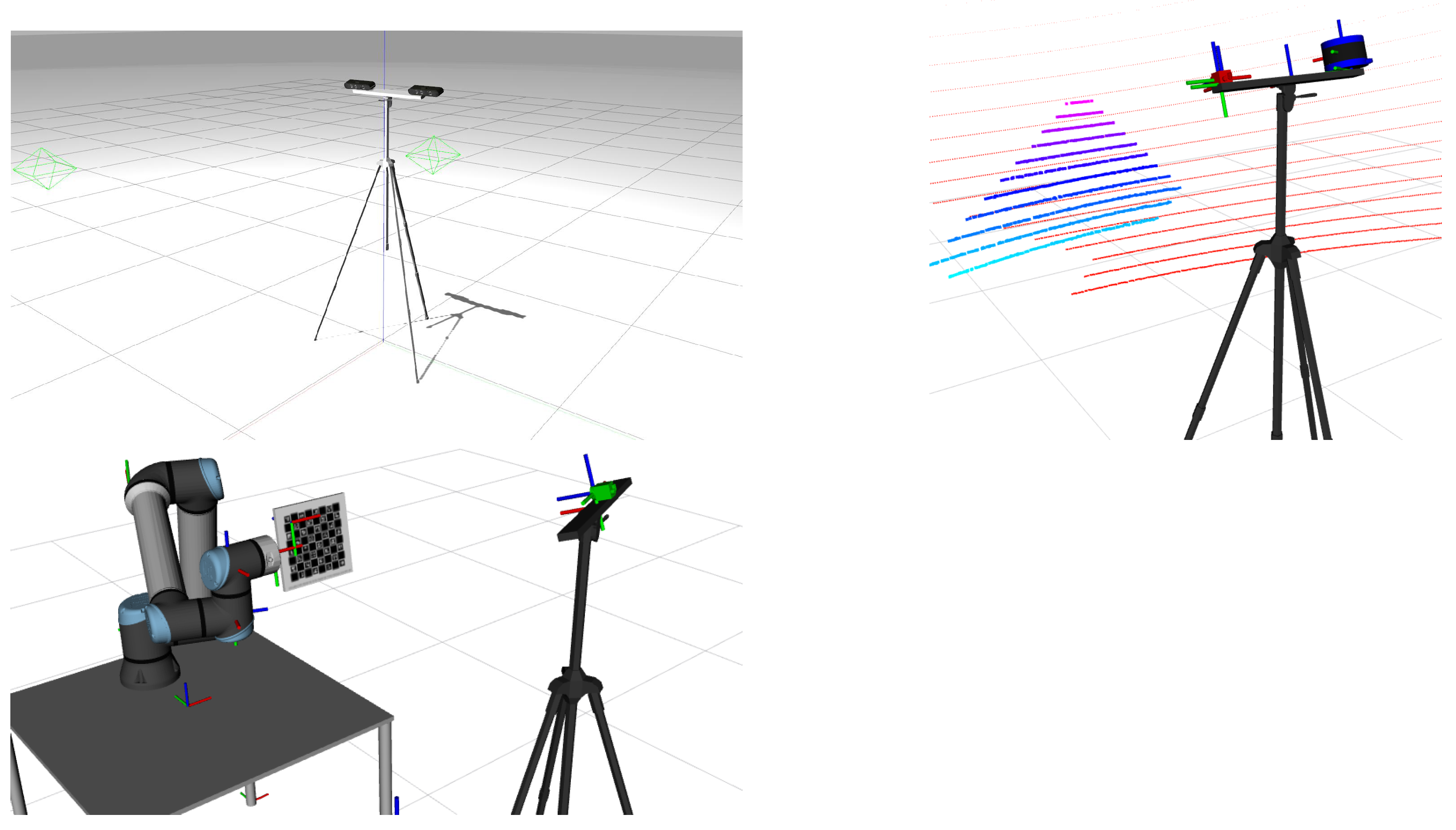

2.1. Robotic System for Testing

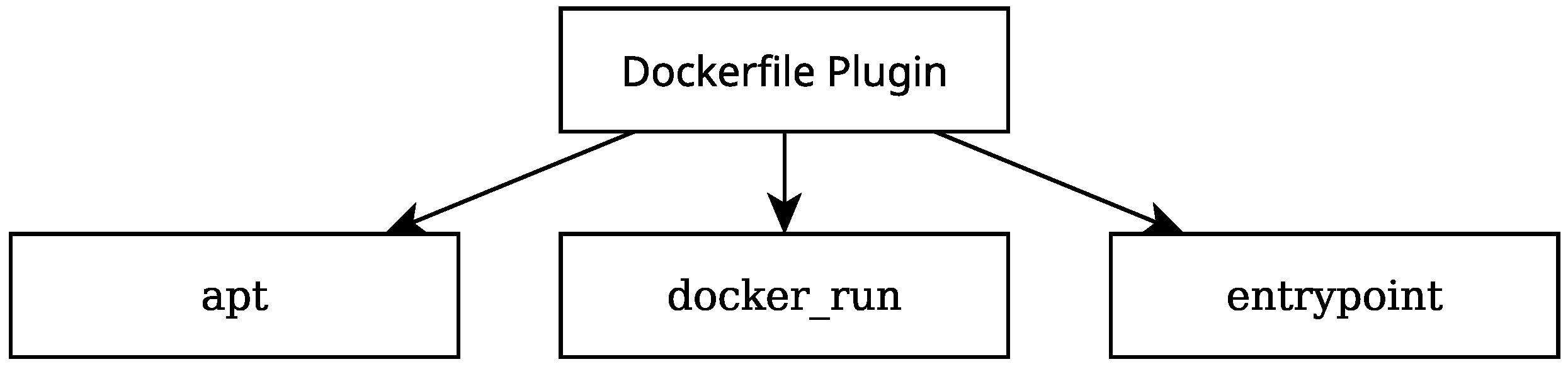

2.2. Container Image Creation

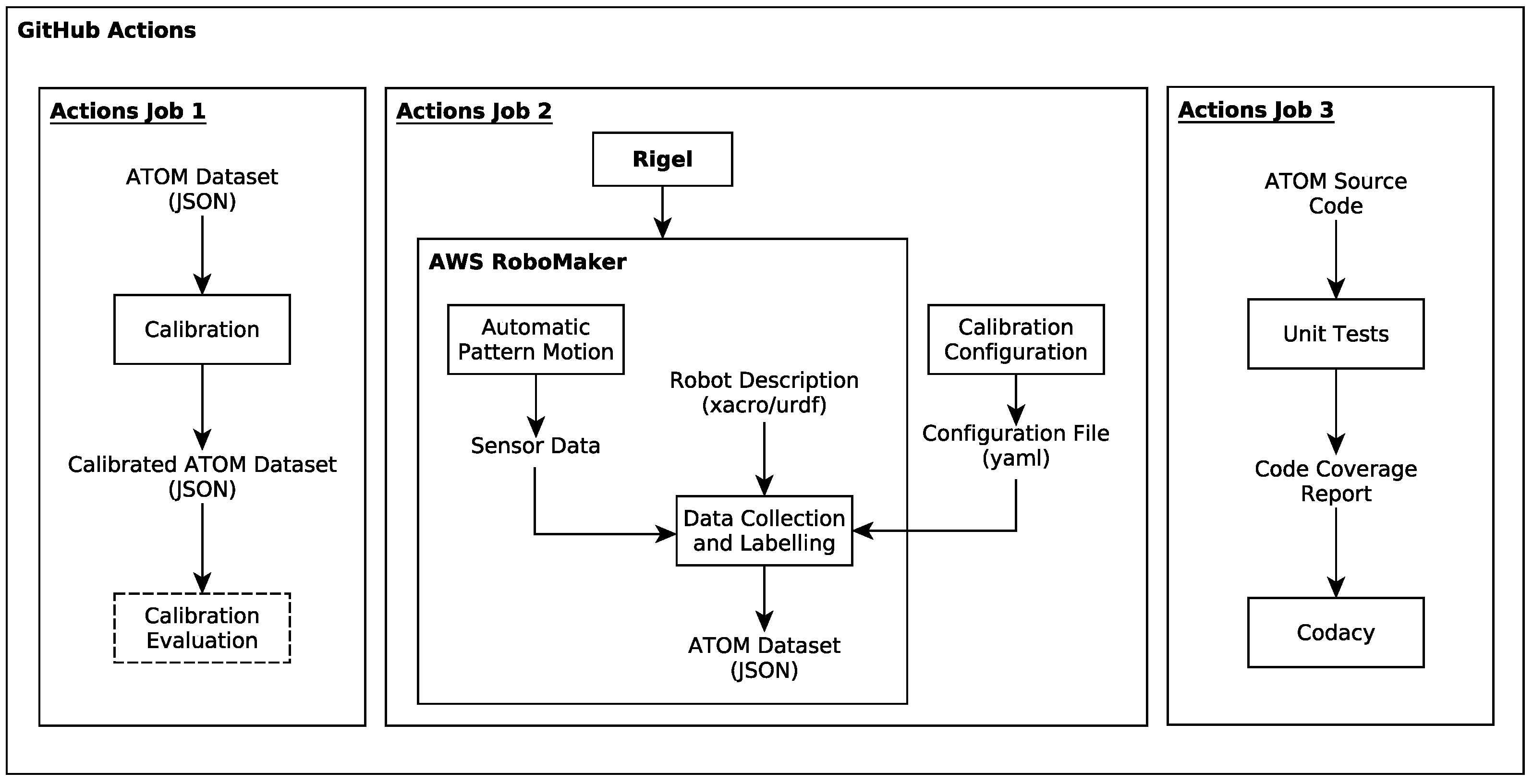

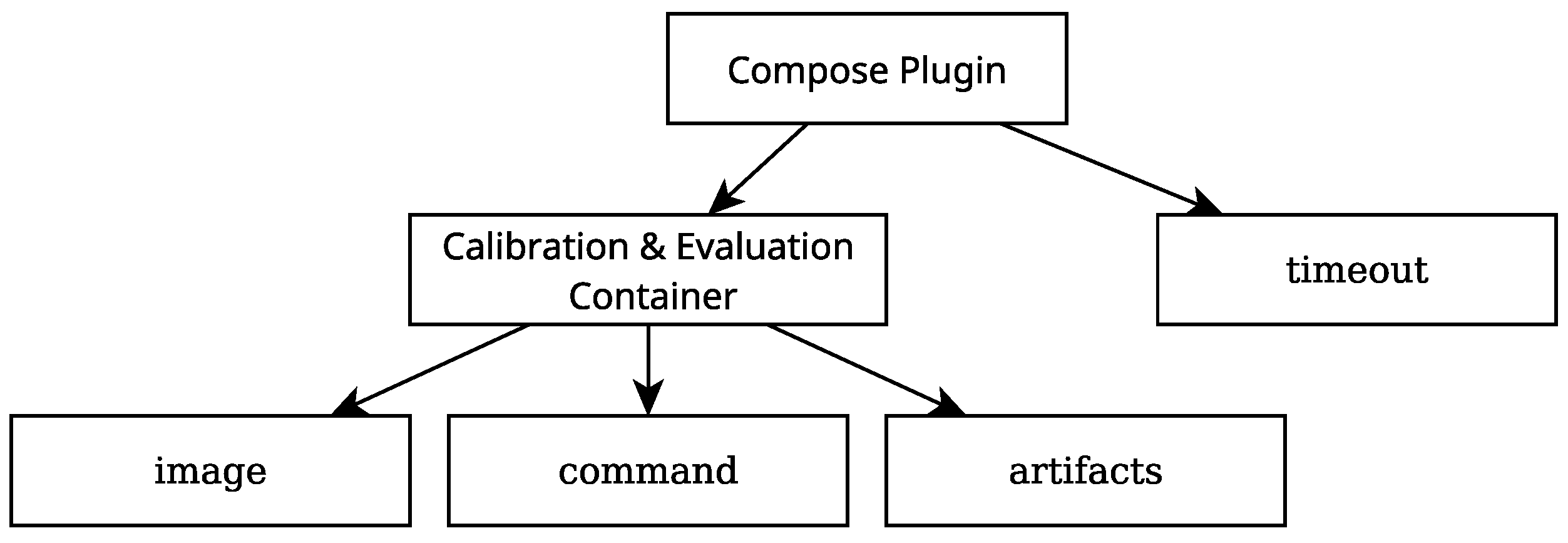

2.3. Container Image Orchestration

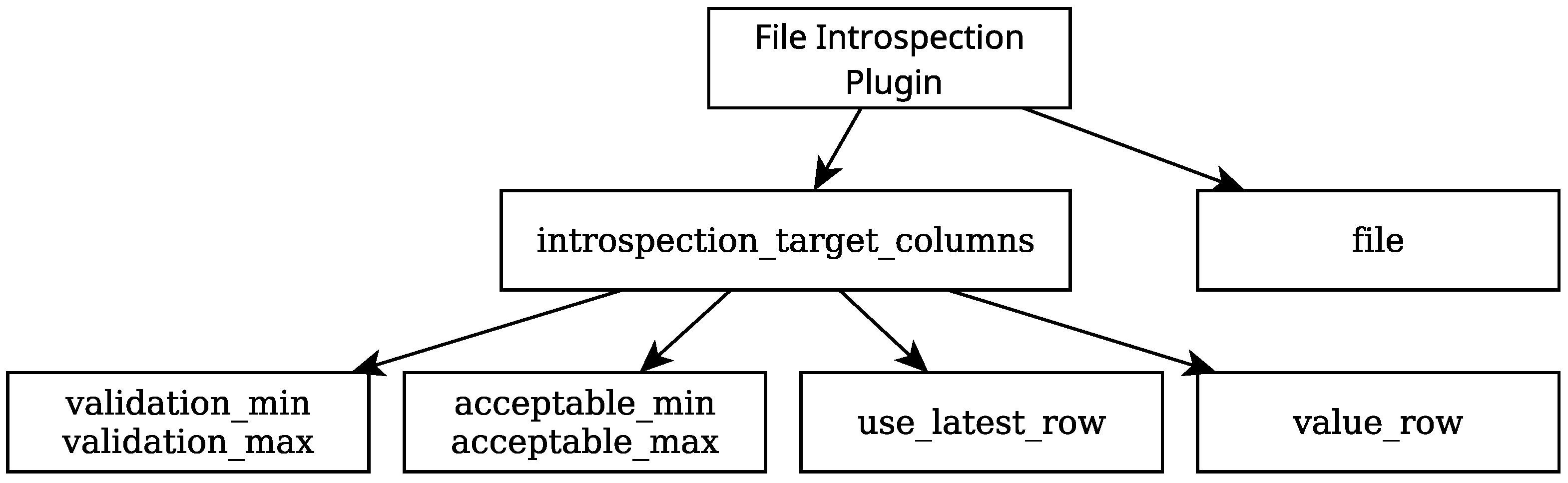

2.4. The File Introspection Plugin

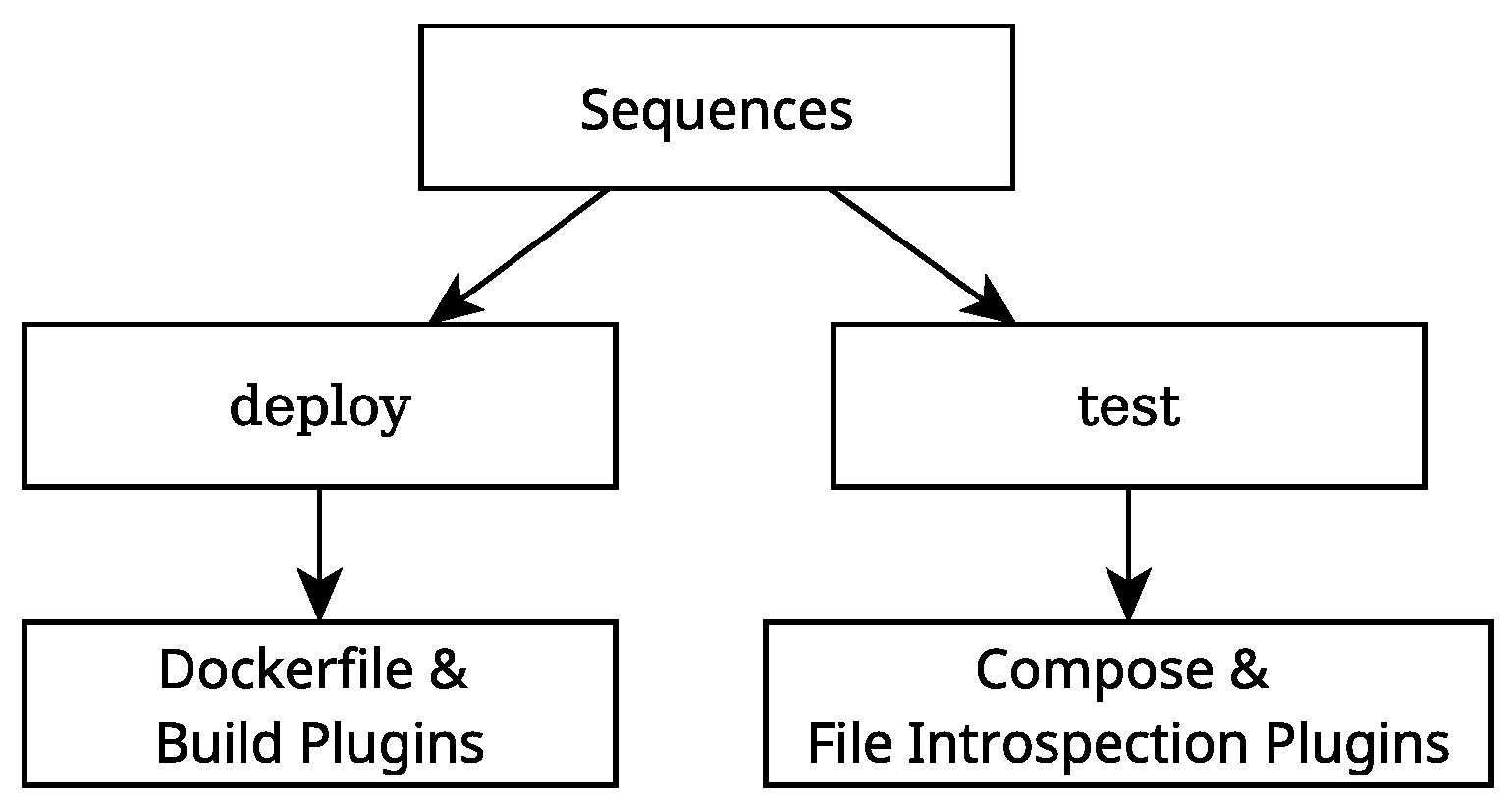

2.5. Rigel Job Sequence Definition

2.6. Automatic Data Collection

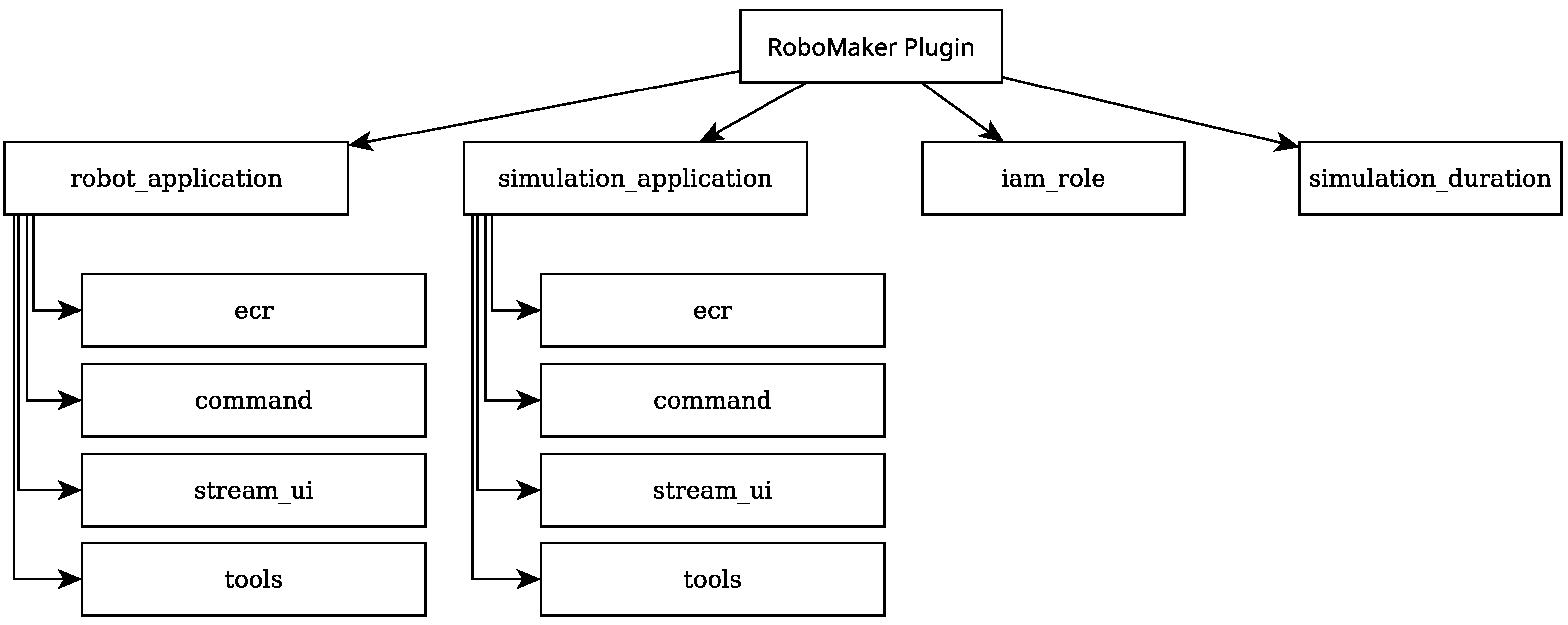

2.7. AWS RoboMaker Implementation with Rigel

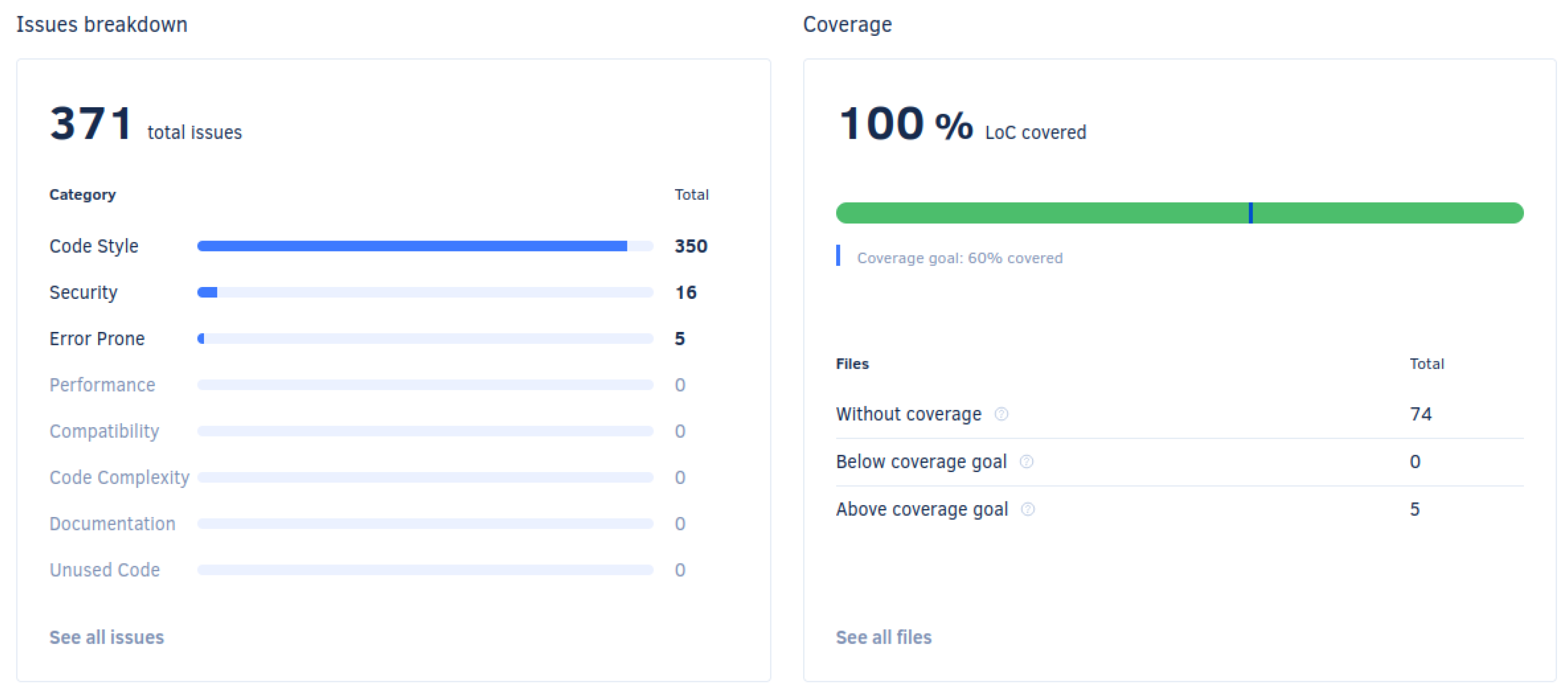

2.8. Unit Testing and Coverage Report

Algorithm 1. Example of a unit test, targeting the function generateName().
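The listing body for Algorithm 1 is not available here, so the following is only a minimal sketch of what a unit test targeting a function named generateName() could look like. The function body below is a hypothetical stand-in written for illustration (its parameters `prefix`, `suffix`, and `separator` are assumptions), not the implementation used in the tested software.

```python
import unittest


def generateName(name, prefix="", suffix="", separator="_"):
    """Hypothetical stand-in for the function under test.

    Joins the non-empty parts with a separator. This is an assumption made
    for the example, not the actual implementation.
    """
    parts = [part for part in (prefix, name, suffix) if part]
    return separator.join(parts)


class TestGenerateName(unittest.TestCase):
    """Each test checks one behaviour, so a failure points at one cause."""

    def test_name_only(self):
        # With no prefix or suffix, the name is returned unchanged.
        self.assertEqual(generateName("camera"), "camera")

    def test_prefix_and_suffix(self):
        # Prefix and suffix are joined around the name with the default separator.
        self.assertEqual(
            generateName("camera", prefix="left", suffix="link"),
            "left_camera_link",
        )

    def test_custom_separator(self):
        # The separator argument replaces the default underscore.
        self.assertEqual(
            generateName("camera", prefix="left", separator="-"),
            "left-camera",
        )
```

Saved as a test module, the cases can be run with `python -m unittest`, and a coverage tool can then report which lines of the function the tests exercised.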

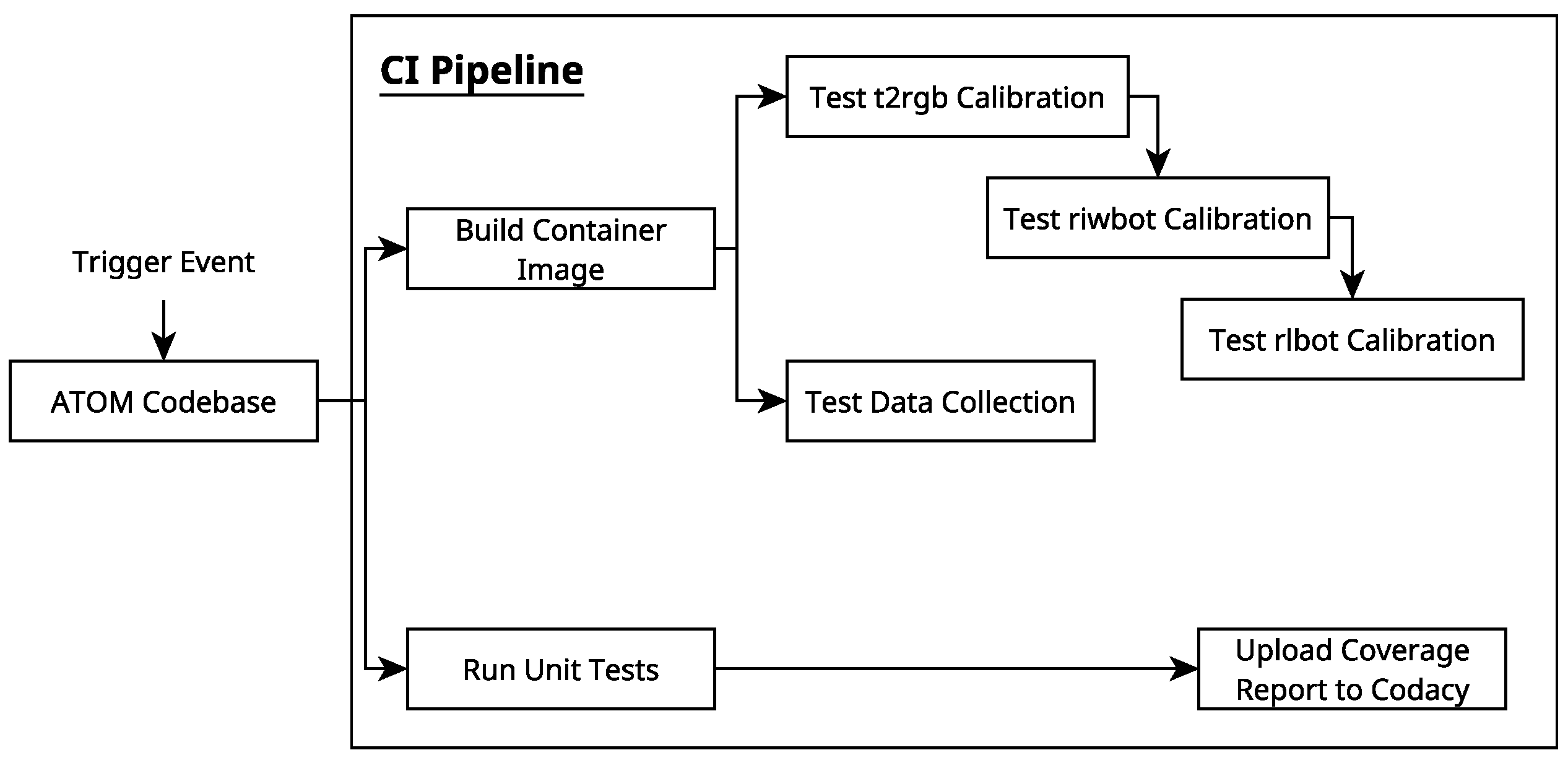

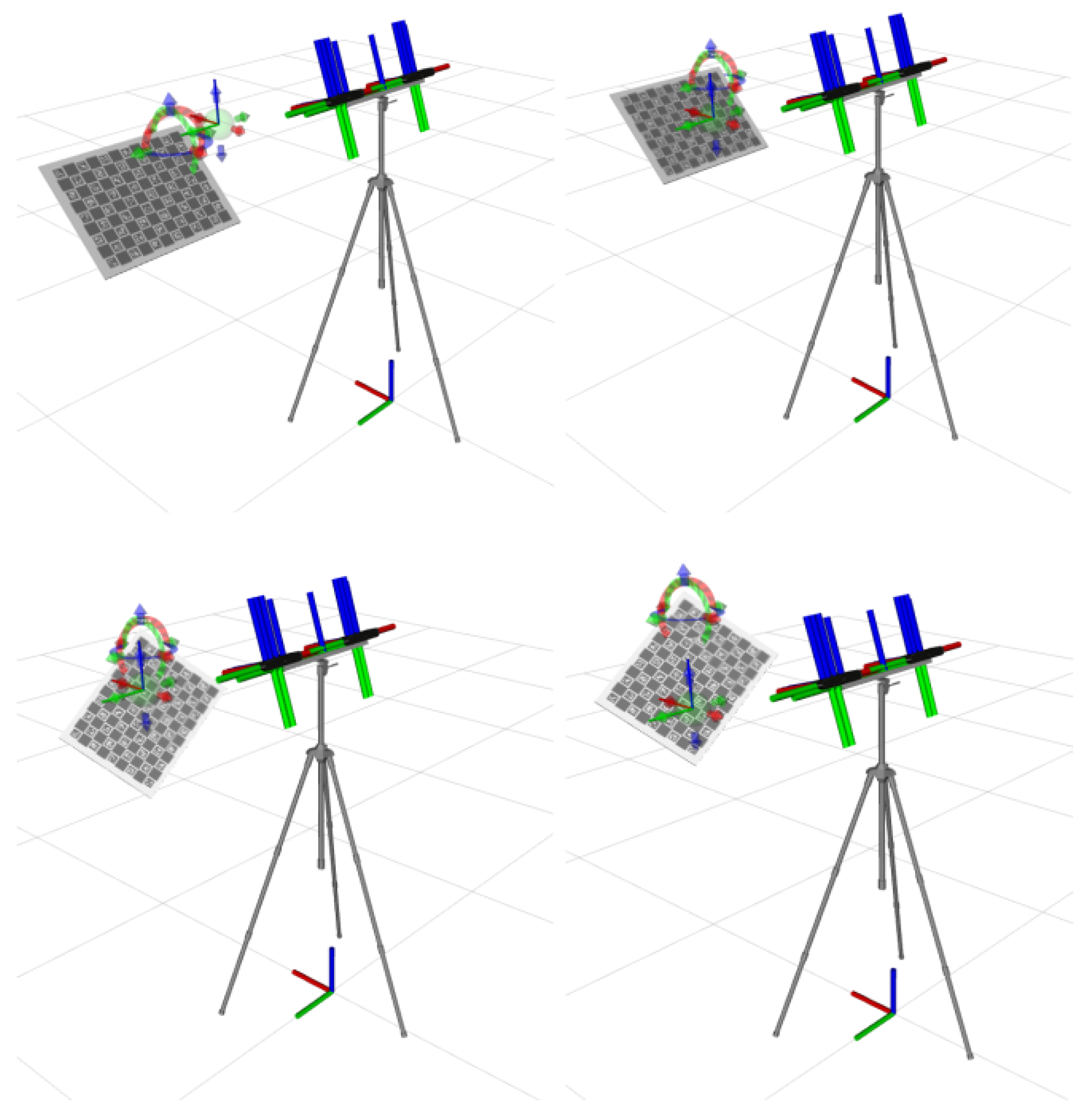

2.9. CI Workflow Creation and Calibration Testing

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 12–17 May 2009; Volume 3, p. 5.

2. Oliveira, M.; Pedrosa, E.; de Aguiar, A.P.; Rato, D.; dos Santos, F.N.; Dias, P.; Santos, V. ATOM: A general calibration framework for multi-modal, multi-sensor systems. Expert Syst. Appl. 2022, 207, 118000.

3. Teixeira, S.; Arrais, R.; Veiga, G. Cloud Simulation for Continuous Integration and Deployment in Robotics. In Proceedings of the 2021 IEEE 19th International Conference on Industrial Informatics (INDIN), Palma de Mallorca, Spain, 21–23 July 2021; pp. 1–8.

4. Melo, P.; Arrais, R.; Teixeira, S.; Veiga, G. A Container-Based Framework for Developing ROS Applications. In Proceedings of the 2022 IEEE 20th International Conference on Industrial Informatics (INDIN), Perth, Australia, 25–28 July 2022; pp. 280–285.

5. Guizzo, E. Robots with their heads in the clouds. IEEE Spectr. 2011, 48, 16–18.

6. Zhang, Y.; Li, L.; Nicho, J.; Ripperger, M.; Fumagalli, A.; Veeraraghavan, M. Gilbreth 2.0: An Industrial Cloud Robotics Pick-and-Sort Application. In Proceedings of the 2019 Third IEEE International Conference on Robotic Computing (IRC), Naples, Italy, 25–27 February 2019; pp. 38–45.

7. Shahin, M.; Ali Babar, M.; Zhu, L. Continuous Integration, Delivery and Deployment: A Systematic Review on Approaches, Tools, Challenges and Practices. IEEE Access 2017, 5, 3909–3943.

8. Mossige, M.; Gotlieb, A.; Meling, H. Testing robot controllers using constraint programming and continuous integration. Inf. Softw. Technol. 2015, 57, 169–185.

9. Jiang, M.; Miller, K.; Sun, D.; Liu, Z.; Jia, Y.; Datta, A.; Ozay, N.; Mitra, S. Continuous Integration and Testing for Autonomous Racing Software: An Experience Report from GRAIC. In Proceedings of the IEEE ICRA 2021, International Conference on Robotics and Automation, Workshop on Opportunities and Challenges with Autonomous Racing, Xi’an, China, 30 May–5 June 2021.

10. Docker. What Is a Container? 2023. Available online: https://www.docker.com/resources/what-container/ (accessed on 30 January 2023).

11. Lumpp, F.; Panato, M.; Fummi, F.; Bombieri, N. A container-based design methodology for robotic applications on kubernetes edge-cloud architectures. In Proceedings of the Forum on Specification and Design Languages, Antibes, France, 8–10 September 2021.

12. Melo, P.; Arrais, R.; Veiga, G. Development and Deployment of Complex Robotic Applications using Containerized Infrastructures. In Proceedings of the 2021 IEEE 19th International Conference on Industrial Informatics (INDIN), Palma de Mallorca, Spain, 21–23 July 2021; pp. 1–8.

13. Santos, A.; Cunha, A.; Macedo, N.; Lourenço, C. A framework for quality assessment of ROS repositories. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 4491–4496.

14. Ayewah, N.; Pugh, W.; Hovemeyer, D.; Morgenthaler, J.D.; Penix, J. Using static analysis to find bugs. IEEE Softw. 2008, 25, 22–29.

15. Santos, A.; Cunha, A.; Macedo, N. The High-Assurance ROS Framework. In Proceedings of the 2021 IEEE/ACM 3rd International Workshop on Robotics Software Engineering, RoSE, Madrid, Spain, 2 June 2021; pp. 37–40.

16. Niedermayr, R.; Juergens, E.; Wagner, S. Will my tests tell me if I break this code? In Proceedings of the International Workshop on Continuous Software Evolution and Delivery, Austin, TX, USA, 14–15 May 2016; pp. 23–29.

17. Taufiqurrahman, F.; Widowati, S.; Alibasa, M.J. The Impacts of Test Driven Development on Code Coverage. In Proceedings of the 2022 1st International Conference on Software Engineering and Information Technology (ICoSEIT), Bandung, Indonesia, 22–23 November 2022; pp. 46–50.

18. Ivanković, M.; Petrović, G.; Just, R.; Fraser, G. Code coverage at Google. In Proceedings of the 2019 27th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Tallinn, Estonia, 26–30 August 2019; pp. 955–963.

19. Shah, M. Solving the Robot-World/Hand-Eye Calibration Problem Using the Kronecker Product. J. Mech. Robot. 2013, 5, 031007.

20. Li, A.; Wang, L.; Wu, D. Simultaneous robot-world and hand-eye calibration using dual-quaternions and Kronecker product. Int. J. Phys. Sci. 2010, 5, 1530–1536.

| Method | Trans. Noise | Rot. Noise | RMS Error, t2rgb (pix) | RMS Error, riwbot (pix) | RMS Error, rlbot (pix) |
|---|---|---|---|---|---|
| ATOM | 0.0 | 0.0 | 0.6000 | 0.7161 | 2.8133 |
| ATOM | 0.5 | 0.0 | 0.6009 | 0.7418 | 2.8094 |
| ATOM | 0.0 | 0.5 | 0.6004 | 0.7205 | 3.1954 |
| ATOM | 0.5 | 0.5 | 0.6007 | 0.7315 | 2.8443 |
| OpenCV | 0 | 0 | 1.6510 | - | - |
| Shah [19] | 0 | 0 | - | 0.8317 | - |
| Li et al. [20] | 0 | 0 | - | 1.3206 | - |

| Run # | Build | Calibration, t2rgb | Calibration, rlbot | Calibration, riwbot | Data Collection | Unit Test | Complete |
|---|---|---|---|---|---|---|---|
| #1 | 17 m 32 s | 7 m 43 s | 5 m 5 s | 5 m 4 s | 7 m 43 s | 16 s | 36 m 47 s |
| #2 | 16 m 30 s | 7 m 7 s | 5 m 5 s | 5 m 5 s | 7 m 50 s | 15 s | 35 m 13 s |
| #3 | 17 m 3 s | 7 m 3 s | 5 m 5 s | 5 m 4 s | 7 m 53 s | 31 s | 35 m 46 s |
| #4 | 19 m 19 s | 6 m 48 s | 5 m 5 s | 5 m 4 s | 7 m 51 s | 19 s | 37 m 50 s |
| #5 | 16 m 0 s | 6 m 46 s | 5 m 5 s | 5 m 5 s | 8 m 4 s | 16 s | 34 m 21 s |
| #6 | 17 m 23 s | 6 m 46 s | 5 m 4 s | 5 m 5 s | 7 m 55 s | 15 s | 35 m 47 s |
| #7 | 17 m 47 s | 6 m 46 s | 5 m 4 s | 5 m 4 s | 7 m 59 s | 15 s | 36 m 14 s |
| #8 | 15 m 53 s | 6 m 54 s | 5 m 4 s | 5 m 4 s | 7 m 47 s | 21 s | 34 m 13 s |
| #9 | 16 m 52 s | 6 m 44 s | 5 m 5 s | 5 m 5 s | 7 m 39 s | 14 s | 35 m 1 s |
| #10 | 16 m 11 s | 6 m 51 s | 5 m 5 s | 5 m 4 s | 7 m 53 s | 14 s | 34 m 25 s |
| Average | 17 m 3 s | 6 m 57 s | 5 m 5 s | 5 m 4 s | 7 m 51 s | 18 s | 35 m 34 s |
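The Average row of the timing table can be reproduced from the ten per-run durations. The sketch below shows one way to do so for the Build and Complete columns, assuming the averages were taken over seconds and rounded to the nearest second; the helper names (`to_seconds`, `to_text`, `average`) are illustrative and not part of the described tooling.

```python
import re


def to_seconds(text):
    """Parse a duration such as '17 m 32 s' or '16 s' into whole seconds."""
    match = re.fullmatch(r"(?:(\d+) m )?(\d+) s", text.strip())
    if match is None:
        raise ValueError(f"unrecognised duration: {text!r}")
    minutes, seconds = match.groups()
    return int(minutes or 0) * 60 + int(seconds)


def to_text(total_seconds):
    """Format seconds back into the table's 'M m S s' notation."""
    minutes, seconds = divmod(round(total_seconds), 60)
    return f"{minutes} m {seconds} s" if minutes else f"{seconds} s"


def average(durations):
    """Mean of a list of duration strings, rounded to the nearest second."""
    values = [to_seconds(d) for d in durations]
    return to_text(sum(values) / len(values))


# Per-run values copied from the Build and Complete columns (runs #1 to #10).
build = ["17 m 32 s", "16 m 30 s", "17 m 3 s", "19 m 19 s", "16 m 0 s",
         "17 m 23 s", "17 m 47 s", "15 m 53 s", "16 m 52 s", "16 m 11 s"]
complete = ["36 m 47 s", "35 m 13 s", "35 m 46 s", "37 m 50 s", "34 m 21 s",
            "35 m 47 s", "36 m 14 s", "34 m 13 s", "35 m 1 s", "34 m 25 s"]

print(average(build))     # 17 m 3 s
print(average(complete))  # 35 m 34 s
```

Both results match the Average row. Note that the Complete time of each run is not the sum of the individual job times in that row, so it should be read as a separately measured duration rather than a derived one.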


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vieira, D.; Oliveira, M.; Arrais, R.; Melo, P. Application of Cloud Simulation Techniques for Robotic Software Validation. Sensors 2025, 25, 1693. https://doi.org/10.3390/s25061693
