Evaluating Machine Learning-Based Soft Sensors for Effluent Quality Prediction in Wastewater Treatment Under Variable Weather Conditions

Abstract

1. Introduction

2. Materials and Methods

2.1. Effluent Quality Index (EQI)

2.2. Simulation Scenarios

- Dry weather, which represents the baseline conditions of BSM2 with relatively constant flow and load;

- Short-duration rain events (two to four hours), which simulate moderate rainfall that constantly increases the flow and partially dilutes the pollutants;

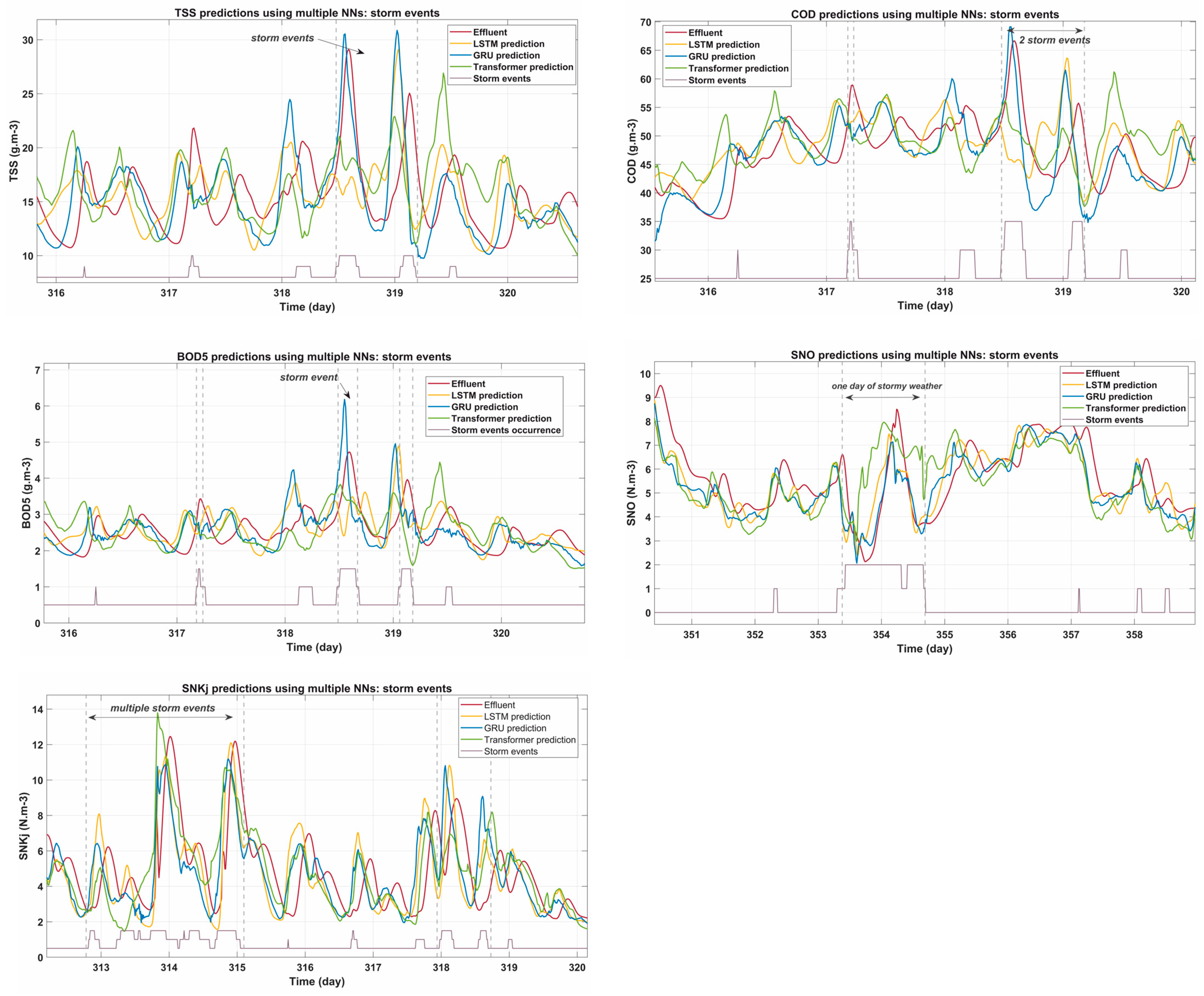

- Storm events, which often extend over several hours and produce episodes of intense rainfall, exceeding the usual capacity of the treatment plant and causing major fluctuations, especially observed in the case of certain parameters.

2.3. Data Preparation

2.3.1. Data Acquisition

2.3.2. Data Preprocessing

2.4. Machine Learning Models

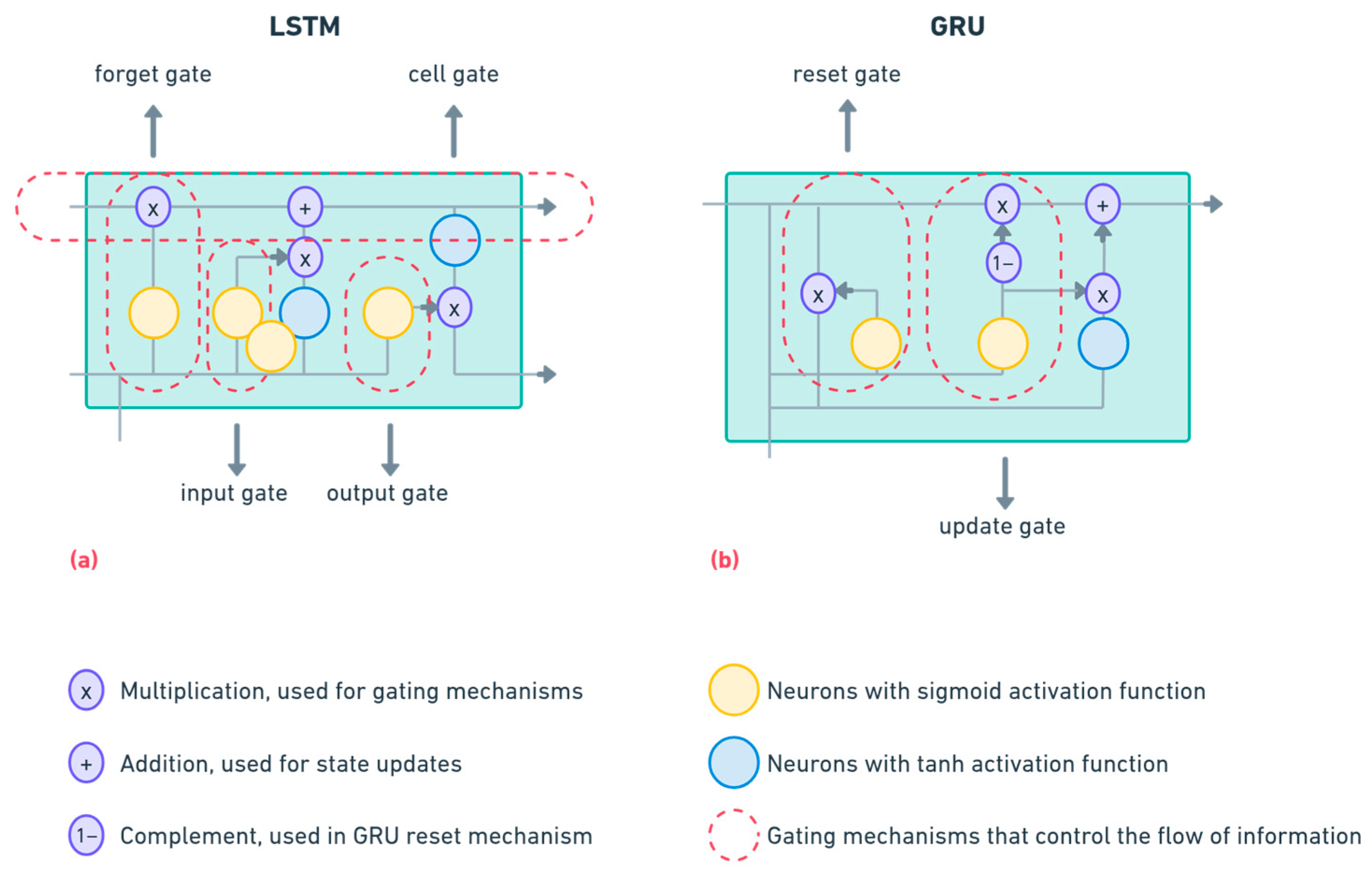

2.4.1. Long Short-Term Memory (LSTM) Network

2.4.2. Gated Recurrent Units (GRU) Network

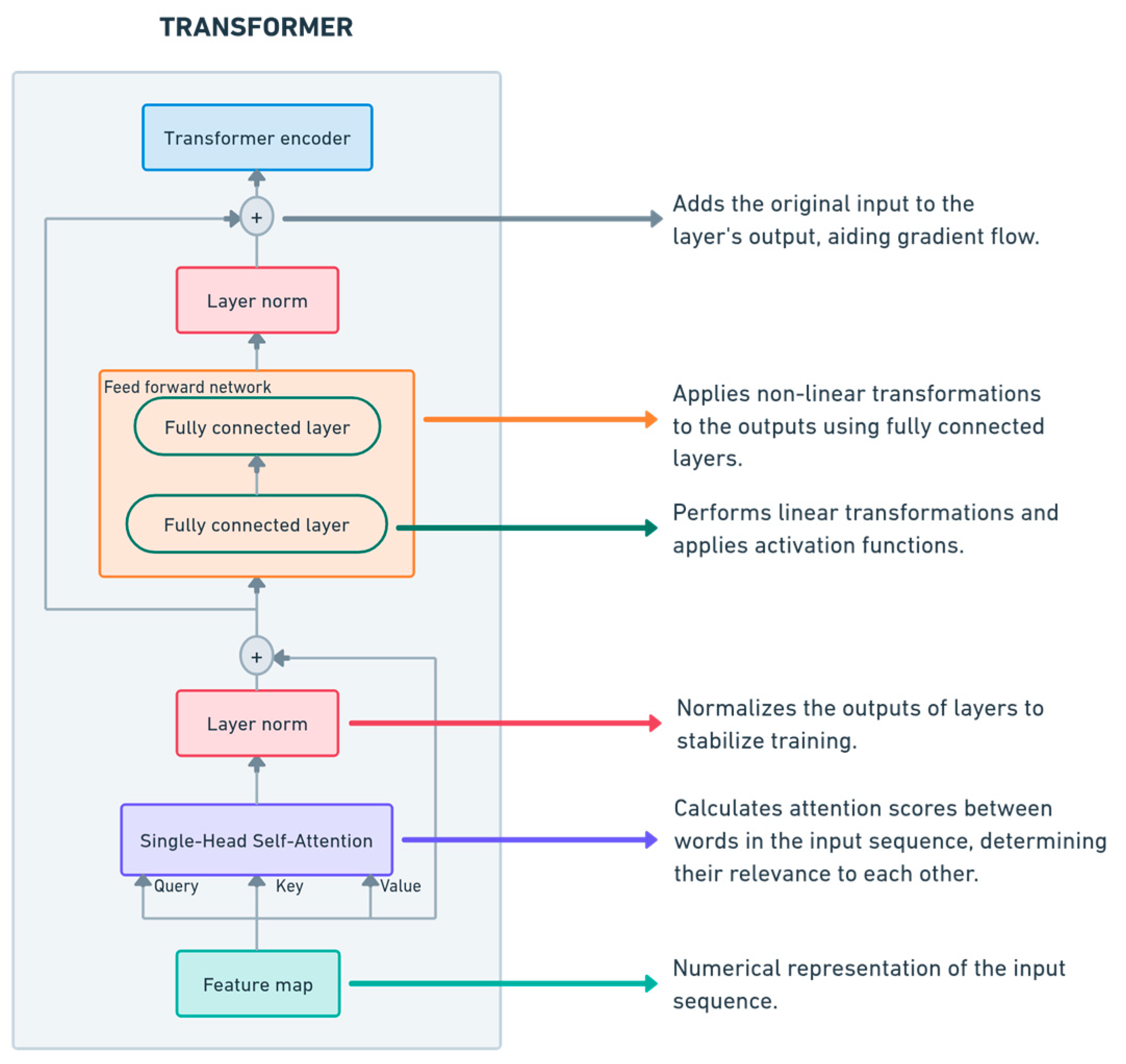

2.4.3. Transformer Network

2.4.4. Meta-Classification Using Random Forest

2.5. Evaluation Metrics

3. Results

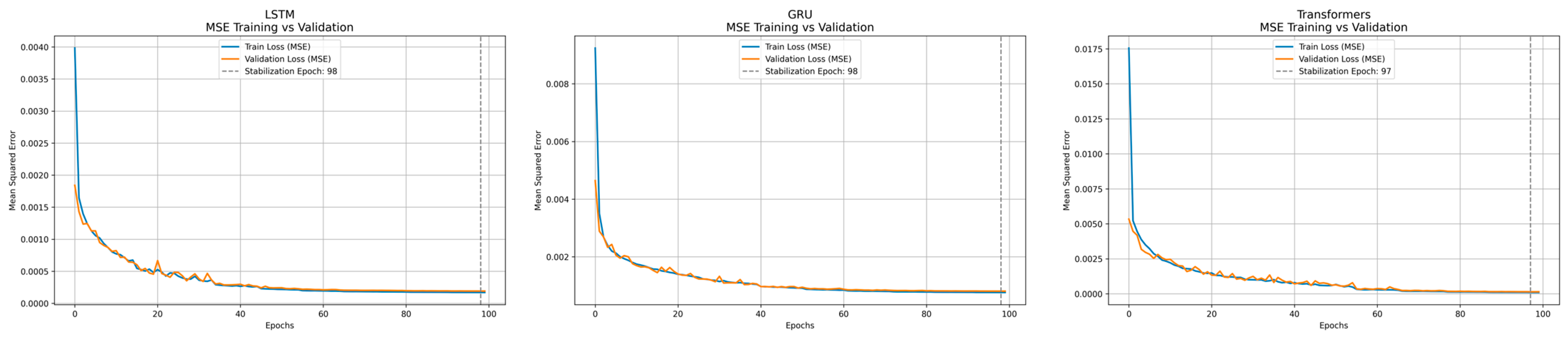

3.1. Training Performance of Neural Networks

3.1.1. Training Loss Evaluation

3.1.2. General Performance Metrics

3.1.3. Computational Requirements

- Training time: GRU and Transformer have similar training times, whereas LSTM trains faster and completes fewer epochs, possibly due to differences in parameter initialization or smaller effective batch sizes;

- Inference speed: Transformer demonstrates the fastest inference time, suggesting that its self-attention mechanism benefits from parallelization, despite its larger number of parameters;

- Resource footprint: LSTM and GRU models use comparable GPU memory, while Transformer memory usage is slightly lower in these experiments, reflecting implementation specifics and different usage patterns;

- Model complexity: The Transformer has substantially more parameters than the recurrent models, which partly explains its improved accuracy but also increases storage requirements.

Taken together, the Transformer architecture provides superior accuracy and efficient inference at the cost of greater model complexity. In contrast, LSTM and GRU networks are easier to train and implement, making them strong candidates for scenarios where resources are constrained or where modest accuracy tradeoffs are acceptable.

3.2. Model Performance in Dry Weather

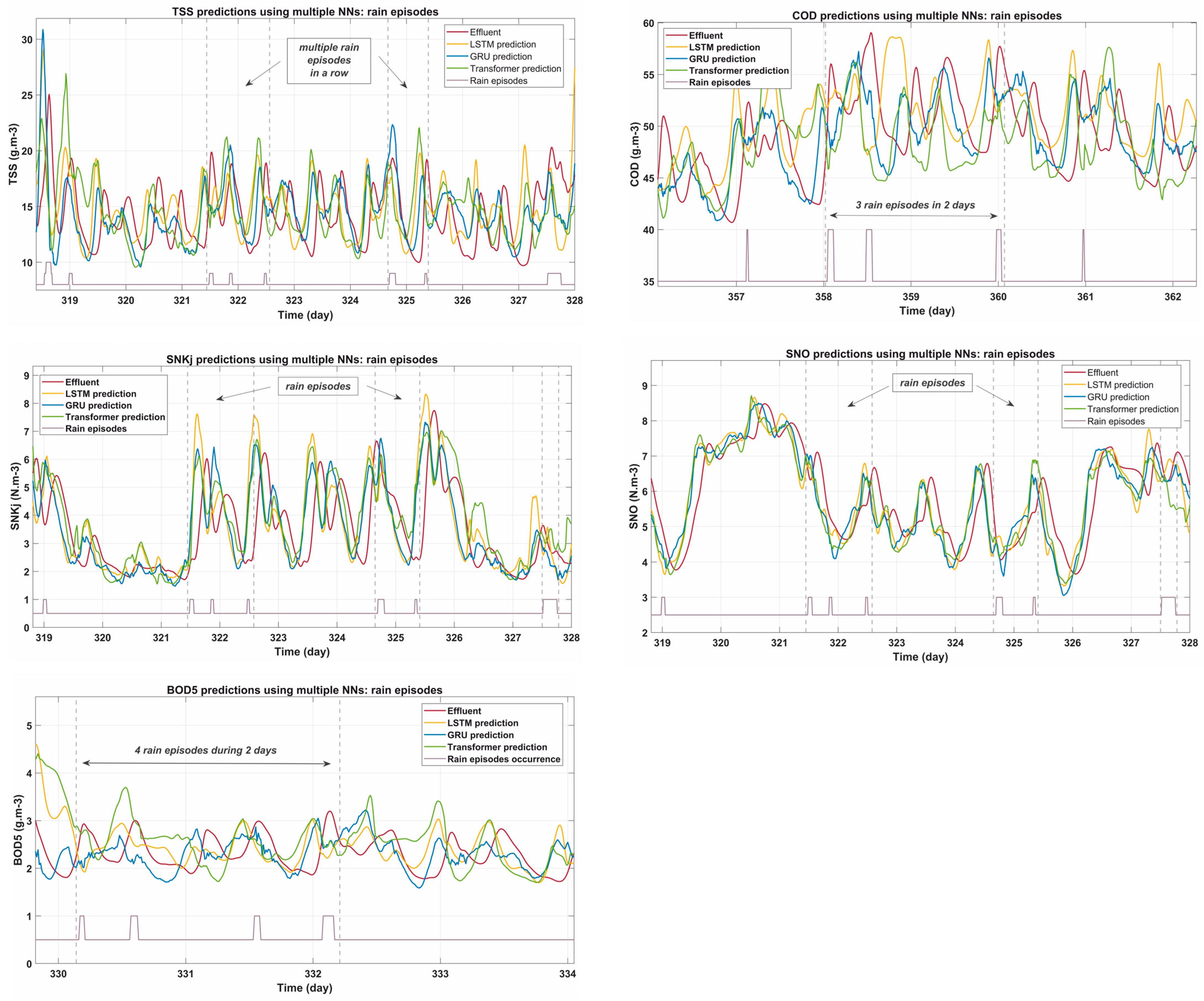

3.3. Model Performance During Rainfall Episodes

- Initial rainfall impact: All models show increased prediction errors at the onset of rain events, which highlights the difficulty of adapting in real time to sudden flow and pollutant peaks.

- Post-rain stabilization: GRU demonstrates the best ability to maintain stability and accuracy during the stabilization phase, while Transformer shows a slight advantage in capturing complex rebound effects.

- Overall adaptability: For all parameters, GRU consistently balances predictive responsiveness and stability, making it a strong candidate for real-time monitoring during rain episodes.

3.4. Model Performance During Storm Events

3.5. Comparison Across Scenarios

3.6. Scenario-Based Classification Using RF

3.6.1. Performance Metrics of RF

3.6.2. RF-Based Model Classification Results

4. Discussions

4.1. Key Observations

4.2. Practical Implications and Limitations

4.3. Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| ANN | Artificial neural network |
| BOD5 | Five-day biochemical oxygen demand |
| BSM2 | Benchmark simulation model no. 2 |
| CL | Closed loop |
| COD | Chemical oxygen demand |
| DTW | Dynamic time warping |
| EQI | Effluent quality index |
| FN | False negatives |
| FP | False positives |
| GRU | Gated Recurrent Unit |
| LSTM | Long Short-Term Memory |
| MAE | Mean absolute error |
| MAPE | Mean absolute percentage error |
| MATLAB | Matrix laboratory |
| ML | Machine learning |
| MPC | Model predictive controller |
| MSE | Mean squared error |
| NN | Neural network |
| OL | Open loop |
| R2 | Coefficient of determination |
| RF | Random Forest |
| SNKj | Kjeldahl nitrogen |
| SNO | Nitrate nitrogen |
| TN | True negatives |
| TP | True positives |
| TSS | Total suspended solids |
| WWTP | Wastewater treatment plant |

Appendix A

| Condition | Model | MSE | R2 | Composite Score |
|---|---|---|---|---|
| Dry (0) | LSTM | 2.43959 | 0.97068 | 0.20016 |
| Dry (0) | GRU | 2.24334 | 0.97341 | 0.18369 |
| Dry (0) | Transformer | 1.96981 | 0.97985 | 0.15809 |
| Rain (1) | LSTM | 3.11630 | 0.96360 | 0.28264 |
| Rain (1) | GRU | 3.14540 | 0.96599 | 0.28254 |
| Rain (1) | Transformer | 3.20165 | 0.96493 | 0.28805 |
| Storm (2) | LSTM | 6.21162 | 0.87506 | 0.26266 |
| Storm (2) | GRU | 5.81337 | 0.88798 | 0.24091 |
| Storm (2) | Transformer | 6.01183 | 0.86973 | 0.26356 |
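The per-scenario model choice implied by Appendix A can be reproduced by taking, for each condition, the model with the lowest composite score. The sketch below hard-codes the composite scores from the table. Reading a lower composite score as better is an assumption, although it is consistent with the selections reported elsewhere in the article; the names `APPENDIX_A` and `select_best_model` are illustrative and do not come from the original study.

```python
# Composite scores copied from Appendix A: condition -> {model: score}.
APPENDIX_A = {
    "Dry (0)": {"LSTM": 0.20016, "GRU": 0.18369, "Transformer": 0.15809},
    "Rain (1)": {"LSTM": 0.28264, "GRU": 0.28254, "Transformer": 0.28805},
    "Storm (2)": {"LSTM": 0.26266, "GRU": 0.24091, "Transformer": 0.26356},
}


def select_best_model(scores):
    """Return the model with the lowest composite score.

    Assumption: a lower composite score means a better model.
    """
    return min(scores, key=scores.get)


# Apply the selection rule to every weather condition.
best_per_condition = {
    condition: select_best_model(scores)
    for condition, scores in APPENDIX_A.items()
}

for condition, model in best_per_condition.items():
    print(f"{condition}: {model}")
```

Under this reading, GRU edges out LSTM in the rain scenario by only 0.0001 (0.28254 versus 0.28264), so that particular choice is sensitive to small changes in the scores.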

| Evaluation Metrics | Equation | Details |
|---|---|---|
| MAE | $\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert$ | MAE measures the mean absolute deviation between the predicted values $\hat{y}_i$ and the actual values $y_i$ over $n$ samples. Unlike MSE, MAE weights all errors equally, which makes it robust against large outliers. It is particularly useful when the goal is to minimize the mean deviation rather than to penalize extreme errors. |
| MSE | $\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n} (y_i - \hat{y}_i)^2$ | MSE calculates the mean squared deviation between the predictions and the actual values. It penalizes large errors more than small ones, making it effective for identifying models that minimize substantial deviations. However, its sensitivity to outliers had to be considered when evaluating the results. |
| MAPE | $\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n} \left\lvert \frac{y_i - \hat{y}_i}{y_i} \right\rvert$ | MAPE expresses the prediction error as a percentage of the true value. It provides a normalized measure of error, which is particularly useful when comparing models across datasets with different scales, in this case the different value ranges of the EQI parameters. |
| R2 | $R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}$ | The R2 score measures how well the model explains the variation in the actual data, where $\bar{y}$ is the mean of the actual values. A value of 1 indicates perfect predictions, and 0 means that the model is no better than using the mean. Therefore, values should be as close to 1 as possible. |
| DTW | | DTW measures the similarity between time series sequences by aligning them nonlinearly in the time dimension. It was chosen because it is suitable for sequences with similar trends but different time scales, as is the case under the sliding window protocol used here. |
| Confusion Matrix | | The confusion matrix was used in the classification to measure how well the RF model distinguished the weather conditions. In the formula, True Positives (TP) and True Negatives (TN) represent correct classifications, while False Positives (FP) and False Negatives (FN) indicate misclassifications. |
| Normalized Scoring System | | This system was used to rank model performance. It normalizes the MSE and R2 values against their maximum values for all models and ensures a balanced assessment in which both absolute error and explanation of variation are taken into account. |
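The regression metrics described in the table above can be sketched in plain Python. The definitions below are the standard textbook forms and are assumed, not confirmed, to match those used in the study. The `dtw_distance` routine is the basic unconstrained dynamic-programming formulation with the absolute difference as local cost, which may differ from the DTW implementation the authors used. All function names and the sample values are illustrative.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred):
    """Mean absolute percentage error in percent (true values must be nonzero)."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def dtw_distance(a, b):
    """Unconstrained DTW distance with absolute difference as local cost."""
    n, m = len(a), len(b)
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j].
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


# Made-up sample values, used only to exercise the functions.
y_true = [2.0, 4.0, 6.0, 8.0]
y_pred = [2.5, 3.5, 6.0, 9.0]
print(mae(y_true, y_pred), mse(y_true, y_pred), mape(y_true, y_pred), r2(y_true, y_pred))
print(dtw_distance([1.0, 2.0, 3.0], [1.0, 2.0, 2.0, 3.0]))
```

The last call illustrates why DTW suits sequences with similar trends but different timing: the second series repeats one value, yet the nonlinear alignment absorbs the repetition and the distance is zero.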

| Model | MAE | MSE | MAPE | R2 | Best K-Fold |
|---|---|---|---|---|---|
| LSTM | 0.41 | 0.54 | 3.57% | 0.95 | 2 |
| GRU | 0.46 | 0.74 | 3.99% | 0.94 | 5 |
| Transformer | 0.006 | 0.00 | 2.34% | 0.98 | 5 |

| Model | Training Time (s) | Inference Time (s) | GPU Memory Usage (MB) | Total Parameters |
|---|---|---|---|---|
| LSTM | 226.97 | 0.95 | 68.82 | 25,237 |
| GRU | 293.47 | 1.13 | 70.57 | 19,189 |
| Transformer | 293.29 | 0.78 | 65.53 | 92,934 |

| EQI Parameter | Rank | NN | MAE | MSE | DTW |
|---|---|---|---|---|---|
| TSS (mg/L) | 1 | GRU | 2.47 | 11.97 | 16,555.11 |
| TSS (mg/L) | 2 | Transformer | 2.89 | 15.37 | 11,207.21 |
| TSS (mg/L) | 3 | LSTM | 3.09 | 16.12 | 19,952.33 |
| COD (mg/L) | 1 | GRU | 3.33 | 22.10 | 23,303.08 |
| COD (mg/L) | 2 | Transformer | 3.83 | 27.72 | 15,360.53 |
| COD (mg/L) | 3 | LSTM | 4.14 | 29.38 | 28,558.55 |
| SNKj (mg/L) | 1 | Transformer | 1.02 | 2.10 | 2667.36 |
| SNKj (mg/L) | 2 | GRU | 1.09 | 2.33 | 3728.43 |
| SNKj (mg/L) | 3 | LSTM | 1.14 | 2.61 | 4234.05 |
| SNO (mg/L) | 1 | Transformer | 0.60 | 0.63 | 2124.51 |
| SNO (mg/L) | 2 | GRU | 0.60 | 0.61 | 2597.26 |
| SNO (mg/L) | 3 | LSTM | 0.67 | 0.77 | 3338.26 |
| BOD5 (mg/L) | 1 | GRU | 0.43 | 1.26 | 3972.47 |
| BOD5 (mg/L) | 2 | Transformer | 0.48 | 1.62 | 2734.55 |
| BOD5 (mg/L) | 3 | LSTM | 0.51 | 1.45 | 4442.02 |

| Scenario | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Dry (0) | 0.98 | 1.00 | 0.99 | 6371 |
| Rain (1) | 0.88 | 0.67 | 0.76 | 379 |
| Storm (2) | 0.95 | 0.94 | 0.94 | 239 |
| Weighted Average | 0.97 | 0.98 | 0.97 | 6989 |
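As a consistency check on the classification report above, the per-class F1-scores and the support-weighted averages can be recomputed from the reported precision, recall, and support. This is a sketch that works from the rounded two-decimal values in the table, so small discrepancies against the unrounded results of the study are to be expected; the names `REPORT`, `f1_score`, and `weighted_average` are illustrative.

```python
# (precision, recall, reported F1, support) copied from the table above.
REPORT = {
    "Dry (0)": (0.98, 1.00, 0.99, 6371),
    "Rain (1)": (0.88, 0.67, 0.76, 379),
    "Storm (2)": (0.95, 0.94, 0.94, 239),
}


def f1_score(precision, recall):
    """F1 is the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def weighted_average(values, weights):
    """Average of values weighted by class support."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


supports = [row[3] for row in REPORT.values()]
weighted_precision = weighted_average([row[0] for row in REPORT.values()], supports)
weighted_recall = weighted_average([row[1] for row in REPORT.values()], supports)

for scenario, (precision, recall, reported_f1, _) in REPORT.items():
    print(f"{scenario}: recomputed F1 = {f1_score(precision, recall):.2f}, reported = {reported_f1:.2f}")
print(f"weighted precision = {weighted_precision:.2f}, weighted recall = {weighted_recall:.2f}")
```

The rain class accounts for roughly 5% of the 6989 samples, so its lower recall (0.67) has little effect on the weighted averages even though it is the weakest class.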

| Scenario | Selected ANN | MSE | R2 | Notes |
|---|---|---|---|---|
| Dry (0) | Transformer | 1.96 | 0.97 | Best for stable flow and diurnal trends. |
| Rain (1) | GRU | 3.14 | 0.96 | Adaptable to moderate and persistent fluctuations. |
| Storm (2) | GRU | 5.81 | 0.88 | Handles extreme spikes with better stability. |

| Weather Condition | Recommended Model | Reasoning |
|---|---|---|
| Dry weather | Transformer | Captures stable flow variations and diurnal patterns with high accuracy. |
| Rain events | GRU | Adapts well to persistent fluctuations while maintaining stable predictions. |
| Storms | GRU | Robust in handling extreme variations and effectively recovers after abrupt spikes. |
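One way to put the recommendations above into practice is a small dispatcher that takes the scenario label produced by a classifier and forwards the input window to the recommended predictor. The sketch below is hypothetical: the scenario codes (0 = dry, 1 = rain, 2 = storm) follow the labels used in the tables, while `route_prediction`, the `predictors` mapping, and the placeholder lambdas are illustrative stand-ins for trained networks and are not part of the original study.

```python
# Recommended model per scenario code: 0 = dry, 1 = rain, 2 = storm.
RECOMMENDED_MODEL = {0: "Transformer", 1: "GRU", 2: "GRU"}


def route_prediction(scenario_code, window, predictors):
    """Run the predictor recommended for the classified scenario.

    `predictors` maps a model name to a callable that takes an input window
    and returns a prediction (hypothetical interface).
    """
    model_name = RECOMMENDED_MODEL[scenario_code]
    return model_name, predictors[model_name](window)


# Placeholder predictors; real ones would be the trained networks.
predictors = {
    "Transformer": lambda window: sum(window) / len(window),
    "GRU": lambda window: window[-1],
}

name, value = route_prediction(2, [1.0, 2.0, 4.0], predictors)
print(name, value)
```

Keeping the mapping in a single dictionary makes it easy to revise the recommendation for one scenario without touching the prediction code.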

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Voipan, D.; Voipan, A.E.; Barbu, M. Evaluating Machine Learning-Based Soft Sensors for Effluent Quality Prediction in Wastewater Treatment Under Variable Weather Conditions. Sensors 2025, 25, 1692. https://doi.org/10.3390/s25061692
