Domain-Adaptive Transformer Partial Discharge Recognition Method Combining AlexNet-KAN with DANN

Abstract

1. Introduction

2. Overall Model Construction

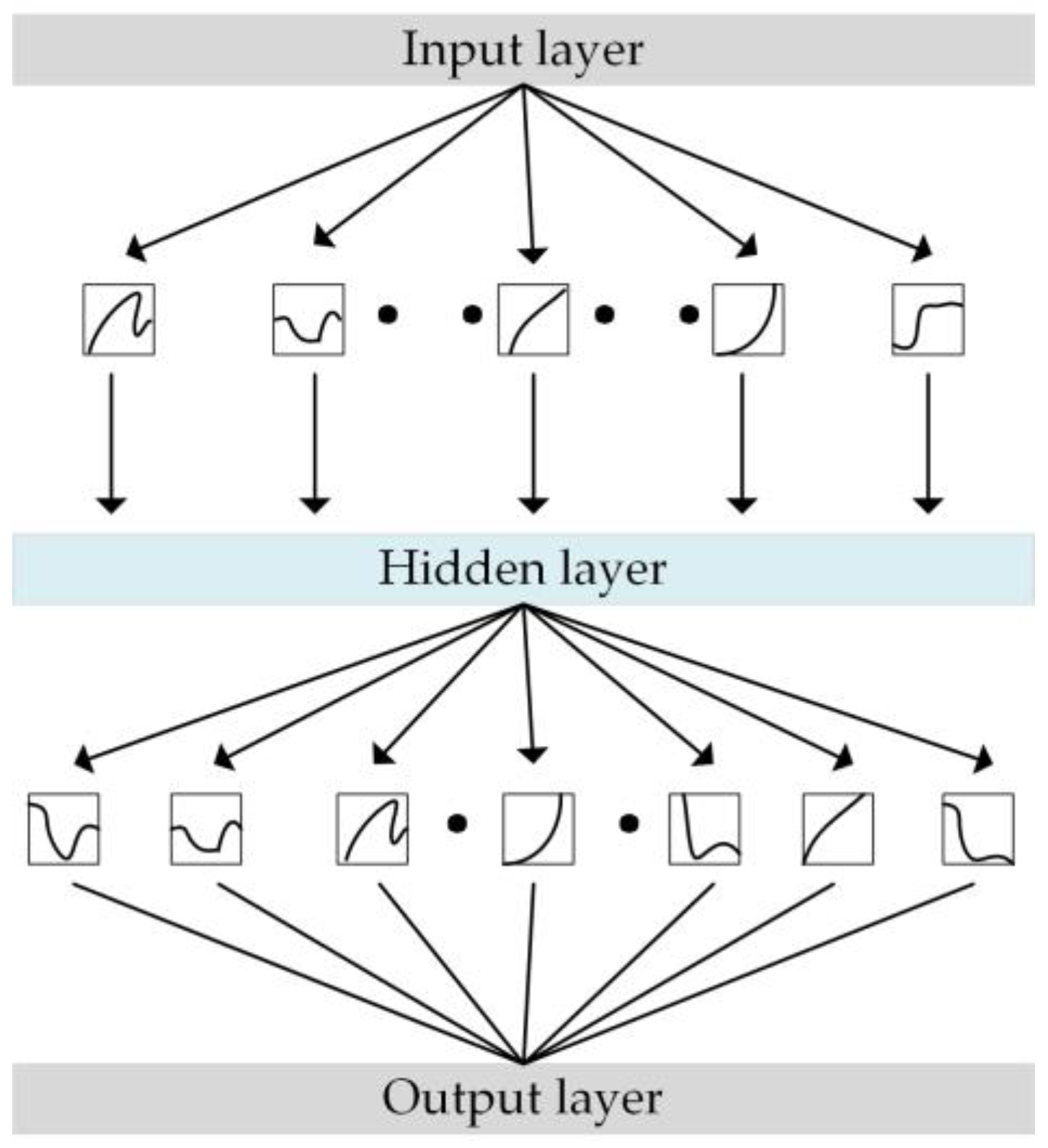

2.1. Improvement Based on KAN

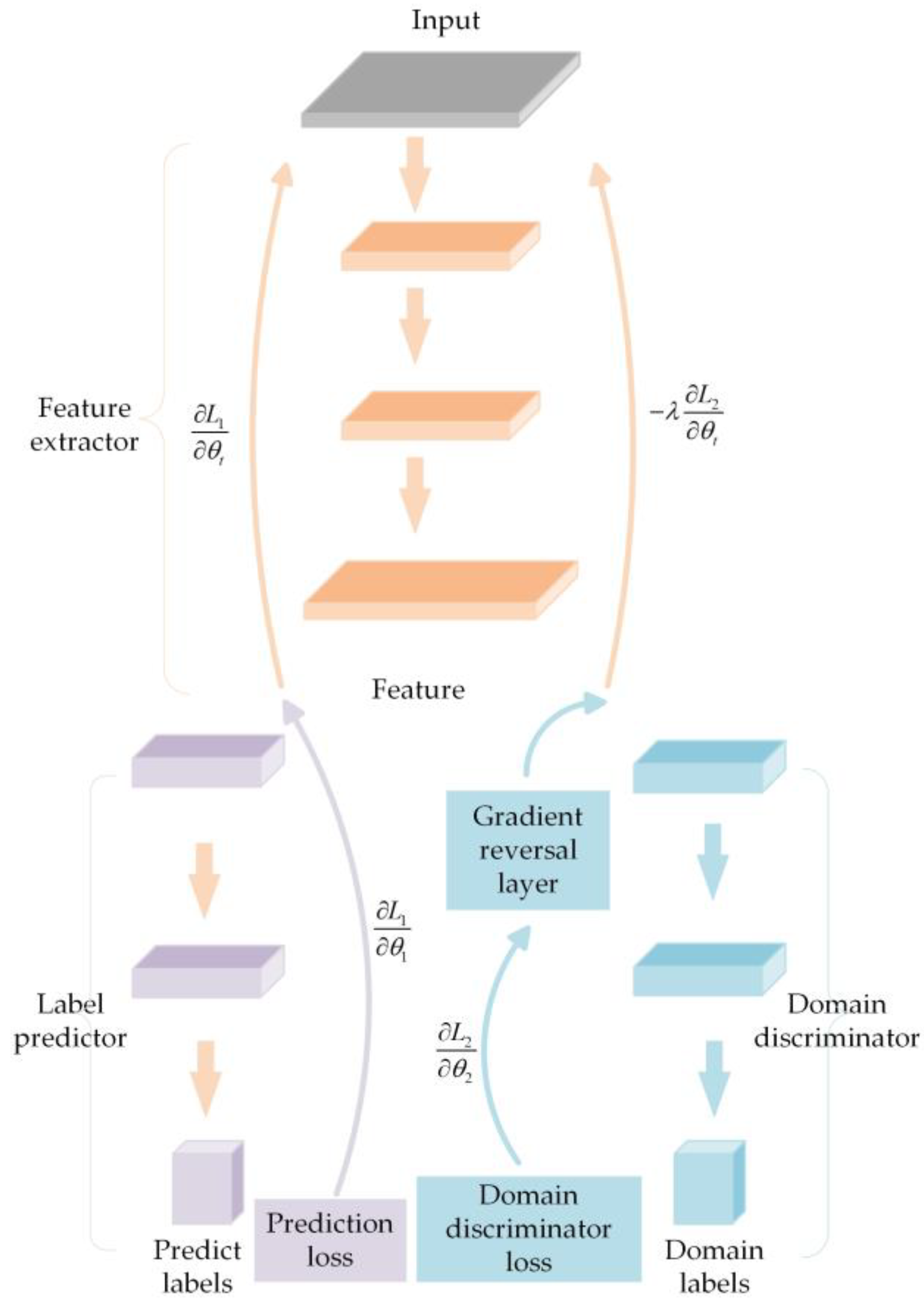

2.2. Integration with DANN

2.3. Domain-Adaptive Transformer Partial Discharge Recognition Method Combining AlexNet-KAN with DANN

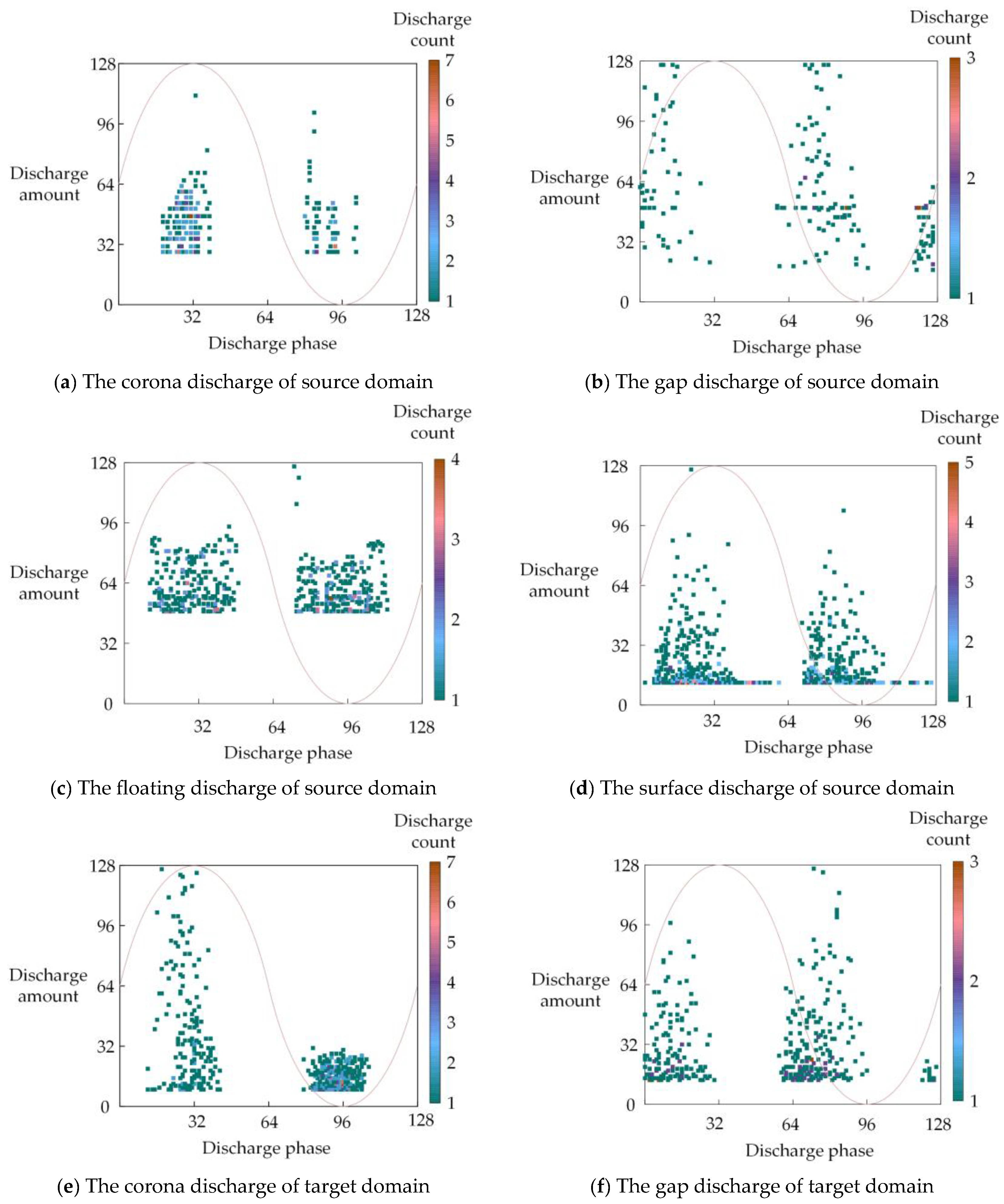

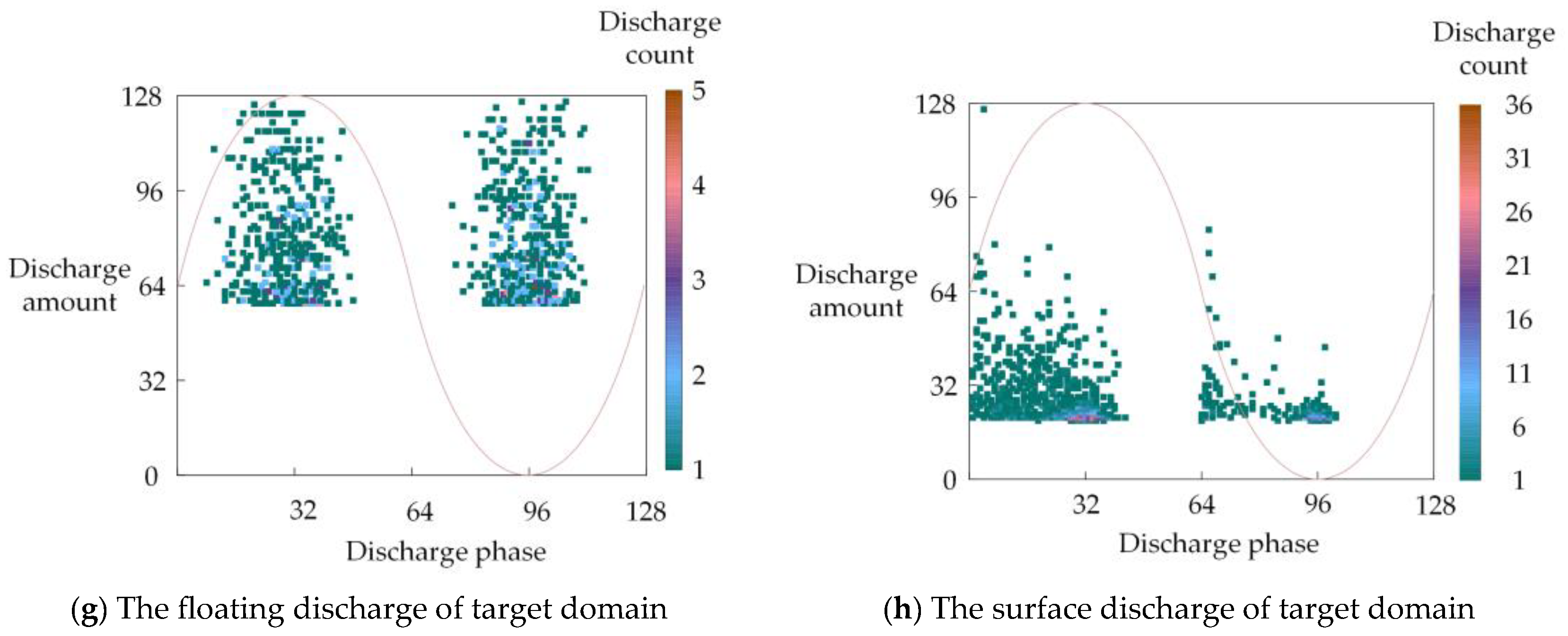

- Data Collection: Transformer partial discharge data are collected, and phase-resolved partial discharge (PRPD) spectra are generated for both the source domain and the target domain. The spectra are then compressed into two-dimensional PRPD feature fingerprint images. The data are divided into a source domain training set, a source domain test set, a target domain training set, and a target domain test set;

- Domain Labeling: The domain labels for the source domain training set and the target domain training set are one-hot encoded, with the domain label of the source domain training set set to 0 and that of the target domain training set set to 1. These two datasets are then merged to form a combined training dataset;

- Feature Extraction: The combined training dataset is input into the proposed transformer partial discharge domain-adaptive recognition model, which combines AlexNet-KAN with DANN, to perform feature extraction. The extracted features are then fed into the model’s label predictor and domain discriminator;

- Partial Discharge Recognition and Domain Classification: The KAN label predictor performs partial discharge recognition on the transformer data, while the KAN domain discriminator determines whether the input data originates from the source domain or the target domain;

- Iterative Optimization: The model is optimized iteratively until the specified iteration limit is reached. During this process, the KAN label predictor’s parameters are optimized to minimize partial discharge recognition errors, and the KAN domain discriminator’s parameters are optimized to minimize domain classification errors. The feature extractor’s parameters are optimized to minimize the KAN label predictor’s loss (ensuring feature discriminability) while maximizing the KAN domain discriminator’s loss (ensuring domain-invariant features); the gradient reversal layer implements this maximization by reversing the sign of the discriminator’s gradient before it reaches the feature extractor;

- Fine-tuning with Labeled Data: If a small amount of labeled transformer partial discharge data is available in the target domain, these data are used to fine-tune the model, improving the mapping between the source and target domains and further enhancing classification accuracy;

- Testing and Evaluation: The source domain test set and the target domain test set are input into the model for testing, and the results are analyzed.
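The domain labeling and adversarial optimization steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`label_and_merge`, `grl_lambda`, `grl_backward`, `feature_extractor_grad`) are hypothetical, the sigmoid-shaped schedule for the reversal coefficient is borrowed from the original DANN paper by Ganin and Lempitsky rather than stated in this article, and gradients are represented as plain lists of floats.

```python
import math
import random


def label_and_merge(source_train, target_train, seed=0):
    """Attach a domain label (0 = source, 1 = target) and merge both sets.

    source_train: list of (sample, class_label) pairs.
    target_train: list of samples; class labels are assumed unavailable.
    """
    merged = [(x, y, 0) for x, y in source_train]
    merged += [(x, None, 1) for x in target_train]
    random.Random(seed).shuffle(merged)
    return merged


def grl_lambda(progress, gamma=10.0):
    """Reversal strength for training progress in [0, 1].

    Assumption: schedule from Ganin and Lempitsky; it grows from 0 toward 1.
    """
    return 2.0 / (1.0 + math.exp(-gamma * progress)) - 1.0


def grl_backward(grad_from_discriminator, lam):
    """Gradient reversal layer, backward pass: flip the sign and scale by lam.

    The forward pass of the layer is the identity, so it is not shown.
    """
    return [-lam * g for g in grad_from_discriminator]


def feature_extractor_grad(grad_label, grad_domain, lam):
    """Gradient reaching the feature extractor.

    Descends the label predictor's loss and, through the reversed gradient,
    ascends the domain discriminator's loss.
    """
    reversed_grad = grl_backward(grad_domain, lam)
    return [gl + gd for gl, gd in zip(grad_label, reversed_grad)]
```

Because the reversal happens only in the backward pass, the domain discriminator itself is still trained to minimize its classification error, while the feature extractor receives the opposite signal.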

3. Experiments and Results Analysis

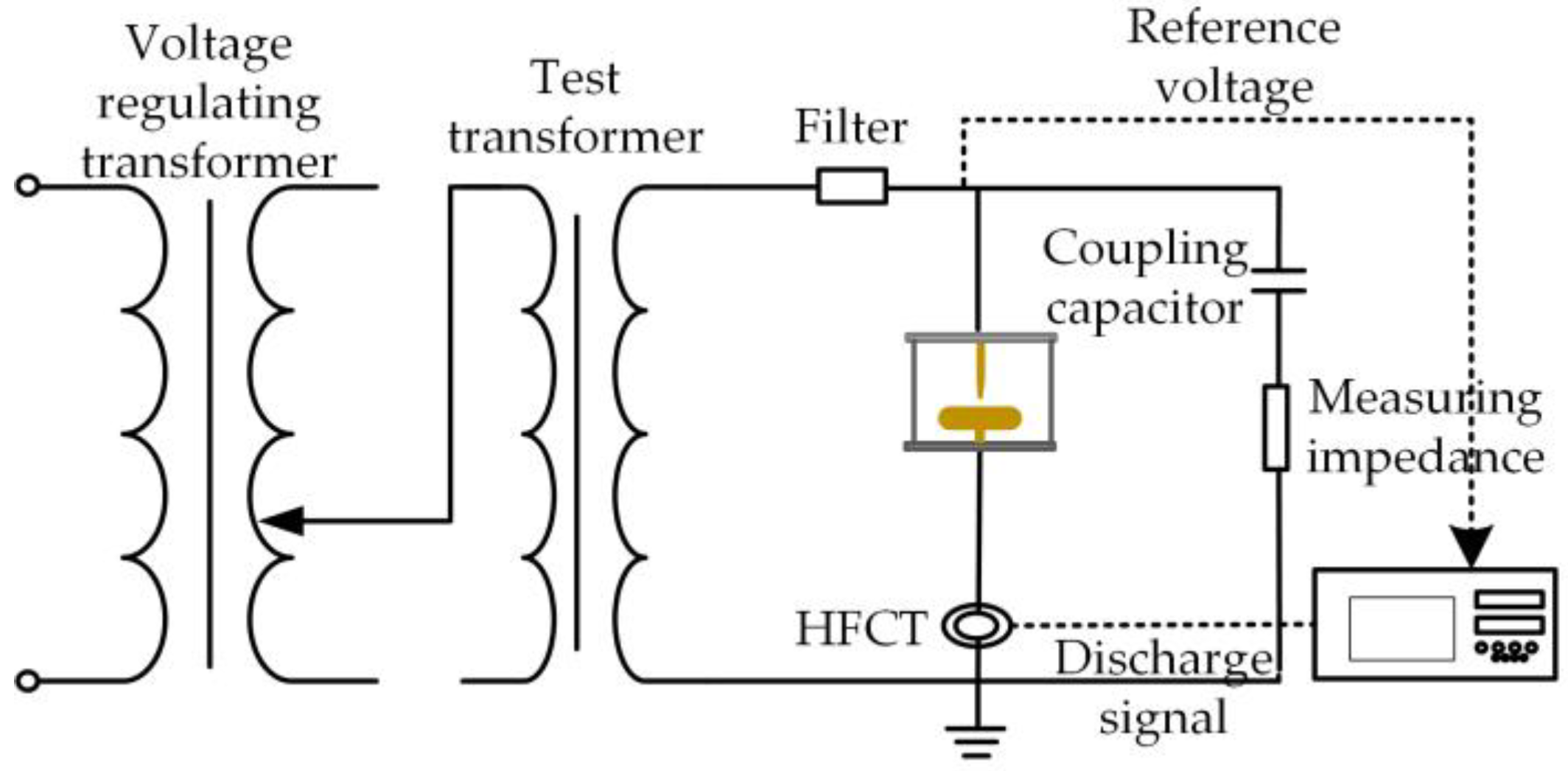

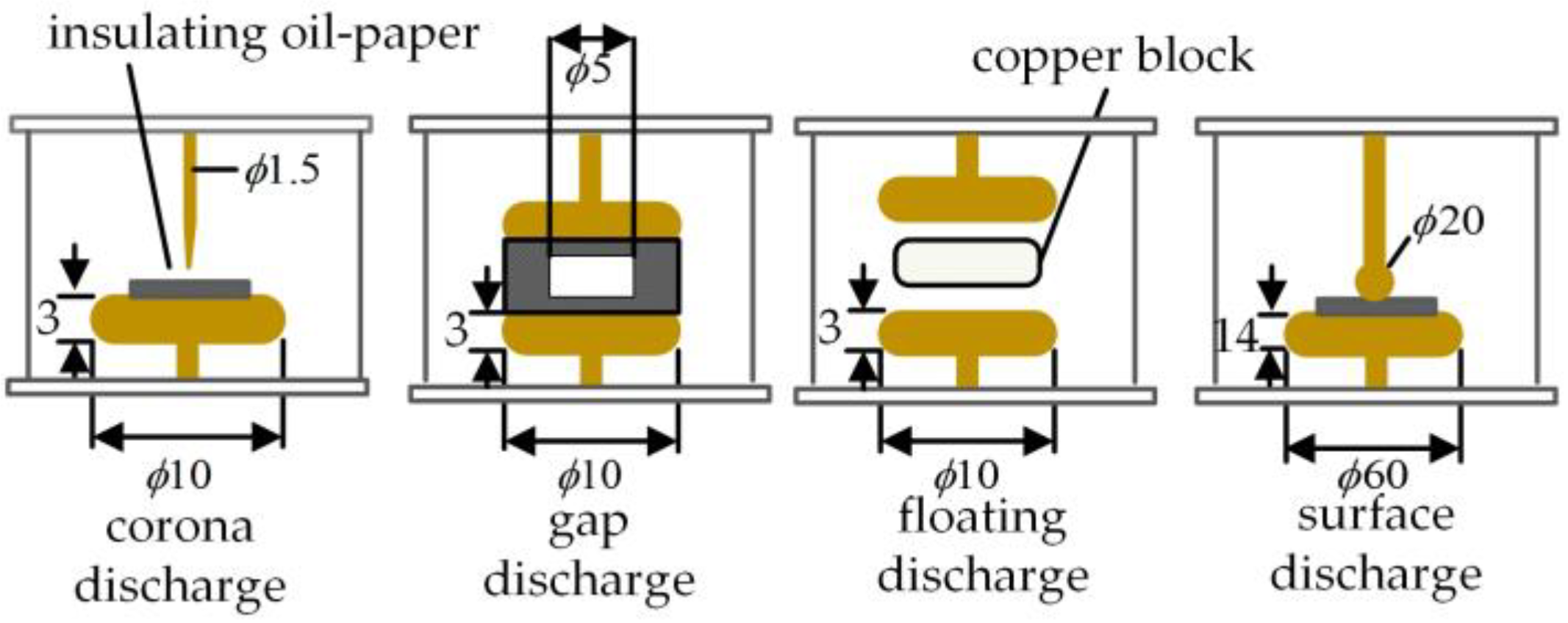

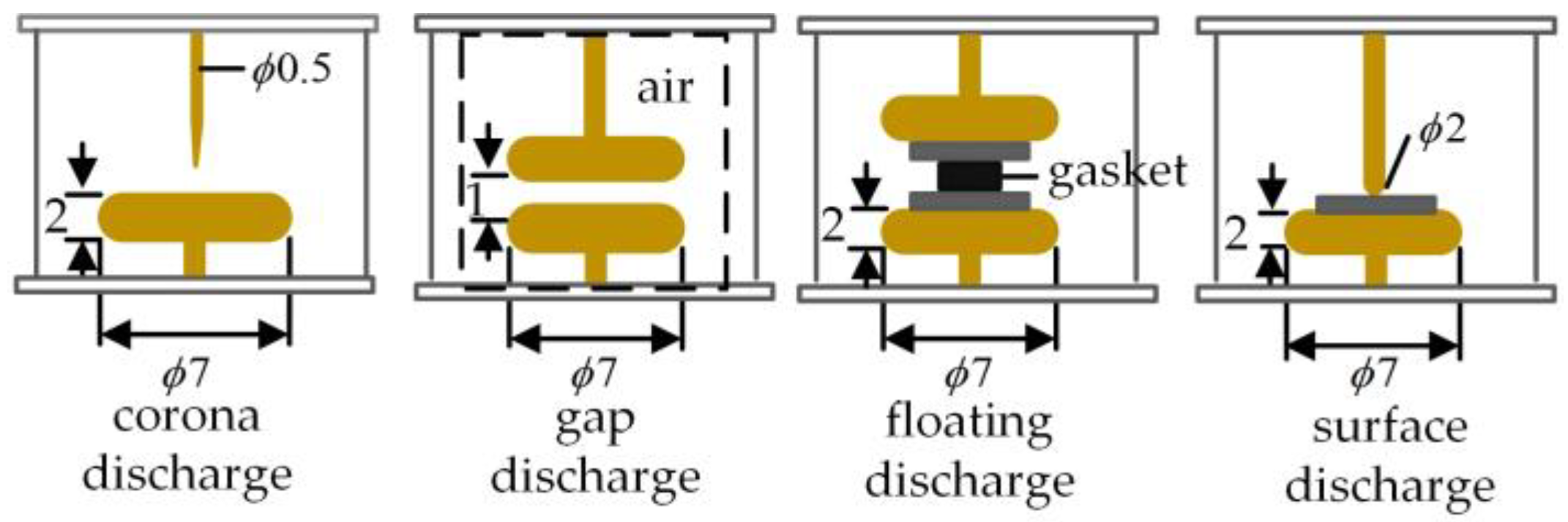

3.1. Collection of Experimental Data

3.2. Network Architecture and Parameter Settings

- Training the AlexNet model directly with source domain data;

- Training the proposed AlexNet-KAN model directly with source domain data;

- Training the proposed model, which combines AlexNet-KAN with a DANN, on mixed source and target domain data without fine-tuning;

- Training the proposed model, which combines AlexNet-KAN with a DANN, on mixed source and target domain data and then fine-tuning the model.

4. Conclusions

- Using a KAN to improve AlexNet can enhance the expressive capability of the transformer partial discharge recognition model, thereby increasing the accuracy of the model in identifying transformer partial discharge data;

- To improve recognition accuracy on new, unlabeled transformer partial discharge data with distribution shifts, the domain adversarial mechanism is introduced into AlexNet-KAN, yielding a domain-adaptive transformer partial discharge recognition model that combines AlexNet-KAN with a DANN. The model uses the domain adversarial mechanism to extract features that are both discriminative and domain-invariant from the source and target domains, thereby better aligning their data distributions. It addresses the low recognition accuracy of the original partial discharge recognition model under distribution shifts and with unlabeled or sparsely labeled new data, effectively integrating deep learning and DANNs in the field of transformer partial discharge recognition;

- To further validate the effectiveness and scalability of the method, a comparison is made between the transformer partial discharge recognition model that combines AlexNet-KAN with a DANN (the proposed method) and classical models, as well as classical models improved by the proposed method. This comparison is conducted on data with no distribution shift and on data with distribution shift and missing labels. The results show that the proposed method outperforms classical methods in terms of partial discharge recognition rates and demonstrates a certain degree of scalability;

- After adding noise interference to the target domain, the proposed method maintains a high level of partial discharge recognition accuracy on the target domain, further validating the effectiveness of the method presented in this paper.


| Model | Layer Type | Number of Layers | Channels | Convolution Kernel (Stride) |
|---|---|---|---|---|
| AlexNet | Convolutional Layer + Batch Normalization Layer + Max Pooling Layer | 5 | 64 | 3 × 3 (2) |
| AlexNet | Fully Connected Layer | 3 | 128, 64, 4 | - |
| AlexNet-KAN | Convolutional Layer + Batch Normalization Layer + Max Pooling Layer | 5 | 64 | 3 × 3 (2) |
| AlexNet-KAN | KAN Layer | 3 | 128, 10, 4 | - |
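To make concrete what a KAN layer computes when it replaces a fully connected layer in the architecture table above, the following is a minimal pure-Python sketch. It follows the formulation in the KAN paper by Liu et al., where every input-output edge carries its own learnable univariate function made of a SiLU base branch plus a B-spline branch. The grid size, spline degree, input range of [-1, 1), and all function names are assumptions for illustration; the article's table only gives the layer widths (128, 10, 4).

```python
import math


def silu(x):
    """SiLU activation used for the base branch of each edge."""
    return x / (1.0 + math.exp(-x))


def uniform_knots(grid_size, degree, lo=-1.0, hi=1.0):
    """Uniform knot vector on [lo, hi], extended by `degree` knots per side."""
    h = (hi - lo) / grid_size
    return [lo + (i - degree) * h for i in range(grid_size + 2 * degree + 1)]


def bspline_basis(x, knots, degree):
    """Values of all B-spline basis functions at x (Cox-de Boor recursion).

    Returns len(knots) - degree - 1 values; valid for lo <= x < hi.
    """
    basis = [1.0 if knots[i] <= x < knots[i + 1] else 0.0
             for i in range(len(knots) - 1)]
    for d in range(1, degree + 1):
        nxt = []
        for i in range(len(knots) - d - 1):
            left_den = knots[i + d] - knots[i]
            right_den = knots[i + d + 1] - knots[i + 1]
            left = (x - knots[i]) / left_den * basis[i] if left_den > 0 else 0.0
            right = ((knots[i + d + 1] - x) / right_den * basis[i + 1]
                     if right_den > 0 else 0.0)
            nxt.append(left + right)
        basis = nxt
    return basis


def kan_layer(inputs, coeffs, base_w, spline_w, knots, degree):
    """One KAN layer: output_j = sum_i phi_ji(x_i).

    phi_ji(x) = base_w[j][i] * silu(x) + spline_w[j][i] * sum_k coeffs[j][i][k] * B_k(x)
    """
    outputs = []
    for j in range(len(coeffs)):
        total = 0.0
        for i, x in enumerate(inputs):
            basis = bspline_basis(x, knots, degree)
            spline = sum(c * b for c, b in zip(coeffs[j][i], basis))
            total += base_w[j][i] * silu(x) + spline_w[j][i] * spline
        outputs.append(total)
    return outputs
```

Unlike a fully connected layer, which applies one fixed activation after a weighted sum, each edge here learns its own function through the spline coefficients, which is where the added expressive capacity comes from.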

| No. | Source Domain Recognition Accuracy | Target Domain Recognition Accuracy |
|---|---|---|
| 1 | 95.32% | 42.46% |
| 2 | 98.75% | 45.73% |
| 3 | 98.14% | 78.48% |
| 4 | 97.62% | 86.85% |

| Model Name | Source Domain Recognition Accuracy | Target Domain Recognition Accuracy |
|---|---|---|
| LeNet | 92.96% | 35.82% |
| The combination of LeNet-KAN and DANN | 94.84% | 66.28% |
| ResNet | 94.95% | 38.71% |
| The combination of ResNet-KAN and DANN | 96.28% | 79.26% |
| AlexNet | 95.32% | 42.46% |
| The combination of AlexNet-KAN and DANN | 97.62% | 86.85% |

| SNR/dB | Target Domain Recognition Accuracy | |
|---|---|---|
| 20 | 40.57% | 84.76% |
| 18 | 36.12% | 82.35% |
| 16 | 27.46% | 76.64% |
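The SNR levels in the table above correspond to a fixed ratio between signal power and noise power. The sketch below shows one common way to corrupt a signal at a prescribed SNR in dB. It assumes additive white Gaussian noise applied to a one-dimensional signal; the article does not state the noise type or whether noise was added to the raw signals or to the PRPD images, and the function names are hypothetical.

```python
import math
import random


def mean_power(samples):
    """Average power of a sequence of samples."""
    return sum(s * s for s in samples) / len(samples)


def add_noise_at_snr(signal, snr_db, seed=None):
    """Return signal plus zero-mean Gaussian noise at the requested SNR.

    Uses noise_power = signal_power / 10^(snr_db / 10).
    Assumption: white Gaussian noise; the source does not specify the noise model.
    """
    rng = random.Random(seed)
    noise_power = mean_power(signal) / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in signal]


def measured_snr_db(clean, noisy):
    """Empirical SNR in dB, computed from the clean and noisy signals."""
    noise = [n - c for c, n in zip(clean, noisy)]
    return 10.0 * math.log10(mean_power(clean) / mean_power(noise))
```

For example, `add_noise_at_snr(clean, 16)` injects noise with roughly 2.5 times the power of `add_noise_at_snr(clean, 20)`, since a 4 dB drop corresponds to a factor of 10^0.4.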

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Niu, J.; Zhu, Y. Domain-Adaptive Transformer Partial Discharge Recognition Method Combining AlexNet-KAN with DANN. Sensors 2025, 25, 1672. https://doi.org/10.3390/s25061672
