1. Introduction

The advancement of modern sensor technology has facilitated the proliferation of automated data acquisition systems across diverse fields, resulting in the generation of extensive temporal observations. These data, structured as time series, document the dynamic evolution of system states, establishing a fundamental basis for predictive analysis and decision support. Numerous time series forecasting methods have been proposed [1,2]. The superior interpretability of fuzzy time series forecasting models (FTSFMs) has led to their widespread application in various fields, including financial markets [3] and wind energy [4]. FTSFMs utilize fuzzy sets to model systematic uncertainties in data, specifically imprecision and vagueness. Ref. [5] introduced the FTSFM to address time series forecasting problems. The model first fuzzifies precise original data using fuzzy sets. Next, it employs max–min composition operations on the fuzzified data to build a fuzzy relation matrix that indicates the relationships between various time points. Using historical data and the fuzzy relation matrix, the model generates fuzzy forecast values, which are converted into precise ones. Ref. [6] improved the model of [5] by replacing the complex max–min operations with simpler arithmetic operations.
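The max–min step described above can be illustrated with a minimal sketch. It assumes that the relation matrix is the element-wise maximum of min-based relations between consecutive fuzzified observations, which is one common reading of the construction. The function names and the membership values are hypothetical and chosen only for illustration.

```python
def relation_from_pairs(pairs):
    """Build a fuzzy relation matrix as the element-wise max of min-based relations."""
    n_rows, n_cols = len(pairs[0][0]), len(pairs[0][1])
    relation = [[0.0] * n_cols for _ in range(n_rows)]
    for previous, current in pairs:
        for i in range(n_rows):
            for j in range(n_cols):
                relation[i][j] = max(relation[i][j], min(previous[i], current[j]))
    return relation


def max_min_compose(memberships, relation):
    """Max-min composition: out[j] = max over i of min(memberships[i], relation[i][j])."""
    return [
        max(min(m, row[j]) for m, row in zip(memberships, relation))
        for j in range(len(relation[0]))
    ]


# Hypothetical fuzzified observations over three fuzzy sets at three time points.
fuzzified = [[1.0, 0.5, 0.0], [0.5, 1.0, 0.5], [0.0, 0.5, 1.0]]
relation = relation_from_pairs(list(zip(fuzzified, fuzzified[1:])))
# Fuzzy forecast for the next time point, still to be defuzzified.
fuzzy_forecast = max_min_compose(fuzzified[-1], relation)
```

The result is a vector of membership grades, so a defuzzification step is still needed to obtain a precise forecast. That step is omitted here.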

Traditional FTSFMs are designed for stationary time series and assume that the data are generated by a fixed, albeit unknown, process. In practical applications, however, the generation process often changes over time. These changes are reflected in the data produced, which are called non-stationary time series. The probabilistic properties of non-stationary time series change irregularly over time [7]. Developing effective fuzzy time series forecasting methods for such non-stationary data is a critical research challenge.

FTSFMs struggle to keep pace with the dynamic variations of non-stationary time series, which degrades forecast accuracy. To address this challenge, researchers have employed two primary approaches for reducing non-stationarity: differencing operations and decomposition methods [8,9]. Ref. [10] demonstrated that training with first-order differenced data improves prediction accuracy compared with raw time series data. Ref. [11] proposed an intuitionistic FTSFM using the percentage of first-order differenced data between consecutive time intervals. Additionally, empirical mode decomposition methods can transform raw data into relatively more stationary multivariate time series [12,13]. Both decomposition methods split the original time series into multiple subsequences known as intrinsic mode functions, which possess stronger stationarity than the original non-stationary time series. However, these subsequences may incorporate future information, leading to the so-called look-ahead bias [14]. The presence of look-ahead bias can severely distort experimental results. While these stationarity transformation approaches can partially mitigate non-stationarity, they cannot completely eliminate the dynamic nature inherent in time series, necessitating fundamental improvements to FTSFMs to better accommodate temporal variations.
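As a small illustration of why differencing does not suffer from look-ahead bias, the sketch below computes first-order differences and maps a predicted change back to the original scale. Each difference uses only the current and the previous observation. The function names and the sample values are hypothetical.

```python
def first_difference(series):
    """Return the changes between adjacent time points (one element shorter)."""
    return [current - previous for previous, current in zip(series, series[1:])]


def restore_forecast(last_observation, predicted_change):
    """Map a forecast made on the differenced scale back to the original scale."""
    return last_observation + predicted_change


raw = [10, 12, 11, 15]                      # hypothetical raw observations
changes = first_difference(raw)             # differenced (variation) series
next_value = restore_forecast(raw[-1], 2)   # a predicted change of +2
```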

Several improved FTSFMs have been developed to adapt to irregular changes in non-stationary time series and reduce data non-stationarity. Ref. [5] defined the time-variant FTSFM as an FTSFM whose fuzzy relation matrix changes over time. Ref. [15] further explained the detailed implementation steps for the time-variant FTSFM. For each prediction, a fuzzy relation matrix is built using historical data from a preceding period, enabling the fuzzy relationships to change over time. The length of the historical period is referred to as the window base. Many researchers have explored time-variant FTSFMs and combined them with the aforementioned time series stabilization methods. Ref. [16] utilized differenced time series for time-variant fuzzy time series forecasting. Ref. [17] advanced the work of [16] by improving outlier handling, data fuzzification, and window base determination. Ref. [18] proposed a novel time-variant FTSFM for differenced seasonal data with a systematic search algorithm for the window base. Ref. [19] presented a time-variant FTSFM incorporating a sliding window approach [20], where the fuzzy relation matrix changes as the window slides. A propositional linear temporal logic formula was proposed to analyze the data trend in the window, thereby supporting forecasting. These time-variant FTSFMs aim to construct predictive models that can adapt to the characteristics of the latest period of data. However, neglecting the valuable information in previously trained models may result in reduced prediction quality. Since new data evolve from historical data, fully utilizing previously trained models becomes crucial for improving prediction accuracy on incoming data.

FTSFMs quantify the imprecision and vagueness within time series using fuzzy set theory. It is challenging for fixed fuzzy sets to accommodate dynamic changes in non-stationary time series. Ref. [21] proposed the definition of the non-stationary fuzzy set for dynamically adjusting fuzzy sets. A non-stationary fuzzy set is created by integrating a basic fuzzy set with a perturbation function, where the parameters of the membership function change according to the values of the perturbation function at different time points. Ref. [22] applied non-stationary fuzzy sets to FTSFMs to predict non-stationary time series with trends and scale changes, with interpolation functions serving as perturbation functions. Ref. [23] introduced a non-stationary fuzzy time series (NSFTS) forecasting model capable of handling non-stationary and heteroskedastic time series. The NSFTS model utilizes a residual-based perturbation function to adaptively adjust the membership function parameters of the basic fuzzy set, reflecting changes in the non-stationary time series. The numerical forecast is calculated by combining the midpoints of the right-hand side (RHS) of each matched rule and the membership grades of the observations. Non-stationary fuzzy sets-based forecasting models perform well in short-term non-stationary time series forecasting. However, the unchanging fuzzy relationships limit their performance in long-term non-stationary time series forecasting.

Ref. [24] proposed a time-varying FTSFM that incorporates non-stationary fuzzy sets to improve the accuracy of wind power predictions. The model handles time series variability by dividing the series into segments, each with unique membership and partition functions. Adjustments to membership function parameters employ non-stationary fuzzy set methods to suit non-stationary time series. To reduce computational requirements, the model retrains on the latest data window only when the most recent prediction error surpasses a predefined threshold. While [24] offers computational efficiency, its strategy of maintaining only the fuzzy relationships from the latest data window may result in the loss of valuable temporal patterns embedded in historical relationships, potentially limiting the model’s ability to capture long-term temporal relationships.

Apart from adaptively adjusting fuzzy sets in the fuzzification stage, adaptive methods have been employed to enhance other aspects of FTSFMs, thereby better accommodating the dynamic nature of non-stationary time series. Ref. [25] proposed an adaptive method that automatically modifies the order of the FTSFM based on prediction accuracy for forecasting various data. Ref. [26] applied the adaptive expectation model [27,28] to optimize the forecast outcomes of a trend-weighted FTSFM following the initial forecasts from the FTSFM. The adaptive expectation model adjusts the forecast value using the difference between it and the observation at the previous time point. Ref. [29] utilized a modified adaptive expectation model with adaptive parameters to enhance forecasting performance. Changes in the adaptive expectation model parameters indicate stock fluctuation and oscillation.
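The adjustment performed by an adaptive expectation model can be sketched as follows. This is a minimal illustration that assumes the commonly used linear form, in which the adjusted forecast equals the previous observation plus a weighted difference between the initial forecast and that observation. The weight `h` and the function name are assumptions made for this sketch and are not taken from [26] or [29].

```python
def adaptive_expectation(previous_observation, initial_forecast, h):
    """Shrink the initial forecast towards the previous observation.

    h = 1 keeps the initial forecast; h = 0 falls back to the previous observation.
    """
    return previous_observation + h * (initial_forecast - previous_observation)


# Hypothetical values: last observed value 100, initial FTSFM forecast 110.
adjusted = adaptive_expectation(100.0, 110.0, h=0.5)
```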

Ref. [30] introduced the Bayesian network (BN) concept. A BN represents knowledge through a probabilistic graph, with nodes denoting random variables and directed edges indicating the dependence relationships between variables. The strength of dependence between two variables is represented by their conditional probability distributions (CPDs) within a BN. The initial task when constructing a BN is to establish the BN structure that depicts the dependence relationships among variables. These relationships serve to model the causal interactions within the system. The BN structure can be manually set based on domain knowledge. In certain situations, the dependence relationships among variables are unknown and need to be inferred from data. Due to the advantages of modeling dependence relationships and handling statistical uncertainty in complex systems, several studies have applied BNs to time series forecasting. Ref. [31] initially applied BN structure learning to determine the dependence relationships in the price-earnings ratio at various time points, representing these as a BN structure. The CPDs of the time points were determined through BN parameter learning. Given historical observations at previous time points, the BN conducts probabilistic inference to generate the predicted values. Ref. [32] leveraged domain knowledge to construct the BN structure after determining the set of variables. The prediction phase initially utilized a BN to forecast the stochastic vehicular speed, followed by error compensation performed by a backpropagation neural network. Ref. [33] utilized BNs to discover direct and indirect dependence relationships across various time points in time series. Potential temporal patterns were modeled by integrating the BN structure with fuzzy logical relationships (FLRs). The study developed fuzzy empirical probability-weighted fuzzy logical relationship groups (FLRGs) to model statistical and systematic uncertainties, fully accounting for both relationships. In the above BN-based time series forecasting models, once dependence relationships are set based on all training data, they remain unchanged. This restriction reduces the model’s flexibility, which is necessary for effective time series forecasting in many situations. Ref. [34] proposed a method to construct BNs at each time point using data from a preceding period. With this approach, changes in the causal relationships within the system can be observed intuitively. Experimental results from the U.S. and Chinese stock markets indicate that the BN structure remains stable in the short term but changes over the long term. This shows that a fixed BN alone is inadequate for capturing the changing characteristics of the time series. In other words, changes in the dependencies within the BN structure can reflect the diversity of causal relationships in time series. Therefore, to enhance the BN’s ability to model complex relationships in non-stationary time series, it is necessary to develop BN structure learning methods that dynamically change based on input data.

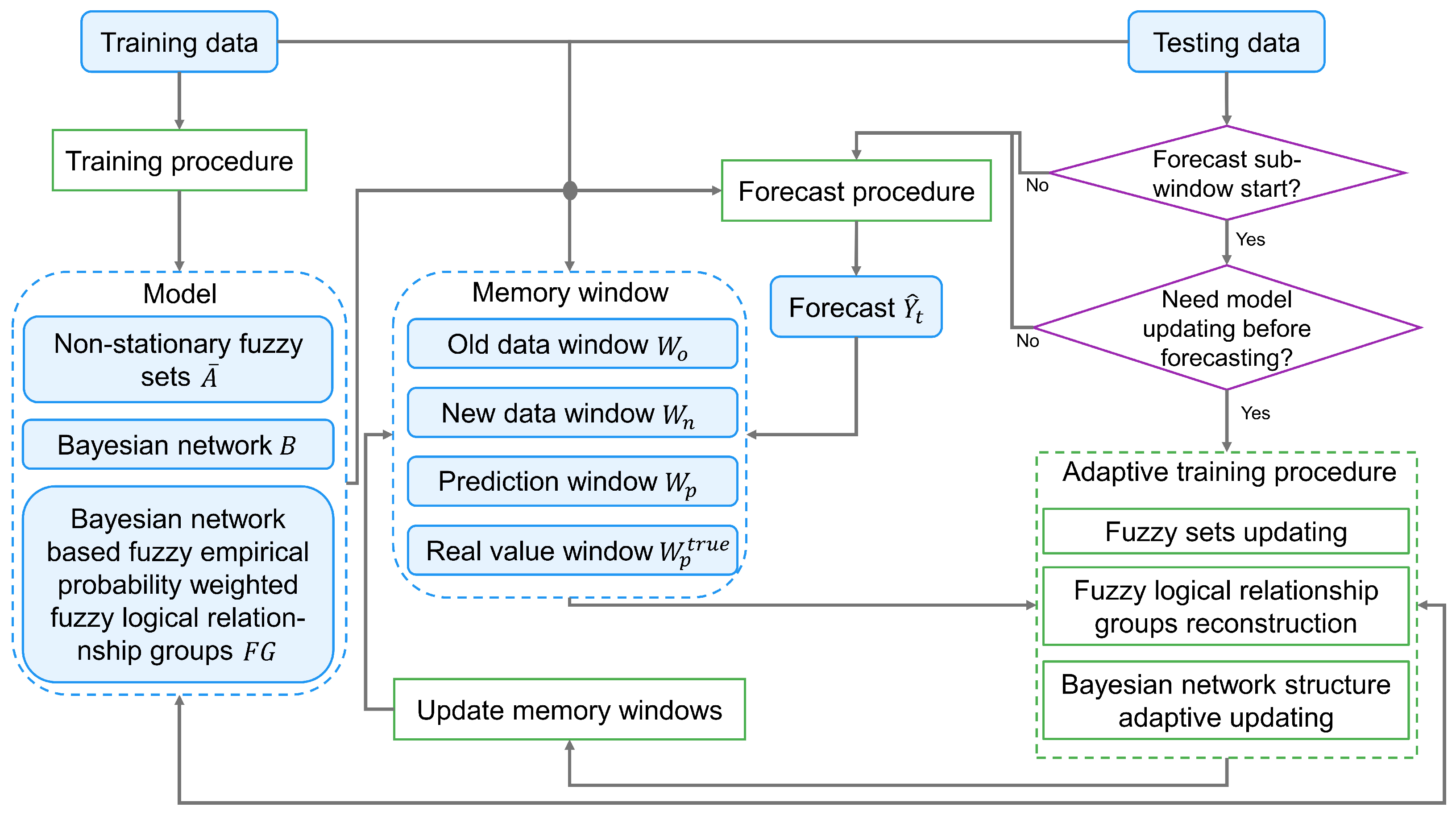

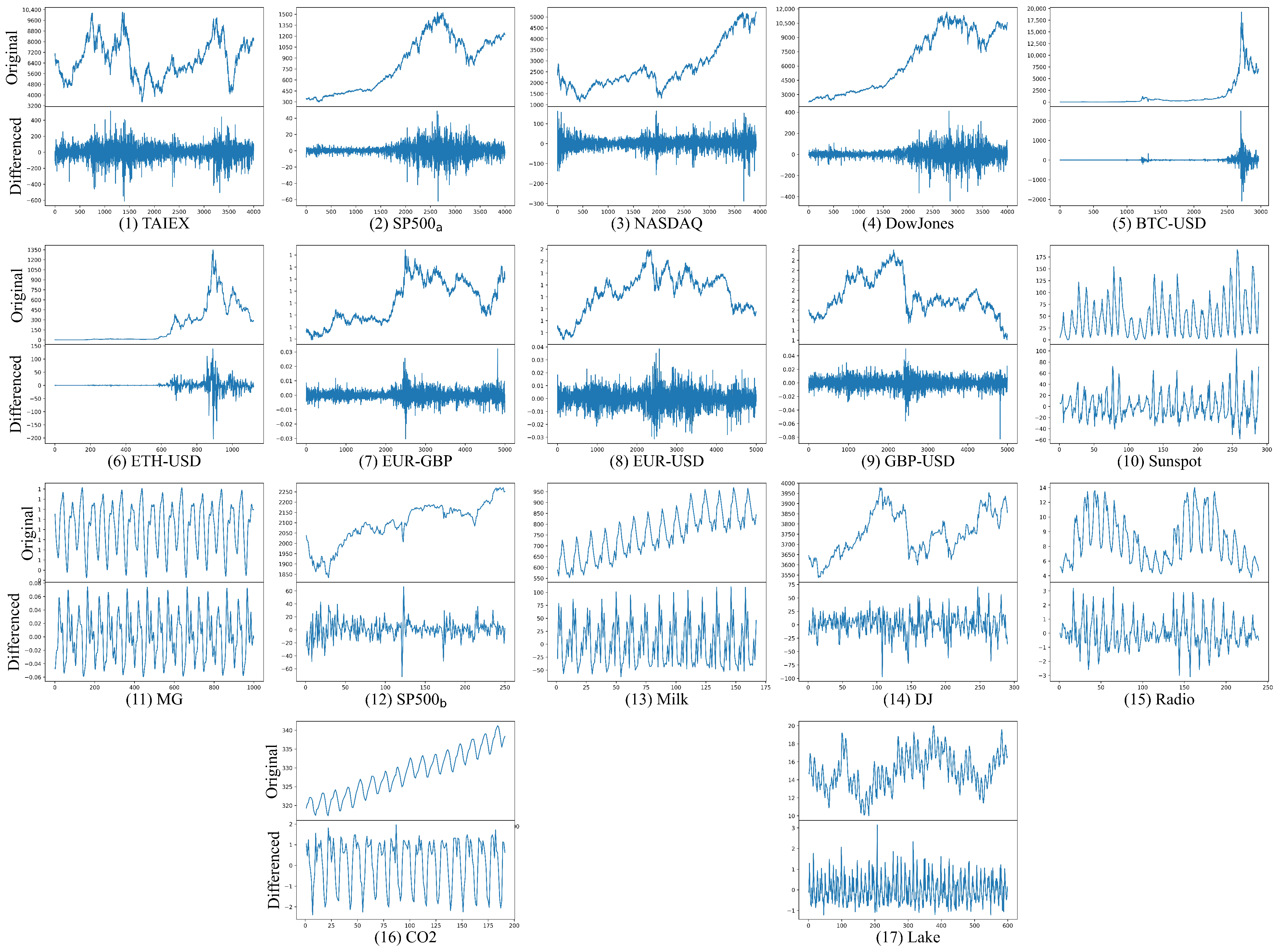

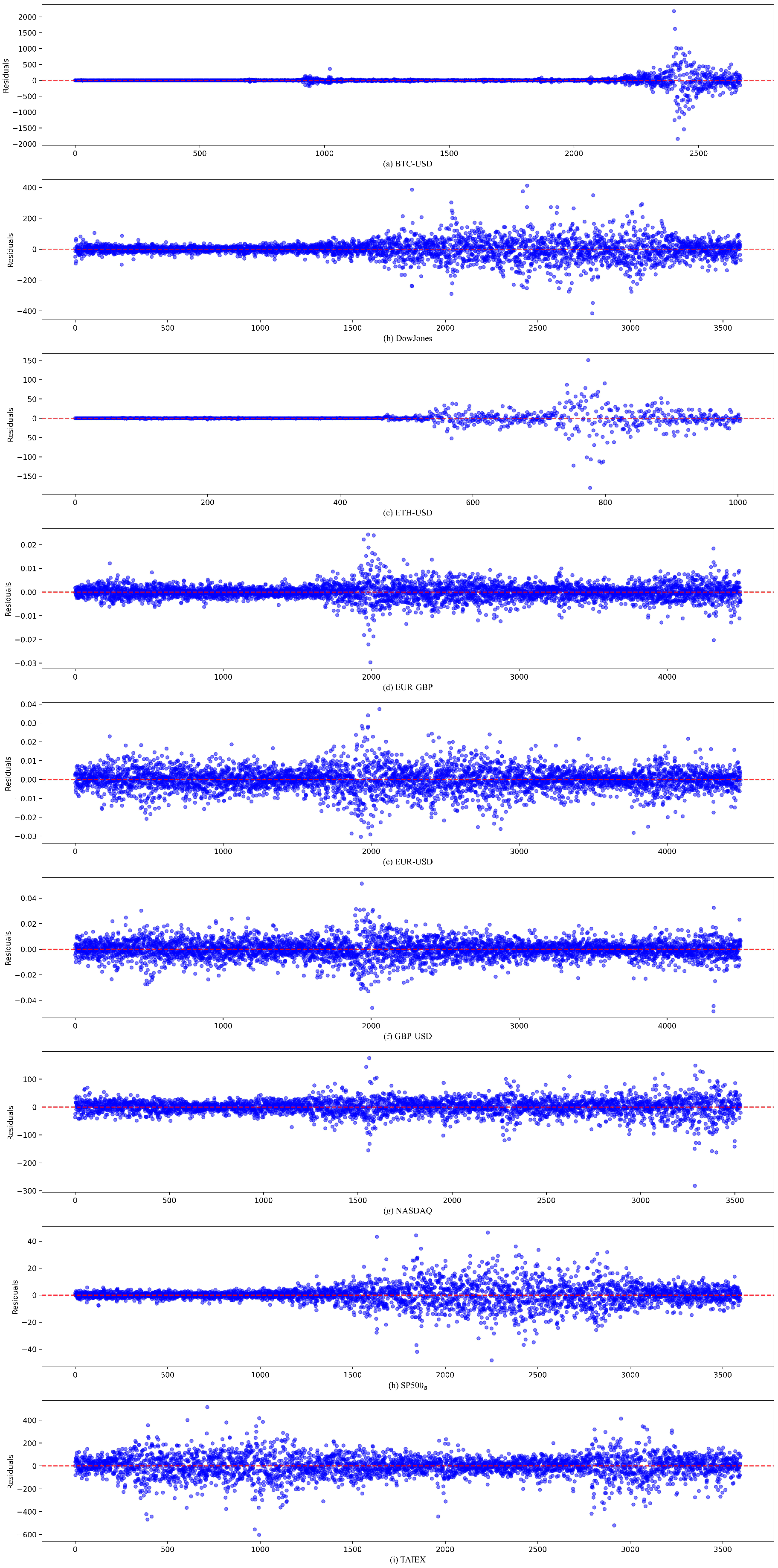

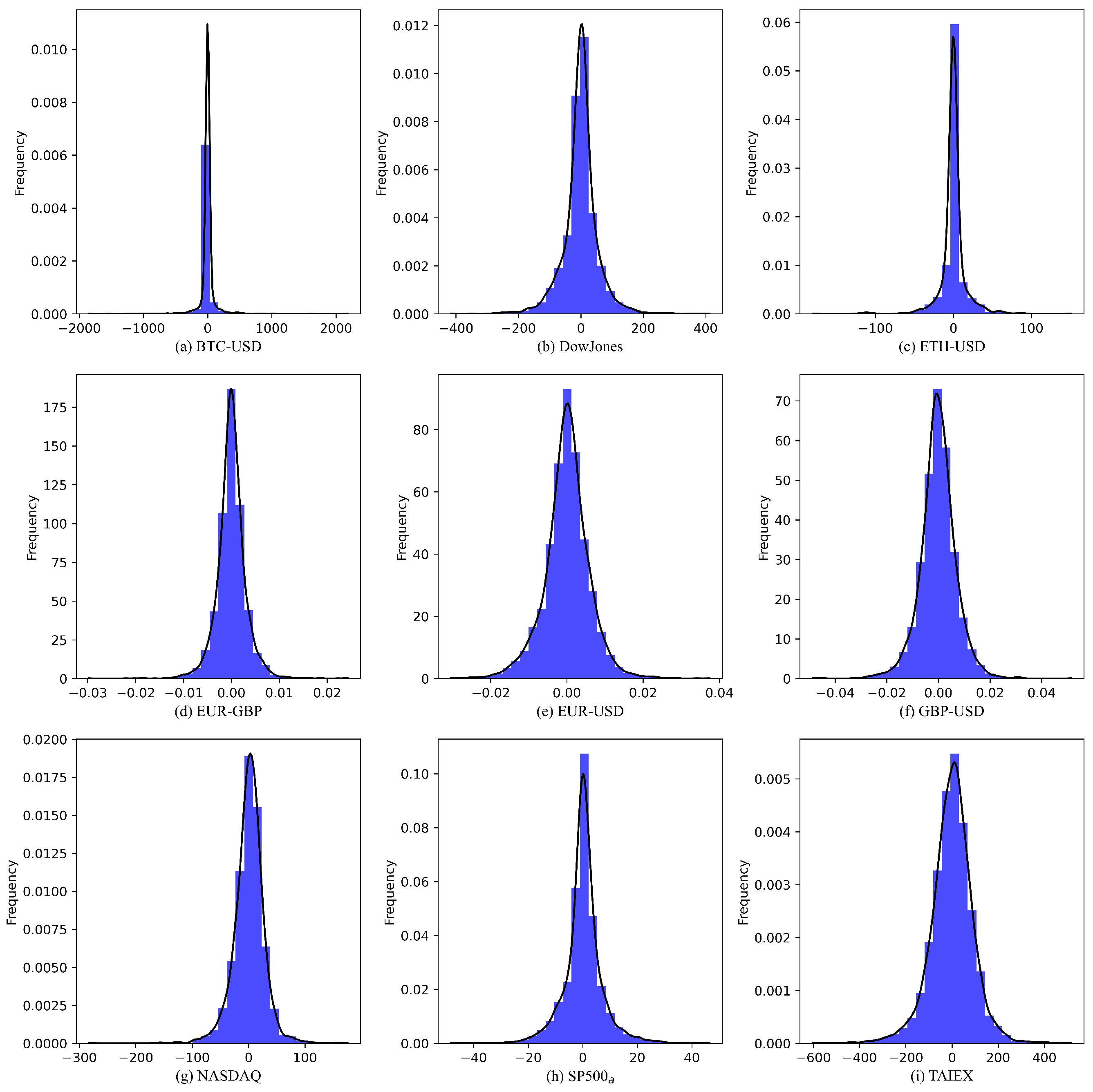

In this study, we present a new hybrid FTSFM to enhance the accuracy of non-stationary time series forecasting. The proposed method begins by performing first-order differencing on the raw time series data. This differencing operation reduces non-stationarity while extracting information, producing a variation time series that captures fluctuations between adjacent time points. We establish the initial FTSFM using the training set of the variation time series. A BN and FLRs represent the data’s temporal patterns. The BN structure visually illustrates the dependence relationships between different time points in the variation time series, while FLRs capture the fuzzy relationships between historical and forecasting moments after the variation time series is fuzzified. Uncertainty in the variation time series is quantitatively described using FLRGs weighted by fuzzy empirical probabilities, which aggregate the membership values of corresponding FLRs within each FLRG. During the forecasting phase, we employ a sliding window approach, dividing the entire prediction dataset into multiple forecasting windows. The model remains unchanged within each window. The decision to update the existing model is based on its forecasting performance in the previous window. If no update is required, predictions are generated using the existing model; otherwise, the model is updated before predicting. When a model update is required, the proposed method employs a comprehensive updating mechanism that treats the training data of the existing model as old data and the actual observations from all prediction windows since the last model update as new data. The proposed method adjusts the parameters of non-stationary fuzzy sets using prediction residuals of the new data, achieving smooth transitions of fuzzy sets to respond to dynamic changes in the variation time series. The adaptive BN structure learning method employs a novel adaptive structure scoring function using old and new data, enhancing structural adaptability to new data while preserving valuable information from the dependence relationships in the existing BN. The model then reconstructs fuzzy empirical probability-weighted FLRGs using the updated BN and non-stationary fuzzy sets. After completing the model update, the framework generates predictions using the updated FLRGs and BN. The main contributions of this study are as follows:

1. We propose a novel hybrid FTSFM that integrates time-variant FTSFM, BN, and non-stationary fuzzy sets. The traditional time-variant FTSFM update strategy handles the dynamic update of fuzzy relationships. BN structure learning captures adaptive changes in temporal dependence relationships between specific time points. Non-stationary fuzzy sets address irregular changes in data imprecision. This multi-dimensional modeling strategy significantly enhances the model’s adaptability and forecasting accuracy for non-stationary time series.

2. We develop an adaptive BN structure updating method with a novel dynamic scoring mechanism. The proposed method enables continuous refinement of temporal dependence relationships while preserving crucial historical patterns, thereby achieving an optimal balance between stability and adaptability in temporal relationship modeling.

3. We introduce a novel non-stationary fuzzy set approach that enhances existing methods through an innovative residual-based perturbation mechanism. This perturbation function enables each fuzzy set to share the impact of prediction residuals through distinct displacement degrees, facilitating smooth transitions of fuzzy sets. It ensures the model’s sensitivity to changes in the vagueness of non-stationary time series while enhancing its stability.

The remaining sections of this paper are structured as follows. In Section 2, we provide a detailed explanation of FTSFMs and BNs serving as the basis for the proposed algorithm. Section 3 provides an in-depth description of the proposed FTSFMs with BNs in non-stationary environments. Experimental result analyses are presented in Section 4. Section 5 concludes the paper.

2. Preliminaries

In this section, basic definitions of FTSFMs, non-stationary fuzzy sets, and BNs are briefly presented.

2.1. Basic Concepts of Fuzzy Time Series Model

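Let $U$ be the universe of discourse. A fuzzy set $A$ on $U$ is expressed as

$$A = \{(x, \mu_A(x)) \mid x \in U\},$$

where $\mu_A$ denotes the membership function of $A$, $\mu_A: U \rightarrow [0, 1]$. $\mu_A(x)$ is the membership grade of $x$, and $x \in U$. Let the parameters of $\mu_A$ be $p_1, \ldots, p_m$. $\mu_A(x)$ can be expressed as $\mu_A(x, p_1, \ldots, p_m)$.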

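A triangular fuzzy set $A$ takes the triangular function as the underlying membership function. Denote the lower, midpoint, and upper values of the triangle as $a$, $b$, and $c$, respectively. The membership function is defined as

$$\mu_A(x, a, b, c) = \begin{cases} \dfrac{x - a}{b - a}, & a \le x \le b, \\ \dfrac{c - x}{c - b}, & b < x \le c, \\ 0, & \text{otherwise}. \end{cases}$$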
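A triangular membership function with lower, midpoint, and upper values $a$, $b$, and $c$ can be sketched in a few lines. The helper `fuzzify` and the three overlapping partitions are hypothetical and serve only to show how one observation obtains non-zero membership grades in more than one fuzzy set.

```python
def triangular(x, a, b, c):
    """Membership grade of x in a triangular fuzzy set with a < b < c."""
    if x < a or x > c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def fuzzify(x, partitions):
    """Membership grades of x in every fuzzy set of a partition."""
    return [triangular(x, a, b, c) for a, b, c in partitions]


# Hypothetical overlapping triangular partitions of the universe of discourse.
partitions = [(-5, 0, 5), (0, 5, 10), (5, 10, 15)]
grades = fuzzify(2, partitions)
```

With these partitions, the observation 2 belongs to the first two fuzzy sets with different grades and does not belong to the third.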

Suppose a time series $Y = \{y(t)\}$ is given with $t = 1, 2, \ldots$. Fuzzy sets $A_1, A_2, \ldots, A_r$ are defined on the universe of discourse $U$. Membership grades of $y(t)$ belonging to the fuzzy sets form the fuzzified data $F(t)$ at the moment $t$. $F$ is the fuzzy time series defined on $Y$ with the collection of $F(t)$.

When $F(t)$ results from $F(t-1), \ldots, F(t-d)$, FLRs represent the fuzzy relationship between the antecedent moments $t-1, \ldots, t-d$ and the consequent moment $t$. An FLR for $F(t)$ has the format $A_{i_1}, \ldots, A_{i_d} \rightarrow A_j$, where the membership grade of $y(t-l)$ on the fuzzy set $A_{i_l}$ ($l = 1, \ldots, d$) is greater than zero. Each combination of $F(t-1), \ldots, F(t-d)$ can yield multiple FLRs, given that each $F(t-l)$ may comprise multiple elements with non-zero membership grades. FLRs that have the same left-hand side constitute an FLRG denoted as $A_{i_1}, \ldots, A_{i_d} \rightarrow A_{j_1}, A_{j_2}, \ldots$.
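The construction of FLRs and FLRGs from fuzzified data can be sketched as follows. This is a simplified illustration: fuzzy sets are referred to by their index, the antecedent moments are given as a tuple of lags, and the groups are left unweighted, whereas the FLRGs used later in the paper are weighted by fuzzy empirical probabilities. The function names and the data are hypothetical.

```python
from collections import defaultdict
from itertools import product


def active_sets(memberships):
    """Indices of the fuzzy sets in which an observation has non-zero membership."""
    return [i for i, grade in enumerate(memberships) if grade > 0]


def build_flrgs(fuzzified, lags=(1,)):
    """Group FLRs that share the same left-hand side into FLRGs."""
    groups = defaultdict(set)
    for t in range(max(lags), len(fuzzified)):
        antecedents = [active_sets(fuzzified[t - lag]) for lag in lags]
        # Every combination of active antecedent sets yields one left-hand side.
        for lhs in product(*antecedents):
            for rhs in active_sets(fuzzified[t]):
                groups[lhs].add(rhs)
    return {lhs: sorted(rhs) for lhs, rhs in groups.items()}


# Hypothetical fuzzified data: membership grades in three fuzzy sets per time point.
fuzzified = [[0.6, 0.4, 0.0], [0.0, 1.0, 0.0], [0.0, 0.2, 0.8]]
flrgs = build_flrgs(fuzzified)
```

Here the first observation is active in two fuzzy sets, so the single transition from the first to the second time point already produces two FLRs with different left-hand sides.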

2.2. Non-Stationary Fuzzy Set

Ref. [21] introduced non-stationary fuzzy sets with the help of the non-stationary membership function and the perturbation function. The non-stationary membership function reflects the temporal variability present in membership functions. The perturbation function calculates the dynamic component of function parameters when the membership function changes. Non-stationary fuzzy sets reflect data change through positional shifts, changes in width, and noise-induced variations in the membership grade.

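A non-stationary fuzzy set $\dot{A}$ is denoted as follows:

$$\dot{A} = \int_{t \in T} \int_{x \in U} \mu_{\dot{A}}(t, x) / x / t,$$

where $T$ contains a series of time points and $\mu_{\dot{A}}(t, x)$ is the non-stationary membership function. $\mu_{\dot{A}}(t, x)$ changes over time in the time interval $T$, which can be expressed as

$$\mu_{\dot{A}}(t, x) = \mu_A(x, p_1(t), \ldots, p_m(t)), \qquad p_i(t) = p_i + k_i f_i(t),$$

where $p_i$ is the $i$-th parameter of the basic membership function $\mu_A$, with a time-variant perturbation function $f_i(t)$ and a constant $k_i$ for $i = 1, \ldots, m$.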
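The effect of a perturbation function on a membership function can be illustrated with a minimal sketch. It assumes a triangular basic fuzzy set and, for brevity, a single perturbation function shared by all parameters, whereas the general definition allows one perturbation function per parameter. The linear drift used here is purely hypothetical.

```python
def triangular(x, a, b, c):
    """Membership grade of x in a triangular fuzzy set with a < b < c."""
    if x < a or x > c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def nonstationary_membership(x, t, params, scales, perturbation):
    """Evaluate the membership function with time-dependent parameters.

    Each parameter p is replaced by p + k * perturbation(t).
    """
    shifted = [p + k * perturbation(t) for p, k in zip(params, scales)]
    return triangular(x, *shifted)


def drift(t):
    """Hypothetical perturbation: the fuzzy set moves right by 0.5 per time step."""
    return 0.5 * t


base = (0.0, 5.0, 10.0)    # parameters of the basic fuzzy set
scales = (1.0, 1.0, 1.0)   # equal constants give a pure positional shift
grade_t0 = nonstationary_membership(5.0, 0, base, scales, drift)
grade_t4 = nonstationary_membership(5.0, 4, base, scales, drift)
```

Unequal constants in `scales` would change the width of the fuzzy set in addition to its position.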

2.3. Bayesian Network

Bayesian networks have demonstrated their remarkable effectiveness for complex data-analysis problems [35]. The BN is a member of the family of probabilistic graphical models. BNs can represent the latent patterns within data by incorporating a set of variables and their dependence in the form of directed acyclic graphs. A BN includes $n$ nodes representing random variables. Values of these nodes are possible observations of the variables. BNs visually represent conditional independence through directed acyclic graphs. A BN also utilizes an adjacency matrix $G$ representing the edges between variables, with $G_{ij} = 1$ indicating the directed dependence relationship from the $i$-th node to the $j$-th node. The CPD $P(X_i \mid Pa(X_i))$ for the $i$-th node represents the strength of the dependence between the $i$-th node and its parent nodes $Pa(X_i)$. $\theta_{ijk} = P(X_i = x_{ik} \mid Pa(X_i) = pa_{ij})$ denotes the probability of the value $x_{ik}$ of the $i$-th node given the $j$-th set of observations $pa_{ij}$ of parent nodes $Pa(X_i)$. The directed acyclic graph of a BN factorizes the joint probability distribution over variables $X_1, \ldots, X_n$:

$$P(X_1, \ldots, X_n) = \prod_{i=1}^{n} P(X_i \mid Pa(X_i)).$$
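The factorization of the joint distribution into CPDs can be checked on a toy network. The three-node chain, its binary variables, and all probability values below are hypothetical and chosen only so that the arithmetic is easy to follow.

```python
from itertools import product

# Hypothetical chain x1 -> x2 -> x3 with binary variables.
parents = {"x1": (), "x2": ("x1",), "x3": ("x2",)}
cpds = {
    "x1": {(): {0: 0.6, 1: 0.4}},
    "x2": {(0,): {0: 0.7, 1: 0.3}, (1,): {0: 0.2, 1: 0.8}},
    "x3": {(0,): {0: 0.9, 1: 0.1}, (1,): {0: 0.5, 1: 0.5}},
}


def joint_probability(assignment, parents, cpds):
    """Multiply the CPD entry of every node given the values of its parents."""
    probability = 1.0
    for node, node_parents in parents.items():
        parent_values = tuple(assignment[p] for p in node_parents)
        probability *= cpds[node][parent_values][assignment[node]]
    return probability


# P(x1=0, x2=1, x3=1) = 0.6 * 0.3 * 0.5
p = joint_probability({"x1": 0, "x2": 1, "x3": 1}, parents, cpds)

# The joint probabilities of all assignments sum to one.
total = sum(
    joint_probability(dict(zip(parents, values)), parents, cpds)
    for values in product([0, 1], repeat=len(parents))
)
```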

Representing dependence relationships between variables in a BN requires acquiring the directed acyclic graph structure through learning methods, typically achieved using BN structure learning techniques. Apart from structure learning, BN learning also includes parameter learning. Parameter learning involves the determination of CPD parameters, while structure learning aims at generating the adjacency matrix to discover dependence relationships between variables. Structure and parameter learning are interdependent, as parameter learning needs the BN structure to identify the parents of each node before computing CPDs. Moreover, parameter learning is essential for evaluating the matching degree of a candidate network structure and the data.

Data-driven BN structure learning methods principally fall into two categories: constraint-based algorithms and score-based algorithms. The core idea of the latter is to explore the space of all potential directed acyclic graphs using a search strategy to select the optimal graph based on the values yielded from a scoring function on the gathered data. The present research employs a score-based structure learning method using the hill-climbing search method and the Bayesian information criterion (BIC) as the score function [36]. The BIC scoring function offers the benefit of decomposability and clear intuitiveness. The BIC score approximates the marginal likelihood function as follows:

$$\mathrm{BIC}(G \mid D) = LL(G \mid D) - \frac{\log N}{2} \, \mathrm{Dim}(G),$$

where $LL(G \mid D)$ is the log-likelihood of the graph structure $G$ given the dataset $D$. The CPD parameters are determined by the maximum likelihood estimation algorithm. The penalty term $\frac{\log N}{2} \, \mathrm{Dim}(G)$ helps prevent overfitting. $\mathrm{Dim}(G)$ represents the count of parameters in all CPDs within the BN. The dataset $D$ contains $N$ data instances. The BIC function for a BN can be factorized as

$$\mathrm{BIC}(G \mid D) = \sum_{i=1}^{n} \mathrm{BIC}(X_i \mid Pa(X_i), D),$$

where $\mathrm{BIC}(X_i \mid Pa(X_i), D)$ is the BIC score of the variable $X_i$ given its parent nodes $Pa(X_i)$ and $i = 1, \ldots, n$.
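The decomposable BIC score can be sketched for discrete data as follows. This is a minimal illustration under the usual assumptions: maximum likelihood estimates are obtained from counts, and the number of free parameters of a node is its cardinality minus one, multiplied by the number of parent configurations. The data layout (a list of dictionaries), the function names, and the toy dataset are hypothetical.

```python
import math
from collections import Counter


def local_bic(data, node, node_parents, cardinality):
    """BIC contribution of one node given its parents (discrete data)."""
    n = len(data)
    parent_counts = Counter(tuple(row[p] for p in node_parents) for row in data)
    joint_counts = Counter(
        (tuple(row[p] for p in node_parents), row[node]) for row in data
    )
    # Log-likelihood under maximum likelihood estimates of the CPD entries.
    log_lik = sum(
        count * math.log(count / parent_counts[pa])
        for (pa, _), count in joint_counts.items()
    )
    n_configs = math.prod(cardinality[p] for p in node_parents)
    n_params = (cardinality[node] - 1) * n_configs
    return log_lik - 0.5 * math.log(n) * n_params


def bic(data, structure, cardinality):
    """Total score as the sum of the local scores of all nodes."""
    return sum(local_bic(data, v, pa, cardinality) for v, pa in structure.items())


# Hypothetical data in which b always copies a.
data = [{"a": 0, "b": 0}] * 4 + [{"a": 1, "b": 1}] * 4
cardinality = {"a": 2, "b": 2}
score_with_edge = bic(data, {"a": (), "b": ("a",)}, cardinality)
score_without_edge = bic(data, {"a": (), "b": ()}, cardinality)
```

For this dataset the structure with the edge from `a` to `b` scores higher, because the gain in log-likelihood outweighs the penalty for the additional parameter.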

The hill-climbing method is a widely used search algorithm. The search starts with an initial model, which can either be an empty graph or a specific graph. Each search iteration produces candidate models derived from a single modification of the current model. When applying the hill-climbing algorithm to BN structure learning, the model with superior performance is preserved by comparing the scores of each candidate model and the current model via the scoring function. Operations such as adding, deleting, or reversing an edge generate candidate models during each iteration. As the BIC score function is decomposable, the comparison between candidate models and the current model can focus solely on the score of their dissimilar segments. Combining domain knowledge with a data-driven learning method is a common practice in BN learning. This paper models the BN structure by blending temporal adjacency relationships as domain knowledge with raw time-series data. A detailed description of the hill-climbing and BIC-based BN structure learning method is given in [33].
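The search procedure described above can be sketched as a greedy loop over single-edge modifications. This is a simplified illustration, not the procedure of [33]: it starts from an empty graph, reads the cardinalities from the data, and does not encode temporal adjacency as domain knowledge. All function names and the toy dataset are hypothetical.

```python
import math
from collections import Counter
from itertools import permutations


def local_bic(data, node, node_parents):
    """BIC contribution of one node; cardinalities are read from the data."""
    def card(v):
        return len({row[v] for row in data})
    pa_counts = Counter(tuple(row[p] for p in node_parents) for row in data)
    joint = Counter((tuple(row[p] for p in node_parents), row[node]) for row in data)
    log_lik = sum(c * math.log(c / pa_counts[pa]) for (pa, _), c in joint.items())
    n_params = (card(node) - 1) * math.prod(card(p) for p in node_parents)
    return log_lik - 0.5 * math.log(len(data)) * n_params


def has_cycle(parents):
    """Depth-first search for a directed cycle in the parent map."""
    state = {}
    def visit(v):
        if state.get(v) == 1:      # node is on the current path: cycle found
            return True
        if state.get(v) == 2:      # node already fully explored
            return False
        state[v] = 1
        if any(visit(p) for p in parents[v]):
            return True
        state[v] = 2
        return False
    return any(visit(v) for v in parents)


def neighbours(parents):
    """Yield structures that differ by one edge addition, deletion, or reversal."""
    for src, dst in permutations(parents, 2):
        cand = {v: set(pa) for v, pa in parents.items()}
        if src in parents[dst]:
            cand[dst].discard(src)               # delete src -> dst
            yield cand
            rev = {v: set(pa) for v, pa in cand.items()}
            rev[src].add(dst)                    # reverse to dst -> src
            yield rev
        elif dst not in parents[src]:
            cand[dst].add(src)                   # add src -> dst
            yield cand


def hill_climb(data, variables):
    """Greedy search that applies the best single-edge change until none improves."""
    parents = {v: set() for v in variables}
    while True:
        score = {v: local_bic(data, v, sorted(parents[v])) for v in variables}
        best_gain, best = 1e-9, None
        for cand in neighbours(parents):
            if has_cycle(cand):
                continue
            # Decomposability: only nodes whose parent set changed are rescored.
            changed = [v for v in variables if cand[v] != parents[v]]
            gain = sum(local_bic(data, v, sorted(cand[v])) - score[v] for v in changed)
            if gain > best_gain:
                best_gain, best = gain, cand
        if best is None:
            return parents
        parents = best


# Hypothetical data: b copies a, while c is independent of both.
rows = [{"a": a, "b": a, "c": c} for a in (0, 1) for c in (0, 1)] * 2
learned = hill_climb(rows, ["a", "b", "c"])
edges = {(p, v) for v, pa in learned.items() for p in pa}
```

On this toy dataset the search links `a` and `b` with a single edge and leaves `c` isolated. The direction of the edge is not identifiable from the score, since both orientations receive the same BIC value.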