Fault Diagnosis Method for Centrifugal Pumps in Nuclear Power Plants Based on a Multi-Scale Convolutional Self-Attention Network

Abstract

Highlights

- A multi-scale convolutional self-attention (MS-CSA) method is proposed to improve the accuracy of fault diagnosis for rolling bearings in nuclear power plants.

- A multi-scale hybrid feature set, combining shallow time–frequency indicators with deep autoencoder features, is designed to enrich the fault information available for diagnosis.

- Verification tests on acoustic and vibration experimental data show that the fault diagnosis model based on the multi-scale convolutional self-attention (MS-CSA) method achieves significantly better diagnostic performance than the compared CNN, TCN, and CSA models.


1. Introduction

2. Materials and Methods

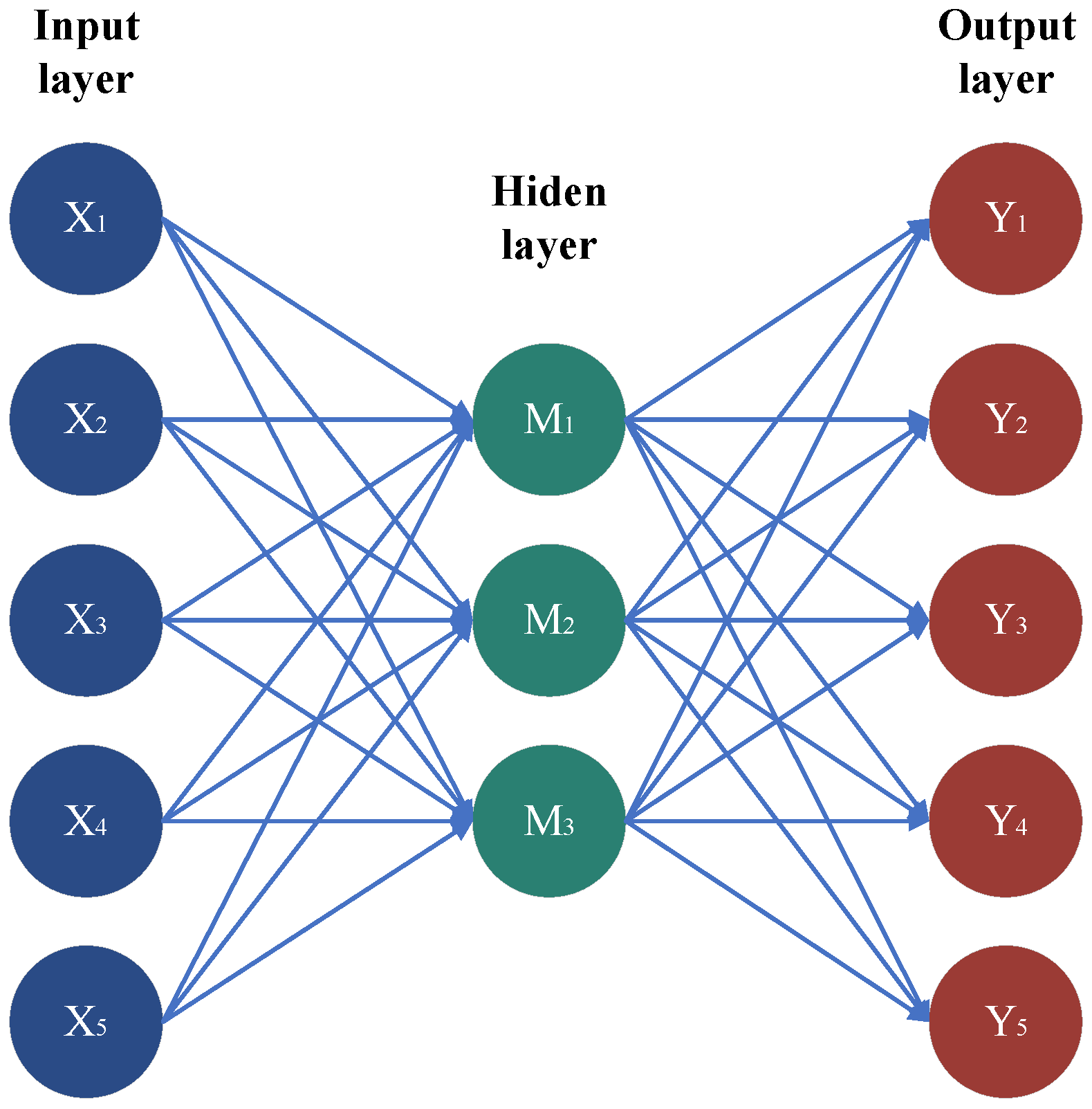

2.1. Convolutional Neural Network (CNN)

2.2. Attention Mechanism

2.3. Auto Encoder (AE)

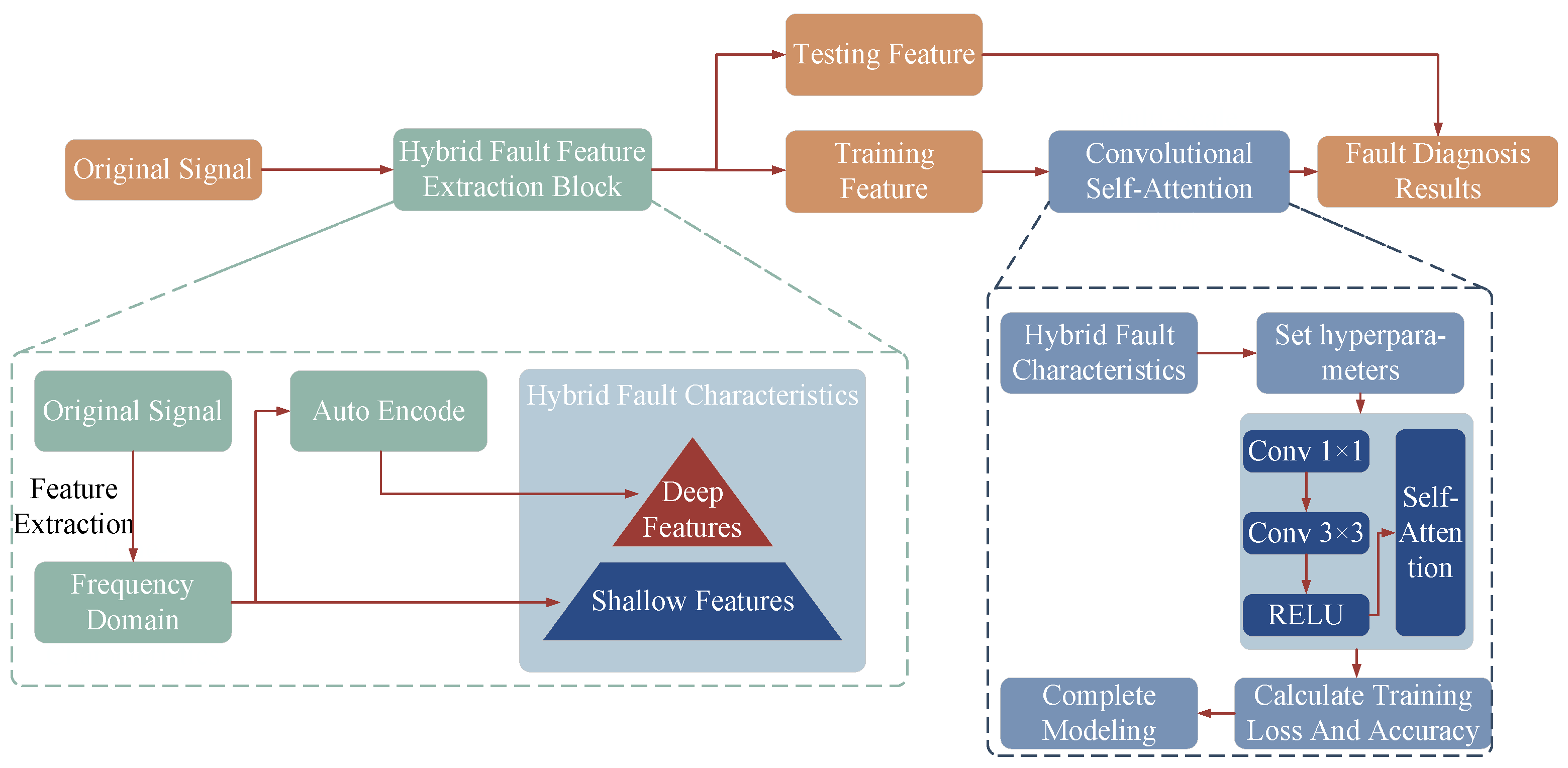

2.4. Multi-Scale Convolutional Self-Attention (MS-CSA)

1. To capture the inherent patterns and characteristics of the signals collected from the rolling bearings of the circulating water pump, a shallow feature dataset is constructed from twelve time–frequency domain indicators: standard deviation, variance, root mean square value, kurtosis, skewness, clearance factor, peak factor, impulse factor, shape factor, information entropy, permutation entropy, and Theil index. This first step extracts the key information needed to identify fault characteristics or abnormal states.

2. The shallow features, which represent the inherent patterns and related characteristics of the rolling bearing signals, are then passed through an autoencoder to extract deep fault features. These deep features are combined with the time–frequency domain features above to form a mixed-scale feature set that integrates both deep and shallow features.

3. A multi-scale convolutional self-attention network is built by stacking convolutional kernels of different scales with a self-attention mechanism. The 1 × 1 and 3 × 3 convolutional kernels exploit their local receptive fields to extract features from the shallow features, while the global receptive field of the self-attention mechanism extracts key features from the mixed-scale feature set. Together, they aim to capture the nonlinear mapping between rolling bearing fault modes and their characteristics.

4. The mixed-scale feature set is used to train the multi-scale convolutional self-attention network. After training, a validation set is used to assess the feasibility and effectiveness of the fault diagnosis model. The resulting model is intended to guide the periodic maintenance of nuclear power plants, supporting operational safety while improving economic efficiency.
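Step 1 names the twelve shallow indicators but not their formulas. The sketch below computes them for one signal segment using common textbook definitions; the 32-bin histogram for information entropy, order-3 permutation entropy with unit delay, and applying the Theil index to absolute amplitudes are assumptions, not settings reported by the authors.

```python
import math
from collections import Counter

import numpy as np


def information_entropy(x: np.ndarray, bins: int = 32) -> float:
    """Shannon entropy (bits) of the amplitude histogram; the bin count is an assumption."""
    counts, _ = np.histogram(x, bins=bins)
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def permutation_entropy(x: np.ndarray, order: int = 3, delay: int = 1) -> float:
    """Normalized Bandt-Pompe permutation entropy: 0 = fully regular, 1 = maximally irregular."""
    n = len(x) - (order - 1) * delay
    # Each window's ordinal pattern is the argsort of its samples.
    patterns = Counter(tuple(np.argsort(x[i : i + order * delay : delay])) for i in range(n))
    p = np.array(list(patterns.values()), dtype=float) / n
    return float(-np.sum(p * np.log2(p)) / math.log2(math.factorial(order)))


def theil_index(x: np.ndarray) -> float:
    """Theil T index of |x| (applying it to absolute amplitudes is an assumption)."""
    r = np.abs(x) / np.mean(np.abs(x))
    r = r[r > 0]  # 0 * ln(0) is taken as 0
    return float(np.sum(r * np.log(r)) / len(x))


def shallow_features(x: np.ndarray) -> np.ndarray:
    """Return the 12 shallow indicators in the order of the fault-feature table."""
    x = np.asarray(x, dtype=float)
    mu, sigma = x.mean(), x.std()
    abs_x = np.abs(x)
    rms = np.sqrt(np.mean(x**2))
    peak = abs_x.max()
    return np.array([
        sigma,                                  # 1 standard deviation
        sigma**2,                               # 2 variance
        rms,                                    # 3 root mean square value
        np.mean((x - mu) ** 4) / sigma**4,      # 4 kurtosis (non-excess)
        peak / np.mean(np.sqrt(abs_x)) ** 2,    # 5 clearance factor (margin)
        np.mean((x - mu) ** 3) / sigma**3,      # 6 skewness
        peak / rms,                             # 7 peak (crest) factor
        peak / abs_x.mean(),                    # 8 impulse (pulse) factor
        rms / abs_x.mean(),                     # 9 shape (waveform) factor
        information_entropy(x),                 # 10 information entropy
        permutation_entropy(x),                 # 11 permutation entropy
        theil_index(x),                         # 12 Theil index
    ])
```

Applied to each signal segment, this yields one 12-element row of the shallow feature dataset. For a pure sine wave, the root mean square value is 1/√2 and the peak factor is √2, which makes a convenient sanity check.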
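Step 2 passes the shallow features through an autoencoder and concatenates the learned codes with the shallow features. The sketch below illustrates that data flow with a hypothetical one-hidden-layer tanh autoencoder trained by full-batch gradient descent in NumPy; the architecture, the 6-unit code size, the z-score normalization, and the learning rate are all assumptions, since the text does not specify the authors' autoencoder.

```python
import numpy as np


class TinyAutoencoder:
    """One-hidden-layer autoencoder: code z = tanh(X W1 + b1), reconstruction z W2 + b2."""

    def __init__(self, n_in: int, n_code: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 1 / np.sqrt(n_in), (n_in, n_code))
        self.b1 = np.zeros(n_code)
        self.W2 = rng.normal(0.0, 1 / np.sqrt(n_code), (n_code, n_in))
        self.b2 = np.zeros(n_in)

    def encode(self, X: np.ndarray) -> np.ndarray:
        return np.tanh(X @ self.W1 + self.b1)

    def loss(self, X: np.ndarray) -> float:
        """Mean squared reconstruction error."""
        return float(np.mean((self.encode(X) @ self.W2 + self.b2 - X) ** 2))

    def fit(self, X: np.ndarray, epochs: int = 500, lr: float = 0.5) -> "TinyAutoencoder":
        """Full-batch gradient descent on the mean squared reconstruction error."""
        for _ in range(epochs):
            Z = self.encode(X)
            d_out = 2 * (Z @ self.W2 + self.b2 - X) / X.size   # dLoss/dReconstruction
            d_hid = (d_out @ self.W2.T) * (1 - Z**2)            # back through tanh
            grads = (X.T @ d_hid, d_hid.sum(axis=0), Z.T @ d_out, d_out.sum(axis=0))
            for param, grad in zip((self.W1, self.b1, self.W2, self.b2), grads):
                param -= lr * grad                               # in-place update
        return self


def mixed_scale_features(shallow: np.ndarray, n_code: int = 6) -> np.ndarray:
    """Concatenate z-scored shallow features with autoencoder codes (deep features)."""
    Xs = (shallow - shallow.mean(axis=0)) / (shallow.std(axis=0) + 1e-12)
    ae = TinyAutoencoder(Xs.shape[1], n_code).fit(Xs)
    return np.hstack([Xs, ae.encode(Xs)])
```

With 12 shallow indicators and a 6-unit code, each sample in the mixed-scale set has 18 entries. Normalizing first matters because indicators such as variance and permutation entropy differ by orders of magnitude; in practice a deep learning framework would replace the hand-written gradients.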
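Step 3 combines small convolutional kernels (local receptive field) with self-attention (global receptive field). The NumPy sketch below shows one possible forward pass under stated assumptions: each mixed-scale feature vector is treated as a length-L sequence, kernels of width 1 and 3 stand in as 1-D analogues of the 1 × 1 and 3 × 3 kernels, and the two branches feed a single-head scaled dot-product self-attention layer with a residual connection, global average pooling, and a softmax over the four bearing states. Channel counts, ReLU, and the pooling choice are hypothetical, and applying both the convolutions and the attention to the whole vector simplifies the paper's description; training is omitted.

```python
import numpy as np


def conv1d_same(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """x: (L, C_in); w: (k, C_in, C_out) with odd k; zero 'same' padding."""
    k = w.shape[0]
    xp = np.pad(x, ((k // 2, k // 2), (0, 0)))
    return np.stack([np.einsum("kc,kco->o", xp[i : i + k], w) for i in range(x.shape[0])])


def self_attention(h, wq, wk, wv):
    """Single-head scaled dot-product self-attention over the L positions of h."""
    q, k, v = h @ wq, h @ wk, h @ wv
    scores = q @ k.T / np.sqrt(q.shape[1])
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)      # each row is a distribution over positions
    return attn @ v, attn


def ms_csa_forward(features: np.ndarray, params: dict):
    """Forward pass for one mixed-scale feature vector -> class probabilities."""
    x = features[:, None]                                        # (L, 1)
    branch1 = np.maximum(conv1d_same(x, params["w1"]), 0)        # width-1 kernels + ReLU
    branch3 = np.maximum(conv1d_same(x, params["w3"]), 0)        # width-3 kernels + ReLU
    h = np.concatenate([branch1, branch3], axis=1)               # (L, 2C) multi-scale map
    h_att, attn = self_attention(h, params["wq"], params["wk"], params["wv"])
    pooled = (h + h_att).mean(axis=0)                            # residual + global avg pool
    logits = pooled @ params["w_out"] + params["b_out"]
    p = np.exp(logits - logits.max())
    return p / p.sum(), attn


def init_params(channels: int = 8, d_att: int = 8, n_classes: int = 4, seed: int = 0) -> dict:
    """Random weights for the hypothetical layer sizes above."""
    rng = np.random.default_rng(seed)
    c2 = 2 * channels
    return {
        "w1": rng.normal(0, 1.0, (1, 1, channels)),
        "w3": rng.normal(0, 1 / np.sqrt(3), (3, 1, channels)),
        "wq": rng.normal(0, 1 / np.sqrt(c2), (c2, d_att)),
        "wk": rng.normal(0, 1 / np.sqrt(c2), (c2, d_att)),
        "wv": rng.normal(0, 1 / np.sqrt(c2), (c2, c2)),
        "w_out": rng.normal(0, 1 / np.sqrt(c2), (c2, n_classes)),
        "b_out": np.zeros(n_classes),
    }
```

The attention matrix is L × L, so every feature position can attend to every other one, whereas each convolution output depends only on one or three neighbouring positions. Training such a network would normally use a framework with automatic differentiation, such as PyTorch.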

3. Experiment

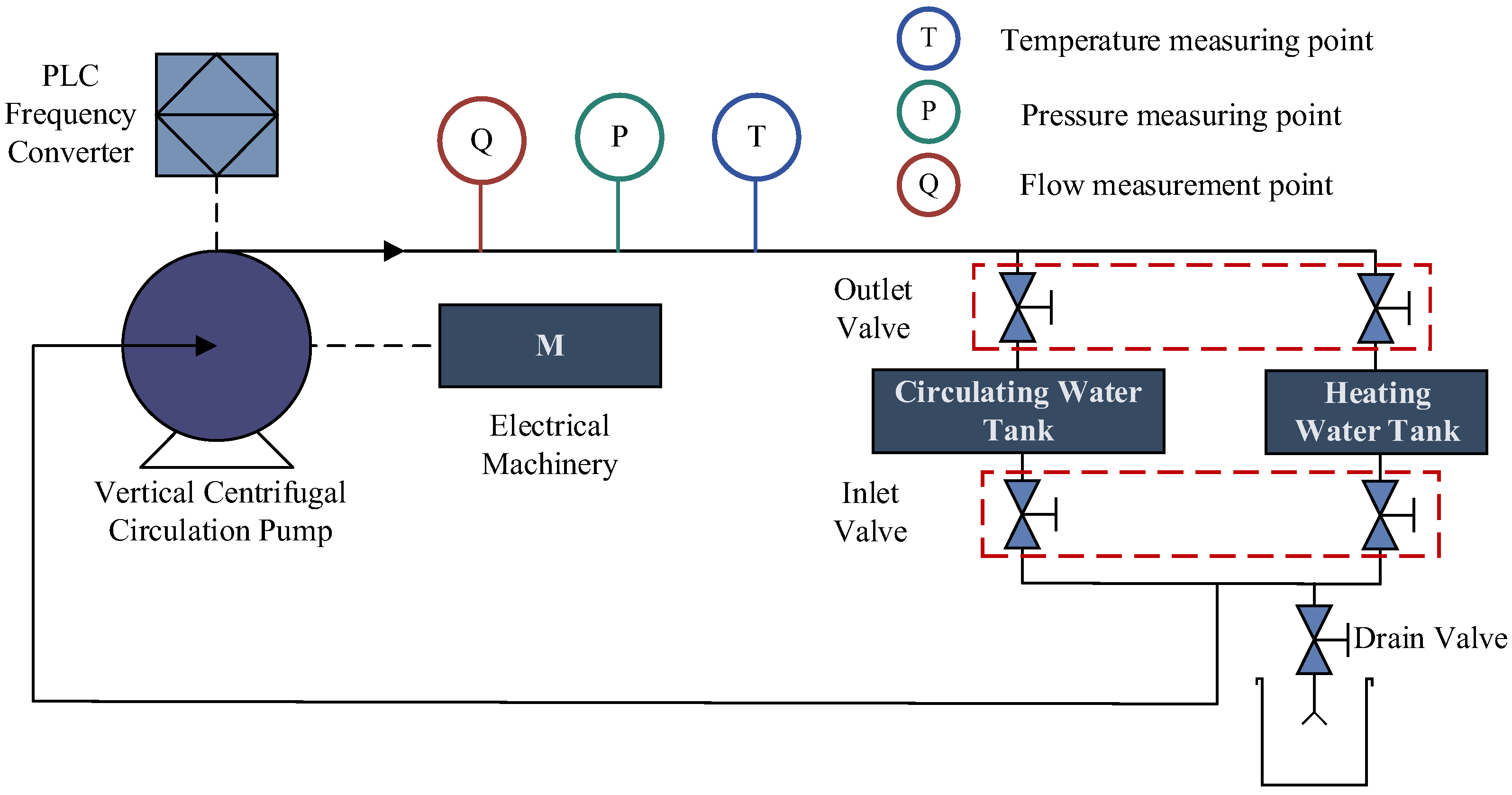

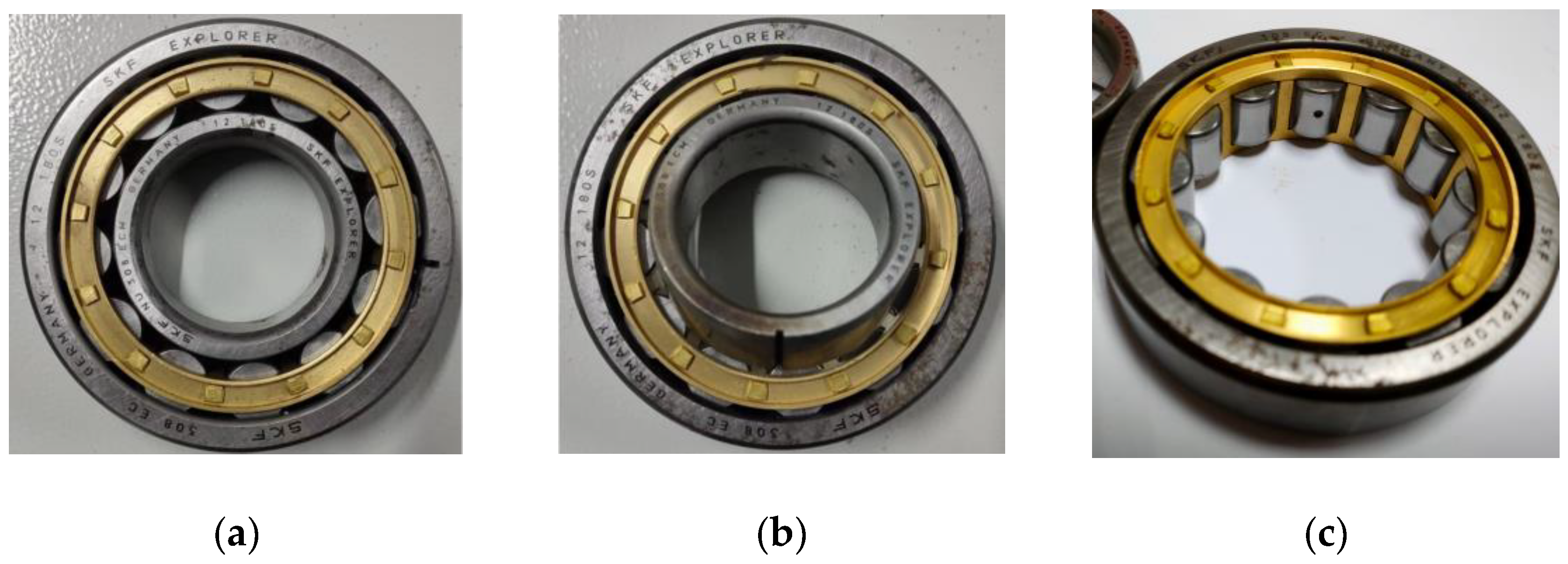

3.1. Experimental Test Bench for Rolling Bearing Faults

3.2. Experimental Setup for Rolling Bearing Faults

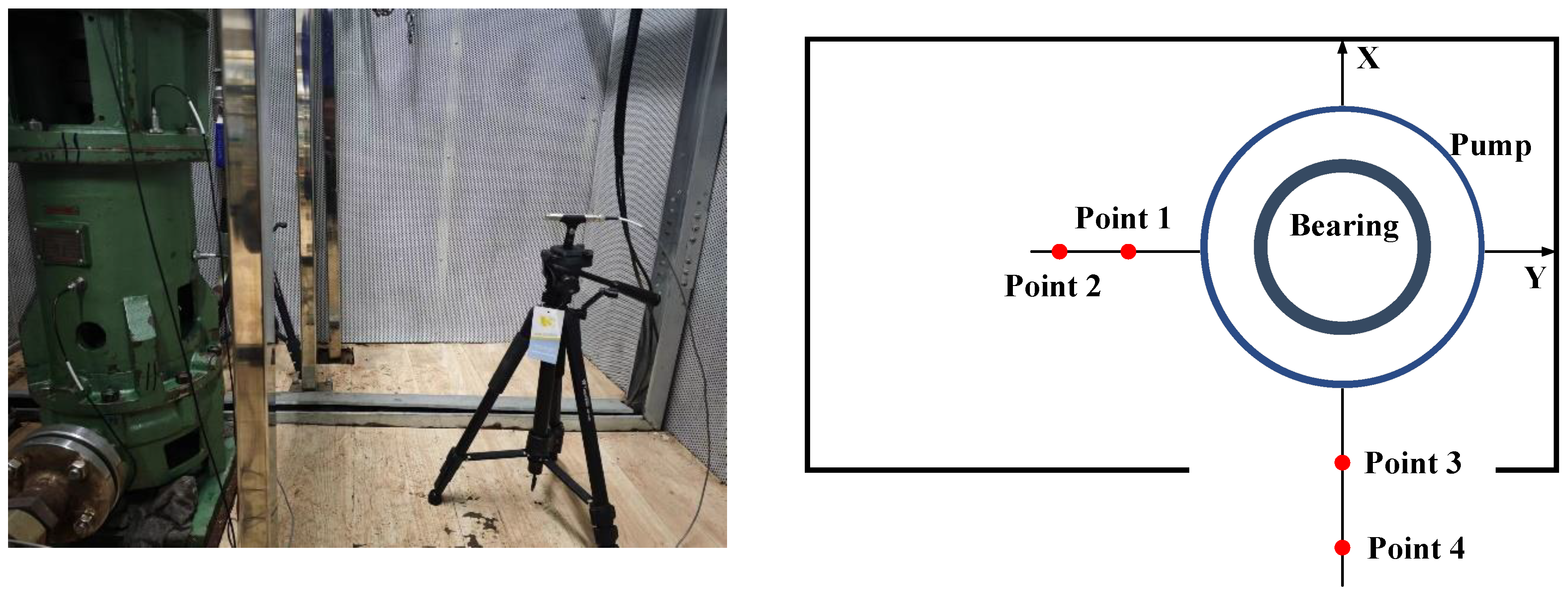

3.2.1. Layout of Vibration Signal Measuring Points

3.2.2. Layout of Acoustic Signal Measurement Points

4. Result Analysis

4.1. Experiment Dataset

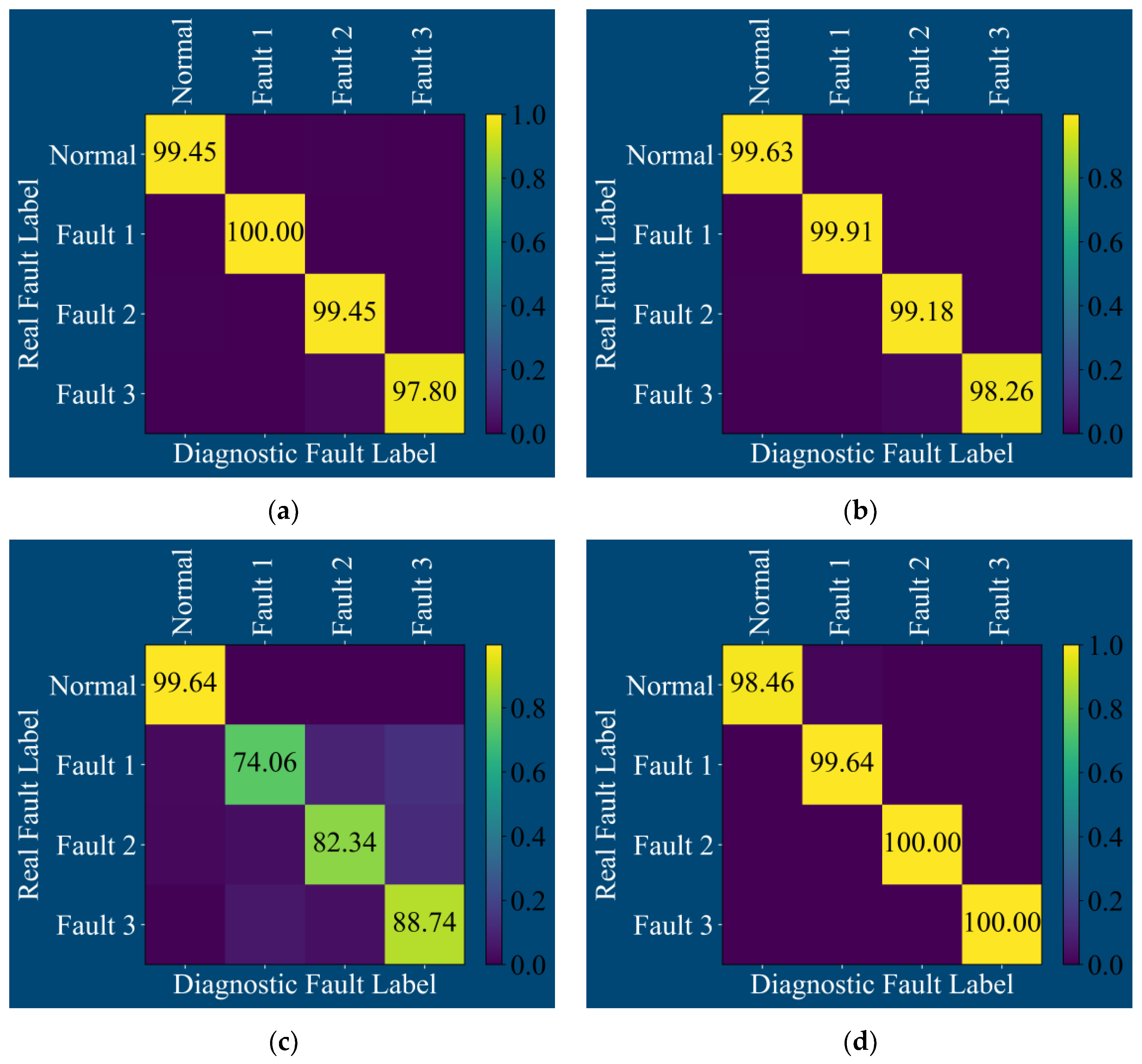

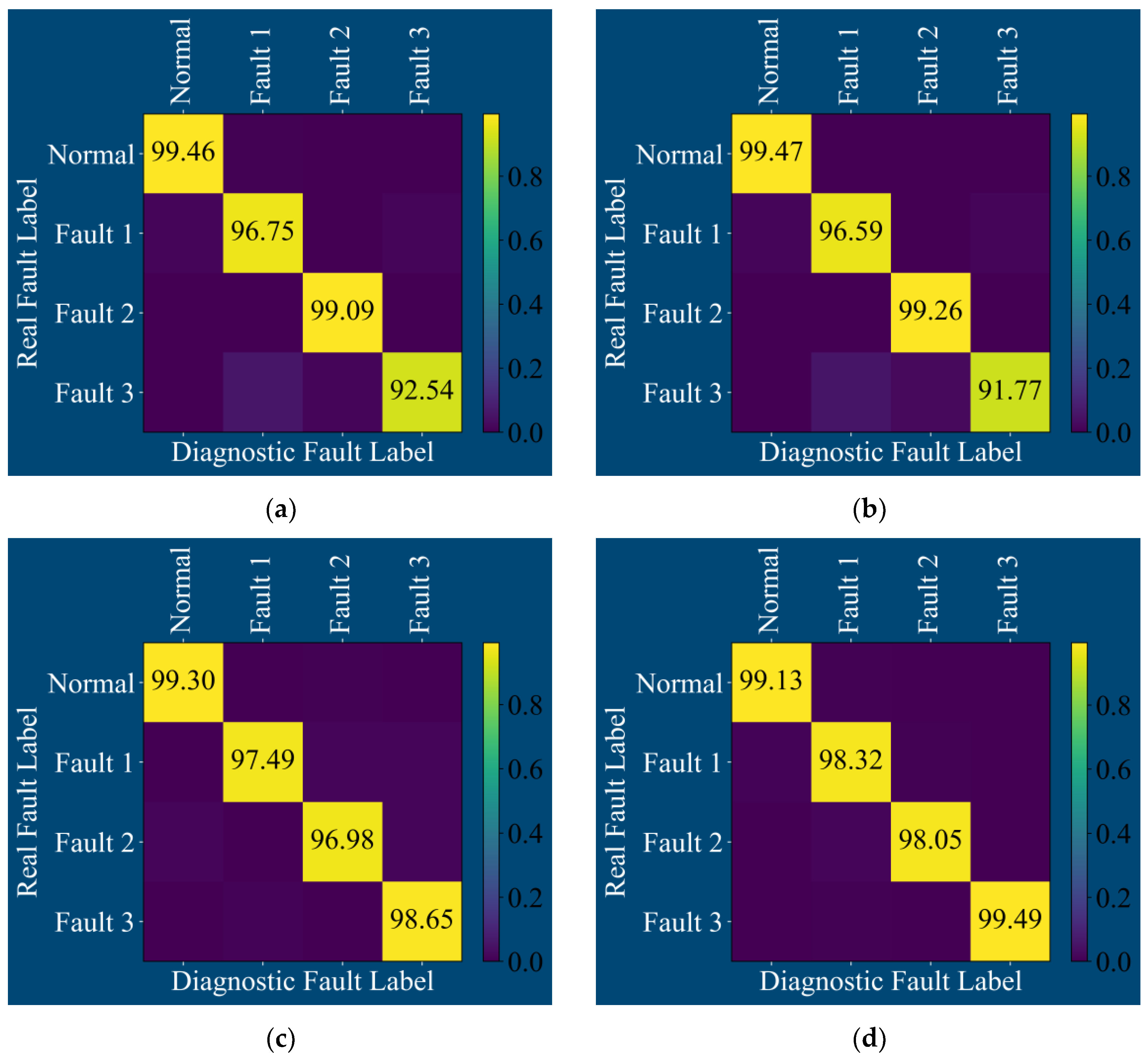

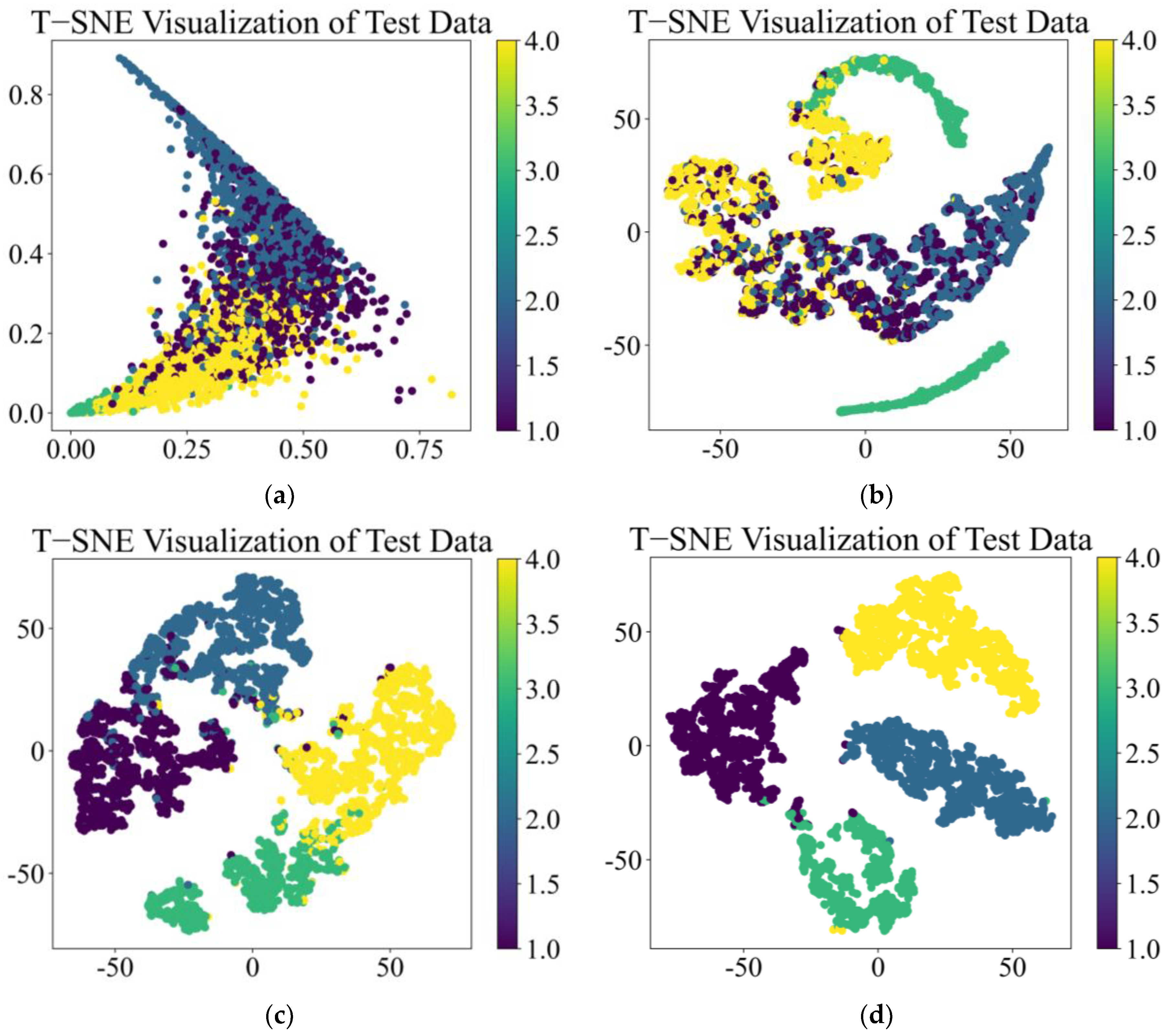

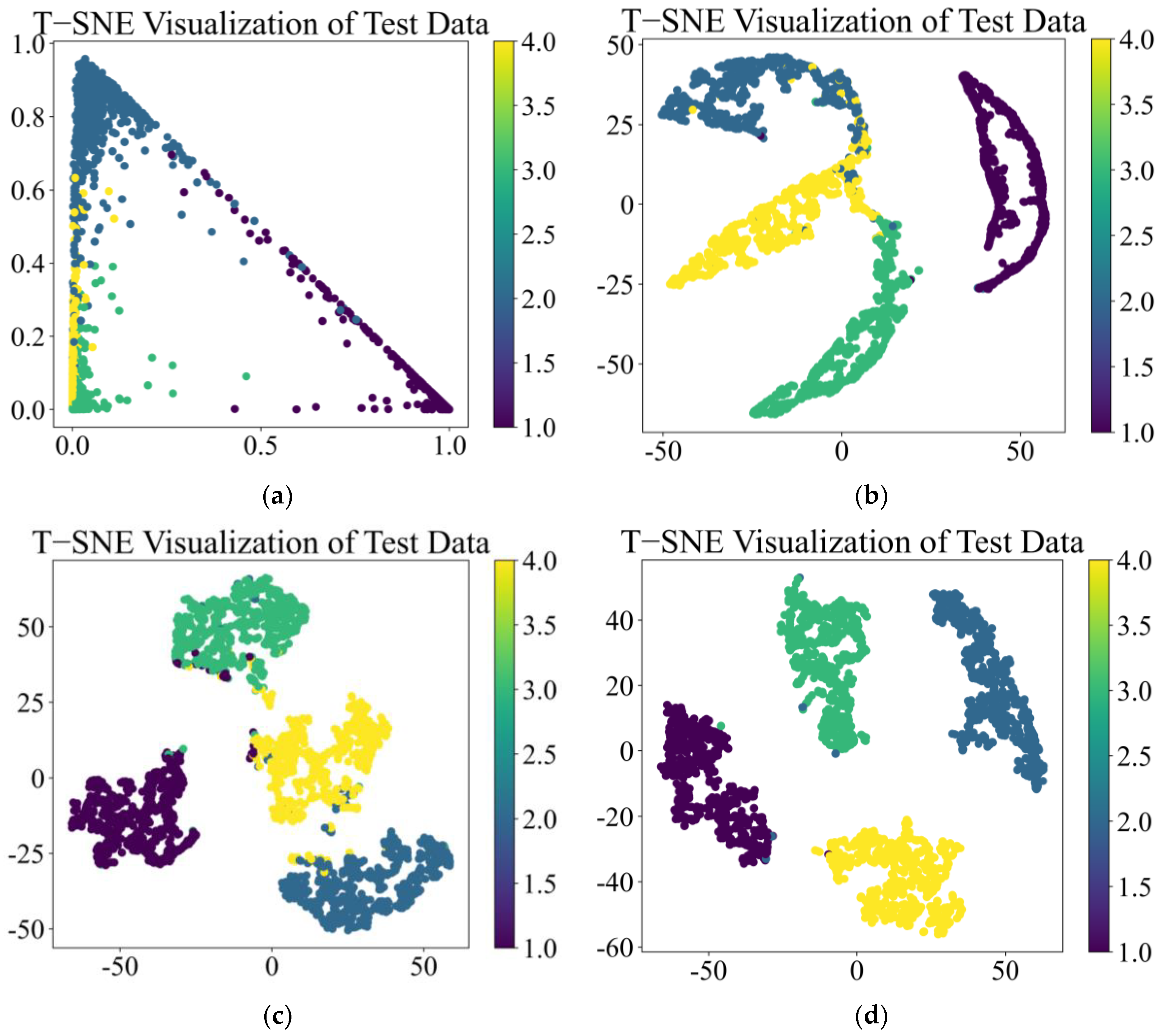

4.2. Vibration Signal Test

4.3. Acoustic Signal Test

4.4. Case Western Reserve University (CWRU) Bearing Data Test

5. Conclusions

1. Conventional convolutional models such as CNN and TCN perform well in fault diagnosis on vibration signals, but their performance degrades markedly on the noisier acoustic signals. Conversely, the CSA model, built by adding an attention module to a conventional convolutional model, performs relatively poorly on vibration signals but better on the noisier acoustic signals.

2. Compared with the three baseline models (CNN, TCN, and CSA), the MS-CSA model converges faster, shows better convergence capability, and achieves higher validation-set accuracy, reaching 99.5% on both vibration and acoustic signals.

3. A comparison of the CSA and MS-CSA models shows that combining shallow data-driven models with the multi-scale network design does not significantly increase model complexity or the volume of feature data, and adds only 3.5 to 6.1 s (roughly 6% to 10%) to the training time reported in the training-time tables. At this modest cost, the combination significantly improves the convergence and fault diagnosis capability of the original model and achieves excellent fault diagnosis performance.
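The training-time claim in item 3 can be checked directly against the three training-time tables. The short script below computes the absolute and relative overhead of MS-CSA over CSA; the times are copied in the order the tables appear, and which experiment each table belongs to is not labelled in the source.

```python
# CSA and MS-CSA training times (s), in the order the three training-time tables appear.
TRAINING_TIMES = [(59.21, 62.74), (62.61, 68.68), (61.79, 65.51)]


def overhead(csa_s: float, ms_csa_s: float) -> tuple[float, float]:
    """Absolute (s) and relative (%) extra training time of MS-CSA over CSA."""
    delta = ms_csa_s - csa_s
    return delta, 100 * delta / csa_s


for csa_s, ms_s in TRAINING_TIMES:
    d, pct = overhead(csa_s, ms_s)
    print(f"+{d:.2f} s ({pct:.1f}%)")
```

The extra cost ranges from about 3.5 s to 6.1 s, i.e. roughly 6% to 10% of the CSA training time.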

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Number of Rolling Elements | Bore Diameter | Outside Diameter | Inner Raceway Diameter |
|---|---|---|---|---|
| NU 308 ECM | 12 | 40 mm | 90 mm | 52 mm |

| Signal Type | Rolling Bearing Status | Data Size | Label |
|---|---|---|---|
| Vibration Signal | Normal Bearing Operation | 974,848 | Normal |
| | Outer Race Fracture | 907,264 | Fault 1 |
| | Inner Race Fracture | 630,784 | Fault 2 |
| | Rolling Element Pitting | 1,030,144 | Fault 3 |
| Acoustic Signal | Normal Bearing Operation | 974,848 | Normal |
| | Outer Race Fracture | 907,264 | Fault 1 |
| | Inner Race Fracture | 630,784 | Fault 2 |
| | Rolling Element Pitting | 1,030,144 | Fault 3 |

| Number | Fault Feature |
|---|---|
| 1 | Standard Deviation |
| 2 | Variance |
| 3 | Root Mean Square Value |
| 4 | Kurtosis |
| 5 | Clearance Factor (Margin) |
| 6 | Skewness |
| 7 | Peak Factor |
| 8 | Impulse (Pulse) Factor |
| 9 | Shape (Waveform) Factor |
| 10 | Information Entropy |
| 11 | Permutation Entropy |
| 12 | Theil Index |

| | Training Set | Testing Set | Validation Set |
|---|---|---|---|
| Proportion | 56% | 24% | 20% |
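The proportions above are consistent with holding out 20% of the samples for validation and splitting the remaining 80% into 70% training and 30% testing, since 0.8 × 0.7 = 0.56 and 0.8 × 0.3 = 0.24. The sketch below implements that reading with a plain random permutation; both the two-stage interpretation and the unstratified shuffle are assumptions, as the procedure is not described.

```python
import numpy as np


def split_indices(n: int, seed: int = 0):
    """Shuffle n sample indices and split them 56% / 24% / 20% (train / test / validation)."""
    idx = np.random.default_rng(seed).permutation(n)
    n_val = round(0.20 * n)               # 20% held out for validation
    n_train = round(0.70 * (n - n_val))   # 70% of the remaining 80% = 56% overall
    val = idx[:n_val]
    train = idx[n_val : n_val + n_train]
    test = idx[n_val + n_train :]         # the remaining 24%
    return train, test, val
```

A stratified split, drawing the same proportions within each of the four bearing states, would be a natural alternative when class sizes differ as they do in the dataset table.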

| Model | Training Time (s) |
|---|---|
| CSA | 59.21 |
| MS-CSA | 62.74 |

| Model | Normal | Outer Ring Fracture | Inner Ring Fracture | Rolling Element Pitting Corrosion |
|---|---|---|---|---|
| CNN | 96.57% | 91.43% | 95.98% | 96.25% |
| TCN | 98.65% | 93.88% | 97.63% | 96.25% |
| CSA | 99.18% | 82.28% | 91.56% | 89.21% |
| MS-CSA | 99.90% | 99.78% | 100% | 100% |

| Model | Training Time (s) |
|---|---|
| CSA | 62.61 |
| MS-CSA | 68.68 |

| Model | Normal | Outer Ring Fracture | Inner Ring Fracture | Rolling Element Pitting Corrosion |
|---|---|---|---|---|
| CNN | 55.45% | 57.03% | 92.41% | 72.91% |
| TCN | 55.24% | 49.44% | 91.11% | 74.48% |
| CSA | 98.57% | 94.30% | 88.75% | 97.21% |
| MS-CSA | 99.39% | 100% | 99.53% | 100% |

| Model | Training Time (s) |
|---|---|
| CSA | 61.79 |
| MS-CSA | 65.51 |

| Model | Normal | Outer Ring Fracture | Inner Ring Fracture | Rolling Element Pitting Corrosion |
|---|---|---|---|---|
| CNN | 89.24% | 93.45% | 99.36% | 99.57% |
| TCN | 88.60% | 91.98% | 98.95% | 94.09% |
| CSA | 99.80% | 98.39% | 96.57% | 97.98% |
| MS-CSA | 99.19% | 98.98% | 98.25% | 100% |
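The three accuracy tables report per-class accuracies only. The script below summarizes each model by the unweighted mean of its four per-class accuracies; this is not the overall accuracy, which would also require the number of validation samples per class, and the tables are taken in order of appearance because they carry no experiment labels.

```python
# Per-class accuracies (%) from the three accuracy tables, in order of appearance.
# Column order: Normal, Outer Ring Fracture, Inner Ring Fracture, Rolling Element Pitting Corrosion.
ACCURACY_TABLES = [
    {"CNN": [96.57, 91.43, 95.98, 96.25], "TCN": [98.65, 93.88, 97.63, 96.25],
     "CSA": [99.18, 82.28, 91.56, 89.21], "MS-CSA": [99.90, 99.78, 100.0, 100.0]},
    {"CNN": [55.45, 57.03, 92.41, 72.91], "TCN": [55.24, 49.44, 91.11, 74.48],
     "CSA": [98.57, 94.30, 88.75, 97.21], "MS-CSA": [99.39, 100.0, 99.53, 100.0]},
    {"CNN": [89.24, 93.45, 99.36, 99.57], "TCN": [88.60, 91.98, 98.95, 94.09],
     "CSA": [99.80, 98.39, 96.57, 97.98], "MS-CSA": [99.19, 98.98, 98.25, 100.0]},
]


def class_mean(table: dict) -> dict:
    """Unweighted mean of per-class accuracies for each model (not overall accuracy)."""
    return {model: sum(accs) / len(accs) for model, accs in table.items()}


for i, table in enumerate(ACCURACY_TABLES, start=1):
    means = class_mean(table)
    best = max(means, key=means.get)
    print(i, {m: round(v, 2) for m, v in means.items()}, "best:", best)
```

On this summary MS-CSA ranks first in all three tables, and the gap is widest in the second table, where the CNN and TCN means fall below 70%.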

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, C.; Liu, X.; Wang, H.; Peng, M. Fault Diagnosis Method for Centrifugal Pumps in Nuclear Power Plants Based on a Multi-Scale Convolutional Self-Attention Network. Sensors 2025, 25, 1589. https://doi.org/10.3390/s25051589