1. Introduction

In recent years, significant advancements in infrared imaging technology and manufacturing processes have led to its widespread application in thermal imaging, environmental monitoring, and military operations [1,2]. However, infrared detectors are susceptible to environmental temperature and voltage fluctuations, which cause variations in their response coefficients. These variations generate fixed-pattern streak noise that is difficult to eliminate through hardware solutions, thereby degrading image quality and adversely affecting subsequent image processing [3,4,5,6].

Researchers have developed non-uniformity correction methods to address these challenges, mitigate streak noise, and improve image quality. Non-uniformity correction methods can be broadly classified into reference-based [7] and scene-based approaches [8,9]. Reference-based correction methods use blackbody images to calculate correction coefficients [10,11,12,13,14,15,16]. While straightforward and easy to implement, these methods have notable limitations. They require periodic calibration, which is particularly inconvenient in dynamic environments where calibration coefficients can drift over time as operating conditions change [13]. Recent studies have attempted to address thermal drift via a semi-transparent shutter [17] or camera housing stabilization [18]. However, such hardware-based solutions remain challenging for real-time or large-scale deployments. Moreover, standard global calibrations may be inadequate in fine-detail scenarios: Pron and Bouache [19] show that localized or pixel-level calibration can outperform manufacturer-provided corrections, albeit with increased computational overhead. Furthermore, reference-based methods struggle with complex noise patterns, especially in variable environments, and do not provide effective real-time solutions.

Unlike reference-based methods, scene-based correction methods do not rely on external reference images; they include correction algorithms based on filtering, statistics, model optimization, and neural networks. Despite their promise, scene-based methods face several challenges. Filter-based methods effectively remove low-frequency noise but often cause significant detail loss, particularly in high-resolution images [20,21,22,23,24,25,26,27]. Optimization-based methods, which leverage techniques such as total variation regularization or low-rank matrix decomposition, can effectively separate noise but suffer from high computational complexity, making them less practical for real-time high-resolution image processing [28,29,30,31,32,33]. Neural network-based methods excel in noise suppression and image enhancement but require extensive training datasets and computational resources, limiting their applicability [34,35,36,37]. Statistical methods, such as histogram and moment matching, infer the noise distribution from grayscale statistics [38,39,40,41,42]. However, their performance diminishes when the noise deviates from the assumed distributions, and they often fail to preserve image details and structural features.

High-resolution infrared line-scanning images present unique challenges due to their directional and structured noise, which arises from the same detector element generating each row of pixels. To tackle these challenges, this paper introduces an innovative non-uniformity correction algorithm based on residual guidance and adaptive weighting. The key contributions of this work are as follows:

(1) Residual-guided filtering and dual-guidance mechanism: The proposed method combines residual and original images as guidance for detail preservation and global smoothing, respectively. Through weighted fusion, it addresses the edge-blurring issues inherent in traditional guided filtering, significantly enhancing detail preservation, particularly in high-resolution images.

(2) Iterative residual compensation scheme: A dynamic residual compensation mechanism is introduced that refines the correction results through Gaussian filtering with progressively adjusted smoothing, eliminating residual noise while avoiding artifacts caused by over-compensation. Compared with the static compensation schemes of traditional methods, dynamic compensation adaptively adjusts the compensation intensity according to the noise distribution, which significantly improves the robustness of the algorithm.

(3) Weighted linear regression based on local variance: Local variance is used to dynamically adjust the weight of each region, so that areas with different noise intensities receive differentiated correction, improving global correction accuracy. Unlike traditional methods that use uniform weights, this algorithm adapts to the local features of the image, which makes it especially suitable for infrared image processing in complex scenes.

(4) Efficient adaptation to complex scenes: A local image interception strategy based on scene complexity analysis is proposed to optimize computational efficiency, enabling the algorithm to meet real-time processing requirements for high-resolution images. Compared with correction methods based on region segmentation, this algorithm characterizes the noise distribution of the whole image more accurately without introducing apparent deviations.
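To make the idea behind contribution (3) concrete, the following minimal NumPy sketch fits a per-row gain and bias by weighted least squares, with weights derived from local variance. It is not the authors' implementation and rests on stated assumptions: the stripe-free reference is simply a vertically box-smoothed copy of the noisy image (the paper instead derives it from residual-guided filtering), the weights follow the hypothetical rule $w = 1/(\text{local variance} + \varepsilon)$ so that flat regions dominate the fit, and the function names are illustrative only.

```python
import numpy as np


def vertical_box_mean(img, radius):
    """Mean over a (2*radius+1) x 1 window of rows, with edge padding."""
    padded = np.pad(img, ((radius, radius), (0, 0)), mode="edge")
    csum = np.vstack([np.zeros((1, img.shape[1])), np.cumsum(padded, axis=0)])
    k = 2 * radius + 1
    return (csum[k:] - csum[:-k]) / k


def variance_weighted_row_correction(noisy, radius=7, eps=1e-3):
    """Hypothetical sketch: per-row weighted least squares of noisy ~ gain * ref + bias."""
    noisy = np.asarray(noisy, dtype=float)
    # Assumed stripe-free reference: a vertically smoothed copy of the input.
    reference = vertical_box_mean(noisy, radius)
    # Local variance in the same vertical window; edges and texture raise it.
    local_var = np.maximum(vertical_box_mean(noisy ** 2, radius) - reference ** 2, 0.0)
    weights = 1.0 / (local_var + eps)  # low variance (flat regions) -> high weight

    w_sum = weights.sum(axis=1)
    mean_ref = (weights * reference).sum(axis=1) / w_sum
    mean_obs = (weights * noisy).sum(axis=1) / w_sum
    d_ref = reference - mean_ref[:, None]
    d_obs = noisy - mean_obs[:, None]
    # Weighted slope and intercept per row (assumes each row has intensity variation).
    gain = (weights * d_ref * d_obs).sum(axis=1) / (weights * d_ref ** 2).sum(axis=1)
    bias = mean_obs - gain * mean_ref
    corrected = (noisy - bias[:, None]) / gain[:, None]
    return corrected, gain, bias
```

With such a naive reference, an exact per-row affine fit simply returns the smoothed reference, so the sketch only shows how variance-based weights enter a per-row gain/bias estimate; the quality of the reference is what the paper's residual-guided filtering is meant to improve.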

Experimental results show that the proposed algorithm achieves excellent denoising and detail preservation under varying noise intensities and complex scenes. Compared with mainstream methods such as those of Cao [43], Li [23], and Ahmed [24], the algorithm significantly improves the PSNR and SSIM metrics and demonstrates robust performance on high-resolution infrared line-scanning images. By addressing the limited detail preservation and high computational cost of existing methods, this algorithm provides an efficient and practical solution for non-uniformity correction in complex scenes within infrared line-scanning systems.

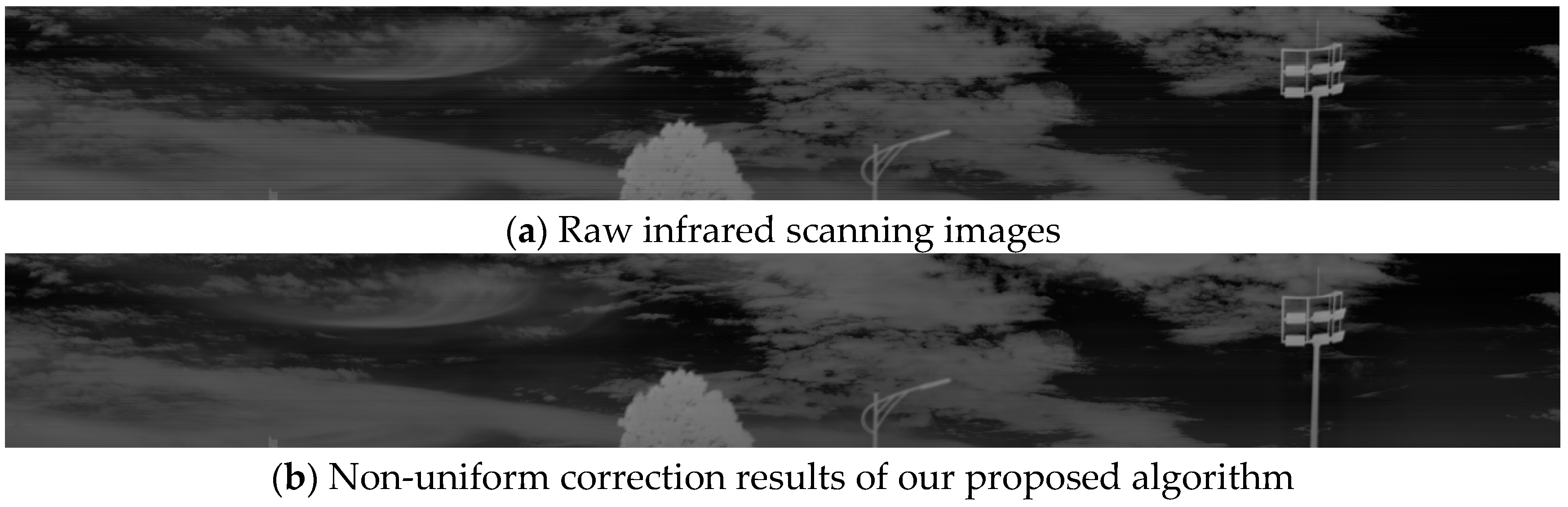

3. Results

Our algorithm was compared with several state-of-the-art methods for infrared detectors to evaluate the effectiveness of the proposed non-uniformity correction algorithm. The compared algorithms included the one-dimensional guided filtering algorithm for low-texture infrared images (1DGF) proposed by Cao in 2016 [22], the multi-stage wavelet transform and guided filtering-based denoising algorithm (MSGF) proposed by Cao in 2018 [43], the improved mixed-noise removal method based on non-local means (CNLM) proposed by Li in 2019 [39], the non-uniformity correction method combining one-dimensional guided filtering and linear fitting (GFLF) proposed by Li in 2023 [23], and the stripe noise removal algorithm for one-dimensional signals based on 2D-to-1D image conversion (ENSI) proposed in 2023 [24]. The dataset used in this study comprises six parts: the FLIRADAS dataset [45], the MassMIND dataset [46], Tendero’s dataset [38], the KAIST dataset [47], the LLVIP dataset [48], and real long-wave infrared weekly swept line-scanning images. Experiments were conducted on a system with a 12th-generation Intel® Core™ i7-12700H CPU @ 3.61 GHz, 32 GB of RAM, and a 64-bit Windows operating system. The algorithms were implemented in MATLAB 2024a.

3.1. Noise Modeling Analysis

Unlike array detectors, in which each pixel operates independently, infrared line-scanning detectors use the same detector element to scan each row, so every pixel in a given row is generated by the same element. This imaging mechanism introduces distinctive noise characteristics, chiefly horizontal streak artifacts. The primary sources of non-uniformity are variations in pixel response and random noise during signal readout.

A noise model comprising gain, bias, and random noise is typically constructed to model non-uniformity and evaluate the effectiveness of the correction algorithm. Changes in gain and bias are modeled as Gaussian random variables: the gain $g_i$ at row $i$ follows a Gaussian distribution with a mean of 1 and variance $\sigma_g^2$, and the bias $b_i$ follows a Gaussian distribution with a mean of 0 and variance $\sigma_b^2$. In addition, random white noise $n_{i,j}$ is modeled as a Gaussian distribution with a mean of 0 and variance $\sigma_n^2$. Combining these factors, the simulated image with noise can be represented as follows:

$$
Y_{i,j} = g_i \, X_{i,j} + b_i + n_{i,j} \qquad (21)
$$

where $X_{i,j}$ denotes the ideal image without noise and $n_{i,j}$ represents the random white noise affecting each pixel.
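The noise model above, with one gain and one bias per row plus per-pixel white noise, can be simulated in a few lines of NumPy. This is a sketch under stated assumptions: intensities are normalized to [0, 1] so that variances such as 0.02 are meaningful, and `add_stripe_noise` is a hypothetical helper name rather than part of the authors' code.

```python
import numpy as np


def add_stripe_noise(clean, gain_var=0.02, bias_var=0.02, white_var=0.0, seed=None):
    """Simulate row-wise stripe noise: noisy = gain[row] * clean + bias[row] + white noise.

    Assumes `clean` is a 2-D array with intensities normalized to [0, 1].
    """
    rng = np.random.default_rng(seed)
    clean = np.asarray(clean, dtype=float)
    rows, cols = clean.shape
    gain = rng.normal(1.0, np.sqrt(gain_var), size=(rows, 1))       # one gain per row (mean 1)
    bias = rng.normal(0.0, np.sqrt(bias_var), size=(rows, 1))       # one bias per row (mean 0)
    white = rng.normal(0.0, np.sqrt(white_var), size=(rows, cols))  # per-pixel white noise
    return gain * clean + bias + white
```

For example, gain, bias, and white-noise variances of 0.02, 0.02, and 0.04 correspond to the Case 5 setting described in Section 3.4.1; with all variances set to zero, the function returns the clean image unchanged.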

3.2. Effect of Image Size on Non-Uniformity Correction

This experiment analyzes how the number of intercepted columns affects non-uniformity correction and provides guidance for selecting an optimal column count.

The experimental data consist of 40 frames of noise-free high-resolution infrared images with an original image size of 1024 × 55,000. Intercepted images of size 1024 × 8192 were used for evaluation. Streak noise was added, with the gain coefficient following a Gaussian distribution (mean = 1, variance = 0.02) and the bias coefficient following a Gaussian distribution (mean = 0, variance = 0.02). No additional Gaussian white noise was introduced.

The number of intercepted columns was increased incrementally from 400 to 3200; the correction parameters were calculated from the intercepted columns and applied to the full image. The correction effect was evaluated using the mean PSNR, as shown in Table 1.

As shown in Table 1, the PSNR improves as the number of intercepted columns increases. Beyond 1600 columns, the PSNR stabilizes, indicating that the correction parameters effectively capture the noise characteristics of the entire image. Increasing the number of columns beyond 1600 yields little further improvement (less than 0.1 dB in PSNR) while increasing computational overhead. Thus, selecting around 1600 columns balances correction accuracy and computational efficiency in practical applications.
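The general idea of estimating correction parameters on a column subset and applying them to the full image can be sketched as follows. As an explicitly swapped-in technique, this sketch uses simple per-row moment matching (one of the statistical approaches mentioned in the Introduction) rather than the paper's residual-guided weighted regression; it assumes that, over a long scan, every row sees statistically similar scene content. Both function names are hypothetical.

```python
import numpy as np


def fit_row_moments(noisy, n_cols):
    """Estimate a per-row scale and offset from the first `n_cols` columns only."""
    sub = np.asarray(noisy, dtype=float)[:, :n_cols]
    row_mean = sub.mean(axis=1)
    row_std = sub.std(axis=1)
    scale = row_std.mean() / row_std             # equalize each row's spread
    offset = row_mean.mean() - scale * row_mean  # then equalize each row's level
    return scale, offset


def apply_row_correction(noisy, scale, offset):
    """Apply the per-row affine correction to every column of the full image."""
    return scale[:, None] * np.asarray(noisy, dtype=float) + offset[:, None]
```

Because the per-row statistics come from the intercepted columns only, their sampling error shrinks as more columns are used, which is consistent in spirit with the saturation trend in Table 1, although the numbers would differ for the paper's method.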

3.3. Effect of Image Scene Complexity on Non-Uniformity Correction

To study the impact of scene complexity on correction performance, this experiment simulates infrared line-scanning images by adding Gaussian white noise with varying variances. Simulated images of size 1024 × 8192 were used, with 1600 columns intercepted for correction parameter calculation. The gain and bias follow Gaussian distributions with means of 1 and 0, respectively, and a variance of 0.02. The variance of the Gaussian white noise was gradually increased from 0.02 to 0.2 to simulate scenes of varying complexity. The experiment was conducted on 40 frames of noise-free, 14-bit infrared line-scanning images. An example of the noisy image after adding Gaussian noise is shown in Figure 6, and the PSNR values of the corrected images under different noise variances are presented in Table 2.

As Table 2 shows, the PSNR increases with the noise variance up to a peak at a variance of 0.1. This indicates that the algorithm effectively separates streak noise from the background while maintaining image structure at moderate noise levels. When the noise variance exceeds 0.1, however, the PSNR declines because the stronger noise destroys local structural information, making it difficult for the algorithm to fully recover the original image details.

These results demonstrate that the algorithm is robust across varying scene complexities and can handle non-uniformity correction in complex scenarios, making it suitable for infrared line-scanning detectors under diverse conditions.

3.4. Quantitative Analysis of Algorithmic Non-Uniformity Correction

The proposed algorithm is compared experimentally with state-of-the-art non-uniformity correction methods, including MSGF, 1DGF, GFLF, ENSI, and CNLM. To evaluate performance comprehensively, reference-based metrics such as PSNR, SSIM, and roughness are used for the simulated images, whereas no-reference metrics such as ICV and GC assess the correction effect on real images.

3.4.1. Experimental Datasets

This study uses two types of experimental datasets: simulated and real.

Simulated datasets were created to compare streak noise removal models under controlled conditions, with the FLIRADAS and MassMIND datasets serving as reference datasets. FLIRADAS is a thermal imaging dataset captured with a vehicle-mounted RGB thermal camera, containing 4224 infrared images at a resolution of 480 × 640. MassMIND is a long-wave infrared maritime dataset comprising 2916 images with a resolution of 512 × 640. From these datasets, 100 images were randomly selected, and five types of streak noise were added using Equation (21):

Case 1: The gain and bias follow Gaussian distributions (means of 1 and 0, respectively; variance = 0.02). No Gaussian white noise is added, simulating weak streak noise to test the algorithm’s correction ability under mild conditions.

Case 2: The gain and bias follow Gaussian distributions (means of 1 and 0, respectively; variance = 0.05), simulating moderate streak noise to evaluate correction under intermediate conditions.

Case 3: The gain and bias follow Gaussian distributions (means of 1 and 0, respectively; variance = 0.08). This case simulates strong streak noise, testing the algorithm’s performance under extreme conditions.

Case 4: The gain varies linearly and periodically in the horizontal direction, simulating complex, non-uniform streak noise.

Case 5: The gain and bias follow Gaussian distributions (means of 1 and 0, respectively; variance = 0.02), with added Gaussian white noise (variance = 0.04) to simulate environmental interference and test robustness.

Two real datasets containing streak noise were also used for comparison: Tendero’s dataset, which consists of 20 infrared images of varying resolutions, and the long-wave infrared weekly scanning dataset, which contains 40 images with a resolution of 1024 × 55,000. For the experiments, 1024 × 8192 sub-images were intercepted from the long-wave dataset.

3.4.2. Parameter Setting

All algorithms were run on the same set of infrared images to ensure a fair comparison under consistent pre-processing steps. Parameters were drawn from each method’s original reference whenever possible, with minor modifications to accommodate the resolutions and noise characteristics of this study. Specifically, for our proposed method, we adopted a [15 × 1] window in the guided filtering stage to capture horizontal streak noise effectively, with a smoothing parameter of 0.16 balancing noise suppression and detail retention. The iterative compensation was capped at five cycles after pilot tests showed negligible PSNR/SSIM improvements beyond five iterations, and an initial compensation coefficient of 0.05 was chosen to avoid over-smoothing. In contrast, MSGF [43] employed wavelet decomposition (sym8, three levels), followed by a guided filter of size [5 × 5] and a corresponding smoothing parameter. 1DGF [22] used a one-dimensional guided filter with a [1 × 100] window and a corresponding smoothing parameter, reflecting its emphasis on row-wise filtering in low-texture infrared settings. For GFLF [23], we set an [8 × 1] horizontal filtering window and a [1 × 100] vertical filtering window, applying smoothing parameters of 0.04 horizontally and 0.16 vertically to address both row- and column-wise artifacts. ENSI [24] employed a Gaussian filter window of [1 × 5] after converting the image from 2D to 1D, while CNLM [39] used a [7 × 7] search window for identifying similar patches and a similarity threshold of $h^2 = 0.1$, balancing fine detail preservation with effective noise removal. Notably, for the large-scale long-wave infrared weekly scanning dataset, our algorithm and GFLF extracted 1600 columns to capture the global noise distribution efficiently. All other parameters were consistent with their respective references, ensuring that each algorithm operated near its recommended conditions for a fair and transparent performance evaluation.
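For reference, a conventional single-guide guided filter (He et al.) restricted to a vertical [15 × 1] window, i.e., a radius of 7 rows, can be written with cumulative sums as below. This is only a baseline sketch: it assumes the smoothing parameter plays the role of the regularization term ε added to the local variance, assumes intensities normalized to [0, 1], and does not reproduce the paper's dual-guidance fusion of residual and original images. The function names are illustrative.

```python
import numpy as np


def vertical_box_mean(img, radius):
    """Mean over a (2*radius+1) x 1 window of rows, with edge padding."""
    padded = np.pad(img, ((radius, radius), (0, 0)), mode="edge")
    csum = np.vstack([np.zeros((1, img.shape[1])), np.cumsum(padded, axis=0)])
    k = 2 * radius + 1
    return (csum[k:] - csum[:-k]) / k


def guided_filter_vertical(guide, src, radius=7, eps=0.16):
    """Single-guide guided filter (He et al.) with a vertical (2*radius+1) x 1 window."""
    guide = np.asarray(guide, dtype=float)
    src = np.asarray(src, dtype=float)
    mean_i = vertical_box_mean(guide, radius)
    mean_p = vertical_box_mean(src, radius)
    cov_ip = vertical_box_mean(guide * src, radius) - mean_i * mean_p
    var_i = vertical_box_mean(guide * guide, radius) - mean_i ** 2
    a = cov_ip / (var_i + eps)  # local linear coefficient
    b = mean_p - a * mean_i     # local offset
    # Average the coefficients of all windows covering each pixel, then apply them.
    return vertical_box_mean(a, radius) * guide + vertical_box_mean(b, radius)
```

Because the window spans rows rather than columns, row-to-row offsets such as horizontal stripes are averaged away, while the local linear model lets the output follow strong intensity changes in the guide; a larger ε pushes the output toward a plain vertical box mean.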

3.4.3. Parameter Sensitivity Analysis

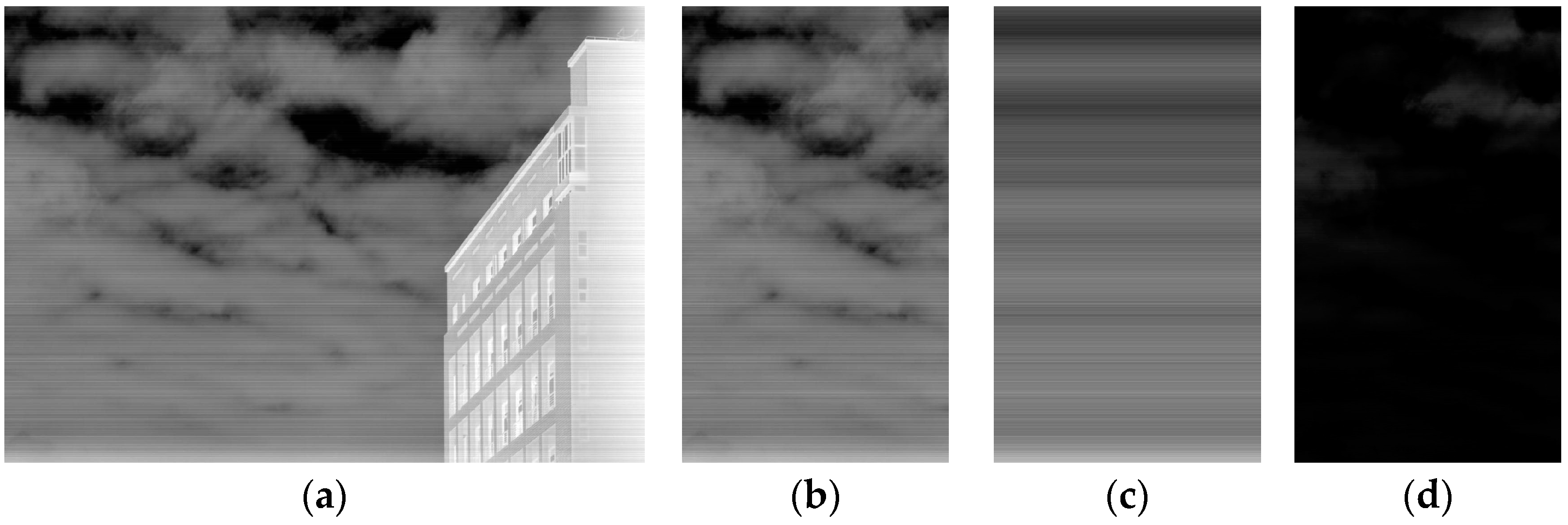

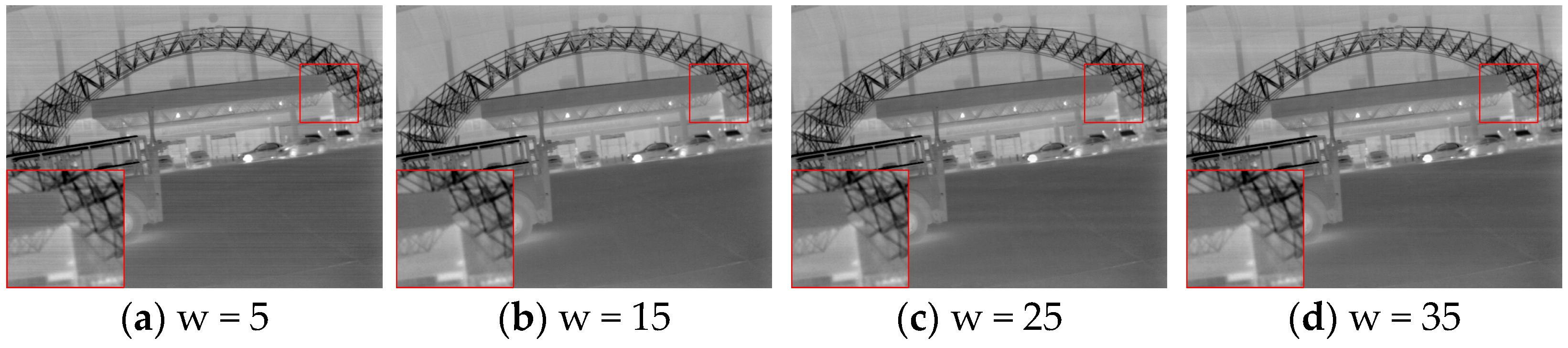

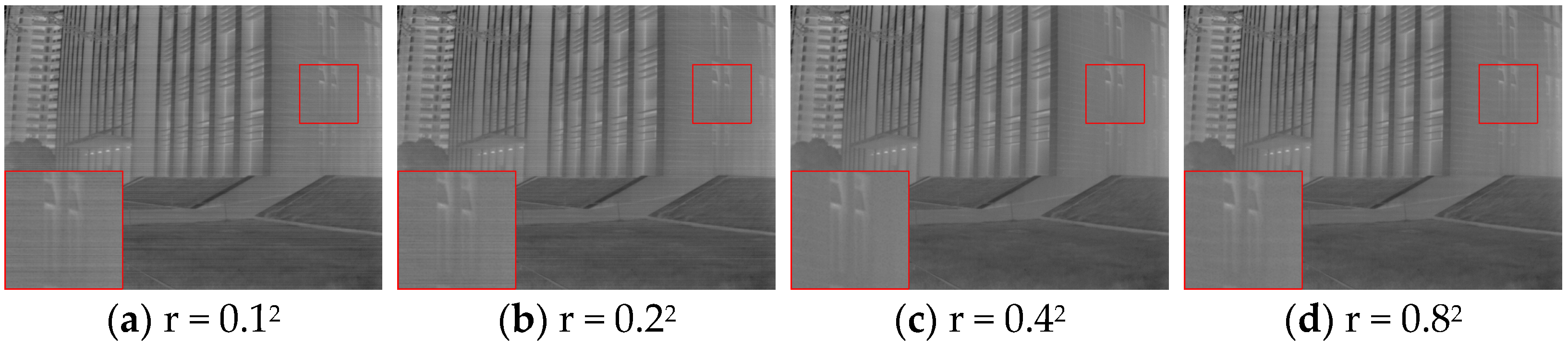

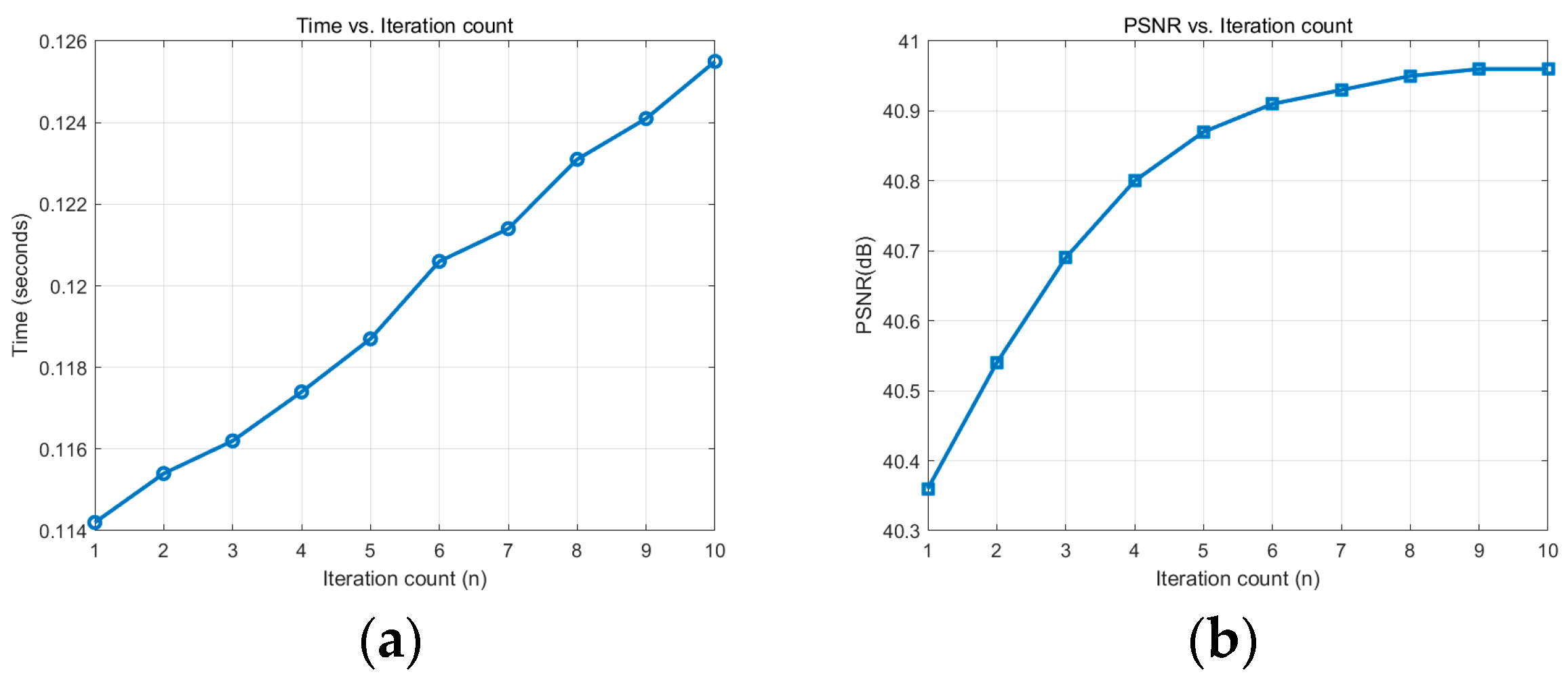

To justify the choice of the algorithm’s parameters, we performed a sensitivity study on four key hyperparameters: (1) the guided filter window size, (2) the smoothing parameter, (3) the maximum number of iterations, and (4) the initial compensation coefficient. We selected 30 representative images from the FLIRADAS dataset (Case 1 noise scenario) and systematically varied each parameter while keeping the others fixed at their default values.

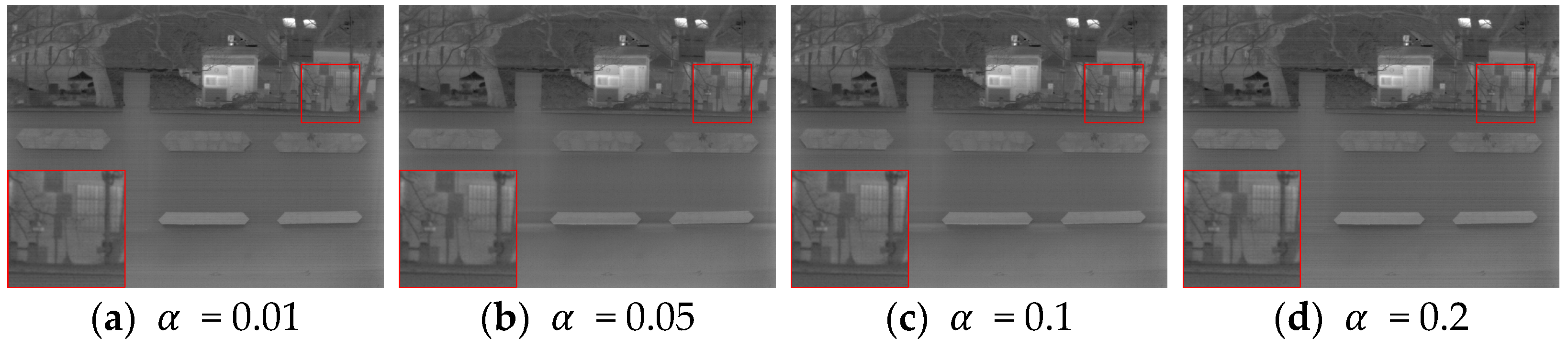

The zoomed-in area in Figure 7 shows that a small window [5 × 1] leaves noticeable streaks, whereas a large window [35 × 1] blurs edges. A medium-sized window [15 × 1] balances detail preservation and denoising.

From the enlarged area of Figure 8, when $r = 0.1^2$ or $r = 0.2^2$, the image is relatively smooth and the details remain relatively clear, but more noise is left. When $r = 0.4^2$ or $r = 0.8^2$, the noise removal effect is noticeable, but excessive smoothing causes a loss of image details; in particular, at $r = 0.8^2$ the texture of the building becomes blurred.

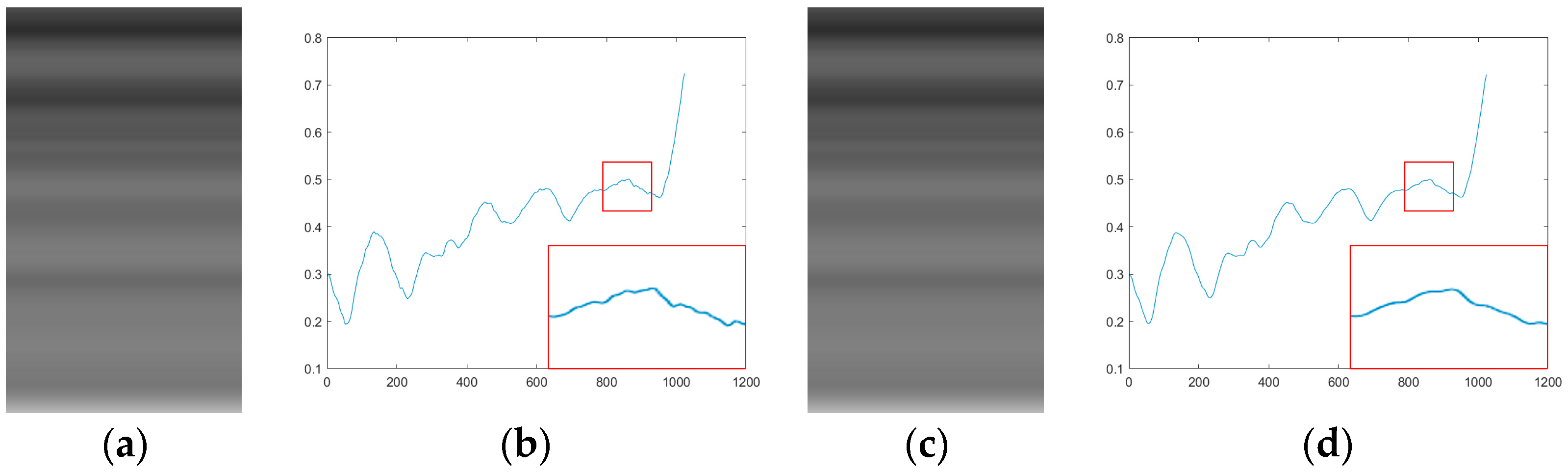

According to Figure 9, the PSNR increases with the number of iterations n, but with diminishing gains, while the running time increases linearly. Considering both performance and computing time, n = 5 to n = 7 is a suitable range, because the PSNR improvement has stabilized there and the running time remains reasonable.

As can be seen from Figure 10, when the initial compensation coefficient is 0.2, the correction overshoots, and when it is small, more iterations are required to achieve the same effect. Values between 0.05 and 0.1 offer a good balance between convergence speed and correction quality.

Based on these observations, we suggest using a [15 × 1] guided filter window, a smoothing parameter of 0.16, up to five iterations, and an initial compensation coefficient of 0.05 as defaults. Nevertheless, users can fine-tune these parameters when confronted with significantly different imaging conditions or noise intensities.

3.4.4. Evaluation Indicators

Several evaluation metrics are employed to assess the performance of the algorithms:

PSNR evaluates the similarity between the corrected image and the original image. A higher PSNR indicates better correction and detail retention. The formula is as follows:

$$
\mathrm{PSNR} = 10 \log_{10}\left(\frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right)
$$

where $\mathrm{MAX}$ is the maximum possible pixel value of the image and $\mathrm{MSE}$ represents the mean squared error between the corrected and reference images.
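A direct NumPy transcription of the PSNR definition is shown below as a sketch; `max_value` is an assumed parameter that should be set to the data range, for example 1.0 for normalized images or 2**14 - 1 for raw 14-bit data.

```python
import numpy as np


def psnr(reference, corrected, max_value=1.0):
    """Peak signal-to-noise ratio in dB; `max_value` is the assumed data range."""
    ref = np.asarray(reference, dtype=float)
    out = np.asarray(corrected, dtype=float)
    mse = np.mean((ref - out) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)
```

For instance, a uniform error of 0.1 on normalized data gives an MSE of 0.01 and therefore a PSNR of 20 dB.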

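SSIM measures the similarity in brightness, contrast, and structure between the corrected and original images. The closer the SSIM value is to 1, the higher the perceived quality. The formula is as follows:

$$
\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}
$$

where $\mu_x$, $\mu_y$, $\sigma_x^2$, and $\sigma_y^2$ are the means and variances of $x$ and $y$, respectively; $\sigma_{xy}$ is the covariance between $x$ and $y$; and $C_1$ and $C_2$ are constants to avoid division by zero.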
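The SSIM expression can be evaluated directly on two whole images, as in the sketch below. Note the simplification: standard SSIM implementations (e.g., MATLAB's `ssim` or scikit-image's `structural_similarity`) average the formula over local Gaussian or sliding windows, whereas this sketch computes a single global value. The constants $C_1 = (0.01L)^2$ and $C_2 = (0.03L)^2$ follow the usual convention of Wang et al., with $L$ the data range.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM over whole images (a simplification of windowed SSIM)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den
```

Identical images give exactly 1, while an intensity-inverted copy yields a negative value because the covariance term changes sign.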

Roughness is used to measure the overall frequency characteristics of an image. The closer the roughness value of the corrected image is to that of the original image, the better the correction effect. The calculation formula is as follows:

$$
\rho(f) = \frac{\lVert h * f \rVert_1 + \lVert v * f \rVert_1}{\lVert f \rVert_1}
$$

where $\lVert \cdot \rVert_1$ denotes the L1 norm, $*$ denotes the convolution operation, $h$ is the horizontal gradient operator, $v$ is the vertical gradient operator, and $f$ is the image being evaluated (the corrected image or the input image).
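A minimal sketch of the roughness index follows, assuming the common choice $h = [1, -1]$ and $v = h^{T}$ and keeping only the "valid" part of each convolution, i.e., plain first differences, whose absolute values do not depend on the sign convention. Boundary handling may differ from the implementation used in the paper.

```python
import numpy as np


def roughness(img):
    """Roughness index with h = [1, -1] and v = h^T, using 'valid' first differences."""
    f = np.asarray(img, dtype=float)
    horizontal = np.abs(np.diff(f, axis=1)).sum()  # ||h * f||_1
    vertical = np.abs(np.diff(f, axis=0)).sum()    # ||v * f||_1
    return (horizontal + vertical) / np.abs(f).sum()
```

A constant image has zero roughness, whereas an image whose rows alternate between 1 and 2 (horizontal stripes) has a vertical-difference term of 12 over an L1 norm of 24 for a 4 × 4 image, giving 0.5.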

ICV assesses uniformity and contrast enhancement by measuring the ratio of the mean to the standard deviation of the pixel values in selected regions. A higher ICV indicates better uniformity. The formula is as follows:

$$
\mathrm{ICV} = \frac{\mu_R}{\sigma_R}
$$

where $\mu_R$ and $\sigma_R$ are the mean and standard deviation of the selected region.
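ICV (commonly expanded as the inverse coefficient of variation) reduces to a ratio of region statistics. In the sketch below the region is passed as a pair of slices, which is a hypothetical interface; choosing which homogeneous regions to evaluate is left to the evaluator, and the population standard deviation (NumPy's default `ddof=0`) is assumed.

```python
import numpy as np


def icv(img, rows, cols):
    """Ratio of mean to standard deviation over the region img[rows, cols]."""
    region = np.asarray(img, dtype=float)[rows, cols]
    return region.mean() / region.std()
```

For a region containing equal numbers of 1s and 3s, the mean is 2 and the standard deviation is 1, so the ICV is 2.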

GC reflects the retention of image details by comparing the gradients of the corrected and original images. A smaller GC value indicates higher detail preservation. The formula is as follows:

where $\nabla$ denotes the gradient calculation of the image, $f$ is the input image, and $\hat{f}$ is the output image.

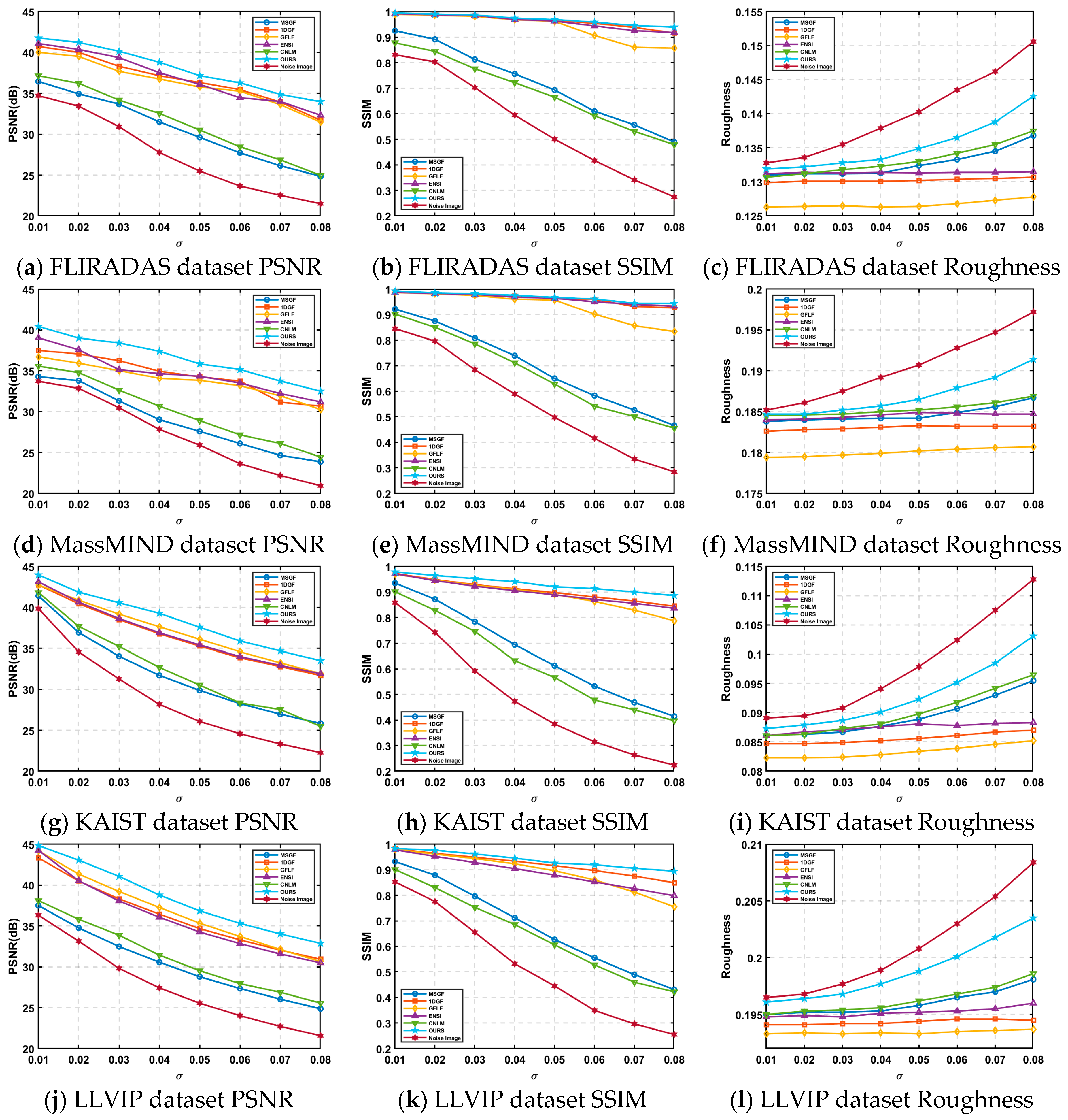

3.4.5. Quantitative Testing of Simulated Datasets

The performance of the proposed algorithm was evaluated on simulated datasets derived from FLIRADAS and MassMIND, incorporating five types of streak noise. The proposed method was compared against the MSGF, 1DGF, GFLF, ENSI, and CNLM algorithms in terms of PSNR, SSIM, and roughness. The results are summarized in Table 3. To provide a clearer comparison, the row-mean images of these algorithms are shown in Figure 11 and Figure 12, with each case displayed separately.

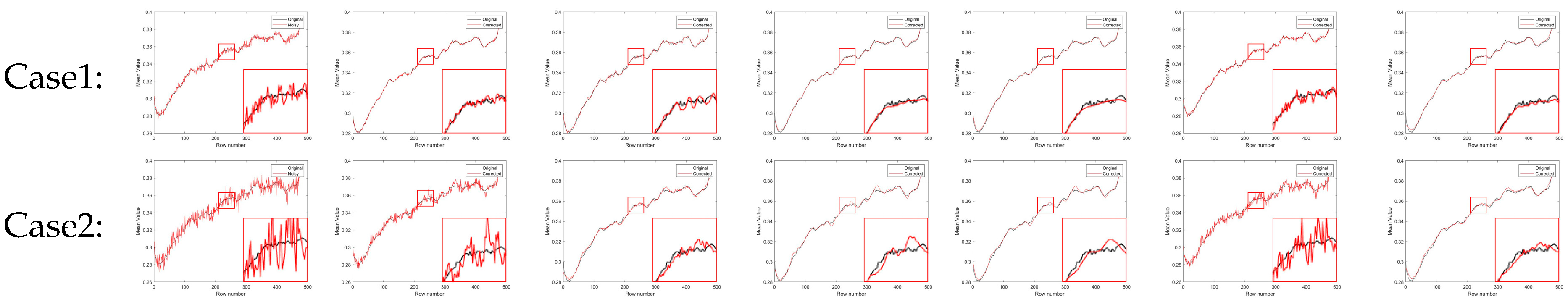

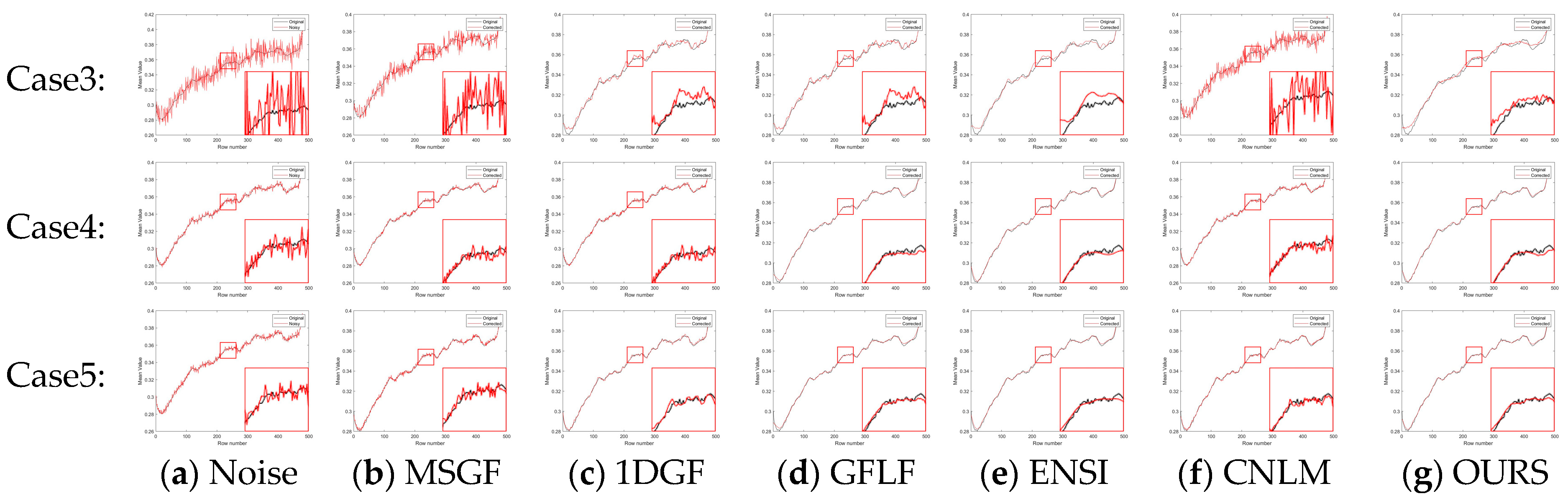

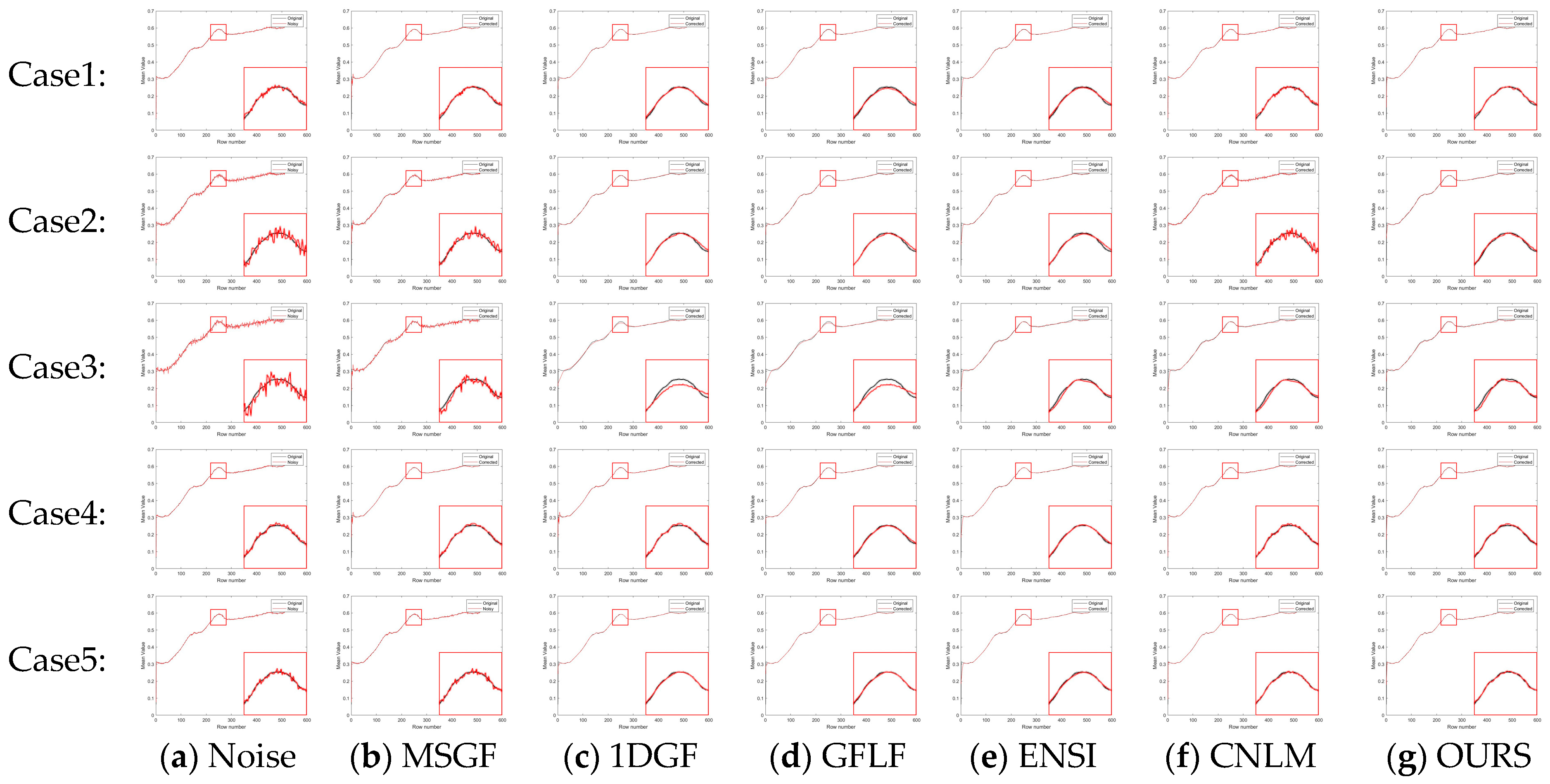

In Figure 11a–g and Figure 12a–g, each row represents a specific noise level and each column corresponds to a different algorithm, showing the row-mean images of the algorithms under various noise conditions. These figures show that the proposed method outperforms the other methods, especially in suppressing noise without losing key image details, and performs best under all noise levels.

In contrast, existing methods exhibited clear limitations. MSGF and 1DGF could not handle complex noise patterns effectively, often losing high-frequency details or introducing ripple artifacts. GFLF balanced denoising and detail retention but produced artifacts due to its region division strategy. ENSI performed adequately for simple noise but failed at higher noise intensities, while CNLM handled small-scale noise but struggled to recover details in regions with larger-scale noise. The proposed method demonstrated superior adaptability and robustness in challenging scenarios such as Case 4 and Case 5, making it a reliable choice for complex noise conditions.

For further illustration, we zoom in on specific regions of the row-mean images obtained by the different algorithms. In addition, the overall comparison in Table 3 provides a quantitative analysis of the PSNR, SSIM, and roughness metrics, highlighting the superior performance of our method.

3.4.6. Quantitative Testing of Real Datasets

To further evaluate the effectiveness of the proposed algorithm, experiments were conducted on real datasets, including Tendero’s dataset and the long-wave infrared weekly scanning dataset. These datasets contain more complex noise characteristics and inhomogeneities than simulated datasets, providing a more realistic assessment of the algorithm’s performance in practical applications.

Table 4 summarizes the results for Tendero’s dataset, while Table 5 presents the results for the long-wave infrared weekly scanning dataset.

For Tendero’s dataset, the proposed algorithm achieves an ICV value of 2.3522, which is significantly higher than other algorithms, indicating superior contrast uniformity and effective suppression of streak noise. Additionally, the GC value of 0.0015, the lowest among all of the algorithms, reflects better retention of gradient details and avoidance of over-smoothing. By comparison, the MSGF and GFLF algorithms perform poorly in complex noise regions, losing details and resulting in lower ICV and higher GC values.

The proposed algorithm consistently outperforms alternatives in the long-wave infrared weekly scanning dataset. It achieves a PSNR of 43.21 dB and an SSIM of 0.9439, indicating enhanced denoising and structure preservation. While GFLF and 1DGF demonstrate reasonable performance, they suffer from detail loss and insufficient correction in high-noise regions, resulting in slightly lower PSNR and SSIM values. The algorithm’s ability to achieve an ICV value of 2.8053, the highest among all of the methods, further underscores its capability to provide uniform and high-quality correction for complex real-world infrared images.

3.4.7. Analysis of the Quantitative Results of the Simulated Dataset

Four simulated datasets were used to evaluate the non-uniformity correction capability of the proposed algorithm. The KAIST dataset contains 8995 images with a resolution of 512 × 640, covering rich urban dynamic environments in both day and night scenes, and complements the FLIRADAS dataset. The LLVIP dataset provides many low-light night scenes, consisting of 3463 images with a resolution of 1024 × 1280. One hundred images were randomly selected from each of the four simulated datasets, and noise was added to them using Equation (21) for further testing.

As evident from Figure 13, the proposed algorithm consistently achieves higher PSNR and SSIM values across all noise scenarios, particularly in high-noise conditions. This indicates a robust ability to suppress noise while preserving structural details and overall image quality. By leveraging residual-based compensation and weighted linear regression, the algorithm addresses the detail loss and uneven correction faced by traditional methods.

Overall, the algorithm demonstrates superior adaptability and effectiveness across both simulated and real datasets, establishing itself as a reliable solution for denoising high-resolution infrared images under complex noise conditions.

3.5. Qualitative Analysis of the Effect of Algorithmic Non-Uniformity Correction

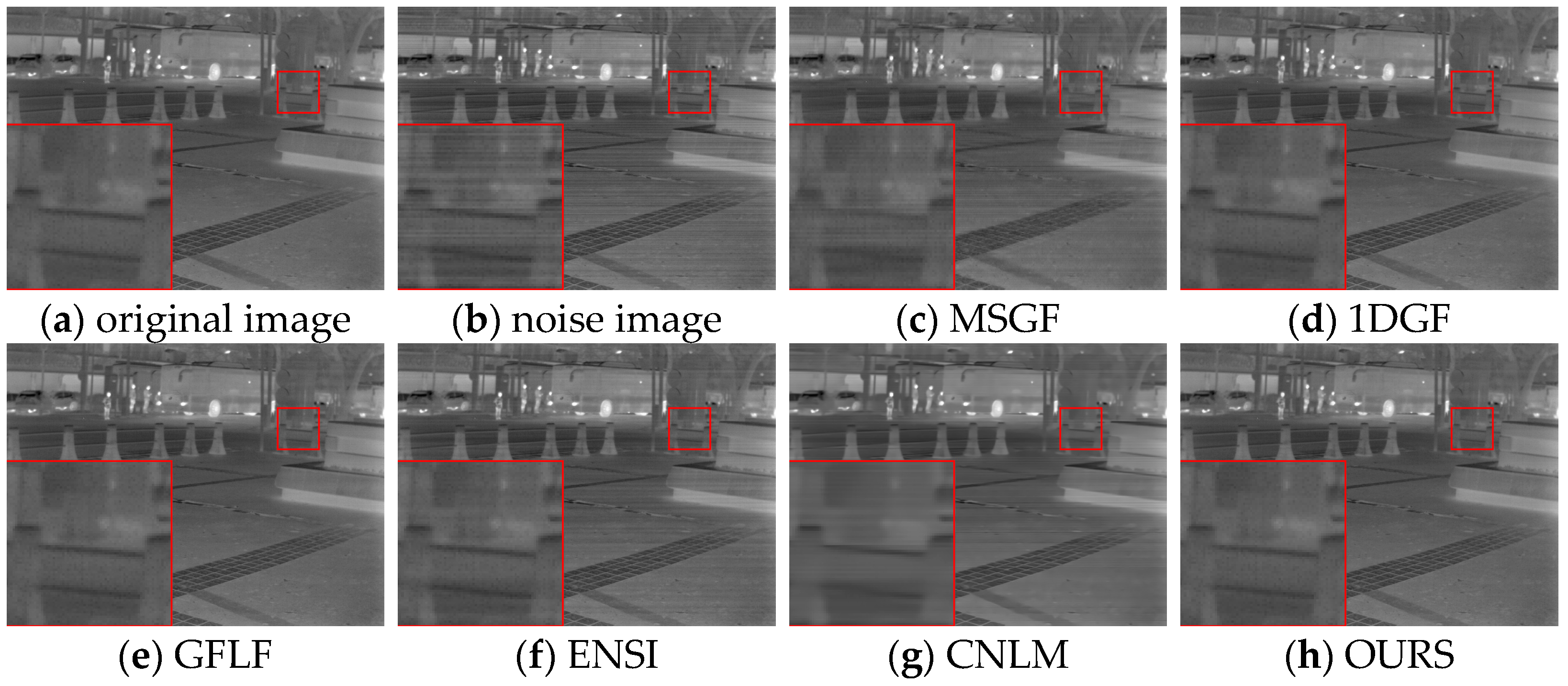

3.5.1. Qualitative Analysis of Simulated Data

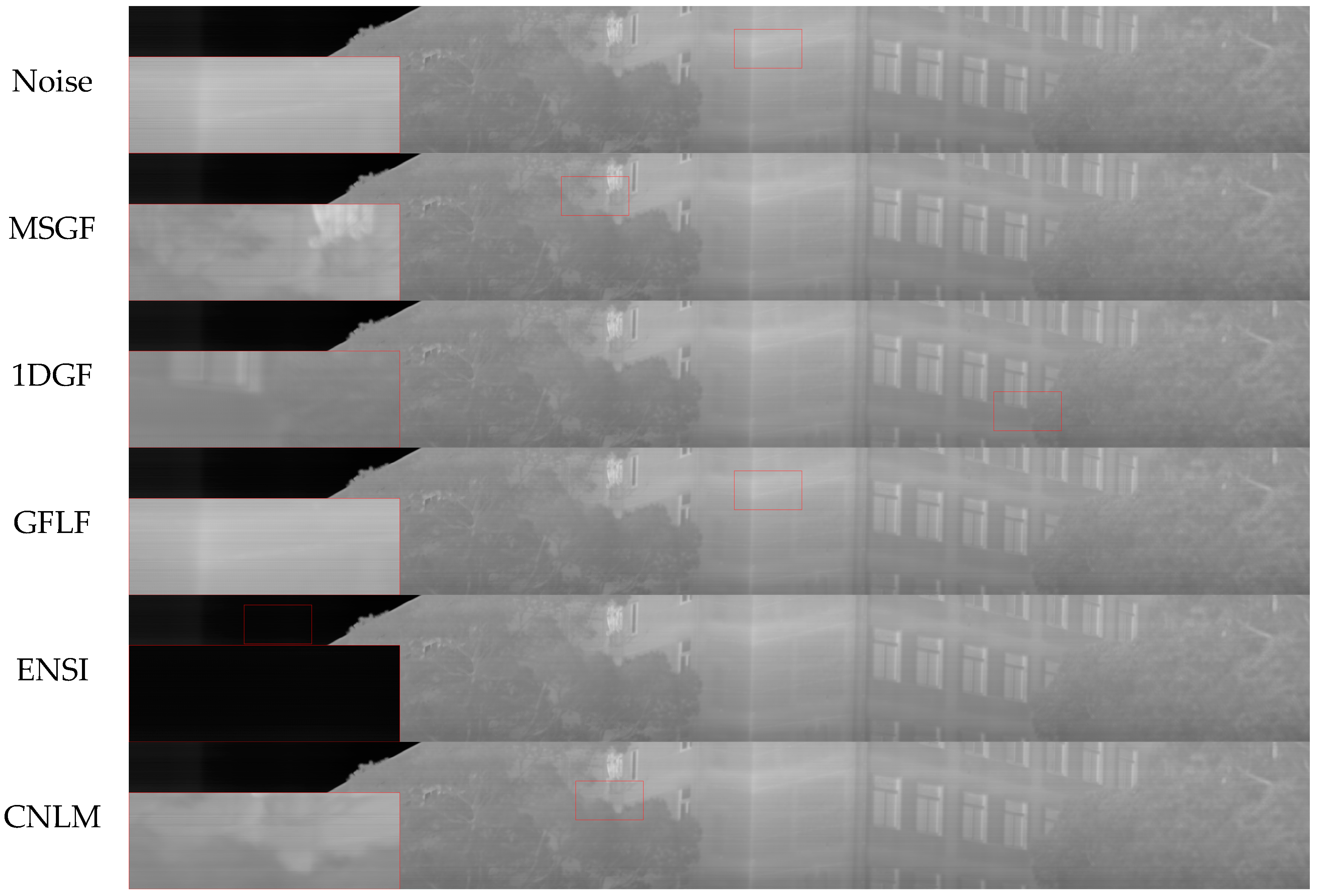

The denoising performance and detail preservation of the different algorithms were evaluated qualitatively under varying noise conditions. As shown in Figure 14, under weaker noise conditions, the MSGF algorithm leaves noticeable streak noise in certain regions and fails to preserve finer image details. The 1DGF algorithm effectively suppresses noise but introduces a degree of over-smoothing, particularly evident in the blurring of image edges and structural information. The GFLF algorithm provides better noise suppression but sacrifices some texture fidelity due to excessive smoothing in complex structural areas. The ENSI algorithm yields a smooth background but struggles with local uniformity and does not remove noise consistently. The CNLM method retains more texture details but leaves residual streak noise, reflecting an imbalance between noise suppression and detail retention. In comparison, the proposed algorithm achieves a better balance, effectively removing streak noise while maintaining texture integrity, particularly in structurally complex regions.

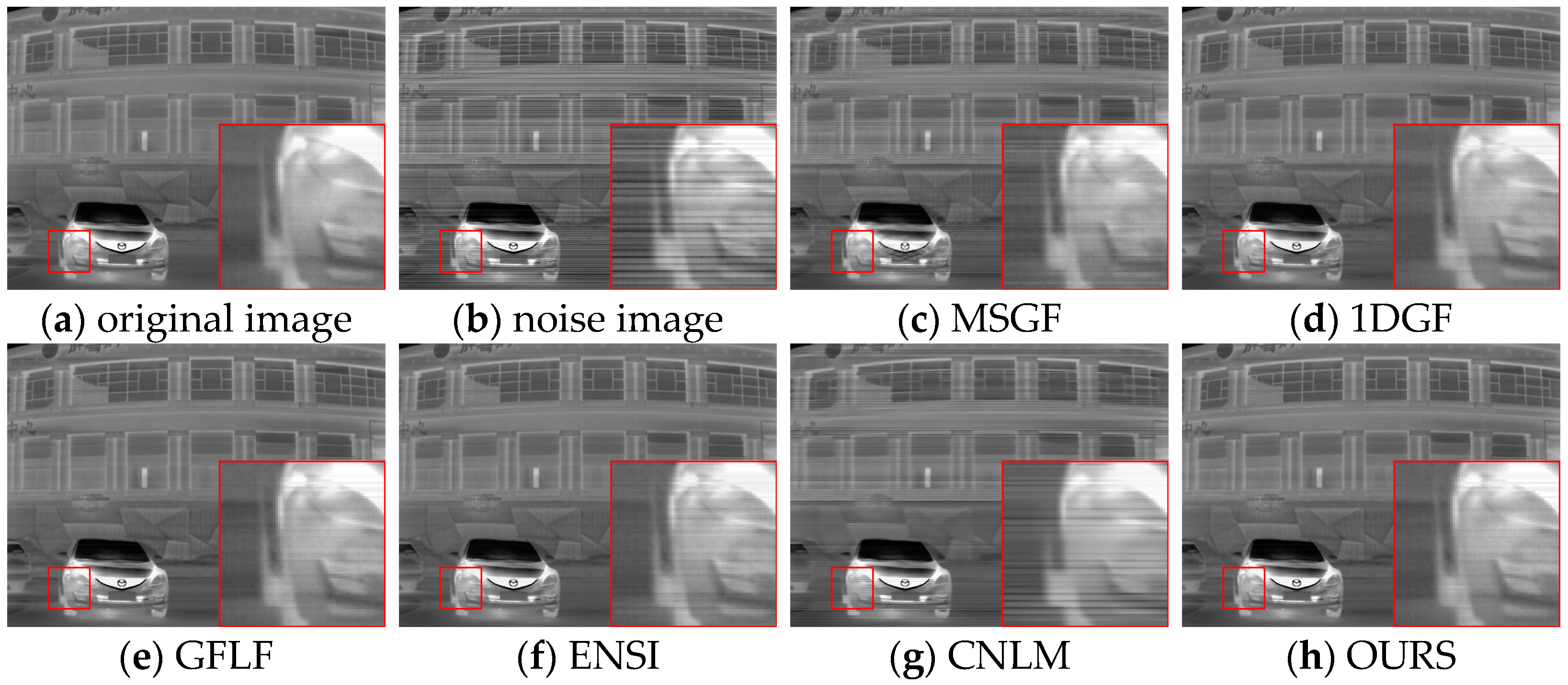

For the simulated FLIRADAS and MassMIND datasets, the proposed algorithm demonstrated robustness in addressing non-uniformity and preserving essential image features under various noise intensities. As depicted in Figure 15, our algorithm effectively removes horizontal stripe noise under moderate noise conditions while maintaining detailed texture information, such as structural contours and edge clarity. In contrast, the MSGF and CNLM algorithms leave significant noise artifacts, particularly in low-contrast areas, while the 1DGF algorithm suffers from over-smoothing. The red-boxed areas show that the GFLF and ENSI methods provide acceptable denoising performance but lose details in complex image regions.

Figure 16 illustrates the correction results for Case 3 noise intensity on the MassMIND dataset. Here, the GFLF algorithm exhibits a more apparent trade-off between stripe noise removal and texture preservation, with noticeable blurring in high-noise regions. The CNLM algorithm does not fully adapt to the complex noise distribution, leaving residual noise streaks. The proposed algorithm, by contrast, achieves a robust balance by leveraging local weight fusion and adaptive smoothing mechanisms, delivering visually consistent results with improved detail retention.

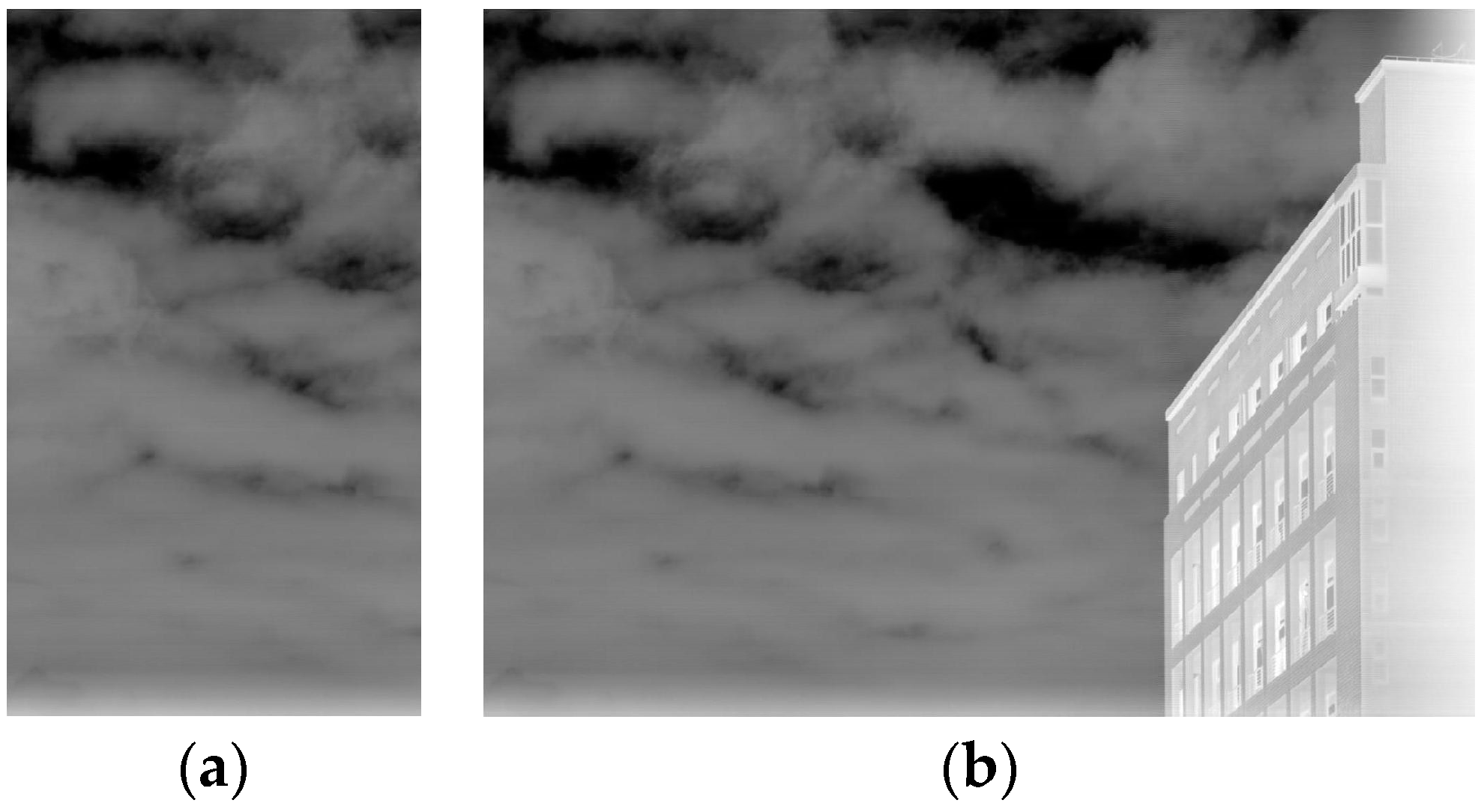

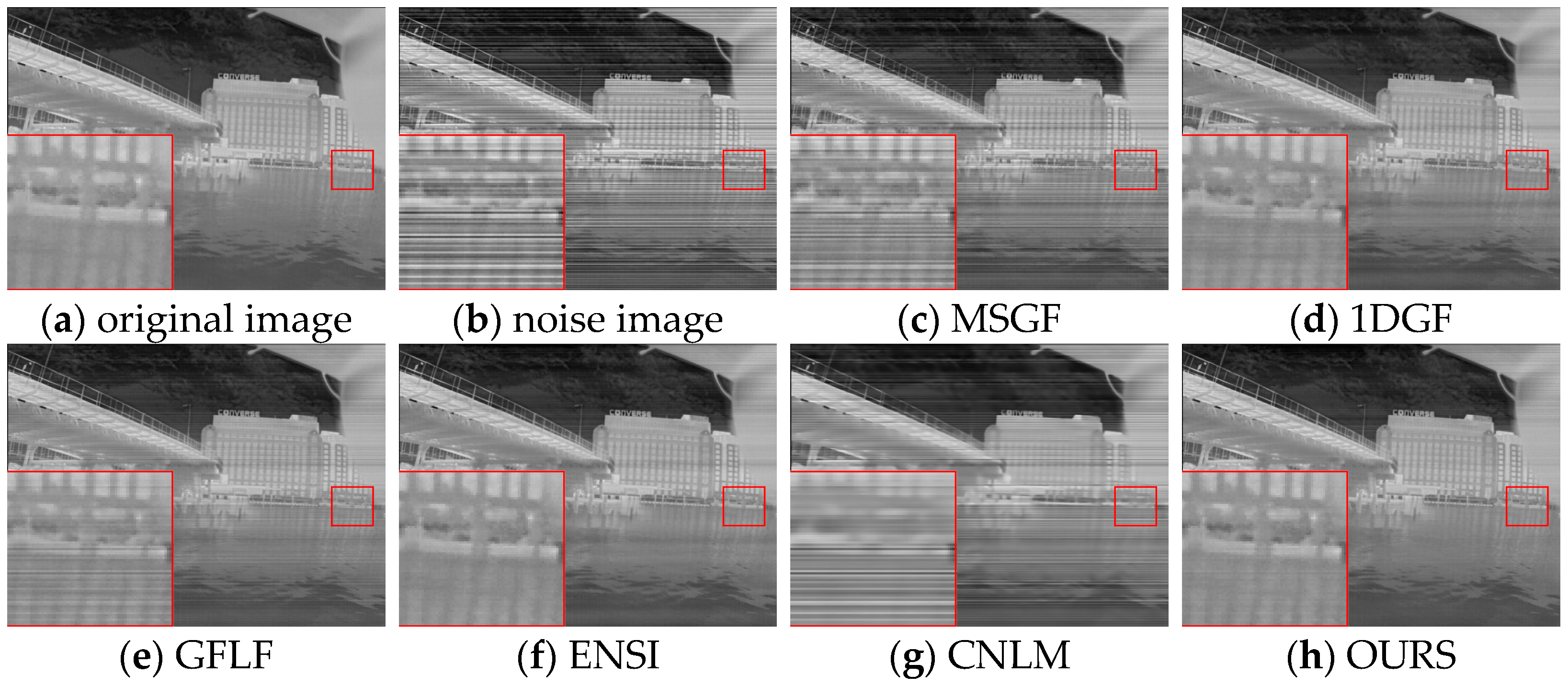

3.5.2. Qualitative Analysis of Real Data

As observed in Figure 17, the MSGF method shows poor denoising performance, with prominent horizontal stripe noise remaining due to the loss of structural information caused by the wavelet transform. The 1DGF algorithm exhibits blurred details in the tree region on the right side and slight brightness unevenness in the transition between the left and right areas. The GFLF method leaves horizontal texture residues on the building wall, where noise blends with structural details. ENSI displays noticeable horizontal noise in the sky region on the left, attributed to inadequate handling of low-frequency backgrounds during smoothing, resulting in residual noise and blurred tree texture. Similarly, the CNLM method leaves residual noise in the sky and flat building areas, with uneven brightness at the boundary between the sky and the trees. In contrast, the proposed algorithm effectively suppresses horizontal stripe noise while preserving image details, particularly along building edges and tree contours, smoothing the noise while retaining clear structural information without introducing significant blurring.

3.6. Algorithm Time Complexity Analysis

To analyze the time efficiency of our proposed algorithm, experiments were conducted on real high-resolution infrared line-scanning images with dimensions of 1024 × 55,000 pixels and 14-bit acquisition accuracy.

Table 6 compares the average runtime over 10 executions of our algorithm with those of WAGE [21], 1DGF [22], GFLF [23], ADOM [28], TV-STV [29], CNLM [39], and MSGF [43].

As shown in Table 6, our algorithm achieves a runtime of 1.6821 s, which is competitive with GFLF (1.5114 s) and significantly faster than methods such as ADOM and TV-STV, which require over 180 s and 887 s, respectively. The lightweight iterative compensation and efficient guided filtering contribute to this advantage. The 1DGF method also performs reasonably, with a runtime of 5.2821 s, but incurs computational overhead from row and column guided filtering. Other methods, such as MSGF and WAGE, rely on multiscale or high-dimensional processing, resulting in significantly longer runtimes.

To further evaluate the efficiency of our algorithm, Table 7 and Table 8 present detailed performance metrics for varying numbers of intercepted columns during the correction process. The runtime increases steadily with the number of columns yet remains below 2 s for up to 6000 columns. The PSNR and SSIM metrics remain consistent across different numbers of intercepted columns, with average values of 36.97 dB and 0.8685 at the final iteration.

Table 9 and Table 10 detail the performance for selected scenarios with 5000 and 6000 columns, where n represents the number of iterations. Our algorithm consistently achieves higher PSNR and SSIM than the GFLF algorithm while maintaining minimal computational overhead. For instance, with 6000 columns, our algorithm reports a PSNR of 36.84 dB and an SSIM of 0.8642, outperforming GFLF under the same conditions.

The proposed algorithm effectively balances computational efficiency and correction quality, demonstrating significant advantages in real-time processing scenarios, particularly for high-resolution infrared line-scanning images.

4. Discussion

This study introduces a non-uniformity correction method tailored for high-resolution infrared line-scanning images, leveraging residual guidance and adaptive weighting. The proposed framework addresses challenges such as directional stripe noise, loss of detail, and stringent real-time processing requirements. Our method demonstrates significant improvements in denoising performance while maintaining high levels of detail preservation, which is crucial for infrared imaging applications, especially those involving complex, non-uniform noise patterns.

The proposed algorithm effectively combines residual-guided filtering and adaptive weighting techniques. Using both the residual and the original images as guidance preserves fine details while smoothing noise in a controlled manner. This dual-guidance mechanism helps overcome the edge-blurring issues typically associated with traditional guided filtering methods. The adaptive compensation mechanism further enhances performance by dynamically adjusting the compensation strength, thus preventing over-correction and ensuring that critical image details are retained during noise suppression.

Moreover, using weighted linear regression based on local variance allows the algorithm to adapt to varying noise intensities across different image regions. This localized correction ensures a more accurate global correction, especially in complex and noisy regions. In contrast, conventional methods that rely on uniform correction parameters often struggle with maintaining detail in areas with varying noise levels.

Extensive experimental results, both quantitative and qualitative, confirm that our algorithm outperforms existing methods in terms of key performance metrics, such as PSNR, SSIM, and roughness. Our method achieves superior noise suppression and preserves the image’s structural integrity across multiple datasets, including simulated and real infrared images. In particular, the algorithm excels in scenarios with higher noise intensities and more complex noise patterns, demonstrating its robustness and adaptability to challenging conditions.

One key advantage of our approach is its ability to meet real-time processing requirements without compromising correction quality. The proposed algorithm achieves efficient correction with minimal computational overhead, making it suitable for real-time infrared imaging applications. This efficiency, combined with high-quality denoising performance, allows the algorithm to be used effectively in dynamic environments where high resolution and fast processing are essential.

Despite the strengths of our method, some areas could benefit from further improvement. The current method assumes a Gaussian noise model, which may not fully capture the complexities of real-world noise, particularly in more dynamic environments. Future work could incorporate more advanced noise models to handle a broader range of noise types. Additionally, using parallel computing techniques or hardware accelerators, such as GPUs or FPGAs, could further enhance the real-time processing capabilities of the algorithm, allowing it to handle even larger datasets more efficiently.

In conclusion, the proposed algorithm offers a robust and efficient solution for non-uniformity correction in high-resolution infrared line-scanning images. It significantly improves on existing methods by suppressing directional stripe noise while maintaining fine detail. Its adaptability to complex noise conditions and its real-time performance make it a promising tool for various infrared imaging applications, including environmental monitoring, military surveillance, and thermal imaging.