Abstract

With the widespread adoption of 3D scanning technology, depth view-driven 3D reconstruction has become crucial for applications such as SLAM, virtual reality, and autonomous vehicles. However, due to self-occlusion and environmental occlusion, obtaining complete and error-free 3D shapes directly from 3D scans remains challenging, and previous reconstruction methods tend to lose details. To this end, we propose the Dynamic Quality Refinement Network (DQRNet) for reconstructing a complete and accurate 3D shape from a single depth view. DQRNet introduces a dynamic encoder–decoder and a detail quality refiner to generate high-resolution 3D shapes: the former uses a dynamic latent extractor to adaptively select important parts of an object, and the latter uses global and local point refiners to enhance the reconstruction quality. Experimental results on the ShapeNet dataset show that DQRNet focuses on capturing details at boundaries and key areas, thereby achieving better accuracy and robustness than state-of-the-art (SOTA) methods.

1. Introduction

Over the past few years, the growing use of 3D scanning technology has led to major advancements in 3D sensing applications, including SLAM, virtual reality, and autonomous vehicles [1,2,3,4,5,6]. Due to self-occlusion and environmental occlusion, obtaining perfect 3D shapes directly from a 3D scanner is difficult in the real world, and the captured 3D shapes are often incomplete, which degrades the performance of downstream applications. Therefore, 3D reconstruction based on depth images is becoming increasingly important [7]. Depending on the application, the main representations of 3D shapes include point clouds [8], meshes [9,10], and 3D voxels [11,12]. As a regular structure, voxels are a straightforward generalization of pixels to the 3D case and are easily fitted into deep networks, which are popular for generative 3D tasks and worthy of further exploration [13].

Conventional 3D reconstruction methods often use 3D retrieval-based techniques [14,15], Poisson surface reconstruction [16], and database fitting [17] to recover missing parts of objects and fill in existing holes. However, these methods generally yield sparse 3D shapes lacking in fine details, require a large number of images, and rely on handcrafted algorithms for feature extraction [18]. To tackle these problems, researchers have proposed deep learning models that mine valuable patterns from large amounts of data to fill in the missing parts [19,20,21,22,23,24]. These learning-based methods usually use an autoencoder and try to recover the complete shape by making the predicted shapes as close as possible to the ground truth shapes. However, due to background interference and the noise generated during data processing, the reconstruction quality is often poor. Meanwhile, the reconstruction results often lose details due to self-occlusion and significant shape variations among different categories of objects.

To address the above challenges, we propose the Dynamic Quality Refinement Network (DQRNet), which can reconstruct a fine-grained 3D shape with high resolution and detailed boundaries from a single depth view. DQRNet first converts a single depth view into a 2.5D voxel grid and feeds it into an encoder–decoder network to reconstruct a coarse 3D shape with the resolution of , where DQRNet introduces a dynamic latent extractor between the encoder and decoder to avoid interference from noisy features and enhance robustness. The coarse prediction is then fed to a detail quality refiner consisting of a global point refiner and a local point refiner, which guide the generation of reasonable 3D shapes by enhancing both global and local points. The global point refiner adopts a discriminator based on multi-head self-attention, which helps it to focus on different levels of global features, thereby improving details by fully capturing long-distance dependencies of objects. The local point refiner samples points with higher partial instability, extracts multilevel local and nonlocal features of these points, then utilizes a weighted fusion network to adaptively combine the pre- and post-predicted points to obtain boundary details of objects.

Our contributions are as follows:

- DQRNet introduces a dynamic encoder–decoder, which adopts a dynamic latent extractor to select the most valuable latent information. This can reduce noise and enhance robustness.

- DQRNet introduces a detail quality refiner to enhance the predictions, which consists of a global point refiner and a local point refiner. The global refiner employs a discriminator based on multi-head self-attention to update global information. The local refiner combines the pre- and post-judgments by weighted fusion, which helps to refine the details at the boundaries.

- DQRNet improves the average IoU by 2.09% and average CE by 2.88% on the ShapeNet dataset.

2. Related Work

Single RGB View for 3D Reconstruction. The challenge of creating three-dimensional representations from two-dimensional images is a fundamental issue with extensive applications in the field of computer vision. The most common way to tackle this involves presenting it as a predictive challenge in which a data-driven model is taught to generate a three-dimensional representation from two-dimensional image input. Many deep learning techniques have attempted this by predicting various types of 3D shapes, including meshes [25,26,27,28], point clouds [29,30,31], and neural implicit fields [32,33,34]. While these methods have had some success, they often have trouble creating detailed 3D models of complex objects because there is a great deal of uncertainty about what is in the parts of the object that are not visible in the 2D image. These regression-based methods are limited in that they cannot really deal with the uncertainty in single-view reconstruction. To mitigate this limitation, recent works [35,36] have explored global context modeling using transformers. While these methods improve reconstruction quality, they still lack fine-grained details due to the limited information available from a single view.

Single Depth View for 3D Reconstruction. As depth sensors have improved, depth images are also being used to reconstruct the structure of objects. Depth images provide explicit geometric information, reducing the ambiguity present in RGB-based methods. Several works [17,37,38,39,40] have developed methods that can predict 3D shapes with higher resolution. Zhao et al. [41] proposed 3D-RVP as an uncomplicated but effective approach for accurately reconstructing a complete 3D shape from a single depth view. Yang et al. [24] took a different approach by reconstructing shapes from one depth view using a framework which includes an adversarial component for enhancing the quality of the reconstruction. Aboukhadra et al. [42] introduced an approach for reconstructing the shapes of hands and objects. Liu et al. [13] introduced a spatial relationship preserving adversarial network for detailed 3D reconstruction from a single depth image, transitioning from a rough outline to fine details. Despite these advances, existing depth-based methods can still exhibit inaccuracies in structure and geometry. This is primarily because these techniques rely heavily on convolutional layers that are limited to capturing local information. To achieve a more accurate structure from an incomplete shape, it is crucial to consider the nonlocal relationships between different parts of the object. Unfortunately, this aspect has not been adequately addressed in previous research on 3D shape completion from a single depth view. Our work overcomes this limitation by introducing a dynamic encoder–decoder that adaptively selects important latent features, thereby reducing noise interference and improving reconstruction fidelity.

Deep Generative Models for 3D Reconstruction. Generative models have been widely explored for 3D shape synthesis, contributing to both shape reconstruction and detail refinement. In the last few years, there have been many studies on creating 3D shapes, and current 3D generative models are based on different structures. These include Generative Adversarial Networks (GANs) [43,44,45,46], Variational Autoencoders (VAEs) [19,47,48,49], normalizing flows [19,50], autoregressive models [51], and energy-based models [52]. GAN-based methods employ adversarial training to generate plausible 3D shapes. However, GAN-based models often suffer from mode collapse, leading to limited shape diversity. VAEs and normalizing flow models aim to learn structured latent spaces for shape generation, but often lack high-resolution details. Inspired by the success of 2D latent diffusion models [53], researchers have applied diffusion models to 3D shape generation [36,54,55]. These methods improve shape quality but require high computational resources. Following the performance of latent diffusion-based models [53] in producing 2D images, a number of studies [56,57,58] have utilized generative modeling in the latent space for 3D shapes. This approach aims to decrease computational demands and improve the quality of the generated 3D structures. Our DQRNet differs from these generative models by its incorporation of dynamic refinement reconstruction. Rather than learning a latent shape distribution from scratch, DQRNet enhances coarse reconstructions by means of a structured latent extractor and a multistage quality refinement mechanism. Recently, Neural Radiance Fields (NeRF) have become popular for 3D scene rendering and novel view synthesis. However, NeRF-based methods differ fundamentally from our DQRNet in both 3D representation and task objectives. NeRF represents 3D information as an implicit radiance field, encoding scene geometry and appearance within a neural network. In contrast, DQRNet explicitly reconstructs 3D shapes using voxel representations and generates the complete 3D occupancy grid from a partial observation. In this paper, we focus on comparing methods that share the same explicit 3D shape representation.

3. DQRNet

3.1. Overview

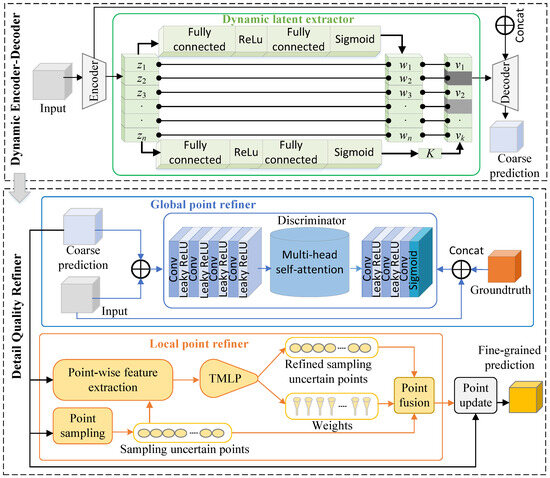

As shown in Figure 1, DQRNet performs dense reconstruction while preserving the boundary details from a single depth view of an object. DQRNet contains two parts: a dynamic encoder–decoder network, and a detail quality refiner consisting of a global point refiner and a local point refiner. DQRNet utilizes the dynamic encoder–decoder network to select the most valuable latent information from a single depth view (see Section 3.2), then introduces a global point refiner to capture long-distance dependencies of objects, thereby improving the overall global structure (see Section 3.3.1). The local point refiner samples points with higher instability, extracts multilevel features, and utilizes a weighted fusion network to adaptively combine pre- and post-predicted points, thereby refining the boundary details (see Section 3.3.2). By integrating these components, DQRNet effectively enhances the 3D shape reconstruction quality, achieving improved IoU and Chamfer error on the ShapeNet dataset. In this paper, the 3D shape of an object is expressed as the probability distribution of binary variables on a 3D voxel grid, where 1 indicates that the voxel is occupied and 0 indicates that the voxel is unoccupied.

Figure 1.

The architecture of our proposed DQRNet. DQRNet introduces a dynamic encoder–decoder to obtain a coarse prediction and multiscale features from a single depth view. DQRNet then utilizes global and local point refiners to enhance the reconstruction quality and achieve a fine-grained 3D shape.

A few notations are introduced as follows: scalars, vectors, tensors, and functions are respectively denoted by non-bold italic letters, bold lowercase letters, bold uppercase letters, and calligraphic uppercase letters.

3.2. Dynamic Encoder–Decoder

3.2.1. Encoder–Decoder

This part is composed of an encoder–decoder with a skip connection and an upsampling component. Standard encoder–decoder architectures often tend to compress important structural details into a latent space, leading to loss of fine-grained features. Inspired by [59], our encoder–decoder is designed as a residual structure. The encoder extracts multiscale features while suppressing noise via residual blocks. The decoder leverages the skip connection to integrate local structural details with global shape priors, enabling plausible completion of occluded regions.

Specifically, the encoder consists of 18 layers. The first layer is a convolution operation with 64 channels, while the subsequent 16 layers are a residual network with four blocks. Each block consists of four convolutional layers with kernels. The strides are set to , , , and , respectively, followed by a LeakyReLU activation function. The channels are doubled at each block. The final layer is a fully connected layer that outputs a 2000-dimensional latent vector. The decoder is composed of four symmetric up-convolutional blocks. Each block consists of four transpose convolution layers with kernel sizes of and strides of , except for the first layer, which uses a stride of . Each layer is followed by a ReLU activation function. The decoder finally outputs a shape. The upsampling component comprises two up-convolutional layers, which increase the resolution of the shape to .

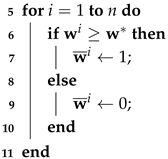

3.2.2. Dynamic Latent Extractor

In a standard encoder–decoder model, the latent space of an object is represented as a vector in which each element has the same weight. However, in real-world environments not all latent features are equally relevant due to the presence of noise and redundant information. These irrelevant features can result in diminished quality of the reconstructed model. To tackle this issue, a dynamic latent extractor is introduced to select and preserve the relevant latent features. The implementation details are shown in Algorithm 1, which consists of two neural networks and having the same structure. However, the numbers of nodes in the two fully connected layers of the former are 2000 and 2000, respectively, while those of the latter are 1000 and 1, respectively. The feature vector from the above encoder is fed into the neural network to predict the weight of each latent code , represented as , where the network contains two fully connected layers. They adopt a ReLU and sigmoid activation function, respectively. Similarly, the latent vector is fed into another neural network to predict a scalar k, denoted as . Here, k is a dynamic parameter that is used to select the relevant latent features. A function is then introduced to obtain the kth value among the top k values of the , sorted in descending order. In this way, we obtain a new vector by Equation (1). Note that we maintain the positional consistency between the latent vector and , ensuring that the corresponding features are mapped to the correct positions:

where represents the latent vector after dynamic selection and ⊙ denotes the element-wise multiplication. By suppressing latent features with low relevance, the negative impact of noise on reconstruction quality can be reduced. In addition, the dynamic latent vector is also used as a strong sparse regularization to avoid over-fitting certain features, which helps to improve the robustness of the model.

| Algorithm 1: Dynamic latent extractor |
| --- |
| Input: latent vector from the encoder |
| Output: latent vector after dynamic selection |
| Step 1: Initialize, then predict the weights of the latent codes. |
| Step 2: Predict a threshold k to retain the top k latent features. |
| Step 3: Generate binary masks by retaining the top k dimensions, using the kth value among the top k values of the weights sorted in descending order. |
| Step 4: Refine the latent vector by element-wise multiplication (Equation (2)), preserving the original positional mapping. |
| Return the refined latent vector. |
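The selection step can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the names `k_from_ratio` and `dynamic_select` are hypothetical, the mapping from the sigmoid output to an integer k (a fraction of the latent dimension, rounded up) is assumed, and the binary mask is applied directly to the latent vector.

```python
import math


def k_from_ratio(ratio, dim):
    """Map a sigmoid output in (0, 1) to an integer k in [1, dim].

    Assumption: the predicted scalar is read as the fraction of latent
    features to keep. The source does not state this mapping.
    """
    return min(max(1, math.ceil(ratio * dim)), dim)


def dynamic_select(latent, weights, k):
    """Keep the k latent entries with the largest weights, zero the rest.

    Positions are preserved: entry i of the output corresponds to entry i
    of the input. Ties at the threshold may keep more than k entries.
    """
    if len(latent) != len(weights):
        raise ValueError("latent and weights must have the same length")
    if not 1 <= k <= len(latent):
        raise ValueError("k must lie in [1, len(latent)]")
    # kth largest weight acts as the selection threshold.
    threshold = sorted(weights, reverse=True)[k - 1]
    return [z if w >= threshold else 0.0 for z, w in zip(latent, weights)]


# Toy example with a 4-dimensional latent vector.
selected = dynamic_select([0.5, -1.0, 2.0, 3.0], [0.9, 0.1, 0.8, 0.3], k=2)
```

Another plausible reading multiplies the retained entries by their predicted weights as well; only the final return expression would change in that case.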

3.3. Detail Quality Refiner

To enhance the points with high instability in the above coarse predictions, a detail quality refiner is developed. The detail quality refiner consists of a global point refiner and a local point refiner. The former leverages nonlocal information to improve the overall structure, while the latter selectively refines uncertain regions to obtain the boundary details of the shape.

3.3.1. Global Point Refiner

To enhance the authenticity and realism of the predicted 3D shapes, the global point refiner is designed as a discriminator with seven 3D convolutional layers and 3D multi-head self-attention (MSA). Each convolutional layer employs filters with strides of followed by a LeakyReLU activation function, except for the final layer, which uses a sigmoid activation function. The number of output channels for these convolutional layers starts at 16 and doubles with each subsequent layer, ending at 1024. The MSA learns relevant clues from nonlocal regions in order to capture multilevel global information and improve details. The initial convolutional layer merges the coarse 3D shape (i.e., a 3D voxel grid of ) and input 2.5D depth view (i.e., a 2.5D voxel grid of ) as the fake input, then merges the ground truth 3D shape (i.e., a 3D voxel grid of ) and input 2.5D depth view as the real input. The global point refiner finally outputs fake and real discriminative feature vectors with sizes of 8192.
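The channel progression and the 8192-dimensional output can be checked with a short shape calculation. This is a sketch under explicit assumptions: the input resolution of 256 per axis, the stride of 2, and padding that exactly halves the resolution at each layer are all hypothetical values, chosen only because they reproduce the stated output size. The function name `discriminator_shapes` is also hypothetical.

```python
def discriminator_shapes(input_res, num_layers=7, first_channels=16, stride=2):
    """Return (channels, resolution) after each convolutional layer.

    Assumes every layer divides the spatial resolution by `stride`
    and doubles the channel count relative to the previous layer.
    """
    shapes = []
    res, channels = input_res, first_channels
    for _ in range(num_layers):
        res //= stride
        shapes.append((channels, res))
        channels *= 2
    return shapes


shapes = discriminator_shapes(256)  # assumed input resolution
final_channels, final_res = shapes[-1]
flat_size = final_channels * final_res ** 3  # flattened feature length
```

Under these assumptions the seven layers produce 16, 32, 64, 128, 256, 512, and 1024 channels, and the final 2 × 2 × 2 feature volume with 1024 channels flattens to 8192 values.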

3.3.2. Local Point Refiner

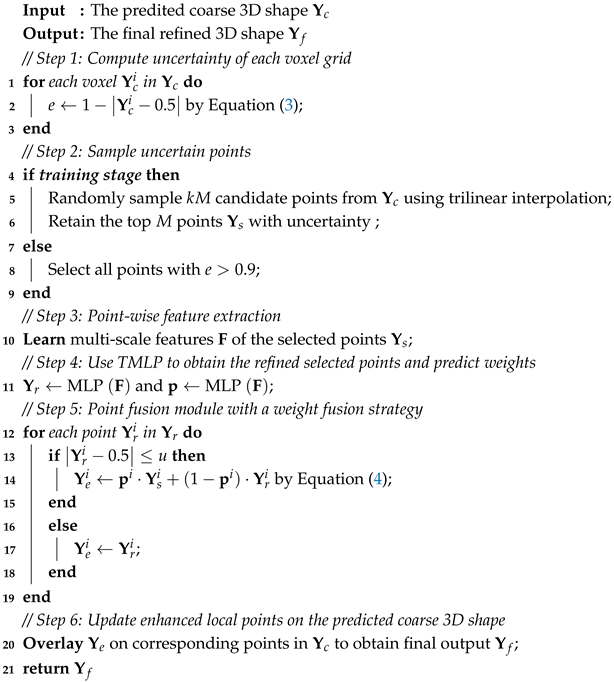

The main source of reconstruction errors often lies in regions with high uncertainty. These uncertain regions primarily arise at object boundaries due to occlusions and missing data. To address this problem, we introduce a local point refiner. The implementation details are shown in Algorithm 2. The local point refiner has four stages: a point sampling module, a point-wise feature extraction module, two multilayer perceptron (TMLP) networks, and a point fusion module.

| Algorithm 2: Local point refiner |


The point sampling process seeks to find points with high uncertainty in the coarse prediction. Each predicted voxel has an occupied probability. The closer the probability is to 0.5, the higher its uncertainty. The uncertainty e is obtained by the following Equation (3):

where is the occupied probability of the ith voxel for the predicted coarse shape .
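As an illustration of the stated property (uncertainty is highest when the occupancy probability is closest to 0.5), one simple measure is sketched below. The formula `1 - 2 * |p - 0.5|` is an assumption chosen for illustration and is not claimed to be the form of Equation (3).

```python
def uncertainty(p):
    """Hypothetical uncertainty of an occupancy probability p in [0, 1].

    Returns 1.0 at p = 0.5 (most uncertain) and 0.0 at p = 0 or p = 1.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    return 1.0 - 2.0 * abs(p - 0.5)


# A voxel predicted at 0.55 is far more uncertain than one at 0.95.
scores = [uncertainty(p) for p in (0.05, 0.55, 0.95)]
```

Any measure that decreases monotonically with the distance from 0.5 yields the same ranking of points, so the choice only matters when a fixed threshold is applied.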

With the aim of accelerating training and enhancing the generalization ability, we adopt different point sampling strategies in the training and inference stages. In the training stage, we sample candidate points from the coarse prediction using trilinear interpolation based on randomly generated coordinates of a uniform distribution, where k determines the inclination towards regions with high uncertainty. We then select M points with the highest uncertainty from the candidate points, where M and k are empirically set to 65536 and 4 in this experiment, respectively. During inference, we sample the points with uncertainty in the experiments.
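The training-time sampling strategy can be sketched as follows. This is a minimal illustration with assumptions: the number of candidates is taken to be k × M, the uncertainty measure is the hypothetical `1 - 2 * |p - 0.5|`, the grid is a small nested list rather than a tensor, and the function names are hypothetical.

```python
import random


def trilinear(grid, x, y, z):
    """Trilinearly interpolate a cubic grid (nested lists, n >= 2 per axis)."""
    n = len(grid)

    def split(c):
        # Clamp to the grid, then split into base index and fraction.
        c = min(max(c, 0.0), n - 1.0)
        lo = min(int(c), n - 2)
        return lo, c - lo

    (x0, fx), (y0, fy), (z0, fz) = split(x), split(y), split(z)
    value = 0.0
    for dx, wx in ((0, 1.0 - fx), (1, fx)):
        for dy, wy in ((0, 1.0 - fy), (1, fy)):
            for dz, wz in ((0, 1.0 - fz), (1, fz)):
                value += wx * wy * wz * grid[x0 + dx][y0 + dy][z0 + dz]
    return value


def uncertainty(p):
    """Hypothetical measure: largest when p is closest to 0.5."""
    return 1.0 - 2.0 * abs(p - 0.5)


def sample_uncertain_points(grid, m, k, rng):
    """Draw k * m uniform candidates and keep the m most uncertain ones."""
    n = len(grid)
    candidates = []
    for _ in range(k * m):
        point = tuple(rng.uniform(0.0, n - 1.0) for _ in range(3))
        candidates.append((uncertainty(trilinear(grid, *point)), point))
    candidates.sort(key=lambda c: c[0], reverse=True)
    return [point for _, point in candidates[:m]]


# Toy 2 x 2 x 2 grid: empty at x = 0, occupied at x = 1.
toy_grid = [[[0.0, 0.0], [0.0, 0.0]], [[1.0, 1.0], [1.0, 1.0]]]
points = sample_uncertain_points(toy_grid, m=8, k=4, rng=random.Random(0))
```

In the toy grid the occupancy probability equals the x coordinate, so the selected points cluster around x = 0.5, which is the boundary between empty and occupied space. A larger k draws more candidates and therefore biases the selection more strongly toward such regions.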

The point-wise feature extraction process is responsible for learning richer features of the selected points. For any selected point, its occupancy probability can be extracted from the coarse output. We obtain the multiscale features of the selected points by performing trilinear interpolation on both fine-grained features and coarse-grained features. The former are taken from the first layer and the first block of the encoder, while the latter are taken from the last two blocks of the decoder. The fine-grained features contain more local information, enabling the model to capture complex details, while the coarse-grained features contain more global information, helping to provide broader context cues.

The TMLP networks aim to refine the sampled uncertain points and predict their weights based on the point-wise features obtained in the previous step. This allows the model to adaptively control the influence of refined points, preventing overcorrection. A TMLP network consists of two structurally consistent MLP networks. One MLP outputs the refined predictions for each selected point, while the other MLP outputs their adaptive weights. Each MLP network consists of four layers; the first layer has 152 nodes, corresponding to the dimensionality of the point-wise features, while the two hidden layers have 100 and 50 nodes, respectively, and the last layer has one node. Each layer is followed by a ReLU activation function, except for the last layer, which uses a sigmoid activation function.
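The layer sizes described above (152, 100, 50, 1) can be illustrated with a dependency-free forward pass. This is a sketch only: the weights are random rather than trained, the initialization scheme and the names `init_mlp` and `mlp_forward` are assumptions, and a practical implementation would use a tensor library.

```python
import math
import random

LAYER_SIZES = [152, 100, 50, 1]  # input, two hidden layers, output


def init_mlp(sizes, rng):
    """Create untrained (weights, biases) pairs for consecutive layers."""
    params = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
                   for _ in range(n_out)]
        params.append((weights, [0.0] * n_out))
    return params


def mlp_forward(params, features):
    """ReLU after every layer except the last, which uses a sigmoid."""
    x = features
    for index, (weights, biases) in enumerate(params):
        x = [sum(w * v for w, v in zip(row, x)) + b
             for row, b in zip(weights, biases)]
        if index < len(params) - 1:
            x = [max(0.0, v) for v in x]
        else:
            x = [1.0 / (1.0 + math.exp(-v)) for v in x]
    return x[0]


rng = random.Random(0)
refine_mlp = init_mlp(LAYER_SIZES, rng)  # predicts the refined occupancy
weight_mlp = init_mlp(LAYER_SIZES, rng)  # predicts the adaptive weight

feature = [rng.random() for _ in range(LAYER_SIZES[0])]
refined = mlp_forward(refine_mlp, feature)
weight = mlp_forward(weight_mlp, feature)
```

Both networks share the same structure but not the same parameters, so one point-wise feature vector yields two independent scalars in (0, 1).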

The point fusion module is designed to fuse the sampled uncertain points and refined uncertain points using the predicted weights from the TMLP. To reduce unnecessary computational overhead, we apply a selective weighted fusion strategy to low-confidence points only, while keeping the high-confidence points unchanged. Specifically, we compute the confidence of the points using Equation (3). To refine points with low confidence while retaining points with high confidence, we set a threshold u. As shown in Equation (4), we retain those points with higher confidence than u from the output of the TMLP, and design a weighted fusion strategy to deal with the points with a lower confidence than u. The comparison results for different values of u are shown in the ablation studies (Tables 6 and 7). As a result, the local point refiner outputs the enhanced points .
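One possible selective fusion rule is sketched below. It rests on several assumptions that the text does not confirm: confidence is taken as `2 * |p - 0.5|` of the refined prediction, points at or above the threshold keep the refined value, and the remaining points use a convex combination of the refined and coarse values. The name `fuse_point` is hypothetical.

```python
def fuse_point(coarse, refined, weight, u):
    """Selectively fuse one point's coarse and refined occupancy.

    coarse  : occupancy probability from the coarse prediction
    refined : occupancy probability predicted by the refinement MLP
    weight  : adaptive weight in [0, 1] predicted by the second MLP
    u       : confidence threshold in [0, 1]
    """
    confidence = 2.0 * abs(refined - 0.5)  # assumed confidence measure
    if confidence >= u:
        # Confident refinement is kept as-is, skipping the fusion step.
        return refined
    # Low confidence: blend the pre- and post-refinement predictions.
    return weight * refined + (1.0 - weight) * coarse


kept = fuse_point(coarse=0.4, refined=0.95, weight=0.3, u=0.5)
blended = fuse_point(coarse=0.2, refined=0.6, weight=0.5, u=0.5)
```

Because the blend is only evaluated on the low-confidence branch, the cost of fusion scales with the number of ambiguous points rather than with all sampled points.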

Finally, we overlay the enhanced local points on the coarse shape to obtain the final output .

4. Loss Function

Our loss function consists of four parts: the dynamic encoder–decoder loss (), local point refiner loss (), global point refiner loss ( and ), and fine-grained prediction loss (). For the dynamic encoder–decoder loss () and fine-grained prediction loss (), we utilize a modified binary cross-entropy loss. This loss function includes a penalty parameter that increases the penalty when a voxel with a value of 1 is mistakenly identified as 0. The losses are expressed as follows:

where N represents the number of voxels in a voxel grid, and are the occupied probability of the ith voxel for predicted coarse and fine-grained shapes, respectively, is the corresponding ground truth, and is used to balance the weights for predicting occupied and unoccupied voxels in the experiments.
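A weighted binary cross-entropy of the kind described can be sketched as follows. The exact form is an assumption: the balance parameter (called `alpha` here, a hypothetical name) scales the occupied-voxel term and its complement scales the empty-voxel term. The default of 0.85 is assumed to correspond to one of the weights reported in Section 5.2.

```python
import math


def weighted_bce(pred, target, alpha=0.85, eps=1e-7):
    """Mean weighted binary cross-entropy over a flattened voxel grid.

    With alpha > 0.5, predicting an occupied voxel (target 1) as empty
    is penalized more heavily than the opposite mistake.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    total = 0.0
    for p, y in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total -= (alpha * y * math.log(p)
                  + (1.0 - alpha) * (1.0 - y) * math.log(1.0 - p))
    return total / len(pred)


missed_voxel = weighted_bce([0.1], [1])    # occupied voxel predicted empty
false_positive = weighted_bce([0.9], [0])  # empty voxel predicted occupied
```

With `alpha = 0.85`, the missed occupied voxel costs several times more than the equally confident false positive, which counteracts the dominance of empty voxels in sparse grids.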

The global point refiner loss consists of two parts, the generator loss and the discriminator loss :

where , is the input depth view, is the predicted coarse shape, is the corresponding ground truth, and D is the discriminator. The weight is a commonly used regularization parameter that controls the balance between optimizing the gradient penalty and the original objective in WGAN-GP [60] to ensure stable training.
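For orientation, the standard conditional WGAN-GP objective from [60] is given below. The symbols are assumptions: $\mathbf{X}$ denotes the input depth view, $\mathbf{Y}'$ the predicted coarse shape, $\mathbf{Y}$ the ground truth, $\hat{\mathbf{Y}}$ a random interpolation between the two, and $\lambda$ the gradient penalty weight. The loss actually used by DQRNet may differ in detail.

```latex
\begin{aligned}
\ell_{gan}^{g} &= -\mathbb{E}\big[D(\mathbf{Y}' \mid \mathbf{X})\big], \\
\ell_{gan}^{d} &= \mathbb{E}\big[D(\mathbf{Y}' \mid \mathbf{X})\big]
  - \mathbb{E}\big[D(\mathbf{Y} \mid \mathbf{X})\big]
  + \lambda\, \mathbb{E}\Big[\big(\lVert \nabla_{\hat{\mathbf{Y}}}
    D(\hat{\mathbf{Y}} \mid \mathbf{X}) \rVert_2 - 1\big)^2\Big], \\
\hat{\mathbf{Y}} &= \epsilon\,\mathbf{Y} + (1-\epsilon)\,\mathbf{Y}',
  \qquad \epsilon \sim U[0, 1].
\end{aligned}
```

The first two terms of the discriminator loss estimate the Wasserstein distance between real and generated pairs, while the last term pushes the gradient norm of the discriminator toward 1 along lines between them.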

The local point refiner loss () penalizes prediction errors at the uncertain points sampled from the predicted coarse shape, using a cross-entropy loss function. This loss can help the model to concentrate on learning the detailed and local information in a voxel grid, and is expressed as follows:

where M is the total number of uncertain sampling points, is the predicted latent representation of the ith point for the coarse shape, and is the corresponding ground truth obtained by interpolation from the nearest value in a voxel grid.

In summary, the total generative loss and the discriminative loss are alternately optimized in the experiments. The loss is expressed as follows:

where the weights and are determined through empirical tuning and experimental validation. Considering that the local point refiner loss focuses on refining specific regions, it is assigned a relatively low weight of . In contrast, both the dynamic encoder–decoder loss and the fine-grained prediction loss are aimed at optimizing the overall reconstruction quality, and as such are assigned higher weights to ensure their stronger influence in the training process. Moreover, compared with the losses , which contribute to the core 3D completion task, the adversarial loss is assigned a relatively low weight of . This helps to mitigate the instability introduced by adversarial training while ensuring that the primary completion objectives remain dominant.

5. Experimental Results

5.1. Dataset

Training DQRNet requires pairs of a single depth view and its corresponding complete 3D model. We utilize the dataset [24] built from the ShapeNet database. The training dataset comprises four categories (bench, chair, couch, and table) with 213 CAD models per category. Each model is captured from 125 different views, with each view corresponding to a 2.5D depth image and its corresponding 3D voxel grid. Therefore, there are 26,625 training pairs for each category. The testing dataset contains 37 CAD models per category across the same four categories. For each category, two sets are generated. The first set is scanned from the same 125 views, yielding 4625 testing pairs per category. The second set is scanned from 216 different views, yielding 7992 testing pairs per category.
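The pair counts follow directly from the number of models and views per category, as the short check below shows. The constant names are hypothetical, and the counts assume that every model is rendered from every view.

```python
TRAIN_MODELS_PER_CATEGORY = 213
TEST_MODELS_PER_CATEGORY = 37
SAME_VIEWS = 125   # views shared between training and testing
CROSS_VIEWS = 216  # additional views used only for testing

train_pairs = TRAIN_MODELS_PER_CATEGORY * SAME_VIEWS
test_pairs_same = TEST_MODELS_PER_CATEGORY * SAME_VIEWS
test_pairs_cross = TEST_MODELS_PER_CATEGORY * CROSS_VIEWS
```

Each pair consists of one 2.5D depth view and the complete 3D voxel grid of the model it was rendered from.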

5.2. Experimental Setup

All experiments were run on a machine with an Intel Xeon Platinum 8362 CPU at 2.8 GHz (Intel Corporation, Santa Clara, CA, USA), an NVIDIA GeForce RTX 3090 GPU (NVIDIA Corporation, Santa Clara, CA, USA), and 64 GB of RAM. DQRNet was trained with a batch size of 3. The voxel grid size was set as . The learning rates of the generative loss and discriminator loss were set to 0.0006 and 0.002, respectively. To train all the parameters of DQRNet, the Adam optimizer [61] was adopted with β₁ = 0.9 and β₂ = 0.999. The weights , , and were set as 10, 0.1, and 0.85, respectively.

5.3. Metrics

To quantitatively assess the performance of our method, we employed the Intersection over Union (IoU) and Cross-Entropy (CE) loss, both of which are commonly used in 3D shape completion tasks. The IoU quantitatively measures the overlap between the predicted and ground truth shapes. A higher IoU value suggests better shape completion performance. The IoU is defined as follows:

where and represent the occupancy values of the ith voxel for the predicted and ground truth shapes, respectively, N is the total number of voxels in a voxel grid, and is a threshold used to binarize the predicted occupancy values. If , the voxel is considered occupied; otherwise, it is considered empty. Here, we assigned a value of 0.5 in our experiments. Finally, is an indicator function that maps a condition to a binary value, with 1 if X is true and 0 otherwise.
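The IoU computation described above can be sketched in plain Python over flattened voxel grids. The function name `voxel_iou` and the convention of returning 1.0 when both shapes are empty are assumptions.

```python
def voxel_iou(pred, target, threshold=0.5):
    """Intersection over Union between a predicted and a true voxel grid.

    pred      : iterable of predicted occupancy probabilities
    target    : iterable of ground truth occupancies (0 or 1)
    threshold : probabilities above this value count as occupied
    """
    intersection = 0
    union = 0
    for p, y in zip(pred, target):
        predicted_occupied = p > threshold
        truly_occupied = y > 0.5
        intersection += predicted_occupied and truly_occupied
        union += predicted_occupied or truly_occupied
    # Assumed convention: two empty shapes match perfectly.
    return intersection / union if union else 1.0


# One voxel agrees, one is a false positive, one is missed.
score = voxel_iou([0.9, 0.6, 0.2, 0.1], [1, 0, 1, 0])
```

In this example the intersection contains one voxel and the union three, so the score is 1/3.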

In addition, the CE loss is used to further assess the performance of our model by quantifying the difference between the predicted probability distribution and the real distribution. A lower CE loss indicates a better match between the predicted and true voxel occupancy. The CE loss is defined as follows:
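As a minimal sketch, the standard voxel-wise binary cross-entropy averaged over all voxels can be computed as follows. The function name `voxel_cross_entropy` and the clipping constant `eps` are assumptions, and the metric in the paper may use a different normalization.

```python
import math


def voxel_cross_entropy(pred, target, eps=1e-7):
    """Mean binary cross-entropy between predicted and true occupancies."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    total = 0.0
    for p, y in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total -= y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return total / len(pred)


uninformed = voxel_cross_entropy([0.5, 0.5], [1, 0])  # equals ln 2
confident = voxel_cross_entropy([0.9, 0.1], [1, 0])   # lower, i.e., better
```

Unlike IoU, this metric uses the raw probabilities rather than thresholded occupancies, so it also rewards predictions that are confidently correct.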

5.4. Comparisons with State-of-the-Art Methods

We conducted a comparative analysis with several recent studies that focus on 3D shape reconstruction from single depth images. To ensure fairness and consistency, we utilized the same evaluation datasets and metrics across all studies.

(1) 3D-EPN [17] presents a data-driven method that integrates volumetric deep neural networks with 3D shape generation in order to reconstruct incomplete 3D shapes.

(2) Varley et al. [62] presented a robotic grasp planning framework utilizing a 3D-CNN trained on a large dataset to enable fast shape completion from 2.5D images. This framework generalizes to unseen objects with improved performance.

(3) SeedFormer [63] utilizes patch seeds to capture both global and local information, and incorporates an upsample transformer to incorporate spatial and semantic relationships. For this comparison, we converted the point cloud output from SeedFormer into 3D voxels with a resolution of .

(4) SnowflakeNet [64] presents snowflake point deconvolution layers, which progressively refine the point cloud. Each layer generates child points by splitting parent points. For this comparison, we voxelized its outputs.

(5) 3D-RecGAN++ [24] is a straightforward and efficient framework that integrates a skip-connected 3D encoder–decoder with adversarial training to produce a complete and detailed 3D structure from a single 2.5D view. The skip connection preserves high-frequency details, while the adversarial training process enhances shape plausibility and fine details.

(6) 3D-RVP [41] is a two-stage approach for reconstructing a complete 3D geometry from a single depth view. It first utilizes an encoder–decoder network to generate a coarse 3D shape as a voxel grid. Then, a point prediction network selectively samples and predicts occupancies of uncertain voxels, iteratively improving the resolution and accuracy of the 3D reconstruction.

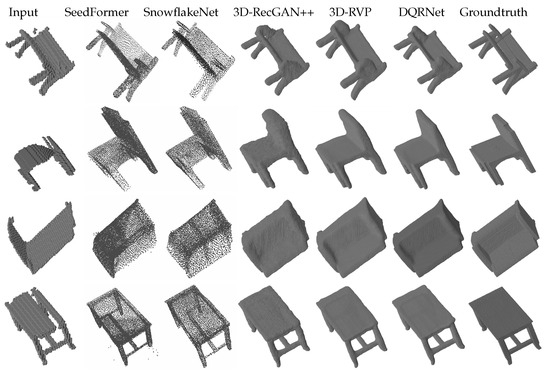

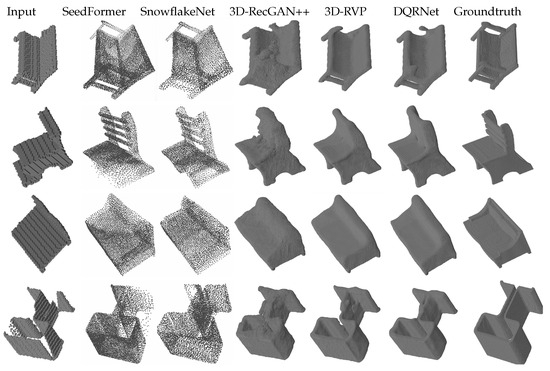

We conducted two groups of experiments on the dataset [24]. The first group involved per-category experiments, where the training set and testing set were both drawn from a single category. The testing sets consisted of the same 125 views as in training along with 216 cross views. The results are shown in Table 1 and Table 2 along with Figure 2 and Figure 3. Table 1 and Figure 2 show that DQRNet achieves the best performance on the reconstruction of the same views in terms of both IoU and CE loss; compared to 3D-RVP, the average IoU improves by 1.26%, while the average CE loss is reduced by 4.66%. Table 2 and Figure 3 show that DQRNet also attains the best IoU on the reconstruction of the cross views for all categories, along with the best CE for three categories. The average IoU of DQRNet improves by 2.23% compared to 3D-RVP, while the CE loss is reduced by 6.83%. In the qualitative results in Figure 2 and Figure 3, SeedFormer tends to produce fragmented shapes, whereas DQRNet produces more complete results and retains finer details. SnowflakeNet only outputs a point cloud with a resolution of 8192 points, and its point-based representation lacks delicate structures; for example, the ends of chair legs often appear incomplete or overly smoothed. In contrast, DQRNet enables more precise reconstruction, effectively capturing both global structure and fine-grained details, leading to a more faithful 3D completion. These results demonstrate that DQRNet is able to capture the intricacies of objects, resulting in reconstructions that are not only more comprehensive but also preserve critical features.

Table 1.

Per-category IoU and CE loss with same views.

Table 2.

Per-category IoU and CE loss with cross views.

Figure 2.

Qualitative results of per-category reconstruction on the testing set with same views.

Figure 3.

Qualitative results of per-category reconstruction on testing set with the cross views.

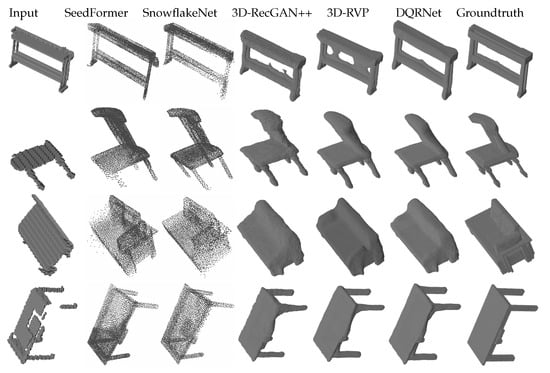

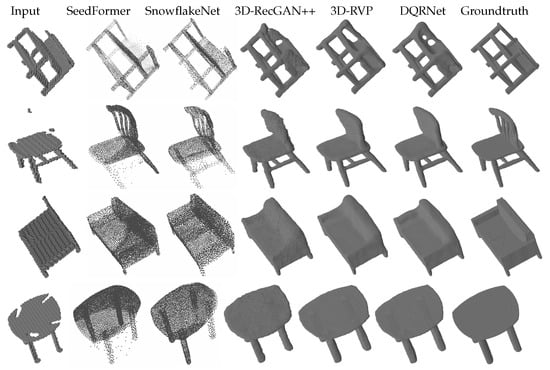

The second group of experiments consisted of multi-category tests. These experiments measure the domain adaptation capability among different categories of 3D objects, with the training set and testing set representing multiple categories. Similar to the first group of experiments, the testing sets consisted of both same and cross views. The results are shown in Table 3 and Table 4 along with Figure 4 and Figure 5. As shown in Table 3, DQRNet achieves the best performance on the same views in terms of both IoU and CE. Notably, DQRNet surpasses 3D-RVP in IoU by an average of 1.77% on the same views, further underscoring the effectiveness of our model. For the bench category, it can be seen from Figure 4 that the other methods often suffer from incomplete shapes, such as missing legs or overly complicated reconstructions. In contrast, DQRNet can accurately reconstruct realistic and plausible shapes, demonstrating its superiority in capturing intricate details. Table 4 demonstrates that DQRNet achieves an impressive average IoU improvement of 3.05% on cross views when compared to 3D-RVP. Figure 5 further demonstrates the robust generalization capability of DQRNet and its ability to preserve local details with remarkable fidelity. These results highlight the superiority of DQRNet in reconstructing multi-category objects from varying views.

Table 3.

Multi-category IoU and CE loss with same views.

Table 4.

Multi-category IoU and CE loss with cross views.

Figure 4.

Qualitative results of multi-category reconstruction on the testing set with same views.

Figure 5.

Qualitative results of multi-category reconstruction on the testing set with cross views.

From the perspective of views, the same-view results of DQRNet are better than the cross-view results. We believe that the same-view setting makes shape reconstruction more reliable and enables more confident voxel occupancy predictions, as the views are known and seen during training. In contrast, the model can only capture limited information when encountering unseen views, making it difficult to predict missing regions of objects. From the perspective of categories, the multi-category results are better than the per-category results. We believe that multi-category training exposes the model to a more diverse dataset, which helps it to generalize better, produce more stable occupancy distributions of objects, and infer missing regions more smoothly. These findings highlight the tradeoffs between specialization and generalization in 3D shape completion, and emphasize the impact of view distribution on model performance.

In addition, we evaluated the computational efficiency of DQRNet in terms of model parameters, floating-point operations (FLOPs), and inference time. DQRNet has 5.5% more parameters (191 M vs. 181 M) and 26.2% more FLOPs (135.41 G vs. 107.29 G) than 3D-RVP; however, the inference speed of DQRNet is comparable to that of 3D-RVP. We attribute this to our local point refiner, which selectively fuses only a subset of points instead of processing all points, thereby reducing unnecessary computations. Moreover, DQRNet delivers superior shape completion results, demonstrating the effectiveness of our design in balancing performance and efficiency.
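The overhead percentages quoted above follow directly from the raw figures. The short check below recomputes them; the helper name `relative_increase` is hypothetical and used only for illustration.

```python
def relative_increase(new, baseline):
    """Percentage by which `new` exceeds `baseline`."""
    return (new - baseline) / baseline * 100.0

# Figures taken from the text: DQRNet vs. 3D-RVP.
param_overhead = relative_increase(191.0, 181.0)     # parameters, in millions
flops_overhead = relative_increase(135.41, 107.29)   # FLOPs, in G

print(f"parameters: +{param_overhead:.1f}%")
print(f"FLOPs:      +{flops_overhead:.1f}%")
```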

5.5. Ablation Study

We conducted per-category experiments with the same views to validate the effectiveness of the proposed components by progressively adding them to the baseline encoder–decoder network. As presented in Table 5, the quantitative results indicate a significant improvement in both IoU and CE upon introducing the Dynamic Latent Extractor (DLE). This notable enhancement validates the ability of DLE to effectively preserve the most pertinent latent features while mitigating the adverse effects of noise.

Table 5.

Quantitative results of the ablation study. DLE, LPR and GPR denote the dynamic latent extractor, local point refiner, and global point refiner, respectively.

Next, we added the Local Point Refiner (LPR) and the Global Point Refiner (GPR) individually. From Table 5, it can be seen that both LPR and GPR contribute to a degree of performance enhancement. These results suggest that GPR can learn richer global information using the discriminator, while LPR can precisely locate the points that are most likely to be erroneous and re-evaluate them through weighted fusion, which is conducive to more reliable predictions. By integrating all components, DQRNet achieves the best overall performance, further corroborating the effectiveness of our proposed components.

In addition, we conducted two types of experiments on the uncertainty e in Equation (3) and threshold u in Equation (4). The comparison results in terms of IoU performance on the bench category with different values of e and u are shown in Table 6. It can be observed that the reconstruction performance of is better than that of . Based on this analysis, when , DQRNet is more effective at selecting points with high uncertainty while minimizing changes to points that have already been correctly predicted. This can ensure that the model focuses on refining those points that are most likely to be incorrect. The multi-category comparison results in terms of IoU performance with different u are presented in Table 7, considering . It can be observed that the performance is better when . Our goal is to enhance the reliability of the predicted inaccurate points. As shown in Equation (4), we use the expression to represent the confidence level of the points . When the threshold u is set close to 0.5, it includes more high-confidence points, thereby incorporating more accurate points; conversely, setting u closer to 0 results in the inclusion of too few points. Therefore, selecting a balanced value of yields better performance.
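To make the role of the threshold u more concrete, the following is a minimal sketch of confidence-based point selection. It rests on an assumption that is not confirmed by the text here: that the confidence of a predicted occupancy p is measured as |p - 0.5| and that a point is selected for refinement when this value falls below u. The function name `select_uncertain` and the sample probabilities are hypothetical; Equation (4) in the paper remains the authoritative definition.

```python
def select_uncertain(probs, u):
    """Return indices of points selected for refinement.

    Assumption (not taken from the paper): confidence is |p - 0.5|,
    and a point is selected when its confidence is below the threshold u.
    """
    return [i for i, p in enumerate(probs) if abs(p - 0.5) < u]

# Hypothetical predicted occupancy probabilities for six points.
probs = [0.02, 0.35, 0.48, 0.56, 0.71, 0.97]

for u in (0.05, 0.2, 0.45):
    selected = select_uncertain(probs, u)
    print(f"u = {u}: {len(selected)} of {len(probs)} points selected -> {selected}")
```

Under this assumption, a small u selects only the few points nearest the decision boundary, while a u close to 0.5 sweeps in points that were already predicted with high confidence, which matches the tradeoff described above.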

Table 6.

Comparison of IoU performance with different e and u.

Table 7.

Comparison of IoU performance with different u.

6. Conclusions and Discussion

In this paper, we have proposed a novel framework called DQRNet which can reconstruct missing shapes in 3D scans caused by occlusion problems. By innovatively combining a dynamic latent extractor and detail quality refiner, DQRNet is able to generate complete, high-resolution, and detailed 3D shapes from a single depth view. Our experimental results demonstrate that DQRNet can enhance reconstruction accuracy and robustness, particularly for the boundaries and key regions of 3D shapes.

In the future, we will continue to optimize the performance of DQRNet while exploring practical application scenarios and applying it to more challenging 3D reconstruction tasks. Additionally, we will investigate how to utilize advanced techniques such as diffusion models and NeRF in order to achieve more efficient and accurate 3D reconstruction.

Author Contributions

Conceptualization, C.L. and H.L.; methodology, C.L. and M.Z.; software, M.Z., J.L. and Q.Y.; formal analysis, M.Z.; resources, H.L.; writing—original draft, C.L., M.Z. and X.W.; writing—review and editing, C.L., X.W. and H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (No. 62402018, 62277001), Beijing Natural Science Foundation (L233026), R&D Program of Beijing Municipal Education Commission (No. KM202410011017), and Beijing Technology and Business University 2024 Postgraduate Research Ability Improvement Program Project.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Details of the data are shown in Section 5.1.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Liu, Q.; Su, H.; Duanmu, Z.; Liu, W.; Wang, Z. Perceptual Quality Assessment of Colored 3D Point Clouds. IEEE Trans. Vis. Comput. Graph. 2023, 29, 3642–3655.

- Cui, Y.; Chen, R.; Chu, W.; Chen, L.; Tian, D.; Li, Y.; Cao, D. Deep Learning for Image and Point Cloud Fusion in Autonomous Driving: A Review. IEEE Trans. Intell. Transp. Syst. 2022, 23, 722–739.

- Zhu, Z.; Peng, S.; Larsson, V.; Xu, W.; Bao, H.; Cui, Z.; Oswald, M.R.; Pollefeys, M. Nice-slam: Neural implicit scalable encoding for slam. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 12786–12796.

- Ye, S.; Chen, D.; Han, S.; Wan, Z.; Liao, J. Meta-PU: An Arbitrary-Scale Upsampling Network for Point Cloud. IEEE Trans. Vis. Comput. Graph. 2022, 28, 3206–3218.

- Lei, N.; Li, Z.; Xu, Z.; Li, Y.; Gu, X. What’s the Situation with Intelligent Mesh Generation: A Survey and Perspectives. IEEE Trans. Vis. Comput. Graph. 2023, 30, 4997–5017.

- Zhang, L.; Li, H.; Liu, R.; Wang, X.; Wu, X. Quality guided metric learning for domain adaptation person re-identification. IEEE Trans. Consum. Electron. 2024, 70, 6023–6030.

- Yi, X.; Deng, J.; Sun, Q.; Hua, X.S.; Lim, J.H.; Zhang, H. Invariant Training 2D-3D Joint Hard Samples for Few-Shot Point Cloud Recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 14463–14474.

- Zeng, G.; Li, H.; Wang, X.; Li, N. Point cloud up-sampling network with multi-level spatial local feature aggregation. Comput. Electr. Eng. 2021, 94, 107337.

- Zhang, H.; Li, H.; Li, N. MeshLink: A surface structured mesh generation framework to facilitate automated data linkage. Adv. Eng. Softw. 2024, 194, 103661.

- Zhang, H.; Li, H.; Wu, X.; Li, N. MTGNet: Multi-label mesh quality evaluation using topology-guided graph neural network. Eng. Comput. 2024, 1–13.

- Alhamazani, F.; Lai, Y.K.; Rosin, P.L. 3DCascade-GAN: Shape completion from single-view depth images. Comput. Graph. 2023, 115, 412–422.

- Zheng, Y.; Zeng, G.; Li, H.; Cai, Q.; Du, J. Colorful 3D reconstruction at high resolution using multi-view representation. J. Vis. Commun. Image Represent. 2022, 85, 103486.

- Liu, C.; Kong, D.; Wang, S.; Li, J.; Yin, B. A Spatial Relationship Preserving Adversarial Network for 3D Reconstruction from a Single Depth View. ACM Trans. Multimed. Comput. Commun. Appl. 2022, 18, 110.

- Li, H.; Zheng, Y.; Cao, J.; Cai, Q. Multi-view-based siamese convolutional neural network for 3D object retrieval. Comput. Electr. Eng. 2019, 78, 11–21.

- Li, H.; Sun, L.; Dong, S.; Zhu, X.; Cai, Q.; Du, J. Efficient 3d object retrieval based on compact views and hamming embedding. IEEE Access 2018, 6, 31854–31861.

- Kazhdan, M.; Hoppe, H. Screened poisson surface reconstruction. ACM Trans. Graph. 2013, 32, 1–13.

- Dai, A.; Ruizhongtai Qi, C.; Niessner, M. Shape Completion Using 3D-Encoder-Predictor CNNs and Shape Synthesis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5868–5877.

- Li, H.; Zhao, T.; Li, N.; Cai, Q.; Du, J. Feature Matching of Multi-view 3D Models Based on Hash Binary Encoding. Neural Netw. World 2017, 27, 95–105.

- Mittal, P.; Cheng, Y.C.; Singh, M.; Tulsiani, S. AutoSDF: Shape Priors for 3D Completion, Reconstruction and Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 306–315.

- Rao, Y.; Nie, Y.; Dai, A. PatchComplete: Learning Multi-Resolution Patch Priors for 3D Shape Completion on Unseen Categories. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 34436–34450.

- Xu, M.; Wang, Y.; Liu, Y.; He, T.; Qiao, Y. CP3: Unifying Point Cloud Completion by Pretrain-Prompt-Predict Paradigm. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 9583–9594.

- Wang, M.; Liu, Y.S.; Gao, Y.; Shi, K.; Fang, Y.; Han, Z. LP-DIF: Learning Local Pattern-Specific Deep Implicit Function for 3D Objects and Scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 21856–21865.

- Wu, Y.; Yan, Z.; Chen, C.; Wei, L.; Li, X.; Li, G.; Li, Y.; Cui, S.; Han, X. SCoDA: Domain Adaptive Shape Completion for Real Scans. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 17630–17641.

- Yang, B.; Rosa, S.; Markham, A.; Trigoni, N.; Wen, H. Dense 3D Object Reconstruction from a Single Depth View. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 2820–2834.

- Zhang, H.; Li, H.; Wu, X.; Li, N. Surface Structured Quadrilateral Mesh Generation Based on Topology Consistent-Preserved Patch Segmentation. Int. J. Numer. Methods Eng. 2025, 126, e7644.

- Kanazawa, A.; Tulsiani, S.; Efros, A.A.; Malik, J. Learning Category-Specific Mesh Reconstruction from Image Collections. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 386–402.

- Wang, N.; Zhang, Y.; Li, Z.; Fu, Y.; Liu, W.; Jiang, Y.G. Pixel2Mesh: Generating 3D Mesh Models from Single RGB Images. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 55–71.

- Xu, Q.; Wang, W.; Ceylan, D.; Mech, R.; Neumann, U. DISN: Deep Implicit Surface Network for High-quality Single-view 3D Reconstruction. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Fan, H.; Su, H.; Guibas, L.J. A Point Set Generation Network for 3D Object Reconstruction From a Single Image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 605–613.

- Navaneet, K.L.; Mathew, A.; Kashyap, S.; Hung, W.C.; Jampani, V.; Babu, R.V. From Image Collections to Point Clouds With Self-Supervised Shape and Pose Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1132–1140.

- Wu, C.Y.; Johnson, J.; Malik, J.; Feichtenhofer, C.; Gkioxari, G. Multiview Compressive Coding for 3D Reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 9065–9075.

- Lin, C.H.; Wang, C.; Lucey, S. SDF-SRN: Learning Signed Distance 3D Object Reconstruction from Static Images. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; Volume 33, pp. 11453–11464.

- Mescheder, L.; Oechsle, M.; Niemeyer, M.; Nowozin, S.; Geiger, A. Occupancy Networks: Learning 3D Reconstruction in Function Space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–17 June 2019; pp. 4460–4470.

- Alwala, K.V.; Gupta, A.; Tulsiani, S. Pre-Train, Self-Train, Distill: A Simple Recipe for Supersizing 3D Reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 3773–3782.

- Jia, D.; Ruan, X.; Xia, K.; Zou, Z.; Wang, L.; Tang, W. Analysis-by-Synthesis Transformer for Single-View 3D Reconstruction. In Proceedings of the European Conference on Computer Vision (ECCV), Milan, Italy, 29 September–4 October 2024; pp. 259–277.

- Lee, J.J.; Benes, B. RGB2Point: 3D Point Cloud Generation from Single RGB Images. arXiv 2024, arXiv:2407.14979.

- Han, X.; Li, Z.; Huang, H.; Kalogerakis, E.; Yu, Y. High-resolution shape completion using deep neural networks for global structure and local geometry inference. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 85–93.

- Song, S.; Yu, F.; Zeng, A.; Chang, A.X.; Savva, M.; Funkhouser, T. Semantic Scene Completion From a Single Depth Image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1746–1754.

- Wang, W.; Huang, Q.; You, S.; Yang, C.; Neumann, U. Shape inpainting using 3D generative adversarial network and recurrent convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2298–2306.

- Liu, C.; Kong, D.; Wang, S.; Li, J.; Yin, B. Latent Feature-Aware and Local Structure-Preserving Network for 3D Completion from a Single Depth View. In Proceedings of the Artificial Neural Networks and Machine Learning, Bratislava, Slovakia, 14–17 September 2021; pp. 67–79.

- Zhao, M.; Xiong, G.; Zhou, M.; Shen, Z.; Wang, F.Y. 3D-RVP: A method for 3D object reconstruction from a single depth view using voxel and point. Neurocomputing 2021, 430, 94–103.

- Aboukhadra, A.T.; Malik, J.; Robertini, N.; Elhayek, A.; Stricker, D. ShapeGraFormer: GraFormer-Based Network for Hand-Object Reconstruction from a Single Depth Map. arXiv 2023, arXiv:2310.11811.

- Achlioptas, P.; Diamanti, O.; Mitliagkas, I.; Guibas, L. Learning representations and generative models for 3d point clouds. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 40–49.

- Zheng, X.; Liu, Y.; Wang, P.; Tong, X. SDF-StyleGAN: Implicit SDF-Based StyleGAN for 3D Shape Generation. Comput. Graph. Forum 2022, 41, 52–63.

- Li, H.; Zheng, Y.; Wu, X.; Cai, Q. 3D model generation and reconstruction using conditional generative adversarial network. Int. J. Comput. Intell. Syst. 2019, 12, 697–705.

- Liu, C.; Chen, Y.; Zhu, M.; Hao, C.; Li, H.; Wang, X. DEGAN: Detail-Enhanced Generative Adversarial Network for Monocular Depth-Based 3D Reconstruction. ACM Trans. Multimed. Comput. Commun. Appl. 2024, 20, 365.

- Gao, L.; Wu, T.; Yuan, Y.J.; Lin, M.X.; Lai, Y.K.; Zhang, H. TM-NET: Deep generative networks for textured meshes. ACM Trans. Graph. 2021, 40, 263.

- Kim, J.; Yoo, J.; Lee, J.; Hong, S. SetVAE: Learning Hierarchical Composition for Generative Modeling of Set-Structured Data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 15059–15068.

- Tan, Q.; Gao, L.; Lai, Y.K.; Xia, S. Variational Autoencoders for Deforming 3D Mesh Models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 5841–5850.

- Yang, G.; Huang, X.; Hao, Z.; Liu, M.Y.; Belongie, S.; Hariharan, B. Pointflow: 3d point cloud generation with continuous normalizing flows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4541–4550.

- Sun, Y.; Wang, Y.; Liu, Z.; Siegel, J.; Sarma, S.E. PointGrow: Autoregressively Learned Point Cloud Generation with Self-Attention. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 61–70.

- Xie, J.; Xu, Y.; Zheng, Z.; Zhu, S.C.; Wu, Y.N. Generative pointnet: Deep energy-based learning on unordered point sets for 3D generation, reconstruction and classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 14976–14985.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-Resolution Image Synthesis With Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

- Luo, S.; Hu, W. Diffusion Probabilistic Models for 3D Point Cloud Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 2837–2845.

- Zheng, X.Y.; Pan, H.; Wang, P.S.; Tong, X.; Liu, Y.; Shum, H.Y. Locally attentional sdf diffusion for controllable 3d shape generation. ACM Trans. Graph. 2023, 42, 1–13.

- Cheng, Y.C.; Lee, H.Y.; Tulyakov, S.; Schwing, A.G.; Gui, L.Y. SDFusion: Multimodal 3D Shape Completion, Reconstruction, and Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 4456–4465.

- Li, Y.; Dou, Y.; Chen, X.; Ni, B.; Sun, Y.; Liu, Y.; Wang, F. Generalized Deep 3D Shape Prior via Part-Discretized Diffusion Process. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 16784–16794.

- Vahdat, A.; Williams, F.; Gojcic, Z.; Litany, O.; Fidler, S.; Kreis, K. Lion: Latent point diffusion models for 3D shape generation. Adv. Neural Inf. Process. Syst. 2022, 35, 10021–10039.

- Çiçek, Ö.; Abdulkadir, A.; Lienkamp, S.S.; Brox, T.; Ronneberger, O. 3D U-Net: Learning dense volumetric segmentation from sparse annotation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2016, Athens, Greece, 17–21 October 2016; pp. 424–432.

- Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A.C. Improved training of wasserstein gans. Adv. Neural Inf. Process. Syst. 2017, 30, 5769–5779.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. ICLR (Poster) 2015, 5.

- Varley, J.; DeChant, C.; Richardson, A.; Ruales, J.; Allen, P. Shape completion enabled robotic grasping. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 2442–2447.

- Zhou, H.; Cao, Y.; Chu, W.; Zhu, J.; Lu, T.; Tai, Y.; Wang, C. SeedFormer: Patch Seeds Based Point Cloud Completion with Upsample Transformer. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 416–432.

- Xiang, P.; Wen, X.; Liu, Y.S.; Cao, Y.P.; Wan, P.; Zheng, W.; Han, Z. Snowflake Point Deconvolution for Point Cloud Completion and Generation with Skip-Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 6320–6338.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).