Abstract

Image distortion correction is a fundamental yet challenging task in image restoration, especially in scenarios with complex distortions and fine details. Existing methods often rely on fixed-scale feature extraction, which struggles to capture multi-scale distortions. This limitation makes it difficult to balance global structural consistency and local detail preservation on distorted images of varying complexity, and it leads to suboptimal restoration quality for highly complex distortions. To address these challenges, this paper proposes a dynamic channel attention network (DCAN) for multi-scale distortion correction. First, DCAN employs a multi-scale design and uses an optical flow network for distortion feature extraction, balancing global structural consistency and local detail preservation under varying levels of distortion. Second, we present the channel attention and fusion selective module (CAFSM), which dynamically recalibrates feature importance across multi-scale distortions. By embedding CAFSM into the upsampling stage, the network enhances its ability to refine local features while preserving global structural integrity. Moreover, to further improve detail preservation and structural consistency, a comprehensive loss function is designed, incorporating structural similarity loss (SSIM Loss) to balance local and global optimization. Experimental results on the widely used Places2 dataset demonstrate that DCAN achieves state-of-the-art performance, with an average improvement of 1.55 dB in PSNR and 0.06 in SSIM compared with existing methods.

1. Introduction

Fisheye lenses are widely used in computer vision and digital image processing applications, including video surveillance systems [1,2], autonomous driving [3,4,5], VR devices [6,7], and robotics applications [8,9], due to their ability to capture a wider field of view compared with conventional cameras. However, fisheye lenses introduce significant challenges in the form of image distortion, particularly radial distortion. This distortion results in objects near the image center being overstretched, while the image periphery becomes overly compressed, leading to a loss of critical visual information. Consequently, fisheye images cannot be directly utilized for downstream tasks such as pose estimation [10,11,12], scene segmentation [13,14,15], and object recognition [16,17,18]. Therefore, correcting distortion in fisheye images is essential as a preprocessing step for geometric vision applications that rely on fisheye lenses.

Traditional vision calibration methods rely on calibration objects like checkerboards or geometric patterns, combined with multi-view images, to determine camera distortion parameters [19,20,21]. However, their dependence on such setups limits their practicality, particularly in real-time or dynamic environments [22,23]. To address these limitations, researchers have developed feature-based calibration methods that leverage scene geometry for automatic distortion correction. Thormahlen et al. [24] proposed a line-based calibration approach that eliminates the need for calibration objects or intrinsic parameters by removing outliers caused by true 3D curves, enhancing the robustness and accuracy of radial distortion estimation. However, this method is primarily suited for scenes with prominent linear features. Wang et al. [25] introduced a circularity-based correction method, which directly computes distortion parameters using the circular properties of distorted points, expanding the applicability to more diverse scenes while improving flexibility and robustness. Later, Bukhari et al. [26] developed a vertical-line-based method, integrating a division model and robust parameter estimation to achieve fast and precise radial distortion correction, particularly effective in structured environments. These approaches bypass the need for calibration objects by relying on inherent geometric features like lines and arcs, significantly improving their adaptability in dynamic or calibration-free scenarios. However, in natural scenes with sparse geometric features, detection errors may accumulate, potentially impacting the accuracy of distortion correction.

In recent years, deep learning has made significant progress in distortion correction, with convolutional neural networks (CNNs) emerging as the preferred approach due to their strong capability in local feature extraction. In 2017, Rong et al. [27] proposed a CNN-based radial distortion correction method that outperformed traditional baseline methods. The following year, Bogdan et al. [28] introduced a fully automated camera calibration method capable of regressing focal length and distortion parameters using only single images of regular scenes. Yin et al. [29] developed an end-to-end multi-context deep network that significantly improved fisheye distortion correction by integrating semantic-level and appearance-level feature learning. These parameter-based supervised learning methods estimate distortion parameters from the distorted image and use them for rectification, demonstrating strong performance in controlled environments. However, they are highly sensitive to parameter estimation errors: even minor inaccuracies accumulate across the image, leading to residual distortions. This sensitivity limits their robustness and precision, particularly in scenarios requiring high accuracy. To address these limitations, generative methods have emerged, leveraging deep neural networks to directly produce rectified images without explicit parameter estimation, exhibiting greater robustness and flexibility. For instance, Liao et al. [30] proposed a multi-level framework that decomposes the correction task into three stages: structural recovery, semantic embedding, and texture rendering, progressively generating the corrected image. Similarly, Yang et al. [31] introduced a dual diffusion architecture (DDA) that improves generalization by gradually introducing noise. Xu et al. [32] proposed a multi-scale progressive fusion strategy that demonstrated significant optimization potential in image registration tasks, inspiring its application in distortion correction to progressively capture global and local features, thereby improving correction accuracy and detail reconstruction. However, generative approaches, like many existing methods, still lack effective selective attention mechanisms during feature representation [33], making it difficult to balance global consistency and local detail refinement, particularly in scenarios with complex distortions.

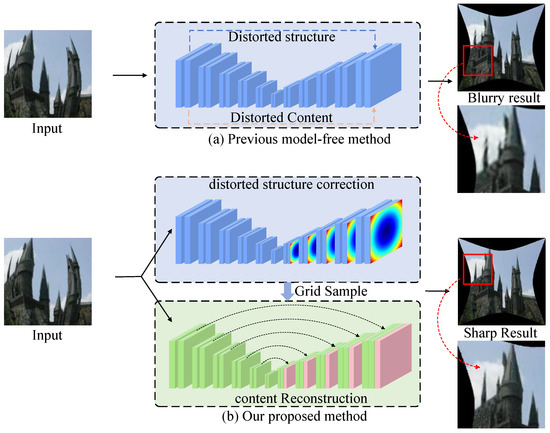

To address the challenges of fisheye distortion correction, we propose the dynamic channel attention network (DCAN), which leverages a multi-scale framework to balance global structural consistency and local detail preservation. By integrating the channel attention and fusion selective module (CAFSM) into its architecture, DCAN dynamically prioritizes and refines features across multiple scales, enabling it to adapt to varying distortion levels effectively. Unlike traditional single-scale methods [34,35] that directly reconstruct images based on distorted features, often resulting in blurred and inconsistent outputs (Figure 1a), DCAN employs a multi-path progressive complementary mechanism to pre-correct distorted structures while refining content features. This cascaded correction approach (Figure 1b) ensures more accurate restoration and higher visual fidelity, even under complex distortion scenarios.

Figure 1.

Comparison between previous single-scale correction methods and the proposed DCAN with multi-scale integration. (a) The previous single-scale-based method reconstructs distorted images directly from distorted features [34,35], resulting in blurry outputs and loss of structural details. (b) The proposed DCAN employs CAFSM to perform a multi-scale correction process, combining dynamic feature prioritization and selective fusion to refine both structure and content. By integrating predicted distortion features and correcting them through a cascade approach, DCAN achieves sharper results and better consistency in distortion correction.

Here is a summary of our contributions:

- We propose the dynamic channel attention network (DCAN), which leverages a multi-path progressive complementary mechanism to effectively address the challenge of balancing global structural consistency and local detail preservation under varying distortion levels.

- We introduce the channel attention and fusion selective module (CAFSM), which dynamically prioritizes critical features and integrates multi-scale information, enhancing adaptability and feature representation of the network.

- We design a comprehensive loss function incorporating structural similarity loss (SSIM Loss) to ensure fine detail preservation and structural consistency, improving visual fidelity in the corrected images.

2. Related Work

2.1. GAN-Based Distortion Correction Methods

In the field of distortion correction, researchers have begun to explore GAN-based frameworks to address challenges in rectifying distorted images. Liao et al. [35] proposed DR-GAN, an end-to-end adversarial framework designed for radial distortion correction. DR-GAN bypasses the explicit parameter estimation process by directly learning the mapping between distorted and rectified images, which significantly enhances flexibility and robustness in distortion correction tasks. However, like other GAN-based approaches, DR-GAN may face challenges in fine-grained detail reconstruction, particularly in scenarios involving severe or complex distortions. Chao et al. [36] proposed fisheye GAN (FE-GAN), which employs self-supervised learning and adversarial training to predict distortion flow and rectify fisheye distortions. By introducing geometric constraints such as cross-rotation consistency, FE-GAN performs correction without requiring paired datasets or ground truth parameters. However, its reliance on pre-defined constraints limits its generalization to complex real-world distortions. To address these limitations, Yang et al. [37] introduced the progressively complementary network (PCN). By decomposing the correction process into multiple stages, PCN progressively refines distortion correction at different scales, improving both global consistency and local detail preservation.

2.2. Attention-Based Distortion Correction Methods

Attention-based methods leverage their ability to focus on significant features, enabling enhanced distortion correction by modeling global dependencies and capturing fine-grained details. For example, Guo et al. [38] proposed QueryCDR, which utilizes a query-based mechanism combined with attention modules to adaptively correct distortions at different levels. This approach improves flexibility and control by dynamically focusing on relevant features during correction. However, the added computational complexity of the query mechanism may limit its efficiency in real-time applications. Similarly, Feng et al. [39] introduced SimFIR, which integrates vision transformers (ViT) to capture multi-scale distortion features. By employing self-supervised learning, SimFIR enhances the representation of distortion patterns, contributing to improved downstream correction tasks. Despite its effectiveness in global feature modeling, the method lacks generative capabilities, limiting its ability to reconstruct fine-grained details in highly distorted images.

2.3. Integration of Channel Attention and Fusion Selective

Multi-scale feature integration plays a vital role in distortion correction tasks, as it balances global structural consistency with local detail refinement. Recent studies have explored feature integration techniques to enhance correction performance. Yang et al. [37] proposed the Progressively Complementary Network (PCN), which refines features at multiple stages, progressively improving the correction from coarse to fine. The network employs a recursive fusion mechanism, where each stage refines features by fusing information from previous stages. However, PCN lacks dynamic prioritization of key features, making it less adaptive in handling complex distortions with varying feature distributions. This limitation has also been addressed in earlier work on spatial feature selection [40].

In contrast, Hu et al. [41] proposed the squeeze-and-excitation (SE) module, which enhances feature representation by dynamically recalibrating channel weights. The module first reduces the global spatial information to channel descriptors and then generates adaptive weights for each channel. These recalibrated channel weights are applied to the feature map to refine the feature representation. Building on existing methods, we propose DCAN, which integrates the CAFSM into a multi-scale framework. This design addresses the limitations of previous approaches by dynamically prioritizing key features, combining progressive refinement with channel recalibration, and ensuring better adaptability to varying distortion levels.

3. Proposed Method

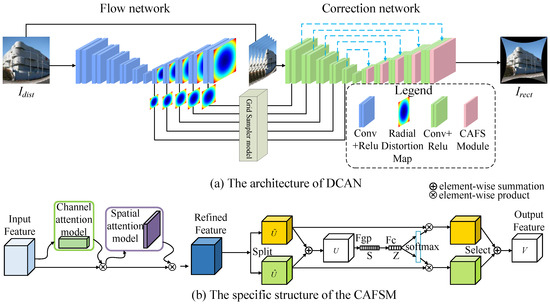

In this section, we introduce the DCAN for multi-scale distortion correction. The overall architecture of the network is depicted in Figure 2, comprising three main components: an optical flow estimation module, a resampling module, and an aberration correction module. The implementation of DCAN is available at https://github.com/psj-yyds/fisheye_eye-correction.git, accessed on 6 January 2025. The optical flow estimation module is designed to extract and generate optical flow maps at multiple levels. By employing downsampling and upsampling operations, the module captures multi-scale features from the input fisheye image and produces optical flow maps that guide subsequent resampling and distortion correction. The resampling module adjusts pixel positions based on the generated optical flow maps, creating a pre-corrected image that reduces severe distortions. This intermediate output is then refined in the aberration correction module. A key innovation of the proposed method lies in the aberration correction module, where we introduce CAFSM. CAFSM enhances feature representation by dynamically prioritizing and integrating features across multiple scales. This integration effectively balances global structural consistency and local detail preservation, addressing the challenges posed by varying distortion complexities.

Figure 2.

Overview of the proposed DCAN network. (a) The architecture of DCAN. (b) The specific structure of the CAFSM.

3.1. Flow Network

The optical flow estimation module uses an encoder–decoder framework that extracts image features and produces a sequence of appearance flows. In the decoding phase, the module integrates a progressive complementarity mechanism, ensuring that the output from each decoder layer aids in generating the appearance flows. Notably, skip connections are established between encoder and decoder features, maintaining the precise spatial resolution. The encoder consists of six hierarchical layers, each using a 4 × 4 kernel with a stride of 2 and padding of 1, followed by a LeakyReLU activation function and an instance normalization layer. The number of filters for each layer is as follows: 32, 64, 128, 256, 512, and 512.
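The encoder description above can be checked with a few lines of shape arithmetic. The sketch below applies the standard convolution output-size formula to the stated kernel, stride, and padding. The input resolution of 256 is an assumption made for illustration (it is consistent with appearance flows at resolutions 128 down to 8, but it is not stated in this section), and the function names are hypothetical.

```python
def conv_output_size(size: int, kernel: int = 4, stride: int = 2, padding: int = 1) -> int:
    """Spatial size after one strided convolution (standard floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1


# Filter counts per encoder layer, as listed in the text.
ENCODER_FILTERS = [32, 64, 128, 256, 512, 512]


def encoder_shapes(input_size: int = 256):
    """Return (channels, height, width) after each of the six encoder layers.

    input_size=256 is an assumed value, not one given in the text.
    """
    shapes = []
    size = input_size
    for channels in ENCODER_FILTERS:
        size = conv_output_size(size)  # each layer halves the resolution
        shapes.append((channels, size, size))
    return shapes


if __name__ == "__main__":
    for index, shape in enumerate(encoder_shapes(), start=1):
        print(f"encoder layer {index}: {shape}")
```

With a 4 × 4 kernel, stride 2, and padding 1, every layer exactly halves an even input size, which is why this configuration is a common choice for encoder–decoder networks with skip connections.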

The decoder comprises five hierarchical layers, each employing feature fusion, conventional convolutional processing, residual block enhancement, and transposed convolutional layers to progressively restore the spatial dimensions of the image and refine features. In detail, each layer begins by upsampling through a transposed convolutional layer, doubling the spatial dimensions while gradually reducing the number of channels to match the feature depth of the corresponding encoder layer. The first decoder layer halves the feature channels from 512 at the deepest layer to 256 and restores some spatial dimensions through a transposed convolutional layer. Subsequently, the output of each layer is fused with the output from the corresponding encoder layer, utilizing these cross-layer connections to enhance feature representation and supplement missing spatial information. The fused features are then further processed by conventional convolution, with residual blocks enhancing feature expression capability, followed by another transposed convolutional layer to continue enlarging spatial dimensions and reducing feature channels. This process is repeated across the decoder layers until the final layer reduces the channels to 16 while fully restoring the spatial dimensions to nearly match the input size. Before the final output, a positional layer combines the outputs of the decoder and the first encoder layer, generating the final two-channel flow map through convolution and residual blocks, accurately representing the movement direction of each pixel in the image. Ultimately, the module produces five appearance flows at different resolutions: 128, 64, 32, 16, and 8. The entire process can be represented as

$$\{f_i\}_{i=1}^{5} = F(I_d),$$

where $I_d$ stands for the input fisheye image, $F$ refers to the optical flow estimation module, and $f_i$ represents the optical flow output from the $i$-th decoder layer.

3.2. Resample Network

The resampling module adjusts the pixel positions of an image according to the optical flow field to generate a transformed image. It builds a grid of coordinates for each pixel, displaces these coordinates according to the optical flow field, and then resamples the original image at the modified coordinates. The entire process can be represented as

where coordinate grid U is a matrix of ; each element u represents the pixel position of the image. This grid undergoes a displacement adjustment by the optical flow field , which is generated hierarchically by the different layers of the optical flow estimation module.

This spatial transformation process can be expressed as: . This equation can be expanded as

where represents the feature map of the i-th layer in the encoder–decoder module; represent the horizontal and vertical coordinates in the coordinate grid; and denote the displacement fields along the horizontal and vertical directions in the optical flow field, respectively. These displacement values are calculated based on the original pixel coordinates and are used to compute the final displacement.
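As an illustration of the resampling step, the following NumPy sketch displaces a coordinate grid by a flow field and samples the image at the displaced positions. It is a minimal sketch under stated assumptions: bilinear interpolation, border clamping, and a single-channel image are choices made here for brevity, and the function name `warp_with_flow` is hypothetical. The actual implementation may differ, for example by using a framework-provided sampler.

```python
import numpy as np


def warp_with_flow(image: np.ndarray, flow: np.ndarray) -> np.ndarray:
    """Resample `image` (H, W) at grid positions displaced by `flow` (H, W, 2).

    flow[..., 0] is the horizontal displacement and flow[..., 1] the vertical
    displacement, both in pixels. Sampling positions outside the image are
    clamped to the border (an assumption of this sketch).
    """
    h, w = image.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")

    # Displaced sampling coordinates: grid position plus flow.
    x = np.clip(xs + flow[..., 0], 0, w - 1)
    y = np.clip(ys + flow[..., 1], 0, h - 1)

    # Integer neighbours and fractional offsets for bilinear interpolation.
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = x - x0
    wy = y - y0

    top = (1 - wx) * image[y0, x0] + wx * image[y0, x1]
    bottom = (1 - wx) * image[y1, x0] + wx * image[y1, x1]
    return (1 - wy) * top + wy * bottom
```

A zero flow returns the input unchanged, and a constant horizontal flow of one pixel makes every output pixel take the value of its right-hand neighbour, which is a quick way to confirm the sign convention.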

3.3. Correction Module

In the distortion correction module, the CAFSM operates on multi-scale feature maps $\{F_i\}$ extracted from the decoder layers. For each decoder layer, the input feature map is derived by applying a convolutional operation to the previous layer’s feature map, expressed as

$$F_i = \mathrm{Conv}\left(F_{i-1}\right),$$

where $F_{i-1}$ is the feature map from the previous decoder layer. This hierarchical approach enables the module to leverage multi-scale features, progressively refining them at different levels for improved distortion correction.

To dynamically adjust feature importance, CAFSM employs a channel attention mechanism. For channel $c$ of a feature map $F_i$, the global descriptor is computed as the spatial average of feature values across the height ($H$) and width ($W$):

$$z_c = \frac{1}{H W} \sum_{h=1}^{H} \sum_{w=1}^{W} F_{i,c}(h, w),$$

followed by generating channel weights through two fully connected layers and activation functions

$$s = \sigma\left(W_2\, \delta\left(W_1 z\right)\right),$$

where $\sigma$ and $\delta$ denote the Sigmoid and ReLU functions, respectively, and $W_1$ and $W_2$ are the weights of the two fully connected layers. The adjusted feature map is obtained by scaling the original map with

$$\tilde{F}_{i,c} = s_c \cdot F_{i,c}.$$

This mechanism enables DCAN to focus on crucial channels while suppressing less relevant ones.
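The channel attention steps described above (spatial averaging, two fully connected layers with ReLU and Sigmoid, then channel-wise scaling) follow the squeeze-and-excitation pattern of [41]. The NumPy sketch below illustrates that pattern only. The weight shapes, the reduction ratio, the omission of bias terms, and the function names are assumptions for illustration and are not taken from the CAFSM implementation.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(features: np.ndarray, w1: np.ndarray, w2: np.ndarray):
    """Squeeze-and-excitation style recalibration.

    features: (C, H, W) feature map.
    w1: (C // r, C) weights of the first fully connected layer (r is an
        assumed reduction ratio).
    w2: (C, C // r) weights of the second fully connected layer.
    Returns the rescaled feature map and the per-channel weights.
    """
    z = features.mean(axis=(1, 2))        # squeeze: spatial average per channel
    hidden = np.maximum(w1 @ z, 0.0)      # first FC layer followed by ReLU
    s = sigmoid(w2 @ hidden)              # second FC layer followed by Sigmoid
    return features * s[:, None, None], s  # scale each channel by its weight


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    channels, reduction = 8, 4
    feats = rng.normal(size=(channels, 16, 16))
    w1 = rng.normal(size=(channels // reduction, channels))
    w2 = rng.normal(size=(channels, channels // reduction))
    out, weights = channel_attention(feats, w1, w2)
    print(out.shape, weights.round(3))
```

Because the Sigmoid keeps every weight strictly between 0 and 1, the mechanism can only attenuate channels relative to one another; it never changes the spatial layout of a channel.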

To enhance the integration of local and global features, CAFSM introduces a fusion selective mechanism. This mechanism adaptively combines refined local features and global features derived from all prior layers. The fused feature map is calculated as

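$$F_i^{\mathrm{fused}} = \alpha\, \tilde{F}_i + \beta\, G_i,$$

where $\tilde{F}_i$ denotes the refined local features, $G_i$ denotes the global features derived from all prior layers, and $\alpha$ and $\beta$ are learnable weights that balance the contributions of local and global information. The fused features are progressively integrated into the subsequent layers, with the updated input feature map at layer $i+1$ expressed as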

where represents the fusion operation. Finally, the reconstructed feature map is generated through an upsampling operation

where L denotes the last decoder layer. This progressive refinement effectively balances global consistency and local detail preservation, significantly improving the precision and robustness of distortion correction.

3.4. Training and Loss Function

To achieve superior performance in distortion correction, we optimize the DCAN with a comprehensive loss function designed to balance global structural consistency, local detail refinement, and multi-scale feature integration. This loss function combines three key components, each addressing a specific aspect of image correction. The overall loss function is formulated as

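$$\mathcal{L} = \lambda_1 \mathcal{L}_{SSL} + \lambda_2 \mathcal{L}_{EHL} + \lambda_3 \mathcal{L}_{MSL},$$

where $\mathcal{L}_{SSL}$, $\mathcal{L}_{EHL}$, and $\mathcal{L}_{MSL}$ represent the SSIM Loss, Enhanced Loss, and Multi-Scale Loss, respectively. The hyperparameters $\lambda_1$, $\lambda_2$, and $\lambda_3$ are introduced to balance the contribution of each loss component during training. The rationale for incorporating these losses is detailed below.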

SSIM Loss (SSL): To ensure the perceptual alignment of the output image with the ground truth , we adopt an enhanced SSIM Loss. This loss extends the traditional SSIM metric by incorporating dynamically computed channel weights and spatial feature intensities , derived from the CAFSM. This design not only captures brightness, contrast, and structure but also dynamically prioritizes features based on their importance. The enhanced SSIM is expressed as

where represents channel-wise importance, and denotes spatial feature intensity. The SSIM Loss is computed as

by directly optimizing for structural similarity, this loss ensures that the generated images maintain both global structure and local detail fidelity.

Enhanced Loss (EHL): While the SSIM Loss captures perceptual quality, Enhanced Loss focuses on refining texture details and preserving structural accuracy. This loss combines content loss and style loss to balance pixel-level similarity and stylistic coherence

Content Loss: The Content Loss emphasizes feature-level alignment between the output and ground truth images. It leverages a pre-trained VGG network, which effectively captures high-level semantic and structural features beyond simple pixel-wise differences. Compared with traditional L1 or L2 loss, VGG-based content loss aligns better with human visual perception, ensuring that the corrected image preserves fine details and global consistency while reducing distortion. This is particularly beneficial for fisheye image rectification, where maintaining structural integrity is crucial. The loss is defined as

where represents the feature map from the j-th layer of the VGG network.

Style Loss: The Style Loss captures stylistic alignment by comparing the Gram matrices of the feature maps from the output and ground truth images

here, denotes the Gram matrix of feature maps. By integrating both content loss and style loss, the enhanced loss ensures that the output images retain critical texture details and stylistic coherence.
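To make the content and style terms concrete, the sketch below computes a Gram matrix and the two losses on plain arrays that stand in for VGG feature maps. It is an illustration under assumptions: the normalization of the Gram matrix, the use of squared differences, and the function names are choices made here, since the exact norms used in the paper are not recoverable from this section. Extracting real VGG features is outside the scope of the sketch.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Gram matrix of a (C, H, W) feature map, normalized by C * H * W."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (c * h * w)


def content_loss(feat_out: np.ndarray, feat_gt: np.ndarray) -> float:
    """Mean squared difference between two feature maps (assumed norm)."""
    return float(np.mean((feat_out - feat_gt) ** 2))


def style_loss(feat_out: np.ndarray, feat_gt: np.ndarray) -> float:
    """Squared Frobenius distance between Gram matrices (assumed norm)."""
    diff = gram_matrix(feat_out) - gram_matrix(feat_gt)
    return float(np.sum(diff ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Random arrays stand in for features of the output and ground truth.
    feat_out = rng.normal(size=(4, 8, 8))
    feat_gt = rng.normal(size=(4, 8, 8))
    print("content:", content_loss(feat_out, feat_gt))
    print("style:", style_loss(feat_out, feat_gt))
```

The Gram matrix discards spatial positions and keeps only channel co-activation statistics, which is why the style term complements the position-sensitive content term.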

Multi-Scale Loss (MSL): To leverage multi-scale information for comprehensive correction, we introduce the Multi-Scale Loss. This loss incorporates features at different resolutions, ensuring effective correction across both coarse and fine-grained scales. The formulation is

where resizes x to of its original size, and , represent features from the decoder and correction layers, respectively. The operator ⊕ denotes feature concatenation.

Together, these three components form a robust framework for optimizing the network. The Structural Similarity Loss enhances perceptual quality, the Enhanced Loss refines structural and textural details, and the Multi-Scale Loss ensures that features from different resolutions contribute effectively to the final output. The hyperparameters are empirically tuned to maximize performance, demonstrating the efficacy of the proposed loss function in distortion correction tasks.

4. Experimental Procedure and Results

The evaluation of the proposed method is conducted using a synthetic fisheye dataset specifically designed to simulate diverse distortion scenarios. This dataset is generated from the official Places2 dataset [42], which comprises over 10 million high-resolution images spanning more than 400 diverse scenes. To ensure sufficient variability for robust training and testing, one million images are randomly sampled for training, while 8000 images are reserved for testing. All images are resized to pixels to match the input requirements of the model.

The fisheye distortions are simulated using a polynomial distortion model parameterized by , defined as

where and represent the undistorted and distorted radii, respectively, relative to the image center. The distortion coefficients , , , and control the degree of fisheye distortion, with higher-order terms capturing more complex distortion effects. In this study, the parameters are carefully selected within the ranges , , , and . As illustrated in Figure 3, this parameterized approach allows for the generation of a comprehensive dataset exhibiting a wide range of distortion intensities and patterns, supporting both model generalization and evaluation. By leveraging this dataset, the proposed method’s ability to handle varying levels of distortion is thoroughly assessed in both quantitative and qualitative experiments.
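A synthetic fisheye image of this kind can be generated by remapping pixel radii with a polynomial. The sketch below assumes the even-order form commonly used for this purpose, $r_u = r_d\,(1 + k_1 r_d^2 + k_2 r_d^4 + k_3 r_d^6 + k_4 r_d^8)$, with radii measured in pixels from the image center. That form, the nearest-neighbour sampling, the zero fill outside the source image, and the function name are assumptions for illustration; the coefficient values in the usage lines are arbitrary and are not the ranges used in the paper.

```python
import numpy as np


def synthesize_fisheye(image: np.ndarray, coeffs) -> np.ndarray:
    """Build a distorted image by sampling the undistorted one.

    For every pixel of the distorted output at radius r_d, the source pixel
    lies at radius r_u = r_d * (1 + k1*r_d^2 + k2*r_d^4 + ...), along the same
    direction from the image center. Pixels whose source falls outside the
    image are left at zero.
    """
    h, w = image.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    dx, dy = xs - cx, ys - cy
    r_d2 = dx ** 2 + dy ** 2

    # scale = r_u / r_d, accumulated one even power at a time.
    scale = np.ones_like(r_d2, dtype=float)
    power = np.ones_like(r_d2, dtype=float)
    for k in coeffs:
        power = power * r_d2
        scale = scale + k * power

    src_x = np.rint(cx + dx * scale).astype(int)
    src_y = np.rint(cy + dy * scale).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)

    out = np.zeros_like(image)
    out[valid] = image[src_y[valid], src_x[valid]]
    return out


if __name__ == "__main__":
    image = np.arange(1, 65 * 65 + 1, dtype=float).reshape(65, 65)
    # Arbitrary illustrative coefficients, not the paper's ranges.
    distorted = synthesize_fisheye(image, [1e-4, 0.0, 0.0, 0.0])
    print(distorted.shape, int((distorted == 0).sum()), "pixels left empty")
```

With all coefficients set to zero the mapping is the identity, and positive coefficients pull content toward the center, leaving the borders empty as in typical fisheye imagery.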

Figure 3.

Synthetic fisheye dataset. (Top): Original images from the Places2 dataset [42]. (Middle): Corrected images corresponding to the distorted samples. (Bottom): Generated fisheye images with different distortion levels.


To provide a thorough validation of the proposed method, comparisons are conducted against a selection of state-of-the-art fisheye distortion correction approaches, including SimFIR [39], DeepCalib [28], DR-GAN [35], PCN [37], and QueryCDR [38]. These methods incorporate advanced neural network designs and attention mechanisms, representing a broad spectrum of strategies for addressing distortion correction. The evaluation protocol involved both quantitative metrics and qualitative visual assessments, ensuring a comprehensive analysis. Furthermore, to ensure consistency and fairness, all methods are trained and tested using the same dataset configuration.

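The performance of DCAN is quantitatively evaluated using structural similarity (SSIM) [43], peak signal-to-noise ratio (PSNR) [44], and Fréchet Inception Distance (FID) [45]. These metrics are selected to comprehensively assess the structural consistency, pixel-level fidelity, and perceptual quality of the corrected images. Additionally, a complexity metric is introduced to classify the test dataset into different levels of difficulty, enabling a more detailed analysis of model robustness under varying scenarios. The mathematical definitions of the evaluation metrics are outlined below:

$$\mathrm{SSIM}(x, y) = \frac{\left(2\mu_x\mu_y + C_1\right)\left(2\sigma_{xy} + C_2\right)}{\left(\mu_x^2 + \mu_y^2 + C_1\right)\left(\sigma_x^2 + \sigma_y^2 + C_2\right)},$$

where $\mu_x$ and $\mu_y$ are the mean intensities, $\sigma_x^2$ and $\sigma_y^2$ are the variances, $\sigma_{xy}$ is the covariance, and $C_1$ and $C_2$ are stabilizing constants.

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right),$$

where MSE is the mean squared error and MAX is the maximum possible pixel value.

$$\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \mathrm{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right),$$

where $(\mu_r, \Sigma_r)$ and $(\mu_g, \Sigma_g)$ are the means and covariances of the reference and generated images in the feature space.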
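For reference, PSNR and a simplified SSIM can be computed in a few lines of NumPy. This is a minimal sketch, assuming 8-bit images (MAX = 255) and the usual constants $C_1 = (0.01\,\mathrm{MAX})^2$ and $C_2 = (0.03\,\mathrm{MAX})^2$. The SSIM here uses global image statistics rather than the sliding local windows of standard implementations, so its values will not match library results exactly. FID is omitted because it requires features from a pretrained Inception network.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = np.mean((reference.astype(float) - test.astype(float)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))


def global_ssim(x: np.ndarray, y: np.ndarray, max_value: float = 255.0) -> float:
    """SSIM computed from whole-image statistics (a simplification)."""
    x = x.astype(float)
    y = y.astype(float)
    c1 = (0.01 * max_value) ** 2
    c2 = (0.03 * max_value) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = rng.integers(0, 256, size=(64, 64)).astype(float)
    noisy = np.clip(clean + rng.normal(scale=10.0, size=clean.shape), 0, 255)
    print("PSNR:", psnr(clean, noisy))
    print("SSIM (global):", global_ssim(clean, noisy))
```

Higher PSNR and SSIM indicate a closer match to the reference, whereas a lower FID indicates that the generated images are statistically closer to the reference set.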

Additionally, the complexity metric is defined as

where KD, TV, and ED represent keypoint density, texture variance, and edge density, respectively. Based on , the test dataset is categorized into three complexity levels: low (), medium (), and high ().

The training process is carried out using the Adam optimizer, with , , and an initial learning rate of . To balance the contributions of different loss components in the overall objective function, the hyperparameters are empirically set to , , and . These values ensure that the SSIM Loss (), Enhanced Loss (), and Multi-Scale Loss () collectively contribute to the optimization process while maintaining their respective impacts. Furthermore, learnable weights and are introduced in the CAFSM to dynamically balance the integration of local and global features. Initially, these weights are set as 0.5 to ensure an equal contribution of local and global features at the start of training. These weights are updated dynamically through backpropagation and are constrained within the range , ensuring numerical stability and avoiding overfitting. This adaptive mechanism allows the model to prioritize features based on the characteristics of the input distortions, effectively improving the balance between global consistency and local detail preservation. By combining the carefully tuned hyperparameters and the dynamic adjustment mechanism of and , the proposed framework demonstrates superior adaptability and robustness across varying distortion levels.

The batch size is set to 32, and the model is trained for 180 epochs. The learning rate linearly decays from 0.2 to 0.05 over the training iterations. All experiments are conducted using the PyTorch framework (version 2.1.2) on an NVIDIA GeForce RTX 4090 GPU, ensuring efficient computation of the large-scale dataset.

4.1. Comparison Results

Quantitative Evaluation: The quantitative evaluation results, summarized in Table 1, present the PSNR, SSIM, and FID metrics across various complexity levels (, , , , , and ). These metrics comprehensively evaluate reconstruction accuracy, structural similarity, and perceptual quality, respectively. The dataset complexity is categorized into very low, low, medium, high, very high, and extreme levels based on keypoint density, texture variance, and edge density. In very low complexity scenarios (), where distortions are minimal, the proposed method achieves a PSNR of 24.64 dB, exceeding the next-best performer, QueryCDR, by 1.32 dB. The SSIM of 0.9199 shows an improvement of 3.03% compared with QueryCDR, while the FID of 27.7 indicates a perceptual quality enhancement over QueryCDR, which achieves a value of 28.3. At low complexity levels (), DCAN achieves a PSNR of 24.58 dB and an SSIM of 0.9159, reflecting improvements of 1.62 dB and 5.71% over QueryCDR and PCN, respectively. The FID of 27.8 further highlights the perceptual advantage, with QueryCDR recording a higher value of 28.9. In medium complexity scenarios (), DCAN achieves a PSNR of 24.43 dB, SSIM of 0.9191, and FID of 28.1. Compared with SimFIR, which records a PSNR of 21.84 dB and SSIM of 0.8411, the proposed method provides improvements of 11.85% and 9.27%, respectively. The reduction in FID by 6.03% compared with QueryCDR further demonstrates robustness in handling moderate distortions. For high complexity levels (), the proposed method achieves a PSNR of 24.47 dB and an SSIM of 0.9195, both outperforming QueryCDR, which achieves values of 22.79 dB and 0.7983. The FID of 28.0, which is lower than the value of 30.1 achieved by QueryCDR, highlights the ability of the proposed method to preserve perceptual fidelity in challenging conditions. At very high complexity levels (), DCAN obtains a PSNR of 24.52 dB, SSIM of 0.9189, and FID of 28.2, maintaining a consistent advantage over competing approaches.
When compared with SimFIR, the proposed method achieves an SSIM improvement of more than 10.37%, demonstrating its effectiveness in preserving structural details even in scenarios with significant distortions. At the extreme complexity level (), the proposed method achieves a PSNR of 24.41 dB, SSIM of 0.9186, and FID of 28.6. These results exceed the corresponding values of QueryCDR, which records a PSNR of 22.86 dB, SSIM of 0.7895, and FID of 30.9, further validating the adaptability and robustness of the proposed method. Across all complexity levels, the proposed method attains an average PSNR of 24.51 dB, which is an improvement of 6.75% over QueryCDR, with an average PSNR of 22.96 dB. Similarly, the average SSIM is 0.9187, representing an enhancement of 6.98% compared with PCN, which achieves an average SSIM of 0.8587. The average FID of 28.1 is also superior to QueryCDR, which records a value of 29.9, reflecting a perceptual improvement of 6.02%. These results highlight the effectiveness of the advanced feature fusion mechanisms and structural preservation strategies employed by DCAN. Unlike fixed-pattern approaches such as QueryCDR, DCAN dynamically adjusts to varying levels of distortion, ensuring superior reconstruction accuracy and perceptual quality. This adaptability makes the proposed method well suited for real-world applications in diverse and challenging scenarios.
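The averaged improvements quoted above can be re-derived from the reported averages with simple arithmetic. The short check below uses only the numbers stated in the text; the helper name `relative_gain` is hypothetical. Because the inputs are rounded averages, the recomputed SSIM gain comes out marginally above the quoted 6.98%, which is consistent with rounding.

```python
def relative_gain(ours: float, baseline: float, lower_is_better: bool = False) -> float:
    """Relative improvement over a baseline, in percent."""
    if lower_is_better:
        return (baseline - ours) / baseline * 100.0
    return (ours - baseline) / baseline * 100.0


# Averages reported in the text.
psnr_dcan, psnr_querycdr = 24.51, 22.96
ssim_dcan, ssim_pcn = 0.9187, 0.8587
fid_dcan, fid_querycdr = 28.1, 29.9

psnr_gain_db = psnr_dcan - psnr_querycdr
psnr_gain_pct = relative_gain(psnr_dcan, psnr_querycdr)
ssim_gain_abs = ssim_dcan - ssim_pcn
ssim_gain_pct = relative_gain(ssim_dcan, ssim_pcn)
fid_gain_pct = relative_gain(fid_dcan, fid_querycdr, lower_is_better=True)

if __name__ == "__main__":
    print(f"PSNR gain: {psnr_gain_db:.2f} dB ({psnr_gain_pct:.2f}%)")
    print(f"SSIM gain: {ssim_gain_abs:.2f} ({ssim_gain_pct:.2f}%)")
    print(f"FID reduction: {fid_gain_pct:.2f}%")
```

The absolute gains of about 1.55 dB in PSNR and 0.06 in SSIM are the same figures cited in the abstract.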

Table 1.

Comparison across the six complexity levels (bold indicates the best value).

As shown in Table 2, DCAN achieves an effective balance between computational complexity and inference speed through multi-scale feature extraction and progressive refinement. While it has slightly higher FLOPs and parameters than lightweight architectures such as SimFIR and DeepCalib, it maintains competitive runtime efficiency, demonstrating its capability to process high-resolution distorted images effectively. This trade-off highlights the architectural optimization that ensures both computational efficiency and high-fidelity correction, making DCAN well suited for demanding distortion correction tasks.
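Table 2 reports parameters, FLOPs, and inference time. As a rough illustration of how such figures are commonly obtained, the sketch below counts parameters and FLOPs for a single convolution layer and measures the median wall-clock latency of an arbitrary callable. All names (`conv2d_params`, `conv2d_flops`, `time_inference`) and the stand-in model are hypothetical; the measurement protocol actually used for Table 2 is not specified in this section.

```python
import statistics
import time


def conv2d_params(in_ch, out_ch, kernel, bias=True):
    """Number of learnable parameters in a square-kernel 2D convolution."""
    return in_ch * out_ch * kernel * kernel + (out_ch if bias else 0)


def conv2d_flops(in_ch, out_ch, kernel, out_h, out_w):
    """Approximate FLOPs of one forward pass (bias ignored).

    Assumption: one multiply-accumulate is counted as 2 FLOPs. Some papers
    count it as 1, so absolute values depend on the convention.
    """
    return 2 * in_ch * out_ch * kernel * kernel * out_h * out_w


def time_inference(model_fn, sample, warmup=3, runs=20):
    """Median wall-clock time in seconds of model_fn(sample)."""
    for _ in range(warmup):  # warm-up calls are not timed
        model_fn(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        model_fn(sample)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


if __name__ == "__main__":
    # Stand-in for a real network: any callable taking one input works here.
    def dummy_model(x):
        return sum(v * v for v in x)

    print("params:", conv2d_params(3, 64, 3))
    print("FLOPs: ", conv2d_flops(3, 64, 3, 256, 256))
    print("median latency (s):", time_inference(dummy_model, list(range(1000))))
```

On a GPU, execution is typically asynchronous, so a real benchmark would need to synchronize the device before reading the clock.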

Table 2.

Computational complexity and inference time comparison (bold indicates the best value, underline indicates the second-best value).

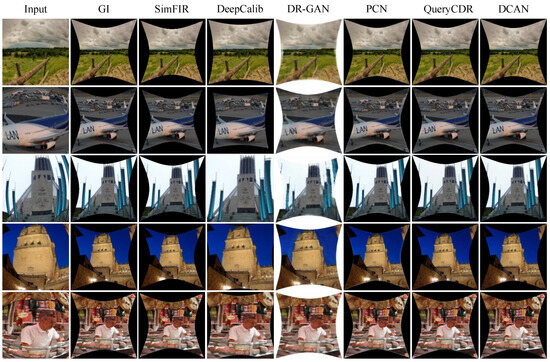

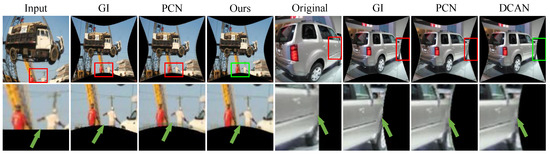

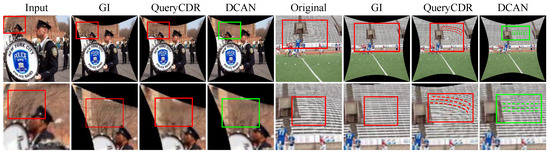

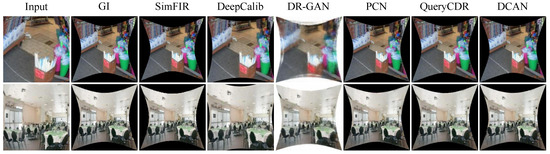

Qualitative Evaluation: For a visual comparison, we show the images corrected by the various algorithms on our synthetic dataset. As Figure 4 illustrates, the learning-based methods SimFIR, DeepCalib, DR-GAN, PCN, and QueryCDR achieve visually improved corrections thanks to the global semantic features extracted by neural networks. However, these approaches struggle to recover appropriate distributions under severe distortion because their learning strategies are simple and insufficient. SimFIR corrects the distorted parts of the image, but physical objects often appear smaller than in the ground truth and some edge information is lost. DeepCalib [28] corrects the inner areas of the image well but degrades in the boundary regions. DR-GAN is limited by the inherent properties of its network, and the images it generates show blurring. PCN frequently yields overcorrected results and has difficulty adapting flexibly to varying degrees of distortion. Additionally, Figures 5, 6, and 7 provide further comparisons with SimFIR, PCN, and QueryCDR, respectively. The advantages of DCAN over SimFIR and PCN are clearly visible. To further highlight the differences between DCAN and QueryCDR, we mark regions in the corrected images with bounding boxes: green boxes mark areas with high-quality correction, while red boxes indicate regions where correction is less satisfactory. These visual comparisons illustrate the strengths of DCAN in recovering fine details and achieving consistent correction across the entire image. The results indicate that DCAN achieves better correction quality than the other methods, retaining fine details while avoiding excessive smoothing, information loss, and blurring. In the qualitative evaluation, DCAN achieves the best overall correction performance among the compared approaches and closely approximates the ground truth.

Figure 4.

Visual comparison on synthetic images in different scenarios. The compared state-of-the-art methods include one regression-based method (DeepCalib) and four generation-based methods (SimFIR, DR-GAN, PCN, and QueryCDR).

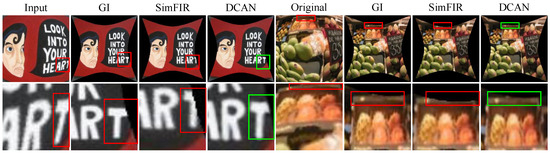

Figure 5.

Additional comparison between SimFIR and DCAN. DCAN results provide additional information. Red borders mark regions with distortion or unclear details, and green borders mark regions where DCAN improves clarity and preserves details.

Figure 6.

Additional comparison between PCN and DCAN. DCAN results provide additional details. Red borders mark regions with distortion or unclear details, and green borders mark regions where DCAN improves clarity and preserves details.

Figure 7.

Additional comparison between QueryCDR and DCAN. DCAN results show notable improvements in both detail correction and image clarity. Red borders mark regions with distortion or unclear details, and green borders mark regions where DCAN improves clarity and preserves details.

Comparison of Real-world Images: The network is trained on synthetic data. To confirm the feasibility of the approach in practice, we test the generalization performance of DCAN on real-world fisheye photos, as Figure 8 illustrates. Despite the inevitable disparities between synthetic and real fisheye photos, the corrections produced by DCAN are superior to those of previous approaches in terms of both global scene distribution and information retention. These outcomes show that DCAN generalizes well to real-world fisheye photos.

Figure 8.

Qualitative comparison of real-world fisheye images. From left to right, we show the input distorted images, the results of five different methods (SimFIR, DeepCalib, DR-GAN, PCN, and QueryCDR), and the results of our proposed method. Our method achieves the best overall visual quality among all compared methods.

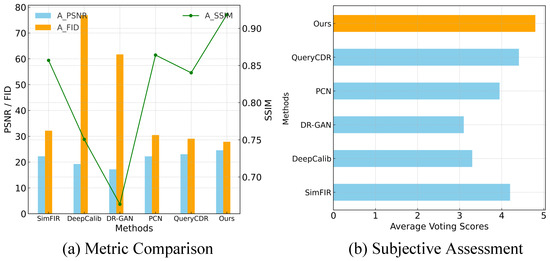

To comprehensively evaluate the performance of different fisheye correction methods, both objective metrics and subjective assessments are used. Figure 9a presents the average PSNR, SSIM, and FID of the various methods, where higher PSNR and SSIM values indicate better image fidelity and lower FID scores reflect more realistic and visually consistent results. For the subjective evaluation, a voting experiment is conducted with ten volunteers who have a strong background in image processing and are familiar with the image content, which supports reliable feedback. A total of 200 fisheye images are randomly selected from diverse scenes, including campus, street, and indoor environments, and are rectified using the different methods. To ensure fairness, the images are divided into ten groups of 20 images each, with their order randomized to avoid bias. Each group is evaluated by a single volunteer, who rates the rectified images on a scale of 0 (worst) to 5 (best). As shown in Figure 9b, DCAN achieves the highest subjective ratings among all approaches.
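The grouping and scoring procedure described above can be sketched in a few lines of Python. This is only an illustration of the protocol as stated (200 images, ten groups of 20, randomized order, scores from 0 to 5); the function names, the fixed seed, and the toy ratings are assumptions and do not come from the authors' evaluation scripts.

```python
import random
from collections import defaultdict


def make_groups(image_ids, n_groups=10, seed=0):
    """Shuffle the images and split them into equally sized groups.

    Assumes len(image_ids) is divisible by n_groups (200 / 10 in the text);
    any remainder would be dropped.
    """
    rng = random.Random(seed)  # fixed seed only for reproducibility
    shuffled = list(image_ids)
    rng.shuffle(shuffled)
    size = len(shuffled) // n_groups
    return [shuffled[i * size:(i + 1) * size] for i in range(n_groups)]


def mean_scores(ratings):
    """Average score per method from (method, score) pairs, score in [0, 5]."""
    totals = defaultdict(lambda: [0.0, 0])
    for method, score in ratings:
        if not 0 <= score <= 5:
            raise ValueError(f"score out of range: {score}")
        totals[method][0] += score
        totals[method][1] += 1
    return {method: total / count for method, (total, count) in totals.items()}


if __name__ == "__main__":
    groups = make_groups(range(200))
    print(len(groups), "groups of", len(groups[0]), "images")

    # Toy ratings for two hypothetical methods, not real study data.
    toy = [("method_a", 4), ("method_a", 5), ("method_b", 3)]
    print(mean_scores(toy))
```

Each volunteer would rate one group, and the per-method averages over all groups give the bars shown in a plot such as Figure 9b.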

Figure 9.

Metric comparison and subjective evaluation. (a) A comparison of the average PSNR, SSIM, and FID across different fisheye correction methods. (b) Subjective assessment based on voting scores for correction results.

4.2. Ablation Study

Comprehensive ablation experiments are performed to assess the contributions of individual modules and loss functions to the overall performance of DCAN. This section specifically examines the influence of the CAFSM on the quality of the generated images. By systematically removing these components, we aim to elucidate their roles in the image restoration process.

Experimental analysis of structure and loss function ablation: Ablation studies are conducted on both the network structure and the loss functions of DCAN to evaluate their respective contributions to the overall performance, as shown in Table 3. Removing the flow estimation module (w/o FNM) results in substantial performance degradation, with PSNR decreasing to 15.88 dB, SSIM decreasing to 0.4713, and FID increasing to 198.7, highlighting its critical role in effective distortion correction. Removing the distortion correction module (w/o DCM) yields better results than w/o FNM, with PSNR and SSIM reaching 17.96 dB and 0.6434, respectively, though these remain significantly lower than those of the complete model. Furthermore, excluding the channel attention and selective fusion module (w/o CAFSM) reduces PSNR to 22.36 dB and SSIM to 0.8779, emphasizing its importance in enhancing correction capabilities. Regarding the loss functions, removing all three losses (w/o EHL and MSL and SSL) causes noticeable performance degradation, with PSNR, SSIM, and FID of 22.38 dB, 0.8688, and 31.9, respectively. Excluding only the structural similarity loss (w/o SSL) reduces SSIM to 0.8772, underscoring its significance in preserving structural details. Similarly, removing the enhancement and multi-scale losses (w/o EHL and MSL) results in PSNR and SSIM values of 23.87 dB and 0.8801, respectively. The complete DCAN model, integrating all modules and loss functions, achieves the best performance, with a PSNR of 24.59 dB, an SSIM of 0.9110, and an FID of 28.6, validating the soundness of the proposed design and the complementary contributions of each component.
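To make the relative importance of each component easier to read, the drops with respect to the complete model can be tabulated directly from the values quoted in this paragraph. The snippet below is a small sketch of that arithmetic; the dictionary layout and the function name `drops` are illustrative choices, and only metrics stated in the text are included (no PSNR is quoted for w/o SSL).

```python
# Values copied from the ablation paragraph above.
full_model = {"psnr": 24.59, "ssim": 0.9110}
ablations = {
    "w/o FNM": {"psnr": 15.88, "ssim": 0.4713},
    "w/o DCM": {"psnr": 17.96, "ssim": 0.6434},
    "w/o CAFSM": {"psnr": 22.36, "ssim": 0.8779},
    "w/o EHL and MSL and SSL": {"psnr": 22.38, "ssim": 0.8688},
    "w/o SSL": {"ssim": 0.8772},
    "w/o EHL and MSL": {"psnr": 23.87, "ssim": 0.8801},
}


def drops(full, variants, metric):
    """Drop of `metric` relative to the full model, largest drop first."""
    result = {
        name: round(full[metric] - values[metric], 4)
        for name, values in variants.items()
        if metric in values  # skip variants without a reported value
    }
    return dict(sorted(result.items(), key=lambda item: item[1], reverse=True))


if __name__ == "__main__":
    for metric in ("psnr", "ssim"):
        print(metric.upper())
        for name, delta in drops(full_model, ablations, metric).items():
            print(f"  {name:<26} -{delta}")
```

Sorted this way, the flow estimation module accounts for the largest PSNR drop, followed by the distortion correction module, which matches the ordering discussed in the text.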

Table 3.

Performance comparison of different structures and loss functions (bold indicates the best value).

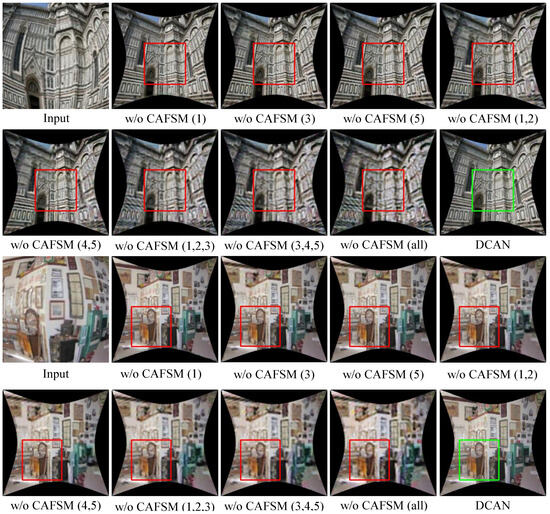

Experimental analysis of CAFSM ablation: To further explore the role of the CAFSM in different convolutional layers, layer-by-layer ablation experiments are designed, and the results are shown in Table 4 and Figure 10. Firstly, removing the CAFSM in layer 1 (w/o CAFSM (1)) decreases the PSNR to 23.12 dB and the SSIM to 0.8600, indicating that the CAFSM in layer 1 is essential for correcting the external features. The performance decreases slightly when the CAFSM of layer 3 or layer 5 is removed (w/o CAFSM (3) and w/o CAFSM (5)), indicating that these layers also contribute to detail correction. Removing multiple CAFSMs (w/o CAFSM (1,2) and w/o CAFSM (4,5)) results in a further decrease in PSNR and SSIM; in particular, removing all CAFSMs (w/o CAFSM (all)) results in a PSNR of only 22.36 dB and an SSIM of 0.8779. To visualize the results of these ablation experiments, the corrected regions in the test images are highlighted using bounding boxes, where green boxes denote areas with high-quality correction and red boxes indicate regions with noticeable degradation or suboptimal correction. This visualization shows the contribution of the CAFSM at different layers to the correction of specific image regions. Comparison with the full model shows that the CAFSM contributes positively to the final correction at every layer, especially at the middle and deep layers of the convolutional network. These ablation experiments therefore indicate that the CAFSM plays an indispensable role in improving correction accuracy and detail processing in DCAN, providing targeted optimization at different feature levels.

Table 4.

Performance of using channel attention and selective fusion modules on different convolutional layer outputs (bold indicates the best value).

Figure 10.

A comparison of results without CAFSM on different layers.

5. Conclusions

In conclusion, DCAN integrates a dedicated flow estimation network and a correction network augmented with the CAFSM to address complex fisheye distortions. By combining channel and spatial attention mechanisms with a selective kernel fusion strategy, the CAFSM enhances feature representation during upsampling, enabling precise and visually superior corrections. The network preserves essential details and ensures structural consistency across different image regions, demonstrating robustness and adaptability. Comprehensive experiments validate the proposed DCAN. The results show that it consistently outperforms existing approaches across evaluation metrics, including improvements of +1.5 dB in PSNR, +0.10 in SSIM, and −1.8 in FID compared with the latest method, QueryCDR. These improvements highlight the value of the dual-path architecture and the effectiveness of the CAFSM in addressing challenging distortion scenarios while preserving fine details and structural integrity. Additionally, the modular design of the network provides flexibility for future enhancements and potential applications in broader image-processing tasks.

Future Work

Future work will enhance the model’s robustness for extreme distortion and complex content while exploring domain adaptation. We will also optimize it for real-time applications like video correction and AR, ensuring stable performance on hardware accelerators. These efforts aim to expand DCAN’s use in autonomous driving and media.

Author Contributions

Methodology, J.L. and S.P.; Software, S.P.; Writing—original draft, S.P.; Writing—review and editing, J.L., A.G. and J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the special funds for promoting high-quality industrial development in Shanghai under Grant JJ-ZDHYLY-01-23-0004 and in part by the National Natural Science Foundation of China under Grants 62204044 and 62404132.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All datasets used are available online with open access.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Muhammad, K.; Ahmad, J.; Lv, Z.; Bellavista, P.; Yang, P.; Baik, S.W. Efficient deep CNN-based fire detection and localization in video surveillance applications. IEEE Trans. Syst. Man Cybern. Syst. 2018, 49, 1419–1434.

2. Eichenseer, A.; Bätz, M.; Kaup, A. Motion estimation for fisheye video with an application to temporal resolution enhancement. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 2376–2390.

3. Abu Alhaija, H.; Mustikovela, S.K.; Mescheder, L.; Geiger, A.; Rother, C. Augmented reality meets computer vision: Efficient data generation for urban driving scenes. Int. J. Comput. Vis. 2018, 126, 961–972.

4. Grigorescu, S.; Trasnea, B.; Cocias, T.; Macesanu, G. A survey of deep learning techniques for autonomous driving. J. Field Robot. 2020, 37, 362–386.

5. Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in perception for autonomous driving: Waymo open dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2446–2454.

6. Wei, X.; Zhou, M.; Jia, W. 360° Streaming. IEEE Trans. Ind. Inform. 2022, 19, 6326–6336.

7. Lin, H.S.; Chang, C.C.; Chang, H.Y.; Chuang, Y.Y.; Lin, T.L.; Ouhyoung, M. A low-cost portable polycamera for stereoscopic 360 imaging. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 915–929.

8. Courbon, J.; Mezouar, Y.; Eckt, L.; Martinet, P. A generic fisheye camera model for robotic applications. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 1683–1688.

9. Courbon, J.; Mezouar, Y.; Martinet, P. Evaluation of the unified model of the sphere for fisheye cameras in robotic applications. Adv. Robot. 2012, 26, 947–967.

10. Zhang, Y.; You, S.; Gevers, T. Automatic calibration of the fisheye camera for egocentric 3D human pose estimation from a single image. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2021; pp. 1772–1781.

11. Aso, K.; Hwang, D.H.; Koike, H. Portable 3D human pose estimation for human-human interaction using a chest-mounted fisheye camera. In Proceedings of the Augmented Humans International Conference 2021, Rovaniemi, Finland, 22–24 February 2021; pp. 116–120.

12. Xie, C.; Luo, J.; Li, K.; Yan, Z.; Li, F.; Jia, X.; Wang, Y. Image-Based Bolt-Loosening Detection Using a Checkerboard Perspective Correction Method. Sensors 2024, 24, 3271.

13. Blott, G.; Takami, M.; Heipke, C. Semantic segmentation of fisheye images. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018; pp. 1–15.

14. Deng, L.; Yang, M.; Qian, Y.; Wang, C.; Wang, B. CNN based semantic segmentation for urban traffic scenes using fisheye camera. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 231–236.

15. Kumar, V.R.; Klingner, M.; Yogamani, S.; Milz, S.; Fingscheidt, T.; Mader, P. Syndistnet: Self-supervised monocular fisheye camera distance estimation synergized with semantic segmentation for autonomous driving. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 61–71.

16. Joseph, K.; Khan, S.; Khan, F.S.; Balasubramanian, V.N. Towards open world object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 5830–5840.

17. Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. Int. J. Comput. Vis. 2020, 128, 261–318.

18. Julia, A.; Iguernaissi, R.; Michel, F.J.; Matarazzo, V.; Merad, D. Distortion Correction and Denoising of Light Sheet Fluorescence Images. Sensors 2024, 24, 2053.

19. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

20. Toepfer, C.; Ehlgen, T. A unifying omnidirectional camera model and its applications. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–20 October 2007; pp. 1–5.

21. Yin, W.; Zang, X.; Wu, L.; Zhang, X.; Zhao, J. A Distortion Correction Method Based on Actual Camera Imaging Principles. Sensors 2024, 24, 2406.

22. Barreto, J.P.; Daniilidis, K. Fundamental matrix for cameras with radial distortion. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05), Beijing, China, 17–20 October 2005; Volume 1, pp. 625–632.

23. Geiger, A.; Moosmann, F.; Car, Ö.; Schuster, B. Automatic camera and range sensor calibration using a single shot. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, St. Paul, MN, USA, 14–18 May 2012; pp. 3936–3943.

24. Thormählen, T.; Broszio, H.; Wassermann, I. Robust line-based calibration of lens distortion from a single view. In Proceedings of the Mirage 2003, Rocquencourt, France, 10–12 March 2003; pp. 105–112.

25. Wang, A.; Qiu, T.; Shao, L. A simple method of radial distortion correction with centre of distortion estimation. J. Math. Imaging Vis. 2009, 35, 165–172.

26. Bukhari, F.; Dailey, M.N. Automatic radial distortion estimation from a single image. J. Math. Imaging Vis. 2013, 45, 31–45.

27. Rong, J.; Huang, S.; Shang, Z.; Ying, X. Radial lens distortion correction using convolutional neural networks trained with synthesized images. In Proceedings of the Computer Vision – ACCV 2016: 13th Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; Revised Selected Papers, Part III 13. Springer: Berlin/Heidelberg, Germany, 2017; pp. 35–49.

28. Bogdan, O.; Eckstein, V.; Rameau, F.; Bazin, J.C. DeepCalib: A deep learning approach for automatic intrinsic calibration of wide field-of-view cameras. In Proceedings of the 15th ACM SIGGRAPH European Conference on Visual Media Production, London, UK, 13–14 December 2018; pp. 1–10.

29. Yin, X.; Wang, X.; Yu, J.; Zhang, M.; Fua, P.; Tao, D. Fisheyerecnet: A multi-context collaborative deep network for fisheye image rectification. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 469–484.

30. Liao, K.; Lin, C.; Liao, L.; Zhao, Y.; Lin, W. Multi-level curriculum for training a distortion-aware barrel distortion rectification model. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 4389–4398.

31. Yang, S.; Lin, C.; Liao, K.; Zhao, Y. Dual diffusion architecture for fisheye image rectification: Synthetic-to-real generalization. arXiv 2023, arXiv:2301.11785.

32. Xu, H.; Yuan, J.; Ma, J. Murf: Mutually reinforcing multi-modal image registration and fusion. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12148–12166.

33. Wang, W.; Feng, H.; Zhou, W.; Liao, Z.; Li, H. Model-aware pre-training for radial distortion rectification. IEEE Trans. Image Process. 2023, 32, 5764–5778.

34. Li, X.; Zhang, B.; Sander, P.V.; Liao, J. Blind geometric distortion correction on images through deep learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4855–4864.

35. Liao, K.; Lin, C.; Zhao, Y.; Gabbouj, M. DR-GAN: Automatic radial distortion rectification using conditional GAN in real-time. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 725–733.

36. Chao, C.H.; Hsu, P.L.; Lee, H.Y.; Wang, Y.C.F. Self-supervised deep learning for fisheye image rectification. In Proceedings of the ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 2248–2252.

37. Yang, S.; Lin, C.; Liao, K.; Zhang, C.; Zhao, Y. Progressively complementary network for fisheye image rectification using appearance flow. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6348–6357.

38. Guo, P.; Liu, C.; Hou, X.; Qian, X. QueryCDR: Query-based Controllable Distortion Rectification Network for Fisheye Images. In Computer Vision – ECCV 2024, Proceedings of the 18th European Conference, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2024.

39. Feng, H.; Wang, W.; Deng, J.; Zhou, W.; Li, L.; Li, H. Simfir: A simple framework for fisheye image rectification with self-supervised representation learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 4–6 October 2023; pp. 12418–12427.

40. Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial transformer networks. In Advances in Neural Information Processing Systems, Proceedings of the 28th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Curran Associates Inc.: Red Hook, NY, USA, 2015; Volume 28.

41. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

42. Zhou, B.; Lapedriza, A.; Khosla, A.; Oliva, A.; Torralba, A. Places: A 10 million image database for scene recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1452–1464.

43. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

44. Hore, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

45. Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. Gans trained by a two time-scale update rule converge to a local nash equilibrium. In Advances in Neural Information Processing Systems, Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; Volume 30.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).