Abstract

Despite significant advances in image denoising, most algorithms rely on supervised learning, with their performance largely dependent on the quality and diversity of training data. It is widely assumed that digital image distortions are caused by spatially invariant Additive White Gaussian Noise (AWGN). However, the analysis of real-world data suggests that this assumption is invalid. Therefore, this paper tackles image corruption by real noise, providing a framework to capture and utilise the underlying structural information of an image along with the spatial information conventionally used for deep learning tasks. We propose a novel denoising loss function that incorporates topological invariants and is informed by textural information extracted from the image wavelet domain. The effectiveness of this proposed method was evaluated by training state-of-the-art denoising models on the BVI-Lowlight dataset, which features a wide range of real noise distortions. Adding a topological term to common loss functions leads to a significant increase in the LPIPS (Learned Perceptual Image Patch Similarity) metric, with the improvement reaching up to 25%. The results indicate that the proposed loss function enables neural networks to learn noise characteristics better. We demonstrate that they can consequently extract the topological features of noise-free images, resulting in enhanced contrast and preserved textural information.

1. Introduction

Denoising is a common task in processing low-quality image data and is an important component in the processing pipeline for cases where acquisition is performed under low-light conditions. Applications include object detection and object tracking in autonomous vehicles, biomedical image analysis, natural history filmmaking and consumer video production. The primary objective of image denoising is to eliminate the noise present while preserving the original features. However, in practice, denoising poses an inverse problem with intrinsically ambiguous solutions, and hence always results in a trade-off between signal distortion and noise removal. Moreover, denoising methods should not introduce new artefacts, for example over smoothing (blurring), that can degrade the visual quality of the image.

Conventional denoising algorithms (pre-machine learning), such as Non-Local Means (NLMs) [1] and Block-Matching and 3D filtering (BM3D) [2], rely on mathematical models and involve pre-defined filters to remove noise from images. Latterly, state-of-the-art denoising methods have exploited deep learning-based approaches [3]. Some methods have adopted a self-supervised learning framework to address the issue of unavailable ground truth data, e.g., [4,5,6,7,8]. However, the performance of these methods is limited, particularly for low-light content where, for example, edges are significantly distorted due to the presence of noise [9]. The best-performing methods hence rely on supervised learning, where models are trained either by applying synthetic noise to the clean images or by creating pseudo ground truth data from real noise images [10,11,12,13]. A comprehensive review of learning-based image denoising is available in [14].

Deep learning approaches are typically developed by designing a network architecture and defining a loss function. In this paper, we focus on the latter. The loss function plays a critical role in measuring the performance of the neural network, and the goal of training is to minimise this loss. Through the backpropagation algorithm, the loss function provides feedback to the neural network by computing its gradient, which is then used to update the network’s weights. Commonly used loss functions for image denoising include , , Charbonnier, SSIM, Laplacian loss, and a combination of these [12]. Other losses have also been employed, including perceptual loss, wavelet-based loss, gradient loss and total variation loss, but no performance improvement has been reported with these methods [9]. Despite significant efforts to improve the performance of denoising methods, their results still typically exhibit distortion artefacts or lost signal information. Moreover, there are no reports that analyse how the loss function influences noise removal for different content classes.

In this paper, we introduce a novel loss function, combining local low-level features and topological features extracted from the image. The denoising model is trained to be selective with respect to the textured areas of the image, resulting in improvements in contrast and performance on edges. Our proposed loss function is based on our previous work in [15]; we further enhance performance by using wavelets to provide different content classes, such as homogeneous regions, edges, structures, and textures with different weights. We then create a weight map to apply the weighed loss to each category. This is applied at the pixel level resulting in enhanced sharpness and spatial contrast in the output image. Additionally, we calculate topological invariants of the whole image instead of patches (as reported in [15]). We compare the performance of the new loss function across popular denoising architectures, revealing that adding a topological term to conventional loss functions helps to improve subjective results by enhancing contrast while preserving texture. In conclusion, the main contributions of our paper are

- A novel denoising loss function that incorporates topological invariants and leverages textural information from the image wavelet domain. The proposed framework effectively integrates both the underlying structural information and spatial characteristics of images.

- Evaluation of the proposed loss function with multiple denoising models on the BVI-Lowlight dataset, which is specifically designed for real noise distortions in low-light conditions.

The remainder of this paper is organised as follows. The problem statement and existing solutions are presented in Section 2. Details of topological invariants and the proposed loss function for image denoising are described in Section 3 and Section 4, respectively. The performance of the method is evaluated in Section 5, followed by the conclusions in Section 6.

2. Image Denoising Problem and Current Solutions

2.1. Image Denoising Problem

Given an image corrupted by noise N, the goal is to obtain a denoised image that accurately represents the underlying clean image . Mathematically, this can be expressed as follows:

where represents the denoising function or model that takes the noisy image as input and produces the denoised image as output. The noisy image in this case is the result of some function (e.g., signal-dependent noise) of :

The task of denoising can then be formulated as an optimisation problem, where one searches for an optimal parameter set for a denoising model through minimising loss function :

The denoising process aims to estimate the clean image by suppressing or removing the noise component from the noisy image . This involves leveraging statistical properties of the noise and exploiting the underlying structure and features present in the image.

2.2. Current Image Denoising Methods

Denoising methods can be categorised based on the domain they operate on and the type of approach employed.

In terms of the domain, denoising methods can be classified into spatial domain, transform domain, and hybrid (spatio-domain) methods. Spatial domain methods operate directly on the pixel values of an image. They include local methods such as mean filters, order-statistic filters, and adaptive filters. Non-local methods, like non-local means (NLMs), take advantage of image self-similarity for denoising. Techniques such as steering kernel regression (SKR) [16] and Wiener filtering [17] have been proposed to improve the performance of spatial filters in structured areas containing edges [18].

Transform domain methods involve representing image patches using orthogonal basic functions and adjusting coefficients to denoise the image. Wavelet-based denoising methods have gained popularity due to their sparsity and multiresolution properties. Thresholding techniques, such as VISUShrink [19] and BayesShrink [20], are commonly used in wavelet domain denoising [21,22,23,24,25].

Dual-domain methods combine spatial and transform domain filtering. The BM3D algorithm, proposed by Dabov et al. [26], utilises collaborative filtering in both the spatial and transform domains. It groups similar image patches, applies transform-domain shrinkage, and performs inverse transforms to obtain denoised images. Shape-adaptive versions of BM3D further improve the preservation of image details [2,27].

In terms of the type of approach, denoising methods can be divided into conventional and learning-based methods. Conventional methods typically employ handcrafted algorithms and heuristics to remove noise from images. These approaches include filters such as mean filters, median filters, order-statistic filters, and many of the above-mentioned techniques (NLM, BM3D, and thresholding).

Learning-based methods, such as sparse representation and dictionary learning, have been applied to image denoising. Techniques such as K-SVD [28] and locally learned dictionaries (K-LLD) [29] utilise sparse coding and clustering techniques to represent image patches. The LSSC method proposed by Mairal et al. [30] combines local and non-local sparsity within a neighbourhood. Deep learning techniques, specifically convolutional neural networks (CNNs), have shown remarkable performance in image denoising. DnCNN, introduced by Zhang et al. [10], is a deep neural network architecture based on residual learning that has achieved excellent results. One of the state-of-the-art image denoising methods, RIDNET [11], utilises attention mechanisms with short and long connections to learn both local and global features. More recent learning-based denoisers combine transformers with UNet architectures, which enhances feature extraction and hierarchical representation capabilities [31]. The performance is comparable to RDUNet [32], where residual dense network is employed through UNet architectures.

It is worth noting that there is a distinction between supervised and unsupervised deep learning denoising. Supervised methods rely on ground truth images for training, while unsupervised methods aim to denoise images without ground truth information. Deep Image Prior [6], Noise2Noise [33], and Blind2Unblind [7] are examples of unsupervised deep learning approaches, where networks are trained using only noisy input images. Conventional techniques often suffer from insufficient adaptability due to their reliance on fixed filters or predefined parameters, while learning-based denoising methods face challenges related to data requirements, generalisation, and handling of new noise types. Both conventional and learning-based denoising approaches may encounter difficulties in preserving fine image details while reducing noise. However, learning-based algorithms have demonstrated better capabilities in addressing this challenge compared to conventional techniques.

3. Topological Invariants of Image Data

This section describes the mathematical concepts required to create a topological loss function and explains how it can be exploited for image denoising.

Topological Data Analysis (TDA) has emerged as a powerful tool for analyzing complex datasets in various fields. It uses algebraic topology to study the shape and structure of data, including clusters, holes, and voids, which is particularly useful when dealing with data that may be high-dimensional or noisy. The TDA technique we employ here is called ‘persistent homology’, since it has been successfully applied to various problems, including genomics, material science, analysis of financial networks, and image processing [34,35,36,37,38,39].

In particular, the concept of persistent homology in TDA has shown promising results in topological image denoising [15]. It has been shown that, by applying a topological based loss function that operates in image patch space, noise removal can be enhanced while preserving essential topological features of the image.

Persistent homology can be used to deal with images by providing a robust and stable way to extract topological information from the image. By incorporating this information in the backpropagation process, persistent homology can assist in filtering out noise and extracting the underlying signal. The proposed loss function ensures that persistent homology features represented by the persistent diagram of the denoised output of the neural network are close to those of the clean image.

TDA was originally derived for continuous curves and manifolds, making it unsuitable for discrete objects such as images. To identify and compute topological invariants, which are properties of geometric objects that remain constant under certain transformations, the concept of a simplicial complex is introduced. A simplicial complex consists of simple shapes of various dimensions like points, lines, triangles, and tetrahedrons. These shapes are arranged by significance using a technique called filtration, which orders them from simpler to more complex. The basic idea is to begin with the most straightforward simplicial complex, which generally includes unconnected points or vertices, and then systematically include additional simplices to the complex in a controlled manner. This allows us to study the evolution of the topology of the object as it becomes more complex.

Definition 1 (Simplicial Complexes).

Let V be a finite nonempty set, the elements of which are called vertices. A simplicial complex on V is a collection of nonempty subsets of V that satisfies the following conditions:

- (i)

- , the set lies in ;

- (ii)

- and , where β is also an element of . α and β are called a simplex and a face, respectively.

Conditions (i)–() mean that any face or subset of vertices of a simplex in the collection is also in the collection and the intersection of any two simplices in the collection is either empty or a face of both simplices.

The dimension of a simplex is defined as , the dimension of is the highest dimension of constituent simplices.

In other words, a simplicial complex is a collection of simplices that fit together like puzzle pieces, where the boundaries of each simplex are shared with other simplices in the collection.

A subcomplex of a simplicial complex is a subset of its simplices that itself forms a simplicial complex. In other words, a subcomplex is a simplicial complex that is contained within another simplicial complex, or more formally, is called a subcomplex of , if and is a simplicial complex itself. Intuitively, a subcomplex of a simplicial complex is a smaller “piece" of the original complex that retains its simplicial structure. For example, a triangle that is a face of a larger tetrahedron can be considered a subcomplex of that tetrahedron.

A simplicial complex is a general concept in topology that provides a structured way to study the topological properties of spaces. One specific type of a simplicial complex used in topological data analysis is the Vietoris–Rips complex.

Definition 2 (Vietoris–Rips Complex).

Given a finite set of points P in a metric space and a distance threshold , the Vietoris–Rips complex is a simplicial complex whose k-simplices correspond to subsets with diameter less than or equal to ε, i.e., . Formally, a k-simplex belongs to if and only if for all .

In other words, the Vietoris–Rips complex is constructed from a set of data points by connecting those points that are within a certain distance threshold of each other. This type of complexes is useful for analyzing the shape and clustering of multi-dimensional data, revealing different levels of detail as changes.

There are other types of widely used complexes in topological analysis. One of them, a cubical complex, specifically caters to the data structure of digital images [40]. Cubical complexes provide a different approach by utilising the structure composed of axis-parallel cubes that encapsulate the geometry and relationships between cells of varying dimensions in a more grid-like manner.

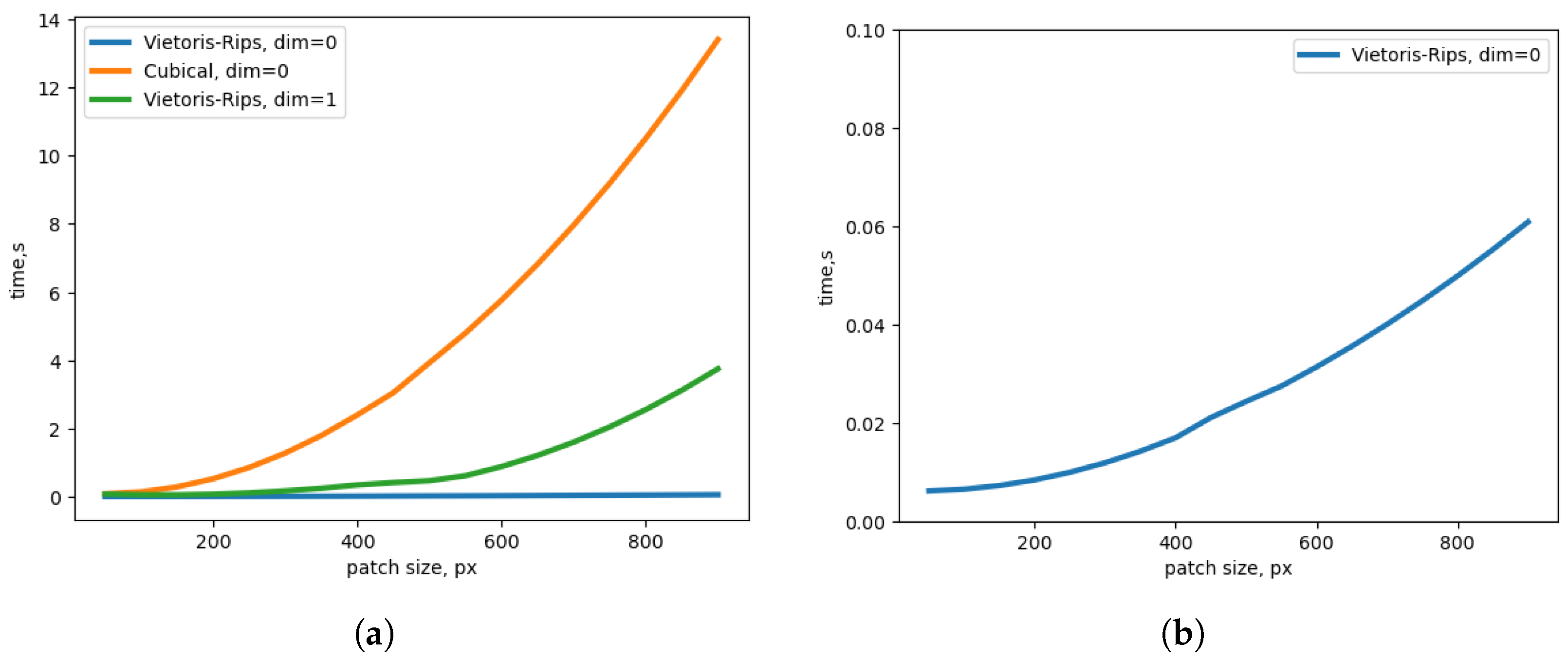

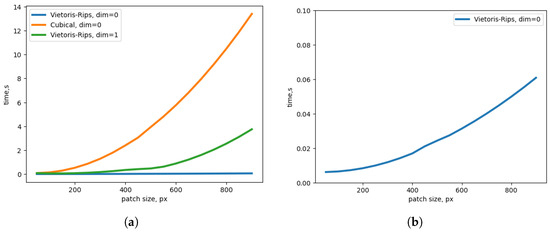

However, although cubical complexes are useful for digital image data [40], they have an inherent higher-dimensional nature and a larger number of possible interactions between cells. This results in increased computational overhead and slower processing times compared to the relatively simpler pairwise distance calculations used in the Vietoris–Rips complex construction. Computation times for RGB image patches are shown in Figure 1. For example, for the 512 × 512 patch, the computation time for cubical complex (the computation time is the same for 0 and 1 dimensions due to the algorithm) is ∼5-times higher than for the 1-dim Vietoris–Rips and ∼170-times higher than for the 0-dim Vietoris–Rips complex (computed on Nvidia 2080 Ti). Due to this considerable limitation and the critical importance of the balance of computational speed and image patch size for deep learning-based denoising, throughout this paper, we will assume the use of Vietoris–Rips complexes.

Figure 1.

(a) Computation times of Vietoris–Rips complexes and cubical complexes of dimensions 0 and 1. (b) Computation times of the Vietoris–Rips complex of dimension 0.

Simplices in a simplicial complex are arranged by significance using a technique called filtration.

Definition 3 (Filtrations).

A (n-)filtration of a simplicial complex is a sequence of subcomplexes, ordered by inclusion, such that each subcomplex is contained in the next. In other words, it is a sequence of simplicial complexes:

In TDA, while simplicial complexes are a convenient way to represent the connectivity and topological relationships within data, a filtration is used to provide a hierarchical representation of these relationship, revealing the evolution and persistence of topological features across different scales.

The purpose of filtrations is to capture the occurrence (“birth”) and disappearance (“death”) of topological-like homology classes [36], that can emerge and vanish as the parameter of the filtration changes (for Vietoris–Rips complexes).

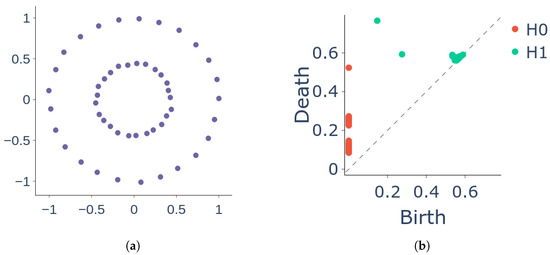

To visualise the changes in the topology of a simplicial complex over a range of filtration scales, a persistent diagram is created. The diagram displays each homology class [36] as a point, where the horizontal axis and the vertical axis represent the “birth” and “death” times, respectively.

Definition 4 (Persistent diagrams).

A persistent diagram of a filtration on an n-dimensional complex is a collection of mappings , each of which map every k-dimensional topological feature to a pair :

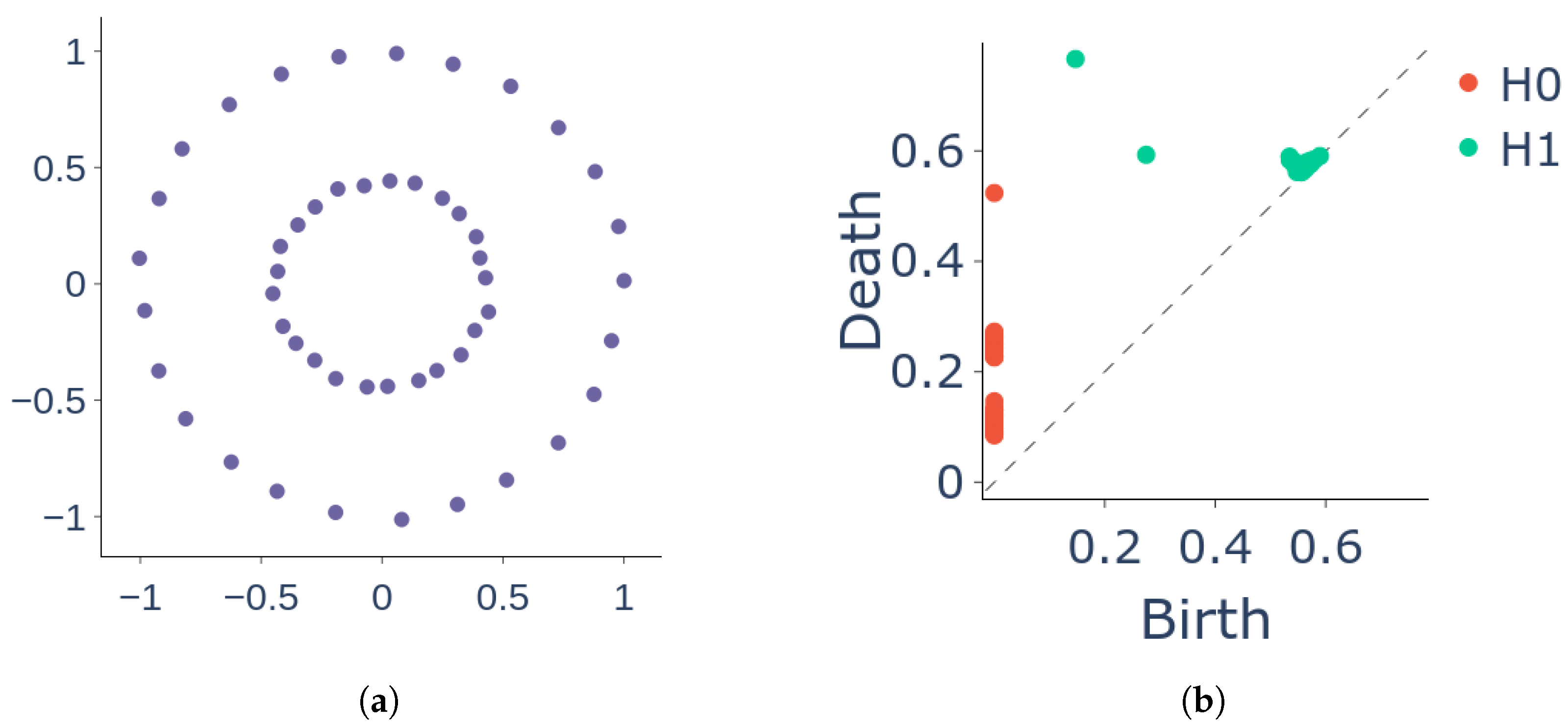

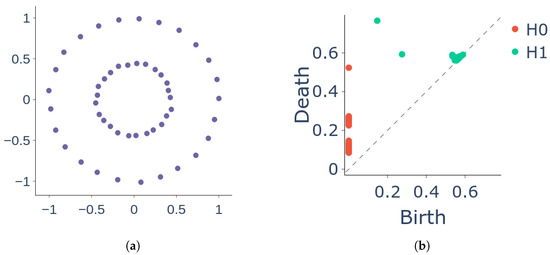

where represents the appearance (“birth”) of the feature when and represents its disappearance (“death”) when (see Figure 2). When , each point in the persistent diagram represents a connected component and when , a point corresponds to a 1-dimensional holes in filtered subcomplexes of .

Figure 2.

A persistent diagram of a data cloud shows the birth (represented by the x coordinate) and death (represented by the y coordinate) of topological features of different dimensions (0-dimensional and 1-dimensional features, denoted as and , respectively). Each point in the diagram corresponds to one of these features. (a) Datacloud . (b) Persistent diagram for the datacloud .

For image data, we calculate persistent diagrams on the filtrations of Vietoris–Rips complexes over the image intensity scale, tracking the occurrence and disappearance of topological features at different intensity values. For our task, we consider to represent the number of connected components and to represent the number of 1-dimensional holes in filtered subcomplexes of . As this paper focuses on specific aspects of the topic and cannot accommodate a comprehensive discussion of concepts such as homology classes and groups, we refer the readers to [36] for more theoretical foundations and a deeper theoretical understanding of persistent diagrams.

4. Wavelet-Based Topological Loss Function

This work addresses the limitations of our previous approach [15] while still utilising topological information for denoising. Unlike our prior method, which extracted topological features from the patch space, we now compute them directly in the image spatial domain. Additionally, we introduce a wavelet-based mask to suppress artefacts in homogeneous areas.

4.1. Topological Loss Function

To construct a topological loss for a pair of ground truth and output images , we first calculate persistence diagrams and using intensity-based filtrations of Vietoris–Rips complexes calculated in the spatial dimension of the images. The topological distance between the two diagrams is then calculated using total persistence as a dissimilarity measure.

Definition 5 (Total Persistence).

p-total persistence of a persistence diagram is defined as a total sum of the lifespans of each point in a diagram:

where and are “death” and “birth” values of . For our experiments, we use .

Total persistence can be interpreted as the overall importance of topological features in a simplicial complex over a range of filtration scales.

Finally, we can use total persistence as the dissimilarity measure for the persistence diagrams space. We introduce the topological component of our future loss function, which we define as shown in Equation (4), where the absolute value is used instead of squaring to ensure robustness to outliers:

A topological loss function based on persistent homology in the space of contrast patches sampled spatially from an image was shown to improve the image denoising task by Malyugina et al. in [15]. Unlike [15], in this work, we obtain topological information directly from the image spatial domain, leading to increased information on both local and global features. We combine it with a spatial loss to retain local spatial information. For the calculation of complexes, filtrations, and persistence diagrams, we used torch-topological (https://github.com/aidos-lab/pytorch-topological, accessed on 1 January 2025) package.

4.2. Texture Mask

In our experiments with , defined in Equation (4), we observed that the topological loss component works better in textured areas or on edges, while sometimes creating undesired artefacts particularly in homogeneous regions.

Persistent homology inherently relies on intensity variations to capture topological features, which makes it especially sensitive to high-contrast regions. In such regions, strong intensity gradients define distinct topological features, such as connected components and loops, that emerge rapidly during the filtration process. These changes in the filtrations lead to a set of persistent features that dominate the persistence diagram due to the global nature of topological features. This can cause the loss function to predominantly focus on structural details in high-contrast areas while neglecting smoother regions. Such an imbalance may force the network to preserve noise-induced or non-essential details, resulting in artefacts during denoising.

To mitigate these issues, we incorporate a wavelet-based texture mask that selectively applies the topological loss in regions with strong textural content while reducing its influence in homogeneous areas. This ensures that the model benefits from topological constraints where they are most meaningful while avoiding unnecessary artefacts in textureless regions. Wavelets have been proven to be successful in capturing textual information in images through various applications, including texture classification [41], and image denoising with texture preservation [42]. The patterns and variations in the wavelet coefficients can provide information about the spatial frequency content and orientation of the texture.

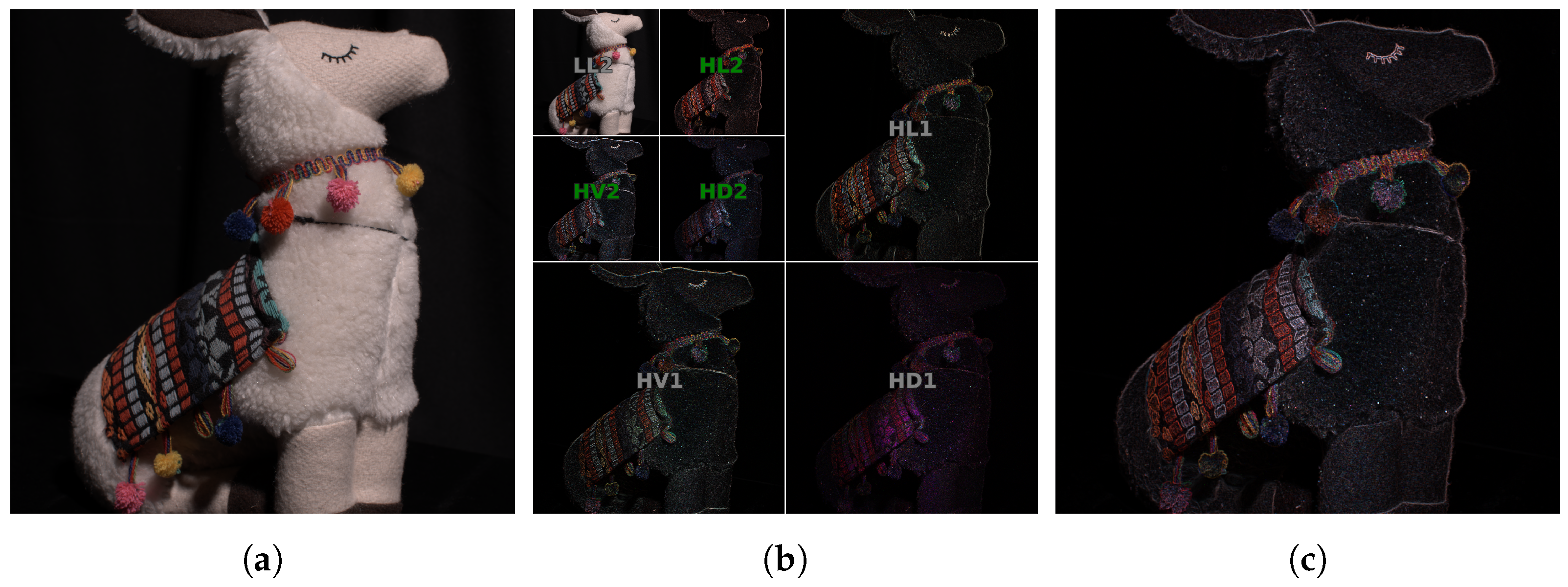

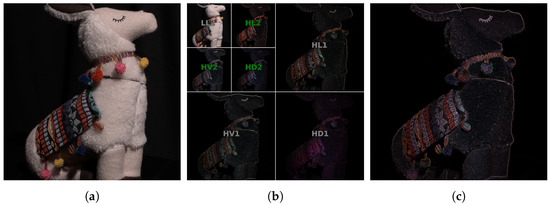

The Discrete Wavelet Transform (DWT) decomposes a signal into a set of mutually orthogonal basis wavelet functions. To retrieve textured areas from the ground truth image , we first apply 1-level decomposition with Haar base functions [43] and take only low-frequency bands, . This aims to ignore the areas with fine textural detail and noise [44], producing a smoother mask. Subsequently, we apply another DWT to and retrieve absolute values from the high-pass subbands and of , where , , and denote high-pass subbands in horizontal, vertical, and diagonal directions, respectively. Finally, we take mean values of these three level 2 DWT subbands, thus resulting in a texture mask (see Figure 3):

where

and denotes upscaling with the factor of 4 and ∘ is a function superposition.

Figure 3.

(a) Ground truth image ; (b) wavelet bands of 2-level decomposition ; (c) resulting wavelet mask built by averaging 2nd-order high-pass bands (green in (b)).

Finally, to apply topological loss only to textured areas, we use the texture mask for image , producing and combining it with pixelwise basic loss , where or :

where ⊙ is an element-wise product and is a scalar hyperparameter. The respective method is shown in Algorithm 1.

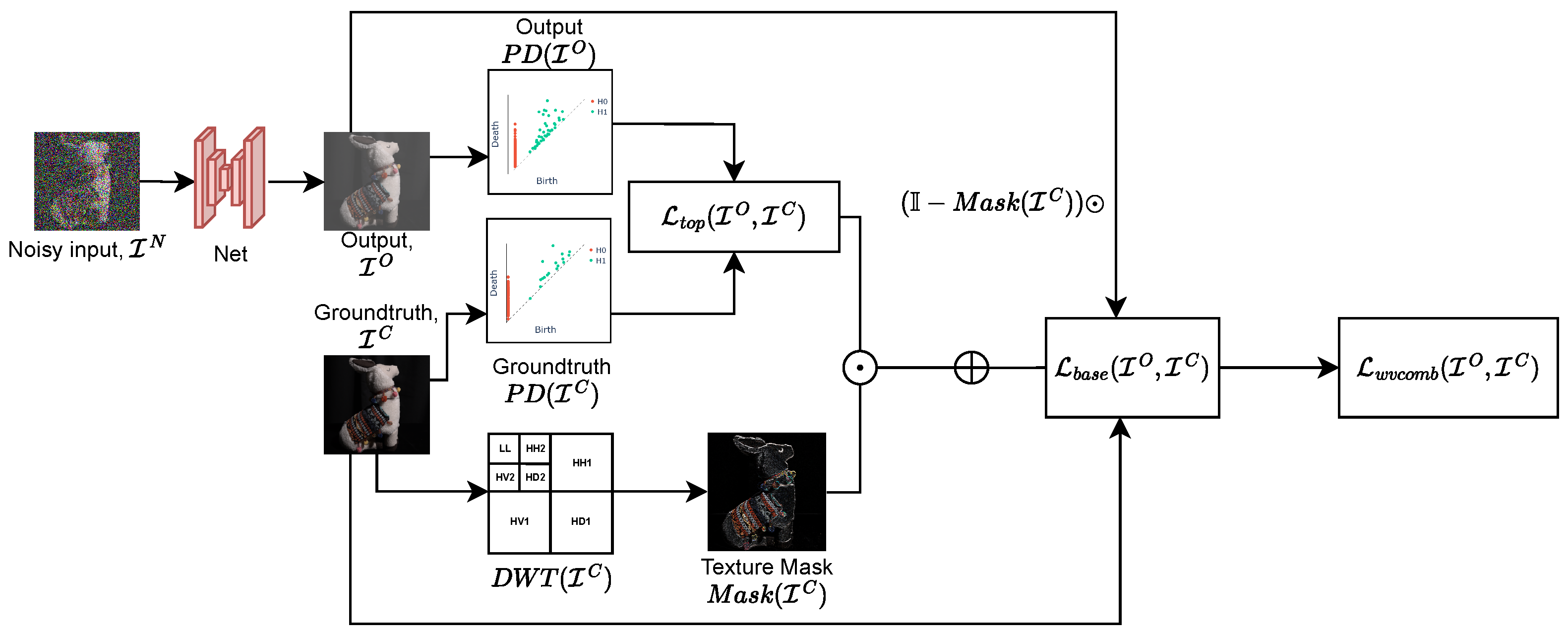

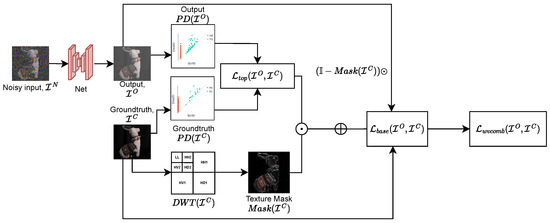

Figure 4 shows the diagram of the proposed wavelet-based topological loss function. The restored image output of the denoising network is transformed into topological features, which are subsequently compared with those of the groundtruth, . The final loss function is a combination of and with the weighting determined by the textural information extracted from the wavelet domain.

| Algorithm 1: Wavelet Topological Image Denoising |

| Input: Noisy image , Groundtruth image , Trainable model Output: Optimised parameter set Step 1: Wavelet Decomposition; Perform wavelet decomposition on to obtain band wavelet coefficients; Step 2: Texture Mask Calculation; Calculate the texture mask; Step 3: Acquire model output ; Apply model to noisy image: Step 4: Persistence Diagram Calculation; Calculate the persistence diagrams and of and , respectively; Step 5: Topological Loss Term Calculation; Calculate the total persistence values and for and , respectively; Calculate the topological loss component : Step 6: Base Loss Term Calculation; Calculate the base loss component as the loss between and ; Step 7: Combined Loss Calculation; Calculate the pixelwise mask-weighted topological loss to enforce the topological guidance in textured areas: and in remaining “plain” areas tune it down: Calculate the combined wavelet loss as the (-weighted) sum of the mask-weighted losses with gain ; Step 8: Find the parameters of the network, minimising combined loss; return ; |

Figure 4.

Wavelet topological loss calculation for a pair of images: output image and groundtruth . First, the texture mask of is calculated based on wavelet decomposition of ground truth image . The topological loss component is calculated as the absolute difference in total persistence values of diagrams and . We also calculate ( loss) to retain image spatial information. The resulting wavelet combined loss is calculated as pixelwise mask-weighted base loss and .

5. Experiments and Discussion

5.1. Dataset

We trained and tested the models on the BVI-Lowlight dataset [15,45], which was collected to overcome the limitations of existing denoising datasets in terms of variations in noise levels and diverse content with varying textures. Unlike synthetic datasets, BVI-Lowlight captures complex spatial noise patterns, making it well-suited for testing our method’s ability to preserve texture. Its higher bit depth ensures a wider dynamic range and more accurate noise representation while preserving the details and textures in low light areas.

This dataset consists of 31,800 14-bit images captured from 20 scenes using two cameras, Nikon D7000 and Sony A7SII, with ISO settings ranging from 100 to 25,600 and 100 to 409,600, respectively. We ensured consistent lighting conditions by using non-flickering LED lights. Pseudo-ground truth images were generated by applying postprocessing techniques, including the removal of oversaturated pixels, intensity alignment, and image registration. Further details can be found in [15].

5.2. Training

In our experiments, we employed both traditional and state-of-the-art architectures for our baselines: (i) A residual-based denoising convolutional neural network (DnCNN) introduced in [10] by Zhang et al., which is a widely used method for benchmarking deep image denoisers. (ii) UNet, an encoder-decoder style fully convolutional network [46], which has been extensively used as a foundational framework for image denoising. (iii) RIDNet, a single-stage blind real image denoising network that incorporates a feature attention mechanism [11].

We compared the proposed loss with other denoising losses, including conventional losses , , and VGG loss ( is set to as in [47]). In our experiments, we set topological loss term gain to as it demonstrated best performance on test sets. We also tested several combinations of losses and models.

All models presented in this paper were trained using 11K patches from the BVI-Lowlight dataset. The patches were sized 256 × 256, and we trained the models for 40 epochs. The initial learning rate was set to , and the batch size was 16, which was limited by the memory capacity of our computing system.

5.3. Results

We assessed the performance of our method by training state-of-the-art denoising models with various loss functions and their combinations.

We evaluated image quality using Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index Measure (SSIM), and Learned Perceptual Image Patch Similarity (LPIPS). PSNR measures pixel-wise fidelity, defined as

where and are pixel intensities of the original and denoised images, is the image size, and MAX is the maximum possible image pixel intensity value.

SSIM assesses structural similarity by incorporating terms related to luminance, contrast, and correlation:

where and are mean intensities, and are variances, is the covariance, and , are small constants to ensure stability.

LPIPS [48] is an image quality metric that computes feature-space distances from a pre-trained neural network (for our task, we used AlexNet) and is aligned more closely with human perception.

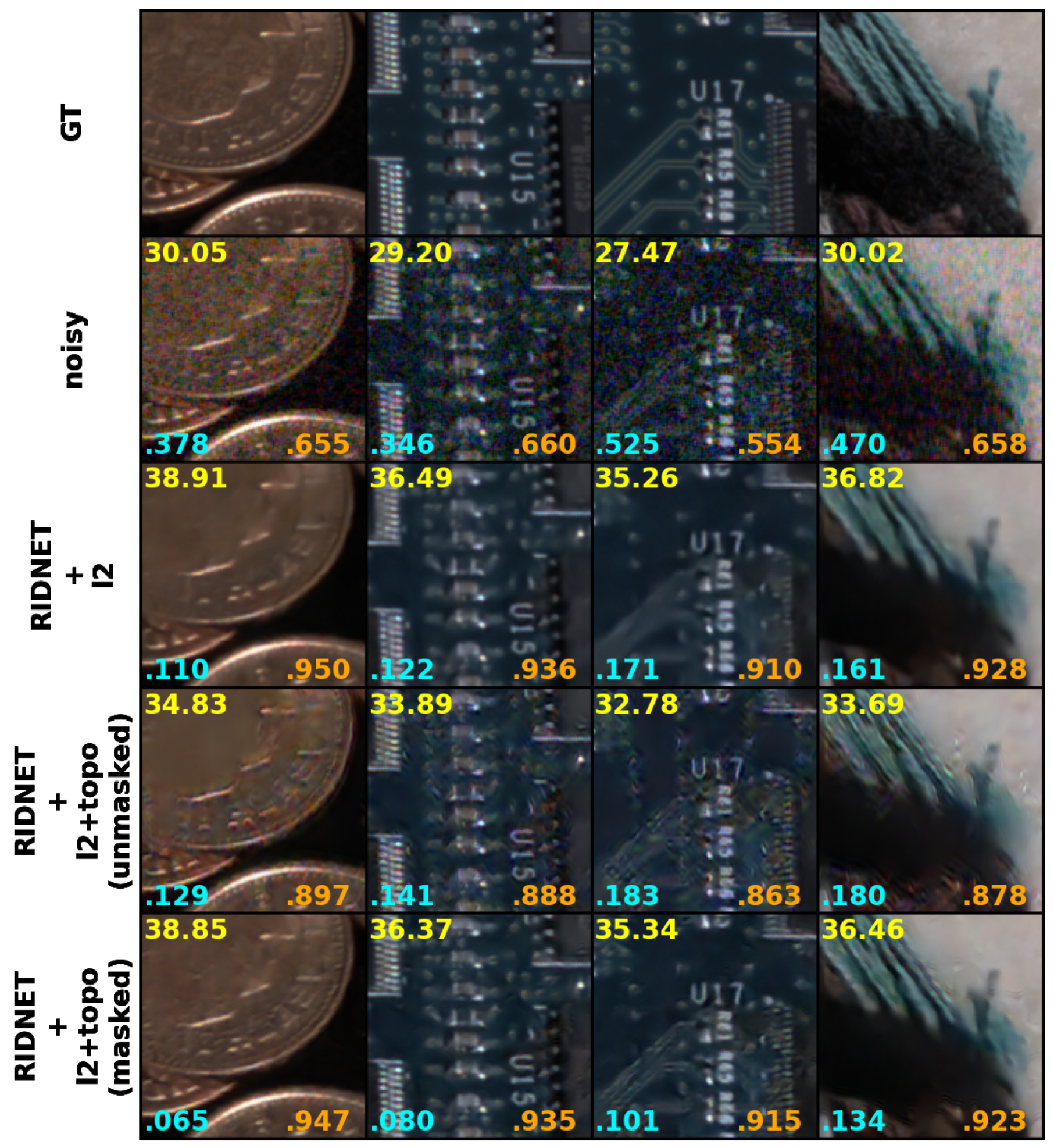

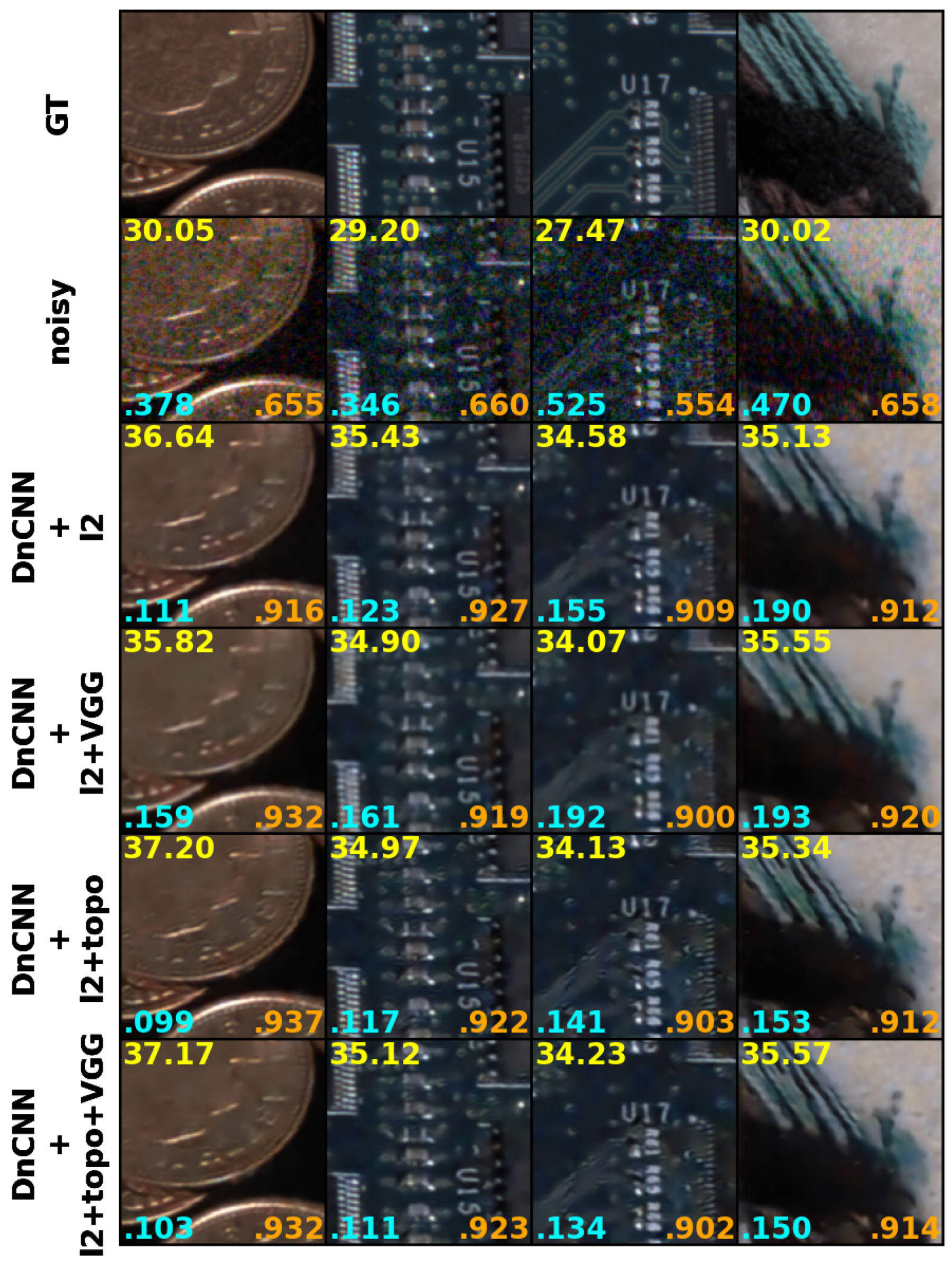

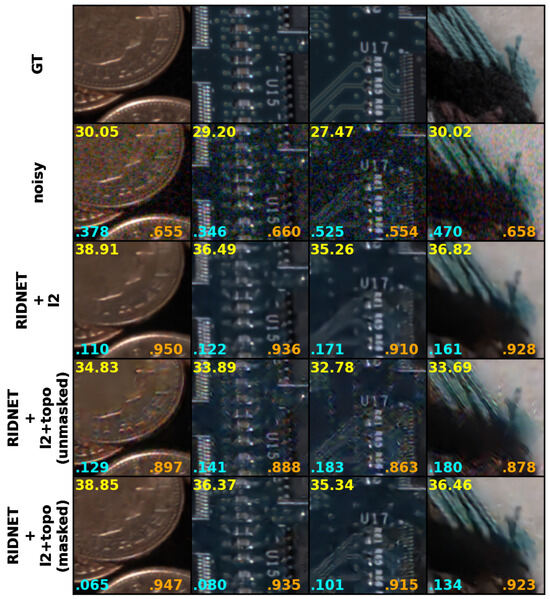

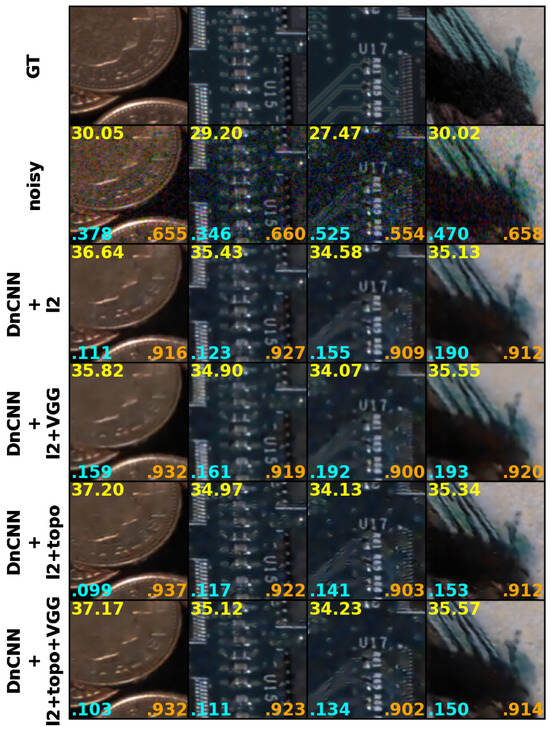

The results based on objective metrics are presented in Table 1 and the examples for subjective assessment are shown in Figure 5 and Figure 6. These results demonstrate improved performance in terms of objective metrics that correlate more strongly with subjective quality assessment [48].

Table 1.

Metrics values for the outputs of a corresponding model (DnCNN, RIDNET, or UNet) trained with (i) only (ii) combination of with VGG loss (iii) combination of with persistence-based loss (iv) combination of with persistence-based loss and VGG loss . The first-place result for each model is highlighted in bold with a light green background, while the best overall result is highlighted in bold with a bright green background.

Figure 5.

Using wavelet mask with . Top row: Sample patches from dataset ground truth. Second row: Sample patches from noisy images with ISO varying from 160,000 to 400,000. Third row: Sample patches of the outputs from RIDNET trained with only. Fourth row: Sample patches of the outputs from DnCNN trained with topology loss (unmasked) combined with . Bottom row: Sample patches of the outputs from DnCNN trained with topology loss (masked) combined with . PSNR (yellow), LPIPS (cyan) and SSIM (orange) values are calculated per corresponding pairs. Note the preserved edges, enhanced contrast and improvement in LPIPS metric values when using masked topological loss compared to loss only. Using a wavelet texture mask also prevents the network from producing undesirable artefacts.

Figure 6.

Results. Top row: Sample patches from dataset ground truth. Second row: Sample patches from noisy images with ISO varying from 160,000 to 400,000. Third row: Sample patches of the outputs from DnCNN trained with only. Fourth row: Sample patches of the outputs from DnCNN trained with VGG loss combined with . Fifth row: Sample patches of the outputs from DnCNN trained with topology loss (masked) combined with . Bottom row: Sample patches of the outputs from DnCNN trained with topology loss (masked) combined with VGG and . PSNR (yellow), LPIPS (cyan) and SSIM (orange) values are calculated per corresponding pairs.

The LPIPS metric shows the highest and most consistent improvement across all architectures when using the topological loss, demonstrating its effectiveness in enhancing perceptual quality. Even when combined with VGG loss, consistently outperforms conventional loss functions. We also have observed that perceptually, adding VGG loss in some cases helps to attenuate artefacts that can appear in untextured areas when using masked topological loss only.

For the RIDNET architecture, while PSNR for models trained with is slightly lower compared to those trained with or , the LPIPS values remain significantly better, indicating superior perceptual quality. Notably, SSIM and PSNR are highest for RIDNET+, suggesting that the model may require different weighting coefficients for the topological and VGG losses to fully optimize its performance. Examining the subjective results further in Figure 5 (rows 3 and 5), we can confirm that the RIDNET++ (masked) model produces sharper textures and preserves fine details better than RIDNET+. In particular, in regions with complex structures, such as circuitry and fabric, the masked topological loss reduces oversmoothing while maintaining perceptual consistency. This aligns with the improved LPIPS metric, suggesting that the model benefits from the topological loss in preserving high-level perceptual features without significantly altering SSIM or PSNR.

It is also worth noting that adding VGG loss term to a minimization criterion does not always improve the performance, comparing to models trained with basic loss only (see values for DnCNN with ). This further highlights the importance of careful loss balancing when integrating multiple perceptual and structural constraints.

Figure 5 shows that using a wavelet mask together with a topological term for the loss helps to reduce the occurrence of artefacts in homogeneous areas while preserving the texture and enhancing the contrast. For example, the denoised coin image using RIDNET with our proposed loss function exhibits sharper edges and better details compared to those denoised using alone or without a mask. Additionally, RIDNET with the unmasked loss function fails to completely remove color noise.

Figure 6 shows the denoised results using DnCNN. The results obtained by training it with a topological loss are shown with wavelet-based masks. Comparing the results in the 5th and 6th rows to those in the 3rd and 4th rows, our proposed masked topological loss produces sharper results with better fine details. Specifically, the fonts in the third column become more readable, such as ‘R61’. Without a topological loss, the VGG loss appears to remove the fine texture present in the top-right area of the images in the fourth column, which is the white area of the lama’s doll fur. More subjective results are available in Supplemental Material; see Figure S1.

Overall, the evaluation of the results based on the BVI-Lowlight dataset, featuring real-world noise levels, has demonstrated the effectiveness of the proposed loss function in enabling denoising models to better learn both noise characteristics and the underlying signal. This, in turn, leads to enhanced contrast and preserved textural information in the denoised images.

In the presence of noise, the topological properties of an image can become distorted or disrupted. By incorporating topological invariants into the loss function, the algorithm can effectively capture and preserve the original structural features of the image while removing the noise. This approach helps to maintain the integrity of textured areas, and enhance the overall visual quality of the denoised image, as well as provide a valuable framework for improving the performance of existing image denoising models and combating common problems such as oversmoothing.

6. Conclusions and Future Work

In this paper, we introduce a novel method for image denoising that incorporates a wavelet-based topological loss function and utilizes textural information from the image wavelet domain. Our proposed loss function effectively captures noise characteristics while preserving image texture, resulting in denoised images with enhanced visual quality and preserved perceptual fidelity of the original content. The experimental results on real noise confirm the efficacy of our method, showing superior perceptual results and improved performance in objective metrics that are highly correlated with subjective quality assessment.

This study demonstrates that the proposed wavelet-based topological loss function improves low-light image denoising. However, there are still areas to explore. One direction is testing the loss function performance on different datasets with various noise patterns and scene types. Our experiments focus on the BVI-Lowlight dataset, which represents real-world low-light noise. Future research could extend this to datasets with different camera models and environments.

Another key aspect to investigate is the sensitivity of hyperparameters, especially the impact of the topological loss weight, . We found an optimal value through experiments, but a more detailed study across a wider range of values could offer better insights.

Finally, since deep learning-based denoising models are evolving quickly, future work could also integrate our loss function into newer architectures, such as transformer-based denoisers or diffusion models. While we tested it with established models like DnCNN, UNet, and RIDNet, applying it to these newer approaches would further confirm its usefulness in modern image restoration.

By addressing these aspects in future research, the proposed loss function can be more comprehensively assessed in terms of its generalization capabilities, parameter robustness, and applicability to the latest state-of-the-art architectures for image denoising.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/s25072047/s1, Figure S1: Subjective results of RIDNET (row 1 and 2), DnCNN (row 3 and 4) and UNet (row 5 and 6), tested on the scenes from BVI-Lowlight denoising dataset. Zoom in for better resolution.

Author Contributions

Conceptualization, A.M., N.A. and D.B.; methodology, A.M., N.A. and D.B.; software, A.M.; validation, A.M.; formal analysis, A.M. and N.A.; investigation, A.M., N.A. and D.B.; resources, A.M.; data curation, A.M.; writing—original draft preparation, A.M.; writing—review and editing, N.A. and D.B.; visualization, A.M.; supervision, N.A. and D.B.; project administration, D.B.; funding acquisition, D.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by UKRI MyWorld Strength in Places Programme (SIPF00006/1).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets generated and analysed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 60–65. [Google Scholar]

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. BM3D image denoising with shape-adaptive principal component analysis. In Proceedings of the SPARS’09 Signal Processing with Adaptive Sparse Structured Representations, Saint-Malo, France, 6–9 April 2009. [Google Scholar]

- Anantrasirichai, N.; Bull, D. Artificial intelligence in the creative industries: A review. Artif. Intell. Rev. 2022, 55, 589–656. [Google Scholar]

- Krull, A.; Buchholz, T.O.; Jug, F. Noise2void-learning denoising from single noisy images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 2129–2137. [Google Scholar]

- Batson, J.; Royer, L. Noise2self: Blind denoising by self-supervision. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 524–533. [Google Scholar]

- Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Deep image prior. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 16 December 2018; pp. 9446–9454. [Google Scholar]

- Wang, Z.; Liu, J.; Li, G.; Han, H. Blind2Unblind: Self-Supervised Image Denoising With Visible Blind Spots. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 2027–2036. [Google Scholar]

- Jang, H.; Park, J.; Jung, D.; Lew, J.; Bae, H.; Yoon, S. PUCA: Patch-Unshuffle and Channel Attention for Enhanced Self-Supervised Image Denoising. In Advances in Neural Information Processing Systems; Oh, A., Naumann, T., Globerson, A., Saenko, K., Hardt, M., Levine, S., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2023; Volume 36, pp. 19217–19229. [Google Scholar]

- Anantrasirichai, N.; Bull, D. Contextual Colorization and Denoising for Low-Light Ultra High Resolution Sequences. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 1614–1618. [Google Scholar]

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155. [Google Scholar]

- Anwar, S.; Barnes, N. Real Image Denoising with Feature Attention. In Proceedings of the IEEE International Conference on Computer Vision (ICCV-Oral), Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar]

- Abdelhamed, A.; Afifi, M.; Timofte, R.; Brown, M.S. NTIRE 2020 Challenge on Real Image Denoising: Dataset, Methods and Results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 13–19 June 2020. [Google Scholar]

- Wu, W.; Liu, S.; Xia, Y.; Zhang, Y. Dual residual attention network for image denoising. Pattern Recognit. 2024, 149, 110291. [Google Scholar] [CrossRef]

- Jebur, R.S.; Zabil, M.H.B.M.; Hammood, D.A.; Cheng, L.K. A comprehensive review of image denoising in deep learning. Multimed. Tools Appl. 2024, 83, 58181–58199. [Google Scholar] [CrossRef]

- Malyugina, A.; Anantrasirichai, N.; Bull, D. A Topological Loss Function for Image Denoising on a new BVI-lowlight Dataset. Signal Process. 2023, 211, 109081. [Google Scholar] [CrossRef]

- Takeda, H.; Farsiu, S.; Milanfar, P. Kernel regression for image processing and reconstruction. IEEE Trans. Image Process. 2007, 16, 349–366. [Google Scholar]

- Wiener, N. Extrapolation, Interpolation and Smoothing of Stationary Time Series with Engineering Applications; The MIT Press: Cambridge, MA, USA, 1949. [Google Scholar]

- Tronicke, J.; Böniger, U. Steering kernel regression: An adaptive denoising tool to process GPR data. In Proceedings of the 2013 7th International Workshop on Advanced Ground Penetrating Radar, Nantes, France, 2–5 July 2013; pp. 1–4. [Google Scholar]

- Donoho, D.L.; Johnstone, J.M. Ideal spatial adaptation by wavelet shrinkage. Biometrika 1994, 81, 425–455. [Google Scholar]

- Chipman, H.A.; Kolaczyk, E.D.; McCulloch, R.E. Adaptive Bayesian wavelet shrinkage. J. Am. Stat. Assoc. 1997, 92, 1413–1421. [Google Scholar] [CrossRef]

- Shui, P.L. Image denoising using 2-D separable oversampled DFT modulated filter banks. IET Image Process. 2009, 3, 163–173. [Google Scholar] [CrossRef]

- Yu, G.; Sapiro, G. DCT image denoising: A simple and effective image denoising algorithm. Image Process. Line 2011, 1, 292–296. [Google Scholar]

- Foi, A.; Katkovnik, V.; Egiazarian, K. Pointwise shape-adaptive DCT for high-quality denoising and deblocking of grayscale and color images. IEEE Trans. Image Proc. 2007, 16, 1395–1411. [Google Scholar]

- Simoncelli, E.P.; Adelson, E.H. Noise removal via Bayesian wavelet coring. In Proceedings of the 3rd IEEE International Conference on Image Processing, Lausanne, Switzerland, 19 September 1996; Volume 1. [Google Scholar]

- Mihcak, M.K.; Kozintsev, I.; Ramchandran, K.; Moulin, P. Low-complexity image denoising based on statistical modeling of wavelet coefficients. IEEE Signal Process. Lett. 1999, 6, 300–303. [Google Scholar]

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095. [Google Scholar]

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. A Nonlocal and Shape-Adaptive Transform-Domain Collaborative Filtering. In Proceedings of the The 2008 International Workshop on Local and Non-Local Approximation in Image Processing, Lausanne, Switzerland, 23–24 August 2008. [Google Scholar]

- Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311. [Google Scholar]

- Chatterjee, P.; Milanfar, P. Clustering-based denoising with locally learned dictionaries. IEEE Trans. Image Process. 2009, 18, 1438–1451. [Google Scholar]

- Mairal, J.; Bach, F.; Ponce, J.; Sapiro, G.; Zisserman, A. Non-local sparse models for image restoration. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 2272–2279. [Google Scholar]

- Fan, C.M.; Liu, T.J.; Liu, K.H. SUNet: Swin Transformer UNet for Image Denoising. In Proceedings of the 2022 IEEE International Symposium on Circuits and Systems (ISCAS), Austin, TX, USA, 27 May–1 June 2022; pp. 2333–2337. [Google Scholar] [CrossRef]

- Gurrola-Ramos, J.; Dalmau, O.; Alarcón, T.E. A Residual Dense U-Net Neural Network for Image Denoising. IEEE Access 2021, 9, 31742–31754. [Google Scholar] [CrossRef]

- Lehtinen, J.; Munkberg, J.; Hasselgren, J.; Laine, S.; Karras, T.; Aittala, M.; Aila, T. Noise2noise: Learning image restoration without clean data. arXiv 2018, arXiv:1803.04189. [Google Scholar]

- Byrne, N.; Clough, J.R.; Valverde, I.; Montana, G.; King, A.P. A Persistent Homology-Based Topological Loss for CNN-Based Multiclass Segmentation of CMR. IEEE Trans. Med. Imaging 2023, 42, 3–14. [Google Scholar] [CrossRef]

- Zomorodian, A.; Carlsson, G. Computing persistent homology. In Proceedings of the Twentieth Annual Symposium on Computational Geometry, Brooklyn, NY, USA, 8–11 June 2004. [Google Scholar]

- Edelsbrunner, H.; Letscher, D.; Zomorodian, A. Topological persistence and simplification. Discret. Comput. Geom. 2002, 28, 511–533. [Google Scholar]

- Epstein, C.; Carlsson, G.; Edelsbrunner, H. Topological data analysis. Inverse Probl. 2011, 27, 120201. [Google Scholar]

- Malyugina, A.; Anantrasirichai, N.; Bull, D. A Topological Loss Function: Image Denoising on a Low-Light Dataset. arXiv 2022, arXiv:2208.04573. [Google Scholar] [CrossRef]

- Carlsson, G.; Ishkhanov, T.; Silva, V.D.; Zomorodian, A. On the local behavior of spaces of natural images. Int. J. Comp. Vis. 2008, 76, 1–12. [Google Scholar] [CrossRef]

- Choe, S.; Ramanna, S. Cubical Homology-Based Machine Learning: An Application in Image Classification. Axioms 2022, 11, 112. [Google Scholar] [CrossRef]

- Anantrasirichai, N.; Burn, J.; Bull, D. Terrain Classification From Body-Mounted Cameras During Human Locomotion. IEEE Trans. Cybern. 2015, 45, 2249–2260. [Google Scholar] [CrossRef] [PubMed]

- Hill, P.; Anantrasirichai, N.; Achim, A.; Al-Mualla, M.; Bull, D. Undecimated Dual-Tree Complex Wavelet Transforms. Signal Process. Image Commun. 2015, 35, 61–70. [Google Scholar] [CrossRef]

- Schmidt, E. Zur Theorie der linearen und nichtlinearen Integralgleichungen. Math. Ann. 1907, 63, 433–476. [Google Scholar] [CrossRef]

- Anantrasirichai, N.; Burn, J.; Bull, D.R. Robust texture features based on undecimated dual-tree complex wavelets and local magnitude binary patterns. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 3957–3961. [Google Scholar] [CrossRef]

- Malyugina, A.; Anantrasirichai, N.; Bull, D. BVI-LOWLIGHT, 2022. Available online: https://ieee-dataport.org/documents/bvi-lowlight-images (accessed on 30 October 2023).

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241. [Google Scholar]

- Johnson, J.; Alahi, A.; Fei Fei, L. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. arXiv 2016, arXiv:1603.08155. [Google Scholar] [CrossRef]

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).