Research on a Burn Severity Detection Method Based on Hyperspectral Imaging

Abstract

1. Introduction

2. Related Works

2.1. Application of HSI in Burn Assessment

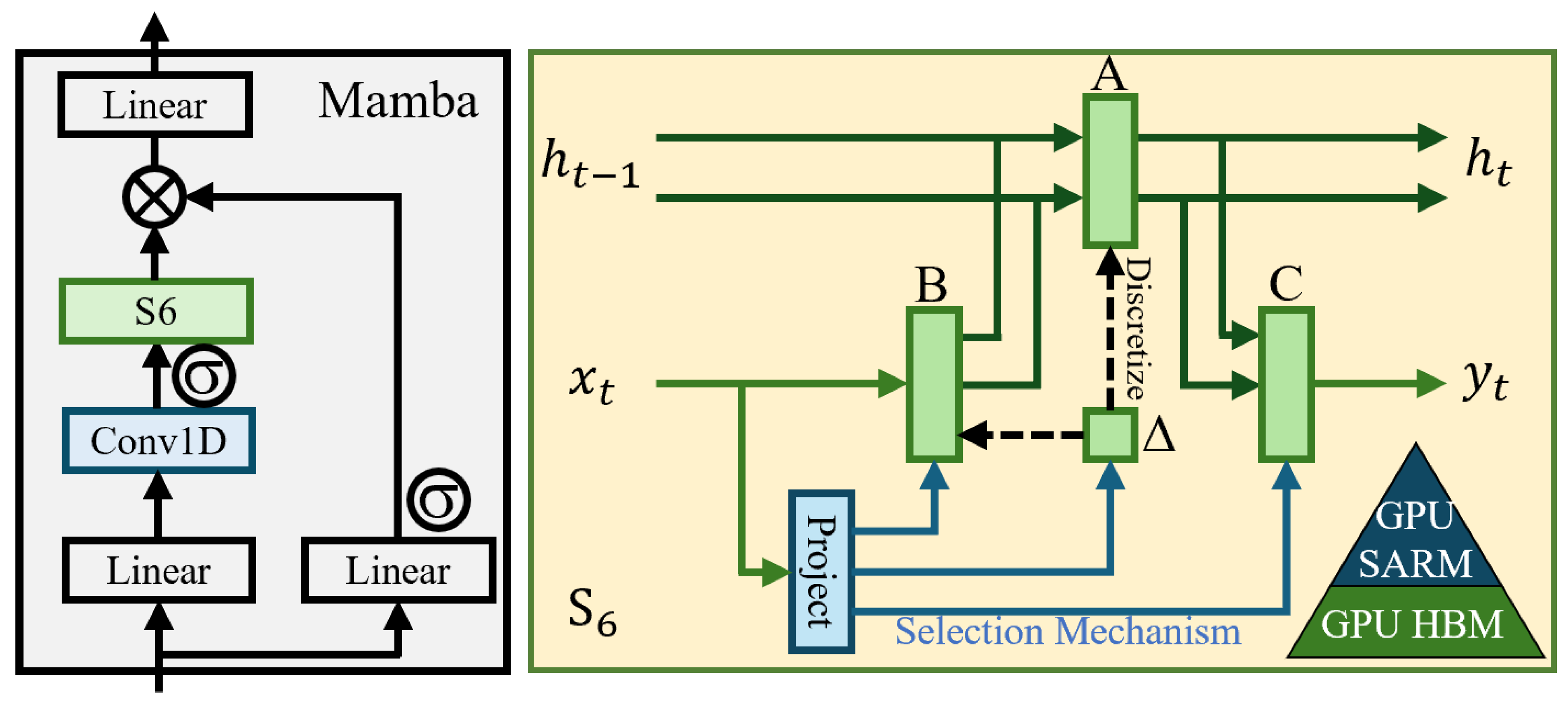

2.2. Mamba Model

3. Proposed Method

3.1. Network Architecture

3.2. Parameter Settings

4. Discussion

4.1. Dataset

4.2. Methods for Comparison

- KNN [6]: An instance-based learning algorithm that, given a test sample, finds its K nearest neighbors in the training set and predicts the sample's class by majority vote among those neighbors (or, for regression, by averaging their values).

- SVM [7]: A supervised learning algorithm widely used for classification that finds the hyperplane separating samples of different classes with the largest margin, i.e., the largest distance between the hyperplane and the nearest training samples (the support vectors).

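- RF [8]: An ensemble learning algorithm that trains multiple decision trees, each on a bootstrap sample of the training data (Bagging, i.e., Bootstrap Aggregating) with a random subset of features considered at each split, and produces the final prediction by majority voting (classification) or averaging (regression).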

- GBM [9]: A gradient boosting algorithm that trains weak learners (usually shallow decision trees) sequentially, fitting each new learner to the errors (more precisely, the negative gradient of the loss) of the ensemble built so far, so that overall predictive performance improves step by step.

- LDA [10]: A supervised method that finds the linear combinations of features that best separate the classes, i.e., that maximize between-class variance relative to within-class variance, and uses them to classify samples.

- MLP [36]: A feedforward neural network typically composed of an input layer, one or more hidden layers, and an output layer, in which each neuron is fully connected to all neurons in the previous layer. Nonlinear activation functions in the hidden layers allow it to model nonlinear relationships.

- 1D CNN [37]: A deep learning model that applies convolutions along one-dimensional data to extract local features and stacks such layers to build higher-level representations of the data.

- Transformer [17]: A deep learning architecture that uses the self-attention mechanism to process all positions of a sequence in parallel, allowing it to capture long-range dependencies efficiently.
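To make the simplest of these baselines concrete, the KNN rule can be sketched in pure Python. This is a minimal sketch, not the configuration used in the experiments: it assumes Euclidean distance between pixel spectra, a plain majority vote and an arbitrary K, and the function name `knn_predict` and the three-band toy spectra are hypothetical.

```python
from collections import Counter
from math import dist
from typing import List, Sequence


def knn_predict(train_x: Sequence[Sequence[float]], train_y: Sequence[str],
                query: Sequence[float], k: int = 3) -> str:
    """Predict the class of `query` by majority vote among its k nearest training samples."""
    # Euclidean distance from the query spectrum to every training spectrum,
    # sorted so that the closest samples come first
    ranked = sorted(
        ((dist(x, query), label) for x, label in zip(train_x, train_y)),
        key=lambda pair: pair[0],
    )
    # Count the labels of the k closest samples and return the most frequent one
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical toy data: 3-band reflectance spectra labelled by burn temperature
train_x: List[List[float]] = [
    [0.10, 0.20, 0.30], [0.12, 0.21, 0.29],
    [0.80, 0.70, 0.60], [0.82, 0.69, 0.61],
]
train_y = ["50 °C", "50 °C", "300 °C", "300 °C"]

print(knn_predict(train_x, train_y, [0.11, 0.20, 0.30], k=3))  # 50 °C
```

Library implementations such as scikit-learn's `KNeighborsClassifier` add efficient neighbor search and optional distance weighting, but the decision rule is the same.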

4.3. Quantitative Performance Metrics

4.4. Comparison of Results

4.5. Ablation Experiments

4.6. Processing Time

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Peck, M.D. Epidemiology of burns throughout the world. Part I: Distribution and risk factors. Burns 2011, 37, 1087–1100.

2. Atiyeh, B.S.; Gunn, S.W.; Hayek, S.N. State of the art in burn treatment. World J. Surg. 2005, 29, 131–148.

3. Jaskille, A.D.; Shupp, J.W.; Jordan, M.H.; Jeng, J.C. Critical review of burn depth assessment techniques: Part I. Historical review. J. Burn Care Res. 2009, 30, 937–947.

4. Wang, P.; Cao, Y.; Yin, M.; Li, Y.; Lv, S.; Huang, L.; Zhang, D.; Luo, Y.; Wu, J. Full-field burn depth detection based on near-infrared hyperspectral imaging and ensemble regression. Rev. Sci. Instrum. 2019, 90, 064103.

5. Jaskille, A.D.; Ramella-Roman, J.C.; Shupp, J.W.; Jordan, M.H.; Jeng, J.C. Critical review of burn depth assessment techniques: Part II. Review of laser doppler technology. J. Burn Care Res. 2010, 31, 151–157.

6. Kadry, R.; Ismael, O. A New Hybrid KNN Classification Approach based on Particle Swarm Optimization. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 291–296.

7. Hearst, M.A.; Dumais, S.T.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Appl. 1998, 13, 18–28.

8. Rigatti, S.J. Random forest. J. Insur. Med. 2017, 47, 31–39.

9. Natekin, A.; Knoll, A. Gradient boosting machines, a tutorial. Front. Neurorobot. 2013, 7, 21.

10. Xanthopoulos, P.; Pardalos, P.M.; Trafalis, T.B. Linear discriminant analysis. In Robust Data Mining; Springer: New York, NY, USA, 2013; pp. 27–33.

11. Zhang, M.; Yang, M.; Xie, H.; Yue, P.; Zhang, W.; Jiao, Q.; Xu, L.; Tan, X. A Global Spatial-Spectral Feature Fused Autoencoder for Nonlinear Hyperspectral Unmixing. Remote Sens. 2024, 16, 3149.

12. Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6999–7019.

13. Medsker, L.R.; Jain, L. Recurrent neural networks. Des. Appl. 2001, 5, 2.

14. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

15. Gu, A.; Johnson, I.; Goel, K.; Saab, K.; Dao, T.; Rudra, A.; Ré, C. Combining recurrent, convolutional, and continuous-time models with linear state space layers. Adv. Neural Inf. Process. Syst. 2021, 34, 572–585.

16. Gu, A.; Goel, K.; Ré, C. Efficiently modeling long sequences with structured state spaces. arXiv 2021, arXiv:2111.00396.

17. Vaswani, A. Attention is all you need. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017.

18. Fu, D.Y.; Dao, T.; Saab, K.K.; Thomas, A.W.; Rudra, A.; Ré, C. Hungry hungry hippos: Towards language modeling with state space models. arXiv 2022, arXiv:2212.14052.

19. Li, Y.; Cai, T.; Zhang, Y.; Chen, D.; Dey, D. What makes convolutional models great on long sequence modeling? arXiv 2022, arXiv:2210.09298.

20. Li, W.; Lv, M.; Chen, T.; Chu, Z.; Tao, R. Application of a hyperspectral image in medical field: A review. Image Graph 2021, 26, 1764–1785.

21. Fei, B. Hyperspectral imaging in medical applications. In Data Handling in Science and Technology; Elsevier: Amsterdam, The Netherlands, 2019; Volume 32, pp. 523–565.

22. Cui, R.; Yu, H.; Xu, T.; Xing, X.; Cao, X.; Yan, K.; Chen, J. Deep learning in medical hyperspectral images: A review. Sensors 2022, 22, 9790.

23. Cui, K.; Li, R.; Polk, S.L.; Lin, Y.; Zhang, H.; Murphy, J.M.; Plemmons, R.J.; Chan, R.H. Superpixel-based and Spatially-regularized Diffusion Learning for Unsupervised Hyperspectral Image Clustering. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4405818.

24. Anselmo, V.J.; Zawacki, B.E. Multispectral photographic analysis a new quantitative tool to assist in the early diagnosis of thermal burn depth. Ann. Biomed. Eng. 1977, 5, 179–193.

25. Eisenbeiß, W.; Marotz, J.; Schrade, J.P. Reflection-optical multispectral imaging method for objective determination of burn depth. Burns 1999, 25, 697–704.

26. Calin, M.A.; Parasca, S.V.; Savastru, R.; Manea, D. Characterization of burns using hyperspectral imaging technique—A preliminary study. Burns 2015, 41, 118–124.

27. Wang, P.; Cao, Y.; Yin, M.; Li, Y.; Wu, J. A burn depth detection system based on near infrared spectroscopy and ensemble learning. Rev. Sci. Instrum. 2017, 88, 114302.

28. Huang, Q.; Li, W.; Zhang, B.; Li, Q.; Tao, R.; Lovell, N.H. Blood cell classification based on hyperspectral imaging with modulated Gabor and CNN. IEEE J. Biomed. Health Inform. 2019, 24, 160–170.

29. Sommer, F.; Sun, B.; Fischer, J.; Goldammer, M.; Thiele, C.; Malberg, H.; Markgraf, W. Hyperspectral imaging during normothermic machine perfusion—A functional classification of ex vivo kidneys based on convolutional neural networks. Biomedicines 2022, 10, 397.

30. Zunair, H.; Hamza, A.B. Melanoma detection using adversarial training and deep transfer learning. Phys. Med. Biol. 2020, 65, 135005.

31. Ortega, S.; Halicek, M.; Fabelo, H.; Guerra, R.; Lopez, C.; Lejaune, M.; Godtliebsen, F.; Callico, G.M.; Fei, B. Hyperspectral imaging and deep learning for the detection of breast cancer cells in digitized histological images. In Proceedings of the SPIE—The International Society for Optical Engineering; NIH Public Access: Bethesda, MD, USA, 2020; Volume 11320.

32. Urbanos, G.; Martín, A.; Vázquez, G.; Villanueva, M.; Villa, M.; Jimenez-Roldan, L.; Chavarrías, M.; Lagares, A.; Juárez, E.; Sanz, C. Supervised machine learning methods and hyperspectral imaging techniques jointly applied for brain cancer classification. Sensors 2021, 21, 3827.

33. Manifold, B.; Men, S.; Hu, R.; Fu, D. A versatile deep learning architecture for classification and label-free prediction of hyperspectral images. Nat. Mach. Intell. 2021, 3, 306–315.

34. Li, Y.; Luo, Y.; Zhang, L.; Wang, Z.; Du, B. MambaHSI: Spatial-spectral mamba for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5524216.

35. Ma, X.; Ni, Z.; Chen, X. TinyViM: Frequency Decoupling for Tiny Hybrid Vision Mamba. arXiv 2024, arXiv:2411.17473.

36. Bebis, G.; Georgiopoulos, M. Feed-forward neural networks. IEEE Potentials 1994, 13, 27–31.

37. Qazi, E.U.H.; Almorjan, A.; Zia, T. A one-dimensional convolutional neural network (1D-CNN) based deep learning system for network intrusion detection. Appl. Sci. 2022, 12, 7986.

| Class | KNN | SVM | RF | GBM | LDA | MLP | 1D CNN | Transformer | MBNet |
|---|---|---|---|---|---|---|---|---|---|
| 50 °C | 100.00 | 100.00 | 100.00 | 99.66 | 99.83 | 99.83 | 100.00 | 99.34 | 99.50 |
| 100 °C | 81.61 | 91.52 | 84.55 | 86.24 | 84.14 | 93.87 | 96.52 | 91.68 | 96.15 |
| 150 °C | 87.65 | 86.13 | 88.17 | 90.22 | 73.00 | 90.05 | 95.86 | 92.86 | 96.47 |
| 200 °C | 69.84 | 75.63 | 70.51 | 74.39 | 66.85 | 88.12 | 85.52 | 87.50 | 92.28 |
| 250 °C | 71.36 | 75.53 | 73.29 | 68.85 | 68.10 | 86.00 | 86.03 | 80.14 | 84.08 |
| 300 °C | 86.60 | 87.28 | 84.75 | 79.70 | 82.12 | 86.92 | 86.25 | 84.86 | 90.09 |
| OA (%) | 82.42 | 85.69 | 83.19 | 83.08 | 78.97 | 90.86 | 91.61 | 89.47 | 93.08 |
| AA (%) | 82.84 | 86.02 | 83.54 | 83.18 | 79.01 | 90.80 | 91.70 | 89.40 | 93.10 |
| Kappa | 0.7890 | 0.8283 | 0.7983 | 0.7970 | 0.7477 | 0.8903 | 0.8993 | 0.8737 | 0.9170 |
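The per-class rows of the table above are per-class accuracies in percent, and the AA row is their unweighted mean; for MBNet, (99.50 + 96.15 + 96.47 + 92.28 + 84.08 + 90.09)/6 ≈ 93.10. The sketch below assumes the standard definitions of overall accuracy (OA), average accuracy (AA) and Cohen's kappa, all computed from a confusion matrix whose rows are true classes and whose columns are predicted classes; the function name `oa_aa_kappa` and the two-class example are hypothetical.

```python
from typing import List, Tuple


def oa_aa_kappa(cm: List[List[int]]) -> Tuple[float, float, float]:
    """Compute (OA, AA, Cohen's kappa) from a confusion matrix with cm[true][pred]."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(row) for row in cm]                             # true-class counts
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # predicted-class counts

    # OA: fraction of all samples on the diagonal (correctly classified)
    oa = sum(cm[i][i] for i in range(n)) / total
    # AA: unweighted mean of the per-class accuracies (recall of each true class)
    aa = sum(cm[i][i] / row_sums[i] for i in range(n)) / n
    # Kappa: agreement beyond chance, with chance agreement p_e from the marginals
    p_e = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - p_e) / (1 - p_e)
    return oa, aa, kappa


# Hypothetical two-class example: 50 samples per class, 10 of class 1 misclassified
print(oa_aa_kappa([[50, 0], [10, 40]]))  # approximately (0.9, 0.9, 0.8)

# AA check against the table: mean of MBNet's six per-class accuracies
mbnet_per_class = [99.50, 96.15, 96.47, 92.28, 84.08, 90.09]
print(sum(mbnet_per_class) / len(mbnet_per_class))  # about 93.095, reported as 93.10
```

Because AA weights every class equally while OA weights every sample equally, the two coincide only when the test set is balanced or all classes are equally well recognized.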

| Metric | Remove | Sequential | Reverse | Bidirectional |
|---|---|---|---|---|
| OA (%) | 88.92 | 92.44 | 92.83 | 93.08 |
| AA (%) | 88.79 | 92.36 | 92.76 | 93.10 |
| Kappa | 0.8670 | 0.9033 | 0.9140 | 0.9170 |
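The ablation table above compares a variant labelled Remove with Sequential, Reverse and Bidirectional variants. Assuming, as the labels suggest, that the latter three are scan directions of the Mamba block over the spectral sequence, the toy sketch below illustrates why a bidirectional scan can help: in a causal forward recurrence, each position only receives information from earlier positions. This is a deliberately simplified, hypothetical model: a fixed scalar recurrence `h_t = a*h_{t-1} + b*x_t` with output `y_t = c*h_t`, not Mamba's selective scan, whose parameters depend on the input [14]. Combining the two directions by summation is also an assumption.

```python
from typing import List


def linear_scan(x: List[float], a: float, b: float, c: float) -> List[float]:
    """Toy scalar state-space recurrence: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t."""
    h, ys = 0.0, []
    for x_t in x:
        h = a * h + b * x_t  # the state carries information only from earlier positions
        ys.append(c * h)
    return ys


def bidirectional_scan(x: List[float], a: float, b: float, c: float) -> List[float]:
    """Sum of a forward scan and a scan over the reversed sequence (flipped back)."""
    forward = linear_scan(x, a, b, c)
    backward = linear_scan(x[::-1], a, b, c)[::-1]
    return [f + r for f, r in zip(forward, backward)]


# Hypothetical 3-band "spectrum" with a single response in the last band
x = [0.0, 0.0, 1.0]
print(linear_scan(x, a=0.5, b=1.0, c=1.0))         # [0.0, 0.0, 1.0]
print(bidirectional_scan(x, a=0.5, b=1.0, c=1.0))  # [0.25, 0.5, 2.0]
```

With the response in the last band, the forward scan leaves the first two positions at zero, while the bidirectional scan propagates the response back to them.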

| Phase | KNN | SVM | RF | GBM | LDA | MLP | 1D CNN | Transformer | MBNet |
|---|---|---|---|---|---|---|---|---|---|
| Training | 0.01 | 0.97 | 7.98 | 256.82 | 0.11 | 3.31 | 187.58 | 927.49 | 18.44 |
| Prediction | 0.21 | 1.30 | 0.03 | 0.03 | 0.01 | 0.01 | 0.06 | 0.46 | 0.02 |
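The table above reports training and prediction times but does not state the unit or the measurement protocol. A minimal timing harness, assuming wall-clock seconds measured with Python's `time.perf_counter` around the fit and predict calls, might look like the following; the nearest-centroid classifier and the helper names `timed`, `fit_centroids` and `predict_centroids` are hypothetical stand-ins chosen only to have something to time, not any of the compared methods.

```python
import time
from math import dist
from typing import Callable, Dict, List, Tuple, TypeVar

T = TypeVar("T")


def timed(fn: Callable[..., T], *args) -> Tuple[T, float]:
    """Call fn(*args) and return its result with the elapsed wall-clock time in seconds."""
    start = time.perf_counter()  # monotonic, high-resolution clock
    result = fn(*args)
    return result, time.perf_counter() - start


def fit_centroids(xs: List[List[float]], ys: List[str]) -> Dict[str, List[float]]:
    """Hypothetical stand-in model: the mean spectrum of each class."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, y in zip(xs, ys):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict_centroids(centroids: Dict[str, List[float]], xs: List[List[float]]) -> List[str]:
    """Assign each spectrum to the class with the nearest mean spectrum."""
    return [min(centroids, key=lambda y: dist(centroids[y], x)) for x in xs]


train_x = [[0.10, 0.20], [0.12, 0.22], [0.80, 0.70], [0.82, 0.68]]
train_y = ["50 °C", "50 °C", "300 °C", "300 °C"]
model, train_s = timed(fit_centroids, train_x, train_y)
preds, predict_s = timed(predict_centroids, model, [[0.11, 0.21], [0.79, 0.71]])
print(preds, f"train {train_s:.6f} s, predict {predict_s:.6f} s")
```

Absolute timings depend on hardware and implementation, so numbers like those in the table are comparable only when all methods are measured on the same machine under the same protocol.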

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, S.; Gu, M.; Zhang, M.; Tan, X. Research on a Burn Severity Detection Method Based on Hyperspectral Imaging. Sensors 2025, 25, 1330. https://doi.org/10.3390/s25051330