Abstract

Internet of Medical Things (IoMT) technology has emerged from the introduction of the Internet of Things in the healthcare sector. However, the resource-constrained characteristics and heterogeneity of IoMT networks make them susceptible to various types of threats. Thus, it is necessary to develop novel security solutions (e.g., efficient and accurate anomaly-based intrusion detection systems (AIDSs)) that take the inherent limitations of IoMT networks into account before these networks can reach their full potential in the market. In this paper, we propose an AIDS specifically designed for resource-constrained devices within IoMT networks. The proposed lightweight AIDS leverages novelty detection and outlier detection algorithms instead of conventional classification algorithms to achieve (a) enhanced detection performance against both known and unknown attack patterns and (b) minimal computational costs.

1. Introduction

Internet of Medical Things (IoMT) technology has emerged from the introduction of the Internet of Things in the healthcare sector. The purpose of the IoMT is to improve the patient’s quality of life by enabling personalized e-health services without time and location limitations [1,2,3,4]. Nevertheless, the resource-constrained characteristics and heterogeneity of IoMT networks make them susceptible to various types of threats. As a result, IoMT networks, as well as the healthcare systems relying on them, face several security and privacy challenges [5,6]. For example, an attacker may intrude into the IoMT network to gain unauthorized access to sensitive information (e.g., medical data). In addition, an adversary may exploit vulnerabilities in IoMT networks to compromise the corresponding IoT-based healthcare systems in order to disrupt their normal operation (e.g., by flooding the resource-constrained IoMT network with a large number of requests) and/or to tamper with sensing data (e.g., by injecting fake data). This, in turn, can endanger the availability and/or integrity of the healthcare services that these systems provide [2]. Consequently, the development of security solutions protecting IoMT networks from attackers is crucial to ensure the acceptance and wide adoption of IoMT networks in the coming years.

However, conventional security mechanisms are complex and resource-intensive; therefore, they are not suitable for IoMT networks. On the one hand, conventional security mechanisms cannot be afforded by IoMT devices, which are resource-constrained and possess limited processing power, storage capacities, and battery lives. On the other hand, the IoMT devices are deployed and interconnected using lightweight communication protocols in a constrained environment that cannot support conventional security mechanisms [7]. Therefore, to ensure that IoMT networks earn the trust of all stakeholders and realize their full potential in the healthcare sector, it is crucial to create innovative security mechanisms. These mechanisms must effectively and efficiently tackle the significant security challenges faced by IoMT networks, taking into account their unique limitations due to resource constraints [6,8].

To achieve this goal, anomaly-based intrusion detection systems (AIDSs) are recognized by both industry professionals and researchers as a promising security solution that can significantly enhance the protection of IoT networks. However, for these systems to be effective, it is essential to develop innovative lightweight AIDSs tailored for resource-constrained environments [7,9]. Currently, as far as we are aware, only a limited number of AIDSs (i.e., [10,11,12,13,14,15,16]) have been introduced in the literature for protecting resource-limited IoMT devices within IoMT networks. In addition, only two of them (i.e., [13,14]) appear to have been implemented. However, they have not been evaluated during runtime. Moreover, most of the proposed AIDSs for IoMT networks in the literature employ conventional classification machine learning (ML) algorithms (e.g., Naïve Bayes or Random Forest) that are more focused on classifying a new record into one of the classes for which the classification algorithms have been trained [17]. In the case of a record that was not part of the training data, conventional classification ML algorithms cannot make accurate predictions.

To this end, in this work, we propose an AIDS specifically designed for resource-constrained devices within IoMT networks. The proposed AIDS consists of two main components: (a) the monitoring and data acquisition (MDA) component, which is specifically engineered to operate on resource-constrained IoMT devices, and (b) the remote detection engine (RDE) component, which functions on the gateway. The proposed AIDS leverages novelty detection and outlier detection algorithms instead of conventional classification algorithms. The runtime performance of the implemented AIDS was evaluated using the IoMT testbed and dataset produced in our previous works [18,19], with custom scripts that launched both known and unknown (i.e., unknown to the trained ML models) attacks. The evaluation results showed that the implemented AIDS can achieve (a) enhanced detection performance against both known and unknown attack patterns and (b) minimal computational costs, with CPU and memory usage for the MDA component remaining below 0.1%.

The rest of this work is organized as follows. Section 2 provides the design of the proposed AIDS for resource-constrained IoMT devices including a detailed description of its various components and internal modules. Afterward, Section 3 describes the novelty detection and outlier detection algorithms employed, the training dataset used, and the runtime performance evaluation of the implemented AIDS. Moreover, Section 4 presents a comparison with state-of-the-art AIDSs. Lastly, Section 5 concludes this paper and provides hints for future work.

2. Proposed AIDS

2.1. System Architecture

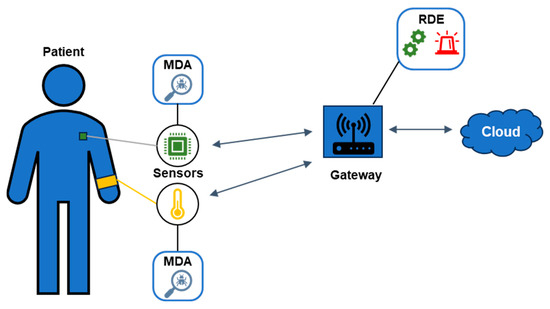

The proposed AIDS is designed to protect resource-constrained IoMT devices within an IoMT network from both internal and external threats that exploit the inherent security vulnerabilities associated with IoT technology. Our approach not only addresses currently known IoT attack vectors but also potential unknown threats that may emerge in the future, affecting all four layers of the ITU-T IoT reference model [20]. As illustrated in Figure 1, the proposed AIDS consists of two main components: (a) the monitoring and data acquisition (MDA) component, which is specifically engineered to operate on resource-constrained IoMT devices, and (b) the remote detection engine (RDE) component, which functions on the gateway. Detailed descriptions of the MDA and RDE components can be found in Section 2.2 and Section 2.3, respectively.

Figure 1. Architecture of the proposed AIDS in the IoMT network.

The RDE component produces alerts when it detects intrusions that target the IoMT devices connected to the gateway. These alerts are sent to a cloud server for further processing and visualization, as depicted in Figure 1. Additionally, the gateway, where the RDE component runs, is required to have enough computational resources (e.g., those of a Raspberry Pi 4 Model B) to support both its standard operations as a relay node and the functions of the RDE component running on it.

In addition, each IoMT device that incorporates the MDA component must meet several essential requirements to function effectively. First, it should have the capability to access its own behavioral data, such as CPU usage and memory utilization. This access is crucial for monitoring performance and identifying potential issues. Second, the device must possess adequate communication bandwidth to efficiently transmit the collected behavioral data to the gateway. This ensures timely and accurate data transfer, which is vital for continuous monitoring and analysis. Lastly, the device needs sufficient computational resources to manage its primary functions as a sensor or actuator while simultaneously supporting the operations of the MDA component. For the implementation of the MDA component, we utilized a Raspberry Pi 4 Model B, which serves as an exemplary IoMT sensor device capable of generating humidity and temperature sensing data. This choice of hardware not only demonstrates the practical application of the MDA component but also highlights its effectiveness in a resource-constrained environment.

2.2. Monitoring and Data Acquisition (MDA) Component

The MDA component runs on an IoMT device connected to the gateway. The MDA component performs two operations: (a) monitoring of the behavior of the IoMT device hosting it and collection of relevant behavior data (e.g., CPU usage and memory usage) during a specific monitoring period (i.e., the sampling period) and (b) transmission of the collected data to the gateway where the RDE component runs. Then, the RDE component on the gateway can identify whether an attack incident has occurred in the IoMT device hosting the MDA component.

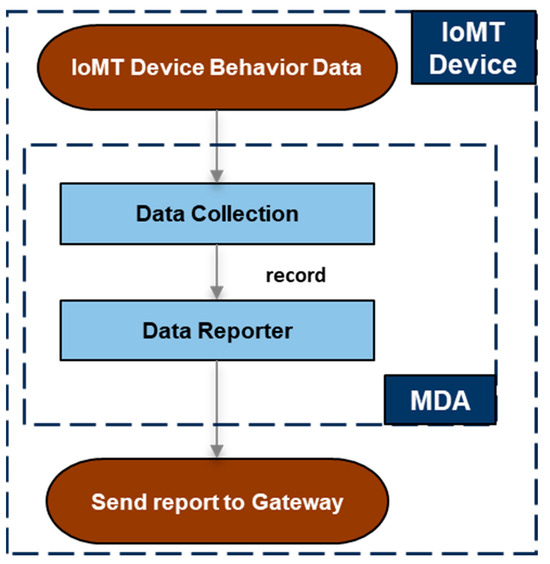

In essence, the MDA component runs on a resource-constrained IoMT device that cannot perform local intrusion detection on its own. The MDA component was implemented by considering an IoMT device (e.g., Raspberry Pi 4 Model B) running a Linux-based OS, and more specifically, a Debian-based OS (e.g., Ubuntu OS [21]). For the implementation of the MDA component, the C programming language was used to minimize the required resources. In addition, apart from the standard C libraries, the implementation uses one external and freely available library, the Eclipse Paho C client library [22], so that the collected behavior data can be sent to the gateway through the MQTT protocol. The internal architecture of the MDA component, along with its modules, is shown in Figure 2.

Figure 2. The Monitoring and Data Acquisition (MDA) component.

2.2.1. Data Collection

The “data collection” module is responsible for collecting behavior data about the IoMT device at runtime during a sampling period (i.e., the behavior sampling period). The collected behavior data include the set of features presented in Table 1 along with their descriptions. Based on the collected behavior data, a record in CSV format is created as the output of the “data collection” module.

Table 1. Summary of features collected by the “Data Collection” module of the MDA component.

In particular, the module accesses a specific directory (i.e., “/proc” directory) that is present in a Linux-based OS and gathers the required feature values that are present in the “/proc/stat”, “/proc/meminfo”, and “/proc/diskstats” files. Table 1 presents the feature values collected by the “data collection” module along with their descriptions based on the documentation of the “/proc” directory included in [23,24,25,26].
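As an illustration of how such values can be read, the following Python sketch parses the aggregate `cpu` line of “/proc/stat” and the entries of “/proc/meminfo”. It is not the authors' implementation (the MDA component is written in C), and the helper names are hypothetical. It also assumes that the ten CPU mode features correspond to the ten per-mode tick counters that the Linux proc documentation lists for the `cpu` line.

```python
# Hypothetical helper names; the field order follows the Linux proc documentation.
CPU_MODES = ("user", "nice", "system", "idle", "iowait",
             "irq", "softirq", "steal", "guest", "guest_nice")


def parse_cpu_line(stat_text):
    """Return the aggregate per-mode CPU ticks found in the text of /proc/stat."""
    for line in stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":      # aggregate line, not cpu0, cpu1, ...
            return dict(zip(CPU_MODES, map(int, fields[1:11])))
    raise ValueError("no aggregate 'cpu' line found")


def parse_meminfo(meminfo_text):
    """Return /proc/meminfo entries as {name: integer value}."""
    values = {}
    for line in meminfo_text.splitlines():
        name, _, rest = line.partition(":")
        if rest.strip():                       # skip blank or malformed lines
            values[name.strip()] = int(rest.split()[0])
    return values


def read_proc(path):
    """Read one /proc file as text (only meaningful on a Linux system)."""
    with open(path) as handle:
        return handle.read()
```

On a Linux device, `parse_cpu_line(read_proc("/proc/stat"))` would return the current tick counters; the parsing functions take plain text so that they can also be exercised with captured samples.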

At this point, it is important to note that some of the collected feature values in Table 1 are related to a specific system resource type (i.e., CPU, memory, or storage) and thus can be organized into the following three feature groups:

- the CPU mode group (i.e., indexes 2–11) containing all feature values related to the time (in ticks) that the CPU spends in a specific mode of operation,

- the memory group (i.e., indexes 17–25) containing all feature values that describe how the system memory is used, and

- the disk stats group (i.e., indexes 26–33) containing all feature values that describe how the OS interacts with storage drives.

2.2.2. Data Reporter

The “data reporter” module receives the collected records from the “data collection” module. For each collected record, the module creates a report and transmits it to the gateway using the MQTT application protocol. A report consists of two lines: (i) one line containing a unique identifier of the device where the MDA component is running, and (ii) another line containing the collected record in CSV format. An example of a report is shown below.

| Title: MDA report (two lines) |

| iomtSensor2 |

| 1733143854599,102899,11863,20796,1155510,1625,0,1016,0,0,0,3532646,11824936,6992,2,1717639,2035460,124560,610688,614092,886328,826624,126760,8624,20364,68185,5664298,20705,45733,3789920,75991,0,124190 |

2.3. Remote Detection Engine (RDE) Component

The RDE component runs on the gateway of the IoMT network. The aim of the RDE component is to:

- receive the MDA reports from the IoMT devices (i.e., hosting the MDA component) that are connected to the gateway and leverage the received MDA reports to identify whether an attack incident has occurred in the connected IoMT devices, and

- send appropriate security alerts to the cloud server for further processing and visualization when attack incidents are detected.

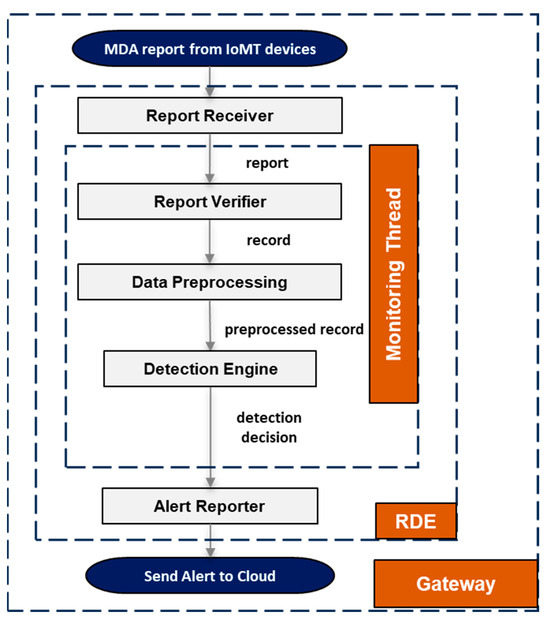

The RDE component was implemented by considering a gateway device (e.g., Raspberry Pi 4 Model B) running a Linux-based OS, and more specifically, a Debian-based OS (e.g., Ubuntu OS [21]). The RDE component was implemented in Java and Python. In addition, apart from the standard Java and Python libraries, the implementation uses the external and freely available libraries described in Table 2. The internal architecture of the RDE component, along with its modules, is shown in Figure 3.

Table 2. Employed external libraries and short descriptions of their use.

Figure 3. The Remote Detection Engine (RDE) component.

2.3.1. Report Receiver

The “report receiver” module receives an MDA report during runtime on the gateway from a connected IoMT device where the MDA component is running. The purpose of the “report receiver” module is either (a) to create a new thread (i.e., a monitoring thread) to process the current MDA report or (b) to redirect the MDA report to an existing processing thread. Intrusion detection for IoMT devices where the MDA components are running is performed on a per-device basis: each thread created by the “report receiver” module performs intrusion detection for exactly one IoMT device, based only on the MDA reports received from that device.

As a new MDA report is received, the “report receiver” module performs the following operations:

- The “report receiver” module splits the report into its two parts: (a) the unique ID of the IoMT device from where the MDA report originates and (b) the enclosed record collected on the IoMT device.

- The “report receiver” module checks if the unique ID of the IoMT device is present (i.e., registered) in the configuration file of the RDE component. The configuration file of the RDE component contains an array of unique device IDs that must include all unique IDs of the IoMT devices that are connected to the gateway and where MDA components are running.

- Only after ensuring that the IoMT device related to the received MDA report is registered in the configuration file of the RDE component does the “report receiver” module proceed to check whether a processing thread regarding this IoMT device already exists.

- In the case of an existing thread, the new MDA report is redirected to it; otherwise, a new thread is created to process the new MDA report.
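The steps above can be sketched as follows. This is a minimal Python illustration, not the authors' implementation: the class and method names are hypothetical, unregistered reports are assumed to be silently dropped (the text only states that processing proceeds after registration is confirmed), and `on_report` is assumed to be called from a single thread such as a messaging callback.

```python
import queue
import threading


class ReportReceiver:
    """Routes each MDA report to one worker thread per registered device."""

    def __init__(self, registered_ids, handle_record):
        self.registered_ids = set(registered_ids)   # IDs from the configuration file
        self.handle_record = handle_record          # callback: (device_id, record)
        self.queues = {}                            # device_id -> pending records

    def on_report(self, report):
        """Split a two-line report and dispatch its record; return True if accepted."""
        device_id, _, record = report.partition("\n")
        device_id, record = device_id.strip(), record.strip()
        if device_id not in self.registered_ids:
            return False                            # assumption: drop unregistered reports
        if device_id not in self.queues:            # first report: create the thread
            self.queues[device_id] = queue.Queue()
            threading.Thread(target=self._worker, args=(device_id,),
                             daemon=True).start()
        self.queues[device_id].put(record)          # otherwise: redirect to existing thread
        return True

    def _worker(self, device_id):
        """Process the records of exactly one device, in arrival order."""
        pending = self.queues[device_id]
        while True:
            record = pending.get()
            self.handle_record(device_id, record)
            pending.task_done()
```

Using one queue per device keeps the per-device ordering of records, which matters because later processing compares each record with its predecessor.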

2.3.2. Report Verifier

The “report verifier” module processes an MDA report at runtime. The module performs the following operations:

- The “report verifier” module splits the report into its two parts: (a) the unique ID of the IoMT device from where the MDA report originates and (b) the enclosed record collected on the IoMT device.

- The “report verifier” module performs a check on the enclosed record to verify whether the record is valid. In particular, the check is performed to ensure that the record follows the CSV format and that it contains the expected number of features with their expected types.

- If the record is deemed valid, it is forwarded to the “data preprocessing” module. Otherwise, if the record is deemed invalid, it is discarded, and the thread waits for a new MDA report.
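A validity check of this kind might look like the Python sketch below. The function name is hypothetical, and treating every feature as an integer is an assumption based on the example report shown earlier; the actual expected types are those defined in Table 1.

```python
EXPECTED_FEATURES = 33   # a collected record contains 33 feature values


def verify_record(record):
    """Return the parsed feature values, or None if the record is invalid."""
    fields = [field.strip() for field in record.split(",")]
    if len(fields) != EXPECTED_FEATURES:
        return None                      # wrong number of features
    try:
        # Assumption: all features are integers, as in the example report.
        return [int(field) for field in fields]
    except ValueError:
        return None                      # a field is empty or not an integer
```

A caller would forward a non-`None` result to the preprocessing stage and discard the record otherwise.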

2.3.3. Data Preprocessing

The input of the “data preprocessing” module is a record produced by the “report verifier” module. The output of the “data preprocessing” module is a preprocessed record in CSV format that can be used by the “detection engine” module. The “data preprocessing” module processes the input record in two stages to produce the output preprocessed record.

Based on the description of the features in Table 1, it can be observed that some feature values (i.e., features with indexes 1–14, 16, 26–31, and 33) depend heavily on the time that has elapsed since the system boot. As long as the monitored system (i.e., IoMT device) is up and running, these feature values will be continuously increasing. In this context, our focus is not on the actual value of these features but on the increment that has occurred between subsequent sampling periods. Thus, the objective of the first preprocessing stage is the decoupling of the feature values of a collected record from the runtime duration of the underlying system.

The first preprocessing stage processes the values of the features with indexes 1–14, 16, 26–31, and 33. In contrast, the values of the remaining features (i.e., features with indexes 15, 17–25, and 32) do not depend on the runtime duration of the system. Therefore, they are simply forwarded to the second preprocessing stage without being processed in the first preprocessing stage.

Below, we present an equation to clarify how the first preprocessing stage works. We denote the record collected at time $t$, containing 33 feature values, as $r(t)$. In addition, we denote as $r^{pp1}(t)$ the record produced after the first preprocessing stage (i.e., pp1) based on $r(t)$. Let $r_i(t)$ denote the $i$-th feature value of $r(t)$, where $i$ is an integer in the range [1, 33]. Furthermore, we assume that $r_i^{pp1}(t)$ represents the corresponding output feature value, after the first preprocessing stage, for the feature value $r_i(t)$. Finally, we assume that the first record is collected at $t = t_0$. Then, Equation (1) shows how $r_i^{pp1}(t)$ is computed based on $r_i(t)$ for different moments in time and different feature indexes. The output feature values of a record after the first preprocessing stage are summarized in Table 3.
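Based only on the description in this section, the first preprocessing stage can be sketched as the piecewise rule below. The notation is illustrative: $r_i(t)$ is the $i$-th feature value of the record collected at time $t$, $r_i^{pp1}(t)$ is the corresponding output, $t^{-}$ is the collection time of the preceding record, and $D$ is the set of indexes of the features that grow with system uptime. How the very first record (collected at $t_0$, with no predecessor) is handled cannot be recovered from the text, so that case is deliberately left out.

```latex
% D = {1,...,14, 16, 26,...,31, 33}: indexes of the uptime-dependent features
% t^- : collection time of the record preceding the one collected at t (t > t_0)
\[
r^{pp1}_i(t) =
\begin{cases}
  r_i(t) - r_i(t^{-}), & i \in D,\ t > t_0 \\[2pt]
  r_i(t),              & i \notin D
\end{cases}
\]
```

For $i = 1$ (the timestamp), the difference is the elapsed time between two consecutive records, which the text refers to as the “timestamp_delta” value.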

Table 3. Output feature values after the first preprocessing stage.

The output of the first preprocessing stage is received by the second preprocessing stage that, in turn, performs normalization on: (a) the feature values of the “CPU mode” feature group, (b) the feature values of the “memory” feature group, and (c) the output “delta values” of the first preprocessing stage based on the “timestamp_delta” value (i.e., the feature value with index 1). The objective of the second preprocessing stage is the decoupling of the feature values of a record produced by the first preprocessing stage from the collection sampling period of the “data collection” module of the MDA component.

Below, we present an equation to further clarify how the second preprocessing stage works. We denote as $r^{pp1}(t)$ a record generated by the first preprocessing stage at time $t$, containing 33 feature values. In addition, we define $r^{pp2}(t)$ as the record produced by the second preprocessing stage (i.e., pp2), containing 31 feature values, based on $r^{pp1}(t)$. Let $r_i^{pp1}(t)$ represent the $i$-th feature value of $r^{pp1}(t)$, where $i$ is an integer in the range [1, 33]. Similarly, we consider $r_j^{pp2}(t)$ as the $j$-th feature value of $r^{pp2}(t)$, where $j$ is an integer in the range [1, 31]. Finally, we consider that the first record is collected at $t = t_0$. Then, Equation (2) shows how $r_j^{pp2}(t)$ is computed based on $r_i^{pp1}(t)$ for different feature indexes.
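A possible form of the second-stage rule, reconstructed from the explanation given in this section, is sketched below. The symbols are illustrative: $x_i$ stands for the $i$-th output of the first stage and $y_i$ for its normalized counterpart, written in terms of the input index $i$. The values with indexes 1 and 17 serve only as denominators and are not emitted, which leaves 31 output values. The renumbering of those outputs to the range [1, 31] follows Table 4 and is not reproduced here.

```latex
% x_i: i-th feature value after the first preprocessing stage (i = 1..33)
% y_i: normalized value; x_1 (timestamp_delta) and x_17 (memTotal) are not emitted
\[
y_i =
\begin{cases}
  x_i \Big/ \sum_{k=2}^{11} x_k, & i \in \{2,\dots,11\} \quad \text{(CPU mode group)} \\[4pt]
  x_i / x_{17},                  & i \in \{18,\dots,25\} \quad \text{(memory group)} \\[4pt]
  x_i / x_{1},                   & i \in \{12,13,14,16,26,\dots,31,33\} \quad \text{(delta values)} \\[4pt]
  x_i,                           & i \in \{15,32\}
\end{cases}
\]
```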

At this point, it is worth explaining the above Equation (2) further. In a record produced by the first preprocessing stage, the values of the “CPU mode” feature group (i.e., feature indexes 2 to 11) detail the time (i.e., in ticks) that the CPU has spent in different modes of operation during the last behavior sampling period. During the second preprocessing stage, the feature values of the “CPU mode” group are summed, with the sum being equal to the total CPU ticks during the last behavior sampling period. Then, each feature value of the “CPU mode” group (i.e., feature indexes 2 to 11) is divided by the computed sum. The ten computed feature values are among the output values of the second preprocessing stage, and they describe the fraction of CPU ticks that the CPU has spent in a specific mode of operation during the last behavior sampling period.

Afterward, regarding the values of the “memory” feature group (i.e., feature indexes 17 to 25), every value except the total (i.e., feature indexes 18 to 25) is divided by the value of the “memTotal” feature (i.e., feature index 17). The eight computed feature values are among the output values of the second preprocessing stage, and they describe the memory usage as fractions in the range [0, 1].

Moreover, the values of features with indexes of 12–14, 16, 26–31, and 33 from the first preprocessing stage are divided by the value of the elapsed time between two collected records (i.e., the “timestamp_delta” value in index 1). The eleven computed feature values are among the output values of the second preprocessing stage. As a result of the performed division process, the eleven computed values do not depend anymore on the magnitude of the elapsed time between two collected records.

Lastly, the remaining feature values from the first preprocessing stage (i.e., feature values with indexes 15 and 32) are not processed in the second preprocessing stage and thus are simply forwarded to the output.

The output feature values of a record after the second preprocessing stage are the output of the “data preprocessing” module and are summarized in Table 4.
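To make the two stages concrete, the following Python sketch applies them to a pair of consecutive records. It is an illustration under stated assumptions rather than the authors' code: the function and constant names are hypothetical, the order of the 31 output values is an assumption (the actual order is given in Table 4), and the handling of the first record and of zero denominators is not described in the text, so the sketch simply requires a previous record and raises an error on a zero denominator.

```python
# Index sets are taken from the text (1-based feature indexes); names are hypothetical.
DELTA_IDX = set(range(1, 15)) | {16, 33} | set(range(26, 32))   # 1-14, 16, 26-31, 33
CPU_IDX = range(2, 12)                                          # CPU mode group, 2-11
MEM_TOTAL_IDX = 17                                              # memTotal
MEM_IDX = range(18, 26)                                         # rest of memory group
RATE_IDX = [12, 13, 14, 16, 26, 27, 28, 29, 30, 31, 33]         # divided by timestamp_delta
PASS_IDX = [15, 32]                                             # forwarded unchanged


def first_stage(prev, curr):
    """Replace uptime-dependent values by their increment since the previous record."""
    return [c - p if i in DELTA_IDX else c
            for i, (p, c) in enumerate(zip(prev, curr), start=1)]


def second_stage(pp1):
    """Normalize a first-stage record (33 values) into 31 values."""
    x = dict(enumerate(pp1, start=1))            # 1-based access: x[i]
    cpu_total = sum(x[i] for i in CPU_IDX)
    if cpu_total == 0 or x[1] == 0 or x[MEM_TOTAL_IDX] == 0:
        raise ValueError("cannot normalize: zero denominator")
    out = [x[i] / cpu_total for i in CPU_IDX]            # fractions of CPU ticks
    out += [x[i] / x[MEM_TOTAL_IDX] for i in MEM_IDX]    # fractions of total memory
    out += [x[i] / x[1] for i in RATE_IDX]               # increments per unit of time
    out += [x[i] for i in PASS_IDX]
    return out                                           # assumed order; see Table 4
```

For two consecutive records `prev` and `curr`, `second_stage(first_stage(prev, curr))` yields the preprocessed record that would be passed to the detection step.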

Table 4. Output feature values after the second preprocessing stage of the “Data Preprocessing” module.

2.3.4. Detection Engine

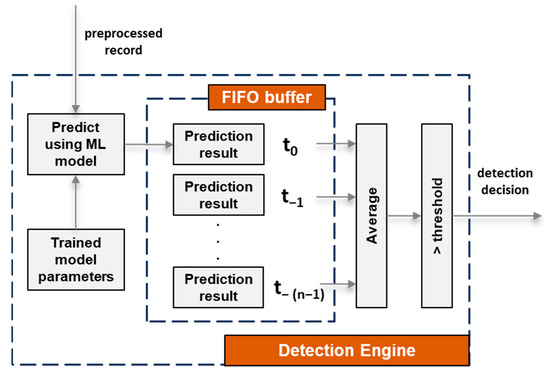

The output of the “data preprocessing” module is a preprocessed record, and it is received by the “detection engine” module as shown in Figure 3. Based on the input preprocessed record, the “detection engine” module detects whether or not an intrusion has occurred on the connected device hosting the MDA component, meaning that the output of the “detection engine” module is a detection decision. In principle, this is the core module of the proposed RDE component and uses ML algorithms to identify both known and unknown attacks targeting the resource-constrained devices connected to the gateway. The internal architecture of the “detection engine” module is depicted in Figure 4.

Figure 4. Internal architecture of the “Detection Engine” module of the RDE component.

As shown in Figure 4, for each received preprocessed record, a trained ML model is used to calculate a prediction result. There are two possible values (i.e., “1” or “0”) for the prediction result, signifying whether an intrusion event has occurred or not based on a specific preprocessed record. Subsequently, the calculated prediction result for each record is stored in a FIFO buffer with a length equal to “n”, which is configurable within the RDE component. The FIFO buffer stores the most recent prediction result, as well as the previous “n − 1” prediction results that were computed based on the last “n − 1” preprocessed records. Afterward, an average value is computed based on all the values of the prediction results inside the buffer. The computed value (i.e., intrusion probability) is then compared to a “threshold” value so that the “detection engine” module can determine whether an intrusion event has occurred or not.

In principle, the purpose of the FIFO buffer is to enable the “detection engine” module to consider not only the last received preprocessed record but also a specific number (i.e., n − 1) of previously received preprocessed records when performing intrusion detection. In addition, because the length of the FIFO buffer is configurable, the intrusion detection process can be tuned not only by training a better ML model but also by adjusting how many recent prediction results are taken into account.

Moreover, as mentioned, a “threshold” value is compared with the intrusion probability calculated by averaging the prediction results in the FIFO buffer to determine whether an intrusion event has occurred or not. The “threshold” value is also controlled by a respective configuration parameter (i.e., “threshold” parameter) of the RDE component. The purpose of the “threshold” parameter is to provide an extra manner of fine-tuning the intrusion detection process of the “detection engine” module. A higher value for the “threshold” parameter means that positive decisions (i.e., decisions that an intrusion event has occurred) will occur only at a higher intrusion probability value and vice versa.
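The buffering and thresholding logic described above can be sketched in a few lines of Python. The class name is hypothetical, and two details are assumptions because the text does not specify them: while the buffer holds fewer than n results, the average is taken over the results available so far, and a decision is positive when the intrusion probability is greater than or equal to the threshold.

```python
from collections import deque


class DetectionWindow:
    """Averages the last n prediction results and compares the mean to a threshold."""

    def __init__(self, n, threshold):
        self.buffer = deque(maxlen=n)    # FIFO: the oldest result is dropped when full
        self.threshold = threshold

    def update(self, prediction):
        """prediction: 1 (intrusion) or 0 (normal). Returns (probability, decision)."""
        self.buffer.append(prediction)
        probability = sum(self.buffer) / len(self.buffer)
        # Assumption: ">=" is used; the text only says the values are compared.
        return probability, probability >= self.threshold
```

With `n = 4` and a threshold of 0.5, for example, a single isolated positive prediction among three negatives yields a probability of 0.25 and no alert, which illustrates how a longer buffer damps one-off false positives at the cost of slower reaction.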

Lastly, it is important to mention that the “detection engine” module makes a prediction based on one preprocessed record by using a custom Python script. The functionality of the custom Python scripts follows three steps:

- Parse the input preprocessed record,

- Load the trained ML model, and

- Make a prediction.

The use of custom Python scripts increases the ease and flexibility of integrating different ML algorithms into the “detection engine” module of the RDE component.
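A script following these three steps might look like the sketch below. It is illustrative only: the function names are hypothetical, and serializing the trained model with `pickle` is an assumption, since the storage format is not stated. The `outlier_label` parameter reflects the differing conventions of the two libraries: Scikit-Learn detectors return −1 for outliers from `predict`, whereas PyOD detectors return 1.

```python
import pickle


def parse_record(line):
    """Step 1: parse one preprocessed CSV record into a list of floats."""
    return [float(value) for value in line.strip().split(",")]


def load_model(path):
    """Step 2: load a trained model (assumption: it was saved with pickle)."""
    with open(path, "rb") as handle:
        return pickle.load(handle)


def predict(model, line, outlier_label=-1):
    """Step 3: return 1 (intrusion) or 0 (normal) for one preprocessed record."""
    label = model.predict([parse_record(line)])[0]
    return 1 if label == outlier_label else 0
```

Because only the `predict` method of the model is used, swapping one detection algorithm for another requires no change to the script beyond the model file and, for PyOD models, passing `outlier_label=1`.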

2.3.5. Alert Reporter

The input of the “alert reporter” module is the detection decisions from the “detection engine” module. When an intrusion is detected, the “alert reporter” module sends an appropriate alert, in JSON format, to the cloud server. The “alert reporter” module is implemented to be able to send alerts to the cloud server through either the HTTP application protocol or the MQTT application protocol. Each alert contains three key pieces of information: (i) a timestamp indicating the time that the intrusion incident occurred, (ii) a unique identifier of the device hosting the MDA component, and (iii) the intrusion probability, represented as a decimal number within the range [0, 1], which reflects the likelihood that an intrusion incident has occurred. An example of an alert, in JSON format, produced by the RDE component for a connected resource-constrained device (i.e., “iomtSensor1”), hosting the MDA component, is shown below.

| Title: RDE intrusion alert based on detection decisions in JSON format |

| { |

| "ts": "2024-10-04 10:13:34", |

| "dev_ID": "iomtSensor1", |

| "intrusion_prob": "1.0" |

| } |
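For illustration, an alert with these three fields could be assembled in Python as follows. The function name is hypothetical; the field names, the timestamp layout, and the string-typed probability mirror the example alert above. Transmission over HTTP or MQTT is omitted.

```python
import json
from datetime import datetime


def build_alert(device_id, intrusion_prob, when=None):
    """Return an alert as a JSON string with the fields of the example above."""
    when = when or datetime.now()
    return json.dumps({
        "ts": when.strftime("%Y-%m-%d %H:%M:%S"),
        "dev_ID": device_id,
        "intrusion_prob": str(intrusion_prob),   # sent as a string, as in the example
    })
```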

3. Runtime Performance Evaluation

The proposed AIDS was implemented on the IoMT security testbed that was developed as part of our previous works in [18,19]. The next step is to train and integrate novelty detection and outlier detection algorithms into the “detection engine” module of the RDE component in order to evaluate the performance of the implemented AIDS during runtime. The runtime performance evaluation focuses on two key aspects: (a) assessing the performance of the ML models in detecting attacks during runtime and (b) measuring the computational cost of the implemented AIDS components on the IoMT device (i.e., MDA component) and the gateway (i.e., RDE component).

3.1. Employed Detection Algorithms, Training Dataset, and Hyperparameters

At this point, it is necessary to discuss why the focus is on novelty detection and outlier detection algorithms in this work. This is because these algorithms need to be trained mostly on records from a single known class to determine whether a new record is similar to the training records [17,31]. In our context, it is feasible to collect training records that represent the normal operation of the IoMT network, which corresponds to a single known class. On the other hand, obtaining training records related to IoMT networks under attack (i.e., known or unknown attacks) is not possible. This limitation underscores the necessity for advanced detection algorithms, such as novelty detection and outlier detection algorithms, capable of identifying anomalies without relying on labeled datasets from attack scenarios. By focusing on these algorithms, we aim to enhance the intrusion detection performance in IoMT networks, where labeled attack data are unavailable.

In addition, the aim is to use algorithms that are already implemented and readily available for use. This leads to focusing on novelty detection and outlier detection algorithms available in two popular Python libraries, namely Scikit-Learn [32,33] and PyOD [34,35]. These libraries provide robust implementations of these algorithms, facilitating the integration into the proposed AIDS.

Furthermore, regarding the novelty detection and outlier detection algorithms that are to be integrated into the RDE component (i.e., the “detection engine” module), we are using a dataset generated in our work in [19], along with the hyperparameters for training these algorithms as presented in [19]. In [19], a dataset (i.e., the LDE dataset) was generated by capturing behavior records from an IoMT device (i.e., the Raspberry Pi device) that was meant to function as a sensor device, similarly to the IoMT sensor device (i.e., the Raspberry Pi device) hosting the MDA component. In addition, the “LDE dataset” contains records with the same features as those used by the “detection engine” module of the RDE component. Thus, in this case, we trained the employed algorithms using the “LDE dataset” and applied the same hyperparameters for these algorithms as investigated in [19].

Based on our work in [19], we trained and evaluated the runtime performance of five novelty detection algorithms and one outlier detection algorithm: (i) one-class support vector machine (OCSVM), (ii) local outlier factor (LOF), (iii) Gaussian kernel density estimation (G_KDE), (iv) Parzen window kernel density estimation (PW_KDE), (v) Bayesian Gaussian mixture models (B_GMM), and (vi) minimum covariance determinant (MCD). Moreover, based on our work in [19], Table 5 shows the hyperparameters used when training the six detection algorithms.

Table 5. Hyperparameters used when training the six detection algorithms based on the LDE dataset.

3.2. Runtime Performance Evaluation Methodology

The performance evaluation was performed during various sessions (i.e., evaluation sessions). During each session, a trained ML model is integrated into the RDE component and its performance is evaluated during runtime. More specifically, we followed the steps described below during each evaluation session:

- It is ensured that all the components of the IoMT testbed are up and running.

- One detection algorithm is selected from Table 5, along with its hyperparameter set. The “LDE dataset” is used to train a corresponding ML model that is then integrated into the “detection engine” module of the RDE component of the AIDS.

- The RDE component is executed on the Raspberry Pi 4 device acting as a gateway.

- The MDA component is executed on the Raspberry Pi 4 device acting as a sensor device.

- We measure the CPU usage and memory usage of the MDA component on the IoMT device hosting it by executing a custom bash script (i.e., the “cpu-mem usage” script) on the IoMT device during runtime.

- We measure the CPU usage and memory usage of the RDE component on the gateway by executing the “cpu-mem usage” script on the gateway during runtime.

- We launch attacks against the IoMT device hosting the MDA component by executing custom scripts.

- The successful (or unsuccessful) detection of the launched attacks is verified by checking both the logs generated by the attack scripts during execution as well as the internal logs produced by the AIDS.

At this point, it is worth describing how attacks were launched against the IoMT device hosting the MDA component. During the evaluation, we created artificial resource consumption on the IoMT device of the testbed by executing the stress-ng tool [36]. This artificial resource consumption replicates a scenario in which an adversary executes an attack that exhausts the computational resources of the IoMT device. Following the stress-ng documentation in [37], we executed the tool on the IoMT device using the following two commands during the evaluation sessions. These two commands were used to assess the performance of the implemented AIDS against attacks included in the training dataset of the ML model.

| stress-ng --cpu 1 --vm 1 --vm-bytes 512M |

| stress-ng --cpu 2 --vm 2 --vm-bytes 512M |

In addition, during evaluation sessions, the following new commands were used to assess the performance of the implemented AIDS against attacks that were not included in the training dataset of the ML model.

| stress-ng --matrix 4 --matrix-size 64 |

| stress-ng --vm 2 --vm-bytes 2G --mmap 2 --mmap-bytes 2G |

| stress-ng --timer 32 --timer-freq 1000000 |

During the evaluation, each command was executed for a certain period of time (e.g., 4 min), and these executions were interleaved with periods in which no command was executed. The purpose of using two different groups of commands is to measure the performance of the AIDS in detecting both known and unknown attack patterns.
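The interleaving of attack periods and idle periods described above can be sketched as follows. This is only an illustrative Python outline, not the custom attack scripts used in the evaluation: the helper names (`build_schedule`, `run_schedule`) and the idle duration of 120 s are assumptions, while the 240 s attack duration mirrors the 4 min example given in the text. The sketch relies on the `--timeout` option of stress-ng to bound each run.

```python
import shlex
import subprocess
import time

# Commands quoted in the text: the first group matches attack patterns
# present in the training dataset, the second group does not.
KNOWN_ATTACKS = [
    "stress-ng --cpu 1 --vm 1 --vm-bytes 512M",
    "stress-ng --cpu 2 --vm 2 --vm-bytes 512M",
]
UNKNOWN_ATTACKS = [
    "stress-ng --matrix 4 --matrix-size 64",
    "stress-ng --vm 2 --vm-bytes 2G --mmap 2 --mmap-bytes 2G",
    "stress-ng --timer 32 --timer-freq 1000000",
]


def build_schedule(commands, attack_s=240, idle_s=120):
    """Return (label, argv, seconds) steps that alternate idle and attack periods."""
    schedule = []
    for command in commands:
        schedule.append(("idle", None, idle_s))
        # Bound the run time of each stressor with stress-ng's --timeout option.
        argv = shlex.split(command) + ["--timeout", f"{attack_s}s"]
        schedule.append(("attack", argv, attack_s))
    schedule.append(("idle", None, idle_s))
    return schedule


def run_schedule(schedule):
    """Execute the schedule, logging the start time of every step."""
    for label, argv, seconds in schedule:
        started = time.strftime("%H:%M:%S")
        print(f"{started} {label} for {seconds}s: {argv}")
        if argv is None:
            time.sleep(seconds)  # idle period: no command is executed
        else:
            subprocess.run(argv, check=False)  # returns once the timeout expires
```

On a device with stress-ng installed, `run_schedule(build_schedule(KNOWN_ATTACKS + UNKNOWN_ATTACKS))` would run the five commands in turn. The printed timestamps could then be compared with the logs produced by the AIDS to check which attack periods were detected.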

3.3. Runtime Performance Evaluation of Detection Algorithms

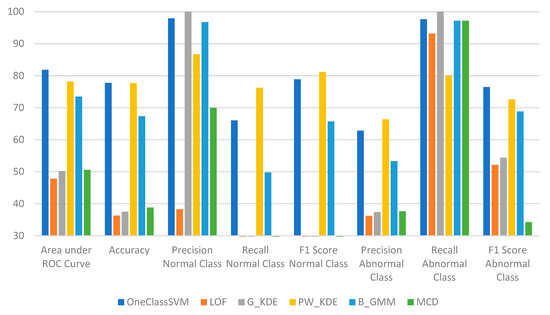

As mentioned in Section 3.1, the six detection algorithms are integrated into the “detection engine” module of the RDE component. The runtime evaluation results of the six detection algorithms, when measured during various evaluation sessions, are presented in Table 6 and Figure 5.

Table 6.

Runtime evaluation results of the six detection algorithms when integrated into the “Detection Engine” module of the RDE component of the AIDS.

Figure 5.

Runtime evaluation results of the six detection algorithms when integrated into the “Detection Engine” module of the RDE component of the AIDS.

The runtime evaluation results indicate that, among the six algorithms tested, LOF, G_KDE, and MCD perform poorly, which suggests that these models were overfitted to the training dataset. On the other hand, OCSVM, PW_KDE, and B_GMM demonstrate relatively good performance, effectively distinguishing between attack incidents and non-attack incidents. Even though the evaluation metrics of these three algorithms indicate that overfitting may also have occurred, they still exhibit adequate performance overall.
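To make explicit how per-class metrics of this kind (e.g., recall on the abnormal class) can be derived from runtime decisions, the following Python sketch computes precision, recall, and F1-score for a chosen class. It assumes the common labelling convention of +1 for normal records and -1 for abnormal records; the function names and the example labels are hypothetical and are not taken from the evaluation sessions.

```python
def confusion_counts(y_true, y_pred, positive=-1):
    """Count TP, FP, FN, TN with `positive` as the class of interest."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall_f1(y_true, y_pred, positive=-1):
    """Precision, recall, and F1-score for the `positive` class."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2.0 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical runtime labels: six non-attack records followed by four attack records.
y_true = [1, 1, 1, 1, 1, 1, -1, -1, -1, -1]
y_pred = [1, 1, 1, 1, -1, -1, -1, -1, -1, 1]

abnormal_metrics = precision_recall_f1(y_true, y_pred, positive=-1)
normal_metrics = precision_recall_f1(y_true, y_pred, positive=1)
```

Reporting the metrics separately for the abnormal and the normal class matters here because a detector that flags almost everything as an attack can reach a high recall on the abnormal class while performing poorly on the normal class.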

3.4. Runtime CPU and Memory Usage Measurements

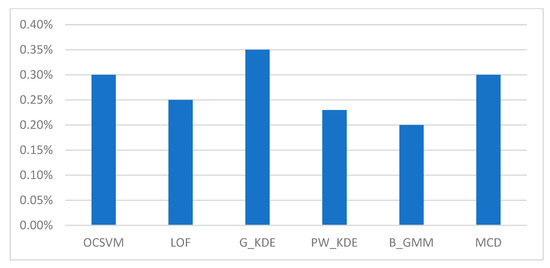

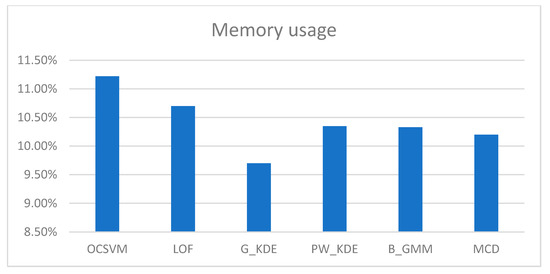

We executed the “cpu-mem usage” script in two scenarios: (a) on the gateway while the RDE component was running, and (b) on the IoMT device while the MDA component was running. The CPU and memory consumption of the RDE component during runtime on the gateway, for the different detection algorithms, is shown in Table 7 and depicted in Figure 6 and Figure 7.

Table 7.

CPU and Memory consumption of the RDE component for different detection algorithms.

Figure 6.

CPU consumption of the RDE component for different detection algorithms.

Figure 7.

Memory consumption of the RDE component for different detection algorithms.

In addition, the CPU and memory usage of the MDA component on the IoMT device hosting it were approximately 0.01% and 0.05%, respectively. The measurements taken during the runtime operation of the AIDS indicate that the implemented RDE component requires a minimal percentage (i.e., less than 1%) of the available CPU resources and a moderate percentage of the available memory. The computational cost of the MDA component, which is designed for resource-constrained IoMT devices, is even lower (i.e., less than 0.1% for both CPU and memory). Furthermore, as shown in Table 7, the runtime resource requirements of the RDE component are not significantly affected by the type of algorithm used in the “detection engine” module. Therefore, the implemented AIDS represents a lightweight solution for detecting intrusions targeting resource-constrained IoMT devices.

4. Comparison with Existing Works

As mentioned in the introduction, currently, as far as we are aware, only a limited number of AIDSs (i.e., [10,11,12,13,14,15,16]) exist in the literature for protecting resource-limited IoMT devices within IoMT networks. In addition, only two of them (i.e., [13,14]) appear to have been implemented. However, they have not been evaluated during runtime. In this context, our work is compared only to the works in [13,14].

Firstly, in [13], a simulated topology is used to generate datasets to train only one OCSVM model for identifying cyberattacks. The performance of the trained OCSVM model was measured using the recall metric, which ranged from 60.8% to 93.4% for different types of attacks (i.e., rank attacks, version number modification attacks, and flooding attacks). In comparison, in our work, as mentioned in Section 3.3, the three best-performing models (i.e., OCSVM, PW_KDE, and B_GMM) showed better performances, with values ranging from 80% to 97% for the corresponding recall metric (i.e., recall on abnormal class) for different types of attacks (i.e., DoS attacks, CPU exhaustion attacks, and memory exhaustion attacks).

On the other hand, in [14], a smart healthcare testbed is used to generate two datasets with the purpose of training several supervised and unsupervised ML algorithms for identifying cyberattacks (i.e., man-in-the-middle attacks and DoS attacks). This work and the work in [14] are comparable only in the detection of DoS attacks. Table 8 compares (a) the performance evaluation metrics for the best supervised model (i.e., random forest, RF) from the work in [14] and (b) the runtime evaluation metrics for the best novelty detection model (i.e., OCSVM) presented in Section 3.3 of this manuscript.

Table 8.

Comparison of metrics for DoS attacks with work in [14].

Table 8 shows that the RF model from the work in [14] performs better than the novelty detection model of this work. This is an expected outcome, as conventional supervised ML algorithms, such as RF, focus on classifying a new record into one of the classes for which they have been trained [17]. Conventional classification ML algorithms can make accurate predictions when a new record belongs to one of the classes represented in the training dataset. In contrast, they cannot make accurate predictions when a new record belongs to a class that is not represented in the training dataset.

On the other hand, novelty detection and outlier detection algorithms are trained mostly on records from a single known class. This, in turn, means that novelty detection and outlier detection algorithms can assess whether a new record is similar to the training records or not [17]. In essence, the main advantage of using novelty detection and outlier detection algorithms in this work is their ability to detect both known and unknown attacks (i.e., unknown to the training data).
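The idea of training on a single known class and then assessing the similarity of new records can be illustrated with a minimal Gaussian kernel density estimate written in plain Python. This is a simplified sketch under stated assumptions, not the implementation used in this work: the two features (CPU and memory usage percentages), the bandwidth, the contamination value, and all function names are hypothetical.

```python
import math


def kde_density(point, training, bandwidth):
    """Gaussian kernel density estimate at `point`, built from the training records."""
    dim = len(point)
    norm = (2.0 * math.pi) ** (dim / 2.0) * bandwidth ** dim
    total = 0.0
    for sample in training:
        sq_dist = sum((a - b) ** 2 for a, b in zip(point, sample))
        total += math.exp(-sq_dist / (2.0 * bandwidth ** 2))
    return total / (len(training) * norm)


def fit_threshold(training, bandwidth, contamination=0.05):
    """Density threshold below which roughly `contamination` of training records fall."""
    scores = sorted(kde_density(p, training, bandwidth) for p in training)
    index = int(contamination * len(scores))
    return scores[index]


def predict(point, training, bandwidth, threshold):
    """Return 1 if the record resembles the training records, otherwise -1."""
    density = kde_density(point, training, bandwidth)
    return 1 if density >= threshold else -1


# Hypothetical normal-only training records: (CPU %, memory %) pairs.
normal_records = [(10.0 + i, 30.0 + j) for i in range(5) for j in range(4)]
bandwidth = 2.0  # assumed kernel width
threshold = fit_threshold(normal_records, bandwidth)

typical = predict((12.0, 31.0), normal_records, bandwidth, threshold)
exhausted = predict((95.0, 90.0), normal_records, bandwidth, threshold)
```

Because the model only describes what normal records look like, any record that falls in a low-density region is flagged, whether or not a similar attack was ever observed during training. The same property means that the choice of threshold directly trades missed attacks against false alarms.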

This work advances the state-of-the-art in two ways. Firstly, our proposed AIDS is evaluated during runtime in contrast to the state-of-the-art AIDSs that do not include a runtime performance evaluation. Secondly, we use novelty detection and outlier detection algorithms for detecting both known and unknown (i.e., unknown to the training data) attack patterns. In parallel, the computational costs of the implemented AIDS are kept at low levels, meaning that the proposed AIDS constitutes a lightweight security solution that is suitable for IoMT networks and their resource-constrained IoMT devices.

5. Conclusions

In this paper, an AIDS specifically designed for resource-constrained devices within IoMT networks was proposed. The design of the proposed lightweight AIDS was presented along with implementation details. The proposed AIDS leverages novelty detection and outlier detection algorithms instead of conventional classification algorithms. The runtime performance of the proposed AIDS was evaluated using an IoMT testbed and dataset developed in our previous works [18,19]. The runtime evaluation results showed that the implemented AIDS can achieve (a) enhanced detection performance against both known and unknown attack patterns and (b) minimal computational costs, with CPU and memory usage for the MDA component remaining below 0.1%.

For future work, we aim to investigate additional outlier detection algorithms that are available in the PyOD library [35] for intrusion detection purposes. In addition, we plan to investigate (a) various combinations (i.e., ensembles) of novelty detection algorithms and/or outlier detection algorithms, (b) deep learning (DL) algorithms, and (c) the possibility of integrating novelty/outlier detection algorithms with conventional classification ML algorithms. The goal is to improve the intrusion detection capabilities of the proposed AIDS while keeping the computational cost low, since the detection engine needs to run on a resource-constrained gateway in an IoMT network (e.g., a Raspberry Pi 4 device). The newly explored detection algorithms could be integrated into the detection engine of the proposed AIDS, allowing us to evaluate their performance in detecting intrusions and their computational overhead during runtime.

Furthermore, we aim to experiment with various feature importance and feature reduction methods in order to discover the important features among the full set of features used in the training and decision-making processes. Through these experiments, we will be able to pinpoint combinations of features that can be used in the training and decision-making processes to enhance the performance of the proposed AIDS in detecting intrusions during runtime.

Moreover, we intend to extend the implementation of the MDA component so that it can operate on IoMT devices without requiring a Linux-based OS. For example, popular IoT OSes such as Contiki OS [38] and Zephyr RTOS [39] could be supported. In parallel, the IoMT testbed from our previous work [18] needs to be extended to include devices supporting a wide range of OSes, such as integrating Tmote Sky devices [40] running the Contiki OS.

Author Contributions

Conceptualization, G.Z., G.M., K.P. and J.R.; methodology, G.Z. and G.M.; software, G.Z.; validation, G.Z. and G.M.; investigation, G.Z. and G.M.; writing—original draft preparation, G.Z. and G.M.; writing—review and editing, G.Z., G.M., K.P. and J.R.; visualization, G.Z. and G.M.; supervision, G.M., K.P. and J.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Rodrigues, J.J.P.C.; Segundo, D.B.D.R.; Junqueira, H.A.; Sabino, M.H.; Prince, R.M.I.; Al-Muhtadi, J.; De Albuquerque, V.H.C. Enabling Technologies for the Internet of Health Things. IEEE Access 2018, 6, 13129–13141.

2. Papaioannou, M.; Karageorgou, M.; Mantas, G.; Sucasas, V.; Essop, I.; Rodriguez, J.; Lymberopoulos, D. A Survey on Security Threats and Countermeasures in Internet of Medical Things (IoMT). Trans. Emerg. Telecommun. Technol. 2020, 33, e4049.

3. Islam, S.M.R.; Kwak, D.; Kabir, M.H.; Hossain, M.; Kwak, K.S. The Internet of Things for Health Care: A Comprehensive Survey. IEEE Access 2015, 3, 678–708.

4. Fengou, M.-A.; Mantas, G.; Lymberopoulos, D.; Komninos, N.; Fengos, S.; Lazarou, N. A New Framework Architecture for Next Generation E-Health Services. IEEE J. Biomed. Health Inform. 2013, 17, 9–18.

5. Makhdoom, I.; Abolhasan, M.; Lipman, J.; Liu, R.P.; Ni, W. Anatomy of Threats to the Internet of Things. IEEE Commun. Surv. Tutor. 2019, 21, 1636–1675.

6. Zhang, M.; Raghunathan, A.; Jha, N.K. Trustworthiness of Medical Devices and Body Area Networks. Proc. IEEE 2014, 102, 1174–1188.

7. Essop, I.; Ribeiro, J.C.; Papaioannou, M.; Zachos, G.; Mantas, G.; Rodriguez, J. Generating Datasets for Anomaly-Based Intrusion Detection Systems in IoT and Industrial IoT Networks. Sensors 2021, 21, 1528.

8. Alsubaei, F.; Abuhussein, A.; Shiva, S. Security and Privacy in the Internet of Medical Things: Taxonomy and Risk Assessment. In Proceedings of the 2017 IEEE 42nd Conference on Local Computer Networks Workshops, LCN Workshops 2017, Singapore, 9–12 October 2017; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2017; pp. 112–120.

9. Asharf, J.; Moustafa, N.; Khurshid, H.; Debie, E.; Haider, W.; Wahab, A. A Review of Intrusion Detection Systems Using Machine and Deep Learning in Internet of Things: Challenges, Solutions and Future Directions. Electronics 2020, 9, 1177.

10. Newaz, A.I.; Sikder, A.K.; Rahman, M.A.; Uluagac, A.S. HealthGuard: A Machine Learning-Based Security Framework for Smart Healthcare Systems. In Proceedings of the 2019 6th International Conference on Social Networks Analysis, Management and Security, SNAMS 2019, Granada, Spain, 22–25 October 2019; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2019; pp. 389–396.

11. Schneble, W.; Thamilarasu, G. Attack Detection Using Federated Learning in Medical Cyber-Physical Systems. In Proceedings of the 28th International Conference on Computer Communication and Networks (ICCCN), Valencia, Spain, 29 July–1 August 2019; Volume 29, pp. 1–8.

12. Thamilarasu, G.; Odesile, A.; Hoang, A. An Intrusion Detection System for Internet of Medical Things. IEEE Access 2020, 8, 181560–181576.

13. Said, A.M.; Yahyaoui, A.; Abdellatif, T. Efficient Anomaly Detection for Smart Hospital IoT Systems. Sensors 2021, 21, 1026.

14. Zubair, M.; Ghubaish, A.; Unal, D.; Al-Ali, A.; Reimann, T.; Alinier, G.; Hammoudeh, M.; Qadir, J. Secure Bluetooth Communication in Smart Healthcare Systems: A Novel Community Dataset and Intrusion Detection System. Sensors 2022, 22, 8280.

15. Nandini, A.; Behera, A.; Mishra, T.K. Detecting Threats in IoT Based Healthcare Using Machine Learning Algorithms. In Proceedings of the 2024 IEEE 9th International Conference for Convergence in Technology (I2CT), Pune, India, 5–7 April 2024; pp. 1–6.

16. Zukaib, U.; Cui, X.; Zheng, C.; Hassan, M.; Shen, Z. Meta-IDS: Meta-Learning-Based Smart Intrusion Detection System for Internet of Medical Things (IoMT) Network. IEEE Internet Things J. 2024, 11, 23080–23095.

17. Pimentel, M.A.F.; Clifton, D.A.; Clifton, L.; Tarassenko, L. A Review of Novelty Detection. Signal Process. 2014, 99, 215–249.

18. Zachos, G.; Mantas, G.; Essop, I.; Porfyrakis, K.; Bastos, J.M.C.S.; Rodriguez, J. An IoT/IoMT Security Testbed for Anomaly-Based Intrusion Detection Systems. In Proceedings of the 2023 IFIP Networking Conference (IFIP Networking), Barcelona, Spain, 12–15 June 2023; pp. 1–6.

19. Zachos, G.; Mantas, G.; Porfyrakis, K.; Bastos, J.M.C.S.; Rodriguez, J. Anomaly-Based Intrusion Detection for IoMT Networks: Design, Implementation, Dataset Generation and ML Algorithms Evaluation. IEEE Access 2024. under review.

20. ITU Telecommunication Standardization Sector (ITU-T). Recommendation ITU-T Y.2060: Overview of the Internet of Things. Available online: https://www.itu.int/ITU-T/recommendations/rec.aspx?rec=y.2060 (accessed on 20 October 2022).

21. Ubuntu 20.04.5 LTS (Focal Fossa). Available online: https://releases.ubuntu.com/focal/ (accessed on 20 March 2023).

22. Eclipse Paho|The Eclipse Foundation. Available online: https://eclipse.dev/ (accessed on 8 January 2024).

23. Proc(5)—Linux Manual Page. Available online: https://man7.org/linux/man-pages/man5/proc.5.html (accessed on 22 February 2024).

24. Proc_stat(5)—Linux Manual Page. Available online: https://man7.org/linux/man-pages/man5/proc_stat.5.html (accessed on 23 August 2024).

25. Proc_meminfo(5)—Linux Manual Page. Available online: https://man7.org/linux/man-pages/man5/proc_meminfo.5.html (accessed on 23 August 2024).

26. Proc_diskstats(5)—Linux Manual Page. Available online: https://man7.org/linux/man-pages/man5/proc_diskstats.5.html (accessed on 23 August 2024).

27. JSON-Java. Available online: https://stleary.github.io/JSON-java/index.html (accessed on 13 November 2024).

28. Apache HttpComponents. Available online: https://hc.apache.org/ (accessed on 13 November 2024).

29. Apache Commons IO. Available online: https://commons.apache.org/proper/commons-io/ (accessed on 13 November 2024).

30. Argparse4j. Available online: https://argparse4j.github.io/ (accessed on 13 November 2024).

31. Scikit-Learn: Novelty and Outlier Detection. Available online: https://scikit-learn.org/stable/modules/outlier_detection.html (accessed on 13 November 2024).

32. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

33. Scikit-Learn. Available online: https://scikit-learn.org/stable/ (accessed on 10 November 2021).

34. Zhao, Y.; Nasrullah, Z.; Li, Z. PyOD: A Python Toolbox for Scalable Outlier Detection. J. Mach. Learn. Res. 2019, 20, 1–7.

35. Pyod 2.0.2 Documentation. Available online: https://pyod.readthedocs.io/en/latest/index.html (accessed on 9 September 2024).

36. GitHub—ColinIanKing/Stress-Ng. Available online: https://github.com/ColinIanKing/stress-ng (accessed on 3 September 2024).

37. Kernel/Reference/Stress-Ng—Ubuntu Wiki. Available online: https://wiki.ubuntu.com/Kernel/Reference/stress-ng (accessed on 3 September 2024).

38. Oikonomou, G.; Duquennoy, S.; Elsts, A.; Eriksson, J.; Tanaka, Y.; Tsiftes, N. The Contiki-NG Open Source Operating System for Next Generation IoT Devices. SoftwareX 2022, 18, 101089.

39. Zephyr Project Documentation—Zephyr Project Documentation. Available online: https://docs.zephyrproject.org/latest/index.html (accessed on 24 January 2023).

40. Moteiv Corporation Tmote Sky—Ultra Low Power IEEE 802.15.4 Compliant Wireless Sensor Module. Available online: http://www.crew-project.eu/sites/default/files/tmote-sky-datasheet.pdf (accessed on 6 September 2021).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).