Abstract

Multiview clustering (MVC) is a proven, effective approach to boosting the various downstream tasks given by unlabeled data. In contemporary society, domain-specific multiview data, such as multiphase postoperative liver tumor contrast-enhanced computed tomography (CECT) images, may be vulnerable to exploitation by illicit organizations or may not be comprehensively collected due to patient privacy concerns. Thus, these can be modeled as incomplete multiview clustering (IMVC) problems. Most existing IMVC methods have three issues: (1) most methods rely on paired views, which are often unavailable in clinical practice; (2) directly predicting the features of missing views may omit key features; and (3) recovered views still have subtle differences from the originals. To overcome these challenges, we proposed a novel framework named fuzzy clustering combined with information theory arithmetic based on feature reconstruction (FCITAFR). Specifically, we propose a method for reconstructing the characteristics of prevailing perspectives for each sample. Based on this, we utilized the reconstructed features to predict the missing views. Then, based on the predicted features, we used variational fuzzy c-means clustering (FCM) combined with information theory to learn the mutual information among views. The experimental results indicate the advantages of FCITAFR in comparison to state-of-the-art methods, on both in-house and external datasets, in terms of accuracy (ACC) (77.5%), normalized mutual information (NMI) (37.9%), and adjusted rand index (ARI) (29.5%).

1. Introduction

Liver tumors represent a significant contributor to mortality and are associated with various diseases, posing a considerable threat to human health [1,2,3]. A widely utilized method for the postoperative diagnosis of liver tumors is multiphase contrast-enhanced computed tomography (CECT) [4]. Compared with single-view methods, in clinical practice, multiphase views provide consistent and complementary information from the same patient [5,6], thus providing more precision regarding postoperative liver tumor type. Liver tumor CECT images of a patient can be divided into four phases: non-contrast (NC), arterial phase (AP), portal venous (PV), and delay phase (DP) [7,8]. Judging tumor type from CECT images is a laborious and time-wasting task for clinicians, meaning that a large number of these images are not “gold standard”. Moreover, some medical workers do not have enough experience. Hence, to further utilize such unlabeled multiview data, it is essential to introduce an unsupervised method, such as multiview clustering (MVC) [9,10,11,12]. Unfortunately, due to data theft and patient privacy, it is hard to collect complete data during transmission.

To solve the above-mentioned problems, plenty of clinical scenarios have been pro- posed with the aim of exploring information from incomplete data without labeling, which are set as incomplete multiview clustering [13,14,15] (IMVC). IMVC aims to address the issue of heterogeneous features across complete and incomplete views, where the main insight is to predict missing view features. There are two categories of IMVC methods in clinical diagnosis: traditional and deep learning IMVC. Traditional IMVC methods can be categorized as multi-kernel learning [16], matrix factorization [17,18], tensor factorization [19,20] or graph learning [21,22,23,24]. These methods are restricted in obtaining latent features, which increase computationally complex calculations. Other than traditional IMVC methods, deep IMVC methods make rich use of the latent features to achieve missing view reconstruction [25,26,27,28,29]. Although these methods present acceptable performances, the following issues remain:

- These methods rely significantly on complete instances (both views exist), which are often unavailable in clinical practice. For instance, in postoperative liver tumor diagnosis, few patients willingly provide complete views, making it hard to obtain complete samples.

- Direct feature prediction may not highlight key features; then, easily predicted error features affect the clustering performance [25,26,30,31,32], especially in the absence of paired samples.

- Due to noise interference, recovered missing views still have subtle difference from the originals [33,34]. With clustering tasks without labels, subtle differences may also lead to error clusters in samples.

Thus, it is hard to accomplish multiview clustering with fully incomplete information and in all instances missing at least one view.

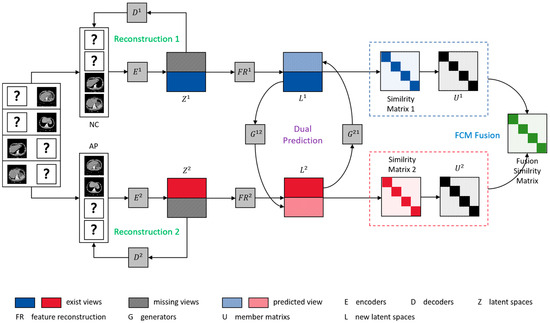

In this study, we introduce a framework called fuzzy clustering combined with information theory arithmetic based on feature reconstruction (FCITAFR), as indicated in Figure 1. The framework primarily comprises two modules: a feature reconstruction prediction module and a fuzzy clustering contrast learning module. More specifically, we firstly reconstruct and correlate features of the existing views for all samples, aiming to optimize the latent features and enhance the relations between them. Based on the reconstructed features of views, we utilize a variational fuzzy c-means clustering (FCM) arithmetic to learn the membership among samples and then combine this with information theory [31]. We obtained the Euclidean distance among views and combined this with the membership matrix to obtain the similarity between single views. Then, we obtained the fusion similarity between different views combined with information theory, thus ascertaining consistence information among views. The major contributions of our work are as follows:

Figure 1.

The framework of FCITAFR, where “?” donates the missing views. The within-view reconstruction loss uses encoders to obtain the latent representation . Then, via feature reconstruct prediction loss , in order to reconstruct the latent representation of existing views to obtain new latent representation , the generators G predict the missing views of each sample. Finally, via fuzzy clustering with information theory loss , in order to obtain the membership matrix of each view, the fusion similarity matrix among views can be obtained via information theory.

- Different from existing predicting methods, we reconstruct the features of existing views to enhance the relations among features.

- We propose a novel arithmetic that utilizes variational FCM arithmetic combined with information theory to learn the mutual information among views.

- The experimental results from two multiphase liver tumor datasets demonstrate that FCITAFR outperforms the state-of-the-art IMVC methods in terms of ACC (77.5%), NMI (37.9%), and ARI (29.5%).

2. Related Work

2.1. IMVC Methods

Traditional IMVC methods are categorized as multiply kernel learning, graph learning, and tensor factorization. By using the complete part of the datasets, the multiply kernel IMVC method creates the kernel matrix of the incomplete part. In order to regularize the clustering matrix, Liu et al. [35] integrated past information and gathered each imperfect base matrix produced by missing views with a clustering matrix. Graph learning-based IMVC methods gather patterns by extracting graph structure information. Chao et al. [24] proposed instance-level fusion and high-confident guiding to obtain complementary information; then, instance-level contrastive learning was utilized to obtain consistent information. Tensor factorization-based IMVC methods seek to incorporate tensor constraints to characterize the high-order correlations among tensors and elucidate the internal structure associated with cross-view data. Li et al. [36] proposed an IMVC method based on tensors to disperse multiview block-diagonal structure knowledge among views.

Deep IMVC methods, of which contrastive learning is one of the most significant, can learn the deep features of views. Xu et al. [30] proposed an adaptive feature projection-based deep IMVC method, where the auto-encoders were modeled to reconstruct missing data. Lin et al. [31] proposed a deep contrastive and dual-prediction framework to learn the consistence mutual information among different views via contrastive learning and minimized conditional entropy. Zhang et al. [29] proposed a self-attention-based encoder network and obtained common information by learning feature sub-vectors.

2.2. IMVC for Medical Image Analysis

In clinical practice, the numbers of paired samples are seriously limited in multiview learning. Some methods remove incomplete samples from the dataset [26]; however, removing data from medical datasets is not optimal, since incomplete samples still possess valuable information. Recently, recovering missing data is the mainstream IMVC method in medical practice. In terms of traditional methods, Wen et al. [16] proposed a framework for authenticating involuntary multiple muscle tics in children based on multiview (instance) and multiclass (clusters) features. Peng et al. [17] proposed a group sparse algorithm to combine non-negative matrix factorization and an orthogonal subspace. Different from traditional methods, deep learning methods aim to use encoders to represent the latent features of views, learning the deep relationships between them. Pan et al. [37] proposed a statement framework based on the space constraint for diagnosing brain diseases from incomplete multiview neuroimages, addressing the lack of positron emission tomography (PET) in patients with only brain MRI.

Nevertheless, these methods may have more limitations. Although the key idea of other methods has directly predicted the features of missing views, they may ignore some features and make the missing view as a wrong clustering. In order to solve this problem, the motivation is to design an arithmetic to reconstruct the features of instances, which can adequately reconstruct the features that are easy to ignore. Different from the aforementioned arithmetic, FCITAFR reconstructs the features of existing views before predicting the features of missing views. Moreover, we utilize the fuzzy clustering arithmetic combined with the information theory to learn the common features of different views after they are predicted.

3. Methodology

3.1. Notations

To facilitate the discussion, we let the number of views be 2. denotes the -th view with being the number of instances, and each has dimensions. and are denoted as the -th view with being the number of samples, and being the dimensions in latent spaces and feature reconstruction, respectively. Here, further notations and descriptions are listed in Table 1.

Table 1.

Notations with corresponding descriptions.

Based on the aforementioned definitions, we present the objective’s comprehensive loss function:

where , and are fuzzy clustering with information loss, within-view reconstruction loss, and feature reconstruction prediction loss, respectively. The parameters and are balance parameters of loss functions; initially, we simply set them as 0.1.

3.2. Within-View Reconstruction

We pass all views through autoencoders to obtain the latent feature representation by

where denotes the -th sample in . Meanwhile, the latent representation in the -th view for all instances is given by

3.3. Feature Reconstruction Prediction

Based on the latent representation obtained by , we propose a methodology for reconstructing the characteristics of samples, seeking to take into account the interrelationships between features and among various samples within a singular perspective. The primary objective is to determine the weights of all features in accordance with the specified indicators [38]. When acquiring latent representations from each perspective, the latent spaces encompass various samples and distinct characteristics. More specific, consists of samples, with each sample characterized by features. The -th sample indicates , and the -th feature indicates . We employ the entropy weighting method to analyze the obtained latent features, associating them with each unique characteristic of every sample, and subsequently generate a novel latent representation.

Firstly, for the feature of each sample, we decline the difference of the distribution. We gathered the average of features for all samples, and obtained the distribution matrix as follows:

where donates the average of features for all samples.

Next, we analyzed the relationships between features across various samples, specifically focusing on the -th column of . We computed the entropy value to integrate the different features:

where , and donates the averages of all samples for all corresponding features.

In conclusion, it is essential to calculate the entropy coefficient for each column and subsequently multiply the aggregate coefficients by the initial latent representation . The formulas for these calculations are as follows:

After obtaining the reconstructed latent representation , we utilized generators to predict the missing views. Our main task was to connect all features of each sample. In order to predict the features of the other view, the importance is not to ignore the key features. The loss function can be obtained as follows:

where and are generators with a multilayer, fully-connected network.

3.4. Fuzzy Clustering with Information Theory

To learn more mutual information among views, we used variational fuzzy c-means clustering (FCM) [39], combined with information theory [31,32], to obtain the relationship among samples and obtain the fusion similarity between views. We used all samples as the clustering centers to determine the degree of membership for the other samples. First, we obtained the similarity matrix of single views, which is formulated as follows:

In this function, donates the time complexity matrix of the algorithm, which is calculated by for each view. donates the feature matrix of each view after feature reconstruction, which consists of number of samples and, each sample has features. In order to determine the similarity among all samples in each view, we calculated the feature matrix () times the transposed feature matrix (). As a result, we can obtain the time complexity matrix. And denotes the Euclidean distance between the -th and -th samples , and are variational samples. donates the membership matrix, which stands for the degree of membership among samples; a larger number means more similarity. Then, the membership matrix among views is calculated as follows:

where represents the membership factor, which is set as 2 in this study.

Subsequently, we enhanced the mutual information between by integrating it with information theory. The final layer of the encoder, which is based on the softmax function [33], can be understood as representing a probability distribution that facilitates clustering. Thus, the cross-view joint probability distribution matrix is calculated by . Let and become the marginal probability distributions, which denote the -th row and the -th column on . The function can be represented as follows:

where donates the entropy balancing parameter, which is set to 9. The implementation details of FCITAFR are indicated in Algorithm 1.

| Algorithm 1 Fuzzy clustering combined with information theory arithmetic based on feature reconstruction (FCITAFR) |

| Input: Incomplete dataset . Output: Total loss . 1: Initialize overall loss , epoch number is , the number of clusters ; the parameters , . 2: for do 3: Compute with-in view reconstruction loss by (2). 4: Compute feature reconstruct prediction loss by (8). 5: Compute fuzzy clustering with information theory loss by (11). 6: Compute total loss by (1) and update balance parameters. 7: 8: return 9: Update parameter . 10: Update parameter . |

4. Experiments

4.1. Experimental Setting

4.1.1. Dataset

We performed experiments using both our internal dataset and external multiview liver tumor CECT dataset, with all volumes obtained from Philips iCT 256 scanners using NC and AP imaging.

- Zhongda includes 82 patients with 328 multiphase CT scans obtained from Zhongda Hospital, Southeast University. Each volume has an in-plane dimension of 512 × 512, with spacing varying between 0.601 mm and 0.851 mm. The number of slices in the volumes varies from 36 to 139, with a slice spacing of 2.5 mm.

- Zheyi includes 475 patients with 1900 multiphase CT scans obtained from The First Affiliated Hospital of Zhejiang University. Each volume has an in-plane dimension of 512 × 512, with spacing varying between 0.560 mm and 0.847 mm. The number of slices in the volumes varies from 25 to 89, with a slice spacing of 3.0 mm.

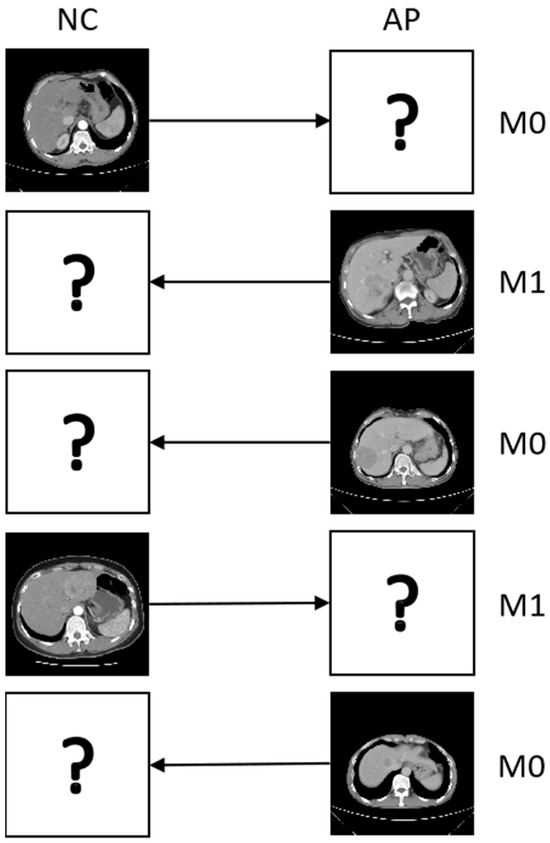

To ensure clustering validity, we retain 55 and 286 instances (for Zhongda and Zheyi, respectively) after removing samples with tiny tumors. These instances are annotated with two levels (M0 and M1) of microvascular invasion (MVI), which is a popular indicator of postoperative liver diagnosis for hepatocellular carcinoma (HCC) [40]. As shown in Figure 2, for the CECT images that have five instances, and each sample only has one view, which means all patients are missing one view. Here, we introduce the phases of NC and AP as the views for all the methods, with missing rates of used to validate the methods in incomplete multiview scenarios.

Figure 2.

Five instances of liver tumor CECT images from the different periods; “?” denotes a missing view and “→” denotes mapping the missing view.

4.1.2. Competitor

We compare FCITAFR with the state-of-the-art methods as follows:

- SNFR [29] considers feature reconstruction to learn common information, utilizing information theory to learn the mutual information based on the reconstructed feature.

- COMPLETER [31] learns the common information and constructs a contrastive prediction module to infer missing views.

- DCP [32] extends more than two views based on [31] and divides these into core-view and complete-view algorithms.

- Prolmp [33] is a dual contrastive learning-based IMVC that learns the relationship between the view-level and instance prototypes.

- SURE [34] considers the view-unaligned and missing problems.

4.1.3. Implementation Details and Metrics

We implement our approach using PyTorch 1.8.0 on an NVIDIA GeForce RTX 2080Ti GPU (NVIDIA, Santa Clara, CA, USA). The Adam optimizer is employed, with an initial learning rate of 0.0001 applied across all datasets. Initially, we set the batch size to 256 and the training epoch to 500 for all datasets. We set up the two trade-off hyper-parameters and to 0.1 and 0.1, respectively. The evaluation metrics validate the cluster performances as follows: ACC, NMI, and ARI.

4.2. Comparison with State-of-the-Art

Table 2 indicates the clustering results of all methods with the different missing rates and compares with DCP under a missing rate of 100%. This can explain the clustering performances in different missing rates and highlights better clustering performances in high missing rates compare with competitors. High missing rates are more common in clinical practices, and a robust framework can suit them, where the framework can obtain a better clustering performance in high missing rates, called robust framework. Note that “-” means that the algorithm cannot be operated smoothly due an to out-of-memory error.

Table 2.

A clustering performance comparison of two liver tumor datasets. The 1st/2nd best results are in bold and underlined.

Based on the statistical analysis of the results, the generated observation can be summarized as follows:

- (1)

- Compared with other methods, FCITAFR clustering performances can achieve the best results. For instance, in the low missing rates, such as 10%, FCITAFR achieves the best clustering performances on all datasets, where ACC improved 6.1% and 5.7% compared to the second-best method on Zhongda and Zheyi, respectively. Moreover, NMI improved obviously on Zhongda, which improved about 11% compared to the second-best one, and also improved about 3% on Zheyi. Furthermore, ARI improved about 2% and 9% on Zhongda and Zheyi, respectively. On the missing rate of 30%, mostly clustering performances obtained the best one on FCITAFR, especially on Zhongda, which improved about 10% to 20% of all clustering performances compared to the second-best one.

- (2)

- FCITAFR has significant advantages. All other deep learning methods, SNFR, COMPLETER, DCP, Prolmp and SURE, directly predict the features of missing views and may predict incorrect features, even with lower missing rates. FCITAFR further reconstructs the features of latent representations of existing views, which obtain the average of all features, and then utilizes the average to recalculate the original features. This informs the obvious efficacy of further reconstruction for the features of latent space.

- (3)

- With the increasing missing rate, although the clustering performance of FCITAFR declined, it is still outstanding compared to competitors. For example, on the missing rate of 50%, although some clustering performances obtained the second-best one, ACC improved about 1% on all datasets compared to the second-best method. On the other hand, with the missing rate of 70%, the clustering performances improved about 1% to 2% on Zheyi, and NMI improved about 7.5 on Zhongda compared to the second-best one. Our method suits the case of all instances missing one view. We compared with DCP with the missing rate of 100%, which improved about 2% for all performances compared to DCP.

4.3. Time Cost Comparison

The running time comparison between the Prolmp [33] and SURE [34] are indicated in Table 3. As shown in Table 3, our method is remarkably efficient compared to Prolmp and SURE on all datasets with the missing rate of 0.5. It may save about 10 s on Zhongda and save 1 min on Zheyi. Note that all methods run on the GPU.

Table 3.

Time cost comparison.

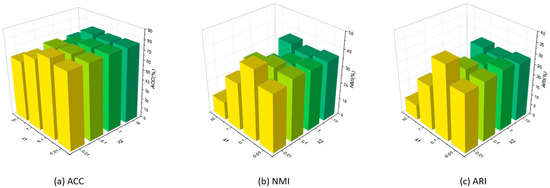

4.4. Parameter Analysis

To analyze the impact of trade-off hyper-parameters, we conducted a series of experiments with the missing rate set as 0.3 on Zhongda. and are set to intervals of . As shown in Figure 3, based on the statistical analysis, the following observations can be summarized:

Figure 3.

Parameter evaluation on Zhongda with a missing rate of 0.3.

- (1)

- When it is fixed, the performance will be worse when it is too large. For instance, when we set as 0.01, our method can obtain the best performances when fixed as all figures. However, when the parameter is increased, the clustering performance significantly declined when it set as 0.1, which declined about 3%, 5% and 6% of ACC, NMI and ARI, respectively. And when is set as larger, the clustering performances continually declined. This is because the feature reconstruction prediction loss is used, as predicted by the latent features, and when it is set too large, the structures of features will change more, where original features may be destroyed.

- (2)

- When is fixed, the performance will decline significantly when is too large or small. When we set as 0.1, our method can obtain the best performances when fixed as 0.01 and 0.1. When set as 0.01, although ACC moderately decreased, NMI and ARI decreased about 7% and 8%, respectively. On the other hand, when is fixed as 0.01, all clustering performances frequently declined. Moreover, when is fixed as other figures, fluctuates in different sets. This is because a small and a large trade-off parameter may destroy the robustness of the framework, which can cause the features to misregister the original features.

- (3)

- When both and are less than 0.1, the clustering performances will be worse when is 0.1. On the other hand, when both and are too large, the clustering performances will significantly decline.

Therefore, after analyzing the different sets of trade-off hyper-parameters, the value of and are suggested as and , respectively.

4.5. Ablation Studies

To further confirm the effectiveness of our approach, we performed ablation experiments on Zhongda with a missing rate of 0.3, as indicated in Table 4. A series of FCITAFR variants is modeled with different combinations of , and . We can observe that the performance declined sightly when one or two loss functions ablated; all loss functions play significant roles in the arithmetic. The statistical analysis can be shown as follows:

Table 4.

Effect of different loss functions on Zhongda with a missing rate of 0.3, “√” donates the loss function is participated in experiments.

- (1)

- The fuzzy clustering with information theory loss may be the most important loss function in the method. In the first line, with only , the clustering performances will decline by only about 1%. When this function is ablated, the clustering performance ACC is declined by about 3% to 6%. Such as in line 3, ACC, NMI, and ARI decreased about 6%, 4% and 10%, respectively, when is also ablated. This is because it will hardly ignore the significant features for each sample when this loss function is ablated, and also can learn the important features of views.

- (2)

- For with-in view reconstruction loss , many works demonstrate that autoencoders are widely used in unsupervised learning; autoencoder structure is helpful to avoid the trivial solution. Other loss function also needed to learn the latent representation from encoders by autoencoders. Overall, the aforementioned observations have verified the effectiveness of the proposed loss function in our method. In the third and the fifth line, the clustering performances declined about 2% to 5% when was ablated.

- (3)

- For feature reconstruction prediction loss , the feature reconstruction can change the features of views, which may improve the relationship by different features, and avoid ignoring some important features and margin features. Therefore, this loss function may improve the clustering performances. In addition, in the fourth line, ACC declined about 2% when was ablated, and NMI and ARI decrease more than 10% without . Furthermore, compared with line 4, ACC, NMI, and ARI are increased about 3%, 10%, and 7%, and it can be clearly seen that reconstructing the features of existing views is so significant. Obtaining the average of features about existing views can let the features equalize more, which can close the distance of different features.

Overall, the fuzzy clustering with information theory loss is the most significant loss function in this method, and have mutual promotion and also can cause a minor effect when only exists. And the other two loss functions also play an important role in this algorithm, since they may obtain a better clustering performance when they exist together than only exist alone.

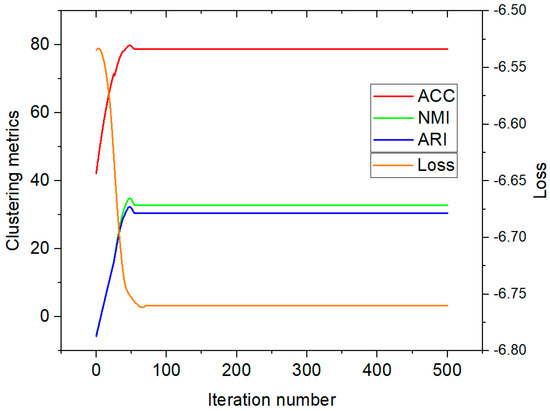

4.6. Convergence Analysis

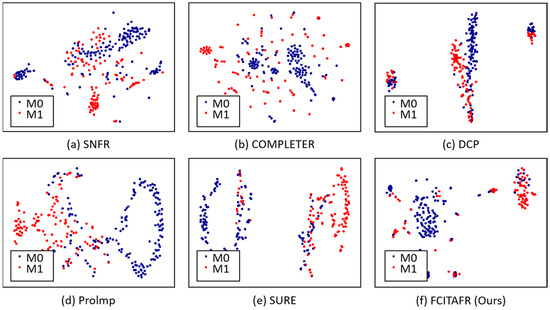

Furthermore, we visualized the latent space via t-SNE on Zheyi with the missing rate of 0.3, as shown in Figure 4. Based on the statistical analysis, the following observations can be summarized: (1) The clustering performance sharply increased during the first 75 epochs; this is because FCITAFR effectively learns the structures of the framework and then the features learn adequately. As a result, the clustering performance levels stay consistent. These findings indicate that the proposed method achieved training convergence. (2) The loss is significantly decreased during the first 75 epochs. This is because FCITAFR utilizes the latent features and reduces the gap between the original features and predicted features.

Figure 4.

Visualization of latent space via t-SNE on Zheyi with the missing rate of 0.3. With an epoch of 500, compared with other methods, FCITAFR constructed a more discriminative latent space.

To explore the convergence procedure of FCITAFR, we plotted the values of ACC, NMI, and ARI, and the total loss on Zhongda with the missing rate of 0.3 in the training stage, as shown in Figure 5. In comparison with the qualitative results of other approaches, our method shows an improved performance with more epochs, leading to a greater distinction between the features of the two different types.

Figure 5.

Convergence evaluation on Zhongda with a missing rate of 0.3.

5. Conclusions

In this paper, we propose a novel incomplete multiview clustering (IMVC) method with fully incomplete information. First, we built a feature reconstruction arithmetic to reconstruct the features of existing views, utilizing them to predict missing views. Then, we combined information with variational fuzzy c-means (FCM) clustering to learn the mutual information among views. The experimental findings show that FCITAFR outperforms the leading methods on both the internal and external datasets. Also, we analyzed the impact of trade-off hyper-parameters, and analyzed the effect of one parameter and both two parameters. Although our arithmetic obtained an outcome performance compared with one that was state-of-the-art, it only suited the multiview scene of two views, where multiview datasets in clinical experiments included more than two views; our method only can analyze the common and complementary information two-by-two. On the other hand, although our method is a time-saving arithmetic compared with the competitors during the time cost experiment, our method is not suited to the situation of more than two views; it is also waste of time, since the experiments need to analyze the views two-by-two. In the future, we will extend these FCITAFR insights to unaligned samples and extend the framework to the situation of more than two views, thus enhancing the capabilities of FCITAFR in real clinical scenarios.

Author Contributions

Conceptualization, S.L. and X.L.; methodology, S.L.; software, S.L.; validation, S.L. and X.L.; formal analysis, S.L.; investigation, S.L.; resources, S.L.; data curation, S.L.; writing—original draft preparation, S.L.; writing—review and editing, S.L.; visualization, S.L.; supervision, S.L.; project administration, S.L.; funding acquisition, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China under grants 62233003 and 62073072, the Key Projects of the Key R&D Program of Jiangsu Province under grants BE2020006 and BE2020006-1, and the Shenzhen Science and Technology Program under grants JCYJ20210324132202005 and JCYJ20220818101206014.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| CECT | Contrast-enhanced computed tomograph |

| IMVC | Incomplete multiview clustering |

| NC | Non-contrast |

| AP | Arterial phase |

| PV | Portal venous |

| DP | Delay-phase |

| FCM | Fuzzy c-means clustering |

| ACC | Accuracy |

| NMI | Normalized mutual information |

| ARI | Adjusted rand index |

References

- Ates, G.C.; Mohan, P.; Celik, E. Dual cross-attention for medical image segmentation. Eng. Appl. Artif. Intell. 2023, 126, 107139. [Google Scholar] [CrossRef]

- Yang, G.; Zhang, S.; Li, X.; Shen, T.; Jia, X.; Ding, Y.; Zhang, B.; Wang, H.; Li, X.; Ren, P. A systemic study on the performance of different quantitative ultrasound imaging techniques for microwave ablation monitoring of liver. IEEE Trans. Instrum. Meas. 2023, 72, 1–11. [Google Scholar] [CrossRef]

- Yang, C.; Hu, X.; Zhu, Q.; Tu, Q.; Geng, H.; Xu, J.; Liu, Z.; Wang, Y.; Wang, J. Individual Medical Costs Prediction Methods Based On Clinical Notes and DRGs. IEEE J. Radio Freq. Identif. 2024, 8, 412–418. [Google Scholar] [CrossRef]

- Zhang, H.; Guo, L.; Wang, J.; Ying, S.; Shi, J. Multiview feature transformation based SVM+ for computer-aided diagnosis of liver cancers with ultrasound images. IEEE J. Biomed. Health Inform. 2023, 27, 1512–1523. [Google Scholar] [CrossRef] [PubMed]

- Wang, D.; Qi, H.; Lian, B.; Liu, Y.; Song, H. Resilient Decentralized Cooperative Localization for Multi-Source Multi-Robot System. IEEE Trans. Instrum. Meas. 2023, 72, 8504713. [Google Scholar]

- Chen, B.; Huang, X.; Liu, Y.; Zhang, Z.; Lu, G.; Zhou, Z.; Pan, J. Attention-Guided and Noise-Resistant Learning for Robust Medical Image Segmentation. IEEE Trans. Instrum. Meas. 2024, 73, 4008013. [Google Scholar] [CrossRef]

- Gorji, L.; Brown, Z.J.; Limkemann, A.; Schenk, A.D.; Pawlik, T.M. Liver Transplant as a Treatment of Primary and Secondary Liver Neoplasms. JAMA Surg. 2024, 159, 211–218. [Google Scholar] [CrossRef] [PubMed]

- Singh, S.; Anand, R.S. Multimodal medical image fusion using hybrid layer decomposition with CNN-based feature mapping and structural clustering. IEEE Trans. Instrum. Meas. 2019, 69, 3855–3865. [Google Scholar] [CrossRef]

- Zheng, S.; Zhu, Z.; Liu, Z.; Guo, Z.; Liu, Y.; Yang, Y.; Zhao, Y. Multi-modal graph learning for disease prediction. IEEE Trans. Med. Imaging 2022, 41, 2207–2216. [Google Scholar] [CrossRef]

- Bayram, H.C.; Rekik, I. A federated multigraph integration approach for connectional brain template learning. In Proceedings of the Multimodal Learning for Clinical Decision Support: 11th International Workshop, ML-CDS 2021, Held in Conjunction with MICCAI 2021, Strasbourg, France, 1 October 2021; Proceedings 11. Springer: Berlin/Heidelberg, Germany, 2021; pp. 36–47. [Google Scholar]

- Poornima, D.; Shabu, S.J.; Aswin, T.; Sruthi, V.; Sruthi, K.D. A Machine Learning-based Multi-Phase Medical Image Classification for Internet of Medical Things. In Proceedings of the 2023 7th International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Kirtipur, Nepal, 11–13 October 2023; pp. 178–185. [Google Scholar]

- Chen, L.; Qi, Y.; Wu, A.; Deng, L.; Jiang, T. TeaBERT: An Efficient Knowledge Infused Cross-Lingual Language Model for Mapping Chinese Medical Entities to the Unified Medical Language System. IEEE J. Biomed. Health Inform. 2023, 27, 6029–6038. [Google Scholar] [CrossRef]

- Zhu, Y.; Wang, S.; Xin, T.; Zhang, Z.; Zhang, H. Partition-a-medical-image: Extracting multiple representative sub-regions for few-shot medical image segmentation. IEEE Trans. Instrum. Meas. 2024, 73, 5016312. [Google Scholar] [CrossRef]

- Li, S.Y.; Jiang, Y.; Zhou, Z.H. Partial multiview clustering. In Proceedings of the AAAI Conference on Artificial Intelligence, Québec City, QC, Canada, 27–31 July 2014; Volume 28. [Google Scholar]

- Weiwei, K.; Qiguang, M.; Yang, L. Multimodal Sensor Medical Image Fusion Based on Local Difference in Non-Subsampled Domain. IEEE Trans. Instrum. Meas. 2018, 64, 938–951. [Google Scholar]

- Wen, H.; Liu, Y.; Rekik, I.; Wang, S.; Chen, Z.; Zhang, J.; Zhang, Y.; Peng, Y.; He, H. Multi-modal multiple kernel learning for accurate identification of Tourette syndrome children. Pattern Recognit. 2017, 63, 601–611. [Google Scholar] [CrossRef]

- Peng, P.; Zhang, Y.; Ju, Y.; Wang, K.; Li, G.; Calhoun, V.D.; Wang, Y.P. Group sparse joint non-negative matrix factorization on orthogonal subspace for multi-modal imaging genetics data analysis. IEEE/ACM Trans. Comput. Biol. Bioinform. 2020, 19, 479–490. [Google Scholar]

- Vivar, G.; Zwergal, A.; Navab, N.; Ahmadi, S.A. Multi-modal disease classification in incomplete datasets using geometric matrix completion. In Proceedings of the Graphs in Biomedical Image Analysis and Integrating Medical Imaging and Non-Imaging Modalities: Second International Workshop, GRAIL 2018 and First International Workshop, Beyond MIC 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 20 September 2018; Proceedings 2. Springer: Berlin/Heidelberg, Germany, 2018; pp. 24–31. [Google Scholar]

- Demir, U.; Gharsallaoui, M.A.; Rekik, I. Clustering-based deep brain multigraph integrator network for learning connectional brain templates. In Proceedings of the Uncertainty for Safe Utilization of Machine Learning in Medical Imaging, and Graphs in Biomedical Image Analysis: Second International Workshop, UNSURE 2020, and Third International Workshop, GRAIL 2020, Held in Conjunction with MICCAI 2020, Lima, Peru, 8 October 2020; Proceedings 2. Springer: Berlin/Heidelberg, Germany, 2020; pp. 109–120. [Google Scholar]

- Song, P.; Liu, Z.; Mu, J.; Cheng, Y. Deep embedding based tensor incomplete multiview clustering. Digit. Signal Process. 2024, 151, 104534. [Google Scholar] [CrossRef]

- Hamilton, W.; Ying, Z.; Leskovec, J. Inductive representation learning on large graphs. Adv. Neural Inf. Process. Syst. 2017, arXiv:1706.02216. [Google Scholar]

- Bessadok, A.; Mahjoub, M.A.; Rekik, I. Topology-aware generative adversarial network for joint prediction of multiple brain graphs from a single brain graph. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, 4–8 October 2020; Proceedings, Part VII 23. Springer: Berlin/Heidelberg, Germany, 2020; pp. 551–561. [Google Scholar]

- Pu, J.; Cui, C.; Chen, X.; Ren, Y.; Pu, X.; Hao, Z.; Philip, S.Y.; He, L. Adaptive Feature Imputation with Latent Graph for Deep Incomplete Multiview Clustering. In Proceedings of the Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 14633–14641. [Google Scholar]

- Chao, G.; Jiang, Y.; Chu, D. Incomplete contrastive multiview clustering with high-confidence guiding. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February2024; Volume 38, pp. 11221–11229. [Google Scholar]

- Liu, Y.; Yue, L.; Xiao, S.; Yang, W.; Shen, D.; Liu, M. Assessing clinical progression from subjective cognitive decline to mild cognitive impairment with incomplete multi-modal neuroimages. Med. Image Anal. 2022, 75, 102266. [Google Scholar] [CrossRef]

- Jiao, J.; Sun, H.; Huang, Y.; Xia, M.; Qiao, M.; Ren, Y.; Wang, Y.; Guo, Y. GMRLNet: A graph-based manifold regularization learning framework for placental insufficiency diagnosis on incomplete multimodal ultrasound data. IEEE Trans. Med. Imaging 2023, 42, 3205–3218. [Google Scholar] [CrossRef] [PubMed]

- Sarawgi, U. Uncertainty-Aware Ensembling in Multi-Modal AI and Its Applications in Digital Health for Neurodegenerative Disorders. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2021. [Google Scholar]

- Zhu, Q.; Xu, B.; Huang, J.; Wang, H.; Xu, R.; Shao, W.; Zhang, D. Deep multi-modal discriminative and interpretability network for Alzheimer’s disease diagnosis. IEEE Trans. Med. Imaging 2022, 42, 1472–1483. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Jiang, L.; Liu, D.; Liu, W. Incomplete multiview clustering via self-attention networks and feature reconstruction. Appl. Intell. 2024, 54, 2998–3016. [Google Scholar] [CrossRef]

- Xu, J.; Li, C.; Peng, L.; Ren, Y.; Shi, X.; Shen, H.T.; Zhu, X. Adaptive feature projection with distribution alignment for deep incomplete multiview clustering. IEEE Trans. Image Process. 2023, 32, 1354–1366. [Google Scholar] [CrossRef]

- Lin, Y.; Gou, Y.; Liu, Z.; Li, B.; Lv, J.; Peng, X. Completer: Incomplete multiview clustering via contrastive prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2021; pp. 11174–11183. [Google Scholar]

- Lin, Y.; Gou, Y.; Liu, X.; Bai, J.; Lv, J.; Peng, X. Dual contrastive prediction for incomplete multiview representation learning. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4447–4461. [Google Scholar]

- Li, H.; Li, Y.; Yang, M.; Hu, P.; Peng, D.; Peng, X. Incomplete multiview clustering via prototype-based imputation. arXiv 2023, arXiv:2301.11045. [Google Scholar]

- Yang, M.; Li, Y.; Hu, P.; Bai, J.; Lv, J.; Peng, X. Robust multiview clustering with incomplete information. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 1055–1069. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Li, M.; Tang, C.; Xia, J.; Xiong, J.; Liu, L.; Kloft, M.; Zhu, E. Efficient and effective regularized incomplete multiview clustering. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2634–2646. [Google Scholar]

- Li, Z.; Tang, C.; Liu, X.; Zheng, X.; Zhang, W.; Zhu, E. Tensor-based multiview block-diagonal structure diffusion for clustering incomplete multiview data. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021; pp. 1–6. [Google Scholar]

- Pan, Y.; Liu, M.; Lian, C.; Xia, Y.; Shen, D. Spatially-constrained fisher representation for brain disease identification with incomplete multi-modal neuroimages. IEEE Trans. Med. Imaging 2020, 39, 2965–2975. [Google Scholar] [CrossRef] [PubMed]

- Zhu, Y.; Tian, D.; Yan, F. Effectiveness of entropy weight method in decision-making. Math. Probl. Eng. 2020, 2020, 3564835. [Google Scholar] [CrossRef]

- Li, Y.; Hu, X.; Zhu, T.; Liu, J.; Liu, X.; Liu, Z. Discriminative Embedded Multiview Fuzzy C-Means Clustering for Feature- redundant and Incomplete Data. Inf. Sci. 2024, 677, 120830. [Google Scholar] [CrossRef]

- Zhou, Z.; Xia, T.; Zhang, T.; Du, M.; Zhong, J.; Huang, Y.; Xuan, K.; Xu, G.; Wan, Z.; Ju, S.; et al. Prediction of preoperative microvascular invasion by dynamic radiomic analysis based on contrast-enhanced computed tomography. Abdom. Radiol. 2024, 49, 611–624. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).