Abstract

The linear variable differential transformer (LVDT) is a key component for measuring vibration noise and for active vibration isolation. The nonlinear output that accompanies increasing differential displacement constrains the measurement range of the LVDT. To extend the linear range, this paper proposes an advanced Snake Optimization–Tangential Functional Link Artificial Neural Network (ASO-TFLANN) model. First, Latin hypercube sampling and Levy flight are introduced into the snake optimization (SO) algorithm, which enhances its global search ability and its ability to preserve population diversity, and effectively addresses the overfitting and local-optimum problems that commonly arise when training with the gradient descent method. Second, a voltage–displacement test bench is constructed to collect the input and output data of the LVDT under four different primary excitation conditions. Then, the collected input and output data are fed into the ASO-TFLANN model to determine the optimal weight vector of the tangential functional link artificial neural network (TFLANN). Finally, simulation comparisons with several other algorithms show that the proposed ASO effectively addresses the overfitting and local-optimum problems of gradient descent training. On this basis, offline comparative simulations and online tests show that the method reduces the measurement error while expanding the linear range of the LVDT, which provides a reliable basis for improving measurement range and accuracy.

1. Introduction

The linear variable differential transformer (LVDT) is a key component in vibration noise measurement and active vibration isolation. It is extensively employed in domains such as aerospace [1,2], manufacturing [3,4], and industry [5,6] for measuring physical quantities such as distance, position [7], and pressure [8]. The LVDT consists of the primary coil and the two secondary coils that are symmetrically positioned on either side of the primary coil [9]. The primary coil is excited by a 10–50 kHz AC voltage signal and moves linearly between the two oppositely connected secondary coils. The secondary coils generate differential signals based on their relative position to the primary coil. However, as displacement increases, the LVDT exhibits inherent nonlinear input–output characteristics. To guarantee measurement precision, the LVDTs are generally used only within their linear region, which limits their measurement range. Therefore, expanding the measurement range has become a major research focus for experts and scholars worldwide.

Y. Kano et al. [10] proposed a design whose main feature is that the secondary coil is placed obliquely on both sides of the primary coil. Parthasarathi Veeraian et al. [11] introduced a method to extend the linearity range of LVDT by fractional order modelling without significantly affecting sensitivity and linearity. Pipat Prommee et al. [12] utilized logarithmic approximation to perform summation and subtraction operations to eliminate certain LVDT nonlinear characteristics. Hanjari Ram et al. [13] used a dual-slope circuit structure to intelligently process the secondary output under sawtooth wave excitation. Harikumar Ganesan et al. proposed an innovative technique based on an oscillator to control oscillation frequency by adjusting the mutual inductance between the primary and secondary coils [14]. The mutual inductance varies with the motion of the displacement core. The technique requires a microcontroller to generate digital sinusoidal signals and transmit the acquired signals through an analog-to-digital converter. The oscillator approach is further optimized in [15] by adjusting the mutual inductance between the primary and secondary coils in response to the movement of the displacement core to control oscillation frequency. Wandee Petchmaneelumka et al. [16] extended the linear range of LVDT using an inverse function technique. G.Y. Tian et al. [17] presented an equivalent magnetic circuit of LVDT, calculating the mutual inductance, output voltage, and sensitivity. Then, the theory was verified by experimental comparison of two LVDTs with the same structural parameters, as well as with different magnetic materials. These studies focused on mechanical structures or the circuit, enhancing LVDT performance through optimization of mechanical structures and circuit design. However, optimizing mechanical structures and circuits is costly and involves longer design cycles. 
Unlike fixed mechanical and circuit compensation systems, algorithmic optimization offers greater flexibility, allowing adjustments based on varying operational conditions and application scenarios, such as different frequencies, displacement ranges, or other operating parameters. In contrast, mechanical and circuit systems typically require redesigns or component replacements, making adjustments less flexible.

With the development of neural networks, many scholars have begun to use neural networks to solve this problem. Zhongxun Wang et al. [18] proposed a nonlinear compensation method for LVDT based on a radial-basis function (RBF) neural network. Saroj Kumar Mishra et al. [19] used FLANN to extend the linear range of LVDT and experimentally verified the method’s validity. Based on the previous work, Saroj Kumar Mishra et al. [20] applied the method to two LVDTs and further demonstrated that the method is applicable to any transducer with nonlinear characteristics. Based on the work of Saroj Kumar Mishra [19], Sarita Das et al. proposed a two-stage FLANN network [21]. The paper first uses a low-order FLANN to roughly compensate the nonlinearity of the LVDT model; then a high-order FLANN is used to further compensate the remaining nonlinearity. The results show that the inversion model based on the two-stage FLANN network exhibits higher measurement accuracy and better precision.

However, FLANN uses the gradient descent method to adjust its parameters, which brings problems such as slow convergence and reliance on gradient information. To address these limitations, researchers have explored various bionic optimization algorithms, although only one example exists in the field of LVDT output optimization. Li Minghui et al. [22] proposed a BP neural network optimized with the ant colony algorithm to compensate for the nonlinear output of the LVDT. This approach uses the ant colony algorithm to search for the optimal ranges of the neural network weights and thresholds, thereby overcoming common shortcomings of BP neural networks, such as susceptibility to local minima and slow convergence. That study provides valuable insights for this article, which employs a meta-heuristic algorithm to further improve the compensation of the LVDT’s nonlinear output. Nevertheless, all of these studies used only a limited number of data points (about a dozen), and all of the displacement data points were integer values.

Hashim Fatma A. [23] introduced a new metaheuristic approach known as the snake optimization (SO) algorithm. By modelling the characteristic behaviors of snakes (such as foraging, fighting, mating, and laying eggs) as mathematical processes like global search, diversity maintenance, and the introduction of randomness, the algorithm aims to discover optimal solutions within a defined search space. The SO algorithm does not rely on gradient information and has the advantage of high computational efficiency, and it has been applied to power grid optimization [24]. Ibrahim AlShourbaji et al. [25] applied the SO algorithm to feature selection, which reduces the chance of falling into a local optimum compared with traditional methods. Qilin Li et al. [26] applied the SO algorithm to path planning to reduce the premature convergence of traditional methods. Building upon these studies of snake optimization [23,25,26], our previous work [27] applied the SO algorithm to refine the weight coefficient matrices of the linear quadratic regulator (LQR) in vehicle active suspension systems, where defining the weight coefficient matrices Q and R is often subjective and inefficient. Comparative simulations and experiments demonstrated that the SO algorithm effectively optimizes the LQR controller weight coefficient matrices [27].

This paper adopts SO to overcome the reliance on gradient information and the low computational efficiency encountered when FLANN uses the gradient descent method to adjust the parameters between the input and output layers. However, the optimization effect of SO is easily affected by the initial population. If the initial population is poorly chosen, the search may fall into a local optimum, which degrades the global search capability of the algorithm and the accuracy of the final results. Hence, Latin hypercube sampling (LHS) is employed to initialize the population and enhance the diversity of the snake population. In addition, the step size of SO depends on the current solution position, which can easily lead to a local optimum. Thus, Levy flight is adopted to generate the step size, producing a more randomized step size that helps the search avoid local optima. An adaptive inverse model based on SO and the Tangential Functional Link Artificial Neural Network (TFLANN) is proposed to achieve nonlinear compensation of the LVDT and to broaden its measurement range. The contributions of this paper can be summarized as follows.

- Based on the SO algorithm, the diversity of the initial population is improved by LHS, and the step size is generated by Levy flight. This reduces the influence of the SO algorithm’s population parameter settings on the optimization results and allows the search to jump out of local optima through larger steps, which makes convergence to the globally optimal solution more likely;

- A tangent function is introduced into FLANN to construct the inverse model of the LVDT;

- A large number of comparative simulation experiments are conducted between the ASO algorithm and other algorithms to verify the superiority of ASO in dealing with single-peak function and multi-peak function problems;

- Offline comparative simulation experiments are conducted between ASO-TFLANN and other methods to verify the effectiveness of ASO-TFLANN in extending the linear range of LVDT; online experiments are conducted using ASO-TFLANN to verify the feasibility of ASO-TFLANN in extending the linear range of LVDT.

The remainder of this paper is organized as follows. Section 2 describes the working principle of LVDT. Section 3 discusses the study of the ASO-TFLANN method for the nonlinear compensation of LVDT. Section 4 compares the performance of the proposed ASO algorithm with seven other algorithms and details the experimental program, including the training of the ASO-TFLANN model, the offline comparative simulation tests using the experimental data, and the subsequent online simulation tests on the trained ASO-TFLANN model. Finally, Section 5 presents the conclusions.

2. LVDT Working Principle

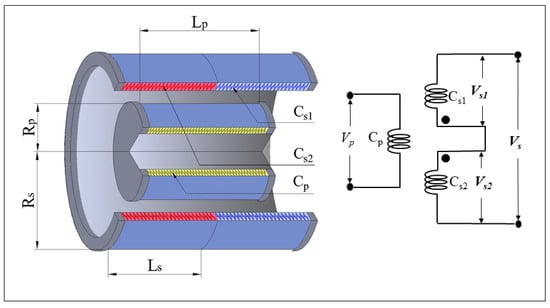

Figure 1 illustrates the operating principle of the LVDT. Unlike traditional LVDT structures, which use a movable core to produce displacement, the LVDT considered here is designed with a primary coil and a secondary coil group consisting of two secondary coils. In this configuration, the primary coil is completely enclosed by the secondary coil group, ensuring uniform coupling and improved measurement stability. The primary coil is characterized by its number of turns and its physical length. The two secondary coils are positioned symmetrically about the midpoint, and each is likewise characterized by its number of turns and its length. All coils are wound with eight layers of 36 AWG (diameter 0.127 mm) polyimide-coated wire, and each coil has a thickness of 2 mm. The primary coil is typically excited by a sinusoidal AC signal in the frequency range of 10 to 50 kHz with an effective voltage of 5–15 V, which induces alternating voltages in the secondary coils. In the stationary state, when the primary coil is centered at the midpoint, the time-varying magnetic field from the primary coil induces equal and opposite voltages in the two secondary coils. These signals cancel by symmetry, so the secondary coil group ideally generates no net sinusoidal voltage. When there is a small relative displacement between the primary coil and the secondary coil group, this symmetry is broken, and the secondary coil group produces a differential sinusoidal voltage whose amplitude is proportional to the differential displacement. Nonlinearity occurs when the relative displacement between the primary and secondary coils becomes relatively large. Additionally, if the coils move in the opposite direction, the phase of the induced sinusoidal voltage signal shifts by 180°.

Figure 1.

LVDT operating principle diagram.

According to Faraday’s law of electromagnetic induction, the induced voltages of the two secondary coils are given by (1) and (2), respectively:

where k is a proportionality constant, and the magnetic fluxes of the two secondary coils are given by (3) and (4), respectively:

where the fluxes are described by the maximum magnetic flux, the angular frequency of the AC signal, and the phase angle of the flux in each secondary coil.

Using a Taylor expansion of the sinusoidal functions of the two magnetic fluxes, (1) and (2) can be rewritten as

where each secondary coil has a linear proportionality constant, a nonlinear proportionality constant for the quadratic term, and a nonlinear proportionality constant for the cubic term, and the expansion variable is the differential displacement. The differential voltage of the two secondary coils is represented as follows:

For small displacements, the differential voltage is approximately linearly proportional to the displacement, so the LVDT measures effectively within its linear range. However, as the displacement increases, the higher-order terms become more important, leading to a nonlinear output voltage. Therefore, nonlinear compensation methods are necessary to extend the accurate measurement range of the LVDT.
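As a rough numerical illustration of why the higher-order terms limit the linear range, the sketch below models the differential voltage as a linear term plus a cubic term. This is an assumed simplification, not the expansion derived above: it presumes that the even-order terms cancel in the differential output of a perfectly symmetric coil pair, and the coefficients `k1` and `k3` are hypothetical values chosen only for illustration.

```python
def differential_voltage(x, k1=1.0, k3=-0.002):
    """Hypothetical odd-symmetric LVDT response: linear term plus cubic term."""
    return k1 * x + k3 * x ** 3


def nonlinearity_percent(x, k1=1.0, k3=-0.002):
    """Deviation of the modelled output from the ideal linear output, in percent."""
    ideal = k1 * x
    return abs(differential_voltage(x, k1, k3) - ideal) / abs(ideal) * 100.0


if __name__ == "__main__":
    # Displacements in arbitrary units; the deviation grows with x squared.
    for x in (1.0, 5.0, 10.0):
        print(f"x = {x:5.1f}  deviation = {nonlinearity_percent(x):.2f} %")
```

With these illustrative coefficients, the relative deviation grows quadratically with displacement, which is the behavior that motivates a compensation model for large displacements.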

3. ASO-TFLANN Method for Nonlinear Compensation of LVDT

3.1. Snake Optimizer

For the snake optimization algorithm, the snake’s foraging and mating behaviors are mainly affected by the amount of food and the ambient temperature. Based on the conditions of insufficient and sufficient food, the algorithm is divided into two phases of global and local search, and the amount of food and the ambient temperature are defined as (8) and (9), respectively:

where t signifies the current iteration count and T indicates the maximum number of iterations.
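The schedule of food quantity and temperature can be sketched as follows. Equations (8) and (9) are not reproduced in this text, so the exponential forms, the constant `c1 = 0.5`, and the thresholds 0.25 and 0.6 are assumptions taken from the commonly cited formulation of the original SO algorithm [23]; the function names are hypothetical.

```python
import math


def temperature(t, T):
    """Ambient temperature; decays from 1 at t = 0 to exp(-1) at t = T."""
    return math.exp(-t / T)


def food_quantity(t, T, c1=0.5):
    """Food quantity; grows from c1 * exp(-1) at t = 0 to c1 at t = T."""
    return c1 * math.exp((t - T) / T)


def phase(t, T, food_threshold=0.25, temp_threshold=0.6):
    """Return the search phase selected at iteration t (assumed thresholds)."""
    if food_quantity(t, T) < food_threshold:
        return "exploration"      # food is scarce: global search
    if temperature(t, T) > temp_threshold:
        return "exploitation"     # food is adequate and it is hot: move to food
    return "fight_or_mate"        # food is adequate and it is cold
```

Under these assumptions and with T = 500, the algorithm explores until roughly iteration 153, exploits until roughly iteration 255, and is in the fighting or mating state afterwards.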

3.1.1. Global Search Phase (Food Scarcity)

When the food quantity is below the food threshold, the algorithm enters the global exploration phase, in which both male and female individuals choose random positions to search for food, and the positions are updated as in (10) and (11):

where denotes the value at dimension j for the ith female(male) snake individual in generation t. () indicates the position value at dimension j for a randomly selected individual guiding the female (male) population in generation . () refers to the position value of a randomly selected individual guiding the male (female) population, with a corresponding fitness (). represents the ith male snake individual, with its fitness , and denotes the ith female individual, with its fitness . Additionally, is a number drawn from a uniform distribution within the range . and denote the upper and lower bounds of dimension j for the ith individual, respectively.
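A minimal sketch of one exploration move is given below. Because (10) and (11) are not reproduced in this text, the update form is an assumption based on the commonly cited SO formulation [23]: the new position is a randomly selected guide individual plus or minus a small random offset inside the bounds, weighted by an ability factor computed from the two fitness values. The constant `c2 = 0.05`, the clipping to the bounds, the zero-division guard, and all names are hypothetical, and positive fitness values are assumed.

```python
import math
import random


def explore(x_rand, f_rand, f_i, lower, upper, c2=0.05, rng=random):
    """One exploration move for a single snake (position given as a list)."""
    eps = 1e-300  # guards against division by zero when f_i is exactly 0
    ability = math.exp(-f_rand / (f_i + eps))  # assumed ability factor
    sign = 1.0 if rng.random() < 0.5 else -1.0
    new_pos = []
    for xr, lo, hi in zip(x_rand, lower, upper):
        # random point inside [lo, hi), scaled down by c2 and the ability
        step = c2 * ability * ((hi - lo) * rng.random() + lo)
        # keep the new coordinate inside the search bounds
        new_pos.append(min(max(xr + sign * step, lo), hi))
    return new_pos
```

The same function would be called once per male and once per female individual, each with a guide drawn from its own group.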

3.1.2. Local Search Phase (Adequate Food)

When the food quantity exceeds the food threshold and the temperature is above the temperature threshold, the snake population transitions into a foraging state, signaling the algorithm to enter a local search phase. During this phase, both male and female individuals search around the position of the global best individual. This process is described by (12) and (13):

where represents the position of the best individual in generation t of the snake population, represents the position of the ith male(female) snake in generation t of the snake population.

When the food quantity exceeds the food threshold and the temperature is below the temperature threshold, the snake population enters a fighting or mating state. During the fighting state, the positions of male and female individuals are updated as in (14) and (15):

where and , respectively, represent the position of the best individual in generation t of the male and female snake populations.

When in the mating state, the position update for male and female individuals is shown in (16) and (17):

where and , respectively, represent the mating capabilities of the ith female and male individuals. If snake eggs hatch, the selection of the weakest male and female for elimination is replaced by Equations (18) and (19):

where () is the worst male (female) individual. is the lower bound of feasible solution X, and is the upper bound of feasible solution X.

3.2. Advanced Snake Optimization Algorithm

SO initializes the snake population pseudo-randomly, which leads to an uneven distribution of the population in the solution space. To overcome this problem, this article uses the Latin hypercube sampling (LHS) method in the population initialization to make the distribution of the snake population more uniform. Unlike simple random sampling, LHS ensures that the values of the variables in each dimension cover the entire search space uniformly, thus improving sampling efficiency and representativeness. Suppose n points are to be sampled in a d-dimensional space, with each dimension of the sampling space defined as the interval [0, 1]. The goal is to generate an n × d sample matrix X, where each row of X denotes a sampled point and each dimension covers [0, 1] uniformly. This method improves the global search by segmenting the values within each dimension so that the samples cover the entire search space. The basic procedure of the Latin hypercube sampling method is as follows:

- Each dimension is divided into n equal partitions according to the cumulative distribution function (n is the number of sample points).

- Data points are randomly selected within a single-dimensional partition.

- The results of each dimension are merged to generate the sampling space.

- The desired samples in the sampling space are selected using a random method.

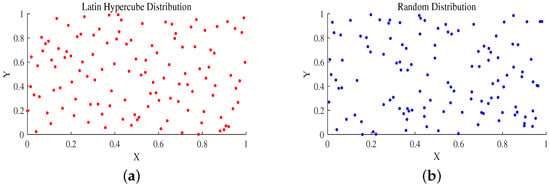

Figure 2a,b show the Latin hypercube sampling distribution and the random distribution, respectively. LHS ensures that each dimension is uniformly covered: the data points are distributed more evenly along the X and Y axes, with relatively uniform distances between points and no obvious clustering or sparsity. By dividing each dimension into multiple intervals, the method ensures that the samples fully cover the search space, which improves the global search ability.

Figure 2.

(a) Latin hypercube distribution; (b) random distribution.
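The Latin hypercube procedure described above can be sketched in a few lines. This is a minimal standard-library illustration under the stated [0, 1] normalization, not the implementation used in the experiments; the function names and the `scale_to_bounds` helper are hypothetical.

```python
import random


def latin_hypercube(n, d, rng=random):
    """Return n points in [0, 1)^d with one point per stratum in every dimension."""
    columns = []
    for _ in range(d):
        # one uniform draw inside each of the n equal-width intervals
        col = [(k + rng.random()) / n for k in range(n)]
        rng.shuffle(col)  # decouple the stratum order between dimensions
        columns.append(col)
    # transpose so that row i is the i-th sample point
    return [[columns[j][i] for j in range(d)] for i in range(n)]


def scale_to_bounds(unit_points, lower, upper):
    """Map unit-cube samples onto the search bounds of each dimension."""
    return [[lo + u * (hi - lo) for u, lo, hi in zip(p, lower, upper)]
            for p in unit_points]
```

For population initialization, `latin_hypercube(population_size, dimension)` would be followed by `scale_to_bounds` with the lower and upper bounds of the search space.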

In the SO, the step size of the snake’s movement is usually determined by the current position of the individual and the global optimal position. The step size is relatively small and tends to be continuous, so the snake moves smoothly in the solution space and easily falls into a local optimum. To solve this problem, Levy flight, a stochastic search method that follows the Levy distribution, is introduced. Levy flight combines frequent short-range moves with occasional long-range moves, which gives the algorithm a powerful global search capability. In the snake optimization algorithm, random step sizes generated by Levy flight maintain a certain level of convergence accuracy while providing the opportunity to jump out of a local optimum through larger steps, which makes convergence to a globally optimal solution more likely. The steps of the algorithm with Levy flight are the same as in the original algorithm, except that at each iteration the step size s is no longer a random value generated by the system but is generated according to the Levy flight law. The snake’s position update formulas thus become (20) and (21):

where s is the Levy flight step, given by (22). , , and is taken to be 1.5. The variance is given by (23)
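A Levy-distributed step with exponent 1.5 can be generated as sketched below. Since (22) and (23) are not reproduced in this text, the sketch assumes the widely used Mantegna method, in which the step is the ratio of two normally distributed variables and the scale of the numerator depends on the exponent; the function names are hypothetical.

```python
import math
import random


def levy_sigma(beta=1.5):
    """Scale of the numerator variable in Mantegna's method (assumed form)."""
    num = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    den = math.gamma((1.0 + beta) / 2.0) * beta * 2.0 ** ((beta - 1.0) / 2.0)
    return (num / den) ** (1.0 / beta)


def levy_step(beta=1.5, rng=random):
    """Draw one heavy-tailed step s = u / |v|^(1/beta)."""
    u = rng.gauss(0.0, levy_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:  # practically never happens; avoids division by zero
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1.0 / beta)
```

Most draws are small, but occasional draws are very large, which is the property that lets the search jump out of a local optimum.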

Algorithm 1 shows the pseudo-code of the Advanced Snake Optimization (ASO) algorithm used to optimize the weight vector $W^T$.

| Algorithm 1 | Pseudo-code of the ASO algorithm to optimize $W^T$ |
| --- | --- |
| 1 | Initialize the problem setting. |
| 2 | Randomly initialize male and female populations. |
| 3 | Define Temp using Equation (9). |
| 4 | While (t ≤ T) do |
| 5 | Evaluate each group (males and females). |
| 6 | Find the best male and the best female. |
| 7 | Define food quantity using Equation (8). |
| 8 | If (food quantity is below the food threshold) |
| 9 | Perform exploration using Equations (20) and (21). |
| 10 | Else if (temperature is above the temperature threshold) |
| 11 | Perform exploitation using Equations (12) and (13). |
| 12 | Else |
| 13 | If (rand meets the fight-mode condition) |
| 14 | Snakes in fight mode using Equations (14) and (15). |
| 15 | Else |
| 16 | Snakes in mating mode using Equations (16) and (17). |
| 17 | Change the worst male and female using Equations (18) and (19). |
| 18 | End if |
| 19 | End if |
| 20 | End while |
| 21 | Return the best solution. |

3.3. TFLANN Model Construction of LVDT

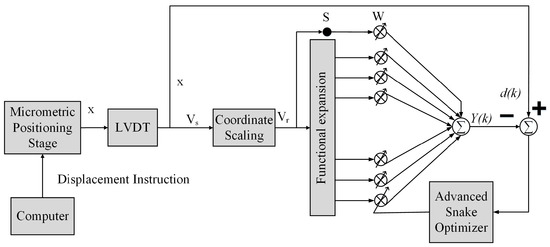

In this subsection, the displacement–voltage data of the LVDT are obtained experimentally and expanded with higher-order tangent functions to obtain the nonlinear mapping values. Based on the ASO and the TFLANN, an adaptive inverse model of the LVDT is established. The weights between the input and output layers of the TFLANN are optimized using the mechanisms of snake optimization, such as global search, diversity maintenance, and the introduction of randomness, to address the overfitting and local-optimum problems that occur with the gradient descent method. Figure 3 shows the procedure for obtaining the optimal weight vector of the TFLANN to realize the nonlinear compensation of the LVDT, together with its working principle.

Figure 3.

ASO-TFLANN nonlinear compensation methods.

The functional link processes either an individual element of a pattern or the entire pattern by creating a set of linearly independent functions, which are then evaluated with the pattern as the input argument [28,29]. To keep the tangent function within its domain of definition, the input is first rescaled. The rescaled input is fed into the TFLANN, which performs a fifty-dimensional tangent functional-link expansion to obtain the nonlinear mapping values; these values, together with the input, form the inputs to the model. The functional-link expansion is shown in (24):

where P denotes the dimensionality of the neural network’s input layer. represents the ith neuron;

A combined prediction model with optimized weights is then built:

where y denotes the final prediction result. is the weight occupied by a single neuron. S is the prediction model input layer vector. is the transpose of the optimal weight vector of the prediction model input layer.
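The expansion and the weighted combination can be sketched as follows. Equation (24) is not reproduced in this text, so the basis functions tan(k·u) for k = 1 to 50 and the scaling rule are assumptions made only for illustration; `scale_input`, `tangent_expand`, `predict`, and `u_max` are hypothetical names.

```python
import math


def scale_input(u, u_max, order):
    """Scale u so that tan(k * u_scaled) is defined for every k = 1..order."""
    # |order * u_scaled| <= pi/4 keeps every tangent argument away from pi/2
    return (u / u_max) * (math.pi / 4.0) / order


def tangent_expand(u_scaled, order=50):
    """Functional-link vector S: the scaled input followed by `order` tangent terms."""
    return [u_scaled] + [math.tan(k * u_scaled) for k in range(1, order + 1)]


def predict(weights, s):
    """Model output y = W^T S, written as a plain dot product."""
    return sum(w * v for w, v in zip(weights, s))
```

Because the model is linear in the weights, the optimizer only has to search a weight vector with one entry per element of S.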

In addition, the virtual displacements accepted by the LVDT voltage–displacement test bench are converted into actual displacement values:

where denotes the actual displacement. signifies the grating sensor reading when the differential voltage output of the secondary coil group of the LVDT is zero. R denotes the resolution of the grating sensor’s encoder.

The quality of individual positions in the snake population is evaluated by the fitness function . For ease of handling, the fitness function is chosen as (27)

where represents the actual displacement measured by the LVDT during the ith experimental trial. is the predicted output derived from the same input. n denotes the total number of distinct displacement experiments conducted.
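The role of the fitness function as the objective minimized by the optimizer can be sketched as below. Equation (27) is not reproduced in this text, so the mean squared error used here is an assumed stand-in, and `make_objective` is a hypothetical helper that wraps a data set into a function of the weight vector.

```python
def mean_squared_error(actual, predicted):
    """Assumed fitness: mean squared difference over the n experiments."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def make_objective(features, targets):
    """Build f(weights) -> fitness for an optimizer that minimizes f."""
    def objective(weights):
        # each row of `features` is one expanded input vector S
        predicted = [sum(w * v for w, v in zip(weights, row)) for row in features]
        return mean_squared_error(targets, predicted)
    return objective
```

Each snake position is interpreted as one candidate weight vector, and a lower objective value means a better individual.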

4. Numerical Simulation and Experimental Verification

The local and global optimization capabilities of the ASO and SO algorithms on single-peak and multi-peak functions are evaluated through 48 independent comparison experiments against other algorithms under the same conditions using six benchmark functions. To verify the advantages of the ASO-TFLANN nonlinear compensation method, an LVDT voltage–displacement test bench was constructed, and the displacement–voltage data of the LVDT under primary excitation at four different frequencies were obtained. These data were fed to four models for iterative training: ASO-TFLANN, the Advanced Snake Optimization–Sine–Cosine Functional Link Artificial Neural Network (ASO-SCFLANN), the Tangential Functional Link Artificial Neural Network (TFLANN), and the Sine–Cosine Functional Link Artificial Neural Network (SCFLANN). The optimal parameters obtained from training are then used in the four models for comparative analysis in simulation experiments.

4.1. ASO Performance Test

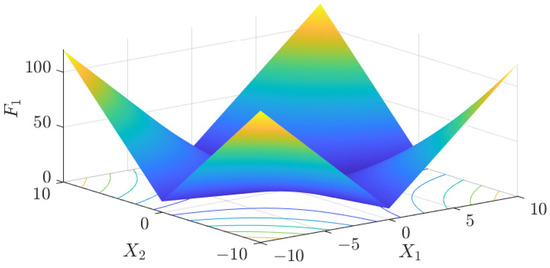

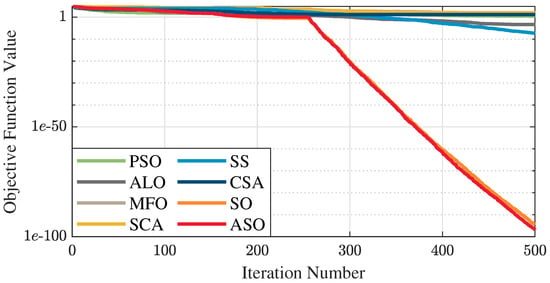

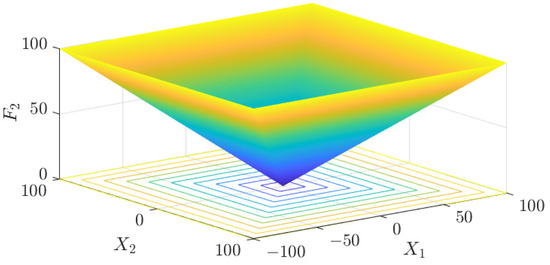

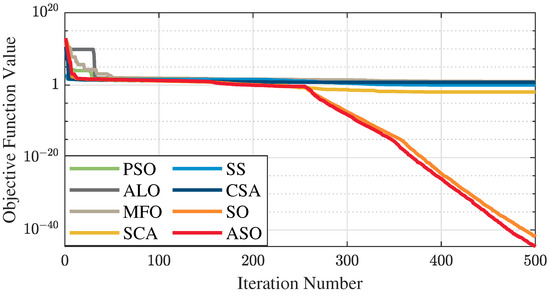

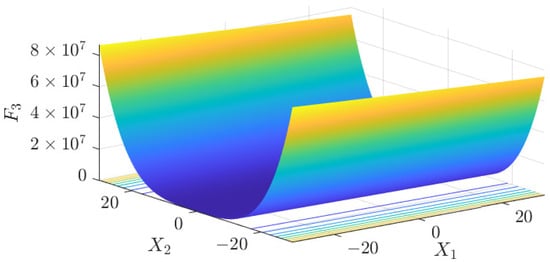

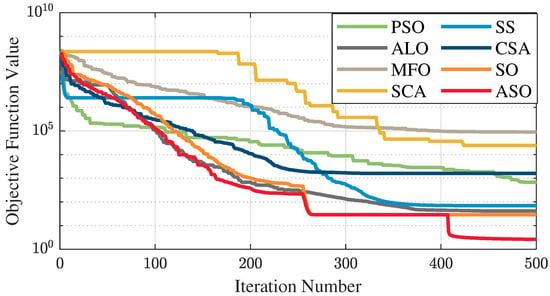

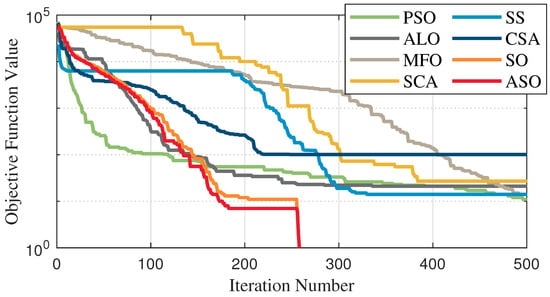

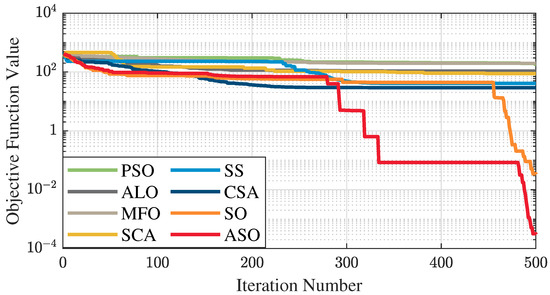

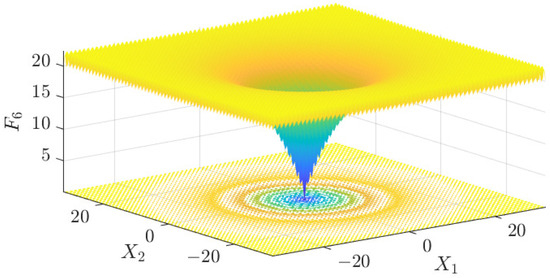

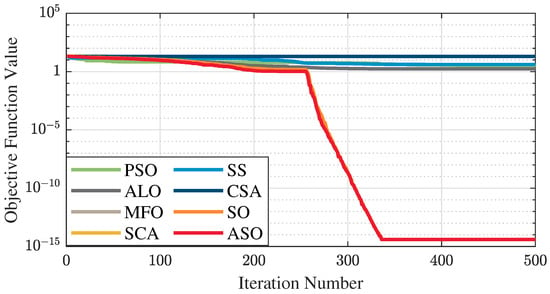

To evaluate the optimization performance of ASO, it is compared with Particle Swarm Optimization (PSO), Ant Lion Optimization (ALO), Moth Flame Optimization (MFO), the Sine–Cosine Algorithm (SCA), the Salp Swarm Algorithm (SS), the Chameleon Search Algorithm (CSA), and snake optimization (SO) on six benchmark functions, with a swarm size of 30 and 500 iterations, over 70 independent comparison experiments. The six benchmark functions are listed in Table 1. F1, F2, and F3 are single-peak functions that test the local optimization ability of the algorithms, while F4, F5, and F6 are multi-peak functions that test their global optimization ability. The mean value of the results is used to measure the accuracy of each algorithm. The algorithms were run on a Lenovo Savior R7000P with Windows 10 and MATLAB 2023b.

Table 1.

Benchmark functions and their properties.

The test data of the eight algorithms for the above benchmark functions are given in Table 2. Figures 4–15 display 3D plots of the benchmark functions F1, F2, F3, F4, F5, and F6, along with the average fitness curves of the algorithms. Figure 5 shows that ASO, SO, and SS optimize the F1 function better than PSO, ALO, MFO, SCA, and CSA. At about the 250th iteration, the objective function value of ASO drops dramatically, far below that of the other algorithms, and eventually reaches a value on the order of 10⁻¹⁰⁰. ASO is 1000 times more accurate than SO and 90 orders of magnitude more accurate than SS.

As shown in Figure 7, ASO performs strongly throughout on the F2 function. Initially, all algorithms have relatively close objective function values, but after about 300 iterations ASO shows a significant advantage, with its objective function value rapidly decreasing to far below those of the other algorithms. At the end of 500 iterations, the objective function value of ASO is close to , and the objective function value of SO is close to , indicating that ASO has very high accuracy and excellent convergence on this function. In contrast, the other algorithms perform only moderately on the F2 function; in particular, the objective function values of MFO and CSA decrease only slightly in the late iterations. Taken together, ASO shows excellent global search capability on the F2 test function and quickly converges to very small values, far exceeding the performance of the other algorithms.

In the optimization tests for the F3 function, ASO again achieves the best results, followed by SO (see Figure 9). At the end of 250 iterations, SO drops rapidly to the same value as ASO; at the end of 400 iterations, ASO drops rapidly again to 2.6087, breaking through the local optimum. Overall, with Levy flight, ASO shows excellent global search ability in the late iterations on the F3 test function, breaks through the local optimum, and far outperforms the other algorithms.

Figure 11 shows that in the F4 function optimization test, both ASO and SO reach the theoretical optimal value of 0 on average, but ASO finds the optimal solution faster; the second-ranked PSO has an average of 11, and SS and MFO share third place with 14. Figure 13 shows that ASO, SO, and CSA outperform PSO, ALO, MFO, SCA, and SS in the optimization test for the F5 function. After four fast drops between iterations 250 and 350, the objective function value of ASO is three orders of magnitude lower than that of SO. Between iterations 450 and 500, both SO and ASO show one more fast drop, but ASO remains 100 times more accurate than SO. Figure 15 shows that ASO, SO, and CSA perform better in the optimization test of the F6 function, and that ASO and SO perform the same.

Overall, Figures 4–15 show a more pronounced convergence trend for ASO. In particular, when the number of iterations exceeds 200, the average fitness curves of ASO on F3 and F5 reach better solutions than the other algorithms after several step-downs. This indicates that ASO has faster convergence and higher search accuracy than SO and the other algorithms and is less likely to fall into a local optimum, which makes it more competitive.

Table 2.

Algorithm performance—mean in the 500th generation.

Figure 4.

Three-dimensional plot of the F1 benchmark function.

Figure 5.

Average fitness curve of the F1 benchmark function.

Figure 6.

Three-dimensional plot of the F2 benchmark function.

Figure 7.

Average fitness curve of the F2 benchmark function.

Figure 8.

Three-dimensional plot of the F3 benchmark function.

Figure 9.

Average fitness curve of the F3 benchmark function.

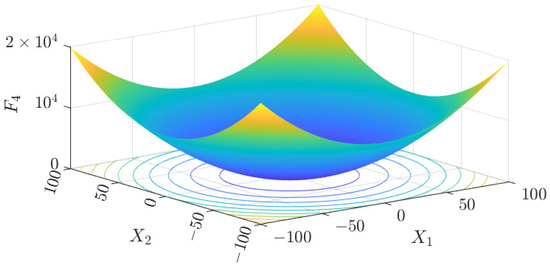

Figure 10.

Three-dimensional plot of the F4 benchmark function.

Figure 11.

Average fitness curve of the F4 benchmark function.

Figure 12.

Three-dimensional plot of the F5 benchmark function.

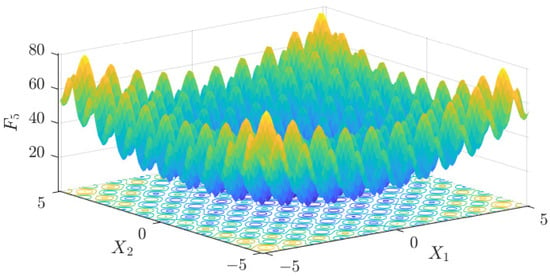

Figure 13.

Average fitness curve of the F5 benchmark function.

Figure 14.

Three-dimensional plot of the F6 benchmark function.

Figure 15.

Average fitness curve of the F6 benchmark function.

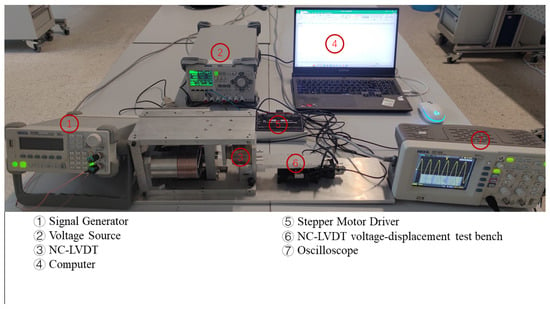

4.2. ASO-TFLANN Model Training and Comparative Simulation

The LVDT voltage–displacement test bench consists of a high-precision stepping motor, a grating sensor, a stepping motor driver, a signal generator, an oscilloscope, and a DC power supply. The high-precision stepping motor has a step angle of 1.8°/step, a lead of 1 mm, and a repeatable positioning accuracy of ±0.005 mm, and it is connected to the primary coil of the LVDT. The grating sensor (model ATOM4TO-300) has a pitch of 20 μm and an accuracy of 1 μm; its displacement signal is fed back to the stepping motor to achieve closed-loop regulation. The stepping motor driver converts the displacement command into a stepping angle, enabling precise control of the stepping motor in a closed-loop system. The signal generator (model DG1022G) provides sinusoidal voltage signals to the primary coil of the LVDT. The computer sends non-integer displacement commands to the stepping motor driver to generate the desired displacement of the LVDT’s primary coil, which helps avoid integer displacement values in the test results. The oscilloscope (model DS1102E) records the differential voltage across the secondary coils at each displacement of the stepping motor. Figure 16 shows the setup used for the tests at four different frequencies, namely 10 kHz, 20 kHz, 30 kHz, and 50 kHz. The same setup was used for the subsequent online experiments.
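As a quick arithmetic check of the motion resolution implied by the quoted motor parameters, the sketch below computes the linear travel per step. It assumes that one motor revolution advances the stage by the lead and that the motor is driven in full steps; the `microsteps` parameter and the function name are hypothetical.

```python
def linear_step_mm(lead_mm, step_angle_deg, microsteps=1):
    """Linear travel per (micro)step for a lead-screw stage, in millimetres."""
    steps_per_rev = 360.0 / step_angle_deg * microsteps
    return lead_mm / steps_per_rev


if __name__ == "__main__":
    # 1.8 degrees per step gives 200 steps per revolution; lead of 1 mm
    print(f"{linear_step_mm(1.0, 1.8) * 1000:.1f} micrometres per full step")
```

Under these assumptions one full step corresponds to 0.005 mm, which is the same magnitude as the quoted repeatable positioning accuracy, although the text does not state that the two figures are related.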

Figure 16.

LVDT voltage–displacement test bench.

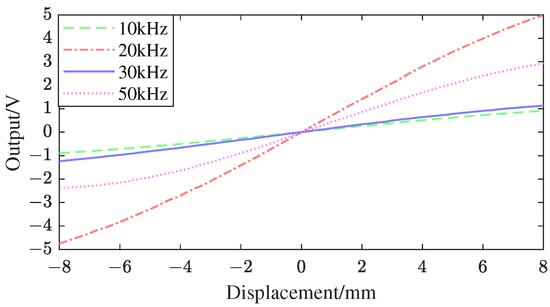

With the primary coil excited at four different frequencies (10 kHz, 20 kHz, 30 kHz, and 50 kHz), 17 sets of experimental data were collected at each frequency; they are presented in Table 3. The displacement listed there is the virtual displacement accepted by the LVDT voltage–displacement test bench and is dimensionless, and the voltage is the secondary coil differential voltage in volts. The displacement–voltage output curve is shown in Figure 17.

Table 3.

Seventeen sets of experimental data for each frequency.

Figure 17.

LVDT voltage−displacement test bench.

To verify the advantages of the ASO-TFLANN nonlinear compensation method, the voltage–displacement data collected at primary excitations of 10 kHz, 20 kHz, 30 kHz, and 50 kHz were input into four models (ASO-TFLANN, ASO-SCFLANN, TFLANN, and SCFLANN) for offline comparative simulation experiments and analysis. The error, the maximum absolute error, and the maximum full-scale error [30] of the four methods over the LVDT measurement range were obtained through Equations (28)–(30). Table 4 shows the maximum absolute error of the four methods at different frequencies, and Table 5 shows the maximum full-scale error of the four methods at different frequencies.
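The three error measures can be sketched as follows. Equations (28)–(30) are not reproduced in this section, so the definitions below are assumptions based on common usage: the error is the estimated displacement minus the actual displacement, the maximum absolute error is its largest magnitude, and the maximum full-scale error is that magnitude expressed as a percentage of the full-scale range. The function name and the sample data are hypothetical.

```python
# Sketch of the three error measures, under assumed (conventional) definitions.
# The sign convention and the full-scale normalisation are assumptions.

def error_metrics(estimated_mm, actual_mm, full_scale_mm):
    """Return (pointwise errors, maximum absolute error, maximum full-scale error in %)."""
    if len(estimated_mm) != len(actual_mm):
        raise ValueError("estimated and actual displacements must have equal length")
    errors = [est - act for est, act in zip(estimated_mm, actual_mm)]
    max_abs_error = max(abs(e) for e in errors)
    full_scale_error_pct = 100.0 * max_abs_error / full_scale_mm
    return errors, max_abs_error, full_scale_error_pct


if __name__ == "__main__":
    # Illustrative values only, not measurements from the paper.
    actual = [-8.0, -4.0, 0.0, 4.0, 8.0]
    estimated = [-7.96, -4.01, 0.0, 4.02, 8.05]
    errors, max_abs, fs_pct = error_metrics(estimated, actual, full_scale_mm=16.0)
    print("errors (mm):", [round(e, 3) for e in errors])
    print(f"maximum absolute error: {max_abs * 1000:.1f} um")
    print(f"maximum full-scale error: {fs_pct:.4f} %")
```

The full-scale range of 16 mm in the usage example is likewise an assumption, chosen to match a ±8 mm travel.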

Table 4.

Maximum absolute error of the LVDT for the four methods.

Table 5.

Maximum full-scale error of the LVDT for the four methods.

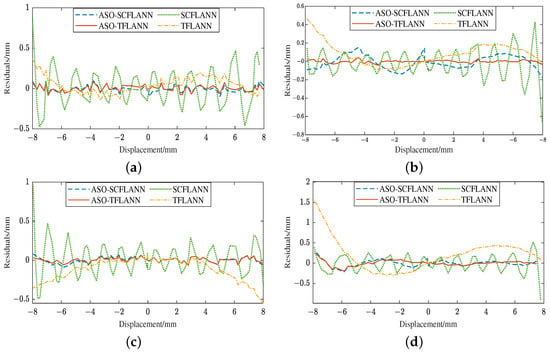

Figure 18a compares the output errors of the different methods at 10 kHz. From Figure 18a, Table 4, and Table 5, when the primary excitation is 10 kHz, the maximum absolute errors using ASO-TFLANN, ASO-SCFLANN, and TFLANN are 84.40 μm, 96.89 μm, and 365.74 μm, respectively, all lower than the 560.13 μm obtained with SCFLANN. ASO-TFLANN gives the smallest maximum full-scale error, 0.61%, which is 84.9% lower than that of SCFLANN, followed by ASO-SCFLANN and TFLANN, which are 82.7% and 34.7% lower, respectively.

Figure 18.

Comparison of output error images of different methods at 10 kHz, 20 kHz, 30 kHz, and 50 kHz. (a) Comparison of output error images of different methods at 10 kHz. (b) Comparison of output error images of different methods at 20 kHz. (c) Comparison of output error images of different methods at 30 kHz. (d) Comparison of output error images of different methods at 50 kHz.

Figure 18b compares the output errors of the different methods at 20 kHz. As shown in Figure 18b, Table 4, and Table 5, when the primary excitation is 20 kHz, the maximum absolute error of SCFLANN is 668.33 μm. ASO-TFLANN gives the smallest value, 42.41 μm, which is 93.65% lower than that of SCFLANN; ASO-SCFLANN is next at 150.62 μm, which is 77.46% lower; and TFLANN gives 463.51 μm, which is 30.64% lower. Furthermore, the maximum full-scale error of ASO-TFLANN at 20 kHz, only 0.27%, is the smallest among the four methods at all four frequencies. Taking SCFLANN as the benchmark, the maximum full-scale error is reduced by 93.7%, 77.5%, and 30.6% when using ASO-TFLANN, ASO-SCFLANN, and TFLANN, respectively.

Figure 18c compares the output errors of the different methods at 30 kHz. From Figure 18c, Table 4, and Table 5, when the primary excitation is 30 kHz, the maximum absolute errors of the four methods, ASO-TFLANN, ASO-SCFLANN, TFLANN, and SCFLANN, are 89.98 μm, 90.64 μm, 505.47 μm, and 968.15 μm, respectively. The maximum full-scale errors computed by ASO-TFLANN and ASO-SCFLANN are very similar, at 0.56% and 0.57%, respectively. Of the three methods compared against SCFLANN, TFLANN improves the least, with a maximum full-scale error of 3.15%, which is 47.8% lower than that of SCFLANN.

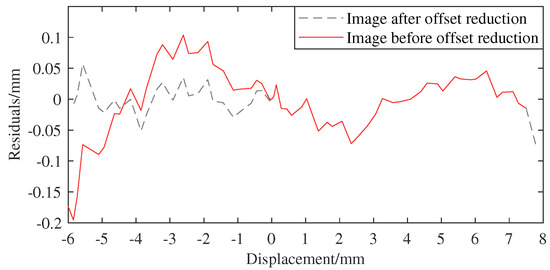

Figure 18d compares the output errors of the different methods at 50 kHz. As can be seen from Figure 18d, Table 4, and Table 5, when the primary excitation is 50 kHz, the maximum absolute errors of ASO-TFLANN and ASO-SCFLANN are 244.41 μm and 293.65 μm, respectively, both lower than the 902.14 μm of SCFLANN. ASO-TFLANN again gives the smallest maximum absolute error, and its maximum full-scale error is only 1.53%. TFLANN gives the largest maximum absolute error, 1562.04 μm, which exceeds the 902.14 μm of SCFLANN. Taking SCFLANN as the benchmark, the errors of ASO-TFLANN and ASO-SCFLANN decrease by 72.9% and 67.4%, respectively, while that of TFLANN increases by 73.1%. At 50 kHz the errors are generally larger than at 10 kHz, 20 kHz, and 30 kHz, because the voltage zero point of the NC-LVDT shifts as the frequency increases. Taking the voltage origin position at 10 kHz as a reference, the 20 kHz offset is +0.075 mm, the 30 kHz offset is +0.1545 mm, and the 50 kHz offset is 1.775 mm. After removing the offsets and re-running the simulation test, the error range at 50 kHz narrows to within ±60 μm, as shown in Figure 19.
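The percentage comparisons against SCFLANN quoted for the four frequencies can be reproduced with a short calculation. The sketch below uses only the maximum absolute errors stated in the text, taken to be in micrometres; the function and variable names are illustrative.

```python
# Relative change of each method's maximum absolute error with respect to SCFLANN.
# Values are the ones quoted in the text, taken to be in micrometres.

def relative_change_pct(value: float, baseline: float) -> float:
    """Signed percentage change of value with respect to baseline."""
    return 100.0 * (value - baseline) / baseline


MAX_ABS_ERROR_UM = {
    "10 kHz": {"ASO-TFLANN": 84.40, "ASO-SCFLANN": 96.89, "TFLANN": 365.74, "SCFLANN": 560.13},
    "20 kHz": {"ASO-TFLANN": 42.41, "ASO-SCFLANN": 150.62, "TFLANN": 463.51, "SCFLANN": 668.33},
    "30 kHz": {"ASO-TFLANN": 89.98, "ASO-SCFLANN": 90.64, "TFLANN": 505.47, "SCFLANN": 968.15},
    "50 kHz": {"ASO-TFLANN": 244.41, "ASO-SCFLANN": 293.65, "TFLANN": 1562.04, "SCFLANN": 902.14},
}

if __name__ == "__main__":
    for frequency, errors in MAX_ABS_ERROR_UM.items():
        baseline = errors["SCFLANN"]
        for method, value in errors.items():
            if method == "SCFLANN":
                continue
            change = relative_change_pct(value, baseline)
            print(f"{frequency:>6}  {method:<12} {value:8.2f} um  {change:+6.1f} % vs SCFLANN")
```

A negative value is a reduction relative to SCFLANN; the only positive entry is TFLANN at 50 kHz, where the error increases.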

Figure 19.

ASO-TFLANN tandem LVDT output image at 50 kHz before and after offset cut.

Figure 18a–d shows that in the small-displacement region (−4 mm to 4 mm) the output errors of all methods are small, and ASO-TFLANN and ASO-SCFLANN in particular show a smoother error trend. As the displacement approaches the ends of the range (±8 mm), the error gradually increases, and the error fluctuation of SCFLANN is especially large. This indicates that the nonlinear effect of the system grows with displacement, which increases the error, whereas at smaller displacements the system behaves more linearly and the methods compensate better. In addition, the maximum errors all occur at displacements of 4 mm to 8 mm. This further supports the conclusion that ASO-TFLANN and ASO-SCFLANN handle nonlinear errors well across different displacement ranges and outperform the conventional methods in overall error control.

4.3. On-Line Test Validation

To assess the practicality and effectiveness of the ASO-TFLANN nonlinear compensation method, the LVDT is connected to an oscilloscope, which is in turn connected to a laptop. The data received by the oscilloscope from the LVDT are transmitted to the laptop for online experimental verification. The voltage–displacement test bench adjusts the displacement of the LVDT primary coil, and the output error of the model after series connection, as a function of the actual displacement, is presented in Table 6. The variance and mean of the error (both calculated using the absolute values of the errors) are shown in Table 7. From Table 6, the maximum output error measured in the online experiment is −55.47 μm at a primary excitation of 10 kHz, −29.67 μm at 20 kHz, 57.55 μm at 30 kHz, and 105.27 μm at 50 kHz. The maximum errors in the online experiment at the four frequencies are smaller than those measured in the offline experiment, which shows that the introduced method can effectively improve the linearity of the LVDT. The error is still largest at 50 kHz and smallest at 20 kHz, which is consistent with the offline simulation experiments. From Table 7, the mean and variance of the output errors are smallest at 20 kHz: the mean error is 10.42 μm and the variance is 92.06 μm², making it the most stable of the four frequencies. This suggests that the system performs most consistently at 20 kHz, with minimal error and variability.
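The statistics reported in Table 7 are computed from the absolute values of the errors, as stated above. A minimal sketch of that calculation follows. The sample values are invented for illustration and are not the data of Table 6, and the use of the population variance (rather than the sample variance) is an assumption, since the text does not say which was used.

```python
# Mean and variance of absolute output errors, plus the signed worst-case error.
# Population variance is an assumption; swap in statistics.variance for the sample form.
import statistics


def absolute_error_stats(errors_um):
    """Return (mean of |e|, population variance of |e|, signed error of largest magnitude)."""
    magnitudes = [abs(e) for e in errors_um]
    mean_abs = statistics.mean(magnitudes)
    var_abs = statistics.pvariance(magnitudes)
    worst = max(errors_um, key=abs)
    return mean_abs, var_abs, worst


if __name__ == "__main__":
    # Illustrative errors in micrometres, not taken from the paper.
    sample_errors_um = [-12.0, 5.0, -3.0, 8.0]
    mean_abs, var_abs, worst = absolute_error_stats(sample_errors_um)
    print(f"mean |e| = {mean_abs:.2f} um, var |e| = {var_abs:.2f} um^2, worst = {worst:+.2f} um")
```

Keeping the sign of the worst-case error, as the text does when it reports −55.47 μm and −29.67 μm, shows whether the model over- or under-estimates at that point.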

Table 6.

Output error versus actual displacement for the model after series connection. The output error is given in μm.

Table 7.

Statistical analysis of the output error (calculated using absolute values) for different frequencies.

5. Conclusions

In this article, the ASO-TFLANN nonlinear compensation method, which integrates the ASO with the TFLANN, is proposed, and a fitness function associated with the linearity of LVDT is established. Based on validation through offline comparative simulation tests with ASO-SCFLANN and TFLANN as well as online testing, the following conclusions are drawn:

- Based on the benchmark function test results, it can be concluded that the ASO algorithm outperforms other algorithms in the optimization of several test functions, showing excellent global search capability and fast convergence. Specifically, ASO achieves the best results in the optimization of the F1, F2, F3, F4, F5, and F6 functions, especially in the F1 function, where the objective function value is significantly lower than that of the other algorithms, and it rapidly decreases to a value close to in the F2 function, which shows high accuracy and excellent convergence. In addition, in the later iterations of the F3 function, ASO successfully breaks through the local optimum, further improving the optimization effect. Overall, ASO demonstrates excellent performance in dealing with complex optimization problems, clearly outperforming other algorithms such as SO, PSO, ALO, MFO, SCA, and SS.

- Compared with ASO-SCFLANN, SCFLANN, TFLANN, and other methods, the proposed ASO-TFLANN nonlinear compensation method minimizes the maximum absolute error and the maximum full-scale error under different excitation frequencies. In particular, at an excitation frequency of 20 kHz the maximum absolute error is only 42.41 μm and the maximum full-scale error is only 0.27%. Compared with the sine–cosine function, the tangent function is more effective in optimizing the linearity of LVDT.

- Compared with the traditional neural network trained by the gradient descent method, the proposed ASO-TFLANN method has stronger global and local search ability and faster convergence speed, and it does not easily fall into a local optimum. It places fewer requirements on the objective function, which makes it suitable for a wide range of application scenarios such as discontinuous, nonlinear, and discrete problems, and it can effectively improve the linearity of LVDT.

- Through online tests, the ASO-TFLANN nonlinear compensation method is proven to be feasible and effective, which is of practical significance for improving the linearity of LVDT.

Author Contributions

Methodology, X.Z.; Validation, X.Z.; Writing—original draft, X.Z.; Writing—review & editing, Q.F., Z.W., L.X. and Q.Z.; Funding acquisition, Q.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key National Science and Technology Cooperation Project, Shanxi Province Science and Technology Cooperation Exchange Project (Grant No. 202304041101007), and the National Key Research and Development Program: Research on Integration Technology of Laser Interferometry Measurement Systems and Inertial Sensors (Grant No. 2024YFC2207100).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kammegne, M.T.; Botez, R.; Grigorie, L.; Mamou, M.; Mébarki, Y. A new hybrid control methodology for a morphing aircraft wing-tip actuation mechanism. Aeronaut. J. 2019, 123, 1757–1787.

- Kim, R.W.; Kim, C.M.; Hwang, K.H.; Kim, S.R. Embedded based real-time monitoring in the high-pressure resin transfer molding process for CFRP. Appl. Sci. 2019, 9, 1795.

- Nhung, N.T.C.; Nguyen, H.Q.; Huyen, D.T.; Nguyen, D.B.; Quang, M.T. Development and application of linear variable differential transformer (LVDT) sensors for the structural health monitoring of an urban railway bridge in Vietnam. Eng. Technol. Appl. Sci. Res. 2023, 13, 11622–11627.

- Pham, T.T.; Kim, D.; Woo, U.; Jeong, S.G.; Choi, H. Development of Non-Contact Measurement Techniques for Concrete Elements Using Light Detection and Ranging. Appl. Sci. 2023, 13, 13025.

- Ludeno, G.; Cavalagli, N.; Ubertini, F.; Soldovieri, F.; Catapano, I. On the combined use of ground penetrating radar and crack meter sensors for structural monitoring: Application to the historical Consoli Palace in Gubbio, Italy. Surv. Geophys. 2020, 41, 647–667.

- Sigrüner, M.; Hüsken, G.; Pirskawetz, S.; Herz, J.; Muscat, D.; Strübbe, N. Pull-out behavior of polymer fibers in concrete. J. Polym. Sci. 2023, 61, 2708–2720.

- McDonagh, M.D.; Laman, J.A.; McDevitt, T.E.; Reichard, K.M. Long gage length interferometric fiber optic sensor for structural damage detection. Nondestruct. Test. Eval. 1998, 14, 293–321.

- Petchmaneelumka, W.; Koodtalang, W.; Songsuwankit, K.; Riewruja, V. Linear range extension for LVDT using analog lookup table. In Proceedings of the 2018 International Conference on Engineering, Applied Sciences, and Technology (ICEAST), Phuket, Thailand, 4–7 July 2018; pp. 1–4.

- Tariq, H.; Takamori, A.; Vetrano, F.; Wang, C.; Bertolini, A.; Calamai, G.; DeSalvo, R.; Gennai, A.; Holloway, L.; Losurdo, G.; et al. The linear variable differential transformer (LVDT) position sensor for gravitational wave interferometer low-frequency controls. Nucl. Instrum. Methods Phys. Res. Sect. A Accel. Spectrometers Detect. Assoc. Equip. 2002, 489, 570–576.

- Kano, Y.; Hasebe, S.; Miyaji, H. New linear variable differential transformer with square coils. IEEE Trans. Magn. 1990, 26, 2020–2022.

- Veeraian, P.; Gandhi, U.; Mangalanathan, U. Fractional order linear variable differential transformer: Design and analysis. AEU-Int. J. Electron. Commun. 2017, 79, 141–150.

- Prommee, P.; Angkeaw, K.; Karawanich, K. Low-cost linearity range enhancement for linear variable differential transformer. IEEE Sens. J. 2022, 22, 3316–3325.

- Ram, H.; Sreekantan, A.C.; George, B. Improved Digitizing Scheme for LVDT: Design and Evaluation. IEEE Sens. Lett. 2023, 7, 1–4.

- Ganesan, H.; George, B.; Aniruddhan, S. Design and analysis of a relaxation oscillator-based interface circuit for LVDT. IEEE Trans. Instrum. Meas. 2018, 68, 1261–1270.

- Ganesan, H.; George, B.; Aniruddhan, S.; Haneefa, S. A dual slope LVDT-to-digital converter. IEEE Sens. J. 2018, 19, 868–876.

- Petchmaneelumka, W.; Koodtalang, W.; Riewruja, V. Simple technique for linear-range extension of linear variable differential transformer. IEEE Sens. J. 2019, 19, 5045–5052.

- Tian, G.; Zhao, Z.; Baines, R.; Zhang, N. Computational algorithms for linear variable differential transformers (LVDTs). IEEE Proc.-Sci. Meas. Technol. 1997, 144, 189–192.

- Wang, Z.; Duan, Z. The research of LVDT nonlinearity data compensation based on RBF neural network. In Proceedings of the 2008 7th World Congress on Intelligent Control and Automation, Chongqing, China, 25–27 June 2008; pp. 4591–4594.

- Mishra, S.K.; Panda, G.; Das, D.P.; Pattanaik, S.K.; Meher, M.R. A novel method of designing LVDT using artificial neural network. In Proceedings of the 2005 International Conference on Intelligent Sensing and Information Processing, Chennai, India, 4–7 January 2005; pp. 223–227.

- Mishra, S.K.; Panda, G.; Das, D.P. A novel method of extending the linearity range of linear variable differential transformer using artificial neural network. IEEE Trans. Instrum. Meas. 2009, 59, 947–953.

- Das, S.; Das, D.P.; Behera, S.K. Enhancing the linearity of LVDT by two-stage functional link artificial neural network with high accuracy and precision. In Proceedings of the 2013 IEEE 8th Conference on Industrial Electronics and Applications (ICIEA), Melbourne, VIC, Australia, 19–21 June 2013; pp. 1358–1363.

- Minghui, L.; Qiangling, G.; Wenkai, W.; Hongyu, H.; Chenpei, M.; Chang, C. Nonlinear correction of LVDT sensor based on ACO-BP neural network. J. Phys. Conf. Ser. 2020, 1678, 012084.

- Hashim, F.A.; Hussien, A.G. Snake Optimizer: A novel meta-heuristic optimization algorithm. Knowl.-Based Syst. 2022, 242, 108320.

- Li, G.; Liang, Z.; Yang, W. Fault location of distribution network based on improved binary snake optimization algorithm. Sci. Technol. Eng. 2024, 24, 7710–7718.

- Al-Shourbaji, I.; Kachare, P.H.; Alshathri, S.; Duraibi, S.; Elnaim, B.; Abd Elaziz, M. An efficient parallel reptile search algorithm and snake optimizer approach for feature selection. Mathematics 2022, 10, 2351.

- Li, Q.; Ma, Q.; Weng, X. Dynamic path planning for mobile robots based on artificial potential field enhanced improved multiobjective snake optimization (APF-IMOSO). J. Field Robot. 2024, 41, 1843–1863.

- Qiuxia, F.; Ke, Z.; Xu, L.; Kaile, C. Optimal design of linear quadratic regulator for active suspension based on snake algorithm. Sci. Technol. Eng. 2024, 24, 3852–3860.

- Patra, J.C.; Pal, R.N. A functional link artificial neural network for adaptive channel equalization. Signal Process. 1995, 43, 181–195.

- Patra, J.C.; Pal, R.N.; Baliarsingh, R.; Panda, G. Nonlinear channel equalization for QAM signal constellation using artificial neural networks. IEEE Trans. Syst. Man Cybern. Part B (Cybernetics) 1999, 29, 262–271.

- Webster, J.; Eren, H. The Measurement, Instrumentation, and Sensors: Handbook; IEEE Press: New York, NY, USA, 1999; pp. 5–6.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).