Abstract

Accurate air pollution monitoring is critical to understand and mitigate the impacts of air pollution on human health and ecosystems. Due to the limited number and geographical coverage of advanced, highly accurate sensors monitoring air pollutants, many low-cost and low-accuracy sensors have been deployed. Calibrating low-cost sensors is essential to fill the geographical gap in sensor coverage. We systematically examined how different machine learning (ML) models and open-source packages could help improve the accuracy of particulate matter (PM) 2.5 data collected by Purple Air sensors. Eleven ML models and five packages were examined. This systematic study found that both models and packages impacted accuracy, while the random training/testing split ratio (e.g., 80/20 vs. 70/30) had minimal impact (0.745% difference for R2). Long Short-Term Memory (LSTM) models trained in RStudio and TensorFlow excelled, with high R2 scores of 0.856 and 0.857 and low Root Mean Squared Errors (RMSEs) of 4.25 µg/m3 and 4.26 µg/m3, respectively. However, LSTM models may be too slow (1.5 h) or computation-intensive for applications with fast response requirements. Tree-based models, including XGBoost (R2 = 0.7612, RMSE = 5.377 µg/m3) in RStudio and Random Forest (RF) (R2 = 0.7632, RMSE = 5.366 µg/m3) in TensorFlow, offered good performance with shorter training times (<1 min) and may be suitable for such applications. These findings suggest that AI/ML models, particularly LSTM models, can effectively calibrate low-cost sensors to produce precise, localized air quality data. This research is among the most comprehensive studies on AI/ML for air pollutant calibration. We also discussed limitations, applicability to other sensors, and the explanations for good model performance. This research can be adapted to enhance air quality monitoring for public health risk assessments, support broader environmental health initiatives, and inform policy decisions.

Keywords:

sensors; air quality; calibration; particulate matter; AI/ML; model accuracy; environment; sensor calibration

1. Introduction

Climate change, urbanization, fossil fuel energy consumption, and other factors have exacerbated air pollution and related public health issues [1,2]. Effective Air Quality (AQ) monitoring is vital for safeguarding public health, especially in densely populated urban areas where pollution levels are often higher. Traditional high-cost, high-maintenance AQ monitoring systems, while accurate, are often limited in geographic coverage and flexibility, making comprehensive AQ monitoring and surveillance challenging. Recent advancements in sensor technology and manufacturing have seen a rise in the application of low-cost sensors (LCSs), which offer broader geographic coverage.

The advent of LCSs presents a transformative challenge and opportunity to enhance AQ monitoring. Purple Air (PA) sensors, one type of low-cost sensor, have gained prominence due to their affordability, ease of deployment, and timely readings [3]. However, the practical utilization of these sensors is significantly compromised by their low accuracy and reliability under different environmental and manmade conditions [3], and they often require calibration to match the accuracy of traditional systems [4].

PA sensors operate using an optical sensing principle, specifically, laser-based light scattering. Each sensor contains a low-cost laser particle counter that detects airborne particulate matter by illuminating these particles with a laser and measuring the intensity of the scattered light [5]. Specifically, PA sensors utilize the PMS*003 series laser particle sensor, which, unlike many commercial sensors, can measure particulate matter (PM) in three size ranges: PM1.0 (particles with a diameter of less than 1.0 μm), PM2.5 (less than 2.5 μm) and PM10 (less than 10 μm). Their detection range is 0.3–10 µm, with a resolution of 0.3 µm. The performance can also be affected by high humidity, and they record environmental variables such as temperature, humidity, and pressure for further processing. These sensors also include dual laser systems, allowing for cross-validation and improved data quality.

For this study, we focused on PM2.5, particulate matter with a diameter of less than 2.5 μm, which can penetrate deep into the respiratory tract and enter the bloodstream, causing health risks, including respiratory, cardiovascular, and neurological diseases [6]. However, the AI/ML approach will not be applicable in instances where particle sizes are smaller than 300 nm, as this is a physical limitation of existing sensor technologies. Monitoring PM2.5 is necessary for assessing exposure and implementing strategies to mitigate public health impacts. Large amounts of PA data are available, and previous studies have proved the potential of machine learning (ML) approaches to improve accuracy [7]. Calibration is one of the first investigated methods that has been using AI/ML to correct inherent sensor biases and ensure the comparability of data across different sensors and environments. However, the challenge with PM2.5 calibration lies in its sensitivity to ambient environmental changes, such as relative humidity and temperature, which can negatively impact sensor performance and accuracy [8]. Although previous studies (such as [4,9]) have explored ML models for sensor calibration, none has yet provided a comprehensive comparison across as many models and environment variables as this study proposes, nor covered entire sensor networks over a large geographic area.

In this study, fine-tuning refers to the process of making adjustments to ensure correct readings from devices, while calibration is defined as the preliminary step of establishing the relationship between a measured value and a device’s indicated value. Calibration is a preliminary step before tuning. Although we focused on fine-tuning to achieve accurate PM2.5 measurements, we refer to this process as calibration, in alignment with the existing literature.

This study focused on the Los Angeles region, selected due to its high density of PA sensors compared to other regions. This area encompasses a mixture of urban, industrial, and residential zones, providing a diverse range of air quality conditions for a more comprehensive evaluation of sensor performance across different environmental settings.

In general, this study sought to bridge the gap between the affordability of LCS and the precision required for scientific and regulatory purposes. Our objective was to systematically evaluate AI/ML models and software packages to identify the most effective model and software package for improving the accuracy of low-cost sensor (LCS) measurements. Each of the AI/ML models was tested to identify the optimal model and package. In total, 64 pairs of Purple Air (LCS) and EPA sensors were used in this study, with the validated EPA measurements as ground truth. Eleven regression models were systematically considered across four Python-based software packages: XGBoost, Scikit-learn, TensorFlow, and PyTorch, as well as a fifth R-based IDE, RStudio. The models in this study included Decision Tree Regressor (DTR), Random Forest (RF), K-Nearest Neighbor, XGBRegressor, Support Vector Regression (SVR), Simple Neural Network (SNN), Deep Neural Network (DNN), Long Short-Term Memory (LSTM) neural network, Recurrent Neural Network (RNN), Ordinary Least Square Regression (OLS), and Least Absolute Shrinkage and Selection Operator (Lasso) regression. The details are provided in the following five sections: Section 2 reviews existing calibration methods conducted using both traditional calibration techniques (field and laboratory methods) and recent advancements involving empirical and geophysical ML models. Section 3 introduces the study area, data, and pre-processing for the PA sensors. Section 4 reports the experimental results. Section 5 presents the results and Section 6 discusses the reasons for model performance differences, comparisons with existing studies, limitations, and future research directions.

2. Literature Review

2.1. AQ Calibration

AQ calibration has advanced in recent years to address inherent biases and uncertainties from electronics, installation, and configurations, serving as a crucial process to align readings with established reference standards and uphold data validity [10]. Several calibration methods stand out for their efficacy and application diversity. Field calibration, which involves the direct comparison of sensor data with reference-grade instruments in the environment, is used to ensure in situ sensor accuracy [11]. Additionally, laboratory calibration techniques, which subject sensors to controlled conditions and known concentrations of pollutants, allow for the meticulous adjustment of sensor responses before their deployment in the field [12,13]. Calibration techniques also vary by pollutants and sensors. Metal Oxide Semiconductor (MOS) sensors, used for detecting NO2 (nitrogen dioxide), O3 (ozone), SO2 (sulfur dioxide), CO (carbon monoxide), and CO2 (carbon dioxide), undergo calibration to correlate electrical conductivity changes with specific target gas concentrations, ensuring accurate readings [14]. Electrochemical (EC) sensors for CO, NO2, and SO2 monitoring undergo calibration via controlled oxidation–reduction reactions, linking measured currents to gas concentrations for accurate field readings [15]. Non-Dispersive Infrared (NDIR) sensors for CO2 measurement require calibration that accounts for spectral variations. This involves exposing the sensor to a range of CO2 concentrations and analyzing infrared light absorption patterns to ensure accurate CO2 detection [16]. Satellite sensors were introduced to extend AQ observations to regional and global scales and relevant calibration methods were developed for, for example, post-launch atmospheric effects calibration [17,18,19].

Calibrating AQ sensors is essential for maintaining data integrity, particularly when reconciling the lower precision of emerging LCSs with the established accuracy of reference-grade instruments. While field calibration directly aligns sensors with real-world conditions, laboratory techniques refine sensor accuracy under controlled parameters. Despite these advances, the challenge remains to develop calibration methodologies that can navigate the complex interplay of sensor responses with dynamic environmental factors—a focus area that warrants a systematic investigation to enhance AQ monitoring frameworks.

2.2. Calibration of LCSs for PM Measurement

LCSs are revolutionizing AQ monitoring by making it more accessible and participatory [20], especially for under-served communities, to collect vital PM data [21]. This grassroots approach offers a richer, more localized view of AQ than is possible with sparser, traditional monitoring networks [22]. However, the accuracy of LCSs is low due to various factors, including environmental influences [23,24] and inherent limitations of the sensors themselves [25].

Most LCSs use light scattering to count particle numbers [26], which is sensitive to fluctuations in temperature, pressure, and humidity [27,28]. Although many LCSs come with built-in mechanisms to track environmental factors and are encased in protective shells to lessen weather impacts, data accuracy is still significantly compromised under extreme weather conditions or values [29,30]. Moreover, different LCS types and their corresponding data interpretation models introduce biases related to the sensor’s location, varying humidity levels, and the hygroscopic growth of aerosol particles [30]. For example, at relative humidity (RH) levels below 100%, hygroscopic PM2.5 particles, such as sodium chloride (NaCl), can absorb moisture from the air, leading to an increase in particle size and a change in their optical properties. These alterations can significantly affect the light scattering process, which is central to the operation of LCSs [31,32].

These investigations emphasize rigorous calibration and correction methods to counteract the influence of environmental and other factors, thereby enhancing data reliability in diverse environmental conditions [13,27,33]. While field calibration is essential for aligning LCS readings with standard measurements—requiring placement alongside reference monitors for measurement refinement [20,32]—the development and application of robust and well-performing calibration models are paramount. Such models, when implemented across the sensor network, significantly enhance data consistency and reliability, thereby augmenting the overall efficacy of AQ monitoring efforts.

2.3. Models to Calibrate LCSs

There are two types of models for calibrating LCS data to enhance accuracy in AQ monitoring—physics-based models and empirical models. The physics-based model employs fundamental physical principles, such as the κ-Köhler theory and Mie theory, to accurately correlate the sensor’s light scattering measurements [34]. For example, ref. [35] applied κ-Köhler and Mie theories to a low-cost PM sensor, significantly enhancing the accuracy, with coefficient of determination (R2) values of up to 0.91 and lower Root Mean Square Error (RMSE) and Mean Absolute Error (MAE) values. Ref. [36] showcased a physics-based calibration approach for PA sensors, which, when aligned with Beta Attenuation Monitor (BAM) standards, exhibited high consistency, with correlations above 0.9, alongside an MAE of 3–4 µg/m3.

Empirical models leverage the availability of large amounts of observed data to establish a statistical relationship between sensor readings and reference measurements, often incorporating environmental variables to enhance the accuracy and reliability of LCSs [20,37]. The empirical calibration models commonly assume a correlation between the data from LCSs and high-quality reference-grade measurements. For example, ref. [25] reported a linear calibration model for PM2.5, evidencing an enhanced fit with an R2 of 0.86 under dry conditions and 0.75 under humid conditions compared to reference measurements. Refs. [10,38,39] emphasized the importance of including environmental variables, notably relative humidity, which affects particle count and sensor outputs. To address these challenges, non-linear and ML models have been utilized for better alignment with high-quality reference instruments [40,41].

Given the critical role of environmental variables, it is important to consider the strong correlation between RH and temperature, which can significantly influence the development of calibration models by introducing multicollinearity, potentially leading to biased predictions [24]. In linear models, for instance, collinearity can distort regression coefficients, making it difficult to assess the true impact of each variable [41,42]. Modern machine learning (ML) models, such as random forests, address this by incorporating the interrelationships between these variables into their algorithms, allowing them to account for correlations when determining variable importance [42,43].

The current literature presents a wealth of individual cases examining the calibration of LCSs with quite limited settings, e.g., limited numbers of sensors and input values. However, no collective, comparative, and systematic study has yet encompassed diverse calibration models and software packages. This gap is critical because PM2.5 measurements from LCSs can vary significantly due to inherent differences in sensing technology, geographical regions, and environmental conditions. Without a systematic approach considering these factors, calibrations may not effectively address these variations, leading to inaccurate data and hindering our ability to fully understand AQ variations. Therefore, our objective was to conduct an in-depth systematic study of AI/ML models and packages to identify the most accurate combinations and improve the accuracy of LCS measurements using AI/ML approaches. We utilized five popular software packages and 11 ML models, mentioned at the end of Section 1, to conduct an in-depth, systematic analysis for a more detailed and nuanced understanding of LCS behaviors and their alignment with standard observations.

3. Data and Methodology

3.1. Training Data Preparation

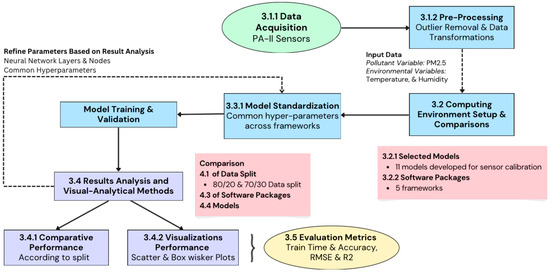

Figure 1 illustrates a detailed workflow of our study, starting from data acquisition and pre-processing, through model standardization and training, to results analysis and visualization. After step 3.4, where metrics were produced by the different software packages across computing environments, visualizations were produced using RStudio’s ggplot2 library. These visualizations enabled a thorough comparative performance analysis according to different splits, software packages, and models.

Figure 1.

The research workflow comprises five major steps: data processing, computing, model setup, experiments, and analyses. Each step is detailed in the subsections.

3.1.1. Data Acquisition

We downloaded the PA-II sensor data (PMS-5003) and relevant pressure, temperature, and humidity data (AQMD, 2016). We also utilized data from the U.S. Environmental Protection Agency (EPA) as a benchmark to ensure the accuracy and reliability of our study [44]. EPA sensors utilize Federal Reference Methods (FRMs) and Federal Equivalent Methods (FEMs) [45] to ensure accurate and reliable measurements of PM. These methods involve stringent quality assurance and control (QA/QC) protocols, gravimetric analysis, regular calibration, and strict adherence to regulatory standards. The EPA’s monitoring stations continuously collect data that undergo rigorous validation before being used to determine compliance with National Ambient Air Quality Standards (NAAQS) [45]. Given these processes, EPA data are often considered the gold standard, making them an essential reference for comparing non-regulated sensors.

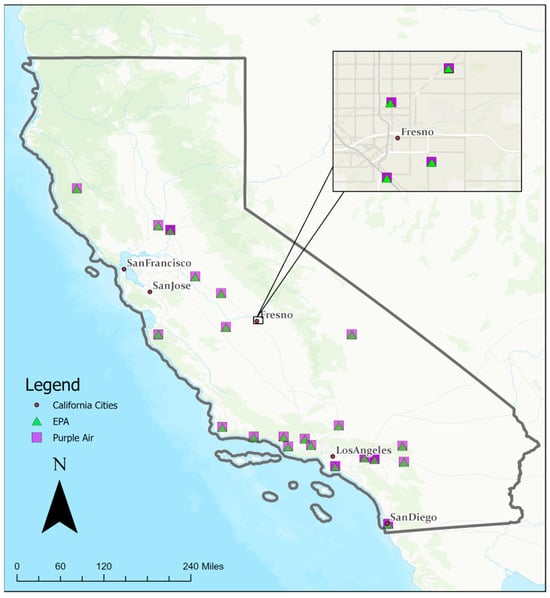

Data were kept on two database tables: a sensor table and a reading table. The sensor table held metadata such as hardware component information, unique sensor ID, geographic location, and indoor/outdoor placement. The reading table stored continuous time series data for each sensor, with sensor IDs as primary keys to link the records in both tables. The date attribute was set in UTC for all measurements including pollutant and environmental variables. The table included two types of PM variables: ATM (where Calibration Factor = Atmosphere) and CF_1 (where Calibration Factor = 1) for three target pollutants: PM1.0, PM2.5, and PM10. CF_1 used the “average particle density” for indoor PM and ATM used the “average particle density” for outdoor PM. The PA sensors utilized in this study were outdoor sensors. We identified 64 sensor pairs across California for a total of 876,831 data entries from 10 July 2017 to 1 September 2022 (Figure 2). These 64 sensor pairs consisted of 64 unique PA sensors and 25 unique EPA sensors; these sensors were paired based on their proximity to one another. These sensor pairs were mostly within 10 m of one another, and the furthest distance was below 100 m.
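The proximity-based pairing described above can be illustrated with a great-circle distance check. The sketch below is only an assumption about how such pairing might be done: the function names, the nearest-neighbor rule, and the coordinates are hypothetical, and the 100 m cutoff simply mirrors the maximum separation reported in the text.

```python
import math

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    r = 6371000.0  # mean Earth radius in metres
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def pair_sensors(pa_sensors, epa_sensors, max_dist_m=100.0):
    """Pair each PA sensor with its nearest EPA sensor if it lies within max_dist_m."""
    pairs = []
    for pa_id, (pa_lat, pa_lon) in pa_sensors.items():
        nearest_id, nearest_d = None, float("inf")
        for epa_id, (e_lat, e_lon) in epa_sensors.items():
            d = haversine_m(pa_lat, pa_lon, e_lat, e_lon)
            if d < nearest_d:
                nearest_id, nearest_d = epa_id, d
        if nearest_id is not None and nearest_d <= max_dist_m:
            pairs.append((pa_id, nearest_id, nearest_d))
    return pairs

# Made-up coordinates for illustration only (not the study's sensors).
pa = {"pa_1": (34.0500, -118.2500), "pa_2": (34.2000, -118.4000)}
epa = {"epa_1": (34.0500, -118.2501)}
pairs = pair_sensors(pa, epa)
for pa_id, epa_id, dist in pairs:
    print(f"{pa_id} <-> {epa_id}: {dist:.1f} m")
```

Because several PA sensors may share one reference monitor, the sketch lets an EPA sensor appear in more than one pair, which is consistent with 64 PA sensors being matched to 25 EPA sensors.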

Figure 2.

Map of collocated California sensor pairs.

3.1.2. Data Pre-Processing

Data pre-processing first applied a threshold of >0.7 on the Pearson correlation coefficient between “epa_pm25” and “pm25_cf_1” to ensure a strong linear relationship. Next, data were aggregated from a two-minute temporal resolution into an hourly resolution and adjusted for local time zones. Readings indicating sensor malfunction, such as values exceeding 500, were removed, as were data records with missing information in either the “pm25_cf_1_a” or “pm25_cf_1_b” column. Additionally, readings with a zero 5 h moving standard deviation in either channel were removed, as this indicated potential sensor issues. Finally, we applied a dual-channel agreement criterion grouped by year and month. The data were then reduced to the following columns: “datetime”, “pm25_cf_1”, “humidity”, and “temperature”, which composed the training data.
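A minimal sketch of three of these filters (hourly aggregation, the malfunction threshold, and the zero moving-standard-deviation check) is given below. It is not the authors' pipeline: the function names and the tuple layout are hypothetical, the 5 h window is assumed to be a trailing window over consecutive retained rows, and the Pearson and dual-channel agreement steps are omitted because the text does not specify them in enough detail.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import mean, pstdev

def hourly_means(readings):
    """Average two-minute readings into hourly values for channels A and B.

    `readings` is a list of (datetime, pm25_cf_1_a, pm25_cf_1_b); None marks a gap.
    """
    buckets = defaultdict(lambda: ([], []))
    for ts, a, b in readings:
        hour = ts.replace(minute=0, second=0, microsecond=0)
        if a is not None:
            buckets[hour][0].append(a)
        if b is not None:
            buckets[hour][1].append(b)
    rows = []
    for hour in sorted(buckets):
        a_vals, b_vals = buckets[hour]
        rows.append((hour, mean(a_vals) if a_vals else None, mean(b_vals) if b_vals else None))
    return rows

def clean_hourly(rows, max_pm=500.0, window=5):
    """Drop rows with a missing channel, values above max_pm, or a flat trailing window."""
    complete = [r for r in rows if r[1] is not None and r[2] is not None]
    valid = [r for r in complete if r[1] <= max_pm and r[2] <= max_pm]
    kept = []
    for i, row in enumerate(valid):
        if i + 1 >= window:
            recent = valid[i + 1 - window : i + 1]
            # A channel that has not changed over the window is treated as stuck.
            if pstdev([r[1] for r in recent]) == 0 or pstdev([r[2] for r in recent]) == 0:
                continue
        kept.append(row)
    return kept

# Made-up readings: two samples per hour for eight hours; hour 3 mimics a malfunction.
start = datetime(2020, 1, 1)
readings = []
for h in range(8):
    for minute in (0, 2):
        a = 600.0 if h == 3 else 10.0 + h
        readings.append((start + timedelta(hours=h, minutes=minute), a, 11.0 + h))

hourly = hourly_means(readings)
cleaned = clean_hourly(hourly)
print(len(hourly), "hourly rows,", len(cleaned), "after cleaning")
```

The sketch windows over row positions rather than timestamps, so a real implementation would also need to decide how gaps in the hourly series affect the moving standard deviation.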

For the LSTM and RNN models, we created sequences using the previous 23 h of data for each sensor. For all models, we then split the data into training and testing sets in a random fashion and scaled them using a standard scaler.
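The sequence construction, random split, and standard scaling can be sketched as follows. Several details here are assumptions rather than statements from the study: each window is taken to hold the 23 hourly rows that precede the target hour, the scaler is fitted on training data only, and all function names and values are hypothetical.

```python
import random
from statistics import mean, pstdev

def make_sequences(features, targets, lookback=23):
    """Pair each target hour with the `lookback` feature rows that precede it."""
    X, y = [], []
    for t in range(lookback, len(features)):
        X.append(features[t - lookback : t])
        y.append(targets[t])
    return X, y

def random_split(n_samples, test_fraction=0.2, seed=0):
    """Return shuffled (train, test) index lists, e.g. 80/20 for test_fraction=0.2."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    n_test = round(n_samples * test_fraction)
    return idx[n_test:], idx[:n_test]

def standard_scale(train_values, values):
    """Scale `values` with the mean and standard deviation of `train_values`."""
    mu, sigma = mean(train_values), pstdev(train_values)
    sigma = sigma if sigma > 0 else 1.0  # guard against constant columns
    return [(v - mu) / sigma for v in values]

# Made-up hourly rows for one sensor: (pm25_cf_1, humidity, temperature).
features = [(10.0 + h % 7, 40.0 + h % 5, 60.0 + h % 3) for h in range(48)]
targets = [8.0 + h % 7 for h in range(48)]  # made-up reference PM2.5 values

X, y = make_sequences(features, targets)
train_idx, test_idx = random_split(len(X), test_fraction=0.2)

# Scale the most recent PM2.5 reading of each window using training statistics only.
pm_train = [X[i][-1][0] for i in train_idx]
pm_test = [X[i][-1][0] for i in test_idx]
pm_train_scaled = standard_scale(pm_train, pm_train)
pm_test_scaled = standard_scale(pm_train, pm_test)
```

Fitting the scaler on the training portion and reusing its statistics for the test portion avoids leaking test information into training; whether the study did this is not stated.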

3.2. Computing Environmental Setups and Comparisons

For this study, we tested 11 ML models across 5 different packages: Scikit-Learn (1.3.2), XGBoost (2.0.2), PyTorch (1.13.1), TensorFlow (2.13), and RStudio (2023.09.1 running R 4.3.2). These packages were chosen for their respective strengths and popularity in the academic community. We utilized the same training data and models across each package, all on a consistent machine configuration featuring Microsoft Windows 11 Enterprise OS, a 13th gen Intel(R) Core(TM) i7-13700 at 2100 MHz, 16 cores, 24 logical processors, and 32 GB of RAM. After a thorough analysis and literature review of models supported by each package, we selected 11 AI/ML models suitable for the calibration task (Table 1).

Table 1.

Models supported by each package.

3.2.1. Selected Models

The 11 regression and ML models (detailed in Appendix A as DTR, RF, kNN, XGBRegressor, SVR, SNN, DNN, LSTM, RNN, OLS, and Lasso) that supported calibration required two types of variables, independent and target, represented by X and Y, respectively, with the goal of fitting a function such that y = f(x_n) + ε, where ε is the error term and x_n encapsulates more than one independent variable (e.g., temperature and relative humidity). The strong correlation between temperature and relative humidity could introduce multicollinearity into the model, which could complicate the estimation of the individual effects of these variables on the target outcome. In traditional regression models, this multicollinearity can inflate the variance of the coefficient estimates, potentially resulting in less reliable predictions. However, the ML models considered here were designed to handle such correlations more robustly, either through regularization techniques (e.g., Lasso regression) or by leveraging the complex interrelationships among the variables (e.g., RF and XGBoost), thus minimizing the adverse effects of multicollinearity on the calibration process. We applied regression algorithms to develop such functions, mapping the input variables (measurements) from in situ PA sensors to values aligned with the EPA readings.

3.3. Software Packages

The five packages included XGBoost, Scikit-Learn, TensorFlow, PyTorch, and RStudio, as detailed in Appendix B.

Each of the five packages offers unique strengths and limitations, and each available model in the five packages was used to identify the best-suited model and package for PM2.5 calibration. For our systematic study, the packages, models, and training data were tested to obtain comprehensive analyses. The training process was repeated 10 times for each experiment, and we calculated an average value for the performance metrics (R2 and RMSE).

Note: How PyTorch and TensorFlow implemented the OLS Model:

PyTorch and TensorFlow employed an SNN to define the OLS regression model. Neural networks process simple sequences of feed-forward layers [46]. However, these two packages differ in how they define the model and add layers. TensorFlow utilizes a sequential API for model definition, while PyTorch uses a class-based approach [47]. Moreover, TensorFlow uses a static computation graph, while PyTorch uses a dynamic computation graph. In these graphs, nodes represent the neural network layers, while edges carry the data as tensors [48]. The performance gap between the two packages may stem from differences in these computation graph implementations.
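To illustrate why an OLS model defined as a neural network can yield different results from a closed-form solver, the sketch below fits the same line in two ways: analytically, and as a single linear neuron trained by gradient descent on the mean squared error. It uses plain Python rather than PyTorch or TensorFlow, the data are made up, and the learning rate and epoch counts are arbitrary assumptions; it is not a reproduction of either package's behavior.

```python
def ols_closed_form(x, y):
    """Least-squares slope and intercept from the analytical solution."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    w = sxy / sxx
    return w, my - w * mx

def ols_gradient_descent(x, y, lr=0.05, epochs=2000):
    """Fit y = w*x + b as one linear neuron trained on mean squared error."""
    n = len(x)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        err = [w * xi + b - yi for xi, yi in zip(x, y)]
        grad_w = 2 * sum(e * xi for e, xi in zip(err, x)) / n
        grad_b = 2 * sum(err) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Made-up data lying roughly on y = 2x + 1.
x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [1.1, 2.9, 5.2, 7.1, 8.8]

w_cf, b_cf = ols_closed_form(x, y)
w_long, b_long = ols_gradient_descent(x, y, epochs=2000)
w_short, b_short = ols_gradient_descent(x, y, epochs=5)

print(f"closed form:      w={w_cf:.4f}, b={b_cf:.4f}")
print(f"2000 GD epochs:   w={w_long:.4f}, b={b_long:.4f}")
print(f"5 GD epochs:      w={w_short:.4f}, b={b_short:.4f}")
```

With enough iterations the neuron converges to the analytical coefficients, whereas a short or poorly tuned run stops elsewhere. Optimizer settings, initialization, and stopping criteria are therefore plausible contributors to package-level differences for OLS, alongside the graph implementations mentioned above.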

Model Configuration Standardization Across Packages

For comparability across packages and models, we standardized the model configuration and hyperparameters for each model. For neural network architectures, we standardized the number and type of layers and the number of nodes of each layer across packages compatible with each model. For several models, such as XGBoost and DTRs, it was not possible to completely standardize models across packages because each software and associated package utilized different hyperparameters. In these cases, we used default hyperparameters unique to each package and ensured that common hyperparameter values across packages were the same. Each model can have dozens of hyperparameters, so we only include those that were common across packages (Table 2).

Table 2.

Standardized hyperparameter settings for each package.

The model configuration standardization used the hyperparameters displayed in Table 2 and included the following four aspects:

- Model Configuration: Each model was configured using the default hyperparameter settings provided in their documentation to ensure that they were consistent across all packages. In cases where there were no default hyperparameter settings listed, we set hyperparameters to be equal to the most frequently occurring hyperparameter for that setting. This approach ensured consistency across implementations while maintaining the integrity of each model’s intended configuration. For neural network models, the number and type of layers, the number of nodes per layer, activation functions, and optimization methods were standardized. For tree-based models and regressions, parameters like tree depth, learning rates, and regularization terms were kept consistent.

- Data Preparation: Data input into each model were prepared using a standard preprocessing pipeline. This involved scaling features, handling missing data, and transforming temporal data into sequences for time series models like LSTM models.

- Training and Test Splits: The data were split into training and testing sets, using both 80/20 and 70/30 splits to ensure consistency across all experiments.

- Computation Environment: All models were trained on a consistent hardware setup to eliminate variations in computing resources.

3.4. Results and Visual–Analytical Methods

3.4.1. Comparative Performance Across Models and Packages

We evaluated each model and package based on two key criteria: time to train and accuracy (RMSE and R2). Averaging the performance metrics (R2 and RMSE) from 10 runs of each model provided insight into which packages delivered higher accuracy and reliability. This allowed us to consider both the ability of a particular configuration to accurately calibrate LCS and their suitability for various applications. We identified which models offered the best trade-off between training time and predictive accuracy.
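The evaluation protocol (repeat each experiment 10 times, then average accuracy and training time) can be sketched as a small harness. The `benchmark` function and the `fake_model` stand-in are hypothetical; a real callable would train one model in one package and return its test-set R2 and RMSE.

```python
import time
from statistics import mean

def benchmark(train_and_score, n_runs=10):
    """Run a training callable n_runs times; return mean R2, RMSE, and seconds."""
    r2s, rmses, secs = [], [], []
    for run in range(n_runs):
        start = time.perf_counter()
        r2, rmse = train_and_score(seed=run)
        secs.append(time.perf_counter() - start)
        r2s.append(r2)
        rmses.append(rmse)
    return {"r2": mean(r2s), "rmse": mean(rmses), "train_seconds": mean(secs)}

def fake_model(seed):
    """Stand-in for a real training run; returns made-up (R2, RMSE) values."""
    return 0.75 + 0.001 * seed, 5.5 - 0.01 * seed

summary = benchmark(fake_model)
print(summary)
```

Recording wall-clock time alongside the averaged metrics makes it possible to compare configurations on both criteria at once.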

We also considered the influence of the package (e.g., RStudio) and model (e.g., LSTM) on results. These factors were highly intertwined, and the performance of a particular setup depended on both the package and ML model. As such, we took a two-pronged approach to analyze the results. First, we assessed the average performance of a model across all packages or a package across all models. Then, we assessed the effect that package choice had by comparing each model’s relative performance across all packages. By considering each model individually and comparing the difference in results when training in one package or another, we could better analyze the influence of package and model choice.

3.4.2. Visual–Analytical Methods

To succinctly convey our findings, we employed several visual–analytical methods using the “ggplot2” package in RStudio:

- Line and bar graphs were used to plot the performance metrics for the 70/30 and 80/20 splits across all models and packages, illustrating the differences and their consistency.

- A series of box and whisker plots were used to depict the range and distribution of performance scores within each package. This visualization highlighted the internal variability and helped identify packages that generally over- or underperformed.

- Model-specific performance was displayed using both box and whisker plots and point charts. The box plots provided a clear view of variability within each model category, while the point charts detailed how model performance correlated with package choice, effectively illustrating package compatibility and model robustness.

These visual analytics together with the model evaluations can help to refine the selection process for future modeling efforts and ensure that the most effective model/package is chosen for AQ calibration tasks.

3.5. Evaluation Metrics

This study utilized the evaluation metrics Root Mean Square Error (RMSE) and Coefficient of Determination (R2) to evaluate the fit of the PM2.5 calibration models against the EPA data used as a benchmark.

The RMSE was calculated using the formula

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

where $y_i$ represents the actual PM2.5 values from the EPA data, $\hat{y}_i$ denotes the predicted calibrated PM2.5 values from the model, and $n$ is the number of spatiotemporal data points. This metric measured the average magnitude of the errors between the model’s predictions and the actual benchmark EPA data. A lower RMSE value indicated a model with higher accuracy, reflecting a closer fit to the benchmark.

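The Coefficient of Determination, denoted as R2, was given by

$$R^2 = 1 - \frac{\mathrm{RSS}}{\mathrm{TSS}} = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$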

In this formula, RSS is the sum of the squares of residuals—the difference between the actual and predicted values—and TSS is the total sum of the squares—the difference between the actual values and their mean value. R2 represents the proportion of variance in the observed EPA PM2.5 levels that was predictable from the models. An R2 value close to 1 suggested that the model had a high degree of explanatory power, aligning well with the variability observed in the EPA dataset.
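Both metrics follow directly from the definitions given in the text. The sketch below computes them in plain Python; the function names and the four sample values are illustrative only.

```python
import math

def rmse(y_true, y_pred):
    """Root mean square error between reference and predicted values."""
    n = len(y_true)
    return math.sqrt(sum((yt - yp) ** 2 for yt, yp in zip(y_true, y_pred)) / n)

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - RSS / TSS."""
    y_bar = sum(y_true) / len(y_true)
    rss = sum((yt - yp) ** 2 for yt, yp in zip(y_true, y_pred))
    tss = sum((yt - y_bar) ** 2 for yt in y_true)
    return 1.0 - rss / tss

# Made-up reference (EPA) and calibrated values in µg/m3.
epa = [10.0, 12.0, 14.0, 16.0]
calibrated = [11.0, 12.0, 13.0, 17.0]

print(f"RMSE = {rmse(epa, calibrated):.3f} µg/m3")  # sqrt(3/4) ≈ 0.866
print(f"R2   = {r_squared(epa, calibrated):.2f}")   # 1 - 3/20 = 0.85
```

Here RSS = 3 and TSS = 20, so R2 = 0.85, while the RMSE of about 0.87 µg/m3 expresses the same errors in the units of the measurement.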

For a comprehensive understanding of the model’s performance, the RMSE and R2 were obtained. The RMSE provided a direct measure of prediction accuracy, while the R2 offered insight into how well the model captured the overall variance in the EPA dataset. Together, these metrics were crucial for validating the effectiveness of the calibrated PM2.5 models in replicating the benchmark data. The RMSE was more resistant to systematic adjustment errors than R2 and, as such, was used as the primary metric.

Furthermore, we investigated the training time for different models to identify “sweet spots”—models that were exceptionally accurate compared to their training time. This analysis is crucial for optimizing model selection in practical scenarios where both time and accuracy are critical constraints.

4. Experiments and Results

To obtain a comprehensive result, we implemented a series of experiments to compare the impacts of training/testing data splits, packages, and ML models on accuracy and computing time.

4.1. Training and Testing Data Splits

The popular training data splits of 80/20 and 70/30 were examined. The choice of an 80/20 vs. a 70/30 split was found to have minimal impact across models and packages where splits were random, while there was a 2.2% difference in R2 performance and a 3% difference in RMSE performance in the 80/20 vs. the 70/30 LSTM model where splits were sequential. The mean difference between the two splits in RMSE across all models and packages was 0.051 µg/m3 and the mean difference in R2 was 0.00381; there was a mean percent difference of 1.55% for RMSE and a mean percent difference of 0.745% for R2 across all packages and models (Figure 3).

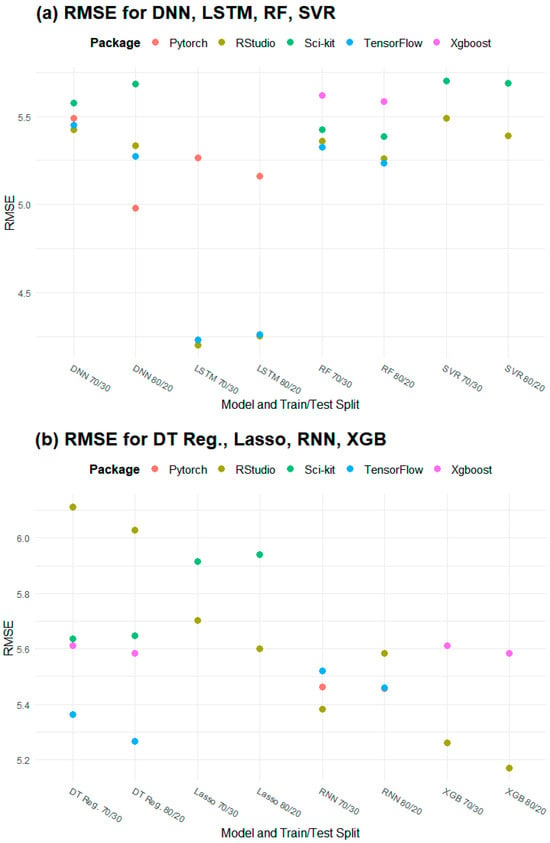

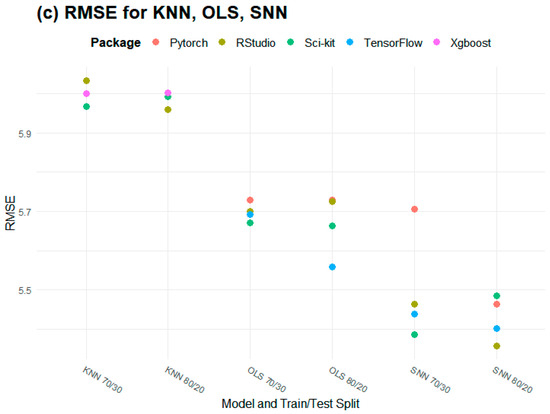

Figure 3.

RMSE differences according to training/testing data split (in µg/m3): (a) DNN, LSTM, RF, SVR; (b) DT Reg, Lasso, RNN, XGB; (c) RNN, OLS, SNN.

The largest difference between the 70/30 and 80/20 splits in terms of RMSE was for DNNs in PyTorch, with an absolute difference of 0.51 µg/m3; the largest difference in terms of R2 was 0.020 for SNN in PyTorch. This translated to percent differences of 9.75% and 2.83%, respectively. Of the 35 model/package combinations tested, 29 had a difference below 2% for RMSE and 33 had a percent difference below 2% for R2 (Figure 3).

While the differences between splits were minimal (Figure 3), the 80/20 split (mean R2 = 0.750, mean RMSE = 5.46 µg/m3) slightly outperformed the 70/30 split (mean R2 = 0.746, mean RMSE = 5.51 µg/m3) on average. Therefore, we used the 80/20 split to compare the packages and models.
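The distinction between random and sequential splits discussed above can be sketched as follows. This is a minimal illustration under stated assumptions: the helper names, the seed, and the stand-in data are hypothetical and do not reproduce the study's preprocessing.

```python
import random

def random_split(records, train_fraction=0.8, seed=42):
    """Shuffle a copy of the records, then cut at the requested fraction."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

def sequential_split(records, train_fraction=0.8):
    """Keep time order: the earliest records train, the latest records test."""
    cut = round(len(records) * train_fraction)
    return list(records[:cut]), list(records[cut:])

# Stand-in for 100 time-ordered hourly records (hypothetical data).
hourly = list(range(100))

train_r, test_r = random_split(hourly, 0.8)      # 80/20, random
train_s, test_s = sequential_split(hourly, 0.7)  # 70/30, sequential
```

A sequential split keeps the test period strictly after the training period, which is the natural choice for sequence models such as LSTM; a random split mixes time steps across both sets.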

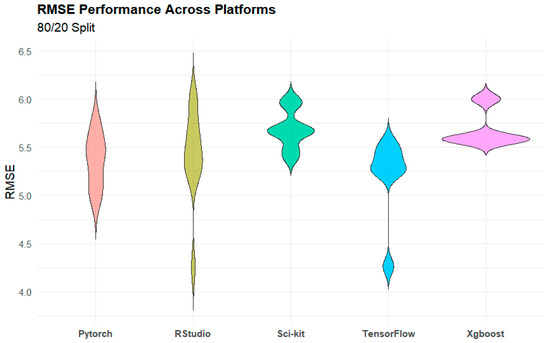

4.2. Software Package Comparison

When considering the performance of all models (Figure 4), TensorFlow (mean R2 = 0.773) and RStudio (mean R2 = 0.756) outperformed the other packages, none of which had an average R2 above 0.736. This success was driven in part by the strong performance of LSTM in these packages. Apart from RNN, the top-performing packages across models in terms of maximum R2 were RStudio and TensorFlow. PyTorch produced the best model for RNN (R2 = 0.7658), slightly edging out TensorFlow (R2 = 0.7657). Conversely, XGBoost and Scikit-Learn did not produce the best results for any of the models tested. TensorFlow emerged as a package particularly well-suited to the calibration task because every model that TensorFlow supported produced either the best or second-best R2 (Figure 3).

Figure 4.

Performance across packages for RMSE (unit µg/m3).

However, where a model was compatible with several packages, the difference in performance between the best and second-best packages was negligible. Considering only models that were compatible with three or more packages, the average percent difference in R2 between the top-performing and worst-performing packages was 6.09%, whereas the average difference between the top-performing and second-best packages was only 0.96%.

This suggests that while packages did have a significant effect on performance, for each model, multiple potential packages could produce effective results.

Because not every model was available for every package, comparing overall performance did not fully capture the variation between packages. By considering the relative performance of individual models between packages, we could better elucidate which model and package combination was best suited to the calibration task. While many of the models were consistent across packages, there were some notable outliers. OLS regression displayed the largest difference in performance across packages in terms of R2, with a percentage difference of 10.06% between the best-performing package (TensorFlow) and the worst-performing package (PyTorch). This difference could be attributed to the different methods that these packages used to calculate linear regression, as discussed in Section 3.2. DTR, LSTM, and SNN all saw an absolute percentage difference of 9% to 10% for R2 between the best-performing and worst-performing packages. Other models had an absolute percentage difference between 1% and 5% across packages (Table 3).

Table 3.

Package performance by model (R2). Best model in bold.

The effect of package choice was even more pronounced when considering the RMSE. For example, the absolute percent difference in RMSE between LSTM trained in the worst-performing package, PyTorch, and in the best-performing package, TensorFlow, was 19.3% (Table 4). In certain cases, like LSTM, the selection of packages could have a significant effect on performance, even when the same model was selected. The best-performing model is highlighted in bold in Table 4.

Table 4.

Package performance by model (RMSE). Best model in bold.

While LSTM produced the best results in all packages that supported the model, it was significantly more accurate when trained in RStudio and TensorFlow than in PyTorch (Table 3 and Table 4). It is unsurprising that RStudio and TensorFlow exhibited notably similar performances because LSTM in RStudio was powered by TensorFlow.

The time and performance differences between PyTorch and TensorFlow may have been the result of the different ways that the two packages implemented the models. TensorFlow incorporates parameters within the model compilation process through Keras. In contrast, in PyTorch parameters are instantiated as variables and incorporated into custom training loops, as opposed to the more streamlined .fit() method utilized in TensorFlow [49]. Furthermore, PyTorch employs a dynamic computation graph for the seamless tracking of operations, while the TensorFlow static computational graph requires explicit directives [47]. PyTorch leverages an automatic differentiation engine to compute derivatives and gradients of computations. Moreover, PyTorch’s DataLoader class offers a way to load and preprocess data, thus reducing the time required for data loading.

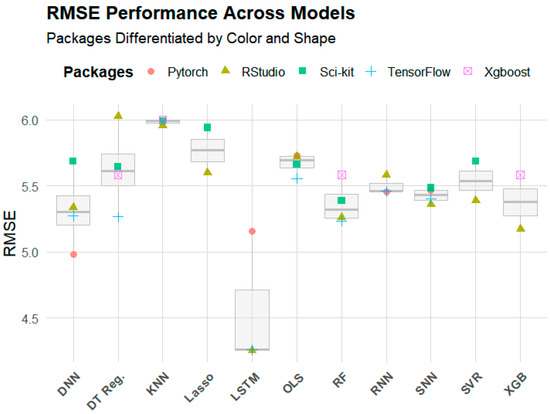

4.3. Model Comparison

Each model’s performance was determined by the model itself and the supporting package. However, the model chosen had a greater overall effect on accuracy than which package was selected. Certain models generally outperformed or underperformed regardless of package.

The top-performing model by average R2 and RMSE across software packages was LSTM, which outperformed all other models by a large margin (R2 = 0.832, RMSE = 4.55 µg/m3) (Table 3 and Table 4). LSTM and RNN are types of neural networks specifically designed for time series modeling; they incorporate past data to support predictions of future sensor values [50].

Compared to LSTM, all other models significantly underperformed. Variation in performance among the remaining models was relatively minor (Figure 5). In fact, the gap in mean R2 (0.07) between LSTM and the second-best model, RF, was larger than the gap between RF and the worst-performing model, KNN (0.06) (Table 3). The same pattern held true for the RMSE. The gap between LSTM and the second-best model in terms of mean RMSE, DNN, was 0.76 µg/m3, whereas the difference between DNN and KNN was 0.66 µg/m3.

Figure 5.

RMSE values (µg/m3) across models and packages.

Table 5 and Table 6 summarize the percentage difference in R2 and RMSE between models when considering the best-performing packages for each model. The percentage difference between each model’s best performer compared to the median performer, SNN, and the worst performer, KNN, is included. While LSTM outperformed the median by 11.48% in terms of R2, none of the other models was more than 7% different from the median. In fact, 8 of the 11 models had an R2 within 3% of the median performance. When comparing models to the worst performer, the same trend was evident. While LSTM had an R2 18.46% higher than the worst performer, no other model outperformed the minimum by more than 9.1%. The same pattern held true for the RMSE, although there was slightly more variance between the models. LSTM again was by far the best performer, with a 23% lower RMSE value than the median model (DNN by this metric). All other models were within 10.6% of the median, and 8 of the 11 models were within 5% of the median value (Table 6).

Table 5.

Deviation from median across models (R2).

Table 6.

Deviation from median across models (RMSE, µg/m3).

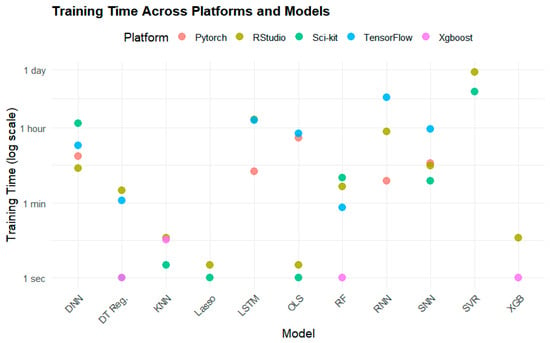

While these models displayed a relatively minor difference in performance in terms of R2 and RMSE, their training time was vastly different. Figure 6 demonstrates the elapsed training time across these models. For example, XGBoost took only 5 s to train on average, while SVR took 13 h, 45 min, and 17 s to train (Table 7).

Figure 6.

Time to train by model and package in the 80/20 split. Note that the y-axis specifies time non-linearly.

Table 7.

Time to train (hh:mm:ss) 80/20 split.

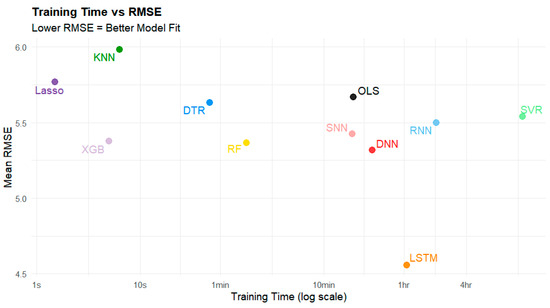

While LSTM produced a high R2 value, it took significantly longer to train than most other models (Figure 6, Table 7, where NA means not applicable). The fastest models to train were DTR, XGBoost, RF, KNN, and Lasso, all of which took less than two minutes to train. Among these models, XGBoost (0.7612, 5.377 µg/m3) and RF (0.7632, 5.366 µg/m3) performed the best in terms of R2 and RMSE (Figure 7).

Figure 7.

Time to train vs. RMSE (µg/m3) in the 80/20 split. Note that the x-axis is non-linear.
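One way to make the time/accuracy trade-off in Figure 7 explicit is to keep only the models that are not dominated, i.e., no other model is both at least as fast and at least as accurate. The sketch below is illustrative only: the RMSE values for XGBoost, RF, and LSTM, the 5 s XGBoost time, and the SVR time are taken from the text, while the RF time, the rounded LSTM time, and the SVR RMSE are placeholders chosen for the example.

```python
def pareto_front(candidates):
    """Return names of models not dominated in (training seconds, RMSE)."""
    front = []
    for name, seconds, rmse in candidates:
        dominated = any(
            (s <= seconds and r <= rmse) and (s < seconds or r < rmse)
            for _, s, r in candidates
        )
        if not dominated:
            front.append(name)
    return front

# (model, training time in seconds, RMSE in µg/m3)
candidates = [
    ("XGBoost", 5, 5.377),   # 5 s average and RMSE as reported in the text
    ("RF", 50, 5.366),       # RMSE from the text; time is a placeholder (< 1 min)
    ("LSTM", 5400, 4.26),    # RMSE from the text; time rounded to about 1.5 h
    ("SVR", 49517, 5.60),    # 13:45:17 from the text; RMSE is a placeholder
]

sweet_spots = pareto_front(candidates)
```

With these inputs, the fast tree-based models and LSTM all remain on the front, while the placeholder SVR entry is dominated because it is both slower and less accurate than the alternatives.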

These results indicate that the LSTM model in TensorFlow and RStudio provided the highest accuracy, making it suitable for real-time AQ monitoring applications, where high precision is crucial.

5. Discussion

This section elaborates on why the 11 models performed differently, how our results compare to the latest relevant research, the applicability of our research to other sensors, and the limitations of this study.

5.1. Model Performances and Structures

Purple Air and EPA PM2.5 datasets are inherently point-based, hourly time series, so calibration models must handle temporal structure. PM2.5 readings are also influenced by complex and dynamic relationships with meteorological factors such as temperature and relative humidity, which therefore need to be included as variables alongside the raw PM2.5 data when building a model. As evidenced in Table 8, models like RF, DT, and XGB, while effective for multivariable inputs and feature interaction, treat data points independently. This makes them less suitable for time series tasks without significant feature engineering [51]. Similarly, models such as KNN and SVR lack the capability to capture time series patterns, limiting their effectiveness for time series-based calibration [52]. In contrast, LSTM models excel in handling time series data by using hidden and cell states to learn temporal patterns across time steps [53], which lets them capture the nonlinear, interdependent relationships between the input variables and the output, including their combined influences. Forget and memory gates enable LSTM models to smooth irregular patterns in PM2.5 data, reducing the impact of noise and sensor errors commonly found in environmental datasets [54]. Unlike traditional RNNs, which suffer from the vanishing/exploding gradient problem, LSTM’s gated architecture is better suited to this task [54,55]. This design allows LSTM models to retain long-term dependencies, which are essential in understanding the temporal patterns in PM2.5 data [56,57]. In summary, LSTM’s paired hidden and cell states allow it to maintain short- and long-term dependencies across time series data, capture nonlinear influences between PM2.5 and meteorological variables without manual feature engineering, and smooth irregular patterns in data through memory gates.
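To illustrate what handling time series means at the data level, the sketch below turns hourly rows of (raw PM2.5, temperature, relative humidity) into overlapping windows that a sequence model could consume. The window length, the alignment of each target with the last step of its window, and all values are assumptions for illustration, not the configuration used in this study.

```python
def make_windows(rows, targets, window=3):
    """Pair each run of `window` consecutive rows with the target at the run's last step."""
    samples = []
    for end in range(window, len(rows) + 1):
        samples.append((rows[end - window:end], targets[end - 1]))
    return samples

# Hypothetical hourly rows: (raw PM2.5 in µg/m3, temperature, relative humidity).
rows = [
    (12.0, 20.0, 40.0),
    (14.0, 21.0, 42.0),
    (13.0, 21.5, 45.0),
    (18.0, 22.0, 50.0),
    (17.0, 22.5, 48.0),
]
# Hypothetical reference PM2.5 values for the same hours.
targets = [10.0, 11.5, 11.0, 14.0, 13.5]

windows = make_windows(rows, targets, window=3)
```

Models that treat rows independently see one row per target, whereas a sequence model receives the whole window, which is why the former need explicit lag features to use the same history.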

Table 8.

Each model’s capabilities.

5.2. Comparison to Existing Studies

We compared our study with existing PM2.5 calibration studies that used AI/ML techniques (Table 9) in terms of several factors: sensor count, sensor quality and performance, comprehensive model evaluation, and sensor type and calibration. A key factor influencing model performance was the number of sensors used. This study utilized 64 Purple Air sensors, a significantly larger dataset compared to previous works, which often relied on fewer sensors (e.g., 1–9). The use of a larger sensor network introduced greater data variability, enhancing the model’s ability to capture diverse environmental conditions. Refs. [74,75] demonstrated that calibrating single LCSs using models yielded high R2 values. However, their limited sensor count could reduce generalizability across different environmental conditions, as fewer sensors could not fully capture the natural variability present in PM2.5 measurements.

Table 9.

Summary of calibration methods for LCSs for PM2.5 in various study areas using different models and limitations.

Another key factor in this comparison is comprehensive model evaluation. This study systematically evaluated 11 machine learning models across five software packages, including TensorFlow and PyTorch. By incorporating a diverse range of models, from simple linear methods to complex architectures like DNN and LSTM, it offers a more extensive performance assessment than previous studies, which often focused on fewer models within a single framework. While this study evaluated 11 models, ref. [76] used a maximum of three models (ANN, GBDT, RF) in its calibration efforts, demonstrating a more limited exploration of model diversity. Our multi-model, multi-package approach helped identify the best-performing models for the given data while minimizing bias and improving the reliability of the PM2.5 calibration results.

The third key factor for comparison is sensor type and quality. The high accuracy reported by [77] was attributed to comprehensive field calibration against industry-grade instruments, ensuring robust validation. Similarly, ref. [78] achieved high accuracy due to the initial high agreement among sensors (R2 of 0.89), indicating strong baseline data quality prior to extensive calibration. In contrast, this study utilized 64 Purple Air PMS5003 sensors without direct field calibration but instead applied a much more flexible agreement threshold of R2 ≥ 0.70 to ensure data quality while capturing diverse environmental conditions. Additionally, sensor type can influence calibration outcomes, as seen in [50], where the SPS30 sensor paired with a hybrid LSTM calibration model yielded a high R2 of 0.93. Despite being a low-cost sensor (LCS), the SPS30 often outperformed other sensors like the PMS5003, likely due to its higher sensitivity and improved particle size differentiation [79].
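An agreement threshold such as R2 ≥ 0.70 can be applied as a simple screening step. The sketch below computes the squared Pearson correlation between two series and keeps only the pairs that meet the threshold; the pair identifiers, the data, and the choice of squared correlation as the agreement measure are assumptions for illustration and are not taken from the study's code.

```python
def pearson_r2(xs, ys):
    """Squared Pearson correlation between two equal-length series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return (sxy * sxy) / (sxx * syy)

def filter_pairs(pairs, threshold=0.70):
    """Keep identifiers of pairs whose agreement meets the threshold."""
    return [
        pair_id
        for pair_id, (low_cost, reference) in pairs.items()
        if pearson_r2(low_cost, reference) >= threshold
    ]

# Hypothetical sensor pairs: (low-cost readings, reference readings).
pairs = {
    "A": ([10.0, 20.0, 30.0, 40.0], [6.0, 11.0, 16.0, 21.0]),  # strongly related
    "B": ([10.0, 20.0, 30.0, 40.0], [12.0, 8.0, 13.0, 9.0]),   # weakly related
}

kept = filter_pairs(pairs)
```

A looser threshold admits more sensor pairs and therefore more environmental variety, at the cost of noisier training data.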

5.3. Applicability of the Results to Other Air Quality Sensors

Table 10 explores the applicability of this methodology to other air quality sensor technologies. This workflow could be applicable to any sensor testing for PM2.5; however, the approach may vary depending on each specific sensor’s needs and the technologies that they utilize. This general workflow could be applied to other pollutants and aerosols; however, the results may differ due to the differences in dispersion behavior that occur from pollutant to pollutant.

Table 10.

Applicability scope of various air quality sensors.

6. Conclusions

This paper reported a systematic investigation of the suitability of five popular software packages and 11 ML models for LCS AQ data calibration. Our investigation revealed that the choice of training/testing split (80/20 vs. 70/30) had minimal impact on the performance across various models and packages. The percentage difference in R2 between the two splits averaged 0.745%; therefore, we focused on the 80/20 split for a detailed comparison in subsequent analyses.

In the package comparison, RStudio and TensorFlow were the top performers, particularly excelling with LSTM models. Their performance showed R2 scores of 0.8578 and 0.857 and low RMSEs of 4.2518 µg/m3 and 4.26 µg/m3, respectively. Their strong ability to process high-volume data and capture complex relationships with neural network models such as LSTM was evident. However, while RStudio outperformed TensorFlow by 0.09% for LSTM, TensorFlow generally outperformed RStudio for the other models; averaged across models, R2 was 0.773 in TensorFlow and 0.756 in RStudio, a gap of 0.017 (1.7 percentage points).

The choice of packages affected the outcomes when the same models were implemented across different packages. For example, the performance discrepancies in OLS regression across packages underscored the influence of software-specific implementations on model efficacy. When averaging across all models, R2 scores varied by 6.09% between the most and least accurate packages.

This study also highlighted the importance of selecting the appropriate combination of model and package based on the specific requirements of the task. While some packages showed a broad range in performance, packages like Scikit-Learn showed less variability, indicating a more consistent handling of the models. While the choice of model generally had a greater impact on performance than the package, the nuances in how each package processed and trained the models could lead to significant variations in both accuracy and efficiency. For example, while LSTMs generally performed well, their implementation in TensorFlow consistently outperformed that in PyTorch. This highlights the differences in how these packages manage computation graphs.

In conclusion, the detailed insights gained from this research advocate for a context-driven approach in the selection of ML packages and models, ensuring that both model and package choices are optimally aligned to the specific needs and constraints of the predictive task. Across all experiments, two optimal approaches emerged. The overall best-performing model in terms of RMSE and R2 was clearly LSTM. However, LSTM algorithms are particularly time-intensive to train, each taking over one hour and thirty minutes to train a single model. In addition, preparing sequential training data is a somewhat computationally expensive process. LSTM’s computational demands may make it too slow or expensive to train for certain applications, such as those with large study areas or applications that require model training on the fly. The high computational load of LSTM models is particularly important to consider for in-depth explorations, such as hyperparameter tuning, which can require hundreds of training runs and lead to long calculation times. The results also suggest a second potential approach, indicated by the relatively high performance of tree-based models in comparison to their training time. XGBoost in RStudio and RF in TensorFlow both exhibited R2 values above 0.76, RMSE values below 5.4 µg/m3, and a time to train below one minute. In cases where computational resources are low or models need to be trained quickly on the fly, models such as RF and XGBRegressor may be more applicable than the top-performing time series models.

6.1. Limitations

We have presented a systematic calibration study for PM2.5 sensors with promising results. There are some limitations that can guide interpretations of the findings and future research. These limitations span the geographic and technological scope of sensor deployment, the pollutant species, computational constraints, and the limited available meteorological variables.

- Sensor Pair Distribution: The current study utilized 64 sensor pairs from California, incorporating data from 25 unique EPA sensors. This limited geographic and technological scope may limit the broader applicability of the models, particularly for nationwide or larger-scale contexts. Further research could be conducted to determine the optimal scope and effectiveness of the trained models across diverse regions.

- Pollutant Species: This calibration study was exclusively focused on PM2.5 and did not extend its methodology to other pollutants. The generalizability of the approach to additional pollutants, such as ozone or nitrogen dioxide, could be investigated through similar calibration efforts.

- Sensor Technology: This study was confined to data collected from EPA and Purple Air sensors. While these sensors are widely used, the approach should be repeated when translating to other types of PM2.5 sensors or to sensors measuring different pollutants. Future studies should explore the calibration and performance of alternative sensor technologies to enhance this study’s applicability.

- Computational Constraints: The calibration process was conducted using CPU-based processing, which required approximately one month of continuous runtime. This computational limitation suggests that further studies could benefit significantly from leveraging GPU-based processing to reduce runtime [68]. Additionally, adopting containerization technologies such as Docker could streamline setup and configuration, thereby improving efficiency and reproducibility.

- Meteorological Constraints: While this study accounted for the impact of temperature and humidity on sensor calibration, it did not consider other potentially influential meteorological factors, such as wind speed, wind direction, and atmospheric pressure. Pressure was found to have only a marginal impact, and wind speed and direction were unavailable in the dataset. Further studies with sensors that measure these variables could potentially further improve model accuracy.

6.2. Future Work

Though this study is extensive and systematic, several aspects need further investigation to best leverage AI/ML for air quality studies, spanning other pollutants, additional data analytical components, and further improvements in calibration accuracy:

- Hyperparameter tuning should be able to further improve accuracy and reduce uncertainty but will require significant computing power and long durations of model training to investigate different combinations. LSTM emerged as the best-performing model in this study. We plan to further explore the application of this model, including detailed hyperparameter tuning/model optimization.

- The incorporation of a broader set of evaluation metrics, including MAPE and additional robustness measures, could provide a more comprehensive assessment of model performance across conditions.

- Different species of air pollutants may exhibit different patterns, so a systematic study of each (e.g., NO2, ozone, or methane) might be needed, including during events such as wildfires and wars [5,79]. In situ sensors offer comprehensive temporal coverage but lack continuous geographic coverage; introducing the satellite retrieval of pollutants could complement air pollution detection.

- Other analytics, such as data downscaling, upscaling, interoperation, and fusion, need further exploration to best replicate air pollution status and to support the overall integration of air pollutant data.

- To better facilitate systematic studies and extensive AI/ML model runs, an adaptable ML toolkit and potential Python package could be developed and packaged to speed up AQ and forecasting research.

- Additionally, future studies should apply this methodology to datasets from various regions with different climates and pollution levels, as geographic location can significantly impact air quality patterns and model performance. This would help to validate the robustness and generalizability of the models under diverse conditions.

Author Contributions

Conceptualization, C.Y., A.S.M., S.S. and D.Q.D.; methodology, T.T., S.S., C.Y., A.S.M. and G.L.; validation, T.T., S.S. and S.L.; writing—original draft preparation, S.S., T.T., A.S.M., C.Y. and S.L.; writing—review and editing, C.Y., T.T. and S.S.; data acquisition, J.L., A.S.M. and S.S.; software, S.S., A.S.M., S.L., J.L., X.J., Z.W., J.C., T.H., M.P., G.L., W.P., S.H., J.R. and K.M.; experiments, S.S., A.S.M., S.L., J.L., X.J., Z.W., J.C., T.H., M.P., W.P., S.H., J.R. and K.M.; funding, C.Y. and D.Q.D.; management and coordination, C.Y. and S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by NASA AIST (NASA-AIST-QRS-23-02); NASA Goddard CISTO, and NSF I/UCRC program (1841520).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The training data used in this study are openly available on GitHub at https://github.com/stccenter/AQ-Formal-Study/tree/main/Training%20Data, accessible as of 6 February 2025.

Acknowledgments

Tayven Stover put the source code on GitHub.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. The Machine Learning Models Used in This Study

- Decision Tree Regressor (DTR) predicts a target value by learning simple decision rules inferred from training data [80]. It splits the training data into increasingly specific subsets based on feature thresholds, with each leaf node in the tree providing a prediction that represents the mean of the values in that segment.

- Random Forest (RF) is an ensemble learning model that builds multiple decision trees during training and outputs the average prediction of the individual trees [81]. It improves model accuracy and overcomes the overfitting problem of single trees by averaging outputs from multiple deep decision trees, each trained on a random subset of features and samples.

- K-Nearest Neighbor (KNN) is a non-parametric model used for regression and classification problems [82]. The output is calculated as the average of the values of its k nearest neighbors. KNN works by finding the k closest training examples in the feature space and averaging their values for prediction.

- XGBRegressor is part of the XGBoost package that performs highly efficient and scalable implementation of a gradient-boosting framework [83]. This model uses a series of decision trees, where each tree corrects errors made by the previous ones, and it includes regularization terms to prevent overfitting.

- Support Vector Regression (SVR) is an extension of the Support Vector Machine (SVM) [84]. SVR tries to fit the error within a certain threshold and is robust against outliers. It uses kernel functions to handle non-linear relationships: $f(a) = w \cdot \varphi(a) + b$, where $w$ is the weight vector, $\varphi(a)$ is the feature function representing the input variables, $w \cdot \varphi(a)$ is the dot product targeting the prediction results, and $b$ is the bias term.

- Simple Neural Network (SNN) is often a single-layer network with direct connections between inputs and outputs [85]. It can model relationships in data by adjusting the weights of these connections, typically using methods like backpropagation.

- Deep Neural Network (DNN) comprises multiple layers between the input and output layers (known here as hidden layers), which enable the modeling of complex patterns with large datasets [86]. Each layer transforms its input data into a slightly more abstract and composite representation. Further experimentation is needed to identify a number of layers that balances model capacity against overfitting for the specific use case of sensor calibration.

- Recurrent Neural Network (RNN) is a class of neural networks where the node connections form a directed graph along a temporal sequence to exhibit dynamic temporal behavior and process sequences of inputs, making it suitable for tasks like time series forecasting [87].

One setup equation for RNN is

$$h_t^{(i)} = f\left(W_x x_t^{(i)} + W_h h_{t-1}^{(i)}\right), \qquad \hat{y}_t^{(i)} = g\left(W_y h_t^{(i)}\right)$$

where $x_t^{(i)}$ is the input data point (scalar/real valued vector) at time step t for the i(th) training/test example, $y_t^{(i)}$ is the target (scalar/real valued vector) at time step t for the i(th) training/test sample, $\hat{y}_t^{(i)}$ is the predicted output (scalar/real valued vector) at time step t for the i(th) training/test example, $h_t^{(i)}$ is the hidden state (real valued vector) at time step t for the i(th) training/test example, and $W_x$, $W_y$, and $W_h$ are the weight matrices associated with the input, output, and hidden states, respectively; $f$ and $g$ denote the activation functions of the hidden and output layers. In this study, $x_t^{(i)}$ pertains to the input PM2.5, relative humidity, and temperature data points at a certain time t.
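A single recurrent step can be sketched in a few lines. This is a minimal illustration under stated assumptions: it uses a tanh hidden activation, a linear output, no bias terms, and small hand-picked weights, none of which are taken from the models trained in this study.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def rnn_step(x_t, h_prev, W_x, W_h, W_y):
    """One recurrent step: update the hidden state, then read out a prediction."""
    pre = [a + b for a, b in zip(matvec(W_x, x_t), matvec(W_h, h_prev))]
    h_t = [math.tanh(p) for p in pre]
    y_hat = matvec(W_y, h_t)
    return h_t, y_hat

# Hypothetical scaled inputs: (PM2.5, relative humidity, temperature).
x_t = [0.5, 0.2, 0.1]
h_prev = [0.0, 0.0]                      # initial hidden state
W_x = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.2]]  # input weights (illustrative)
W_h = [[0.5, 0.0], [0.0, 0.5]]             # hidden-state weights (illustrative)
W_y = [[1.0, -1.0]]                        # output weights (illustrative)

h_t, y_hat = rnn_step(x_t, h_prev, W_x, W_h, W_y)
```

Feeding `h_t` back in as `h_prev` at the next hour is what lets the network carry information from earlier readings forward in time.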

- Long Short-Term Memory (LSTM) is a type of RNN that consists of three gates in its memory cell: the forget gate, the input gate (the PM2.5, temperature, and relative humidity), and the output gate (the calibrated values) [88].

This is later used to build an LSTM structure:

$$f_t = \sigma\left(w_f \cdot [h_{t-1}, x_t] + b_f\right), \qquad h_t = O_t \odot \tanh(c_t)$$

where $f_t$ = forget gate, $\sigma$ = sigmoid, $w_f$ = weight, $h_{t-1}$ = output of previous block, $x_t$ = input vector, and $b_f$ = bias. The multiplication $\odot$ is performed elementwise, $c_t$ = cell state, $h_t$ = hidden state, and $O_t$ = output gate. The input sequence comprises the PM2.5, relative humidity, and temperature values at a given time t.
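The gate mechanics can be made concrete with a scalar sketch of one LSTM step. It follows the standard LSTM formulation with forget, input, and output gates plus a candidate cell value; the scalar input (the study uses three features per time step), the parameter layout, and the zero-valued parameters are simplifying assumptions for illustration only.

```python
import math

def sigmoid(z):
    """Logistic function used by the gates."""
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, params):
    """One scalar LSTM step; params maps a gate name to (w_h, w_x, b)."""
    def gate(name, activation):
        w_h, w_x, b = params[name]
        return activation(w_h * h_prev + w_x * x_t + b)

    f_t = gate("forget", sigmoid)          # how much of the old cell state to keep
    i_t = gate("input", sigmoid)           # how much of the candidate to write
    g_t = gate("candidate", math.tanh)     # candidate cell value
    o_t = gate("output", sigmoid)          # how much of the cell state to expose
    c_t = f_t * c_prev + i_t * g_t         # new cell state
    h_t = o_t * math.tanh(c_t)             # new hidden state
    return h_t, c_t

# All-zero parameters: every sigmoid gate evaluates to 0.5 and the candidate to 0.
zero_params = {name: (0.0, 0.0, 0.0)
               for name in ("forget", "input", "candidate", "output")}

h_t, c_t = lstm_step(x_t=1.0, h_prev=0.0, c_prev=2.0, params=zero_params)
```

With these parameters the forget gate halves the previous cell state, which shows how the cell state can carry information forward while being gradually discounted.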

- Ordinary Least Square (OLS) regression is a method for estimating unknown parameters [89]. OLS chooses the parameters that minimize the sum of the squared differences between the observed dependent variable and those predicted by the linear function.

- Lasso (Least Absolute Shrinkage and Selection Operator) regression is a type of linear regression that uses shrinkage (minimizing coefficients) [90]. It adds a regularization term to the cost function, which involves the L1 norm of the weights, promoting a sparse model where few weights are non-zero.
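The difference between the last two models in this list can be shown on a one-feature, no-intercept problem, where the Lasso estimate is the OLS numerator passed through a soft-thresholding step. The sketch below assumes the objective (1/(2n)) Σ (y − βx)² + λ|β| for Lasso; the function names, data, and λ values are illustrative assumptions rather than the settings used in this study.

```python
def soft_threshold(value, lam):
    """Shrink value toward zero by lam, clipping to zero inside [-lam, lam]."""
    if value > lam:
        return value - lam
    if value < -lam:
        return value + lam
    return 0.0

def ols_slope(xs, ys):
    """No-intercept OLS slope: minimizes sum((y - b * x) ** 2)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

def lasso_slope(xs, ys, lam):
    """No-intercept Lasso slope: minimizes (1/(2n)) * sum((y - b*x)**2) + lam * |b|."""
    n = len(xs)
    rho = sum(x * y for x, y in zip(xs, ys)) / n
    z = sum(x * x for x in xs) / n
    return soft_threshold(rho, lam) / z

# Hypothetical data with an exact linear relationship y = 2x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

b_ols = ols_slope(xs, ys)
b_lasso_small = lasso_slope(xs, ys, lam=1.0)   # shrunk toward zero
b_lasso_large = lasso_slope(xs, ys, lam=10.0)  # penalty large enough to zero it out
```

The L1 penalty shrinks the coefficient and, once the penalty is large enough, sets it exactly to zero, which is the source of the sparsity described above.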

Appendix B. The Five Open-Source Packages

XGBoost, standing for eXtreme Gradient Boosting, is a highly efficient implementation of gradient-boosted decision trees designed for speed and performance [91]. This standalone library excels in handling various types of predictive modeling tasks including regression, classification, and ranking. XGBoost can be used with several data science environments and programming languages including Python, R, and Julia, among others. XGBoost works well to build hybrid models as it integrates smoothly with both Scikit-Learn and TensorFlow via wrappers that allow its algorithms to be tuned and cross-validated in a consistent manner. It also functions well as a standalone model, using functions like XGBRegressor.

Scikit-Learn is a comprehensive library used extensively for data preparation, model training, and evaluation across a spectrum of ML tasks such as classification, regression, and clustering [92]. It supports many algorithms included in this study and other advanced regression and AI/ML algorithms. This package excels due to its ease of use, efficiency, and broad applicability in tackling both simple and complex ML problems.

TensorFlow, coupled with its high-level API Keras, provides a robust environment for designing a diverse array of ML models [93]. It is particularly effective for developing neural network models such as the ones employed in this study. TensorFlow uses “tf.keras” to implement regression models. RF and Lasso are built with extensions like TensorFlow Decision Forests (TF-DF), demonstrating its versatility across both deep learning and traditional ML domains.

PyTorch, known for its flexibility and powerful GPU acceleration, is used in the development of DL models [94]. While it is not traditionally used for simple regression models, it is ideal for constructing complex neural network models. For some regression modeling, external packages or custom implementations are necessary to bridge its capabilities to traditional statistical modeling tasks.

RStudio facilitates ML through its integration with R and Python, offering access to various packages and frameworks [95]. It utilizes the Caret package for training conventional ML models such as RF and XGBoost. For regression models like OLS and Lasso, RStudio leverages native R packages and Python integrations through Reticulate. Advanced DL models including LSTM and RNN are also supported using TensorFlow and Keras, providing a flexible and powerful toolset for both classical and modern ML approaches.

References

- NIH, National Institute of Environmental Health Sciences. Air Pollution and Your Health. 2024. Available online: https://www.niehs.nih.gov/health/topics/agents/air-pollution (accessed on 6 February 2025).

- Yang, C.; Bao, S.; Guan, W.; Howell, K.; Hu, T.; Lan, H.; Wang, Z. Challenges and opportunities of the spatiotemporal responses to the global pandemic of COVID-19. Ann. GIS 2022, 28, 425–434.

- Chojer, H.; Branco, P.; Martins, F.; Alvim-Ferraz, M.; Sousa, S. Development of low-cost indoor air quality monitoring devices: Recent advancements. Sci. Total Environ. 2020, 727, 138385.

- Masood, A.; Ahmad, K. A review on emerging artificial intelligence (AI) techniques for air pollution forecasting: Fundamentals, application and performance. J. Clean. Prod. 2021, 322, 129072.

- Sayahi, T.; Butterfield, A.; Kelly, K.E. Long-term field evaluation of the Plantower PMS low-cost particulate matter sensors. Environ. Pollut. 2019, 245, 932–940.

- Bu, X.; Xie, Z.; Liu, J.; Wei, L.; Wang, X.; Chen, M.; Ren, H. Global PM2.5-attributable health burden from 1990 to 2017: Estimates from the Global Burden of disease study 2017. Environ. Res. 2021, 197, 111123.

- Fan, K.; Dhammapala, R.; Harrington, K.; Lamb, B.; Lee, Y. Machine learning-based ozone and PM2.5 forecasting: Application to multiple AQS sites in the Pacific Northwest. Front. Big Data 2023, 6, 1124148.

- Tai, A.P.K.; Mickley, L.J.; Jacob, D.J. Correlations between fine particulate matter (PM2.5) and meteorological variables in the United States: Implications for the sensitivity of PM2.5 to climate change. Atmos. Environ. 2010, 44, 3976–3984.

- Kumar, N.; Park, R.J.; Jeong, J.I.; Woo, J.-H.; Kim, Y.; Johnson, J.; Yarwood, G.; Kang, S.; Chun, S.; Knipping, E. Contributions of international sources to PM2.5 in South Korea. Atmos. Environ. 2021, 261, 118542.

- Chu, H.-J.; Ali, M.Z.; He, Y.-C. Spatial calibration and PM2.5 mapping of low-cost air quality sensors. Sci. Rep. 2020, 10, 22079.

- Kim, J.; Shusterman, A.A.; Lieschke, K.J.; Newman, C.; Cohen, R.C. The Berkeley atmospheric CO2 observation network: Field calibration and evaluation of low-cost air quality sensors. Atmos. Meas. Tech. 2018, 11, 1937–1946.

- Polidori, A.; Papapostolou, V.; Zhang, H. Laboratory Evaluation of Low-Cost Air Quality Sensors; South Coast Air Quality Management District: Diamond Bar, CA, USA, 2016.

- Wang, Y.; Li, J.; Jing, H.; Zhang, Q.; Jiang, J.; Biswas, P. Laboratory Evaluation and Calibration of Three Low-Cost Particle Sensors for Particulate Matter Measurement. Aerosol Sci. Technol. 2015, 49, 1063–1077.

- Kim, M.-G.; Choi, J.-S.; Park, W.-T. MEMS PZT oscillating platform for fine dust particle removal. Int. J. Precis. Eng. Manuf. 2018, 19, 1851–1859.

- Mead, M.; Popoola, O.; Stewart, G.; Landshoff, P.; Calleja, M.; Hayes, M.; Baldovi, J.; McLeod, M.; Hodgson, T.; Dicks, J.; et al. The use of electrochemical sensors for monitoring urban air quality in low-cost, high-density networks. Atmos. Environ. 2013, 70, 186–203.

- Spinelle, L.; Gerboles, M.; Villani, M.G.; Aleixandre, M.; Bonavitacola, F. Field calibration of a cluster of low-cost commercially available sensors for air quality monitoring. Part B: NO, CO and CO2. Sens. Actuators B Chem. 2017, 238, 706–715.

- Lyapustin, A.; Wang, Y.; Xiong, X.; Meister, G.; Platnick, S.; Levy, R.; Franz, B.; Korkin, S.; Hilker, T.; Tucker, J.; et al. Scientific impact of MODIS C5 calibration degradation and C6+ improvements. Atmos. Meas. Tech. 2014, 7, 4353–4365.

- Wang, C.; Liu, Q.; Ying, N.; Wang, X.; Ma, J. Air quality evaluation on an urban scale based on MODIS satellite images. Atmos. Res. 2013, 132–133, 22–34.

- Zhang, Y.; Li, Z.; Bai, K.; Wei, Y.; Xie, Y.; Zhang, Y.; Ou, Y.; Cohen, J.; Zhang, Y.; Peng, Z.; et al. Satellite remote sensing of atmospheric particulate matter mass concentration: Advances, challenges, and perspectives. Fundam. Res. 2021, 1, 240–258.

- Desouza, P.; Kahn, R.; Stockman, T.; Obermann, W.; Crawford, B.; Wang, A.; Crooks, J.; Li, J.; Kinney, P. Calibrating networks of low-cost air quality sensors. Atmos. Meas. Tech. 2022, 15, 6309–6328.

- Lu, T.; Liu, Y.; Garcia, A.; Wang, M.; Li, Y.; Bravo-Villasenor, G.; Campos, K.; Xu, J.; Han, B. Leveraging Citizen Science and Low-Cost Sensors to Characterize Air Pollution Exposure of Disadvantaged Communities in Southern California. Int. J. Environ. Res. Public Health 2022, 19, 8777.

- Caseiro, A.; Schmitz, S.; Villena, G.; Jagatha, J.V.; von Schneidemesser, E. Ambient characterisation of PurpleAir particulate matter monitors for measurements to be considered as indicative. Environ. Sci. Atmos. 2022, 2, 1400–1410.

- Giordano, M.R.; Malings, C.; Pandis, S.N.; Presto, A.A.; McNeill, V.; Westervelt, D.M.; Beekmann, M.; Subramanian, R. From low-cost sensors to high-quality data: A summary of challenges and best practices for effectively calibrating low-cost particulate matter mass sensors. J. Aerosol Sci. 2021, 158, 105833.

- Lee, C.-H.; Wang, Y.-B.; Yu, H.-L. An efficient spatiotemporal data calibration approach for the low-cost PM2.5 sensing network: A case study in Taiwan. Environ. Int. 2019, 130, 104838.

- Hua, J.; Zhang, Y.; de Foy, B.; Mei, X.; Shang, J.; Zhang, Y.; Sulaymon, I.D.; Zhou, D. Improved PM2.5 concentration estimates from low-cost sensors using calibration models categorized by relative humidity. Aerosol Sci. Technol. 2021, 55, 600–613.

- Raysoni, A.U.; Pinakana, S.D.; Mendez, E.; Wladyka, D.; Sepielak, K.; Temby, O. A Review of Literature on the Usage of Low-Cost Sensors to Measure Particulate Matter. Earth 2023, 4, 168–186.

- Johnson, K.K.; Bergin, M.H.; Russell, A.G.; Hagler, G.S. Field Test of Several Low-Cost Particulate Matter Sensors in High and Low Concentration Urban Environments. Aerosol Air Qual. Res. 2018, 18, 565–578.

- Khreis, H.; Johnson, J.; Jack, K.; Dadashova, B.; Park, E.S. Evaluating the Performance of Low-Cost Air Quality Monitors in Dallas, Texas. Int. J. Environ. Res. Public Health 2022, 19, 1647.

- Mykhaylova, N. Low-Cost Sensor Array Devices as a Method for Reliable Assessment of Exposure to Traffic-Related Air Pollution. Ph.D. Thesis, University of Toronto, Toronto, ON, Canada, 2018. Available online: https://www.proquest.com/dissertations-theses/low-cost-sensor-array-devices-as-method-reliable/docview/2149673888/se-2?accountid=14541 (accessed on 6 February 2025).

- Pan, P.; Malarvizhi, A.S.; Yang, C. Data Augmentation Strategies for Improved PM2.5 Forecasting Using Transformer Architectures. Atmosphere 2025, 16, 127.

- Di Antonio, A.; Popoola, O.A.M.; Ouyang, B.; Saffell, J.; Jones, R.L. Developing a Relative Humidity Correction for Low-Cost Sensors Measuring Ambient Particulate Matter. Sensors 2018, 18, 2790.

- Holstius, D.M.; Pillarisetti, A.; Smith, K.R.; Seto, E. Field calibrations of a low-cost aerosol sensor at a regulatory monitoring site in California. Atmos. Meas. Tech. 2014, 7, 1121–1131.

- Kim, D.; Shin, D.; Hwang, J. Calibration of Low-cost Sensors for Measurement of Indoor Particulate Matter Concentrations via Laboratory/Field Evaluation. Aerosol Air Qual. Res. 2023, 23, 230097.

- Hagan, D.H.; Kroll, J.H. Assessing the accuracy of low-cost optical particle sensors using a physics-based approach. Atmos. Meas. Tech. 2020, 13, 6343–6355.

- Prajapati, B.; Dharaiya, V.; Sahu, M.; Venkatraman, C.; Biswas, P.; Yadav, K.; Pullokaran, D.; Raman, R.S.; Bhat, R.; Najar, T.A.; et al. Development of a physics-based method for calibration of low-cost particulate matter sensors and comparison with machine learning models. J. Aerosol Sci. 2024, 175, 106284.

- Malings, C.; Tanzer, R.; Hauryliuk, A.; Saha, P.K.; Robinson, A.L.; Presto, A.A.; Subramanian, R. Fine particle mass monitoring with low-cost sensors: Corrections and long-term performance evaluation. Aerosol Sci. Technol. 2020, 54, 160–174.

- Bulot, F.M.; Ossont, S.J.; Morris, A.K.; Basford, P.J.; Easton, N.H.; Mitchell, H.L.; Foster, G.L.; Cox, S.J.; Loxham, M. Characterisation and calibration of low-cost PM sensors at high temporal resolution to reference-grade performance. Heliyon 2023, 9, e15943.

- Jovašević-Stojanović, M.; Bartonova, A.; Topalović, D.; Lazović, I.; Pokrić, B.; Ristovski, Z. On the use of small and cheaper sensors and devices for indicative citizen-based monitoring of respirable particulate matter. Environ. Pollut. 2015, 206, 696–704.

- Nakayama, T.; Matsumi, Y.; Kawahito, K.; Watabe, Y. Development and evaluation of a palm-sized optical PM2.5 sensor. Aerosol Sci. Technol. 2018, 52, 2–12.

- Topalovic, D.B.; Davidovic, M.D.; Jovanović, M.; Bartonova, A.; Ristovski, Z.; Jovašević-Stojanović, M. In search of an optimal in-field calibration method of low-cost gas sensors for ambient air pollutants: Comparison of linear, multilinear and artificial neural network approaches. Atmos. Environ. 2019, 213, 640–658.

- Wang, Y.; Du, Y.; Wang, J.; Li, T. Calibration of a low-cost PM2.5 monitor using a random forest model. Environ. Int. 2019, 133, 105161.

- Wang, Z.; Li, Y.; Wang, K.; Cain, J.; Salami, M.; Duffy, D.Q.; Little, M.M.; Yang, C. Adopting GPU computing to support DL-based Earth science applications. Int. J. Digit. Earth 2023, 16, 2660–2680.

- Yu, H.; Jiang, S.; Land, K.C. Multicollinearity in hierarchical linear models. Soc. Sci. Res. 2015, 53, 118–136.

- Tomaschek, F.; Hendrix, P.; Baayen, R.H. Strategies for addressing collinearity in multivariate linguistic data. J. Phon. 2018, 71, 249–267.

- Watson, J.G.; Chow, J.C.; DuBois, D.; Green, M.; Frank, N. Guidance for the Network Design and Optimum Site Exposure for PM2.5 and PM10. 1997. Available online: https://www3.epa.gov/ttnamti1/files/ambient/pm25/network/r-99-022.pdf (accessed on 6 February 2025).

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. arXiv 2019, arXiv:1912.01703.

- Kurama, V. PyTorch vs. TensorFlow: Key Differences to Know for Deep Learning. Built In. 7 March 2024. Available online: https://builtin.com/data-science/pytorch-vs-tensorflow (accessed on 6 February 2025).

- The Educative Team. Pytorch vs. Tensorflow: The Key Differences That You Should Know. Medium, Dev Learning Daily, 1 March 2024.

- Splitwire ML. PyTorch vs TensorFlow: Model Training—Splitwire ML—Medium. 8 September 2023. Available online: https://medium.com/@splitwireML/pytorch-vs-tensorflow-model-training-7518b7aa7a5e (accessed on 6 February 2025).

- Park, D.; Yoo, G.-W.; Park, S.-H.; Lee, J.-H. Assessment and Calibration of a Low-Cost PM2.5 Sensor Using Machine Learning (HybridLSTM Neural Network): Feasibility Study to Build an Air Quality Monitoring System. Atmosphere 2021, 12, 1306.

- Ullmann, T.; Heinze, G.; Hafermann, L.; Schilhart-Wallisch, C.; Dunkler, D. Evaluating variable selection methods for multivariable regression models: A simulation study protocol. PLoS ONE 2024, 19, e0308543.

- Semmelmann, L.; Henni, S.; Weinhardt, C. Load forecasting for energy communities: A novel LSTM-XGBoost hybrid model based on smart meter data. Energy Inform. 2022, 5, 24.

- Shwartz-Ziv, R.; Armon, A. Tabular data: Deep learning is not all you need. Inf. Fusion 2022, 81, 84–90.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610.

- Al-Selwi, S.M.; Hassan, M.F.; Abdulkadir, S.J.; Muneer, A. LSTM inefficiency in long-term dependencies regression problems. J. Adv. Res. Appl. Sci. Eng. Technol. 2023, 30, 16–31.

- Gilliam, J.; Hall, E. Reference Equivalent Methods Used to Measure National Ambient Air Quality Standards (NAAQS) Criteria Air Pollutants; Environmental Protection Agency: Washington, DC, USA, 2016; Volume I. Available online: https://nepis.epa.gov/Exe/ZyPDF.cgi/P100RTU1.PDF?Dockey=P100RTU1.PDF (accessed on 6 February 2025).

- Zarzycki, K.; Ławryńczuk, M. LSTM for Modelling and Predictive Control of Multivariable Processes. In International Conference on Innovative Techniques and Applications of Artificial Intelligence, Tokyo, Japan, 16–18 March 2024; Springer Nature: Cham, Switzerland, 2024; pp. 74–87.

- Krause, B.; Lu, L.; Murray, I.; Renals, S. Multiplicative LSTM for sequence modelling. arXiv 2016, arXiv:1609.07959.

- Tam, Y.-C.; Shi, Y.; Chen, H.; Hwang, M.-Y. RNN-based labeled data generation for spoken language understanding. In Proceedings of the INTERSPEECH, Dresden, Germany, 6–10 September 2015; pp. 125–129.

- Keren, G.; Schuller, B. Convolutional RNN: An enhanced model for extracting features from sequential data. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 3412–3419.

- El Fouki, M.; Aknin, N.; El Kadiri, K.E. Multidimensional Approach Based on Deep Learning to Improve the Prediction Performance of DNN Models. Int. J. Emerg. Technol. Learn. 2019, 14, 30–41.

- Zhang, J.; Zheng, Y.; Qi, D.; Li, R.; Yi, X. DNN-based prediction model for spatio-temporal data. In Proceedings of the 24th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, Burlingame, CA, USA, 31 October–3 November 2016; pp. 1–4.

- Caselli, M.; Trizio, L.; de Gennaro, G.; Ielpo, P. A simple feedforward neural network for the PM10 forecasting: Comparison with a radial basis function network and a multivariate linear regression model. Water Air Soil Pollut. 2009, 201, 365–377.

- He, Z.; Wu, Z.; Xu, G.; Liu, Y.; Zou, Q. Decision tree for sequences. IEEE Trans. Knowl. Data Eng. 2021, 35, 251–263.

- Siciliano, R.; Mola, F. Multivariate data analysis and modeling through classification and regression trees. Comput. Stat. Data Anal. 2000, 32, 285–301.

- Fan, G.-F.; Zhang, L.-Z.; Yu, M.; Hong, W.-C.; Dong, S.-Q. Applications of random forest in multivariable response surface for short-term load forecasting. Int. J. Electr. Power Energy Syst. 2022, 139, 108073.

- Goehry, B.; Yan, H.; Goude, Y.; Massart, P.; Poggi, J.M. Random forests for time series. REVSTAT-Stat. J. 2023, 21, 283–302.