Abstract

Vehicle trajectory prediction is a critical component of autonomous driving systems because it directly supports the safe maneuvering of vehicles in complex traffic environments. However, most existing research has focused on the spatio-temporal relationships of the vehicle itself and therefore lacks dynamic perception of, and interaction with, adjacent vehicles. To address this limitation, we propose a vehicle trajectory prediction algorithm based on a hybrid prediction model. First, the algorithm extracts context information about the target vehicle and its neighboring vehicles using a two-layer long short-term memory (LSTM) network. Next, a fusion module extracts the temporal influence, spatial influence, and interactive influence of the surrounding vehicles and fuses these features. Finally, a prediction module outputs the predicted positions of the vehicle in coordinate form. The proposed algorithm was trained and validated on the publicly available I-80 and US-101 datasets. The experimental results show that the proposed algorithm produces more accurate predictions than the compared baseline models.

1. Introduction

In recent years, advances in sensor technology have significantly increased the number and types of sensors on intelligent vehicles, which has greatly promoted the development of intelligent driving technology. In traditional driving, sensor data could only play an auxiliary role, and drivers still had to make judgments by combining visual information with the feedback from sensors. To achieve intelligent driving, vehicles need to make independent decisions based on sensor information, for example, from cameras and LiDAR. Trajectory prediction is the critical link between environmental information collection and decision making, and its precision directly influences the rationality of subsequent decisions [1]. Some enterprises have already provided relatively feasible solutions. For example, Baidu Apollo autonomous vehicles use the Inter-TNT model for prediction [2]. However, in complex scenarios with a large number of vehicles, the intentions of different vehicles may influence each other, and predicting vehicle trajectories efficiently and accurately remains a well-recognized, difficult problem in this field [3].

In some relatively simple scenarios (for example, on a road without other vehicles or obstacles), the future trajectory of a vehicle is largely derived from its own historical trajectory. Since it is not interfered with by other factors, the behavior patterns it adopts are also easy to statistically analyze and summarize. In such scenarios, the time-based influence of the vehicle itself is the main factor to be considered in trajectory prediction. Therefore, even relatively simple prediction models can achieve good results, such as solutions based on classical physical models and machine learning solutions. Among them, most schemes based on classical physical models regard vehicle behaviors as pure physical motions. The physical models used include the uniform acceleration model [4], the model based on Kalman filtering [5], the model based on Monte Carlo [6], etc. However, due to the large number of interference factors affecting vehicle intentions in complex traffic environments, these classical physical models are only effective within a very short period of time (usually less than 1 s), which is obviously far from sufficient. With the continuous progress of theories of machine learning, schemes based on machine learning have gradually become mainstream. Compared with classical physical models, schemes based on machine learning can exhibit better prediction effects and have also enabled vehicle trajectory prediction capabilities to grow from short-term prediction to prediction in simple driving scenarios [7], for example, by using Support Vector Machines (SVMs) [8], Hidden Markov Models [9], etc. However, as driving scenarios become more complex, simple machine learning schemes can no longer meet the needs in complex traffic scenarios. Therefore, scholars in this field have shifted their attention to more advanced and complex machine learning models, such as deep learning.

When there are many vehicles around, the distance between vehicles also becomes an important factor in trajectory prediction, which is also an additional part considered by many of the current prediction models. For example, when the distance between vehicles is too close, drivers usually take actions such as decelerating or giving way. Essentially, trajectory prediction belongs to time series prediction. As one of the solutions capable of addressing long-term dependence issues, the Recurrent Neural Network (RNN) is one of the popular research directions in the field of trajectory prediction. In Reference [10], a hybrid trajectory prediction framework encoded by long short-term memory (LSTM) is proposed to evaluate the impact of a vehicle’s own behavior on surrounding vehicles. It introduces a reactive social convolution structure, which was designed to simulate the planned trajectory of the ego vehicle and the historical trajectories of surrounding vehicles. By doing so, it aims to mitigate the uncertainty of potential trajectories and effectively reduce the errors in trajectory prediction. Reference [11] puts forward a vehicle trajectory prediction model grounded in multivariate interaction modeling. By modeling the dynamic interactions among vehicles to obtain and fuse interaction information, while simultaneously using LSTM to construct a prediction module, the accuracy of the trajectory prediction was effectively improved. Reference [12] puts forward a novel lane-change decision model that caters to long-term prediction requirements. This model employs a long-term trajectory prediction model grounded in LSTM to predict the trajectories of surrounding vehicles. Additionally, the authors established a lane-change decision model based on fuzzy inference to infer the relationships between the target vehicle and other vehicles. 
Reference [13] focuses on improving the prediction accuracy and proposes a distributed, decoupled long short-term memory (LSTM) self-trajectory prediction method. It uses a decoupling gate and a control gate to construct parallel LSTM cells and uses these parallel cells to build a distributed network architecture, which substantially enhances the accuracy of vehicle trajectory prediction.

Besides the RNN and its corresponding variants, the emergence of Transformer has also attracted researchers’ attention to the attention mechanism [14,15,16,17,18]. Consequently, certain researchers have endeavored to extend the application of this approach to the domain of trajectory prediction and have attained notable outcomes. Reference [19] puts forward a collaborative vehicle positioning and trajectory prediction framework grounded in belief propagation and the Transformer model. In this framework, a factor graph is meticulously constructed based on the sensor measurement values transmitted by vehicles. Subsequently, an enhanced belief propagation process is employed to approximate the posterior distribution of vehicles. Moreover, hidden features are extracted from historical position and vehicle motion data to model the long-term and short-term motion patterns of vehicles. Although researchers have made great efforts in vehicle trajectory prediction, the current achievements still have certain limitations. Most of the existing neural network-based methods start from the time-based influence of vehicles and the spatial distance influence between vehicles, such as the historical trajectory of the vehicle itself, the historical trajectories of surrounding vehicles, and the historical positions and spatial distances between vehicles. Although these factors are sufficient to show a good performance in ordinary traffic scenarios, they are still insufficient for complex traffic scenarios. To further improve the prediction accuracy in complex traffic scenarios, more influencing factors need to be taken into account.

Faced with diverse driving environments and complex, changeable sensor information, some commonly used machine learning models may gradually fail to meet the requirements. Some researchers therefore construct their own frameworks and models according to specific application scenarios and requirements. Reference [20] considers the perception failures that occur during autonomous driving and proposes a prediction-oriented perception enhancement framework. The framework improves the performance of existing interaction-based trajectory prediction models in the face of real-world vehicle and sensor failures and effectively reduces unreasonable trajectory inputs. Reference [21] focuses on problems common to prediction methods, such as deployment difficulties, insufficient computing resources, and error propagation, and proposes a new multi-task parallel joint framework. Based on raw LiDAR data, it conducts vehicle detection, state assessment, tracking, and trajectory prediction simultaneously, which effectively enhances the capabilities of the model. Reference [22] puts forward a greedy trajectory prediction method for autonomous vehicles. This method is grounded in a discrete-time-dimensional Gaussian process–information entropy probability framework, together with a hazard index map, and uses them to estimate the intentions of surrounding vehicles and carry out trajectory prediction.

In recent years, the development of reinforcement learning techniques and generative models has also provided new ideas for vehicle trajectory prediction. Reference [23] elaborates on the basic principles of reinforcement learning and its applications in fields such as vehicle trajectory prediction and autonomous driving. It summarizes and deeply discusses reinforcement learning algorithms in recent years, such as imitation learning and deep Q-learning. Reference [24] combines the attention mechanism with inverse reinforcement learning. By using a social attention model based on the distance between vehicles to generate feature vectors and determine the weights of the attention mechanism, more surrounding vehicles can be taken into account. Reference [25] proposes a more generalized, secure, and robust hierarchical reinforcement learning framework. It adopts hierarchical double-deep Q-learning combined with LSTM to mitigate the impacts of noise and dynamic driving behaviors, thereby obtaining a more stable and versatile model.

In terms of generative models, Reference [26] presents an LSTM-based generative adversarial network (GAN) model that generates the predicted trajectories of pedestrians. Although it is not specifically designed for vehicles, it still provides inspiration for vehicle trajectory prediction. To address the insufficient extraction of vehicle hidden states by the social generative adversarial network, Reference [27] proposes an attention generative adversarial network. It uses the attention mechanism to weight the influence of surrounding vehicles on the target vehicle and is then trained jointly with the generative adversarial network to generate predicted trajectories that meet the constraints. Reference [28] proposes a context-aware method, ContextVAE, for multi-modal vehicle trajectory prediction. Based on a timewise variational autoencoder (VAE), it uses a dual-attention mechanism to simultaneously focus on the environmental context and the states of dynamic agents, thereby providing accurate trajectory predictions. Although reinforcement learning and generative models can produce more detailed and accurate results than other prediction methods, these techniques require a large amount of time and computational resources for training.

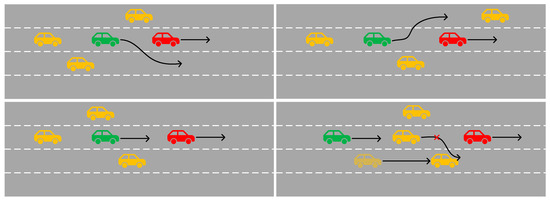

In complex traffic scenarios, the intentions of drivers may also be affected by the behaviors of other vehicles. Figure 1 shows several possible lane situations. In the scenario in the lower-right corner, the vehicle in front of the target vehicle is overtaking, but the fast-approaching vehicle in the adjacent lane makes that vehicle give up the intention of overtaking and return to its original lane. During this process, the target vehicle may switch from a decelerating state (providing enough space for the vehicle in front to overtake) to an accelerating state (occupying the space left after the vehicle in front overtakes), and then quickly switch back to a decelerating state (providing enough space for the vehicle in front that gives up overtaking). As the traffic scenarios become more complex, there will be more and more factors affecting the vehicle trajectories. In order to effectively improve the accuracy of vehicle trajectory prediction and the utilization rate of sensor data, it is very necessary to consider more influencing factors.

Figure 1.

The trajectories of vehicles in different scenarios. The green vehicles represent the target vehicles, the yellow vehicles are those with the same speed as the target vehicle, and the red vehicles are those with a higher speed than the target vehicle. The black arrows represent the next driving route and direction of each vehicle.

By synthesizing the achievements of researchers in related fields, in this study, we finally selected three main influencing factors to form a set of multiple influencing factors, namely, the time-based influence, spatial influence, and interaction influence of surrounding vehicles. Through a comprehensive analysis of these factors, the model can better understand vehicle behaviors. Compared with the existing neural network-based methods, this paper additionally adds the factor of the interaction influence of surrounding vehicles, which helps the model to more accurately predict the future trajectory of the target vehicle. In order to fully calculate the influence of time, space, and the motion state on vehicle trajectories, this paper proposes a vehicle trajectory prediction algorithm based on a hybrid prediction model on the basis of these multiple influencing factors. Firstly, the algorithm utilizes the feature extraction module based on the two-layer long short-term memory (LSTM) network to obtain the corresponding context information. Subsequently, the fusion module is employed to conduct an in-depth exploration of the features and fuse the obtained features. Finally, the fused features are output to the prediction module based on LSTM to directly output multi-step predictions in the future, that is, the predicted trajectories of the target vehicle. The main contributions of this paper are as follows:

1. A hierarchical feature extraction module is proposed to learn the influencing factors that may change the future trajectories of vehicles in complex driving scenarios, including the impact of the time, spatial distance, and motion patterns. Through comparative analysis, it can be seen that this method can effectively improve the accuracy of trajectory prediction;

2. A fusion module for mining and integrating features is proposed. Combined with social tensors, it extracts the hidden deep features in common spatial distances and motion patterns and fuses these features into mixed impact features so that the fusion module can capture multiple influencing factors;

3. A prediction module is proposed. It uses a single forward pass to directly output multi-step future predictions. While maintaining the prediction accuracy, it speeds up inference, enabling the model to meet the requirements of real-time prediction.

2. Materials and Methods

Before introducing the hybrid prediction model, it is necessary to analyze the trajectory prediction scenarios first. We regard the entire lane as a plane and establish a coordinate system, and the predicted coordinates of the target vehicle follow a bivariate Gaussian distribution. Then, the coordinates of all vehicles within a certain time period () can be expressed as follows:

Then, the trajectory that needs to be predicted in the future can be expressed as follows:

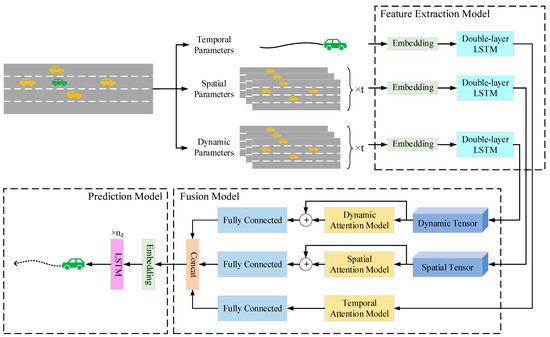

where represents the parameter set of all vehicles in the past time, represents the parameter set of all vehicles at time , represents the parameter set of the target vehicle. is the abscissa, is the ordinate, represents the lateral velocity, represents the longitudinal velocity, represents the lateral acceleration, and represents the longitudinal acceleration. is the total number of other vehicles. Since we only focus on the future trajectory of the target vehicle, the output () we want to obtain is all the coordinate information of the target vehicle within the future time. Therefore, the vehicle trajectory prediction problem can be simplified to inputting the historical trajectories of the target vehicle and its surrounding vehicles and outputting the predicted trajectories in the future. Based on this, this paper proposes a hybrid prediction model, and the framework of this model is shown in Figure 2. It mainly consists of three parts: a feature extraction module, which is used to extract the relevant features of the target vehicle and its surrounding vehicles; a fusion module, which is used to extract the hidden time impact features, spatial distance impact features, and motion pattern impact features and fuse them; a prediction module, which is used to output the predicted trajectory of the target vehicle.

Figure 2.

The structure diagram of the hybrid prediction model. The green vehicles represent the target vehicles, and the yellow vehicles are those with the same speed as the target vehicle.

2.1. Feature Extraction Module

This module consists of three embedding layers and multiple two-layer long short-term memory (LSTM) networks. Each embedding layer is composed of three parallel, fully connected layers, which are respectively responsible for processing the time-related parameters of the target vehicle (such as the coordinates, velocity, and acceleration), the spatial distance parameters of the surrounding vehicles, and the motion state parameters of the surrounding vehicles. The activation function chosen is LeakyReLU. The calculation methods of velocity and acceleration are as follows:

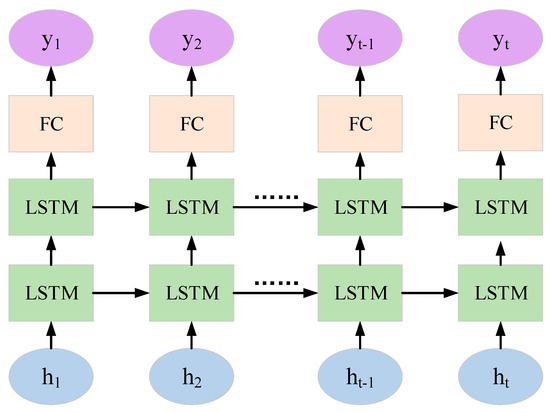

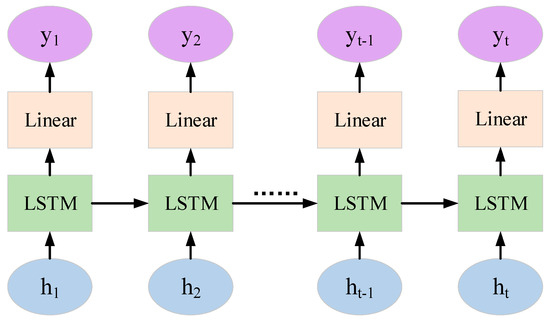

where and are the lateral and longitudinal velocities of the vehicle at time (), and are the lateral and longitudinal accelerations of the vehicle at time (), and is the sampling frequency. Since vehicle trajectory prediction belongs to long-distance dependence, traditional recurrent neural networks have difficulty dealing with it. Therefore, we adopt an improved recurrent neural network, namely, the long short-term memory (LSTM) network. It introduces a unique gate control mechanism, enabling it to retain information from a long time ago while also preventing insignificant content from entering the memory. Moreover, the multi-dimensional hidden outputs of the multiple time steps in the first layer of the two-layer LSTM are used as the time step inputs for the second layer, thereby helping the model capture more complex sequence features and enhancing the depth and learning ability of the model. Consequently, this paper selects the two-layer LSTM as the backbone of the feature extraction module and inputs the feature information processed by the embedding layer to extract hidden features. Its specific structure is shown in Figure 3.
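The velocity and acceleration computation described in this subsection can be sketched as backward finite differences scaled by the sampling frequency. This is only an illustration under stated assumptions: the function name `finite_difference_kinematics`, the use of a backward difference, and the zero padding of the first samples are not taken from the paper, whose exact formula may differ.

```python
def finite_difference_kinematics(xs, ys, freq_hz):
    """Estimate per-sample velocity and acceleration from positions.

    xs, ys  : lateral and longitudinal coordinates, one value per sample
    freq_hz : sampling frequency f in samples per second
    Returns four lists (vx, vy, ax, ay) with the same length as the input.
    Samples without enough predecessors are set to 0.0, which is an
    assumption made for this sketch.
    """
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    n = len(xs)
    vx, vy = [0.0] * n, [0.0] * n
    ax, ay = [0.0] * n, [0.0] * n
    # Velocity: displacement between consecutive samples times f.
    for t in range(1, n):
        vx[t] = (xs[t] - xs[t - 1]) * freq_hz
        vy[t] = (ys[t] - ys[t - 1]) * freq_hz
    # Acceleration: velocity change between consecutive samples times f.
    # Starts at t = 2 because vx[0] and vy[0] are only padding.
    for t in range(2, n):
        ax[t] = (vx[t] - vx[t - 1]) * freq_hz
        ay[t] = (vy[t] - vy[t - 1]) * freq_hz
    return vx, vy, ax, ay


# Example: a vehicle speeding up along the longitudinal axis, sampled at 5 Hz.
vx, vy, ax, ay = finite_difference_kinematics(
    [0.0, 0.0, 0.0, 0.0], [0.0, 2.0, 4.5, 7.5], freq_hz=5
)
```

Differencing amplifies measurement noise, so a practical pipeline would probably smooth the positions first; the source does not say whether such smoothing is applied.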

Figure 3.

The structure diagram of the double-layer long short-term memory network.

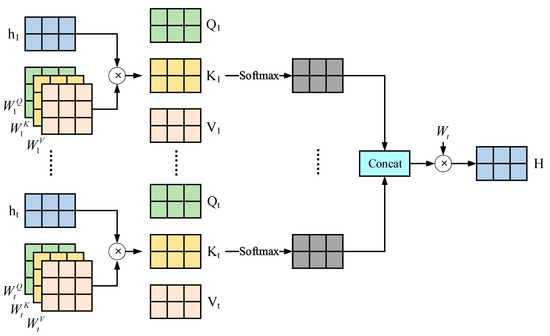

2.2. Fusion Module

Since we need to take more influencing factors into account in complex scenarios, we set up a fusion part to fuse multiple different features. The specific structure is shown in Figure 4. Firstly, the states of the target vehicle at different moments will have a certain impact on the trajectory [29]. It is crucial to effectively capture and analyze this temporal impact. Therefore, we choose the multi-head attention mechanism to construct a time attention module so as to learn the impact of time on vehicles. The attention mechanism captures and learns the weights of vehicles at different moments, and the multi-head attention mechanism enables the model to pay attention to information at different positions simultaneously and improves the stability of the model.
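To make the attention weighting over time steps concrete, the following pure-Python sketch computes single-head scaled dot-product attention. It is a simplified illustration, not the module used in the paper: it omits the learned query, key, and value projections and the concatenation across heads, and the function names are chosen here for illustration only.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention over sequences given as lists of vectors.

    queries : T_q vectors of length d_k
    keys    : T_k vectors of length d_k
    values  : T_k vectors of length d_v
    Returns (outputs, weights): T_q output vectors of length d_v and the
    T_q x T_k attention weights, where each row sums to 1.
    """
    d_k = len(keys[0])
    scale = math.sqrt(d_k)
    d_v = len(values[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, divided by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        row = softmax(scores)
        # Weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(row, values)) for j in range(d_v)]
        outputs.append(out)
        weights.append(row)
    return outputs, weights
```

In a multi-head setting, several such heads run in parallel on differently projected inputs and their outputs are concatenated, which is what lets the model attend to different positions at once.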

Figure 4.

The structure diagram of the multi-head attention.

We input the hidden feature ($H_t$) extracted from the target vehicle parameters in the previous part into the time attention module and then obtain the time impact feature ($F_t$). The specific calculation formula is as follows:

$$Q_i = H_t W_i^{Q}, \qquad K_i = H_t W_i^{K}, \qquad V_i = H_t W_i^{V}$$

$$\mathrm{head}_i = \mathrm{softmax}\left(\frac{Q_i K_i^{\top}}{\sqrt{d_k}}\right) V_i, \qquad F_t = \mathrm{Concat}\left(\mathrm{head}_1, \ldots, \mathrm{head}_h\right) W^{O}$$

where $Q_i$, $K_i$, and $V_i$ are the query matrix, key matrix, and value matrix of the scaled dot-product attention corresponding to the $i$-th attention head in the time attention module, respectively. $\sqrt{d_k}$ is the scaling factor, $h$ is the number of attention heads, $F_t$ is the finally obtained time impact feature, and the parameters starting with $W$ are all trainable parameters. Apart from time, the spatial distances between vehicles and the motion patterns of surrounding vehicles are also important factors influencing the trajectories. In the traditional driving process, these two parameters are also the main bases on which drivers make decisions. For this reason, in this part, we add social tensors on top of the multi-head attention mechanism to extract deeper features. The social tensor was originally proposed in S-LSTM to analyze the intentions and interactions among pedestrians, and it was adapted to the vehicle field in CS-LSTM [30]. Specifically, we construct a tensor that describes the spatial distance and a tensor that describes the vehicle motion state from the spatial distance features and motion state features obtained in the feature extraction module, respectively. The specific formulas are shown as follows:

These formulas involve the coordinate matrices of each other vehicle and of the target vehicle, an indicator function, and the total number of other vehicles. After that, the obtained tensors are passed into the spatial distance attention module and the motion pattern attention module, respectively, both of which are similar to the time attention module, to extract the hidden features in the tensors. Because this part needs to extract deeper features, we also add residual connections to alleviate the possible vanishing gradient problem. Eventually, we can obtain the spatial distance impact feature and the motion pattern impact feature:

By fusing the three types of features obtained from the above processes, the mixed impact feature can be obtained:

2.3. Prediction Module

Currently, most long time series predictions based on LSTM adopt the Seq2Seq method. This method uses an encoder–decoder architecture to process time series data. The encoder encodes the input sequence into a fixed-length vector, and then the decoder uses this vector to generate the output sequence. This method can capture the long-term dependence relationships in the sequence, but it is rather difficult to train and slows down the inference speed of the model. Moreover, since this method outputs the previous prediction time step together with the hidden state to the current prediction time step, it will also lead to an increase in the network error. Therefore, we adopt the direct multi-output method, as shown in Figure 5. This method directly outputs multi-step predictions in the future with only one forward step, saving the time for step-by-step iterative inference. The specific formula is shown as follows:

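where $\mathrm{LSTM}(\cdot)$ represents all the operations included in the LSTM network, and the parameters starting with $W$ are all trainable parameters. Specifically, this module first processes the time dimension of the obtained mixed impact features, converts it to the target prediction time length ($t_f$), and then inputs it into the LSTM network and outputs the corresponding prediction results. Finally, the entire hybrid prediction model is trained by minimizing the negative log-likelihood loss while updating the parameters of the model:

$$\mathcal{L} = -\sum_{k=1}^{t_f} \log P\left(x^{(t+k)}, y^{(t+k)} \mid \mu^{(t+k)}, \sigma^{(t+k)}, \rho^{(t+k)}\right)$$

where $\left(x^{(t+k)}, y^{(t+k)}\right)$ is the actual position of the target vehicle $k$ steps after the current time $t$, and $\mu$, $\sigma$, and $\rho$ are the mean, standard deviation, and correlation coefficient of the predicted bivariate Gaussian distribution, respectively.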
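As a hedged illustration of this training objective, the sketch below evaluates the negative log-likelihood of observed positions under a bivariate Gaussian parameterized by a mean, a standard deviation per axis, and a correlation coefficient. The function names and the plain-float interface are assumptions for this example; an actual PyTorch implementation would operate on tensors and typically constrains the network outputs (for example, with an exponential for the standard deviations and a tanh for the correlation).

```python
import math


def bivariate_gaussian_nll(x, y, mu_x, mu_y, sigma_x, sigma_y, rho):
    """Negative log-likelihood of the point (x, y) under a bivariate Gaussian.

    mu_x, mu_y       : predicted mean
    sigma_x, sigma_y : predicted standard deviations (must be positive)
    rho              : predicted correlation coefficient, strictly in (-1, 1)
    """
    if sigma_x <= 0 or sigma_y <= 0 or not -1.0 < rho < 1.0:
        raise ValueError("invalid Gaussian parameters")
    zx = (x - mu_x) / sigma_x
    zy = (y - mu_y) / sigma_y
    one_minus_rho2 = 1.0 - rho * rho
    # Log of the normalizing constant 2*pi*sigma_x*sigma_y*sqrt(1 - rho^2).
    log_norm = math.log(2.0 * math.pi * sigma_x * sigma_y * math.sqrt(one_minus_rho2))
    # Quadratic form of the exponent.
    quad = (zx * zx - 2.0 * rho * zx * zy + zy * zy) / (2.0 * one_minus_rho2)
    return log_norm + quad


def trajectory_nll(actual_points, predicted_params):
    """Sum the per-step NLL over a predicted trajectory.

    actual_points    : list of (x, y) ground-truth positions
    predicted_params : list of (mu_x, mu_y, sigma_x, sigma_y, rho), one per step
    """
    if len(actual_points) != len(predicted_params):
        raise ValueError("one parameter tuple is required per time step")
    return sum(
        bivariate_gaussian_nll(x, y, *params)
        for (x, y), params in zip(actual_points, predicted_params)
    )
```

The loss is smallest when the predicted mean sits on the observed position with a small but well-calibrated spread, so it penalizes both inaccurate and overconfident predictions.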

Figure 5.

The structure diagram of the direct multi-step prediction model.

3. Experiment Results and Discussion

3.1. Datasets

To evaluate the hybrid prediction model proposed in this paper, we used two parts, namely, I-80 and US-101, from the Next Generation Simulation (NGSIM) dataset of highway driving in the United States collected by the Federal Highway Administration (FHWA) [31]. This dataset records the driving conditions of all vehicles on specified roads within a certain period of time. The specific time periods are from 4:00 pm to 4:15 pm, from 5:00 pm to 5:15 pm, and from 5:15 pm to 5:30 pm, totaling 45 min. The sampling frequency of the data is 10 Hz, and the data include information such as vehicle coordinates and speeds. It can effectively restore traffic scene information under different levels of complexity and simultaneously verify the robustness of the algorithm proposed in this paper.

Compared with the commonly used KITTI and nuScenes datasets [32], the most prominent feature of the NGSIM dataset is that its data are collected by high-precision sensors installed at the road scene, rather than from the perspective of the vehicle itself. Therefore, for complex traffic scenarios, the NGSIM dataset, which is based on the road segment perspective, can provide a more objective and accurate data source for vehicle trajectory prediction research.

In the selected parts of I-80 and US-101, 70% of the content was used as the training set, and 30% was used as the test set. Regarding the pre-processing scheme, since we needed to compare it with other neural network schemes, to avoid errors in the final experimental results caused by inconsistent pre-processing steps, we followed the processing scheme proposed by Nachiket Deo et al. in Reference [30]. In this scheme, the original data are downsampled to 5 Hz, and the duration of each trajectory is cropped to 8 s. Among these data, the first 3 s are used as the historical trajectory to extract relevant information, and the subsequent 5 s are used for comparison with the prediction results, so as to evaluate the error between the predicted results and the actual results.
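The windowing step of this pre-processing scheme can be sketched as follows. The function name `split_history_future`, the list-based track representation, and the resulting counts of 15 history and 25 future samples (3 s and 5 s at 5 Hz) are assumptions for illustration; the reference implementation may count the current frame differently.

```python
def split_history_future(track, start, raw_hz=10, target_hz=5,
                         history_s=3, future_s=5):
    """Downsample one vehicle track and split it into history and future.

    track : list of per-frame samples recorded at raw_hz
    start : index of the first raw frame of the window
    Returns (history, future), or None if the track is too short to cover
    the full window from `start`.
    """
    if raw_hz % target_hz != 0:
        raise ValueError("raw_hz must be a multiple of target_hz")
    stride = raw_hz // target_hz        # keep every 2nd frame for 10 Hz -> 5 Hz
    n_hist = history_s * target_hz      # 15 samples of observed trajectory
    n_fut = future_s * target_hz        # 25 samples of ground-truth future
    stop = start + (n_hist + n_fut) * stride
    window = track[start:stop:stride]
    if len(window) < n_hist + n_fut:
        return None                     # not enough frames left in this track
    return window[:n_hist], window[n_hist:]


# Example with frame indices standing in for real samples.
history, future = split_history_future(list(range(100)), start=0)
```

Sliding `start` along each track yields the individual 8 s training and test samples.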

3.2. Evaluation Metrics

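In the evaluation stage, we selected three commonly used evaluation metrics in the field of vehicle trajectory prediction, namely, the average displacement error (ADE) [33], final displacement error (FDE) [33], and root mean square error (RMSE) [34]. The calculation methods are shown as follows:

$$\mathrm{ADE} = \frac{1}{n\,t_f} \sum_{i=1}^{n} \sum_{k=1}^{t_f} \left\| \hat{\mathbf{y}}_i^{(k)} - \mathbf{y}_i^{(k)} \right\|_2$$

$$\mathrm{FDE} = \frac{1}{n} \sum_{i=1}^{n} \left\| \hat{\mathbf{y}}_i^{(t_f)} - \mathbf{y}_i^{(t_f)} \right\|_2$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n\,t_f} \sum_{i=1}^{n} \sum_{k=1}^{t_f} \left\| \hat{\mathbf{y}}_i^{(k)} - \mathbf{y}_i^{(k)} \right\|_2^2}$$

where $n$ is the number of evaluated trajectories, $t_f$ is the number of predicted time steps, and $\hat{\mathbf{y}}_i^{(k)}$ and $\mathbf{y}_i^{(k)}$ are the predicted and actual positions of the target vehicle in the $i$-th trajectory at the $k$-th predicted step.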
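A minimal sketch of how these three metrics can be computed from predicted and actual trajectories is given below. It uses the common textbook definitions (mean Euclidean error over all steps, mean Euclidean error at the last step, and root of the mean squared Euclidean error); the function name and the list-of-tuples interface are assumptions, and the paper may aggregate per prediction horizon rather than over the whole trajectory.

```python
import math


def displacement_errors(predicted, actual):
    """Compute (ADE, FDE, RMSE) over a set of trajectories.

    predicted, actual : lists of trajectories, each a list of (x, y) points;
                        matching trajectories must have the same length.
    """
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("need the same non-zero number of trajectories")
    total_dist = total_sq = total_final = 0.0
    n_points = 0
    for pred, true in zip(predicted, actual):
        if len(pred) != len(true) or not pred:
            raise ValueError("trajectories must be non-empty and equal in length")
        # Euclidean distance between prediction and ground truth at each step.
        dists = [math.hypot(px - tx, py - ty)
                 for (px, py), (tx, ty) in zip(pred, true)]
        total_dist += sum(dists)
        total_sq += sum(d * d for d in dists)
        total_final += dists[-1]
        n_points += len(dists)
    ade = total_dist / n_points           # average over every predicted step
    fde = total_final / len(predicted)    # average over final steps only
    rmse = math.sqrt(total_sq / n_points)
    return ade, fde, rmse
```

Because RMSE squares the errors before averaging, it penalizes occasional large deviations more strongly than ADE does.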

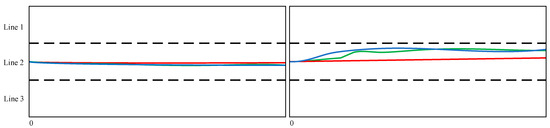

In terms of the performance comparison, we chose three models, namely, V-LSTM [30], S-LSTM [35], and CS-LSTM [30]. Among them, V-LSTM only conducts trajectory prediction based on the time impact of the target vehicle itself and uses LSTM for trajectory prediction, yet it lacks the analysis of the influence of surrounding vehicles. S-LSTM transfers the pedestrian trajectory prediction method to the vehicle trajectory prediction field and also has the problem of insufficient analysis of the time impact on the target vehicle itself to some extent. CS-LSTM is an improvement based on S-LSTM, replacing the fully connected layer with a convolutional pooling network. The performances of the different models are shown in Table 1, Table 2 and Table 3. The visualization results are shown in Figure 6.

Table 1.

Comparison of RMSEs among different models.

Table 2.

Comparison of ADEs among different models.

Table 3.

Comparison of FDEs among different models.

Figure 6.

Visualization of the prediction results. For ease of viewing, we connected the predicted points to draw the corresponding prediction curves and aligned the starting point of the target vehicle with the left edge of the plot. The black dotted lines represent the lane lines, the blue curve is the real trajectory, the green curve is the trajectory predicted by our model, and the red curve is the trajectory predicted by the CS-LSTM model.

3.3. Analysis

The hybrid prediction model proposed in this paper is implemented in Python with the PyTorch deep learning framework. To simplify the analysis, the research scope was limited to the two lanes adjacent to the target vehicle, and the considered road length both in front of and behind the target vehicle was set to 90 feet. During training, we used the Adam optimizer with a learning rate of 0.001, the LeakyReLU activation function, and a batch size of 128. Regarding the model parameters, in the feature extraction module, the fully connected layer expands the dimension of the original features to 32, and each LSTM has 2 layers. In the fusion module, each of the three attention modules has 3 heads. In the prediction module, the fully connected layer expands the time dimension to 25, and the LSTM has 1 layer with 128 hidden units.
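For reference, the reported settings can be collected in a single configuration object. The class and field names below are hypothetical and only mirror the values stated in this subsection; they are not taken from the authors' code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HybridModelConfig:
    """Hyperparameters as reported in the text (field names are illustrative)."""
    learning_rate: float = 0.001        # Adam optimizer
    batch_size: int = 128
    embedding_dim: int = 32             # output size of the embedding layers
    extractor_lstm_layers: int = 2      # two-layer LSTM in feature extraction
    attention_heads: int = 3            # heads in each of the three attention modules
    prediction_steps: int = 25          # time dimension after expansion
    predictor_lstm_layers: int = 1
    predictor_hidden_units: int = 128
    lane_range_feet: float = 90.0       # range ahead of and behind the target


config = HybridModelConfig()
```

One practical caveat when reproducing this setup: PyTorch's built-in `torch.nn.MultiheadAttention` requires the embedding dimension to be divisible by the number of heads, so a 32-dimensional feature with 3 heads would need a custom attention layer or a different internal dimension. The source does not say which approach was used.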

From the perspective of the root mean square error (RMSE), as the simplest model, the RMSE of the V-LSTM is much larger than those of the other three methods. Even compared with the S-LSTM, which has the second-worst performance, the gap reaches 34%. This indicates that only considering the time impact of the vehicle itself is very inaccurate and also shows that surrounding vehicles have a relatively obvious impact on the future trajectory of the target vehicle. S-LSTM and CS-LSTM, which take into account both the spatial interaction and time effect simultaneously, exhibit relatively higher accuracies. However, they still fall short compared to the model proposed in this paper. Compared with S-LSTM and CS-LSTM, the average RMSE values of our model are reduced by 9.7% and 6.1%, respectively.

Regarding the aspects of the average displacement error (ADE) and final displacement error (FDE), the accuracy of the trajectory prediction by S-LSTM and CS-LSTM has been significantly improved. Our model further considers the impact of the motion patterns among vehicles on this basis, so the accuracy has been improved to some extent. The indicators within all time ranges are the best, which also proves that the three factors influencing vehicle trajectory prediction proposed by us are reasonable and necessary, namely, the vehicle states at different moments, the spatial distances between vehicles, and the motion patterns of surrounding vehicles. Compared with the relatively better-performing CS-LSTM, the average displacement error of our model is reduced by 9.4%, and the average final displacement error is reduced by 7.3%.

3.4. Ablation Experiment

The model in this paper takes into account three influencing factors, namely, the temporal influence, the spatial influence, and the interaction influence of surrounding vehicles. To demonstrate the effectiveness of the newly added influencing factor and of the direct multi-output prediction module, we constructed two variants of the model and evaluated them on the same dataset. One is the model lacking the features of the surrounding vehicle interaction influence (abbreviated as Lack-SVI), and the other is the model that uses a Seq2Seq multi-step prediction module in the prediction stage (abbreviated as Seq2Seq). The comparison of the prediction results of these two variants with those of our model is shown in Table 4. For ease of representation, in each cell of the table, the first number is the average displacement error (ADE), the second number is the final displacement error (FDE), and the third number is the root mean square error (RMSE).

Table 4. The comparison results of the ablation experiment.

In the initial period, the prediction performances of the three models did not differ significantly. However, as the prediction time increased, the variant models gradually failed to capture the key information around the target vehicle, and their prediction errors grew steadily larger. This supports the effectiveness of combining the three influencing factors: together they capture the surrounding feature information more comprehensively, thereby reducing prediction errors and improving the prediction performance of the model.
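The growth of error with prediction time can be examined by evaluating the displacement errors over successively longer horizons. The sketch below is illustrative only: the sampling rate `hz`, the horizon list, and the toy trajectories are assumptions introduced here, not values from the experiments.

```python
import math


def error_by_horizon(pred, truth, hz, horizons_s):
    """Return {horizon_s: (ADE, FDE)} over the first `horizon_s` seconds.

    `hz` is the assumed number of trajectory points per second.
    """
    results = {}
    for h in horizons_s:
        n = int(round(h * hz))  # number of steps inside this horizon
        if n < 1 or n > len(pred) or n > len(truth):
            raise ValueError(f"horizon {h}s is outside the trajectory length")
        errs = [math.hypot(p[0] - t[0], p[1] - t[1])
                for p, t in zip(pred[:n], truth[:n])]
        results[h] = (sum(errs) / n, errs[-1])
    return results


# Toy example: the prediction drifts laterally by 0.5 per step.
truth = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
pred = [(1.0, 0.5), (2.0, 1.0), (3.0, 1.5)]

curve = error_by_horizon(pred, truth, hz=1, horizons_s=[1, 2, 3])
for horizon, (ade_h, fde_h) in curve.items():
    print(horizon, ade_h, fde_h)
```

In this toy case both errors increase with the horizon, which is the qualitative pattern described for the variant models.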

In addition, to verify that the number of LSTM layers in the model is reasonable, we varied the number of layers (n1) of the LSTM in the feature extraction module and the number of layers (n2) of the LSTM in the prediction module. We then tested each configuration in the same way as in the ablation experiment on the variant models. The test results are shown in Table 5. Based on these results, the network configuration of the model was set to n1 = 2 and n2 = 1.

Table 5. Error comparison of different numbers of LSTM layers.
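The layer-count test can be viewed as a small grid search over (n1, n2). The following sketch shows only the selection logic. The `evaluate` callback is a hypothetical placeholder for training and validating the model with a given configuration, and the toy scoring function is arbitrary and unrelated to the measurements in Table 5.

```python
from itertools import product


def sweep_layers(evaluate, n1_options, n2_options):
    """Score every (n1, n2) pair and return (best_pair, all_scores).

    `evaluate(n1, n2)` must return a single error value (lower is better).
    """
    scores = {(n1, n2): evaluate(n1, n2)
              for n1, n2 in product(n1_options, n2_options)}
    best = min(scores, key=scores.get)
    return best, scores


def toy_evaluate(n1, n2):
    # Arbitrary stand-in score, NOT experimental data. In practice this
    # would train the model with n1 and n2 layers and return its error.
    return (n1 - 1) ** 2 + (n2 - 2) ** 2


best, scores = sweep_layers(toy_evaluate, [1, 2, 3], [1, 2, 3])
print(best, scores[best])
```

Because each configuration yields three metrics (ADE, FDE, and RMSE), a real sweep would also need a rule for combining or prioritizing them; the single scalar used here is a simplifying assumption.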

4. Conclusions

In intelligent driving, vehicle trajectory prediction is a prominent and highly challenging research area. However, current research often considers too few influencing factors. Most existing models base their predictions either on the state changes of the vehicle itself or on the spatial elements in its vicinity, which may suffice for relatively simple driving scenarios. In complex driving situations, however, the intentions of surrounding vehicles become crucial.

To address this, this paper proposes a vehicle trajectory prediction algorithm based on a hybrid prediction model. The algorithm incorporates three influencing factors: the vehicle states at different moments, the spatial distances between vehicles, and the motion patterns of the surrounding vehicles. The objective is to improve the limited prediction accuracy that is common in vehicle trajectory prediction. We used the publicly accessible US-101 and I-80 datasets for training and analysis. The results indicate that the proposed model outperforms the comparison models on three evaluation metrics, namely, the average displacement error, final displacement error, and root mean square error. This suggests that the proposed algorithm can effectively improve the accuracy of trajectory prediction.

Nonetheless, the proposed algorithm has certain limitations. For instance, the model considers only vehicles as the principal entities, which makes it difficult to handle various irregularly shaped obstacles (such as motorcycles and bicycles). Recently, graph neural networks have attracted our interest. They offer advantages such as taking neighbor information into account, weight sharing, and strong generalization capabilities, and they are also applicable to vehicle trajectory prediction. Our next step is to integrate graph neural networks with LSTM to conduct more in-depth research on the recognition of the vehicle’s surrounding environment and on vehicle trajectory prediction [36].

Author Contributions

Conceptualization, T.W.; data curation, T.W., X.C., and Y.X.; formal analysis, T.W.; investigation, T.W., X.C., and Y.X.; methodology, Y.F.; project administration, L.L. and Z.H.; resources, X.C. and Y.X.; supervision, L.L. and Z.H.; writing—original draft, Y.F.; writing—review and editing, Y.F. All authors have read and agreed to the published version of the manuscript.

Funding

The authors appreciate the financial support from the Beijing Municipal Natural Science Foundation under Grant 4232004; the National Natural Science Foundation of China Youth Fund under Grants 62001034 and 62102130; the Hebei Provincial Natural Science Foundation Youth Fund under Grant F2020204003; the Hebei Province Youth Top Talent Program Project under Grant BJ2019008; and the Natural Science Basic Research Project of Shaanxi Province under Grant 2023-JC-QN-0516.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Restrictions apply to the availability of these data. Data were obtained from the Federal Highway Administration and are available at https://data.transportation.gov/Automobiles/Next-Generation-Simulation-NGSIM-Vehicle-Trajector/8ect-6jqj (accessed on 24 November 2020) with the permission of the Federal Highway Administration.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Fernando, T.; Denman, S.; Sridharan, S.; Fookes, C. Deep Inverse Reinforcement Learning for Behavior Prediction in Autonomous Driving: Accurate Forecasts of Vehicle Motion. IEEE Signal Process. Mag. 2021, 38, 87–96.

- Zhao, H.; Gao, J.; Lan, T.; Sun, C.; Sapp, B.; Varadarajan, B.; Shen, Y.; Shen, Y.; Chai, Y.; Schmid, C.; et al. TNT: Target-driven trajectory prediction. In Proceedings of the 2020 Conference on Robot Learning (CoRL), London, UK, 16–18 November 2021; pp. 895–904.

- Zhang, H.; Liu, J.; Zhao, H.; Wang, P.; Kato, N. Blockchain-Based Trust Management for Internet of Vehicles. IEEE Trans. Emerg. Top. Comput. 2021, 9, 1397–1409.

- Brouwer, N.; Kloeden, H.; Stiller, C. Comparison and evaluation of pedestrian motion models for vehicle safety systems. In Proceedings of the IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Cologne, Germany, 1 November 2016; pp. 1–6.

- Jin, B.; Jiu, B.; Su, T.; Liu, H.; Liu, G. Switched Kalman filter-interacting multiple model algorithm based on optimal autoregressive model for maneuvering target tracking. IET Radar Sonar Navigat. 2015, 9, 199–209.

- Althoff, M.; Mergel, A. Comparison of Markov chain abstraction and Monte Carlo simulation for the safety assessment of autonomous cars. IEEE Trans. Intell. Transp. Syst. 2011, 12, 1237–1247.

- Qiao, S.; Shen, D.; Wang, X.; Han, N.; Zhu, W. A self-adaptive parameter selection trajectory prediction approach via hidden Markov models. IEEE Trans. Intell. Transp. Syst. 2015, 16, 284–296.

- Kumar, P.; Perrollaz, M.; Lefèvre, S.; Laugier, C. Learning-based approach for online lane change intention prediction. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, QLD, Australia, 23–26 June 2013; pp. 797–802.

- Deng, Q.; Söffker, D. Improved driving behaviors prediction based on fuzzy logic-hidden Markov model (FL-HMM). In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, QLD, Australia, 26–30 June 2018; pp. 2003–2008.

- Wang, M.; Zhang, L.; Chen, J.; Zhang, Z.; Wang, Z.; Cao, D. A Hybrid Trajectory Prediction Framework for Automated Vehicles with Attention Mechanisms. IEEE Trans. Transp. Electrif. 2024, 10, 6178–6194.

- Sun, D.; Guo, H.; Wang, W. Vehicle Trajectory Prediction Based on Multivariate Interaction Modeling. IEEE Access 2023, 11, 131639–131650.

- Wang, X.; Hu, J.; Wei, C.; Li, L.; Li, Y.; Du, M. A Novel Lane-Change Decision-Making with Long-Time Trajectory Prediction for Autonomous Vehicle. IEEE Access 2023, 11, 137437–137449.

- Qie, T.; Wang, W.; Yang, C.; Li, Y. A Self-Trajectory Prediction Approach for Autonomous Vehicles Using Distributed Decouple LSTM. IEEE Trans. Ind. Inform. 2024, 20, 6708–6717.

- Messaoud, K.; Yahiaoui, I.; Verroust-Blondet, A.; Nashashibi, F. Attention based vehicle trajectory prediction. IEEE Trans. Intell. Veh. 2021, 6, 175–185.

- Chen, X.; Zhang, H.; Zhao, F.; Hu, Y.; Tan, C.; Yang, J. Intention-aware vehicle trajectory prediction based on spatial-temporal dynamic attention network for Internet of Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19471–19483.

- Guo, H.; Meng, Q.; Cao, D.; Chen, H.; Liu, J.; Shang, B. Vehicle trajectory prediction method coupled with ego vehicle motion trend under dual attention mechanism. IEEE Trans. Instr. Meas. 2022, 71, 2507516.

- Wu, Y.; Chen, G.; Li, Z.; Zhang, L.; Xiong, L.; Liu, Z.; Knoll, A. HSTA: A hierarchical spatio-temporal attention model for trajectory prediction. IEEE Trans. Veh. Technol. 2021, 70, 11295–11307.

- Li, Y.; Liu, B.; Zhang, W. Driving-Related Cognitive Abilities Prediction Based on Transformer’s Multimodal Fusion Framework. Sensors 2025, 25, 174.

- Jin, F.; Liu, K.; Liu, C.; Cheng, T.; Zhang, H.; Lee, V.C.S. A Cooperative Vehicle Localization and Trajectory Prediction Framework Based on Belief Propagation and Transformer Model. IEEE Trans. Consum. Electron. 2024, 70, 2746–2758.

- Li, Y.; Jiang, Y.; Xiong, Z.; Wu, X. Improving Interaction-Based Vehicle Trajectory Prediction via Handling Sensing Failures. IEEE Sens. J. 2024, 24, 22907–22915.

- Meng, Q.; Guo, H.; Li, J.; Dai, Q.; Liu, J. Vehicle Trajectory Prediction Method Driven by Raw Sensing Data for Intelligent Vehicles. IEEE Trans. Intell. Veh. 2023, 8, 3799–3812.

- Zhao, Y.; Zhang, Z.; Li, B.; Zhang, Z.; Wang, Y. Greedy Trajectory Prediction of Autonomous Vehicles Based on DTDGP-IE Framework and Hazard Index Graph. IEEE Trans. Veh. Technol. 2024, 73, 9525–9535.

- Kiran, B.R.; Sobh, I.; Talpert, V.; Mannion, P.; Al Sallab, A.A.; Yogamani, S.; Pérez, P. Deep Reinforcement Learning for Autonomous Driving: A Survey. IEEE Trans. Intell. Transp. Syst. 2022, 23, 4909–4926.

- Lu, L.; Ning, Q.; Qiu, Y.; Chu, D. Vehicle Trajectory Prediction Model Based on Attention Mechanism and Inverse Reinforcement Learning. In Proceedings of the 2022 IEEE 34th International Conference on Tools with Artificial Intelligence (ICTAI), Macao, China, 31 October–2 November 2022; pp. 1160–1166.

- Naveed, K.B.; Qiao, Z.; Dolan, J.M. Trajectory Planning for Autonomous Vehicles Using Hierarchical Reinforcement Learning. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 601–606.

- Guan, H.; Guo, P. Research on pedestrian trajectory prediction by GAN model based on LSTM. In Proceedings of the 2023 IEEE 3rd International Conference on Power, Electronics and Computer Applications (ICPECA), Shenyang, China, 29–31 January 2023; pp. 1400–1405.

- Wang, Y.; Chen, W.; Wang, C.; Wang, S. Vehicle Trajectory Prediction Based on Attention Mechanism and GAN. In Proceedings of the 2021 7th International Conference on Systems and Informatics (ICSAI), Chongqing, China, 13–15 November 2021; pp. 1–6.

- Xu, P.; Hayet, J.-B.; Karamouzas, I. Context-Aware Timewise VAEs for Real-Time Vehicle Trajectory Prediction. IEEE Robot. Autom. Lett. 2023, 8, 5440–5447.

- Shi, L.; Wang, L.; Long, C.; Zhou, S.; Zhou, M.; Niu, Z.; Hua, G. SGCN: Sparse graph convolution network for pedestrian trajectory prediction. In Proceedings of the 2021 IEEE/CVF Conference Computer Vision Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8990–8999.

- Deo, N.; Trivedi, M.M. Convolutional social pooling for vehicle trajectory prediction. In Proceedings of the IEEE/CVF Conference Computer Vision Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 1549–15498.

- Traffic Analysis Tools: Next Generation Simulation-FHWA Operations. Available online: https://ops.fhwa.dot.gov/trafficanalysistools/ngsim.html (accessed on 24 November 2020).

- Stepanyants, V.; Andzhusheva, M.; Romanov, A. A Pipeline for Traffic Accident Dataset Development. In Proceedings of the 2023 International Russian Smart Industry Conference (SmartIndustryCon), Sochi, Russian Federation, 27–31 March 2023; pp. 621–626.

- Wen, F.; Li, M.; Wang, R. Social Transformer: A Pedestrian Trajectory Prediction Method based on Social Feature Processing Using Transformer. In Proceedings of the 2022 International Joint Conference on Neural Networks (IJCNN), Padua, Italy, 18–23 July 2022; pp. 1–7.

- Wang, Q.; Tan, X.; Zhu, C. Vehicle Trajectory Prediction on Interaction Driving Scenarios. In Proceedings of the 2023 IEEE 11th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 8–10 December 2023; pp. 1791–1795.

- Alahi, A.; Goel, K.; Ramanathan, V.; Robicquet, A.; Fei-Fei, L.; Savarese, S. Social LSTM: Human trajectory prediction in crowded spaces. In Proceedings of the IEEE Conference Computer Vision Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 961–971.

- Yang, M.; Zhu, H.; Wang, T.; Cai, J.; Weng, X.; Feng, H.; Fang, K. Vehicle Interactive Dynamic Graph Neural Network-Based Trajectory Prediction for Internet of Vehicles. IEEE Internet Things J. 2024, 11, 35777–35790.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).