Acoustic Waves and Their Application in Modern Fire Detection Using Artificial Vision Systems: A Review

Abstract

1. Introduction

2. Mathematical Laws of Acoustic Wave Propagation

2.1. The Pattern of Decrease in the Intensity of a Propagating Acoustic Wave

2.2. The Influence of Temperature Variability in the Indoor Air Environment, as a Result of Season and Day Changes, on the Change in the Speed of Sound

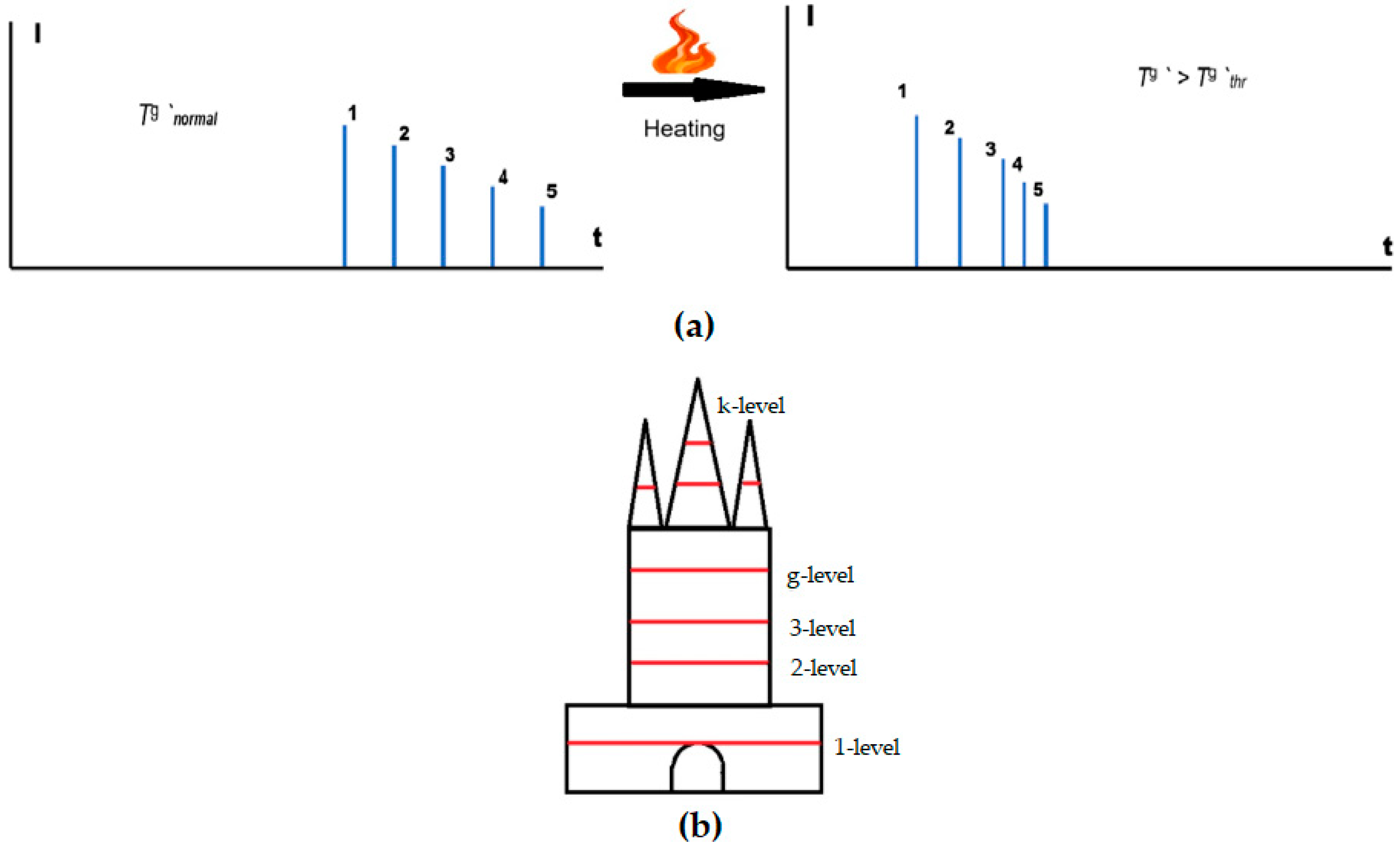

2.3. The Mathematical Model of Acoustic Temperature Control in an Isolated Room, and the Detection of Fires and Outbreaks by Means of Pulse Acoustic Probing

3. The Use of Vision Robots to Serve Humans

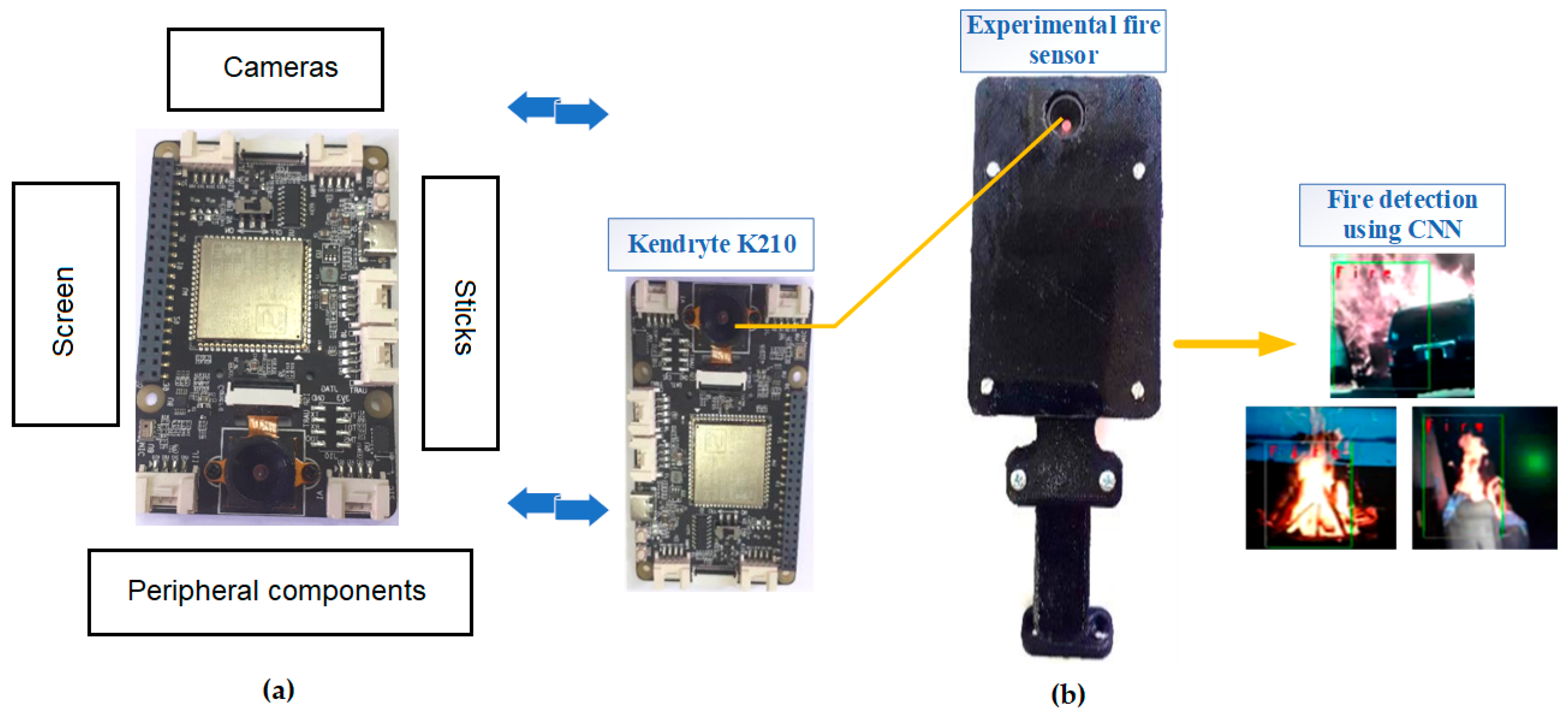

3.1. Using State-of-the-Art Techniques for Flame Detection

3.2. Fire Detection Techniques Using Artificial Vision Systems and Video Cameras

3.3. Fire Detection Categories

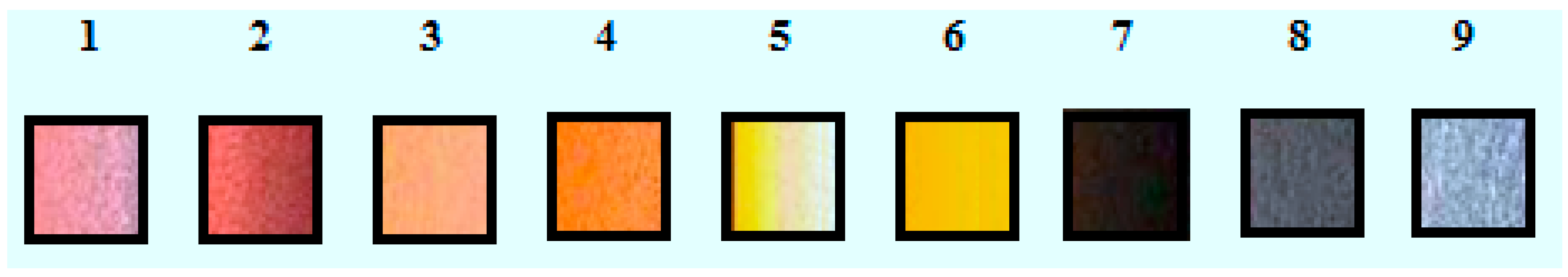

3.4. Pixel Detection Methods

4. Unresolved Problems

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- World Fire Statistics. Report No 29. Center for Fire Statistics of CTIF 2024. Available online: https://www.ctif.org/sites/default/files/2024-06/CTIF_Report29_ERG.pdf (accessed on 1 November 2024).

- Tryhuba, A.; Ratushnyi, A.; Lub, P.; Rudynets, M.; Visyn, O. The model of the formation of values and the information system of their determination in the projects of the creation of territorial emergency and rescue structures. CEUR Workshop Proc. 2023, 3453, 59–70. Available online: https://ceur-ws.org/Vol-3453/ (accessed on 1 November 2024).

- Myroshnychenko, A.; Loboichenko, V.; Divizinyuk, M.; Levterov, A.; Rashkevich, N.; Shevchenko, O.; Shevchenko, R. Application of Up-to-Date Technologies for Monitoring the State of Surface Water in Populated Areas Affected by Hostilities. Bull. Georgian Natl. Acad. Sci. 2022, 16, 50–59. [Google Scholar]

- Kochmar, I.M.; Karabyn, V.V.; Kordan, V.M. Ecological and geochemical aspects of thermal effects on argillites of the Lviv-Volyn coal basin spoil tips. Nauk. Visnyk Natsionalnoho Hirnychoho Univ. 2024, 3, 100–107. [Google Scholar] [CrossRef]

- NFPA. NFPA 10: Standard for Portable Fire Extinguishers. Available online: https://www.nfpa.org/codes-and-standards/all-codes-and-standards/list-of-codes-and-standards/detail?code=10 (accessed on 1 November 2024).

- ISO 3941:2007; Classification of Fires, 2nd ed. International Organization for Standardization: Geneva, Switzerland, 2007.

- Jahura, F.T.; Mazumder, N.-U.-S.; Hossain, M.T.; Kasebi, A.; Girase, A.; Ormond, R.B. Exploring the Prospects and Challenges of Fluorine-Free Firefighting Foams (F3) as Alternatives to Aqueous Film-Forming Foams (AFFF): A Review. ACS Omega 2024, 9, 37430–37444. [Google Scholar] [CrossRef]

- Sheng, Y.; Zhang, S.; Hu, D.; Ma, L.; Li, Y. Investigation on Thermal Stability of Highly Stable Fluorine-Free Foam Co-Stabilized by Silica Nanoparticles and Multi-Component Surfactants. J. Mol. Liq. 2023, 382, 122039. [Google Scholar] [CrossRef]

- Dadashov, I.F.; Loboichenko, V.M.; Strelets, V.M. About the environmental characteristics of fire extinguishing substances used in extinguishing oil and petroleum products. SOCAR Proc. 2020, 1, 79–84. [Google Scholar] [CrossRef]

- Gurbanova, M.; Loboichenko, V.; Leonova, N.; Strelets, V.; Shevchenko, R. Comparative Assessment of the Ecological Characteristics of Auxiliary Organic Compounds in the Composition of Foaming Agents Used for Fire Fighting. Bull. Georgian Natl. Acad. Sci. 2020, 14, 58–66. [Google Scholar]

- Zhao, J.; Yang, J.; Hu, Z.; Kang, R.; Zhang, J. Development of an Environmentally Friendly Gel Foam and Assessment of Its Thermal Stability and Fire Suppression Properties in Liquid Pool Fires. Colloids Surf. A Physicochem. Eng. Asp. 2024, 692, 133990. [Google Scholar] [CrossRef]

- Shcherbak, O.; Loboichenko, V.; Skorobahatko, T.; Shevchenko, R.; Levterov, A.; Pruskyi, A.; Khrystych, V.; Khmyrova, A.; Fedorchuk-Moroz, V.; Bondarenko, S. Study of Organic Carbon-Containing Additives to Water Used in Fire Fighting, in Terms of Their Environmental Friendliness. Fire Technol. 2024, 60, 3739–3765. [Google Scholar] [CrossRef]

- Atay, G.Y.; Loboichenko, V.; Wilk-Jakubowski, J.Ł. Investigation of calcite and huntite/hydromagnesite mineral in co-presence regarding flame retardant and mechanical properties of wood composites. Cem. Wapno Beton. 2024, 29, 40–53. [Google Scholar] [CrossRef]

- Nabipour, H.; Shi, H.; Wang, X.; Hu, X.; Song, L.; Hu, Y. Flame retardant Cellulose-Based hybrid hydrogels for firefighting and fire prevention. Fire Technol. 2022, 58, 2077–2091. [Google Scholar] [CrossRef]

- Zhao, J.; Zhang, Z.; Liu, S.; Tao, Y.; Liu, Y. Design and research of an articulated tracked firefighting robot. Sensors 2022, 22, 5086. [Google Scholar] [CrossRef] [PubMed]

- Li, N.; Shi, Z.; Jin, J.; Feng, J.; Zhang, A.; Xie, M.; Min, L.; Zhao, Y.; Lei, Y. Design of Intelligent Firefighting and Smart Escape Route Planning System Based on Improved Ant Colony Algorithm. Sensors 2024, 24, 6438. [Google Scholar] [CrossRef]

- Cliftmann, J.M.; Anderson, B.E. Remotely extinguishing flames through transient acoustic streaming using time reversal focusing of sound. Sci. Rep. 2024, 14, 30049. [Google Scholar] [CrossRef] [PubMed]

- Shi, X.; Zhang, J.; Zhang, Y.; Zhang, Y.; Zhao, Y.; Sun, K.; Li, S.; Yu, Y.; Jiao, F.; Cao, W. Combustion and Extinction Characteristics of an Ethanol Pool Fire Perturbed by Low–Frequency Acoustic Waves. Case Stud. Therm. Eng. 2024, 60, 104829. [Google Scholar] [CrossRef]

- Shi, X.; Zhang, Y.; Chen, X.; Zhang, Y.; Ma, Q.; Lin, G. The Response of an Ethanol Pool Fire to Transverse Acoustic Waves. Fire Saf. J. 2021, 125, 103416. [Google Scholar] [CrossRef]

- Taspinar, Y.S.; Koklu, M.; Altin, M. Acoustic-Driven Airflow Flame Extinguishing System Design and Analysis of Capabilities of Low Frequency in Different Fuels. Fire Technol. 2022, 58, 1579–1597. [Google Scholar] [CrossRef]

- Loboichenko, V.; Wilk-Jakubowski, J.L.; Levterov, A.; Wilk-Jakubowski, G.; Statyvka, Y.; Shevchenko, O. Using the burning of polymer compounds to determine the applicability of the acoustic method in fire extinguishing. Polymers 2024, 16, 3413. [Google Scholar] [CrossRef]

- Wang, Z.; Wang, Y.; Liao, M.; Sun, Y.; Wang, S.; Sun, X.; Shi, X.; Kang, Y.; Tian, M.; Bao, T.; et al. FireSonic: Design and Implementation of an Ultrasound Sensing-Based Fire Type Identification System. Sensors 2024, 24, 4360. [Google Scholar] [CrossRef] [PubMed]

- Su, Z.; Qi, D.; Yu, R.; Yang, K.; Chen, M.; Zhao, X.; Zhang, G.; Ying, Y.; Liu, D. Response Behavior of Inverse Diffusion Flame to Low Frequency Acoustic Field. Combust. Sci. Technol. 2024, 1–29. [Google Scholar] [CrossRef]

- Xiong, C.-Y.; Liu, Y.-H.; Xu, C.-S.; Huang, X.-Y. Response of Buoyant Diffusion Flame to the Low-frequency Sound. Kung Cheng Je Wu Li Hsueh Pao J. Eng. Thermophys. 2022, 43, 553–558. [Google Scholar]

- Xiong, C.; Liu, Y.; Fan, H.; Huang, X.; Nakamura, Y. Fluctuation and Extinction of Laminar Diffusion Flame Induced by External Acoustic Wave and Source. Sci. Rep. 2021, 11, 14402. [Google Scholar] [CrossRef]

- Zhang, Y.-J.; Jamil, H.; Wei, Y.-J.; Yang, Y.-J. Displacement and Extinction of Jet Diffusion Flame Exposed to Speaker-Generated Traveling Sound Waves. Appl. Sci. 2022, 12, 12978. [Google Scholar] [CrossRef]

- Rai, S.K.; Mahajan, K.A.; Roundal, V.B.; Gorane, P.S.; Patil, S.A.; Gadhave, S.L.; Javanjal, V.K.; Ingle, P. IOT Based Portable Fire Extinguisher Using Acoustic Setup. Panam. Math. J. 2024, 33, 15–29. [Google Scholar] [CrossRef]

- Xiong, C.; Liu, Y.; Xu, C.; Huang, X. Extinguishing the Dripping Flame by Acoustic Wave. Fire Saf. J. 2021, 120, 103109. [Google Scholar] [CrossRef]

- Jain, S.; Ranjan, A.; Fatima, M.; Siddharth. Performance Evaluation of Sonic Fire Fighting System. In Proceedings of the 7th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 19–20 March 2021. [Google Scholar] [CrossRef]

- Beisner, E.; Wiggins, N.D.; Yue, K.-B.; Rosales, M.; Penny, J.; Lockridge, J.; Page, R.; Smith, A.; Guerrero, L. Acoustic Flame Suppression Mechanics in a Microgravity Environment. Microgravity Sci. Technol. 2015, 27, 141–144. [Google Scholar] [CrossRef]

- Xiong, C.; Liu, Y.; Xu, C.; Huang, X. Acoustical Extinction of Flame on Moving Firebrand for the Fire Protection in Wildland–Urban Interface. Fire Technol. 2020, 57, 1365–1380. [Google Scholar] [CrossRef]

- De Luna, R.G.; Baylon, Z.A.P.; Garcia, C.A.D.; Huevos, J.R.G.; Ilagan, J.L.S.; Rocha, M.J.T. A Comparative Analysis of Machine Learning Approaches for Sound Wave Flame Extinction System Towards Environmental Friendly Fire Suppression. In Proceedings of the IEEE Region 10 Conference (TENCON 2023), Chiang Mai, Thailand, 31 October–3 November 2023. [Google Scholar] [CrossRef]

- Taspinar, Y.S.; Koklu, M.; Altin, M. Classification of Flame Extinction Based on Acoustic Oscillations Using Artificial Intelligence Methods. Case Stud. Therm. Eng. 2021, 28, 101561. [Google Scholar] [CrossRef]

- Ivanov, S.; Stankov, S. Acoustic Extinguishing of Flames Detected by Deep Neural Networks in Embedded Systems. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 46, 307–312. [Google Scholar] [CrossRef]

- Ivanov, S.; Stankov, S. The Artificial Intelligence Platform with the Use of DNN to Detect Flames: A Case of Acoustic Extinguisher. In Proceedings of the International Conference on Intelligent Computing & Optimization 2021, Hua Hin, Thailand, 30–31 December 2021; Volume 371, pp. 24–34. [Google Scholar] [CrossRef]

- Wilk-Jakubowski, J.; Stawczyk, P.; Ivanov, S.; Stankov, S. High-power acoustic fire extinguisher with artificial intelligence platform. Int. J. Comput. Vis. Robot. 2022, 12, 236–249. [Google Scholar] [CrossRef]

- Wilk-Jakubowski, J.; Stawczyk, P.; Ivanov, S.; Stankov, S. The using of Deep Neural Networks and natural mechanisms of acoustic waves propagation for extinguishing flames. Int. J. Comput. Vis. Robot. 2022, 12, 101–119. [Google Scholar] [CrossRef]

- Wilk-Jakubowski, J. Analysis of Flame Suppression Capabilities Using Low-Frequency Acoustic Waves and Frequency Sweeping Techniques. Symmetry 2021, 13, 1299. [Google Scholar] [CrossRef]

- Niegodajew, P.; Gruszka, K.; Gnatowska, R.; Šofer, M. Application of acoustic oscillations in flame extinction in a presence of obstacle. In Proceedings of the XXIII Fluid Mechanics Conference (KKMP 2018), Zawiercie, Poland, 9–12 September 2018. [Google Scholar] [CrossRef]

- Niegodajew, P.; Łukasiak, K.; Radomiak, H.; Musiał, D.; Zajemska, M.; Poskart, A.; Gruszka, K. Application of acoustic oscillations in quenching of gas burner flame. Combust. Flame 2018, 194, 245–249. [Google Scholar] [CrossRef]

- Stawczyk, P.; Wilk-Jakubowski, J. Non-invasive attempts to extinguish flames with the use of high-power acoustic extinguisher. Open Eng. 2021, 11, 349–355. [Google Scholar] [CrossRef]

- McKinney, D.J.; Dunn-Rankin, D. Acoustically driven extinction in a droplet stream flame. Combust. Sci. Technol. 2007, 161, 27–48. [Google Scholar] [CrossRef]

- Węsierski, T.; Wilczkowski, S.; Radomiak, H. Wygaszanie procesu spalania przy pomocy fal akustycznych. Bezpieczeństwo Tech. Pożarnicza 2013, 30, 59–64. Available online: https://sft.cnbop.pl/pl/bi-tp-vol-2-issue-30-2013-wygaszanie-procesu-spalania-przy-pomocy-fal-akustycznych (accessed on 20 November 2024).

- Sai, R.T.; Sharma, G. Sonic Fire Extinguisher. Pramana Res. J. 2017, 8, 337–346. Available online: https://www.pramanaresearch.org/gallery/prj-p334_1.pdf (accessed on 20 November 2024).

- Wilk-Jakubowski, J.; Stawczyk, P.; Ivanov, S.; Stankov, S. Control of acoustic extinguisher with Deep Neural Networks for fire detection. Elektron. Electrotech. 2022, 28, 52–59. [Google Scholar] [CrossRef]

- Loboichenko, V.; Wilk-Jakubowski, J.; Wilk-Jakubowski, G.; Harabin, R.; Shevchenko, R.; Strelets, V.; Levterov, A.; Soshinskiy, A.; Tregub, N.; Antoshkin, O. The Use of Acoustic Effects for the Prevention and Elimination of Fires as an Element of Modern Environmental Technologies. Environ. Clim. Technol. 2022, 26, 319–330. [Google Scholar] [CrossRef]

- Yılmaz-Atay, H.; Wilk-Jakubowski, J.L. A Review of Environmentally Friendly Approaches in Fire Extinguishing: From Chemical Sciences to Innovations in Electrical Engineering. Polymers 2022, 14, 1224. [Google Scholar] [CrossRef] [PubMed]

- Vovchuk, T.S.; Wilk-Jakubowski, J.L.; Telelim, V.M.; Loboichenko, V.M.; Shevchenko, R.I.; Shevchenko, O.S.; Tregub, N.S. Investigation of the use of the acoustic effect in extinguishing fires of oil and petroleum products. SOCAR Proc. 2021, 2, 24–31. [Google Scholar] [CrossRef]

- Pronobis, M. Modernizacja Kotłów Energetycznych; Wydawnictwo Naukowo-Techniczne: Warszawa, Poland, 2002. [Google Scholar]

- Jędrusyna, A.; Noga, A. Wykorzystanie generatora fal infradźwiękowych dużej mocy do oczyszczania z osadów powierzchni grzewczych kotłów energetycznych. Piece Przem. Kotły 2012, 11, 30–37. [Google Scholar]

- Noga, A. Przegląd obecnego stanu wiedzy z zakresu techniki infradźwiękowej i możliwości wykorzystania fal akustycznych do oczyszczania urządzeń energetycznych. Zesz. Energetyczne 2014, 1, 225–234. [Google Scholar]

- Yu, P. Doronin. Physics of the Ocean; Girometeoizdat: Leningrad, Russia, 1978. (In Russian) [Google Scholar]

- Korovin, V.P.; Chvertkin, E.I. Marine Hydrometry; Girometeoizdat: Leningrad, Russia, 1988. (In Russian) [Google Scholar]

- Azarenko, O.; Goncharenko, Y.; Divizinyuk, M.; Loboichenko, V.; Farrakhov, O.; Polyakov, S. Mathematical model of acoustic temperature control in a local room, detection of ignitions and fire inside it by pulse acoustic probing. Grail Sci. 2024, 35, 122–135. (In Ukrainian) [Google Scholar] [CrossRef]

- Azarenko, O.; Honcharenko, Y.; Divizinyuk, M.; Mirnenko, V.; Strilets, V. The influence of technical and geographical parameters on the range of recording language information when solving applied problems. J. Sci. Pap. Soc. Dev. Secur. 2021, 11, 15–30. (In Ukrainian) [Google Scholar] [CrossRef]

- Divizinyuk, M.; Lutsyk, I.; Rak, V.; Kasatkina, N.; Franko, Y. Mathematical model of identification of radar targets for security of objects of critical infrastructure. In Proceedings of the 12th International Conference on Advanced Computer Information Technologies (ACIT), Deggendorf, Germany, 15–17 September 2021. [Google Scholar] [CrossRef]

- Azarenko, O.; Honcharenko, Y.; Divizinyuk, M.; Myroshnyk, O.; Polyakov, S.; Farrakhov, O. Patterns of sound propagation in the atmosphere as a means of monitoring the condition of local premises of a critical infrastructure facility. Inter. Conf. 2023, 40, 585–599. (In Ukrainian) [Google Scholar] [CrossRef]

- Rossi, L.; Akhloufi, M.; Tison, Y. On the use of stereovision to develop a novel instrumentation system to extract geometric fire fronts characteristics. Fire Saf. J. 2011, 46, 9–20. [Google Scholar] [CrossRef]

- Toulouse, T.; Rossi, L.; Campana, A.; Çelik, T.; Akhloufi, M. Computer vision for wildfire research: An evolving image dataset for processing and analysis. Fire Saf. J. 2017, 92, 188–194. [Google Scholar] [CrossRef]

- Toulouse, T.; Rossi, L.; Akhloufi, M.; Çelik, T.; Maldague, X. Benchmarking of wildland fire colour segmentation algorithms. IET Image Process. 2015, 9, 1064–1072. [Google Scholar] [CrossRef]

- Liu, Z.-G.; Yang, Y.; Ji, X.-H. Flame detection algorithm based on a saliency detection technique and the uniform local binary pattern in the YCbCr color space. Signal Image Video Process. 2016, 10, 277–284. [Google Scholar] [CrossRef]

- Wilk, J.Ł. Techniki Cyfrowego Rozpoznawania Krawędzi Obrazów; Wydawnictwo Stowarzyszenia Współpracy Polska-Wschód, Oddział Świętokrzyski: Kielce, Poland, 2009. (In Polish) [Google Scholar]

- Thokale, A.; Sonar, P. Hybrid approach to detect a fire based on motion color and edge. Digit. Image Process. 2015, 7, 273–277. Available online: http://www.ciitresearch.org/dl/index.php/dip/article/view/DIP102015003 (accessed on 1 December 2024).

- Kong, S.G.; Jin, D.; Li, S.; Kim, H. Fast fire flame detection in surveillance video using logistic regression and temporal smoothing. Fire Saf. J. 2016, 79, 37–43. [Google Scholar] [CrossRef]

- Chen, X.; Zhang, X.; Zhang, Q. Fire Alarm Using Multi-rules Detection and Texture Features Classification in Video Surveillance. In Proceedings of the 7th International Conference on Intelligent Computation Technology and Automation, Changsha, China, 25–26 October 2014. [Google Scholar] [CrossRef]

- Çelik, T.; Demirel, H. Fire detection in video sequences using a generic color model. Fire Saf. J. 2009, 44, 147–158. [Google Scholar] [CrossRef]

- Töreyin, B.U. Fire Detection Algorithms Using Multimodal Signal and Image Analysis. Ph.D. Thesis, Bilkent University, Ankara, Turkey, 2009. [Google Scholar]

- Töreyin, B.U.; Dedeoğlu, Y.; Güdükbay, U.; Çetin, A.E. Computer vision based method for real-time fire and flame detection. Pattern Recogn. Lett. 2006, 27, 49–58. [Google Scholar] [CrossRef]

- Matthews, B.W. Comparison of the predicted and observed secondary structure of T4 phage lysozyme. Biochim. Biophys. Acta BBA Protein Struct. 1975, 405, 442–451. [Google Scholar] [CrossRef]

- Blair, D.C. Information Retrieval, 2nd ed.; Van Rijsbergen, C.J., Ed.; Butterworths: London, UK, 1979. [Google Scholar] [CrossRef]

- Toulouse, T.; Rossi, L.; Çelik, T.; Akhloufi, M. Automatic fire pixel detection using image processing: A comparative analysis of rule-based and machine learning-based methods. Signal Image Video Process. 2016, 10, 647–654. [Google Scholar] [CrossRef]

- Hafiane, A.; Chabrier, S.; Rosenberger, C.; Laurent, H. A New Supervised Evaluation Criterion for Region Based Segmentation Methods. In Proceedings of the 9th International Conference on Advanced Concepts for Intelligent Vision Systems (ACIVS 2007), Delft, The Netherlands, 28–31 August 2007. [Google Scholar] [CrossRef]

- Celen, V.B.; Demirci, M.F. Fire Detection in Different Color Models. In Proceedings of the WorldComp 2012 Proceedings, Las Vegas, NV, USA, 16–19 July 2012; pp. 1–7. Available online: http://worldcomp-proceedings.com/proc/p2012/IPC8008.pdf (accessed on 8 December 2024).

- Phillips III, W.; Shah, M.; da Vitoria Lobo, N. Flame recognition in video. Pattern Recogn. Lett. 2000, 23, 319–327. Available online: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.24.4615 (accessed on 8 December 2024). [CrossRef]

- Çelik, T.; Demirel, H.; Ozkaramanli, H.; Uyguroglu, M. Fire detection using statistical color model in video sequences. J. Vis. Com. Image Represent. 2007, 18, 176–185. [Google Scholar] [CrossRef]

- Ko, B.C.; Cheong, K.-H.; Nam, J.-Y. Fire detection based on vision sensor and support vector machines. Fire Saf. J. 2009, 44, 322–329. [Google Scholar] [CrossRef]

- Madani, K.; Kachurka, V.; Sabourin, C.; Amarger, V.; Golovko, V.; Rossi, L. A human-like visual-attention-based artificial vision system for wildland firefighting assistance. Appl. Intell. 2017, 48, 2157–2179. [Google Scholar] [CrossRef]

- Chen, T.; Wu, P.; Chiou, Y. An early fire-detection method based on image processing. In Proceedings of the IEEE International Conference on Image Processing (ICIP’04), Singapore, 24–27 October 2004. [Google Scholar] [CrossRef]

- Szegedy, C.; Toshev, A.; Erhan, D. Deep Neural Networks for Object Detection. Adv. Neural Inf. Process. Syst. 2013, 26, 1–9. [Google Scholar]

- Janků, P.; Komínková Oplatková, Z.; Dulík, T. Fire detection in video stream by using simple artificial neural network. Mendel 2018, 24, 55–60. [Google Scholar] [CrossRef]

- Foley, D.; O’Reilly, R. An Evaluation of Convolutional Neural Network Models for Object Detection in Images on Low-End Devices. In Proceedings of the 26th Irish Conference on Artificial Intelligence and Cognitive Science, Dublin, Ireland, 6–7 December 2018; Available online: http://ceur-ws.org/Vol-2259/aics_32.pdf (accessed on 12 December 2024).

- Simple Understanding of Mask RCNN. Available online: https://medium.com/@alittlepain833/simple-understanding-of-mask-rcnn-134b5b330e95 (accessed on 12 December 2024).

- Kurup, R. Vision-Based Fire Flame Detection System Using Optical flow Features and Artificial Neural Network. Int. J. Sci. Res. 2014, 3, 2161–2168. [Google Scholar]

- Collumeau, J.-F.; Laurent, H.; Hafiane, A.; Chetehouna, K. Fire scene segmentations for forest fire characterization: A comparative study. In Proceedings of the 18th International Conference on Image Processing (ICIP), Brussels, Belgium, 11–14 September 2011. [Google Scholar] [CrossRef]

- Rudz, S.; Chetehouna, K.; Hafiane, A.; Laurent, H.; Séro-Guillaume, O. Investigation of a novel image segmentation method dedicated to forest fire applications. Measurem. Sci. Technol. 2013, 24, 075403. [Google Scholar] [CrossRef]

- Yamagishi, H.; Yamaguchi, J. A contour fluctuation data processing method for fire flame detection using a color camera. In Proceedings of the 26th Annual Conference of the IEEE Industrial Electronics Society (IECON 2000), Nagoya, Japan, 22–28 October 2000. [Google Scholar] [CrossRef]

- Liu, C.-B.; Ahuja, N. Vision based fire detection. In Proceedings of the 17th International Conference on Pattern Recognition (ICPR 2004), Cambridge, UK, 26August 2004. [Google Scholar] [CrossRef]

- Marbach, G.; Loepfe, M.; Brupbacher, T. An image processing technique for fire detection in video images. Fire Saf. J. 2006, 41, 285–289. [Google Scholar] [CrossRef]

- Çelik, T. Fast and Efficient Method for Fire Detection Using Image Processing. ETRI J. 2010, 32, 881–890. [Google Scholar] [CrossRef]

- Horng, W.-B.; Peng, J.-W.; Chen, C.-Y. A New Image-Based Real-Time Flame Detection Method Using Color Analysis. In Proceedings of the IEEE International Conference on Networking, Sensing and Control, Tucson, AZ, USA, 19–22 March 2005. [Google Scholar] [CrossRef]

- Rossi, L.; Akhloufi, M. Dynamic Fire 3D Modeling Using a Real-Time Stereovision System. In Technological Developments in Education and Automation; Iskander, M., Kapila, V., Karim, M., Eds.; Springer: Dordrecht, The Netherlands, 2010; pp. 33–38. [Google Scholar] [CrossRef]

- Li, Z.; Mihaylova, L.S.; Isupova, O.; Rossi, L. Autonomous Flame Detection in Videos with a Dirichlet Process Gaussian Mixture Color Model. IEEE Trans. Ind. Inform. 2017, 14, 1146–1154. [Google Scholar] [CrossRef]

- Rothermel, R.C.; Anderson, H.E. Fire Spread Characteristics Determined in the Laboratory; US Department of Agriculture: Ogden, UT, USA, 1966. Available online: https://www.fs.usda.gov/rm/pubs_int/int_rp030.pdf (accessed on 15 December 2024).

- Grishin, A.M. Mathematical Modeling of Forest Fires and New Methods of Fighting Them; Publishing House of the Tomsk State University: Tomsk, Russia, 1997. [Google Scholar]

- Rossi, J.-L.; Chetehouna, K.; Collin, A.; Moretti, B.; Balbi, J.-H. Simplified Flame Models and Prediction of the Thermal Radiation Emitted by a Flame Front in an Outdoor Fire. Combust. Sci. Technol. 2010, 182, 1457–1477. [Google Scholar] [CrossRef]

- Foggia, P.; Saggese, A.; Vento, M. Real-time fire detection for video-surveillance applications using a combination of experts based on color, shape, and motion. IEEE Trans. Circ. Sys. Video Technol. 2015, 25, 1545–1556. [Google Scholar] [CrossRef]

- Habiboğlu, Y.H.; Günay, O.; Çetin, A.E. Covariance matrix-based fire and flame detection method in video. Mach. Vis. Appl. 2012, 23, 1103–1113. [Google Scholar] [CrossRef]

- Mueller, M.; Karasev, P.; Kolesov, I.; Tannenbaum, A. Optical Flow Estimation for Flame Detection in Videos. IEEE Trans. Image Process. 2013, 22, 2786–2797. [Google Scholar] [CrossRef] [PubMed]

- Chi, R.; Lu, Z.-M.; Ji, Q.-G. Real-time multi-feature based fire flame detection in video. IET Image Process. 2017, 11, 31–37. [Google Scholar] [CrossRef]

- Görür, D.; Rasmussen, C.E. Dirichlet Process Gaussian Mixture Models: Choice of the Base Distribution. J. Comput. Sci. Technol. 2010, 25, 653–664. [Google Scholar] [CrossRef]

- Teh, Y.W.; Jordan, M.I.; Beal, M.J.; Blei, D.M. Hierarchical Dirichlet processes. J. Am. Stat. Assoc. 2006, 101, 1566–1581. Available online: https://www.jstor.org/stable/27639773 (accessed on 18 December 2024). [CrossRef]

- Borges, P.V.K.; Izquierdo, E. A Probabilistic Approach for Vision-Based Fire Detection in Videos. IEEE Trans. Circ. Sys. Video Techn. 2010, 20, 721–731. [Google Scholar] [CrossRef]

- Wang, D.-C.; Cui, X.; Park, E.; Jin, C.; Kim, H. Adaptive flame detection using randomness testing and robust features. Fire Saf. J. 2013, 55, 116–125. [Google Scholar] [CrossRef]

- Wald, A.; Wolfowitz, J. An Exact Test for Randomness in the Non-Parametric Case Based on Serial Correlation. Ann. Math. Stat. 1943, 14, 378–388. [Google Scholar] [CrossRef]

- Martínez-de Dios, J.R.; Merino, L.; Caballero, F.; Ollero, A. Automatic forest-fire measuring using ground stations and Unmanned Aerial Systems. Sensors 2011, 11, 6328–6353. [Google Scholar] [CrossRef]

- Plucinski, M.P. A Review of Wildfire Occurrence Research; Bushfire Cooperative Research Centre: Melbourne, Australia, 2012. [Google Scholar]

- Pérez, Y.; Pastor, E.; Planas, E.; Plucinski, M.; Gould, J. Computing forest fires aerial suppression effectiveness by IR monitoring. Fire Saf. J. 2011, 46, 2–8. [Google Scholar] [CrossRef]

- Karimi, N. Response of a conical, laminar premixed flame to low amplitude acoustic forcing—A comparison between experiment and kinematic theories. Energy 2014, 78, 490–500. [Google Scholar] [CrossRef][Green Version]

- DARPA. DARPA Demos Acoustics Suppression of Flame. Available online: https://www.youtube.com/watch?v=DanOeC2EpeA&t=9s (accessed on 20 December 2024).

- Im, H.G.; Law, C.K.; Axelbaum, R.L. Opening of the Burke-Schumann Flame Tip and the Effects of Curvature on Diffusion Flame Extinction. Proc. Combust. Inst. 1991, 23, 551–558. [Google Scholar] [CrossRef]

- Radomiak, H.; Mazur, M.; Zajemska, M.; Musiał, D. Gaszenie płomienia dyfuzyjnego przy pomocy fal akustycznych. Bezpieczeństwo Technol. Pożarnicza 2015, 40, 29–38. [Google Scholar] [CrossRef]

- Marek, M. Bayesian Regression Model Estimation: A Road Safety Aspect. In Proceedings of the International Conference on Smart City Applications SCA 2022, Castelo Branco, Portugal, 19–21 October 2022; Volume 5, pp. 163–175. [Google Scholar] [CrossRef]

- Marek, M. Wykorzystanie ekonometrycznego modelu klasycznej funkcji regresji liniowej do przeprowadzenia analiz ilościowych w naukach ekonomicznych. In Rola Informatyki w Naukach Ekonomicznych i Społecznych. Innowacje i Implikacje Interdyscyplinarne; Wydawnictwo Wyższej Szkoły Handlowej im. B. Markowskiego w Kielcach: Kielce, Poland, 2013. [Google Scholar]

- Wilk-Jakubowski, G.; Harabin, R.; Skoczek, T.; Wilk-Jakubowski, J. Preparation of the Police in the Field of Counter-terrorism in Opinions of the Independent Counter-terrorist Sub-division of the Regional Police Headquarters in Cracow. Slovak. J. Political Sci. 2022, 22, 174–208. [Google Scholar] [CrossRef]

- Marek, M. Aspects of Road Safety: A Case of Education by Research—Analysis of Parameters Affecting Accident. In Proceedings of the Education and Research in the Information Society Conference (ERIS), Plovdiv, Bulgaria, 27–28 September 2021; Available online: https://ceur-ws.org/Vol-3061/ERIS_2021-art07(reg).pdf (accessed on 20 December 2024).

- Chitade, A.Z.; Katiyar, S.K. Colour based image segmentation using k-means clustering. Int. J. Eng. Sci. Technol. 2010, 2, 5319–5325. Available online: https://www.oalib.com/research/2110040 (accessed on 22 December 2024).

- Chen, J.; He, Y.; Wang, J. Multi-feature fusion based fast video flame detection. Build. Environ. 2010, 45, 1113–1122. [Google Scholar] [CrossRef]

- Pan, H.; Badawi, D.; Zhang, X.; Çetin, A.E. Additive neural network for forest fire detection. Signal Image Video Process 2020, 14, 675–682. [Google Scholar] [CrossRef]

- Wilk-Jakubowski, G.; Harabin, R.; Ivanov, S. Robotics in crisis management: A review. Technol. Soc. 2022, 68, 101935. [Google Scholar] [CrossRef]

- Wilk-Jakubowski, G. Normative Dimension of Crisis Management System in the Third Republic of Poland in an International Context. Organizational and Economic Aspects; Wydawnictwo Społecznej Akademii Nauk: Łódź-Warszawa, Poland, 2019. [Google Scholar]

- San-Miguel-Ayanz, J.; Ravail, N. Active Fire Detection for Fire Emergency Management: Potential and Limitations for the Operational Use of Remote Sensing. Nat. Hazards 2005, 35, 361–376. [Google Scholar] [CrossRef]

- Scott, R.; Nowell, B. Networks and Crisis Management. Oxford Research Encyclopedia of Politics. 2020. Available online: https://oxfordre.com/politics/view/10.1093/acrefore/9780190228637.001.0001/acrefore-9780190228637-e-1650 (accessed on 22 December 2024).

- Wilk-Jakubowski, J. Broadband satellite data networks in the context of available protocols and digital platforms. Inform. Autom. Pomiary Gospod. Ochr. Sr. 2021, 11, 56–60. [Google Scholar] [CrossRef]

- Wilk-Jakubowski, J. Overview of broadband information systems architecture for crisis management. Inform. Autom. Pomiary Gospod. Ochr. Sr. 2020, 10, 20–23. [Google Scholar] [CrossRef]

- Suematsu, N.; Oguma, H.; Eguchi, S.; Kameda, S.; Sasanuma, M.; Kuroda, K. Multi-mode SDR VSAT against big disasters. In Proceedings of the European Microwave Conference’13, Nuremberg, Germany, 6–10 October 2013; Available online: https://ieeexplore.ieee.org/document/6686788 (accessed on 28 December 2024).

- Azarenko, O.; Honcharenko, Y.; Divizinyuk, M.; Mirnenko, V.; Strilets, V.; Wilk-Jakubowski, J.L. Influence of anthropogenic factors on the solution of applied problems of recording language information in the open area. Soc. Dev. Secur. 2022, 12, 135–143. [Google Scholar] [CrossRef]

- Šerić, L.; Stipanicev, D.; Krstinić, D. ML/AI in Intelligent Forest Fire Observer Network. In Proceedings of the 3rd EAI International Conference on Management of Manufacturing Systems, Dubrovnik, Croatia, 6–8 November 2018. [Google Scholar] [CrossRef]

- Wilk-Jakubowski, J. Information systems engineering using VSAT networks. Yugosl. J. Oper. Res. 2021, 31, 409–428. [Google Scholar] [CrossRef]

- Azarenko, O.; Honcharenko, Y.; Divizinyuk, M.; Mirnenko, V.; Strilets, V.; Wilk-Jakubowski, J.L. The influence of air environment properties on the solution of applied problems of capturing speech information in the open terrain. Soc. Dev. Secur. 2022, 12, 64–77. [Google Scholar] [CrossRef]

- Zeng, L.; Zhang, C.; Qin, P.; Zhou, Y.; Cai, Y. One Method for Predicting Satellite Communication Terminal Service Demands Based on Artificial Intelligence Algorithms. Appl. Sci. 2024, 14, 6019. [Google Scholar] [CrossRef]

- Wilk-Jakubowski, J. Measuring Rain Rates Exceeding the Polish Average by 0.01%. Pol. J. Environ. Stud. 2018, 27, 383–390. [Google Scholar] [CrossRef] [PubMed]

- Negi, P.; Pathani, A.; Bhatt, B.C.; Swami, S.; Singh, R.; Gehlot, A.; Thakur, A.K.; Gupta, L.R.; Priyadarshi, N.; Twala, B.; et al. Integration of Industry 4.0 Technologies in Fire and Safety Management. Fire 2024, 7, 335. [Google Scholar] [CrossRef]

- Chen, Y.; Morton, D.C.; Randerson, J.T. Remote sensing for wildfire monitoring: Insights into burned area, emissions, and fire dynamics. ONE Earth 2024, 7, 1022–1028. [Google Scholar] [CrossRef]

- NASA. Earth Science—Applied Sciences. Monitoring Fires with Fast-Acting Data. Available online: https://appliedsciences.nasa.gov/our-impact/story/monitoring-fires-fast-acting-data (accessed on 28 December 2024).

- Wilk-Jakubowski, J. Total Signal Degradation of Polish 26-50 GHz Satellite Systems Due to Rain. Pol. J. Environ. Stud. 2018, 27, 397–402. [Google Scholar] [CrossRef]

- Wilk-Jakubowski, J. Predicting Satellite System Signal Degradation due to Rain in the Frequency Range of 1 to 25 GHz. Pol. J. Environ. Stud. 2018, 27, 391–396. [Google Scholar] [CrossRef]

- Stankov, S.; Ivanov, S. Intelligent Sensor For Fire Detection With Deep Neural Networks. J. Inf. Inn. Technol. JIIT 2020, 1, 25–28. Available online: https://journal.iiit.bg/wp-content/uploads/2020/08/4_INTELLIGENT-SENSOR-FOR-FIRE-DETECTION.pdf (accessed on 29 December 2024).

- Dirik, M. Fire extinguishers based on acoustic oscillations in airflow using fuzzy classification. J. Fuzzy Ext. Appl. 2023, 4, 217–234. Available online: https://www.journal-fea.com/article_175269_e5257a225a450ed0d7840f6368c55f60.pdf (accessed on 29 December 2024).

- Yadav, R.; Shirazi, R.; Choudhary, A.; Yadav, S.; Raghuvanshi, R. Designing of Fire Extinguisher Based on Sound Waves. Int. J. Eng. Adv. Technol. 2020, 9, 927–930. Available online: https://www.ijeat.org/wp-content/uploads/papers/v9i4/D7301049420.pdf (accessed on 29 December 2024).

- Fegade, R.; Rai, K.; Dalvi, S. Extinguishing Fire Using Low Frequency Sound from Subwoofer. Gradiva Rev. J. 2022, 8, 708–713.

- Wilk-Jakubowski, J.L. Acoustic firefighting method on the basis of European research: A review. Akustika 2023, 46, 31–45.

- Koklu, M.; Taspinar, Y.S. Determining the extinguishing status of fuel flames with sound wave by machine learning methods. IEEE Access 2021, 9, 207–216.

- Wilk-Jakubowski, J. Experimental Investigation of Amplitude-Modulated Waves for Flame Extinguishing: A Case of Acoustic Environmentally Friendly Technology. Environ. Clim. Technol. 2023, 27, 627–638.

- Loboichenko, V.; Wilk-Jakubowski, G.; Wilk-Jakubowski, J.L.; Ciosmak, J. Application of Low-Frequency Acoustic Waves to Extinguish Flames on the Basis of Selected Experimental Attempts. Appl. Sci. 2024, 14, 8872.

- Wilk-Jakubowski, J.; Wilk-Jakubowski, G.; Loboichenko, V. Experimental Attempts of Using Modulated and Unmodulated Waves in Low-Frequency Acoustic Wave Flame Extinguishing Technology: A Review of Selected Cases. Stroj. Vestn. J. Mech. Eng. 2024, 70, 270–281.

- Wilk-Jakubowski, J.L. Experimental Study of the Influence of Even Harmonics on Flame Extinguishing by Low-Frequency Acoustic Waves with the Use of High-Power Extinguisher. Appl. Sci. 2024, 14, 11809.

- Jin, J.; Kim, S.; Moon, J. Development of a Firefighting Drone for Constructing Fire-breaks to Suppress Nascent Low-Intensity Fires. Appl. Sci. 2024, 14, 1652.

- Future Content. Fire Retardant Material—A History. Available online: https://specialistworkclothing.wordpress.com/2014/03/05/fire-retardant-material-a-history (accessed on 30 December 2024).

- LeVan, S.L. Chemistry of Fire Retardancy; U.S. Department of Agriculture, Forest Service, Forest Products Laboratory: Madison, WI, USA, 1984; pp. 531–574.

- Salasinska, K.; Mizera, K.; Celiński, M.; Kozikowski, P.; Mirowski, J.; Gajek, A. Thermal properties and fire behavior of a flexible poly(vinyl chloride) modified with complex of 3-aminotriazole with zinc phosphate. Fire Saf. J. 2021, 122, 103326.

- Rabajczyk, A.; Zielecka, M.; Popielarczyk, T.; Sowa, T. Nanotechnology in Fire Protection—Application and Requirements. Materials 2021, 14, 7849.

- Bras, M.L.; Wilkie, C.A.; Bourbigot, S. Fire Retardancy of Polymers: New Applications of Mineral Fillers; The Royal Society of Chemistry: Sawston, UK, 2005; pp. 4–6.

- Rabajczyk, A.; Zielecka, M.; Gniazdowska, J. Application of Nanotechnology in Extinguishing Agents. Materials 2022, 15, 8876.

- Wicklein, B.; Kocjan, D.; Carosio, F.; Camino, G.; Bergström, L. Tuning the nanocellulose–borate interaction to achieve highly flame retardant hybrid materials. Chem. Mater. 2016, 28, 1985–1989.

| Properties | List of Publications |
|---|---|
| Color | [66,78,86] |
| Texture | [97,99] |
| Shape | [87,96,103] |
| Flickering properties | [68,92] |
| Dynamics | [68,86,97,98] |
| Combined features | [61,62,63,64,99] |
| Processing techniques | |

| Type of Model | Description |
|---|---|
| Empirical inequality models | These models rely on empirical inequalities with experimentally determined thresholds. They detect real flame pixels well but are poor at filtering out noise. |
| Statistical models | These models are trained on real data. Their effectiveness is higher when an appropriate model trained on sufficient data is used (the number of mixture components is not known in advance). |
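
The two model types in the table above can be contrasted with a minimal Python sketch. It is an illustration under stated assumptions, not the method of any cited publication: the threshold `RED_THRESHOLD`, the `max_sigma` cut-off, the function names, and the sample pixels are all hypothetical. A single Gaussian per channel stands in for the mixture models used in practice.

```python
from statistics import mean, pstdev

# Hypothetical threshold, chosen only for illustration.
RED_THRESHOLD = 150


def is_flame_pixel_empirical(r, g, b, red_threshold=RED_THRESHOLD):
    """Empirical-inequality rule: strong red channel and R >= G > B."""
    return r > red_threshold and r >= g > b


def fit_channel_gaussians(samples):
    """Fit one independent Gaussian (mean, std) per RGB channel.

    `samples` is a list of (r, g, b) tuples labelled as flame pixels.
    A floor of 1.0 on the standard deviation avoids a zero-width model.
    """
    channels = list(zip(*samples))
    return [(mean(c), max(pstdev(c), 1.0)) for c in channels]


def is_flame_pixel_statistical(pixel, model, max_sigma=2.5):
    """Accept a pixel if every channel lies within max_sigma deviations."""
    return all(
        abs(value - mu) <= max_sigma * sigma
        for value, (mu, sigma) in zip(pixel, model)
    )


# Hypothetical training pixels (not real measurements).
flame_samples = [(230, 140, 40), (240, 160, 50), (220, 120, 30), (250, 170, 60)]
model = fit_channel_gaussians(flame_samples)
```

The empirical rule needs no training data but depends entirely on the chosen threshold, whereas the statistical check is only as good as the labelled pixels used to fit it.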

| Color Space Used for Detection | Authors | List of Publications |
|---|---|---|
| RGB | W. Phillips III, M. Shah, and N. da Vitoria Lobo; B. U. Töreyin, Y. Dedeoğlu, U. Güdükbay, and A. E. Çetin; T. Çelik, H. Demirel, H. Ozkaramanli, and M. Uyguroglu; B. C. Ko, K-H. Cheong, and J-Y. Nam; J-F. Collumeau, H. Laurent, A. Hafiane, and K. Chetehouna | [68,74,75,76,84] |
| YCbCr | T. Toulouse, L. Rossi, M. Akhloufi, T. Çelik, and X. Maldague; T. Çelik and H. Demirel | [60,66] |
| HSI | W-B. Horng, J-W. Peng, and C-Y. Chen | [90] |
| HSV | C-B. Liu and N. Ahuja | [87] |
| YUV | G. Marbach, M. Loepfe, and T. Brupbacher | [88] |
| L*a*b* | T. Çelik; A. Z. Chitade and S. K. Katiyar | [89,116] |
| Other, combinations of color spaces, rules | T. Chen, P. Wu, and Y. Chiou; J. Chen, Y. He, and J. Wang; L. Rossi, M. Akhloufi, and Y. Tison; S. Rudz, K. Chetehouna, A. Hafiane, H. Laurent, and O. Séro-Guillaume | [58,78,85,91,117] |
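
As a rough illustration of how a pixel moves between some of the color spaces listed in the table above, the following Python sketch converts 8-bit RGB values to YCbCr and HSV. The YCbCr coefficients are the full-range ITU-R BT.601 (JPEG/JFIF) ones, which is an assumption, since the cited works may use a different variant. The chrominance check `looks_like_flame_ycbcr` (Y > Cb and Cr > Cb) is a hypothetical example of the kind of rule applied in this color space, not the exact criterion of any listed publication.

```python
import colorsys


def rgb_to_ycbcr(r, g, b):
    """Convert 8-bit RGB to full-range YCbCr (BT.601 / JFIF coefficients)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def rgb_to_hsv_degrees(r, g, b):
    """Convert 8-bit RGB to HSV with hue in degrees, S and V in [0, 1]."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return h * 360, s, v


def looks_like_flame_ycbcr(r, g, b):
    """Illustrative chrominance rule: luminance and red-difference chroma
    both exceed blue-difference chroma (an assumed rule, for illustration)."""
    y, cb, cr = rgb_to_ycbcr(r, g, b)
    return y > cb and cr > cb
```

Separating luminance from chrominance, as YCbCr does, lets a rule compare chroma components directly instead of relying on absolute RGB intensities that change with illumination.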

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wilk-Jakubowski, J.L.; Loboichenko, V.; Divizinyuk, M.; Wilk-Jakubowski, G.; Shevchenko, R.; Ivanov, S.; Strelets, V. Acoustic Waves and Their Application in Modern Fire Detection Using Artificial Vision Systems: A Review. Sensors 2025, 25, 935. https://doi.org/10.3390/s25030935
