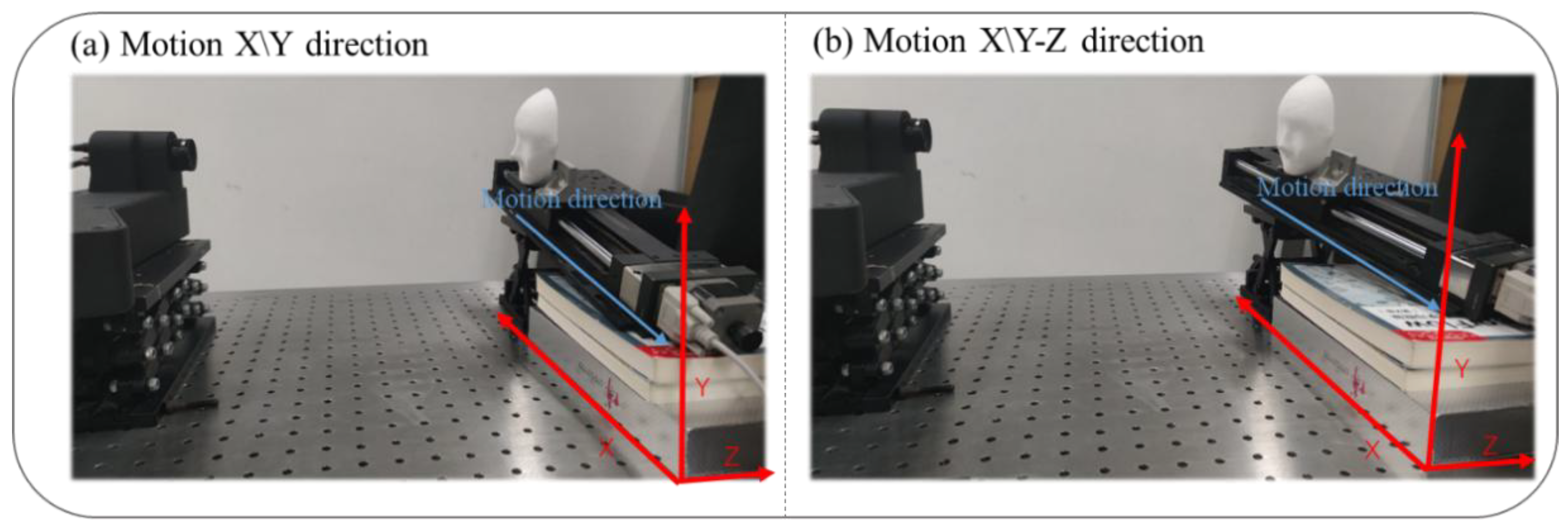

To validate the proposed method, we built a phase-shifting profilometry (PSP) system consisting of a GVD PDC03 projector (800 × 1200 pixels) and an IDS UI-124XSE-M camera (1600 × 1200 pixels), synchronized at a frame rate of 120 Hz. The high frame rate limits motion blur when capturing dynamic scenes, providing a suitable platform for testing the algorithm under various motion scenarios. Phase unwrapping employed a three-frequency phase-shifting algorithm, which is widely used to resolve the ambiguity of the wrapped phase: fringe patterns of three different frequencies are projected sequentially onto the object surface, and the reflected patterns captured by the camera are analyzed to extract the phase. To evaluate robustness under realistic conditions, the system measured objects undergoing controlled motion at different velocities and in different directions. This setup allowed us to assess the algorithm under both static and dynamic conditions, where motion-induced errors are prevalent.
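The three-frequency procedure described above can be sketched as follows. This is a minimal illustration, not the implementation used in the experiments: the number of phase steps (4), the fringe frequencies (1, 8, 64 periods across the field), and all function names are assumptions chosen for the example, since the text does not specify them. The sketch computes a wrapped phase per frequency with the standard N-step formula and then unwraps hierarchically from the lowest to the highest frequency.

```python
import numpy as np

def wrapped_phase(frames):
    """N-step phase shifting, assuming frames[n] = A + B*cos(phi + 2*pi*n/N)."""
    frames = np.asarray(frames, dtype=float)
    n_steps = frames.shape[0]
    delta = 2 * np.pi * np.arange(n_steps) / n_steps
    shape = (n_steps,) + (1,) * (frames.ndim - 1)  # broadcast over pixels
    s = np.sum(frames * np.sin(delta).reshape(shape), axis=0)
    c = np.sum(frames * np.cos(delta).reshape(shape), axis=0)
    return np.arctan2(-s, c)  # wrapped to (-pi, pi]

def unwrap_with_reference(phi_wrapped, phi_ref, ratio):
    """Pick the fringe order so the result matches the scaled reference phase."""
    k = np.round((ratio * phi_ref - phi_wrapped) / (2 * np.pi))
    return phi_wrapped + 2 * np.pi * k

def three_frequency_phase(frames_by_freq, freqs):
    """Hierarchical unwrapping; assumes the lowest frequency spans one period."""
    f1, f2, f3 = freqs
    p1, p2, p3 = (wrapped_phase(f) for f in frames_by_freq)
    p1 = np.mod(p1, 2 * np.pi)  # single period: wrapped phase is already absolute
    a2 = unwrap_with_reference(p2, p1, f2 / f1)
    return unwrap_with_reference(p3, a2, f3 / f2)

# Synthetic 1D check with hypothetical frequencies and 4 phase steps each.
x = (np.arange(512) + 0.5) / 512
freqs = (1, 8, 64)
steps = 2 * np.pi * np.arange(4) / 4
frames_by_freq = [
    np.stack([0.5 + 0.4 * np.cos(2 * np.pi * f * x + d) for d in steps])
    for f in freqs
]
absolute = three_frequency_phase(frames_by_freq, freqs)
```

On noise-free synthetic data the recovered phase matches the ideal ramp `2*pi*64*x`. In a moving scene, each frame is captured at a slightly different object position, which is what produces the motion-induced errors examined in the following experiments.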

3.1. Dynamic Reconstruction Accuracy

In the experiment, a standard ball was moved along the X/Y and Z axes.

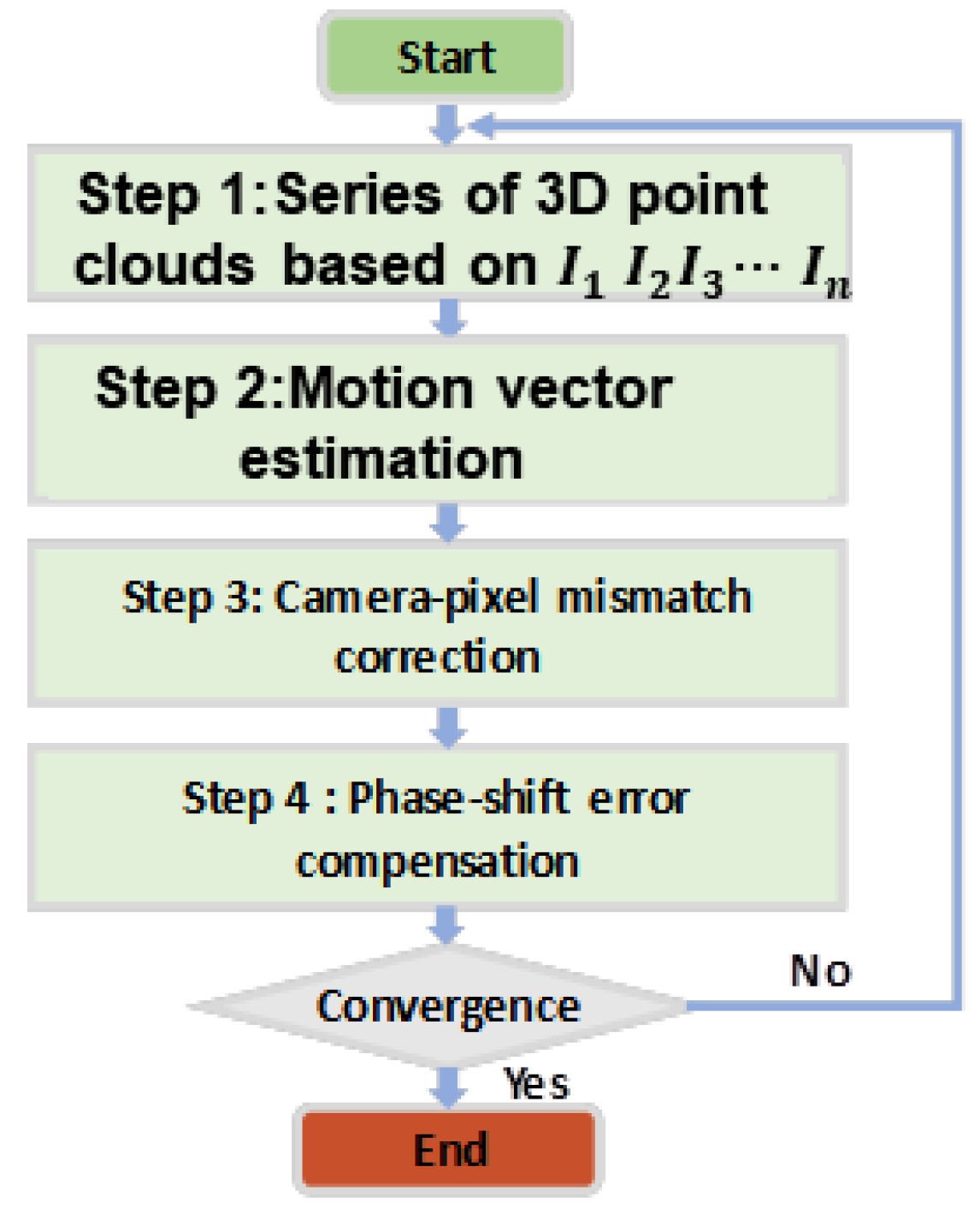

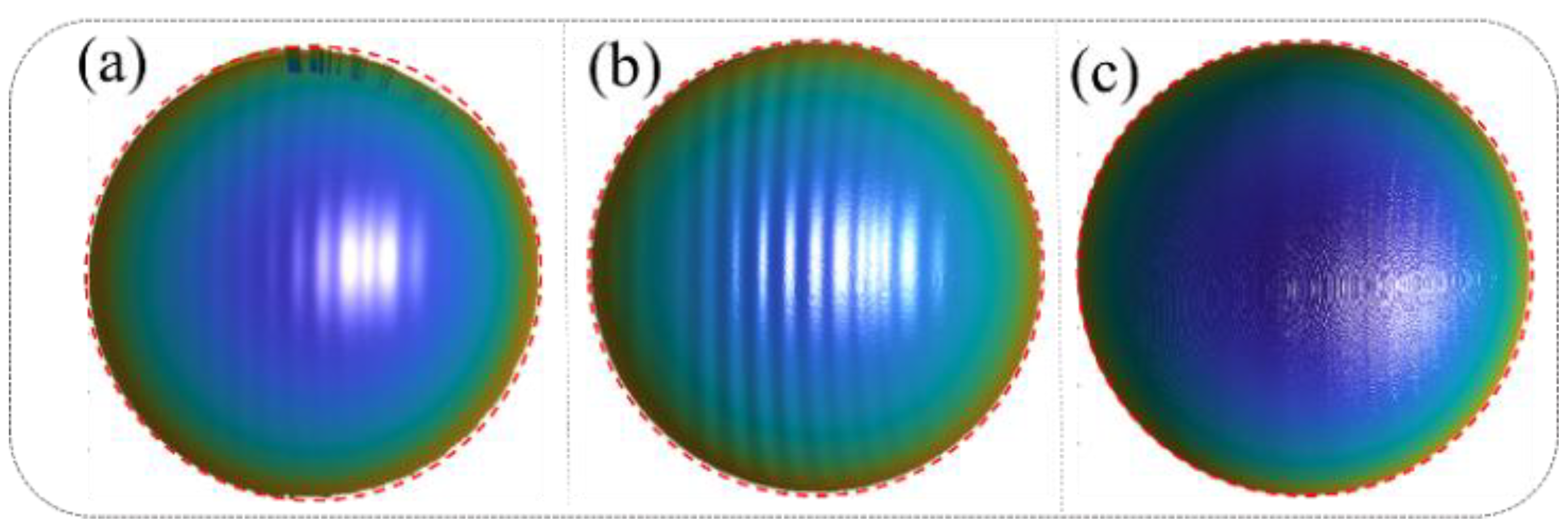

Figure 4 presents a comparative analysis of the 3D reconstruction results under different error compensation conditions, demonstrating the progressive improvements achieved through the proposed method.

Figure 4a illustrates the 3D reconstruction results obtained using the traditional phase-shifting algorithm without any error compensation. Significant edge distortions are evident in the reconstruction, particularly in areas with rapid transitions, indicating the detrimental effects of motion-induced errors on the accuracy of the measurement. Quantitatively, the mean error in this case is 0.142 mm, with a root mean square error (RMSE) of 0.453 mm, highlighting the limitations of the conventional algorithm in dynamic scenarios.
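The text does not state how the mean error and RMSE were computed. One common way to evaluate a reconstructed standard ball, shown here purely as an assumed sketch, is to fit a sphere to the point cloud by least squares and take the radial deviation of each point from the fitted (or certified) sphere. The function names and the synthetic data below are hypothetical.

```python
import numpy as np

def fit_sphere(points):
    """Algebraic least-squares sphere fit; points has shape (M, 3)."""
    p = np.asarray(points, dtype=float)
    # |p|^2 = 2 c.p + (R^2 - |c|^2) is linear in the unknowns (c, d).
    a = np.hstack([2 * p, np.ones((p.shape[0], 1))])
    b = np.sum(p ** 2, axis=1)
    sol, *_ = np.linalg.lstsq(a, b, rcond=None)
    center = sol[:3]
    radius = np.sqrt(sol[3] + center @ center)
    return center, radius

def sphere_errors(points, center, radius):
    """Mean absolute radial deviation and RMSE with respect to a sphere."""
    dev = np.linalg.norm(np.asarray(points, dtype=float) - center, axis=1) - radius
    return np.mean(np.abs(dev)), np.sqrt(np.mean(dev ** 2))

# Synthetic spherical cap (hypothetical 25 mm ball about 300 mm from the camera).
rng = np.random.default_rng(0)
theta = rng.uniform(0, np.pi / 2, 500)
phi = rng.uniform(0, 2 * np.pi, 500)
dirs = np.stack([np.sin(theta) * np.cos(phi),
                 np.sin(theta) * np.sin(phi),
                 -np.cos(theta)], axis=1)
c_true = np.array([1.0, 2.0, 300.0])
center, radius = fit_sphere(c_true + 25.0 * dirs)

# Points displaced radially by +/-0.1 mm, evaluated against the true sphere.
signs = np.where(np.arange(500) % 2 == 0, 1.0, -1.0)
noisy = c_true + (25.0 + 0.1 * signs)[:, None] * dirs
mae, rmse = sphere_errors(noisy, c_true, 25.0)
```

Whether the reported mean error is signed or absolute, and whether the reference radius is fitted or certified, changes the numbers; the sketch uses the absolute deviation against a known sphere.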

Figure 4b presents the reconstruction results after applying correction for camera-pixel mismatch alone. While this step reduces edge distortions to some extent, it fails to fully address the motion-induced errors, as periodic sinusoidal phase errors persist across the surface of the reconstructed object. These residual errors underscore the need for a more comprehensive approach. The mean error in this case increases to 0.673 mm, with an RMSE of 0.863 mm, indicating that correcting only camera-pixel mismatches is insufficient for achieving high-accuracy reconstructions.

Figure 4c showcases the results after simultaneously compensating for both camera-pixel mismatch and phase-shifting errors using the proposed method. The reconstruction demonstrates a marked improvement in accuracy, with edge distortions effectively eliminated and the sinusoidal phase errors significantly mitigated. This dual-correction approach achieves a mean error of just 0.0296 mm and an RMSE of 0.252 mm, representing a substantial enhancement compared to the previous cases. These results validate the effectiveness and robustness of the proposed algorithm in addressing motion-induced errors in dynamic 3D measurement scenarios. The comparison in Figure 4 highlights the progressive benefits of integrating camera-pixel mismatch correction with phase-shift error mitigation, emphasizing the importance of a comprehensive error compensation strategy for achieving precise and reliable 3D reconstructions in complex motion conditions.

In this study, we built a more general-purpose 3D structured light imaging system, consisting of the GVD PDC03 projector (800 × 1200 pixels) and the IDS UI-124XSE-M camera (1600 × 1200 pixels), and compared the performance of our motion compensation algorithm with other algorithms on the same system. This was carried out to demonstrate that the compensation accuracy of our algorithm has a certain level of generalizability. Of course, different structured light imaging systems inherently have varying levels of precision. However, the 3D measurement system used in our experiments achieves an accuracy of better than 0.03 mm when measuring static objects. Additionally, with the application of our motion compensation algorithm, we are able to achieve similar high-precision measurements (within 0.03 mm) in dynamic scenes. Therefore, the algorithm’s accuracy is also influenced by the inherent measurement accuracy of the system, and it will improve as the original system’s accuracy improves.

3.2. Dynamic Reconstruction in Complex Scenarios

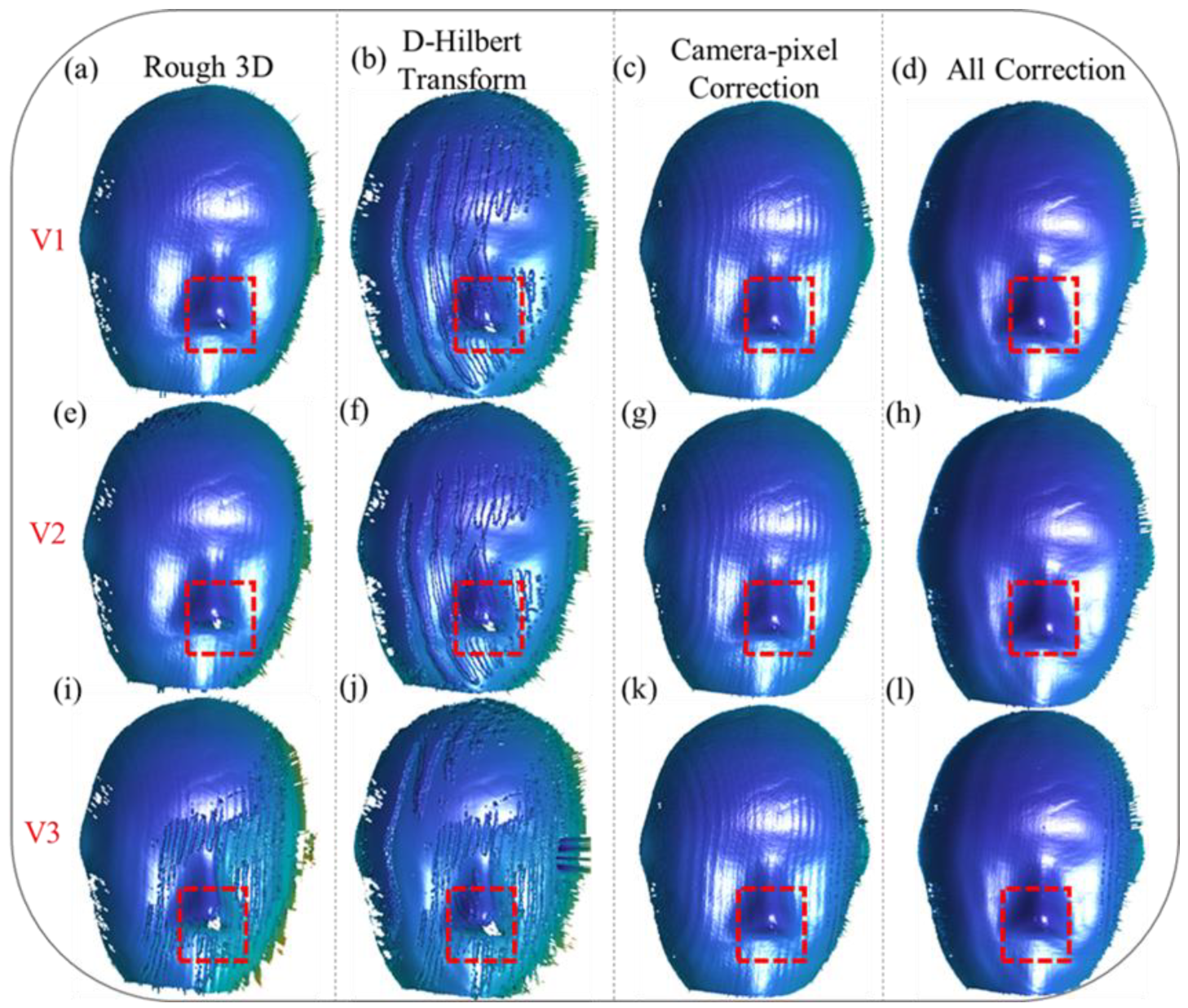

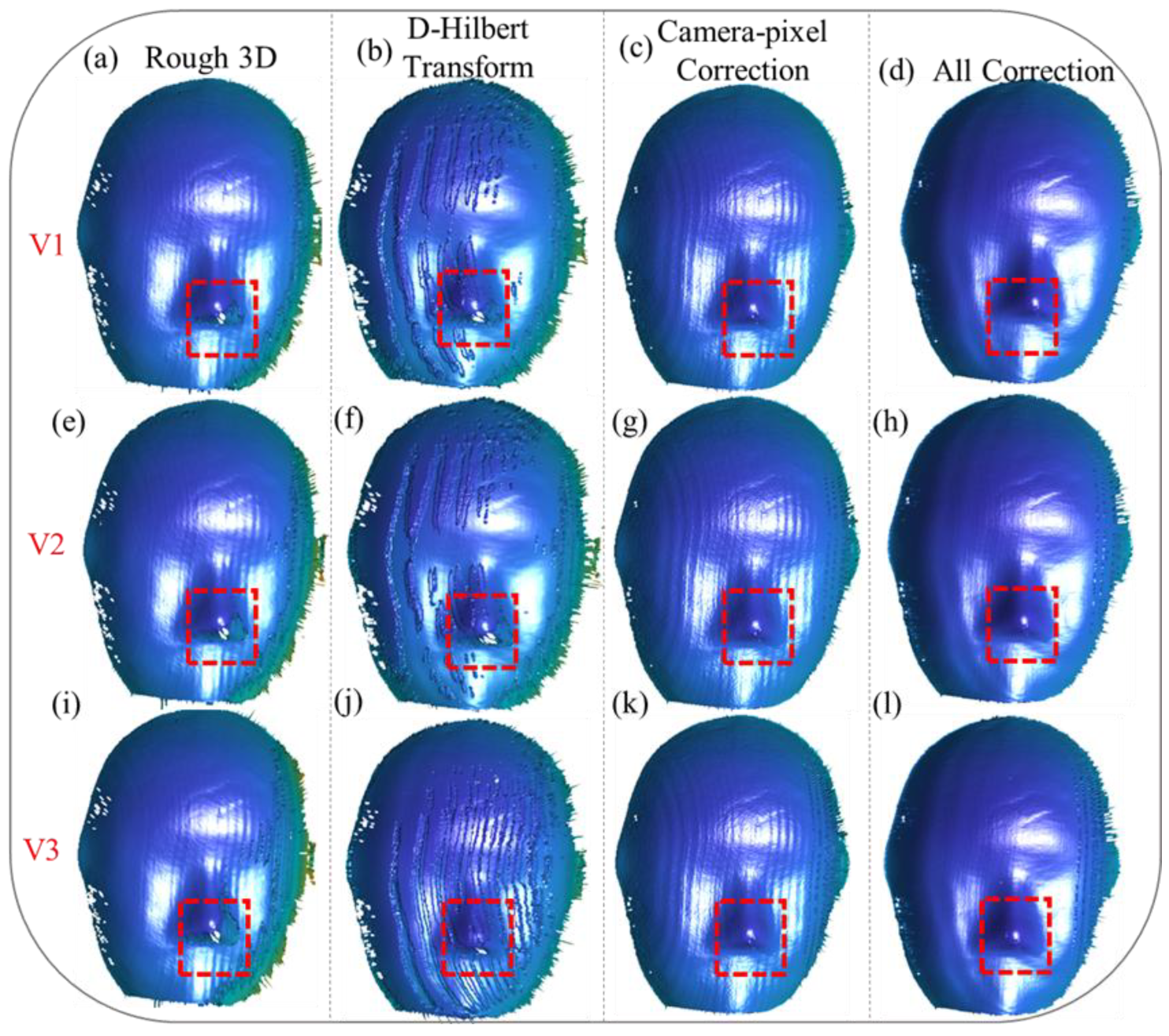

To evaluate the robustness of our proposed method under varying motion velocities, we conducted a series of 3D reconstruction experiments in which the mask moved at three distinct speeds, denoted V1, V2, and V3, along two different motion directions. These speeds were chosen to cover a range of motion dynamics, from relatively slow movements to faster scenarios that test the limits of motion compensation techniques. This setup allowed us to systematically assess whether the method maintains reconstruction accuracy across velocities.

Furthermore, to highlight the superior generalizability and effectiveness of our proposed method compared to existing motion compensation approaches, we performed a comprehensive comparative analysis. This analysis included reconstruction results obtained using our algorithm, as well as those achieved through motion error compensation with the Hilbert transform, a commonly employed technique in the field. By comparing the outcomes, we aimed to demonstrate how our approach not only addresses camera-pixel mismatches, but also effectively mitigates phase-shifting errors, which are often inadequately handled by traditional methods.
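For readers unfamiliar with the Hilbert-transform baseline, the sketch below shows one common formulation; it is an assumption about the general technique, not the exact implementation compared in this section. The phase is computed once from the captured fringes and once from their Hilbert transforms, and the two maps are averaged so that the periodic phase error cancels to first order. The sketch assumes fringes whose phase increases along each image row, a periodic FFT-based Hilbert transform, three phase steps, and hypothetical phase-shift errors of 0, 0.1, and 0.2 rad.

```python
import numpy as np

def hilbert_rows(x):
    """Hilbert transform along the last axis (imaginary part of the analytic signal)."""
    x = np.asarray(x, dtype=float)
    m = x.shape[-1]
    h = np.zeros(m)
    h[0] = 1.0
    if m % 2 == 0:
        h[m // 2] = 1.0
        h[1:m // 2] = 2.0
    else:
        h[1:(m + 1) // 2] = 2.0
    return np.imag(np.fft.ifft(np.fft.fft(x, axis=-1) * h, axis=-1))

def phasor(frames):
    """Complex sum whose angle is the N-step wrapped phase."""
    frames = np.asarray(frames, dtype=float)
    n = frames.shape[0]
    delta = 2 * np.pi * np.arange(n) / n
    w = np.exp(-1j * delta).reshape((n,) + (1,) * (frames.ndim - 1))
    return np.sum(frames * w, axis=0)

def hilbert_compensated_phase(frames):
    phi = np.angle(phasor(frames))
    # The Hilbert transform turns cos into sin (a -pi/2 shift); multiplying by 1j undoes it.
    phi_h = np.angle(1j * phasor(hilbert_rows(frames)))
    # Average the two phase maps on the unit circle to avoid wrap-around issues.
    return np.angle(np.exp(1j * phi) + np.exp(1j * phi_h))

def wrap(d):
    return np.angle(np.exp(1j * d))

# Synthetic row: 16 fringe periods, with assumed motion-induced phase-shift errors.
m = 512
phi_true = 2 * np.pi * 16 * np.arange(m) / m + 0.3
eps = np.array([0.0, 0.1, 0.2])
delta = 2 * np.pi * np.arange(3) / 3
frames = np.stack([0.5 + 0.4 * np.cos(phi_true + d + e) for d, e in zip(delta, eps)])

err_raw = wrap(np.angle(phasor(frames)) - phi_true)
err_comp = wrap(hilbert_compensated_phase(frames) - phi_true)
```

In this simulation the periodic component of `err_comp` is far smaller than that of `err_raw`, leaving mainly a constant offset. Note that this baseline operates on the fringe images pixel by pixel and does nothing about the camera-pixel mismatch, which is consistent with the edge-region failures discussed below.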

The comparative analysis was conducted across various motion conditions, encompassing both unidirectional and multidirectional motion patterns, as well as scenarios with different velocity profiles. This allowed us to evaluate the adaptability of our method for handling complex and dynamic motion environments. The experimental results underscore the robustness of our approach, showing that it consistently outperforms conventional methods in terms of reconstruction accuracy and error mitigation, regardless of the motion conditions.

As illustrated in Figure 5a,e,i, motion along the X and Y directions (X/Y) introduces significant errors in the initial 3D reconstructions. These errors are particularly pronounced as the velocity increases, leading to the emergence of periodic distortions on the reconstructed mask surface. These distortions disrupt the smoothness and continuity of the reconstructed surface, affecting the overall accuracy and reliability of the measurement. In addition to the periodic errors, the edge regions, as highlighted by the red boxes, suffer from severe issues of missing data caused by pixel shifting. These issues become increasingly problematic at higher velocities, as the rapid motion exacerbates the misalignment between the captured data and the actual object geometry.

Applying the Hilbert transform alone, as shown in Figure 5b,f,j, demonstrates its limitations in resolving these issues. While it can partially reduce periodic distortions, it proves inadequate for addressing the missing data problems in the edge regions, particularly in areas where motion effects are more pronounced. This underscores the necessity of addressing camera-pixel mismatches as a fundamental step in error compensation. Without correcting this mismatch, the reconstruction remains prone to inaccuracies, limiting its applicability in high-precision scenarios.

When the camera-pixel mismatch is corrected, as depicted in Figure 5c,g,k, significant improvements in reconstruction accuracy can be observed. Pixel misalignment-induced errors are effectively eliminated, and missing reconstruction details in the edge regions, particularly within the red-boxed areas, are successfully restored. The corrected reconstruction provides a more accurate representation of the object’s geometry, even under motion conditions. However, despite these advancements, periodic phase errors persist on the mask surface, indicating that addressing pixel mismatches alone is insufficient for achieving high-accuracy results.

Finally, as demonstrated in Figure 5d,h,l, our proposed method provides a comprehensive solution by simultaneously addressing both camera-pixel mismatch and phase-shifting errors. This dual-compensation approach resolves the missing data issues in the edge regions, completely restoring the lost details, and significantly mitigates the periodic distortions across all three velocities. The integration of these compensation steps results in smoother and more accurate 3D reconstructions, free from the limitations observed in previous approaches. These results highlight the robustness and effectiveness of our method for achieving high-fidelity reconstructions, even under challenging dynamic conditions. This comprehensive correction process not only ensures accurate measurements in complex motion scenarios, but also showcases the potential of our method for broader applications in dynamic 3D measurement tasks.

We also tested a motion scenario with the object moving along the X/Y-Z (X, Y, and Z) directions, and the results are illustrated in the following figure.

Figure 6a,e,i depict the initial 3D reconstruction results at different velocities, namely V1, V2, V3, showcasing the effects of motion on reconstruction accuracy. For motion along the X/Y-Z directions, the reconstructions reveal even more pronounced periodic errors compared to those along the X/Y directions, particularly as velocity increases. These periodic distortions are most evident on the mask surface, where the structured patterns exhibit noticeable deviations. Additionally, in the edge regions, as highlighted by the red boxes, the inaccuracies in the reconstruction become increasingly severe as velocity rises, leading to substantial data loss and misalignment issues.

Applying the Hilbert transform alone, as shown in Figure 6b,f,j, demonstrates its limited ability to address these errors. While this approach reduces some periodic distortions, it fails to eliminate them completely, especially in the edge regions, where pixel misalignment causes significant data loss. This highlights the critical need to address camera-pixel mismatches as a fundamental step in the error correction process.

When the camera-pixel mismatch is corrected, as shown in Figure 6c,g,k, there is a noticeable improvement in the reconstruction. Errors caused by pixel misalignment are effectively eliminated, and missing reconstruction details in the edge regions, particularly within the red-boxed areas, are successfully restored. Despite these advancements, periodic errors on the mask surface remain unresolved, indicating the limitations of addressing pixel mismatches alone.

Ultimately, our proposed method, as depicted in Figure 6d,h,l, provides a comprehensive correction that simultaneously compensates for camera-pixel mismatch and phase-shift errors. This dual-correction approach fully resolves inaccuracies in the edge regions, completely restoring the lost data, while significantly suppressing periodic errors across all velocities. The results clearly demonstrate the robustness and efficacy of the proposed method for handling complex motion scenarios, achieving precise and high-quality 3D reconstructions even under challenging conditions. These findings validate the capability of the proposed algorithm to enhance reconstruction accuracy, making it a valuable solution for dynamic 3D measurement tasks.

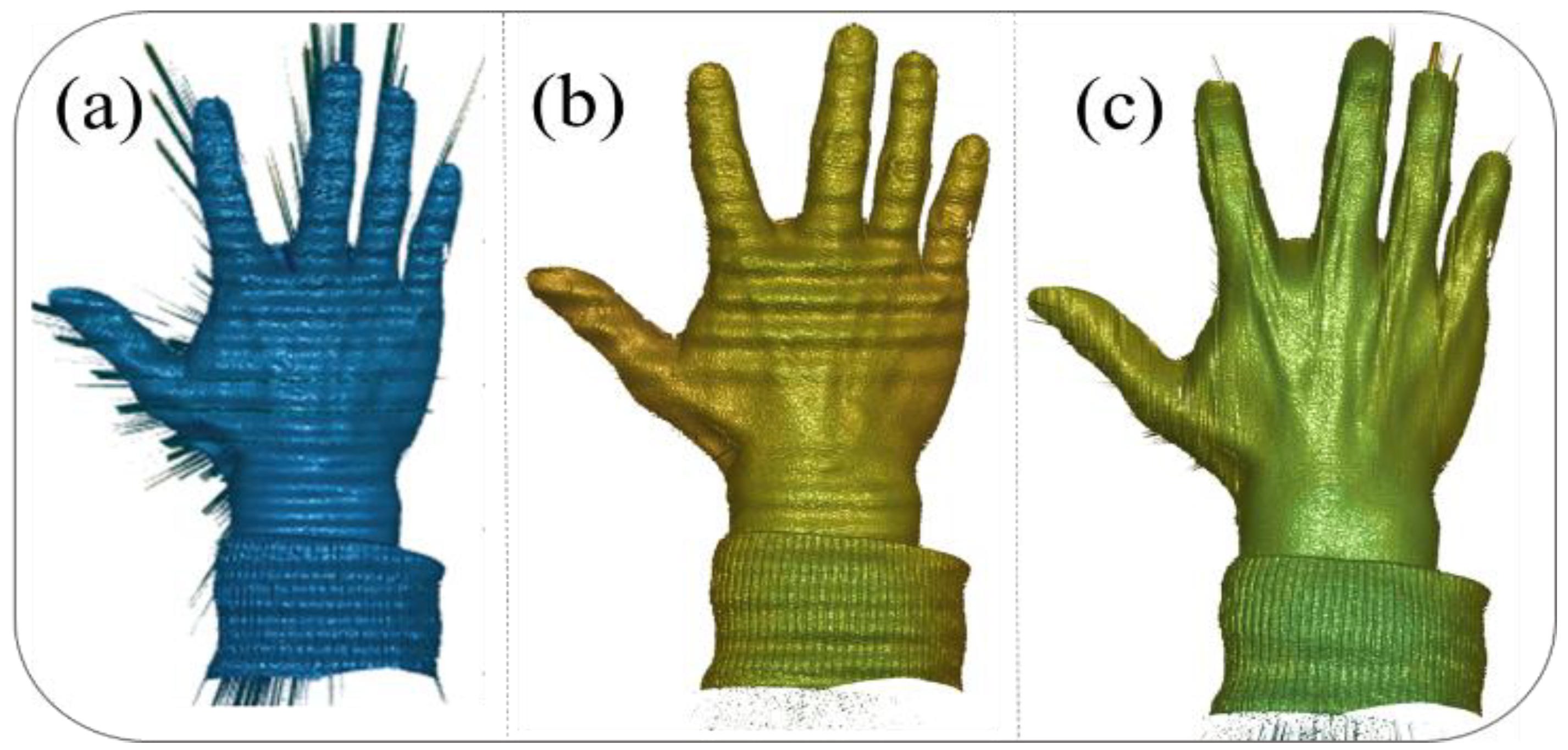

To further verify that our motion compensation method is not only effective for simple geometric shapes, but also applicable to complex objects, we selected a new reconstruction target for experimentation:

Figure 7 illustrates the reconstruction results for a moving hand under different correction strategies, highlighting the effectiveness of the proposed method. In Figure 7a, the reconstruction results using the conventional phase-shifting algorithm without any corrections exhibit significant distortions and inaccuracies, due to the presence of both camera-pixel mismatch and phase-shifting errors, particularly in regions of rapid motion. Figure 7b shows the results after correcting only the camera-pixel mismatch, where the pixel misalignment-induced errors are eliminated, leading to a clearer reconstruction. However, periodic phase errors remain visible, particularly on the surface of the hand, causing noticeable distortions. Finally, Figure 7c presents the reconstruction results after simultaneously compensating for both pixel mismatch and phase-shifting errors, demonstrating a significant improvement in accuracy. The hand’s surface is reconstructed with minimal distortions, and the previously prominent errors are effectively mitigated, showcasing the robustness and precision of the proposed method for handling dynamic scenes.