Highlights

What are the main findings?

- An ES-YOLO framework combining EACSA attention, LSCD lightweight spatial–channel decoupling, and a hierarchical multi-scale design markedly improves multi-scale ship detection, especially for small and dense targets.

- The proposed model outperforms common detectors in challenging port scenes (occlusion, reflections, and clutter), delivering higher recall and overall mAP.

What are the implications of the main findings?

- Improved accuracy and robustness for ship detection in complex marine/port environments, supporting real-time maritime monitoring and port management.

- Establishes the TJShip benchmark and provides a practical methodology to guide future multi-scale ship detection research in complex port environments.

Abstract

With the rapid development of remote sensing technology and deep learning, port ship detection based on single-stage algorithms has achieved remarkable results in optical imagery. However, most existing methods are designed and verified in specific scenes, such as a fixed viewing angle, a uniform background, or open sea, which makes it difficult for them to handle ship detection in complex environments involving cloud occlusion, wave fluctuation, complex harbor buildings, and multi-ship aggregation. To this end, the ES-YOLO framework is proposed to address these limitations. A novel edge-aware channel–spatial attention mechanism (EACSA) is proposed to enhance the extraction of edge information and improve the ability to capture feature details. A lightweight spatial–channel decoupled down-sampling module (LSCD) is designed to replace the down-sampling structure of the original network and reduce the complexity of the down-sampling stage. A new hierarchical scale structure is designed to balance detection performance across targets of different scales. A remote sensing ship dataset, TJShip, is constructed from Gaofen-2 images and covers multi-scale targets from small fishing boats to large cargo ships. Ablation and comparison experiments with the ES-YOLO model were conducted on the TJShip dataset. The results show that introducing the EACSA attention mechanism, LSCD, and the multi-scale structure improves the mAP of ship detection by 0.83%, 0.54%, and 1.06%, respectively, compared with the baseline model, while also performing well in precision, recall, and F1. Compared with Faster R-CNN, RetinaNet, YOLOv5, YOLOv7, and YOLOv8 under the same experimental conditions, the ES-YOLO model improves the mAP by 46.87%, 8.14%, 1.85%, 1.75%, and 0.86%, respectively, which provides research ideas for ship detection.

1. Introduction

With the rapid development of remote sensing imaging technology and deep learning, ship detection based on remote sensing images has become an important research direction in marine monitoring [1,2,3,4,5,6], port management, maritime traffic supervision, disaster prevention and reduction, and military target recognition. Ships are an important carrier of marine activities, so their detection and identification is of great significance for the rational development of marine resources, maritime security, and the maintenance of national maritime rights and interests. However, complex imaging conditions, the sea surface environment, and ship distribution characteristics pose great challenges for ship detection in remote sensing images. Ship targets vary significantly in size, shape, orientation, and illumination conditions, and are often disturbed by waves [7,8], sea fog, shadows, and background clutter, which significantly reduces the robustness of models in detecting small ships, densely distributed ships, and ships in low-contrast scenes.

With the continuous development of high-resolution remote sensing satellites and unmanned aerial vehicle (UAV) platforms, multi-source, multi-scale, and high-precision image data have become available, which provides rich data support for ship detection. Existing public datasets, such as HRSC2016 [9] and SeaShips [10], contain diverse samples from different sea areas and resolutions and provide a basis for research. However, these datasets still have problems, such as a concentrated ship scale distribution, insufficient attitude variation, and incomplete coverage of complex backgrounds, so they do not fully reflect the diversity and complexity of real port environments. In addition, some studies have attempted to construct high-resolution ship detection datasets for specific scenes to make up for the shortcomings of existing samples in complex coastal environments [11]. However, comprehensive data resources that jointly cover multi-scale targets, multiple ship types, and multiple background scenes are still lacking.

Ship detection methods have evolved from traditional handcrafted features to deep learning features. Early ship detection methods mainly relied on handcrafted features, such as HOG-SVM and Haar-like detectors [12,13], which are not robust in complex scenes. In recent years, deep learning, especially Convolutional Neural Networks (CNNs), has enabled models to automatically extract multi-scale features from massive remote sensing images, which significantly improves detection performance. Current mainstream deep learning detection methods fall into two categories: two-stage methods based on candidate boxes, such as Faster R-CNN [14,15,16,17], and end-to-end single-stage methods, such as the YOLO series [18,19,20,21,22] and RetinaNet [23]. The former perform well in accuracy, but their inference speed is slow. The latter have significant advantages in detection efficiency and real-time performance and are especially suitable for the rapid detection of large-scale remote sensing images. Despite the remarkable progress of deep learning methods, several key problems remain in complex ocean scenes. Firstly, in terms of multi-scale feature fusion, most existing detection frameworks rely on a fixed feature pyramid structure [24,25] (Feature Pyramid Network, FPN, or Path Aggregation Network, PAN), and the feature transfer path is relatively uniform. It is difficult to fully integrate semantic and detailed information at different scales, which leads to the weakening or even loss of small ship features after repeated down-sampling. Secondly, robustness is still insufficient under complex backgrounds. The complex texture of the sea surface, severe illumination changes, and strong wave reflections make ship edges blurred and low in contrast. When the textures of the ship and the background are similar, false detections and missed detections often occur.
For example, in the harbor area, dock buildings, moored ships, and shadows are often misclassified as ships. Thirdly, the lack of context modeling ability limits the detection accuracy. The semantic recognition of ships not only depends on their own morphological features, but is also closely related to the scene elements such as surrounding waters, shoreline, and port facilities. If this environmental information is ignored, it is difficult for the model to correctly distinguish ships from non-ship structures in near-shore or complex backgrounds. Therefore, researchers have proposed a variety of contextual information enhancement strategies to improve the scene understanding ability of the model. Gong et al. [26] proposed Context-RoIs mechanism to dynamically generate extended regions around the target region to capture local spatial relationships. Wu et al. [27] designed a global context aggregation module (GCAM) to establish the semantic dependency between object and background in a wider range. The global boundary attention module (GB module) proposed by Wang [28] improves the detection accuracy in complex scenes by integrating multi-dimensional global features and local detail information. Chen et al. [29] proposed the CLGSA method to enhance the generalization ability of the model through cross-sample semantic correlation. These methods effectively enhance the detection performance in complex scenes but also bring the problems of computational overhead and structural complexity. In addition, Guan et al. [30] proposed an oriented SAR ship detection method based on edge deformable convolution and point set representation, which enhances the modeling capability of hull boundary structures through an edge-aware deformable sampling strategy and flexible geometric representation. This method effectively improves the detection robustness under significant ship rotation and morphological deformation. 
However, it is mainly designed for oriented target extraction in SAR scenarios and relies heavily on explicit geometric modeling. Its improvements do not directly address the multi-scale feature coupling, complex background suppression, and edge–detail semantic integration challenges in optical remote sensing ship detection tasks considered in this work. Therefore, how to achieve lightweight and real-time detection while maintaining high accuracy has become an important direction of current ship detection research. In recent years, attention mechanism and multi-scale feature fusion strategy have been widely introduced to enhance the feature adaptation ability and multi-scale expression ability of the model.

To address the insufficient multi-scale feature fusion, weak edge information extraction, and strong background interference that affect ship detection in the complex ocean environments described above, this paper improves the YOLOv7 framework, aiming to increase the detection robustness and accuracy of the model in port and inshore scenes. The overall idea is to enhance the model's representation of ship edge features, spatial details, and multi-scale semantic information while keeping the network lightweight and real-time. To this end, this paper explores three aspects. Firstly, an edge and detail feature guidance mechanism is introduced to strengthen the response of the model to ship boundaries and structural features, so as to reduce interference from complex backgrounds such as waves and shadows. Secondly, an efficient strategy for decoupling spatial and channel features is designed to reduce feature redundancy and optimize the down-sampling process, so that the model can effectively transfer features between scales. Thirdly, a hierarchical multi-scale fusion detection structure is constructed to balance the feature representation of large, medium, and small ship targets. The proposed improvements are experimentally verified on the self-built multi-scene remote sensing ship dataset TJShip.

2. Materials and Methods

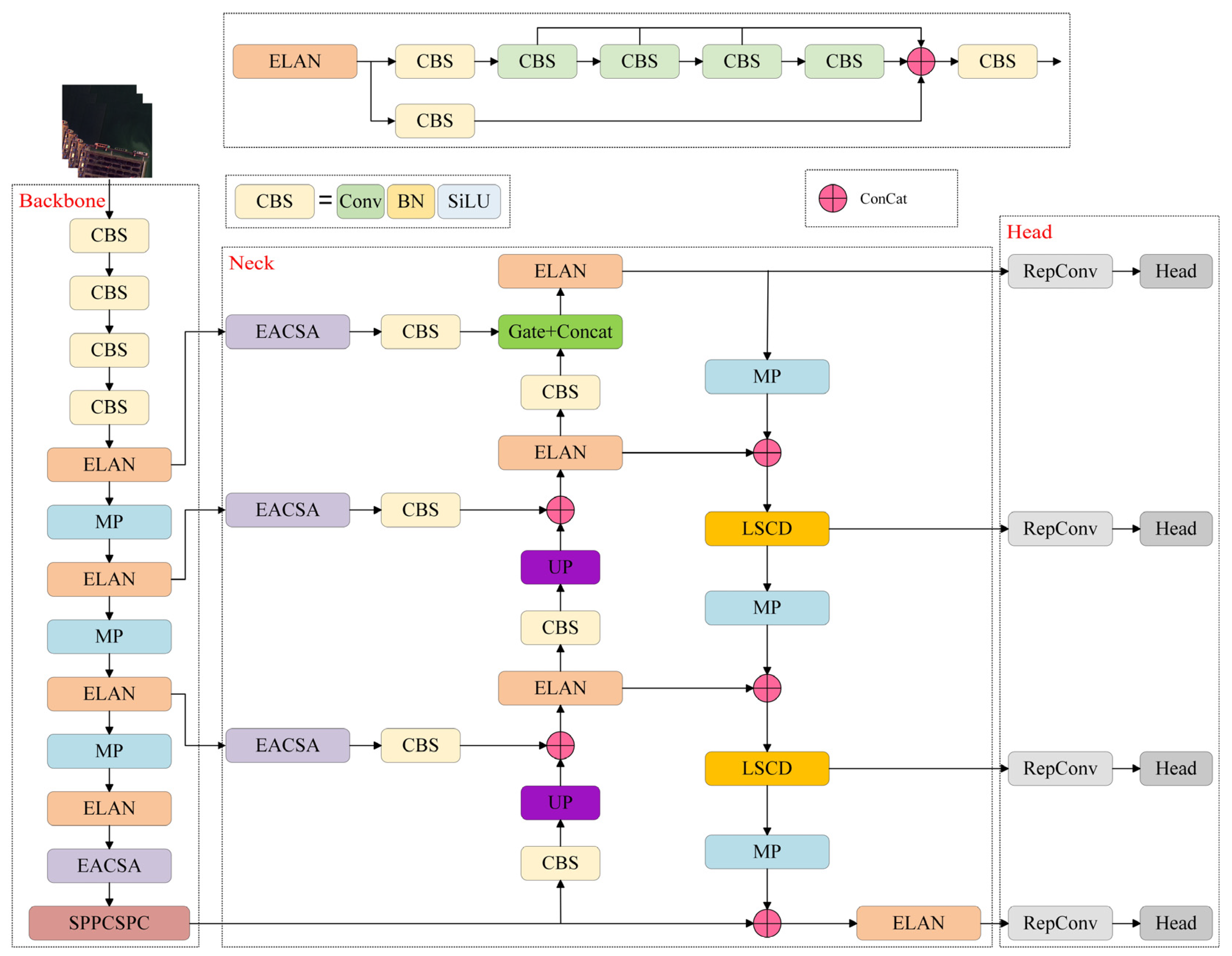

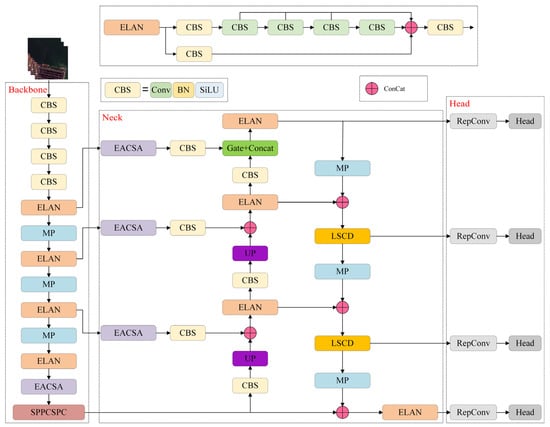

The overall architecture of the ES-YOLO model proposed in this paper is shown in Figure 1. It is composed of three parts: backbone, neck, and head. The backbone first processes the input image and extracts hierarchical features, which represent very low-, low-, medium-, and high-level semantic features, respectively. In the neck, the EACSA attention module processes these output features to extract the edge and detail information of ships. In the down-sampling stage, the output of the preceding layer is passed through the LSCD module, whose separable convolutions reduce computation while maintaining the ability to extract key features, especially for complex backgrounds and small ships. Finally, the detection head produces the outputs at four scales (i = 1, 2, 3, 4).

Figure 1.

Overall framework of ES-YOLO. The network consists of three main components: (1) backbone, which extracts hierarchical semantic features from very low-level to high-level representations; (2) neck, where the proposed EACSA attention module enhances edge contours and suppresses background interference, while LSCD performs lightweight spatial–channel decoupled down-sampling; and (3) head, which generates final multi-scale detection outputs at four resolutions. The proposed modules work collaboratively to improve fine-grained ship boundary extraction, multi-scale feature fusion, and detection robustness in complex port scenes.

2.1. EACSA Module

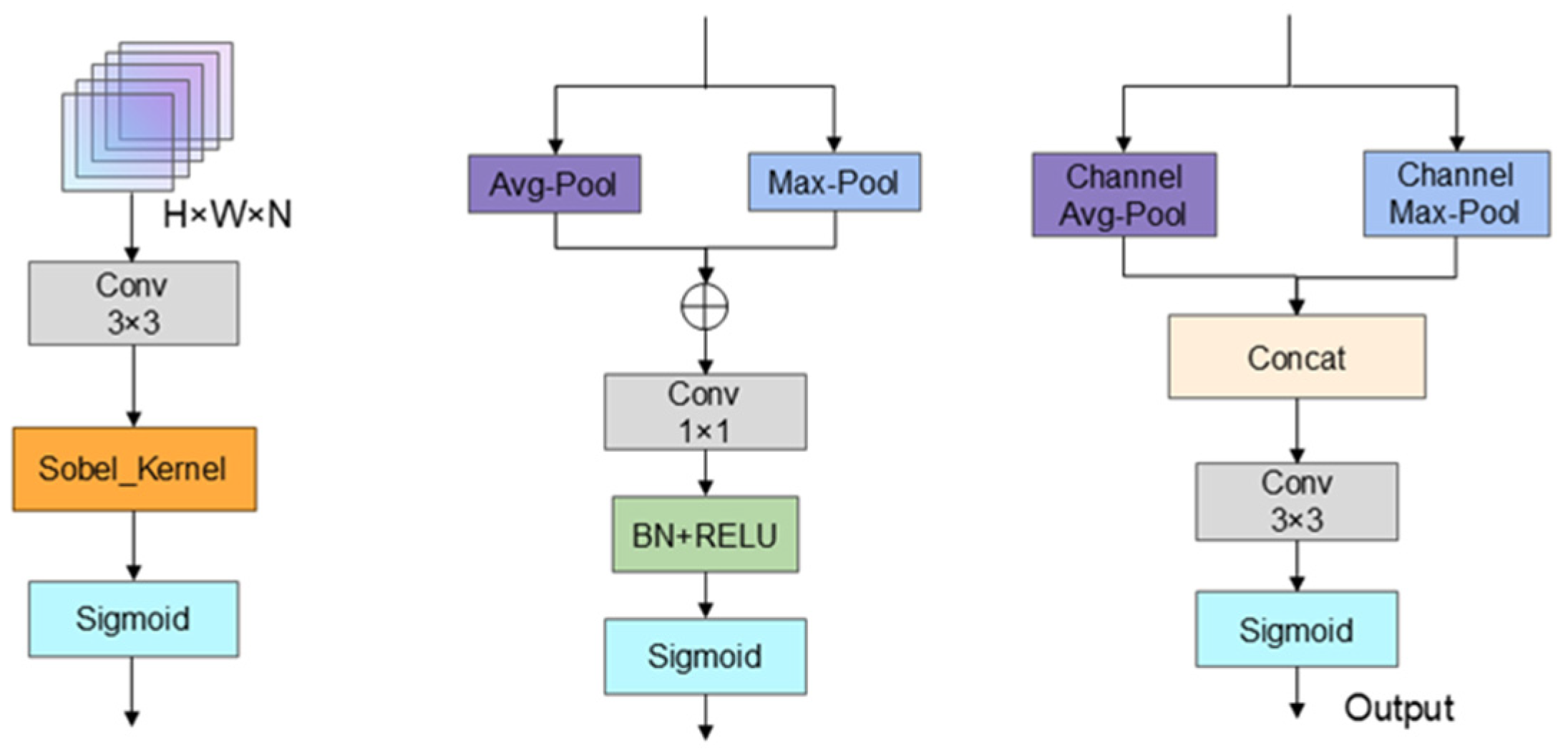

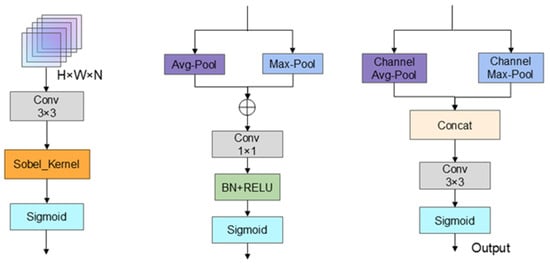

Traditional attention mechanisms in ship detection struggle to fully capture the structural information of ship edges in complex scenes and cannot effectively integrate channel and spatial features. To solve this problem, this paper proposes an edge-aware multi-dimensional attention mechanism, EACSA, as shown in Figure 2. Edge information is integrated into the channel and spatial attention modules: an edge attention module extracts the edge structure of the ship to enhance the sensitivity of the features to ship contours.

Figure 2.

Structure of EACSA. EACSA integrates edge extraction, channel attention, and spatial attention to strengthen contour features and suppress background responses. The module enhances ship boundary perception without adding significant computational overhead.

Consider an input feature map with a given height and width and a specified number of channels of 3. The edge detection convolutional layer uses depthwise separable convolution whose kernel is the predefined Sobel operator. This layer first convolves the input feature map to obtain an edge response. Taking the absolute value of this response and summing over the channel dimension yields a single-channel edge response map. The edge attention weight is generated by applying the Sigmoid activation function to this edge response map, and the weight is then applied to each channel of the input feature map to obtain the edge-enhanced feature map (Equation (1)).
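The edge attention step described above can be sketched in a few lines of framework-free Python. This is an illustrative sketch only, not the authors' implementation: the feature map is represented as nested lists rather than tensors, zero padding is assumed at the borders, and the horizontal and vertical Sobel responses are combined by summing their absolute values, which the text does not specify. The names `conv3x3` and `edge_attention` are hypothetical.

```python
import math

# Standard 3 x 3 Sobel kernels (horizontal and vertical gradients).
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def conv3x3(channel, kernel):
    """Apply a 3 x 3 kernel to one H x W channel, assuming zero padding."""
    h, w = len(channel), len(channel[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    y, x = i + di, j + dj
                    if 0 <= y < h and 0 <= x < w:
                        acc += kernel[di + 1][dj + 1] * channel[y][x]
            out[i][j] = acc
    return out


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def edge_attention(feature):
    """feature: list of C channels, each an H x W list of floats.

    Returns (edge-enhanced feature, single-channel attention weight).
    """
    h, w = len(feature[0]), len(feature[0][0])
    edge = [[0.0] * w for _ in range(h)]
    # Per-channel (depthwise) Sobel filtering, absolute value, channel sum.
    for channel in feature:
        gx = conv3x3(channel, SOBEL_X)
        gy = conv3x3(channel, SOBEL_Y)
        for i in range(h):
            for j in range(w):
                # Assumption: |Gx| + |Gy| combines the two directions.
                edge[i][j] += abs(gx[i][j]) + abs(gy[i][j])
    weight = [[sigmoid(v) for v in row] for row in edge]
    # Broadcast the single-channel weight over every input channel.
    enhanced = [
        [[channel[i][j] * weight[i][j] for j in range(w)] for i in range(h)]
        for channel in feature
    ]
    return enhanced, weight
```

In a flat region the Sobel response is zero, so the weight there is 0.5; along a sharp boundary the weight approaches 1, which is how the module emphasizes contours relative to smooth background.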

On the basis of edge enhancement, EACSA constructs a lightweight channel–spatial attention fusion structure. The channel attention module first combines global average pooling and global max pooling to capture the dependencies between channels. A fully connected network composed of two 1 × 1 convolutional layers then generates the channel attention weights. These weights are applied to the edge-enhanced feature map to obtain the channel-enhanced feature map (Equations (2) and (3)).

The spatial attention module performs max pooling and average pooling along the channel dimension, concatenates the two results, and generates the spatial attention weights (Equation (4)) through a 3 × 3 convolutional layer followed by the Sigmoid function. The spatial attention weights are applied to the channel-enhanced feature map to obtain the final enhanced feature map (Equation (5)).
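One plausible way to write the channel and spatial attention steps described above is given below. All symbols are assumptions introduced for illustration, since the original notation is not recoverable here: $F_e$ is the edge-enhanced feature map, $\mathrm{MLP}$ is the shared network of two 1 × 1 convolutions, $\sigma$ is the Sigmoid function, $f^{3\times 3}$ is a 3 × 3 convolution, and $[\cdot\,;\cdot]$ is channel-wise concatenation. Summing the two pooled branches before the Sigmoid, as in CBAM-style attention, is also an assumption.

```latex
\begin{aligned}
M_{ch} &= \sigma\big(\mathrm{MLP}(\mathrm{GAP}(F_e)) + \mathrm{MLP}(\mathrm{GMP}(F_e))\big), \\
F_{ch} &= M_{ch} \odot F_e, \\
M_{sp} &= \sigma\Big(f^{3\times 3}\big([\mathrm{AvgPool}_{c}(F_{ch})\,;\ \mathrm{MaxPool}_{c}(F_{ch})]\big)\Big), \\
F_{out} &= M_{sp} \odot F_{ch}.
\end{aligned}
```

Here $\mathrm{GAP}$ and $\mathrm{GMP}$ pool over the spatial dimensions and produce one value per channel, whereas $\mathrm{AvgPool}_{c}$ and $\mathrm{MaxPool}_{c}$ pool over the channel dimension and produce one value per spatial position.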

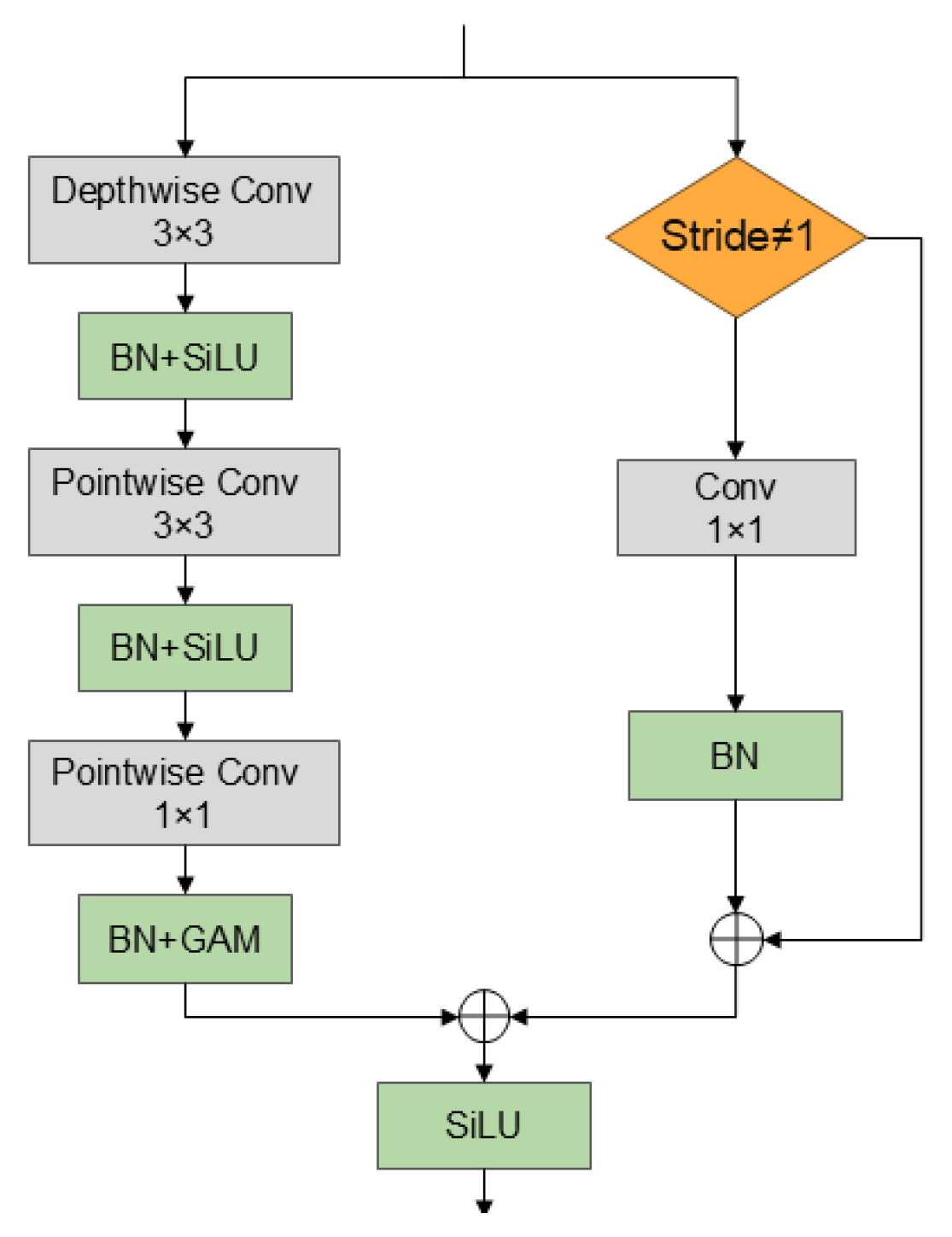

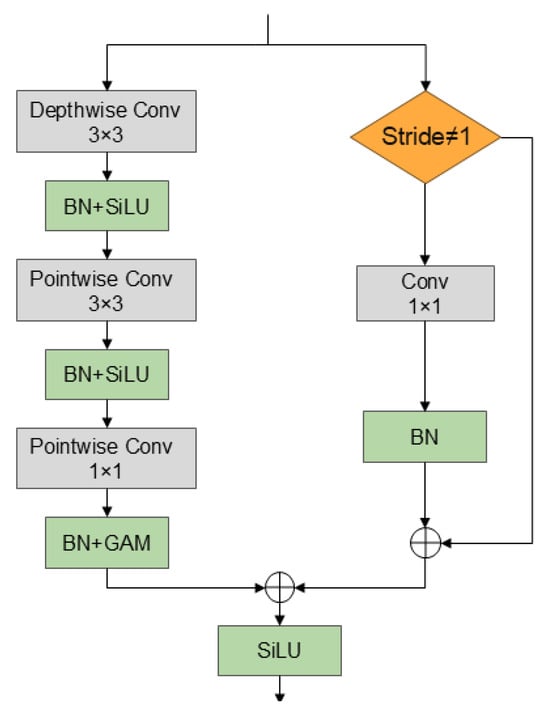

2.2. LSCD Module

In the ship detection task, an efficient down-sampling module is critical for the effective extraction and fusion of ship features at different scales. Traditional down-sampling modules struggle to balance the preservation of spatial detail with the extraction of channel semantics when handling ships of different scales. This paper therefore proposes the LSCD down-sampling module, shown in Figure 3, which combines a spatial–channel decoupling design with the lightweight attention mechanism GAM [31]. LSCD decouples spatial and channel operations to achieve refined feature processing. In the spatial decoupling operation, a depthwise separable convolution with a fixed kernel size and stride performs spatial down-sampling on the input feature: the depthwise separable convolution is applied first, followed by batch normalization and then the activation function, which yields the spatially down-sampled feature (Equation (6)).

Figure 3.

LSCD network structure. LSCD performs lightweight spatial–channel decoupled down-sampling to preserve discriminative features during resolution reduction. It improves small-ship feature retention while keeping computation efficient.

Depthwise separable convolution reduces computational complexity while preserving spatial details and avoids the excessive compression of spatial information caused by traditional convolution. In the channel decoupling operation, the spatially decoupled features pass through two 1 × 1 convolutional layers that adjust the channel dimension, with batch normalization and an activation function in between. The first 1 × 1 convolution adjusts the number of channels and is followed by batch normalization and the activation function (Equation (7)); the second 1 × 1 convolution is followed by batch normalization only (Equation (8)). Reducing the channel dimension first and then increasing it enhances the interaction between channels and improves feature expression.
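The saving from depthwise separable convolution can be made concrete with a parameter count. The short sketch below compares a standard convolution with its depthwise separable counterpart, ignoring bias terms; the channel counts in the usage example are illustrative values, not settings taken from the paper.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a k x k depthwise convolution plus a 1 x 1 pointwise one."""
    depthwise = k * k * c_in      # one k x k filter per input channel
    pointwise = c_in * c_out      # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Illustrative example: 64 -> 128 channels with a 3 x 3 kernel.
print(standard_conv_params(64, 128, 3))        # 73728
print(depthwise_separable_params(64, 128, 3))  # 8768
```

For these example sizes the separable form uses roughly one eighth of the weights, and the ratio approaches 1/k² + 1/c_out in general.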

To further highlight ship characteristics and suppress background noise, LSCD embeds the lightweight attention mechanism GAM. GAM computes channel attention and spatial attention separately and multiplies them with the input features. The channel attention is realized by global average pooling followed by a 1 × 1 convolution and an activation function, and the spatial attention is realized by a 7 × 7 convolution, which gives the attention-weighted features (Equation (9)). Together, the two branches highlight the channel and spatial features of the ship.

To avoid information loss during down-sampling, LSCD introduces a residual connection. When the number of input channels differs from the number of output channels, the input features are adjusted by a 1 × 1 convolution and batch normalization to obtain the residual features (Equation (10)). The attention-processed features and the adjusted residual features are then added, and the activation function is applied to the sum to obtain the module output. The residual connection ensures that important features are transmitted through the down-sampling process, alleviates the vanishing gradient problem, and improves the stability of model training and the feature extraction ability.
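The LSCD data flow described in this section can be summarized compactly as follows. This is a hedged reading of the prose, not the paper's original equations: the symbols $X$, $X_s$, $X_1$, $X_2$, $X_a$, $X_r$, and $Y$ are assumed names, $\delta$ denotes the activation function, $\mathrm{BN}$ batch normalization, and $\mathrm{DWConv}$ the depthwise separable convolution used for spatial down-sampling.

```latex
\begin{aligned}
X_s &= \delta\big(\mathrm{BN}(\mathrm{DWConv}(X))\big)
      && \text{spatial decoupling} \\
X_1 &= \delta\big(\mathrm{BN}(\mathrm{Conv}_{1\times 1}(X_s))\big)
      && \text{channel reduction} \\
X_2 &= \mathrm{BN}\big(\mathrm{Conv}_{1\times 1}(X_1)\big)
      && \text{channel expansion} \\
X_a &= \mathrm{GAM}(X_2)
      && \text{channel and spatial attention} \\
X_r &= \mathrm{BN}\big(\mathrm{Conv}_{1\times 1}(X)\big)
      && \text{shortcut when } C_{in} \neq C_{out} \\
Y   &= \delta(X_a + X_r)
      && \text{residual fusion}
\end{aligned}
```

For the addition in the last line to be well defined, the shortcut $X_r$ must have the same reduced spatial size as $X_a$, for example through a strided 1 × 1 convolution; the text does not state how this is handled, so that detail is left open here.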

Unlike existing down-sampling operators (e.g., RepConv, DSConv, and GSConv), the proposed LSCD performs spatial and channel decoupling in two independent branches and then fuses them with a lightweight attention gate. This design differs from prior methods that only compress channel dimensions or only factorize the convolution kernel and is the core originality of our down-sampling modification.

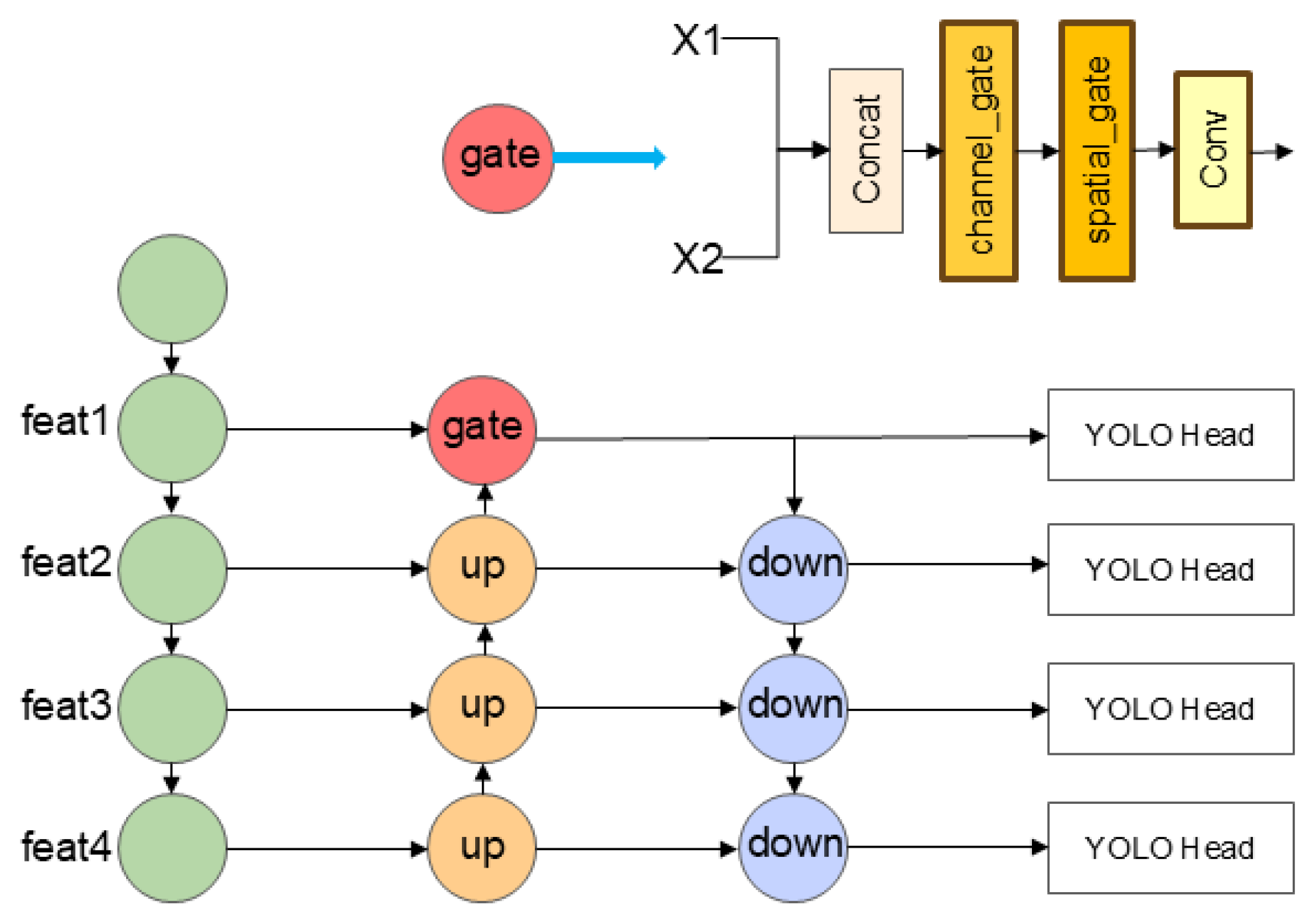

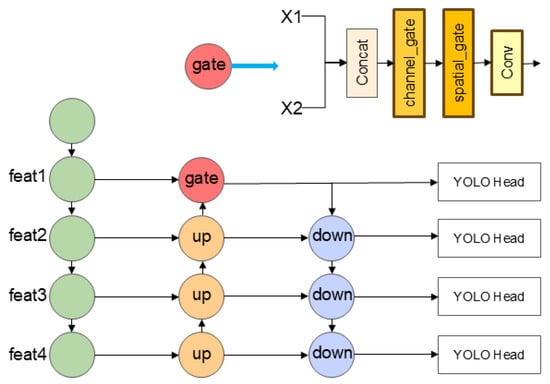

2.3. Multi-Scale Structure

To address the insufficient ability of YOLOv7 to detect multi-scale ships, especially small ships in complex scenes, this paper introduces a hierarchical scale feature enhancement structure based on the original feature pyramid network PANet [32]. As shown in Figure 4, cross-level feature fusion and a gating mechanism are used to construct a more refined multi-scale feature interaction path.

Figure 4.

Multi-scale structure. Enhanced feature interaction across scales strengthens the detection of ships with large size variations. Channel–spatial gating further suppresses background noise during multi-scale information flow.

To avoid noise interference during cross-scale feature fusion, a Channel Spatial Gate module is introduced to dynamically adjust the contribution of features from different sources through the attention mechanism. At each scale fusion node, global average pooling and global max pooling are applied to the input features to generate the channel attention weights. At the same time, spatial convolution captures the local context and generates spatial attention masks. The gating mechanism outputs weights between 0 and 1 through the Sigmoid function, adaptively selects the effective feature regions, and suppresses the interference of irrelevant background in the fused features. For example, in small ship detection, the gating module preferentially activates the edge and contour information in the low-level features, which complements the high-level semantic features and improves ship localization accuracy.

The input feature maps (i = 1, 2) are concatenated, and channel gating and spatial gating are then applied to the concatenated feature map in turn. Finally, a 1 × 1 convolutional layer adjusts the number of channels so that it matches the total number of channels before concatenation, which gives the final output (Equations (11)–(13)).
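The gated fusion step can be written schematically as below. The notation is assumed for illustration only: $F_1$ and $F_2$ are the two input feature maps, $F_{cat}$ is the concatenated feature map, and $G_{ch}$ and $G_{sp}$ denote the channel gate and the spatial gate, each of which multiplies its input by Sigmoid weights in the range 0 to 1.

```latex
\begin{aligned}
F_{cat} &= \mathrm{Concat}(F_1, F_2), \\
F_{g}   &= G_{sp}\big(G_{ch}(F_{cat})\big), \\
F_{out} &= \mathrm{Conv}_{1\times 1}(F_{g}).
\end{aligned}
```

Applying the channel gate before the spatial gate follows the order given in the text ("in turn"); the internal form of each gate is not reconstructed here.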

3. Data and Experimental Setup

3.1. Experimental Dataset Description

- (1) TJShip dataset

Tianjin Port (38°57′55″–39°10′50″ N, 117°31′50″–117°45′30″ E) is the largest comprehensive port in North China, with a total area of about 384 km². It has a deep-water channel, a diversified berth system, and complex ship activity involving cargo ships, passenger ships, fishing boats, engineering ships, and other ship types, which makes it an ideal experimental area for port ship monitoring research.

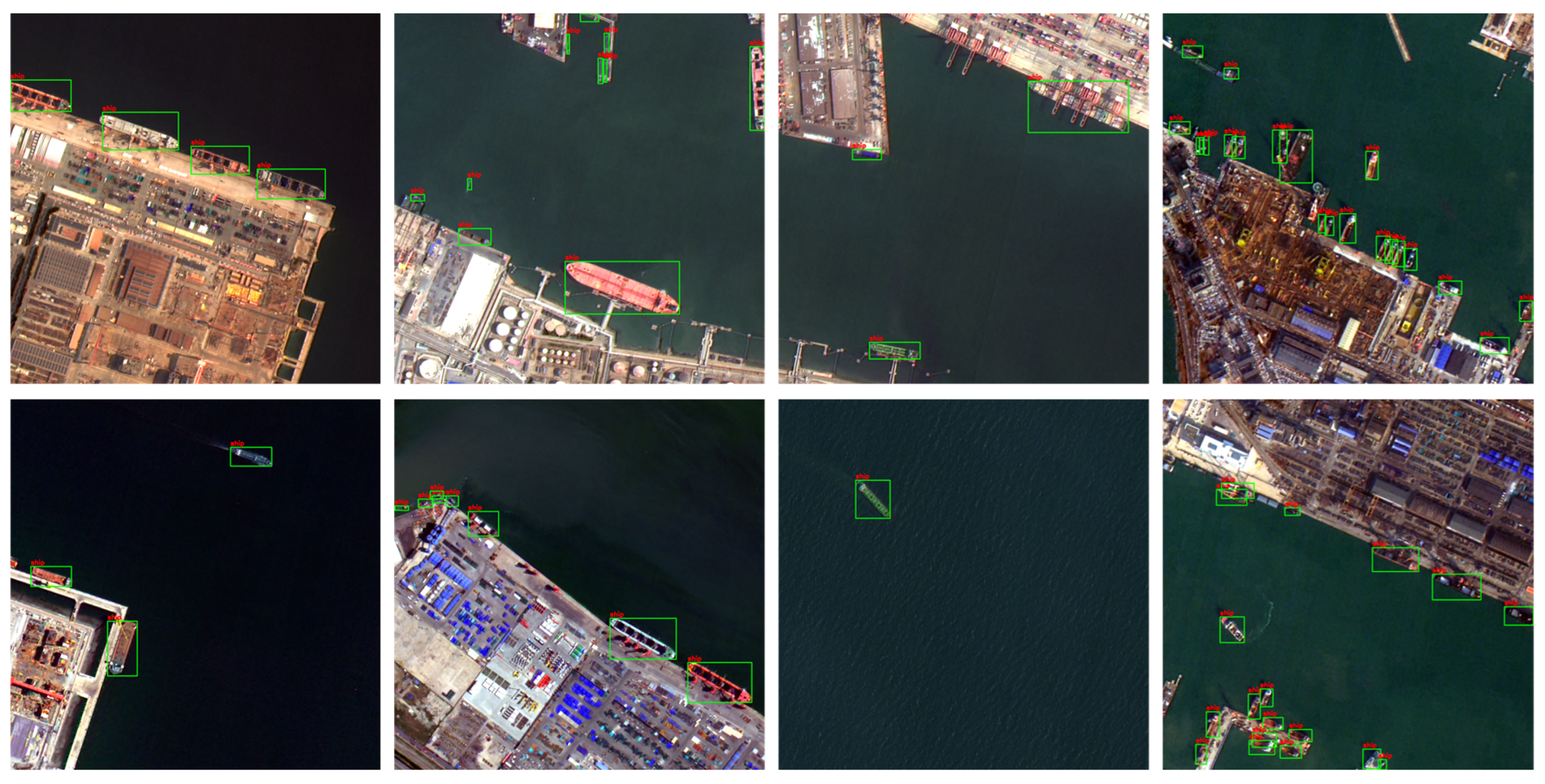

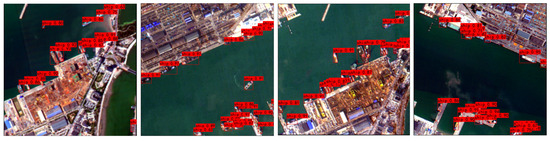

In this paper, the TJShip dataset is produced from Gaofen-2 (GF-2) satellite imagery. Two GF-2 remote sensing images acquired under good weather conditions on 5 December 2023 and 19 June 2024 were selected as data sources. The images were preprocessed with radiometric calibration, atmospheric correction, orthorectification, image cropping, and image fusion. The preprocessed images were then cropped into 1024 × 1024 tiles, and the tiles were augmented with random flipping, random rotation, random scaling, and similar operations to increase the diversity of the training data. The TJShip dataset consists of 1863 images, which were divided into a training set and a test set at a ratio of 8:2. Details of the dataset are shown in Table 1 and Figure 5.
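The cropping and splitting steps can be illustrated with a small sketch. It is not the authors' pipeline: it assumes non-overlapping tiles, discards partial tiles at the image border, and shuffles with a fixed seed before the 8:2 split, none of which is specified in the text. The function names `tile_origins` and `split_train_test` are hypothetical.

```python
import random


def tile_origins(height, width, tile=1024):
    """Top-left (row, col) corners of non-overlapping tile x tile crops.

    Assumption: crops that would extend past the image border are dropped.
    """
    return [
        (y, x)
        for y in range(0, height - tile + 1, tile)
        for x in range(0, width - tile + 1, tile)
    ]


def split_train_test(items, train_fraction=0.8, seed=0):
    """Shuffle items reproducibly and split them into train and test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    cut = int(len(items) * train_fraction)  # rounds down
    return items[:cut], items[cut:]


# Usage: a 2048 x 3072 scene yields a 2 x 3 grid of tiles.
origins = tile_origins(2048, 3072)
train, test = split_train_test(range(1863))
```

With the rounding-down rule assumed here, 1863 images split into 1490 training and 373 test images; the exact counts used in the paper are those reported in Table 1.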

Table 1.

Details of TJShip dataset.

Figure 5.

Selected training samples from the TJShip dataset.

- (2)

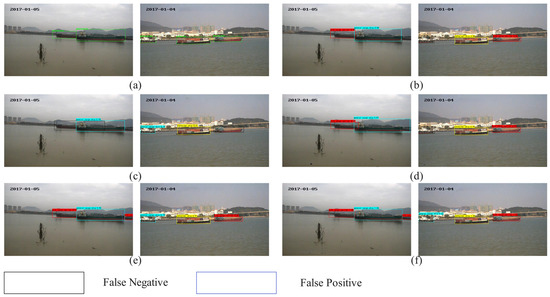

- SeaShips dataset

The SeaShips [10] dataset is a representative large-scale public benchmark widely used in ship detection research and is particularly suitable for port and near-shore surveillance scenarios. Developed by Wuhan University, the dataset includes six typical ship categories (ore carriers, bulk cargo carriers, general cargo ships, container ships, fishing boats, and passenger ships) captured in various real maritime monitoring environments. It features complex sea backgrounds, dense berth scenes, multi-scale targets, occlusions, and illumination variations, closely matching the characteristics of practical port surveillance imagery. All images are provided with accurate bounding-box annotations, enabling comprehensive evaluation of one-stage detection algorithms in challenging maritime conditions. As such, the SeaShips dataset serves as a fundamental benchmark for validating ship detection performance in port scenarios. Sample images from the dataset are shown in Figure 6.

Figure 6.

Selected training samples from the SeaShips dataset.

3.2. Experimental Design

The experiments were run on Ubuntu 18.04 on a server equipped with an Intel(R) Xeon(R) Gold 6430 processor and an NVIDIA GeForce RTX 4090 GPU. The training and testing environment was built on CUDA 11.3 and PyTorch 1.10.0. The training parameters include the number of epochs, the batch size, the initial learning rate (lr0), the final learning rate (lr1), and the momentum. The number of epochs was set to 100, the batch size to 4, lr0 to 1 × 10⁻³, lr1 to 1 × 10⁻⁵, and the momentum to 0.937 [33]. The IoU threshold was set to 0.5, and the SGD optimizer was used to update the network parameters iteratively. Input images were uniformly scaled to 1024 × 1024.
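The text gives only the two endpoints of the learning rate (lr0 and lr1) and does not state the shape of the decay between them. As a hedged illustration, the sketch below assumes a cosine decay from lr0 at the first epoch to lr1 at the last epoch; the schedule type and the function name `cosine_lr` are assumptions, not settings confirmed by the paper.

```python
import math


def cosine_lr(epoch, total_epochs=100, lr0=1e-3, lr1=1e-5):
    """Learning rate for a 0-indexed epoch under an assumed cosine decay."""
    # t runs from 0.0 at the first epoch to 1.0 at the last epoch.
    t = epoch / max(total_epochs - 1, 1)
    return lr1 + 0.5 * (lr0 - lr1) * (1.0 + math.cos(math.pi * t))


# Usage: inspect the rate at the start, middle, and end of training.
for e in (0, 50, 99):
    print(e, cosine_lr(e))
```

A linear decay between the same endpoints would be an equally valid reading of the stated parameters.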

- (1) Ablation experiments

To evaluate the contributions of the EACSA module, the LSCD module, and the multi-scale structure to the YOLO model, eight ablation experiments (none of which used a pre-trained model) were designed. The detailed configurations are shown in Table 2.

Table 2.

Ablation experiment parameter table.

To quantitatively evaluate the impact of the channel reduction ratio in the EACSA module on detection performance (precision, recall, mAP, and F1) and to find the compression ratio that best balances accuracy and efficiency, four groups of experiments were designed, as shown in Table 3.

Table 3.

Experimental parameter table of compression ratio.

To quantitatively compare the influence of five activation functions (LeakyReLU, GELU, PReLU, SiLU, and ReLU) on detection performance (precision, recall, mAP, and F1) in the LSCD down-sampling module, five groups of experiments were designed. The detailed experimental parameters are shown in Table 4.

Table 4.

Activation function experimental parameter table.

- (2) Comparative experiments

In order to evaluate the performance of ES-YOLO in ship detection, Faster R-CNN, RetinaNet, YOLOv5, and YOLOv8 are selected as the comparison models, and the parameters are shown in Table 5.

Table 5.

Comparative experimental parameter table.

3.3. Evaluation Metrics

In this paper, precision, recall, mAP, and F1 score are used to evaluate the accuracy of ship detection. Recall (Equation (14)) measures the ability of the model to find all actual positive examples; it is the ratio of the number of positive examples correctly identified by the model to the number of positive examples that actually exist.

$$\mathrm{Recall} = \frac{TP}{TP + FN} \quad (14)$$

where TP is the number of targets predicted as ships that are actually ships; FP is the number of non-ship objects that the model incorrectly identifies as ships; and FN is the number of actual ships that the model fails to detect.

Precision (Equation (15)) reflects the reliability of the model's positive predictions; it is the proportion of samples identified as positive by the model that are actually positive.

$$\mathrm{Precision} = \frac{TP}{TP + FP} \quad (15)$$

The F1 score (Equation (16)) reflects the trade-off between accurately identifying positive samples and avoiding false positives; it is the harmonic mean of precision and recall.

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \quad (16)$$

mAP (Equation (17)) is the mean of the average precision (AP) over all classes, where the IoU threshold is set to 0.5.

$$\mathrm{mAP} = \frac{1}{N} \sum_{i=1}^{N} AP_i \quad (17)$$

where N is the number of classes and AP_i is the average precision of the i-th class.
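As a worked illustration of these metrics, the following sketch computes IoU for axis-aligned boxes and derives precision, recall, and F1 from counts of true positives, false positives, and false negatives. It is a minimal example rather than the evaluation code used in the experiments; the box format `(x1, y1, x2, y2)` and the function names are assumptions, and the per-class AP values passed to `mean_average_precision` are taken as given.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def detection_metrics(tp, fp, fn):
    """Return (precision, recall, F1) from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def mean_average_precision(ap_per_class):
    """Mean of per-class average precision values."""
    return sum(ap_per_class) / len(ap_per_class)


# Usage: a prediction counts as a true positive when IoU >= 0.5.
is_match = iou((0, 0, 2, 2), (1, 1, 3, 3)) >= 0.5   # False, IoU is 1/7
p, r, f1 = detection_metrics(tp=8, fp=2, fn=2)
```

With 8 true positives, 2 false positives, and 2 missed ships, precision and recall are both 0.8, so F1 is also 0.8.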

4. Experimental Results and Analysis

4.1. Ablation Experiments

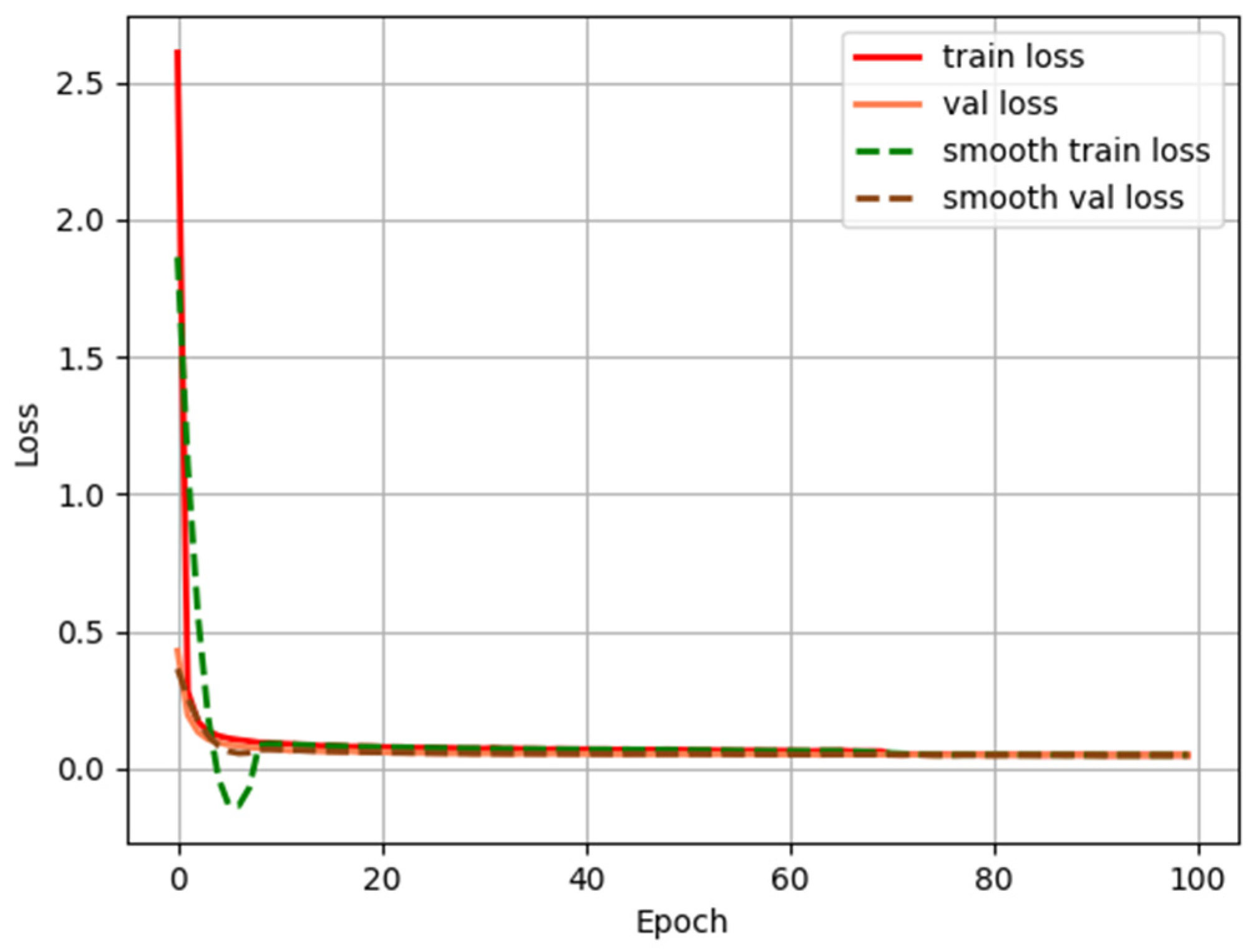

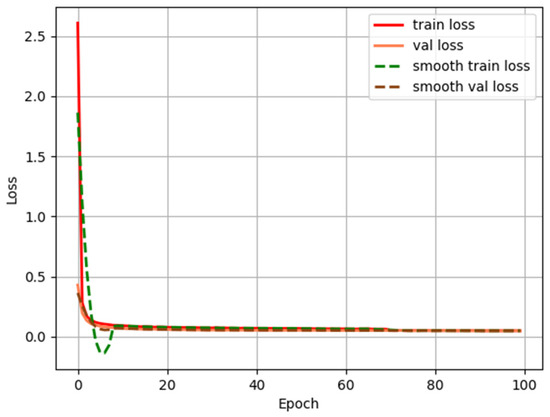

The accuracy evaluation results of ablation experiments are shown in Table 6, and the loss changes during the training of the ES-YOLO model are shown in Figure 7.

Table 6.

Results of ablation experiments.

Figure 7.

Training set and validation set loss values during model training.

Figure 7 shows the training and validation losses of the ES-YOLO model over 100 epochs. Both losses decrease as training proceeds, and the validation loss levels off after a certain point, indicating that the model trains normally.

- (1) EACSA

In the EACSA module, the channel attention realizes the adaptive recalibration of channel features through a two-layer convolution structure, and the compression ratio is used to control the dimension reduction ratio of the channel to achieve a balance between model complexity and feature expression ability. The experimental results are shown in Table 7.

Table 7.

Effect of different compression ratios on EACSA.

The experimental results show that the compression ratio has a significant impact on detection performance. When the reduction ratio is 4, more feature information is retained, but the redundancy between channels is high and the attention distribution is not concentrated. When the reduction ratio is 16 or 32, the channel information is excessively compressed and key semantic features are lost, resulting in a decrease in detection accuracy. The comparison shows that with a reduction ratio of 8, the model achieves 91.54% precision, 70.77% recall, 82.74% mAP, and 80% F1, which is the best overall performance. Moderate channel compression not only reduces the computational burden but also enhances the ability of the attention module to focus on key features, making the model more stable in small target detection and complex background scenes. Therefore, a reduction ratio of 8 can be used as the best balance between detection accuracy and model complexity for the EACSA module.

To verify the influence of the EACSA module on model performance, the module is added to the base model for comparative experiments, and the results are shown in Table 6. After the introduction of EACSA, the overall detection performance improves: precision increases to 94.01%, recall to 72.38%, mAP to 84%, and F1 to 82%. Precision increases by 2.9%, indicating that the false detection rate in complex backgrounds is significantly reduced. mAP increases by 0.83%, indicating that the overall detection accuracy is improved. Recall increases by 1.6%, which reflects the enhanced detection ability of the model for weak targets and low-contrast scenes. These results show that the EACSA module plays a positive role in feature extraction and salient region focusing. The performance improvement arises because the EACSA module enhances the responsiveness of the model to key features through a joint attention mechanism in the channel and spatial dimensions. Channel attention fuses global average pooling and max pooling to adaptively allocate channel weights, which strengthens semantically salient features and suppresses irrelevant background information, thereby reducing false detections and improving precision. Spatial attention captures salient regions in the two-dimensional space, so that the model can more accurately locate the edge and contour structure of ships against a complex sea background, thereby improving recall and F1. In addition, the Sobel convolution, the edge-aware mechanism embedded in EACSA, further strengthens the gradient response at target boundaries and improves the adaptability of the model to blurred contours, uneven illumination, and wave interference. This synergy between edge enhancement and attention focusing gives the model stronger environmental robustness while maintaining high detection accuracy.

- (2) LSCD

To investigate the impact of nonlinear activations on the proposed LSCD architecture, five commonly used activation functions (LeakyReLU, GELU, PReLU, SiLU, and ReLU) were evaluated under identical network configurations and training settings. The results are summarized in Table 8. Among them, ReLU achieves the highest detection accuracy, with precision, recall, mAP, and F1 reaching 92.53%, 70.46%, 83.56%, and 0.80, respectively. PReLU and GELU obtain comparable performance but remain slightly inferior to ReLU in all metrics, while SiLU and LeakyReLU show a noticeable decline in both recall and mAP.

Table 8.

Influence of different activation functions on the down-sampling structure.

Although SiLU is the default activation in YOLOv7, its smooth nonlinear compression weakens the response at object boundaries, resulting in insufficient preservation of fine-grained features for small ships. In contrast, the hard-threshold property of ReLU introduces stronger activation sparsity, effectively suppressing sea surface noise and enhancing the feature contrast between ships and background regions. This behavior is particularly beneficial in the down-sampling stage, where edge-aware decoupling is employed. Moreover, the residual and multi-branch fusion structure of LSCD mitigates the neuron inactivation issue commonly associated with ReLU, ensuring stable gradient flow during optimization.

Overall, the results indicate that activation selection is highly architecture dependent. In our LSCD framework, ReLU provides a more favorable trade-off between gradient sparsity, edge preservation, and convergence stability, leading to superior detection performance in complex maritime scenes.
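The sparsity argument above can be checked on synthetic data. The sketch below compares how many outputs are exactly zero under ReLU and SiLU for random pre-activations; the data are hypothetical standard-normal samples, and the experiment says nothing about detection accuracy, only about the hard-threshold behaviour attributed to ReLU.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def silu(x):
    # SiLU (swish): x * sigmoid(x)
    return x / (1.0 + np.exp(-x))


# Hypothetical pre-activations; a fixed seed keeps the run reproducible.
rng = np.random.default_rng(0)
pre_activations = rng.standard_normal(10_000)

relu_out = relu(pre_activations)
silu_out = silu(pre_activations)

# Fraction of outputs that are exactly zero (activation sparsity).
relu_zero_fraction = float(np.mean(relu_out == 0.0))
silu_zero_fraction = float(np.mean(silu_out == 0.0))

print(f"ReLU zero fraction: {relu_zero_fraction:.3f}")
print(f"SiLU zero fraction: {silu_zero_fraction:.3f}")
print(f"SiLU minimum output: {silu_out.min():.3f}")
```

ReLU zeroes every negative input, whereas SiLU maps negative inputs to small negative values, so weak responses are attenuated rather than removed.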

To evaluate the influence of the LSCD module on model performance, LSCD is added to the baseline model for a comparative test. The experimental results are shown in Table 6. When only the LSCD module is introduced, the precision, recall, mAP, and F1 of the model increase to 93.74%, 71.46%, 83.71%, and 81%, respectively. The LSCD module thus reduces the computational cost of feature down-sampling and improves detection efficiency while maintaining accuracy. It introduces a feature mapping that decouples space and channel, so that the down-sampling process handles spatial structure and semantic information separately, avoiding the information loss caused by feature coupling in traditional convolutional down-sampling. At the same time, the lightweight design inside the LSCD module reduces the number of parameters and computations while improving the accuracy of feature selection, which makes the model perform better in small-ship detection and complex-background suppression.
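To make the parameter saving from spatial-channel decoupling concrete, the following sketch counts convolution weights for two down-sampling designs. The decoupled layout used here (a 1x1 pointwise convolution for channels followed by a 3x3 depthwise stride-2 convolution for space) and the channel sizes are assumptions for illustration; the exact internal structure of LSCD is not restated in this section.

```python
def conv_params(c_in, c_out, k, groups=1, bias=False):
    """Number of learnable weights in a 2-D convolution layer."""
    assert c_in % groups == 0 and c_out % groups == 0
    params = (c_in // groups) * c_out * k * k
    return params + (c_out if bias else 0)


def standard_downsample_params(c_in, c_out, k=3):
    """One dense k x k stride-2 convolution mixes space and channels together."""
    return conv_params(c_in, c_out, k)


def decoupled_downsample_params(c_in, c_out, k=3):
    """Assumed decoupling: pointwise conv (channels) + depthwise conv (space)."""
    pointwise = conv_params(c_in, c_out, 1)
    depthwise = conv_params(c_out, c_out, k, groups=c_out)
    return pointwise + depthwise


# Hypothetical channel sizes for one down-sampling stage.
c_in, c_out = 256, 512
dense = standard_downsample_params(c_in, c_out)
decoupled = decoupled_downsample_params(c_in, c_out)
print(f"dense 3x3 stride-2 conv: {dense} weights")
print(f"decoupled down-sampling: {decoupled} weights")
print(f"reduction factor: {dense / decoupled:.2f}x")
```

Under these assumptions the decoupled stage needs roughly an order of magnitude fewer weights than the dense convolution, which is the kind of saving a lightweight down-sampling module targets.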

In addition, the stable contribution of LSCD is further confirmed by its combination with other modules. When LSCD and EACSA are used together, the mAP of the model improves by about 0.63% over the baseline, indicating that the two modules are complementary in the feature extraction and down-sampling stages. When LSCD is combined with the multi-scale structure, recall improves significantly, indicating that the LSCD module helps retain features at different scales and further improves detection recall.

In summary, the LSCD module significantly improves the information expression quality of the feature down-sampling stage under the premise of keeping the overall model size unchanged and improves the feature retention and complex background suppression effect of small ships. It is an important part of the ES-YOLO framework to achieve efficient feature extraction and performance improvement.

- (3)

- Multi-scale

To verify the influence of the multi-scale structure on model performance, the multi-scale structure is added to the baseline model for a comparative test. The experimental results are shown in Table 6. The precision, recall, mAP, and F1 of the model reach 91.03%, 72.62%, 84.23%, and 81%, respectively. Precision decreases slightly compared with the baseline model, while recall and mAP improve; mAP in particular increases by about 1.1%, indicating that the multi-scale structure enhances the ability of the model to detect objects of different scales, especially small objects.

The core idea is to let the model obtain high-level semantic information and low-level detail features simultaneously through parallel multi-scale feature pathways, which improves the diversity and complementarity of the feature space. Compared with traditional single-path feature transfer, this alleviates two problems that arise after repeated down-sampling: the loss of spatial resolution in high-level features and the insufficient semantic expression of low-level features. The improvement in recall indicates that the model is more sensitive to fine-grained targets such as small ships, and the overall improvement in mAP reflects that multi-scale information fusion enhances robustness in complex scenes. The slight decrease in precision may be caused by feature redundancy or noise introduced during scale fusion. The F1 value nevertheless remains higher than that of the baseline model, indicating that the multi-scale structure improves the comprehensiveness and robustness of detection while maintaining overall detection accuracy.
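The idea of combining high-level semantic features with low-level detail features can be sketched with a generic top-down fusion step. This is a simplified, FPN-style illustration rather than the hierarchical structure used in ES-YOLO; the helper names `upsample_nearest` and `top_down_fuse`, the element-wise addition, and the toy shapes are all assumptions.

```python
import numpy as np


def upsample_nearest(feat, factor=2):
    """Nearest-neighbour upsampling of a (C, H, W) feature map."""
    return feat.repeat(factor, axis=1).repeat(factor, axis=2)


def top_down_fuse(fine, coarse):
    """Add upsampled coarse (semantic) features to fine (detail) features."""
    factor = fine.shape[1] // coarse.shape[1]
    return fine + upsample_nearest(coarse, factor)


# Toy feature maps: a 4x4 high-resolution level and a 2x2 low-resolution level.
fine = np.zeros((1, 4, 4))
coarse = np.array([[[1.0, 2.0], [3.0, 4.0]]])
fused = top_down_fuse(fine, coarse)
print(fused[0])
```

Each coarse cell is broadcast to the 2x2 block of fine cells it covers, so the high-resolution map keeps its spatial layout while receiving the coarser level's response.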

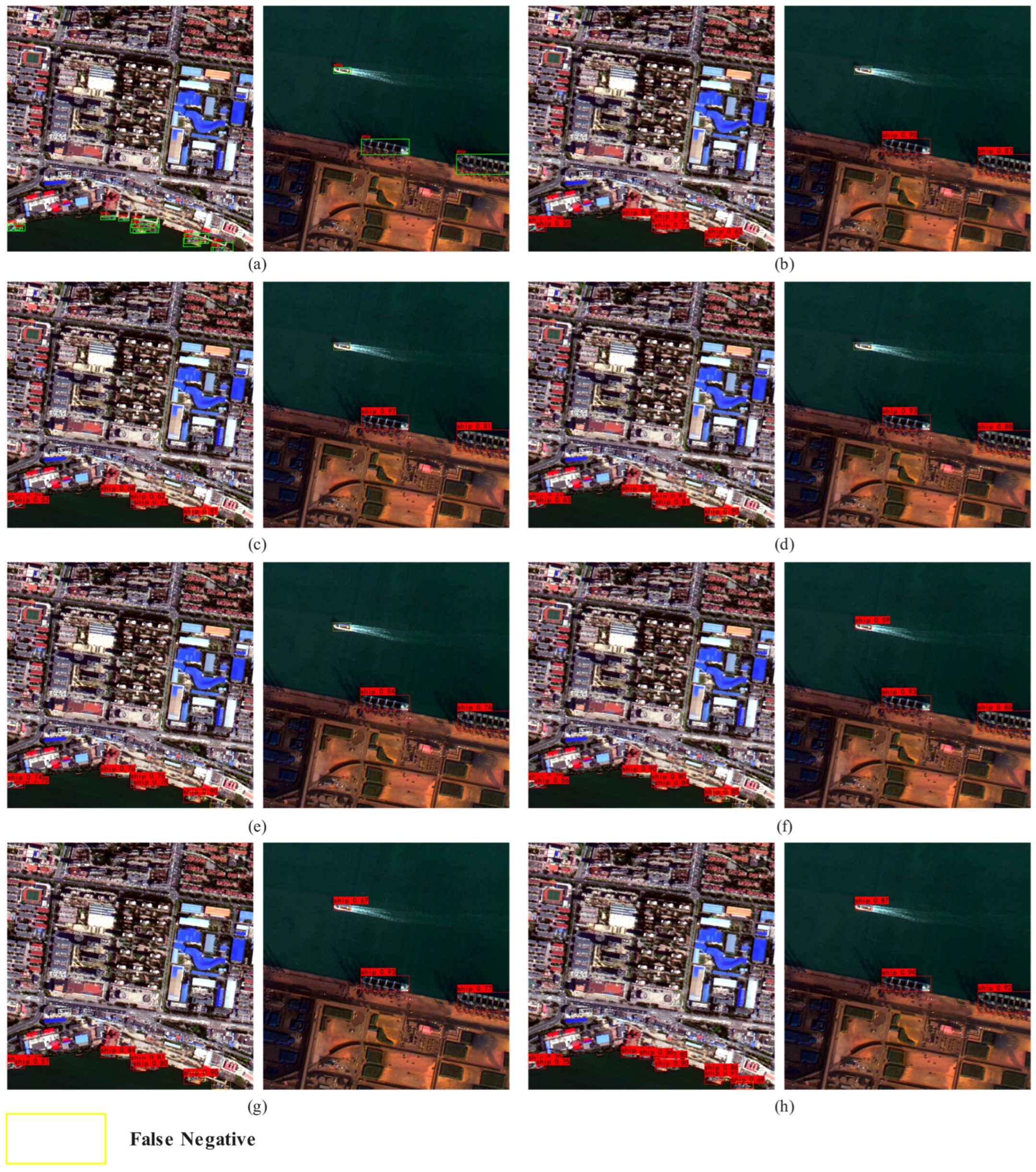

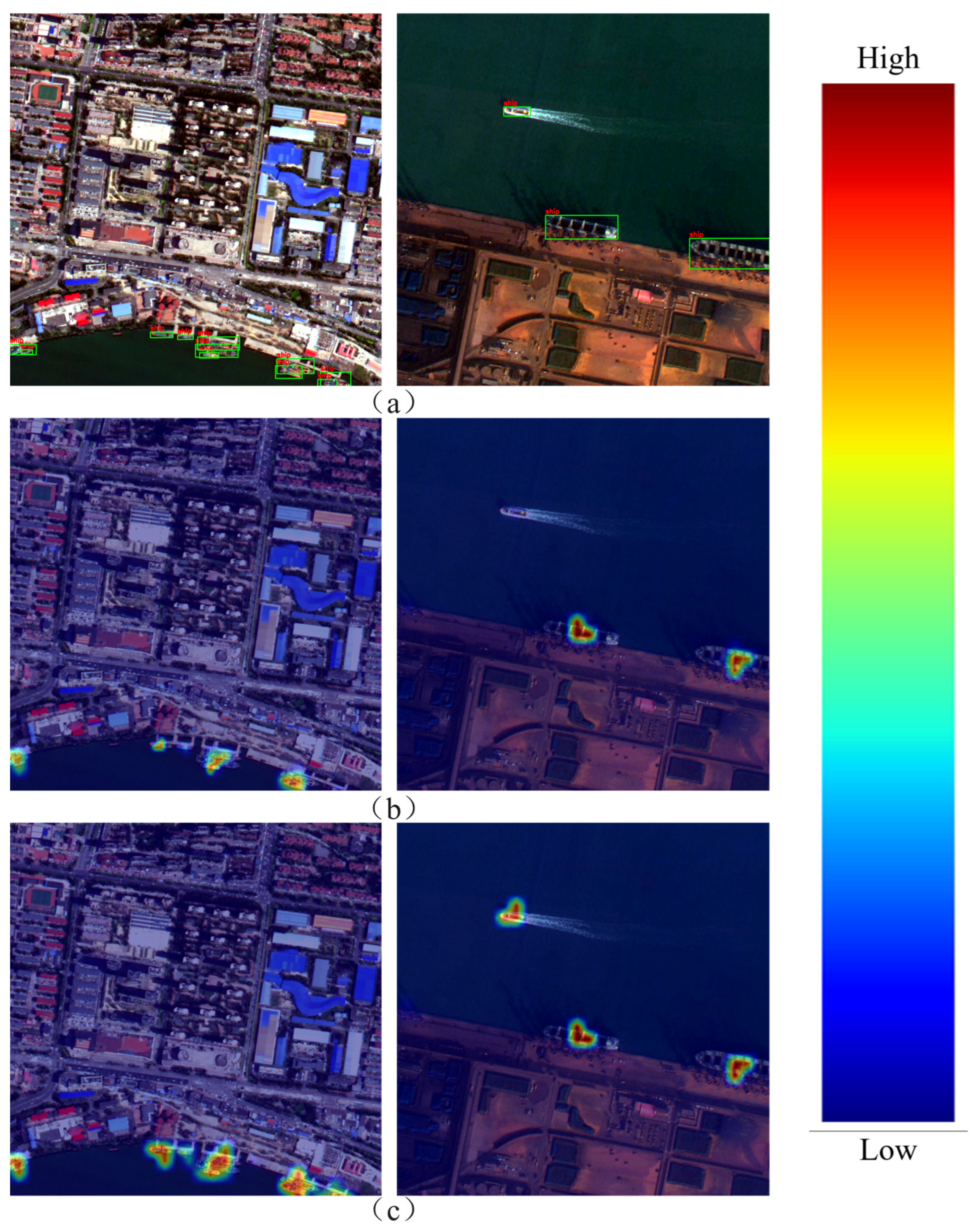

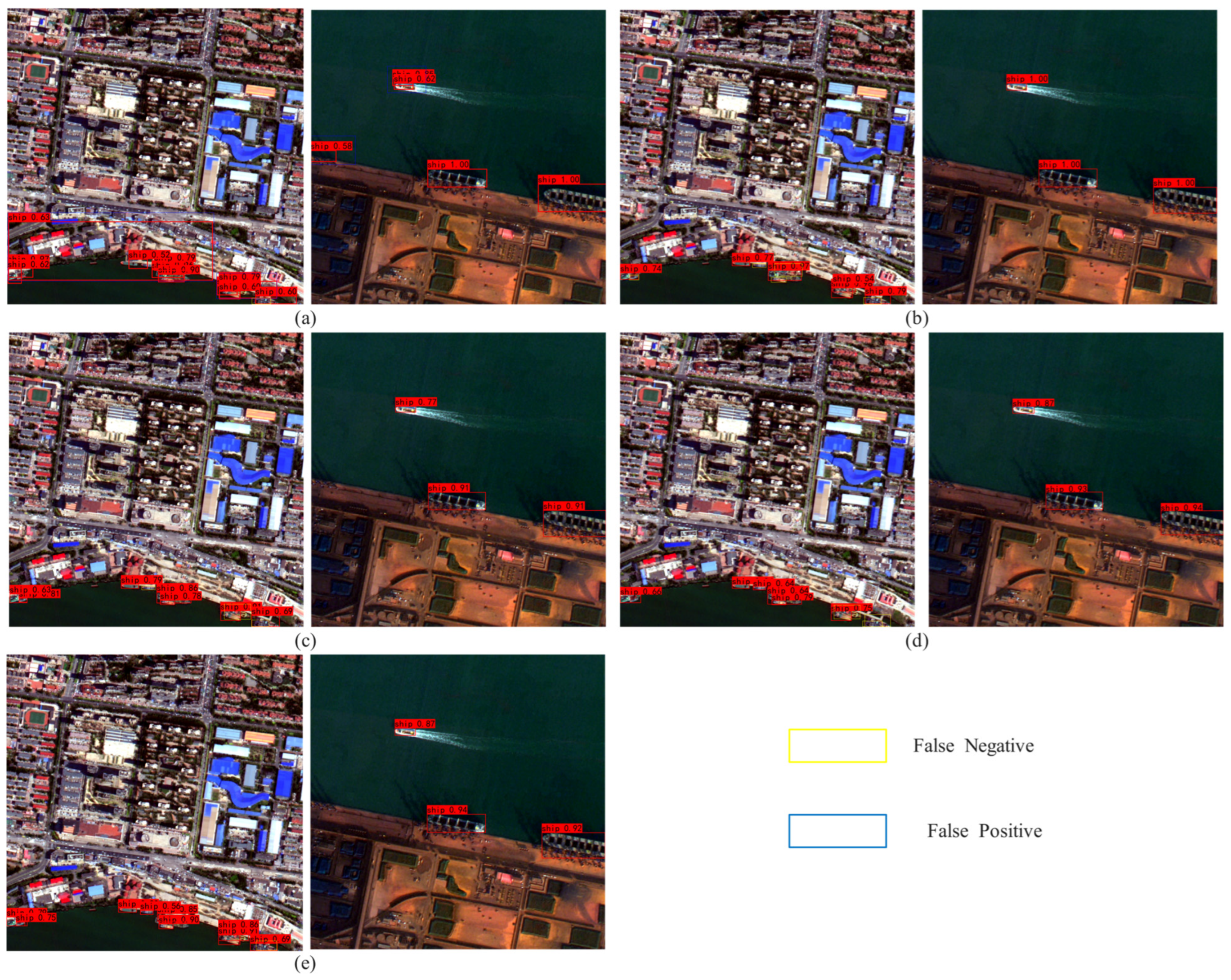

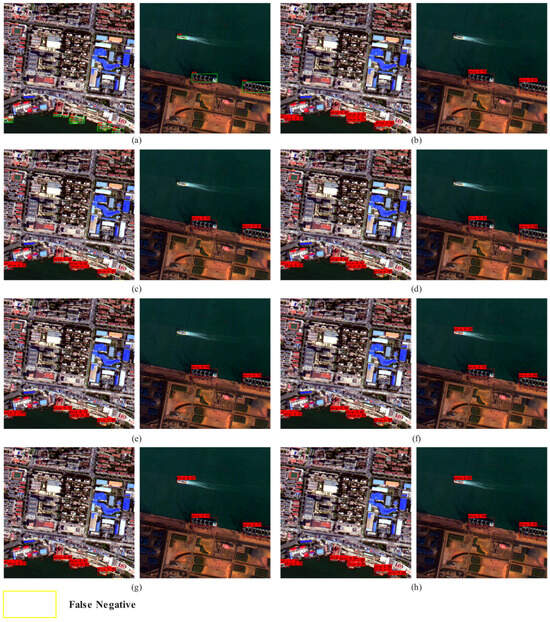

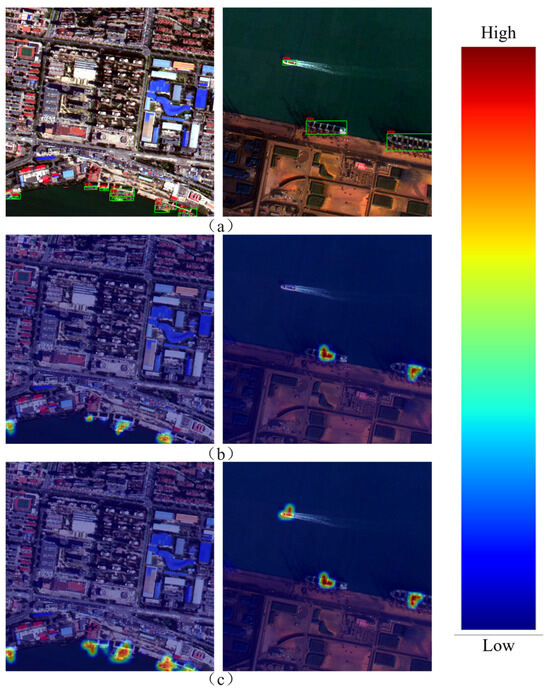

In addition, to illustrate more intuitively how each module improves model performance, a visual analysis is conducted. In Figure 8, (a) shows the ground-truth labels and (b)–(h) show the detection results after adding different modules; red boxes mark detections and yellow boxes mark missed detections. The figure shows that when key modules are missing, the model is prone to incomplete detection boxes and missed detections in complex backgrounds or dense ship areas. As the improved modules are introduced, the detection results move closer to the ground-truth annotation, cover ship targets across scales and complex environments more completely, and contain markedly fewer missed detections. Figure 9 presents heatmap visualizations for ES-YOLO and the baseline model. High-activation regions correspond to ship bodies and boundary structures, indicating strong feature responses, whereas low-activation regions mainly appear in sea clutter and non-target areas. This visualization suggests that the designed modules not only enhance the representation of ship-related features but also suppress background responses, thereby improving detection robustness in complex maritime scenes.

Figure 8.

Results of ablation experiments. (a) Label; (b) +EACSA; (c) +LSCD; (d) +Multi; (e) +EACSA + LSCD; (f) +EACSA + Multi; (g) +LSCD + Multi; (h) ES-YOLO.

Figure 9.

Heat map visualization. (a) Original image; (b) feature map of the original image; (c) the feature map after ES-YOLO processing.

4.2. Comparative Experiments

To demonstrate the effectiveness of the ES-YOLO method, it is compared with four other object detection algorithms: Faster R-CNN, RetinaNet, YOLOv5, and YOLOv8. The detection performance on the TJShip dataset is shown in Table 9.

Table 9.

Performance comparison of different methods.

As shown in Table 9, the proposed model shows clear advantages in all performance metrics. The precision, recall, mAP, and F1 of ES-YOLO reach 94.16%, 73.89%, 84.92%, and 82%, respectively, the highest ship recognition accuracy among the compared methods. Faster R-CNN has the lowest recognition accuracy, likely because it is a two-stage detection method with a complex structure and slow inference speed, which is not conducive to efficient detection of multi-scale ships against complex backgrounds. Compared with YOLOv5 and YOLOv8, which achieve relatively high accuracy, ES-YOLO improves accuracy by 1.42% and recall by 7.04%. Because the feature fusion and context modeling capabilities of YOLOv5 and YOLOv8 are limited, their detection performance on small and densely packed ships in complex port scenes remains insufficient. ES-YOLO improves feature extraction through EACSA and significantly improves the recall of small ships through the multi-scale structure. Although RetinaNet detects ships with high accuracy, its recall is only 65.92%, meaning that roughly one-third of real ships are not detected.
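As a quick consistency check on the reported metrics, F1 can be recomputed as the harmonic mean of precision and recall, and the share of missed ships follows directly from recall. The sketch below uses only the precision and recall values quoted above; the helper names `f1_score` and `miss_rate` are hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (inputs and output share units)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def miss_rate(recall_percent):
    """Share of ground-truth targets that are not detected, in percent."""
    return 100.0 - recall_percent


# Values quoted in the text for ES-YOLO (precision, recall) and RetinaNet (recall).
es_yolo_f1 = f1_score(94.16, 73.89)
retinanet_miss = miss_rate(65.92)

print(f"ES-YOLO F1 recomputed from P and R: {es_yolo_f1:.2f}%")
print(f"RetinaNet share of missed ships: {retinanet_miss:.2f}%")
```

The recomputed F1 is about 82.8%, close to the reported 82%, and a recall of 65.92% corresponds to about 34% of real ships being missed.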

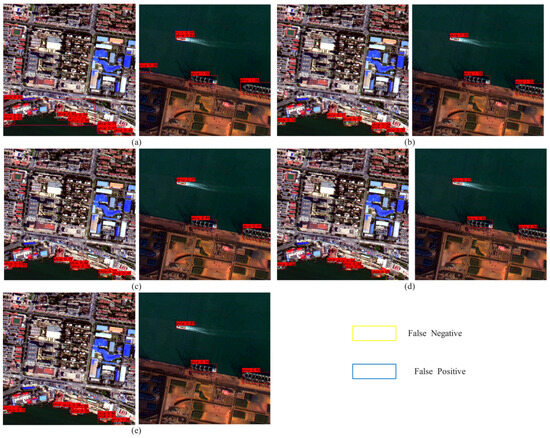

To compare the detection performance of the algorithms visually, a visual analysis is carried out, and the results are shown in Figure 10. Figure 10a–e correspond to the detection results of Faster R-CNN, RetinaNet, YOLOv5, YOLOv8, and ES-YOLO, respectively. Figure 10a shows that Faster R-CNN produces a large number of false and missed detections, which further suggests that the complex two-stage algorithm is poorly suited to ship detection in complex scenes. Figure 10b shows that RetinaNet achieves good detection accuracy on ships with no background occlusion and a relatively complete hull, but still misses many ships. YOLOv5 and YOLOv8 achieve a certain balance between detection accuracy and recall, while ES-YOLO still accurately identifies multi-scale ship targets under complex backgrounds and improves both the detection of small ships and the overall detection accuracy.

Figure 10.

Comparison of experimental results. (a) Faster R-CNN; (b) RetinaNet; (c) YOLOv5; (d) YOLOv8; (e) ES-YOLO.

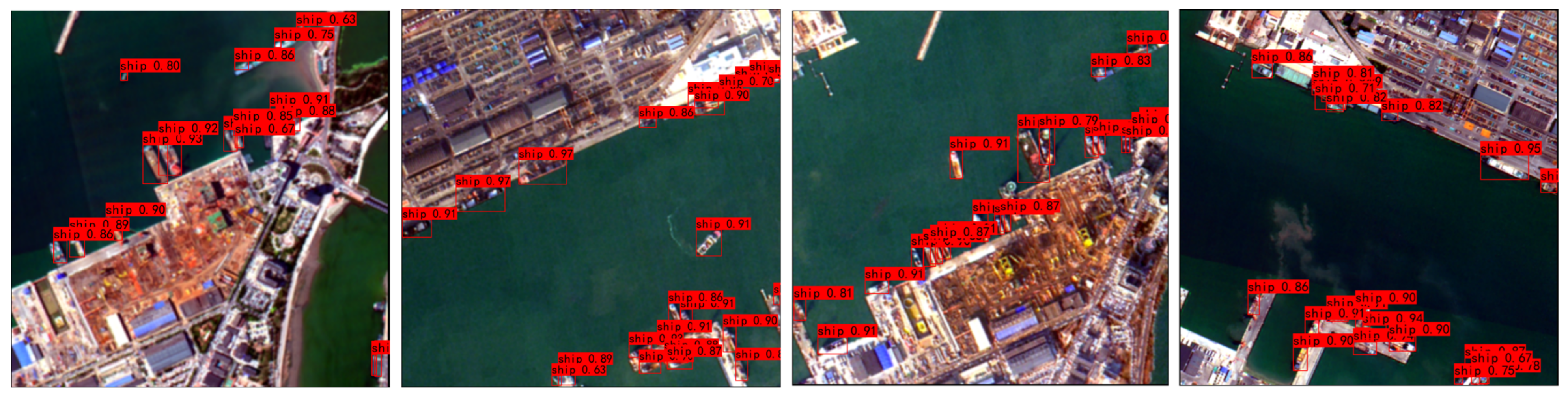

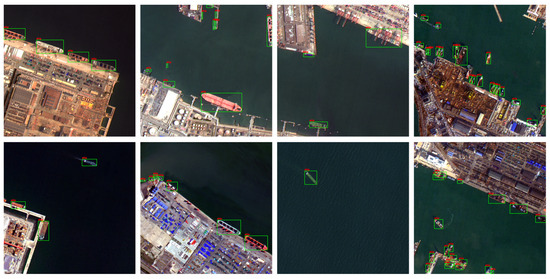

In addition to the quantitative comparison, we further verify the robustness of ES-YOLO under challenging maritime scenarios. Although no dedicated quantitative subset is provided for extreme-density or weather-specific evaluation, we show in Figure 11 that the proposed method remains robust under dense berth scenes, wake interference, and low-contrast illumination conditions.

Figure 11.

Results under dense conditions.

These visual examples provide complementary evidence that ES-YOLO maintains consistent detection behavior in real-world degraded environments.

4.3. Generalization Experiments

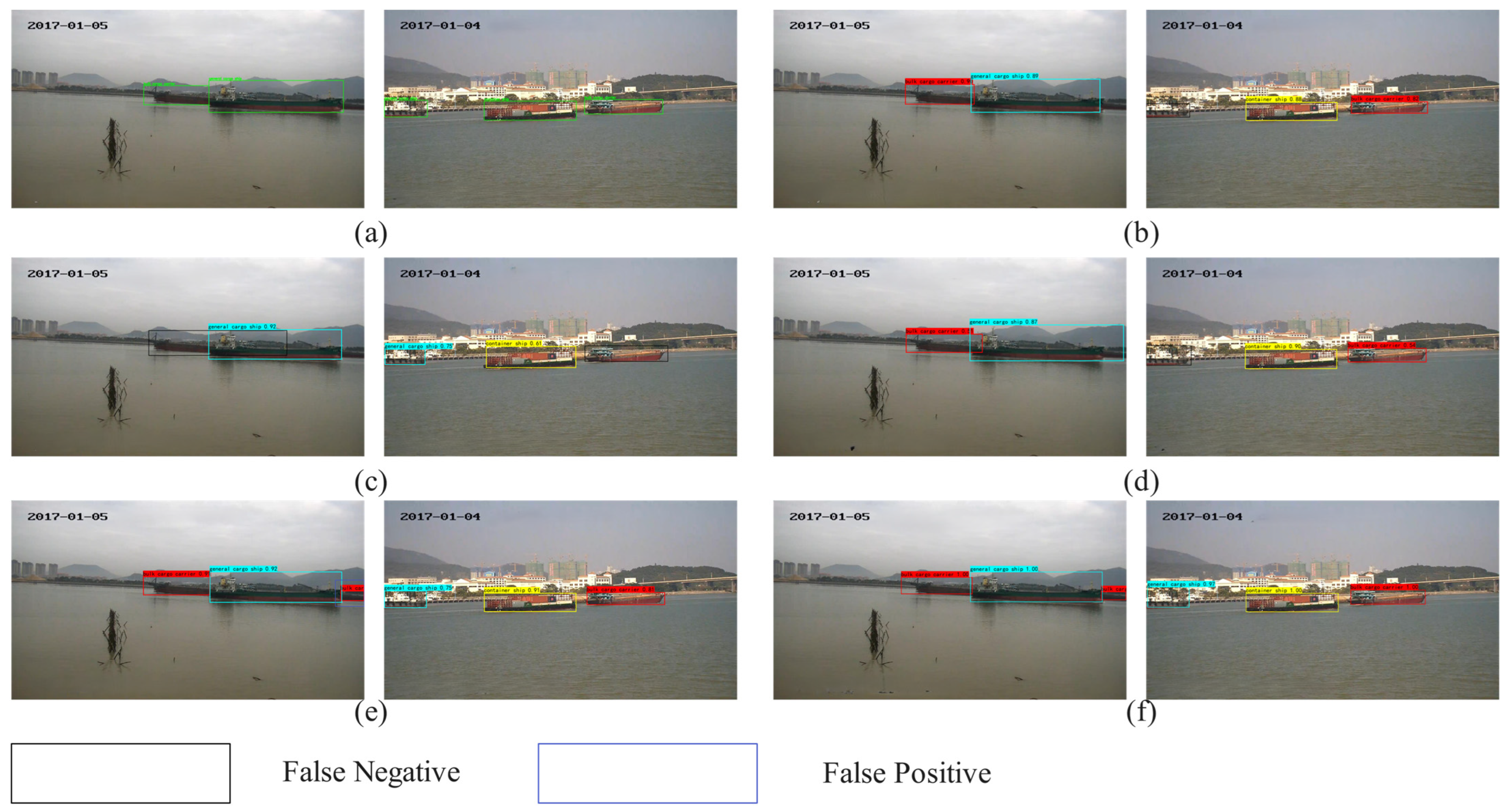

According to the quantitative results in Table 10 and the mAP comparison shown in Figure 12, clear performance differences can be observed among various detectors in port-scene ship detection. Overall, one-stage detectors achieve high accuracy while maintaining real-time inference capabilities. YOLOv8 and ES-YOLO exhibit particularly strong performance, achieving near-saturated accuracy across almost all categories, with mAP values of 97.50% and 97.83%, respectively. Notably, ES-YOLO achieves nearly perfect accuracy for bulk cargo and ore carriers, indicating that its enhanced feature representation is highly effective at capturing large ship structures while suppressing ocean-surface background interference.

Table 10.

Performance comparison of different methods on SeaShips.

Figure 12.

Generalized experimental results. (a) Label; (b) Faster R-CNN; (c) RetinaNet; (d) YOLOv5; (e) YOLOv8; (f) ES-YOLO.

In comparison, Faster R-CNN demonstrates stable performance, but its accuracy decreases for small-scale categories such as fishing boats and passenger ships. This suggests that the traditional two-stage framework still has limitations in localizing fine-grained targets under complex port backgrounds. YOLOv5 shows balanced performance overall, but its accuracy remains lower than the more advanced YOLOv8 family.

A prominent observation is the significant performance drop of RetinaNet in the ore carrier category, where the accuracy falls to only 0.22, resulting in a relatively low overall mAP of 74.66%. This indicates that RetinaNet’s feature pyramid struggles with large-scale variations and elongated ship structures commonly found in port environments.

In summary, the experimental results demonstrate that advanced one-stage detectors such as ES-YOLO outperform both traditional two-stage models and earlier one-stage approaches in port-scene ship detection. Their robustness to multi-scale targets, densely berthed ship clusters, and visually complex maritime backgrounds makes them more suitable for practical deployment in real-world port monitoring systems.

5. Discussion

In order to solve the problems of ship detection in optical remote sensing images, such as the difficulty of small target detection, strong complex background interference, and significant scale change, an improved single-stage detection model named ES-YOLO is proposed. The model uses YOLOv7 as the basic framework and effectively improves the detection accuracy and robustness in complex ocean and port scenes through structure optimization and feature enhancement mechanism. Specifically, the edge-aware Channel-Spatial Attention Module (EACSA) is introduced to pay attention to both the edge details and semantic feature channels of the target in the feature extraction process, which significantly enhances the feature expression ability of the model for small ship targets. A lightweight spatial–channel decoupled down-sampling module (LSCD) is designed to reduce the computational complexity and realize the efficient fusion of multi-level features, which improves the detection efficiency and information transmission ability of the model. In addition, a hierarchical multi-scale structure is used to fully exploit the correlation and complementarity between features at different scales, so as to enhance the adaptability of the model to scale changes and complex backgrounds.

Experimental results on the TJShip dataset show that the proposed ES-YOLO model is superior to mainstream detection algorithms on multiple evaluation metrics. Compared with the original YOLOv7 model, ES-YOLO improves mAP by about 1.75%, precision by about 3.06%, and recall by about 3.89%. Compared with the classical detection networks Faster R-CNN, RetinaNet, and YOLOv5, the mAP of ES-YOLO is higher by 46.87%, 8.14%, and 1.85%, respectively, which indicates that ES-YOLO has better detection performance and stronger generalization ability in complex port, dense occlusion, and strong reflection scenes. These results support the practicability and robustness of the proposed method under complex background conditions.

By introducing feature enhancement and a multi-scale fusion mechanism, the proposed ES-YOLO model significantly improves the accuracy and stability of ship target detection while maintaining a high detection speed, providing an efficient and reliable technical approach for small target detection in high-resolution remote sensing images. However, there is still room for improvement in the detection ability of the model in extremely dense, severely occluded, and multi-class mixed scenes. Robustness under weather degradation and severe occlusion is an important future direction. A dedicated benchmark containing stratified weather conditions and density-level annotations will be integrated in future work to further validate ES-YOLO.

Author Contributions

Conceptualization, L.C. and J.X.; methodology, L.C.; validation, L.C., Z.X. and T.F.; formal analysis, L.C.; investigation, J.X.; resources, X.T.; data curation, T.F.; writing—original draft preparation, L.C.; writing—review and editing, T.F.; visualization, L.C.; supervision, X.T.; project administration, X.T.; funding acquisition, X.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Typical Ground Object Target Optical Characteristics Library for the Common Application Support Platform Project of the National Civil Space Infrastructure “13th Five Year Plan” Land Observation Satellite, grant number YG202102H, and by the Research Fund Project of North China Institute of Aerospace Engineering, grant number BKY202134.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

For the private TJShip dataset, requests for access can be directed to caolixiang1@stumail.nciae.edu.cn.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).