AI-Driven Motion Capture Data Recovery: A Comprehensive Review and Future Outlook

Abstract

1. Introduction

2. Basic Concepts of Motion Capture Systems

3. Survey Methodology

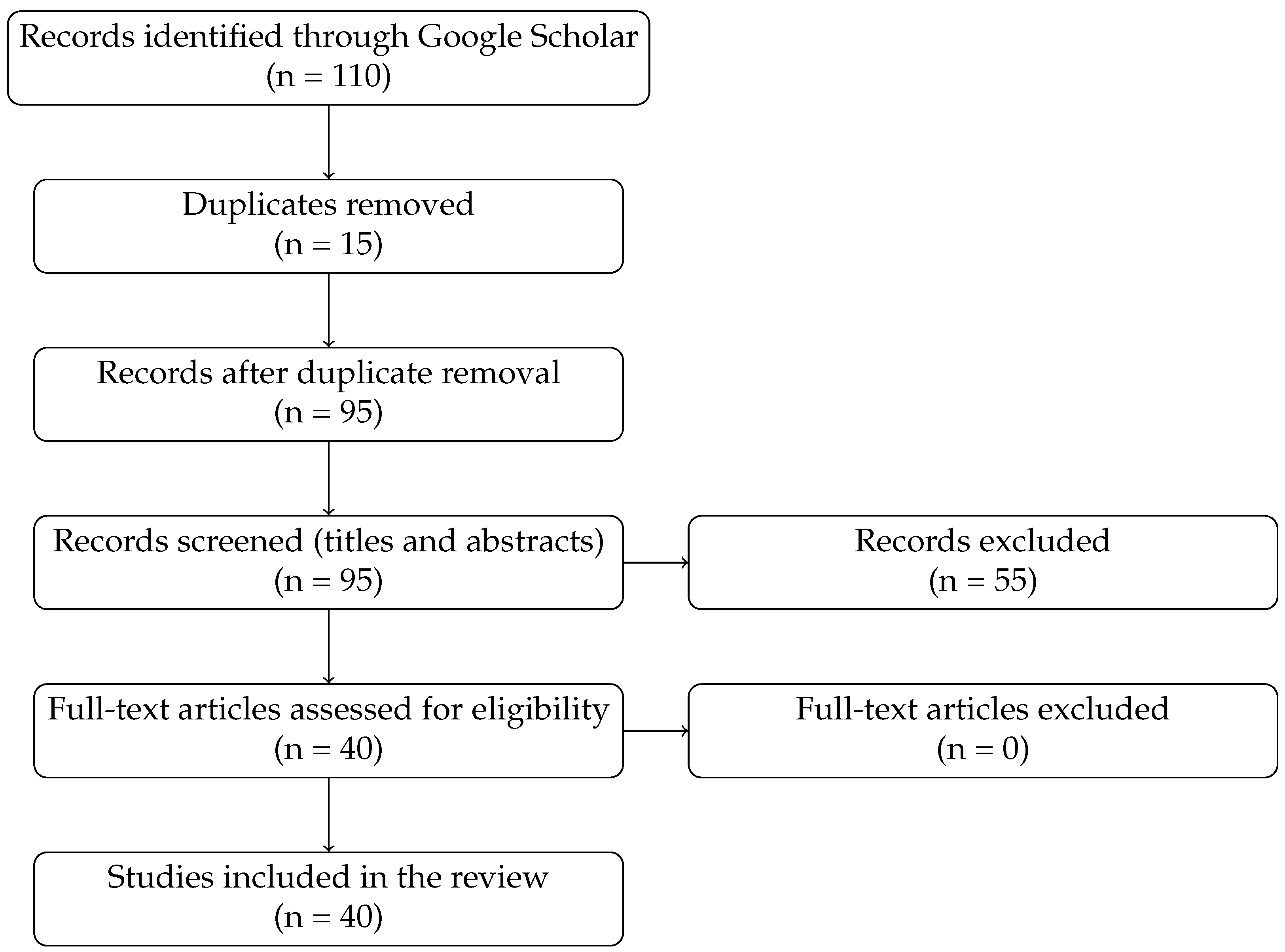

3.1. Literature Search Process

3.2. Inclusion and Exclusion Criteria

- Inclusion Criteria: Studies published between 2016 and 2024 in peer-reviewed journals or conference proceedings were considered. To be included, a study had to address motion capture data recovery, reconstruction, or gap-filling, with an emphasis on deep learning methods or traditional computational techniques.

- Exclusion Criteria: Studies that focused exclusively on synthetic data without practical application, research centred on generating motion capture data from other modalities (e.g., text or images), and techniques aimed solely at style transfer or human activity recognition were excluded. These topics were considered outside the scope of MoCap data reconstruction.
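
The criteria above can be read as a simple screening predicate. The following is a minimal sketch, not the authors' actual screening tool: the `Study` record, its field names, and the topic tags are hypothetical and chosen only to mirror the wording of the two criteria.

```python
from dataclasses import dataclass


@dataclass
class Study:
    # Hypothetical record layout; the review does not specify one.
    title: str
    year: int
    peer_reviewed: bool
    topics: set
    synthetic_only: bool = False


# Hypothetical topic tags mirroring the inclusion and exclusion wording.
RECOVERY_TOPICS = {"recovery", "reconstruction", "gap-filling"}
OUT_OF_SCOPE = {"cross-modal generation", "style transfer", "activity recognition"}


def is_included(study: Study) -> bool:
    """Return True when a study passes both sets of criteria."""
    # Inclusion: published 2016-2024 in a peer-reviewed venue.
    if not (2016 <= study.year <= 2024 and study.peer_reviewed):
        return False
    # Exclusion: work concerned solely with out-of-scope topics.
    if study.topics and study.topics <= OUT_OF_SCOPE:
        return False
    # Exclusion: synthetic-only data without practical application.
    if study.synthetic_only:
        return False
    # Inclusion: must address recovery, reconstruction, or gap-filling.
    return bool(study.topics & RECOVERY_TOPICS)
```

A study tagged with both a recovery topic and an out-of-scope topic passes this sketch, since the exclusion wording targets work aimed solely at the out-of-scope topics.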

3.3. Data Extraction and Analysis

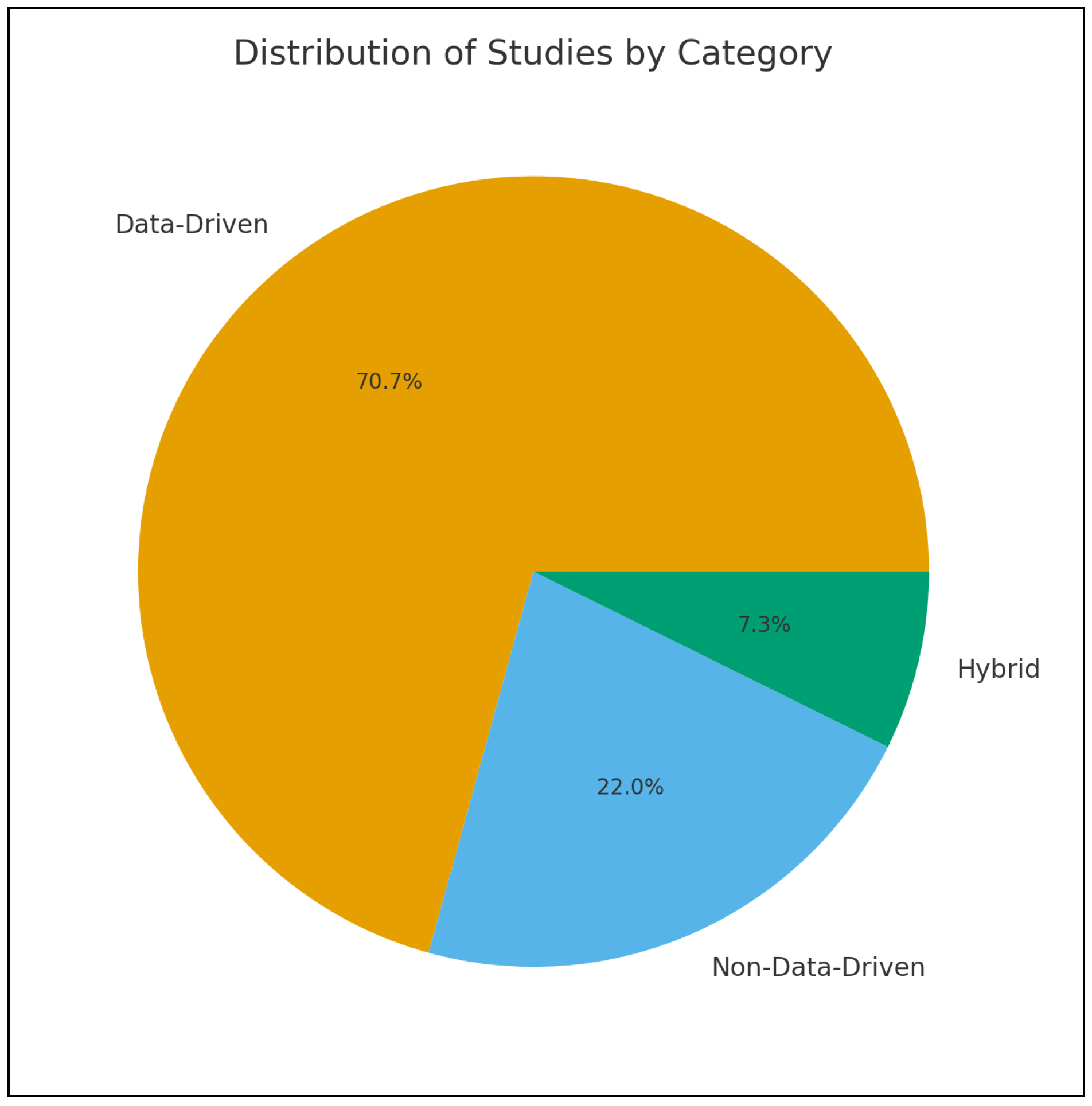

4. Taxonomy of Motion Data Recovery Techniques

4.1. Non-Data-Driven Methods

4.2. Data-Driven Methods

4.2.1. Neural Network-Based Models

4.2.2. Traditional Machine Learning Approaches

4.3. Hybrid Approaches

5. Comprehensive Analysis of Motion Recovery Techniques: Trends and Future Directions

5.1. Techniques Among Purposes

5.2. Datasets

5.3. Types of Human Movement Representation

5.4. Evaluation Methods

5.5. The Most Effective Techniques in the Human Motion Recovery Field

5.6. Analyzing Citation Patterns

5.7. Limitations and Future Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zhou, L.; Lannan, N.; Fan, G. A review of depth-based human motion enhancement: Past and present. IEEE J. Biomed. Health Inform. 2024, 28, 633–644.

2. Shi, Z.; Peng, S.; Xu, Y.; Geiger, A.; Liao, Y.; Shen, Y. Deep generative models on 3D representations: A survey. arXiv 2022, arXiv:2210.15663.

3. Zhang, J.; Peng, J.; Lv, N. Spatial-Temporal Transformer Network for Human Mocap Data Recovery. In Proceedings of the 2023 IEEE International Conference on Image Processing (ICIP), Kuala Lumpur, Malaysia, 8–11 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 2305–2309.

4. Lyu, K.; Chen, H.; Liu, Z.; Zhang, B.; Wang, R. 3D human motion prediction: A survey. Neurocomputing 2022, 489, 345–365.

5. Martini, E.; Calanca, A.; Bombieri, N. Denoising and completion filters for human motion software: A survey with code. Comput. Sci. Rev. 2025, 58, 100780.

6. Akber, S.M.A.; Kazmi, S.N.; Mohsin, S.M.; Szczęsna, A. Deep learning-based motion style transfer tools, techniques and future challenges. Sensors 2023, 23, 2597.

7. Xue, H.; Luo, X.; Hu, Z.; Zhang, X.; Xiang, X.; Dai, Y.; Liu, J.; Zhang, Z.; Li, M.; Yang, J.; et al. Human Motion Video Generation: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 10709–10730.

8. Rudenko, A.; Palmieri, L.; Herman, M.; Kitani, K.M.; Gavrila, D.M.; Arras, K.O. Human motion trajectory prediction: A survey. Int. J. Robot. Res. 2020, 39, 895–935.

9. Suzuki, M.; Matsuo, Y. A survey of multimodal deep generative models. Adv. Robot. 2022, 36, 261–278.

10. Ye, Z.; Wu, H.; Jia, J. Human motion modeling with deep learning: A survey. AI Open 2022, 3, 35–39.

11. Loi, I.; Zacharaki, E.I.; Moustakas, K. Machine learning approaches for 3D motion synthesis and musculoskeletal dynamics estimation: A Survey. IEEE Trans. Vis. Comput. Graph. 2024, 30, 5810–5829.

12. Ceseracciu, E.; Sawacha, Z.; Cobelli, C. Comparison of markerless and marker-based motion capture technologies through simultaneous data collection during gait: Proof of concept. PLoS ONE 2014, 9, e87640.

13. Sharma, S.; Verma, S.; Kumar, M.; Sharma, L. Use of Motion Capture in 3D Animation: Motion Capture Systems, Challenges, and Recent Trends. In Proceedings of the 2019 International Conference on Machine Learning, Big Data, Cloud and Parallel Computing (COMITCon), Faridabad, India, 14–16 February 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 289–294.

14. Scataglini, S.; Abts, E.; Van Bocxlaer, C.; Van den Bussche, M.; Meletani, S.; Truijen, S. Accuracy, Validity, and Reliability of Markerless Camera-Based 3D Motion Capture Systems Versus Marker-Based 3D Motion Capture Systems in Gait Analysis: A Systematic Review and Meta-Analysis. Sensors 2024, 24, 3686.

15. Menolotto, M.; Komaris, D.S.; Tedesco, S.; O’Flynn, B.; Walsh, M. Motion capture technology in industrial applications: A systematic review. Sensors 2020, 20, 5687.

16. Wade, L.; Needham, L.; McGuigan, P.; Bilzon, J. Applications and limitations of current markerless motion capture methods for clinical gait biomechanics. PeerJ 2022, 10, e12995.

17. Li, S.; Zhou, Y.; Zhu, H.; Xie, W.; Zhao, Y.; Liu, X. Bidirectional recurrent autoencoder for 3D skeleton motion data refinement. Comput. Graph. 2019, 81, 92–103.

18. Scott, B.; Seyres, M.; Philp, F.; Chadwick, E.K.; Blana, D. Healthcare applications of single camera markerless motion capture: A scoping review. PeerJ 2022, 10, e13517.

19. Albanis, G.; Zioulis, N.; Thermos, S.; Chatzitofis, A.; Kolomvatsos, K. Noise-in, Bias-out: Balanced and Real-time MoCap Solving. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 4237–4247.

20. Hou, J.; Bian, Z.P.; Chau, L.P.; Magnenat-Thalmann, N.; He, Y. Restoring corrupted motion capture data via jointly low-rank matrix completion. In Proceedings of the 2014 IEEE International Conference on Multimedia and Expo (ICME), Chengdu, China, 14–18 July 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1–6.

21. Lannan, N.; Zhou, L.; Fan, G.; Hausselle, J. Human Motion Enhancement Using Nonlinear Kalman Filter Assisted Convolutional Autoencoders. In Proceedings of the 2020 IEEE 20th International Conference on Bioinformatics and Bioengineering (BIBE), Cincinnati, OH, USA, 26–28 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1008–1015.

22. Holden, D. Robust Solving of Optical Motion Capture Data by Denoising. ACM Trans. Graph. 2018, 37, 89.

23. Liao, Y.; Vakanski, A.; Xian, M.; Paul, D.; Baker, R. A review of computational approaches for evaluation of rehabilitation exercises. Comput. Biol. Med. 2020, 119, 103687.

24. Camargo, J.; Ramanathan, A.; Csomay-Shanklin, N.; Young, A. Automated Gap-Filling for Marker-Based Biomechanical Motion Capture Data. Comput. Methods Biomech. Biomed. Eng. 2020, 23, 1180–1189.

25. Wang, M.; Li, K.; Wu, F.; Lai, Y.K.; Yang, J. 3-D Motion Recovery via Low Rank Matrix Analysis. In Proceedings of the 2016 Visual Communications and Image Processing (VCIP), Chengdu, China, 27–30 November 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–4.

26. Gomes, D.; Guimarães, V.; Silva, J. A Fully-Automatic Gap Filling Approach for Motion Capture Trajectories. Appl. Sci. 2021, 11, 9847.

27. Kamali, K.; Akbari, A.A.; Desrosiers, C.; Akbarzadeh, A.; Otis, M.J.D.; Ayena, J.C. Low-Rank and Sparse Recovery of Human Gait Data. Sensors 2020, 20, 4525.

28. Li, Z.; Yu, H.; Kieu, H.D.; Vuong, T.L.; Zhang, J.J. PCA-Based Robust Motion Data Recovery. IEEE Access 2020, 8, 76980–76990.

29. Tits, M.; Tilmanne, J.; Dutoit, T. Robust and Automatic Motion-Capture Data Recovery Using Soft Skeleton Constraints and Model Averaging. PLoS ONE 2018, 13, e0199744.

30. Yang, J.; Guo, X.; Li, K.; Wang, M.; Lai, Y.K.; Wu, F. Spatio-Temporal Reconstruction for 3D Motion Recovery. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 1583–1596.

31. Mall, U.; Lal, G.R.; Chaudhuri, S.; Chaudhuri, P. A deep recurrent framework for cleaning motion capture data. arXiv 2017, arXiv:1712.03380.

32. Kim, S.U.; Jang, H.; Kim, J. Human Motion Denoising Using Attention-Based Bidirectional Recurrent Neural Network. In Proceedings of the SIGGRAPH Asia 2019 Posters, New York, NY, USA, 17–20 November 2019.

33. Cui, Q.; Sun, H.; Li, Y.; Kong, Y. A Deep Bi-directional Attention Network for Human Motion Recovery. In Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI 2019), Macao, China, 10–16 August 2019; pp. 701–707.

34. Kim, S.U.; Jang, H.; Im, H.; Kim, J. Human Motion Reconstruction Using Deep Transformer Networks. Pattern Recognit. Lett. 2021, 150, 162–169.

35. Yuhai, O.; Choi, A.; Cho, Y.; Kim, H.; Mun, J.H. Deep-Learning-Based Recovery of Missing Optical Marker Trajectories in 3D Motion Capture Systems. Bioengineering 2024, 11, 560.

36. Chen, K.; Wang, Y.; Zhang, S.H.; Xu, S.Z.; Zhang, W.; Hu, S.M. Mocap-Solver: A Neural Solver for Optical Motion Capture Data. ACM Trans. Graph. 2021, 40, 84.

37. Hu, Z.; Tang, J.; Li, L.; Hou, J.; Xin, H.; Yu, X.; Bu, J. MarkerNet: A Divide-and-Conquer Solution to Motion Capture Solving from Raw Markers. Comput. Animat. Virtual Worlds 2024, 35, e2228.

38. Yin, W.; Yin, H.; Kragic, D.; Björkman, M. Graph-Based Normalizing Flow for Human Motion Generation and Reconstruction. In Proceedings of the 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), Vancouver, BC, Canada, 8–12 August 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 641–648.

39. Pan, X.; Zheng, B.; Jiang, X.; Xu, G.; Gu, X.; Li, J.; Kou, Q.; Wang, H.; Shao, T.; Zhou, K.; et al. A Locality-Based Neural Solver for Optical Motion Capture. In Proceedings of the SIGGRAPH Asia 2023 Conference Papers, Sydney, Australia, 12–15 December 2023; pp. 1–11.

40. Cui, Q.; Sun, H.; Kong, Y.; Zhang, X.; Li, Y. Efficient Human Motion Prediction Using Temporal Convolutional Generative Adversarial Network. Inf. Sci. 2021, 545, 427–447.

41. Hernandez, A.; Gall, J.; Moreno-Noguer, F. Human Motion Prediction via Spatio-Temporal Inpainting. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27–31 October 2019; pp. 7134–7143.

42. Kieu, H.D.; Yu, H.; Li, Z.; Zhang, J.J. Locally Weighted PCA Regression to Recover Missing Markers in Human Motion Data. PLoS ONE 2022, 17, e0272407.

43. Ji, L.; Liu, R.; Zhou, D.; Zhang, Q.; Wei, X. Missing Data Recovery for Human MoCap Data Based on A-LSTM and LS Constraint. In Proceedings of the 2020 IEEE 5th International Conference on Signal and Image Processing (ICSIP), Nanjing, China, 23–25 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 729–734.

44. Yasin, H.; Ghani, S.; Krüger, B. An Effective and Efficient Approach for 3D Recovery of Human Motion Capture Data. Sensors 2023, 23, 3664.

45. Raj, S.M.; George, S.N. A Fast Non-Convex Optimization Technique for Human Action Recovery from Misrepresented 3D Motion Capture Data Using Trajectory Movement and Pair-Wise Hierarchical Constraints. J. Ambient Intell. Humaniz. Comput. 2023, 14, 10779–10797.

46. Yang, J.; Shi, J.; Zhu, Y.; Li, K.; Hou, C. 3D Motion Recovery via Low-Rank Matrix Restoration with Hankel-Like Augmentation. In Proceedings of the 2020 IEEE International Conference on Multimedia and Expo (ICME), London, UK, 6–10 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6.

47. Zhu, Y. Reconstruction of Missing Markers in Motion Capture Based on Deep Learning. In Proceedings of the 2020 IEEE 3rd International Conference on Information Systems and Computer Aided Education (ICISCAE), Dalian, China, 27–29 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 346–349.

48. Yin, W. Developing Data-Driven Models for Understanding Human Motion. PhD Thesis, KTH Royal Institute of Technology, Stockholm, Sweden, 2024.

49. Alemi, O.; Pasquier, P. Machine Learning for Data-Driven Movement Generation: A Review of the State of the Art. arXiv 2019, arXiv:1903.08356.

50. Kucherenko, T.; Beskow, J.; Kjellström, H. A Neural Network Approach to Missing Marker Reconstruction in Human Motion Capture. arXiv 2018, arXiv:1803.02665.

51. Zhu, Y.; Cai, Y. Predicting Missing Markers in MoCap Data Using LSTNet. In Proceedings of the 7th International Conference on Cyber Security and Information Engineering, Brisbane, Australia, 23–25 September 2022; pp. 947–952.

52. Skurowski, P.; Pawlyta, M. Tree-Based Regression Methods for Gap Reconstruction of Motion Capture Sequences. Biomed. Signal Process. Control 2024, 88, 105641.

53. Kim, K.; Seo, S.; Han, D.; Kang, H. DAMO: A Deep Solver for Arbitrary Marker Configuration in Optical Motion Capture. ACM Trans. Graph. 2024, 44, 3.

54. Yin, W.; Yin, H.; Kragic, D.; Björkman, M. Long-Term Human Motion Generation and Reconstruction Using Graph-Based Normalizing Flow. arXiv 2021, arXiv:2103.01419.

55. Zheng, C.; Zhuang, Q.; Peng, S.J. Efficient Motion Capture Data Recovery via Relationship-Aggregated Graph Network and Temporal Pattern Reasoning. Math. Biosci. Eng. 2023, 20, 11313–11327.

56. Zhu, Y.Q.; Cai, Y.M.; Zhang, F. Motion Capture Data Denoising Based on LSTNet Autoencoder. J. Internet Technol. 2022, 23, 11–20.

57. Choi, C.; Lee, J.; Chung, H.J.; Park, J.; Park, B.; Sohn, S.; Lee, S. Directed Graph-Based Refinement of Three-Dimensional Human Motion Data Using Spatial-Temporal Information. Int. J. Precis. Eng. Manuf. Technol. 2024, 2, 33–46.

58. Zhu, Y. Refining Method of MoCap Data Based on LSTM. In Proceedings of the 2022 IEEE 2nd International Conference on Data Science and Computer Application (ICDSCA), Dalian, China, 28–30 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 740–743.

59. Zhu, Y. Denoising Method of Motion Capture Data Based on Neural Network. J. Phys. Conf. Ser. 2020, 1650, 032068.

60. Wang, X.; Mi, Y.; Zhang, X. 3D Human Pose Data Augmentation Using Generative Adversarial Networks for Robotic-Assisted Movement Quality Assessment. Front. Neurorobot. 2024, 18, 1371385.

61. Liu, J.; Liu, J.; Li, P. A Method of Human Motion Reconstruction with Sparse Joints Based on Attention Mechanism. In Proceedings of the 2023 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Istanbul, Turkey, 5–8 December 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 2647–2654.

62. Cui, Q.; Sun, H. Towards Accurate 3D Human Motion Prediction from Incomplete Observations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Online, 19–25 June 2021; pp. 4801–4810.

63. Skurowski, P.; Pawlyta, M. Gap Reconstruction in Optical Motion Capture Sequences Using Neural Networks. Sensors 2021, 21, 6115.

64. Baumann, J.; Krüger, B.; Zinke, A.; Weber, A. Data-Driven Completion of Motion Capture Data. In Proceedings of the 8th Workshop on Virtual Reality Interaction and Physical Simulation (VRIPHYS), Lyon, France, 5–6 December 2011; Eurographics Association: Eindhoven, The Netherlands, 2011; pp. 111–118.

65. Hodgins, J.; Wooten, W.L.; Brogan, D.C.; O’Brien, J.F. CMU Graphics Lab Motion Capture Database. 2015. Available online: http://mocap.cs.cmu.edu (accessed on 27 June 2024).

66. Müller, M.; Röder, T.; Clausen, M.; Eberhardt, B.; Krüger, B.; Weber, A. Documentation Mocap Database HDM05; Technical Report CG-2007-2; Institute of Computer Science II, University of Bonn: Bonn, Germany, 2007.

67. Ionescu, C.; Papava, D.; Olaru, V.; Sminchisescu, C. Human3.6M: Large Scale Datasets and Predictive Methods for 3D Human Sensing in Natural Environments. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 1325–1339.

68. Niu, Z.; Lu, K.; Xue, J.; Qin, X.; Wang, J.; Shao, L. From Method to Application: A Review of Deep 3D Human Motion Capture. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 11340–11359.

| Category | Main Advantages | Main Limitations | Good For | Representative Studies |
|---|---|---|---|---|
| Non-Data-Driven Models | Reliable and computationally efficient. Perform well on structured, low-noise data. Suited to settings where interpretability and deterministic behaviour are important. | Limited scalability to very large or highly corrupted datasets. Struggle to adapt to unstructured or extremely noisy data and are less effective in handling complex, nonlinear motion patterns. | Well-structured and low-noise motion capture datasets; applications requiring fast processing and high interpretability, such as gap filling and trajectory smoothing in controlled environments. | [24,25,26,27,28,29,30] |
| Data-Driven Models | Excel at handling noisy and complex data. Adapt well to large datasets and can model nonlinear spatial–temporal dependencies. Provide high reconstruction accuracy when sufficient training data are available. | Require extensive training data and substantial computational resources. Sensitive to overfitting, domain shift, and dataset bias, especially with limited or imbalanced data. Model behaviour can be less interpretable. | Complex and noisy motion capture datasets; marker recovery and motion prediction; animation, biomechanics, and robotics applications where high precision and flexibility are required. | [17,31,32,33,34,35,36,37,38,39,40,41] |
| Hybrid Models | Combine the adaptability of neural networks with domain-specific or biomechanical constraints. Suitable for real-time and clinical/biomechanical applications where both accuracy and physical plausibility are important. Balance precision with interpretability and robustness. | Slightly less adaptable to highly diverse or unstructured datasets than purely data-driven approaches. Model design and integration of constraints can increase implementation and tuning complexity. | Real-time motion capture applications; biomechanical analysis with physical constraints; denoising and gap filling where domain knowledge or physics-based priors must be respected. | [42,43,44] |
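
To make the non-data-driven category concrete, the sketch below fills gaps in a single marker trajectory by per-axis linear interpolation. It is a generic baseline chosen for illustration, not a method taken from any of the cited studies. The function name `fill_gaps_linear` and the convention of marking missing samples with NaN are assumptions.

```python
import numpy as np


def fill_gaps_linear(traj: np.ndarray) -> np.ndarray:
    """Fill NaN gaps in a (frames, 3) marker trajectory, one axis at a time.

    Gaps at the start or end are held at the nearest observed value,
    which is how np.interp treats points outside the known range.
    """
    filled = traj.astype(float)  # astype returns a copy; the input stays untouched
    frames = np.arange(len(filled))
    for axis in range(filled.shape[1]):
        col = filled[:, axis]  # view into `filled`, so writes go through
        missing = np.isnan(col)
        if missing.all() or not missing.any():
            continue  # nothing to anchor on, or nothing to fill
        col[missing] = np.interp(frames[missing], frames[~missing], col[~missing])
    return filled


# A one-frame gap between two observed samples is filled with their midpoint.
marker = np.array([[0.0, 0.0, 0.0],
                   [np.nan, np.nan, np.nan],
                   [2.0, 4.0, 6.0]])
print(fill_gaps_linear(marker)[1])
```

The approach is deterministic and needs no training data, which matches the advantages listed for this category, but it cannot reproduce nonlinear motion inside a long gap.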

| Techniques | Noise Removal | Gap Filling | Quality Enhancement | Studies |
|---|---|---|---|---|
| Bi-directional Attention Networks (BAN) with Bi-LSTM, Deep Denoising Feedforward Neural Networks, Spatial-Temporal Graph Motion Glow (STMG), Divide-and-Conquer Strategies | ✓ | ✓ | ✓ | [22,31,33,35,36,37,38,39,53,54,55] |
| Attention-based neural networks, Bidirectional RNNs, GANs, and DenseNet | ✓ | ✗ | ✓ | [17,32,56,57,58,59] |
| GANs, DenseNet, and SVMs | ✗ | ✗ | ✓ | [48,49,60] |
| Attention-Based Transformers, Low-Rank Matrix Restoration with Sparse Priors and Kalman Filters, Temporal Convolutional GANs (TCGAN) | ✗ | ✓ | ✓ | [3,25,26,27,28,29,30,34,40,41,43,45,46,50,61,62] |
| LSTM, GRU, FFNN, and BiLSTM, Locally Weighted PCA Regression, Regression Trees, Low-Rank Matrix Recovery, Non-Convex Optimization, kd-Tree Structures | ✗ | ✓ | ✗ | [24,42,44,51,52,63,64] |
| Graph Neural Networks combined with Temporal Transformers | ✓ | ✓ | ✗ | [47] |
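
Several gap-filling entries in the table rely on the observation that motion matrices are approximately low rank. The sketch below illustrates that idea with a basic iterated truncated-SVD imputation. It is a simplified stand-in, not the algorithm of any cited study, which add sparse priors, temporal constraints, or other refinements. The function name, the `rank` and `n_iter` parameters, and the synthetic data are assumptions.

```python
import numpy as np


def low_rank_fill(X: np.ndarray, observed: np.ndarray,
                  rank: int = 2, n_iter: int = 200) -> np.ndarray:
    """Fill missing entries of a (frames, coordinates) matrix.

    `observed` is a boolean mask, True where X holds a measured value.
    Missing entries are repeatedly replaced by a rank-`rank` SVD
    approximation, while measured entries are always kept.
    """
    # Initialise missing entries with the mean of the observed samples per column.
    counts = np.maximum(observed.sum(axis=0), 1)
    col_mean = np.where(observed, X, 0.0).sum(axis=0) / counts
    filled = np.where(observed, X, col_mean)
    for _ in range(n_iter):
        U, s, Vt = np.linalg.svd(filled, full_matrices=False)
        approx = (U[:, :rank] * s[:rank]) @ Vt[:rank]
        # Keep measured values; update only the missing entries.
        filled = np.where(observed, X, approx)
    return filled


# Synthetic rank-2 "motion" matrix with roughly 10% of entries removed.
rng = np.random.default_rng(0)
truth = rng.normal(size=(60, 2)) @ rng.normal(size=(2, 9))
observed = rng.random(truth.shape) > 0.1
corrupted = np.where(observed, truth, np.nan)
recovered = low_rank_fill(corrupted, observed, rank=2)
```

In practice the rank is unknown and the data are noisy, which is one reason the reviewed low-rank methods add regularisation rather than fixing the rank by hand.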

| Dimension | Spatial-Temporal Transformers | Attention-LSTM (ALSTM) | GNNs | PCA | GANs |
|---|---|---|---|---|---|
| Robustness | Handles long gaps, occlusions; RMSE validated (34.8%). | Effective for mid-length occlusions; MPJPE, RMSE validated (21.7%). | Excellent for occlusions, joint connectivity; JPE, JOE validated (13%). | Struggles with noisy data; RAJE validated (6.5%). | Dependent on downstream models; F1, AUC validated (17.4%). |
| Accuracy | High precision for dynamic motions; BLE, RMSE validated (34.8%). | Reliable for moderate dynamics; RMSE validated (21.7%). | Accurate for spatial-temporal tasks; JPE, BLE validated (13%). | Limited for complex patterns; RAJE, MSE validated (6.5%). | High fidelity in augmented datasets; F1, RMSE validated (17.4%). |
| Adaptability | Generalizes well to unseen data; validated on large datasets (26.1%). | Good generalization via attention; MPJPE validated (13–21.7%). | Handles heterogeneous motions; NPE, BLE validated (13%). | Poor adaptability to new types; RAJE, MSE validated (6.5%). | Augments specific data scenarios; AUC validated (17.4%). |
| Computational Efficiency | High computational cost; execution time validated (13%). | Moderate cost; completion time validated (8.7%). | Graph construction adds complexity; validated with NPE (13%). | Highly efficient for small tasks; MSE validated (6.5%). | Resource-intensive training; F1, RMSE validated (17.4%). |
| Dataset Dependency | Requires large datasets; BLE, RMSE validated (26.1%). | Moderate dataset needs; MPJPE, RMSE validated (13–21.7%). | Diverse datasets required; NPE, BLE validated (13%). | Minimal dataset needs; RAJE, MSE validated (6.5%). | Effective for augmenting small datasets; AUC, F1 validated (17.4%). |
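
Two of the metrics named in the table, RMSE and MPJPE (mean per-joint position error), can be computed as in the sketch below. These are the commonly used textbook definitions. Individual studies may normalise or align poses differently, and the array layout `(frames, joints, 3)` is an assumption.

```python
import numpy as np


def rmse(pred: np.ndarray, true: np.ndarray) -> float:
    """Root-mean-square error over all coordinates."""
    return float(np.sqrt(np.mean((pred - true) ** 2)))


def mpjpe(pred: np.ndarray, true: np.ndarray) -> float:
    """Mean Euclidean distance between predicted and true joint positions.

    Both arrays are assumed to have shape (frames, joints, 3).
    """
    return float(np.mean(np.linalg.norm(pred - true, axis=-1)))


# Every joint displaced by (3, 4, 0): each joint is exactly 5 units off.
true_pose = np.zeros((2, 3, 3))
pred_pose = true_pose + np.array([3.0, 4.0, 0.0])
print(mpjpe(pred_pose, true_pose))  # 5.0
```

MPJPE averages a per-joint distance, whereas RMSE averages squared per-coordinate errors, so the two values are not directly comparable across studies.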

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Almaleh, A.; Ushaw, G.; Davison, R. AI-Driven Motion Capture Data Recovery: A Comprehensive Review and Future Outlook. Sensors 2025, 25, 7525. https://doi.org/10.3390/s25247525
