MRA-YOLOv8: A Transmission Line Fault Detection Algorithm Integrating Multi-Scale Feature Fusion

Abstract

1. Introduction

- (1)

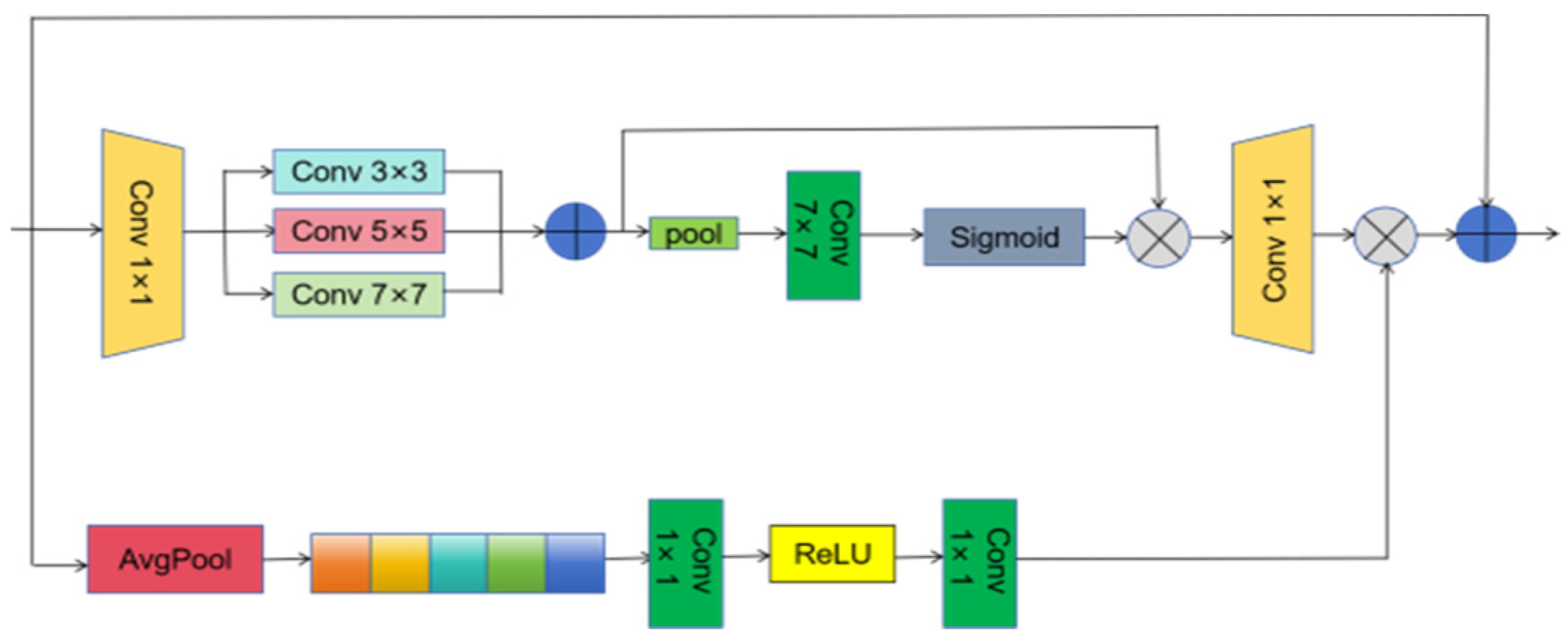

- To address the issue of weakened features for small targets against complex backgrounds, a Multi-Scale Attention Aggregation (MSAA) module is designed. In the spatial refinement path, multi-scale fusion helps retain fine-grained features of small objects, while the channel aggregation path enhances the model’s focus on channel information that is most relevant to the target. Together, these two mechanisms improve background suppression and effectively reduce interference from cluttered environments.

- (2) To address the performance degradation caused by partial object occlusion, a C2f-Restormer module is introduced. This module captures inter-feature relationships across different subspaces, computes attention weights to assess element importance for adaptive feature aggregation, and models long-range dependencies. Consequently, it significantly enhances the overall feature representation capacity.

- (3) This study proposes ATFL (Adaptive Task-focused Focal Loss), a loss function that adaptively adjusts training weights based on task-specific requirements. It is intended to alleviate class imbalance and increase the model’s focus on hard-to-classify examples, thereby improving convergence and fault detection performance.
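The idea in contribution (3) of giving more weight to hard-to-classify examples can be illustrated with the standard binary focal loss. This is a minimal sketch, not the ATFL formulation itself: the function name `focal_loss` and the default values of `gamma` and `alpha` are assumptions chosen for illustration, and the adaptive adjustment described above is not modeled.

```python
import math


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Standard binary focal loss for a single prediction.

    p: predicted probability of the positive class, 0 < p < 1
    y: ground-truth label, 1 (positive) or 0 (negative)
    gamma, alpha: illustrative defaults, not values taken from the paper
    """
    # p_t is the probability the model assigns to the true class.
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t) ** gamma shrinks the loss of well-classified examples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


easy = focal_loss(0.95, 1)  # confident, correct prediction
hard = focal_loss(0.30, 1)  # poorly classified positive
print(f"easy={easy:.6f} hard={hard:.6f}")
```

With `gamma=0` and `alpha=0.5` the expression reduces to half the usual cross-entropy, which makes the effect of the modulating factor easy to check in isolation.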

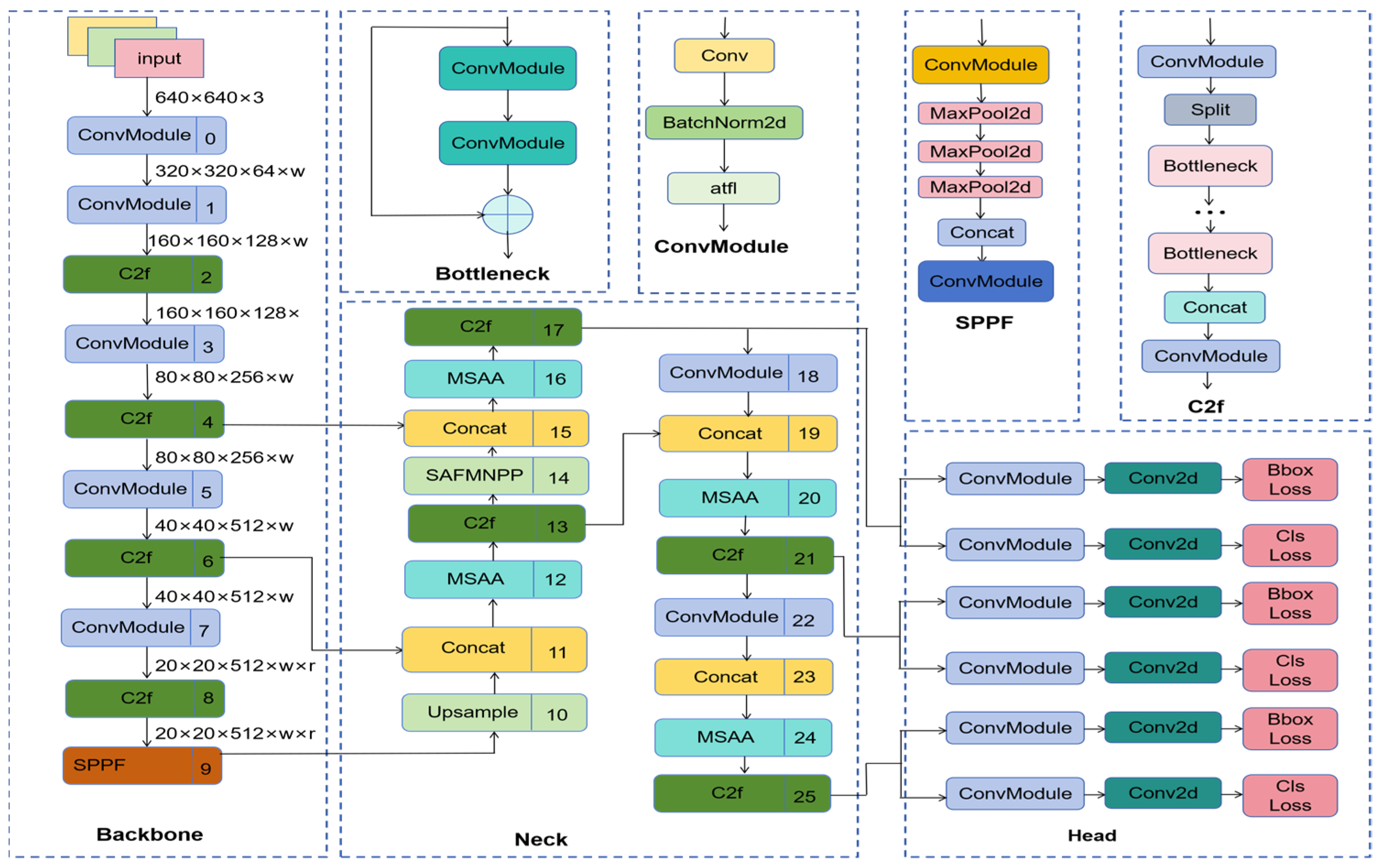
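The attention-weighting step described for the C2f-Restormer module in contribution (2) can be sketched as a channel-wise self-attention, in the spirit of the transposed attention used by Restormer. This is a simplified illustration under stated assumptions: queries, keys, and values are all taken to be the input features, and the learned projections, depthwise convolutions, normalization, and multi-head split of a real block are left out. The names `channel_attention` and `temperature` are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def channel_attention(features, temperature=1.0):
    """Channel-wise self-attention over a small feature map.

    features: list of C channels, each a flat list of H*W values.
    Returns (output, attn), where attn is a C x C weight matrix.
    Assumption: Q = K = V = features (no learned projections).
    """
    # Similarity between every pair of channels.
    scores = [
        [sum(a * b for a, b in zip(q, k)) / temperature for k in features]
        for q in features
    ]
    # Each row becomes a set of weights that sums to 1.
    attn = [softmax(row) for row in scores]
    n = len(features[0])
    # Each output channel is a weighted mix of all input channels.
    output = [
        [sum(w * v[pos] for w, v in zip(weights, features)) for pos in range(n)]
        for weights in attn
    ]
    return output, attn


feats = [
    [1.0, 0.0, 1.0, 0.0],
    [0.9, 0.1, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
]
out, attn = channel_attention(feats)
print([round(w, 3) for w in attn[0]])
```

Because the attention map is C x C rather than (H*W) x (H*W), its cost grows with the number of channels instead of the number of pixels, which is the usual motivation for this design on high-resolution images.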

2. Principle of YOLOv8 Detection Algorithm

- (1) Input: Images of varying sizes are first resized to a uniform resolution of 640 × 640 to meet the model’s input requirements. Augmentation techniques such as random cropping, rotation, and flipping increase data diversity. Unlike earlier anchor-based YOLO versions, YOLOv8 is anchor-free, so no predefined anchor boxes are required; the preprocessed images are passed directly to feature extraction and object detection.

- (2) Backbone: Serving as the core component of YOLOv8, the backbone network extracts multi-level features from the input images. By incorporating a Cross-Stage Partial (CSP) structure, it effectively reduces computational overhead while maintaining strong feature representation capability. The use of depthwise separable convolution further decreases the number of parameters and computational complexity. Hierarchical convolutional operations capture features at different scales, enabling robust detection of multi-scale objects.

- (3) Neck: YOLOv8 employs a Path Aggregation Network (PANet) as its neck module. This architecture facilitates bidirectional (top-down and bottom-up) multi-scale feature fusion, enriching feature expressiveness. By integrating contextual information and combining features from different levels, the model gains the ability to detect objects across a wide range of scales, particularly enhancing its performance on small targets.

- (4) Head: The detection head is the last stage of YOLOv8 and produces the detection results. It performs classification and bounding-box regression, predicting an object category and box coordinates for each candidate location, and the two tasks are trained jointly so that detection is learned end to end. Finally, non-maximum suppression (NMS) removes redundant detection boxes and retains the highest-confidence predictions.
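The NMS step mentioned for the head can be sketched as the classic greedy procedure below. It is a minimal illustration, not the YOLOv8 implementation: boxes are assumed to be `(x1, y1, x2, y2)` tuples, and the function names and the `iou_threshold` default are hypothetical choices for the example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
print(kept)  # [0, 2]
```

In this example the second box overlaps the first with an IoU of about 0.82, so it is suppressed, while the third box does not overlap either and is kept. In multi-class detection the procedure is typically applied per class.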

3. MRA-YOLOv8 Detection Network

3.1. Backbone Network Reconfiguration

3.1.1. Multi-Scale Attention Aggregation Module (MSAA)

3.1.2. Self-Attention Mechanism Restormer

3.1.3. Improved Bounding Box Loss Function

4. Results and Analysis

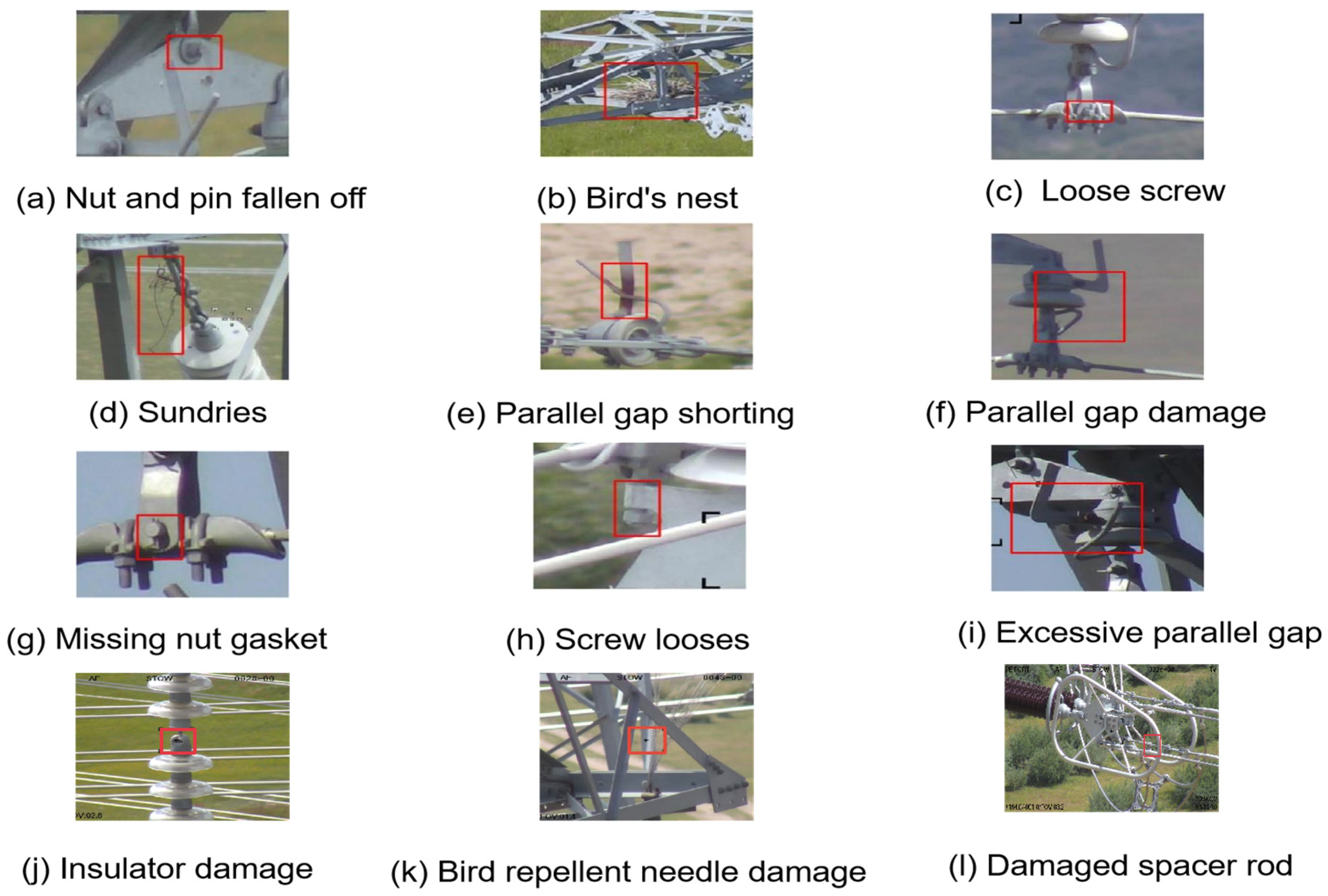

4.1. Dataset and Model Training

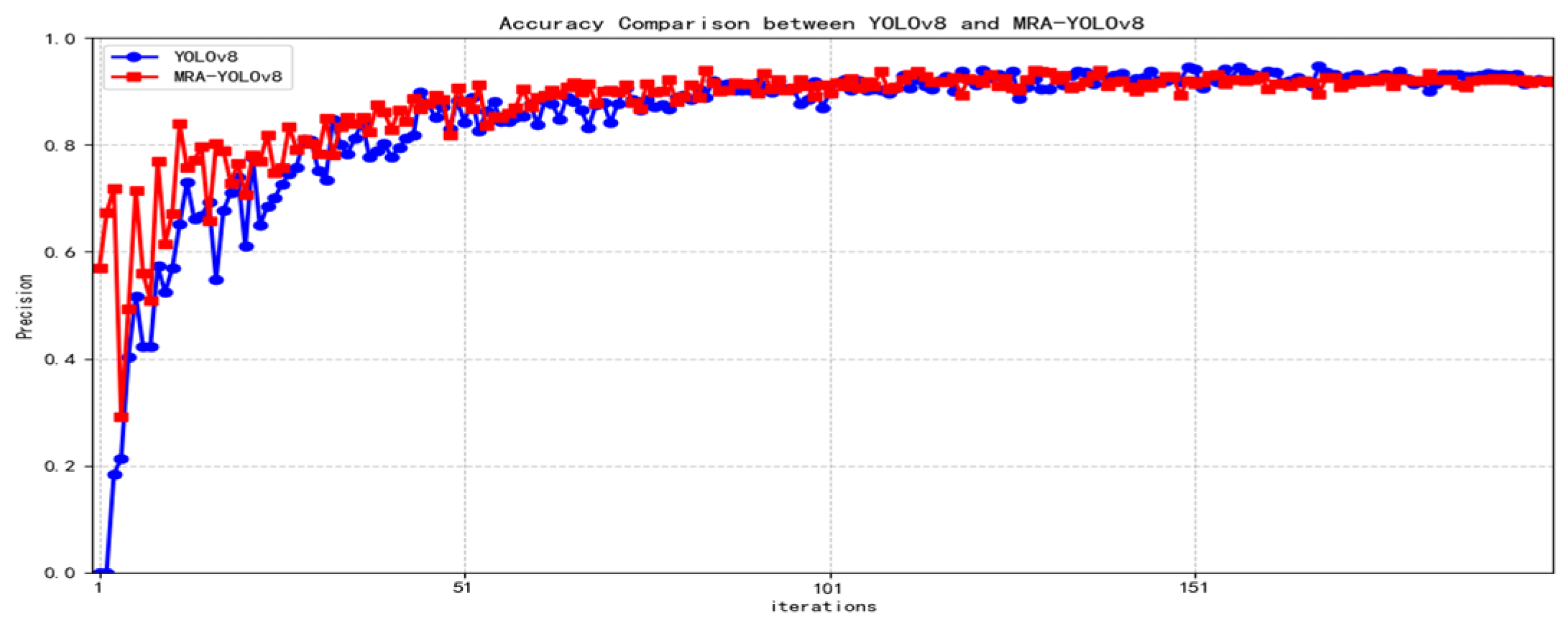

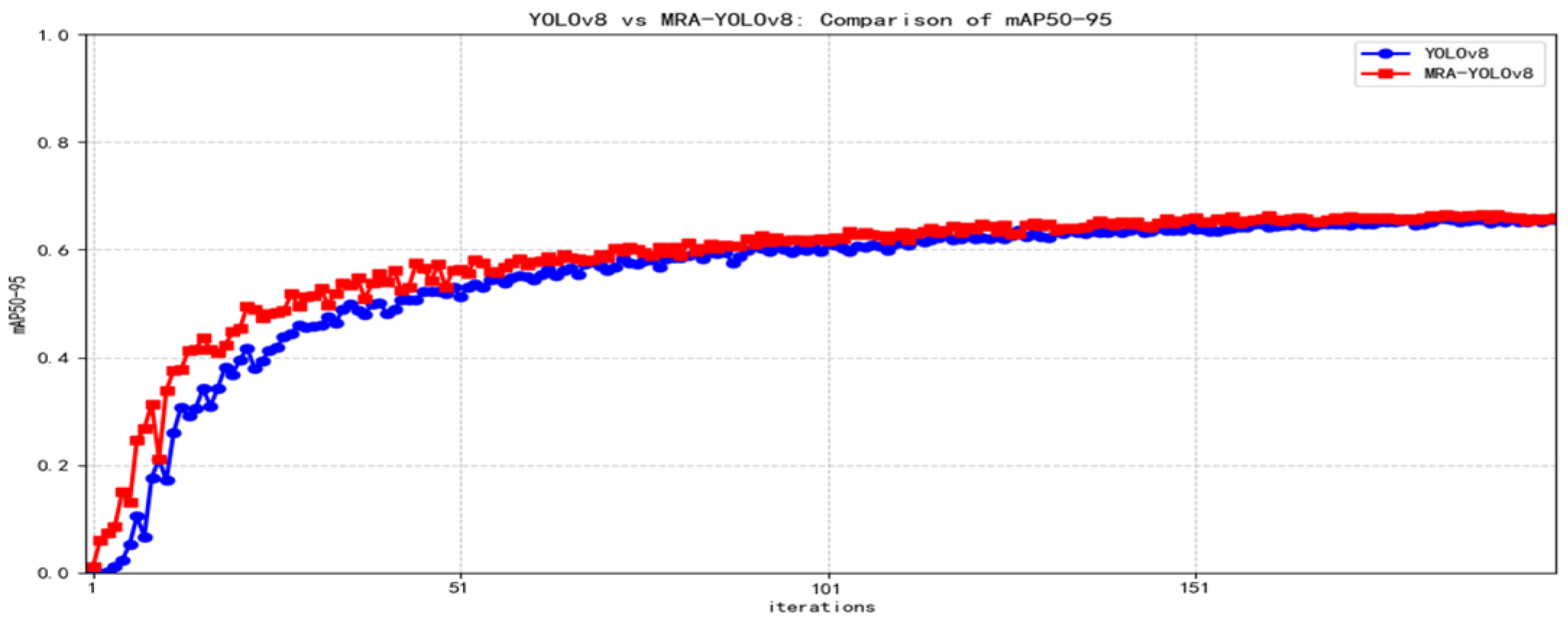

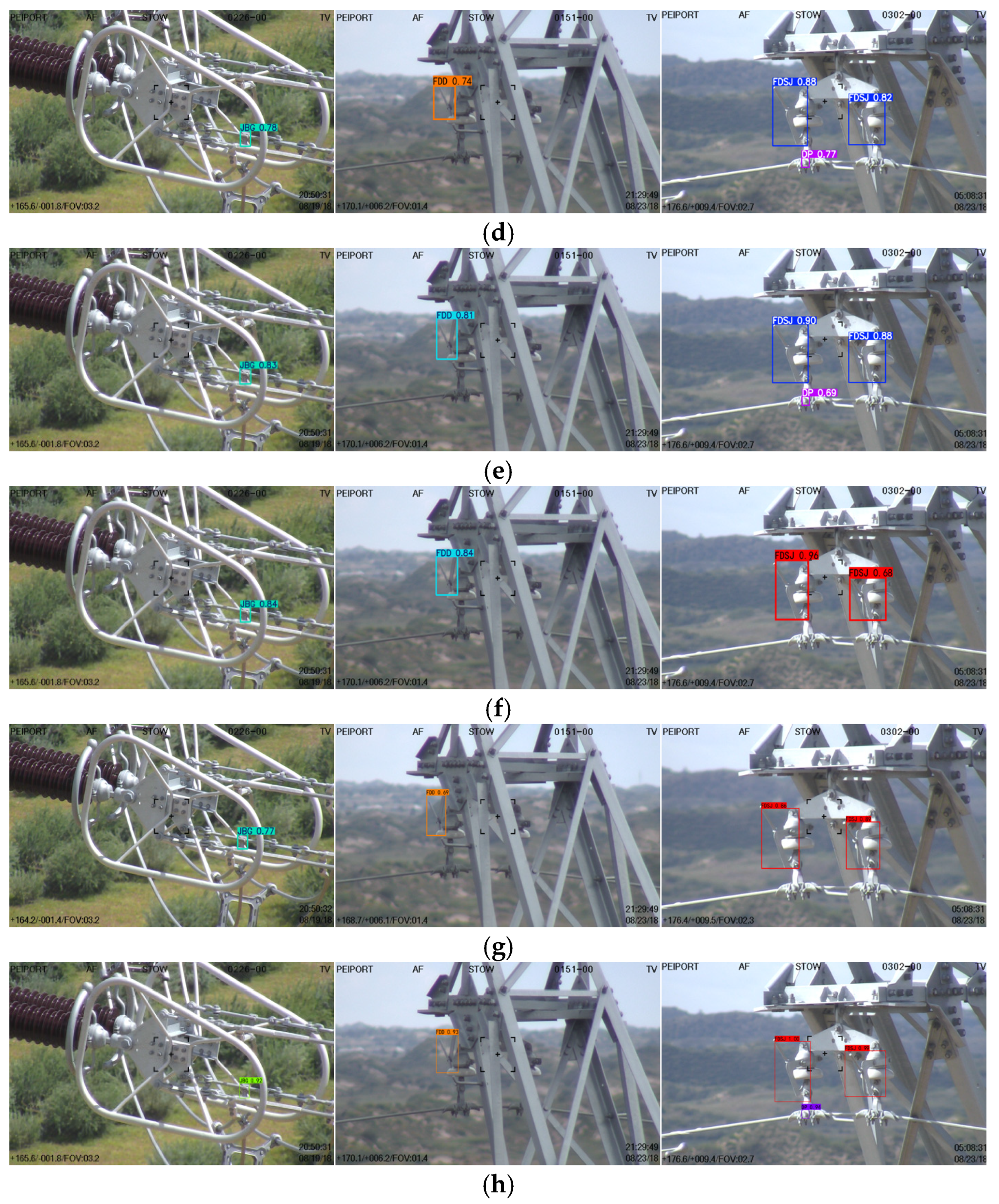

4.2. Test Results and Analysis

5. Conclusions

- (1) The C2f-Restormer self-attention module enhances global feature extraction and captures long-range dependencies in transmission line images, thereby improving the detection of small and partially occluded fault instances. This enhancement is accompanied by a moderate increase in GPU memory usage, which is considered acceptable given the current focus on accuracy.

- (2) The MSAA module facilitates multi-scale feature fusion by emphasizing informative features across different resolutions, thereby enhancing the model’s ability to detect faults of varying sizes under complex environmental conditions.

- (3) The ATFL loss dynamically adjusts training weights according to task-specific requirements, mitigating class imbalance and improving precision and recall for challenging fault instances.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Configuration | Version Information |
|---|---|
| CPU | i5-13490F |
| GPU | RTX 4060 Ti |
| Operating System | Windows 11 |
| Deep learning framework | PyTorch 2.0.1, CUDA 11.8 |

| Fault Type | Custom Name | Number of Faults |
|---|---|---|
| Damage to parallel gap | FDSJ | 152 |
| Short circuit in parallel gaps | FDD | 174 |
| Excessive parallel gaps | FDG | 230 |
| Spacer rod dislodged | JBG | 284 |
| Pin dislodged | XT | 252 |
| Screw dislodged | LST | 267 |
| Insulator dislodged | JYT | 162 |
| Power lines obstructed by debris | ZW | 178 |
| Power lines obstructed by bird nests | NC | 145 |
| Nut missing washer | DP | 263 |
| Nut loose | LSS | 260 |
| Bird deterrent spikes damaged | NS | 116 |
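The degree of class imbalance in the fault counts above can be quantified with a few lines of arithmetic. The sketch below uses only the numbers from the table; the inverse-frequency weights are a common generic heuristic shown for illustration and are an assumption, not a weighting scheme the paper reports using.

```python
# Fault counts copied from the table above (custom name -> number of faults).
counts = {
    "FDSJ": 152, "FDD": 174, "FDG": 230, "JBG": 284,
    "XT": 252, "LST": 267, "JYT": 162, "ZW": 178,
    "NC": 145, "DP": 263, "LSS": 260, "NS": 116,
}

total = sum(counts.values())
largest = max(counts, key=counts.get)
smallest = min(counts, key=counts.get)
imbalance_ratio = counts[largest] / counts[smallest]

# Generic "balanced" heuristic: total / (number of classes * class count).
# Rare classes get weights above 1, frequent classes below 1.
weights = {name: total / (len(counts) * n) for name, n in counts.items()}

print(f"total={total}, largest={largest}, smallest={smallest}")
print(f"imbalance ratio={imbalance_ratio:.2f}")
print(f"weight NS={weights['NS']:.3f}, weight JBG={weights['JBG']:.3f}")
```

The most frequent class (JBG, 284 instances) is about 2.4 times as common as the rarest (NS, 116 instances), which gives a sense of the imbalance that a loss such as ATFL is meant to compensate for.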

| C2f-Restormer | MSAA | ATFL | Precision | Recall | mAP50 | mAP50-95 |
|---|---|---|---|---|---|---|
| - | - | - | 0.902 | 0.889 | 0.910 | 0.627 |
| √ | - | - | 0.918 | 0.932 | 0.935 | 0.677 |
| - | √ | - | 0.941 | 0.881 | 0.923 | 0.641 |
| - | - | √ | 0.911 | 0.887 | 0.924 | 0.653 |
| √ | √ | - | 0.933 | 0.902 | 0.920 | 0.681 |
| √ | - | √ | 0.924 | 0.923 | 0.937 | 0.670 |
| - | √ | √ | 0.934 | 0.914 | 0.935 | 0.671 |
| √ | √ | √ | 0.925 | 0.909 | 0.93 | 0.917 |
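The ablation rows are easier to compare as differences from the baseline (first row, no modules enabled). The sketch below computes these differences for precision, recall, and mAP50 using values copied from the table; the row labels and the helper name `deltas` are introduced here for illustration only.

```python
# Baseline: first row of the ablation table (no module enabled).
baseline = {"precision": 0.902, "recall": 0.889, "mAP50": 0.910}

# Selected rows copied from the table above.
rows = {
    "C2f-Restormer only": {"precision": 0.918, "recall": 0.932, "mAP50": 0.935},
    "MSAA only": {"precision": 0.941, "recall": 0.881, "mAP50": 0.923},
    "ATFL only": {"precision": 0.911, "recall": 0.887, "mAP50": 0.924},
    "all three": {"precision": 0.925, "recall": 0.909, "mAP50": 0.93},
}


def deltas(row):
    """Absolute change of each metric relative to the baseline row."""
    return {k: round(row[k] - baseline[k], 3) for k in baseline}


for name, row in rows.items():
    print(name, deltas(row))
```

Read this way, C2f-Restormer alone contributes the largest recall gain (+0.043), MSAA alone the largest precision gain (+0.039) at a small cost in recall (-0.008), and the full combination improves all three metrics by about 0.02.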

| Algorithm | Precision | Recall | mAP50 | mAP50-95 | Time (ms) |
|---|---|---|---|---|---|
| YOLOv5 | 0.903 | 0.874 | 0.907 | 0.638 | 14.5 |
| Faster R-CNN | 0.88 | 0.843 | 0.78 | 0.552 | 28.7 |
| SSD | 0.874 | 0.825 | 0.756 | 0.54 | 13.9 |
| YOLOv8 | 0.918 | 0.892 | 0.92 | 0.655 | 11.2 |
| CenterNet | 0.895 | 0.87 | 0.81 | 0.575 | 16.1 |
| YOLOv8n | 0.866 | 0.81 | 0.746 | 0.52 | 10.1 |
| MRA-YOLOv8 | 0.925 | 0.909 | 0.93 | 0.917 | 13.7 |
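Two derived quantities help when reading the comparison table: the F1 score, which combines precision and recall, and the throughput in frames per second. The sketch below computes both from the table values, assuming that "Time (ms)" is the per-image inference time; that interpretation and the helper names are assumptions.

```python
# (precision, recall, time in ms) copied from the comparison table.
results = {
    "YOLOv5": (0.903, 0.874, 14.5),
    "Faster R-CNN": (0.88, 0.843, 28.7),
    "SSD": (0.874, 0.825, 13.9),
    "YOLOv8": (0.918, 0.892, 11.2),
    "CenterNet": (0.895, 0.87, 16.1),
    "YOLOv8n": (0.866, 0.81, 10.1),
    "MRA-YOLOv8": (0.925, 0.909, 13.7),
}


def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def fps(time_ms):
    """Frames per second, assuming time_ms is the time for one image."""
    return 1000.0 / time_ms


summary = {
    name: (round(f1(p, r), 3), round(fps(t), 1))
    for name, (p, r, t) in results.items()
}
best_f1 = max(summary, key=lambda name: summary[name][0])

for name, (score, rate) in summary.items():
    print(f"{name:13s} F1={score:.3f} FPS={rate:.1f}")
```

Under this assumption, MRA-YOLOv8 reaches the highest F1 of the listed models (about 0.917) at roughly 73 frames per second, compared with about 0.905 and 89 frames per second for the YOLOv8 row.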

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hao, S.; Li, J.; Ma, X. MRA-YOLOv8: A Transmission Line Fault Detection Algorithm Integrating Multi-Scale Feature Fusion. Sensors 2025, 25, 7508. https://doi.org/10.3390/s25247508
