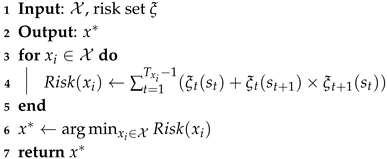

Once the agent completes a task, it receives a goal reward. Meanwhile, the agent incurs a step penalty, also called the step cost, at every step. Because the environment is shared with humans, the agent is also penalized when it collides with a human.
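A minimal sketch of this reward structure follows. It assumes the three terms are simply summed per step. The magnitudes in `RewardConfig` and all function names are hypothetical placeholders, not the values used in this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardConfig:
    # Hypothetical magnitudes for illustration only (not the values used in this study).
    goal_reward: float = 10.0
    step_cost: float = 0.1
    conflict_penalty: float = 1.0


def step_reward(reached_goal: bool, collided: bool,
                cfg: RewardConfig = RewardConfig()) -> float:
    """Reward for a single robot step.

    Every step incurs the step cost, a collision with a human adds the
    conflict penalty, and completing the task adds the goal reward.
    """
    reward = -cfg.step_cost
    if collided:
        reward -= cfg.conflict_penalty
    if reached_goal:
        reward += cfg.goal_reward
    return reward


def episode_return(events, cfg: RewardConfig = RewardConfig()) -> float:
    """Undiscounted sum of step rewards; `events` is a list of (reached_goal, collided)."""
    return sum(step_reward(goal, hit, cfg) for goal, hit in events)


# Example episode: ten steps, a conflict on step 5, goal reached on step 10.
events = [(False, False)] * 10
events[4] = (False, True)
events[9] = (True, False)
print(round(episode_return(events), 2))  # 8.0
```

Whether the step cost also applies on the goal-reaching step is a modeling choice. Here it is applied on every step.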

We evaluate the performance of the proposed method in terms of the average conflict number, conflict distribution, task success rate, and reward. In each scenario, we run 100 simulations and report the average values. The other parameters are as follows:

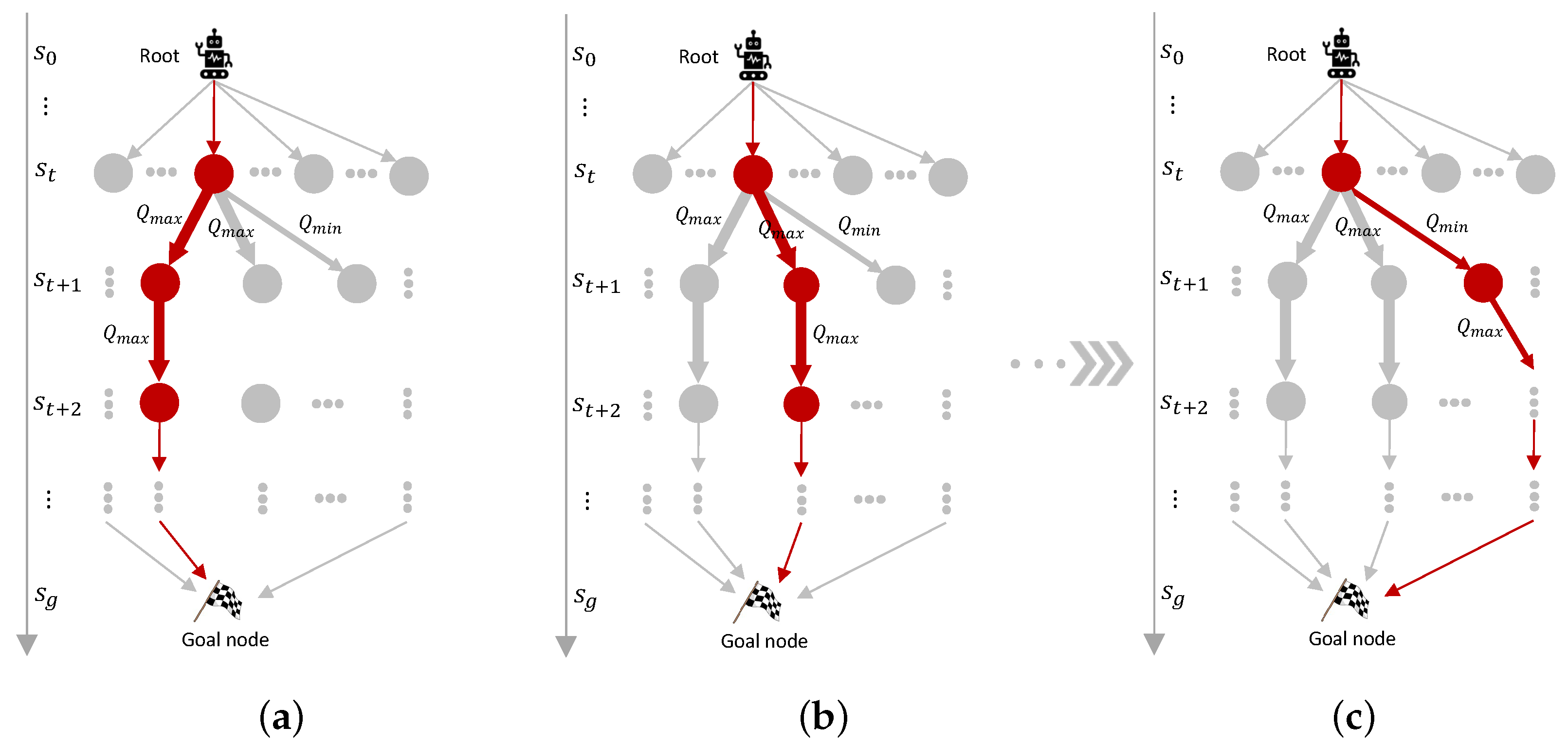

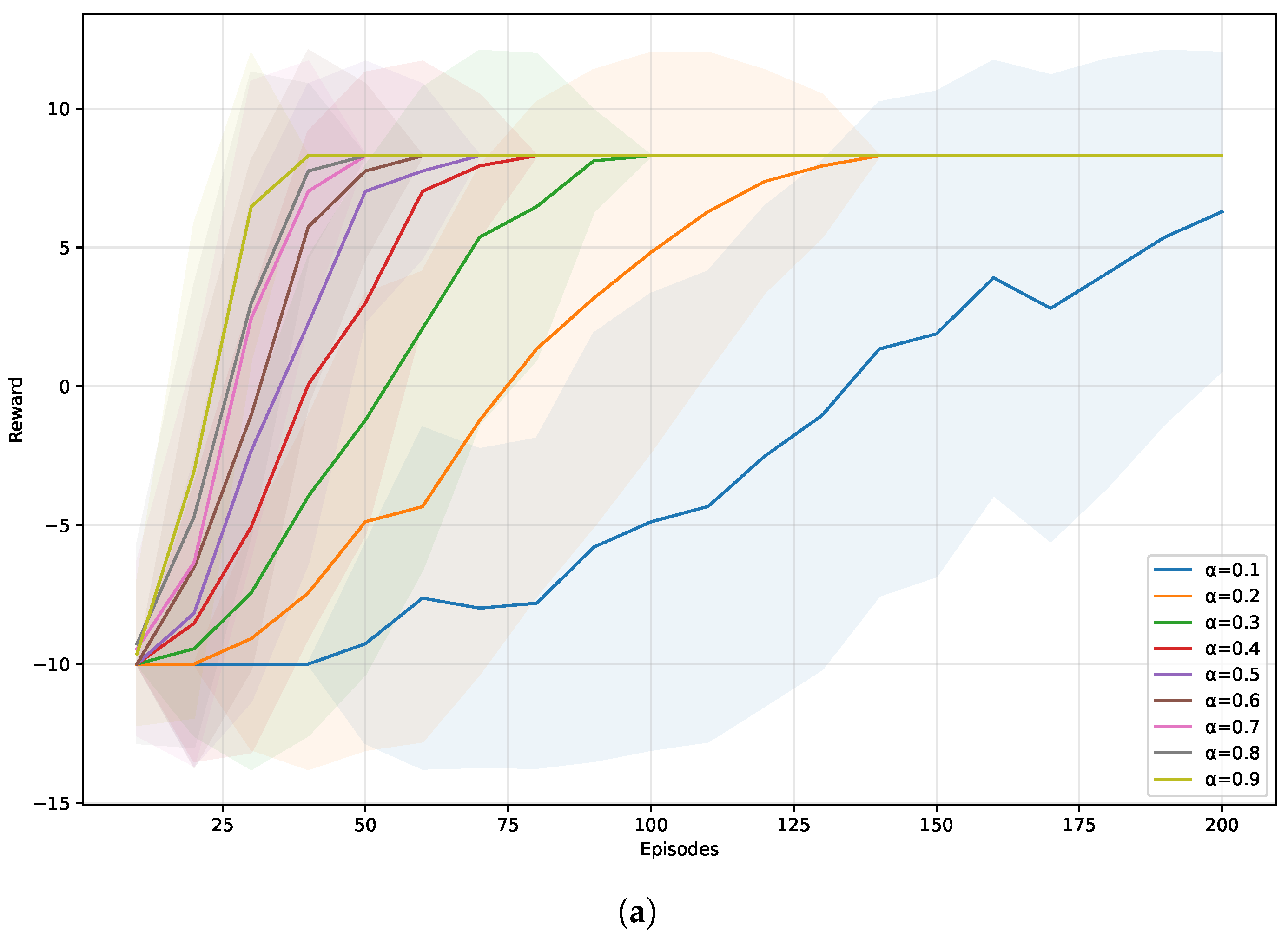

Figure 4 illustrates how different parameters influence the learning process.

Figure 4a investigates the effect of the learning rate, which, as expected, affects the learning speed.

Table 1 shows the average conflict number, task success rate, and reward over 100 simulations for each tested learning rate. We adopt the value that yields the lowest average conflict number while keeping the task success rate high.

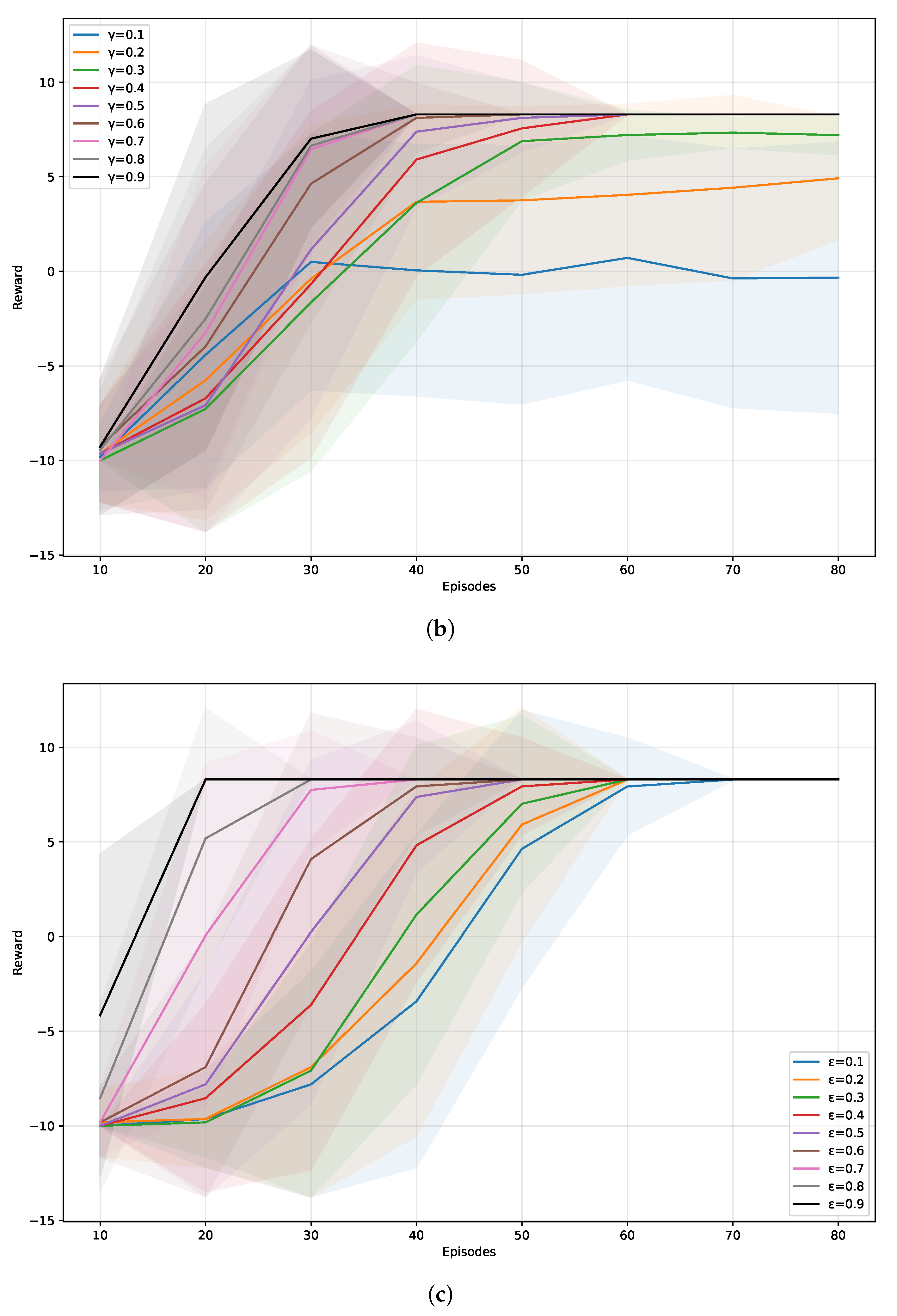

Figure 4b shows the effect of the discount factor. Because we want the algorithm to place more emphasis on future rewards, we directly set the discount factor to the same value as in [40].

Table 2 presents the average results under different discount factors and confirms that the chosen value is a good choice, yielding fewer conflicts and a higher task success rate. For the exploration rate, we do not adopt the common value used in [26, 40], because our purpose is to enhance exploration of the environment. However, how large the exploration rate should be is difficult to determine. As Figure 4c shows, all tested values eventually converged to the same reward. As Table 3 shows, they also did not differ much in average conflict number or task success rate. We therefore set the exploration rate manually to a moderate value, neither too large nor too small.
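The sketch below makes the roles of the three parameters concrete using standard tabular Q-learning with ε-greedy action selection. The learning rate α scales each update, the discount factor γ weights future rewards, and ε sets the probability of a random exploratory action. This textbook form is assumed for illustration only. The action set and function names are hypothetical and do not reproduce our implementation.

```python
import random
from collections import defaultdict

# Hypothetical 4-connected action set on a grid.
ACTIONS = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def epsilon_greedy(Q, state, epsilon, rng):
    """Explore uniformly with probability epsilon, otherwise take the greedy action."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    values = [Q[(state, a)] for a in range(len(ACTIONS))]
    return values.index(max(values))


def q_update(Q, s, a, r, s_next, done, alpha, gamma):
    """One-step Q-learning: Q(s,a) += alpha * (r + gamma * max_b Q(s',b) - Q(s,a))."""
    best_next = 0.0 if done else max(Q[(s_next, b)] for b in range(len(ACTIONS)))
    td_target = r + gamma * best_next
    Q[(s, a)] += alpha * (td_target - Q[(s, a)])
    return Q[(s, a)]


Q = defaultdict(float)  # unseen state-action pairs start at 0
rng = random.Random(0)
# A step-cost transition: a larger alpha moves the estimate further per update.
print(q_update(Q, (0, 0), 1, -0.1, (1, 0), False, alpha=0.5, gamma=0.9))  # -0.05
Q[((0, 0), 2)] = 1.0
print(epsilon_greedy(Q, (0, 0), epsilon=0.0, rng=rng))  # 2 (greedy when epsilon = 0)
```

Raising ε makes the random branch fire more often, which is the lever used here to enhance exploration.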

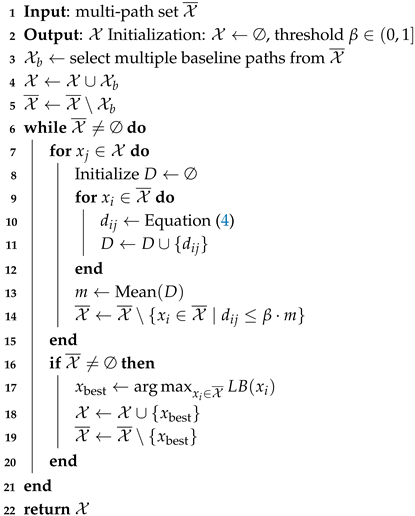

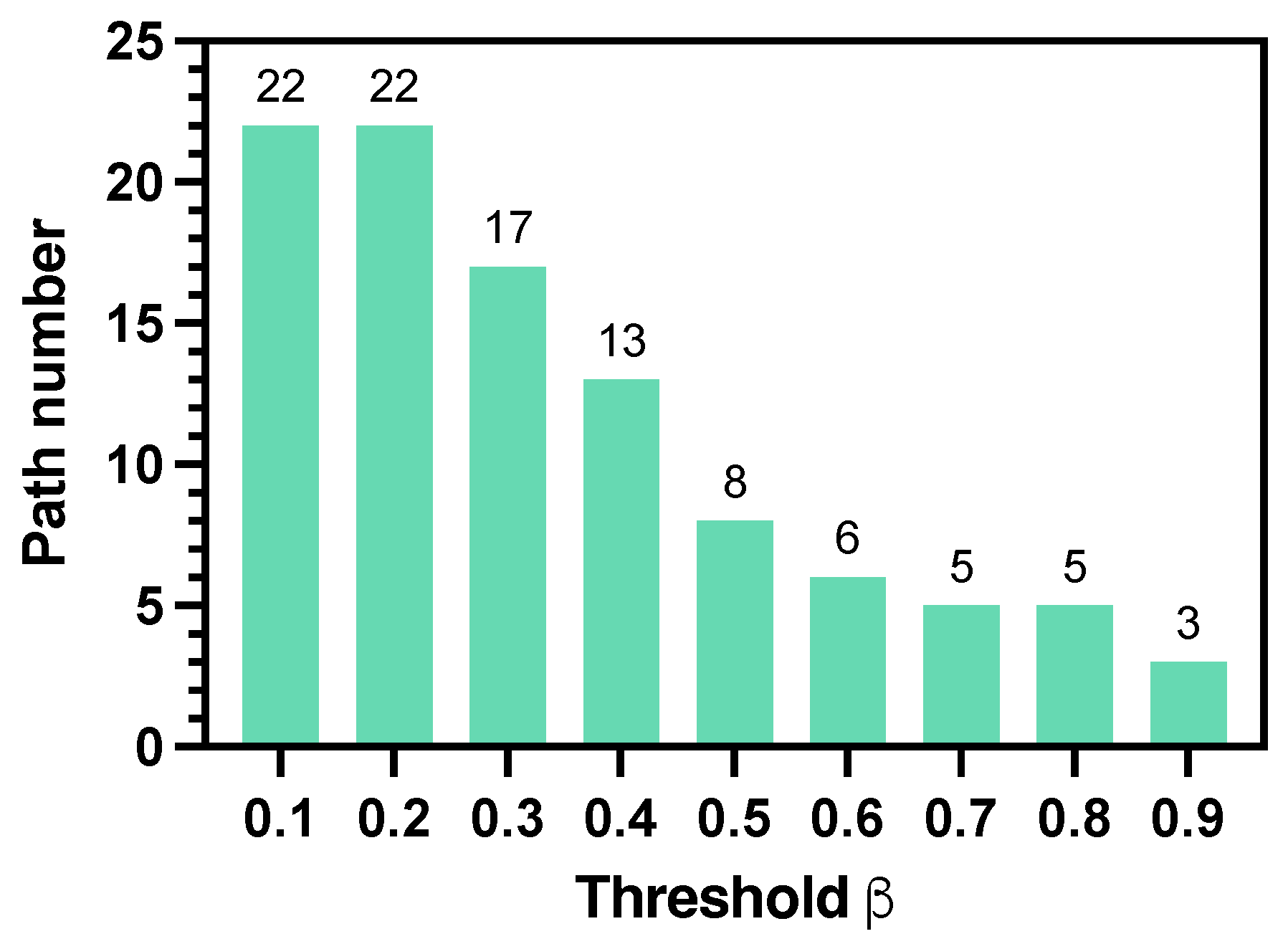

Figure 5 shows how the threshold affects the elimination of redundant paths, using a set of paths obtained with the multi-path generator in a grid environment. As the threshold increases, more paths are eliminated. Figure 5 shows a critical point at which more paths are removed and the number of remaining paths changes sharply. In this paper, we adopt a conservative strategy that retains more paths to enhance robustness to human uncertainties, and we set the threshold accordingly.

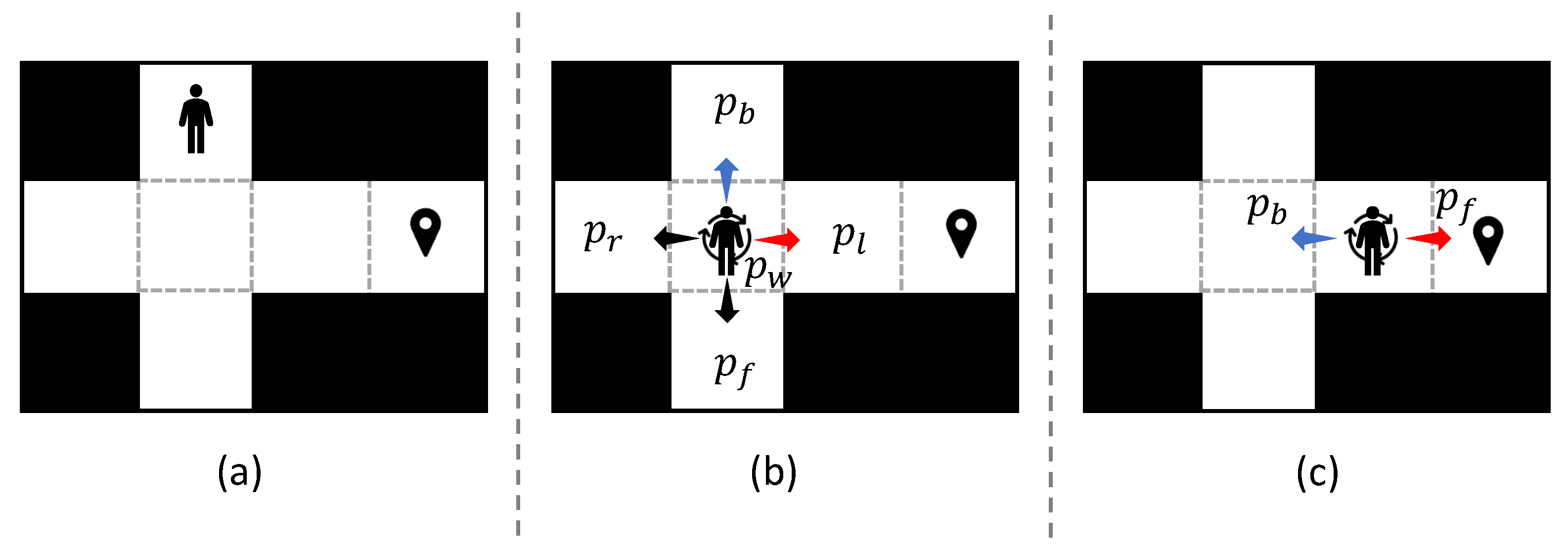

For the parameters of the human motion model, consider first a human who moves according to a uniform distribution over all possible actions. Each action would then have a probability of one over the number of actions. However, under the assumption that a human tends to move toward the goal, the probability of any action outside the prioritized action set should be smaller than this uniform value. We therefore choose this probability within that range, which controls the degree of stochasticity in human motion while maintaining goal-directed behavior.
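A minimal sketch of such a goal-directed stochastic human follows. It assumes that the prioritized set consists of the moves that shorten the Manhattan distance to the goal, that the action set is "stay" plus four grid moves, and that off-grid moves leave the human in place. These choices and all names (`p_other`, `action_probabilities`, `sample_step`) are hypothetical.

```python
import random

# Hypothetical action set: stay in place plus the four grid moves.
ACTIONS = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]


def manhattan(p, q):
    return abs(p[0] - q[0]) + abs(p[1] - q[1])


def action_probabilities(pos, goal, p_other):
    """Goal-directed stochastic human policy (illustrative sketch).

    Actions that reduce the Manhattan distance to the goal form the
    prioritized set; each remaining action gets probability p_other,
    which must be below the uniform value 1 / len(ACTIONS).
    """
    if not 0.0 <= p_other < 1.0 / len(ACTIONS):
        raise ValueError("p_other must lie in [0, 1/|A|)")
    prioritized = [a for a in ACTIONS
                   if manhattan((pos[0] + a[0], pos[1] + a[1]), goal) < manhattan(pos, goal)]
    if not prioritized:  # already at the goal: stay put
        return {a: 1.0 if a == (0, 0) else 0.0 for a in ACTIONS}
    others = [a for a in ACTIONS if a not in prioritized]
    # The prioritized actions share the remaining probability mass equally.
    p_prior = (1.0 - p_other * len(others)) / len(prioritized)
    probs = {a: p_other for a in others}
    probs.update({a: p_prior for a in prioritized})
    return probs


def sample_step(pos, goal, p_other, size, rng):
    """Sample the human's next cell; moves off the grid leave it in place (assumption)."""
    probs = action_probabilities(pos, goal, p_other)
    actions = list(probs)
    a = rng.choices(actions, weights=[probs[x] for x in actions])[0]
    nxt = (pos[0] + a[0], pos[1] + a[1])
    return nxt if 0 <= nxt[0] < size and 0 <= nxt[1] < size else pos


# Scenario 1 human: start (9, 0), goal (0, 9); the uniform value would be 1/5 = 0.2.
probs = action_probabilities((9, 0), (0, 9), p_other=0.1)
print(round(probs[(-1, 0)], 2), round(sum(probs.values()), 6))  # 0.35 1.0
```

Because `p_other` is capped below the uniform value, each prioritized move always ends up more likely than any other action.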

For the MDP and DQN methods, the robot receives a conflict penalty when it collides with a human during exploration. Note that only the robot can be rewarded or penalized. Humans never receive rewards or penalties, because they are treated as sources of uncertainty in the environment. For the A* method, we add a conflict cost to its step cost, and RRT likewise uses a cost function for human avoidance.
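One way to realize the A* conflict cost is to charge a per-cell penalty on top of the step cost. In the sketch below, `risk` is a hypothetical static estimate of how likely a human is to occupy each cell, and `conflict_cost` is an illustrative weight. The exact conflict cost used for the A* baseline is not specified here, so this is an assumption rather than a reproduction of that baseline.

```python
import heapq


def astar_with_conflict_cost(grid, risk, start, goal, step_cost=1.0, conflict_cost=5.0):
    """A* on a 4-connected grid where entering a cell costs
    step_cost + conflict_cost * risk[r][c].

    grid[r][c] == 1 marks an obstacle; risk[r][c] in [0, 1] is a hypothetical
    estimate of human occupancy. The Manhattan heuristic scaled by step_cost
    stays consistent because every move costs at least step_cost.
    """
    rows, cols = len(grid), len(grid[0])

    def h(p):
        return step_cost * (abs(p[0] - goal[0]) + abs(p[1] - goal[1]))

    g = {start: 0.0}
    parent = {start: None}
    frontier = [(h(start), start)]
    closed = set()
    while frontier:
        _, cur = heapq.heappop(frontier)
        if cur in closed:
            continue  # stale heap entry
        if cur == goal:
            path = []
            while cur is not None:
                path.append(cur)
                cur = parent[cur]
            return path[::-1], g[goal]
        closed.add(cur)
        r, c = cur
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                nxt = (nr, nc)
                new_g = g[cur] + step_cost + conflict_cost * risk[nr][nc]
                if new_g < g.get(nxt, float("inf")):
                    g[nxt] = new_g
                    parent[nxt] = cur
                    heapq.heappush(frontier, (new_g + h(nxt), nxt))
    return None, float("inf")


# 3 x 3 open grid with a likely human position in the centre cell.
grid = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
risk = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
path, cost = astar_with_conflict_cost(grid, risk, (0, 0), (2, 2))
print(path, cost)  # the path avoids (1, 1); cost 4.0
```

Unlike the RL-based planners, this baseline has no notion of time, so a static risk map is the simplest way to bias it away from human-occupied regions.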

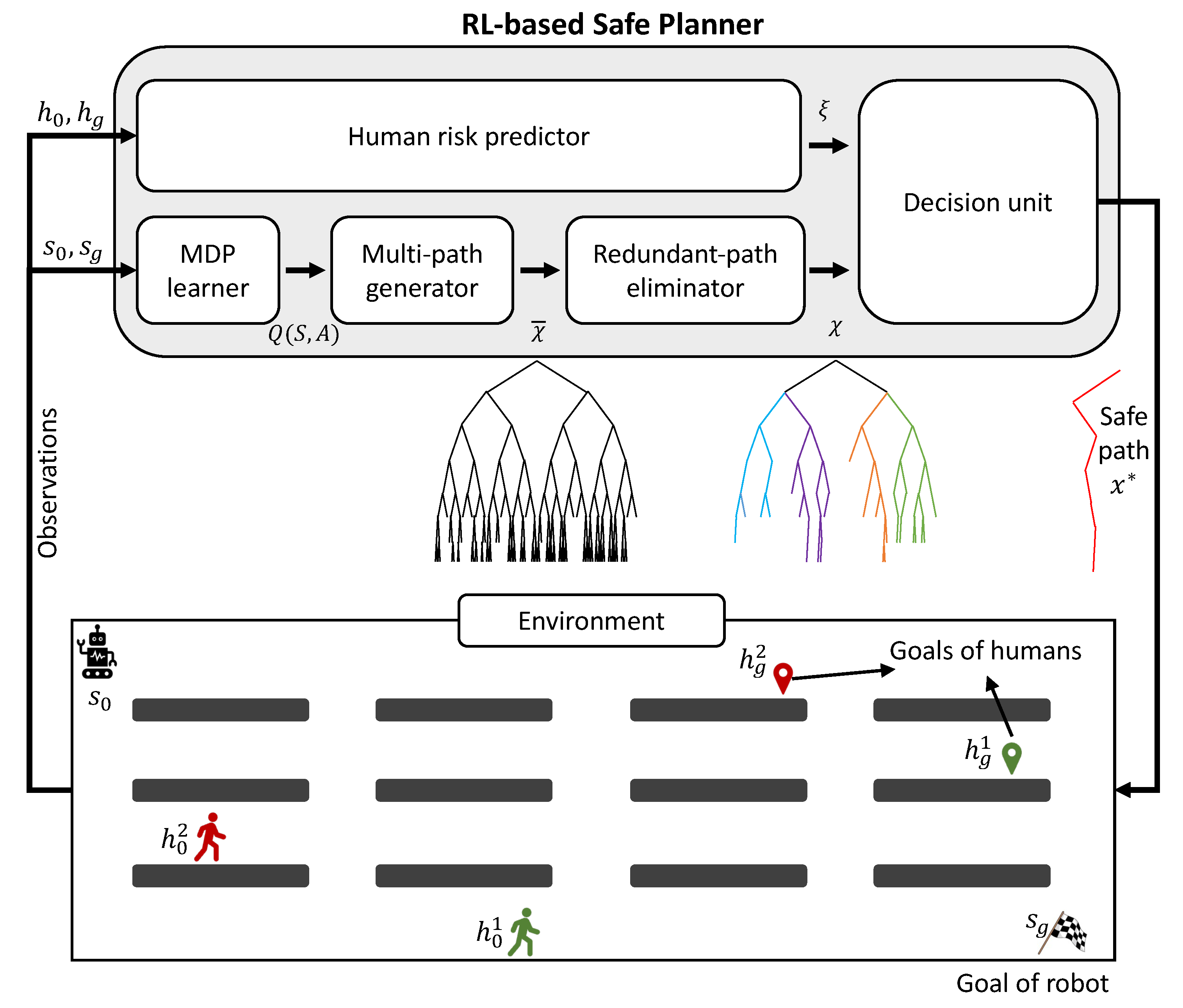

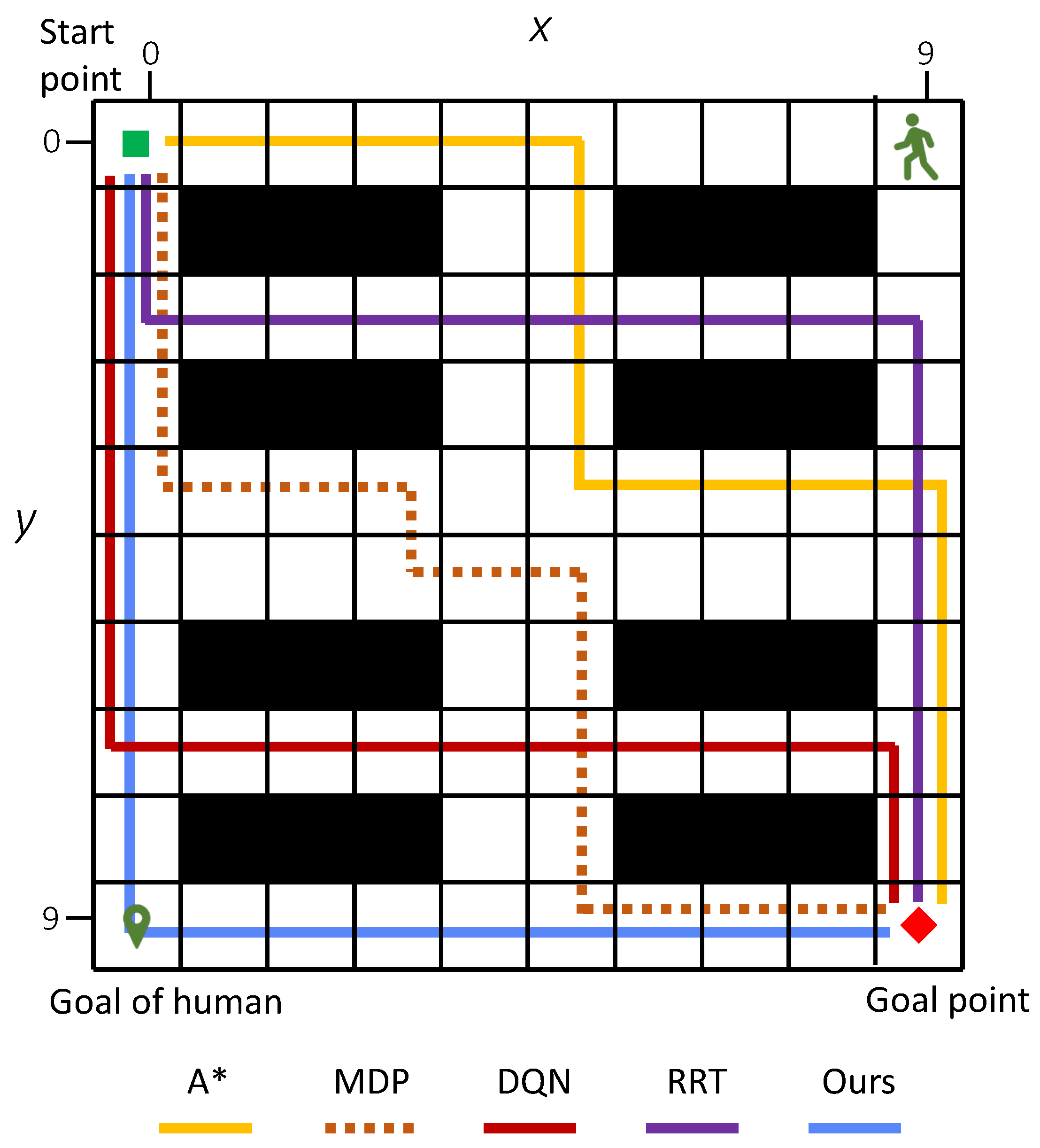

4.1. Scenario 1: Verification on a Grid World Environment

Scenario 1 is simulated in a grid world. The agent starts from (0, 0), and its goal position is (9, 9). A human moves stochastically from (9, 0) to his/her goal position (0, 9). A finite time budget is also imposed. Thus, the agent starts from the upper-left corner and its goal is at the lower-right corner, while the human starts from the upper-right corner and moves toward the lower-left. This crossing configuration increases the likelihood of collisions between the agent and the human along any potential path. In addition, the human's start and goal positions are fixed, so all 100 simulations are conducted under the same configuration. This eliminates the randomness introduced by different human position settings and ensures a more reliable evaluation of the proposed algorithm's performance.

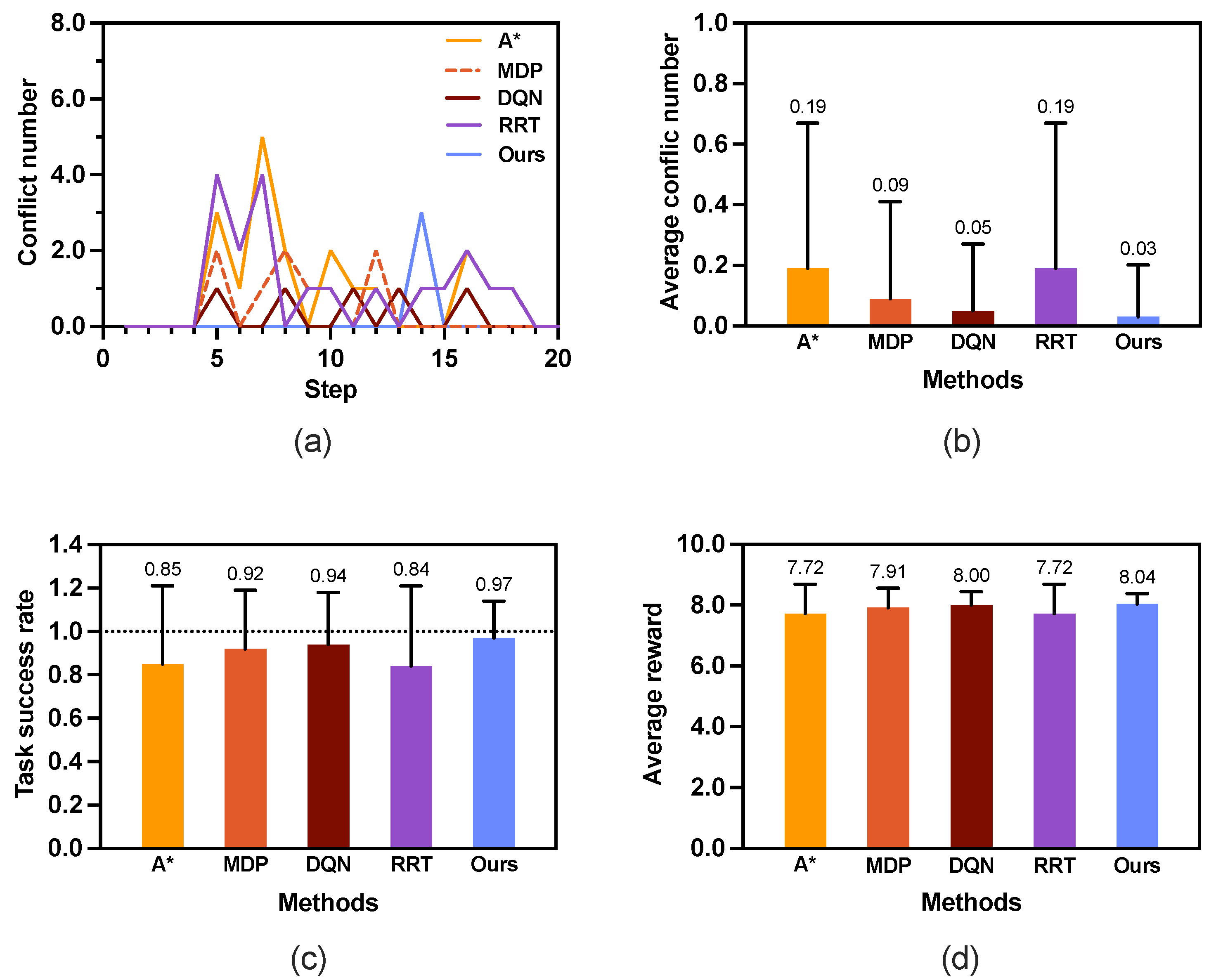

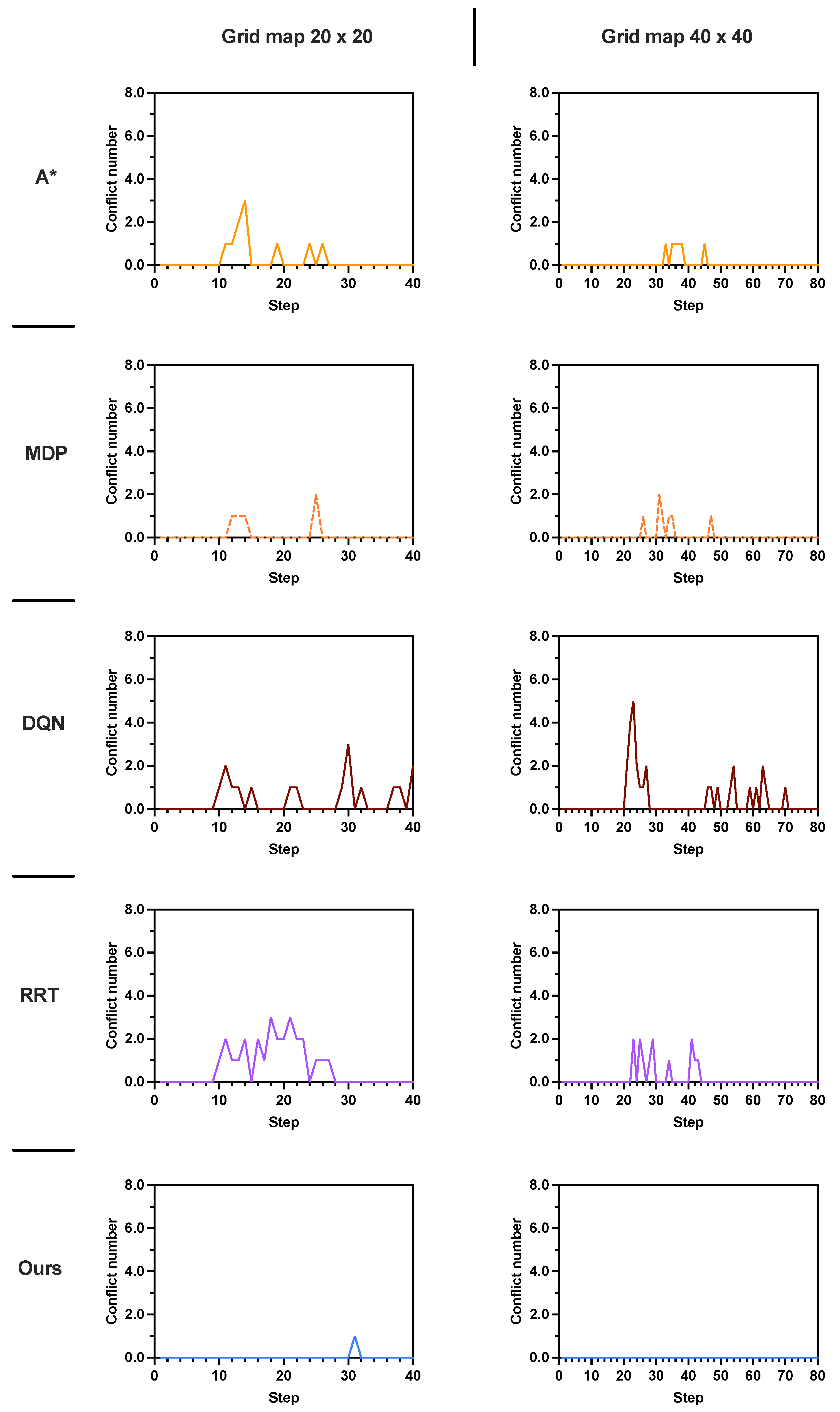

Figure 6a shows the conflict distribution, i.e., the time steps at which conflicts occur. In A* and MDP, the first conflict occurs at the same time step. In contrast, the first conflict in our proposed method occurs much later than in the other methods.

Figure 6b depicts the average conflict number over 100 rounds of simulation. The A* method has the highest average conflict number (0.19 ± 0.48) among the compared methods. The proposed method has the lowest average conflict number, 0.03 ± 0.17, which is much lower than those of the other two methods.
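The mean ± standard deviation figures are computed from per-run outcomes over the 100 simulations. The sketch below shows that computation on hypothetical per-run data, since the individual run records are not listed in this paper. A binary outcome observed in 3 of 100 runs already gives a standard deviation of about 0.17 with either the sample or the population formula. This explains why the deviation is large relative to a small mean.

```python
import statistics


def summarize(values):
    """Return (mean, sample standard deviation) across simulation runs."""
    return statistics.mean(values), statistics.stdev(values)


# Hypothetical per-run outcomes for 100 simulations (not the recorded data):
# three runs with one conflict each, the rest conflict-free.
conflicts = [1] * 3 + [0] * 97
successes = [0] * 3 + [1] * 97  # 1 = task completed

for name, runs in (("conflicts", conflicts), ("success", successes)):
    mean, std = summarize(runs)
    print(f"{name}: {mean:.2f} ± {std:.2f}")
# conflicts: 0.03 ± 0.17
# success: 0.97 ± 0.17
```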

The simulation results of Scenario 1 indicate that our proposed safe planner not only reduces the number of conflicts but also delays the time at which a conflict occurs. Notably, the proposed method achieves a success rate of 0.97 ± 0.17, which is substantially higher than those of the other methods (see Figure 6c). Moreover, as shown in Figure 6d, the paths generated by our method achieve a higher reward than those of the other two methods.

Considering the average number of conflicts, task success rate, and path reward, the simulation results clearly demonstrate the effectiveness of our method.

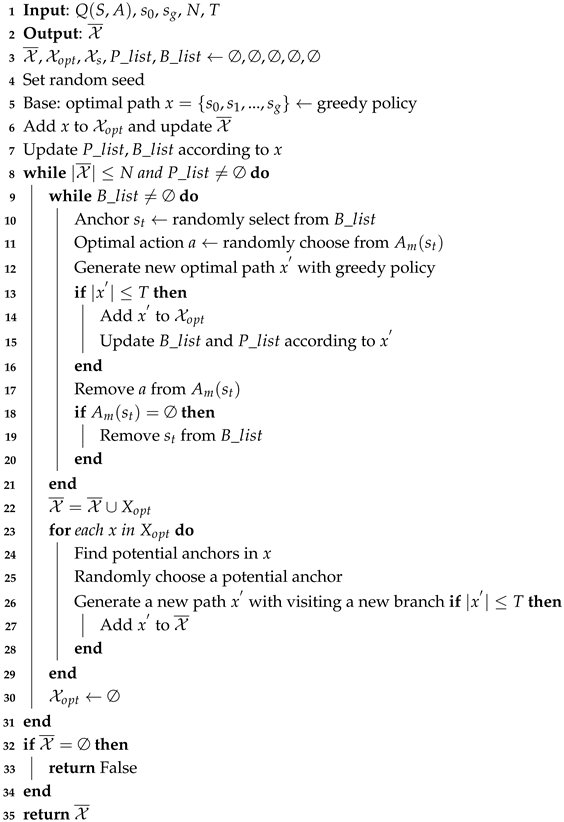

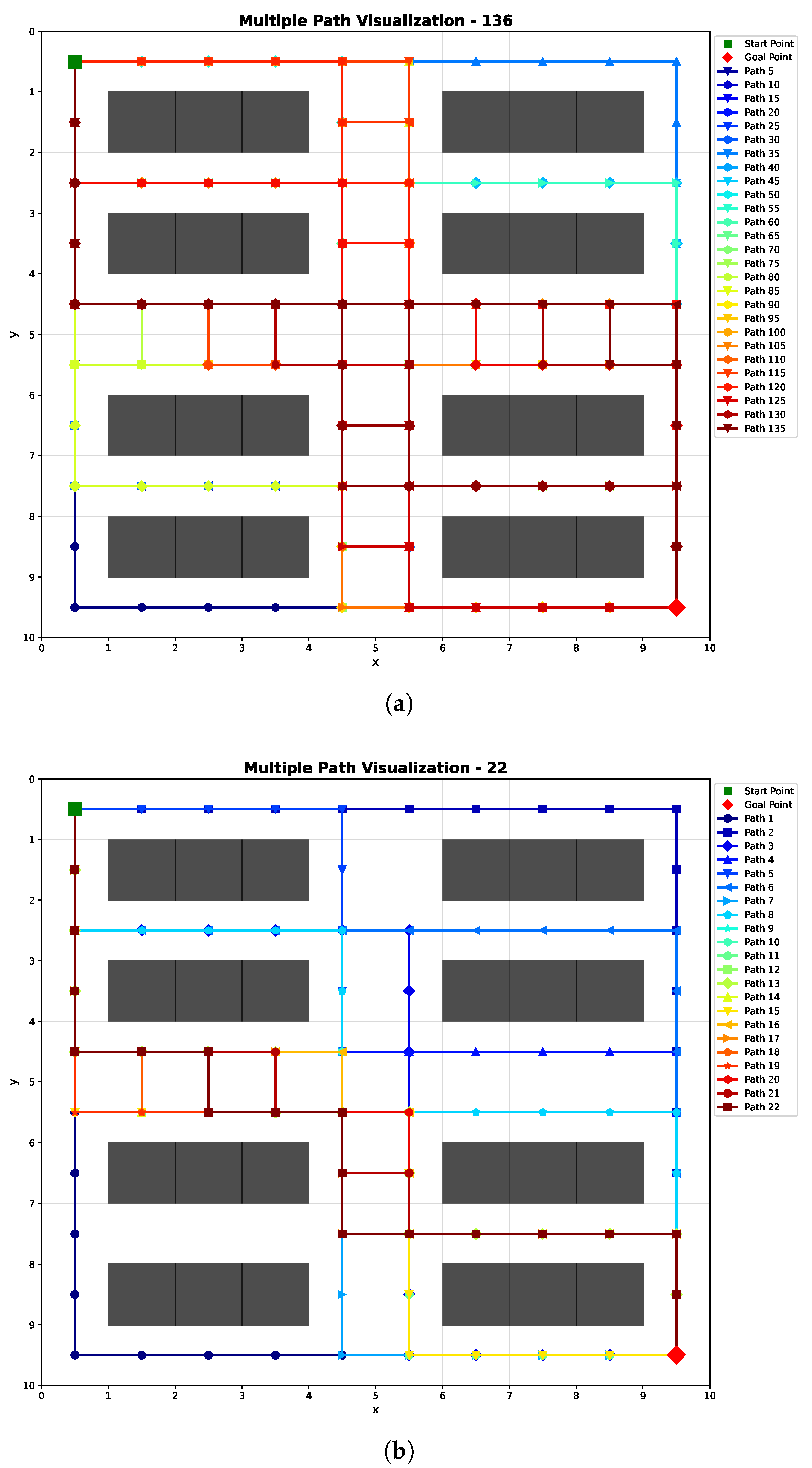

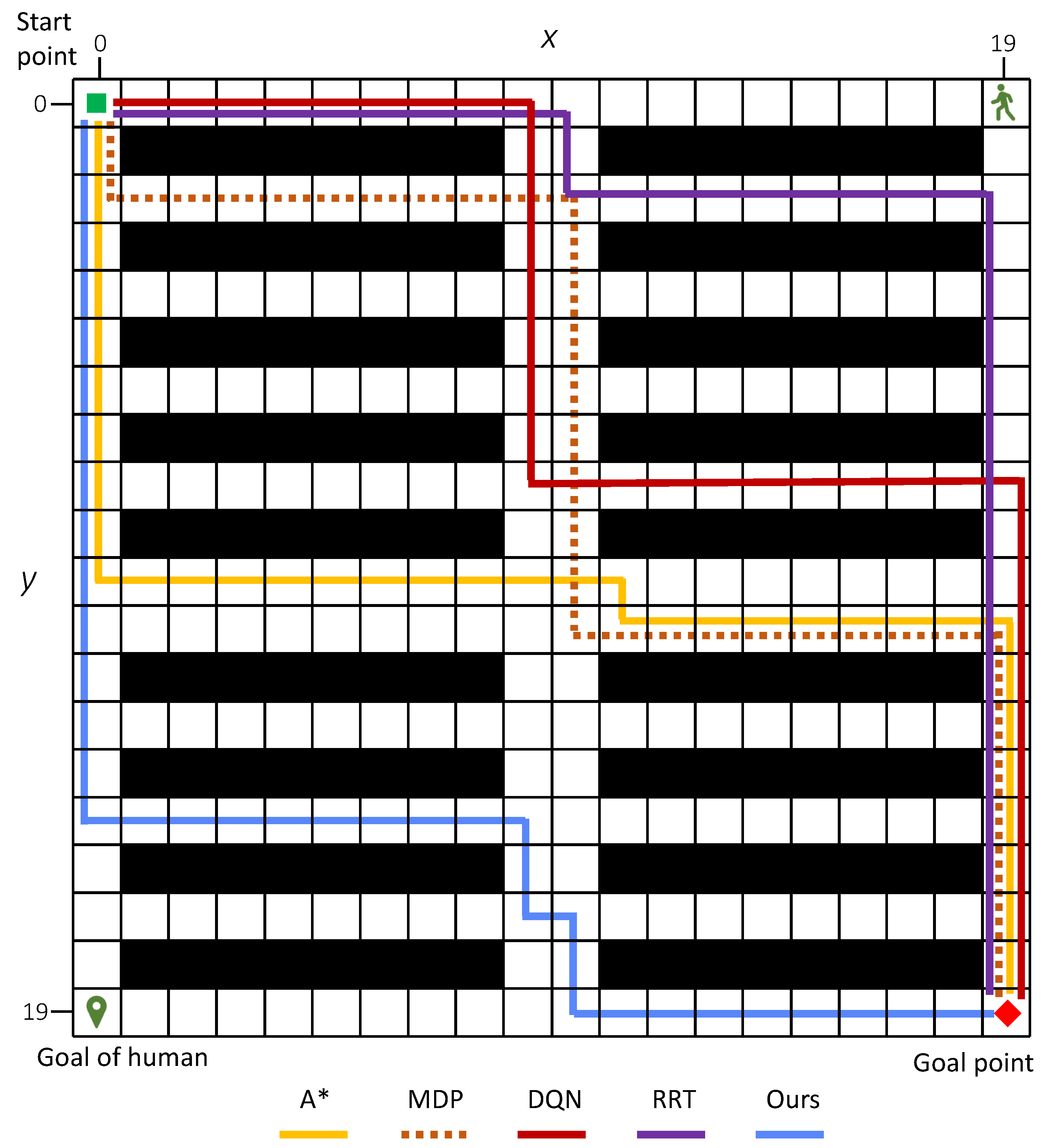

Figure 7 shows an example of the generated optimal path. Figure 7a illustrates the 136 generated paths. Figure 7b shows the 22 diverse paths that remain after redundant-path elimination, a reduction of about 83.8% in the number of paths. Figure 7c shows the safety-optimized path. Examples of the paths generated by the different methods on a grid map are illustrated in Figure 8.
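The similarity measure and threshold rule of the redundant-path eliminator are defined earlier in the paper. The sketch below is only a hypothetical stand-in that reproduces the qualitative behavior described for Figure 5: a candidate is dropped when it is too close to an already retained path, so a larger threshold removes more paths. The Jaccard distance over visited cells and the shortest-first ordering are assumptions.

```python
def jaccard_distance(p, q):
    """1 - |cells shared| / |cells in either path| (hypothetical dissimilarity)."""
    a, b = set(p), set(q)
    return 1.0 - len(a & b) / len(a | b)


def eliminate_redundant(paths, delta):
    """Greedily keep a path only if it is at least `delta` away from every kept path.

    Raising delta makes the test stricter, so more paths are eliminated.
    """
    kept = []
    for p in sorted(paths, key=len):  # consider shorter paths first (assumption)
        if all(jaccard_distance(p, q) >= delta for q in kept):
            kept.append(p)
    return kept


# Three 3 x 3 grid paths from (0, 0) to (2, 2); A and C share four cells.
A = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2)]
B = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]
C = [(0, 0), (0, 1), (1, 1), (1, 2), (2, 2)]
for delta in (0.2, 0.5, 0.8):
    print(delta, len(eliminate_redundant([A, B, C], delta)))  # 3, then 2, then 1

# Reduction reported for Figure 7: 136 candidates -> 22 retained.
print(f"{1 - 22 / 136:.1%}")  # 83.8%
```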

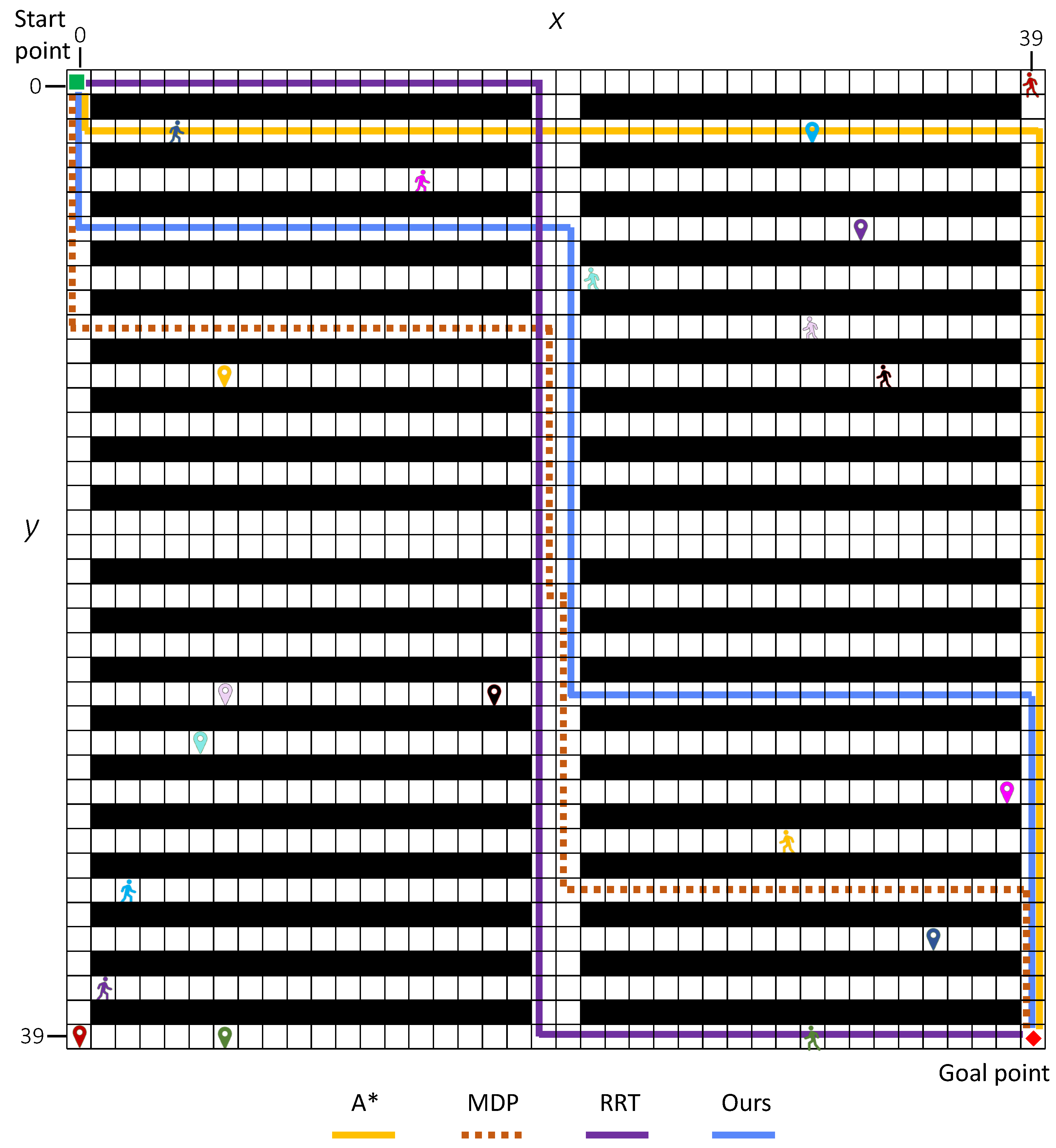

4.3. Scenario 3: Investigation on Increasing Human Numbers

In this scenario, we investigate the performance of the proposed method in a more complex environment by increasing the number of humans K. The simulation environment is a grid world involving 2∼10 humans. The agent starts from (0, 0), and its goal position is (39, 39). The humans' start and goal positions are randomly generated, as listed below. A finite time budget is also configured.

| Human | Start | Goal |
|---|---|---|
| 1 | (30, 39) | (6, 39) |
| 2 | (39, 0) | (0, 39) |
| 3 | (4, 2) | (35, 35) |
| 4 | (1, 37) | (32, 6) |
| 5 | (29, 31) | (6, 12) |
| 6 | (2, 33) | (30, 2) |
| 7 | (14, 4) | (38, 29) |
| 8 | (21, 8) | (5, 27) |
| 9 | (30, 10) | (6, 25) |
| 10 | (33, 12) | (17, 35) |

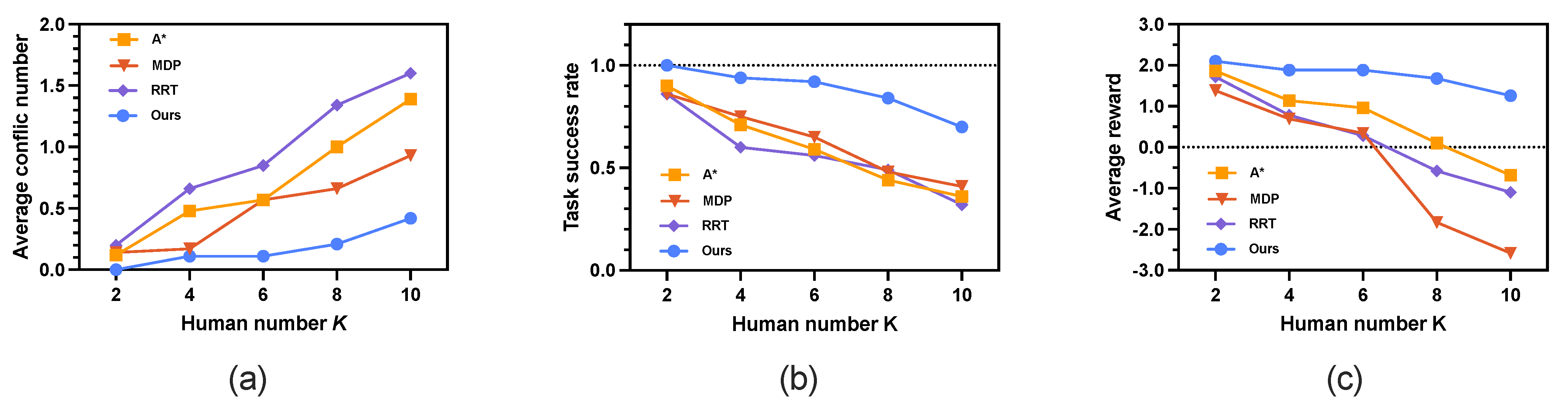

The simulation results for three key metrics are shown in Figure 10 and summarized in detail in Table 5. As shown in Figure 10a, the average conflict number increases for all methods as the number of humans K grows. The proposed method consistently outperforms the other three approaches, maintaining the lowest average conflict number. Even under the highest human density (K = 10), our method effectively reduces conflicts, achieving an average conflict number of 0.42. This is less than one-third of the values for A* (1.39) and RRT (1.60). As illustrated in Figure 10b, the proposed method also achieves the highest average task success rate for all values of K. Furthermore, it yields the highest average reward as the number of humans increases (Figure 10c). With increasing K, environmental uncertainty rises significantly, making it more challenging to plan safe paths without re-planning. The simulation results demonstrate that our method is more robust to such uncertainties than the other three approaches.

Beyond a certain number of humans, the results of our method diverge from those of the other three methods. For the average conflict number, our approach maintains the lowest averages (0.11∼0.53), with smaller standard deviations, compared with A* (0.48∼1.21), MDP (0.17∼0.38), and RRT (0.66∼1.27). Over a certain range of K, MDP exhibits the most pronounced increase in conflict number (average from 0.17 to 0.57), approximately a fivefold growth. In contrast, our proposed method consistently maintains an average conflict number of 0.11.

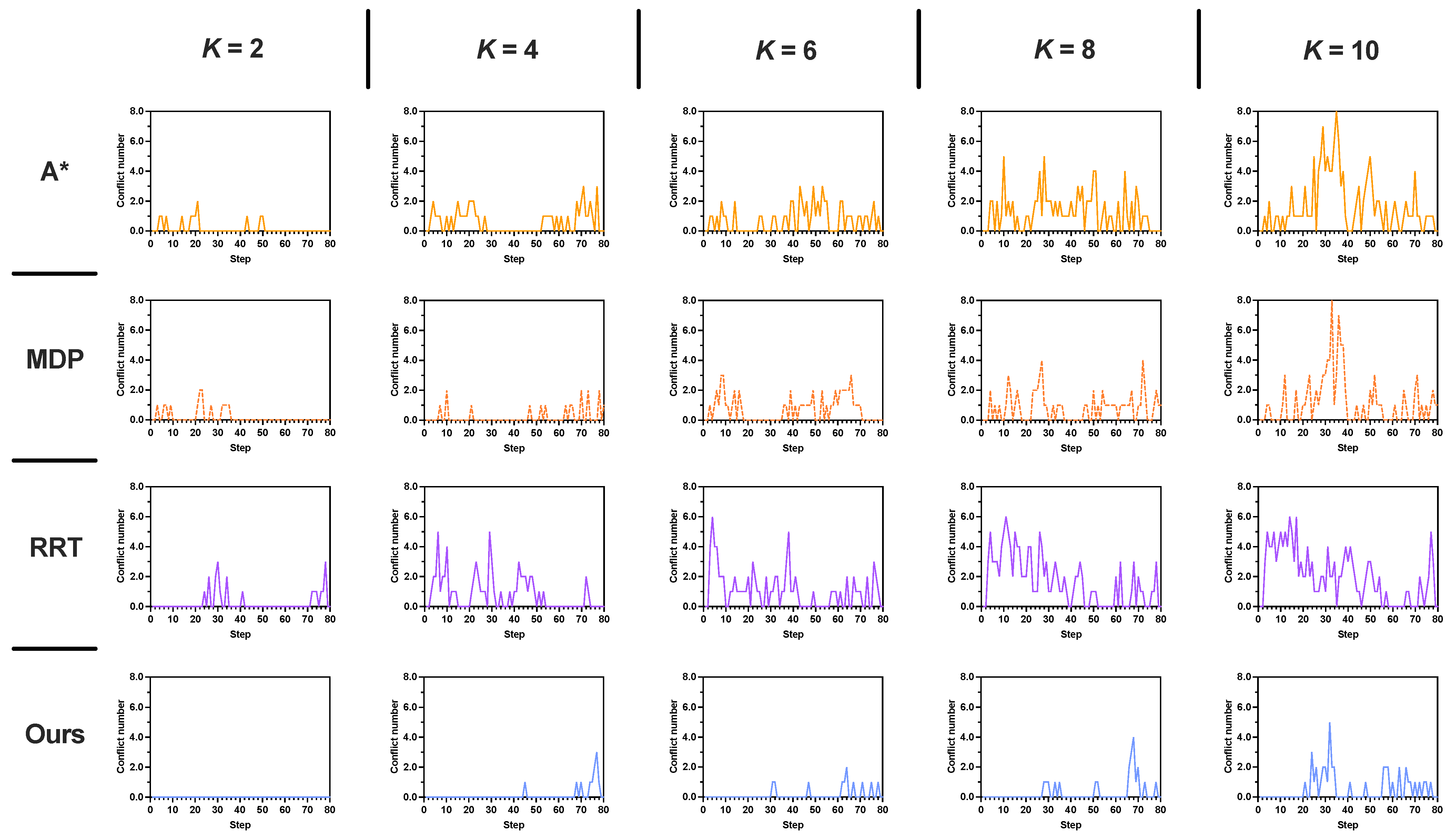

Figure 11 shows the conflict distribution on a grid map for different numbers of humans. In one of these settings, no conflict occurs with our method, whereas conflicts occur with A*, MDP, and RRT. In particular, when the number of humans doubles, A*, RRT, and our method show a twofold increase in conflict number, while MDP exhibits a nearly sixfold escalation. For all cases (K = 2∼10), the first conflict in our method occurs much later than in the other methods.

The task success rate shows marked differences. Our method achieves a 100% success rate at low human density and declines to 70% at K = 10, whereas the success rates of A*, MDP, and RRT drop below 50% at high densities. Notably, the reward metric highlights the balanced optimization of our method, which yields positive rewards (2.10∼1.26) throughout. By contrast, A*, MDP, and RRT yield negative values in high-density settings (ranging from 0.10∼−0.68 for A*, 0.34∼−2.58 for MDP, and 0.28∼−1.10 for RRT).

In the most challenging scenario, with K = 10 humans, our method reduces the average number of conflicts by 69.8%, 54.8%, and 73.4% compared with A*, MDP, and RRT, respectively. Moreover, it improves the average task success rate by 94.4% over A*, 70.7% over MDP, and 118.75% over RRT. In terms of average reward, our method achieves a positive value of 1.26, which is substantially higher than those of A* (−0.68), MDP (−2.58), and RRT (−1.10). These simulation results demonstrate that our method outperforms A*, MDP, and RRT across multiple metrics. For demonstration purposes, Figure 12 and Figure 13 display examples of the generated paths in maps with different numbers of humans.
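The percentages above are relative changes with respect to each baseline. For lower-is-better metrics such as conflicts, a reduction is (baseline − ours) / baseline. For higher-is-better metrics such as success rate, an improvement is (ours − baseline) / baseline. A small sketch of this arithmetic follows, using the reported average conflict numbers for A*. The function names are illustrative.

```python
def relative_reduction(ours, baseline):
    """Fractional reduction of a lower-is-better metric relative to a baseline."""
    return (baseline - ours) / baseline


def relative_improvement(ours, baseline):
    """Fractional gain of a higher-is-better metric over a baseline."""
    return (ours - baseline) / baseline


# Average conflict numbers reported for the highest-density setting.
ours, a_star = 0.42, 1.39
print(f"{relative_reduction(ours, a_star):.1%}")  # 69.8%
print(f"{ours / a_star:.2f}")  # 0.30, i.e. under one-third of A*

# Hypothetical success rates, only to show the improvement formula.
print(f"{relative_improvement(0.9, 0.6):.1%}")  # 50.0%
```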

The simulation results of the above three scenarios show that the proposed method is more effective at resolving potential conflicts with stochastically moving humans at the planning level. As discussed previously, our algorithm uses a multi-path generator to produce multiple candidate paths, from which a safe path is selected. In addition, a redundant-path eliminator reduces the number of candidates while maintaining their diversity.
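The candidate-selection idea can be illustrated with a Monte Carlo sketch. Each retained candidate is scored by its expected number of conflicts against sampled human trajectories, and the lowest-scoring (then shortest) path is chosen. The following elements are hypothetical simplifications, not the safety optimization used in this work:

- the human model, which moves toward the goal with probability 1 − `p_random` and otherwise stays;
- the vertex-only conflict test, which ignores swap conflicts;
- all function names.

```python
import random

MOVES = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def human_step(pos, goal, p_random, rng):
    """Hypothetical human model: with probability p_random (or at the goal) stay put,
    otherwise take a random move that shortens the Manhattan distance to the goal."""
    def dist(p):
        return abs(p[0] - goal[0]) + abs(p[1] - goal[1])
    toward = [(pos[0] + dr, pos[1] + dc) for dr, dc in MOVES
              if dist((pos[0] + dr, pos[1] + dc)) < dist(pos)]
    if not toward or rng.random() < p_random:
        return pos
    return rng.choice(toward)


def expected_conflicts(path, human_start, human_goal, p_random, n_rollouts, rng):
    """Average number of time steps at which robot and human occupy the same cell."""
    total = 0
    for _ in range(n_rollouts):
        human = human_start
        for t, cell in enumerate(path):
            if t > 0:
                human = human_step(human, human_goal, p_random, rng)
            if cell == human:
                total += 1
    return total / n_rollouts


def select_safest(paths, human_start, human_goal, p_random=0.2, n_rollouts=500, seed=0):
    """Pick the candidate with the fewest expected conflicts, breaking ties by length."""
    rng = random.Random(seed)
    scores = [(expected_conflicts(p, human_start, human_goal, p_random, n_rollouts, rng),
               len(p), i) for i, p in enumerate(paths)]
    return paths[min(scores)[2]]


# The human walks along the top row from (0, 2) to (0, 0).
top = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2)]
bottom = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]
print(select_safest([top, bottom], (0, 2), (0, 0)) == bottom)  # True
```

Scoring every candidate in this way is one reason generating and pruning many paths is costly, which relates to the computational limitation discussed below.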

It should be noted that conflicts may still occur. Although the proposed algorithm effectively reduces the number of conflicts, there remains a gap toward our ultimate goal of completely resolving conflicts at the planning level within a single planning process. Moreover, the algorithm has only been tested in a warehouse grid environment with simple rectangular obstacles (shelves). More complex environments with differently shaped obstacles will be tested once the safe-path-planning problem is solved.

As discussed above, one main limitation of this work is that we cannot completely resolve all conflicts within a single planning process. Another limitation is that computational time has not been considered in this study. Although the overall time complexity of the proposed method was analyzed previously, we currently concentrate on addressing human–robot conflicts at the planning level and do not take computational cost into account. We will improve the algorithm and its computational efficiency in future work. Moreover, because the environment is explored through an MDP formulation, the computational cost remains relatively high, particularly when the RL-based method generates multiple paths. We nevertheless employ RL to generate multiple diverse paths because it is effective in handling unknown environments. Finally, the human motion model adopted in this work is a simple stochastic one. We are currently developing an improved approach and will present it in future work.