4.1. Results of Different B-Scan Implementations

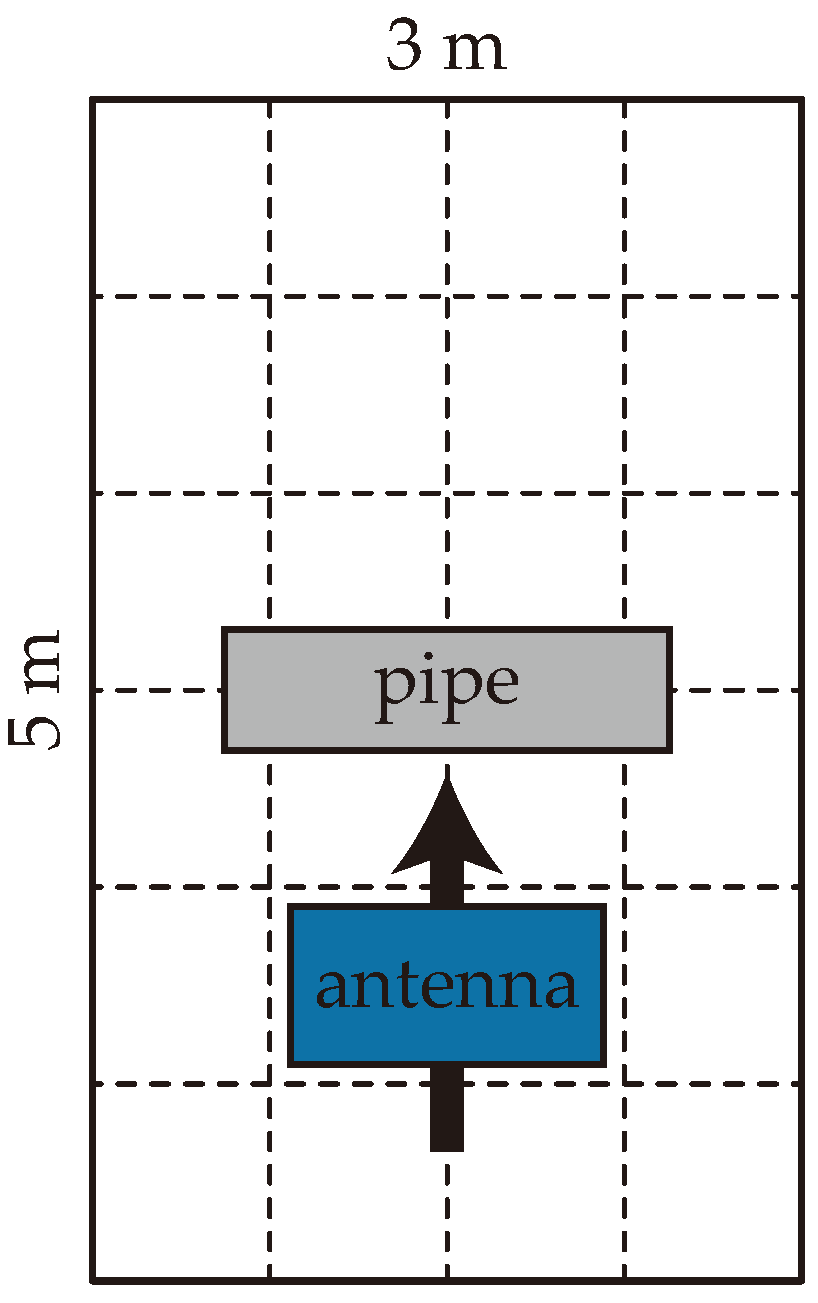

To validate the proposed model’s algorithms, a number of B-scans were randomly selected from two datasets collected under different operating conditions, hereafter referred to as Dataset 1 and Dataset 2. Dataset 1 was obtained from a field experiment conducted in Changji, Xinjiang Province, China. The soil in the area is a mixture of saline–alkali soil and sand, which creates suboptimal detection conditions. The experimental area was a rectangle measuring 5.0 m (length) × 3.0 m (width). The area was divided into a 6 × 4 grid, as shown in Figure 12, with each grid cell of size 0.75 m × 0.83 m. The GPR device was an ImpulseRadar CO1760 with a 600 MHz antenna (ImpulseRadar, Malå, Sweden). The pipe was buried underground with its cylindrical axis perpendicular to the long side of the experimental rectangle so that the pipe hyperbolas were captured by moving the GPR device perpendicular to the pipe axis. In the experiment, ductile iron pipes with outer diameters of 10, 15, and 20 cm were buried underground at a depth of 10 cm. Owing to the small radii of the pipes, the horizontal expansion of the hyperbolas was limited. As mentioned above, the model was designed to address these challenges.

Dataset 2 was collected from a residential area located in a relatively busy urban district of a city using the same GPR device with a dual-frequency antenna of 170 MHz and 600 MHz. For ease of reproduction, the operating configurations of the GPR device used when collecting Datasets 1 and 2 are listed in Table A1 and Table A2, respectively, in

Appendix A. This area consists primarily of residential zones and functional zones, with multilayer asphalt pavement and heterogeneous soil conditions in the backfill layer. A variety of underground pipelines, including those for providing water supply, heating, drainage, and electricity, are buried beneath the road surface. These pipelines differ in material and burial depth, and in certain regions, their spatial arrangements are geometrically complex. Additionally, the site is subject to substantial electromagnetic interference from multiple sources. Compared to the operating environment in Dataset 1, the conditions in Dataset 2 are significantly more complex. It can be observed in

Figure 13 and

Figure 14 that the B-scan from Dataset 2 contains numerous additional reflections below the ground, which are caused by the complex subsurface structure of the pavement. Moreover, small, densely arranged hyperbola-like clutter is visible, indicating the presence of electromagnetic interference and structural heterogeneity.

To quantitatively assess the differences between Datasets 1 and 2, the JSUM signal-to-clutter ratio (SCR) metric is adopted to evaluate the relative prominence of target features with respect to the background, because the target is more easily interfered with by non-target reflections than by general noise [31].

Within the preselected background region, each A-scan is associated with a corresponding SCR metric. According to common practice, all SCR values within this region are averaged to serve as a measure of the target’s prominence relative to the background or clutter. In Equation (

16),

N is the number of time samples of the A-scan;

and

are the data value and the average value of background at

ith time bin, respectively; and

is the average standard deviation of the background A-scans. The SCR is a quantitative measure specifically designed for assessing the effectiveness of B-scan preprocessing, and it can also assess the extent of distinction of the target from the background. A larger SCR value indicates a more prominent target and thus better performance of the background removal method. In the practical calculation, all A-scans in the B-scan that do not contain any hyperbolas are manually selected as the background A-scans used in the computation. The typical SCRs of the two datasets are listed in

Table 1, along with the burial depths of the pipes. As shown in

Table 1, the signals in Dataset 2 are typically less distinct from the background than those in Dataset 1, which is consistent with the more complex acquisition environment of Dataset 2. In addition, all pipes in the residential area where Dataset 2 was collected are buried much deeper than those in Dataset 1.
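
The averaging procedure described above can be illustrated with a short sketch. Because Equation (16) is not reproduced in this section, the per-A-scan form used here, the mean absolute deviation from the background mean divided by the averaged background standard deviation, is an assumption, as are the function and argument names.

```python
import numpy as np

def mean_scr(bscan, region_cols, background_cols):
    """Average SCR over the A-scans (columns) listed in region_cols.

    bscan has shape (N, T): N time samples per A-scan, T A-scans.
    ASSUMED per-A-scan form: SCR = (1/N) * sum_i |x_i - mu_i| / sigma.
    """
    bscan = np.asarray(bscan, dtype=float)
    background = bscan[:, background_cols]       # manually chosen hyperbola-free A-scans
    mu = background.mean(axis=1)                 # background mean at each time bin
    sigma = background.std(axis=1).mean()        # background std, averaged over time bins
    region = bscan[:, region_cols]
    per_ascan = np.abs(region - mu[:, None]).mean(axis=0) / sigma
    return float(per_ascan.mean())               # average over all A-scans in the region

# Toy B-scan: two background A-scans (+1 and -1) and one target A-scan (3).
toy = np.zeros((4, 3))
toy[:, 0], toy[:, 1], toy[:, 2] = 1.0, -1.0, 3.0
print(mean_scr(toy, region_cols=[2], background_cols=[0, 1]))
```

A larger returned value corresponds to a target that stands out more clearly from the selected background A-scans.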

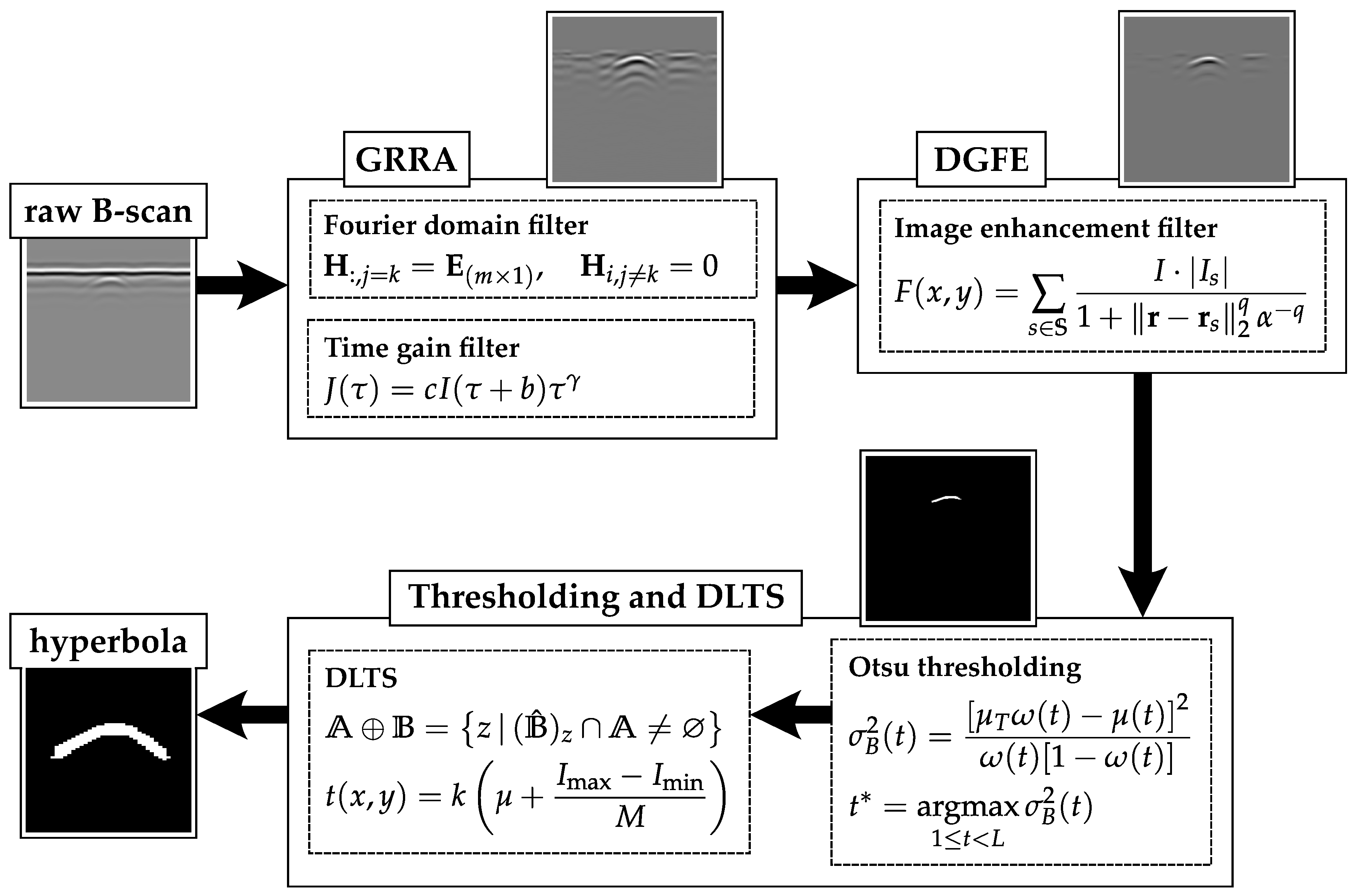

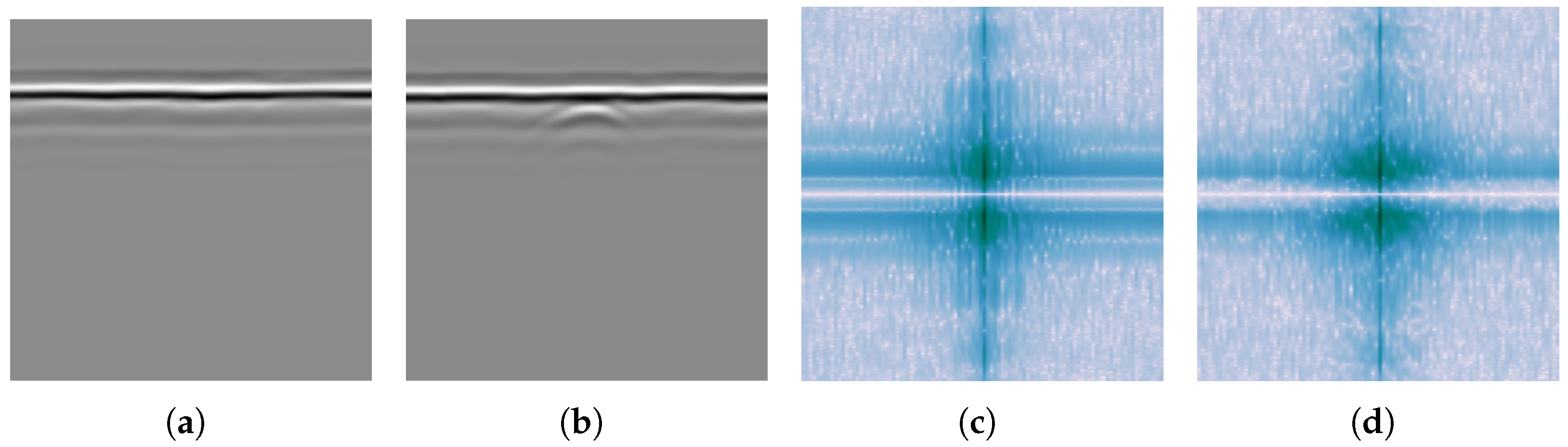

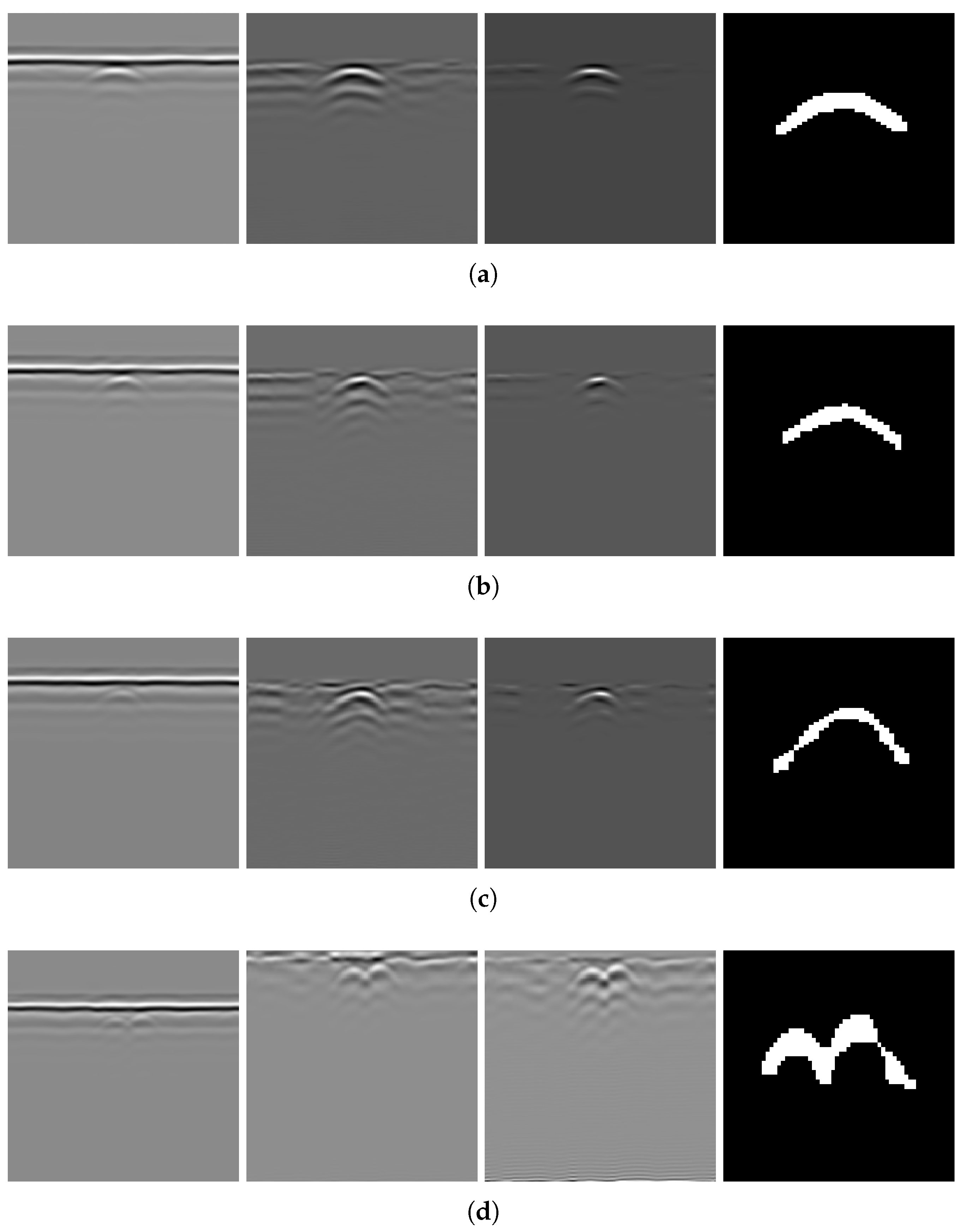

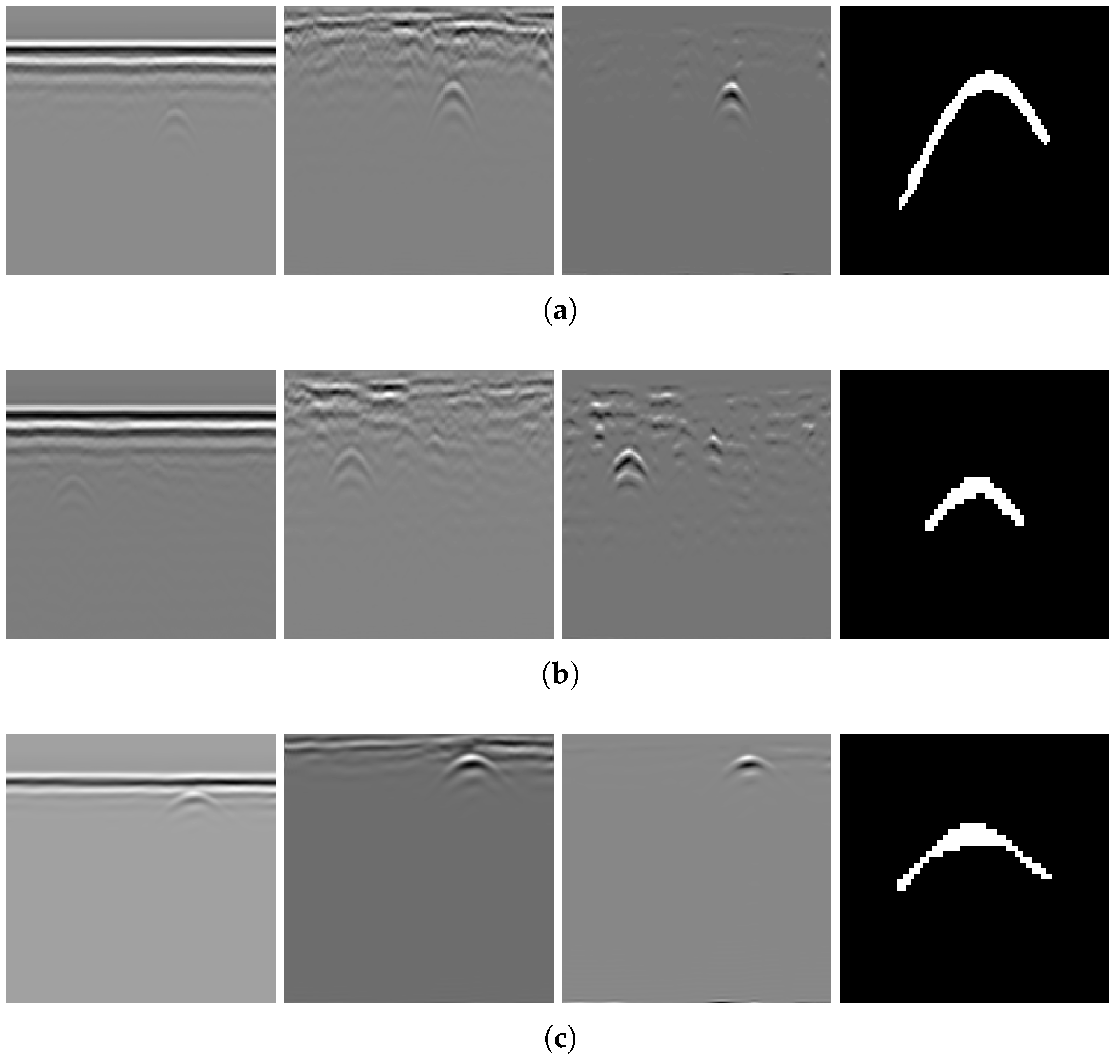

Applying the algorithm to B-scans from two different datasets can provide evidence of its generalizability across varying conditions. The results of several B-scans from Dataset 1 are shown in

Figure 13. From subfigures (a) to (c), the figure displays B-scans of pipes with diameters of 20, 15, and 10 cm and a burial depth of 10 cm, while subfigure (d) displays pipes with diameters of 15 and 20 cm, buried in parallel, at depths of 10 and 13 cm, respectively. From left to right, the four subfigures sequentially show the original B-scan and the outputs of GRRA, DGFE, and DLTS. In GRRA,

and

. For DLTS, the window size was set to 5, and

. Although fixed parameter values are used here, how variations in these parameters affect the performance and effectiveness of the algorithms will be discussed later. At a burial depth of 10 cm, the pipes are fairly distinguishable from the background by the human eye. However, at a depth of 15 cm, the images are harder to discern because of the small radius of the pipe, poor medium conditions, and limitations in the principles of electromagnetic wave propagation. This highlights the need for the algorithm to adapt to challenging conditions. The results indicate that, for pipes buried at shallower depths, the model performs reliably. Additionally, it successfully addresses scenarios where there is minimal contrast between the hyperbola and the background. When the workflow is applied to B-scans containing two overlapping hyperbolas, the situation becomes somewhat different. Under the condition that the overall reflection intensity of the hyperbolas is rather low, the enhancement effect of DGFE is less pronounced than that observed for isolated hyperbolas. This is because, according to the superposition principle and the image, the signal amplitude at the overlapping region is higher than that of the surrounding areas, producing a sudden high-intensity peak along the hyperbola tails. Such a peak weakens the algorithm’s distinction between foreground and background features. Nevertheless, the final output still largely preserves the shape of both hyperbolas, although their spread is less uniform than in the case of non-overlapping ones. In addition, the hyperbolas are overall wider, which can be attributed to the global thresholding retaining more signals when the intensity difference between foreground and background is relatively small. This further demonstrates the necessity of considering reflection intensity during the preprocessing stage. 
The results of applying the workflow to B-scans from Dataset 2 further support the point, demonstrating its applicability in cases where the intensity contrast between hyperbolas and the background is low.

Figure 14 presents the results obtained using the algorithms on B-scans acquired with two different antenna frequencies, with parameter values kept unchanged. It can be observed that the hyperbolas were effectively extracted in all cases.

As shown in

Figure 13 and

Figure 14, GRRA, DGFE, and DLTS each fulfill their intended design purposes, and the output images exhibit clear characteristics. GRRA applies a Fourier domain filter with a vertical DC damper, effectively removing horizontal features, such as ground reflection strips. However, this comes at the cost of enhancing other vertically periodic features, especially the multiple-like curved strips beneath the target hyperbola. DGFE is designed to mitigate the negative effects of GRRA and further enhance the intensity of the target hyperbola. As a result, other components in the images become darker as the target hyperbola becomes brighter. This effectiveness is also clearly demonstrated in the more complex subsurface conditions of Dataset 2, where DGFE effectively suppresses clutter intensity. Despite this, the tails of the hyperbola remain insufficiently bright and can be easily missed by a global threshold. This highlights the importance of DLTS, which completes the hyperbola by preserving its shape. The proposed model demonstrates its effectiveness in extracting the target hyperbola from the raw B-scan while maintaining its shape. Additionally, to assess the speed of the proposed model to meet the demand of edge deployment, 80 B-scans under the same experimental conditions from Dataset 1 and 30 B-scans from Dataset 2 were used as inputs. The runtime statistics, measured on an Intel

® Core™ i7-10700 CPU, are presented in

Table 2, where the image size of Dataset 1 is 300 × 120 (height × width), and that of Dataset 2 is 240 × 257. The results indicate that the model’s algorithms are both swift and stable.

As for algorithm complexity analysis, since the pipeline consists of multiple modules, it is conducted sequentially. Let

S (sample) and

T (trace) denote the height and width of the B-scan image, respectively. (1) GRRA includes a Fourier domain filter, where the two-dimensional fast Fourier transform and its inverse operation both have a complexity on the order of $O(ST\log(ST))$, with an fftshift function on the order of $O(ST)$. Fourier domain filtering features the multiplication of the Fourier spectrum and the transfer matrix, whose complexity is on the order of $O(ST)$. Similarly, Equation (5) is also on the order of $O(ST)$. Therefore, the computational complexity of GRRA is on the order of $O(ST\log(ST))$

. (2) DGFE has two layers of loops. The outer loop moves a two-dimensional window with the size of

for

times, and the inner loop conducts the operations between matrices with the same size of

. Therefore, the computational complexity of DGFE is on the order of

. (3) The thresholding module includes global thresholding and DLTS. The global thresholding is also on

. As for DLTS, its initialization traverses the foreground and builds statistic matrices such as means, minimum and maximum values, and contrasts, with complexity on the order of

. Other contributing computations are the outer and inner loops; the step with the heaviest computation is determining the edge at the start of each outer loop, on the order of

, where

P is the number of pixels contained in the foreground. Therefore, the complexity of thresholding is on the order of

. Since the modules are processed sequentially and without nesting, the computational complexity of our proposed model is on the order of

. The steps with the heaviest computation are filtering in GRRA and image enhancement in DGFE, both containing matrix multiplications and accumulations. As suggested by the complexity analysis and

Table 2, the computational cost is quite acceptable.
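
The operations counted in the GRRA analysis (forward transform, fftshift, multiplication with a transfer matrix, inverse transform) can be sketched as follows. This is only an illustration of a vertical notch through the DC component; the actual GRRA transfer function is not reproduced in this section, and the function name and the `half_width` parameter are hypothetical.

```python
import numpy as np

def suppress_horizontal_features(bscan, half_width=0):
    """Zero a vertical band through the origin of the Fourier plane.

    Horizontal strips are constant along the trace axis, so their energy
    sits at zero horizontal frequency. The mask below is an ASSUMED
    stand-in for the GRRA transfer matrix.
    """
    bscan = np.asarray(bscan, dtype=float)
    n_samples, n_traces = bscan.shape
    spectrum = np.fft.fftshift(np.fft.fft2(bscan))        # O(ST log(ST)) + O(ST)
    mask = np.ones((n_samples, n_traces))
    center = n_traces // 2                                # zero horizontal frequency after fftshift
    mask[:, center - half_width:center + half_width + 1] = 0.0
    filtered = spectrum * mask                            # element-wise product, O(ST)
    return np.real(np.fft.ifft2(np.fft.ifftshift(filtered)))

# A pure horizontal-strip image is removed entirely.
stripes = np.tile(np.arange(8.0)[:, None], (1, 6))
print(np.allclose(suppress_horizontal_features(stripes), 0.0))
```

With `half_width=0` the filter is equivalent to subtracting each row's mean, which makes the link between Fourier-domain filtering and conventional mean subtraction explicit.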

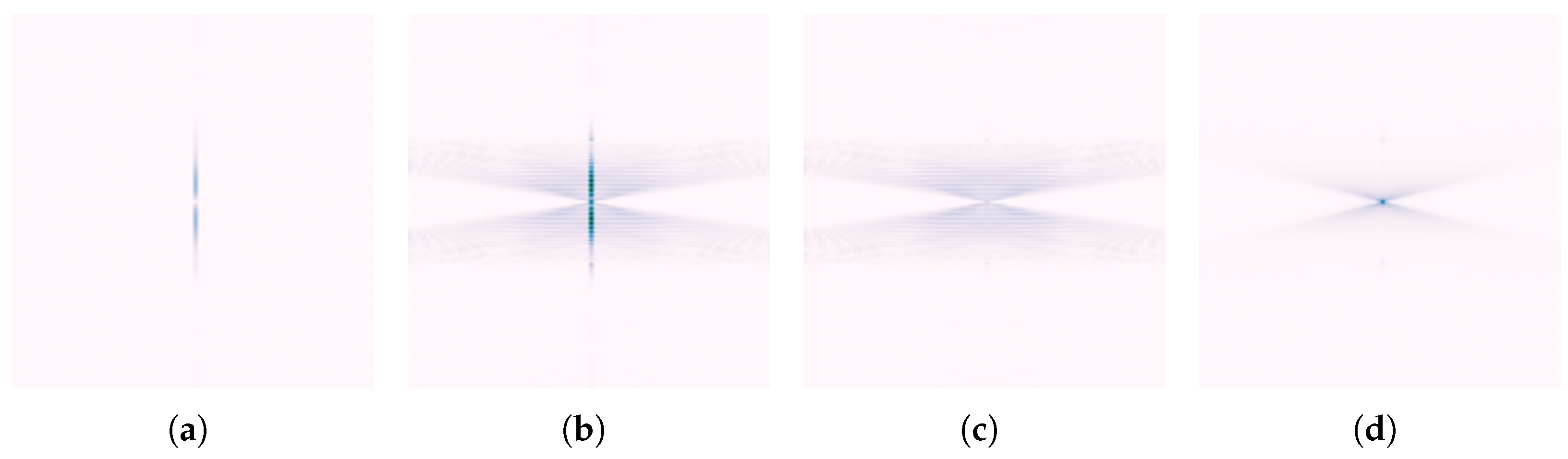

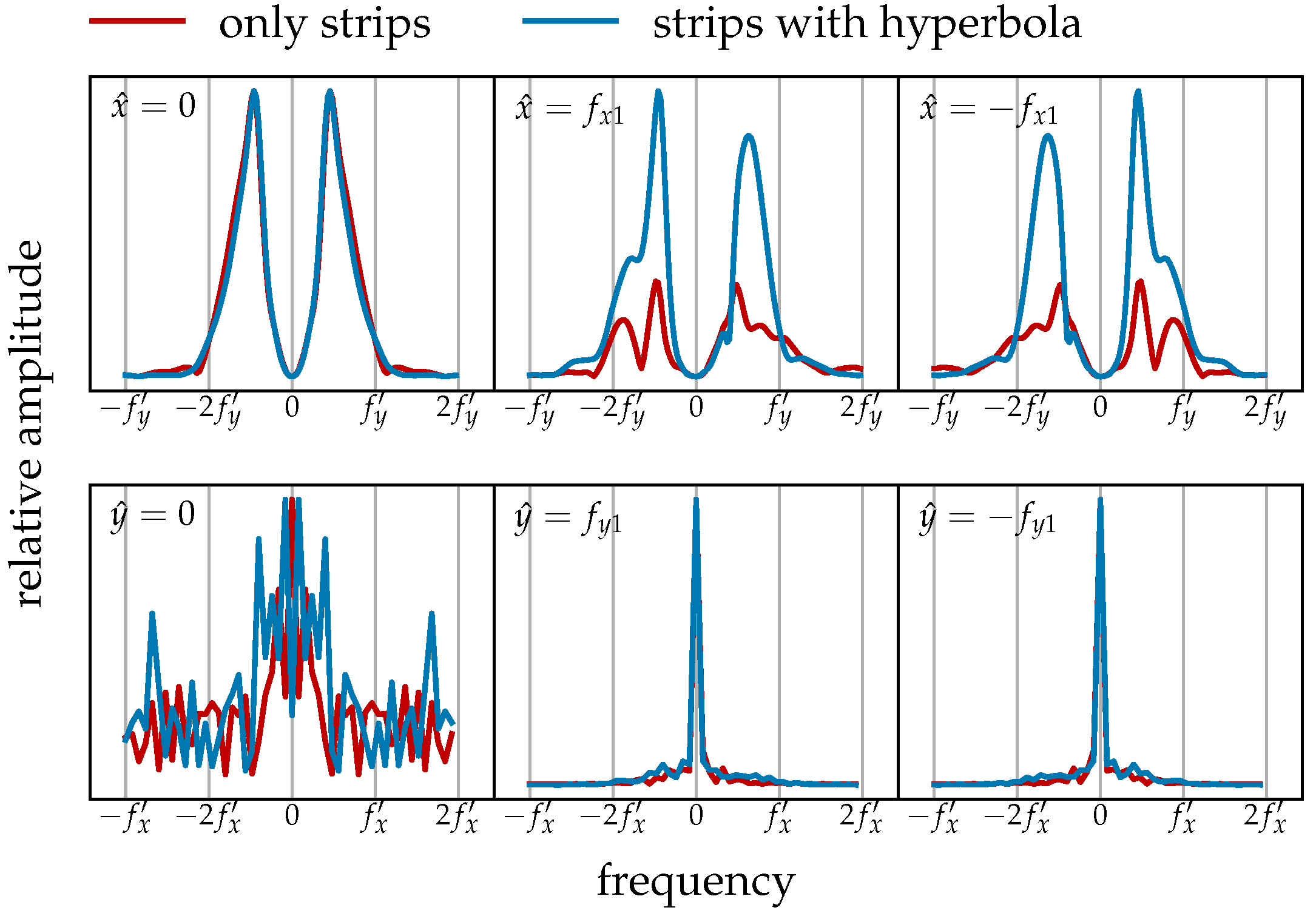

Notably, the extracted hyperbola in

Figure 13c appears thin and slightly distorted. On the basis of the equation of a hyperbola and the continuous form of Equation (

1), where

,

, the two-dimensional Fourier transform

of the hyperbola is

where

denotes the modified Bessel function of the second kind at

[

32]. In the neighborhood of

,

, and it quickly decays to 0 as

increases. The frequency-domain filter used by GRRA passes through the proximity of the origin of the Fourier plane, setting it to 0, where key features of the hyperbola reside. As a result, the shape of the hyperbola is affected, and the less intense the hyperbola is, the more pronounced the influence becomes.

To verify the effectiveness of the algorithm, a hyperbola needs to be fitted from the binary image output by the entire algorithm flow. The extracted hyperbola parameters are then compared with the experimental conditions via Equation (

19).

where t and x refer to the two axes of the image, vertically and horizontally, respectively; R is the outer radius of the pipe; v is the electromagnetic wave speed in the medium, which equals $c/\sqrt{\varepsilon_r}$, where c is the speed of light; $\varepsilon_r$ is the dielectric constant of the medium; and $t_0$ is the round-trip time of the electromagnetic wave, which equals $2h/v$, where h is the burial depth of the pipe. Among these parameters, the burial depth h and the outer radius of the pipe R are important and can be compared with the experimental scenarios. The round-trip time $t_0$ is directly deduced from the position of the topmost pixel of the hyperbola, whereas the outer radius R is deduced from Equation (

19). The selected real-world dataset from Dataset 1 consists of three scenarios, where pipes with outer diameters of 20, 15, and 10 cm are buried at a depth of 10 cm. Each scenario includes 20 different B-scans, which vary owing to perturbations in the positions and angles of survey lines. The fitting method adopts the least-squares method from [

18], as shown in Equation (

20), which is deduced from Equation (

19). This process requires fitting a set of four parameters

from the set of hyperbola pixels in the output binary image.

where

,

, and

.
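
As an illustration of a four-parameter least-squares fit of this kind, the sketch below assumes the commonly used pipe model $(t + 2R/v)^2/(t_0 + 2R/v)^2 - (x - x_0)^2/(v t_0/2 + R)^2 = 1$, which expands to the linear form $t^2 = A x^2 + B x + C t + D$. Whether this matches Equations (19) and (20) term by term is an assumption, since neither equation is reproduced here; the function names and the synthetic values are hypothetical.

```python
import numpy as np

def fit_pipe_hyperbola(x, t):
    """Linear least-squares fit of t^2 = A x^2 + B x + C t + D (ASSUMED model)."""
    x = np.asarray(x, dtype=float)
    t = np.asarray(t, dtype=float)
    design = np.column_stack([x**2, x, t, np.ones_like(x)])
    coeffs, *_ = np.linalg.lstsq(design, t**2, rcond=None)
    A, B, C, D = coeffs
    v = 2.0 / np.sqrt(A)                          # A = 4 / v^2
    x0 = -B / (2.0 * A)                           # B = -2 A x0
    R = -C * v / 4.0                              # C = -4 R / v
    a = np.sqrt(D - A * x0**2 + (C / 2.0) ** 2)   # a = t0 + 2R/v
    t0 = a + C / 2.0                              # round-trip time to the pipe top
    h = v * t0 / 2.0                              # burial depth
    return {"v": v, "x0": x0, "R": R, "t0": t0, "h": h}

def synthetic_hyperbola(x, v, x0, R, h):
    """Noise-free travel times generated from the same assumed model."""
    a = 2.0 * h / v + 2.0 * R / v
    return np.sqrt(a**2 + (4.0 / v**2) * (x - x0) ** 2) - 2.0 * R / v

# Illustrative values: x in m, t in ns, v in m/ns.
x = np.linspace(1.0, 2.0, 41)
t = synthetic_hyperbola(x, v=0.1, x0=1.5, R=0.10, h=0.10)
print(fit_pipe_hyperbola(x, t))
```

In practice the inputs would be the pixel coordinates of the extracted hyperbola converted to physical units, and noise in those coordinates would propagate into the estimates of R and h.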

The algorithm is evaluated with

and a window size of 5, and the validation results are presented in

Table 3. In the table,

(unit: cm) refers to the actual outer radius of the pipe,

(unit: cm) refers to the mean estimated value of the outer radius with the algorithm, and

refers to the mean relative error of estimation; the symbols are similarly applied to

h, which is the burial depth.

Table 3 displays the range of pipe burial depths and outer diameters derived from the fitted hyperbola parameters in successful trials, along with the average relative error. Additionally, among the 20 B-scan images for the pipe with an outer diameter of 10 cm, 3 B-scans failed to produce a complete hyperbola, resulting in truncated tail sections instead. The algorithm performs reasonably well when applied to pipes with outer diameters of 20 and 15 cm, as it can accurately estimate the burial depth and outer radius. However, when it is applied to 10 cm pipes, the performance has a minor decline due to the faint hyperbolic signatures in the raw B-scan images. Furthermore, the estimated burial depth tends to be greater than the actual depth and the outer radii are smaller, which may be attributed to the following factors: (a) In the experimental setup, the process of excavation, pipe placement, and backfilling may cause soil compaction and pipe settlement, leading to an actual burial depth greater than the designed depth. (b) The initial position determined by the filter via Equation (

5) may deviate from the actual initial position of the first ground reflection strip, introducing estimation bias.

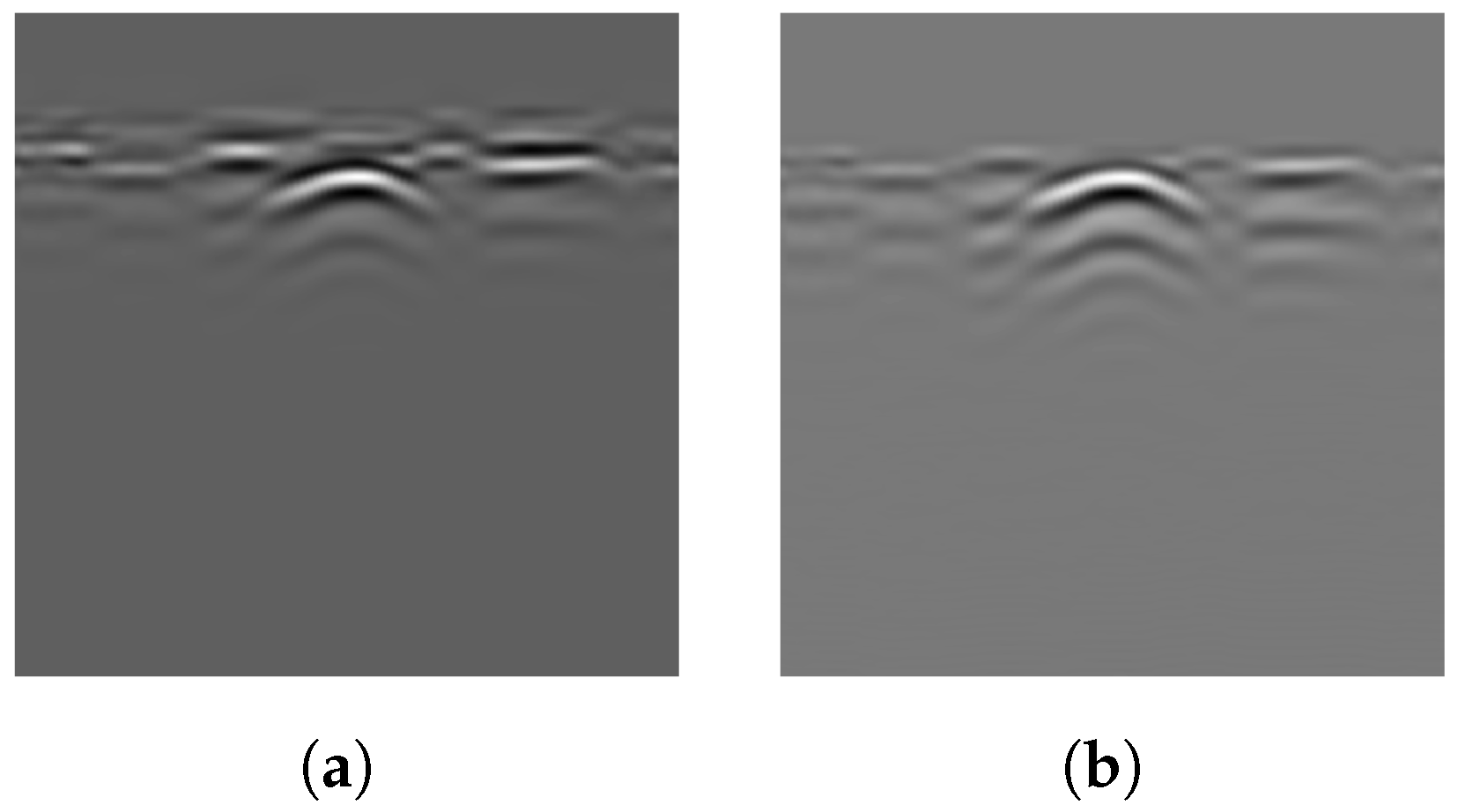

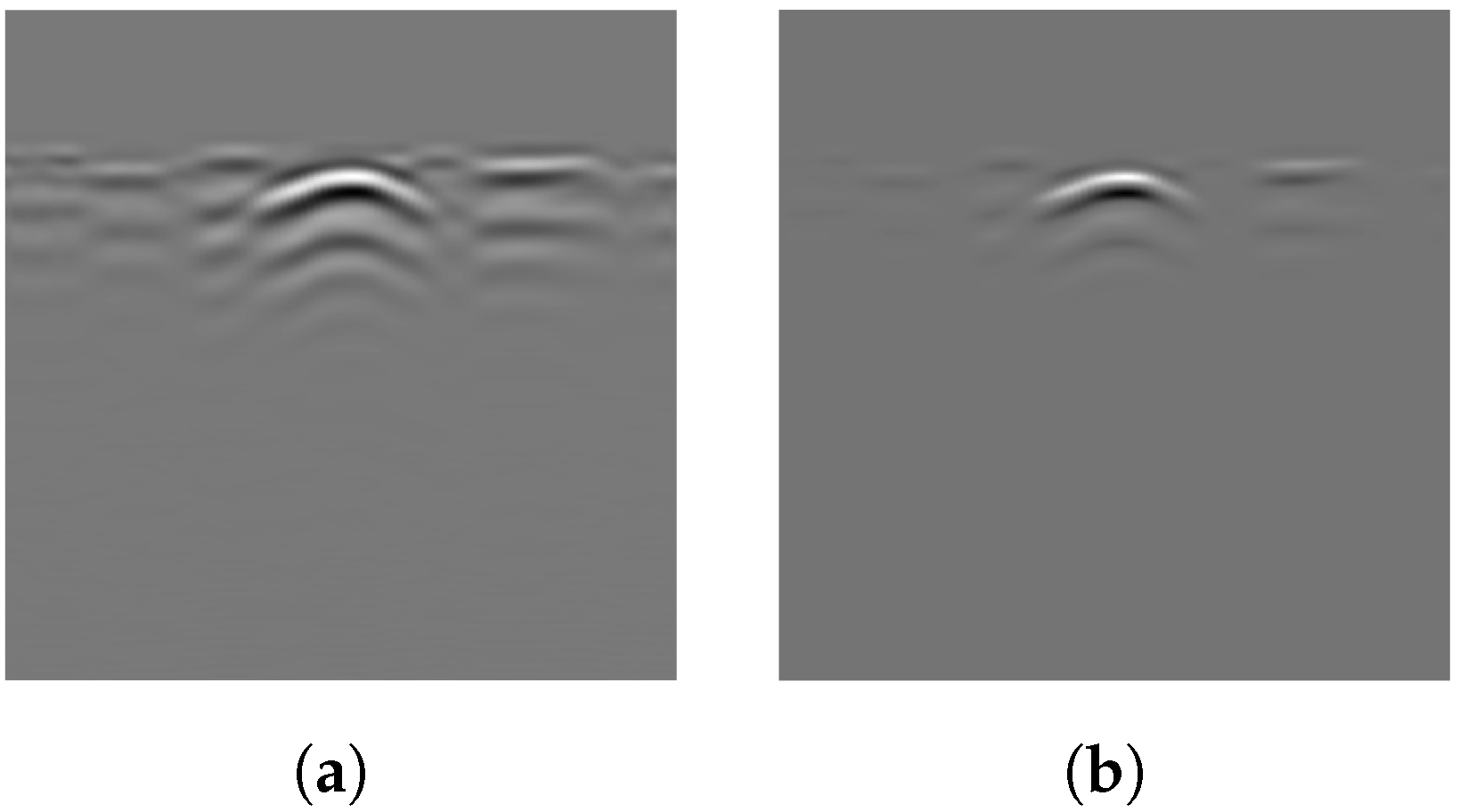

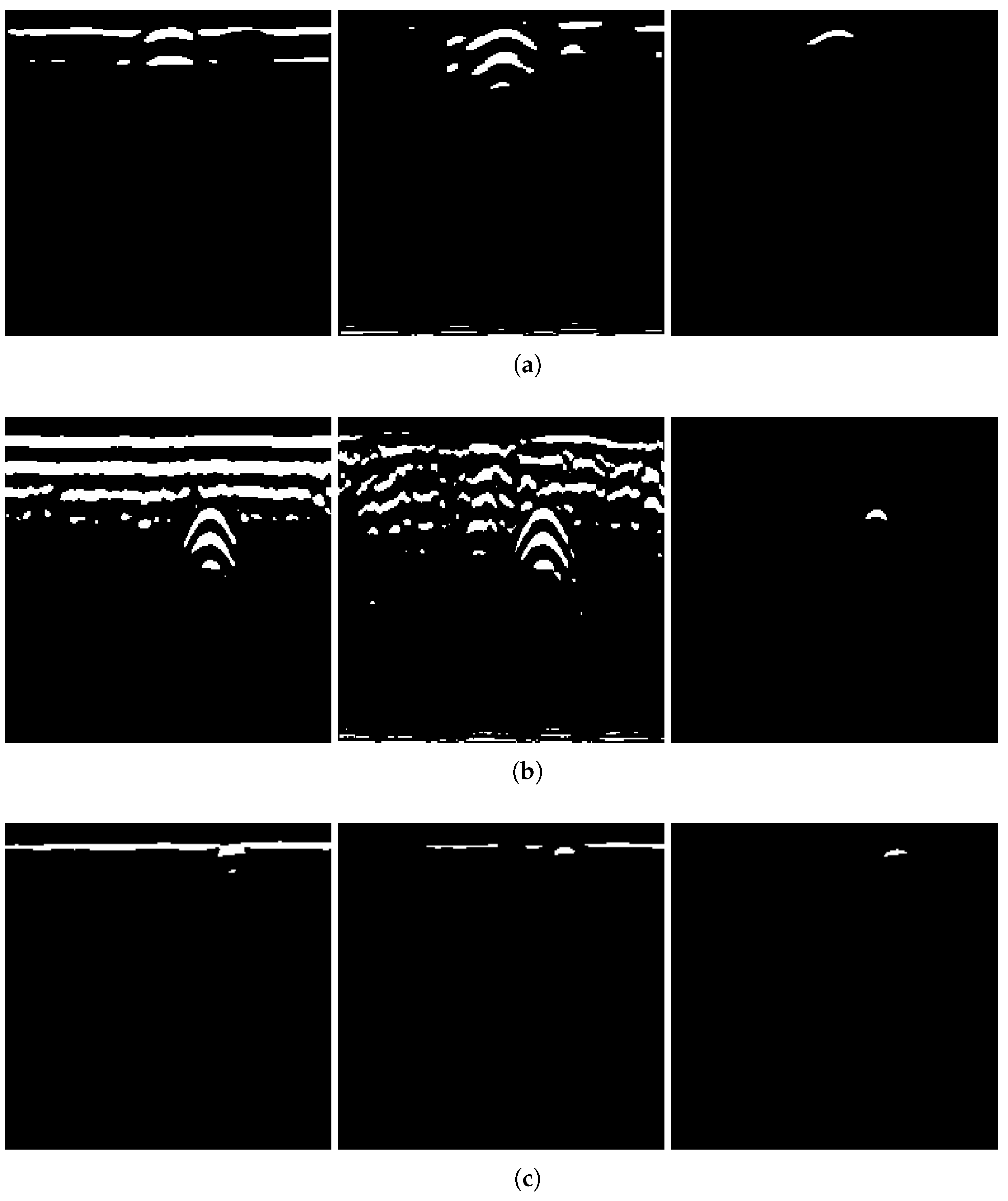

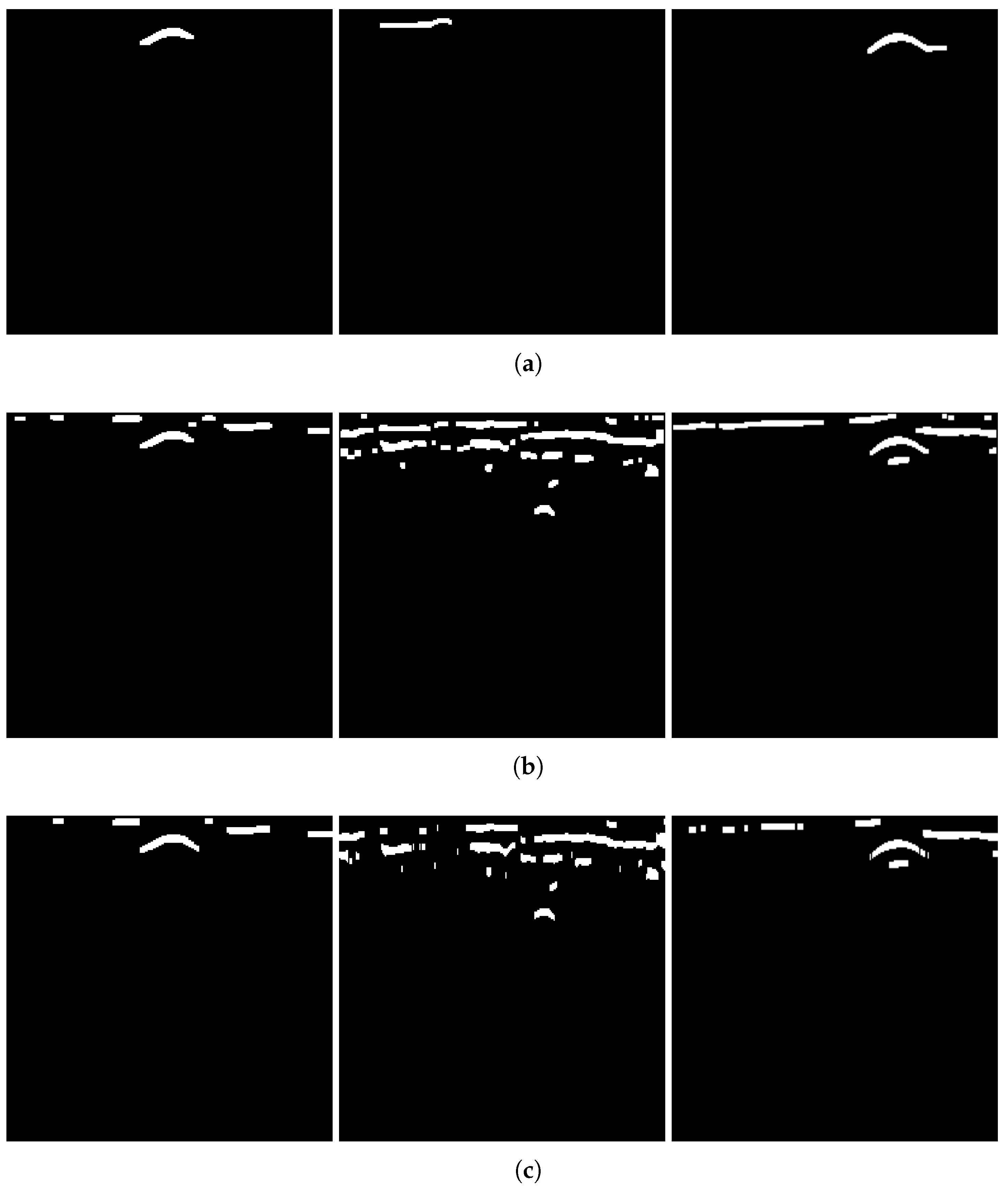

To verify the necessity of the algorithm components—GRRA, DGFE, and the DLTS-based thresholding and segmentation method—in the proposed model, an ablation study was conducted. Specifically, GRRA, DGFE, and DLTS were removed one at a time to form three alternative algorithmic variants: (a) DGFE followed by DLTS-based thresholding and segmentation; (b) GRRA followed by DLTS-based thresholding and segmentation; (c) GRRA, then DGFE, and finally thresholding without DLTS. These three variants were applied to B-scans from both Dataset 1 and Dataset 2, and the corresponding results are shown in

Figure 15. As shown in the figure, the outputs of the three algorithmic variants, each lacking a specific step, are affected to varying degrees by the absence of that step. The extent of the impact depends on the characteristics of the data acquisition environment in each dataset. Variant (a) does not specifically handle the strong ground reflection strips. Since the intensity of the ground reflection is significantly higher than that of the hyperbola, both may be retained during thresholding due to their similar intensities even after gain. In some cases, the hyperbola may even be discarded. Variant (b) lacks dedicated suppression of non-hyperbola interference. This issue is particularly evident in Dataset 2, where the presence of significant clutter and the absence of image enhancement cause the hyperbolas to exhibit intensities comparable to the background. As a result, both may be preserved during thresholding, and in some cases, the hyperbolas may be lost. Variant (c) has already been discussed earlier: the extracted hyperbola suffers from varying degrees of incompleteness, which highlights the necessity of the DLTS step for recovering missing hyperbola components. The ablation study provides evidence for the rationality and necessity of each step in the proposed model. The effectiveness of the overall algorithm relies on the combined contribution of all components, and none of them can be omitted without compromising performance.

4.2. Comparison with Other Algorithms

In terms of comparison with existing preprocessing methods, the overall algorithmic pipeline proposed in this paper performs functions analogous to conventional background removal through the GRRA and DGFE modules. Subsequently, the DLTS-based image thresholding module achieves hyperbola segmentation. In the following subsections, the performance of the proposed method is compared with that of recent approaches in two aspects: (a) ground reflection and background removal and (b) end-to-end performance.

A commonly used method for B-scan image background removal is derived from the running average filter, also known as the moving average filter, which is a classical and widely used technique in the field of signal processing. It computes the average value

of the neighborhood around a given point

in a signal sequence as follows:

where

and

define how many data points before and after the current index

i are included in the average; thus, the denominator

is the total number of points being averaged [

33]. Typically, the running average filter is employed for signal smoothing. However, by subtracting the computed average

from

, background removal can be achieved. This approach is generally referred to as mean subtraction.
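
A minimal sketch of the running average and the resulting mean subtraction is given below. The names `n_before` and `n_after` stand for the two window parameters of Equation (21), and truncating the window at the ends of the signal is an assumption, since the boundary handling is not specified here.

```python
import numpy as np

def running_average(signal, n_before, n_after):
    """Average of each point's neighborhood: n_before points before, n_after after."""
    signal = np.asarray(signal, dtype=float)
    averaged = np.empty_like(signal)
    for i in range(len(signal)):
        start = max(0, i - n_before)               # ASSUMPTION: truncate at the edges
        stop = min(len(signal), i + n_after + 1)
        averaged[i] = signal[start:stop].mean()
    return averaged

def mean_subtraction(signal, n_before, n_after):
    """Background removal: subtract the local average from each point."""
    return np.asarray(signal, dtype=float) - running_average(signal, n_before, n_after)

print(running_average([1, 2, 3, 4, 5], n_before=1, n_after=1))
print(mean_subtraction([7, 7, 7, 7], n_before=2, n_after=2))
```

A constant signal is removed entirely, which is the behavior that makes the filter useful against horizontally uniform ground reflection strips.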

Mean subtraction can be performed one-dimensionally, row by row, as implemented in [17,18,19], according to the following expression:

where

represents the value at

, and

m is the number of signal points; in this case,

in Equation (

21). Alternatively, it can be applied two-dimensionally within a moving local window. In addition, ref. [

18] further employed a filter known as the median filter. By sequentially applying these two filters, it is possible to suppress antenna ringing, eliminate ground reflection strips, and mitigate radiofrequency interference. In the following, the performance of DGFE followed by GRRA will be quantitatively compared with that of the combined approach of the mean subtraction method described by Equation (

22) and median filtering used by [

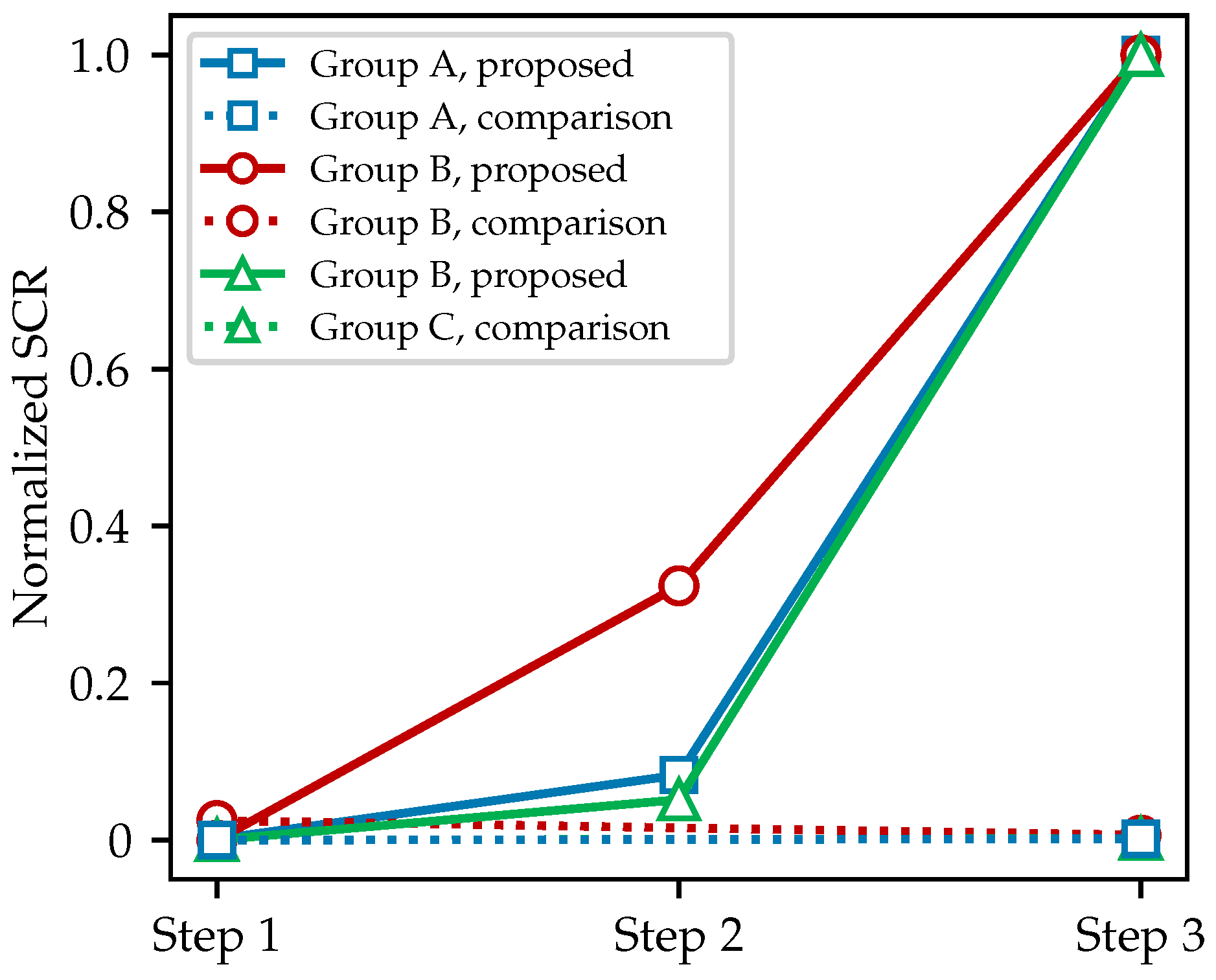

18]. Next, the above three methods for background removal will be applied to the B-scans from Dataset 1 (group A) and Dataset 2 used in the previous part, with the latter including B-scans acquired using both 600 MHz (group B) and 170 MHz (group C) antennas. The target region is defined as a box that extends laterally by one hyperbola width, determined by the result of DLTS, on each side of the target, and vertically from the topmost line of the ground reflection down to two hyperbola heights beneath the target. This ensures that the hyperbola lines are within a reasonable surrounding background region and that the SCR value if not overly diluted by a large background area. In the following discussion, all reported SCR values refer to the average SCR within the selected background region, further averaged over all sampled B-scans.

Figure 16 shows line plots comparing the SCR values at each step of the background removal process, applied to three groups of B-scans. The proposed model uses a sequence of operations, namely, GRRA (step 1), gain introduced by Equation (

5) (step 2), and DGFE (step 3), and is labeled proposed in the legend; the comparison method applies mean subtraction (step 1) followed by a median filter with a window size of 5 (step 3) and is labeled comparison in the legend. To facilitate a fair comparison of the two methods on the same B-scans, all SCR values in this figure were normalized within each group: the minimum value in each group was set to 0, and the maximum to 1. The clear separation between the solid and dashed lines in

Figure 16 indicates that, according to the commonly used SCR metric, the background removal workflow proposed by this paper significantly outperforms the conventional approach. In fact, after intra-group normalization, the contribution of the median filter to SCR improvement becomes nearly imperceptible.
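
The intra-group normalization used for the figure is ordinary min-max scaling, sketched below. The function name and the sample values are illustrative only and are not taken from the experiments.

```python
import numpy as np

def normalize_within_group(scr_values):
    """Map the smallest SCR in a group to 0 and the largest to 1."""
    values = np.asarray(scr_values, dtype=float)
    low, high = values.min(), values.max()
    if high == low:
        return np.zeros_like(values)      # degenerate group: all values identical
    return (values - low) / (high - low)

# Hypothetical SCR values of both methods at all steps within one group.
print(normalize_within_group([2.0, 4.0, 6.0]))
```

Because both methods share the same minimum and maximum within a group, a small absolute gain such as that of the median filter can shrink to a barely visible change after scaling.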

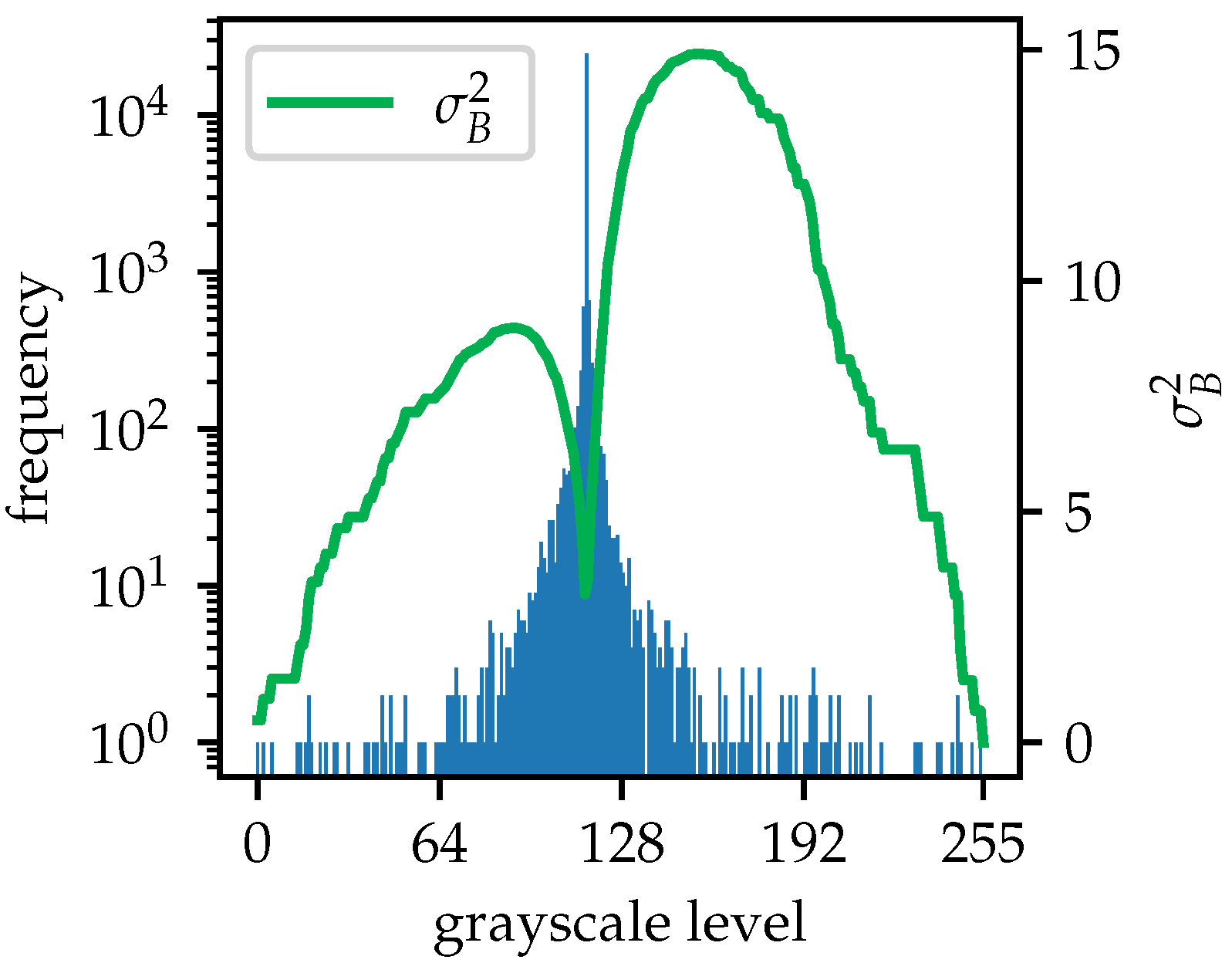

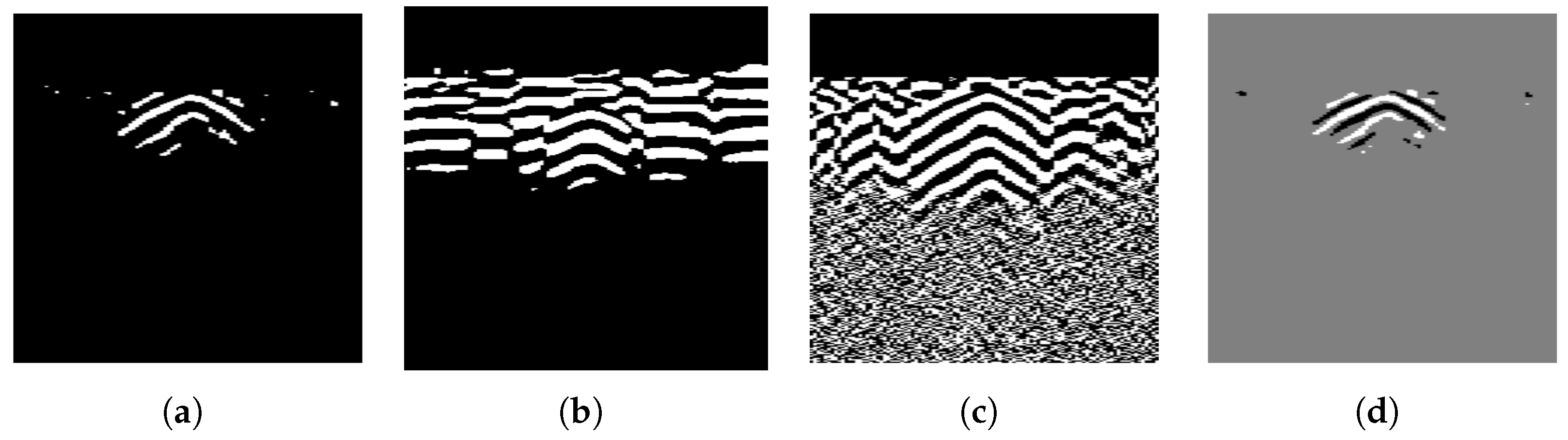

The integration of local thresholding into the thresholding and segmentation process is essential.

Figure 17 shows thresholded images produced by several mono-level and multilevel methods, including the minimum cross-entropy method introduced by Li and Liu [

34], adaptive thresholding based on Canny edge detection and lobe ratio in Dai’s model [

19], the vertical gradient method used in Zhou’s GPR model [

18], and the multilevel Otsu’s method [

35]. The multilevel Otsu’s method divides the image into $n+1$ bins by n thresholds, with $n=2$ applied in

Figure 17d. In this case, pixels with grayscale values greater than the higher threshold are set to white to indicate the probable target hyperbola, values smaller than the lower threshold are set to black, and values between the two thresholds are set to gray. All four methods are clearly unsuitable for B-scans from the dataset of this paper. The outputs of these methods simultaneously retain both the target hyperbola and multiples. Moreover, the number of multiples is often large, appearing chaotically around the target hyperbola, which significantly affects subsequent processing. The key issue lies in two aspects. First, these thresholding methods fail to effectively differentiate between the target hyperbola and its multiples in terms of intensity. In general, the target hyperbola has a higher intensity than its multiples do, but this distinction is not well captured or leveraged. Second, to ensure that the algorithm completes execution within hundreds of milliseconds to a few seconds, commonly used processing algorithms often rely on global thresholding, which is rougher than local thresholding and sacrifices fine-grained image processing to save computational resources. As a result, the difference between the hyperbola and other interference signals is ignored in further analyses.
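
For reference, the two-threshold case of the multilevel Otsu's method can be sketched with an exhaustive search that maximizes the between-class variance of an 8-bit image. This is a plain illustration rather than the implementation used for Figure 17d; the function names are hypothetical, and treating a pixel equal to the lower threshold as black is a convention chosen here.

```python
import numpy as np

def two_threshold_otsu(image):
    """Return (t_low, t_high) maximizing between-class variance for three classes."""
    levels = np.asarray(image).astype(np.uint8).ravel()
    hist = np.bincount(levels, minlength=256)
    prob = hist / hist.sum()
    cum_p = np.cumsum(prob)                      # class probability up to each level
    cum_m = np.cumsum(prob * np.arange(256))     # first moment up to each level
    best_score, best_pair = -1.0, (0, 0)
    for t1 in range(255):
        for t2 in range(t1 + 1, 256):
            weights = (cum_p[t1], cum_p[t2] - cum_p[t1], cum_p[-1] - cum_p[t2])
            moments = (cum_m[t1], cum_m[t2] - cum_m[t1], cum_m[-1] - cum_m[t2])
            if min(weights) <= 0.0:
                continue                         # skip splits with an empty class
            # Maximizing sum(m_k^2 / w_k) is equivalent to maximizing between-class variance.
            score = sum(m * m / w for m, w in zip(moments, weights))
            if score > best_score:
                best_score, best_pair = score, (t1, t2)
    return best_pair

def label_three_levels(image, t_low, t_high):
    """Black at or below t_low, white above t_high, gray in between."""
    image = np.asarray(image)
    labeled = np.full(image.shape, 128, dtype=np.uint8)
    labeled[image <= t_low] = 0
    labeled[image > t_high] = 255
    return labeled

toy = np.array([[10, 10, 100], [100, 200, 200]], dtype=np.uint8)
t_low, t_high = two_threshold_otsu(toy)
print(label_three_levels(toy, t_low, t_high))
```

The search uses only the global histogram, which is why a hyperbola and a multiple of similar gray level end up in the same class regardless of where they lie in the image.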

In fact, retaining unexpected multiples during the thresholding process is a common phenomenon. When Dai et al. applied several clustering-based hyperbola recognition and segmentation algorithms, including the open-scan clustering algorithm (OSCA), the column connection clustering algorithm (C3), and the hyperbolic trend clustering algorithm (HTCA), to real-world B-scans, 1–2 multiples were often observed beneath the target hyperbola [

19]. These multiples were retained along with the hyperbola due to their shared geometric characteristics, such as a downward opening and two extending tails. Although these multiples were eventually discarded on the basis of their positional relationships, they still consumed computational resources during the recognition process. Clustering-based algorithms are widely used, but they share a common limitation. These methods focus primarily on morphological features while almost neglecting signal intensity information. This is precisely the issue that DGFE and DLTS aim to address.

Next, an end-to-end comparison is presented between the proposed algorithms and the three recently introduced cluster-based methods mentioned above. The comparison focuses on two main aspects: (a) the performance differences, as reflected in the processed images, especially the capability to deal with multiple reflections; (b) the differences in runtime. As before, the B-scans used in this comparison are drawn from both Dataset 1 and Dataset 2. Before analyzing the results, it is necessary to clearly describe the toolchain used for each method. Method A follows the sequence (a) mean subtraction and median filter, (b) nonlinear time gain, (c) gradient-based thresholding, (d) opening and closing, and (e) OSCA, as originally used in [

18]. However, the component (c) gradient-based thresholding was replaced with a mono-level Otsu’s method in our implementation, because as shown in

Figure 17c, it does not handle the B-scans in our datasets effectively. Method B follows the sequence (a) mean subtraction, (b) mono-level Otsu’s method, (c) opening and closing, and (d) DCSE, as used in [

9]. Method C follows the sequence (a) mean subtraction, (b) adaptive thresholding based on Canny edge detection and lobe ratio, and (c) HTCA, as used in [

19], but the thresholding method was also replaced with a mono-level Otsu’s method.

Figure 18 presents the results of the three methods applied to three sample B-scans from Dataset 1 and Dataset 2. In many cases, these cluster-based methods are able to identify and extract the target hyperbolas, especially in scenarios with minimal ground reflection and clutter, where the hyperbolas are visually prominent, such as B-scans obtained using low-frequency antennas. However, since all three methods are morphological and cluster-based, they rely on geometric features such as the opening direction, the apex and tail of the hyperbola, and the spatial or intersecting relationships between hyperbola candidates. As a result, point clusters that happen to meet the predefined geometric criteria are often falsely included. These include, for example, horizontal strips with locally convex shapes, hyperbola-like clutter that was not removed, or artifacts caused by multiple reflections beneath real hyperbolas.

To quantitatively evaluate the characteristics of these four methods, they are applied to a group of samples containing 40 B-scans drawn from Dataset 2, which were collected by the 600 MHz antenna. This group of data contains stronger and more apparent clutter compared to Dataset 1 and those from Dataset 2 that were collected by the 170 MHz antenna. Three statistical indicators are computed: (1) whether the output preserves the shape of the hyperbola, expressed as a Boolean variable whose arithmetic sum is denoted as

, to evaluate whether useful information is retained; (2) whether the hyperbola is connected to unrelated clusters, expressed as a Boolean variable whose arithmetic sum is denoted as

; (3) the average number of isolated clusters other than the target hyperbola, denoted as

, to measure the cleanness of the processing results. The results are shown in

Table 4.

The results show that the four methods exhibit comparable abilities to preserve useful information and eliminate unintended noise, except that Method A performs slightly worse. However, both the proposed method and Method A achieve significantly higher cleanness in the output results. This quantitative validation is consistent with the preliminary conclusion drawn from the visual inspection of the outputs. The comparison highlights the necessity of DGFE, the target-intensity-based image enhancement in the proposed toolchain, as it effectively suppresses non-target information before morphological processing. However, the morphological methods themselves do not alter the shape of the targets, nor do they introduce or remove pixels unintentionally, which can sometimes occur with DGFE. As a result, these cluster-based methods tend to better preserve the original shape of the hyperbolas, which is an inherent advantage of cluster-based hyperbola extraction approaches. This observation highlights the sensitivity of one method to the specific characteristics of the dataset.

Moreover, methods like OSCA focus solely on the relative spatial relationships among point sets, making them less computationally intensive than DGFE, which requires analysis of global pixel intensity patterns. DCSE further reduces complexity by simplifying point sets into point segments row-wise in a two-dimensional image, resulting in shorter runtimes.

Table 5 compares the average runtime of the four methods on both datasets. It can be observed that all of them are computationally efficient, on the order of tens to hundreds of milliseconds, making them suitable for deployment on edge devices. Of course, beyond analyzing the topological and morphological characteristics of pixel sets, the proposed method also considers their intensity relationships based on spatial positions. This introduces a substantial number of matrix multiplications and accumulations, but leads to better overall performance on the datasets. This result demonstrates that the trade-off we made is worthwhile. After all, the processing speed is still around 10 FPS on a relatively modest CPU. This enables the proposed method to achieve above-average processing quality while still meeting the requirements for edge deployment.

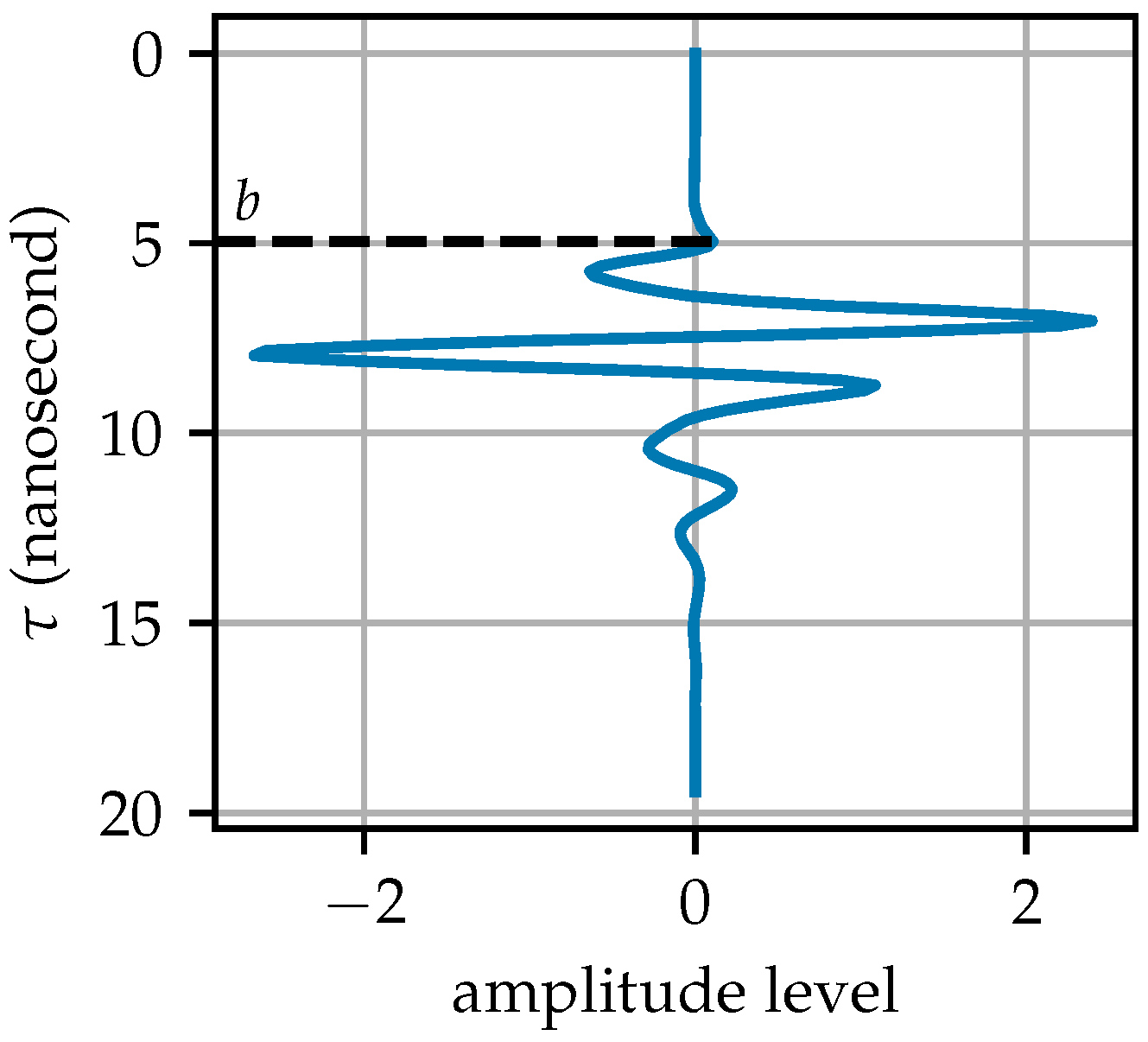

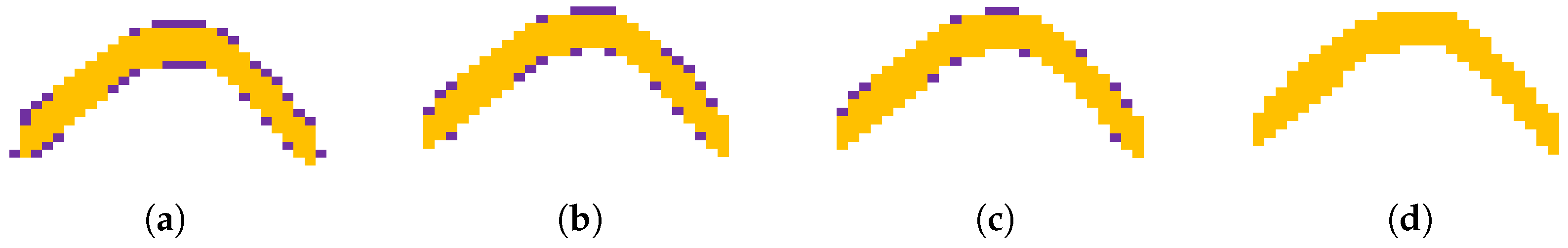

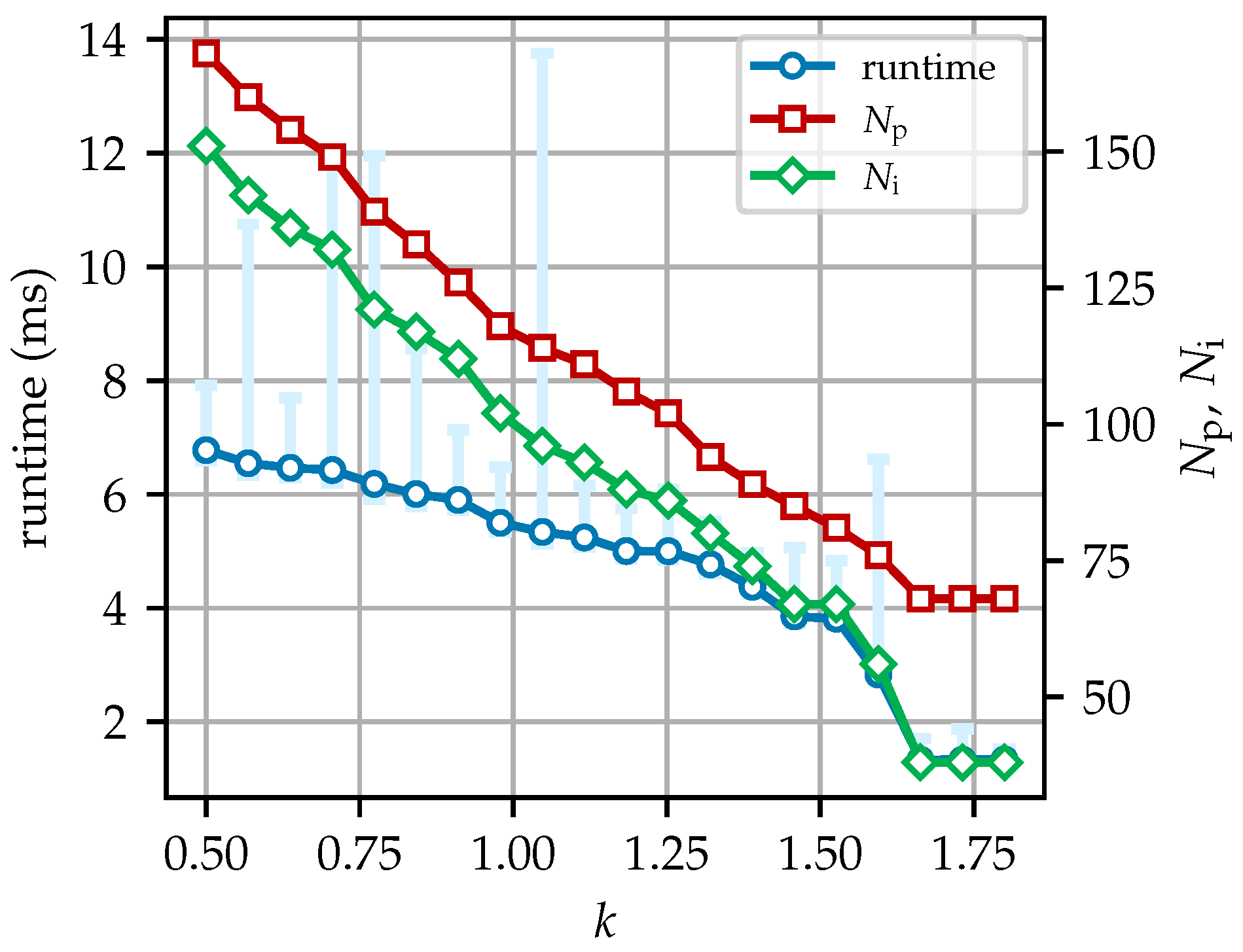

4.3. Parameter Analysis of DLTS

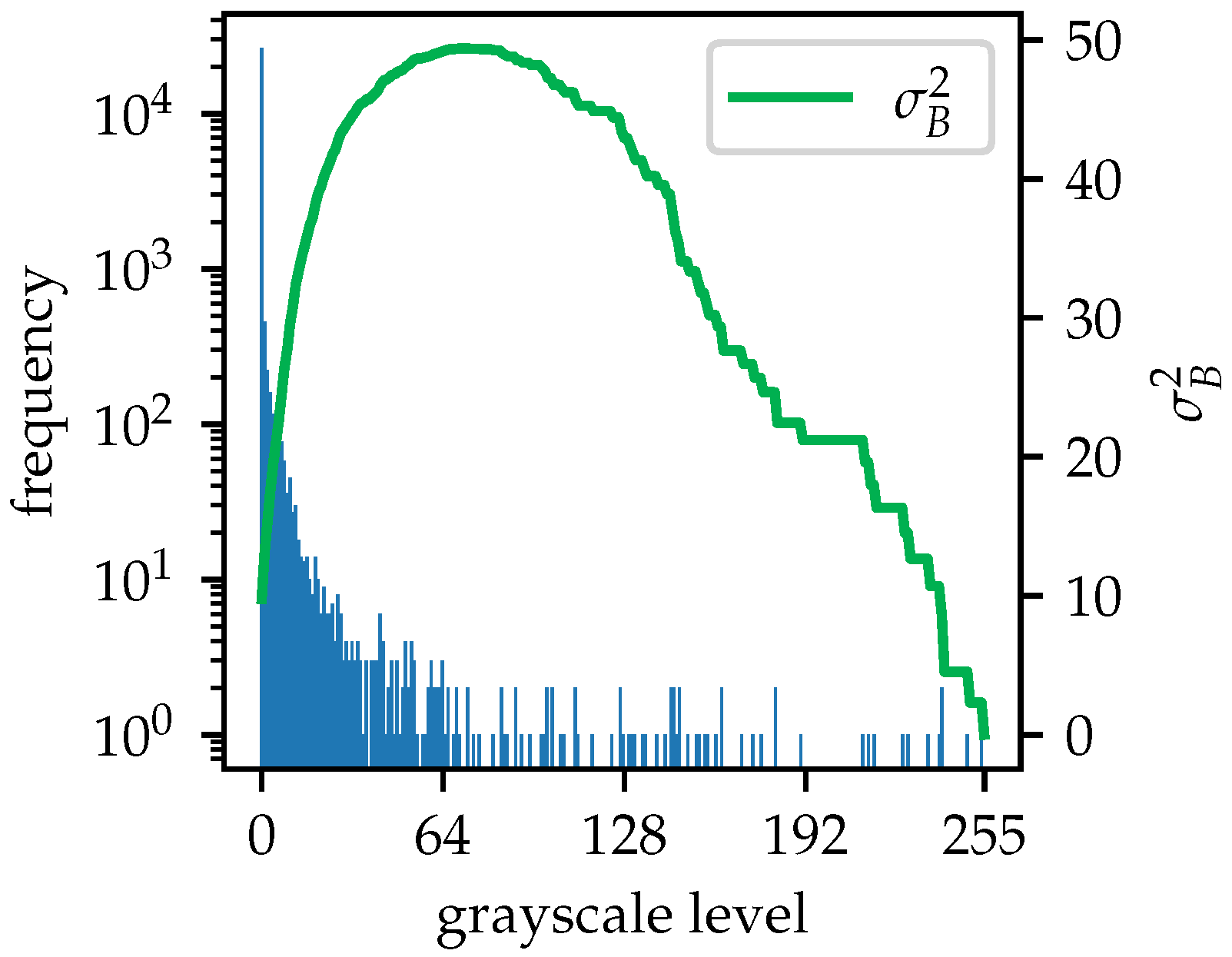

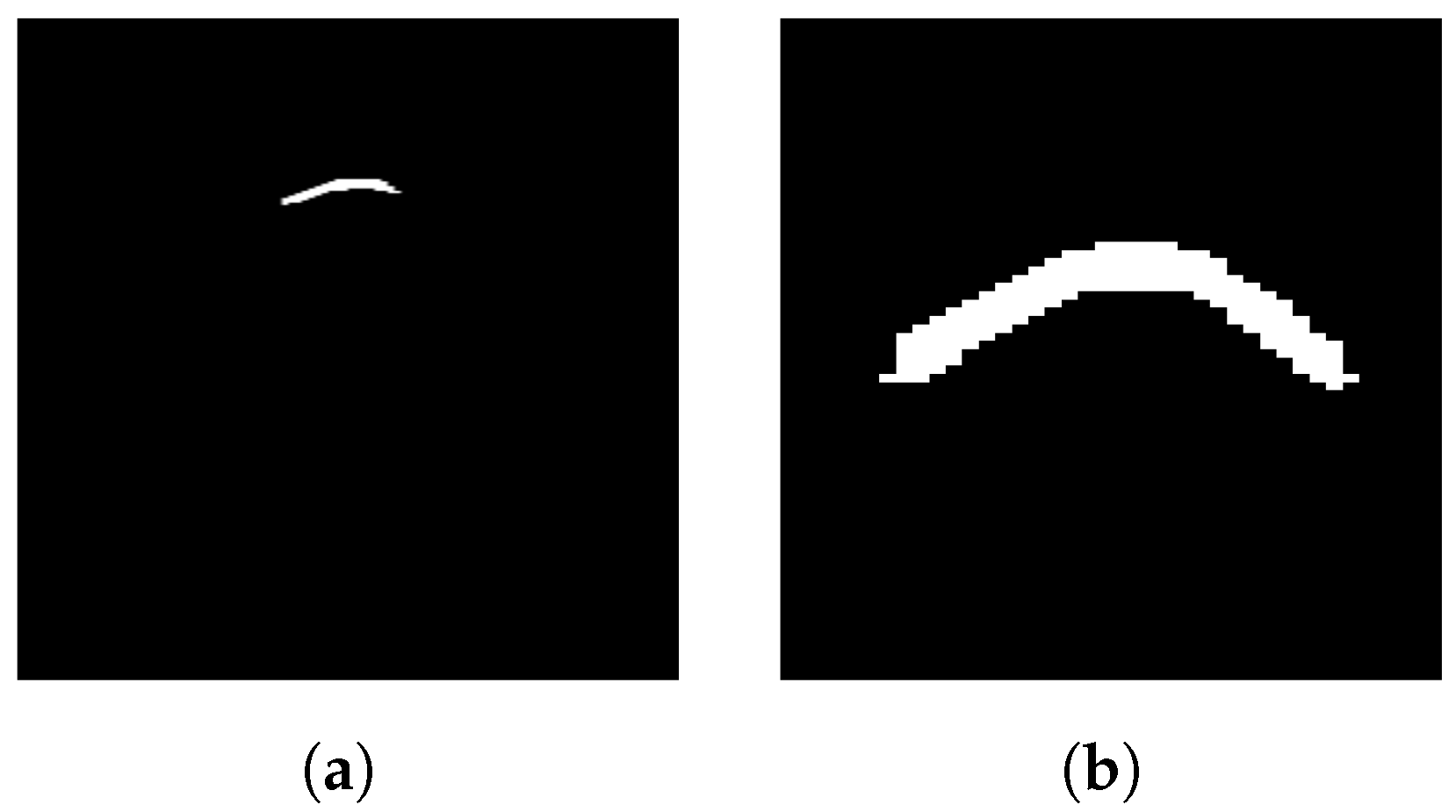

The DLTS results from

Figure 11a, which is the output of Otsu thresholding from a B-scan of a pipe with an outer diameter of 20 cm, using different values of

k and the same window size of 5, are shown in

Figure 19. As

k increases, the local thresholds tend to rise, resulting in fewer pixels being set to 255, according to Equation (

14), which makes the hyperbola appear thinner. As shown in

Figure 20, the value of

k affects not only the size of the hyperbola (i.e., the number of pixels in it,

) but also the runtime and the number of iterations,

, of the sub-DLTS procedure, as outlined in Steps 4 and 5 of the algorithm.

Figure 20 shows a general reduction in runtime,

, and

, with minor regional variations. In this figure, computation under the same conditions is conducted 1000 times to highlight runtime fluctuations, which are represented by the light-blue error bars. As is shown, the execution time of DLTS ranges from a few milliseconds to over ten milliseconds. As

k increases, although the runtime can be reduced, with the average decreasing from approximately 7 milliseconds at

to less than 4 milliseconds at

, the hyperbola shape may be compromised, as reflected in the reduction in

. Notably, when

k reaches 1.5 or higher, there is a noticeable decrease in the runtime and

. However, once

k reaches 1.6,

and

no longer decrease. According to Equation (

13), the value of

k controls the threshold for local thresholding. A larger

k results in a higher threshold, leading to fewer retained pixels in the output hyperbola. Consequently, continuously increasing

k may have adverse effects, potentially rendering the algorithm ineffective. Conversely, excessive iterations and fine computations are not always beneficial to the quality of the result, as shown in

Figure 19a, where burrs appear on the hyperbola. In terms of runtime, regardless of the value of

k, the average runtime always remains within a few milliseconds, and the maximum does not exceed 15 milliseconds. Therefore, although different

k values cause significant variations in execution time, from a practical perspective, the impact of

k on the runtime of the algorithm is negligible. Additionally, for many values of

k, the average runtime is only slightly higher than the minimum runtime but significantly lower than the maximum. This right-skewed characteristic is a normal phenomenon, as only a small number of executions experience significantly longer runtimes due to occasional variations in the operating environment. The decreases in

and

halt when

k becomes large enough, indicating that DLTS is no longer effective in completing the hyperbola. In practice, k should be fine-tuned on the basis of the results, starting from a recommended value of no larger than 1.25 to achieve an intact and uniform spread of the hyperbola.
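The monotonic effect of k on the number of retained pixels can be illustrated with a minimal sketch. Equation (13) is not reproduced here, so the sketch assumes a Niblack-style local threshold of the form mean + k × standard deviation over a square window. This form, the function names `local_mean_std` and `retained_pixels`, and the synthetic input are all illustrative assumptions and not the authors' implementation.

```python
import numpy as np

def local_mean_std(img, w):
    """Mean and standard deviation over a w x w window around each pixel."""
    pad = w // 2
    padded = np.pad(img.astype(float), pad, mode="reflect")
    # sliding_window_view yields one w x w patch per original pixel.
    patches = np.lib.stride_tricks.sliding_window_view(padded, (w, w))
    return patches.mean(axis=(-2, -1)), patches.std(axis=(-2, -1))

def retained_pixels(img, k, w=5):
    """Count pixels above an ASSUMED Niblack-style threshold mean + k * std."""
    mean, std = local_mean_std(img, w)
    return int(np.count_nonzero(img > mean + k * std))

rng = np.random.default_rng(0)
img = rng.normal(size=(64, 64))  # synthetic stand-in for a B-scan patch

# Because std >= 0, raising k can only raise the threshold at every pixel,
# so the number of retained pixels never increases with k.
counts = [retained_pixels(img, k) for k in (0.2, 0.6, 1.0, 1.5)]
print(counts)
```

Under this assumed form, the count can only shrink as k grows, which is consistent with the observation that a sufficiently large k leaves nothing further to absorb.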

Clearly, the larger the value of k, the stricter the resulting local threshold becomes. When k increases beyond a certain point, no new pixels are absorbed into the foreground. Conversely, as k decreases, the threshold becomes more lenient. If it drops too low, even background pixels may be incorrectly included, which is highly detrimental and often leads to the failure of the algorithm. Therefore, a robustness and parameter range analysis was conducted to determine the effective range of k for the majority of B-scans. A total of 60 B-scans were sampled: 20 from Dataset 1, 20 from Dataset 2 acquired with the 600 MHz antenna, and 20 from Dataset 2 acquired with the 170 MHz antenna. For each B-scan, the upper bound of k was taken as the point at which no additional foreground pixels were absorbed. In contrast, the lower bound could only be identified through visual inspection, by observing the emergence of clearly non-hyperbola pixels that adversely affected the result. During the evaluation, k was adjusted in steps of 0.01.
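The upper-bound search described above can be sketched as a simple sweep over a grid of k values with a step of 0.01. The function `upper_bound_of_k`, the sweep limits, and the toy absorption rule below are hypothetical. In particular, `toy_absorbed_count` is only a stand-in for one DLTS pass, which is not reproduced here.

```python
import numpy as np

def upper_bound_of_k(absorbed_count, k_start=0.0, k_stop=3.0, step=0.01):
    """Return the first k on the grid at which nothing new is absorbed, or None."""
    n_steps = int(round((k_stop - k_start) / step))
    for i in range(n_steps + 1):
        # Build k from the step index so rounding errors do not accumulate.
        k = round(k_start + i * step, 10)
        if absorbed_count(k) == 0:
            return k
    return None

# Hypothetical stand-in for one DLTS pass: each candidate pixel is described
# by a normalized contrast z and is absorbed into the foreground when z > k.
candidate_z = np.array([0.12, 0.35, 0.58, 0.905])

def toy_absorbed_count(k):
    return int(np.count_nonzero(candidate_z > k))

print(upper_bound_of_k(toy_absorbed_count))
```

The lower bound is not covered by this sketch, since the text identifies it by visual inspection rather than by an automatic criterion.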

Figure 21 shows the results of the robustness and parameter range analysis. To facilitate quantitative interpretation, two dotted horizontal reference lines at

and

are drawn. The results indicate that for B-scans from different datasets, k is effective over a reasonably wide range, with failures observed on only a very small number of B-scans. For Dataset 1, the effective range of k spans from 0.1 to 0.95. For the two subsets of Dataset 2, however, the valid range is narrower, with the upper bound reduced to approximately 0.65. Notably, B-scans acquired with the 170 MHz antenna allow for a slightly higher upper bound. The discrepancy is likely due to the nature of the datasets. Dataset 1 was acquired in a comparatively clean survey environment, an open field with very few small subsurface objects, resulting in minimal clutter and clearly visible hyperbolas. In contrast, Dataset 2 was collected in an urban residential area with realistic and complex disturbance, as well as multilayer pavement and inhomogeneous backfill, leading to more clutter and generally weaker hyperbolas. Additionally, owing to its physical properties, the low-frequency antenna in Dataset 2 is less sensitive to small objects, which results in less clutter than with the higher-frequency antenna. Although the presence of interference narrows the valid range of k, high-frequency antennas under complex urban conditions are representative of typical GPR operations. The reasonably wide effective range of k observed in the Dataset 2 600 MHz subset therefore demonstrates the practical robustness of the algorithm. In practice, based on these results, the recommended range for k is 0.2 to 0.55, safely distant from both bounds to ensure stable performance.

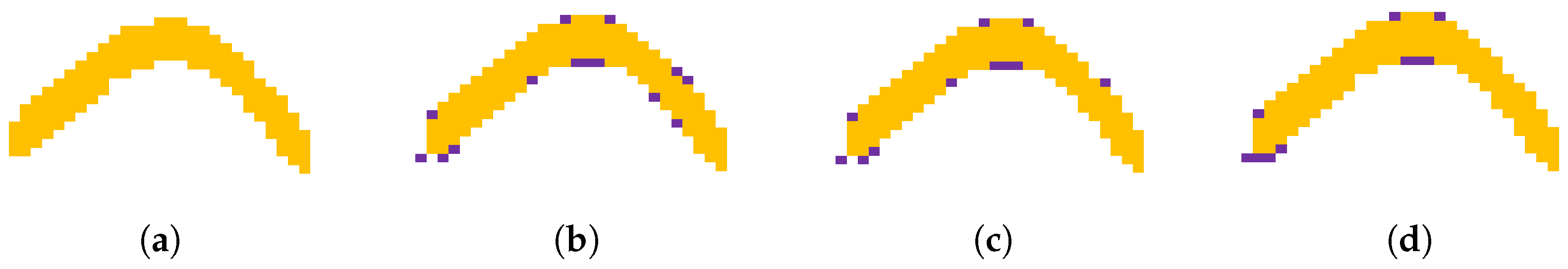

With respect to the window size, Figure 22 shows the DLTS results for window sizes of 3, 5, 7, and 9. The original B-scan in Figure 22 is of a pipe with an outer diameter of 20 cm. The extracted hyperbolas show no significant divergence from one another, differing by only a few pixels, although the hyperbola at a window size of 3 is slightly larger than the others. The average runtime over 1000 executions varies only slightly and non-monotonically with the window size, with values of 6.66, 6.43, 6.46, and 6.72 milliseconds for window sizes of 3, 5, 7, and 9, respectively. This suggests that the choice of window size primarily depends on the size and resolution of the image, and its influence on the algorithm’s performance is relatively minor.
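Runtime figures of this kind, averaged over repeated executions and reported with minimum and maximum, could be collected with a harness along the following lines. This is a sketch only: `dummy_workload` is a placeholder and not DLTS, and the helper names and the reduced run count are assumptions made for illustration.

```python
import statistics
import time

def time_runs(func, n_runs=1000):
    """Return the wall-clock time of each call to func, in milliseconds."""
    times = []
    for _ in range(n_runs):
        t0 = time.perf_counter()
        func()
        times.append((time.perf_counter() - t0) * 1e3)
    return times

def summarize(times):
    """Minimum, mean, and maximum of a list of runtimes."""
    return {"min": min(times), "mean": statistics.fmean(times), "max": max(times)}

def dummy_workload(window):
    # Placeholder computation standing in for one run at a given window size.
    return sum(i * i for i in range(200 * window))

for window in (3, 5, 7, 9):
    stats = summarize(time_runs(lambda: dummy_workload(window), n_runs=200))
    print(window, {name: round(value, 4) for name, value in stats.items()})
```

Reporting the minimum and maximum alongside the mean makes a right-skewed runtime distribution visible, because a few slow runs raise the maximum far more than they raise the mean.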