DAST-GAN: An Adversarial Learning Multimodal Sentiment Analysis Model Based on Dynamic Attention and Spatio-Temporal Fusion

Abstract

1. Introduction

1.1. Key Challenges in Recent MSA Models

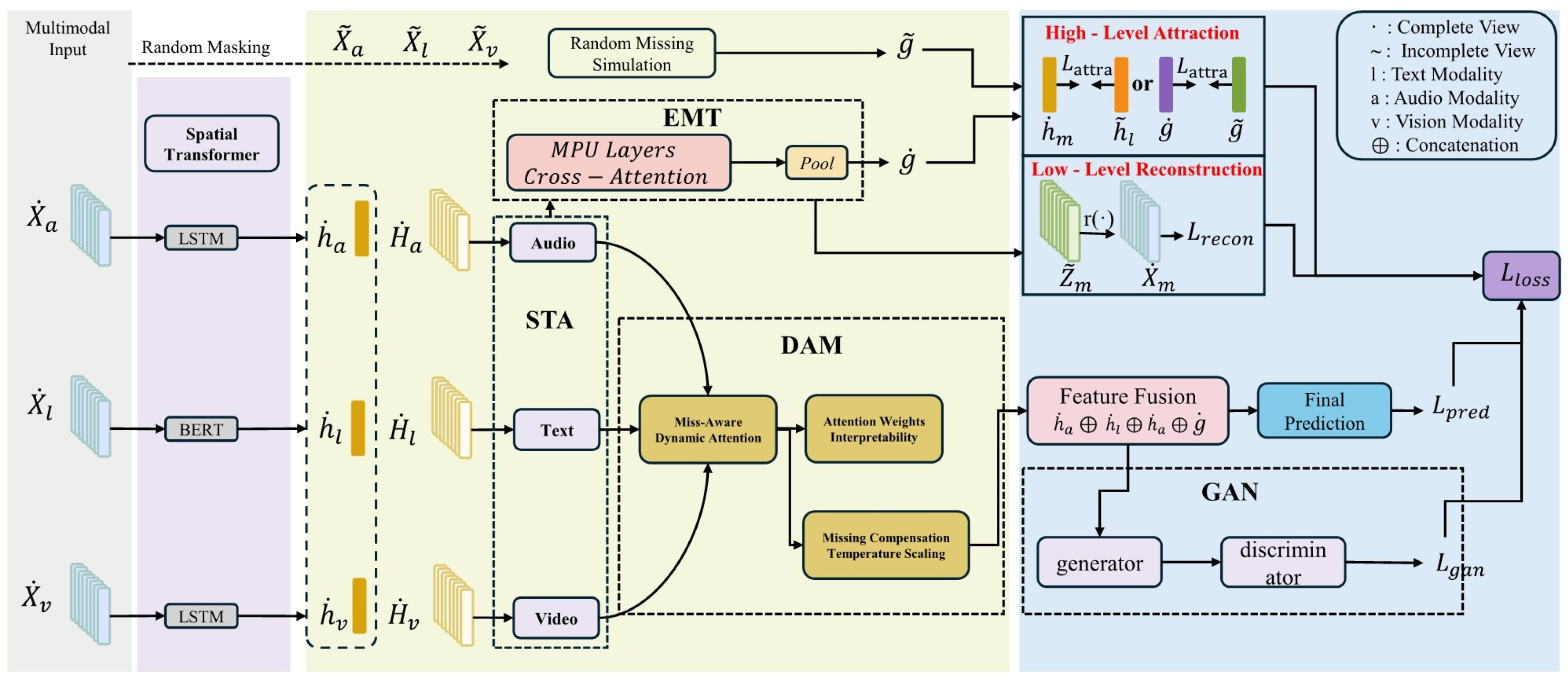

1.2. The Proposed Approach: DAST-GAN

1.3. Contributions

- A novel dynamic attention mechanism (DAM) that moves beyond static fusion by adaptively weighting modalities based on semantic context, improving fusion effectiveness.

- An enhanced spatio-temporal attention (STA) framework that jointly models temporal coherence and cross-modal spatial interactions, leading to more holistic representations.

- A GAN-based adversarial training approach designed specifically to learn robust representations from incomplete data, significantly improving model performance in realistic missing modality scenarios.

- Comprehensive experimental validation demonstrating that DAST-GAN achieves competitive or superior performance compared to a range of strong baseline methods on three benchmark datasets, particularly in incomplete modality settings.

2. Related Work

2.1. Fusion Strategies in Complete Modality Settings

2.2. Robustness in Incomplete Modality Settings

2.3. Research Gaps and Motivations

3. Method

3.1. Problem Formulation

3.2. Modality-Specific Encoders

3.2.1. Text Encoder

- Local Features: The sequence of hidden states from BERT’s final layer, one for each input token. These features capture fine-grained, contextualized semantic details.

- Global Feature: The final hidden state of the special [CLS] token, which BERT uses as an aggregate representation of the whole sequence.
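
A minimal sketch of how the two text features could be separated, assuming the final-layer hidden states arrive as a list of vectors with [CLS] at position 0 and a standard 0/1 padding mask. The function name `split_bert_features` is hypothetical. Keeping [CLS] among the local features is also our assumption, since the text does not say whether it is excluded.

```python
from typing import List, Tuple

Vector = List[float]


def split_bert_features(hidden_states: List[Vector],
                        attention_mask: List[int]) -> Tuple[List[Vector], Vector]:
    """Split final-layer BERT hidden states into local and global features.

    Assumptions (not from the paper): position 0 holds the [CLS] token, and
    padding positions (mask value 0) are removed from the local features.
    """
    if len(hidden_states) != len(attention_mask):
        raise ValueError("hidden_states and attention_mask must have equal length")
    global_feature = hidden_states[0]  # [CLS] summary vector
    # Keep every non-padding token (including [CLS]) as a local feature.
    local_features = [h for h, m in zip(hidden_states, attention_mask) if m == 1]
    return local_features, global_feature


if __name__ == "__main__":
    states = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.0, 0.0]]  # last row is padding
    local, glob = split_bert_features(states, [1, 1, 1, 0])
    print(len(local), glob)  # 3 [0.1, 0.2]
```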

3.2.2. Audio and Video Encoders

- Local Features: The sequence of hidden states produced by the LSTM at each time step, capturing the temporal dynamics of the signal.

- Global Features: The final hidden state of the LSTM, which summarizes the entire sequence for each modality.
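
The local/global distinction can be illustrated with a tiny, dependency-free LSTM: the local features are the hidden states collected at every time step, and the global feature is the last of them. `TinyLSTM` is a hypothetical illustration with random, untrained weights, not the authors' encoder; a real implementation would use a trained, batched LSTM from a deep-learning framework.

```python
import math
import random
from typing import Dict, List, Tuple


def _sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class TinyLSTM:
    """Single-layer LSTM with small random weights (hypothetical illustration only)."""

    def __init__(self, input_dim: int, hidden_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim
        cols = input_dim + hidden_dim  # each gate sees [x_t, h_{t-1}]
        # One weight matrix and bias vector per gate: input, forget, output, candidate.
        self.W: Dict[str, List[List[float]]] = {
            g: [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(hidden_dim)]
            for g in "ifoc"
        }
        self.b: Dict[str, List[float]] = {g: [0.0] * hidden_dim for g in "ifoc"}

    def _affine(self, gate: str, z: List[float]) -> List[float]:
        return [sum(w * v for w, v in zip(row, z)) + bias
                for row, bias in zip(self.W[gate], self.b[gate])]

    def forward(self, xs: List[List[float]]) -> Tuple[List[List[float]], List[float]]:
        """Return (hidden state at every step = local features, last state = global feature)."""
        h = [0.0] * self.hidden_dim
        c = [0.0] * self.hidden_dim
        local: List[List[float]] = []
        for x in xs:
            z = list(x) + h  # concatenate input and previous hidden state
            i = [_sigmoid(v) for v in self._affine("i", z)]
            f = [_sigmoid(v) for v in self._affine("f", z)]
            o = [_sigmoid(v) for v in self._affine("o", z)]
            g = [math.tanh(v) for v in self._affine("c", z)]
            c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
            h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
            local.append(h)
        return local, h
```

Returning both values from one pass mirrors the description: no extra pooling step is needed to obtain the global feature.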

3.3. The DAM-STA Fusion Module

3.3.1. Global Context Embedding

3.3.2. Local Feature Alignment

3.3.3. Cross-Modal Fusion

3.4. Emotion Prediction Module

3.5. The GAN-Enhanced DLFR Module

3.5.1. High-Level Feature Attraction

3.5.2. Low-Level Feature Reconstruction

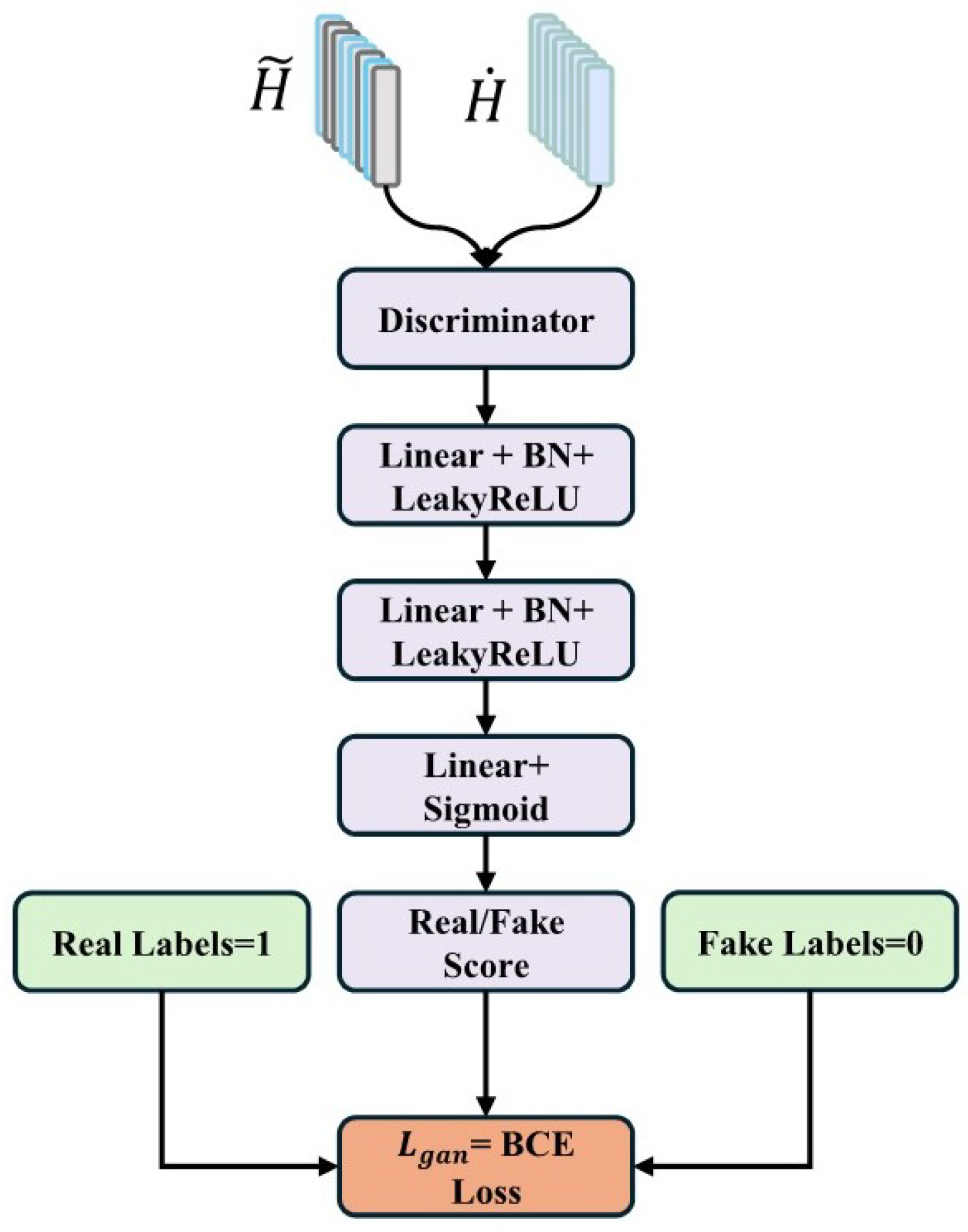

3.5.3. GAN Adversarial Training

3.6. Overall Loss Function for Model Training

3.6.1. Prediction Loss

3.6.2. High-Level Feature Attraction Loss

3.6.3. Low-Level Feature Reconstruction Loss

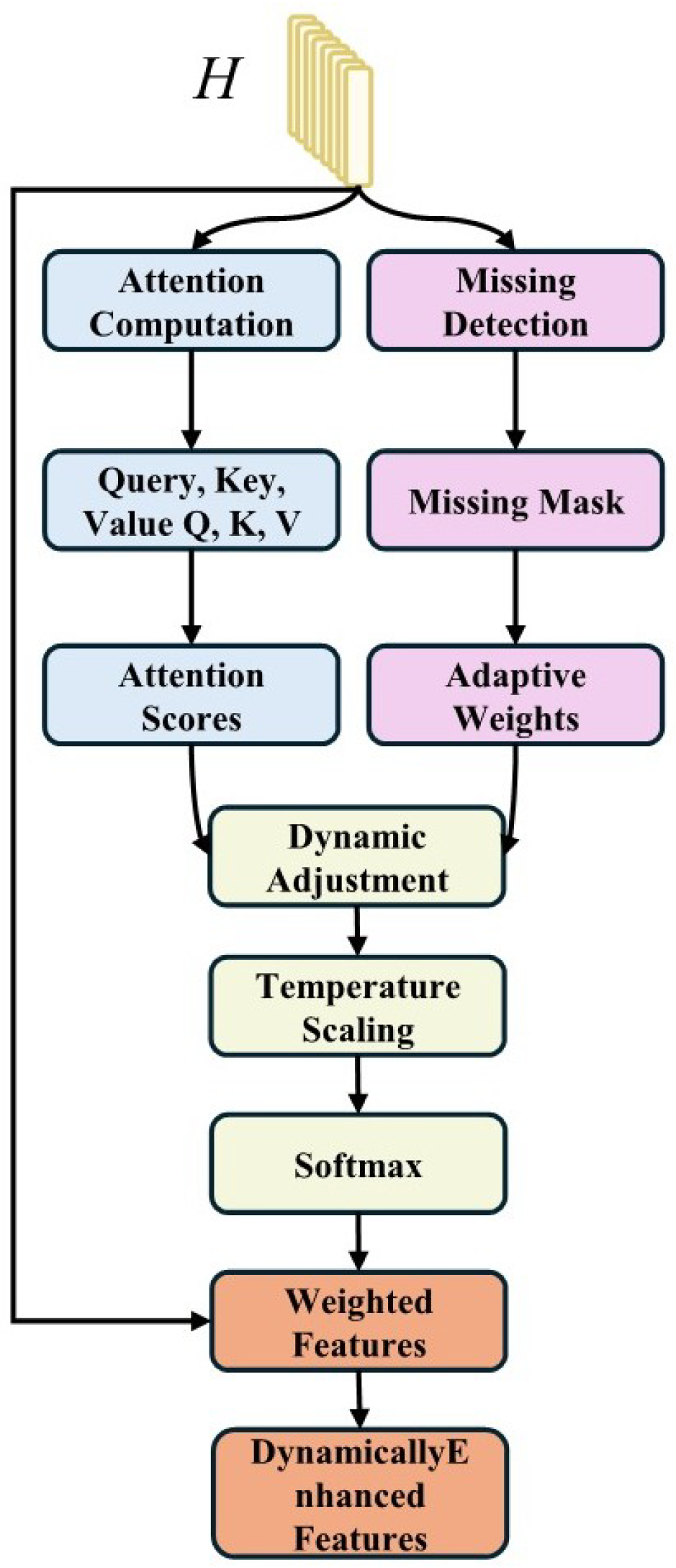

3.6.4. DAM—Technical Details

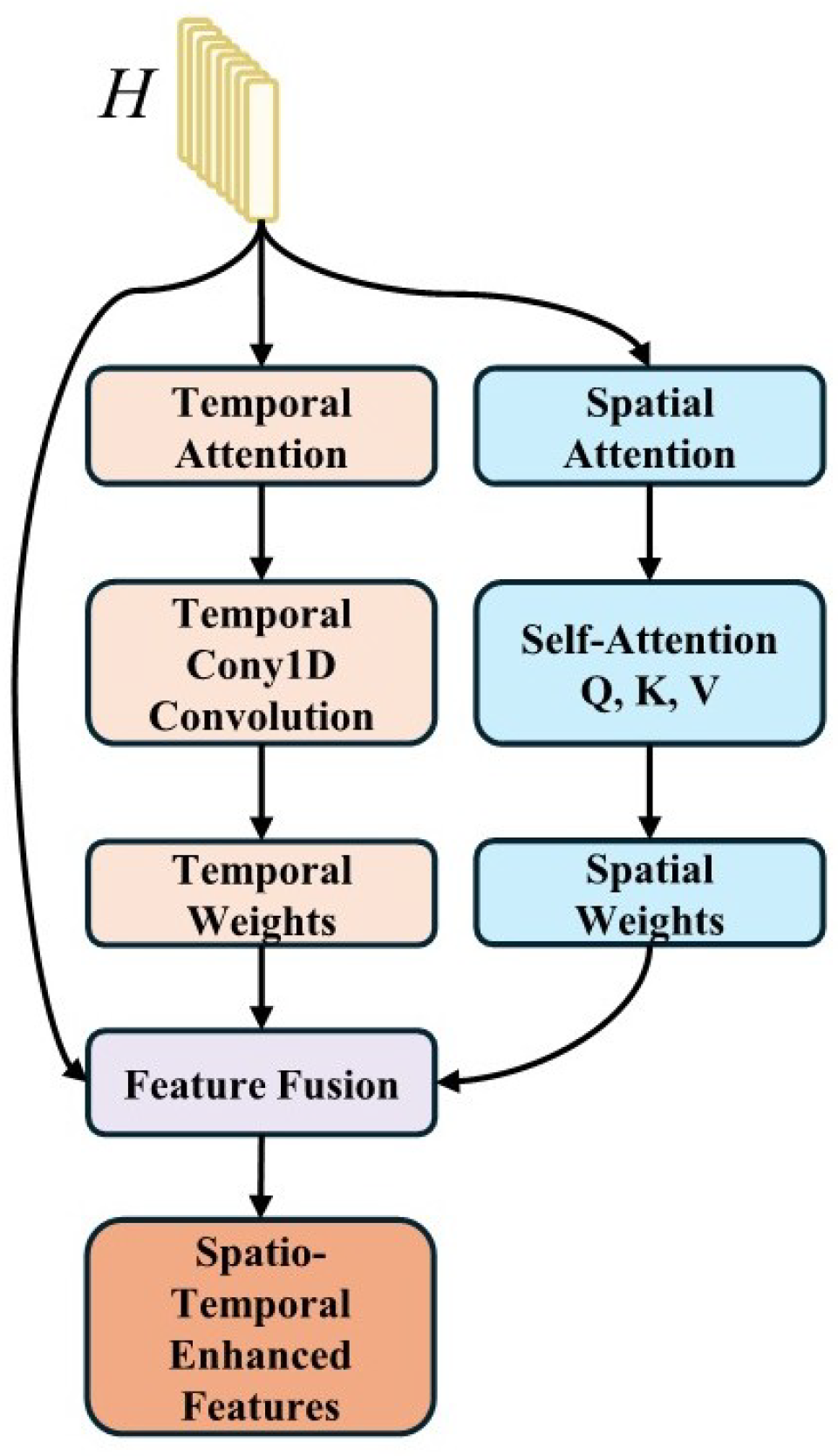

3.6.5. STA—Technical Details

- Temporal Attention

- Spatial Attention

- Spatio-temporal Fusion

3.6.6. Adversarial Loss in GAN

- Generator Design

- Discriminator Design

- Adversarial Loss

3.6.7. Overall Loss Function

3.7. Computational Complexity Analysis

4. Experiments

4.1. Datasets

4.1.1. CMU-MOSI

4.1.2. CMU-MOSEI

4.1.3. CH-SIMS

4.2. Evaluation Metrics

4.3. Experimental Details

4.3.1. Implementation Details

4.3.2. Training Strategy

4.3.3. Hyperparameter Selection

- Loss weights were tuned by grid search over the range [0.1, 2.0]

- Learning rates were selected from

- Hidden dimensions were chosen from based on validation performance

- Dropout rates were tuned in the range [0.0, 0.5] with 0.1 increments
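
The search procedure above can be sketched as an exhaustive grid search. The loss-weight grid points (0.1, 0.5, 1.0, 2.0) are an assumption, because the text gives only the range [0.1, 2.0]; learning rates and hidden dimensions are left out since their candidate sets are not listed. `evaluate` stands for a hypothetical function that trains a model with a given configuration and returns a validation error such as MAE.

```python
import itertools
from typing import Callable, Dict, List, Optional, Sequence, Tuple


def frange(start: float, stop: float, step: float) -> List[float]:
    """Inclusive range of floats, rounded to suppress accumulation error."""
    n = int(round((stop - start) / step))
    return [round(start + k * step, 10) for k in range(n + 1)]


# Hypothetical search space; the loss-weight grid points are an assumption.
SEARCH_SPACE: Dict[str, Sequence[float]] = {
    "loss_weight_1": [0.1, 0.5, 1.0, 2.0],
    "loss_weight_2": [0.1, 0.5, 1.0, 2.0],
    "loss_weight_3": [0.1, 0.5, 1.0, 2.0],
    "dropout": frange(0.0, 0.5, 0.1),  # 0.0, 0.1, ..., 0.5
}


def grid_search(evaluate: Callable[[Dict[str, float]], float],
                space: Dict[str, Sequence[float]]) -> Tuple[Optional[Dict[str, float]], float]:
    """Try every combination and return the one with the lowest validation error."""
    names = list(space)
    best_cfg: Optional[Dict[str, float]] = None
    best_score = float("inf")
    for values in itertools.product(*(space[n] for n in names)):
        cfg = dict(zip(names, values))
        score = evaluate(cfg)  # e.g. validation MAE after training with cfg
        if score < best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score
```

Even this coarse grid has 4 × 4 × 4 × 6 = 384 configurations, which is why the learning rate and hidden size would typically be fixed first or searched separately.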

4.4. Comparison with Baseline Methods

4.4.1. Complete Modality Setting

4.4.2. Incomplete Modality Setting

4.5. Ablation Study

4.5.1. Experimental Design

- DAST-GAN (Full): The complete model, including the DAM, STA, and GAN adversarial training.

- DAST-GAN w/o DAM: Removes the DAM, retaining the attention mechanism with fixed weights.

- DAST-GAN w/o STA: Removes STA, adopting a standard self-attention mechanism.

- DAST-GAN w/o GAN: Removes adversarial training, using only supervised learning loss.

- DAST-GAN w/o DAM+STA: Retains only GAN training, using a basic Transformer structure.

- DAST-GAN w/o DAM+GAN: Retains only the STA.

- DAST-GAN w/o STA+GAN: Retains only the DAM.
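
The seven variants differ only in which of the three components are active, so they can be encoded as boolean flags. This is a hypothetical configuration layout for illustration; the `use_*` names are not taken from the authors' code.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class AblationConfig:
    """Hypothetical flag layout for the ablation variants."""
    use_dam: bool  # False: fixed modality weights instead of dynamic attention
    use_sta: bool  # False: standard self-attention instead of STA
    use_gan: bool  # False: supervised loss only, no discriminator


VARIANTS: Dict[str, AblationConfig] = {
    "DAST-GAN (Full)":      AblationConfig(True, True, True),
    "DAST-GAN w/o DAM":     AblationConfig(False, True, True),
    "DAST-GAN w/o STA":     AblationConfig(True, False, True),
    "DAST-GAN w/o GAN":     AblationConfig(True, True, False),
    "DAST-GAN w/o DAM+STA": AblationConfig(False, False, True),
    "DAST-GAN w/o DAM+GAN": AblationConfig(False, True, False),
    "DAST-GAN w/o STA+GAN": AblationConfig(True, False, False),
}


def active_components(cfg: AblationConfig) -> List[str]:
    """Names of the components that remain switched on."""
    flags = [("DAM", cfg.use_dam), ("STA", cfg.use_sta), ("GAN", cfg.use_gan)]
    return [name for name, on in flags if on]
```

Encoding the variants this way makes it easy to check that no two variants coincide and that the all-off baseline is not among them.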

4.5.2. Detailed Analysis

4.5.3. Comprehensive Modality Missing Analysis

4.6. Visualization and Analysis

4.6.1. Attention Weight Visualization

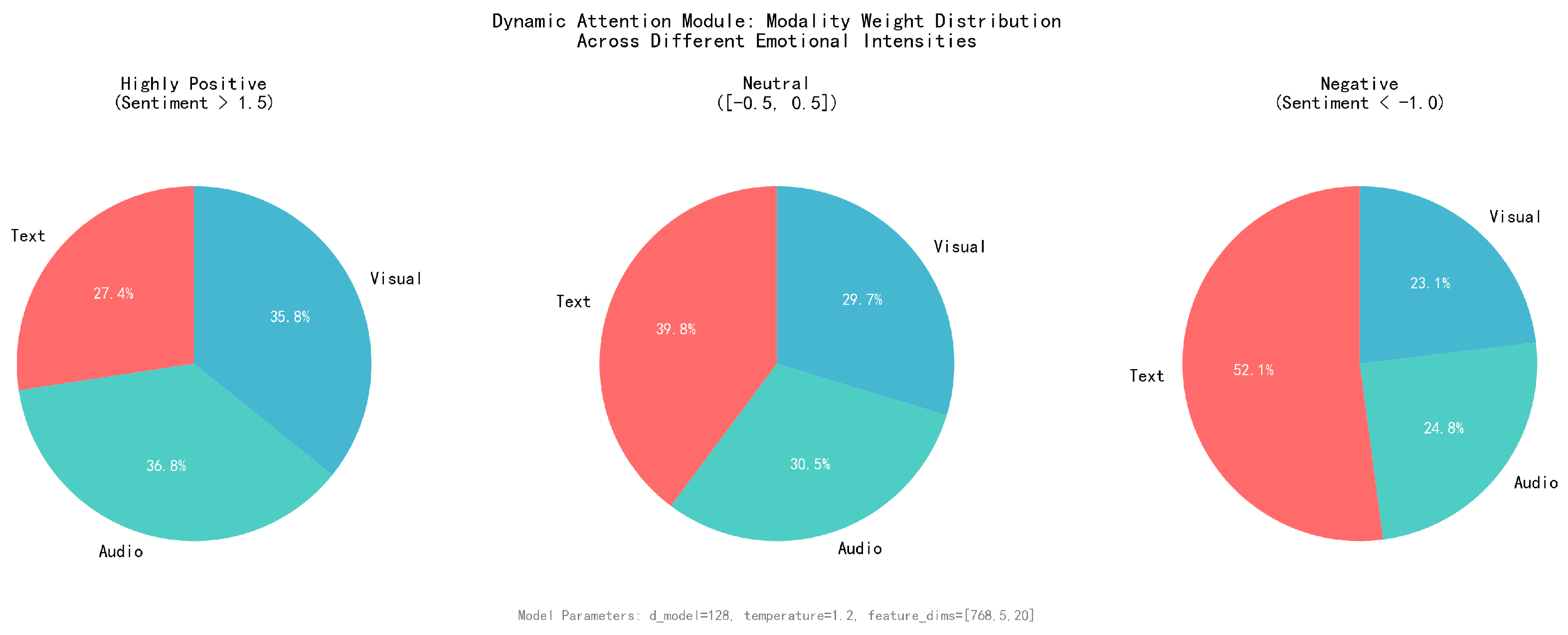

- For highly positive emotions (sentiment > 1.5), the Dynamic Attention Module allocates weights as follows: Text (27.4%), Audio (36.8%), Visual (35.8%). This audio-visual dominant pattern indicates that the model relies on non-verbal cues for positive expressions, consistent with cognitive science findings that positive emotions are often conveyed through prosodic and facial features.

- For negative emotions (sentiment < −1.0), the text modality receives the highest attention weight (52.1%), while Audio (24.8%) and Visual (23.1%) receive substantially lower weights, suggesting that negative sentiment is detected mainly from linguistic content. This pattern is consistent with human-like attention allocation.

- For neutral emotions (sentiment in [−0.5, 0.5]), the distribution is Text (39.8%), Audio (30.5%), Visual (29.7%): text-dominant but relatively balanced, reflecting that ambiguous samples require evidence from all modalities. Unlike fixed-weight approaches that apply uniform attention (33.3% each), the dynamic mechanism adapts its allocation to the input.

- Across emotional contexts, the dynamic weighting adapts to sentiment polarity and to missing-modality compensation factors, while the temperature parameter (1.2) controls how sharply attention is concentrated. This adaptability is a key advantage over the static attention mechanisms commonly used in baseline methods.
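
The role of the temperature can be illustrated with a temperature-scaled softmax over per-modality relevance scores. The paper reports the temperature (1.2) but not how the scores are computed, so the scores here are placeholders and `dynamic_modality_weights` is a hypothetical name. Equal scores recover the uniform 33.3% split of a fixed-weight baseline; a larger temperature flattens the distribution and a smaller one sharpens it.

```python
import math
from typing import Dict


def dynamic_modality_weights(scores: Dict[str, float],
                             temperature: float = 1.2) -> Dict[str, float]:
    """Assumed form: temperature-scaled softmax over per-modality relevance scores."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {k: math.exp((v - top) / temperature) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


if __name__ == "__main__":
    # Placeholder scores: equal scores give the uniform 1/3 split.
    print(dynamic_modality_weights({"text": 0.0, "audio": 0.0, "visual": 0.0}))
    print(dynamic_modality_weights({"text": 2.0, "audio": 0.5, "visual": 0.3}))
```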

4.6.2. Feature Distribution Analysis

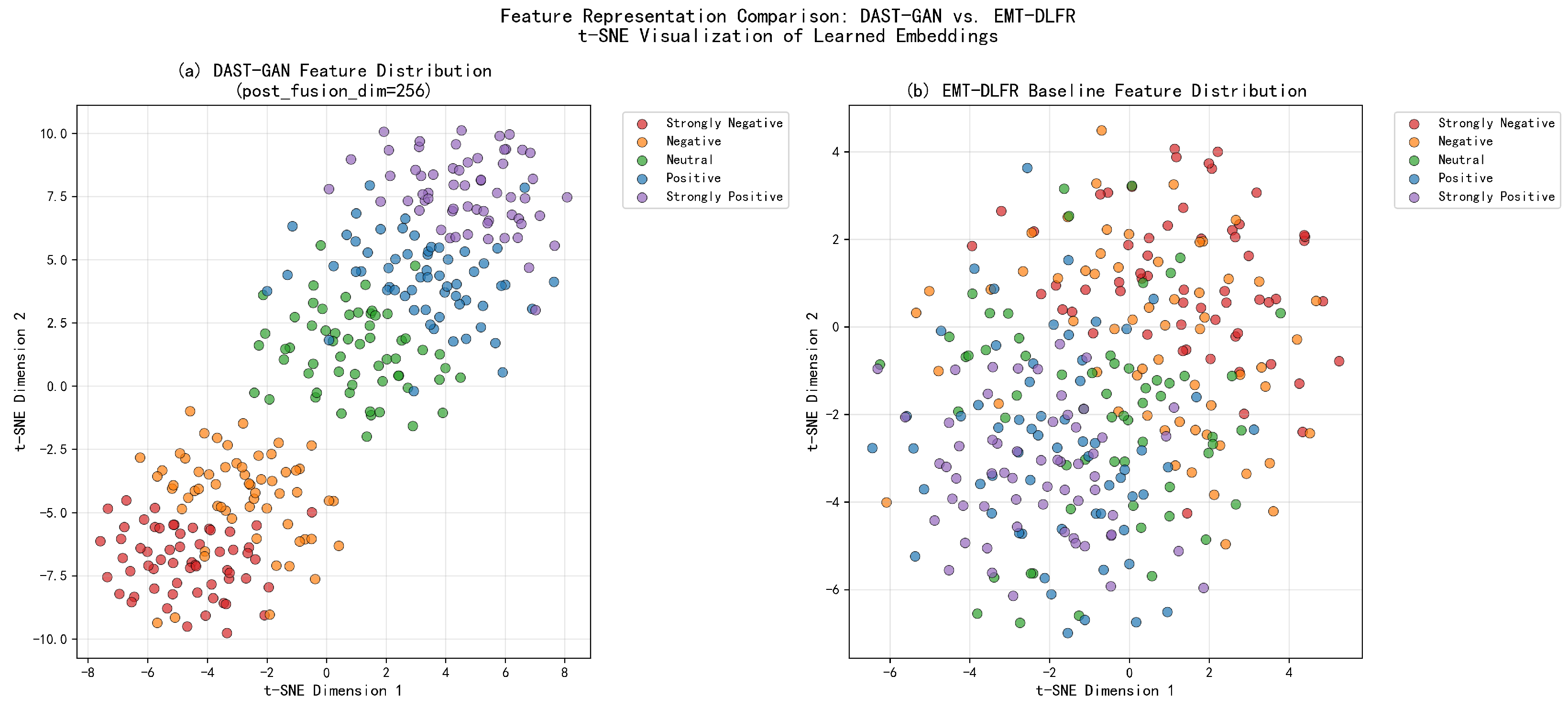

- DAST-GAN produces distinct, well-separated clusters for the five sentiment categories (Strongly Negative, Negative, Neutral, Positive, Strongly Positive), with visibly lower intra-class variance than the EMT-DLFR baseline.

- The sentiment categories are arranged in order from negative (lower left) to positive (upper right), indicating that the DAM-STA fusion mechanism learns semantically meaningful representations in its 256-dimensional feature space.

- DAST-GAN forms compact, roughly circular clusters with larger inter-class margins, reflecting the effectiveness of the GAN-enhanced feature learning process.

- The EMT-DLFR baseline exhibits more scattered and overlapping clusters, particularly in the neutral and boundary regions, whereas DAST-GAN maintains cleaner boundaries between sentiment categories, which we attribute to its enhanced spatio-temporal attention mechanism.

- The robustness of these representations reflects the complementary effects of the Dynamic Attention Module and missing-modality compensation, supporting the claim that the architecture handles both complete and incomplete modality scenarios.

- The improved clustering (visibly tighter intra-class compactness and clearer inter-class separation) is consistent with the 1.5 percentage point Acc-7 gain on CMU-MOSI (from 47.1% to 48.6%): more compact clusters and clearer boundaries allow the classifier to establish more accurate decision boundaries for fine-grained sentiment discrimination.
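
The visual claims about intra-class compactness and inter-class margins could be made quantitative with two simple statistics: the mean squared distance of samples to their class centroid (averaged over classes) and the smallest distance between any two class centroids. This is a sketch of one possible measure, not a procedure reported in the paper; `cluster_quality` is a hypothetical helper meant to be applied to the 256-dimensional features rather than to a 2-D projection.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def _centroid(vectors: List[Sequence[float]]) -> List[float]:
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def cluster_quality(features: List[Sequence[float]],
                    labels: List[str]) -> Tuple[float, float]:
    """Hypothetical helper: (mean intra-class variance, smallest centroid distance).

    Lower intra-class variance means tighter clusters; a larger minimum centroid
    distance means wider inter-class margins.
    """
    groups: Dict[str, List[Sequence[float]]] = defaultdict(list)
    for vec, label in zip(features, labels):
        groups[label].append(vec)
    if len(groups) < 2:
        raise ValueError("need at least two classes")
    centroids = {label: _centroid(vs) for label, vs in groups.items()}
    intra = [sum(math.dist(v, centroids[label]) ** 2 for v in vs) / len(vs)
             for label, vs in groups.items()]
    names = list(centroids)
    margins = [math.dist(centroids[a], centroids[b])
               for i, a in enumerate(names) for b in names[i + 1:]]
    return sum(intra) / len(intra), min(margins)
```

Reporting these two numbers for both DAST-GAN and EMT-DLFR would let readers compare the clusters without relying on a single projection.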

4.6.3. Temporal Attention Analysis

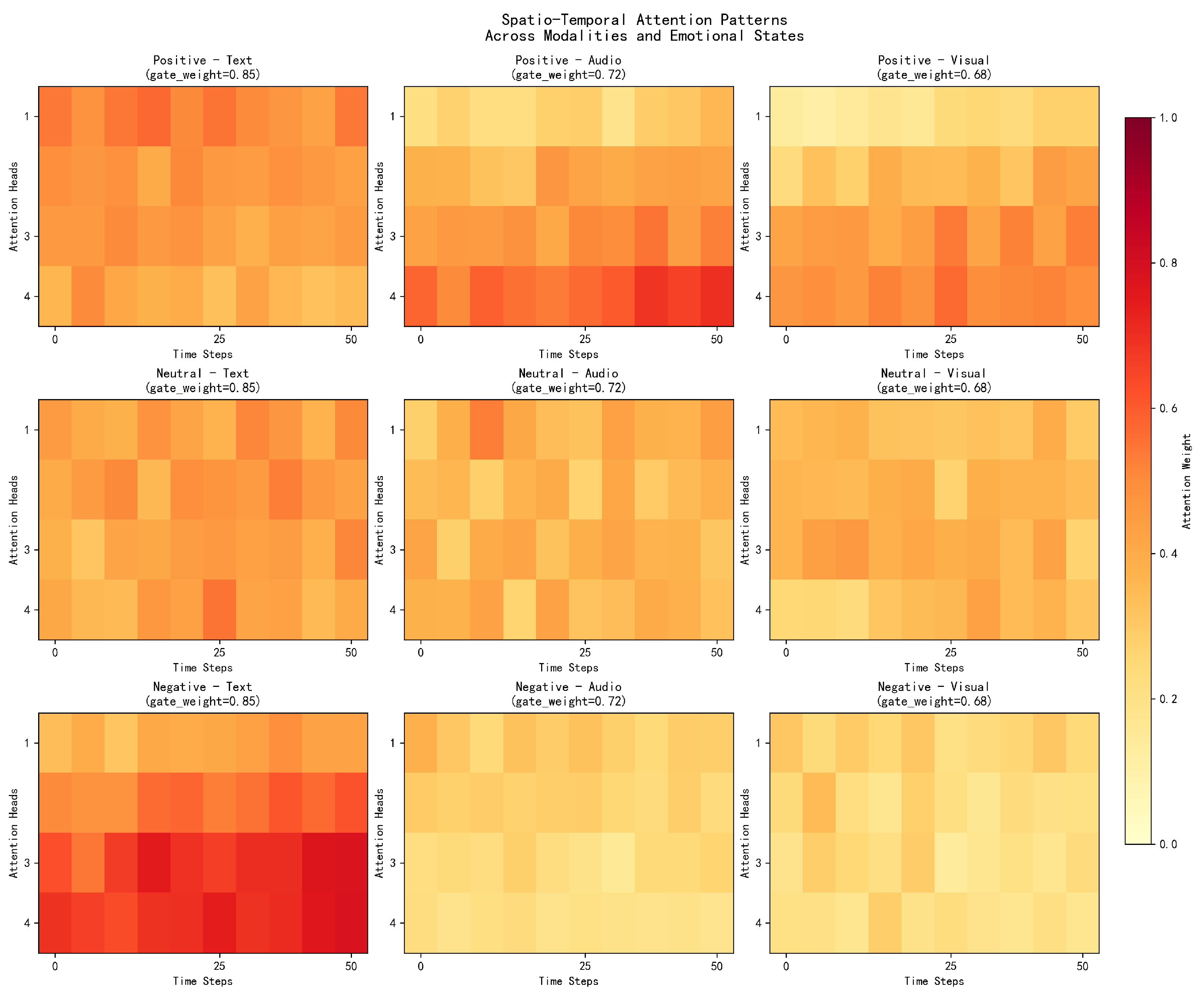

- Positive Emotions: Audio (0.6–0.9) and visual (0.7–0.8) attention remains high throughout the 50-step sequence and is strongest in the middle segments (steps 20–40), reflecting the temporal dynamics of positive emotional expression. This pattern is consistent with expressions that build to a peak mid-sequence.

- Negative Emotions: Text attention is consistently high (0.8–0.9) at all time steps, while audio and visual attention stays much lower (0.2–0.3), reinforcing the text dominance observed for negative sentiment. This temporal consistency suggests that the model captures sustained negative expression patterns.

- Neutral Emotions: Attention is relatively balanced across all modalities, with moderate values around 0.5, indicating that comprehensive multimodal integration is needed when emotional signals are ambiguous. Simple averaging, by contrast, cannot emphasize the subtle cues that distinguish such samples.

- Modality-specific Patterns: Each modality has a distinct temporal signature shaped by its learned gate weights: text attention is sustained, audio attention intensifies progressively, and visual attention peaks at salient emotional expressions. These differentiated patterns illustrate the benefit of the adaptive gate-based design.

- Multi-head Attention Diversity: The four attention heads attend to slightly different temporal aspects (standard deviation of ±0.02), suggesting that they capture diverse spatio-temporal patterns within the CMU-MOSI sequences rather than collapsing onto a single pattern, a failure mode commonly observed in simpler architectures.
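
The head-diversity figure can be read as a standard deviation across heads, computed at each time step. The paper does not specify how the ±0.02 value was obtained, so the per-time-step population standard deviation used by the hypothetical `head_spread` helper is an assumption; averaging its output gives a single summary number.

```python
import statistics
from typing import List


def head_spread(attn: List[List[float]]) -> List[float]:
    """Population standard deviation across heads at each time step (assumed measure).

    attn[h][t] is the attention weight that head h assigns to time step t.
    """
    if not attn:
        raise ValueError("need at least one head")
    n_steps = len(attn[0])
    if any(len(head) != n_steps for head in attn):
        raise ValueError("all heads must cover the same number of time steps")
    return [statistics.pstdev([head[t] for head in attn]) for t in range(n_steps)]


if __name__ == "__main__":
    # Four hypothetical heads over three time steps.
    heads = [[0.50, 0.30, 0.20], [0.52, 0.28, 0.20],
             [0.48, 0.31, 0.21], [0.50, 0.29, 0.21]]
    spread = head_spread(heads)
    print([round(s, 3) for s in spread], round(statistics.mean(spread), 3))
```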

4.6.4. Error Analysis

- Samples with clear emotional expressions across multiple modalities.

- Cases where traditional methods struggle due to modality conflicts.

- Scenarios with partial modality loss (up to 50% missing rate).

- Highly ambiguous samples where even human annotators show disagreement.

- Samples with conflicting emotional cues across modalities.

- Very short utterances with limited contextual information.

4.7. Comparison with Recent Advances

4.7.1. Advantages over Existing Methods

4.7.2. Computational Efficiency Analysis

- Training Time: DAST-GAN requires approximately 15% more training time than EMT [6] because of adversarial training, but about 30% less than MulT [3] owing to its linear complexity. The model typically converges in 35–40 epochs on CMU-MOSI, compared with 30–35 epochs for non-adversarial baselines, and the multi-stage training strategy (5-epoch warm-up, 10-epoch progressive integration) prevents the training instability commonly associated with GANs.

- Inference Speed: The model runs inference faster than TFR-Net [5] while achieving better performance. Because the discriminator is used only during training, it adds no computational cost at inference time.

- Memory Usage: Memory consumption is comparable to that of the baseline methods, with a small increase due to the discriminator network. GPU memory usage is about 8 GB with a batch size of 32 on CMU-MOSI.

- Parameter Efficiency: During inference, DAST-GAN uses only the core model components (encoders, fusion, prediction layers), as the discriminator, projectors, and predictors are training-only modules, resulting in a compact deployment footprint.

- Training Stability: Experiments with three random seeds show consistent performance across runs (a standard deviation of 0.0075 in MAE, about 1% of the mean MAE), indicating that the multi-stage training strategy effectively mitigates the instability commonly associated with adversarial optimization. The model performs robustly across datasets with dataset-specific hyperparameter configurations (e.g., a shared setting for CMU-MOSI/MOSEI and a different one for CH-SIMS), demonstrating adaptability without extensive tuning.
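
One way to realize a 5-epoch warm-up followed by a 10-epoch progressive integration is to scale the adversarial loss by a weight that is zero during warm-up, ramps up linearly, and then stays constant. The linear ramp, the 0-indexed epochs, and the name `adversarial_weight` are assumptions; the paper does not give the exact schedule.

```python
def adversarial_weight(epoch: int, warmup: int = 5, ramp: int = 10,
                       max_weight: float = 1.0) -> float:
    """Multiplier for the adversarial loss at a 0-indexed epoch.

    Hypothetical schedule: 0 during warm-up, a linear increase during the
    progressive-integration phase, then constant at max_weight.
    """
    if epoch < warmup:
        return 0.0
    if epoch < warmup + ramp:
        return max_weight * (epoch - warmup + 1) / ramp
    return max_weight


if __name__ == "__main__":
    for ep in (0, 4, 5, 9, 14, 20):
        print(ep, adversarial_weight(ep))
```

Under this reading, the total loss at each epoch would be the supervised terms plus `adversarial_weight(epoch)` times the generator loss, so the encoders are trained on the prediction task alone before the discriminator's signal is introduced.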

4.7.3. Robustness Analysis

5. Discussion

5.1. Practical Implications and Application Value

- Empathetic Human–Computer Interaction (HCI): In virtual assistants and customer service systems, DAST-GAN works in three steps (input reception → DAM attention redistribution → robust sentiment prediction): (1) it receives user inputs, which may include only partial audio, visual, or text data; (2) when a user turns away from the camera or speaks in a noisy environment, the Dynamic Attention Module (DAM) automatically shifts attention weights toward the more reliable modalities (e.g., from degraded visual features to audio features); (3) it maintains a consistent understanding of sentiment throughout the interaction, enabling the system to adapt in real time and respond more naturally.

- Automated Mental Health Monitoring: In telehealth applications, DAST-GAN processes patient video data through distinct stages (DLFR feature recovery → DAM indicator identification → STA temporal analysis): (1) the GAN-enhanced DLFR module handles any missing or corrupted segments by generating consistent feature representations; (2) the Dynamic Attention Module identifies the most significant emotional indicators for conditions like depression or anxiety, prioritizing specific audio patterns (monotone speech, slow tempo, reduced prosody) when visual data is compromised; (3) the Spatio-Temporal Attention (STA) component analyzes how these emotional patterns evolve over the therapy session, providing clinicians with reliable quantitative insights about patient emotional states.

- Market Research and Brand Analysis: When analyzing user-generated content, DAST-GAN employs a robust processing pipeline: (1) it normalizes input data of inconsistent quality from various sources; (2) the GAN component ensures that features derived from incomplete data match the distribution of features from complete data, creating a consistent analytical framework; (3) it applies multi-stage processing (modality encoding → dynamic weighting → temporal modeling → sentiment classification) to identify consumer opinions. Even when information is missing because of recording issues, DAST-GAN outperforms most of the compared baselines in our incomplete-modality experiments, allowing businesses to extract reliable insights from diverse content types.
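
The redistribution step in the HCI example can be sketched as a softmax restricted to the modalities that are currently available, so that the weight of a missing modality is shared among the remaining ones. The hard availability mask is a simplifying assumption (the DAM may instead use continuous reliability estimates), `redistribute` is a hypothetical name, and the default temperature of 1.2 follows the value reported in Section 4.6.1.

```python
import math
from typing import Dict


def redistribute(scores: Dict[str, float], available: Dict[str, bool],
                 temperature: float = 1.2) -> Dict[str, float]:
    """Hypothetical masked softmax: missing modalities get weight 0."""
    live = {k: v for k, v in scores.items() if available.get(k, False)}
    if not live:
        raise ValueError("at least one modality must be available")
    top = max(live.values())  # stabilise the exponentials
    exps = {k: math.exp((v - top) / temperature) for k, v in live.items()}
    total = sum(exps.values())
    return {k: (exps[k] / total if k in exps else 0.0) for k in scores}


if __name__ == "__main__":
    scores = {"text": 0.4, "audio": 0.2, "visual": 0.6}  # placeholder scores
    # User turns away from the camera: visual is unavailable.
    print(redistribute(scores, {"text": True, "audio": True, "visual": False}))
```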

5.2. Dataset Selection and Model Design Synergy

5.3. Broader Research Implications

- A Shift from Static to Dynamic Fusion: The success of the DAM challenges the prevailing paradigm of static fusion. It provides evidence that adaptive, context-aware fusion is beneficial, and possibly necessary, for capturing the nuances of human emotional expression. This encourages a move towards models that reason dynamically about the relative importance of different modalities.

- A New Paradigm for Representation Robustness: The GAN-enhanced DLFR module reframes the problem of missing data from one of simple reconstruction to one of distributional consistency. By training the model to produce representations from incomplete data that are indistinguishable from those of complete data, it learns features that are inherently more robust. This adversarial approach offers a more principled way to handle data imperfection than traditional imputation or reconstruction-focused methods.
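
The distributional-consistency objective is an instance of the standard GAN game [50]: a discriminator learns to separate representations computed from complete inputs (label 1) from those computed from incomplete inputs (label 0), while the encoder acting as generator tries to make the latter indistinguishable. The following sketch writes both losses in terms of the discriminator's output probabilities; the non-saturating generator loss is a common choice and an assumption here, not a detail confirmed by the paper.

```python
import math
from typing import Sequence

EPS = 1e-12  # guards log(0)


def discriminator_loss(d_complete: Sequence[float],
                       d_incomplete: Sequence[float]) -> float:
    """Binary cross-entropy for D: complete-input representations are 'real' (1),
    incomplete-input representations are 'fake' (0). Inputs are D's probabilities."""
    real = -sum(math.log(p + EPS) for p in d_complete) / len(d_complete)
    fake = -sum(math.log(1.0 - p + EPS) for p in d_incomplete) / len(d_incomplete)
    return real + fake


def generator_loss(d_incomplete: Sequence[float]) -> float:
    """Assumed non-saturating generator loss: the encoder on incomplete input is
    rewarded when D mistakes its representations for complete-input ones."""
    return -sum(math.log(p + EPS) for p in d_incomplete) / len(d_incomplete)
```

When the two representation distributions match, the best the discriminator can do is output 0.5 everywhere, and its loss is then 2 ln 2 ≈ 1.386, a useful sanity check when monitoring adversarial training.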

5.4. Limitations and Future Work

- Computational Cost: The GAN-based training regimen, while effective, increases computational overhead compared with simpler models. Future work could explore lighter-weight adversarial learning techniques, such as knowledge distillation from the discriminator, or more efficient generator-discriminator designs within the GAN framework [50].

- Generalization to In-the-Wild Data: While tested on standard benchmarks [44,45,46], the model’s performance on truly “in-the-wild” data—encompassing a wider range of languages, cultural expressions, and environmental conditions—remains to be validated. Future research should focus on cross-dataset and cross-cultural evaluation to assess its real-world generalizability.

- Interpretability: The attention visualizations provide some insight into the model’s decision-making process. However, the complex interactions within the deep network remain partially opaque. Developing more advanced interpretability methods to explain *why* the model arrives at a particular sentiment prediction is a critical next step for building trust and facilitating error analysis.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Parthasarathy, S.; Sundaram, S. Training strategies to handle missing modalities for audio-visual expression recognition. In Proceedings of the Companion Publication of the 2020 International Conference on Multimodal Interaction, Virtual Event, 25–29 October 2020; pp. 400–404.

2. Hazarika, D.; Li, Y.; Cheng, B.; Zhao, S.; Zimmermann, R.; Poria, S. Analyzing modality robustness in multimodal sentiment analysis. arXiv 2022, arXiv:2205.15465.

3. Tsai, Y.H.H.; Bai, S.; Liang, P.P.; Kolter, J.Z.; Morency, L.P.; Salakhutdinov, R. Multimodal transformer for unaligned multimodal language sequences. In Proceedings of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Volume 2019, p. 6558.

4. Lv, F.; Chen, X.; Huang, Y.; Duan, L.; Lin, G. Progressive modality reinforcement for human multimodal emotion recognition from unaligned multimodal sequences. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 2554–2562.

5. Yuan, Z.; Li, W.; Xu, H.; Yu, W. Transformer-based feature reconstruction network for robust multimodal sentiment analysis. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, 20–24 October 2021; pp. 4400–4407.

6. Sun, L.; Lian, Z.; Liu, B.; Tao, J. Efficient multimodal transformer with dual-level feature restoration for robust multimodal sentiment analysis. IEEE Trans. Affect. Comput. 2023, 15, 309–325.

7. Wang, W.; Huang, Y.; Wang, Y.; Wang, L. Generalized autoencoder: A neural network framework for dimensionality reduction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 490–497.

8. Li, Y.; Su, H.; Shen, X.; Li, W.; Cao, Z.; Niu, S. DailyDialog: A manually labelled multi-turn dialogue dataset. arXiv 2017, arXiv:1710.03957.

9. Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic convolution: Attention over convolution kernels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11030–11039.

10. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

11. Pham, H.; Liang, P.P.; Manzini, T.; Morency, L.P.; Póczos, B. Found in translation: Learning robust joint representations by cyclic translations between modalities. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 6892–6899.

12. Zhao, J.; Li, R.; Jin, Q. Missing modality imagination network for emotion recognition with uncertain missing modalities. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Virtual Event, 1–6 August 2021; pp. 2608–2618.

13. Tang, J.; Li, K.; Jin, X.; Cichocki, A.; Zhao, Q.; Kong, W. CTFN: Hierarchical learning for multimodal sentiment analysis using coupled-translation fusion network. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Virtual Event, 1–6 August 2021; pp. 5301–5311.

14. Morency, L.P.; Mihalcea, R.; Doshi, P. Towards multimodal sentiment analysis: Harvesting opinions from the web. In Proceedings of the 13th International Conference on Multimodal Interfaces, Alicante, Spain, 14–18 November 2011; pp. 169–176.

15. Pérez-Rosas, V.; Mihalcea, R.; Morency, L.P. Utterance-level multimodal sentiment analysis. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Sofia, Bulgaria, 4–9 August 2013; pp. 973–982.

16. Zadeh, A.; Chen, M.; Poria, S.; Cambria, E.; Morency, L.P. Tensor fusion network for multimodal sentiment analysis. arXiv 2017, arXiv:1707.07250.

17. Liu, Z.; Shen, Y.; Lakshminarasimhan, V.B.; Liang, P.P.; Zadeh, A.; Morency, L.P. Efficient low-rank multimodal fusion with modality-specific factors. arXiv 2018, arXiv:1806.00064.

18. Mai, S.; Hu, H.; Xing, S. Divide, conquer and combine: Hierarchical feature fusion network with local and global perspectives for multimodal affective computing. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 481–492.

19. Jin, T.; Huang, S.; Li, Y.; Zhang, Z. Dual low-rank multimodal fusion. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2020, Virtual Event, 16–20 November 2020; pp. 377–387.

20. Zadeh, A.; Liang, P.P.; Mazumder, N.; Poria, S.; Cambria, E.; Morency, L.P. Memory fusion network for multi-view sequential learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32, pp. 518–525.

21. Zadeh, A.; Liang, P.P.; Poria, S.; Vij, P.; Cambria, E.; Morency, L.P. Multi-attention recurrent network for human communication comprehension. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32, pp. 5810–5817.

22. Rajagopalan, S.S.; Morency, L.P.; Baltrusaitis, T.; Goecke, R. Extending long short-term memory for multi-view structured learning. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 338–353.

23. Gu, Y.; Yang, K.; Fu, S.; Chen, S.; Li, X.; Marsic, I. Multimodal affective analysis using hierarchical attention strategy with word-level alignment. In Proceedings of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Volume 2018, pp. 2225–2235.

24. Chen, M.; Wang, S.; Liang, P.P.; Baltrušaitis, T.; Zadeh, A.; Morency, L.P. Multimodal sentiment analysis with word-level fusion and reinforcement learning. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, Glasgow, UK, 13–17 November 2017; pp. 163–171.

25. Wang, Y.; Shen, Y.; Liu, Z.; Liang, P.P.; Zadeh, A.; Morency, L.P. Words can shift: Dynamically adjusting word representations using nonverbal behaviors. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 7216–7223.

26. Rahman, W.; Hasan, M.K.; Lee, S.; Zadeh, A.; Mao, C.; Morency, L.P.; Hoque, E. Integrating multimodal information in large pretrained transformers. In Proceedings of the Association for Computational Linguistics, Virtual Event, 5–10 July 2020; Volume 2020, pp. 2359–2369.

27. Poria, S.; Cambria, E.; Hazarika, D.; Majumder, N.; Zadeh, A.; Morency, L.P. Context-dependent sentiment analysis in user-generated videos. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 873–883.

28. Lian, Z.; Tao, J.; Liu, B.; Huang, J. Conversational emotion analysis via attention mechanisms. arXiv 2019, arXiv:1910.11263.

29. Lian, Z.; Liu, B.; Tao, J. CTNet: Conversational transformer network for emotion recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 985–1000.

30. Rajan, V.; Brutti, A.; Cavallaro, A. Is cross-attention preferable to self-attention for multi-modal emotion recognition? In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 4693–4697.

31. Zadeh, A.; Mao, C.; Shi, K.; Zhang, Y.; Liang, P.P.; Poria, S.; Morency, L.P. Factorized multimodal transformer for multimodal sequential learning. arXiv 2019, arXiv:1911.09826.

32. Liang, T.; Lin, G.; Feng, L.; Zhang, Y.; Lv, F. Attention is not enough: Mitigating the distribution discrepancy in asynchronous multimodal sequence fusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 8148–8156.

33. Sun, L.; Liu, B.; Tao, J.; Lian, Z. Multimodal cross- and self-attention network for speech emotion recognition. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 4275–4279.

34. Liang, P.P.; Liu, Z.; Tsai, Y.H.H.; Zhao, Q.; Salakhutdinov, R.; Morency, L.P. Learning representations from imperfect time series data via tensor rank regularization. arXiv 2019, arXiv:1907.01011.

35. Tran, L.; Liu, X.; Zhou, J.; Jin, R. Missing modalities imputation via cascaded residual autoencoder. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1405–1414.

36. Lian, Z.; Chen, L.; Sun, L.; Liu, B.; Tao, J. GCNet: Graph completion network for incomplete multimodal learning in conversation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 8419–8432.

37. Yu, W.; Xu, H.; Yuan, Z.; Wu, J. Learning modality-specific representations with self-supervised multi-task learning for multimodal sentiment analysis. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual Event, 19–21 May 2021; Volume 35, pp. 10790–10797.

38. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

39. Graves, A. Long short-term memory. In Supervised Sequence Labelling with Recurrent Neural Networks; Springer: Berlin/Heidelberg, Germany, 2012; pp. 37–45.

40. Chen, X.; He, K. Exploring simple siamese representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 15750–15758.

41. Zhang, W.; Chen, X.; Yang, J.; Wang, G. Contrastive learning for multimodal sentiment analysis. Appl. Intell. 2022, 52, 8725–8738.

42. Liu, Z.; Shen, Y.; Lakshminarasimhan, V.B.; Liang, P.P.; Zadeh, A.; Morency, L.P. Self-supervised contrastive learning for multimodal sentiment analysis. In Proceedings of the Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, Virtual Event, 1–6 August 2021; pp. 3642–3653.

43. Wang, H.; Wu, C.; Li, R.; Jia, X. Multimodal sentiment analysis with hierarchical graph contrastive learning. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 429–437.

44. Zadeh, A.; Zellers, R.; Pincus, E.; Morency, L.P. Multimodal sentiment intensity analysis in videos: Facial gestures and verbal messages. IEEE Intell. Syst. 2016, 31, 82–88.

45. Zadeh, A.B.; Liang, P.P.; Poria, S.; Cambria, E.; Morency, L.P. Multimodal language analysis in the wild: CMU-MOSEI dataset and interpretable dynamic fusion graph. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 2236–2246.

46. Yu, W.; Xu, H.; Meng, F.; Zhu, Y.; Ma, Y.; Wu, J.; Zou, J.; Yang, K. CH-SIMS: A Chinese multimodal sentiment analysis dataset with fine-grained annotation of modality. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Virtual Event, 5–10 July 2020; pp. 3718–3727.

47. Hazarika, D.; Zimmermann, R.; Poria, S. MISA: Modality-invariant and -specific representations for multimodal sentiment analysis. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 1122–1131.

48. Han, W.; Chen, H.; Poria, S. Improving multimodal fusion with hierarchical mutual information maximization for multimodal sentiment analysis. arXiv 2021, arXiv:2109.00412.

49. Li, Y.; Zhao, T.; Kawahara, T. Graph neural networks for multimodal sentiment analysis. Pattern Recognit. Lett. 2019, 125, 735–741.

50. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the 28th International Conference on Neural Information Processing Systems (NIPS’14), Montreal, QC, Canada, 8–13 December 2014; Volume 27.

| Dataset | Train | Val | Test | Total |
|---|---|---|---|---|
| CMU-MOSI | 552/53/679 | 92/13/124 | 379/30/277 | 2199 |
| CMU-MOSEI | 4738/3540/8048 | 506/433/932 | 1350/1025/2284 | 23,453 |
| CH-SIMS | 742/207/419 | 248/69/139 | 248/69/140 | 2281 |

| Hyperparameters | CMU-MOSI | CH-SIMS | CMU-MOSEI |
|---|---|---|---|
| Batch Size | 32 | 16 | 32 |
| Learning Rate | | | |
| BERT Learning Rate | | | |
| Optimizer | Adam | Adam | Adam |
| Early Stopping (Epochs) | 8 | 8 | 8 |
| Gradient Accumulation (Batches) | 4 | 4 | 4 |
| Hidden Unit Size in EMT | 128 | 128 | 32 |
| Number of Stacked Layers in EMT | 3 | 2 | 4 |
| Number of Attention Heads | 4 | 4 | 4 |
| Embedding Dropout | 0.0 | 0.0 | 0.0 |
| Attention Dropout | 0.3 | 0.0 | 0.0 |
| Loss Weight | 1.0 | 1.0 | 0.5 |
| Loss Weight | 1.0 | 1.0 | 0.5 |
| Loss Weight | 0.1 | 0.1 | 0.08 |
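
The early-stopping and gradient-accumulation settings translate into simple training-loop logic. With gradient accumulation over 4 batches, each optimizer update sees 4 times the per-step batch (128 samples for CMU-MOSI and CMU-MOSEI, 64 for CH-SIMS), and training stops after 8 epochs without improvement. Interpreting the early-stopping value as a patience and monitoring a validation error such as MAE are assumptions; `EarlyStopping` and `effective_batch_size` are hypothetical names.

```python
from typing import Optional


def effective_batch_size(batch_size: int, accumulation_steps: int) -> int:
    """Samples contributing to each optimizer update under gradient accumulation."""
    return batch_size * accumulation_steps


class EarlyStopping:
    """Hypothetical helper: signal a stop after `patience` epochs without improvement."""

    def __init__(self, patience: int = 8, mode: str = "min"):
        if mode not in ("min", "max"):
            raise ValueError("mode must be 'min' or 'max'")
        self.patience = patience
        self.mode = mode
        self.best: Optional[float] = None
        self.bad_epochs = 0

    def step(self, value: float) -> bool:
        """Record one epoch's validation metric; return True when training should stop."""
        if self.best is None:
            improved = True
        elif self.mode == "min":
            improved = value < self.best
        else:
            improved = value > self.best
        if improved:
            self.best = value
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```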

| Models | CMU-MOSI MAE ↓ | CMU-MOSI Corr ↑ | CMU-MOSI Acc-7 ↑ | CMU-MOSI Acc-5 ↑ | CMU-MOSI Acc-2 ↑ | CMU-MOSI F1 ↑ | CMU-MOSEI MAE ↓ | CMU-MOSEI Corr ↑ | CMU-MOSEI Acc-7 ↑ | CMU-MOSEI Acc-5 ↑ | CMU-MOSEI Acc-2 ↑ | CMU-MOSEI F1 ↑ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 0.804 | 0.764 | - | - | 80.8/82.1 | 80.8/82.0 | 0.568 | 0.724 | - | - | 82.6/84.2 | 82.7/84.0 |
| | 0.846 | 0.725 | 40.4 | 46.7 | 81.7/83.4 | 81.9/83.5 | 0.564 | 0.731 | 52.6 | 54.1 | 80.5/83.5 | 80.9/83.6 |
| Self-MM ‡ | 0.717 | 0.793 | 46.4 | 52.8 | 82.9/84.6 | 82.8/84.6 | 0.533 | 0.766 | 53.6 | 55.4 | 82.4/85.0 | 82.8/85.0 |
| | 0.712 | 0.790 | 46.9 | 53.0 | 83.3/85.3 | 83.4/85.4 | 0.536 | 0.764 | 53.2 | 55.0 | 82.5/85.0 | 82.4/85.1 |
| TFR-Net ‡ | 0.721 | 0.789 | 46.1 | 53.2 | 82.7/84.0 | 82.7/84.0 | 0.551 | 0.756 | 52.3 | 54.3 | 81.8/83.5 | 81.6/83.8 |
| | 0.705 | 0.798 | 47.4 | 54.1 | 83.3/85.0 | 83.2/85.0 | 0.527 | 0.774 | 54.5 | 56.3 | 83.4/86.0 | 83.7/86.0 |
| Self-MM | 0.720 | 0.790 | 46.6 | 53.0 | 83.0/84.7 | 82.9/84.8 | 0.530 | 0.769 | 53.2 | 55.3 | 82.3/85.0 | 82.4/85.1 |
| EMT | 0.717 | 0.788 | 47.1 | 53.7 | 82.9/84.4 | 82.9/84.5 | 0.537 | 0.767 | 53.2 | 55.1 | 82.9/85.3 | 83.0/85.5 |
| DAST-GAN | 0.698 | 0.800 | 48.6 | 54.9 | 83.2/85.1 | 83.1/85.0 | 0.528 | 0.780 | 54.0 | 56.0 | 83.1/85.1 | 83.2/85.3 |
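
The Acc-7, Acc-5 and paired Acc-2 columns are assumed here to follow evaluation conventions common in CMU-MOSI/MOSEI work, since Section 4.2 does not spell them out: Acc-7 and Acc-5 clip scores to [−3, 3] and [−2, 2] and round to the nearest integer, and an `a/b` Acc-2 entry reports negative/non-negative accuracy (zero-labelled samples included) followed by negative/positive accuracy (zero-labelled samples excluded). A minimal sketch with hypothetical function names:

```python
from typing import Sequence

# Assumed evaluation conventions; not confirmed by the paper.


def _clip(x: float, bound: float) -> float:
    return max(-bound, min(bound, x))


def acc_k(preds: Sequence[float], labels: Sequence[float], bound: float) -> float:
    """Acc-7 (bound=3) or Acc-5 (bound=2): clip to [-bound, bound], round, compare.
    Python's round() rounds halves to even, as NumPy's np.round does."""
    hits = sum(round(_clip(p, bound)) == round(_clip(y, bound))
               for p, y in zip(preds, labels))
    return hits / len(labels)


def acc2_has0(preds: Sequence[float], labels: Sequence[float]) -> float:
    """Negative vs non-negative: zero-labelled samples count as non-negative."""
    hits = sum((p >= 0) == (y >= 0) for p, y in zip(preds, labels))
    return hits / len(labels)


def acc2_non0(preds: Sequence[float], labels: Sequence[float]) -> float:
    """Negative vs positive: samples whose label is exactly 0 are excluded."""
    pairs = [(p, y) for p, y in zip(preds, labels) if y != 0]
    hits = sum((p > 0) == (y > 0) for p, y in pairs)
    return hits / len(pairs)
```

Because the second Acc-2 variant drops zero-labelled samples, it is usually the higher of the two numbers, which matches the pattern in the table.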

| Models | CH-SIMS MAE ↓ | CH-SIMS Corr ↑ | CH-SIMS Acc-5 ↑ | CH-SIMS Acc-3 ↑ | CH-SIMS Acc-2 ↑ | CH-SIMS F1 ↑ |
|---|---|---|---|---|---|---|
| | 0.447 | 0.563 | - | - | 76.5 | 76.6 |
| | 0.442 | 0.581 | 40.0 | 65.7 | 78.2 | 78.5 |
| Self-MM ‡ | 0.411 | 0.601 | 43.1 | 66.1 | 78.6 | 78.6 |
| | 0.422 | 0.597 | 42.0 | 65.5 | 78.3 | 78.2 |
| TFR-Net ‡ | 0.437 | 0.583 | 41.2 | 64.2 | 78.0 | 78.1 |
| | 0.396 | 0.623 | 43.5 | 67.4 | 80.1 | 80.1 |
| Self-MM | 0.419 | 0.598 | 42.7 | 65.4 | 78.1 | 78.3 |
| EMT | 0.412 | 0.600 | 42.9 | 65.5 | 79.0 | 79.2 |
| DAST-GAN | 0.398 | 0.640 | 43.6 | 67.5 | 80.5 | 80.5 |

| Models | CMU-MOSI | | | | | | CMU-MOSEI | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | MAE ↓ | Corr ↑ | Acc-7 ↑ | Acc-5 ↑ | Acc-2 ↑ | F1 ↑ | MAE ↓ | Corr ↑ | Acc-7 ↑ | Acc-5 ↑ | Acc-2 ↑ | F1 ↑ |
| | 1.202 | 0.405 | 25.7 | 27.4 | 63.9/63.7 | 59.0/58.8 | 0.698 | 0.514 | 45.1 | 45.7 | 75.2/75.7 | 74.4/74.0 |
| | 1.263 | 0.348 | 23.1 | 24.6 | 63.1/63.2 | 60.7/61.0 | 0.700 | 0.504 | 46.3 | 46.8 | 74.4/75.1 | 72.9/72.6 |
| Self-MM ‡ | 1.162 | 0.444 | 27.8 | 30.3 | 66.9/67.5 | 65.4/66.2 | 0.685 | 0.507 | 46.7 | 47.3 | 75.1/75.4 | 73.7/72.9 |
| | 1.168 | 0.450 | 27.0 | 29.4 | 66.8/66.9 | 64.6/65.8 | 0.687 | 0.520 | 46.5 | 47.1 | 75.0/75.3 | 73.8/74.2 |
| TFR-Net ‡ | 1.156 | 0.452 | 27.5 | 30.5 | 67.6/67.8 | 65.7/66.1 | 0.689 | 0.511 | 46.9 | 47.3 | 74.7/74.2 | 72.5/73.4 |
| EMT-DLFR ‡ | 1.106 | 0.486 | 32.5 | 35.6 | 69.6/70.3 | 69.6/70.3 | 0.665 | 0.546 | 47.9 | 48.8 | 76.4/76.9 | 75.2/75.9 |
| Self-MM | 1.215 | 0.440 | 28.1 | 30.5 | 66.6/67.7 | 66.8/67.9 | 0.695 | 0.496 | 46.8 | 47.1 | 75.0/75.3 | 74.4/75.2 |
| EMT-DLFR | 1.113 | 0.473 | 32.0 | 35.3 | 69.3/70.0 | 69.3/70.0 | 0.673 | 0.524 | 47.1 | 48.8 | 75.5/76.7 | 74.3/75.2 |
| DAST-GAN | 1.108 | 0.490 | 33.1 | 36.0 | 69.7/70.4 | 69.6/70.4 | 0.640 | 0.563 | 48.0 | 50.2 | 77.1/77.9 | 76.2/77.2 |
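
Differences in the incomplete-modality table are easier to compare when expressed relative to the reproduced EMT-DLFR row. The helper below (its name is illustrative) flips the sign for MAE, where lower is better, so that a positive number always means DAST-GAN is better; the inputs are copied from the CMU-MOSEI columns of the table above.

```python
def relative_gain(ours: float, base: float, lower_is_better: bool) -> float:
    """Percent improvement of `ours` over `base`; positive means `ours` is better."""
    delta = (base - ours) if lower_is_better else (ours - base)
    return 100.0 * delta / abs(base)


# (DAST-GAN, reproduced EMT-DLFR, lower_is_better) from the CMU-MOSEI columns.
MOSEI_INCOMPLETE = {
    "MAE": (0.640, 0.673, True),
    "Corr": (0.563, 0.524, False),
    "Acc-7": (48.0, 47.1, False),
    "Acc-5": (50.2, 48.8, False),
}

for metric, (ours, base, low) in MOSEI_INCOMPLETE.items():
    print(f"{metric}: {relative_gain(ours, base, low):+.1f}%")
```

For the accuracy columns, a relative gain is not the same as the absolute difference in percentage points: Acc-7 rises by 0.9 points (from 47.1 to 48.0), which corresponds to a relative gain of about 1.9%.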

| Models | CH-SIMS | | | | | |
|---|---|---|---|---|---|---|
| | MAE ↓ | Corr ↑ | Acc-5 ↑ | Acc-3 ↑ | Acc-2 ↑ | F1 ↑ |
| | 0.293 | 0.053 | 10.6 | 26.4 | 34.8 | 28.9 |
| | 0.242 | 0.233 | 16.7 | 30.0 | 37.3 | 36.8 |
| Self-MM ‡ | 0.231 | 0.258 | 18.3 | 30.5 | 37.4 | 37.5 |
| | 0.244 | 0.238 | 17.7 | 29.8 | 37.0 | 36.2 |
| TFR-Net ‡ | 0.237 | 0.253 | 17.8 | 30.0 | 37.3 | 37.2 |
| EMT-DLFR ‡ | 0.215 | 0.287 | 20.4 | 31.9 | 38.4 | 38.5 |
| Self-MM | 0.237 | 0.250 | 19.1 | 30.6 | 36.6 | 36.4 |
| EMT-DLFR | 0.230 | 0.268 | 19.5 | 31.2 | 37.1 | 37.3 |
| DAST-GAN | 0.213 | 0.290 | 20.8 | 32.3 | 38.7 | 38.7 |

| Models | CMU-MOSI | | | | | |
|---|---|---|---|---|---|---|
| | MAE ↓ | Corr ↑ | Acc-7 ↑ | Acc-5 ↑ | Acc-2 ↑ | F1 ↑ |
| Baseline (EMT-DLFR) | 0.717 | 0.788 | 47.1 | 53.7 | 82.9/84.4 | 82.9/84.5 |
| w/o DAM + STA | 0.712 | 0.791 | 47.3 | 53.9 | 83.0/84.5 | 83.0/84.6 |
| w/o STA + GAN | 0.709 | 0.793 | 47.6 | 54.1 | 83.1/84.7 | 83.1/84.7 |
| w/o DAM + GAN | 0.706 | 0.795 | 47.8 | 54.3 | 83.2/84.8 | 83.2/84.8 |
| w/o GAN | 0.704 | 0.796 | 48.0 | 54.5 | 83.0/84.8 | 83.0/84.8 |
| w/o STA | 0.702 | 0.797 | 48.2 | 54.6 | 83.1/84.9 | 83.1/84.9 |
| w/o DAM | 0.705 | 0.794 | 47.9 | 54.4 | 83.0/84.7 | 83.0/84.7 |
| DAST-GAN (Full) | 0.698 | 0.800 | 48.6 | 54.9 | 83.2/85.1 | 83.1/85.0 |
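
The single-component rows of the ablation can be turned into a per-component cost by subtracting them from the full model's scores, orienting each metric so that a positive number means removing the component hurts. The dictionaries below transcribe the w/o DAM, w/o STA, and w/o GAN rows for MAE, Corr, and Acc-7; the function and variable names are illustrative.

```python
FULL = {"MAE": 0.698, "Corr": 0.800, "Acc-7": 48.6}
ABLATIONS = {
    "w/o DAM": {"MAE": 0.705, "Corr": 0.794, "Acc-7": 47.9},
    "w/o STA": {"MAE": 0.702, "Corr": 0.797, "Acc-7": 48.2},
    "w/o GAN": {"MAE": 0.704, "Corr": 0.796, "Acc-7": 48.0},
}
LOWER_IS_BETTER = {"MAE"}


def removal_cost(full: dict, ablated: dict) -> dict:
    """Degradation from removing one component; positive means the full model is better."""
    return {
        m: round((ablated[m] - full[m]) if m in LOWER_IS_BETTER else (full[m] - ablated[m]), 3)
        for m in full
    }


costs = {name: removal_cost(FULL, row) for name, row in ABLATIONS.items()}
ranking = sorted(costs, key=lambda name: costs[name]["MAE"], reverse=True)
print(ranking)  # ['w/o DAM', 'w/o GAN', 'w/o STA']
```

On all three metrics the ordering is the same: removing DAM costs the most (0.007 MAE, 0.7 Acc-7 points), followed by GAN and then STA. The gaps are on the order of a few thousandths of MAE.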

| Missing Pattern | 10% | 30% | 50% | 70% | 90% |
|---|---|---|---|---|---|
| Random Missing | 0.728 | 0.768 | 0.885 | 1.185 | 1.558 |
| Burst Missing | 0.735 | 0.782 | 0.906 | 1.208 | 1.581 |
| Modality-wise Missing | 0.742 | 0.798 | 0.927 | 1.231 | 1.604 |
| EMT-DLFR (Random) | 0.741 | 0.798 | 0.923 | 1.235 | 1.612 |
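
This section does not define the three missing patterns, so the sketch below assumes common definitions for a sequence of T time steps at missing rate r: random missing drops round(rT) time steps chosen uniformly; burst missing drops one contiguous block of round(rT) steps at a random offset; modality-wise missing drops an entire modality with probability r. All function names, and these definitions themselves, are assumptions for illustration rather than the authors' protocol. Masks use `True` for "missing".

```python
import random
from typing import Dict, List, Sequence


def random_mask(T: int, rate: float, rng: random.Random) -> List[bool]:
    """Assumed random missing: round(rate*T) steps chosen uniformly (True = missing)."""
    k = round(rate * T)
    missing = set(rng.sample(range(T), k))
    return [t in missing for t in range(T)]


def burst_mask(T: int, rate: float, rng: random.Random) -> List[bool]:
    """Assumed burst missing: one contiguous run of round(rate*T) steps."""
    k = round(rate * T)
    start = rng.randint(0, T - k)  # randint is inclusive, so the run always fits
    return [start <= t < start + k for t in range(T)]


def modality_mask(modalities: Sequence[str], rate: float, rng: random.Random) -> Dict[str, bool]:
    """Assumed modality-wise missing: each whole modality dropped with probability `rate`."""
    return {m: rng.random() < rate for m in modalities}


rng = random.Random(0)  # fixed seed so the masks are reproducible
print(sum(random_mask(50, 0.3, rng)), sum(burst_mask(50, 0.3, rng)))  # 15 15
print(modality_mask(["text", "audio", "video"], 0.3, rng))
```

As written, the modality-wise variant can occasionally drop all three modalities of a sample at once; a stricter protocol might resample in that case so that at least one modality remains.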

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tan, W.; Zhang, B. DAST-GAN: An Adversarial Learning Multimodal Sentiment Analysis Model Based on Dynamic Attention and Spatio-Temporal Fusion. Sensors 2025, 25, 7109. https://doi.org/10.3390/s25237109


Chicago/Turabian Style: Tan, Wenlong, and Bo Zhang. 2025. "DAST-GAN: An Adversarial Learning Multimodal Sentiment Analysis Model Based on Dynamic Attention and Spatio-Temporal Fusion." Sensors 25, no. 23: 7109. https://doi.org/10.3390/s25237109
