RMH-YOLO: A Refined Multi-Scale Architecture for Small-Target Detection in UAV Aerial Imagery

Abstract

1. Introduction

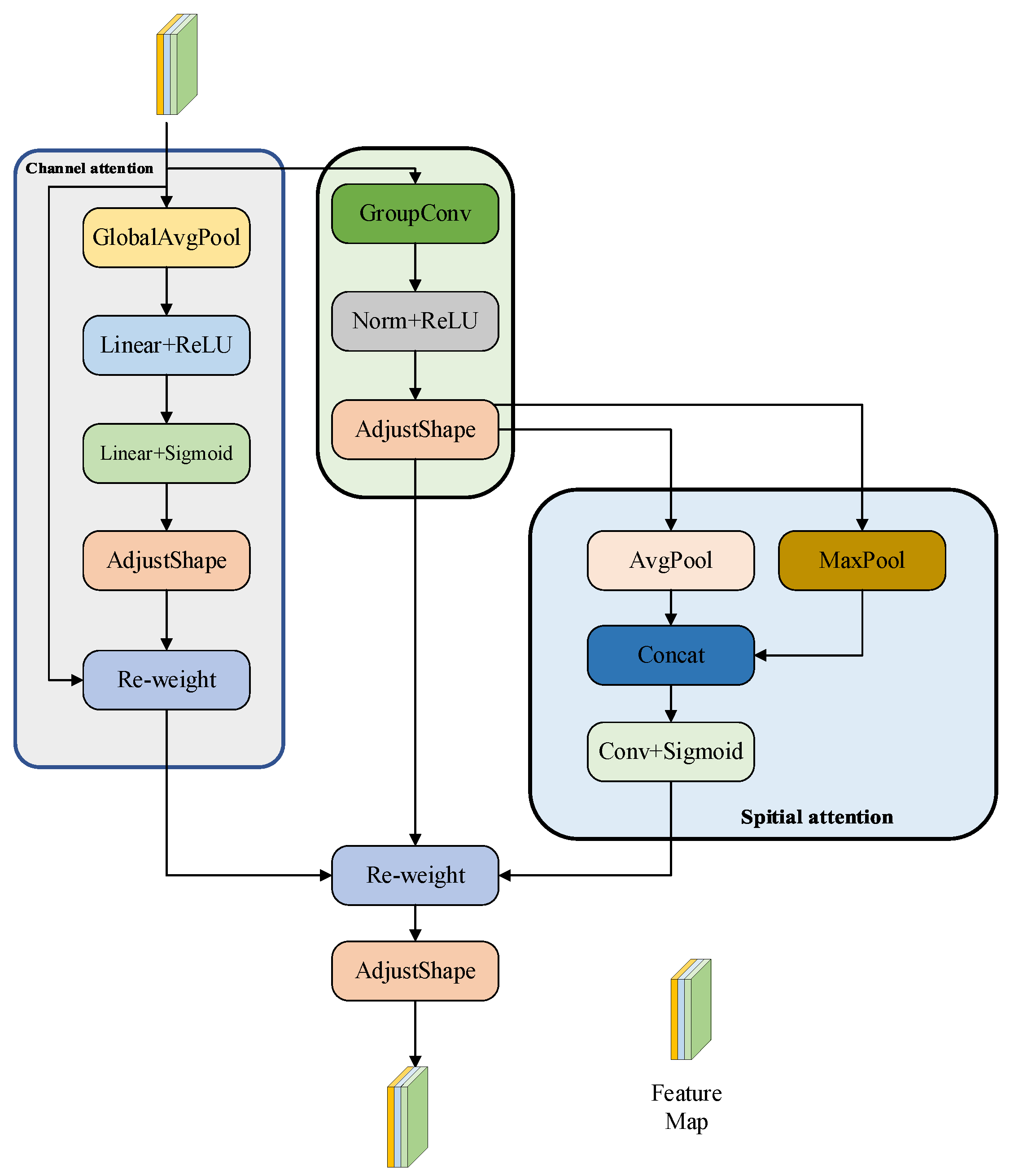

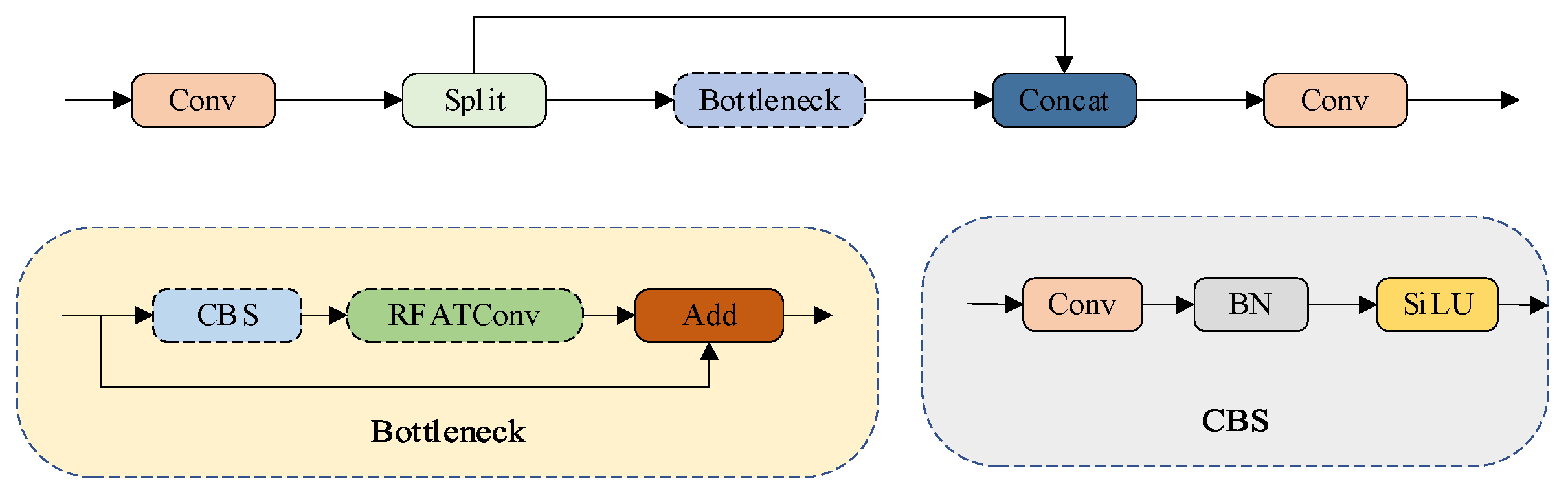

- First, a Refined Feature Module (RFM) is integrated into the backbone network to enhance feature extraction from low-resolution imagery. By fusing channel and spatial attention mechanisms within residual connections, the RFM dynamically recalibrates multi-scale feature representations, improving the discriminative capability for small targets while maintaining contextual integrity for larger instances.

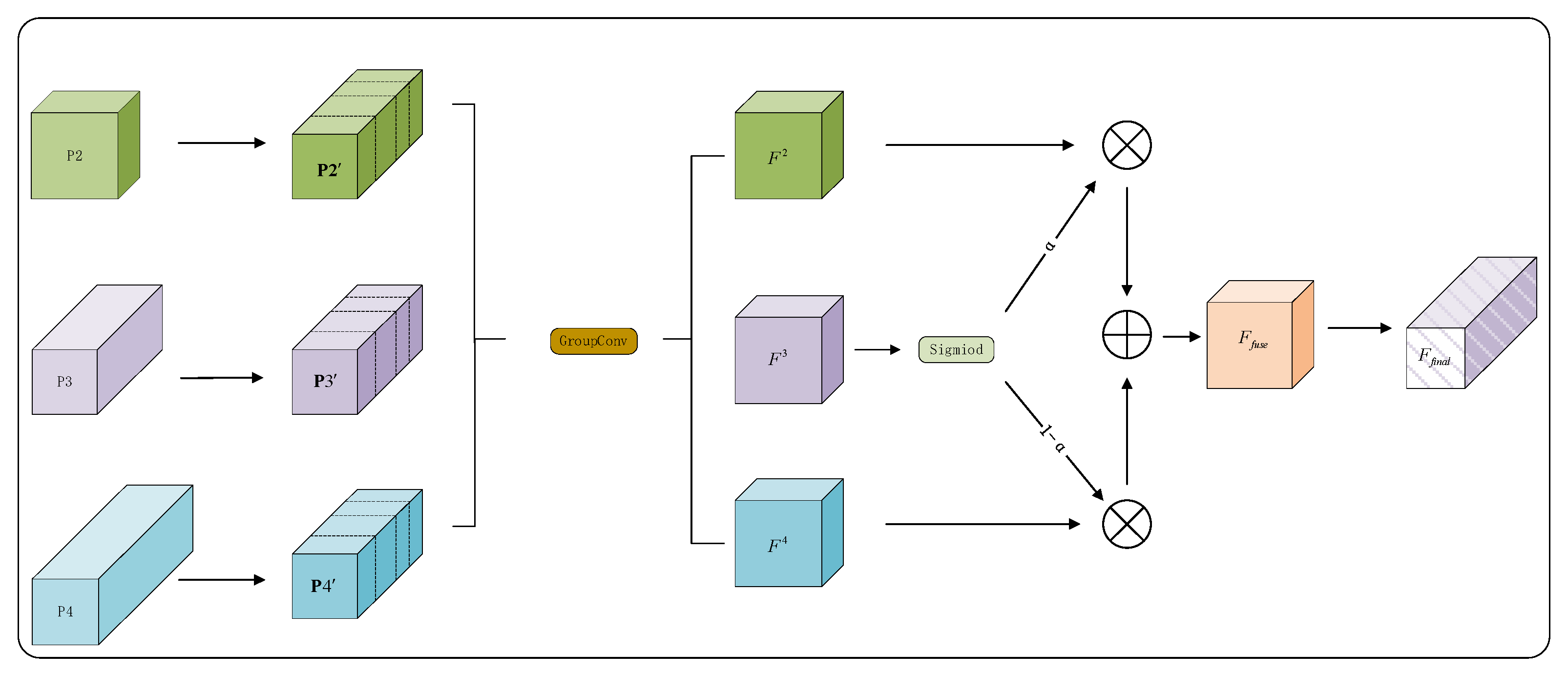

- Second, a Multi-scale Focus-and-Diffuse (MFFD) network is designed to optimize feature propagation and fusion for aerial imagery. The MFFD employs a dual-phase strategy: a focus stage that aggregates high-resolution spatial cues from shallow feature layers with semantic context from deeper features, followed by a diffuse stage where enriched features are adaptively distributed across hierarchical levels to enhance representational capacity for small objects.

- Third, an efficient detection head (CS-Head) based on parameter-sharing convolution is proposed to enable efficient processing on embedded UAV platforms. By sharing convolution kernels across detection tasks while preserving discriminative capability through scale-specific adjustments, the CS-Head reduces the parameter count and computational cost of the head, making it suitable for resource-constrained deployment.

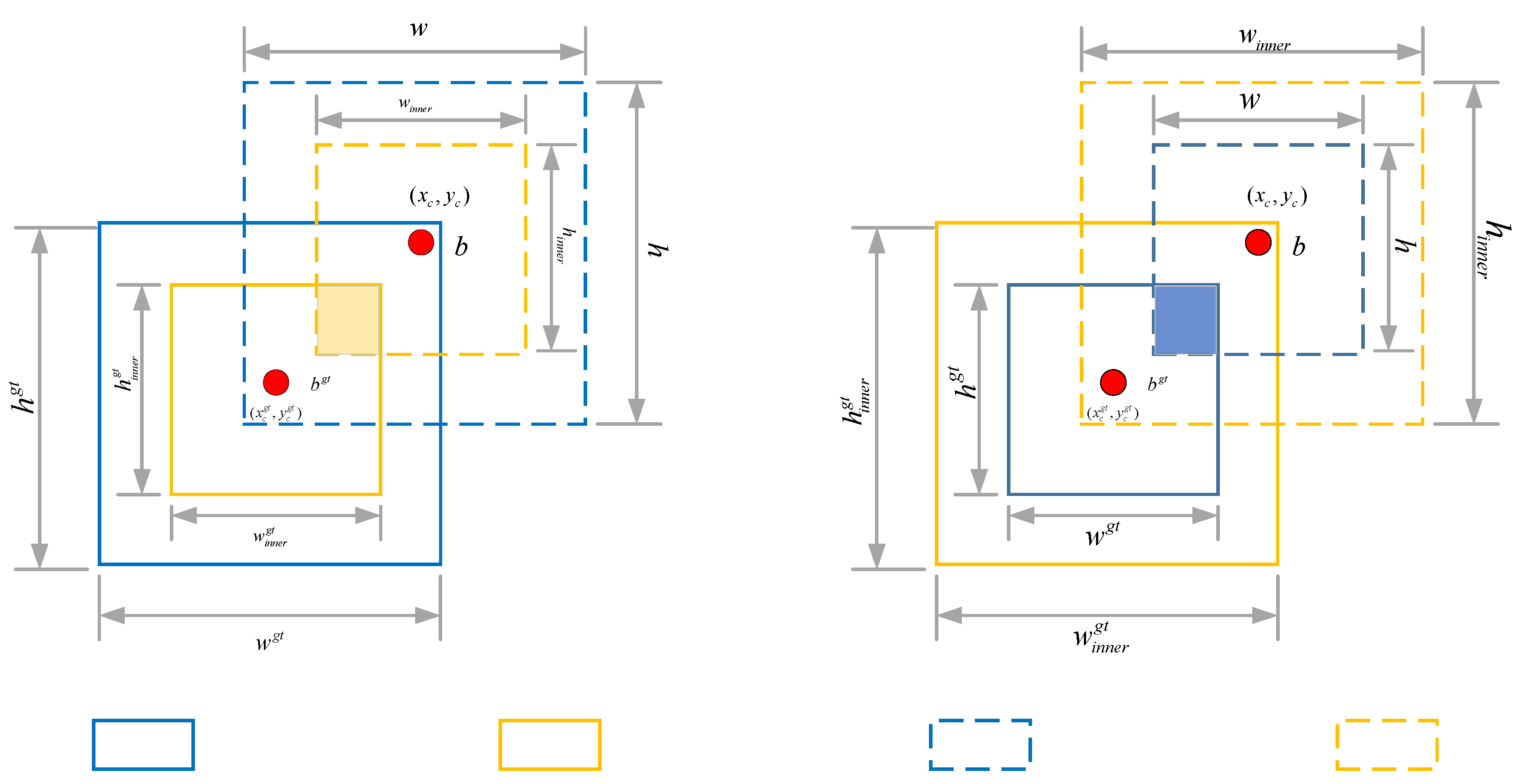

- Fourth, an optimized loss function combining Normalized Wasserstein Distance (NWD) with InnerCIoU is employed to improve localization accuracy for small targets in aerial imagery, addressing the challenge of precise bounding box regression for objects with minimal pixel representation.

2. Related Work

2.1. Generic Target Detection Methods

2.2. YOLO-Based Algorithm Improvements for UAV Aerial Small-Target Detection

3. Method

3.1. Overview of RMH-YOLO

3.2. Refined Feature Module

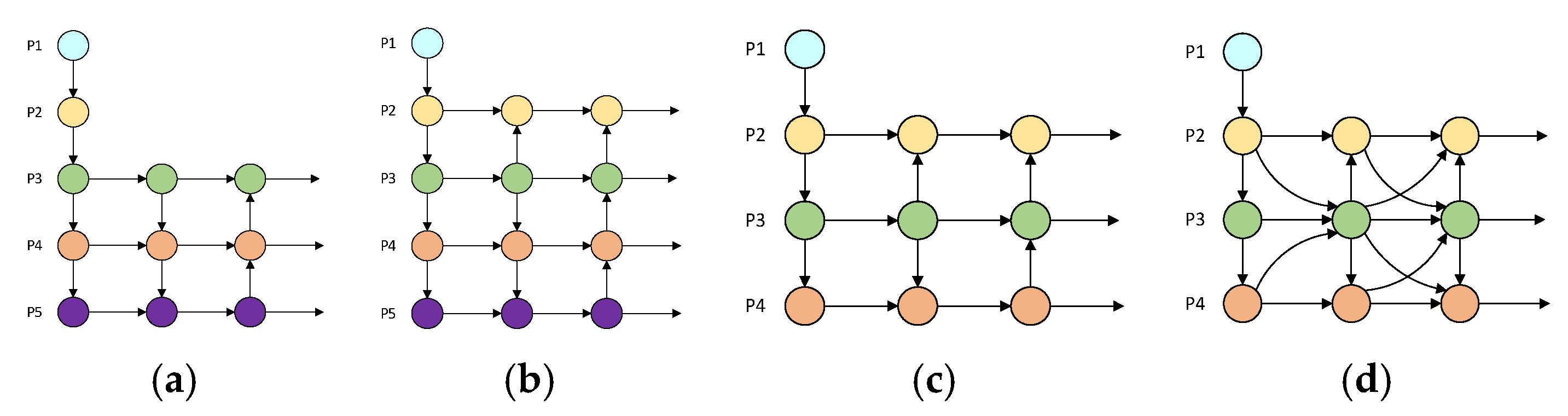
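The exact layer configuration of the RFM is not reproduced in this section. The plain-Python sketch below is a rough, hypothetical illustration of the pattern the Introduction describes: channel attention and spatial attention applied inside a residual connection. It follows the CBAM ordering (channel first, then spatial). All function names are illustrative. Such modules normally contain a learned MLP and a learned convolution; here both are replaced by parameter-free pooling so the example stays self-contained.

```python
import math
from typing import List

# Feature map indexed as [channel][row][col].
Tensor3D = List[List[List[float]]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(x: Tensor3D) -> List[float]:
    """One weight in (0, 1) per channel, from global average- and max-pooled values."""
    weights = []
    for channel in x:
        values = [v for row in channel for v in row]
        # Parameter-free stand-in for the shared MLP that CBAM-style modules apply.
        weights.append(sigmoid(sum(values) / len(values) + max(values)))
    return weights


def spatial_attention(x: Tensor3D) -> List[List[float]]:
    """One weight in (0, 1) per pixel, from the channel-wise mean and max."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    weights = []
    for i in range(h):
        row = []
        for j in range(w):
            column = [x[k][i][j] for k in range(c)]
            # Parameter-free stand-in for the learned convolution over [mean; max] maps.
            row.append(sigmoid(sum(column) / c + max(column)))
        weights.append(row)
    return weights


def refined_feature_block(x: Tensor3D) -> Tensor3D:
    """Residual recalibration: out = x + x_ca * sa.

    x_ca is x reweighted per channel; sa is computed on x_ca (channel, then spatial).
    """
    ca = channel_attention(x)
    x_ca = [[[v * ca[k] for v in row] for row in channel] for k, channel in enumerate(x)]
    sa = spatial_attention(x_ca)
    return [
        [[x[k][i][j] + x_ca[k][i][j] * sa[i][j] for j in range(len(x[k][i]))]
         for i in range(len(x[k]))]
        for k in range(len(x))
    ]


# Usage: a toy 2-channel, 2x2 feature map.
feature = [[[0.0, 1.0], [2.0, 0.5]],
           [[1.0, 1.0], [1.0, 1.0]]]
refined = refined_feature_block(feature)
```

Both attention weights lie strictly between 0 and 1, so for non-negative inputs each output value stays between x and 2x. The residual path preserves the original signal, and attention only decides how much extra emphasis each channel and position receives.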

3.3. Multi-Scale Feature Fusion Network

3.3.1. ADSF Method

3.3.2. MFFD Network
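The focus-then-diffuse idea described in the Introduction can be illustrated on three single-channel pyramid levels. This sketch rests on several assumptions. P3, P4 and P5 are hypothetical level names whose resolutions differ by a factor of 2, and the middle level (P4) is taken to be the focus scale. Fixed 2x2 average pooling, nearest-neighbour upsampling and element-wise addition stand in for the learned convolutions and fusion operators (such as ADSF) that the actual MFFD network uses.

```python
from typing import List, Tuple

Map = List[List[float]]  # single-channel feature map, [row][col]


def avg_pool2(m: Map) -> Map:
    """Halve resolution with 2x2 average pooling (assumes even height and width)."""
    return [
        [(m[2 * i][2 * j] + m[2 * i][2 * j + 1] + m[2 * i + 1][2 * j] + m[2 * i + 1][2 * j + 1]) / 4.0
         for j in range(len(m[0]) // 2)]
        for i in range(len(m) // 2)
    ]


def upsample2(m: Map) -> Map:
    """Double resolution with nearest-neighbour upsampling."""
    return [[m[i // 2][j // 2] for j in range(2 * len(m[0]))] for i in range(2 * len(m))]


def add(a: Map, b: Map) -> Map:
    """Element-wise sum of two maps with the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def focus_and_diffuse(p3: Map, p4: Map, p5: Map) -> Tuple[Map, Map, Map]:
    # Focus: bring the shallow (P3) and deep (P5) maps to P4 resolution and aggregate.
    focused = add(add(avg_pool2(p3), p4), upsample2(p5))
    # Diffuse: redistribute the aggregated map back to every pyramid level.
    out3 = add(p3, upsample2(focused))
    out4 = add(p4, focused)
    out5 = add(p5, avg_pool2(focused))
    return out3, out4, out5


# Usage: uniform toy maps at 8x8, 4x4 and 2x2.
p3 = [[1.0] * 8 for _ in range(8)]
p4 = [[2.0] * 4 for _ in range(4)]
p5 = [[3.0] * 2 for _ in range(2)]
o3, o4, o5 = focus_and_diffuse(p3, p4, p5)
```

With uniform inputs of 1, 2 and 3, the focused map is 6 everywhere, so every level receives the same aggregated context and the outputs are 7, 8 and 9 respectively.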

3.4. CSLD Detection Head
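The Introduction describes a detection head that shares convolution kernels while keeping scale-specific adjustments. One common way to do this is to apply a single kernel to every pyramid level and multiply each level's output by its own learnable scalar, similar in spirit to the per-level scale used in shared-head detectors such as FCOS. Whether CSLD uses exactly this mechanism is an assumption. The sketch below uses one 3x3 single-channel kernel for the forward pass, plus a helper that counts head parameters to show where the savings come from. All names are hypothetical.

```python
from typing import List

Map = List[List[float]]  # single-channel feature map, [row][col]


def conv3x3(m: Map, kernel: List[List[float]]) -> Map:
    """Single-channel 3x3 convolution with zero padding (output has the input's size)."""
    h, w = len(m), len(m[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            acc = 0.0
            for di in range(-1, 2):
                for dj in range(-1, 2):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:
                        acc += m[ii][jj] * kernel[di + 1][dj + 1]
            row.append(acc)
        out.append(row)
    return out


def shared_head(levels: List[Map], kernel: List[List[float]], scales: List[float]) -> List[Map]:
    """Apply ONE shared kernel to every level, then multiply by a per-level scalar."""
    return [[[v * s for v in row] for row in conv3x3(m, kernel)] for m, s in zip(levels, scales)]


def head_params(c_in: int, c_out: int, k: int, num_levels: int, shared: bool) -> int:
    """Weights + biases of one k x k conv layer per head; a shared head adds one scale per level."""
    per_conv = k * k * c_in * c_out + c_out
    return per_conv + num_levels if shared else per_conv * num_levels


# Usage: an identity kernel makes the effect of the per-level scales easy to see.
identity = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
outputs = shared_head([[[1.0, 2.0], [3.0, 4.0]], [[5.0]]], identity, [1.0, 0.5])
```

For a 64-to-64-channel 3x3 layer over three levels, separate heads need 110,784 parameters, whereas a shared head needs 36,931, roughly a third. Real heads stack several layers, so absolute numbers differ. In this sketch sharing saves parameters only; the per-level convolution cost is unchanged.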

3.5. Loss Function Design
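The Introduction states that the loss combines NWD with InnerCIoU. The plain-Python sketch below covers only the InnerCIoU part. It follows the Inner-IoU formulation as we understand it: the loss is L_CIoU + IoU - IoU_inner, where IoU_inner is computed on auxiliary boxes that keep each box's centre and scale its width and height by a ratio. Boxes are (cx, cy, w, h). The default ratio of 0.75 and all function names are illustrative assumptions, not values taken from this paper.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (cx, cy, w, h)


def to_corners(b: Box) -> Tuple[float, float, float, float]:
    cx, cy, w, h = b
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def iou(a: Box, b: Box) -> float:
    ax1, ay1, ax2, ay2 = to_corners(a)
    bx1, by1, bx2, by2 = to_corners(b)
    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def ciou_loss(pred: Box, gt: Box) -> float:
    """CIoU loss: 1 - IoU + normalised centre distance + aspect-ratio penalty."""
    i = iou(pred, gt)
    px1, py1, px2, py2 = to_corners(pred)
    gx1, gy1, gx2, gy2 = to_corners(gt)
    # Squared diagonal of the smallest box enclosing both boxes.
    c2 = (max(px2, gx2) - min(px1, gx1)) ** 2 + (max(py2, gy2) - min(py1, gy1)) ** 2
    rho2 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2
    v = (4 / math.pi ** 2) * (math.atan(gt[2] / gt[3]) - math.atan(pred[2] / pred[3])) ** 2
    alpha = v / ((1 - i) + v) if v > 0 else 0.0
    return 1 - i + rho2 / c2 + alpha * v


def scaled(b: Box, ratio: float) -> Box:
    """Auxiliary box: same centre, width and height multiplied by `ratio`."""
    return (b[0], b[1], b[2] * ratio, b[3] * ratio)


def inner_iou(pred: Box, gt: Box, ratio: float = 0.75) -> float:
    return iou(scaled(pred, ratio), scaled(gt, ratio))


def inner_ciou_loss(pred: Box, gt: Box, ratio: float = 0.75) -> float:
    """Inner-CIoU: L_CIoU + IoU - IoU_inner."""
    return ciou_loss(pred, gt) + iou(pred, gt) - inner_iou(pred, gt, ratio)


# Usage: two 2x2 boxes whose centres are 1 unit apart.
pred, gt = (0.0, 0.0, 2.0, 2.0), (1.0, 0.0, 2.0, 2.0)
loss = inner_ciou_loss(pred, gt)
```

For this pair the plain IoU is 1/3, the inner IoU is 0.2 with ratio 0.75 and 0.5 with ratio 1.5. The ratio therefore controls how strongly the auxiliary-box term rewards or penalises a given overlap, which matters most for boxes only a few pixels wide.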

4. Experiments

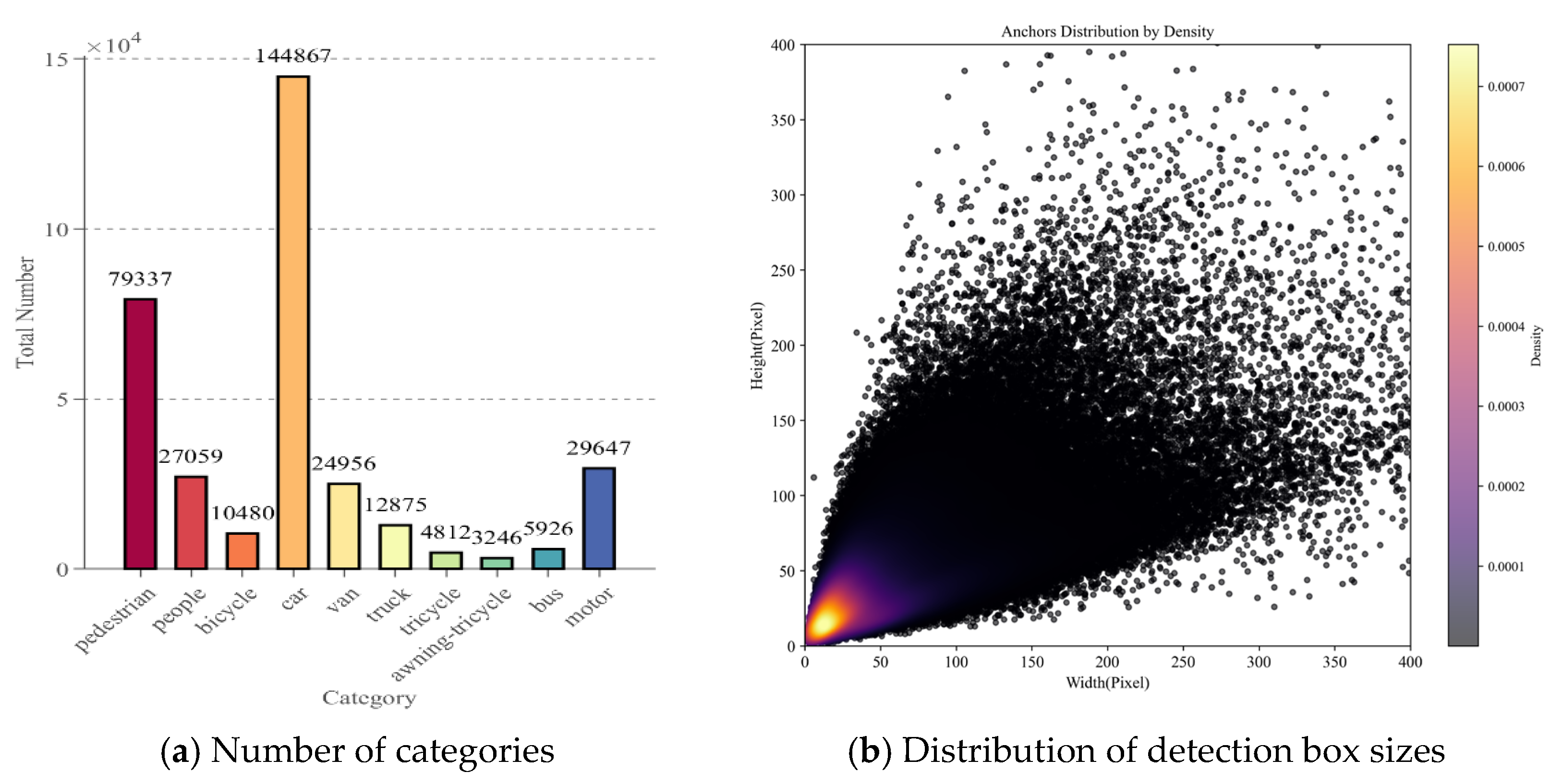

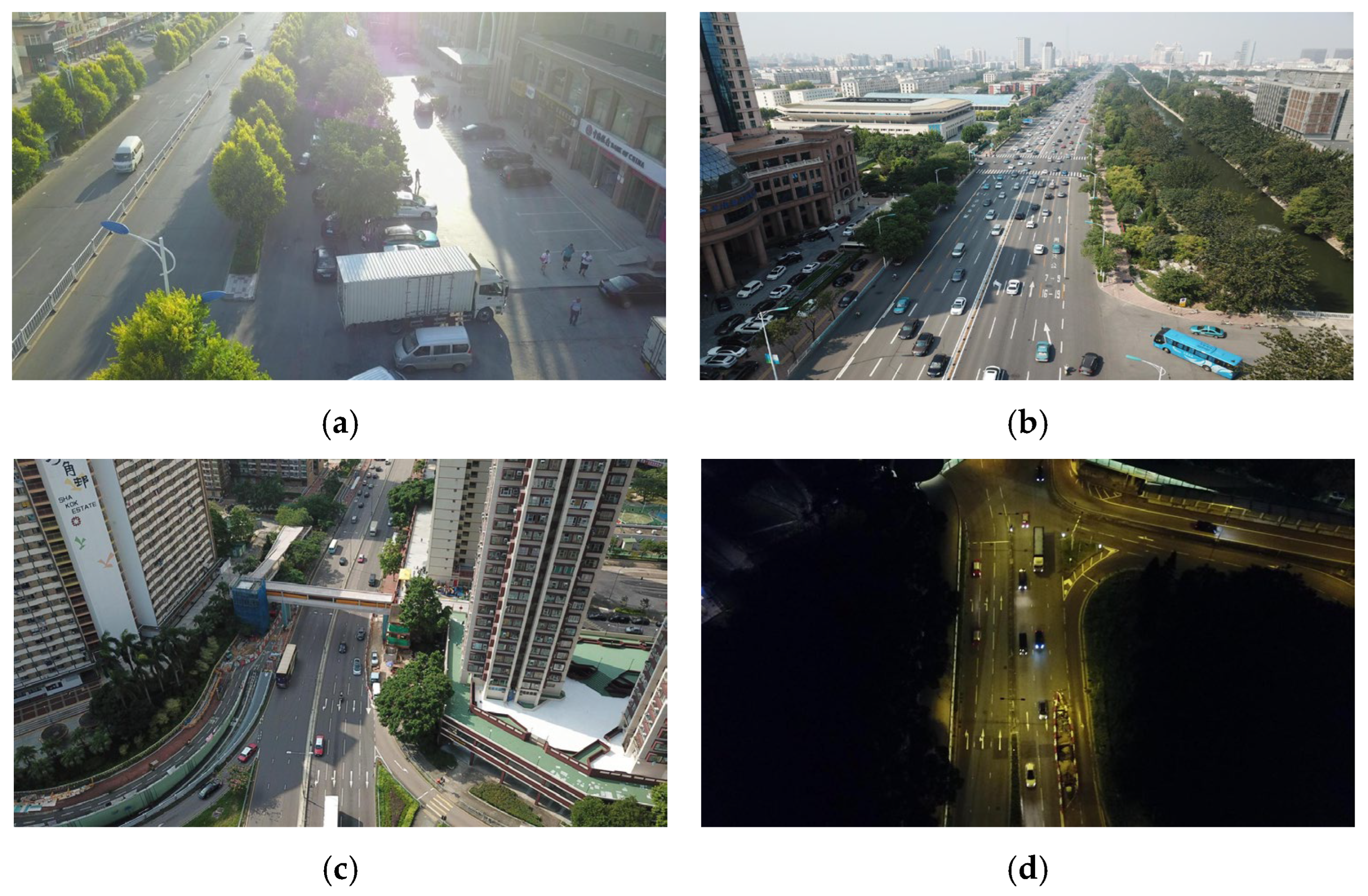

4.1. Data Set and Experimental Setup

4.2. Experimental Environment and Evaluation Metrics

4.3. Ablation Experiments

4.3.1. RMH-YOLO Ablation Experiments

4.3.2. Neck Network Ablation Experiments

4.4. Comparison Experiments

4.4.1. Comparative Experiments on Different Neck Networks

4.4.2. Comparative Experiments on Different Loss Functions

4.4.3. Comparative Experiments on Different NWD Parameters
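NWD models each box as a 2D Gaussian whose mean is the box centre and whose covariance is diag(w²/4, h²/4). The squared 2-Wasserstein distance between two such Gaussians then reduces to a Euclidean distance between (cx, cy, w/2, h/2) vectors, and NWD = exp(-W/C) for a normalising constant C. The sketch below implements that formula and a blended box loss. This section does not specify how the paper combines NWD with its IoU-based term, or what its α controls. Here α is assumed, hypothetically, to weight the NWD term against a plain IoU term, and C = 12.8 is only a placeholder default.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (cx, cy, w, h)


def nwd(a: Box, b: Box, c: float = 12.8) -> float:
    """Normalized Wasserstein Distance; `c` is a dataset-dependent constant (placeholder)."""
    w2_sq = ((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
             + ((a[2] - b[2]) / 2) ** 2 + ((a[3] - b[3]) / 2) ** 2)
    return math.exp(-math.sqrt(w2_sq) / c)


def iou(a: Box, b: Box) -> float:
    ax1, ay1, ax2, ay2 = a[0] - a[2] / 2, a[1] - a[3] / 2, a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1, bx2, by2 = b[0] - b[2] / 2, b[1] - b[3] / 2, b[0] + b[2] / 2, b[1] + b[3] / 2
    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def blended_box_loss(pred: Box, gt: Box, alpha: float = 0.5, c: float = 12.8) -> float:
    """Hypothetical blend: alpha * (1 - NWD) + (1 - alpha) * (1 - IoU)."""
    return alpha * (1 - nwd(pred, gt, c)) + (1 - alpha) * (1 - iou(pred, gt))


# Usage: a tiny 4x4 ground-truth box and two non-overlapping predictions.
gt = (10.0, 10.0, 4.0, 4.0)
near = (15.0, 10.0, 4.0, 4.0)  # centre 5 px away
far = (16.0, 10.0, 4.0, 4.0)   # centre 6 px away
```

Neither prediction overlaps the ground truth, so 1 - IoU is 1 for both and IoU alone gives no signal about which one is closer. NWD still decreases smoothly with distance, which is why it is attractive for tiny objects.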

4.4.4. Comparison with Classical Models

4.4.5. Comparison with YOLO Series Models

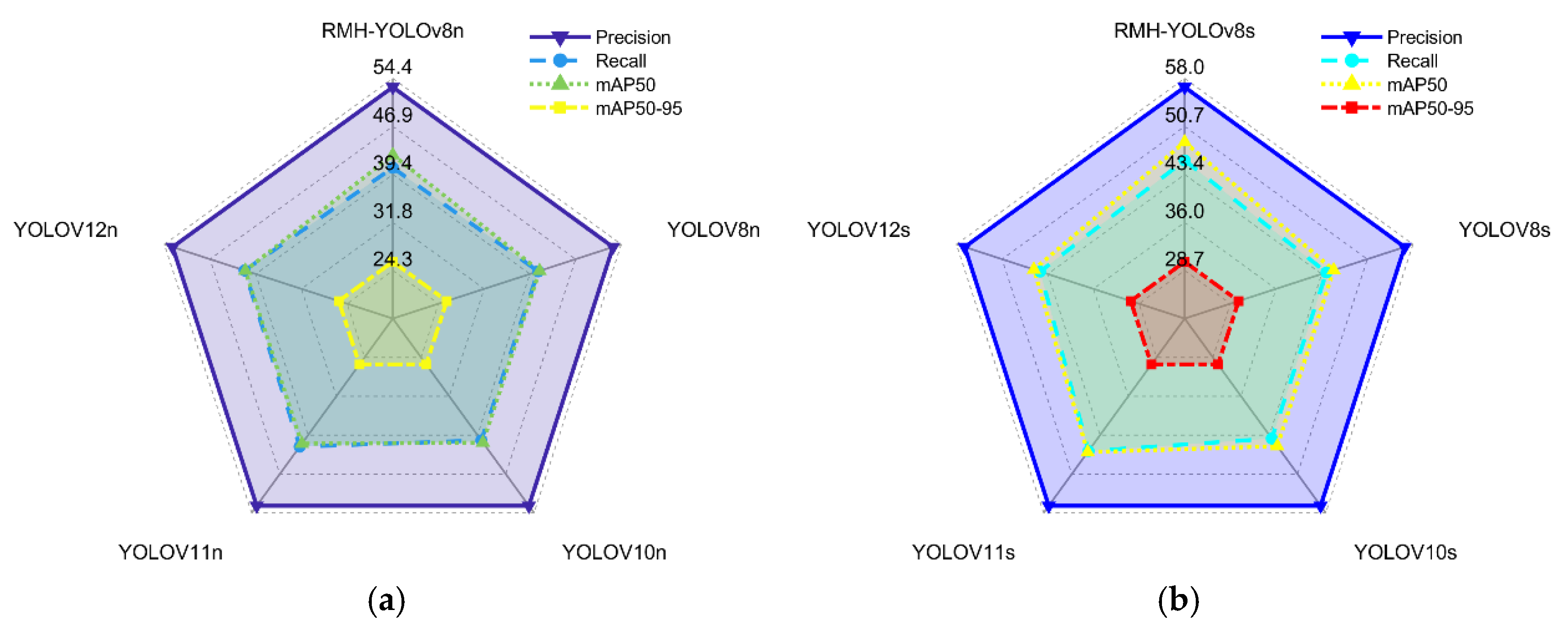

- Precision: RMH-YOLOv8n achieves 53.0%, a marked improvement over YOLOv8n (44.2%) and YOLOv10n (44.8%), indicating that a larger share of its detections are true positives.

- Recall: With a recall of 40.4%, it surpasses YOLOv8n (33.0%) and YOLOv10n (32.9%), indicating that it recovers a larger share of the ground-truth objects.

- mAP50: Reaching 42.4%, it far outperforms YOLOv8n (33.2%) and YOLOv10n (33.3%), reflecting strong overall performance at the lenient IoU (Intersection over Union) threshold of 0.5.

- mAP50:95: Attaining 25.7% (mAP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05), it leads YOLOv11n (18.8%) and YOLOv12n (18.8%) by a wide margin, indicating more accurate localization under stricter IoU thresholds.

- Precision: RMH-YOLOv8s reaches 56.7%, outperforming YOLOv8s (50.6%) and YOLOv10s (51.0%), which indicates fewer false positives.

- Recall: With a recall of 45.5%, it surpasses YOLOv8s (37.6%) and YOLOv10s (37.9%), so fewer ground-truth objects are missed.

- mAP50: Achieving 48.3%, it far exceeds YOLOv8s (38.8%) and YOLOv10s (39.4%), confirming strong performance at IoU = 0.5.

- mAP50:95: At 30.0%, it leads YOLOv8s (23.0%) and YOLOv10s (23.5%), indicating better performance across IoU thresholds and more precise localization.
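The metrics cited in these comparisons follow standard definitions. The sketch below computes precision and recall from true-positive, false-positive and false-negative counts. It also computes all-point interpolated average precision for one class at one IoU threshold, and mAP50:95 as the mean over the ten IoU thresholds 0.50 to 0.95. Evaluation toolkits differ in interpolation details; COCO-style evaluation, for example, uses 101-point interpolation. This is therefore an illustration rather than the exact evaluator behind the reported numbers, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class at one IoU threshold.

    is_tp[k] says whether detection k matched a not-yet-matched ground truth at that threshold.
    """
    if num_gt == 0 or not scores:
        return 0.0
    order = sorted(range(len(scores)), key=lambda k: scores[k], reverse=True)
    tp_cum = fp_cum = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for k in order:
        if is_tp[k]:
            tp_cum += 1
        else:
            fp_cum += 1
        precisions.append(tp_cum / (tp_cum + fp_cum))
        recalls.append(tp_cum / num_gt)
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the stepwise precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def map50_95(map_per_threshold: Sequence[float]) -> float:
    """Mean of the class-averaged AP at IoU = 0.50, 0.55, ..., 0.95."""
    assert len(map_per_threshold) == 10
    return sum(map_per_threshold) / 10
```

Because mAP50:95 also averages over strict thresholds such as 0.90 and 0.95, a model can gain on it only by placing boxes more tightly, not merely by finding more objects.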

4.4.6. Comparison with Other Improved Methods

4.5. Generalization Experiments

5. Visualization Experiments

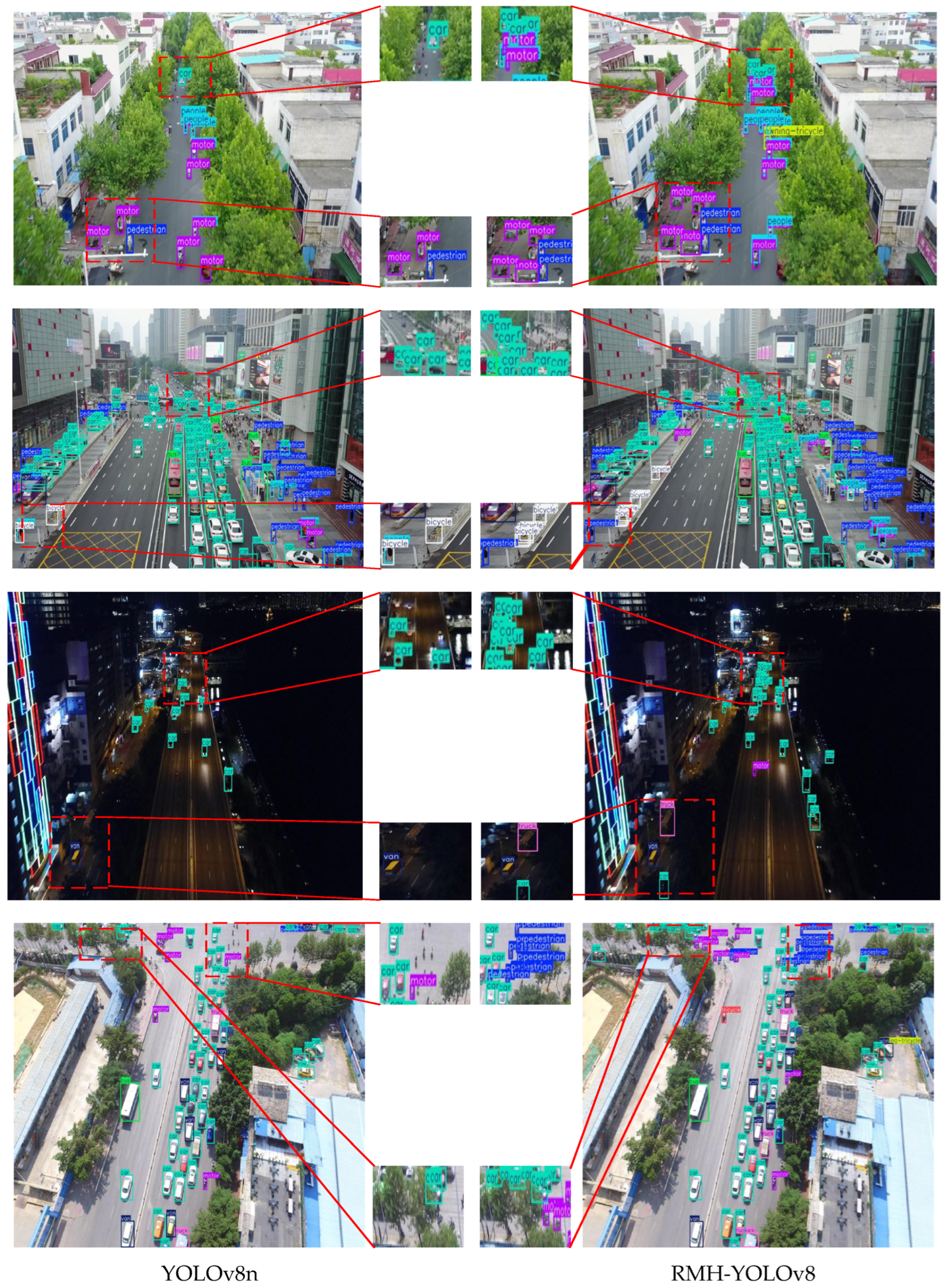

5.1. Detection Results Visualization

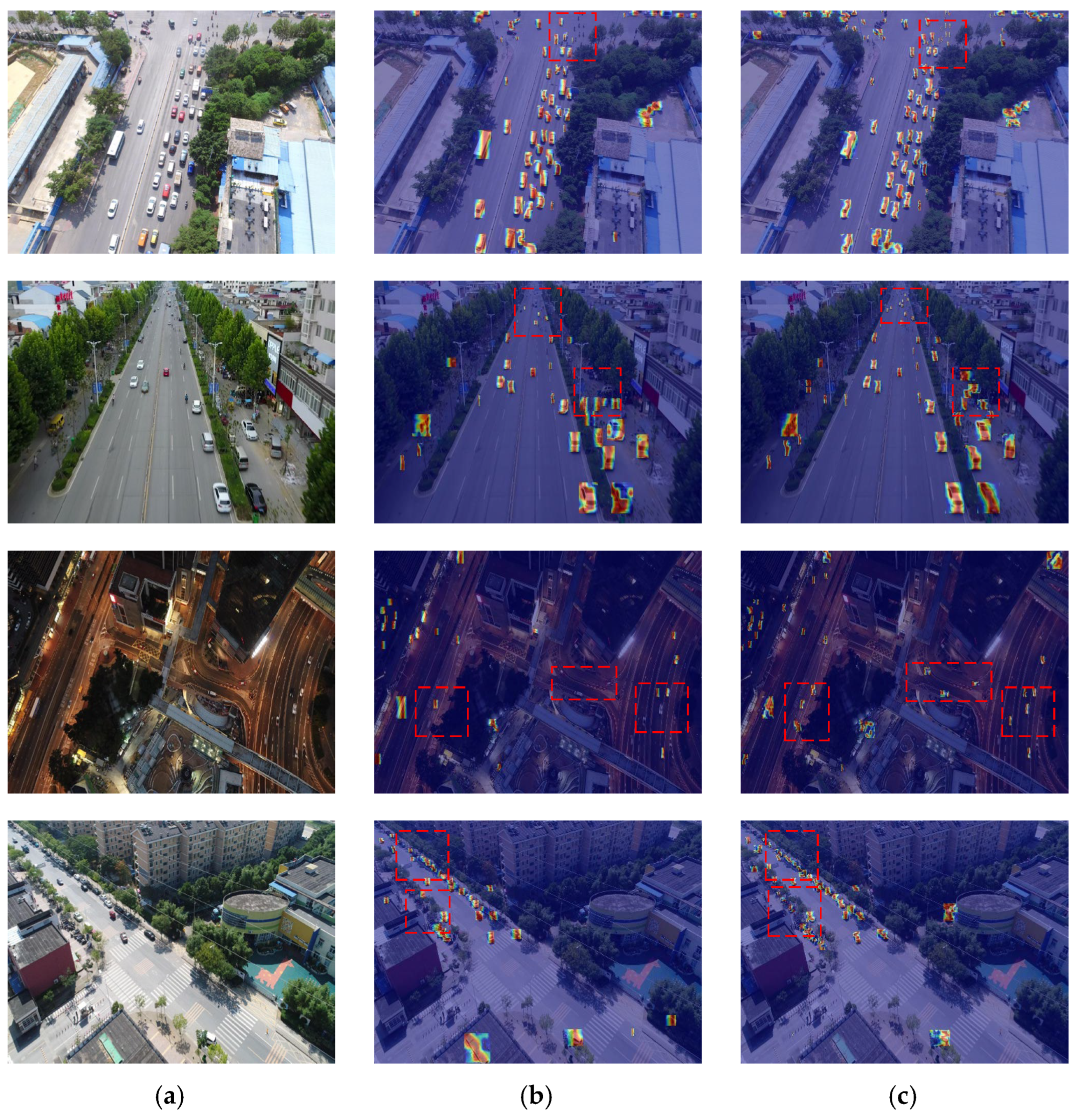

5.2. Heatmap Visualization

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Ogundele, O.M.; Tamrakar, N.; Kook, J.-H.; Kim, S.-M.; Choi, J.-I.; Karki, S.; Akpenpuun, T.D.; Kim, H.T. Real-Time Strawberry Ripeness Classification and Counting: An Optimized YOLOv8s Framework with Class-Aware Multi-Object Tracking. Agriculture 2025, 15, 1906.

2. Xu, K.; Hou, Y.; Sun, W.; Chen, D.; Lv, D.; Xing, J.; Yang, R. A Detection Method for Sweet Potato Leaf Spot Disease and Leaf-Eating Pests. Agriculture 2025, 15, 503.

3. Li, W.; Huang, L.; Lai, X. A Deep Learning Framework for Traffic Accident Detection Based on Improved YOLO11. Vehicles 2025, 7, 81.

4. Kim, S.-K.; Chan, I.C. Novel Machine Learning-Based Smart City Pedestrian Road Crossing Alerts. Smart Cities 2025, 8, 114.

5. Gao, B.; Jia, W.; Wang, Q.; Yang, G. All-Weather Forest Fire Automatic Monitoring and Early Warning Application Based on Multi-Source Remote Sensing Data: Case Study of Yunnan. Fire 2025, 8, 344.

6. Jiang, Y.; Meng, X.; Wang, J. SFGI-YOLO: A Multi-Scale Detection Method for Early Forest Fire Smoke Using an Extended Receptive Field. Forests 2025, 16, 1345.

7. Tao, S.; Zheng, J. Leveraging Prototypical Prompt Learning for Robust Bridge Defect Classification in Civil Infrastructure. Electronics 2025, 14, 1407.

8. Su, Y.; Song, Y.; Zhan, Z.; Bi, Z.; Zhou, B.; Yu, Y.; Song, Y. Research on Intelligent Identification Technology for Bridge Cracks. Infrastructures 2025, 10, 102.

9. Lei, P.; Wang, C.; Liu, P. RPS-YOLO: A Recursive Pyramid Structure-Based YOLO Network for Small Object Detection in Unmanned Aerial Vehicle Scenarios. Appl. Sci. 2025, 15, 2039.

10. Wang, H.; Gao, J. SF-DETR: A Scale-Frequency Detection Transformer for Drone-View Object Detection. Sensors 2025, 25, 2190.

11. Zhou, Y.; Wei, Y. UAV-DETR: An Enhanced RT-DETR Architecture for Efficient Small Object Detection in UAV Imagery. Sensors 2025, 25, 4582.

12. Wan, Z.; Lan, Y.; Xu, Z.; Shang, K.; Zhang, F. DAU-YOLO: A Lightweight and Effective Method for Small Object Detection in UAV Images. Remote Sens. 2025, 17, 1768.

13. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

14. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

15. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector; Springer: Cham, Switzerland, 2016.

16. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

17. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

18. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 6517–6525.

19. Zhao, S.; Chen, H.; Zhang, D.; Tao, Y.; Feng, X.; Zhang, D. SR-YOLO: Spatial-to-Depth Enhanced Multi-Scale Attention Network for Small Target Detection in UAV Aerial Imagery. Remote Sens. 2025, 17, 2441.

20. Luo, W.; Yuan, S. Enhanced YOLOv8 for Small-Object Detection in Multiscale UAV Imagery: Innovations in Detection Accuracy and Efficiency. Digit. Signal Process. 2025, 158, 104964.

21. Hui, Y.; Wang, J.; Li, B. STF-YOLO: A Small Target Detection Algorithm for UAV Remote Sensing Images Based on Improved SwinTransformer and Class Weighted Classification Decoupling Head. Measurement 2024, 224, 113936.

22. Wang, T.; Ma, Z.; Yang, T.; Zou, S. PETNet: A YOLO-Based Prior Enhanced Transformer Network for Aerial Image Detection. Neurocomputing 2023, 547, 126384.

23. Bi, J.; Li, K.; Zheng, X.; Zhang, G.; Lei, T. SPDC-YOLO: An Efficient Small Target Detection Network Based on Improved YOLOv8 for Drone Aerial Image. Remote Sens. 2025, 17, 685.

24. Liao, D.; Bi, R.; Zheng, Y.; Hua, C.; Huang, L.; Tian, X.; Liao, B. LCW-YOLO: An Explainable Computer Vision Model for Small Object Detection in Drone Images. Appl. Sci. 2025, 15, 9730.

25. Li, Y.; Li, Q.; Pan, J.; Zhou, Y.; Zhu, H.; Wei, H.; Liu, C. SOD-YOLO: Small-Object-Detection Algorithm Based on Improved YOLOv8 for UAV Images. Remote Sens. 2024, 16, 3057.

26. Xu, X.; Jiang, Y.; Chen, W.; Huang, Y.L.; Zhang, Y.; Sun, X. DAMO-YOLO: A Report on Real-Time Object Detection Design. arXiv 2022, arXiv:2211.15444.

27. Chen, Y.; Zhang, C.; Chen, B.; Huang, Y.; Sun, Y.; Wang, C.; Fu, X.; Dai, Y.; Qin, F.; Peng, Y. Accurate Leukocyte Detection Based on Deformable-DETR and Multi-Level Feature Fusion for Aiding Diagnosis of Blood Diseases. Comput. Biol. Med. 2024, 170, 107917.

28. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

29. Li, X.; Wang, W.; Wu, L.; Chen, S.; Hu, X.; Li, J.; Tang, J.; Yang, J. Generalized Focal Loss: Learning Qualified and Distributed Bounding Boxes for Dense Object Detection. arXiv 2020, arXiv:2006.04388.

30. Zhang, S.; Chi, C.; Yao, Y.; Lei, Z.; Li, S.Z. Bridging the Gap Between Anchor-Based and Anchor-Free Detection via Adaptive Training Sample Selection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 9756–9765.

31. Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving Into High Quality Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

32. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

33. Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-Aligned One-Stage Object Detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 3490–3499.

34. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

35. Wei, C.; Wang, W. RFAG-YOLO: A Receptive Field Attention-Guided YOLO Network for Small-Object Detection in UAV Images. Sensors 2025, 25, 2193.

36. Yue, M.; Zhang, L.; Huang, J.; Zhang, H. Lightweight and Efficient Tiny-Object Detection Based on Improved YOLOv8n for UAV Aerial Images. Drones 2024, 8, 276.

37. Luo, J.; Liu, Z.; Wang, Y.; Tang, A.; Zuo, H.; Han, P. Efficient Small Object Detection You Only Look Once: A Small Object Detection Algorithm for Aerial Images. Sensors 2024, 24, 7067.

38. Li, M.; Chen, Y.; Zhang, T.; Huang, W. TA-YOLO: A Lightweight Small Object Detection Model Based on Multi-Dimensional Trans-Attention Module for Remote Sensing Images. Complex Intell. Syst. 2024, 10, 5459–5473.

39. Wang, H.; Liu, J.; Zhao, J.; Zhang, J.; Zhao, D. Precision and Speed: LSOD-YOLO for Lightweight Small Object Detection. Expert Syst. Appl. 2025, 269, 126440.

| Group | Baseline | RFAT | MFHD | CSLD | InnerCIoU | NWD |
|---|---|---|---|---|---|---|
| A | √ | | | | | |
| B | √ | √ | | | | |
| C | √ | | √ | | | |
| D | √ | | | √ | | |
| E | √ | √ | √ | | | |
| F | √ | √ | √ | √ | | |
| G | √ | √ | √ | √ | √ | |
| H | √ | √ | √ | √ | √ | √ |

| Group | P/% | R/% | mAP50/% | mAP50:95/% | Parameters/M | FLOPs/G | FPS |
|---|---|---|---|---|---|---|---|
| A | 44.2 | 33.0 | 33.2 | 19.3 | 3.0 | 8.1 | 134.8 |
| B | 46.7 | 35.1 | 35.7 | 21.1 | 3.4 | 10.4 | 112.9 |
| C | 49.4 | 39.0 | 40.0 | 23.9 | 1.5 | 19.6 | 84.2 |
| D | 43.9 | 32.7 | 32.7 | 19.0 | 2.4 | 6.5 | 151.8 |
| E | 51.6 | 41.0 | 42.0 | 25.2 | 1.8 | 21.6 | 91.5 |
| F | 50.4 | 40.7 | 41.6 | 25.1 | 1.3 | 16.7 | 76.6 |
| G | 52.3 | 40.1 | 41.9 | 25.5 | 1.3 | 16.7 | 76.5 |
| H | 53.0 (+8.8) | 40.4 (+7.4) | 42.4 (+9.2) | 25.7 (+6.4) | 1.3 (−1.7) | 16.7 (+8.6) | 76.5 |
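The bracketed figures in row H are absolute differences from row A. For P, R and mAP these are percentage points, not relative gains. The short sketch below recomputes both kinds of change from the two rows and converts FPS into per-image latency, which makes the speed cost of the extra FLOPs easier to read. The dictionary keys and function names are illustrative; the numbers are copied from rows A and H.

```python
# Rows A (baseline) and H (full model) of the ablation table.
baseline = {"P": 44.2, "R": 33.0, "mAP50": 33.2, "mAP50:95": 19.3,
            "Params/M": 3.0, "FLOPs/G": 8.1, "FPS": 134.8}
full = {"P": 53.0, "R": 40.4, "mAP50": 42.4, "mAP50:95": 25.7,
        "Params/M": 1.3, "FLOPs/G": 16.7, "FPS": 76.5}


def absolute_delta(key: str) -> float:
    """Difference in the table's own units (percentage points for P, R and mAP)."""
    return round(full[key] - baseline[key], 1)


def relative_change(key: str) -> float:
    """Change relative to the baseline value, in percent."""
    return round(100 * (full[key] - baseline[key]) / baseline[key], 1)


def latency_ms(fps: float) -> float:
    """Per-image latency implied by a throughput figure."""
    return round(1000 / fps, 2)


for key in ("P", "R", "mAP50", "mAP50:95", "Params/M", "FLOPs/G"):
    print(key, absolute_delta(key), relative_change(key))
print("latency/ms", latency_ms(baseline["FPS"]), latency_ms(full["FPS"]))
```

For example, the mAP50 gain works out to 9.2 percentage points, or about 27.7% relative to the baseline, while latency rises from roughly 7.4 ms to 13.1 ms per image.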

| Group | P/% | R/% | mAP50/% | mAP50:95/% | Parameters/M | FLOPs/G | FPS |
|---|---|---|---|---|---|---|---|
| Neck_1 | 44.2 | 33.0 | 33.2 | 19.3 | 3.0 | 8.1 | 134.8 |
| Neck_2 | 47.7 | 35.5 | 36.7 | 21.8 | 2.9 | 12.2 | 124.2 |
| Neck_3 | 44.7 | 35.9 | 35.8 | 21.2 | 1.0 | 10.4 | 127.4 |
| Neck_4 | 49.4 | 39.0 | 40.0 | 23.9 | 1.5 | 19.6 | 76.6 |

| Neck | P/% | R/% | mAP50/% | mAP50:95/% | Parameters/M | FLOPs/G | FPS |
|---|---|---|---|---|---|---|---|
| PAFPN (baseline) | 44.2 | 33.0 | 33.2 | 19.3 | 3.0 | 8.1 | 134.8 |
| GDFPN [26] | 44.7 | 33.2 | 33.2 | 19.0 | 3.3 | 8.3 | 131.9 |
| HSFPN [27] | 42.7 | 31.4 | 31.2 | 18.0 | 1.9 | 6.9 | 109.9 |
| BiFPN [28] | 44.7 | 32.9 | 33.4 | 19.5 | 2.0 | 7.1 | 102.8 |
| MFHD (ours) | 49.4 | 39.0 | 40.0 | 23.9 | 1.5 | 19.6 | 76.5 |

| Loss | P/% | R/% | mAP50/% | mAP50:95/% |
|---|---|---|---|---|
| CIoU | 50.4 | 40.7 | 41.6 | 25.1 |
| DIoU | 50.6 | 40.4 | 41.9 | 25.3 |
| ShapeIoU | 52.5 | 39.4 | 41.6 | 25.2 |
| SIoU | 51.6 | 39.6 | 41.2 | 25.0 |
| InnerCIoU (ours) | 52.3 | 40.1 | 41.9 | 25.5 |

| α | P/% | R/% | mAP50/% | mAP50:95/% |
|---|---|---|---|---|
| 0.1 | 53.2 | 40.6 | 42.3 | 25.2 |
| 0.3 | 52.3 | 40.0 | 42.2 | 25.4 |
| 0.5 | 53.6 | 40.1 | 42.2 | 25.5 |
| 0.7 | 52.0 | 40.5 | 41.9 | 25.3 |
| 0.9 | 51.3 | 40.5 | 41.6 | 25.1 |

| Model | P/% | R/% | mAP50/% | mAP50:95/% | Parameters/M | FLOPs/G | FPS |
|---|---|---|---|---|---|---|---|
| GFL [29] | 47.1 | 36.2 | 35.4 | 21.0 | 32.3 | 206.0 | 15.2 |
| ATSS [30] | 46.4 | 37.8 | 35.9 | 21.7 | 38.9 | 110.0 | 35.1 |
| Cascade-RCNN [31] | 49.6 | 37.3 | 39.5 | 24.2 | 69.3 | 236.0 | 30.7 |
| Faster-RCNN [32] | 48.6 | 37.2 | 39.2 | 23.5 | 41.4 | 208.0 | 17.4 |
| TOOD [33] | 47.8 | 37.6 | 37.1 | 22.1 | 32.0 | 199.0 | 18.6 |
| RT-DETR [34] | 60.4 | 45.1 | 46.5 | 28.0 | 19.9 | 57.0 | 69.8 |
| RMH-YOLOv8n (ours) | 53.0 | 40.4 | 42.4 | 25.7 | 1.3 | 16.7 | 76.5 |

| Model | P/% | R/% | mAP50/% | mAP50:95/% | Parameters/M | FLOPs/G |
|---|---|---|---|---|---|---|
| RMH-YOLOv8n | 53.0 | 40.4 | 42.4 | 25.7 | 1.3 | 16.7 |
| YOLOv8n | 44.2 | 33.0 | 33.2 | 19.3 | 3.0 | 8.1 |
| YOLOv10n | 44.8 | 32.9 | 33.3 | 19.1 | 2.3 | 6.5 |
| YOLOv11n | 42.7 | 32.7 | 32.2 | 18.8 | 2.6 | 6.3 |
| YOLOv12n | 42.8 | 32.4 | 32.3 | 18.8 | 2.6 | 6.3 |
| RMH-YOLOv8s | 56.7 | 45.5 | 48.3 | 30.0 | 5.2 | 64.8 |
| YOLOv8s | 50.6 | 37.6 | 38.8 | 23.0 | 11.1 | 28.5 |
| YOLOv10s | 51.0 | 37.9 | 39.4 | 23.5 | 7.2 | 21.4 |
| YOLOv11s | 48.7 | 38.9 | 39.1 | 23.4 | 9.4 | 21.3 |
| YOLOv12s | 49.5 | 37.5 | 38.5 | 23.1 | 9.2 | 21.2 |

| Model | P/% | R/% | mAP50/% | mAP50:95/% | Parameters/M | FLOPs/G |
|---|---|---|---|---|---|---|
| RFAG-YOLO [35] | 49.6 | 37.8 | 38.9 | 23.1 | 5.94 | 15.7 |
| LE-YOLO [36] | - | - | 39.3 | 22.7 | 2.1 | 13.1 |
| ESOD-YOLO [37] | - | - | 29.3 | 16.6 | 4.47 | 14.3 |
| TA-YOLO [38] | 50.2 | 37.3 | 40.1 | 24.1 | 3.8 | - |
| LSOD-YOLO [39] | 48.4 | 38.2 | 37 | - | 3.8 | 33.9 |
| RMH-YOLOv8n | 53.0 | 40.4 | 42.4 | 25.7 | 1.3 | 16.7 |
| RMH-YOLOv8s | 56.7 | 45.5 | 48.3 | 30.0 | 5.2 | 64.8 |

| Model | P/% | R/% | mAP50/% | mAP50:95/% | Parameters/M | FLOPs/G |
|---|---|---|---|---|---|---|
| YOLOv8n | 38.9 | 25.5 | 22.6 | 7.2 | 3.0 | 8.1 |
| RMH-YOLOv8n | 44.4 | 33.1 | 28.5 | 8.9 | 1.3 | 16.7 |
| YOLOv8s | 37.7 | 29.1 | 25.3 | 8.1 | 11.1 | 28.4 |
| RMH-YOLOv8s | 48.3 | 33.7 | 30.5 | 9.8 | 5.2 | 64.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, F.; He, M.; Liu, J.; Jin, H. RMH-YOLO: A Refined Multi-Scale Architecture for Small-Target Detection in UAV Aerial Imagery. Sensors 2025, 25, 7088. https://doi.org/10.3390/s25227088


Chicago/Turabian Style: Yang, Fan, Min He, Jiuxian Liu, and Haochen Jin. 2025. "RMH-YOLO: A Refined Multi-Scale Architecture for Small-Target Detection in UAV Aerial Imagery" Sensors 25, no. 22: 7088. https://doi.org/10.3390/s25227088
