Multi-Feature Fusion for Fiber Optic Vibration Identification Based on Denoising Diffusion Probabilistic Models

Abstract

1. Introduction

2. Methods

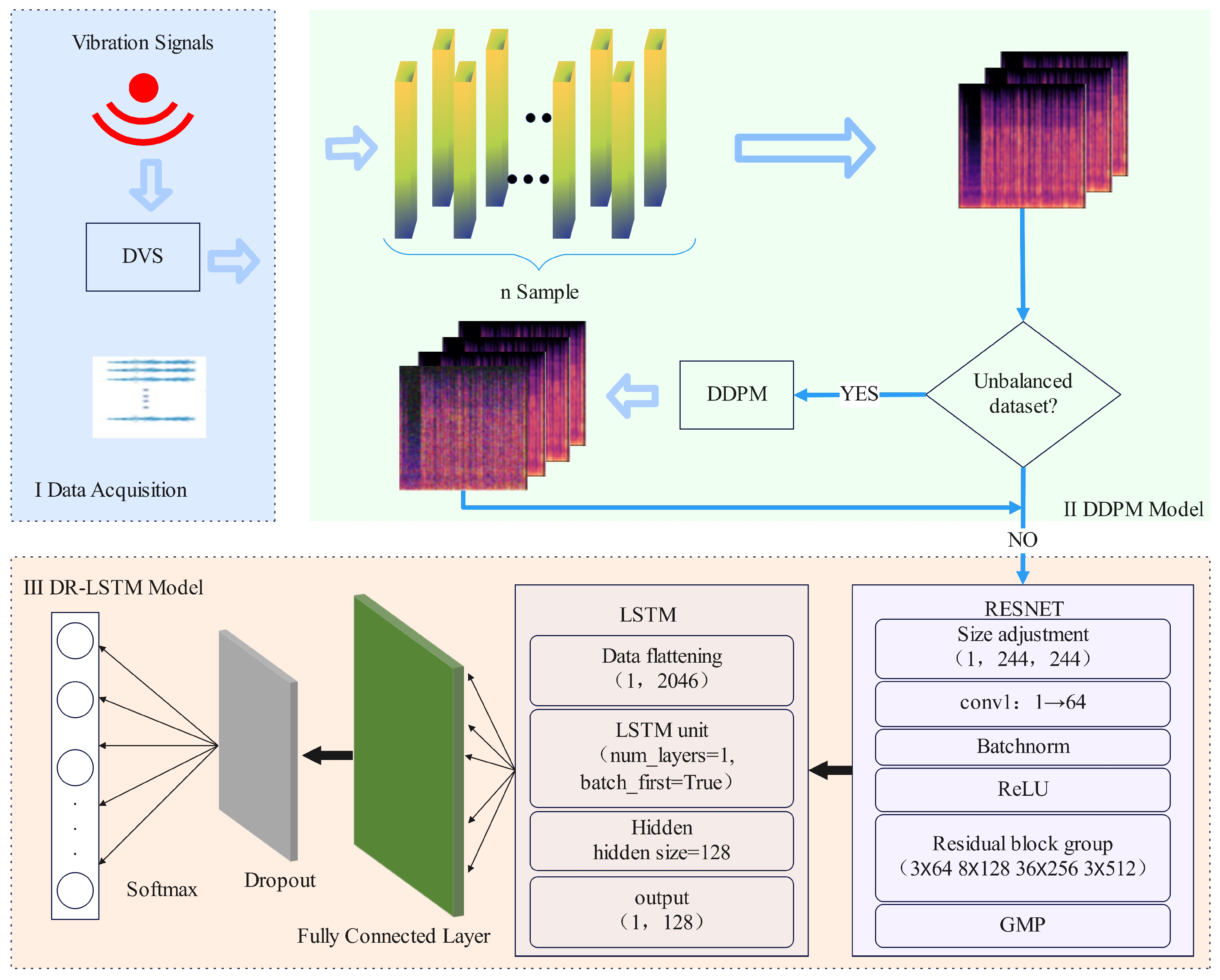

2.1. DR-LSTM Model Framework

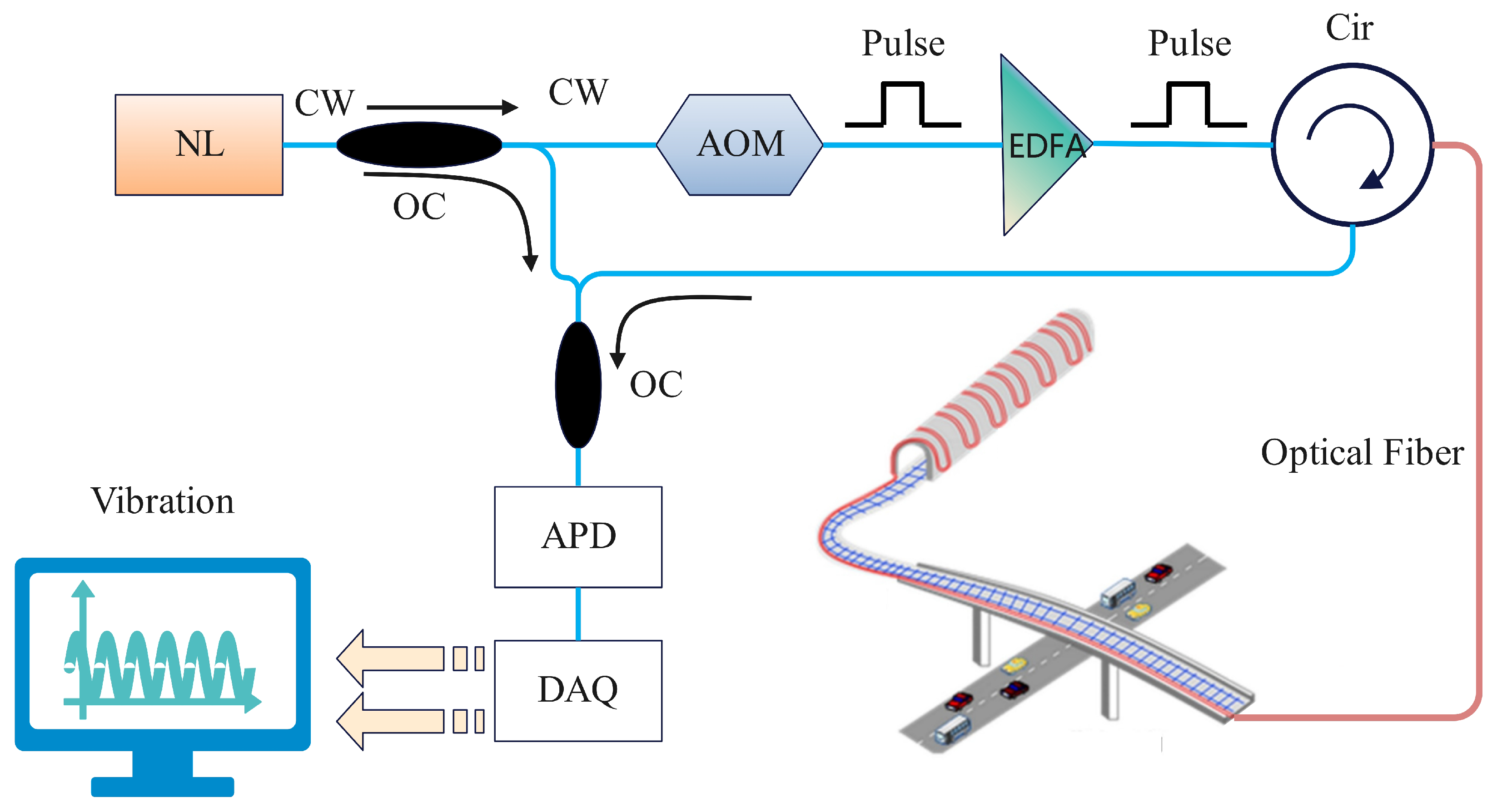

2.2. Transformation of Multi-Dimensional Vibration Signals into Mel Spectrogram Images

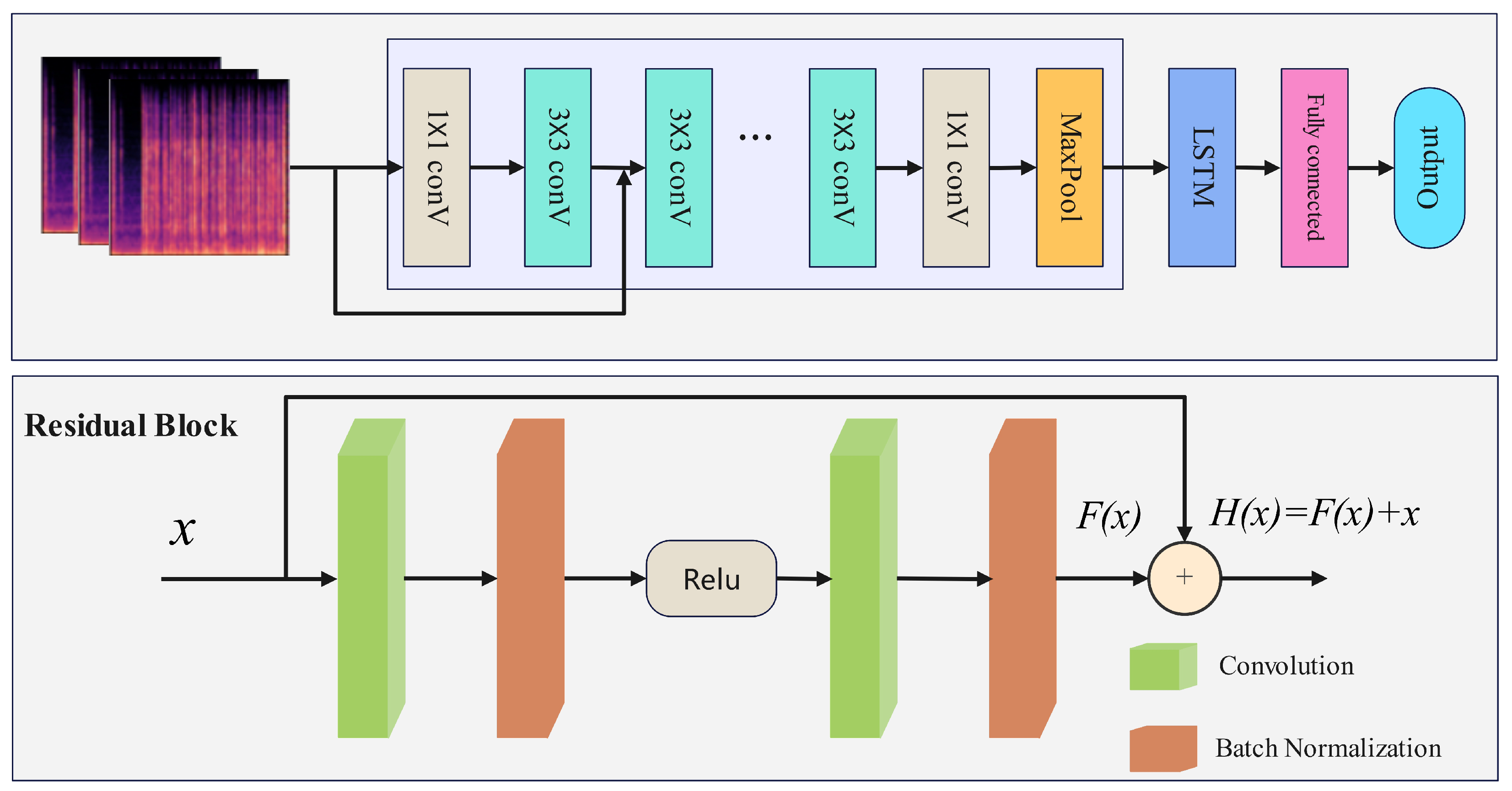

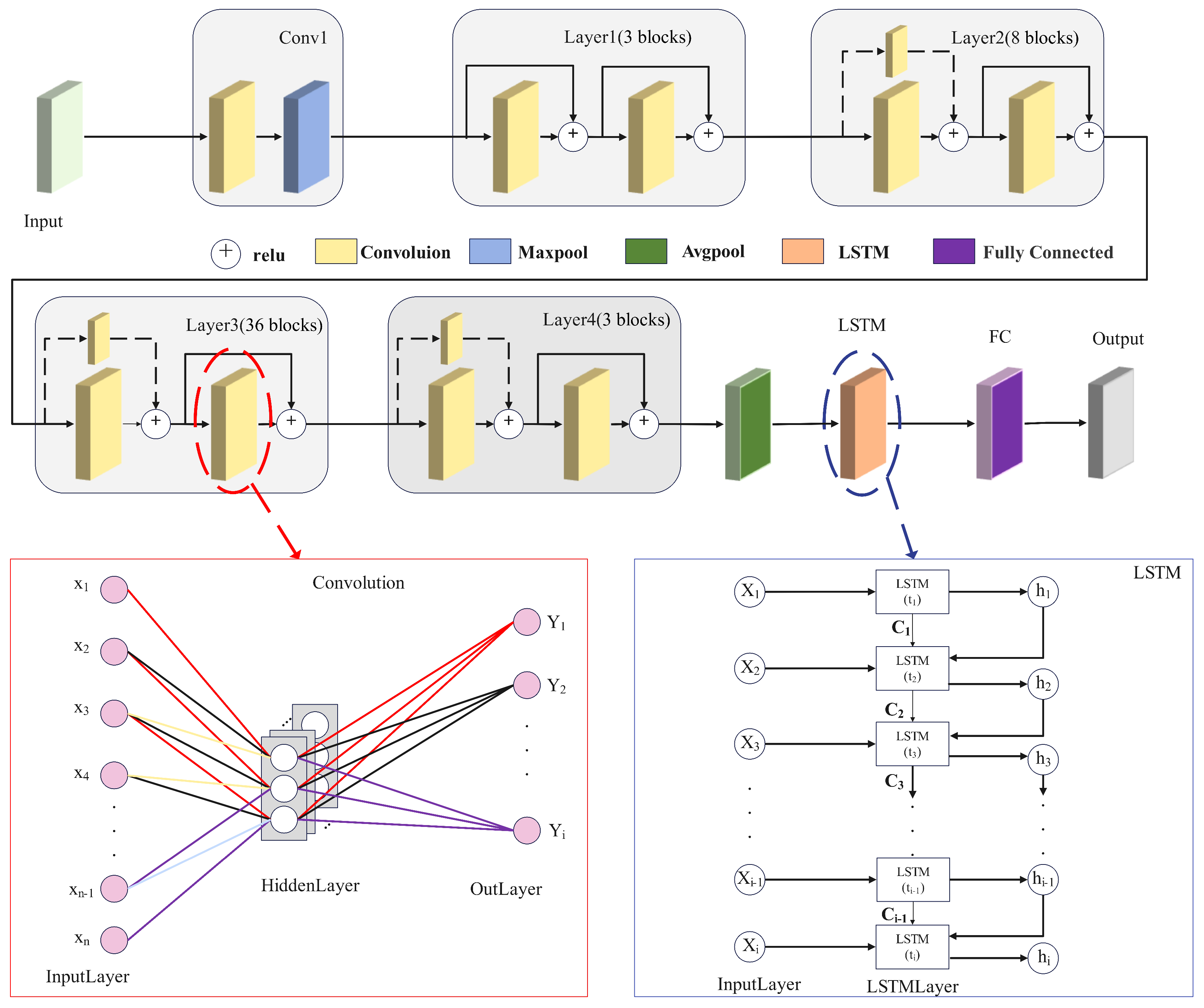
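Section 2.2 converts the vibration signals into Mel spectrogram images. The following is a minimal NumPy sketch of a standard log-Mel pipeline: a Hann-windowed STFT, a triangular HTK-style Mel filterbank, and log compression. It assumes that "multi-dimensional" means several sensing channels, which are stacked as image channels. The sampling rate, frame length `n_fft`, hop size `hop`, band count `n_mels` and all helper names are hypothetical placeholders, not the authors' settings.

```python
import numpy as np

# Minimal log-Mel sketch. All parameter values and helper names are
# hypothetical placeholders, not the settings used in the paper.

def hz_to_mel(f):
    # HTK-style Mel scale
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters mapping the n_fft // 2 + 1 FFT bins onto n_mels Mel bands."""
    n_bins = n_fft // 2 + 1
    fft_freqs = np.linspace(0.0, sr / 2.0, n_bins)
    # n_mels + 2 points equally spaced on the Mel axis give the band edges in Hz
    hz_points = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    fb = np.zeros((n_mels, n_bins))
    for i in range(n_mels):
        lo, center, hi = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - lo) / (center - lo)
        falling = (hi - fft_freqs) / (hi - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def log_mel_spectrogram(x, sr, n_fft=256, hop=64, n_mels=32):
    """Log-Mel spectrogram of a 1-D signal, shape (n_mels, n_frames)."""
    x = np.asarray(x, dtype=float)
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2   # (n_frames, n_fft // 2 + 1)
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T   # (n_frames, n_mels)
    return np.log(mel + 1e-10).T                        # (n_mels, n_frames)

def channels_to_image(channels, sr, **kwargs):
    """Stack per-channel log-Mel spectrograms into an (H, W, C) array scaled to [0, 1]."""
    specs = np.stack([log_mel_spectrogram(c, sr, **kwargs) for c in channels], axis=-1)
    return (specs - specs.min()) / (specs.max() - specs.min() + 1e-12)

# Usage with a synthetic 3-channel, 1 s signal (hypothetical data).
rng = np.random.default_rng(0)
sr = 1000
t = np.arange(sr) / sr
channels = [np.sin(2 * np.pi * 50 * t) + 0.1 * rng.standard_normal(sr) for _ in range(3)]
image = channels_to_image(channels, sr)
print(image.shape)  # (32, 12, 3)
```

Stacking channels along the last axis yields an array that an image backbone such as ResNet can consume directly; a library implementation (for example librosa) would normally replace the hand-written filterbank.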

2.3. The DR-LSTM Model
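The paper's title and the "+DDPM" baselines in the result tables indicate that a denoising diffusion probabilistic model (DDPM) is one component of DR-LSTM alongside ResNet and LSTM; that reading of the name is an inference. As a minimal sketch of the standard DDPM forward (noising) process of Ho et al. (2020), assuming their linear β schedule (β from 1e-4 to 0.02 over T = 1000 steps), which is not confirmed to be the schedule used here, the closed form q(x_t | x_0) = N(√ᾱ_t x_0, (1 − ᾱ_t) I) can be written as:

```python
import numpy as np

# Linear beta schedule of Ho et al. (2020): beta from 1e-4 to 0.02 over T = 1000 steps.
# Assumed here for illustration; not confirmed to be the paper's schedule.
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)  # alpha_bar_t = product of alpha_s for s <= t

def q_sample(x0, t, noise):
    """Closed-form draw of x_t ~ q(x_t | x_0); t is a 0-based index into the schedule."""
    a_bar = alpha_bars[t]
    return np.sqrt(a_bar) * x0 + np.sqrt(1.0 - a_bar) * noise

def q_sample_stepwise(x0, t, rng):
    """Same distribution, obtained by applying the one-step kernel q(x_s | x_{s-1}) t + 1 times."""
    x = np.asarray(x0, dtype=float)
    for s in range(t + 1):
        x = np.sqrt(alphas[s]) * x + np.sqrt(betas[s]) * rng.standard_normal(x.shape)
    return x

def simple_loss(eps_pred, eps_true):
    """L_simple of Ho et al.: mean squared error between true and predicted noise."""
    return float(np.mean((eps_true - eps_pred) ** 2))

# Usage: noise a (hypothetical) normalized spectrogram image to step t.
rng = np.random.default_rng(0)
x0 = rng.random((32, 12))
t = 500
eps = rng.standard_normal(x0.shape)
x_t = q_sample(x0, t, eps)
# A denoiser eps_theta(x_t, t) (not shown) would be trained to minimize
# simple_loss(eps_theta(x_t, t), eps); sampling then runs the learned reverse chain.
```

The stepwise function is included only as a check that the closed form matches the Markov chain; in training, the closed form lets each step t be sampled directly without iterating.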

2.4. Model Evaluation and Analysis
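Section 2.4 evaluates the models with accuracy, precision, recall and F1 score. In the result tables, recall equals accuracy in nearly every row, which is what support-weighted averaging over classes produces: weighted recall = Σ_c (n_c/N)(TP_c/n_c) = Σ_c TP_c/N, which is exactly accuracy. A minimal pure-Python sketch assuming that weighting (the same weighting as scikit-learn's `average="weighted"`), inferred from the tables rather than stated, with illustrative class labels:

```python
from collections import Counter

# Assumption: support-weighted averaging, inferred from Accuracy == Recall in most table rows.
def weighted_metrics(y_true, y_pred):
    """Return accuracy and support-weighted precision, recall and F1."""
    n = len(y_true)
    support = Counter(y_true)
    labels = sorted(set(y_true) | set(y_pred))
    acc = sum(t == p for t, p in zip(y_true, y_pred)) / n
    prec = rec = f1 = 0.0
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        pred_c = sum(p == c for p in y_pred)
        true_c = support[c]
        p_c = tp / pred_c if pred_c else 0.0
        r_c = tp / true_c if true_c else 0.0
        f_c = 2 * p_c * r_c / (p_c + r_c) if p_c + r_c else 0.0
        w = true_c / n  # weight = share of class c among the true labels
        prec += w * p_c
        rec += w * r_c
        f1 += w * f_c
    return acc, prec, rec, f1

# Usage with illustrative labels.
y_true = ["dig", "dig", "knock", "knock", "walk", "walk"]
y_pred = ["dig", "knock", "knock", "knock", "walk", "dig"]
acc, prec, rec, f1 = weighted_metrics(y_true, y_pred)
print(f"acc={acc:.4f} prec={prec:.4f} rec={rec:.4f} f1={f1:.4f}")
# acc=0.6667 prec=0.7222 rec=0.6667 f1=0.6556
```

Note that weighted F1 is the weighted mean of per-class F1 scores, so it is generally not the harmonic mean of the reported weighted precision and recall.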

3. Experiments and Analysis of Results

3.1. Data Description

3.2. Experimental Setup

3.3. Experimental Results and Discussion

4. Conclusions and Prospects

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset | Class | Training Set Before Processing | Training Set After Processing |

|---|---|---|---|

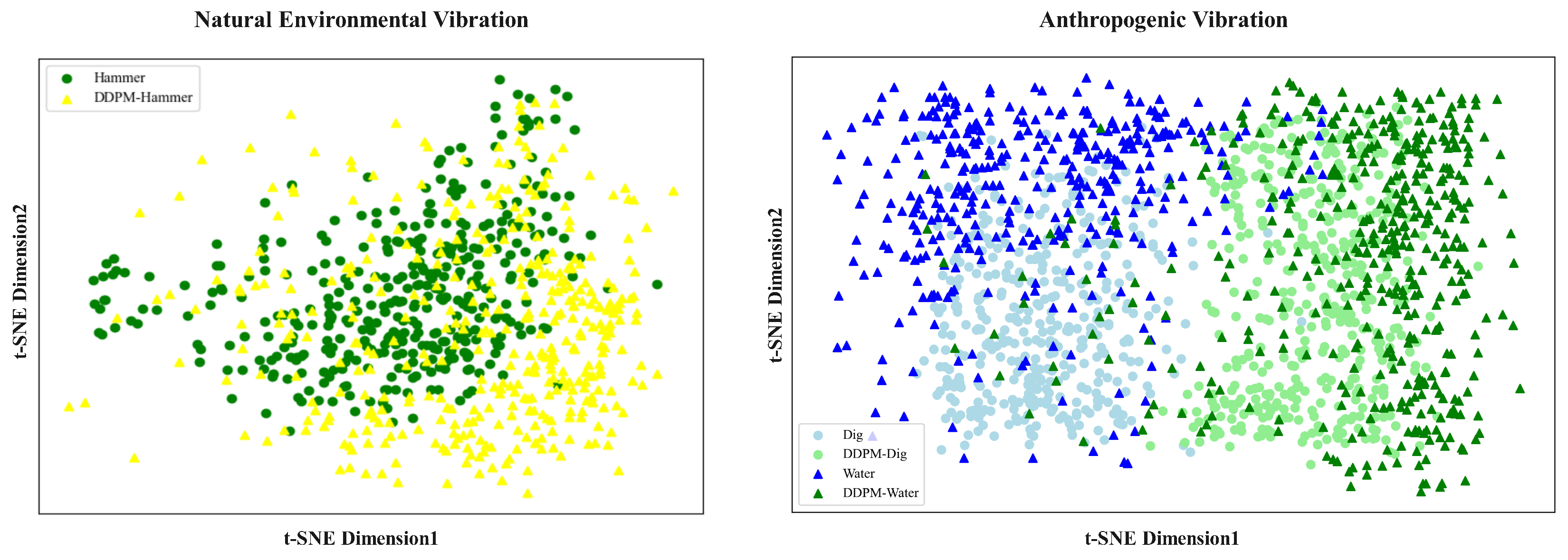

| Natural Environmental Vibration | Pick | 3558 | 3558 |

| Excavator | 3559 | 3559 | |

| Hammer | 3332 | 402 | |

| Anthropogenic Vibration | Background | 2357 | 2357 |

| Dig | 2010 | 400 | |

| Knock | 2024 | 2024 | |

| Water | 1802 | 400 | |

| Shake | 2182 | 2182 | |

| Walk | 1960 | 1960 |
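The training-set counts in the table can be tallied to show how strongly the processing step unbalances the classes, since Hammer, Dig and Water are reduced to roughly 400 samples each. The sketch only re-computes totals and the majority-to-minority ratio from the tabulated numbers; `counts` and `summarize` are illustrative names, and nothing is assumed about how the processing itself was done.

```python
# Training-set counts copied from the table: (before processing, after processing).
counts = {
    "Natural Environmental Vibration": {
        "Pick": (3558, 3558), "Excavator": (3559, 3559), "Hammer": (3332, 402),
    },
    "Anthropogenic Vibration": {
        "Background": (2357, 2357), "Dig": (2010, 400), "Knock": (2024, 2024),
        "Water": (1802, 400), "Shake": (2182, 2182), "Walk": (1960, 1960),
    },
}

def summarize(classes):
    """Return (total before, total after, majority/minority ratio after processing)."""
    before = sum(b for b, _ in classes.values())
    after_counts = [a for _, a in classes.values()]
    ratio = max(after_counts) / min(after_counts)
    return before, sum(after_counts), ratio

for name, classes in counts.items():
    before, after, ratio = summarize(classes)
    print(f"{name}: {before} -> {after} samples, imbalance ratio {ratio:.2f}")
# Natural Environmental Vibration: 10449 -> 7519 samples, imbalance ratio 8.85
# Anthropogenic Vibration: 12335 -> 9323 samples, imbalance ratio 5.89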

| Dataset | Model | Accuracy | Precision | Recall | F1 Score |

|---|---|---|---|---|---|

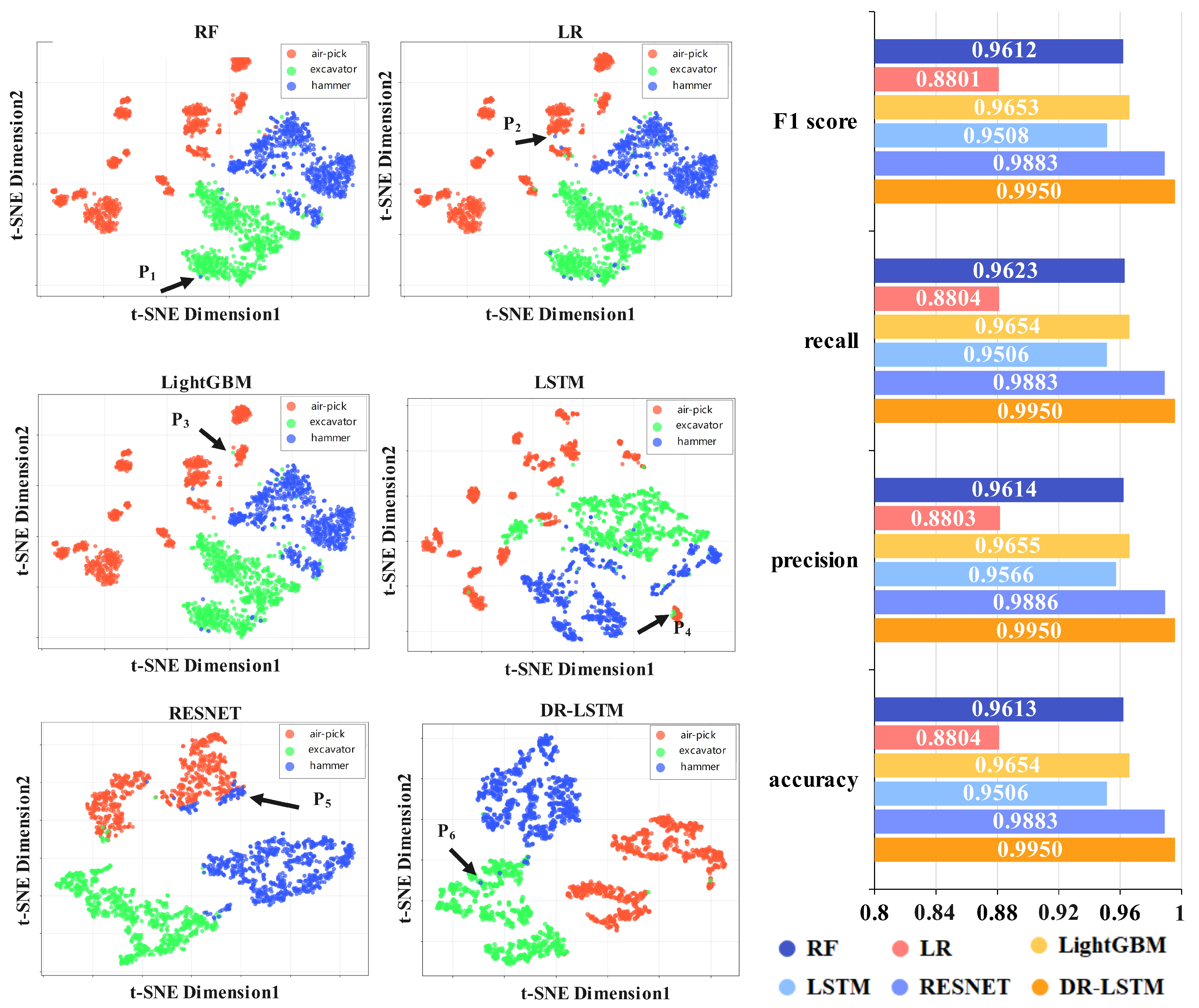

| Natural Environmental Vibration | RF | 0.9613 | 0.9614 | 0.9623 | 0.9612 |

| LR | 0.8804 | 0.8803 | 0.8804 | 0.8801 | |

| LightGBM | 0.9654 | 0.9655 | 0.9654 | 0.9653 | |

| DR-LSTM | 0.9950 | 0.9950 | 0.9950 | 0.9950 | |

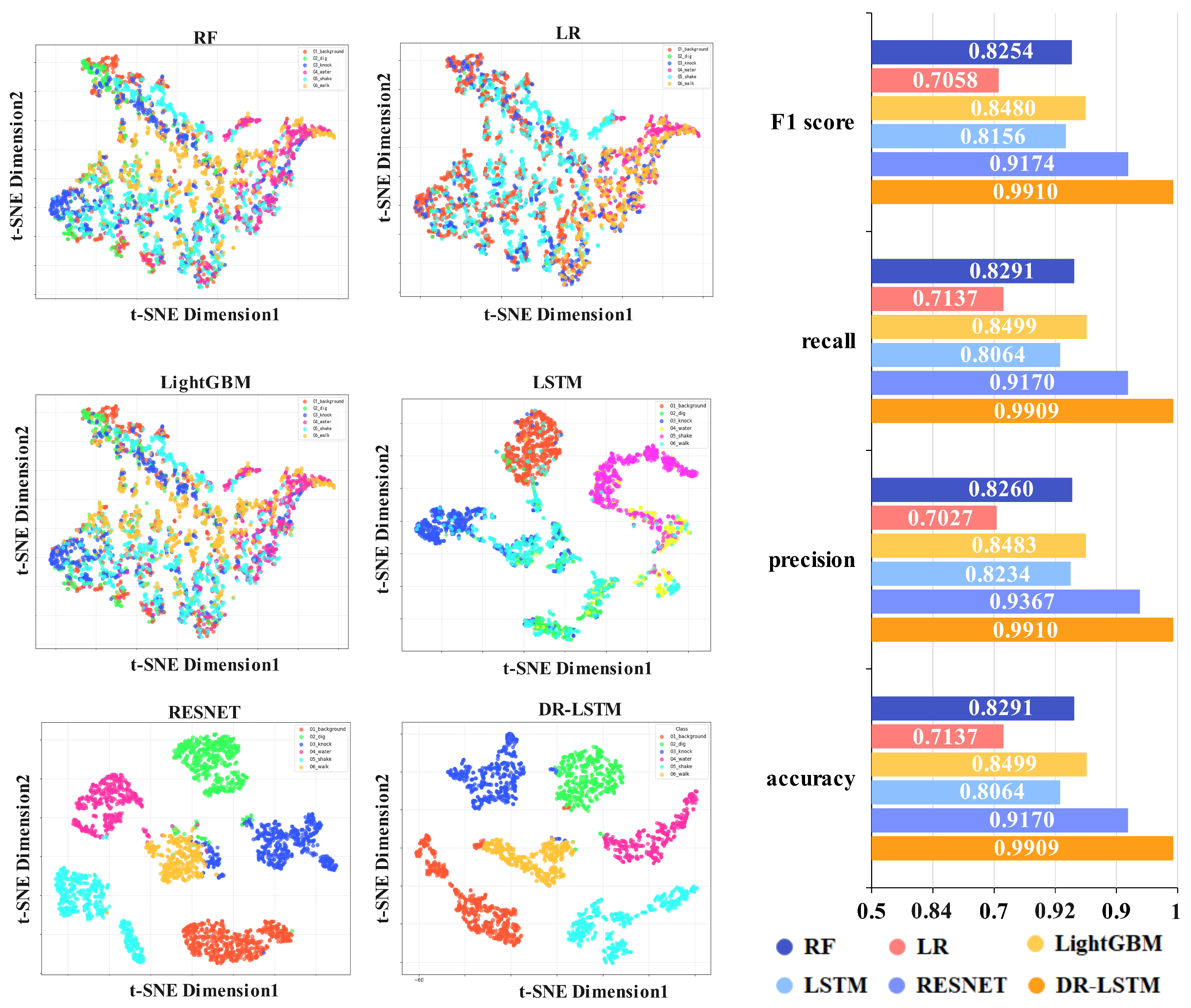

| Anthropogenic Vibration | RF | 0.8291 | 0.8260 | 0.8291 | 0.8254 |

| LR | 0.7137 | 0.7027 | 0.7137 | 0.7058 | |

| LightGBM | 0.8499 | 0.8483 | 0.8499 | 0.8480 | |

| DR-LSTM | 0.9909 | 0.9910 | 0.9909 | 0.9910 |

| Dataset | Model | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|
| Natural Environmental Vibration | LSTM | 0.9506 | 0.9566 | 0.9506 | 0.9508 |
| | RESNET | 0.9883 | 0.9886 | 0.9883 | 0.9883 |
| | LSTM+DDPM | 0.9506 | 0.9566 | 0.9506 | 0.9508 |
| | RESNET+DDPM | 0.9883 | 0.9886 | 0.9883 | 0.9883 |
| | DR-LSTM | 0.9950 | 0.9950 | 0.9950 | 0.9950 |
| Anthropogenic Vibration | LSTM | 0.8064 | 0.8234 | 0.8064 | 0.8156 |
| | RESNET | 0.9170 | 0.9367 | 0.9170 | 0.9174 |
| | LSTM+DDPM | 0.8064 | 0.8234 | 0.8064 | 0.8156 |
| | RESNET+DDPM | 0.9170 | 0.9367 | 0.9170 | 0.9174 |
| | DR-LSTM | 0.9909 | 0.9910 | 0.9909 | 0.9910 |

| Dataset | Model | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|
| Natural Environmental Vibration | LSTM | 0.6320 | 0.4695 | 0.6320 | 0.5316 |
| | RESNET | 0.6914 | 0.8384 | 0.6914 | 0.5904 |
| | LSTM+DDPM | 0.7234 | 0.7046 | 0.7234 | 0.7046 |
| | RESNET+DDPM | 0.7623 | 0.7896 | 0.7623 | 0.7896 |
| | RES+LSTM | 0.7423 | 0.6927 | 0.7423 | 0.6123 |
| | RES+LSTM+Transformer-based Diffusion | 0.8305 | 0.8740 | 0.8305 | 0.7781 |
| | RES+LSTM+VQ-VAE | 0.8490 | 0.8878 | 0.8490 | 0.7949 |
| | DR-LSTM | 0.8793 | 0.8793 | 0.8793 | 0.8793 |
| Anthropogenic Vibration | LSTM | 0.8142 | 0.8518 | 0.8142 | 0.8006 |
| | RESNET | 0.9034 | 0.9170 | 0.9034 | 0.8986 |
| | LSTM+DDPM | 0.8646 | 0.8513 | 0.8646 | 0.8513 |
| | RESNET+DDPM | 0.9164 | 0.9053 | 0.9145 | 0.9056 |
| | RES+LSTM | 0.8962 | 0.8764 | 0.8962 | 0.8764 |
| | RES+LSTM+Transformer-based Diffusion | 0.9183 | 0.9294 | 0.9183 | 0.9157 |
| | RES+LSTM+VQ-VAE | 0.8930 | 0.9012 | 0.8858 | 0.8837 |
| | DR-LSTM | 0.9274 | 0.9352 | 0.9274 | 0.9251 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, K.; Wang, T.; Wu, J.; Zheng, Q.; Chen, C.; Lin, J. Multi-Feature Fusion for Fiber Optic Vibration Identification Based on Denoising Diffusion Probabilistic Models. Sensors 2025, 25, 7085. https://doi.org/10.3390/s25227085

Zhang K, Wang T, Wu J, Zheng Q, Chen C, Lin J. Multi-Feature Fusion for Fiber Optic Vibration Identification Based on Denoising Diffusion Probabilistic Models. Sensors. 2025; 25(22):7085. https://doi.org/10.3390/s25227085

Chicago/Turabian Style: Zhang, Keju, Tingshuo Wang, Jianwei Wu, Qin Zheng, Caiyi Chen, and Jiaxiang Lin. 2025. "Multi-Feature Fusion for Fiber Optic Vibration Identification Based on Denoising Diffusion Probabilistic Models" Sensors 25, no. 22: 7085. https://doi.org/10.3390/s25227085

APA Style: Zhang, K., Wang, T., Wu, J., Zheng, Q., Chen, C., & Lin, J. (2025). Multi-Feature Fusion for Fiber Optic Vibration Identification Based on Denoising Diffusion Probabilistic Models. Sensors, 25(22), 7085. https://doi.org/10.3390/s25227085