An Unsupervised Image Enhancement Framework for Multiple Fault Detection of Insulators

Abstract

1. Introduction

- (i) A fault detection model for transmission-line insulators under low-light and high-exposure conditions is developed, covering four categories: normal, self-explosion, damage, and flashover;
- (ii) A grayscale feature-guided image generation structure is proposed, which uses grayscale features to fine-tune the brightness of local image regions;
- (iii) For training on unpaired data, a brightness consistency constraint loss is constructed. It improves the balance of the overall brightness distribution by constraining the global brightness of the generated image against that of the reference image.
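The idea in contribution (ii) can be illustrated with a minimal sketch. It is not the network described in this paper: the attention rule (distance of each pixel's luminance from a mid-gray target), the function names, and the `target` parameter are all assumptions chosen for illustration. The sketch only shows how a grayscale map could steer a brightness correction toward regions that are too dark or too bright while leaving well-exposed regions almost untouched.

```python
import numpy as np

# ITU-R BT.601 luma weights for converting RGB to grayscale.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def grayscale_map(rgb: np.ndarray) -> np.ndarray:
    """Return the luminance of an RGB image in [0, 1]; (H, W, 3) -> (H, W)."""
    return rgb @ LUMA_WEIGHTS


def brightness_attention(rgb: np.ndarray, target: float = 0.5) -> np.ndarray:
    """Hypothetical attention map (an assumption, not the paper's design).

    The weight is 0 where luminance equals `target` and grows to 1 for
    pixels that are as far from `target` as the [0, 1] range allows.
    """
    gray = grayscale_map(rgb)
    return np.abs(gray - target) / max(target, 1.0 - target)


def apply_guided_residual(rgb: np.ndarray, residual: np.ndarray) -> np.ndarray:
    """Add a correction `residual`, scaled per pixel by the attention map."""
    attn = brightness_attention(rgb)[..., None]  # broadcast over channels
    return np.clip(rgb + attn * residual, 0.0, 1.0)
```

With this rule, a black pixel and a saturated white pixel both receive full attention, whereas a mid-gray pixel receives none, so a learned residual would act mainly on under- and over-exposed areas.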

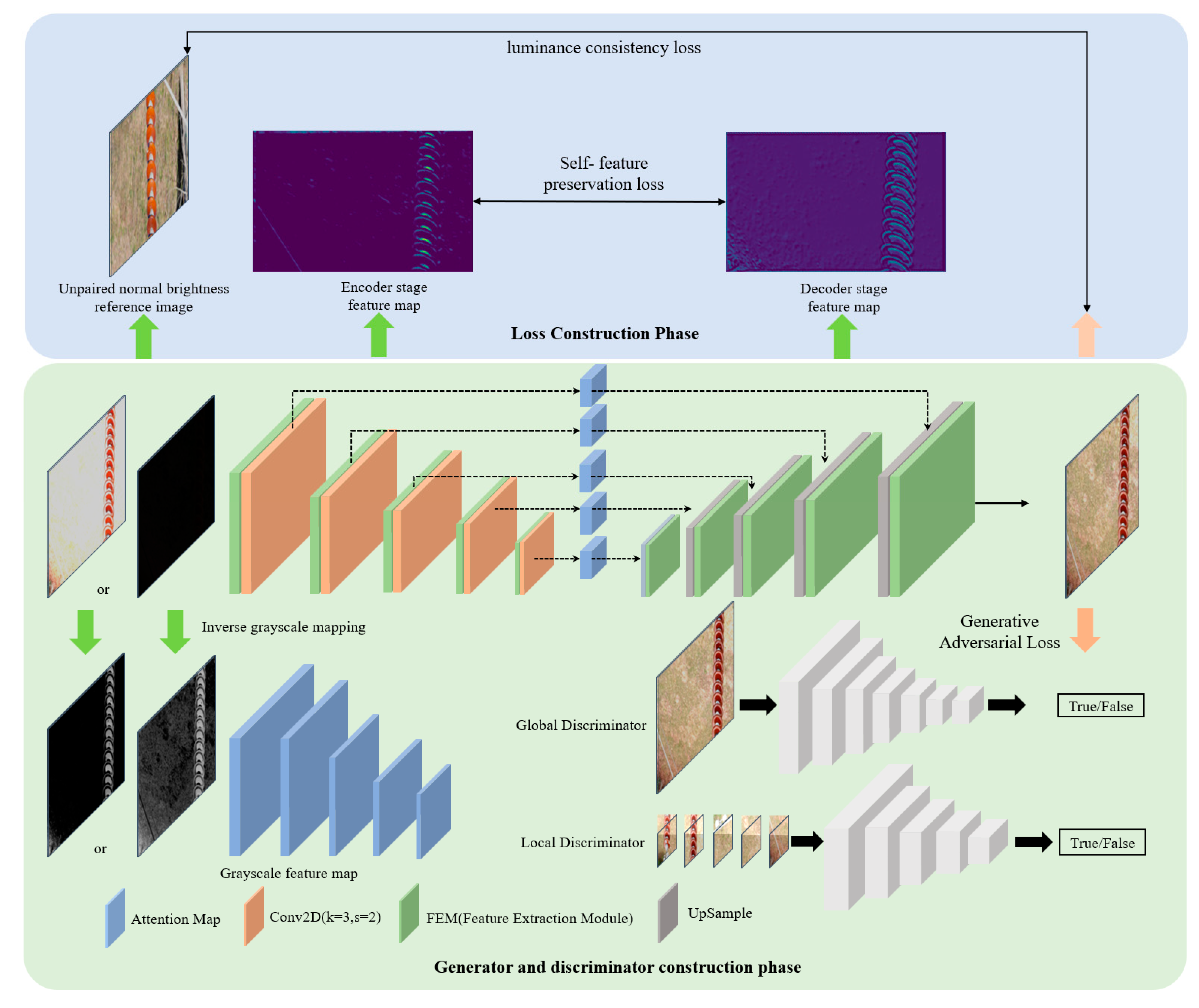

2. Model Overall Structure Design

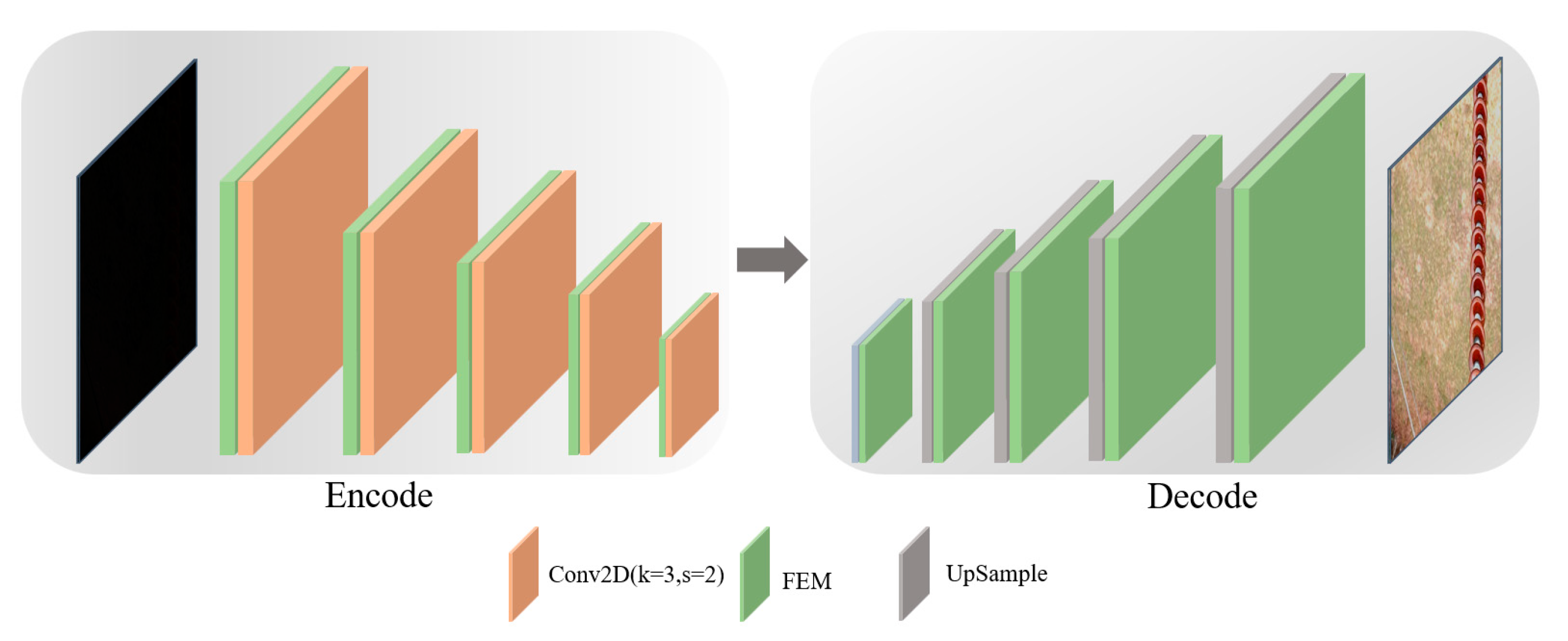

2.1. Deep Bottleneck Network Generator and Multi-Patch Discriminator

- (1)

- Generator encoding and decoding structure design

- (2)

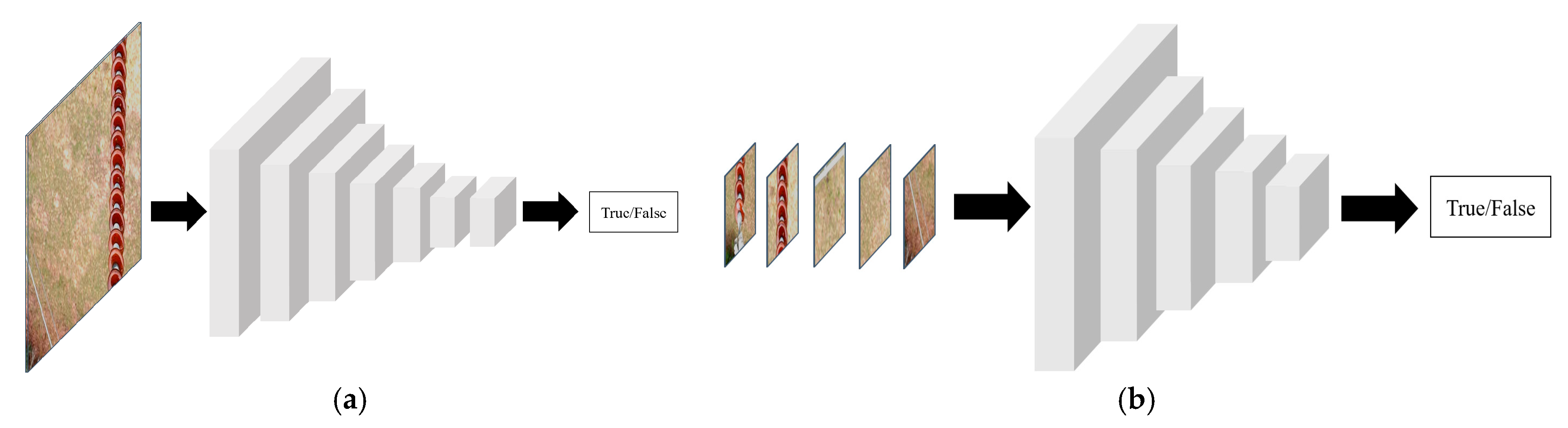

- Discriminator structure design

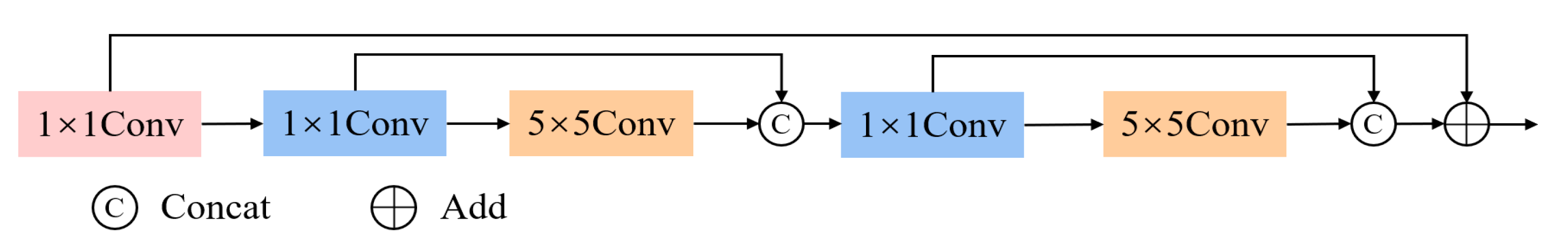

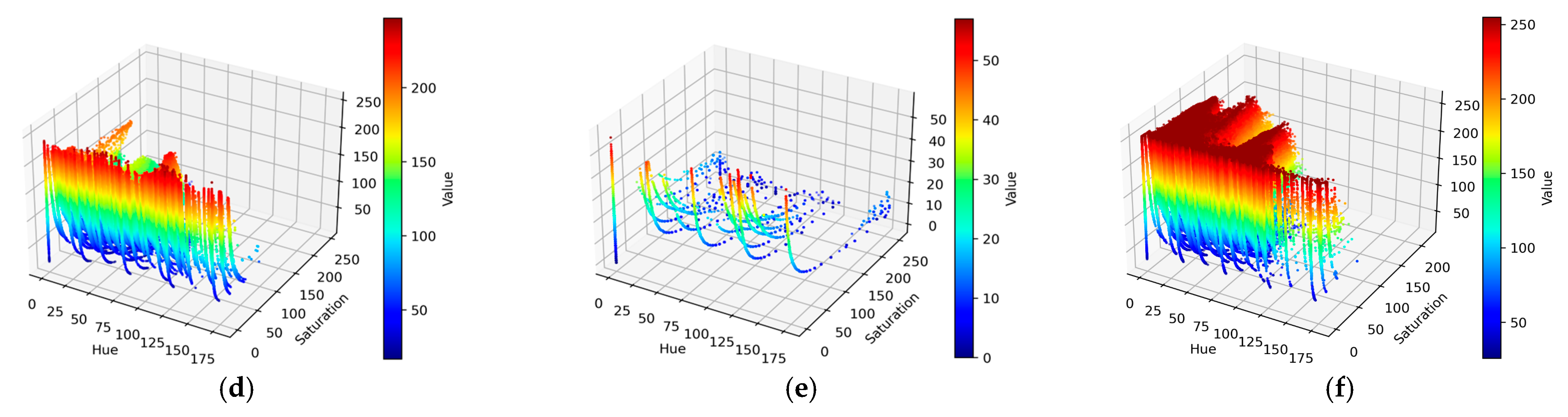

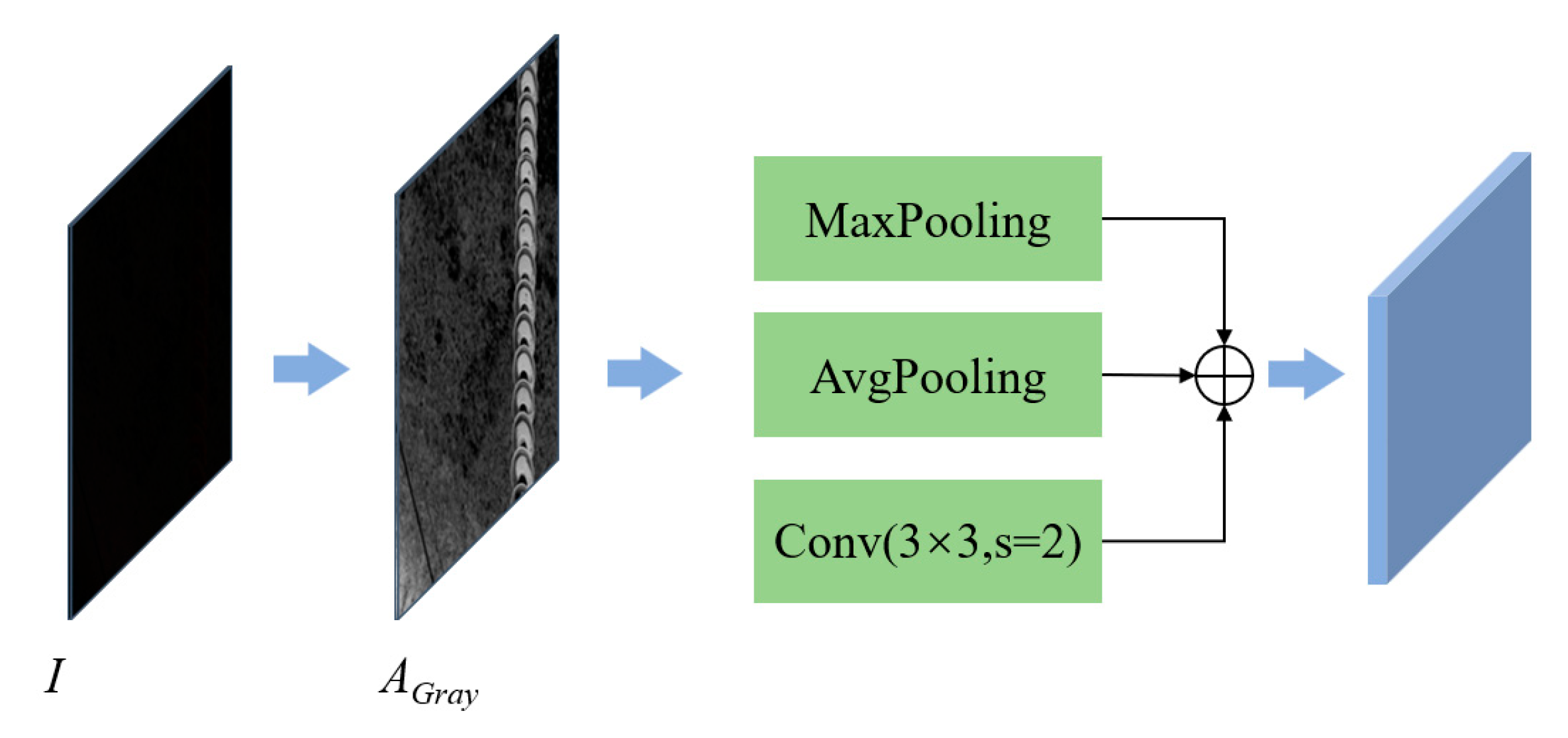

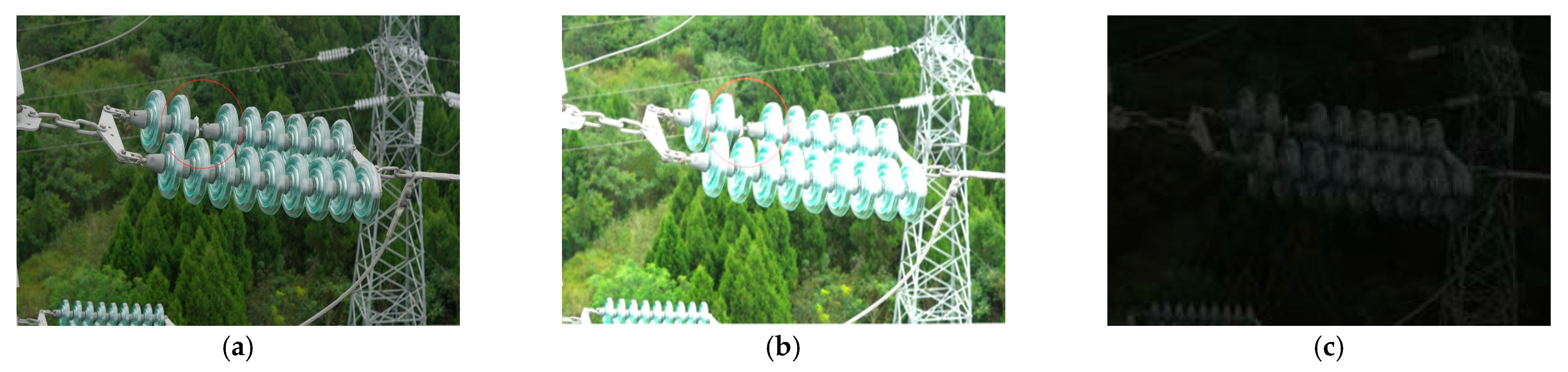

2.2. Grayscale Feature-Guided Brightness Uneven Area Enhancement Algorithm

- (1) Design of the grayscale attention mechanism
- (2) Multi-scale grayscale image feature fusion

2.3. Loss with Self-Feature Preservation and Brightness Consistency Constraint

- (1) Adversarial loss for image generation and discrimination
- (2) Image self-feature preservation perceptual loss
- (3) Image brightness consistency loss
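As an illustration of item (3), the following is a minimal sketch of a global brightness consistency term. The exact formulation used in this paper is not reproduced here; the absolute difference of mean luminance, the BT.601 luma weights, and the function names are assumptions. Because only a global statistic is compared, the generated and reference images do not need to be paired or even to share a resolution.

```python
import numpy as np

# ITU-R BT.601 luma weights for converting RGB to grayscale.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def global_brightness(rgb: np.ndarray) -> float:
    """Mean luminance of an RGB image with values in [0, 1]."""
    return float((rgb @ LUMA_WEIGHTS).mean())


def brightness_consistency_loss(generated: np.ndarray,
                                reference: np.ndarray) -> float:
    """Assumed form: |mean luminance(generated) - mean luminance(reference)|.

    The loss is 0 when both images have the same global brightness and
    grows linearly as the generated image becomes darker or brighter
    than the (unpaired) reference.
    """
    return abs(global_brightness(generated) - global_brightness(reference))
```

In a training loop, a term of this kind would be added to the adversarial and perceptual losses with a weighting coefficient, which is left unspecified here.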

3. Experiments and Analysis

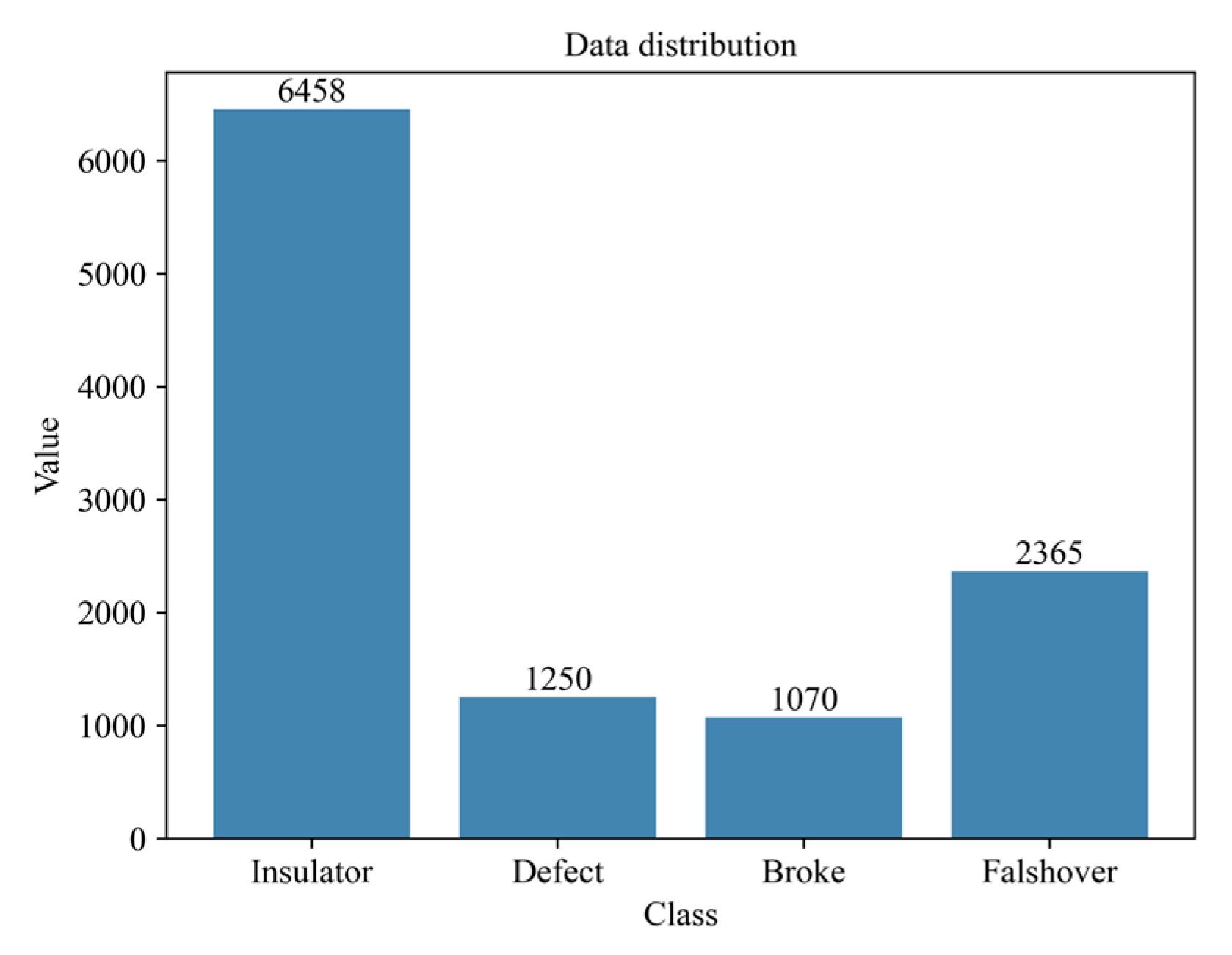

3.1. Experimental Environment and Data Description

3.2. Evaluation Metrics

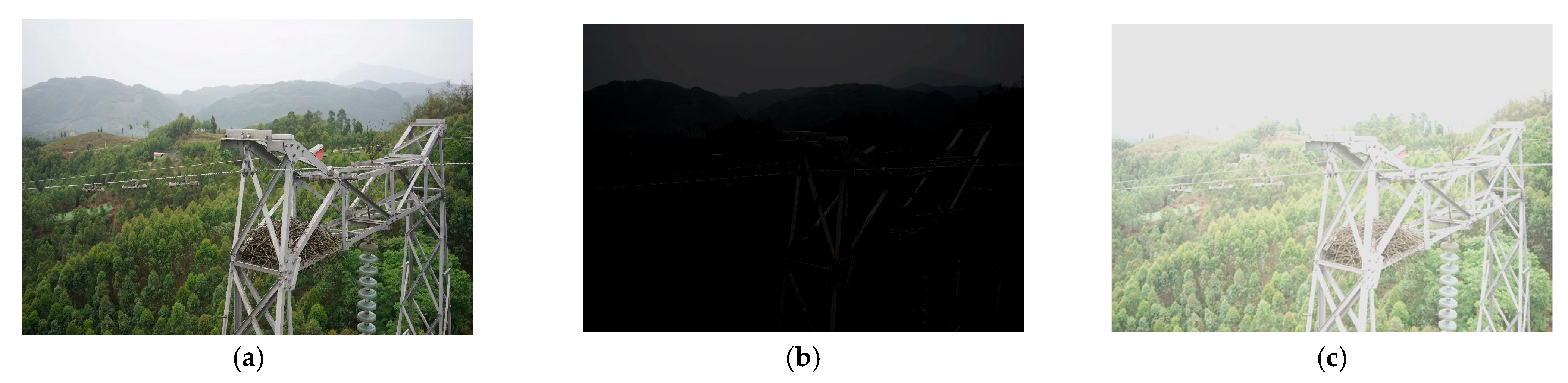

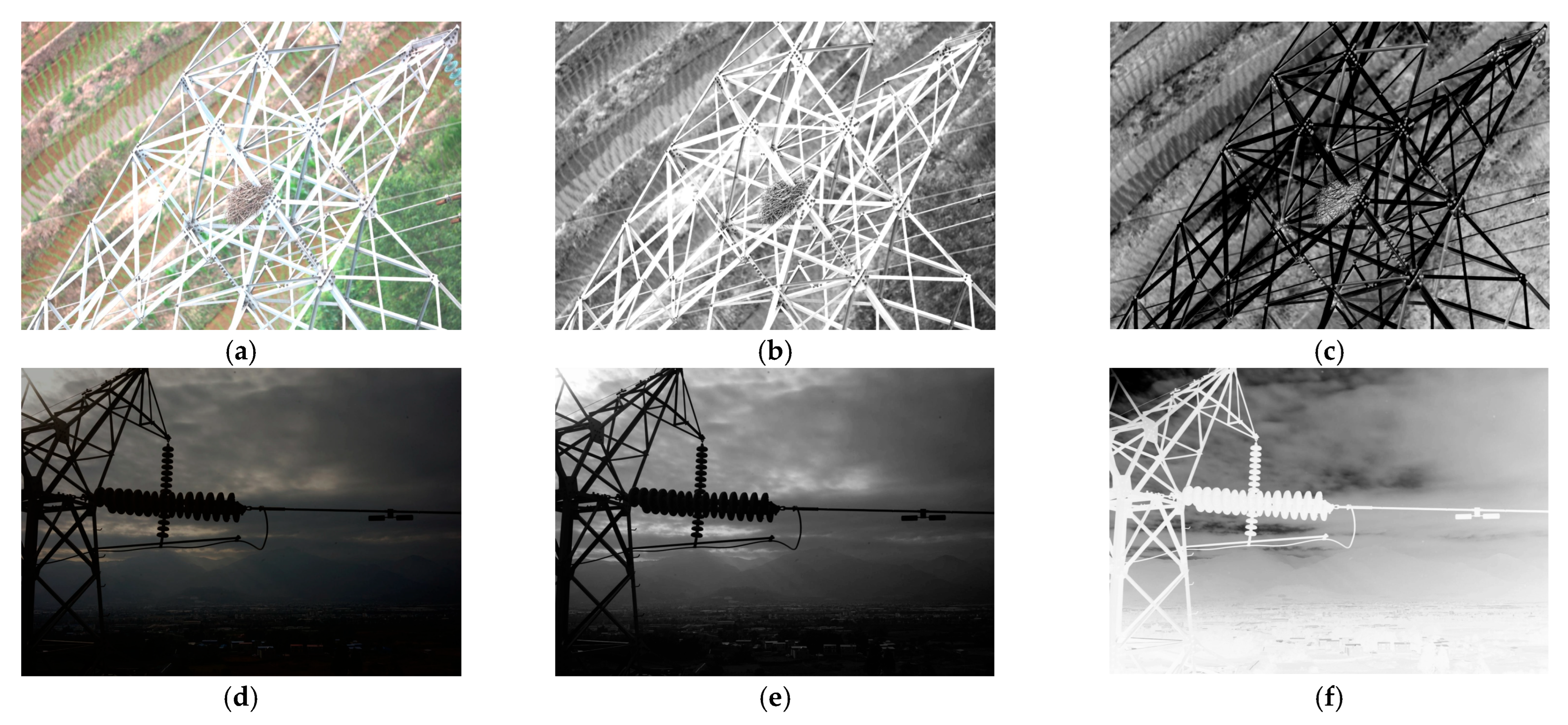

3.3. Transmission Line Power Component Defect Enhancement Data Description

3.4. Image Enhancement Experimental Analysis

3.4.1. Image Enhancement Ablation Experiment Analysis

3.4.2. Experiment on Synthetic Image Enhancement with Uneven Illumination

- (1) Enhancement performance analysis for low-light scenes

| Model |  |
|---|---|
| ZeroDCE++ |  |
| RetinexNet |  |
| EnlightenGAN |  |
| CutGAN |  |
| StyleGAN |  |
| Ours |  |
| Labels |  |

- (2) Enhancement performance analysis for high-exposure scenes

| Model |  |
|---|---|
| ZeroDCE++ |  |
| RetinexNet |  |
| EnlightenGAN |  |
| CutGAN |  |
| StyleGAN |  |
| Ours |  |
| Labels |  |

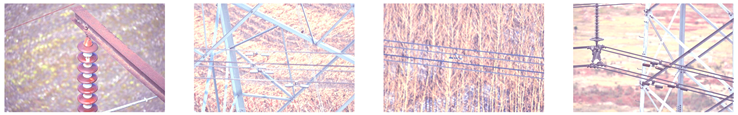

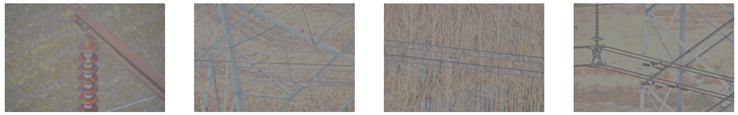

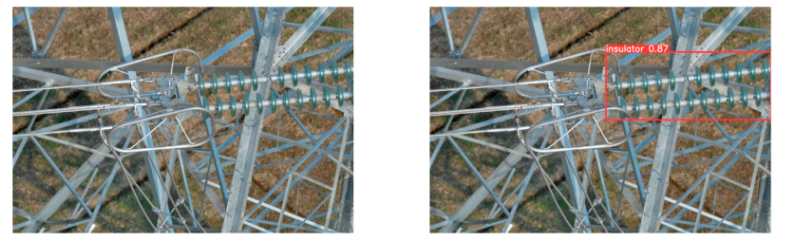

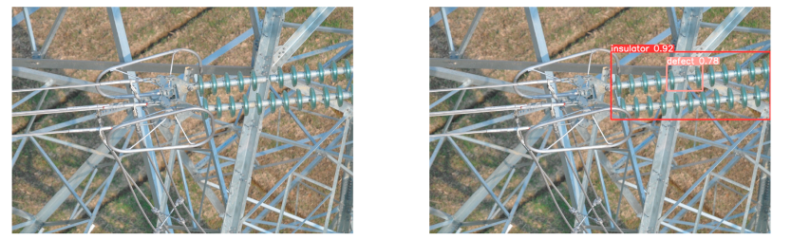

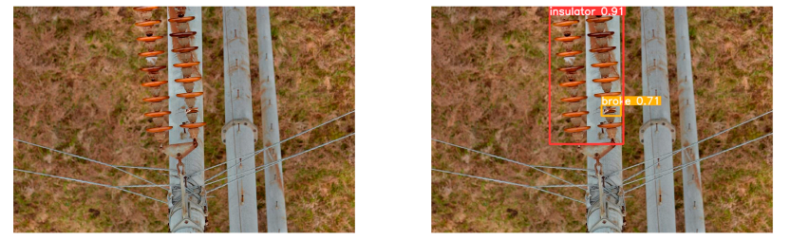

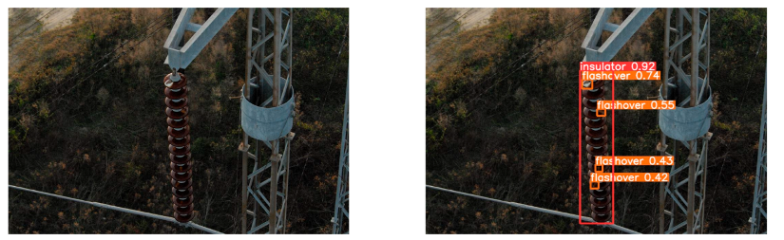

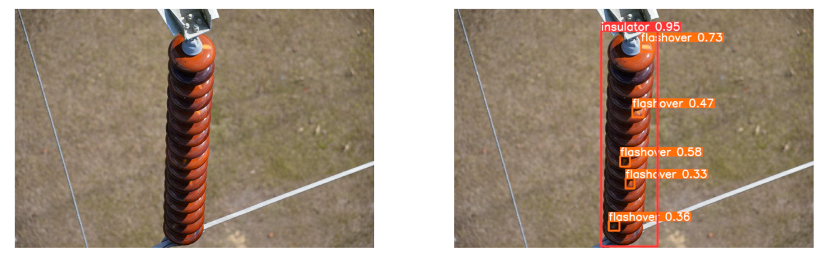

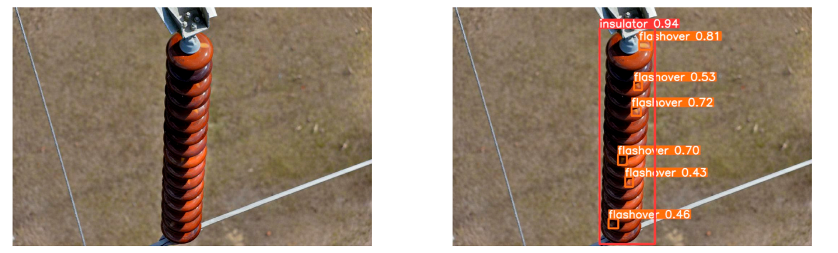

3.4.3. Experimental Test of Image Enhancement on Actual Power Transmission Lines

- (1) Comparison of actual image detection results
- (2) Visual comparison of actual image detection results

4. Discussion

5. Conclusions

- The proposed enhancement strategy adaptively enhances images with imbalanced lighting when only unpaired data are available. It improves the lighting balance of the generated images while preserving local details and global consistency, which makes the enhanced images more suitable for subsequent detection and recognition tasks. The PSNR of the enhanced images increases from 7.73 to 18.41, and the SSIM improves from 0.42 to 0.85.

- Validation on a real-world dataset shows that object detection on the enhanced images outperforms direct detection on the raw images. The mean average precision on the insulator defect detection dataset improves by 0.2% to 4.2% across different models.

- The insulator fault dataset constructed in this paper suffers from class imbalance: detection accuracy is high for insulator targets, but detection is still affected by complex backgrounds. Future research will therefore address the challenges posed by complex backgrounds and by targets in complex scenes.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Backbone | Gray-Attention | Loss | PSNR (dB) | SSIM |
|---|---|---|---|---|
| - | - | - | 7.73 | 0.43 |
| √ | - | - | 12.57 | 0.78 |
| - | √ | - | 14.44 | 0.81 |
| - | - | √ | 11.65 | 0.74 |
| √ | - | √ | 16.51 | 0.84 |
| √ | √ | - | 17.37 | 0.83 |
| - | √ | √ | 16.54 | 0.84 |
| √ | √ | √ | 18.41 | 0.85 |
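To make the PSNR column easier to interpret, the sketch below implements the standard PSNR definition, 10·log10(MAX² / MSE), and converts a PSNR difference into a ratio of mean squared errors. The function names and the assumption that pixel values lie in [0, 1] are illustrative and not taken from this paper's evaluation code. SSIM is omitted because it involves windowed local statistics that do not fit a short example.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    diff = reference.astype(np.float64) - test.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return 10.0 * float(np.log10(max_value ** 2 / mse))


def mse_reduction_factor(psnr_before: float, psnr_after: float) -> float:
    """How many times smaller the MSE is after a given PSNR increase."""
    return 10.0 ** ((psnr_after - psnr_before) / 10.0)


# PSNR of the unenhanced input vs. the full model, as reported in the table.
factor = mse_reduction_factor(7.73, 18.41)
print(f"MSE reduced by a factor of about {factor:.1f}")
```

Under this definition, the increase from 7.73 dB to 18.41 dB corresponds to a mean squared error that is roughly 11.7 times smaller, provided both values were computed with the same peak value.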

| Model | AP/% (Insulator) | AP/% (Defect) | AP/% (Flashover) | AP/% (Broke) | mAP/% |
|---|---|---|---|---|---|
| YOLOv5s | 94.3 | 89.8 | 64.9 | 73.6 | 80.6 |
| YOLOv5s + IEM | 94.6 | 91.3 | 63.8 | 76.0 | 81.4 (+0.8) |
| YOLOv5m | 94.9 | 92.3 | 73.1 | 86.4 | 86.7 |
| YOLOv5m + IEM | 96.0 | 90.8 | 72.7 | 87.7 | 86.8 (+0.1) |
| YOLOv7Tiny | 90.5 | 80.8 | 54.0 | 63.6 | 72.2 |
| YOLOv7Tiny + IEM | 95.7 | 91.8 | 68.5 | 73.5 | 79.4 (+4.2) |
| YOLOv8s | 95.0 | 90.9 | 65.3 | 78.3 | 82.3 |
| YOLOv8s + IEM | 94.8 | 91.9 | 70.9 | 78.3 | 84.0 (+1.7) |
| YOLOv8m | 95.3 | 92.9 | 74.4 | 88.7 | 87.8 |
| YOLOv8m + IEM | 95.7 | 93.2 | 77.9 | 92.4 | 89.8 (+2.0) |
| YOLOv9s | 94.3 | 88.1 | 61.1 | 75.3 | 79.7 |
| YOLOv9s + IEM | 93.8 | 86.3 | 60.1 | 79.3 | 79.9 (+0.2) |
| YOLOv9m | 95.1 | 92.7 | 72.5 | 88.7 | 87.3 |
| YOLOv9m + IEM | 95.7 | 93.4 | 74.7 | 89.3 | 88.3 (+1.0) |
| YOLOv10s | 92.8 | 88.0 | 61.3 | 67.7 | 77.4 |
| YOLOv10s + IEM | 93.8 | 87.9 | 62.8 | 71.4 | 79.0 (+1.6) |
| YOLOv10m | 93.5 | 87.2 | 64.7 | 69.3 | 78.7 |
| YOLOv10m + IEM | 93.6 | 89.8 | 67.8 | 73.1 | 81.1 (+2.4) |
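The relation between the per-class AP columns and the mAP column can be checked with a short worked example. It assumes that mAP is the unweighted mean of the four class APs, which is the usual definition and is consistent with the YOLOv8m rows used here; the helper name `mean_ap` is illustrative.

```python
def mean_ap(per_class_ap: dict[str, float]) -> float:
    """Unweighted mean of the per-class average precision values (in %)."""
    return sum(per_class_ap.values()) / len(per_class_ap)


# Per-class AP values copied from the YOLOv8m rows of the table above.
yolov8m = {"Insulator": 95.3, "Defect": 92.9, "Flashover": 74.4, "Broke": 88.7}
yolov8m_iem = {"Insulator": 95.7, "Defect": 93.2, "Flashover": 77.9, "Broke": 92.4}

baseline = mean_ap(yolov8m)
enhanced = mean_ap(yolov8m_iem)

print(f"YOLOv8m mAP:       {baseline:.1f}")
print(f"YOLOv8m + IEM mAP: {enhanced:.1f}")
print(f"Gain:              {enhanced - baseline:+.1f}")
```

For this detector, most of the gain comes from the Flashover and Broke classes (+3.5 and +3.7 points), while the Insulator and Defect classes change by less than half a point.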

| Original Image | Original Image Detection Results | Enhanced Images | Enhanced Image Detection Results |
|---|---|---|---|


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guo, J.; Han, G.; He, M.; Li, Y.; Qin, L.; Liu, K. An Unsupervised Image Enhancement Framework for Multiple Fault Detection of Insulators. Sensors 2025, 25, 7071. https://doi.org/10.3390/s25227071
