1. Introduction

System identification is a cornerstone of modern control theory and engineering applications. It aims to build mathematical models that accurately describe the dynamic characteristics of a system from input-output data [1,2]. Among the various identification model structures, the autoregressive with exogenous input (ARX) model has been widely applied in industrial processes, chemical production, and biological manufacturing, owing to its concise structure and efficient computation [3,4]. In practical environments, measurement signals are often affected by accidental factors such as intense noise, gross errors, missing data, and sensor failures. These abnormal disturbances are induced by latent variables that are not directly observable. The Expectation-Maximization (EM) algorithm [5] provides a practical statistical framework for maximum likelihood estimation problems involving latent variables or incomplete data. Meanwhile, multiple sensors can be deployed to collect large amounts of measurement data and thereby improve identification accuracy [6]. However, most existing multi-sensor identification methods rely on the assumption of identically distributed data, which is unsuitable when data from multiple sensors are processed simultaneously. New identification methods are therefore urgently needed [7,8].

Titterington et al. [9] pioneered the recursive EM (REM) algorithm via stochastic approximation, recursively updating the parameters using the gradient of the observed-data likelihood and the Fisher information matrix (FIM) of the complete data. To avoid inverting the FIM, Cappé et al. [10] investigated an online EM algorithm based on recursive sufficient statistics, focusing on exponential family distributions. Later, the online EM algorithm was applied to parameter estimation of hidden Markov models in [11]. A recursive EM identification method relying on sufficient statistics was developed in [12], where additional iterations were conducted at each time instant. Considering time delays, Guo et al. [13] employed REM and convex optimization for the identification of Markov jump autoregressive systems. A recursive parameter estimation approach for Dirichlet hidden Markov models was developed in [14]. A Student’s t-distribution-based REM was developed in [15] for the robust identification of linear ARX models. The varying-delay issue of integrated measurement systems was considered in [16], where an online EM algorithm was employed for parameter learning. In [17], robust recursive identification was developed for time-delay systems with skewed measurement noise.

On the other hand, multi-sensor identification mainly involves two processing methods: data fusion and multi-task learning (MTL) [18,19]. Data fusion further improves data availability and redundancy through the extensive deployment of multi-sensor systems. Observing the same process variable simultaneously with multiple sensors can reduce the impact of single-sensor failures or anomalies, improving identification accuracy and reliability [20]. Relevant research is mainly divided into two streams: probabilistic statistical methods and artificial intelligence approaches [21]. Owing to its simplicity and efficiency, the weighted fusion algorithm has become an attractive choice for fusing data of varying accuracy. Optimal and unbiased fused data can be obtained by properly evaluating the weight coefficients in weighted fusion algorithms [22]. Data fusion methods obtain high-quality measurement data through weighted averaging or optimal fusion [23], whereas MTL methods share information among sensors through the optimization objective, improving overall performance [24,25]. However, current approaches still have deficiencies. Although these methods allow different noise characteristics across sensors, they mostly rely on the Gaussian noise assumption. Estimators built on the Gaussian assumption are well known to be sensitive to non-Gaussian perturbations and outliers: once sensor measurements exhibit heavy-tailed distributions or sudden spike disturbances, identification performance degrades rapidly.

In industrial engineering practice, outliers frequently appear for reasons that are hard to identify in advance, such as sensor faults and signal transmission disturbances. Improper handling of outliers may degrade model performance. In engineering, the most common outlier-processing methods are data trimming and smoothing. Although these approaches are intuitive and easy to understand, the resulting identification models suffer from information loss and estimation bias [26]. To enhance the robustness of system identification in non-Gaussian noise environments, statistical modeling methods based on the t-distribution have attracted researchers’ attention. Compared with the Gaussian distribution, the t-distribution has heavier tails in its probability density function (pdf), which increases its tolerance to outliers [27]. Owing to its reliability and favorable analytical properties, the t-distribution has been widely adopted in identification. For example, a robust Bayesian technique for logistic regression modeling was proposed in [28], where a weakly informative Student’s t prior distribution was employed. Yu et al. [29] considered the joint estimation of states and noise covariance for linear systems with unknown covariance of multiplicative noise, where the measurements were modeled as a mixture of a Student’s t-distribution and Gaussian distributions. Several alternatives to the EM algorithm were explored in [30] for maximum likelihood (ML) estimation of the location, scatter matrix, and degrees of freedom (dof) of the Student’s t-distribution. Beyond the maximum likelihood framework, the t-distribution has also been applied to identification tasks within variational Bayesian inference in numerous studies [27,31].

However, robust multi-sensor fusion has not yet received sufficient attention in online identification. Against this background, this paper introduces multi-sensor fusion into the online EM algorithm and proposes a robust multi-sensor recursive EM (RMSREM) algorithm. On the one hand, the proposed algorithm can effectively fuse information from multi-source observations. On the other hand, it enhances robustness against outliers by modeling the measurement noise with the t-distribution. In addition, the recursive EM framework updates the model parameters in real time as new data arrive. With the proposed method, the heterogeneous information from multiple sensors is fully utilized, and identification accuracy is significantly improved. Moreover, the estimated models remain stable in complex environments involving sensor plug-and-play, noise interference, and outliers. The main contributions of this article are as follows:

First, the Student’s t-distribution is incorporated into the algorithm to describe the statistical characteristics of the measurement noise, and its heavy-tailed property improves the algorithm’s robustness;

Second, a recursive Q-function is derived, on the basis of which a recursive EM framework is built together with the recursion of sufficient statistics, satisfying the real-time requirement of dynamic system identification;

Third, a multi-sensor information fusion mechanism is designed, in which multi-source information is fused by adaptively computing the weight of each sensor, enhancing the reliability of the identification algorithm.

The remainder of this paper is organized as follows: Section 2 introduces the preliminary concepts and background knowledge of the proposed RMSREM algorithm; Section 3 provides the mathematical derivation of the robust multi-sensor recursive EM algorithm; Section 4 verifies the adaptability and efficacy of the proposed algorithm through a numerical example and simulations of a continuous stirred tank reactor (CSTR); and Section 5 presents the conclusions.

2. Problem Formulation

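Considering a linear system with $M$ sensors, the ARX model of the $m$-th sensor is defined as

$$y_k^m = (\varphi_k^m)^{\top}\theta + e_k^m, \quad m = 1, 2, \ldots, M,$$

where $k$ is the time index and $m$ indicates the sensor number. The noisy observation obtained from the $m$-th sensor at instant $k$ is denoted by $y_k^m$. The corresponding regressive vector is $\varphi_k^m = [y_{k-1}^m, \ldots, y_{k-n_a}^m, u_{k-1}, \ldots, u_{k-n_b}]^{\top}$, which incorporates past outputs of the same sensor together with previous inputs. The system input at time $k$ is represented by $u_k$. The unknown parameter vector to be estimated is $\theta = [a_1, \ldots, a_{n_a}, b_1, \ldots, b_{n_b}]^{\top}$, where $n_a$ and $n_b$ denote the output and input polynomial orders. The measurement noise from the $m$-th sensor is denoted by $e_k^m$, which is assumed to follow a Student’s t-distribution, i.e.,

$$e_k^m \sim \mathrm{St}(e_k^m \mid \mu, \sigma_m^2, \nu_m).$$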

Assumption 1. The measurement noises from the same sensor are independent and identically distributed.

Assumption 2. The measurement sets from different sensors are independent but not identically distributed.

Assumption 3. For computational simplicity, these distinct distributions are taken to be Student’s t-distributions with different variances and different dofs.

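The pdf of the Student’s t-distribution is as follows:

$$\mathrm{St}(e_k^m \mid \mu, \sigma_m^2, \nu_m) = \frac{\Gamma\left(\frac{\nu_m + d}{2}\right)}{\Gamma\left(\frac{\nu_m}{2}\right)(\nu_m \pi)^{d/2}\,\sigma_m^{d}}\left(1 + \frac{\Delta^2}{\nu_m}\right)^{-\frac{\nu_m + d}{2}},$$

where $\mu$ represents the mean of the measurement noise (taken as $\mu = 0$ in this work), $\sigma_m^2$ is its variance (strictly, the squared scale parameter; the actual variance is $\nu_m\sigma_m^2/(\nu_m - 2)$ for $\nu_m > 2$), and $\nu_m$ specifies the dof. The measurement dimension is denoted by $d$, with $d = 1$ in this case. $\Gamma(\cdot)$ is the gamma function. Furthermore, the squared Mahalanobis distance between the noise term $e_k^m$ and the mean value $\mu$, given the variance $\sigma_m^2$, is defined as $\Delta^2 = (e_k^m - \mu)^2/\sigma_m^2$.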

In system identification and parameter estimation, it is conventional to assume that the noise follows a Gaussian distribution, mainly because of its analytical tractability and its agreement with engineering practice. Nevertheless, Gaussian-based models are vulnerable to outliers and abnormal disturbances in complex environments. To alleviate this sensitivity, the so-called contaminated Gaussian model uses a mixture of two Gaussian components with different variances, where the component with the larger variance accommodates outliers. From a similar perspective, the Student’s t-distribution can be regarded as the limiting case of a Gaussian mixture whose components share an identical mean but whose variances vary continuously from 0 to ∞, governed by a variance-scaling mechanism [32]. By introducing a latent weighting variable $r_k^m$, which adjusts the influence of irregular deviations in the measurements, the probability of the measurement noise $e_k^m$ under the t-distribution can be reformulated as

$$p(e_k^m) = \int_0^{\infty}\mathcal{N}\left(e_k^m \,\middle|\, \mu, \frac{\sigma_m^2}{r_k^m}\right)\mathrm{Gam}\left(r_k^m \,\middle|\, \frac{\nu_m}{2}, \frac{\nu_m}{2}\right)\mathrm{d}r_k^m,$$

where the measurement noise $e_k^m$, when re-scaled by the latent weight $r_k^m$, follows a conditional Gaussian distribution, namely

$$e_k^m \mid r_k^m \sim \mathcal{N}\left(\mu, \frac{\sigma_m^2}{r_k^m}\right).$$

In parallel, the scaling variable $r_k^m$ is assumed to follow a Gamma distribution (shape and rate both equal to $\nu_m/2$) parameterized by the degrees of freedom $\nu_m$, that is

$$r_k^m \sim \mathrm{Gam}\left(\frac{\nu_m}{2}, \frac{\nu_m}{2}\right).$$

An important property is that as $\nu_m \to \infty$, the distribution of $r_k^m$ collapses to the constant value 1, implying that the t-distribution gradually reduces to the Gaussian distribution.
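The scale-mixture representation above can be checked numerically. The following Python sketch, using only the standard library, evaluates the scalar ($d = 1$) Student’s t pdf through the squared Mahalanobis distance and draws samples by first sampling $r \sim \mathrm{Gam}(\nu/2, \nu/2)$ (shape, rate) and then $e \mid r \sim \mathcal{N}(0, \sigma^2/r)$. The function names and the chosen values ($\nu = 10$, $\sigma^2 = 1$) are illustrative assumptions, not part of the RMSREM method itself.

```python
import math
import random


def student_t_pdf(e, mu=0.0, sigma2=1.0, nu=5.0):
    """Scalar (d = 1) Student's t pdf with location mu, scale sigma2, and dof nu."""
    delta2 = (e - mu) ** 2 / sigma2  # squared Mahalanobis distance
    log_norm = (math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2)
                - 0.5 * math.log(nu * math.pi * sigma2))
    return math.exp(log_norm - (nu + 1) / 2 * math.log1p(delta2 / nu))


def sample_scale_mixture(n, sigma2=1.0, nu=5.0, seed=0):
    """Draw e = sqrt(sigma2 / r) * z with r ~ Gamma(shape nu/2, rate nu/2), z ~ N(0, 1)."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        r = rng.gammavariate(nu / 2, 2 / nu)  # gammavariate(shape, scale); scale = 1 / rate
        samples.append(math.sqrt(sigma2 / r) * rng.gauss(0.0, 1.0))
    return samples


nu = 10.0
draws = sample_scale_mixture(200_000, nu=nu)
empirical_var = sum(x * x for x in draws) / len(draws)
print(f"empirical variance {empirical_var:.3f}, theory {nu / (nu - 2):.3f}")
# For very large nu the t pdf at 0 approaches the standard Gaussian value 1/sqrt(2*pi).
print(student_t_pdf(0.0, nu=1e6), 1 / math.sqrt(2 * math.pi))
```

The empirical variance approaches $\nu\sigma^2/(\nu - 2) = 1.25$ rather than $\sigma^2 = 1$, which is why $\sigma^2$ is best read as a scale parameter.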

To fully utilize the information from all sensors, a set $W_k = \{w_k^1, w_k^2, \ldots, w_k^M\}$ is defined for the weights assigned to the sensors at time $k$. Since samples are collected sequentially over time, the measurement from the $m$-th sensor at time $k$ is weighted, yielding a corrected value $\tilde{y}_k^m$. This corrected measurement is assumed to be Gaussian distributed. The set $\tilde{Y}_k = \{\tilde{y}_k^1, \ldots, \tilde{y}_k^M\}$ represents the weighted output values from the $M$ sensors at time $k$.

Based on the above definitions, when measurement data arrive sequentially, the optimization objective is formulated in the maximum likelihood sense as

$$\{\hat{W}_k, \hat{\theta}_k\} = \arg\max_{W_k,\,\theta}\ \mathcal{L}(W_k, \theta), \tag{4}$$

where $\mathcal{L}(\cdot)$ is the log-likelihood function (for details, see [23]). Solving (4) yields the sensor weights $\hat{W}_k$ and the parameter vector $\hat{\theta}_k$. However, because the variances and dofs introduced by the Student’s t-distribution are unknown, $\hat{W}_k$ and $\hat{\theta}_k$ cannot be obtained directly; this problem is addressed with a recursive EM scheme in the following sections.

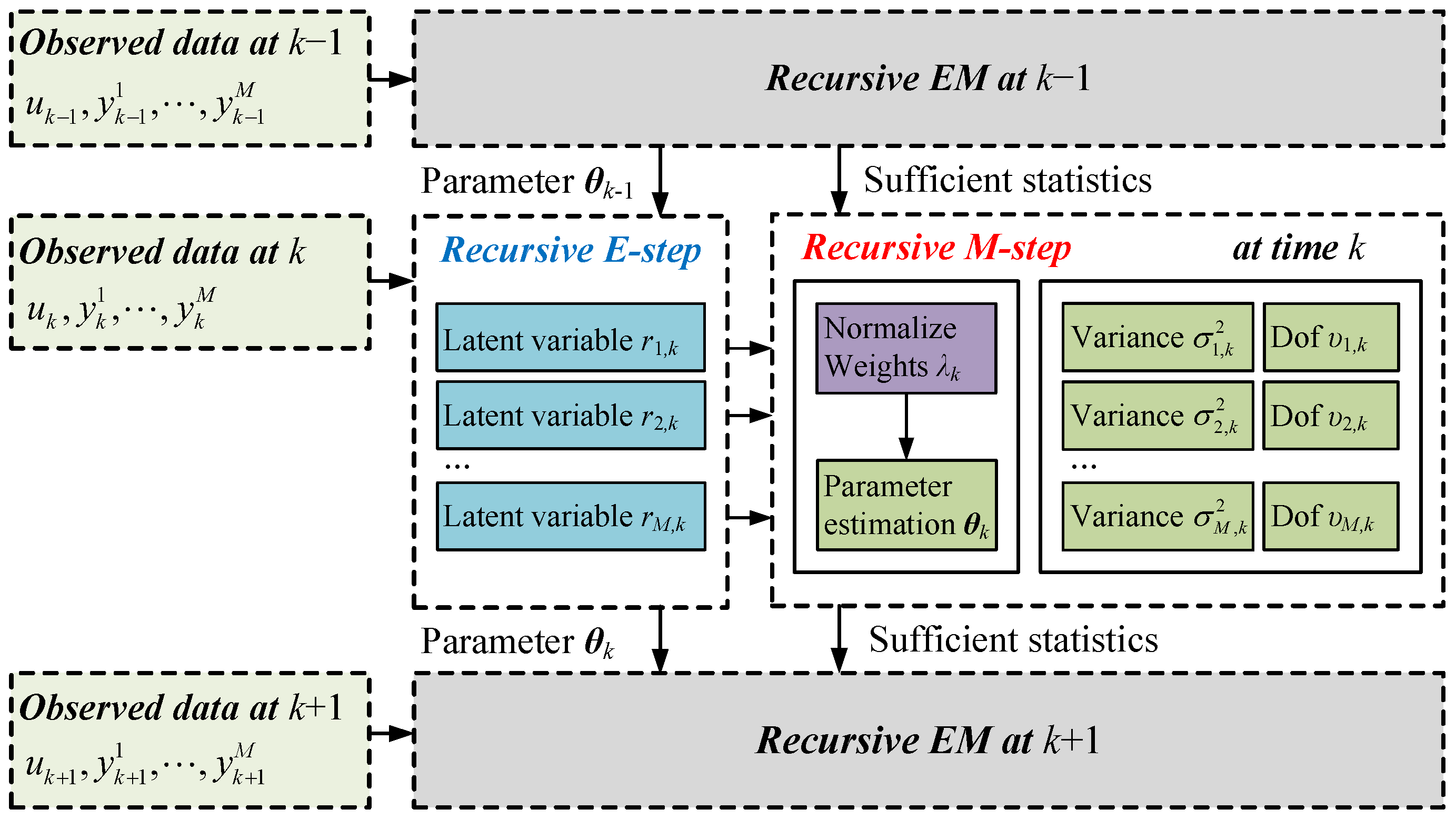

Figure 1 illustrates the general framework of the RMSREM algorithm.

3. Parameter Estimation via Robust Multi-Sensor Recursive EM Algorithm

The EM framework is widely used to estimate systems containing unobserved variables under the maximum likelihood principle. EM consists of two core steps executed iteratively: the Expectation step (E-step) and the Maximization step (M-step). In the E-step, the expectation of the complete-data log-likelihood function with respect to the missing data is calculated; this expected value is known as the Q-function. The Q-function is given by

$$Q(\Theta \mid \Theta^{(t)}) = \mathbb{E}_{Z \mid X, \Theta^{(t)}}\left[\log p(X, Z \mid \Theta)\right], \tag{5}$$

where $X$ represents the observed variable set, $Z$ denotes the unobserved (i.e., missing) variable set, and $\Theta$ and $\Theta^{(t)}$ respectively denote the parameter set to be estimated in the current iteration and the one obtained from the previous iteration. Then, in the M-step, the parameter set is updated by maximizing (5):

$$\Theta^{(t+1)} = \arg\max_{\Theta}\ Q(\Theta \mid \Theta^{(t)}). \tag{6}$$

The batch EM (BEM) algorithm is iterative: by alternating the above two steps, it monotonically increases the observed-data likelihood and generally converges to a local maximum (more precisely, a stationary point) of that likelihood. For a recursive EM algorithm, the key is to convert this iterative computation into a recursive form. The details of the Q-function recursion are elaborated in the following subsections.
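To make the two steps concrete, the following Python sketch runs batch EM for the simplest Student’s t model: a scalar location $\mu$ and scale $\sigma^2$ with a fixed dof $\nu$, rather than the full multi-sensor ARX model. The E-step computes the posterior mean of each latent scaling factor, and the M-step maximizes the resulting Q-function in closed form. The data set and the choice $\nu = 4$ are hypothetical.

```python
def t_location_scale_em(data, nu=4.0, iters=100):
    """Batch EM for a scalar Student's t location/scale model with a fixed dof nu."""
    n = len(data)
    mu = sum(data) / n
    sigma2 = sum((y - mu) ** 2 for y in data) / n
    r_post = [1.0] * n
    for _ in range(iters):
        # E-step: posterior mean of each latent scaling factor r_i
        r_post = [(nu + 1) / (nu + (y - mu) ** 2 / sigma2) for y in data]
        # M-step: closed-form maximizers of the Q-function for mu and sigma2
        mu = sum(r * y for r, y in zip(r_post, data)) / sum(r_post)
        sigma2 = sum(r * (y - mu) ** 2 for r, y in zip(r_post, data)) / n
    return mu, sigma2, r_post


inliers = [-1.0, -0.5, 0.0, 0.5, 1.0] * 4
data = inliers + [100.0]  # one gross outlier
mu_hat, sigma2_hat, r_hat = t_location_scale_em(data)
print(sum(data) / len(data), mu_hat, r_hat[-1])
```

The sample mean is pulled toward the outlier, whereas the EM estimate stays near zero because the outlier's posterior scaling factor becomes very small.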

3.1. Derivation of the Recursive Q-Function

In batch EM, all historical data within a time period are included in parameter estimation. When new samples arrive, BEM must be rerun on the entire data set, resulting in a slow response to dynamic changes in the process. This drawback limits the applicability of BEM. Instead, a robust recursive EM algorithm is designed in this paper, in which the Q-function is computed recursively.

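For the robust multi-sensor identification problem, the observed variable set $X$ includes the input $U$ and the output $Y$, i.e., $X = \{U, Y\}$, where $U = \{u_1, \ldots, u_N\}$ and $Y = \{Y^1, \ldots, Y^M\}$ with $Y^m = \{y_1^m, \ldots, y_N^m\}$. The unobserved variable set $Z$ consists of the variance scaling factors $R$ induced by the Student’s t-distribution, i.e., $Z = R$, where $R = \{R^1, \ldots, R^M\}$ denotes the set of variance scaling factors for all sensors over the entire sampling period, and $R^m = \{r_1^m, \ldots, r_N^m\}$. The parameter set to be estimated is $\Theta = \{\theta, \sigma_m^2, \nu_m;\ m = 1, \ldots, M\}$. The log-likelihood of the complete data set can be decomposed using the chain rule of probability as follows:

$$\log p(X, R \mid \Theta) = \sum_{k=1}^{N}\sum_{m=1}^{M}\left[\log p(y_k^m \mid \varphi_k^m, r_k^m, \theta, \sigma_m^2) + \log p(r_k^m \mid \nu_m)\right] + \log p(U), \tag{7}$$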

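where $y_k^m$ denotes one realization of the measurement random variable $Y^m$. Given the ARX model structure with parameter set $\Theta$, the output $y_k^m$ is determined jointly by the regressive vector $\varphi_k^m$ and the variance scaling factor $r_k^m$. In contrast, the factor $r_k^m$ is governed only by the degrees of freedom $\nu_m$. Since the system input $U$ is an artificially generated excitation signal that does not depend on $\Theta$, the term $\log p(U)$ is treated as a constant. Under these considerations, the Q-function associated with the BEM is expressed as

$$Q(\Theta \mid \Theta^{(t)}) = \mathbb{E}_{R \mid X, \Theta^{(t)}}\left\{\sum_{k=1}^{N}\sum_{m=1}^{M}\left[\log p(y_k^m \mid \varphi_k^m, r_k^m, \theta, \sigma_m^2) + \log p(r_k^m \mid \nu_m)\right]\right\} + \text{const}. \tag{8}$$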

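Next, (8) is rewritten into the following summation form:

$$Q(\Theta \mid \Theta^{(t)}) = \sum_{k=1}^{N-1} q_k(\Theta; \Theta^{(t)}) + q_N(\Theta; \Theta^{(t)}). \tag{9}$$

In BEM, $q_k(\Theta; \Theta^{(t)})$ is the expected value of the complete-data log-likelihood of the $k$-th sample, with $\Theta^{(t)}$ representing the current parameter estimate. Mathematically, this expectation is

$$q_k(\Theta; \Theta^{(t)}) = \sum_{m=1}^{M}\mathbb{E}_{r_k^m \mid y_k^m, \Theta^{(t)}}\left[\log p(y_k^m \mid \varphi_k^m, r_k^m, \theta, \sigma_m^2) + \log p(r_k^m \mid \nu_m)\right]. \tag{10}$$

Correspondingly, $q_N(\Theta; \Theta^{(t)})$ is the expectation for the $N$-th data instance, which has the same expression as above.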

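In online identification, the Q-function is not computed in batch over the entire data set. Instead, it is updated incrementally as the most recent data points arrive. This leads to a quasi-recursive formulation of the Q-function, expressed as

$$\tilde{Q}_k(\Theta) = \sum_{i=1}^{k-1} q_i(\Theta; \hat{\Theta}_{i-1}) + q_k(\Theta; \hat{\Theta}_{k-1}), \tag{11}$$

where $\hat{\Theta}_{i-1}$ is the parameter set generated by successive recursive updates. The quantity $q_i(\Theta; \hat{\Theta}_{i-1})$ is defined as the posterior expectation of the complete log-likelihood for the $i$-th data point, conditioned on the parameter set $\hat{\Theta}_{i-1}$ estimated at time $i - 1$. Correspondingly, $q_k(\Theta; \hat{\Theta}_{k-1})$ has the same definition at time $k$. This quantity can be written explicitly as

$$q_k(\Theta; \hat{\Theta}_{k-1}) = \sum_{m=1}^{M}\mathbb{E}_{r_k^m \mid y_k^m, \hat{\Theta}_{k-1}}\left[\log p(y_k^m \mid \varphi_k^m, r_k^m, \theta, \sigma_m^2) + \log p(r_k^m \mid \nu_m)\right]. \tag{12}$$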

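On the basis of the quasi-recursive Q-function, the recursive Q-function at time step $k$ can be formulated as

$$\hat{Q}_k(\Theta) = \frac{1}{k}\tilde{Q}_k(\Theta). \tag{13}$$

Substituting (11) into (13), the recursive Q-function can then be transformed into

$$\hat{Q}_k(\Theta) = \hat{Q}_{k-1}(\Theta) + \frac{1}{k}\left[q_k(\Theta; \hat{\Theta}_{k-1}) - \hat{Q}_{k-1}(\Theta)\right]. \tag{14}$$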

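In this paper, the standard step size $1/k$ is replaced by a synthetic step size $\gamma_k \in (0, 1]$. As demonstrated in [10], under suitable regularity conditions, convergence is guaranteed when the step sizes satisfy $\sum_{k=1}^{\infty}\gamma_k = \infty$ and $\sum_{k=1}^{\infty}\gamma_k^2 < \infty$. Consequently, the final recursive form of the Q-function is

$$\hat{Q}_k(\Theta) = \hat{Q}_{k-1}(\Theta) + \gamma_k\left[q_k(\Theta; \hat{\Theta}_{k-1}) - \hat{Q}_{k-1}(\Theta)\right]. \tag{15}$$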

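At the initial point of the algorithm, $k = 0$, the recursive Q-function $\hat{Q}_0(\Theta)$ is constructed from $\hat{\Theta}_0$, an artificially assigned initial parameter set. Unrolling (15) from $k = 0$ to the current step $k$, the evolution of $\hat{Q}_k(\Theta)$ is governed by

$$\hat{Q}_k(\Theta) = \prod_{j=1}^{k}(1 - \gamma_j)\,\hat{Q}_0(\Theta) + \sum_{i=1}^{k}\gamma_i\prod_{j=i+1}^{k}(1 - \gamma_j)\,q_i(\Theta; \hat{\Theta}_{i-1}), \tag{16}$$

where the empty product $\prod_{j=k+1}^{k}(1 - \gamma_j)$ is defined as 1. The recursive Q-function of the RMSREM algorithm has now been formulated.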
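The stochastic-approximation recursion above can be illustrated on a scalar running statistic. The Python sketch below is an illustration under stated assumptions, not the RMSREM update itself: it applies $S_k = (1-\gamma_k)S_{k-1} + \gamma_k s_k$ to a stream of hypothetical per-sample statistics $s_k$. With $\gamma_k = 1/k$ it reproduces the batch average, which is the link between the quasi-recursive and recursive forms; a polynomial step $\gamma_k = k^{-\alpha}$ with $0.5 < \alpha \le 1$ satisfies both step-size conditions.

```python
def recursive_statistic(stats, step):
    """Apply S_k = (1 - g_k) * S_{k-1} + g_k * s_k over a stream of statistics."""
    s_hat = 0.0
    for k, s_k in enumerate(stats, start=1):
        g = step(k)
        s_hat = (1.0 - g) * s_hat + g * s_k
    return s_hat


stream = [float(i % 7) for i in range(1, 1001)]  # hypothetical per-sample statistics
batch_mean = sum(stream) / len(stream)
harmonic = recursive_statistic(stream, lambda k: 1.0 / k)       # standard step 1/k
polynomial = recursive_statistic(stream, lambda k: k ** -0.6)   # synthetic step, alpha = 0.6
constant = recursive_statistic(stream, lambda k: 0.01)          # constant step size
print(batch_mean, harmonic, polynomial, constant)
```

A constant step size violates $\sum_k \gamma_k^2 < \infty$, so the estimate keeps fluctuating around the mean; in exchange, old samples are forgotten exponentially, which lets the estimate track time-varying parameters.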

3.2. Posterior Expectation of Latent Variables

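Next, the posterior expectations of the latent variables in (16) are considered. As described previously, the measurements are Gaussian distributed when conditioned on the variance scaling factors. After expansion, the logarithmic pdf of the measurement at time $k$ can be written as

$$\log p(y_k^m \mid \varphi_k^m, r_k^m, \theta, \sigma_m^2) = -\frac{1}{2}\log(2\pi) - \frac{1}{2}\log\sigma_m^2 + \frac{1}{2}\log r_k^m - \frac{r_k^m\left(y_k^m - (\varphi_k^m)^{\top}\theta\right)^2}{2\sigma_m^2}. \tag{17}$$

The variance scaling factor $r_k^m$ is assumed to follow a Gamma distribution, whose log-likelihood function can be expressed as

$$\log p(r_k^m \mid \nu_m) = \frac{\nu_m}{2}\log\frac{\nu_m}{2} - \log\Gamma\left(\frac{\nu_m}{2}\right) + \left(\frac{\nu_m}{2} - 1\right)\log r_k^m - \frac{\nu_m}{2}r_k^m. \tag{18}$$

Owing to conjugacy, the posterior distribution of the variance scaling factor $r_k^m$ is also a Gamma distribution, with the explicit form

$$p(r_k^m \mid y_k^m, \hat{\Theta}_{k-1}) = \mathrm{Gam}\left(r_k^m \,\middle|\, \frac{\hat{\nu}_{k-1}^m + 1}{2}, \frac{\hat{\nu}_{k-1}^m + (\hat{\Delta}_k^m)^2}{2}\right), \quad (\hat{\Delta}_k^m)^2 = \frac{\left(y_k^m - (\varphi_k^m)^{\top}\hat{\theta}_{k-1}\right)^2}{\hat{\sigma}_{m,k-1}^2}. \tag{19}$$

The derivation of the posterior distribution of $r_k^m$ is provided in Appendix A. The posterior expectation of the Gamma-distributed variance scaling factor $r_k^m$ is denoted by $\langle r_k^m \rangle_{k|k-1}$ and computed as

$$\langle r_k^m \rangle_{k|k-1} = \frac{\hat{\nu}_{k-1}^m + 1}{\hat{\nu}_{k-1}^m + (\hat{\Delta}_k^m)^2}, \tag{20}$$

where the subscript $k|k-1$ indicates that the expectation is computed conditioned on the measurement $y_k^m$ at time $k$ and the parameters estimated at time $k - 1$. The posterior expectation of the log-variance-scaling factor, $\langle \log r_k^m \rangle_{k|k-1}$, can be expressed as

$$\langle \log r_k^m \rangle_{k|k-1} = \psi\left(\frac{\hat{\nu}_{k-1}^m + 1}{2}\right) - \log\frac{\hat{\nu}_{k-1}^m + (\hat{\Delta}_k^m)^2}{2}, \tag{21}$$

where $\hat{\nu}_{k-1}^m$ and $\hat{\sigma}_{m,k-1}^2$ represent the dof and variance previously estimated for the $m$-th sensor at time $k - 1$, respectively. The function $\psi(\cdot)$ is the digamma function, defined as $\psi(x) = \mathrm{d}\ln\Gamma(x)/\mathrm{d}x$.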

For brevity, further details of the posterior expectations in the robust multi-sensor setting are omitted. This completes the derivation of the expectation step of RMSREM; the maximization step is discussed in the next subsection.
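For a single sensor and $d = 1$, the conjugate Gamma posterior gives closed-form expectations. The Python sketch below assumes the standard Student’s t results $\langle r \rangle = (\nu + 1)/(\nu + \Delta^2)$ and $\langle \log r \rangle = \psi((\nu + 1)/2) - \log((\nu + \Delta^2)/2)$, with $\Delta^2 = (y - \varphi^{\top}\theta)^2/\sigma^2$ evaluated at the previous estimates; any additional sensor-weight terms in the multi-sensor expressions (20)-(21) are not modeled here. Because the Python standard library has no digamma function, a hypothetical helper based on the usual recurrence and asymptotic series is included. All numeric inputs are made up for illustration.

```python
import math


def digamma(x):
    """Digamma psi(x) for x > 0 via psi(x) = psi(x + 1) - 1/x and an asymptotic series."""
    acc = 0.0
    while x < 6.0:
        acc -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    tail = inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 * (1 / 252 - inv2 * (1 / 240 - inv2 / 132))))
    return acc + math.log(x) - 0.5 / x - tail


def posterior_scaling(y, phi, theta, sigma2, nu):
    """Return (<r>, <log r>) for r | y ~ Gamma(shape (nu + 1)/2, rate (nu + delta2)/2)."""
    resid = y - sum(p * t for p, t in zip(phi, theta))
    delta2 = resid ** 2 / sigma2  # squared Mahalanobis distance of the residual
    shape, rate = (nu + 1) / 2, (nu + delta2) / 2
    return shape / rate, digamma(shape) - math.log(rate)


theta = [0.5, 1.0]  # hypothetical previous parameter estimate
phi = [0.2, -0.3]   # hypothetical regressive vector
clean = posterior_scaling(y=-0.2, phi=phi, theta=theta, sigma2=0.01, nu=4.0)
outlier = posterior_scaling(y=3.0, phi=phi, theta=theta, sigma2=0.01, nu=4.0)
print(clean, outlier)  # the outlier receives a much smaller <r>
```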

3.3. Derivation of the Recursive Maximization Step

To recursively update the parameter vector $\theta$, the recursive Q-function is differentiated with respect to (w.r.t.) $\theta$ and the derivative is set to zero as

The online solution for $\hat{\theta}_k$ is given as

where the two terms that determine the update of the parameter vector, namely the sufficient statistics, are both computed recursively. The recursion for the denominator is

while that for the numerator is
To estimate the measurement noise variance, the recursive Q-function is differentiated w.r.t. the standard deviation $\sigma_m$, and the result is set to zero, leading to

Following a similar approach, the recursive fractional update equation for the variance $\sigma_m^2$ is obtained as

with the denominator

and the numerator updated as
Similar to the updates of the parameter vector and variance, the update for the dof $\nu_m$ is derived from the recursive Q-function in (16). The partial derivative of $\hat{Q}_k(\Theta)$ w.r.t. $\nu_m$ is computed and set to zero, giving

Then, a function of $\nu_m$ is obtained as

with

where $\bar{s}_k^m$ is an artificially defined recursive auxiliary statistic that facilitates the update of the degrees of freedom. In the BEM framework, this statistic is computed directly over the complete data set. In the online update step, the auxiliary statistic $\bar{s}_k^m$ admits a recursive representation.

Solving (31) yields the estimate of the degrees of freedom of the $m$-th sensor at the current time $k$, i.e., $\hat{\nu}_k^m$, where the subscript $k$ is added explicitly for online estimation. In the simulation studies, this equation is solved with MATLAB’s fsolve function, whereas in practical applications standard nonlinear root-finding methods may be employed.
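The three M-step recursions can be sketched in Python under explicitly assumed forms, because the exact multi-sensor expressions (23), (27), and (31) involve terms not restated here. The sketch assumes (i) sufficient statistics $R_k$ and $h_k$ for $\theta$ accumulated with a hypothetical per-sensor coefficient $c^m = w^m\langle r^m \rangle/\sigma_m^2$, with $\hat{\theta}_k = R_k^{-1}h_k$; (ii) a variance ratio $N_k/D_k$, where $N_k$ tracks $\langle r \rangle e^2$ and $D_k$ tracks 1; and (iii) the classical dof condition $\log(\nu/2) - \psi(\nu/2) + 1 + \bar{s} = 0$, with $\bar{s}$ a running average of $\langle \log r \rangle - \langle r \rangle$. Bisection stands in for MATLAB’s fsolve; it is valid because the left-hand side decreases monotonically in $\nu$. All helper names and numeric values are hypothetical.

```python
import math
import random

def digamma(x):
    """Digamma psi(x) for x > 0: recurrence psi(x) = psi(x + 1) - 1/x, then asymptotic series."""
    acc = 0.0
    while x < 6.0:
        acc -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    tail = inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 * (1 / 252 - inv2 * (1 / 240 - inv2 / 132))))
    return acc + math.log(x) - 0.5 / x - tail

def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda i: abs(m[i][col]))
        m[col], m[piv] = m[piv], m[col]
        for i in range(col + 1, n):
            f = m[i][col] / m[col][col]
            for j in range(col, n + 1):
                m[i][j] -= f * m[col][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def update_theta_stats(R, h, phis, ys, coeffs, gamma):
    """R_k = (1-g) R_{k-1} + g sum_m c_m phi phi^T;  h_k = (1-g) h_{k-1} + g sum_m c_m phi y."""
    n = len(h)
    R = [[(1 - gamma) * R[i][j] for j in range(n)] for i in range(n)]
    h = [(1 - gamma) * v for v in h]
    for phi, y, c in zip(phis, ys, coeffs):
        for i in range(n):
            h[i] += gamma * c * phi[i] * y
            for j in range(n):
                R[i][j] += gamma * c * phi[i] * phi[j]
    return R, h

def update_variance(num, den, resid, r_mean, gamma):
    """sigma^2 = N_k / D_k with N_k = (1-g) N_{k-1} + g <r> e^2 and D_k = (1-g) D_{k-1} + g."""
    num = (1 - gamma) * num + gamma * r_mean * resid ** 2
    den = (1 - gamma) * den + gamma
    return num, den, num / den

def solve_dof(s_bar, lo=1e-2, hi=1e3, iters=200):
    """Bisection on g(nu) = log(nu/2) - psi(nu/2) + 1 + s_bar, which is decreasing in nu."""
    def g(nu):
        return math.log(nu / 2) - digamma(nu / 2) + 1.0 + s_bar
    if g(hi) > 0:  # root lies beyond hi: noise is nearly Gaussian, so cap the dof
        return hi
    if g(lo) < 0:
        return lo
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if g(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# (i) theta: two identical noise-free sensors of a hypothetical first-order ARX model
rng = random.Random(1)
theta_true = [0.5, 1.0]
R, h = [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0]
y_prev, u_prev = 0.0, 0.0
for _ in range(500):
    y = theta_true[0] * y_prev + theta_true[1] * u_prev
    phi = [y_prev, u_prev]
    # hypothetical c_m = w_m * <r_m> / sigma_m^2 with w = (0.5, 0.5), <r> = 1
    R, h = update_theta_stats(R, h, [phi, phi], [y, y], [0.5 / 0.1, 0.5 / 1.0], 0.01)
    y_prev, u_prev = y, rng.uniform(-1.0, 1.0)
theta_hat = solve_linear(R, h)
# (ii) variance: with gamma_k = 1/k and <r> = 1 the ratio is the mean squared residual
num = den = 0.0
for k, e in enumerate([1.0, 2.0, 3.0], start=1):
    num, den, sigma2 = update_variance(num, den, e, 1.0, 1.0 / k)
# (iii) dof: build s_bar from a known nu = 5 and check that bisection recovers it
s_bar = -1.0 - (math.log(2.5) - digamma(2.5))
nu_hat = solve_dof(s_bar)
print(theta_hat, sigma2, nu_hat)
```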

3.4. Solution for Weights

As mentioned previously, the sensor weights depend on the measurement noise variances. After the noise variances are estimated recursively on the basis of the hyperparameters, the multi-task likelihood function relating to $W_k$ can be expressed in the following form:

An intermediate solution is obtained from (33) by setting its partial derivatives to zero. Substituting this result back into (33) eliminates the corresponding term from the subsequent derivation, so that solving for the sensor weights reduces to the following constrained optimization problem:

Introducing the Lagrange multiplier $\lambda$, the Lagrange function is formulated as follows:

Then, the weight $w_k^m$ of the $m$-th sensor is finally determined as

The sensor weight vector can be written in matrix form as

where

is an

M-dimensional vector.
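As a point of comparison, the Lagrange-multiplier step can be sketched for the classical minimum-variance fusion problem, which is assumed here as a stand-in for the constrained problem above: minimize $\sum_m w_m^2\sigma_m^2$ subject to $\sum_m w_m = 1$. Setting the derivative of $\sum_m w_m^2\sigma_m^2 - \lambda(\sum_m w_m - 1)$ to zero gives $w_m = \sigma_m^{-2}/\sum_j \sigma_j^{-2}$, so noisier sensors receive smaller weights. The exact RMSREM weights in (37) may contain additional terms, and the variances below are hypothetical.

```python
def fusion_weights(variances):
    """Minimum-variance fusion weights w_m proportional to 1 / sigma_m^2, summing to one."""
    precisions = [1.0 / v for v in variances]
    total = sum(precisions)
    return [p / total for p in precisions]


def fused_variance(weights, variances):
    """Variance of sum_m w_m * y_m for independent sensors: sum_m w_m^2 * sigma_m^2."""
    return sum(w * w * v for w, v in zip(weights, variances))


variances = [0.01, 1.0]  # hypothetical sensor noise variances
w = fusion_weights(variances)
print(w, fused_variance(w, variances))  # fused variance equals 1 / sum(1 / sigma_m^2)
```

The fused variance is never larger than that of the best single sensor, and equal weighting of the two sensors would give a far larger value (0.2525 here).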

This completes the derivation of the robust multi-sensor recursive EM (RMSREM) algorithm for multi-sensor linear ARX models. Its operating steps are listed in Algorithm 1.

| Algorithm 1. Robust multi-sensor recursive EM algorithm. |

- Require: observations of the sensors $y_k^m$, regressive vectors $\varphi_k^m$;
- Ensure: updated $\hat{\theta}_k$, $\hat{\sigma}_{m,k}^2$, $\hat{\nu}_k^m$ for the next $k$;
- 1: Initialization:
- 2: Assign the step size $\gamma_k$;
- 3: Assign random values to the elements of the parameter vector $\hat{\theta}_0$;
- 4: Assign large values to $\hat{\sigma}_{m,0}^2$;
- 5: Assign large values to $\hat{\nu}_0^m$;
- 6: while new data arrive do
- 7: Recursive E-step (per sensor): calculate the posterior expectations $\langle r_k^m \rangle_{k|k-1}$ via (20) and $\langle \log r_k^m \rangle_{k|k-1}$ via (21);
- 8: Weight update: update the sensor weights via (37);
- 9: Recursive M-step 1: update the model parameter $\hat{\theta}_k$ via (23);
- 10: Recursive M-step 2 (per sensor): update the sensor noise variance via (27) and the dof of the Student’s t-distribution via (31);
- 11: end while

|

3.5. Analysis of Convergence and Computational Complexity

A. Analysis of the convergence of the RMSREM algorithm.

With the latent scaling factors included, the complete-data model of the Student’s t-distribution belongs to the curved exponential family. When only one sensor is considered, the complete-data likelihood can be decomposed as

$$p(y, r; \Theta) = h(y, r)\exp\left(\langle S(y, r), \eta(\Theta)\rangle - A(\Theta)\right),$$

where $S(y, r)$ is the vector of sufficient statistics, $\eta(\Theta)$ is the natural parameter, and $\langle\cdot,\cdot\rangle$ represents the inner product. At the $k$-th time instant, the sufficient statistics and the natural parameter are defined correspondingly. When the Kullback-Leibler divergence $D(\pi \,\|\, g_{\Theta})$ is selected as the Lyapunov function, the convergence of the online algorithm can be proved using the Lyapunov stability theorem or Theorem 1 of [10], where $\pi$ represents the actual probability density function of the observation $Y$, and $g_{\Theta}$ is the observed likelihood function based on the estimated $\Theta$. The input variable $U$ is omitted here for clarity.

The multi-sensor case, with sensor weights $w_k^m$, is expected to share the same convergence property as the single-sensor case; a rigorous mathematical proof is left for future work.

B. Analysis of the computational complexity of the RMSREM algorithm.

Given the online updating nature of the RMSREM algorithm, the computational complexity of a single update step is discussed.

In the proposed algorithm, the step with the heaviest computational burden is the inversion of the denominator of the parameter vector $\theta$, which has a computational complexity of $O(n^3)$ with $n = n_a + n_b$. The recursive update of this denominator involves all sensors and has a computational complexity of $O(Mn^2)$. As the sensor weight matrix is diagonal, its inversion costs only $O(M)$, which is overshadowed by the steps with higher computational complexity. Moreover, the function in (31) is monotonic in $\nu_m$, so its root is unique and can be found with a cost of $O(Mi)$ for all sensors, where $i$ is the number of iterations of the numerical solver, which cannot be determined exactly before convergence.

Therefore, the overall computational complexity of one update step of the RMSREM algorithm when a new sample arrives is $O(n^3 + Mn^2 + Mi)$.

4. Algorithm Verification

4.1. Numerical Simulation

This experiment is a numerical simulation. To examine the multi-sensor characteristics of the proposed RMSREM algorithm, two sensors with different noise variances were set up, and the system was modeled by a second-order linear ARX model. The governing equation is as follows:

Here, $\varphi_k^m$ is the regressive vector, the input $u_k$ follows a uniform distribution, and $\theta$ is the parameter vector. Two sets of Gaussian noise with different variances were generated and added to the noiseless output. A proportion (in this case, 5%) of the measured values is replaced with random disturbances drawn from a uniform distribution, serving as outliers.

During the numerical simulation, dynamic changes and multi-sensor scenarios were designed to examine the robustness of the RMSREM algorithm. To test the online recursive updating capability of the algorithm, the sample sequence is split into two stages in chronological order (Phase I and Phase II). The parameter vector of the system changes when the phase switches. The resulting time-varying dynamics hinder the application of conventional batch algorithms, whereas the recursive updates of RMSREM are designed to track such changes.

As mentioned above to simulate the multi-sensor scenarios, two types of noise are generated. Subsequently, 40,000 samples are generated according to (

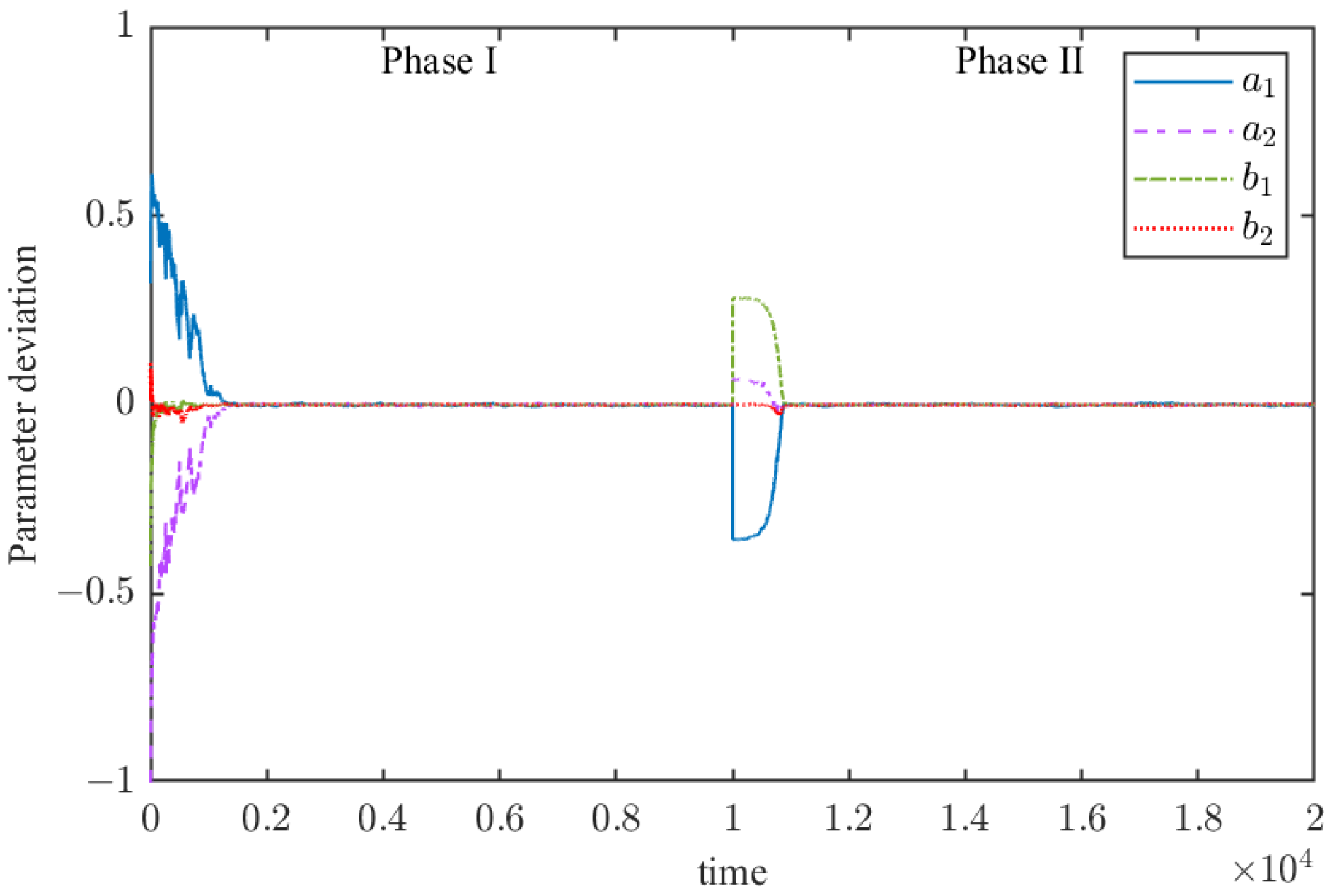

40), add noise to the generated samplewith each sensor containing 20,000 samples and each phase containing 10,000 samples. The input dual-output curves of the numerical example near the phase transition point are shown in

Figure 2, where outliers are also plotted.The time-varying actual parameter vectors are given in

Table 1.

The parameters obtained with the proposed RMSREM algorithm are also shown in Table 1, together with the results of the robust recursive EM (RREM) algorithm [15]. Note that RREM is implemented separately for the two sensors, denoted as RREM 1 and RREM 2, since it cannot process multi-sensor information. For the RMSREM algorithm, the parameter vector is taken at the last instant of each phase. As shown in this table, the parameter vectors estimated by RREM 1 and RMSREM are consistent with the actual parameter vectors. However, the parameters estimated by RREM 2 fail to converge to the true values because of the excessively large noise variance of Sensor 2. This indicates that RMSREM can effectively process multi-sensor information and can estimate the actual parameter vector even when some sensors fail.

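Meanwhile, to evaluate the algorithm performance, the mean square error (MSE) of the estimated output is used, defined as $\mathrm{MSE} = \frac{1}{N}\sum_{k=1}^{N}\left(y_k - \hat{y}_k\right)^2$, where $y_k$ and $\hat{y}_k$ are the actual and estimated outputs, respectively, and $N$ is the number of samples.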
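The MSE can be computed for one-step-ahead predictions, which use measured past outputs, or for N-step (free-run) predictions, which feed the model’s own past predictions back into the regressor. The Python sketch below computes the N-step variant, which the text uses for the self-validation curves, for a hypothetical second-order ARX model with regressor $[y_{k-1}, y_{k-2}, u_{k-1}, u_{k-2}]^{\top}$ and zero initial conditions. The parameter values are illustrative and are not those of Table 1.

```python
import random


def free_run(theta, u, na, nb):
    """N-step (free-run) output of y_k = sum_i a_i y_{k-i} + sum_j b_j u_{k-j}, zero initial state."""
    y = []
    for k in range(len(u)):
        past_y = [y[k - i] if k - i >= 0 else 0.0 for i in range(1, na + 1)]
        past_u = [u[k - j] if k - j >= 0 else 0.0 for j in range(1, nb + 1)]
        y.append(sum(t * p for t, p in zip(theta, past_y + past_u)))
    return y


def mse(y_true, y_pred):
    """Mean square error (1/N) * sum_k (y_k - y_hat_k)^2."""
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


rng = random.Random(0)
u = [rng.uniform(-1.0, 1.0) for _ in range(1000)]
theta_true = [1.2, -0.5, 1.0, 0.3]    # hypothetical [a1, a2, b1, b2]
theta_est = [1.19, -0.49, 1.01, 0.3]  # hypothetical estimate
y_clean = free_run(theta_true, u, na=2, nb=2)
print(mse(y_clean, free_run(theta_est, u, na=2, nb=2)))
```

Because errors in the predicted outputs propagate through the feedback, the N-step MSE is usually a stricter test of model quality than the one-step MSE.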

Table 2 presents the self-validation (SV) and cross-validation (CV) MSE of each algorithm under different outlier ratios. In this table, CV I and CV II denote cross-validation on the first and second stages, respectively. The robust batch EM (RBEM) algorithm, the robust recursive EM (RREM) algorithm, and the recursive multi-task EM (RMTEM) algorithm [25] are adopted as benchmark methods. Since neither RBEM nor RREM can handle multi-sensor data simultaneously, they are implemented separately for the two sensors, denoted as RBEM 1, RBEM 2 and RREM 1, RREM 2, which process Sensor 1 and Sensor 2, respectively. As shown in the table, RMSREM, RREM 1, and RBEM 1 exhibit similar performance, while RREM 2 and RBEM 2 perform poorly because of the excessively large noise variance of Sensor 2. In contrast, RMTEM performs worse under outlier interference.

Table 3 lists the time cost of the proposed RMSREM algorithm on an Intel Core i7-10750H CPU @ 2.60 GHz, alongside benchmark methods including the RLS algorithm, the RREM algorithm, and the RMTEM algorithm. Two key indices are compared: the time cost of a one-step operation and the time cost of solving the dof $\nu_m$, the latter accounting for a significant portion of the computational load. The solution time of RMSREM is comparable to that of the other algorithms under the recursive EM framework.

The parameter convergence process of the RMSREM algorithm is illustrated in Figure 3. The estimates converge after 2000 samples in the first stage. When the system switches to the stage-2 parameter vector at the 10,000-th sample, the proposed recursive algorithm converges again after 1500 samples.

Furthermore, to determine the effective range of RMSREM against outlier interference, outlier proportions from 0% to 30% (in increments of 5%) were injected into the measured values, and 50 Monte Carlo simulations were run.

Figure 4 plots the MSE of the self-validated N-step prediction, where the knee points of the proposed algorithm are marked. The region to the left of the knee point is the effective range of the algorithm in this numerical simulation. The results indicate that the knee point of RMSREM lies between 20% and 25%.

4.2. Continuous Stirred Tank Reactor Example

The continuous stirred tank reactor (CSTR) is a constant-volume, exothermic, irreversible, nonlinear system. Its dynamic behavior follows the reaction mechanism, and the core governing equations, taken from [33], are shown below (see Figure 5 for the schematic diagram):

where $C_A$ denotes the product concentration of Component A (the key output variable of interest in this study), and $q_c$ represents the coolant flow rate (the key manipulated input variable). The dynamic relationship between these two variables is the focus of the analysis. Because the CSTR is nonlinear, the system is linearized at preset operating points to investigate this relationship. In this work, two operating points of the coolant flow rate are selected to simulate the dynamic changes of the Component A concentration, thereby verifying the adaptability of the RMSREM algorithm. The definitions and nominal values of the other parameters in the governing equations can be found in [15].

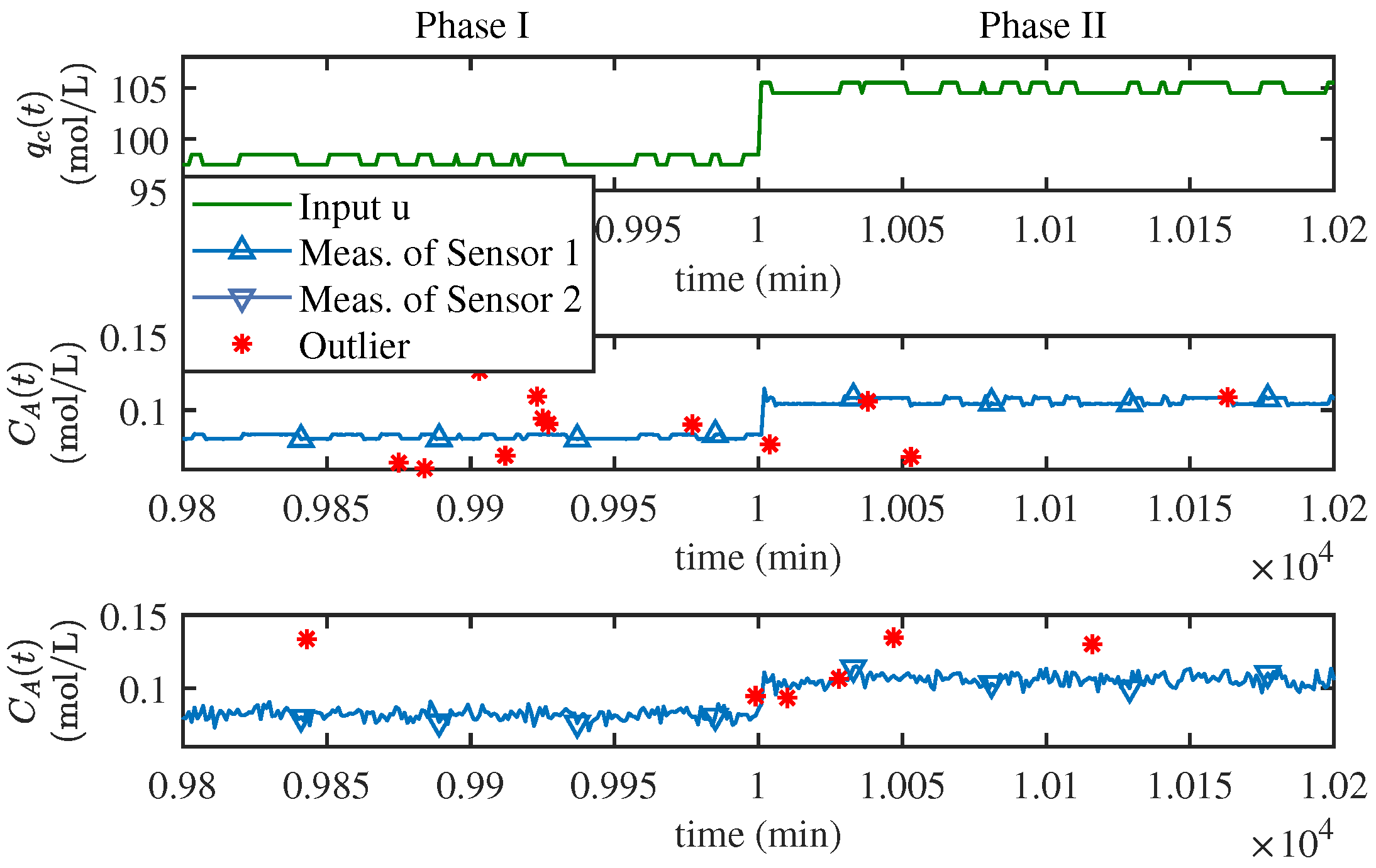

To guarantee sufficient excitation, a binary signal that switches between −0.5 and 0.5 is applied to the coolant flow. The reactor is then operated according to the governing Equations (41) and (42), and the input and output are sampled every minute (sampling period: 1 min). In total, 20,000 samples are recorded, 10,000 in each stage of the simulation. Next, two different series of Gaussian noise are added to the samples to imitate signals from two separate measuring instruments. The first series has a very small variance, while the second has a variance about a thousand times larger. To mimic occasional bad readings, 3% of the samples are randomly selected and replaced with outliers, each a random number between −0.05 and 0.05.

Figure 6 shows a segment of the input and the two output sequences near the stage-switching instant. The deliberately generated outliers are also marked in the figure.

The step size $\gamma_k$ is a user-defined hyperparameter of the RMSREM algorithm that significantly affects its performance. To determine an appropriate value, comparative experiments were conducted with constant step sizes of 0.001, 0.005, and 0.01, and the results are visualized in Figure 7. Based on a comprehensive analysis of the performance metrics, a step size of 0.01 was selected for both simulation examples in this work [10,11].

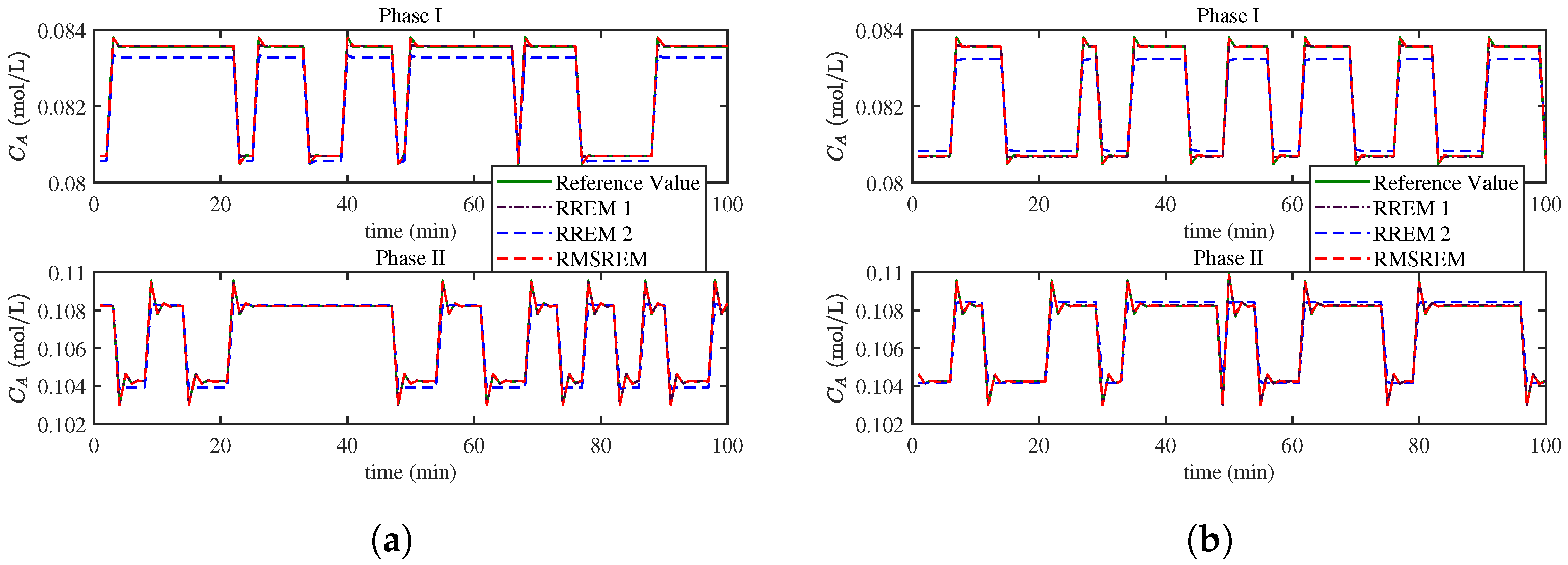

A first-order ARX model is adopted to describe the dynamic characteristics. The RMSREM algorithm is then implemented and compared with the RREM algorithm. Since RREM cannot handle multi-sensor data, it is implemented separately for each sensor, denoted as RREM 1 and RREM 2.

Figure 8a shows the product concentration of Component A for the self-validation samples, i.e., the true values without added noise. The corresponding curves for cross-validation are shown in Figure 8b. For clarity, only randomly selected segments of the curves are presented. Both the SV and CV experiments involve N-step forecasts of the product concentration of Component A in the CSTR. As illustrated in the figures, the proposed algorithm accurately tracks the concentration trajectory in both validation scenarios, even in the presence of outliers. In comparison, RREM exhibits noticeable prediction errors as the sensor noise level rises.

The MSE of the algorithms, defined as $\mathrm{MSE} = \frac{1}{N}\sum_{k=1}^{N}\left(y_k - \hat{y}_k\right)^2$, is listed in Table 4, which shows the impact of different outlier proportions. All results are derived from 50 Monte Carlo simulations to ensure statistical reliability. The benchmark methods include the RBEM algorithm, the RREM algorithm (implemented separately for the two sensors, as in the numerical simulation section), and the RMTEM algorithm. As shown in the table, the proposed RMSREM algorithm outperforms RBEM, RREM, and RMTEM in all outlier-proportion scenarios. Notably, RMTEM performs poorly under outlier interference, while RBEM and RREM also deteriorate significantly when the outlier proportion increases to 5%. In contrast, RMSREM maintains excellent convergence even under such adverse conditions.

The effective range of the RMSREM algorithm in the CSTR process is explored through 50 Monte Carlo simulations. To better verify the multi-sensor robustness of RMSREM, two ways of adding outliers are designed:

- Case 1: The outlier proportions of the two sensors are the same and are increased gradually.
- Case 2: The second sensor is set to a damaged state with 100% outliers, and the outlier proportion of the first sensor is increased gradually.

The outlier proportion varies from 0% to 7.0% in increments of 0.5%.

Figure 9 presents the MSE of N-step self-validation predictions within one phase under different degrees of outlier contamination. The mean and confidence intervals of the MSE for outlier proportions of 3% and 6% are labeled in the graph. Specifically, Figure 9a shows that the effective range reaches 6.0% in Case 1, while Figure 9b indicates an effective range of 3.5% in Case 2. The comparison between these two cases demonstrates the robustness of the multi-sensor framework: even when one sensor is completely corrupted by outliers, the proposed RMSREM algorithm can still exploit valid information from the other sensor to achieve accurate parameter identification.

The ability of the RMSREM algorithm to handle disturbances effectively stems from its use of the t-distribution. Exploiting the properties of this distribution, the algorithm uses the posterior expectation of the variance scaling factor as a weight in the parameter update. Specifically, when an unusual or erroneous measurement (an outlier) appears at time $k$, the expectation step yields a small value of $\langle r_k^m \rangle_{k|k-1}$. Consequently, the influence of the $k$-th data point on the parameter update is reduced. Meanwhile, the contribution of each sensor is further adjusted through the computation of the sensor weight $w_k^m$: a lower weight is assigned to the sensor with greater measurement variability (larger variance).