Enhancing Computational Thinking and Programming Logic Skills with App Inventor 2 and Robotics: Effects on Learning Outcomes, Motivation, and Cognitive Load

Highlights

- The instructional module integrating App Inventor 2 with a six-axis robotic arm significantly improved the students’ computational thinking and programming logic skills.

- Hands-on robotic operation and problem-based learning tasks enhanced the students’ intrinsic motivation, engagement, and willingness to tackle challenges.

- The task-based, visual programming environment effectively reduced the students’ cognitive load by simplifying syntax and supporting concrete spatial reasoning.

- Students reported high system satisfaction, particularly regarding perceived usefulness, ease of use, and behavioral intention toward continued learning.

- Future research could develop and assess interdisciplinary learning scenarios to enhance students’ scientific literacy and learning outcomes.

- The proposed instructional module has demonstrated effectiveness in cultivating students’ cross-disciplinary integration skills within the context of this study’s specific school, age group, and short-term intervention.

Abstract

1. Introduction

- (1) Does the teaching module integrating App Inventor 2 with interactive robotic-arm operation improve students’ computational thinking and programming logic skills?

- (2) How does hands-on interaction with the robotic arm affect intrinsic and extrinsic learning motivation, compared with video-based instruction?

- (3) Does the module reduce cognitive load, as measured by mental load and mental effort scales, compared with video-based instruction?

- (4) How do students rate their satisfaction with the integrated App Inventor 2 and robotic-arm system, particularly its perceived usefulness, its perceived ease of use, and their behavioral intention to continue using it?

2. Literature Review

2.1. Robotics and STEM Education

2.2. App Inventor 2

2.3. Computational Thinking

2.4. Spatial Concepts

- Spatial orientation—understanding relative positions; strengthened by visual cues and task scaffolding.

- Coordinate localization—mapping x–y positions to spatial contexts; reinforced through hands-on manipulation of robotic arms.

2.5. Learning Motivation

2.6. Cognitive Load

2.7. Technology Acceptance Model

2.8. Constructivist and Experiential Learning Theories

3. Materials and Methods

3.1. Research Design and Level

- Independent variable: Instructional activity (hands-on vs. video-based instruction);

- Dependent variables: Learning achievement, learning motivation, cognitive load, and system satisfaction;

- Control variables: The intervention lasted three weeks, with one 50 min session per week, for a total of 150 min. To enhance internal validity, the instructional time, content, assessment items, and instructor were kept consistent across groups, and pre-test scores were used as covariates in the ANCOVA to control for initial differences. Participants were selected to have no prior experience with the target learning content.

3.2. Research Instruments

3.2.1. Learning Achievement Test

3.2.2. Learning Motivation Scale

3.2.3. Cognitive Load Scale

3.2.4. System Satisfaction Questionnaire

3.3. Experimental Design

- O1: Both groups completed a pre-test on programming logic and computational thinking.

- X1: The experimental group received instruction with robotic-arm operations.

- X2: The control group received instruction supported by video demonstrations.

- O2: Both groups completed a post-test on programming logic and computational thinking.

- O3: Both groups completed questionnaires measuring learning motivation and cognitive load.

- O4: The experimental group completed an additional TAM questionnaire.

- Program the robotic arm using App Inventor 2 to navigate a predefined maze.

- Translate logical commands into visual programming blocks within App Inventor 2.

- Test and refine their programs to achieve the correct maze navigation.

- Step-by-step explanations of robotic-arm programming using App Inventor 2.

- Visual demonstrations of the Smart Maze task being completed by the robotic arm.

- Completing, in App Inventor 2, the same task challenges given to the experimental group, so that both groups were exposed to the same content.

3.4. Instructional Design

3.4.1. Course Content

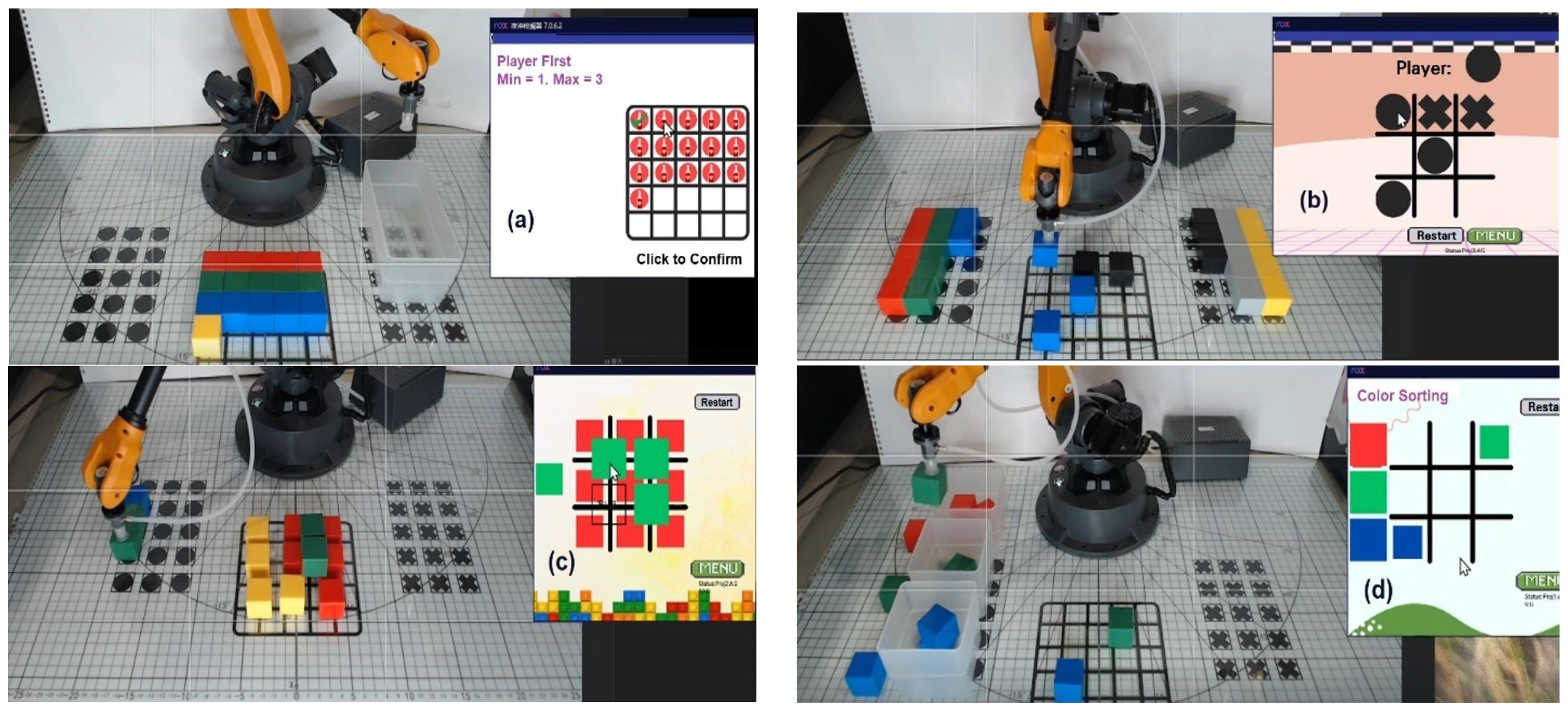

- Removing Blocks: A two-player game of Nim in which players take turns removing blocks from distinct heaps (piles). On each turn, a player must remove at least one block and may remove any number of blocks from a single heap, according to the rules set at the start of the game. The player forced to take the final block loses (the misère variant of Nim).

- Tic-Tac-Toe: A strategy game played on a 3 × 3 grid. Players take turns placing their symbol—either “X” or “O”—in an empty square. The objective is to align three of one’s symbols in a row—horizontally, vertically, or diagonally—before the opponent. If all squares are filled without a winning line, the game ends in a draw.
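The Removing Blocks rule (taking the last block loses) is misère Nim, which has a well-known winning strategy. The sketch below illustrates the game logic students could reason about. It is not the module's App Inventor code, and the function name `misere_move` is our own. While two or more heaps hold more than one block, the strategy is identical to ordinary Nim (make the XOR "nim-sum" zero). The two variants differ only in the endgame.

```python
from functools import reduce
from operator import xor
from typing import List, Optional, Tuple


def misere_move(heaps: List[int]) -> Optional[Tuple[int, int]]:
    """Return (heap_index, new_size) for a winning misère Nim move,
    or None if every move loses against perfect play (or no move exists)."""
    big = [i for i, h in enumerate(heaps) if h > 1]
    ones = sum(1 for h in heaps if h == 1)

    if not big:
        # Only heaps of size 0 or 1 remain: the mover wins iff the number of
        # 1-heaps is even (and non-zero), by leaving an odd number behind.
        if ones > 0 and ones % 2 == 0:
            return heaps.index(1), 0
        return None

    if len(big) == 1:
        # Exactly one heap larger than 1: shrink it to 0 or 1 so that the
        # opponent faces an odd number of single-block heaps.
        return big[0], (1 if ones % 2 == 0 else 0)

    # Two or more large heaps: play exactly as in normal Nim (nim-sum to 0).
    nim_sum = reduce(xor, heaps, 0)
    if nim_sum == 0:
        return None
    for i, h in enumerate(heaps):
        if h ^ nim_sum < h:
            return i, h ^ nim_sum
    return None  # unreachable when nim_sum != 0


print(misere_move([3, 4, 5]))  # (0, 1): leaves [1, 4, 5], whose nim-sum is 0
print(misere_move([1, 1, 1]))  # None: three single blocks is a lost position
```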

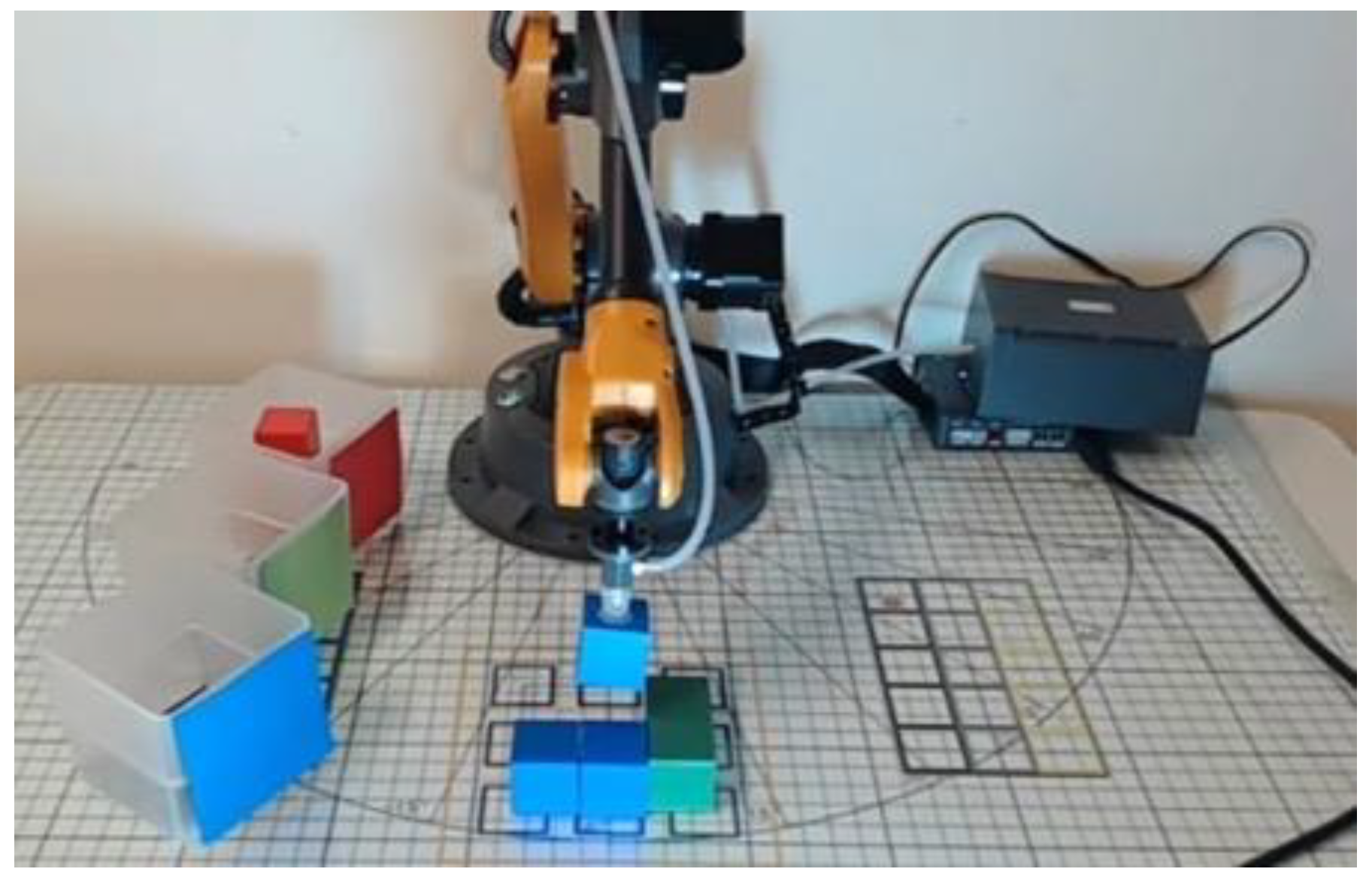

- Block Stacking: This hands-on activity requires players to use a programmed robotic arm to pick up and stack blocks to form specified shapes according to instructions. The task demands precision, careful planning, and spatial reasoning, as the robot must execute each command accurately.

- Color Sorting: In this task, students program a robotic arm to identify and sort blocks by color using a robotic vision system. The activity requires designing logical rules to ensure accurate classification of the blocks.
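The "logical rules" for color sorting can be prototyped as hue thresholds. The sketch below is a minimal, hypothetical rule set built on Python's standard `colorsys` module. The hue bands and the saturation and value cut-offs are assumptions for illustration only. They are not the module's settings and would need calibration against the actual camera and lighting of the robotic vision system.

```python
import colorsys

# Hypothetical hue bands in degrees (0-360); red wraps around 0.
# Real thresholds would have to be calibrated for the camera and lighting.
HUE_BANDS = [
    ("red", 0, 20),
    ("yellow", 40, 70),
    ("green", 90, 160),
    ("blue", 190, 260),
    ("red", 330, 360),
]


def classify(rgb: tuple[int, int, int],
             min_saturation: float = 0.35,
             min_value: float = 0.2) -> str:
    """Map an 8-bit (R, G, B) reading to a color label, or "unknown"."""
    r, g, b = (c / 255 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    if s < min_saturation or v < min_value:
        return "unknown"  # too grey or too dark to classify reliably
    hue = h * 360
    for label, low, high in HUE_BANDS:
        if low <= hue < high:
            return label
    return "unknown"  # hue falls between the configured bands


for reading in [(220, 30, 30), (30, 200, 40), (30, 60, 220), (128, 128, 128)]:
    print(reading, classify(reading))
```

Classifying by hue rather than by raw RGB keeps each rule to a single interval test. This tends to be easier for students to reason about and less sensitive to brightness changes.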

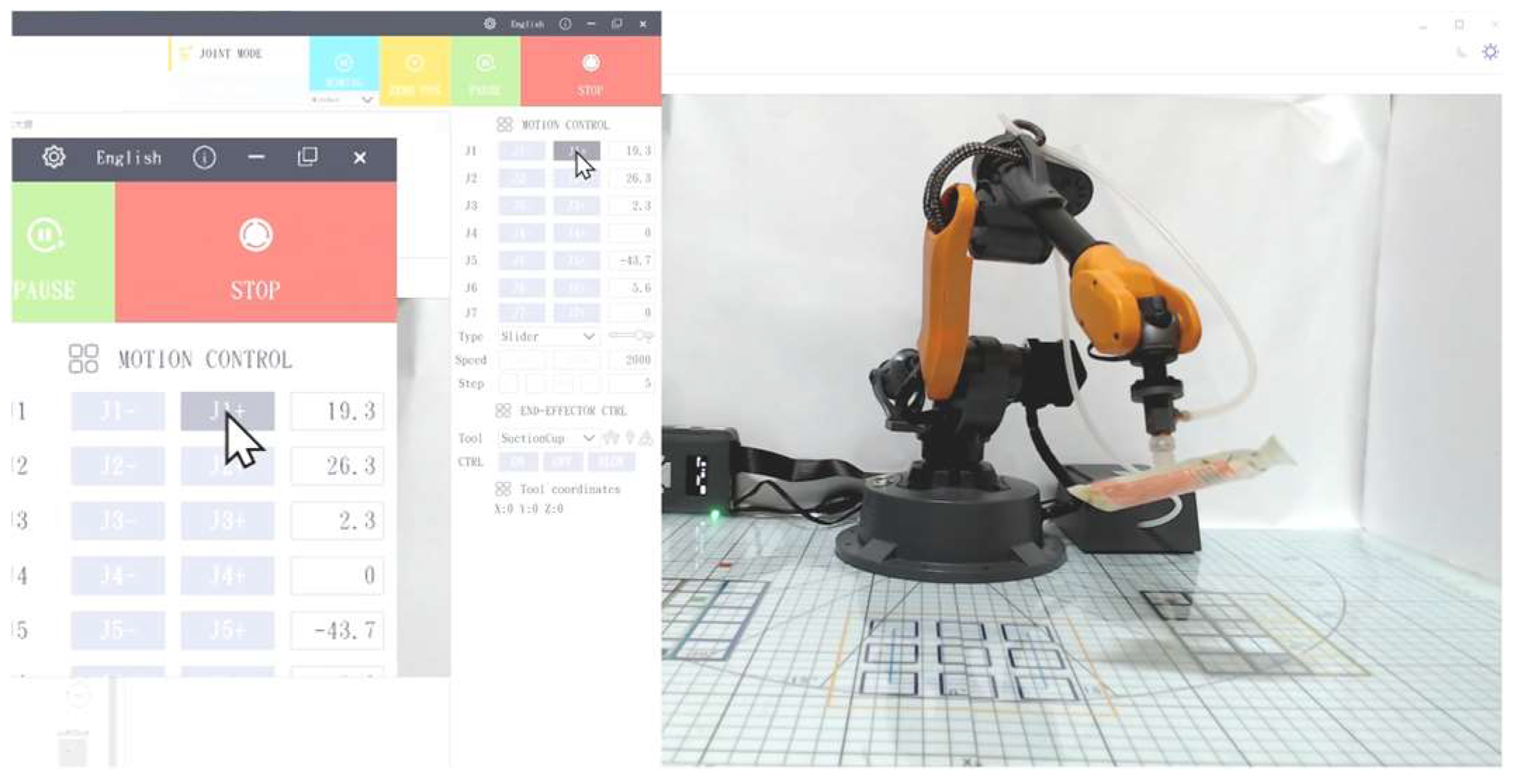

3.4.2. WLKATA Mirobot

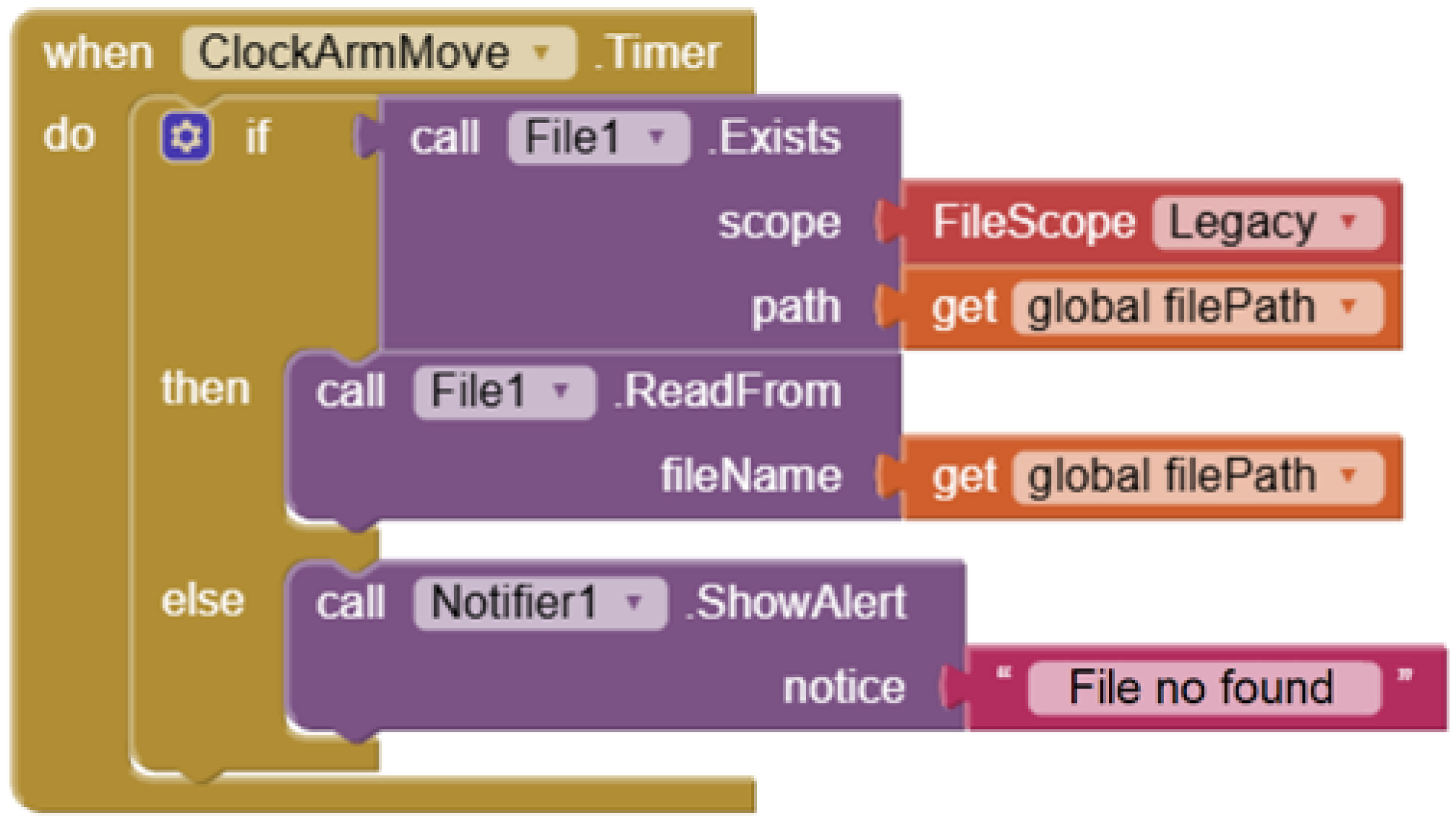

3.4.3. App Inventor 2 Programming

3.4.4. Communication Interface

3.5. Data Analysis

3.5.1. Source of Information

3.5.2. Data Processing

- Learning Motivation: Overall = 0.854; Intrinsic Motivation = 0.823; Extrinsic Motivation = 0.715.

- Cognitive Load: Overall = 0.842; Mental Load = 0.771; Mental Effort = 0.750.

- System Satisfaction: Overall = 0.775; Perceived Usefulness = 0.708; Perceived Ease of Use = 0.730; Behavioral Intention = 0.850.
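This excerpt does not name the statistic, but values like these are most commonly Cronbach's α coefficients of internal consistency. Assuming that is the case, each one is computed as α = k/(k − 1) × (1 − Σσᵢ²/σ_X²), where k is the number of items, σᵢ² is the variance of item i, and σ_X² is the variance of respondents' total scores. The sketch below uses only Python's `statistics` module. The function name and the toy data are illustrative, not the study's data.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for k items, each given as a list of scores
    (one score per respondent, same respondent order in every item)."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("all items need the same number of respondents")
    item_var_sum = sum(variance(item) for item in items)
    totals = [sum(item[r] for item in items) for r in range(n)]
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Two toy items answered by four respondents.
print(round(cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]]), 2))
```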

3.5.3. Statistical Analysis Methods

- Software and versions: Data were analyzed in SPSS 30.0; the programming activities used MIT App Inventor 2 (release nb202b).

- Data cleaning rules: Participants with >20% missing responses or extreme outliers (>3 SD) were excluded, and missing values were handled by listwise deletion.

- Planned analyses: Pre-test and post-test comparisons with paired-samples t-tests; between-group differences with independent-samples t-tests; overall effects with a mixed-design ANOVA. Effect sizes are reported with 95% CIs.

- Instrument: Achievement test is included in Appendix A.

3.5.4. Qualitative Data Design, Treatment, and Analysis

4. Analysis Results

4.1. Learning Effectiveness Analysis

4.2. Learning Motivation Analysis

4.3. Cognitive Load Analysis

4.4. System Satisfaction Analysis

4.5. Qualitative Results

5. Discussion

5.1. Learning Effectiveness and Conceptual Understanding

5.2. Motivation and Engagement in Interactive Learning

5.3. Cognitive Load Reduction and Learning Efficiency

5.4. System Satisfaction and Educational Implications

6. Conclusions and Future Work

6.1. Conclusions

- (1) Effects on Learning Outcomes

- (2) Effects on Learning Motivation

- (3) Effects on Cognitive Load

- (4) Student Feedback

- (5) Limitations and Implications

6.2. Future Work

- (1) Enhancing Learning Content

- (2) Extending Instructional Duration

- (3) Personalized Instruction

- (4) Cross-Disciplinary Applications

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Achievement Test

- Part A. Robot and Spatial Coordinates

- (1) If James wants the robotic arm to draw a straight line on the table, how should he operate the robotic arm?
  - A. Only rotate Joint 1
  - B. Only rotate Joint 2
  - C. Only rotate Joint 3
  - D. Rotate both Joint 2 and Joint 3

- (2)

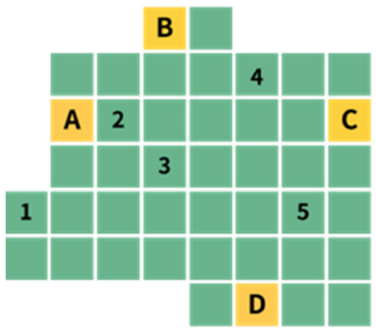

- David designed a Snake game path in App Inventor 2, aiming for it to follow the alphabetical order A→B→C…, as shown in the following figure. If the snake starts from A(2,10), what will be the coordinates of the first point where it touches the X-axis?

- A.

- (8, 0)

- B.

- (12, 0)

- C.

- (16, 0)

- D.

- (20, 0)

- (3) If the robotic arm is to pick up the blue object on the table as shown below, which type of rotational motion is required for the three joints?
  - A. Joint 1 clockwise, Joint 2 clockwise, and Joint 3 counterclockwise
  - B. Joint 1 clockwise, Joint 2 counterclockwise, and Joint 3 clockwise
  - C. Joint 1 counterclockwise, Joint 2 clockwise, and Joint 3 counterclockwise
  - D. Joint 1 counterclockwise, Joint 2 clockwise, and Joint 3 clockwise

- (4) To move the robotic arm’s end effector from the yellow grid to the blue grid, which joint will not be used?
  - A. Joint 1
  - B. Joint 2
  - C. Joint 3
  - D. Joint 4

- (5) A robot’s base is positioned at the origin (0, 0, 0), and its end effector is initially located at coordinates (0, 5, 2). When Joint 1 rotates 90° clockwise, the arm is raised by 2 units and extended forward by 3 units. What will be the new coordinates of the end effector?
  - A. (8, 2, 5)
  - B. (8, 0, 4)
  - C. (5, 8, 5)
  - D. (8, 5, 5)
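Item (5) can be checked with elementary coordinate geometry. The sketch makes three assumptions that the item leaves implicit:

- z is the vertical axis.
- "Clockwise" is viewed from above, looking down the z-axis.
- "Extended forward" lengthens the arm's horizontal reach along its new direction.

Under these assumptions, the end effector moves from (0, 5, 2) to (8, 0, 4), which is option B. The function names are illustrative only.

```python
import math


def rotate_cw_about_z(x: float, y: float, degrees: float) -> tuple[float, float]:
    """Rotate (x, y) clockwise by `degrees`, as seen from above (+z toward the viewer)."""
    a = math.radians(degrees)
    return x * math.cos(a) + y * math.sin(a), -x * math.sin(a) + y * math.cos(a)


def move_end_effector(point, joint1_cw_deg, raise_by, extend_by):
    """Apply a base (Joint 1) rotation, then a radial extension and a vertical raise."""
    x, y, z = point
    x, y = rotate_cw_about_z(x, y, joint1_cw_deg)
    reach = math.hypot(x, y)
    if reach == 0:
        raise ValueError("extension direction is undefined on the base axis")
    scale = (reach + extend_by) / reach  # lengthen the horizontal reach
    # Rounding removes floating-point noise such as 3e-16 from cos(90°).
    return (round(x * scale, 6), round(y * scale, 6), z + raise_by)


print(move_end_effector((0, 5, 2), 90, raise_by=2, extend_by=3))  # (8.0, 0.0, 4)
```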

- Part B. Computational Thinking

- (1) A robot begins at position (0, 0) on a two-dimensional plane, facing right along the positive X-axis. Using block-based programming to control its movement, if the following commands are executed in sequence, what will be its final position and orientation?
  - Turn right 90°
  - Move forward 10 units
  - Turn left 90°
  - Move forward 5 units

  What is the final x-coordinate of the robot?
  - A. 15
  - B. 10
  - C. 0
  - D. 5
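The command sequence in item (1) can be traced with a tiny turtle-style simulator. The heading is a unit vector (dx, dy). A right turn maps it to (dy, −dx), and a left turn maps it to (−dy, dx). The function name and the command format are our own illustration, not App Inventor blocks.

```python
def run_turtle(commands, start=(0, 0), heading=(1, 0)):
    """Trace a robot on a 2-D plane.

    Each command is ("right", degrees), ("left", degrees), or ("forward", units);
    turns must be multiples of 90°. Returns (final_position, final_heading).
    """
    x, y = start
    dx, dy = heading
    for op, value in commands:
        if op in ("right", "left"):
            if value % 90 != 0:
                raise ValueError("only multiples of 90° are supported")
            for _ in range(value // 90 % 4):
                dx, dy = (dy, -dx) if op == "right" else (-dy, dx)
        elif op == "forward":
            x, y = x + dx * value, y + dy * value
        else:
            raise ValueError(f"unknown command {op!r}")
    return (x, y), (dx, dy)


print(run_turtle([("right", 90), ("forward", 10), ("left", 90), ("forward", 5)]))
# ((5, -10), (1, 0)): x = 5 (option D), facing the positive X-axis again
```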

- (2) Tom used his program to control four robots moving within the grid shown in the figure below, where A, B, C, and D represent charging stations. The program may include the following three commands:
  - Step forward: move to the next grid in front
  - Turn left: rotate 90° counterclockwise, but do not move forward
  - Turn right: rotate 90° clockwise, but do not move forward

  - A. Grid 2
  - B. Grid 3
  - C. Grid 4
  - D. Grid 5

- (3) George learned the following four drawing commands and a control command in his computer graphics class:
  - T5: draw a large triangle
  - T1: draw a small triangle
  - R: draw a square
  - Z: draw a trapezoid
  - [ ]#: repeat the commands inside [ ] # times

  George drew the following pattern. Which sequence of commands did he use?
  - A. [T5, R, T1, T1]2 R
  - B. T5, Z [T1]2, Z, [T1]2, R
  - C. [T5, Z, T1]2 T1, T1, R
  - D. [T5, Z, [T1]2]2, R
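The bracket notation [ ]# in item (3) is a small, nestable repetition grammar. The sketch below is illustrative: the parser, its regular expression, and the assumption that the input is well formed are ours. It expands each option into a flat command sequence that can be compared with the drawn pattern.

```python
import re

# Tokens: "[" opens a block, "]<digits>" closes it with a repeat count,
# and T5, T1, R, Z are the four drawing commands.
TOKEN = re.compile(r"\[|\]\d+|T\d|[RZ]")


def expand(program: str) -> list[str]:
    """Expand nested [ ... ]# repeats into a flat list of drawing commands.
    Commas and spaces are treated as separators; input is assumed well formed."""
    tokens = TOKEN.findall(program)
    pos = 0

    def parse_block() -> list[str]:
        nonlocal pos
        out: list[str] = []
        while pos < len(tokens):
            tok = tokens[pos]
            if tok == "[":
                pos += 1
                body = parse_block()          # stops at the matching "]#"
                count = int(tokens[pos][1:])  # digits after "]"
                pos += 1
                out.extend(body * count)
            elif tok.startswith("]"):
                return out                    # the caller reads the count
            else:
                out.append(tok)
                pos += 1
        return out

    return parse_block()


for option in ["[T5, R, T1, T1]2 R", "T5, Z [T1]2, Z, [T1]2, R",
               "[T5, Z, T1]2 T1, T1, R", "[T5, Z, [T1]2]2, R"]:
    print(option, "->", " ".join(expand(option)))
```

Recursive descent mirrors the way the notation nests: an inner repeat is fully expanded before the outer count is applied.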

- (4) A robot vacuum is exploring an unknown environment that may contain obstacles. Equipped with sensors (e.g., ultrasonic sensors), it can detect both the distance and direction of obstacles. Using block-based programming to control its movement, which of the following strategies would be most effective for enabling the robot to navigate and explore the environment efficiently?
  - A. Randomly select a direction and move, repeating many times.
  - B. Move following a fixed pattern (e.g., square or spiral) until all areas are explored.
  - C. Keep moving forward until encountering an obstacle, then randomly choose a direction to bypass it, repeating the process.
  - D. Combine sensor data with pre-set map information to plan an optimal path.

- (5) The control commands of a robot vacuum are defined as follows:
  - Move Forward n: The robot moves forward n grids (n is a positive integer).
  - Move Right: The robot first turns right, then moves forward one grid.
  - Move Left: The robot first turns left, then moves forward one grid.
  - Repeat R: The robot repeats commands inside the brackets R times (R is a positive integer).
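The four vacuum commands in item (5) define a small interpreter. Note that Move Right and Move Left combine a turn with a one-grid step. The sketch below rests on assumptions that the item does not specify:

- The robot moves on an unbounded square grid.
- It starts at (0, 0) facing north.
- A Repeat block is written as a nested list.

The class, command names, and tuple format are hypothetical.

```python
from dataclasses import dataclass

# Unit steps in clockwise order: north, east, south, west.
HEADINGS = [(0, 1), (1, 0), (0, -1), (-1, 0)]


@dataclass
class Vacuum:
    x: int = 0
    y: int = 0
    heading: int = 0  # index into HEADINGS; starting north is an assumption

    def forward(self, n: int) -> None:
        dx, dy = HEADINGS[self.heading]
        self.x += dx * n
        self.y += dy * n


def run(robot: Vacuum, program: list) -> Vacuum:
    for cmd in program:
        op = cmd[0]
        if op == "forward":            # Move Forward n
            robot.forward(cmd[1])
        elif op == "right":            # Move Right: turn right, then one grid
            robot.heading = (robot.heading + 1) % 4
            robot.forward(1)
        elif op == "left":             # Move Left: turn left, then one grid
            robot.heading = (robot.heading - 1) % 4
            robot.forward(1)
        elif op == "repeat":           # Repeat R: run the nested block R times
            for _ in range(cmd[1]):
                run(robot, cmd[2])
        else:
            raise ValueError(f"unknown command {op!r}")
    return robot


bot = run(Vacuum(), [("forward", 2), ("repeat", 2, [("right",), ("left",)])])
print(bot)  # Vacuum(x=2, y=4, heading=0): a two-step staircase to the north-east
```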

- Part C. Programming

- (1) When a robotic arm performs a task, it evaluates sensor readings to determine its actions. Suppose there is a color sensor variable and a shape sensor variable, each storing text representing the detected color or shape of an object. Which of the following programs correctly implements the behavior: if the color sensor reads “Red” and the shape sensor reads “Square”, the label displays “This is a red square”; otherwise, it displays “This is red”?
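In text form, the correct option must implement a single if/else on a compound (logical AND) condition. The sketch below is a Python rendering of that behavior, not App Inventor blocks, and the function name is hypothetical. As the item is worded, the else branch shows “This is red” whenever either condition fails, even for an object that is not red.

```python
def label_text(color_sensor: str, shape_sensor: str) -> str:
    # Both readings must match (logical AND) for the specific message.
    if color_sensor == "Red" and shape_sensor == "Square":
        return "This is a red square"
    return "This is red"  # else branch, exactly as the item specifies


print(label_text("Red", "Square"))
print(label_text("Red", "Circle"))
```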

- (2)

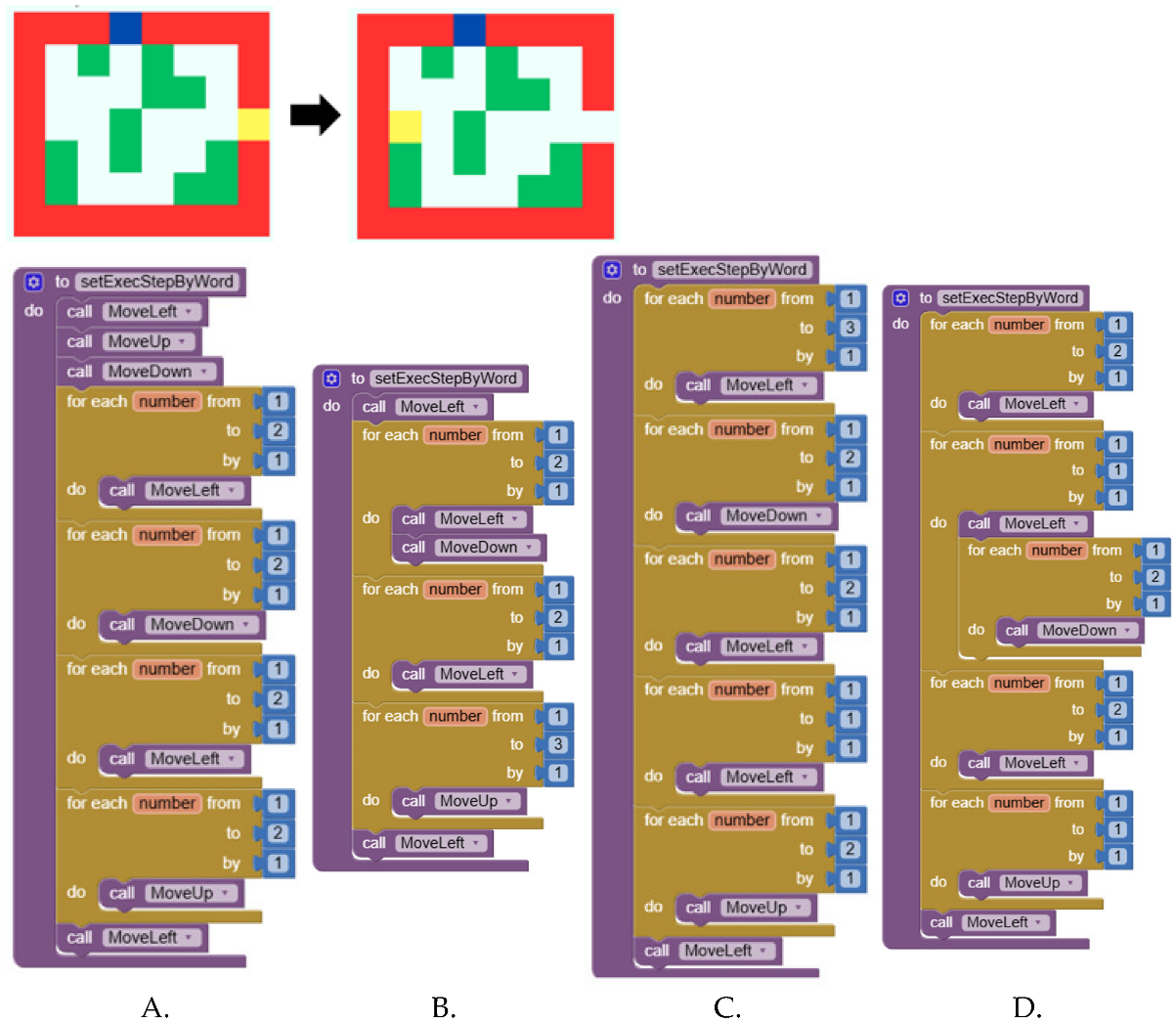

- In the maze shown below, the robot arm’s end position, indicated by the yellow block, moves from the position in the left figure to the position in the right figure. Which App Inventor 2 program correctly moves the robotic arm’s end position to the target location?

- (3)

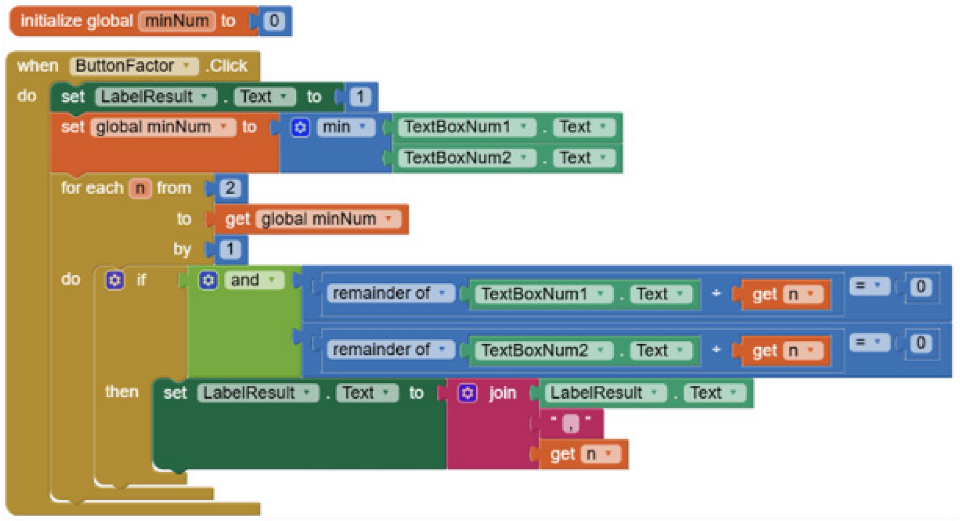

- What value is produced by the following program?

- A.

- Common multiple

- B.

- Least common multiple (LCM)

- C.

- Common factor

- D.

- Greatest common factor (GCF)
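The program in item (3) appears only as a figure, so it is not reproduced here. As background for telling the options apart, the sketch shows the two standard procedures: the GCF by Euclid's algorithm (repeated remainders), and the LCM derived from it via lcm(a, b) × gcf(a, b) = |a × b|. It does not represent the program in the figure. The function names are our own; Python's built-in `math.gcd` and `math.lcm` do the same job.

```python
def gcf(a: int, b: int) -> int:
    """Greatest common factor by Euclid's algorithm (repeated remainders)."""
    while b:
        a, b = b, a % b
    return abs(a)


def lcm(a: int, b: int) -> int:
    """Least common multiple via lcm(a, b) * gcf(a, b) == |a * b|."""
    return abs(a * b) // gcf(a, b) if a and b else 0


print(gcf(12, 18), lcm(12, 18))  # 6 36
```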

- (4) Which program can replace the one shown below while maintaining the same functionality?

- (5) The maze below was created in App Inventor 2 using a list. Red and green squares indicate obstacles, and the yellow square shows the robot’s current position. The variable lastMove is set to “Up.” After executing the following program, which of the figures (A, B, C, or D) represents the updated maze state?
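App Inventor lists are 1-indexed, so a maze stored as one flat list needs a conversion between (row, column) and list position. The sketch below shows that bookkeeping plus one guarded move, under hypothetical assumptions:

- Cells are stored in row-major order.
- Open cells are marked ".", and any other value blocks the move.
- A blocked move leaves the robot in place.

This is not the program in the item, which appears only as a figure.

```python
# (row, column) deltas for each move name.
DELTAS = {"Up": (-1, 0), "Down": (1, 0), "Left": (0, -1), "Right": (0, 1)}


def to_index(row: int, col: int, cols: int) -> int:
    """1-based (row, col) -> 1-based position in a row-major flat list."""
    return (row - 1) * cols + col


def to_row_col(index: int, cols: int) -> tuple[int, int]:
    """Inverse of to_index."""
    return (index - 1) // cols + 1, (index - 1) % cols + 1


def step(maze: list[str], cols: int, robot_index: int, move: str) -> int:
    """Return the robot's new 1-based index after one move; stay put if the
    target is off the grid or is not an open cell ("." by assumption)."""
    row, col = to_row_col(robot_index, cols)
    dr, dc = DELTAS[move]
    r, c = row + dr, col + dc
    rows = len(maze) // cols
    if not (1 <= r <= rows and 1 <= c <= cols):
        return robot_index
    target = to_index(r, c, cols)
    return target if maze[target - 1] == "." else robot_index


# 3 x 3 toy maze: "R" and "G" are obstacles; the robot sits at index 5 (centre).
maze = [".", "R", ".", ".", ".", ".", ".", "G", "."]
last_move = "Up"
print(step(maze, 3, 5, last_move))  # blocked by "R", so the robot stays at 5
```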

References

1. Ching, Y.-H.; Hsu, Y.-C. Educational Robotics for Developing Computational Thinking in Young Learners: A Systematic Review. TechTrends 2023, 67, 423–434.

2. Kalelioglu, F.; Gülbahar, Y. The effects of teaching programming via Scratch on problem-solving skills: A discussion from learners’ perspective. Inform. Educ. 2014, 13, 33–50.

3. Clabes, J. St. Henry District High School’s “Crubotics” Robotics Team Qualifies for State Championship Competition. NKyTribune. 24 November 2023. Available online: https://nkytribune.com/2023/11/st-henry-district-high-schools-crubotics-robotics-team-qualifies-for-state-championship-competition/ (accessed on 28 August 2025).

4. ISO. Manipulating Industrial Robots: It Is the ISO Standard that Defines Terms Relevant to Manipulating Industrial Robots Operated in a Manufacturing Environment. 2021. Available online: https://www.iso.org/standard/75539.html (accessed on 28 August 2025).

5. Papert, S. Situating constructionism. In Constructionism; Harel, I., Papert, S., Eds.; Ablex Publishing: Norwood, NJ, USA, 1991; pp. 1–11.

6. Wing, J.M. Computational thinking. Commun. ACM 2006, 49, 33–35.

7. Pekarcikova, M.; Trebuna, P.; Kliment, M.; Kronova, J. Educational robotics for Industry 4.0 and 5.0 with WLKATA Mirobot in laboratory process modelling. Machines 2025, 13, 753.

8. Brender, J.; El-Hamamsy, L.; Bruno, B.; Lazzarotto, F. Investigating the role of educational robotics in formal mathematics education: The case of geometry for 15-year-old students. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2021.

9. Sabado, W.B. Education 4.0: Using Web-based Massachusetts Institute of Technology (MIT) App Inventor 2 in Android application development. Int. J. Comput. Sci. Res. 2024, 8, 2766–2780.

10. Chen, P.; Huang, R. Design thinking in App Inventor game design and development: A case study. In Proceedings of the IEEE 17th International Conference on Advanced Learning Technologies (ICALT), Timisoara, Romania, 3–7 July 2017.

11. Denner, J.; Werner, L.; Ortiz, E. Computer games created by middle school girls: Can they be used to measure understanding of computer science concepts? Comput. Sci. Educ. 2012, 22, 358–377.

12. Fessakis, G.; Gouli, E.; Mavroudi, E. Problem solving by 5–6 years old kindergarten children in a computer programming environment: A case study. Comput. Educ. 2013, 63, 87–97.

13. Ni, L.; McKlin, T.; Guzdial, M. Outcomes from an App Inventor Summer Camp: Motivating K-12 Students Learning Fundamental Computer Science Concepts with App Inventor. 2016. Available online: https://stelar.edc.org/sites/default/files/Ni%20et%20al%202016.pdf (accessed on 28 August 2025).

14. Zaranis, N.; Orfanakis, V.; Papadakis, S.; Kalogiannakis, M. Using Scratch and App Inventor for teaching introductory programming in secondary education: A case study. Int. J. Technol. Enhanc. Learn. 2016, 8, 217–233.

15. Kalakos, N.; Papantoniou, A. Pervasiveness in real-world educational games: A case of Lego Mindstorms and M.I.T App Inventor. In Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Vesa, J., Ed.; Springer: Cham, Switzerland, 2012; Volume 118, pp. 17–28.

16. Briz-Ponce, L.; Pereira, A.; Carvalho, L.; Juanes-Méndez, J.A.; García-Peñalvo, F.J. Learning with mobile technologies – Students’ behavior. Comput. Hum. Behav. 2017, 72, 612–620.

17. Ministry of Education (MOE), Taiwan. National Academy for Educational Research. 2021. Available online: https://www.lysh.tyc.edu.tw/wp-content/uploads/2025/04/taiwan-curriculum-guidelines.pdf (accessed on 28 August 2025).

18. Wing, J.M. Research Notebook: Computational Thinking: What and Why? The Link Magazine, Carnegie Mellon University. 2011. Available online: https://openlab.bmcc.cuny.edu/edu-210211-summer-2023-j-longley/wp-content/uploads/sites/3085/2023/06/CT-What-And-Why-copy.pdf (accessed on 28 August 2025).

19. Barr, V.; Stephenson, C. Bringing computational thinking to K–12: What is it and why is it important? ACM Inroads 2011, 2, 48–54.

20. Rodríguez del Rey, Y.A.; Cawanga Cambinda, I.N.; Deco, C.; Bender, C.; Avello-Martínez, R.; Villalba-Condori, K.O. Developing computational thinking with a module of solved problems. Comput. Appl. Eng. Educ. 2021, 38, 506–516.

21. Cuervo-Cely, K.D.; Restrepo-Calle, F.; Ramírez-Echeverry, J.J. Effect of gamification on the motivation of computer programming students. J. Inf. Technol. Educ. Res. 2022, 21, 1–23.

22. Gundersen, S.W.; Lampropoulos, G. Using serious games and digital games to improve students’ computational thinking and programming skills in K–12 education: A systematic literature review. Technologies 2025, 13, 113.

23. Noordin, N.H. Computational thinking through scaffolded game development activities: A study with graphical programming. Eur. J. Educ. Res. 2025, 14, 1137–1150.

24. Wu, C.-H.; Chien, Y.-C.; Chou, M.-T.; Huang, Y.-M. Integrating computational thinking, game design, and design thinking: A scoping review on trends, applications, and implications for education. Humanit. Soc. Sci. Commun. 2025, 12, 163.

25. Yang, W.; Liu, H.; Chen, N.; Xu, P.; Lin, X. Is Early Spatial Skills Training Effective? A Meta-Analysis. Front. Psychol. 2020, 11, 1938.

26. Uttal, D.H.; Cohen, C.A. Spatial thinking and STEM education: When, why, and how? Psychol. Learn. Motiv. 2012, 57, 147–181.

27. Newcombe, N.S. Picture this: Increasing math and science learning by improving spatial thinking. Am. Educ. 2010, 34, 29–35.

28. Cattell, J.M.; Galton, F. Mental tests and measurements. Mind 1890, 15, 373–381.

29. Thurstone, L.L. Primary Mental Abilities; University of Chicago Press: Chicago, IL, USA, 1938. Available online: https://archive.org/details/primarymentalabi00thur (accessed on 28 August 2025).

30. Tarng, W.; Wu, Y.-J.; Ye, L.-Y.; Tang, C.-W.; Lu, Y.-C.; Wang, T.-L.; Li, C.-L. Application of virtual reality in developing the digital twin for an integrated robot learning system. Electronics 2024, 13, 2848.

31. Berson, I.R.; Berson, M.J.; McKinnon, C.; Aradhya, D.; Alyaeesh, M.; Luo, W.; Shapiro, B.R. An exploration of robot programming as a foundation for spatial reasoning and computational thinking in preschoolers’ guided play. Early Child. Res. Q. 2023, 65, 57–67.

32. Markvicka, E.; Finnegan, J.; Moomau, K.; Sommers, A.; Peteranetz, M.; Daher, T. Designing learning experiences with a low-cost robotic arm. In Proceedings of the 2023 ASEE Annual Conference & Exposition, Baltimore, MD, USA, 25–28 June 2023.

33. Francis, K.; Rothschuh, S.; Poscente, D.; Davis, B. Enhancing Students’ Spatial Reasoning Skills with Robotics Intervention. University of Calgary. 2020. Available online: https://ucalgary.scholaris.ca/server/api/core/bitstreams/9a55604d-1dbc-4df1-8a2f/content (accessed on 28 August 2025).

34. Julià, C.; Antolí, J.Ò. Spatial ability learning through educational robotics. Int. J. Technol. Des. Educ. 2016, 26, 185–203.

35. Stipek, D. Motivation and instruction. In Handbook of Educational Psychology; Berliner, D., Calfee, R., Eds.; Macmillan: New York, NY, USA, 1995; pp. 85–113.

36. Maehr, M.L.; Meyer, H.A. Understanding motivation and schooling: Where we’ve been, where we are, and where we need to go. Educ. Psychol. Rev. 1997, 9, 371–409.

37. Ryan, R.M.; Deci, E.L. Intrinsic and extrinsic motivations: Classic definitions and new directions. Contemp. Educ. Psychol. 2000, 25, 54–67.

38. Deci, E.L.; Vallerand, R.J.; Pelletier, L.G.; Ryan, R.M. Motivation and education: The self-determination perspective. Educ. Psychol. 1991, 26, 325–346.

39. Zamora, M.; Lozada, D.; Buele, E.; Avilés-Castillo, M. Robotics in higher education and its impact on digital learning. Front. Comput. Sci. 2025, 7, 1607766.

40. Kaloti-Hallak, F.; Armoni, M.; Ben-Ari, M. The effectiveness of robotics competitions on students’ learning of computer science. Olymp. Inform. 2015, 9, 89–112.

41. Sweller, J. Cognitive Load Theory and Individual Differences. Learn. Individ. Differ. 2024, 110, 102423.

42. Sweller, J. Cognitive load theory and e-learning. In Artificial Intelligence in Education; Biswas, G., Bull, S., Kay, J., Mitrovic, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6738, pp. 3–17.

43. Paas, F. Cognitive load measurement as a means to advance cognitive load theory. Educ. Psychol. 2003, 38, 63–71.

44. Chen, C.-M.; Lee, T.-H.; Hsu, S.-H. Extending the Technology Acceptance Model (TAM) to Predict Adoption of Metaverse Technology for Education. Educ. Technol. Res. Dev. 2023, 71, 123–145.

45. Venkatesh, V. Leveraging Context: Re-Thinking Research Processes to Make “Contributions to Theory”. Inf. Syst. Res. 2025, 36, 1871–1886.

46. Lee, A.T.; Ramasamy, R.K.; Subbarao, A. Understanding Psychosocial Barriers to Healthcare Technology Adoption: A Review of TAM and UTAUT Frameworks. Healthcare 2025, 13, 250.

47. Venkatesh, V.; Davis, F.D. A theoretical extension of the technology acceptance model: Four longitudinal field studies. Manage. Sci. 2000, 46, 186–204.

48. Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003, 27, 425–478.

49. Pedraja-Rejas, L.; Muñoz-Fritis, C.; Rodríguez-Ponce, E.; Laroze, D. Mobile Learning and Its Effect on Learning Outcomes and Critical Thinking: A Systematic Review. Appl. Sci. 2024, 14, 9105.

50. Teo, T. Factors influencing teachers’ intention to use technology: Model development and test. Comput. Educ. 2011, 57, 2432–2440.

51. Piaget, J. To Understand Is to Invent: The Future of Education; Grossman: New York, NY, USA, 1973.

52. Kolb, D.A. Experiential Learning: Experience as the Source of Learning and Development; Prentice Hall: Englewood Cliffs, NJ, USA, 1984.

53. Atmatzidou, S.; Demetriadis, S. Advancing students’ computational thinking skills through educational robotics: A study on age and gender relevant differences. Robot. Auton. Syst. 2016, 75, 661–670.

54. Anderson, L.W.; Krathwohl, D.R. A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives: Complete Edition; Addison Wesley Longman, Inc.: New York, NY, USA, 2001.

55. Dagienė, V.; Stupurienė, G. Informatics concepts and computational thinking in K–12 education: A Lithuanian perspective. J. Inf. Process. 2016, 24, 732–739.

56. Pintrich, P.R. A Manual for the Use of the Motivated Strategies for Learning Questionnaire (MSLQ); Nat. Center for Research to Improve Postsecondary Teaching and Learning, University of Michigan: Ann Arbor, MI, USA, 1991.

57. Wang, L.C.; Chen, M.P. The effects of game strategy and preference-matching on flow experience and programming performance in game-based learning. Innov. Educ. Teach. Int. 2010, 47, 39–52.

58. Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

59. Vygotsky, L.S. Mind in Society: The Development of Higher Psychological Processes; Harvard University Press: Cambridge, MA, USA, 1978.

60. Mayer, R.E. Multimedia Learning, 2nd ed.; Cambridge University Press: Cambridge, UK, 2009.

61. Fredricks, J.A.; Blumenfeld, P.C.; Paris, A.H. School Engagement: Potential of the Concept, State of the Evidence. Rev. Educ. Res. 2004, 74, 59–109.

62. Sweller, J. Cognitive Load Theory. In Psychology of Learning and Motivation; Elsevier Academic Press: Amsterdam, The Netherlands, 2010; Volume 55, pp. 37–76.

63. Johnson-Glenberg, M.C. Embodied Learning: Why at School the Body Helps the Mind. Educ. Psychol. Rev. 2018, 30, 2098.

| Learning Topics | Cognitive Level | Test Items | Supporting References |
|---|---|---|---|
| Robots and Spatial Coordinates | Understanding | 2, 3, 5 | [1,7,31,33,34] |
| | Applying | 1, 4 | [1,7,31] |
| | Analyzing | None | [1,31,34] |
| Computational Thinking | Understanding | 8 | [6,19,20,53] |
| | Applying | 6, 10 | [6,20,53,55] |
| | Analyzing | 7, 9 | [6,20,53] |
| Programming Logic | Understanding | 13 | [9,10,14] |
| | Applying | 11, 12, 14, 15 | [9,10,14,15] |
| | Analyzing | None | [9,14,15] |

| Group | Pre-Test | Treatment | Post-Test |
|---|---|---|---|
| Experimental Group | O1 | X1 | O2, O3, O4 |
| Control Group | O1 | X2 | O2, O3 |

| Group | Cognitive Level | Pre-Test Mean | Pre-Test SD | Post-Test Mean | Post-Test SD |
|---|---|---|---|---|---|
| Experimental | Understanding | 2.95 | 1.03 | 3.54 | 0.77 |
| Control | Understanding | 2.59 | 1.21 | 2.95 | 0.94 |
| Experimental | Applying | 4.00 | 1.58 | 5.35 | 1.03 |
| Control | Applying | 3.43 | 1.48 | 3.76 | 1.40 |
| Experimental | Analyzing | 0.51 | 0.61 | 0.84 | 0.55 |
| Control | Analyzing | 0.59 | 0.69 | 0.59 | 0.55 |
| Experimental | Total Score | 7.45 | 2.29 | 9.73 | 1.50 |
| Control | Total Score | 6.62 | 1.97 | 7.29 | 1.83 |

| Group | Cognitive Level | Gain | SD | SE | t | p |
|---|---|---|---|---|---|---|
| Experimental | Understanding | 0.59 | 0.77 | 0.13 | 4.65 | <0.001 *** |
| Control | Understanding | 0.36 | 0.94 | 0.16 | 2.32 | 0.026 * |
| Experimental | Applying | 1.35 | 1.03 | 0.17 | 7.99 | <0.001 *** |
| Control | Applying | 0.33 | 1.40 | 0.23 | 1.43 | 0.161 |
| Experimental | Analyzing | 0.33 | 0.55 | 0.09 | 3.67 | <0.001 *** |
| Control | Analyzing | 0.00 | 0.55 | 0.09 | 0.00 | 1.00 |
| Experimental | Total Score | 2.27 | 1.50 | 0.25 | 9.27 | <0.001 *** |
| Control | Total Score | 0.67 | 1.83 | 0.30 | 2.23 | 0.032 * |
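As a check on the arithmetic in the table above: in a paired-samples t-test, t is the mean gain divided by its standard error, where SE = SD/√n and df = n − 1. The sketch assumes n = 37 per group, the group size reported in the motivation and cognitive-load tables. It recomputes SE and t from the tabulated gains and SDs. p-values would also require the t distribution (e.g., from SciPy) and are omitted. The function name is our own.

```python
import math


def paired_t(mean_gain: float, sd_gain: float, n: int) -> tuple[float, float, int]:
    """Return (standard error, t statistic, degrees of freedom) for a
    paired-samples t-test computed from summary statistics of the gains."""
    se = sd_gain / math.sqrt(n)
    return se, mean_gain / se, n - 1


# Gain and SD values from the table above; n = 37 is an assumption taken
# from the group sizes reported elsewhere in the paper.
for label, gain, sd in [
    ("Experimental, Total Score", 2.27, 1.50),
    ("Control, Total Score", 0.67, 1.83),
    ("Control, Applying", 0.33, 1.40),
]:
    se, t, df = paired_t(gain, sd, 37)
    print(f"{label}: SE = {se:.2f}, t({df}) = {t:.2f}")
```

The sketch gives t ≈ 9.21, 2.23, and 1.43, against the tabulated 9.27, 2.23, and 1.43. The small gap in the first row is consistent with the gains and SDs being rounded to two decimals.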

| Source | Type III Sum of Squares | df | F | p | Partial η² |
|---|---|---|---|---|---|
| Test | 80.277 | 1 | 40.503 | 0.000 *** | 0.360 |
| Group | 98.926 | 1 | 18.253 | 0.000 *** | 0.202 |
| Test × Group | 23.520 | 1 | 11.867 | 0.001 ** | 0.141 |
| Error (between subjects) | 390.216 | 72 | | | |
| Error (within subjects) | 142.703 | 72 | | | |
| Total | 735.642 | 147 | | | |
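The F ratios and effect sizes in the mixed-design table follow directly from the sums of squares. Each effect's mean square (SS/df) is divided by the mean square of its error term. Test and Test × Group use the within-subjects residual (SS = 142.703, df = 72). Group uses the between-subjects error (SS = 390.216, df = 72). Partial η² is SS_effect/(SS_effect + SS_error). The sketch below recomputes the tabulated values; the helper name is our own.

```python
def f_and_partial_eta2(ss_effect, df_effect, ss_error, df_error):
    """F ratio and partial eta-squared for one effect and its error term."""
    ms_effect = ss_effect / df_effect
    ms_error = ss_error / df_error
    return ms_effect / ms_error, ss_effect / (ss_effect + ss_error)


SS_WITHIN_ERROR, SS_BETWEEN_ERROR, DF_ERROR = 142.703, 390.216, 72

effects = {
    "Test": (80.277, SS_WITHIN_ERROR),
    "Group": (98.926, SS_BETWEEN_ERROR),
    "Test x Group": (23.520, SS_WITHIN_ERROR),
}
for name, (ss, ss_err) in effects.items():
    f, eta = f_and_partial_eta2(ss, 1, ss_err, DF_ERROR)
    print(f"{name}: F(1, {DF_ERROR}) = {f:.3f}, partial eta^2 = {eta:.3f}")
```

The recomputed values (40.503/0.360, 18.253/0.202, 11.867/0.141) match the table. This confirms that the reported η² values are partial η² rather than classical η² (SS_effect/SS_total).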

| Simple Main Effect | Type III Sum of Squares | df | F | p |
|---|---|---|---|---|
| Test within Experimental Group | 95.351 | 1 | 37.454 | 0.000 *** |
| Test within Control Group | 8.446 | 1 | 5.956 | 0.020 * |
| Error (within subjects) | 142.703 | 72 | | |
| Group at Pre-test | 12.986 | 1 | 2.834 | 0.097 |
| Group at Post-test | 109.459 | 1 | 38.818 | 0.000 *** |
| Error (between subjects) | 390.216 | 72 | | |

| Group | Mean | SD | df | t | p |
|---|---|---|---|---|---|
| Experimental Group (n = 37) | 3.71 | 0.56 | 72 | 2.206 | 0.03 * |
| Control Group (n = 37) | 3.41 | 0.61 | | | |

| Group | Extrinsic Motivation Mean | Extrinsic Motivation SD | p | Intrinsic Motivation Mean | Intrinsic Motivation SD | p |
|---|---|---|---|---|---|---|
| Experimental Group | 3.66 | 0.62 | 0.075 | 3.77 | 0.64 | 0.031 * |
| Control Group | 3.40 | 0.62 | | 3.43 | 0.69 | |

| Dimension | Question | Experimental Mean | Experimental SD | Control Mean | Control SD | p |
|---|---|---|---|---|---|---|
| Extrinsic Motivation | | 3.89 | 0.698 | 3.58 | 0.818 | 0.088 |
| | | 3.62 | 0.794 | 3.41 | 0.818 | 0.257 |
| | | 3.45 | 0.691 | 3.45 | 0.691 | 0.153 |
| Overall extrinsic motivation | | 3.66 | 0.64 | 3.40 | 0.62 | 0.075 |
| Intrinsic Motivation | | 4.00 | 0.707 | 3.48 | 0.789 | 0.004 ** |
| | | 3.67 | 0.818 | 3.46 | 0.789 | 0.249 |
| | | 3.64 | 0.823 | 3.35 | 0.777 | 0.119 |
| Overall intrinsic motivation | | 3.77 | 0.64 | 3.43 | 0.69 | 0.031 * |
| Overall learning motivation | | 3.71 | 0.56 | 3.41 | 0.61 | 0.030 * |

| Group | Mean | SD | t | p | Cohen’s d |
|---|---|---|---|---|---|
| Experimental Group (n = 37) | 2.60 | 0.58 | −3.401 | 0.001 ** | 0.78 |
| Control Group (n = 37) | 3.02 | 0.50 | | | |
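The between-group t values and Cohen's d can be recomputed from the reported means and SDs. With equal group sizes, the pooled SD is √((s₁² + s₂²)/2), and Student's t is (m₁ − m₂)/(s_p·√(1/n₁ + 1/n₂)). Cohen's d is (m₁ − m₂)/s_p. The sketch below implements the general pooled-variance form. The function name is our own; SciPy's `scipy.stats.ttest_ind_from_stats` also returns t and p from summary statistics.

```python
import math


def pooled_t_and_d(m1, s1, n1, m2, s2, n2):
    """Student's independent-samples t (pooled variance), Cohen's d, and df."""
    sp = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    t = (m1 - m2) / (sp * math.sqrt(1 / n1 + 1 / n2))
    d = (m1 - m2) / sp
    return t, d, n1 + n2 - 2


for label, stats in [
    ("Learning motivation", (3.71, 0.56, 37, 3.41, 0.61, 37)),
    ("Cognitive load", (2.60, 0.58, 37, 3.02, 0.50, 37)),
]:
    t, d, df = pooled_t_and_d(*stats)
    print(f"{label}: t({df}) = {t:.2f}, d = {d:.2f}")
```

This gives t ≈ 2.20 and d ≈ 0.51 for learning motivation (tabulated t = 2.206). For cognitive load it gives t ≈ −3.34 and |d| ≈ 0.78, against the tabulated −3.401 and 0.78. The gap in t likely reflects the two-decimal means used as input.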

| Group | Mental Load Mean | Mental Load SD | p | Mental Effort Mean | Mental Effort SD | p |
|---|---|---|---|---|---|---|
| Experimental Group | 2.65 | 0.65 | 0.002 ** | 2.51 | 0.67 | 0.004 ** |
| Control Group | 3.07 | 0.51 | | 2.94 | 0.61 | |

| Dimension | Question | Experimental Mean | Experimental SD | Control Mean | Control SD | p |
|---|---|---|---|---|---|---|
| Mental Load | | 3.10 | 0.90 | 3.76 | 0.70 | 0.001 ** |
| | | 2.94 | 0.81 | 3.23 | 0.70 | 0.107 |
| | | 2.59 | 0.95 | 3.00 | 0.85 | 0.055 |
| | | 2.37 | 1.00 | 2.56 | 0.78 | 0.376 |
| | | 2.24 | 0.79 | 2.82 | 0.75 | 0.002 ** |
| Overall mental load | | 2.65 | 0.65 | 3.07 | 0.51 | 0.002 ** |
| Mental Effort | | 2.59 | 0.79 | 2.94 | 0.79 | 0.056 |
| | | 2.48 | 0.76 | 3.17 | 0.85 | 0.000 *** |
| | | 2.45 | 0.80 | 2.71 | 0.79 | 0.162 |
| Overall mental effort | | 2.51 | 0.67 | 2.94 | 0.61 | 0.004 ** |
| Overall cognitive load | | 2.60 | 0.58 | 3.02 | 0.50 | 0.001 ** |

| Dimension | Question | Mean | SD |
|---|---|---|---|
| Perceived Usefulness | | 4.08 | 0.587 |
| | | 4.03 | 0.677 |
| | | 3.87 | 0.578 |
| Overall perceived usefulness | | 3.99 | 0.614 |
| Perceived Ease of Use | | 3.43 | 0.948 |
| | | 3.55 | 0.724 |
| | | 3.74 | 0.724 |
| Overall perceived ease of use | | 3.57 | 0.799 |
| Behavioral Intention | | 3.42 | 0.722 |
| | | 3.39 | 0.823 |
| | | 3.13 | 0.777 |
| Overall behavioral intention | | 3.31 | 0.774 |
| Overall system satisfaction | | 3.63 | 0.73 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, Y.-T.; Li, C.-L.; Chang, C.-C.; Tarng, W. Enhancing Computational Thinking and Programming Logic Skills with App Inventor 2 and Robotics: Effects on Learning Outcomes, Motivation, and Cognitive Load. Sensors 2025, 25, 7059. https://doi.org/10.3390/s25227059
