End-to-End Privacy-Aware Federated Learning for Wearable Health Devices via Encrypted Aggregation in Programmable Networks

Abstract

1. Introduction

- A formally defined mathematical framework for encrypted edge-assisted federated learning. Unlike prior heuristic or architecture-only FL designs, EAH-FL provides a rigorous mathematical formulation that models client updates, encrypted aggregation, and edge-level optimization under HE. This formalism allows analytical reasoning about latency, encryption cost, and convergence behavior.

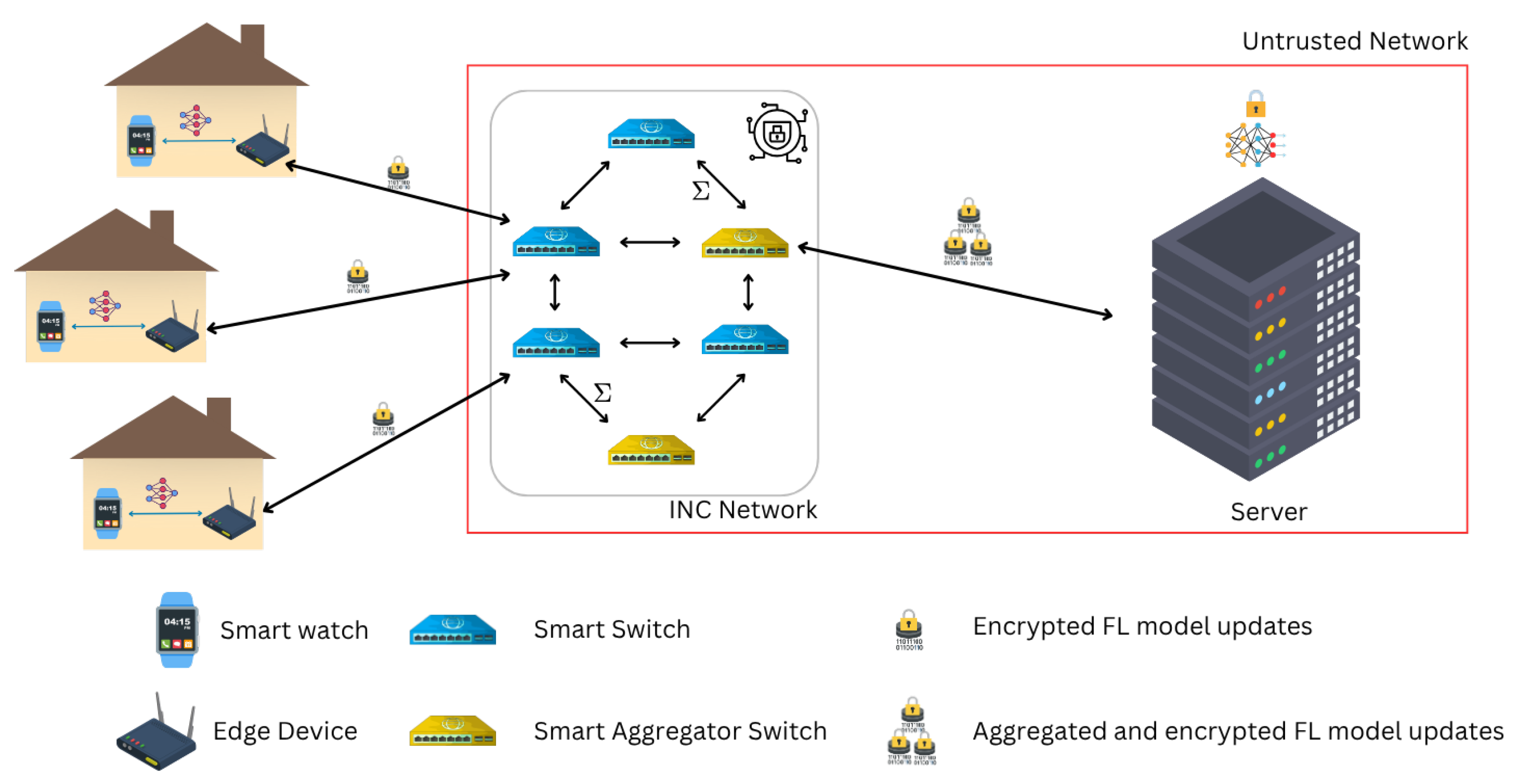

- A novel hybrid architecture combining HE and INC. The EAH-FL framework jointly leverages homomorphic encryption and programmable edge nodes for in-network encrypted aggregation, ensuring that all model updates remain encrypted end-to-end. This integration bridges the gap between privacy-preserving cryptography and system-level acceleration, achieving both confidentiality and real-time performance.

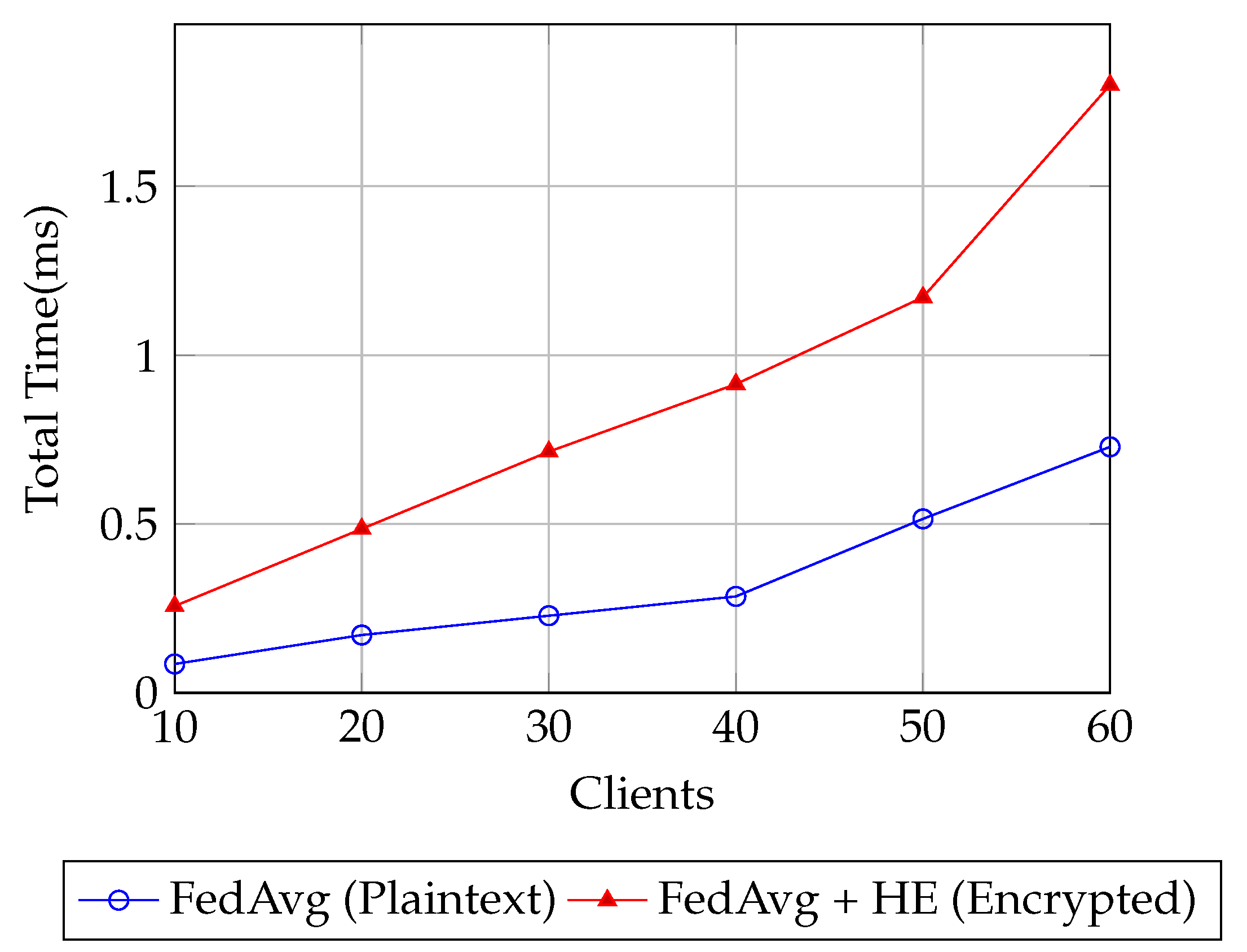

- A Graph Neural Network (GNN)-assisted topology inference module that improves encrypted model aggregation efficiency by learning dynamic inter-client relationships. Experimental results show up to 23% lower latency, a 31% communication reduction (CRR), and a 12% improvement in convergence stability compared with static edge aggregation.

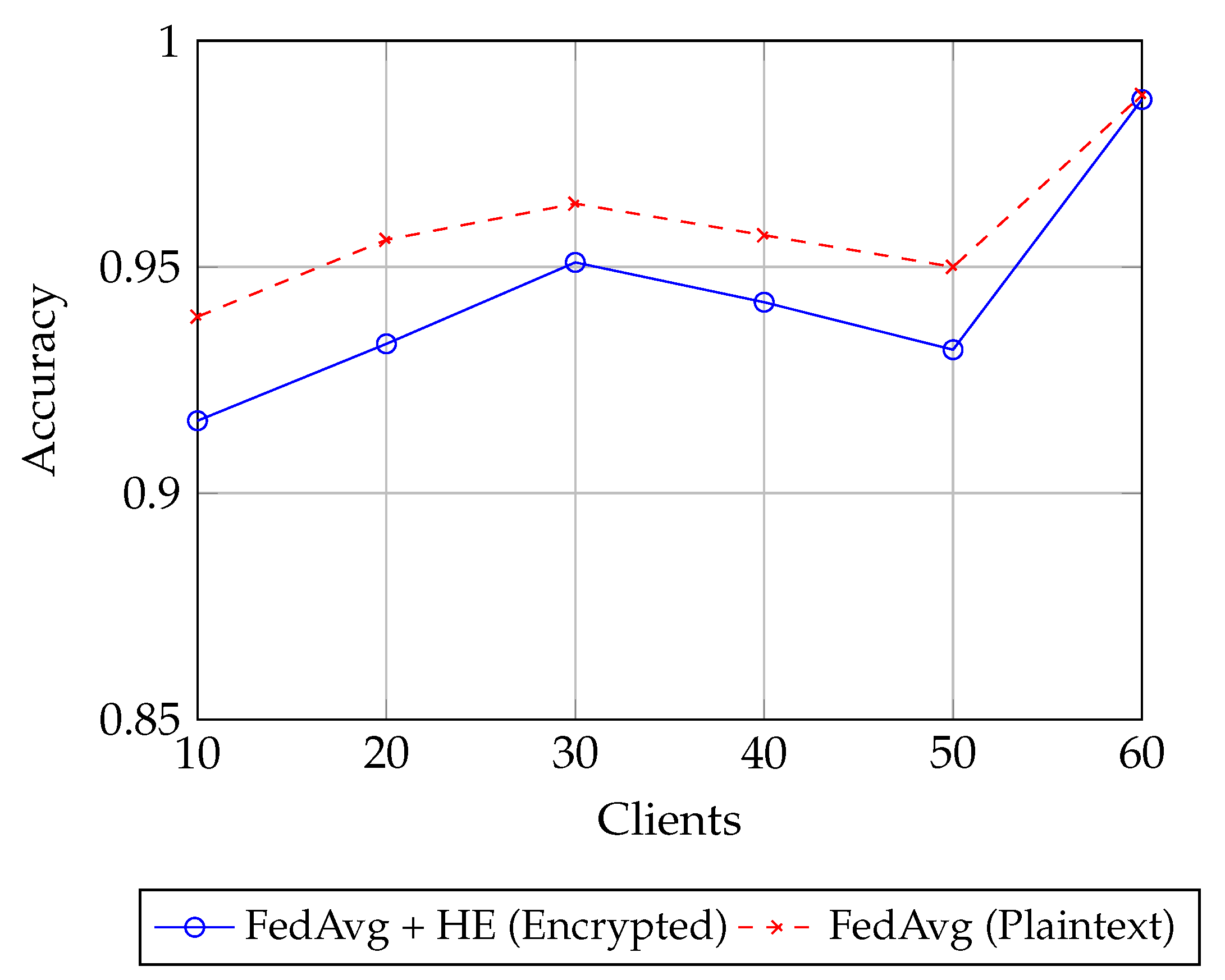

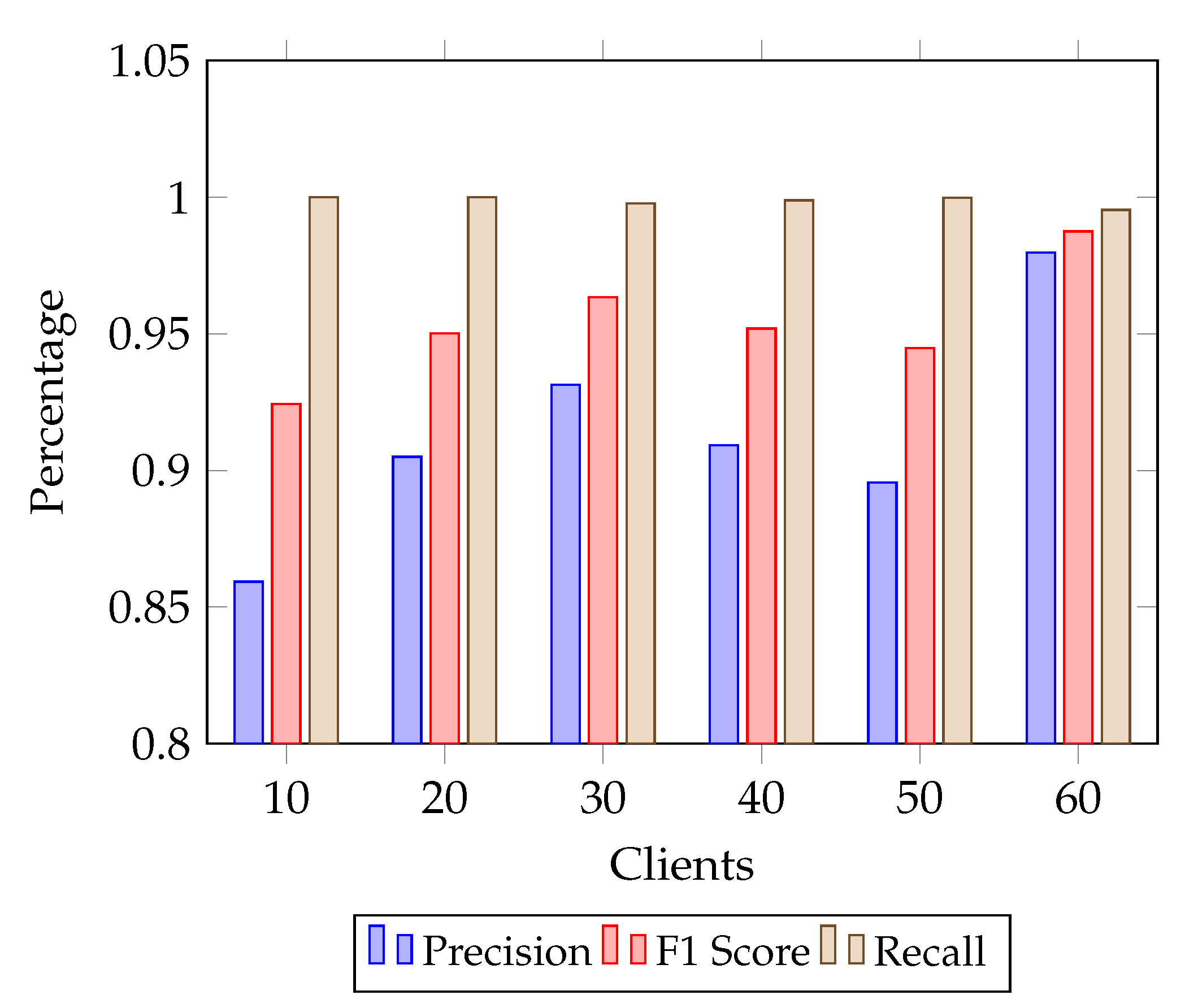

- Comprehensive empirical validation and analysis. Extensive experiments, including baseline comparisons, ablation studies, and parameter sensitivity analyses, confirm that EAH-FL achieves a better balance of privacy, efficiency, and scalability than state-of-the-art FL frameworks. The proposed model outperforms HE-only and INC-only systems, demonstrating its feasibility for real-time healthcare IoT environments.

2. Related Work

3. System Model

3.1. Network Setup and Entities

3.2. Underlying Assumptions

1. Communication Model: We assume that clients connect to edge nodes over wireless links (e.g., a wearable device connected to a smartphone or home gateway via Bluetooth or Wi-Fi). The edge nodes and INC nodes are interconnected through the broader network, which may include the Internet or a private WAN. Standard networking protocols ensure basic data delivery between layers. However, network communication is not perfectly reliable: latency and packet loss can occur. We assume a packet-switched network in which messages may be delayed, dropped, or reordered under adverse or adversarial conditions. Despite this, mechanisms such as acknowledgments and retransmissions (or higher-level protocols) can provide eventual reliability. When analyzing performance, time is divided into discrete intervals or rounds (especially for synchronized operations such as training rounds), but the system can also handle asynchronous requests. We also assume that each link has finite bandwidth and a nonzero propagation delay; thus, large messages incur transmission delays, as formalized in the mathematical model later in this section.

2. Resource Constraints: Each tier has limited resources relative to its workload. Client devices are severely constrained in battery, CPU, and memory, and thus cannot perform intensive computation or sustain heavy continuous communication. This drives the need to offload tasks to the edge. Edge nodes have more computational power and energy (they are mains-powered), but they are still limited (e.g., a microserver with fixed CPU/GPU capacity that must be shared among multiple clients). INC nodes likewise have finite processing and storage: although they may be powerful network devices, they are not full data-center servers and typically must forward many flows, so any computation offloaded to an INC node must be lightweight. The cloud has abundant but not infinite resources; in practice, we assume that the cloud can scale to handle the aggregated workload of all clients, albeit with some upper bound or cost. We assume that no single edge or INC node can serve an unbounded number of clients simultaneously without performance degradation; therefore, tasks may need to be distributed or queued when capacity is exceeded. In formulating our model, we impose capacity constraints (e.g., the total CPU cycles per second consumed by all tasks at an edge node cannot exceed that node's capacity, and similarly for INC nodes). We also assume that storage at each layer is sufficient for buffering and intermediate data, but edge and INC storage is not large enough to hold full global datasets or models; such large-scale data resides in the cloud. This multi-tier resource distribution reflects common practice in edge computing, where moving computation closer to data sources improves latency but each tier closer to the edge has smaller capacity.

3. Security and Trust Boundaries: We divide the system into trust domains. The client and its edge node form a fully trusted domain. Beyond this domain, the network, including INC nodes and links, is untrusted or semi-trusted. INC nodes are semi-trusted: they execute assigned tasks (e.g., aggregation, routing) correctly but may be curious or compromised. They do not intentionally corrupt computations but cannot be trusted with confidential data. The cloud is modeled as honest-but-curious: it follows protocols correctly but may analyze data to infer private information, so raw client data must not be exposed to it. Communication channels are fully untrusted and subject to eavesdropping, tampering, and replay attacks. To ensure confidentiality and integrity, all data leaving the edge is encrypted end-to-end with CKKS (FHE), and entities are authenticated (via certificates or pre-shared keys). Within the client–edge domain, plaintext handling is permitted under the assumption of physical security. In summary, the client–edge pair is trusted, INC nodes are semi-trusted, the cloud is honest-but-curious, and network channels are untrusted. Threats include passive adversaries, compromised INC nodes, and packet injection or alteration. Standard cryptographic primitives are assumed to be secure. Denial-of-service attacks are possible but out of scope; we focus on confidentiality, integrity, and correct protocol execution.

4. Task and Application Model: We assume that the workload can be broken into tasks or data units that flow through the pipeline. Each client may generate tasks (or data updates) periodically or in response to events. We make the simplifying assumption that each task from a client is independent for scheduling purposes (although it may be part of a larger application workflow). The pipeline supports a variety of applications, for example, real-time sensor data aggregation, federated machine learning updates, and augmented reality offloading; in all cases, raw data originates at the client and final processing is needed at the cloud, or at least beyond the edge. The communication pattern is typically uplink-heavy: clients send data up the chain and eventually receive a result or acknowledgment back. We assume that the volume of data returned from the cloud on the downlink (e.g., an updated model) is relatively small compared with the volume of data uploaded, which is common in sensor analytics and learning scenarios. This justifies our focus on the upstream data pipeline for performance modeling. If a specific application requires heavy downlink traffic (e.g., content delivery), a similar analysis can be applied in reverse. We further assume that tasks may have real-time requirements: each task i may have a deadline or latency requirement that end-to-end processing must meet (especially in mission-critical contexts). We assume that such requirements are known and form part of the constraints in our system design (i.e., the pipeline and algorithms should aim to ensure that each task's completion time, as defined later, does not exceed its deadline).

5. Consistency and Fault Tolerance: We assume a consistent view of the system configuration; for example, the client-to-edge mapping does not change frequently (a client is generally served by the same edge node unless it moves and hands off to a new edge, which we assume happens relatively infrequently). INC routing paths are assumed to be known or discoverable by the network controllers. If a node (edge, INC, or cloud) fails, we assume that failover mechanisms (outside the scope of our model) eventually reroute tasks to an alternate node of the same tier. Our focus is on steady-state operation under normal conditions and under security threats, rather than on recovery from node crashes. However, we do assume that the system is distributed, so that no single point of failure halts the entire pipeline. For example, if one INC node on a path is down, another INC node or an alternate route can be used (this is orchestrated by the intelligent routing algorithm discussed in Section 5).
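Several of these assumptions (finite link bandwidth and propagation delay, per-node capacity limits, and per-task deadlines) can be combined into a simple latency check. The following is a minimal sketch under stated assumptions and is not the model of Section 3.4. It assumes that each hop costs `message_bits / bandwidth + propagation` for transmission plus `task_cycles / cpu_rate` for processing. It ignores queueing, losses, and retransmissions. The `Hop` type, the helper functions, and every numeric value are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

@dataclass
class Hop:
    """One tier on the uplink path; all parameter values here are hypothetical."""
    name: str
    bandwidth_bps: float     # bandwidth of the link into this tier (bits/s)
    propagation_s: float     # propagation delay of that link (s)
    cpu_cycles_per_s: float  # processing capacity available at this tier
    task_cycles: float       # CPU cycles the task needs at this tier

def hop_delay(hop: Hop, message_bits: float) -> float:
    """Transmission + propagation + processing delay for a single hop."""
    transmission = message_bits / hop.bandwidth_bps
    processing = hop.task_cycles / hop.cpu_cycles_per_s
    return transmission + hop.propagation_s + processing

def end_to_end_delay(path: list, message_bits: float) -> float:
    """Sum of per-hop delays (no queueing, losses, or retransmissions)."""
    return sum(hop_delay(h, message_bits) for h in path)

def within_capacity(demands_cycles_per_s: list, capacity_cycles_per_s: float) -> bool:
    """Capacity constraint: total cycle demand at a node must not exceed capacity."""
    return sum(demands_cycles_per_s) <= capacity_cycles_per_s

def meets_deadline(path: list, message_bits: float, deadline_s: float) -> bool:
    """Deadline constraint: end-to-end completion time must not exceed the deadline."""
    return end_to_end_delay(path, message_bits) <= deadline_s

# Hypothetical client -> edge -> INC -> cloud path for a 1 Mbit encrypted update.
path = [
    Hop("edge", bandwidth_bps=1e6, propagation_s=0.001, cpu_cycles_per_s=1e9, task_cycles=1e7),
    Hop("inc", bandwidth_bps=1e8, propagation_s=0.005, cpu_cycles_per_s=1e9, task_cycles=1e6),
    Hop("cloud", bandwidth_bps=1e8, propagation_s=0.02, cpu_cycles_per_s=1e10, task_cycles=1e8),
]
print(round(end_to_end_delay(path, 1e6), 3))      # 1.067
print(meets_deadline(path, 1e6, deadline_s=2.0))  # True
```

With these made-up numbers, the 1 Mb/s wireless client-to-edge link accounts for 1 s of the roughly 1.07 s total. This illustrates why the size of the encrypted update matters most on the first hop.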

3.3. Homomorphic Encryption Setup

3.3.1. Cryptographic Context

- Polynomial modulus degree:
  - Governs ciphertext size and security strength.
  - Provides 128-bit security against RLWE attacks.
  - Large enough to encode multiple model parameters in a single ciphertext (via SIMD packing), which is essential for vectorized aggregation at INC nodes.
  - Smaller values reduce latency but fall short of the required security level; larger values increase computational overhead beyond real-time feasibility.

- Scaling factor:
  - Controls the fixed-point precision of encrypted computations.
  - The chosen scaling factor yields 6 decimal digits of accuracy. This is sufficient for model parameter aggregation, where minor floating-point deviations do not affect convergence.
  - Higher scaling factors increase precision but consume more of the coefficient modulus at each rescaling, which reduces the available multiplicative depth.

- Coefficient modulus chain:
  - Defines the sequence of modulus sizes used for rescaling.
  - The chain supports several multiplicative levels before the modulus chain is exhausted (homomorphic additions do not consume levels), while keeping ciphertext size manageable.
  - Its symmetric structure balances noise across rescaling steps, enabling secure aggregation of parameters across several INC hops.
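The trade-offs above can be made concrete by checking a candidate CKKS parameter set. The sketch below does three things. First, it compares the total coefficient-modulus size against the 128-bit security bounds from the Homomorphic Encryption Standard, the same bounds Microsoft SEAL uses. Second, it counts the rescaling levels a chain provides. Third, it computes how many ciphertexts are needed to pack a model's parameters into the N/2 CKKS slots. The concrete values are illustrative assumptions for this sketch only: N = 8192, a 60-40-40-60 chain, a 2^40 scale, and 56 parameters (55 features plus a bias). The level count also assumes the common SEAL-style layout, in which the first prime holds the final result and the last prime is reserved for key switching.

```python
import math

# Largest total coefficient-modulus size (bits) that keeps 128-bit classical
# security for each polynomial modulus degree N, per the HE Standard tables
# (the same bounds Microsoft SEAL uses for its tc128 security level).
MAX_COEFF_BITS_128 = {1024: 27, 2048: 54, 4096: 109, 8192: 218, 16384: 438, 32768: 881}

def meets_128_bit_security(poly_degree: int, coeff_mod_bits: list) -> bool:
    """True if the summed modulus chain fits the 128-bit bound for this N."""
    limit = MAX_COEFF_BITS_128.get(poly_degree)
    return limit is not None and sum(coeff_mod_bits) <= limit

def rescaling_levels(coeff_mod_bits: list) -> int:
    """Multiply-and-rescale steps available, assuming a SEAL-style chain whose
    first prime holds the final result and whose last prime is the special
    (key-switching) prime; each intermediate prime is consumed by one rescale."""
    return max(len(coeff_mod_bits) - 2, 0)

def middle_primes_match_scale(coeff_mod_bits: list, scale_bits: int) -> bool:
    """Rescaling divides by one intermediate prime; matching its size to the
    scale keeps the scale roughly constant from level to level."""
    return all(bits == scale_bits for bits in coeff_mod_bits[1:-1])

def ciphertexts_needed(num_params: int, poly_degree: int) -> int:
    """CKKS packs up to N/2 real values into one ciphertext (SIMD slots)."""
    slots = poly_degree // 2
    return math.ceil(num_params / slots)

# Illustrative values chosen for this sketch only.
N = 8192
CHAIN = [60, 40, 40, 60]   # 200 bits in total
SCALE_BITS = 40            # i.e., a scale of 2**40
NUM_PARAMS = 56            # 55 feature weights + 1 bias

print(meets_128_bit_security(N, CHAIN))               # True (200 <= 218)
print(meets_128_bit_security(4096, CHAIN))            # False (200 > 109)
print(rescaling_levels(CHAIN))                        # 2
print(middle_primes_match_scale(CHAIN, SCALE_BITS))   # True
print(ciphertexts_needed(NUM_PARAMS, N))              # 1
```

Under these assumptions, summing encrypted updates at an INC node consumes no levels. A weighting step that multiplies by encoded plaintext constants and then rescales consumes one level. A chain with only a few levels can therefore suffice for aggregation-only workloads.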

3.3.2. Secure Dataflow in the Pipeline

3.4. Mathematical Model

3.4.1. Federated Learning Framework

3.4.2. Graph-Based Representation for Adaptive Routing

4. Edge-Assisted Homomorphic Federated Learning

- The complete encrypted training pipeline that operates across clients, edges, and the cloud (Section 4.1); and

- Stabilization strategies for one-class clients to ensure convergence in heterogeneous data settings (Section 4.2).

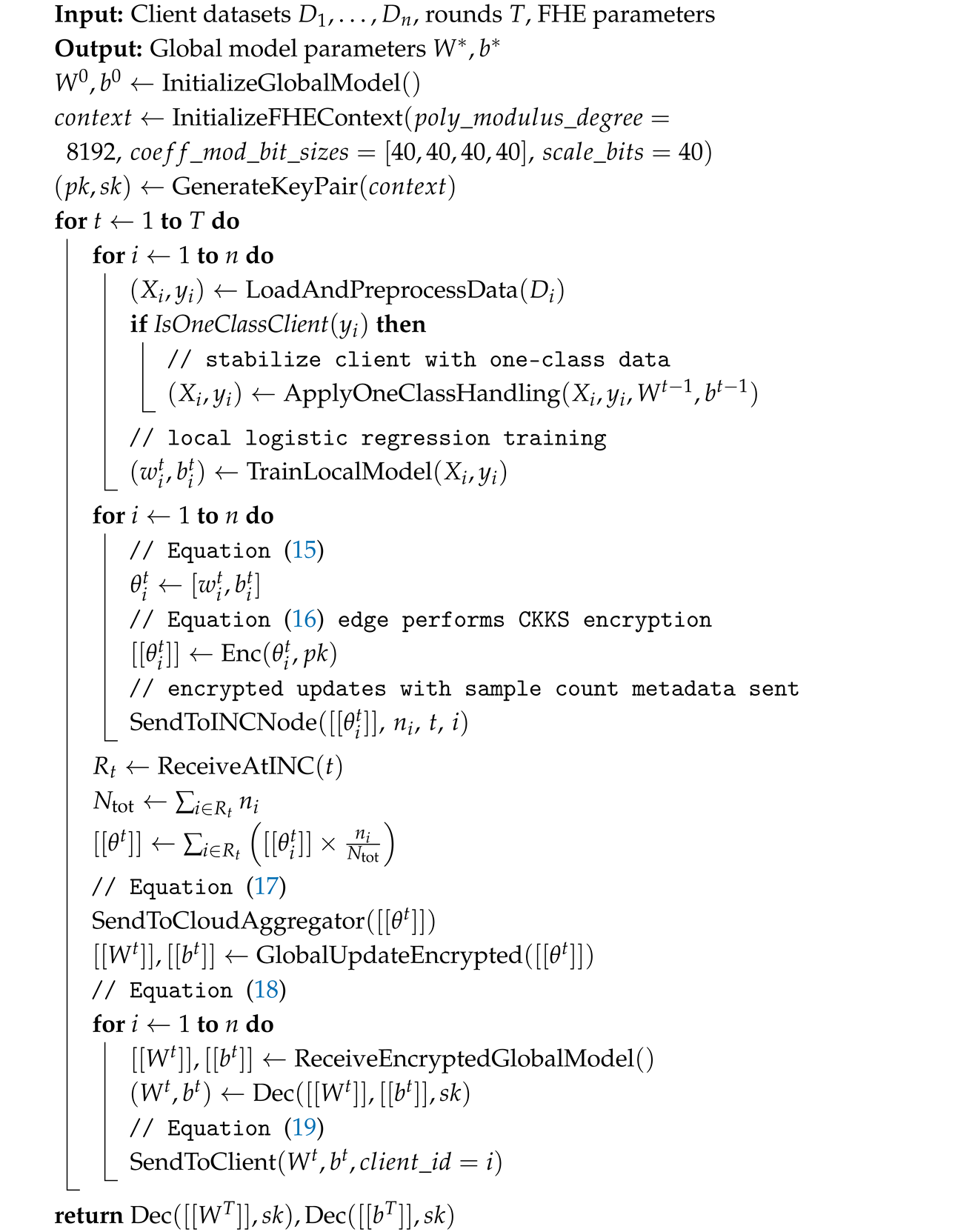

4.1. Complete Training Pipeline

- Client-side local training: Each client i loads and preprocesses its private dataset, which comprises 55 engineered features, and uses logistic regression as its local model. If the client exhibits one-class behavior (only positive or only negative samples), one-class handling strategies are applied (see line 6 of Algorithm 1 and Section 4.2). The client then trains locally to obtain its updated model parameters.

- INC-side encrypted aggregation: The encrypted updates from all clients are forwarded to an INC node (see line 18 of Algorithm 1), which performs homomorphic aggregation to produce the aggregated ciphertext (Equation (17)).

- Cloud-side encrypted global update: The aggregated ciphertext from Equation (17) is relayed to the cloud, which completes the global model update in the encrypted domain to form the encrypted global model (Equation (18)).

- Edge-side decryption and client sync: Each edge decrypts the encrypted global model from Equation (18) using its private key, recovering the plaintext global parameters. These parameters are then synchronized to the clients for the next round (see line 26 of Algorithm 1).
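The four stages above can be traced with a plaintext sketch of one round. This is a hypothetical illustration, not Algorithm 1. It uses plain Python floats in place of CKKS ciphertexts; the INC and cloud stages here need only additions and multiplication by public weights, which CKKS supports on ciphertexts. The sketch makes four further assumptions. It assumes sample-size-weighted averaging (FedAvg-style) as the aggregation rule. It runs a few epochs of gradient descent for the logistic-regression local training. It lets the cloud simply adopt the aggregate as the new global model. It only flags one-class clients rather than reproducing the handling strategies of Algorithm 2. All function names are illustrative.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-z))

def local_train(w, X, y, lr=0.1, epochs=5):
    """Client side: batch gradient descent on the logistic loss.
    w holds one weight per feature followed by a bias term."""
    w = list(w)
    n = len(X)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            # zip stops at len(xi), so the bias w[-1] is added separately.
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + w[-1])
            err = p - yi
            for j, xj in enumerate(xi):
                grad[j] += err * xj / n
            grad[-1] += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

def is_one_class(y) -> bool:
    """True if the client's labels are all positive or all negative."""
    return len(set(y)) < 2

def inc_aggregate(updates, sizes):
    """INC stand-in: sample-size-weighted sum of client updates. On CKKS
    ciphertexts this needs only additions and plaintext-scalar products."""
    total = sum(sizes)
    dim = len(updates[0])
    return [sum(u[j] * s / total for u, s in zip(updates, sizes)) for j in range(dim)]

def cloud_update(aggregate):
    """Cloud stand-in: adopt the aggregate as the new global model (plain FedAvg)."""
    return list(aggregate)

def run_round(global_w, clients):
    """One round: local training -> INC aggregation -> cloud update.
    Returns the new global model (which edges would decrypt and sync to
    clients) and the indices of clients flagged as one-class."""
    updates, sizes, one_class = [], [], []
    for idx, (X, y) in enumerate(clients):
        if is_one_class(y):
            # Hypothetical hook: Algorithm 2's handling strategy is not reproduced.
            one_class.append(idx)
        updates.append(local_train(global_w, X, y))
        sizes.append(len(X))
    return cloud_update(inc_aggregate(updates, sizes)), one_class

# Toy data with one feature per sample (the paper's clients use 55 features).
clients = [
    ([[1.0], [-1.0]], [1, 0]),  # client with both classes
    ([[2.0]], [1]),             # one-class client (positives only)
]
new_w, flagged = run_round([0.0, 0.0], clients)
print(flagged)  # [1]
```

The aggregation step is kept as a weighted sum so that it maps directly onto the operations an INC node can apply to CKKS ciphertexts without decryption.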

Algorithm 1: EAH-FL

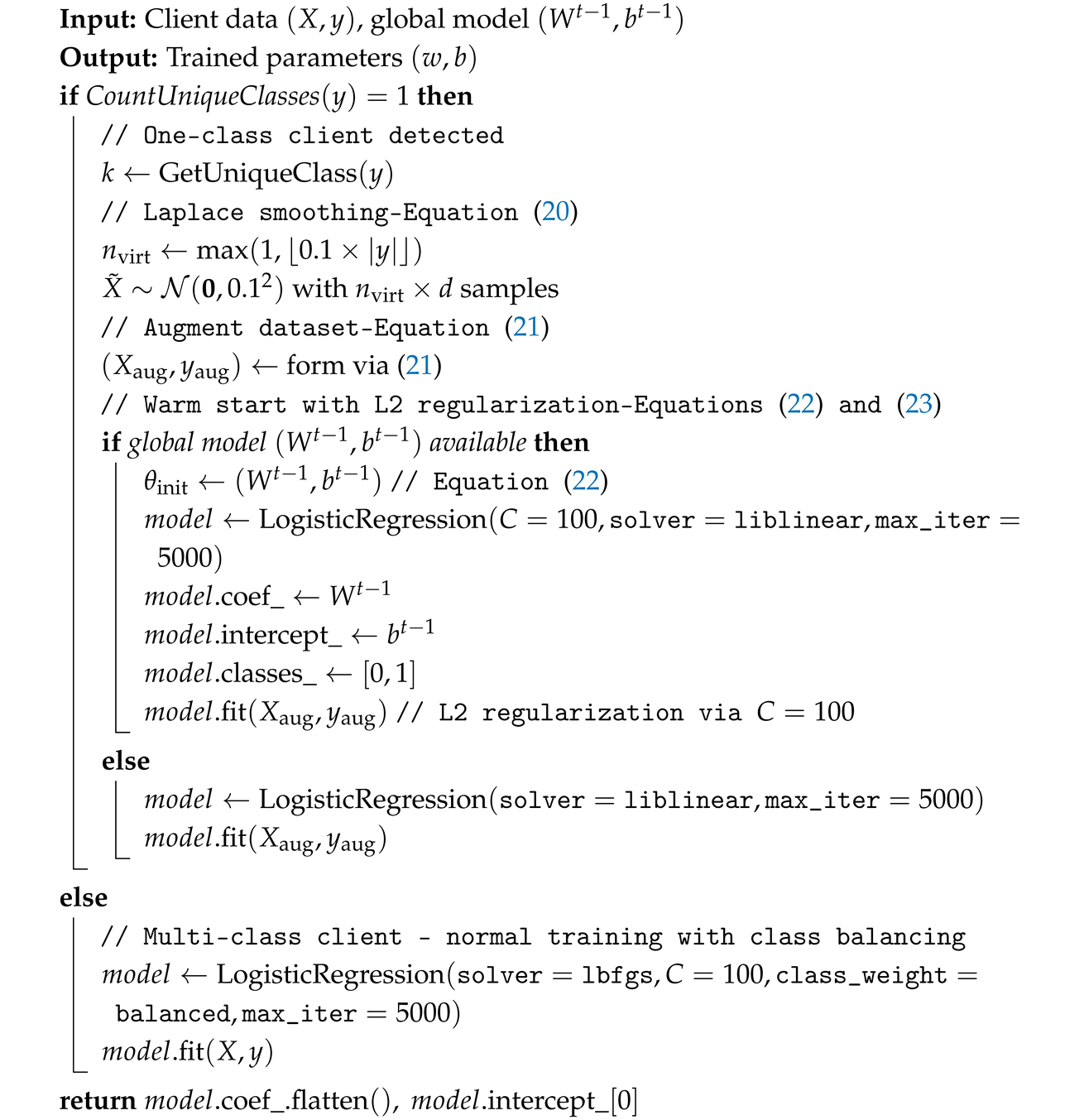

4.2. One-Class Client Handling

Algorithm 2: One-Class Client Handling (Combined Strategy)

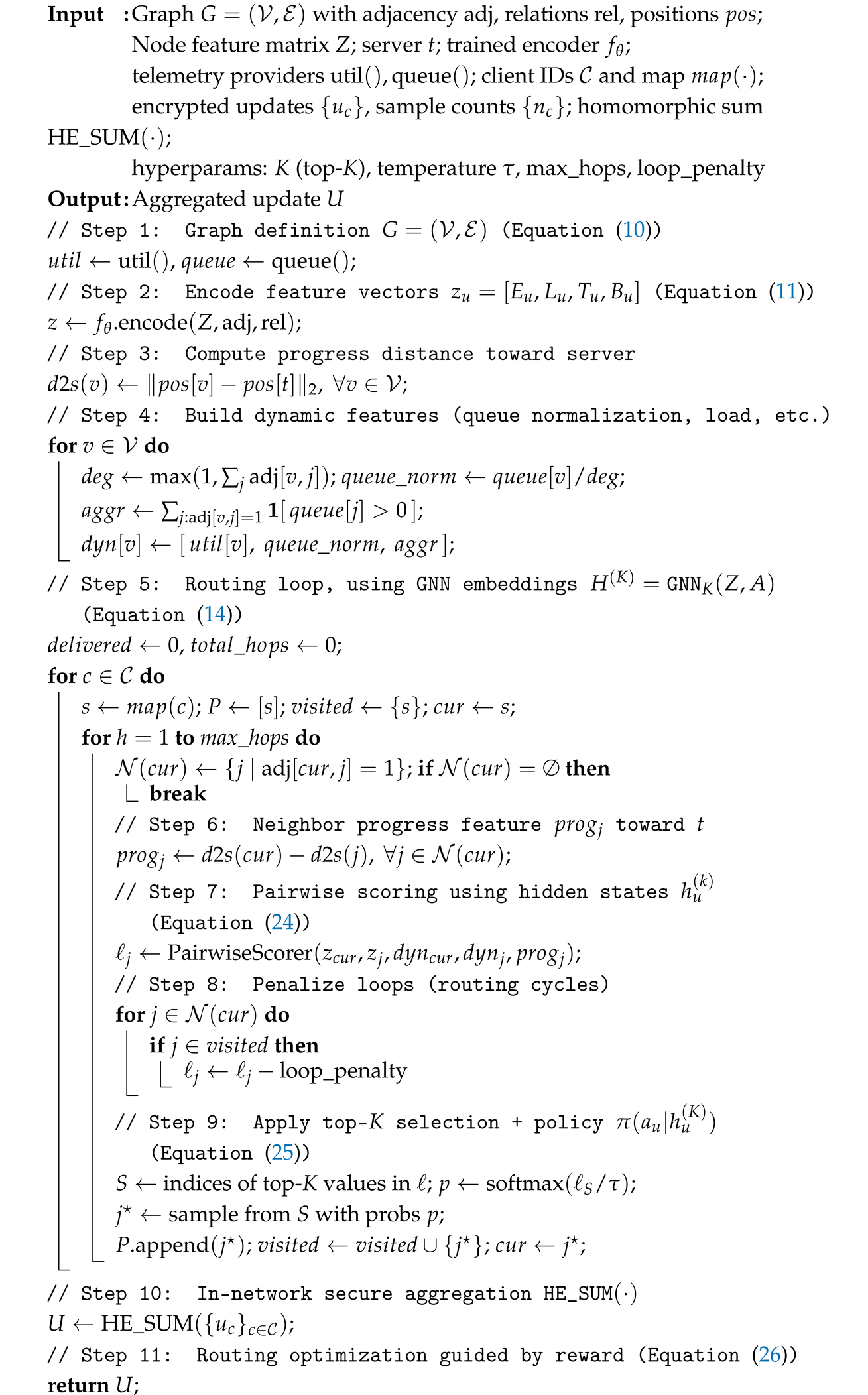

5. Adaptive Multi-Relational Routing Graph Neural Network for Smart Networks

5.1. Dynamic Routing

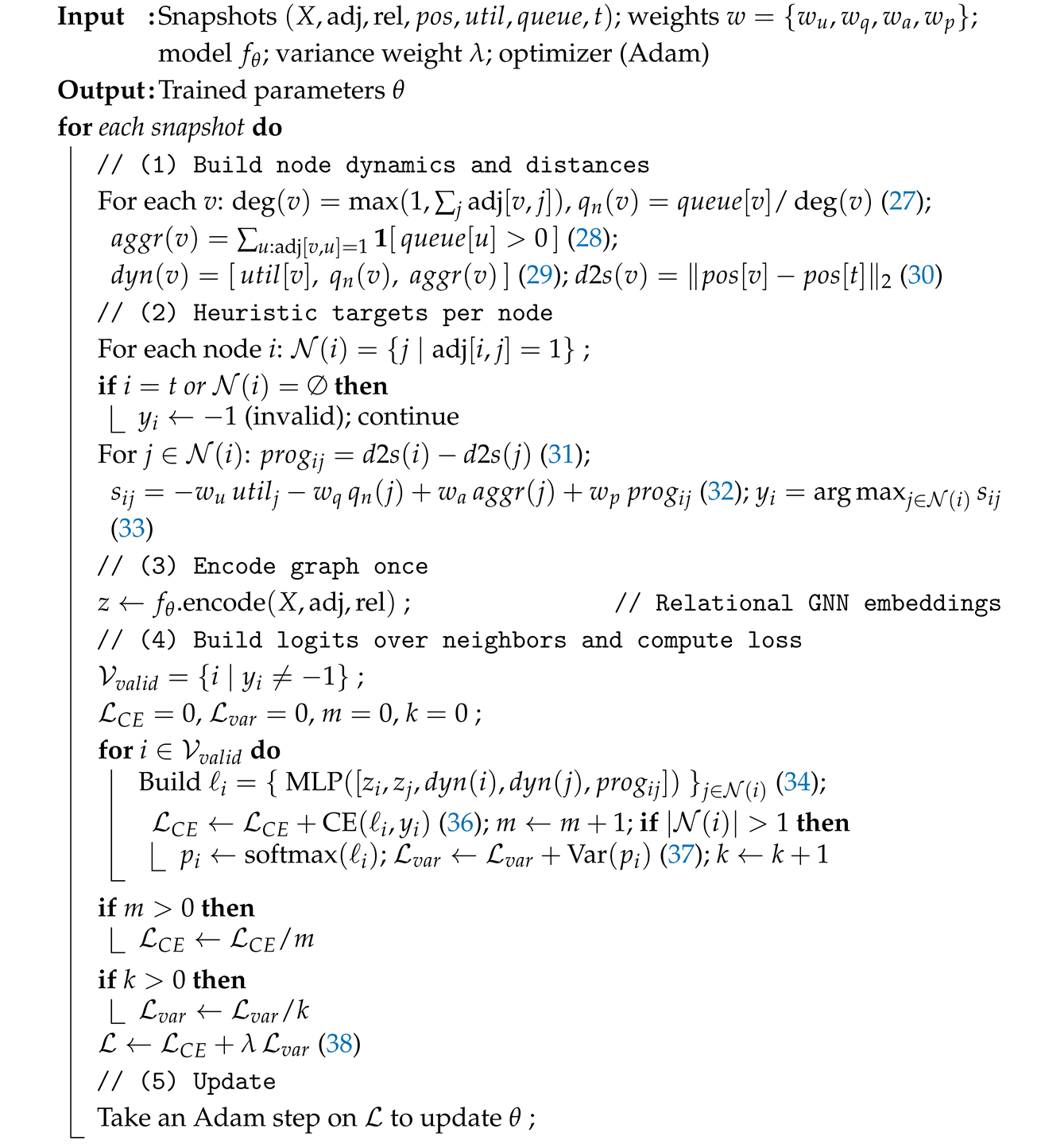

5.2. Heuristic-Imitation Training

Algorithm 3: INC Round: Dynamic Routing and Homomorphic Aggregation

Algorithm 4: Heuristic-Imitation Training for INC-GNN

6. Performance Evaluation

6.1. Simulation Setup

Ethical and Licensing Considerations

6.2. Evaluation Metrics

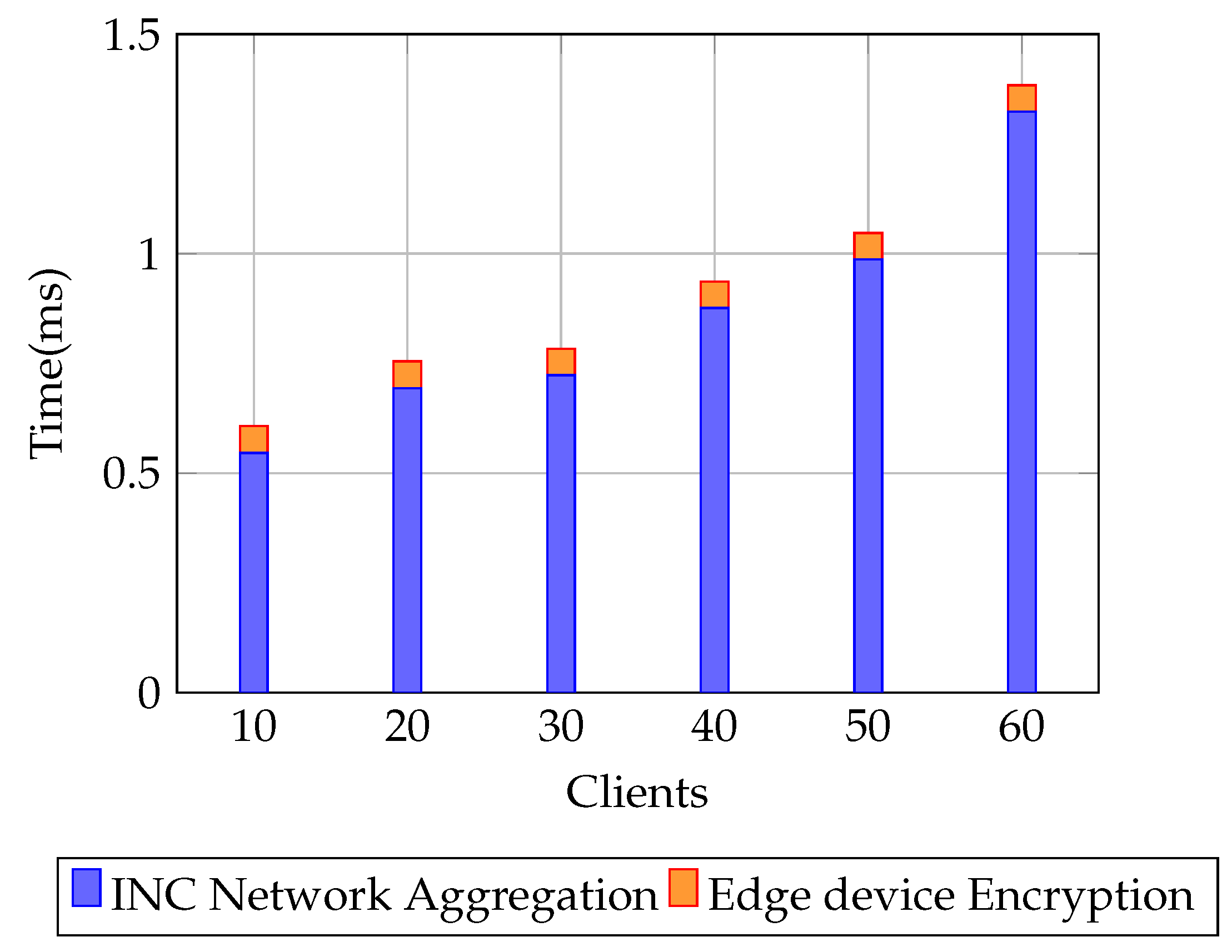

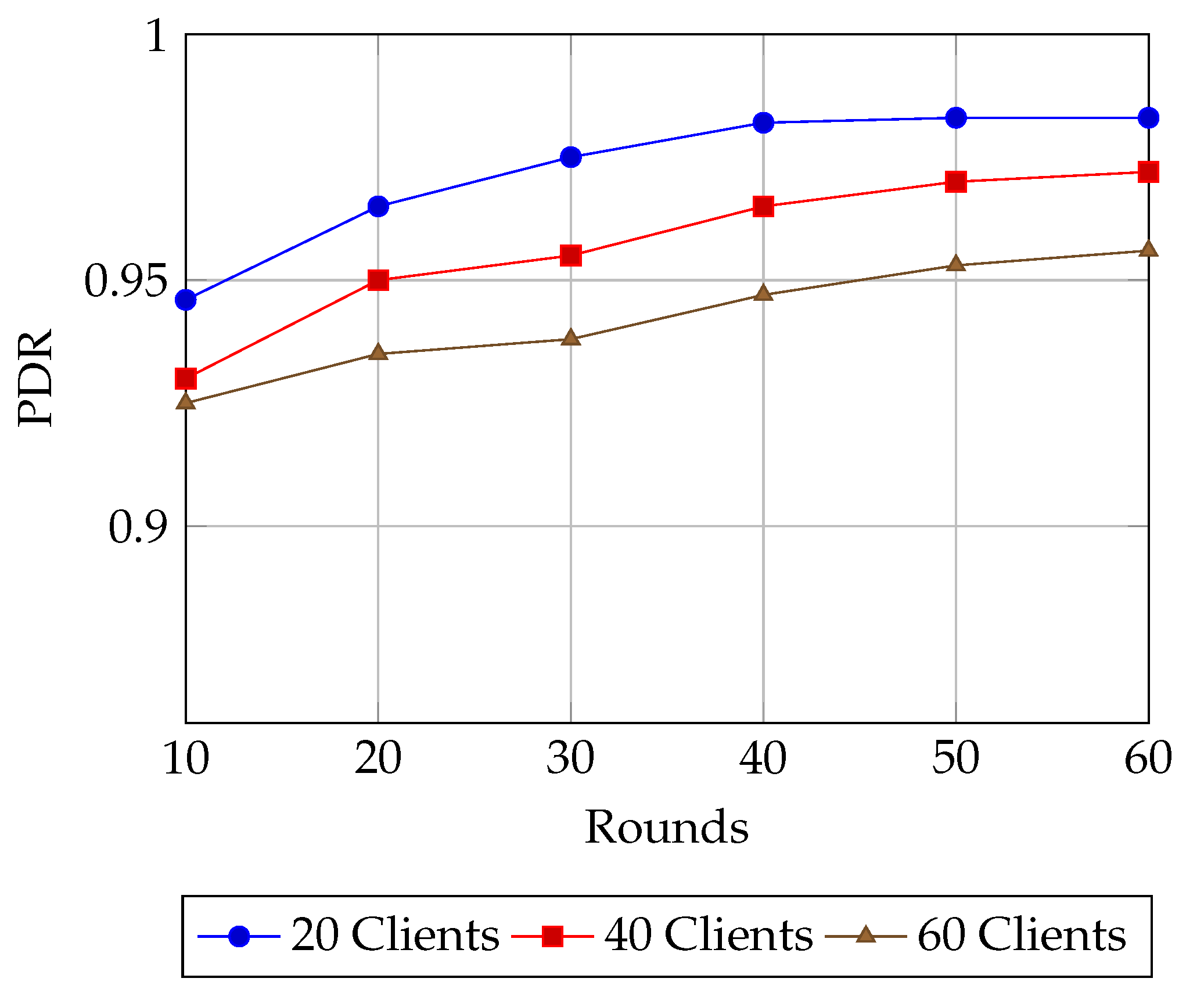

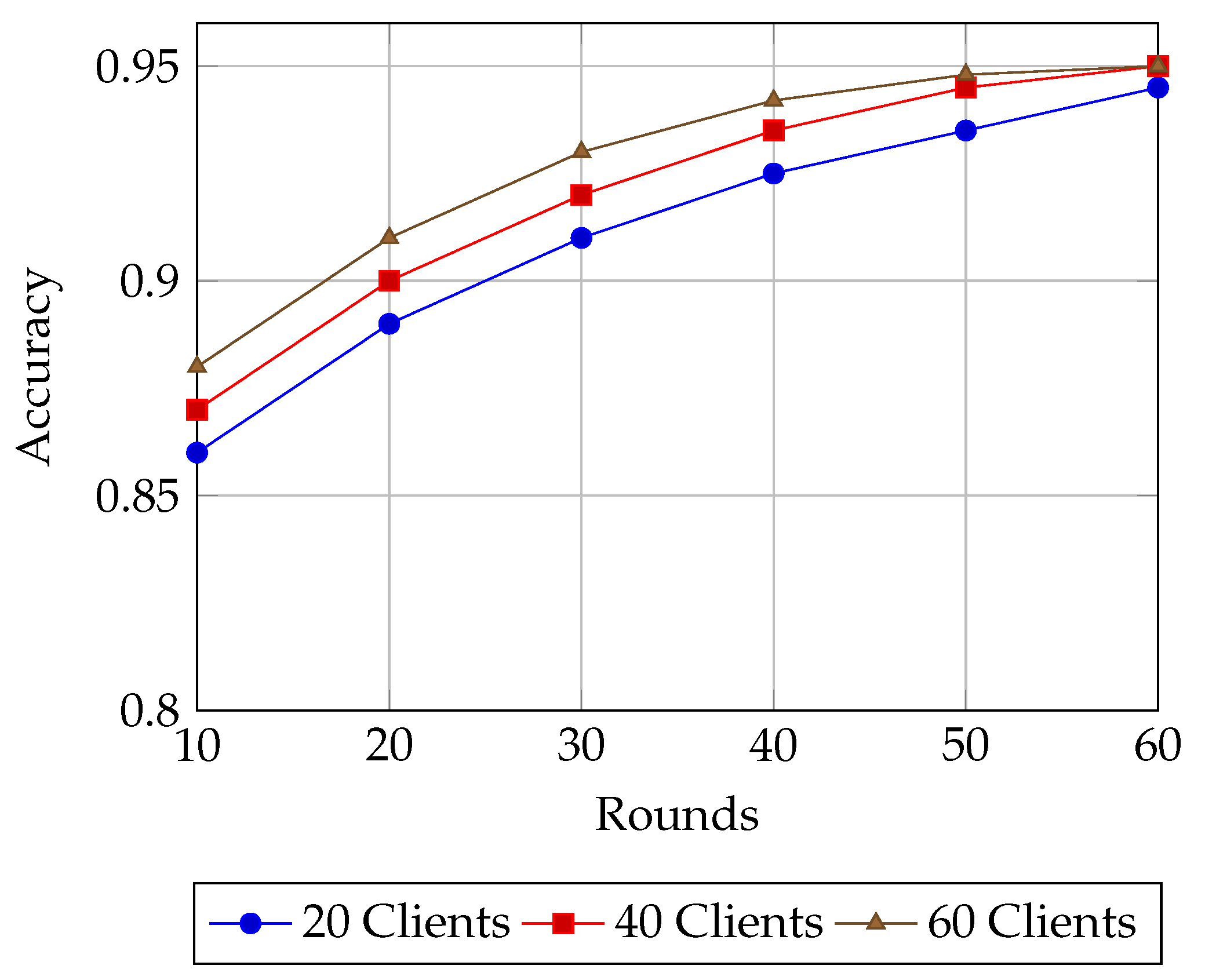

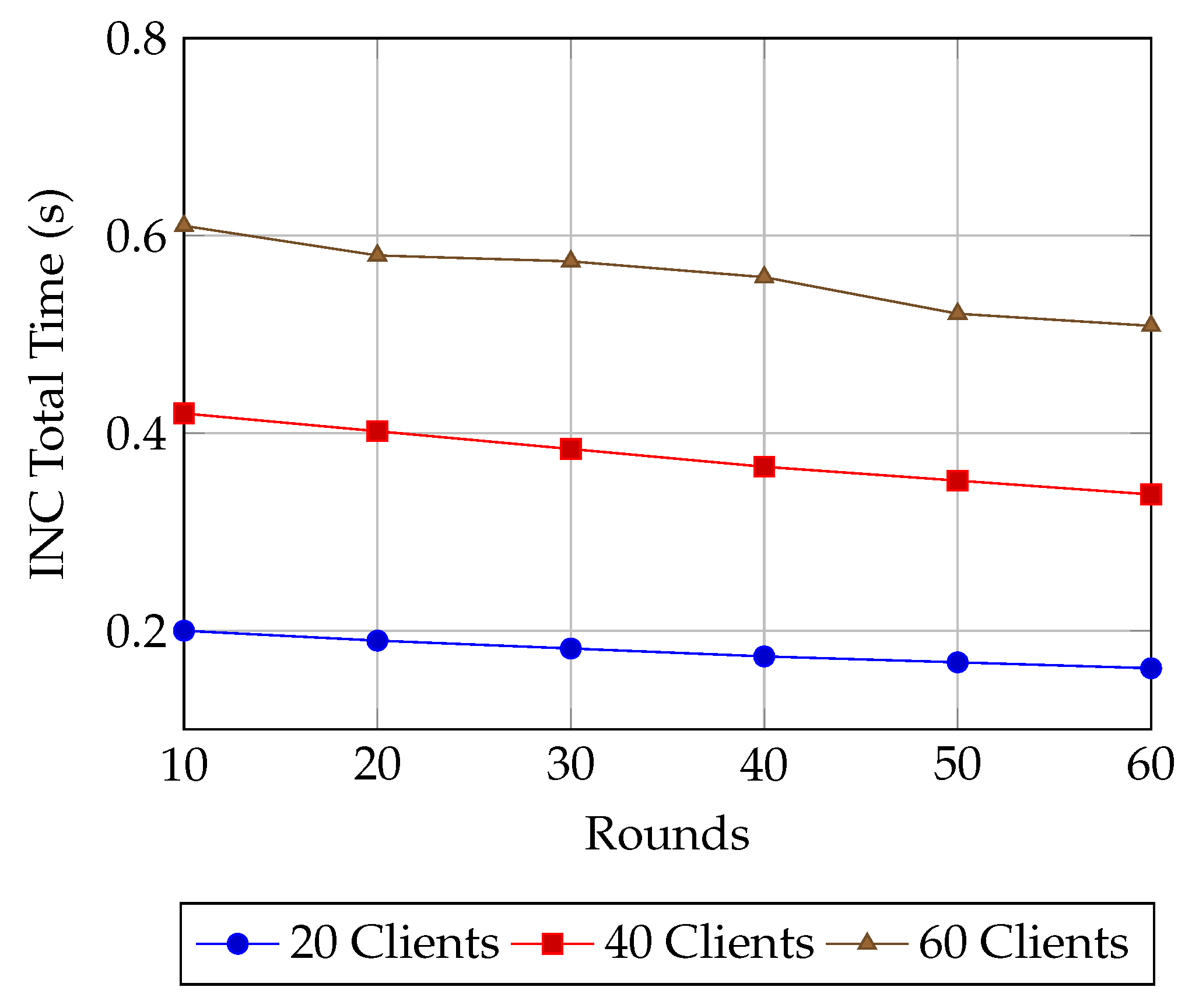

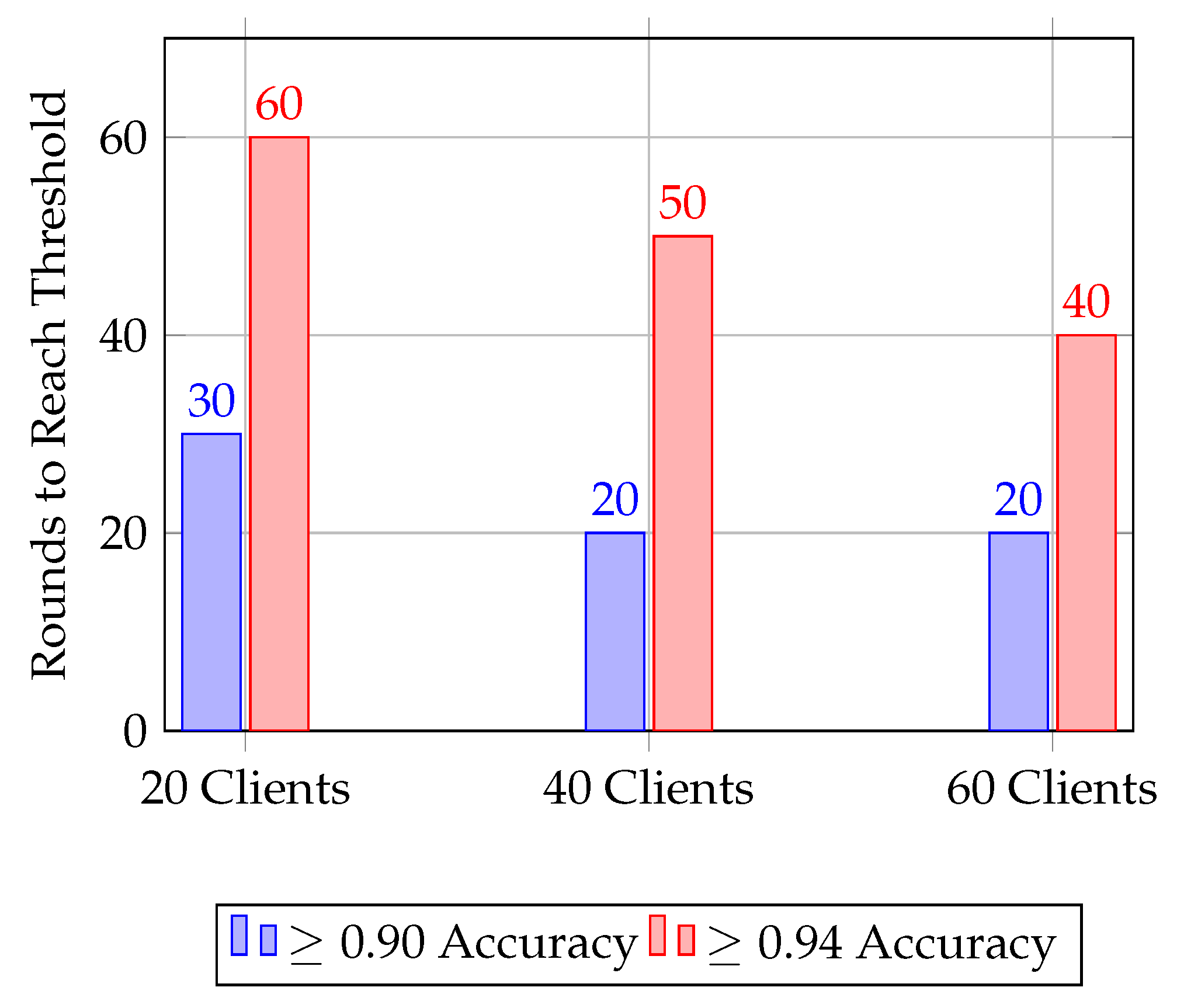

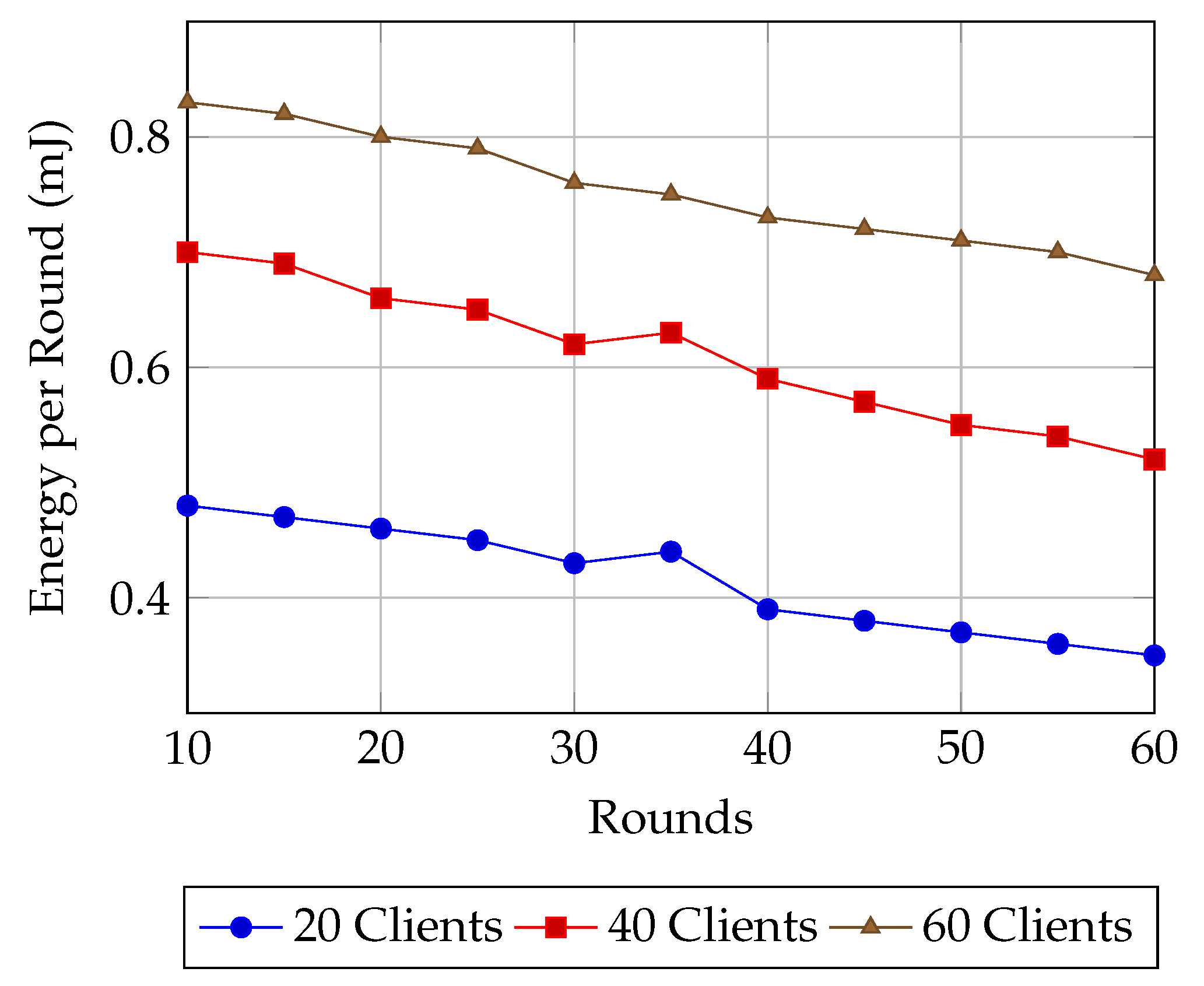

6.3. Simulation Results

7. Conclusions

7.1. Limitations

7.2. Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HE | Homomorphic Encryption |
| INC | In-Network Computing |
| CKKS | Cheon–Kim–Kim–Song (Homomorphic Encryption Scheme) |
| GNN | Graph Neural Network |
| AMRGNN | Adaptive Multi-Relational Routing Graph Neural Network |
| IoT | Internet of Things |
| HIPAA | Health Insurance Portability and Accountability Act |
| GDPR | General Data Protection Regulation |
| FL | Federated Learning |
| FHE | Fully Homomorphic Encryption |

References

1. IBM. Cost of a Data Breach Report 2024: Healthcare Industry Insights. Available online: https://www.ibm.com/reports/data-breach (accessed on 30 September 2025).

2. Yang, Z.; Chen, Y.; Huangfu, H.; Ran, M.; Wang, H.; Li, X.; Zhang, Y. Dynamic Corrected Split Federated Learning with Homomorphic Encryption for U-Shaped Medical Image Networks. IEEE J. Biomed. Health Inform. 2023, 27, 5946–5957.

3. Lee, Y.; Gong, J.; Choi, S.; Kang, J. Revisit the Stability of Vanilla Federated Learning Under Diverse Conditions. arXiv 2025, arXiv:2502.19849.

4. Choi, S.; Patel, D.; Zad Tootaghaj, D.; Cao, L.; Ahmed, F.; Sharma, P. FedNIC: Enhancing Privacy-Preserving Federated Learning via Homomorphic Encryption Offload on SmartNIC. Front. Comput. Sci. 2024, 6, 1465352.

5. Shen, J.; Zhao, Y.; Huang, S.; Ren, Y. Secure and Flexible Privacy-Preserving Federated Learning Based on Multi-Key Fully Homomorphic Encryption. Electronics 2024, 13, 4478.

6. Walskaar, I.; Tran, M.; Catak, F. A Practical Implementation of Medical Privacy-Preserving Federated Learning Using Multi-Key Homomorphic Encryption and Flower Framework. Cryptography 2023, 7, 48.

7. Firdaus, M.; Larasati, H.; Hyune-Rhee, K. Blockchain-Based Federated Learning with Homomorphic Encryption for Privacy-Preserving Healthcare Data Sharing. Internet Things 2025, 31, 101579.

8. Mao, D.; Yang, Q.; Wang, H.; Chen, Z.; Li, C.; Song, Y.; Qin, Z. EPFed: Achieving Optimal Balance between Privacy and Efficiency in Federated Learning. Electronics 2024, 13, 1028.

9. Shymala Gowri, S.; Sadasivam, S.; Deva Priyan, T.A.; Hema Priya, N. Secured Machine Learning Using Approximate Homomorphic Scheme for Healthcare. In Proceedings of the 2023 International Conference on Intelligent Systems for Communication, IoT and Security (ICISCoIS), Coimbatore, India, 9–11 February 2023; pp. 361–364.

10. Khan, M.J.; Fang, B.; Cimino, G.; Cirillo, S.; Yang, L.; Zhao, D. Privacy-Preserving Artificial Intelligence on Edge Devices: A Homomorphic Encryption Approach. In Proceedings of the 2024 IEEE International Conference on Web Services (ICWS), Shenzhen, China, 7–13 July 2024; pp. 395–404.

11. Su, X.; Zhou, Y.; Cui, L.; Guo, S. Expediting In-Network Federated Learning by Voting-Based Consensus Model Compression. arXiv 2024, arXiv:2402.03815.

12. Xia, J.; Wu, W.; Luo, L.; Cheng, G.; Guo, D.; Nian, Q. Accelerating and Securing Federated Learning with Stateless In-Network Aggregation at the Edge. In Proceedings of the 2024 IEEE 44th International Conference on Distributed Computing Systems (ICDCS), Jersey City, NJ, USA, 23–26 July 2024.

13. Zang, M.; Zheng, C.; Koziak, T.; Zilberman, N.; Dittmann, L. Federated In-Network Machine Learning for Privacy-Preserving IoT Traffic Analysis. ACM Trans. Internet Technol. 2024, 24, 1–24.

14. Caruccio, L.; Cimino, G.; Deufemia, V.; Iuliano, G.; Stanzione, R. Surveying Federated Learning Approaches Through a Multi-Criteria Categorization. Multimed. Tools Appl. 2024, 83, 36921–36951.

15. Albshaier, L.; Almarri, S.; Albuali, A. Federated Learning for Cloud and Edge Security: A Systematic Review of Challenges and AI Opportunities. Electronics 2025, 14, 1019.

16. Aziz, R.; Banerjee, S.; Bouzefrane, S.; Le Vinh, T. Exploring Homomorphic Encryption and Differential Privacy Techniques towards Secure Federated Learning Paradigm. Future Internet 2023, 15, 310.

17. Naresh, V.; Varma, G. Privacy-Enhanced Heart Stroke Detection Using Federated Learning and Homomorphic Encryption. Smart Health 2025, 37, 100594.

18. Ji, M.; Jiao, L.; Fan, Y.; Chen, Y.; Qian, Z.; Qi, J.; Luo, G.; Ye, B. Online Scheduling of Federated Learning with In-Network Aggregation and Flow Routing. In Proceedings of the IEEE SECON, Phoenix, AZ, USA, 2–4 December 2024.

19. Xie, Q.; Jiang, S.; Jiang, L.; Huang, Y.; Zhao, Z.; Khan, S.; Dai, W.; Liu, Z.; Wu, K. Efficiency Optimization Techniques in Privacy-Preserving Federated Learning with Homomorphic Encryption: A Brief Survey. IEEE Internet Things J. 2024, 11, 24569–24590.

20. Gu, X.; Sabrina, F.; Fan, Z.; Sohail, S. A Review of Privacy Enhancement Methods for Federated Learning in Healthcare Systems. Int. J. Environ. Res. Public Health 2023, 20, 6539.

21. Lessage, X.; Collier, L.; Van Ouytsel, C.H.B.; Legay, A.; Mahmoudi, S.; Massonet, P. Secure Federated Learning Applied to Medical Imaging with Fully Homomorphic Encryption. In Proceedings of the IEEE 3rd International Conference on AI in Cybersecurity (ICAIC), Houston, TX, USA, 7–9 February 2024.

22. Jin, W.; Yao, Y.; Han, S.; Gu, J.; Joe-Wong, C.; Ravi, S.; Avestimehr, S.; He, C. FedML-HE: An Efficient Homomorphic-Encryption-Based Privacy-Preserving Federated Learning System. arXiv 2023, arXiv:2303.10837.

23. Hijazi, N.M.; Aloqaily, M.; Guizani, M.; Ouni, B.; Karray, F. Secure Federated Learning With Fully Homomorphic Encryption for IoT Communications. IEEE Internet Things J. 2024, 11, 4289–4300.

24. Jijagallery. FitLife Health and Fitness Tracking Dataset [Data Set]. Kaggle. 2023. Available online: https://www.kaggle.com/datasets/jijagallery/fitlife-health-and-fitness-tracking-dataset (accessed on 30 September 2025).

25. Lazzarini, R.; Tianfield, H.; Charissis, V. Federated Learning for IoT Intrusion Detection. AI 2023, 4, 509–530.

26. Chai, D.; Wang, L.; Yang, L.; Zhang, J.; Chen, K.; Yang, Q. A Survey for Federated Learning Evaluations: Goals and Measures. arXiv 2024, arXiv:2308.11841.

27. Dang, X.T.; Vu, B.M.; Nguyen, Q.S.; Tran, T.T.M.; Eom, J.S.; Shin, O.S. A Survey on Energy-Efficient Design for Federated Learning over Wireless Networks. Energies 2024, 17, 6485.

28. Chen, X.; Zhu, G.; Deng, Y.; Fang, Y. Federated Learning over Multi-Hop Wireless Networks with In-Network Aggregation. IEEE Trans. Wireless Commun. 2022, 21, 4622–4634.

Legend: each cell indicates whether a work addresses the criterion (addressed, not addressed, or partially addressed).

| Authors | INC | HE | FL | Resource Constraints | Latency | End-to-End Encryption | Scalability |
|---|---|---|---|---|---|---|---|
| Su et al. [11] |  |  |  |  |  |  |  |
| Xia et al. [12] |  |  |  |  |  |  |  |
| Ji et al. [18] |  |  |  |  |  |  |  |
| Zang et al. [13] |  |  |  |  |  |  |  |
| Choi et al. [4] |  |  |  |  |  |  |  |
| Yang et al. [2] |  |  |  |  |  |  |  |
| Shen et al. [5] |  |  |  |  |  |  |  |
| Walskaar et al. [6] |  |  |  |  |  |  |  |
| Naresh and Varma [17] |  |  |  |  |  |  |  |
| Firdaus et al. [7] |  |  |  |  |  |  |  |
| Lessage et al. [21] |  |  |  |  |  |  |  |
| Hijazi et al. [23] |  |  |  |  |  |  |  |
| Jin et al. [22] |  |  |  |  |  |  |  |
| Gu et al. [20] |  |  |  |  |  |  |  |
| Lee et al. [3] |  |  |  |  |  |  |  |
| Aziz et al. [16] |  |  |  |  |  |  |  |
| Mao et al. [8] |  |  |  |  |  |  |  |
| Gowri et al. [9] |  |  |  |  |  |  |  |
| Khan et al. [10] |  |  |  |  |  |  |  |
| Caruccio et al. [14] |  |  |  |  |  |  |  |
| Albshaier et al. [15] |  |  |  |  |  |  |  |
| This Work (EAH-FL) |  |  |  |  |  |  |  |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Khan, H.; Kavati, R.; Pulkaram, S.S.; Jalooli, A. End-to-End Privacy-Aware Federated Learning for Wearable Health Devices via Encrypted Aggregation in Programmable Networks. Sensors 2025, 25, 7023. https://doi.org/10.3390/s25227023