Real-Time Parking Space Management System Based on a Low-Power Embedded Platform

Abstract

1. Introduction

2. Related Research

2.1. Comparison of Conventional Systems

2.1.1. In-Ground Sensor-Based Parking System

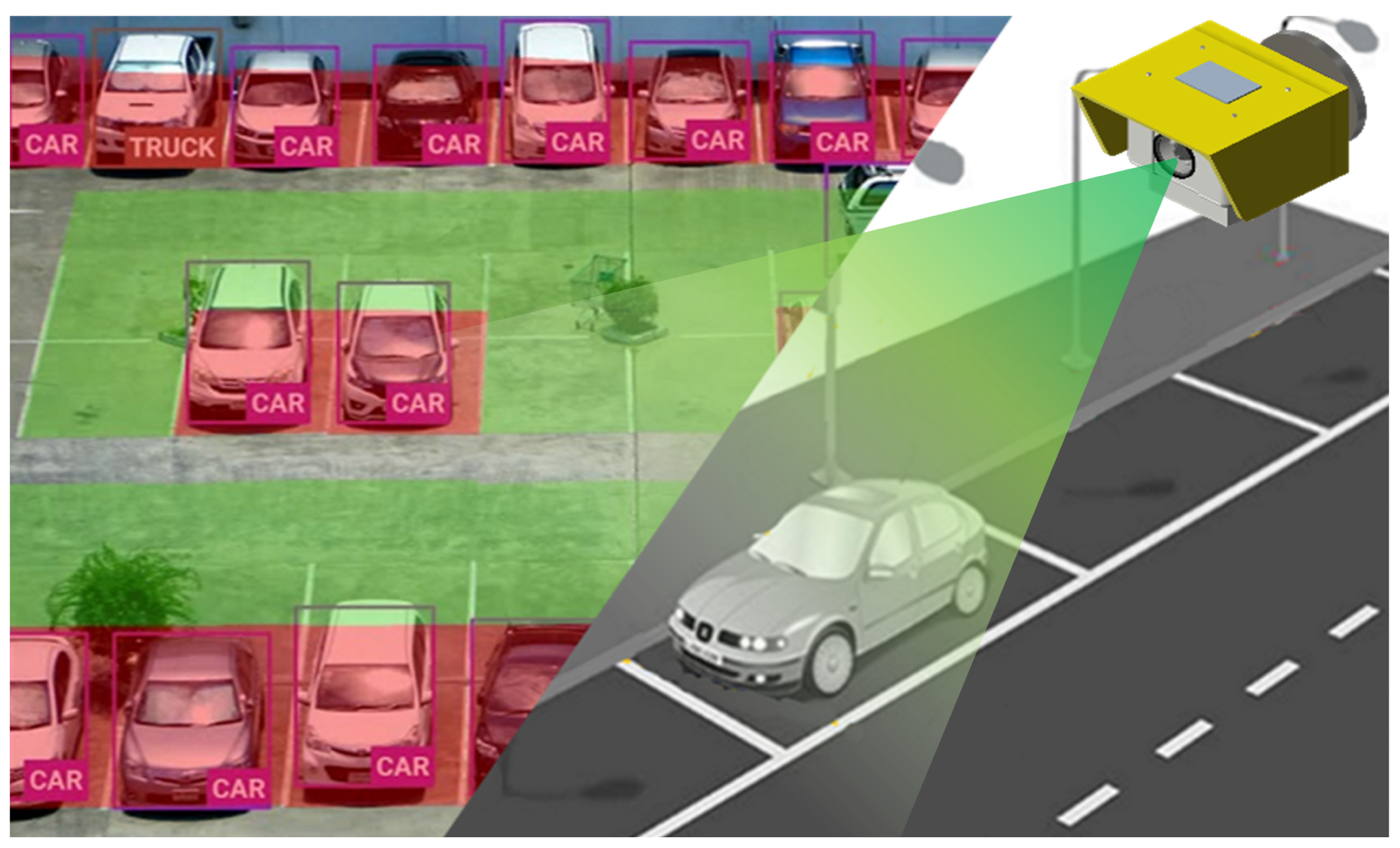

2.1.2. Camera-Based Parking Management System

2.1.3. Comparison of Deployment and Maintenance Costs in Conventional Systems

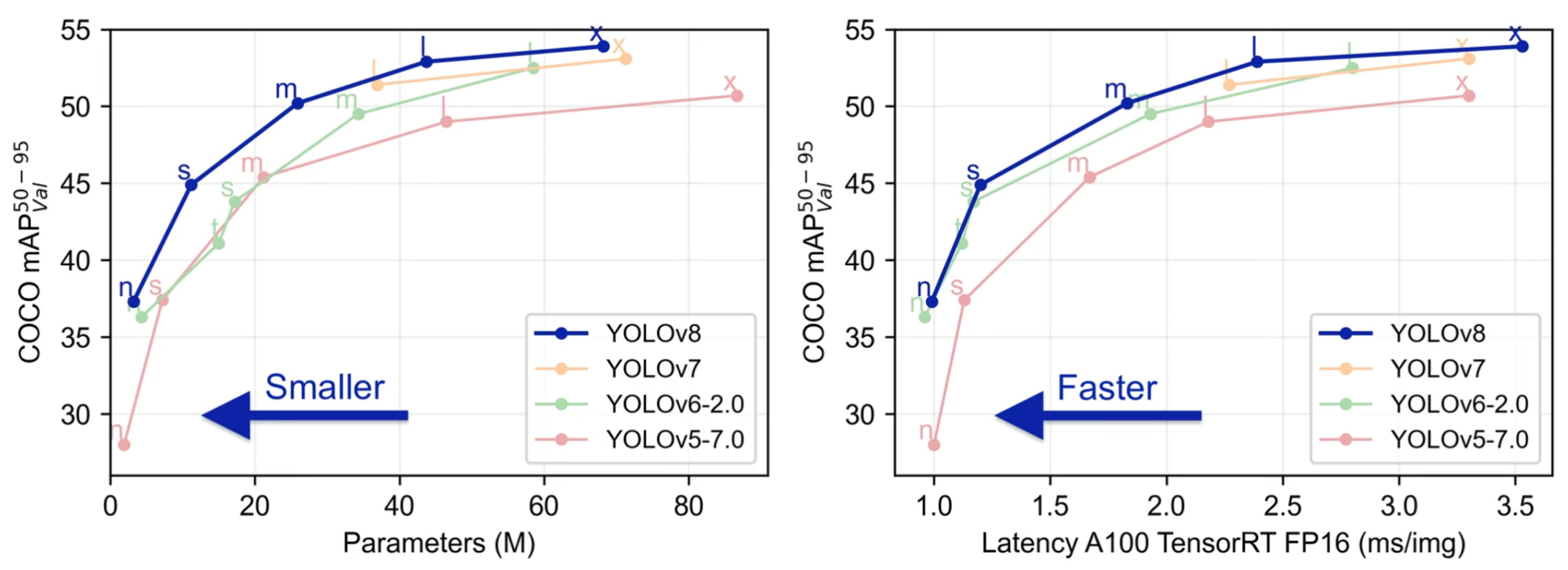

2.2. Object Recognition Algorithm

- Lightweight Nano Model: The YOLOv8 nano model (YOLOv8n) has a lightweight architecture that runs efficiently on edge devices, making it well-suited to environments with limited memory and computational resources.

- Real-Time Processing: Fast inference enables real-time vehicle detection, so parking lot conditions can be monitored promptly.

- Edge Device Compatibility: It can be readily integrated through various programming languages, including Python, and meets the performance requirements of edge devices.

2.3. Web-Based Control System Technology: Asynchronous Web Framework

3. Proposed System Design

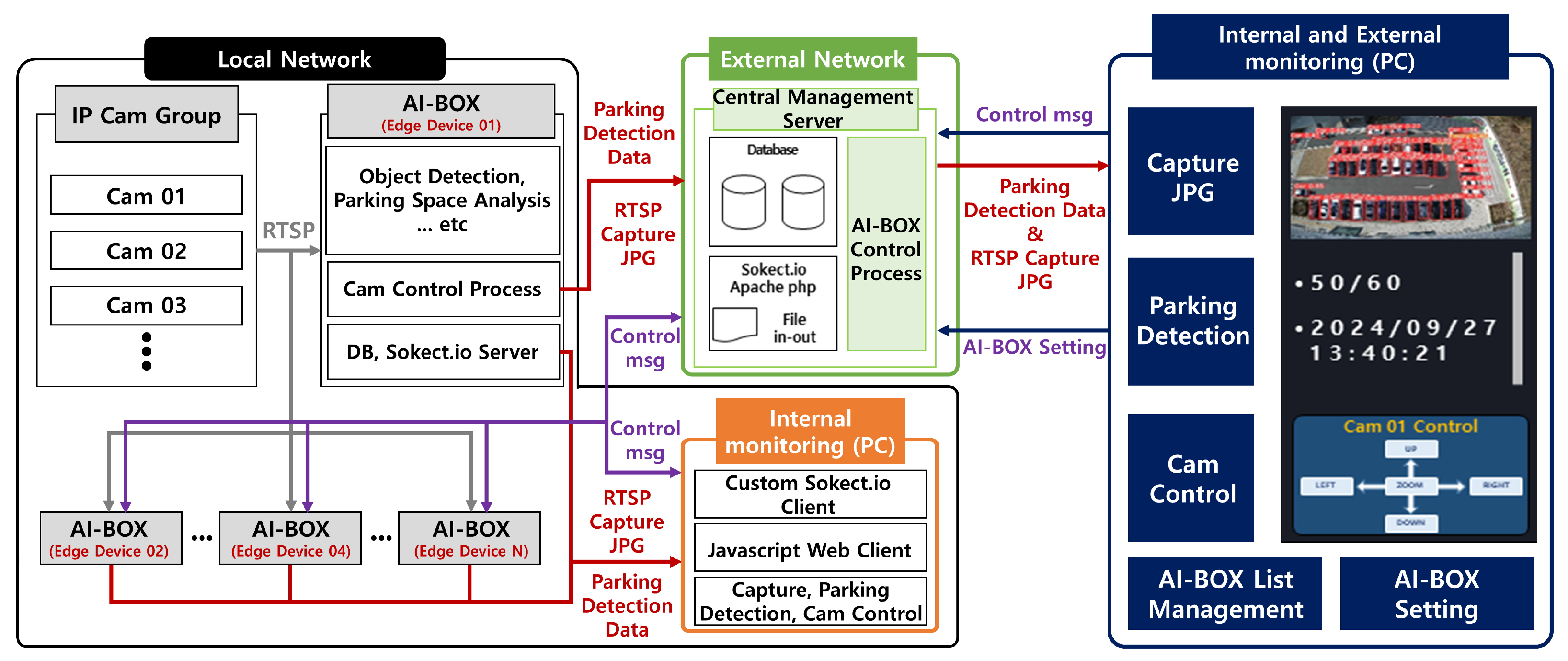

3.1. Proposed System Overview

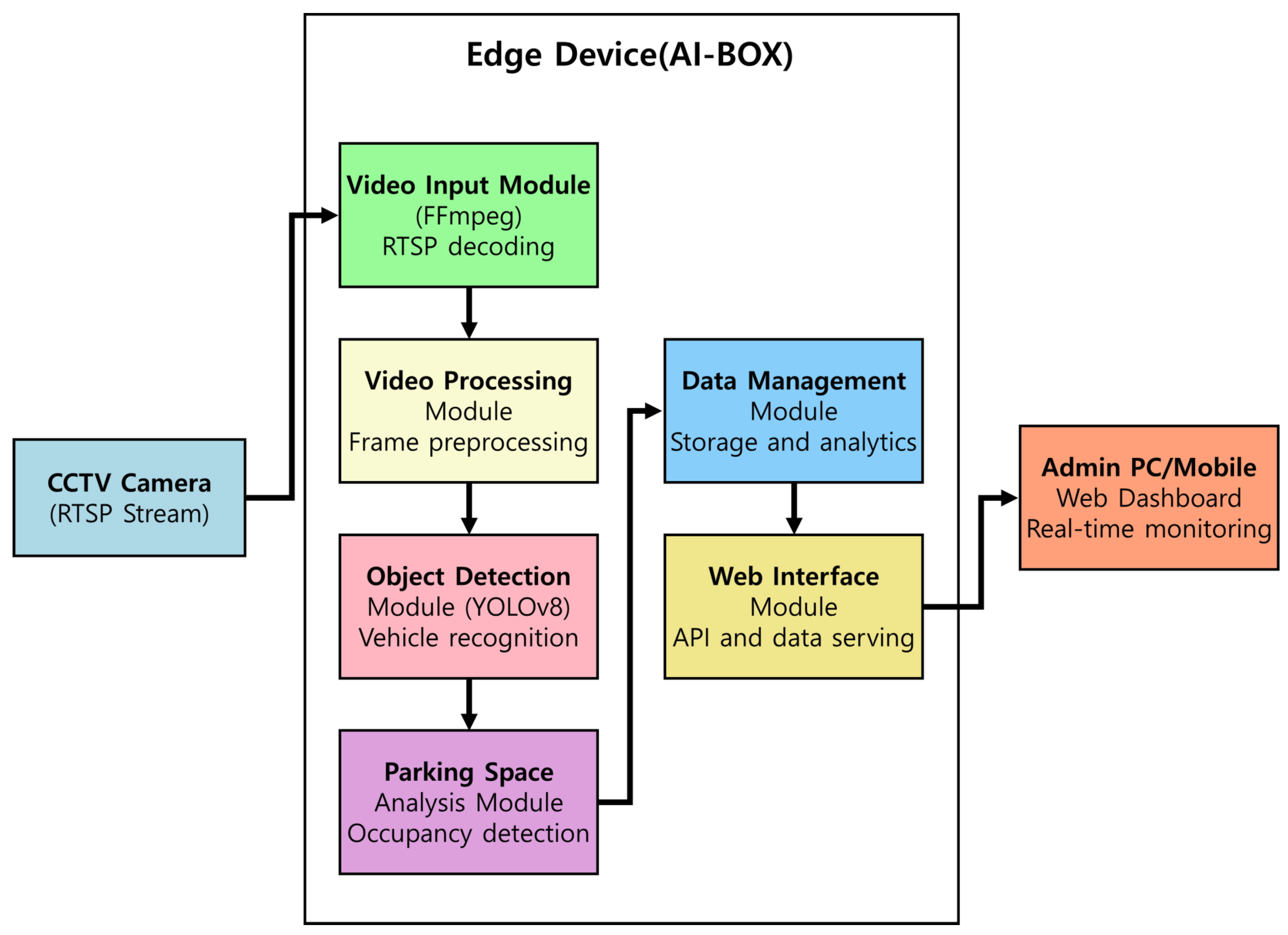

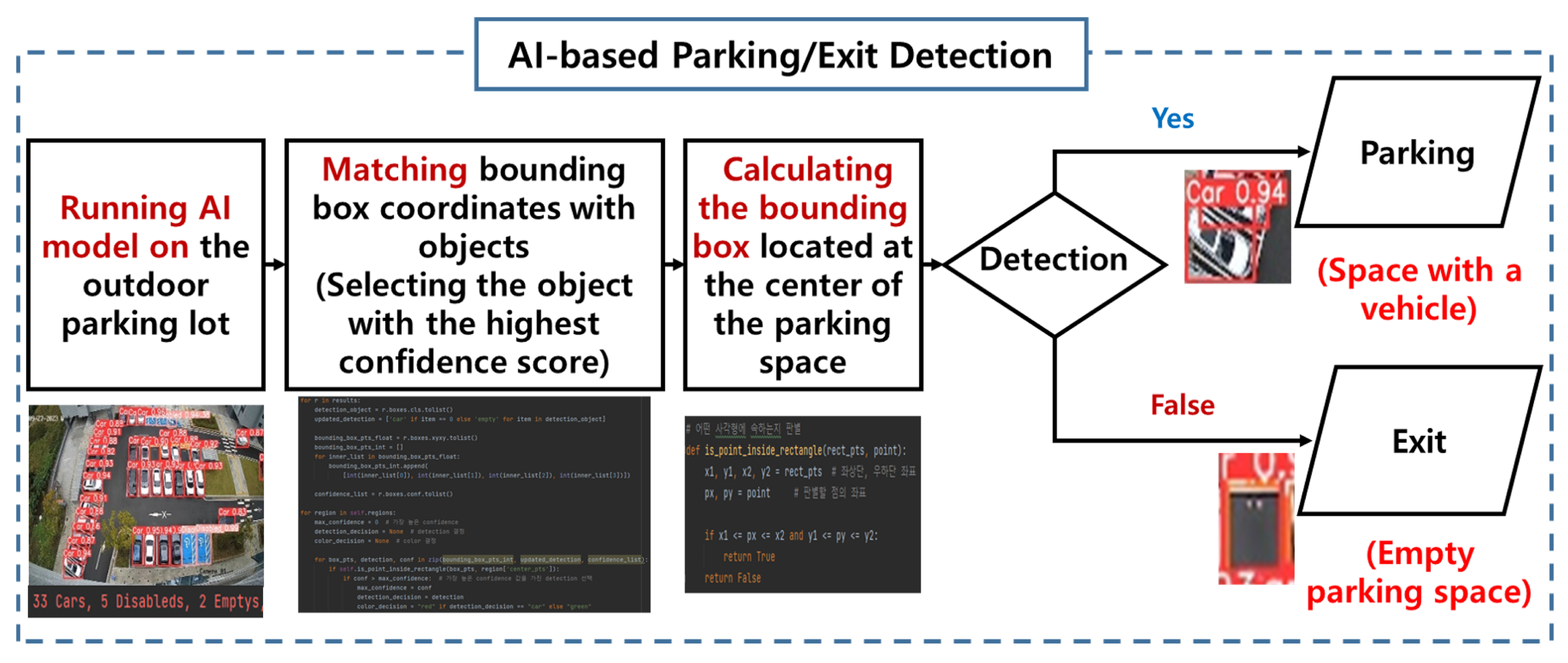

3.2. System Configuration and Key Module Design for Parking Recognition

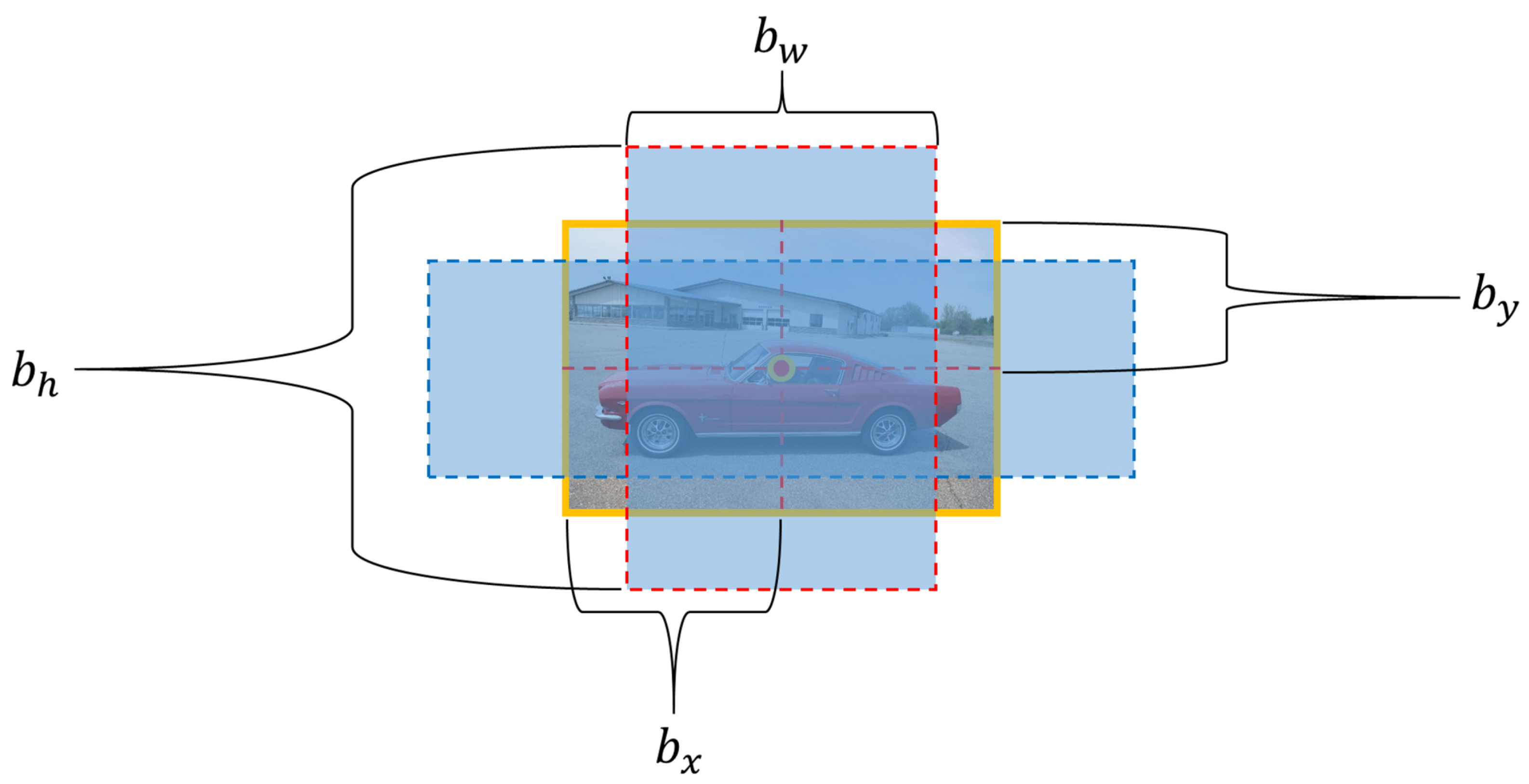

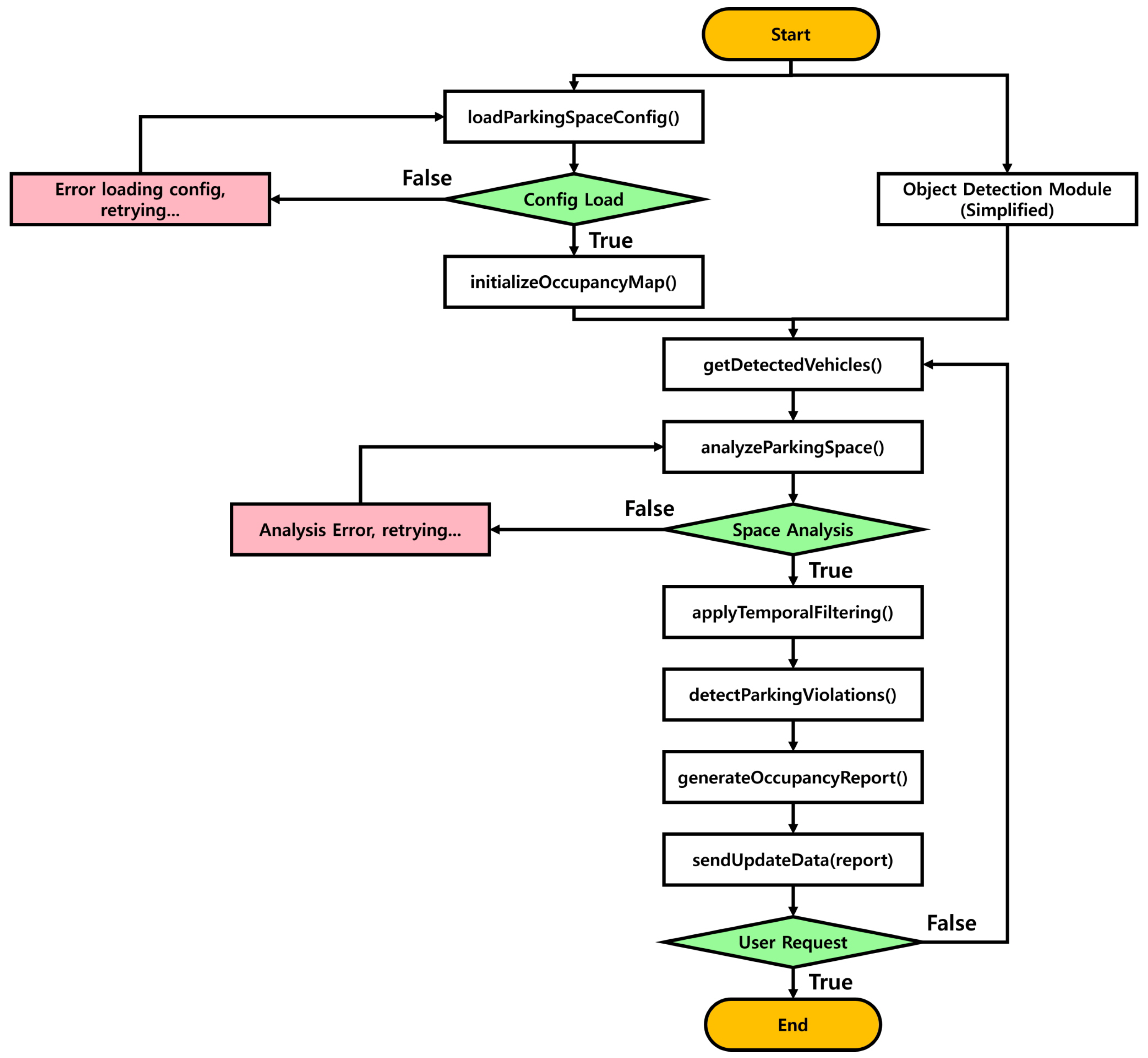

3.3. Parking Space Analysis Module Detailed Design

- parkingSpaces: an array of parking space information, where each element contains the ID, coordinates, status, etc.;

- detectedVehicles: an array containing information about the detected vehicle objects;

- occupancyMap: a map representing the occupancy status of parking spaces;

- IOU_THRESHOLD: the Intersection over Union (IoU) threshold used to determine whether a vehicle occupies a parking space;

- TEMPORAL_WINDOW: the number of frames used for temporal filtering;

- violationThreshold: a time threshold (in seconds) used to determine parking violations.
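The variables above suggest an occupancy rule based on overlap and time. The Python sketch below is one possible reading of that rule, not the authors' implementation. It assumes axis-aligned rectangles for parking spaces (the system itself uses polygonal zones), marks a space as occupied in a single frame when any detected vehicle box overlaps it with IoU at or above `IOU_THRESHOLD`, smooths that raw decision with a majority vote over the last `TEMPORAL_WINDOW` frames, and flags a violation once a space has stayed occupied for the violation threshold. The threshold values, the `SpaceState` class, and the `update_occupancy` function are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

# Hypothetical values: the text names these parameters but not their settings.
IOU_THRESHOLD = 0.3
TEMPORAL_WINDOW = 5
VIOLATION_THRESHOLD_S = 600.0  # plays the role of violationThreshold (seconds)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class SpaceState:
    """Per-space memory kept between frames (hypothetical helper)."""
    history: Deque[bool] = field(default_factory=lambda: deque(maxlen=TEMPORAL_WINDOW))
    occupied_since: Optional[float] = None


def update_occupancy(parking_spaces: Dict[str, Box],
                     detected_vehicles: List[Box],
                     states: Dict[str, SpaceState],
                     now: float) -> Dict[str, dict]:
    """Return an occupancy map {space_id: {...}} for one frame captured at time `now`."""
    occupancy_map = {}
    for space_id, space_box in parking_spaces.items():
        state = states.setdefault(space_id, SpaceState())
        # Raw per-frame decision: any vehicle overlapping the space strongly enough.
        raw = any(iou(space_box, v) >= IOU_THRESHOLD for v in detected_vehicles)
        state.history.append(raw)
        # Temporal filter: majority vote over the last TEMPORAL_WINDOW frames.
        occupied = 2 * sum(state.history) > len(state.history)
        if occupied and state.occupied_since is None:
            state.occupied_since = now
        elif not occupied:
            state.occupied_since = None
        dwell = now - state.occupied_since if occupied else 0.0
        occupancy_map[space_id] = {
            "occupied": occupied,
            "dwell_s": dwell,
            "violation": occupied and dwell >= VIOLATION_THRESHOLD_S,
        }
    return occupancy_map
```

A majority vote is used here only because it is simple and symmetric. An exponential moving average or separate enter/exit frame counts would be equally reasonable temporal filters.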

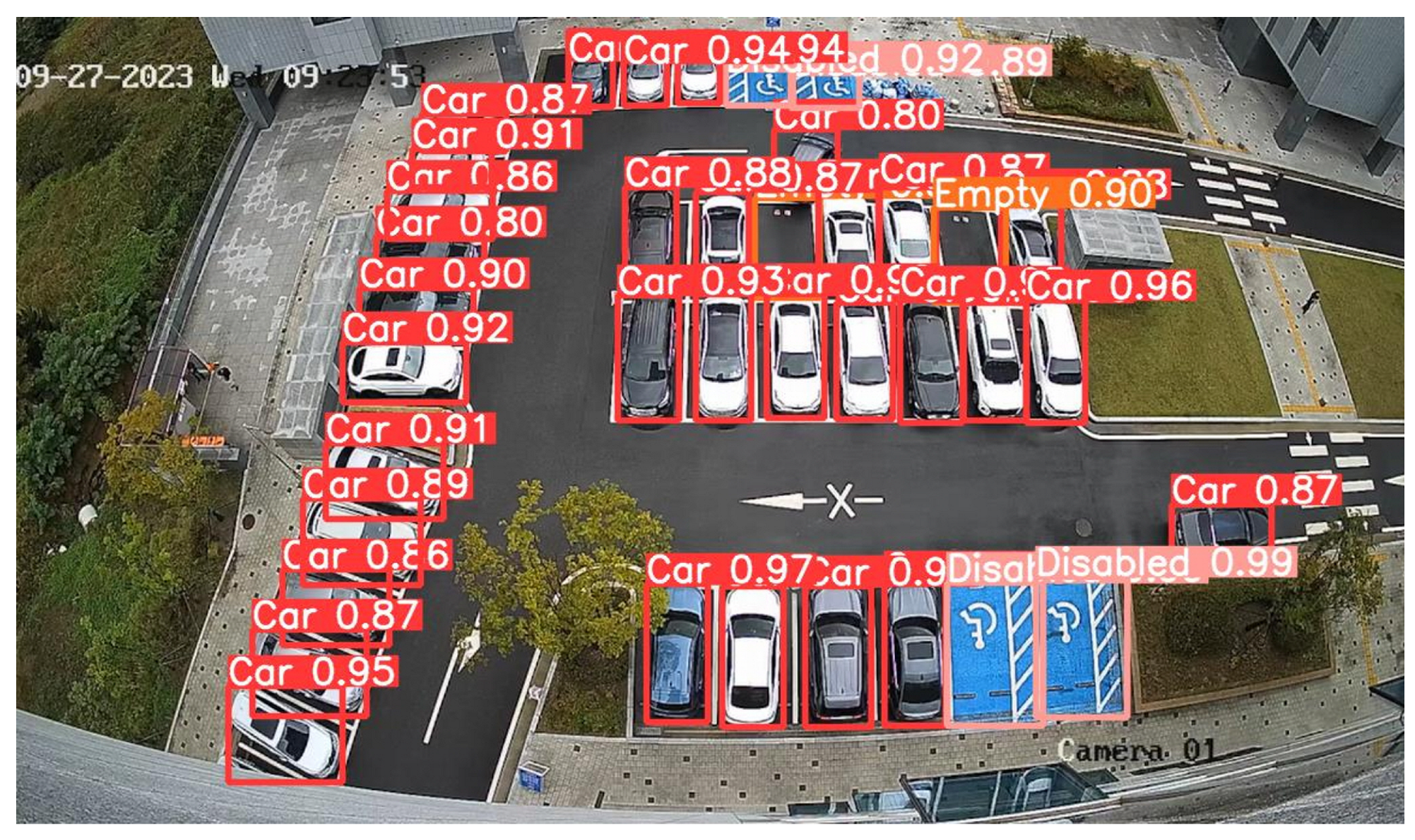

4. Proposed System Implementation

4.1. Proposed System Implementation Environment and Device Fabrication

4.2. Outdoor Parking Lot Dataset Construction

- Photographing the outdoor parking lot at Gachon University Global Campus;

- Conducting photography over a 7-month period, from June 2023 to December 2023;

- Positioning cameras to capture the entire parking lot;

- Periodically photographing in various environmental conditions, including different weather and times of day;

- Collecting a total of 13,691 images covering these environmental conditions.

- Duplicate and corrupted data removal: RTSP stream collection occasionally produced damaged or duplicated frames due to network latency or packet loss. These corrupted or identical images were automatically detected and removed using a custom Python script.

- Frame selection criteria: To avoid redundant scenes, frames were extracted at regular time intervals (approximately 3–5 s), ensuring a balanced distribution of illumination, viewing angle, and vehicle occupancy across samples.

- Preliminary detection-based filtering: A pretrained YOLOv8 model was used to perform preliminary detection. Frames with detection confidence below 0.5 were discarded to exclude low-quality or ambiguous samples.

- Image quality filtering: Blurred or poorly illuminated images caused by motion, rain, or low brightness were filtered out using the variance of Laplacian and histogram-based brightness thresholds.

- Region validation: Each remaining frame was checked to confirm that vehicle objects were located within the defined polygonal parking zones annotated in the dataset.

- Manual verification and correction: After automated filtering, all remaining images were manually reviewed to remove frames where vehicles were partially occluded, misaligned with parking lines, or affected by reflections or camera artifacts.
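The automated part of the cleaning steps above (exact-duplicate removal, blur detection by the variance of the Laplacian, and a brightness check) can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' custom script. Images are represented as 2-D lists of 8-bit grayscale values, duplicates are detected by hashing pixel data, the mean intensity stands in for the histogram-based brightness check, and the thresholds `BLUR_VAR_MIN`, `BRIGHT_MIN`, and `BRIGHT_MAX` are hypothetical. With OpenCV, the same blur measure is usually computed as `cv2.Laplacian(gray, cv2.CV_64F).var()`; the sketch uses the same 4-neighbour kernel but ignores border pixels.

```python
import hashlib
from typing import List, Sequence

Gray = Sequence[Sequence[int]]  # 2-D grayscale image with values 0-255

# Hypothetical thresholds; the text does not report the values used.
BLUR_VAR_MIN = 100.0
BRIGHT_MIN, BRIGHT_MAX = 40.0, 220.0


def laplacian_variance(img: Gray) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels; low values indicate blur."""
    h, w = len(img), len(img[0])
    vals = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            vals.append(img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1]
                        - 4 * img[y][x])
    if not vals:
        return 0.0
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)


def mean_brightness(img: Gray) -> float:
    """Mean pixel intensity (the mean of the intensity histogram)."""
    return sum(sum(row) for row in img) / (len(img) * len(img[0]))


def frame_digest(img: Gray) -> str:
    """Fingerprint for exact duplicates: SHA-256 of the raw pixel bytes."""
    return hashlib.sha256(bytes(p for row in img for p in row)).hexdigest()


def filter_frames(frames: List[Gray]) -> List[int]:
    """Return indices of frames that are unique, sharp enough, and well exposed."""
    seen = set()
    kept = []
    for i, img in enumerate(frames):
        digest = frame_digest(img)
        if digest in seen:
            continue  # identical frame already kept or rejected
        seen.add(digest)
        if laplacian_variance(img) < BLUR_VAR_MIN:
            continue  # too blurred
        if not BRIGHT_MIN <= mean_brightness(img) <= BRIGHT_MAX:
            continue  # too dark or too bright
        kept.append(i)
    return kept
```

Only exact duplicates are caught by hashing; near-duplicates from a static scene would need a perceptual hash or the time-interval sampling described above.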

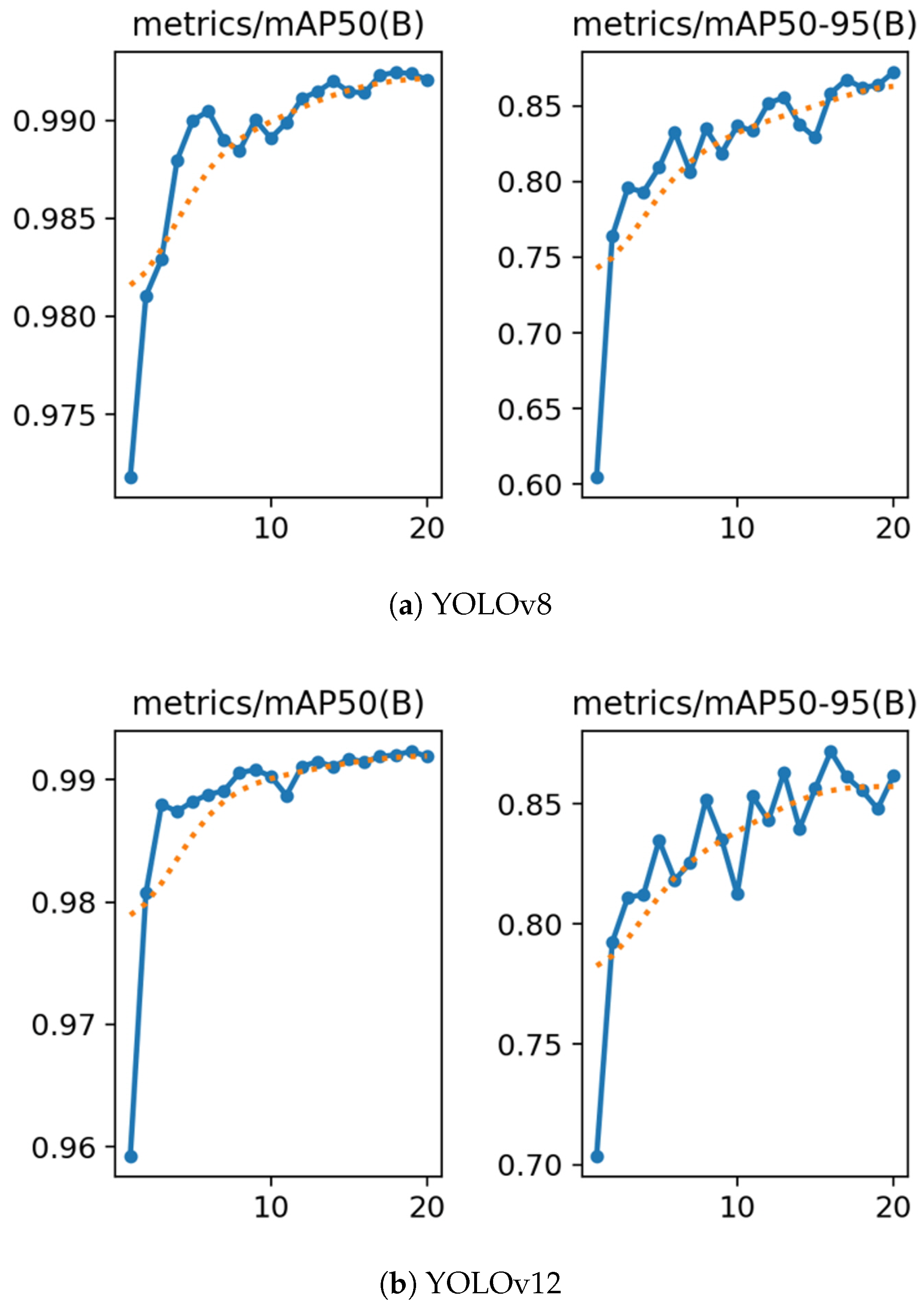

4.3. Outdoor Parking Lot AI Model Training

4.4. AI-BOX Implementation

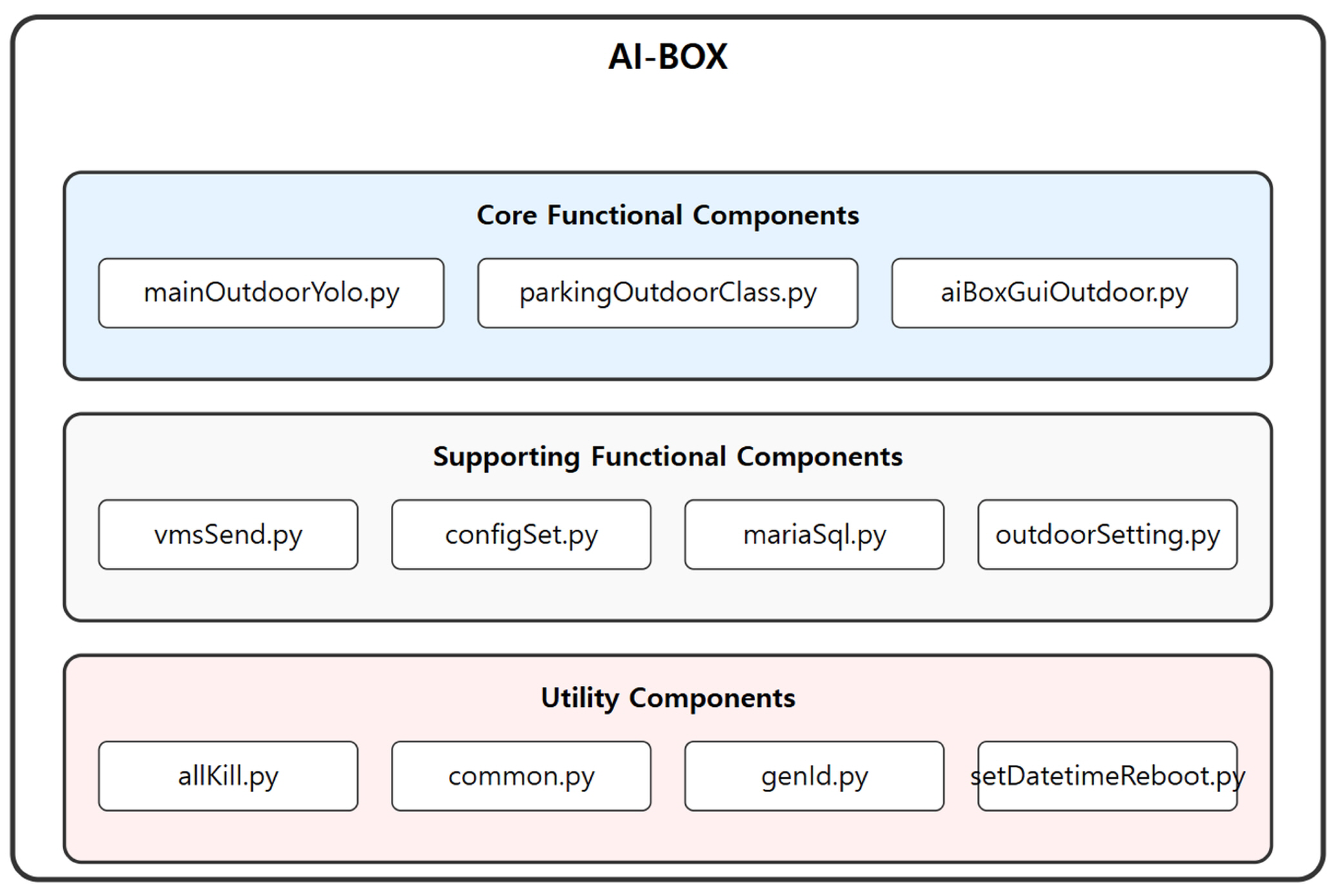

4.4.1. AI-BOX (Edge Device) Software System Architecture

4.4.2. Core Module Implementation: parkingOutdoorClass.py

- Video Input Module: Captures images from the RTSP stream using FFmpeg and stores them in the frame folder.

- Video Processing Module: Preprocesses the stored images with OpenCV and improves quality through techniques such as sharpening and histogram equalization.

- Object Detection Module: Uses the YOLOv8n model trained in this study to detect vehicle objects in the preprocessed images, and extracts the bounding box coordinates and confidence scores of the detected objects.

- Parking Space Analysis Module: Loads parking lot coordinate data from JSON (JavaScript Object Notation) files, calculates the center coordinates of each parking space, and determines parking status by comparing these centers with the bounding boxes of detected vehicles. It also computes both the overall occupancy rate and the status of each individual parking space.
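The center-point test described for the Parking Space Analysis Module can be sketched as below. The JSON layout (`{"spaces": [{"id": ..., "polygon": [[x, y], ...]}]}`) and the function names are hypothetical, since the actual file format is not given. The center is taken as the mean of the polygon vertices, which coincides with the geometric centroid for rectangles and parallelograms but only approximates it for irregular shapes.

```python
import json
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) from the detector
Point = Tuple[float, float]


def load_space_centers(json_text: str) -> Dict[str, Point]:
    """Parse a hypothetical parking layout and return one center point per space."""
    data = json.loads(json_text)
    centers = {}
    for space in data["spaces"]:
        pts = space["polygon"]
        # Vertex mean: exact centroid for parallelograms, an approximation otherwise.
        cx = sum(p[0] for p in pts) / len(pts)
        cy = sum(p[1] for p in pts) / len(pts)
        centers[space["id"]] = (cx, cy)
    return centers


def analyze(centers: Dict[str, Point], vehicles: List[Box]) -> Tuple[Dict[str, bool], float]:
    """A space is occupied if its center lies inside any vehicle box; also return the occupancy rate."""
    status = {
        sid: any(x1 <= cx <= x2 and y1 <= cy <= y2 for x1, y1, x2, y2 in vehicles)
        for sid, (cx, cy) in centers.items()
    }
    rate = sum(status.values()) / len(status) if status else 0.0
    return status, rate
```

For example, with two rectangular spaces and one vehicle whose box covers only the first space's center, `analyze` reports the first space as occupied and an occupancy rate of 0.5.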

4.5. Monitoring Web Service Implementation

4.5.1. Screens and Features of the Monitoring Web Service

4.5.2. Parking Space Settings Implementation

5. Proposed System Evaluation

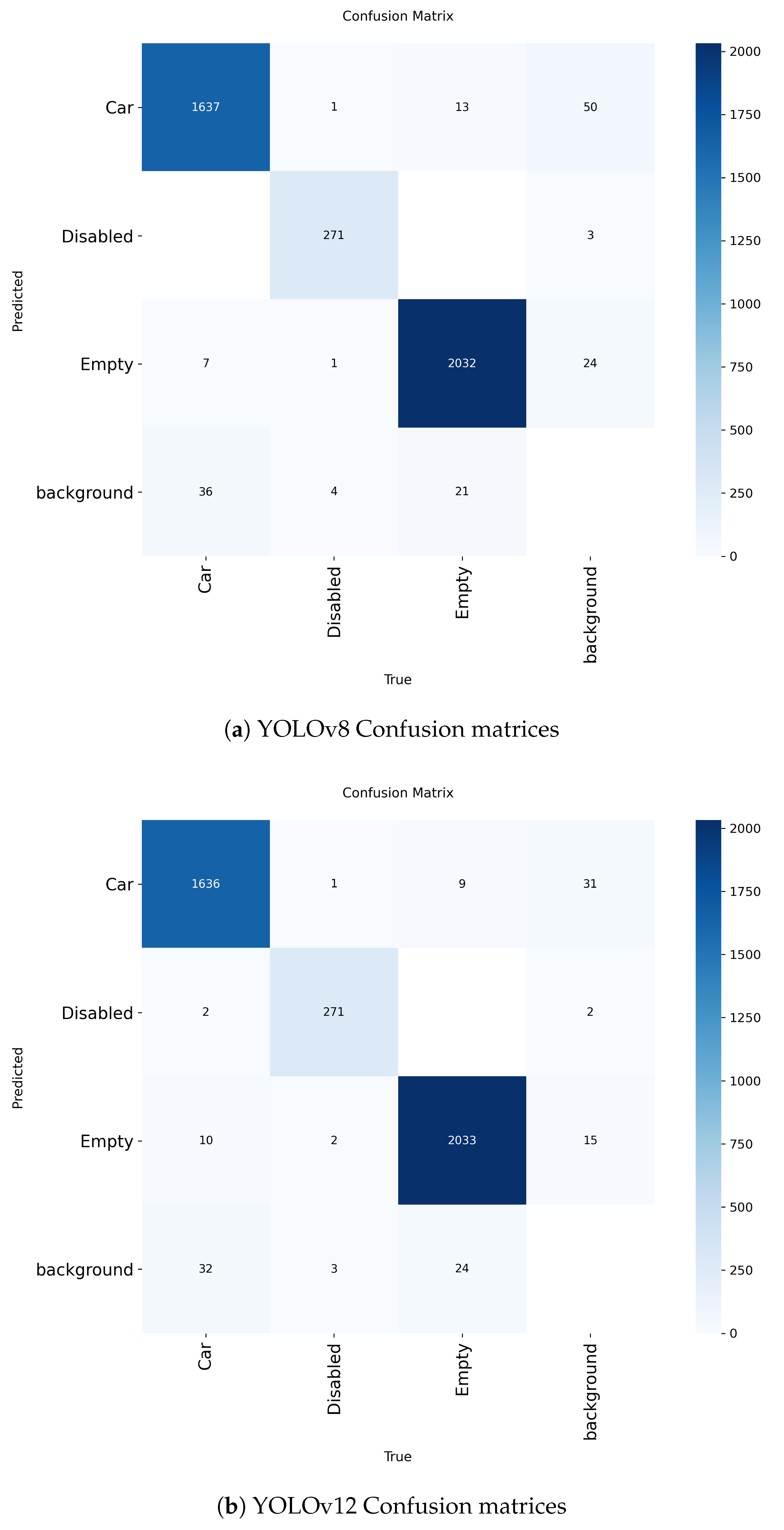

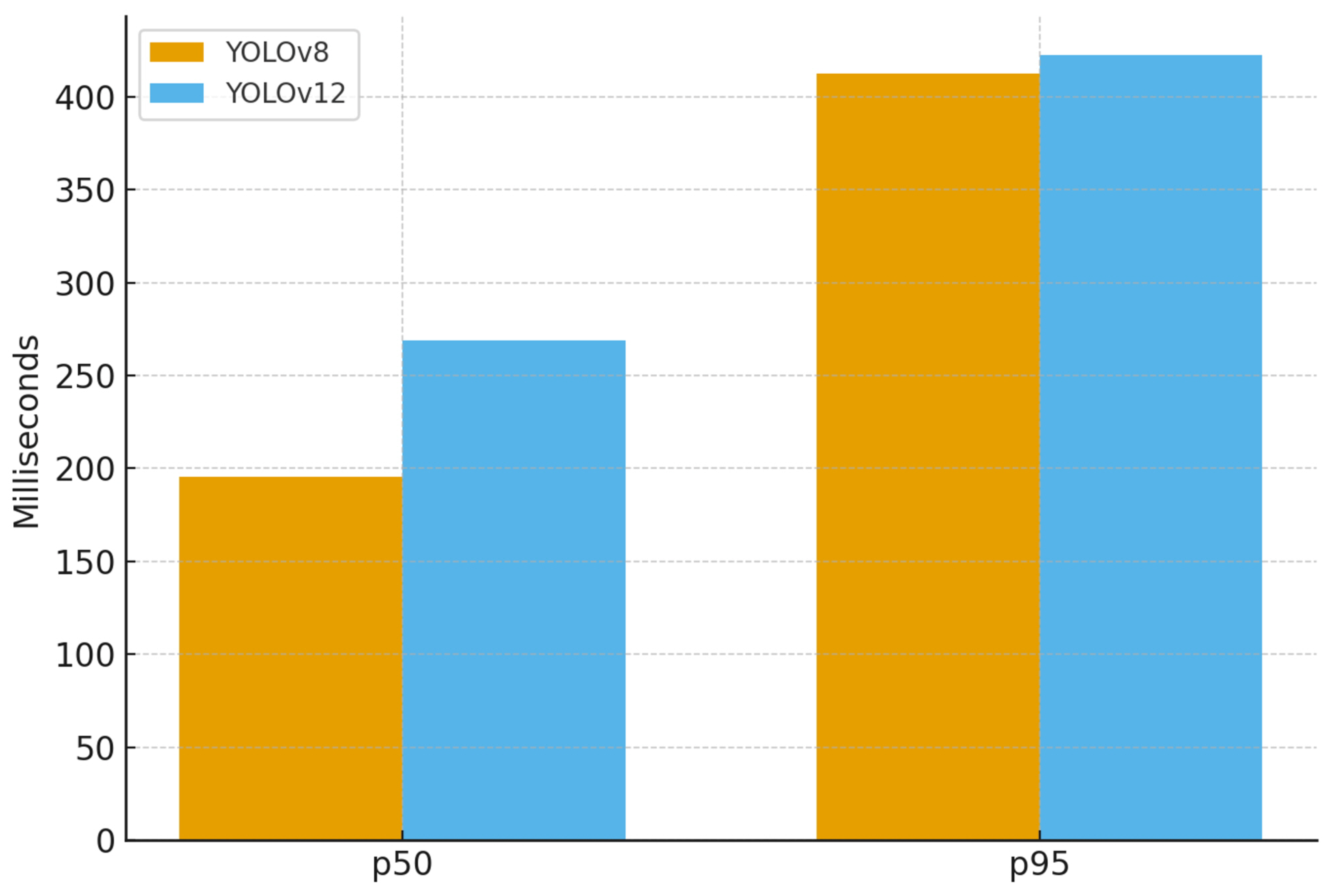

5.1. Performance Evaluation of the Outdoor Parking Recognition Model

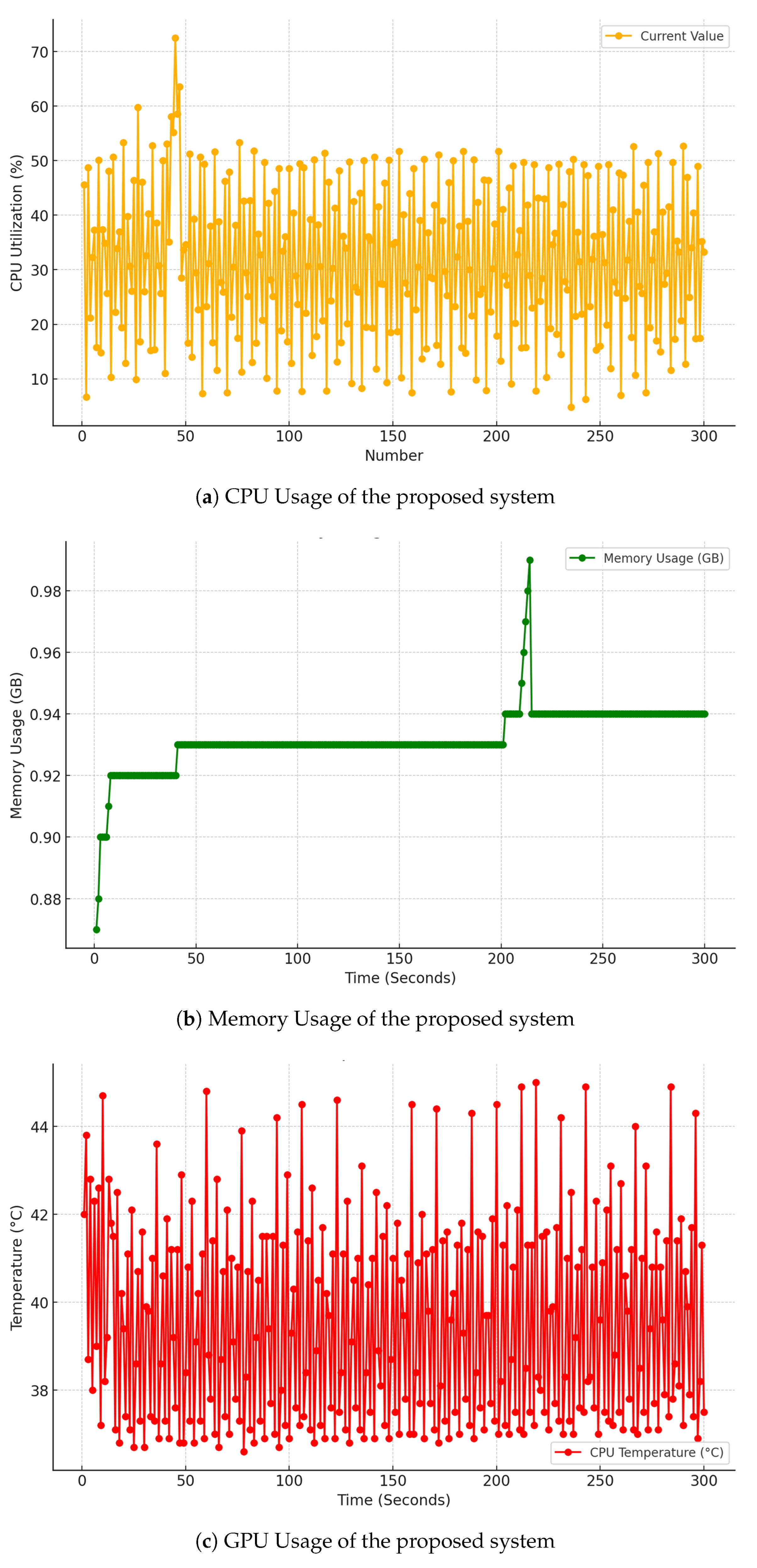

5.2. Evaluation of System Resource Usage

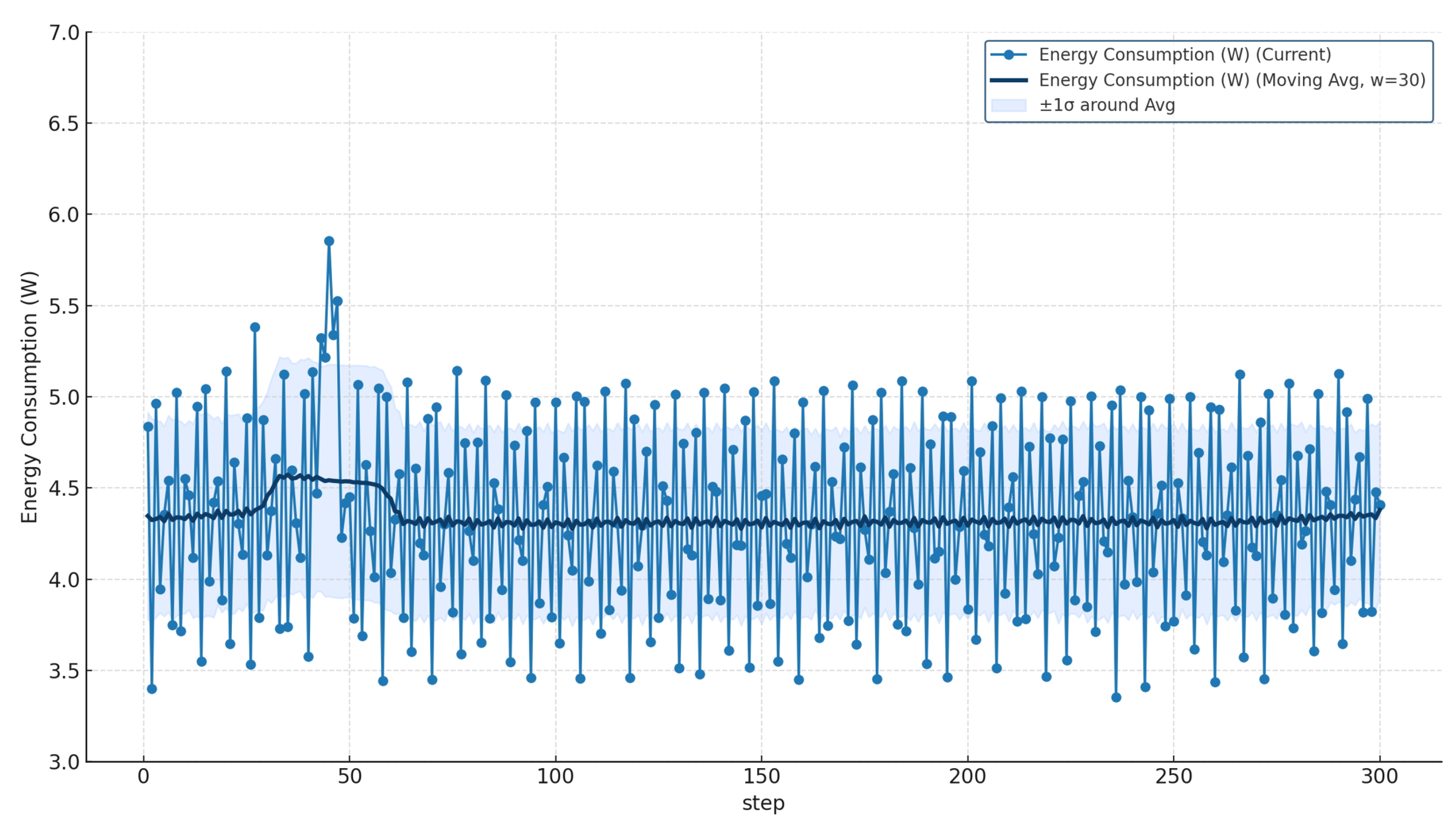

5.3. Evaluation of Power Consumption and System Resource Usage

6. Conclusions

- We developed a distributed processing architecture centered on AI-BOX and designed an intuitive web-based monitoring and management interface to enhance system usability and efficiency.

- AI-BOX was developed on a low-power embedded board by deploying the YOLOv8 model, enabling real-time parking recognition at low cost.

- The system achieved a precision of 99.1% and a recall of 97.5%, demonstrating high recognition performance.

- Stable operation and reliability were validated through real-world parking lot tests, with efficient resource usage (average CPU usage of 31.5% and average memory usage of 0.93 GB).

- Improving recognition performance in various weather conditions and lighting environments to enhance the system’s stability;

- Enhancing system scalability by improving the capability to efficiently integrate and manage multiple cameras in large parking lots;

- Expanding the comparative evaluation of object detection models: in this study, YOLOv8 and YOLOv12 were benchmarked in identical embedded environments, and future work will extend this comparison to the intermediate versions (YOLOv9, YOLOv10, and YOLOv11) to analyze trade-offs between accuracy and speed and the potential for embedded optimization;

- Conducting comparative experiments with other state-of-the-art object detection models, such as Faster R-CNN, MobileNet-SSD, and EfficientDet, and performing validation on public datasets, including COCO and Pattern Analysis, Statistical Modeling and Computational Learning Visual Object Classes (Pascal VOC), to evaluate the model’s generalization capability.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Biyik, C.; Allam, Z.; Pieri, G.; Moroni, D.; O’Fraifer, M.; O’Connell, E.; Olariu, S.; Khalid, M. Smart Parking Systems: Reviewing the Literature, Architecture and Ways Forward. Smart Cities 2021, 4, 623–642.

2. Lin, T.; Rivano, H.; Le Mouël, F. A survey of smart parking solutions. IEEE Trans. Intell. Transp. Syst. 2017, 18, 3229–3253.

3. Litman, T. Parking Management: Strategies, Evaluation and Planning. 2024. Available online: https://www.vtpi.org/park_man.pdf (accessed on 16 October 2025).

4. Victoria Transport Policy Institute. Comprehensive Parking Supply, Cost and Pricing Analysis. 2025. Available online: https://www.vtpi.org/pscp.pdf (accessed on 16 October 2025).

5. Paidi, V.; Fleyeh, H.; Håkansson, J.; Nyberg, R.G. Smart parking sensors, technologies and applications for open parking lots: A review. IET Intell. Transp. Syst. 2018, 12, 735–741.

6. Channamallu, S.S.; Kermanshachi, S.; Rosenberger, J.M.; Pamidimukkala, A. A review of smart parking systems. Transp. Res. Procedia 2023, 73, 289–296.

7. Barriga, J.J.; Sulca, J.; León, J.L.; Ulloa, A.; Portero, D.; Andrade, R.; Yoo, S.G. Smart parking: A literature review from the technological perspective. Appl. Sci. 2019, 9, 4569.

8. Ala’anzy, M.A.; Abilakim, A.; Zhanuzak, R.; Li, L. Real time smart parking system based on IoT and fog computing evaluated through a practical case study. Sci. Rep. 2025, 15, 33483.

9. Mackowski, D.; Bai, Y.; Ouyang, Y. Parking space management via dynamic performance-based pricing. Transp. Res. Procedia 2015, 7, 170–191.

10. Sarker, V.K.; Gia, T.N.; Ben Dhaou, I.; Westerlund, T. Smart parking system with dynamic pricing, edge-cloud computing and LoRa. Sensors 2020, 20, 4669.

11. Hassoune, K.; Dachry, W.; Moutaouakkil, F.; Medromi, H. Smart parking systems: A survey. In Proceedings of the 2016 11th International Conference on Intelligent Systems: Theories and Applications (SITA), Mohammedia, Morocco, 19–20 October 2016; pp. 1–6.

12. Mainetti, L.; Patrono, L.; Sergi, I. A survey on indoor positioning systems. In Proceedings of the 2014 22nd International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Split, Croatia, 17–19 September 2014; pp. 111–120.

13. Mao, Y.; You, C.; Zhang, J.; Huang, K.; Letaief, K.B. A survey on mobile edge computing: The communication perspective. IEEE Commun. Surv. Tutor. 2017, 19, 2322–2358.

14. Anagnostopoulos, T.; Zaslavsky, A.; Kolomvatsos, K.; Medvedev, A.; Amirian, P.; Morley, J.; Hadjieftymiades, S. Challenges and opportunities of waste management in IoT-enabled smart cities: A survey. IEEE Trans. Sustain. Comput. 2017, 2, 275–289.

15. Ultralytics. YOLOv8 Documentation. 2023. Available online: https://docs.ultralytics.com/ (accessed on 15 October 2025).

16. Khanna, A.; Anand, R. IoT based smart parking system. In Proceedings of the 2016 International Conference on Internet of Things and Applications (IOTA), Pune, India, 22–24 January 2016; pp. 266–270.

17. Moini, N.; Hill, D.; Gruteser, M. Impact Analyses of Curb-Street Parking Guidance System on Mobility and Environment; Technical Report ATT-RU3528; Center for Advanced Infrastructure and Transportation (CAIT), Rutgers University: Piscataway, NJ, USA, 2012.

18. Zakharenko, R. The economics of parking occupancy sensors. Econ. Transp. 2019, 17, 14–23.

19. U.S. Department of Transportation, ITS JPO. ITS Knowledge Resources: Cost Record 2020-SC00464; 2020. Available online: https://www.itskrs.its.dot.gov/2020-sc00464 (accessed on 3 November 2025).

20. Fahim, A.; Hasan, M.; Chowdhury, M.A. Smart parking systems: Comprehensive review based on various aspects. Heliyon 2021, 7, e07050.

21. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25.

22. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

23. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

24. Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

25. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

26. Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics (Version 8.0.0) [Computer Software]. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 15 October 2025).

27. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

28. Nwankpa, C. Activation functions: Comparison of trends in practice and research for deep learning. arXiv 2018, arXiv:1811.03378.

29. Xiong, Y.; Liu, H.; Gupta, S.; Akin, B.; Bender, G.; Wang, Y.; Kindermans, P.J.; Tan, M.; Singh, V.; Chen, B. MobileDets: Searching for object detection architectures for mobile accelerators. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3825–3834.

30. Liu, S.; Zha, J.; Sun, J.; Li, Z.; Wang, G. EdgeYOLO: An Edge-Real-Time Object Detector. arXiv 2023, arXiv:2302.07483.

31. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. YOLOv10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

32. Zhang, H.; Hu, W.; Wang, X. EdgeFormer: Improving Light-weight ConvNets by Learning from Vision Transformers. arXiv 2022.

33. Asynchronous HTTP Client/Server for Asyncio and Python. 2025. Available online: https://docs.aiohttp.org/ (accessed on 15 October 2025).

34. Rachapudi, A.; Parimi, P.; Alluri, S. Performance Comparison of Applications with and without Web Frameworks. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 9, 1756–1760.

35. Paterson, C. Async Python is Not Faster. 2020. Available online: https://calpaterson.com/async-python-is-not-faster.html (accessed on 3 November 2025).

36. Bednarz, B.; Miłosz, M. Benchmarking the performance of Python web frameworks. J. Comput. Sci. Inst. 2025, 36, 336–341.

37. Rao, A.; Lanphier, R.; Schulzrinne, H. Real Time Streaming Protocol (RTSP); RFC 2326; RFC Editor: 1998. Available online: https://www.rfc-editor.org/info/rfc2326 (accessed on 6 November 2025).

38. Schulzrinne, H.; Rao, A.; Lanphier, R.; Westerlund, M.; Stiemerling, M. Real-Time Streaming Protocol Version 2.0; RFC 7826; RFC Editor: 2016. Available online: https://www.rfc-editor.org/info/rfc7826 (accessed on 6 November 2025).

39. Raspberry Pi Foundation. Raspberry Pi 4 Model B Product Brief—Power and Performance Specifications. 2023. Available online: https://www.raspberrypi.com/documentation/computers/raspberry-pi.html (accessed on 19 October 2025).

40. NVIDIA Corporation. Jetson AGX Xavier Series Data Sheet. 2023. Available online: https://developer.nvidia.com/embedded/jetson-agx-xavier (accessed on 19 October 2025).

41. Google Cloud. Energy Consumption of Cloud GPU Instances. 2023. Available online: https://cloud.google.com/compute/docs/gpus (accessed on 19 October 2025).

42. Pi Dramble Wiki. Power Consumption Benchmarks for Raspberry Pi 4 Model B. 2024. Available online: https://www.pidramble.com/wiki/benchmarks/power-consumption (accessed on 27 October 2025).

| Method | CAPEX | Characteristics |
|---|---|---|
| In-ground Sensors [17,18] | $250–$800/slot | Robust detection using embedded ground sensors, but cost scales linearly with the number of slots |
| On-surface IoT Sensors [19] | $300–$500/slot | Easy wireless installation, but limited battery life increases maintenance needs |
| Centralized Camera System [20] | $100–$400/camera | Slot-level monitoring via server inference, but scalability is limited by network load |

| Framework | Request Throughput (req/s) | Average Response Time (ms) |
|---|---|---|
| AIOHTTP | 10,000 | 5 |
| Flask | 5000 | 10 |
| Django | 4000 | 12 |
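The throughput comparison above motivates an asynchronous server for the monitoring API. As a hedged illustration of why a single event loop handles many short status requests well, the sketch below uses only Python's standard `asyncio` streams rather than AIOHTTP's own API. The `/api/status` path, the `PARKING_STATUS` payload, and the function names are hypothetical, and the sketch does not reproduce the benchmark figures; a production service would rely on a full framework such as AIOHTTP for request parsing, routing, and error handling.

```python
import asyncio
import json

# Hypothetical in-memory state that the analysis loop would keep up to date.
PARKING_STATUS = {"total": 2, "occupied": 1, "spaces": {"A1": True, "A2": False}}


async def handle(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Answer any request with the current status as JSON (no routing, for brevity)."""
    await reader.readuntil(b"\r\n\r\n")  # consume request line and headers
    body = json.dumps(PARKING_STATUS).encode()
    header = (
        "HTTP/1.1 200 OK\r\n"
        "Content-Type: application/json\r\n"
        f"Content-Length: {len(body)}\r\n"
        "Connection: close\r\n\r\n"
    ).encode()
    writer.write(header + body)
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def fetch_status(port: int) -> dict:
    """Minimal client: send one GET and parse the JSON body."""
    reader, writer = await asyncio.open_connection("127.0.0.1", port)
    writer.write(b"GET /api/status HTTP/1.1\r\nHost: localhost\r\n\r\n")
    await writer.drain()
    raw = await reader.read()  # server closes the connection after one response
    writer.close()
    await writer.wait_closed()
    _, _, body = raw.partition(b"\r\n\r\n")
    return json.loads(body)


async def demo(n_clients: int = 5) -> list:
    server = await asyncio.start_server(handle, "127.0.0.1", 0)  # port 0: pick a free port
    port = server.sockets[0].getsockname()[1]
    async with server:
        # All clients are served concurrently by one event loop, without threads.
        return await asyncio.gather(*(fetch_status(port) for _ in range(n_clients)))


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

While one connection waits on the network, the event loop serves others, which is the property the asynchronous frameworks in the table exploit.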

| Module | Description |
|---|---|
| Video Input Module | Efficiently receives and decodes RTSP [37,38] streams using FFmpeg. |
| Video Processing Module | Preprocesses the input video and converts it into a form suitable for object detection. |
| Object Detection Module | Utilizes the YOLOv8 algorithm to quickly and accurately detect vehicle objects. |
| Parking Space Analysis Module | Compares detected vehicle objects with predefined parking space information to determine occupancy. |
| Data Management Module | Stores and manages the analysis results. |
| Web Interface Module | Provides processed data via API and handles communication with the administrator dashboard. |
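The Video Input Module is described only as receiving and decoding RTSP streams with FFmpeg. The Python sketch below shows one way such a capture step could be launched; it only builds the argument list. The options it uses (`-rtsp_transport tcp`, the `fps` video filter, `-q:v`) are standard FFmpeg options, but the camera URL, the `frames` output folder, the file-name pattern, and the 4 s interval (chosen from the 3–5 s sampling range used for the dataset) are assumptions rather than the system's actual settings.

```python
import shlex
from pathlib import Path
from typing import List


def build_capture_command(rtsp_url: str, out_dir: str, interval_s: float = 4.0) -> List[str]:
    """Build an FFmpeg argument list that saves one JPEG every `interval_s` seconds."""
    pattern = str(Path(out_dir) / "frame_%06d.jpg")  # sequentially numbered frames
    return [
        "ffmpeg",
        "-rtsp_transport", "tcp",         # input option: pull RTSP over TCP instead of UDP
        "-i", rtsp_url,
        "-vf", f"fps=1/{interval_s:g}",   # keep one frame per interval
        "-q:v", "2",                      # JPEG quality scale; lower values mean higher quality
        pattern,
    ]


if __name__ == "__main__":
    cmd = build_capture_command("rtsp://camera.example/stream1", "frames")  # hypothetical URL
    print(shlex.join(cmd))
    # subprocess.run(cmd, check=True) would start the capture; it needs ffmpeg on PATH.
```

Pulling the stream over TCP trades a little latency for fewer damaged frames, which relates to the packet-loss problems noted during data collection.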

| Component | Specification |
|---|---|
| CPU | Broadcom BCM2711, quad-core Cortex-A72 (ARM v8) 64-bit SoC @ 1.5 GHz |
| GPU | Broadcom VideoCore VI, 500 MHz |
| RAM | 4 GB LPDDR4-2400 SDRAM |
| Network | 2.4 GHz and 5.0 GHz IEEE 802.11ac wireless, Gigabit Ethernet |
| Storage | 64 GB microSD card; 500 GB external SSD (solid-state drive) connected via USB (Universal Serial Bus) 3.0 |
| Power | 5 V DC via USB-C connector (5 V/3 A) |
| Interface | 2 × USB 3.0 ports, 2 × USB 2.0 ports, 1 × CSI (Camera Serial Interface) camera port, 1 × micro-HDMI port |

| Dataset Split | Occupied Parking Spaces | Empty Parking Spaces |
|---|---|---|
| Training Data | 5079 | 3271 |
| Validation Data | 555 | 327 |
| Test Data | 2248 | 2211 |
| Total | 7882 | 5809 |
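The split counts can be cross-checked against the 13,691 images reported for the dataset. The short calculation below uses only the table values: it sums each split and computes its share of the data and its fraction of occupied samples.

```python
from typing import Dict, Tuple

# Counts copied from the dataset table: (occupied, empty) per split.
SPLITS: Dict[str, Tuple[int, int]] = {
    "train": (5079, 3271),
    "validation": (555, 327),
    "test": (2248, 2211),
}


def summarize(splits: Dict[str, Tuple[int, int]]):
    """Return the grand total and, per split, its size, share of data, and occupied ratio."""
    grand_total = sum(occ + emp for occ, emp in splits.values())
    rows = {}
    for name, (occ, emp) in splits.items():
        n = occ + emp
        rows[name] = {"images": n, "share": n / grand_total, "occupied_ratio": occ / n}
    return grand_total, rows


if __name__ == "__main__":
    total, rows = summarize(SPLITS)
    print(total)
    for name, r in rows.items():
        print(f"{name}: {r['images']} samples, {r['share']:.1%} of data, "
              f"{r['occupied_ratio']:.1%} occupied")
```

The splits work out to about 61.0% training, 6.4% validation, and 32.6% test data, and the column totals (7882 occupied, 5809 empty) add up to 13,691.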

| Parameter | Value |
|---|---|
| Input image size | 640 × 640 |
| Batch size | 16 |
| Number of epochs | 20 |
| Learning rate | 0.01 (cosine annealing schedule) |
| Optimizer | Stochastic Gradient Descent (SGD) |
| Momentum | 0.937 |
| Weight decay | 0.0005 |
| Warmup epochs | 3 |
| Confidence threshold | 0.5 |
| IoU threshold (NMS) | 0.7 |
| Augmentation techniques | Random rotation, flipping, brightness adjustment |
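The hyperparameters in the table map naturally onto the argument names of the Ultralytics `train()` method, which is one plausible way such a run could be configured. The mapping, the dataset file name `parking.yaml`, and the omission of augmentation magnitudes (which the table does not give) are assumptions. The `lr_at_epoch` function is a generic linear-warmup plus cosine-annealing schedule written for illustration. It shows the shape of the schedule over 20 epochs, but it is not the exact formula Ultralytics applies internally; among other differences, Ultralytics warms up per iteration and decays toward a final fraction `lrf` of `lr0` rather than toward zero.

```python
import math

# Table values expressed with Ultralytics train() argument names (assumed mapping;
# augmentation magnitudes are not reported, so they are left at library defaults).
TRAIN_ARGS = {
    "imgsz": 640,
    "batch": 16,
    "epochs": 20,
    "optimizer": "SGD",
    "lr0": 0.01,
    "cos_lr": True,
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "warmup_epochs": 3,
}
# With the ultralytics package installed, a run could look like:
#   from ultralytics import YOLO
#   YOLO("yolov8n.pt").train(data="parking.yaml", **TRAIN_ARGS)  # parking.yaml is hypothetical


def lr_at_epoch(epoch: int, lr0: float = 0.01, epochs: int = 20,
                warmup: int = 3, lr_min: float = 0.0) -> float:
    """Generic schedule: linear warmup, then cosine annealing from lr0 toward lr_min."""
    if epoch < warmup:
        return lr0 * (epoch + 1) / warmup
    progress = (epoch - warmup) / max(1, epochs - warmup)
    return lr_min + 0.5 * (lr0 - lr_min) * (1 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for e in range(TRAIN_ARGS["epochs"]):
        print(e, round(lr_at_epoch(e), 5))
```

The confidence threshold (0.5) and NMS IoU threshold (0.7) in the table are inference-time settings; in Ultralytics they correspond to the `conf` and `iou` arguments used for validation and prediction rather than to `train()`.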

| Screen | Main Features |
|---|---|
| Login Screen and Password Change | User authentication; password change |
| Camera Information Settings | Enter RTSP URL and web URL; check camera connection status; display live video feed; control camera PTZ (pan-tilt-zoom) |
| Parking Space Settings | Add/modify/delete parking space areas; set parking space information (name, count, direction, number, type); apply and save settings |
| VMS (Video Management System) Event Transmission | Event activation settings; enter VMS URL; set transmission interval; set special events |
| Event Log Delivery | Set event log delivery; set delivery interval and recipients |

| Model | Class | Precision | Recall | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|---|
| YOLOv8 | All | 0.991 | 0.975 | 0.992 | 0.872 |
| | Car | 0.986 | 0.964 | 0.987 | 0.826 |
| | Disabled | 0.993 | 0.978 | 0.994 | 0.880 |
| | Empty | 0.993 | 0.983 | 0.994 | 0.911 |
| YOLOv12 | All | 0.991 | 0.975 | 0.992 | 0.872 |
| | Car | 0.988 | 0.972 | 0.988 | 0.826 |
| | Disabled | 0.996 | 0.978 | 0.994 | 0.878 |
| | Empty | 0.994 | 0.986 | 0.995 | 0.913 |

| Metric | YOLOv8 | YOLOv12 |
|---|---|---|
| Iterations | 250 | 250 |
| Avg Preprocess Time (ms) | 1.78 ± 1.78 | 1.93 ± 2.04 |
| Avg Inference Time (ms) | 224.53 ± 92.82 | 254.35 ± 103.80 |
| Avg Postprocess Time (ms) | 0.49 ± 0.94 | 0.38 ± 0.67 |
| Avg Total Time (ms) | 226.81 ± 93.37 | 256.67 ± 104.52 |
| Min Total Time (ms) | 118.48 | 135.57 |
| Max Total Time (ms) | 504.05 | 485.39 |
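The latency table reports per-stage means with a spread (mean ± deviation), minima, and maxima over 250 iterations. The sketch below shows one way such figures could be collected with `time.perf_counter`; it is not the authors' benchmark script. The stage functions are placeholders rather than YOLO calls, and the use of the sample standard deviation is an assumption, since the table does not say which deviation was reported.

```python
import statistics
import time
from typing import Callable, Dict, Tuple


def benchmark(stages: Dict[str, Callable[[], object]],
              iterations: int = 250) -> Dict[str, Tuple[float, float, float, float]]:
    """Time each stage per iteration and return (mean, std, min, max) in milliseconds.

    A "total" entry holds the per-iteration sum of all stages.
    """
    if iterations < 2:
        raise ValueError("need at least two iterations for a standard deviation")
    samples = {name: [] for name in stages}
    samples["total"] = []
    for _ in range(iterations):
        total_ms = 0.0
        for name, fn in stages.items():
            start = time.perf_counter()
            fn()
            elapsed_ms = (time.perf_counter() - start) * 1000.0
            samples[name].append(elapsed_ms)
            total_ms += elapsed_ms
        samples["total"].append(total_ms)
    # statistics.stdev is the sample standard deviation (n - 1 in the denominator).
    return {name: (statistics.mean(v), statistics.stdev(v), min(v), max(v))
            for name, v in samples.items()}


def fps_from_latency(mean_total_ms: float) -> float:
    """Frames per second implied by a mean end-to-end latency, assuming sequential processing."""
    return 1000.0 / mean_total_ms


if __name__ == "__main__":
    # Placeholder stages stand in for preprocessing, inference, and postprocessing.
    stats = benchmark({
        "preprocess": lambda: None,
        "inference": lambda: sum(i * i for i in range(20_000)),
        "postprocess": lambda: None,
    })
    for name, (mean, std, lo, hi) in stats.items():
        print(f"{name}: {mean:.2f} ± {std:.2f} ms (min {lo:.2f}, max {hi:.2f})")
```

Applied to the table's average total times, `fps_from_latency` gives roughly 4.4 frames per second for YOLOv8 (226.81 ms) and 3.9 frames per second for YOLOv12 (256.67 ms), if frames are processed one after another.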

| Metric | Maximum | Minimum | Average | Baseline |
|---|---|---|---|---|
| CPU Usage | 72.5% | 4.8% | 31.5% | 3.2% |
| Memory Usage | 0.99 GB | 0.87 GB | 0.93 GB | 0.62 GB |
| CPU Temperature | 45 °C | 36.6 °C | 39.5 °C | 33.5 °C |

| Metric | Maximum | Minimum | Average | Baseline |
|---|---|---|---|---|
| Power Consumption | 5.86 W | 3.36 W | 4.42 W | 3.35 W |
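The table reports power draw in watts. If the measured average is assumed to hold during continuous 24-hour operation (an assumption, since the measurement duration is not stated here), the daily and yearly energy use of one AI-BOX follow from multiplying power by time. The short calculation below does this with the table values.

```python
# Power figures taken from the measurement table (watts).
AVG_POWER_W = 4.42
BASELINE_POWER_W = 3.35


def energy_kwh(power_w: float, hours: float) -> float:
    """Energy in kWh for a constant power draw sustained over `hours`."""
    return power_w * hours / 1000.0


daily_kwh = energy_kwh(AVG_POWER_W, 24)          # one day of continuous operation
yearly_kwh = energy_kwh(AVG_POWER_W, 24 * 365)   # 365-day year
workload_w = AVG_POWER_W - BASELINE_POWER_W      # average draw above the baseline

if __name__ == "__main__":
    print(f"{daily_kwh:.3f} kWh/day, {yearly_kwh:.1f} kWh/year, "
          f"+{workload_w:.2f} W over baseline")
```

Under that assumption, this gives about 0.106 kWh per day and about 38.7 kWh per year. Roughly 1.07 W of the 4.42 W average lies above the baseline.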

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, K.; Lee, J.; Jeong, I.; Jung, J.; Cho, J. Real-Time Parking Space Management System Based on a Low-Power Embedded Platform. Sensors 2025, 25, 7009. https://doi.org/10.3390/s25227009

Kim K, Lee J, Jeong I, Jung J, Cho J. Real-Time Parking Space Management System Based on a Low-Power Embedded Platform. Sensors. 2025; 25(22):7009. https://doi.org/10.3390/s25227009

Chicago/Turabian Style: Kim, Kapyol, Jongwon Lee, Incheol Jeong, Jungil Jung, and Jinsoo Cho. 2025. "Real-Time Parking Space Management System Based on a Low-Power Embedded Platform" Sensors 25, no. 22: 7009. https://doi.org/10.3390/s25227009

APA Style: Kim, K., Lee, J., Jeong, I., Jung, J., & Cho, J. (2025). Real-Time Parking Space Management System Based on a Low-Power Embedded Platform. Sensors, 25(22), 7009. https://doi.org/10.3390/s25227009