1. Introduction

The widespread availability of position-tracking systems across many industries has enabled innovations in trajectory mining. Sports [1,2,3] and operations research [4,5,6] in particular benefit from these large datasets of unstructured multi-trajectory time series. An important application is the search and retrieval of similar instances based on similarity metrics [1,7,8,9]. Due to the scale of the datasets, a complete pairwise comparison of high-dimensional data is infeasible. Previous solutions either filtered the data [1,8,9] or trained Siamese Networks to learn lower-dimensional embeddings that permit efficient search [2,7].

However, filtering datasets reduces the effective search space, resulting in inferior results. On the other hand, learning an embedding of sufficiently high quality for meaningful retrieval results is extremely costly, because every training pair needs a ground-truth distance: first, an optimal assignment between two instances’ trajectories is computed using the Hungarian algorithm, and second, a suitable distance metric, such as the Euclidean distance, is evaluated on the assigned trajectories. These pairwise comparisons lead to a significant computational complexity of $O(N^2)$ for $N$ samples in the training dataset.

Sub-sampling of pairs of trajectories is the standard practice for sports scene search [2]. However, while such random sampling reduces the training cost considerably, it degrades the quality of the embedding space: it sees fewer pairs, and the pairs it draws tend to be easy and less meaningful. Random sampling lacks a mechanism to select more informative or representative data.

In this work, we propose a novel active sub-sampling technique, which we call Pairwise Diverse and Uncertain Gradient (PairDUG), that exploits gradient signals to select pairs that produce diverse gradients with high magnitudes. The sampler is inspired by Active Learning [10], specifically BADGE [11], which constructs sets of query instances that are both representative and informative to reduce annotation cost in classification. We transfer the concept to pairwise similarity learning.

We pose three research questions: RQ1: Can the required compute time for training a Siamese Network be reduced while maintaining similar retrieval quality? RQ2: Does active sampling retain a higher retrieval quality compared to other baselines? RQ3: What explanations for the active samplers’ effects on learning are there? Our experiments then determine which samplers achieve the highest retrieval quality and calculate an efficiency score based on runtime and retained performance relative to baselines, such as “simulated full training” and random sampling.

We summarize our contributions as follows:

We transfer similarity learning from soccer [2] to American football [12] and basketball [13] datasets.

We propose the novel Pairwise Diverse and Uncertain Gradient (PairDUG) method that extends BADGE sampling [11] to pairwise metric learning via a proxy distance cost generated using uniform keypoint sub-sampling. This sampler increases the efficiency of Siamese Network training by sampling the most relevant pairs, selecting batches of instances with diverse and informative gradient signals.

We experimentally analyze the quality of the learned embeddings on two large-scale real-world datasets, including baseline heuristics such as semi-hard sampling [14] and active samplers such as Entropy [15] and MC Dropout [16] uncertainty-based sampling, and CoreSet [17] diversity-based sampling.

3. Background

This section first provides an example of trajectory similarity search. Then, it defines a general notation for the trajectory data that this study experiments with. Next, it reviews the assignment problem and the costly optimization provided by the Hungarian algorithm [32]. Finally, the section outlines how the learning task of the Siamese Network includes the Hungarian algorithm’s solution, i.e., the assignment.

Trajectory similarity search aims to retrieve the top-N most similar scenes with respect to a distance metric [2]. Of the two exemplary basketball scenes in Figure 1, one represents a query scene and the other a sample from a large dataset. Computing their similarity requires two steps: first, the optimal assignment of trajectories between scenes is calculated via the Hungarian algorithm [32], and second, the sum of their (Euclidean) distances is produced. This procedure repeats for all scenes in the dataset, and the top N results are returned.
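The two-step distance and the exhaustive top-N search can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `scene_distance` and `top_n`, the array layout `(entities, spatial dims, frames)`, and the toy data are assumptions. SciPy's `linear_sum_assignment` is used as the assignment solver; it returns the same optimum as the Hungarian algorithm.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def scene_distance(scene_a, scene_b):
    """Optimal-assignment distance between two scenes of shape (N, S, T)."""
    # cost[j, k]: Euclidean distance between trajectory j of A and k of B.
    diff = scene_a[:, None, :, :] - scene_b[None, :, :, :]
    cost = np.sqrt((diff ** 2).sum(axis=(2, 3)))
    # Step 1: optimal one-to-one assignment of trajectories.
    rows, cols = linear_sum_assignment(cost)
    # Step 2: sum of the distances of the assigned trajectory pairs.
    return cost[rows, cols].sum()


def top_n(query, dataset, n):
    """Indices of the n scenes closest to `query`, plus all distances."""
    dists = np.array([scene_distance(query, scene) for scene in dataset])
    return np.argsort(dists)[:n], dists


rng = np.random.default_rng(0)
dataset = rng.normal(size=(50, 11, 2, 150))   # 50 toy scenes (assumed shapes)
query = dataset[7][rng.permutation(11)]       # scene 7 with shuffled rows
idx, dists = top_n(query, dataset, n=3)
print(idx[0], dists[7])
```

Because the query is scene 7 with its rows permuted, the optimal assignment undoes the permutation and scene 7 is retrieved first with distance zero. The loop over the whole dataset is exactly the cost that makes exhaustive search impractical at scale.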

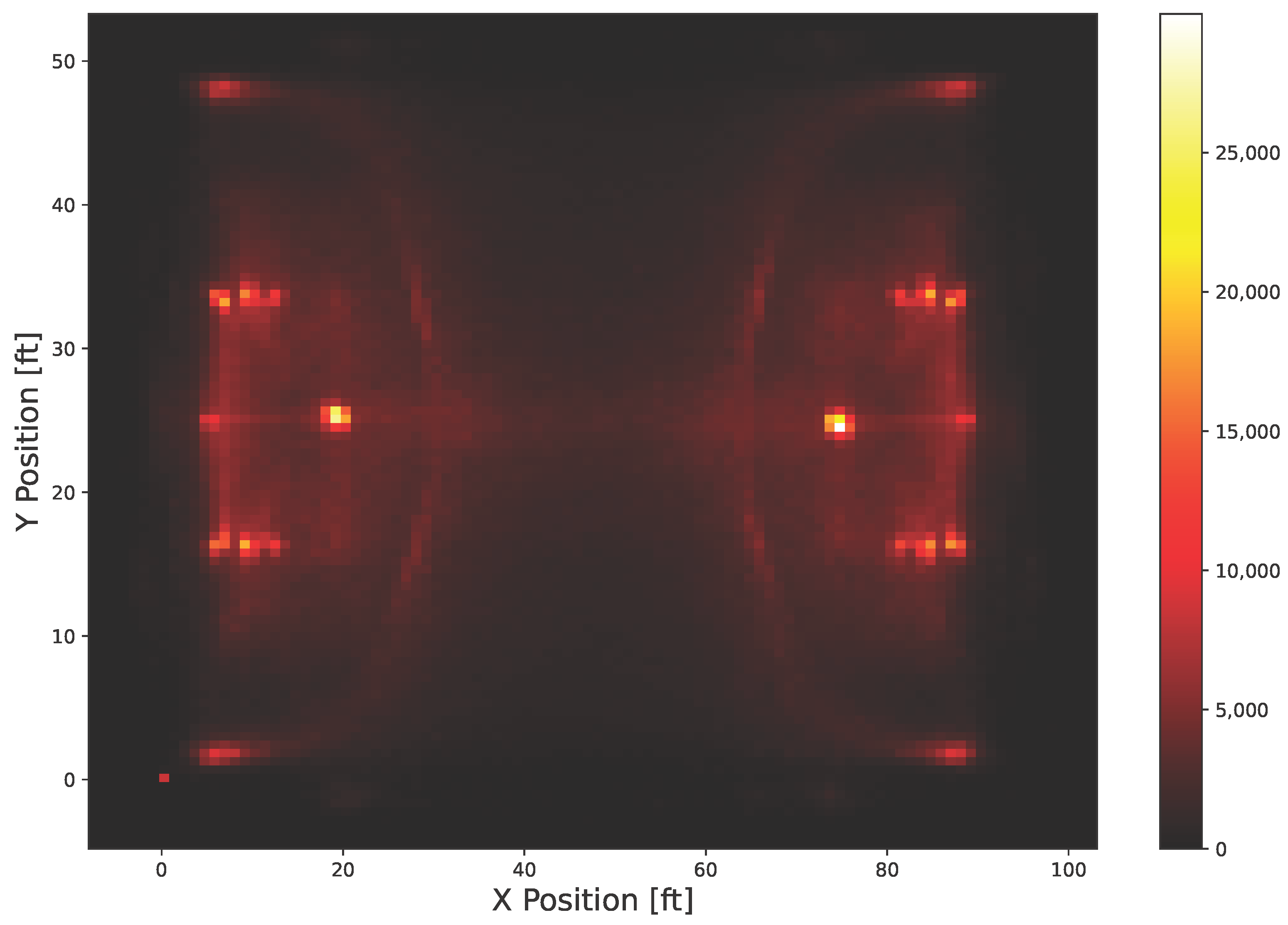

We formally define multi-trajectory sport scenes as follows. For comparability, we adapt the notation proposed by Löffler et al. [2] to our data. We represent each trajectory as a spatio-temporal matrix $X \in \mathbb{R}^{S \times T}$, where $S$ denotes the spatial dimension of the trajectory ($S = 2$ for 2D positional tracking), and $T$ the temporal length in frames. For example, we set $T = 150$ for 6 s of tracked data at 25 Hz in basketball. Each row of $X$ corresponds to one spatial coordinate (e.g., $x$ or $y$) over time. A scene with multiple agents is denoted by $\mathcal{X} \in \mathbb{R}^{N \times S \times T}$, where $N$ is the number of tracked entities. Thus, for a basketball scene with 10 players plus the ball, $\mathcal{X}$ has shape $11 \times 2 \times 150$. For an American football play with 22 players plus the ball, the representation is $\mathcal{X} \in \mathbb{R}^{23 \times 2 \times 50}$, i.e., tracking at 10 Hz for 5 s scenes.

To calculate distances between pairs $\mathcal{X}_a$ and $\mathcal{X}_b$ of scenes, many sports do not define a fixed assignment of the two matrices’ trajectories $X_{a,j}$ ($j = 1, \dots, N$) from $\mathcal{X}_a$ and $X_{b,k}$ ($k = 1, \dots, N$) from $\mathcal{X}_b$ due to the high dynamics in many team sports [2,7,33]. Despite the existence of roles in basketball, the game is also highly dynamic. In American football, the roles are generally more rigid for specific groups. To retain the generalizability of our method, we do not assume fixed assignments.

To calculate the similarity between two scenes, Löffler et al. [2] use the Hungarian algorithm to calculate row-wise assignments in dimension $N$ of both scenes, yielding a ground-truth distance. The authors use the Euclidean distance as a metric and sum up all distances for pairs of matrices after assignment.

This ground-truth computation is an important cost driver of training Siamese Networks on these combinatorial datasets: (1) the pairwise distances between the trajectories of two scenes have to be computed to fill the cost matrix, and (2) the Hungarian algorithm has a complexity of $O(n^3)$, where $n$ is the size of the cost matrix. Both costs are incurred for every pair of scenes. Faster implementations, such as Jonker–Volgenant (JV) [34] or the heuristic Auction algorithm [35], can also be used in conjunction with our proposed method. While JV has the same asymptotic complexity, $O(n^3)$, it is more efficient in practice, and the Auction algorithm provides a fast, approximate solution. We leave these technical optimizations for future work.

Following Löffler et al. [2,7], we compute the ground-truth assignments and distances optimally for any pairwise comparison. The Siamese Network’s inputs, however, do not use any special heuristic to assign the matrices’ rows to the neural network’s input channels. In contrast to previous work, we do not explore role-/grid-based [2] or data-driven [7] assignments. Instead, we employ random channel assignments that maximize generalizability across diverse sports and do not introduce any bias that may compromise the validity of our experiments. Hence, we do not use stable ordering, as is common in color channels of image data, but instead use a random ordering that varies between games.

Table 1 consolidates all symbols and variable definitions used within this paper.

4. Method

Training a Siamese Network using a full dataset of all possible pairs of sport scenes is prohibitively expensive due to the combinatorial complexity of pairwise comparisons and the associated cost of computing the Hungarian algorithm’s optimal distance for high-dimensional trajectory pairs. For example, given N = 100,000 scenes, a full training would train on $\binom{N}{2} = N(N-1)/2$, or approximately 5 billion, pairs. Due to this cost, our experiments instead rely on “simulated full training” by generating large amounts of pairs, e.g., 10 million pairs for N = 100,000 scenes, i.e., about 0.2% of all possible combinations. Simulated full training, thus, is a practical and very large upper bound, whereas the true combinatorial set is intractable.
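The pair counts in this example can be checked with a few lines of arithmetic. The variable names below are illustrative only.

```python
from math import comb

n_scenes = 100_000
all_pairs = comb(n_scenes, 2)        # N * (N - 1) / 2 unordered pairs
simulated_pairs = 10_000_000         # pairs used for "simulated full training"
fraction = simulated_pairs / all_pairs

print(f"{all_pairs:,} possible pairs")
print(f"simulated full training covers {fraction:.2%} of them")
```

The exact count is 4,999,950,000 pairs, so 10 million pairs correspond to roughly 0.2% of all combinations.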

In practice, random subsampling of pairwise comparisons is the standard method for training models until convergence. However, training with data generated by random sampling can be inefficient due to data redundancy and low information content [10]. While the cost compared to full training diminishes, the learned representation may be of lower quality for harder, rarer, or more uncertain samples.

In this work, we propose using more advanced sampling methods for sample mining by transferring Active Learning samplers that promise to select more informative samples, thereby improving the retrieval quality of sport scene embeddings and delivering higher-quality search results at lower cost.

Conceptually, we extend sample mining by transferring the Active Learning loop to pairwise representation learning. For this work, we start with a pool of scenes (not pairs of scenes) and sample informative pairs for which we still need to compute the distances. This pool of scenes is similar to the unlabelled pool. Instead of an oracle, we have the costly Hungarian algorithm to assign a cost to a pair of samples. Analogously to Active Learning, we propose selecting informative pairs using the model and a sampling method, thereby reducing distance computation and approaching the oracle’s cost.

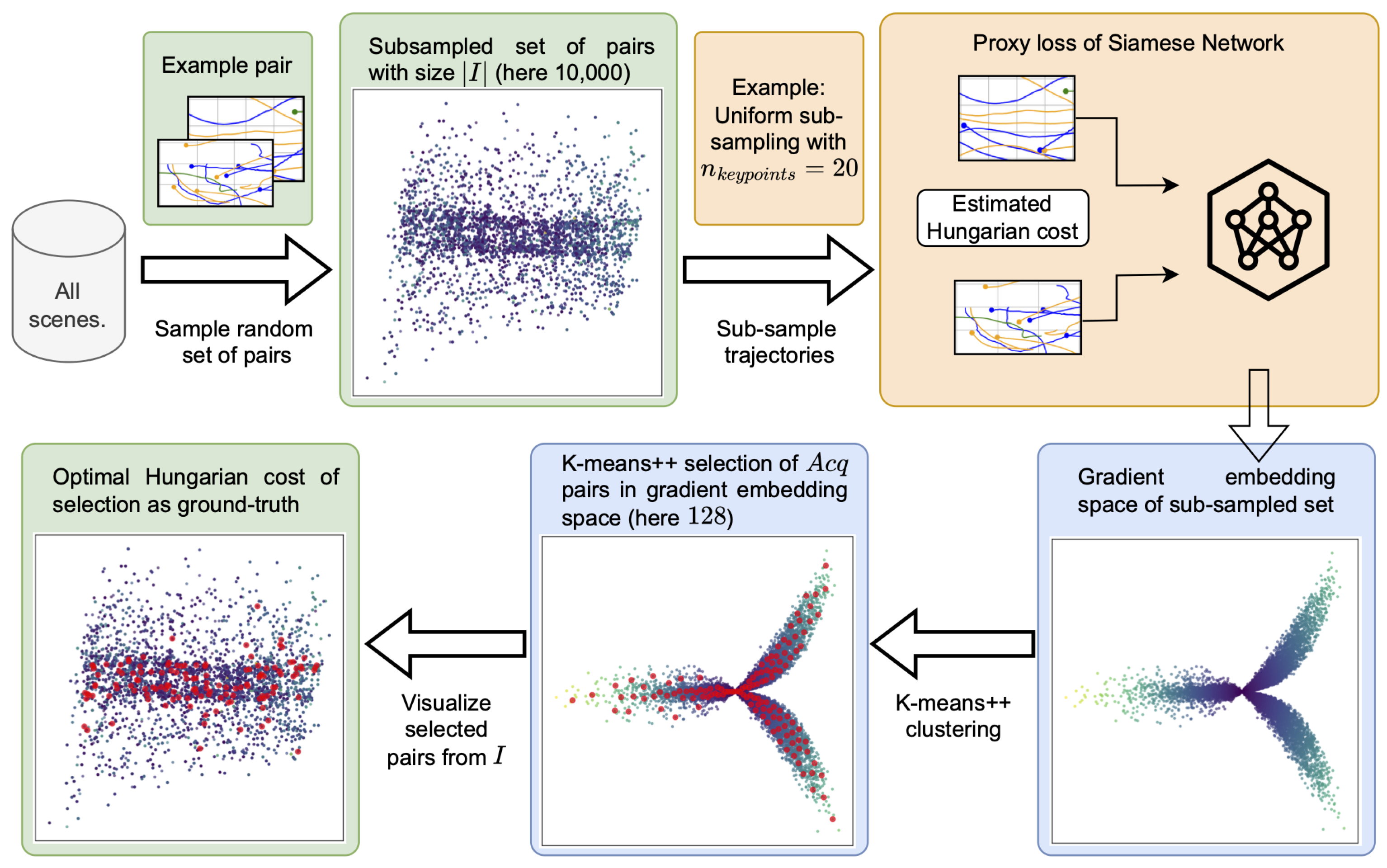

Figure 2 illustrates our proposed method as a simple step-by-step flowchart. Our method initially samples a large set $I$ of pairs from the pool. Then, the active sampler calculates which of these pairs are most suitable for learning. Our method uses gradient embeddings of pairs, generated from cheap-to-compute proxy losses, to select $B = 128$ diverse and uncertain samples. For these 128 pairs, the true Hungarian cost serves as ground truth for training. The remainder of this section formalizes our approach.
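The outer structure of this procedure (draw a candidate subset of pairs, score it, keep a small batch for exact labeling) can be sketched as below. This is a simplified skeleton under stated assumptions: the helper names `draw_candidate_pairs` and `select_batch`, the pool and subset sizes, and the placeholder score are all hypothetical, and a plain top-k ranking stands in for the gradient-based selection that the method actually proposes.

```python
import random


def draw_candidate_pairs(pool_size, subset_size, rng):
    """Draw `subset_size` distinct unordered index pairs from the scene pool."""
    max_pairs = pool_size * (pool_size - 1) // 2
    if subset_size > max_pairs:
        raise ValueError("subset larger than the number of possible pairs")
    pairs = set()
    while len(pairs) < subset_size:
        a, b = rng.sample(range(pool_size), 2)
        pairs.add((min(a, b), max(a, b)))
    return sorted(pairs)


def select_batch(pairs, score_fn, batch_size):
    """Keep the `batch_size` candidate pairs with the highest sampler score."""
    return sorted(pairs, key=score_fn, reverse=True)[:batch_size]


rng = random.Random(0)
candidates = draw_candidate_pairs(pool_size=1_000, subset_size=2_000, rng=rng)
# Placeholder score; a real sampler would use model-based signals instead.
batch = select_batch(candidates, score_fn=lambda p: abs(p[0] - p[1]), batch_size=128)
print(len(candidates), len(batch))
```

Only the pairs in `batch` would then be passed to the expensive exact distance computation and used for a training step.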

In the Active Learning (AL) loop, samplers typically evaluate the “quality” of each sample from the unlabeled pool. In our context, this is not possible due to the combinatorial nature of pairwise comparisons, so sub-sampling of the full dataset is mandatory. From the smaller subset of possible pairs, the samplers then select the most informative instances for “labeling” by the Hungarian algorithm.

In our problem definition, one iteration of the random-sampling epoch executes as follows: The sampler first obtains a subset of scenes from the pool and selects random pairs for annotation. Next, the pairwise distances for these pairs are computed via the Hungarian algorithm, and the model trains on the pairs with associated distances. The epoch concludes once all sampled pairs of scenes from the pool have been evaluated. It is important to note that the model training cannot consider all possible pairs of scenes. In the following, we describe alternative methods that actively select pairs of scenes for training.

(1) Uncertainty-based sampling: Traditional uncertainty-based samplers for Deep Neural Networks select samples based on the entropy of the class probability distribution, e.g., obtained via a Softmax activation function, as in Entropy sampling [10]. We adapt this estimation of predictive uncertainty to the Siamese Network and a pair of samples by simulating Bayesian uncertainty through Monte Carlo (MC) Dropout [26].

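Given an input of scenes $\mathcal{X}_a$ and $\mathcal{X}_b$, and their embeddings $z_a = f(\mathcal{X}_a)$ and $z_b = f(\mathcal{X}_b)$, we apply random Dropout masks at $P$ forward passes during inference to generate stochastic predictions $\hat{d}_1, \dots, \hat{d}_P$ of the pair’s distance. The distribution of the predictions then exhibits the variance

$$\sigma^2 = \frac{1}{P} \sum_{p=1}^{P} \left( \hat{d}_p - \bar{d} \right)^2, \qquad \bar{d} = \frac{1}{P} \sum_{p=1}^{P} \hat{d}_p .$$

Given a set of pairs $I$ from the pool’s subset, we can rank these by their predictive variance to select the samples with the highest variance.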
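A minimal sketch of this ranking is given below. It is a toy under loud assumptions: a single linear layer stands in for the Siamese encoder, dropout is applied to its input features, one mask per pass is shared by both branches, and the names `mc_dropout_variance`, `n_passes`, and `drop_prob` are illustrative rather than taken from the source.

```python
import numpy as np


def mc_dropout_variance(pairs_a, pairs_b, weights, n_passes, drop_prob, rng):
    """Variance of predicted pair distances over stochastic forward passes.

    pairs_a, pairs_b: (num_pairs, features) flattened scenes of each pair.
    weights: (features, emb_dim) toy linear encoder (assumption).
    """
    preds = np.empty((n_passes, pairs_a.shape[0]))
    keep = 1.0 - drop_prob
    for p in range(n_passes):
        # One dropout mask per pass, shared by both Siamese branches.
        mask = rng.random(weights.shape[0]) < keep
        w = weights * mask[:, None] / keep
        preds[p] = np.linalg.norm(pairs_a @ w - pairs_b @ w, axis=1)
    return preds.var(axis=0)


rng = np.random.default_rng(0)
a = rng.normal(size=(256, 40))
b = rng.normal(size=(256, 40))
b[:10] = a[:10]                           # identical pairs: distance 0 in every pass
var = mc_dropout_variance(a, b, rng.normal(size=(40, 8)), 20, 0.2, rng)
selected = np.argsort(var)[::-1][:32]     # 32 pairs with the highest variance
```

The ten identical pairs always yield a predicted distance of zero, so their variance vanishes and they are never selected; pairs whose prediction changes strongly under different masks rank first.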

We can also apply Entropy sampling by computing the entropy of a pair $(\mathcal{X}_a, \mathcal{X}_b)$, assuming the distribution of its predictions to be Gaussian, and selecting the $B$ pairs of the subset $I$ with the highest entropy. These methods were shown to select uncertain samples most efficiently when the selection size $B$ was smaller, but produced redundant selections or focused on rare instances, such as outliers, when the selection size $B$ was larger [25,31].
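For reference, the differential entropy of a Gaussian depends only on its variance. The standard closed form is written out below; the symbol $\sigma^2$ denotes the variance of a pair's stochastic predictions and is a notational assumption here, and the source does not state whether this exact expression is used.

```latex
H\!\left[\mathcal{N}(\mu, \sigma^2)\right] = \tfrac{1}{2} \ln\!\left(2 \pi e \, \sigma^2\right)
```

Since this expression increases monotonically with $\sigma^2$, ranking pairs by Gaussian entropy yields the same order as ranking them by predictive variance under this assumption.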

(2) Representative Sampling: The second type of method, which we adapt to the problem of pairwise representation learning, is the CoreSet sampler [17]. In an initial step, we predict the embeddings of the pairs of scenes $(\mathcal{X}_a, \mathcal{X}_b)$ in the subset $I$ by applying the model $f$ as $z_a = f(\mathcal{X}_a)$ and $z_b = f(\mathcal{X}_b)$, and concatenating the embeddings $[z_a, z_b]$, yielding a vector per pair. Next, we select a representative sample of these embedding vectors. Sener and Savarese [17] apply K-Center greedy clustering with Euclidean distance to find $B$ samples from the subset $I$ that are most representative of the data.

This sampling method was shown to effectively select sets of $B$ samples that are representative of the dataset distribution. However, the selected instances may have low information value and be redundant compared to the already annotated training data, leading to slower convergence [25,30,31].
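The greedy K-Center selection on concatenated pair embeddings can be sketched as follows. This is an illustration under assumptions: the function name `k_center_greedy`, the arbitrary starting index, and the random toy embeddings are hypothetical, and the already labeled data, which the original CoreSet formulation treats as existing centers, is omitted.

```python
import numpy as np


def k_center_greedy(vectors, k, first=0):
    """Greedy K-Center: repeatedly add the point farthest from the chosen set."""
    chosen = [first]
    # Distance from every point to its nearest chosen center so far.
    min_dist = np.linalg.norm(vectors - vectors[first], axis=1)
    while len(chosen) < k:
        nxt = int(np.argmax(min_dist))
        chosen.append(nxt)
        dist_to_new = np.linalg.norm(vectors - vectors[nxt], axis=1)
        min_dist = np.minimum(min_dist, dist_to_new)
    return chosen


rng = np.random.default_rng(0)
emb_a = rng.normal(size=(500, 16))      # toy embeddings of the first scenes
emb_b = rng.normal(size=(500, 16))      # toy embeddings of the second scenes
pair_vectors = np.concatenate([emb_a, emb_b], axis=1)   # one vector per pair
selected = k_center_greedy(pair_vectors, k=32)
```

Each step picks the pair whose embedding is farthest from everything selected so far, which spreads the selection over the embedding space but takes no account of how informative a pair is.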

(3) Pairwise Diverse and Uncertain Gradient (PairDUG): To resolve the issues of uncertainty-based and diversity-based sampling, i.e., the focus on outliers or instances of low information value, several methods seek to combine the concepts [11,30]. In this work, we adapt the gradient-based BADGE [11] to the novel learning task of pairwise metric learning. BADGE leverages gradient signals to select $B$ samples from a subset $I$. It selects uncertain samples, i.e., those with a high gradient magnitude, and those that are diverse, i.e., with gradients in the embedding space that are spread out. BADGE is designed for classification and uses the model’s own predictions to generate proxy labels used for loss calculations. We extend the sampler to pairwise comparisons.

To compute gradients, we would actually require ground-truth distances for pairs $\mathcal{X}_a$ and $\mathcal{X}_b$. However, that would require solving the assignment problem using the Hungarian algorithm, with complexity $O(n^3)$ for $n$ trajectories per scene, which would be practically intractable. We dub this costly variant “PairDUG gt” (gt for ground truth) and use it in our experiments as a performance benchmark for the PairDUG concept.

Instead, we simplify the distance and solve it near-optimally, resulting in “PairDUG fast”. For each pair $(\mathcal{X}_a, \mathcal{X}_b)$, we uniformly subsample time indices $\mathcal{T} \subset \{1, \dots, T\}$, resulting in smaller matrices $\tilde{\mathcal{X}}_a$ and $\tilde{\mathcal{X}}_b$. In a pre-study, we compare different numbers of keypoints $|\mathcal{T}|$ on a subset of pairs for which we calculate ground-truth distances, and choose the number that achieves low Mean Absolute Error (MAE) and low Mean Absolute Percentage Error (MAPE) at a low computational cost.

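Using this approximation, the cost matrix between trajectories $j$ and $k$ of $\mathcal{X}_a$ and $\mathcal{X}_b$ with length $T$ is computed on the subsampled time indices $\mathcal{T}$ as

$$C_{jk} = \Big( \sum_{t \in \mathcal{T}} \big\lVert x_{a,j}^{(t)} - x_{b,k}^{(t)} \big\rVert_2^2 \Big)^{1/2},$$

where $x_{a,j}^{(t)} \in \mathbb{R}^{S}$ is the position of trajectory $j$ of scene $\mathcal{X}_a$ at time $t$. Then, the Hungarian algorithm finds the minimal assignment, whose cost acts as the pairwise distance

$$\tilde{d}(\mathcal{X}_a, \mathcal{X}_b) = \min_{\pi} \sum_{j=1}^{N} C_{j, \pi(j)},$$

where $\pi$ ranges over all permutations of $\{1, \dots, N\}$.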
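The proxy distance on uniformly spaced keypoints can be sketched as below. Several details are assumptions rather than statements from the source: the function names, the array layout `(N, S, T)`, the use of SciPy's `linear_sum_assignment` as the assignment solver, and in particular the optional `rescale` factor $\sqrt{T / K}$ for $K$ keypoints, which is added here only so that the proxy stays on the scale of the full-length distance.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def assignment_distance(scene_a, scene_b):
    """Minimal-assignment cost between two scenes of shape (N, S, T)."""
    diff = scene_a[:, None] - scene_b[None, :]
    cost = np.sqrt((diff ** 2).sum(axis=(2, 3)))   # Euclidean trajectory distances
    rows, cols = linear_sum_assignment(cost)
    return cost[rows, cols].sum()


def proxy_distance(scene_a, scene_b, n_keypoints, rescale=True):
    """Same distance, computed on `n_keypoints` uniformly spaced frames only."""
    t = scene_a.shape[-1]
    idx = np.linspace(0, t - 1, n_keypoints).round().astype(int)
    dist = assignment_distance(scene_a[..., idx], scene_b[..., idx])
    # Assumption: compensate for the shorter time axis (not from the source).
    return dist * np.sqrt(t / n_keypoints) if rescale else dist


rng = np.random.default_rng(0)
# Smooth toy trajectories (random walks), shape (N=11, S=2, T=150).
scene_a = rng.normal(size=(11, 2, 150)).cumsum(axis=-1)
scene_b = rng.normal(size=(11, 2, 150)).cumsum(axis=-1)
exact = assignment_distance(scene_a, scene_b)
approx = proxy_distance(scene_a, scene_b, n_keypoints=15)
print(f"exact={exact:.1f}  proxy={approx:.1f}")
```

With all frames as keypoints the proxy coincides with the exact distance; with fewer keypoints the cost matrix becomes cheaper to build, at the price of an approximation error that depends on how smooth the trajectories are.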

Next, we compute the loss of the Siamese Network $f$ under training as follows. We calculate the Euclidean distance $d$ of the embeddings $z_a$ and $z_b$ of the pair $\mathcal{X}_a$ and $\mathcal{X}_b$ and use the proxy distance $\tilde{d}$ as a stand-in label in place of the real ground-truth cost to compute the gradient approximation of the instance’s loss $\mathcal{L}$ as

$$\frac{\partial \mathcal{L}}{\partial d} = 2 \left( d - \tilde{d} \right).$$

This function is the derivative of the squared error loss. Finally, we calculate the gradient embedding following [11] by taking the derivative of the loss with respect to the parameters $\theta$ of the final (or embedding) layer

$$g = \frac{\partial \mathcal{L}}{\partial \theta}.$$

The resulting gradient embedding vector compactly represents the magnitude and direction of the gradients. It has beneficial properties that we can exploit to find diverse data that the model is uncertain about, i.e., data with a high gradient magnitude in the embedding layer. On the gradient embedding, we apply K-means++ clustering to determine $B$ representative centroids and to select the nearest sample for each centroid [11]. Relying solely on gradient magnitude would result in selections that are vulnerable to gradient fluctuations, common in real applications with noise. The selection of diverse gradient vectors mitigates this instability and maintains robust convergence in learning. These $B$ pairs are the selection of the PairDUG method.
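The gradient embedding and the diversity-aware selection can be sketched for a toy model as follows. The sketch rests on assumptions that are not in the source: the embedding layer is a single shared linear map applied to penultimate features, the loss is the squared error between embedding distance and proxy distance, and selection uses k-means++ seeding in the style of BADGE (each seed is itself a sample, starting from the largest-norm gradient) instead of full clustering followed by a nearest-sample lookup. All function and variable names are hypothetical.

```python
import numpy as np


def gradient_embeddings(h_a, h_b, weight, proxy_dist, eps=1e-12):
    """Per-pair gradient of (d - proxy)^2 w.r.t. a linear embedding layer.

    h_a, h_b: (num_pairs, H) penultimate features of both scenes (assumption).
    weight:   (E, H) shared embedding-layer weights, z = weight @ h.
    """
    delta_h = h_a - h_b
    delta_z = delta_h @ weight.T                   # z_a - z_b
    d = np.linalg.norm(delta_z, axis=1)            # embedding distance
    unit = delta_z / (d[:, None] + eps)            # derivative of d w.r.t. z_a
    scale = 2.0 * (d - proxy_dist)                 # derivative of loss w.r.t. d
    grads = scale[:, None, None] * unit[:, :, None] * delta_h[:, None, :]
    return grads.reshape(len(d), -1)


def kmeanspp_select(vectors, k, rng):
    """k-means++ seeding: D^2-weighted sampling of up to k distinct rows."""
    chosen = [int(np.argmax(np.linalg.norm(vectors, axis=1)))]
    min_sq = ((vectors - vectors[chosen[0]]) ** 2).sum(axis=1)
    while len(chosen) < k:
        total = min_sq.sum()
        if total == 0.0:                           # only duplicates remain
            break
        nxt = int(rng.choice(len(vectors), p=min_sq / total))
        chosen.append(nxt)
        min_sq = np.minimum(min_sq, ((vectors - vectors[nxt]) ** 2).sum(axis=1))
    return chosen


rng = np.random.default_rng(0)
h_a = rng.normal(size=(512, 32))
h_b = rng.normal(size=(512, 32))
weight = rng.normal(size=(8, 32))
proxy = rng.uniform(1.0, 20.0, size=512)           # stand-in proxy distances
g = gradient_embeddings(h_a, h_b, weight, proxy)
batch = kmeanspp_select(g, k=128, rng=rng)
```

Sampling proportionally to the squared distance to the nearest already selected gradient favors vectors that are both large in magnitude and far from each other, which is the combination of uncertainty and diversity described above.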

To elaborate on the error propagation between PairDUG fast and gt, we first determine the error as $\epsilon = \tilde{d} - d^{*}$, where $d^{*}$ is the optimal Hungarian cost without sub-sampling and $\tilde{d}$ is the estimation with sub-sampling. During training, the gradient magnitude used by PairDUG is calculated via Equation (6), from which follows that the approximation error of the gradient is bounded by a term proportional to $|\epsilon|$, i.e., to the distance approximation error. Furthermore, assuming that $\epsilon$ is approximately zero mean and its magnitude decays with a growing number of keypoints, $\epsilon$ does not distort the selection that PairDUG fast performs. In addition, K-means++ clustering is invariant to uniform translations in gradient space, and only relative magnitude errors affect PairDUG fast’s selections.

We quantify the empirical distribution of $\epsilon$ for Uniform sub-sampling in Section 7.4.1, which reports the Mean Absolute Percentage Error and the correlation for basketball and for American football (using 1000 random trajectory pairs). According to these measurements, the gradient’s bias is negligible compared with natural gradient variance within a batch; see Section 7.2 for an analysis of gradients’ variance within a batch for both variants PairDUG fast and gt.

The computational complexity of the proposed method is discussed in Appendix A.

8. Discussion

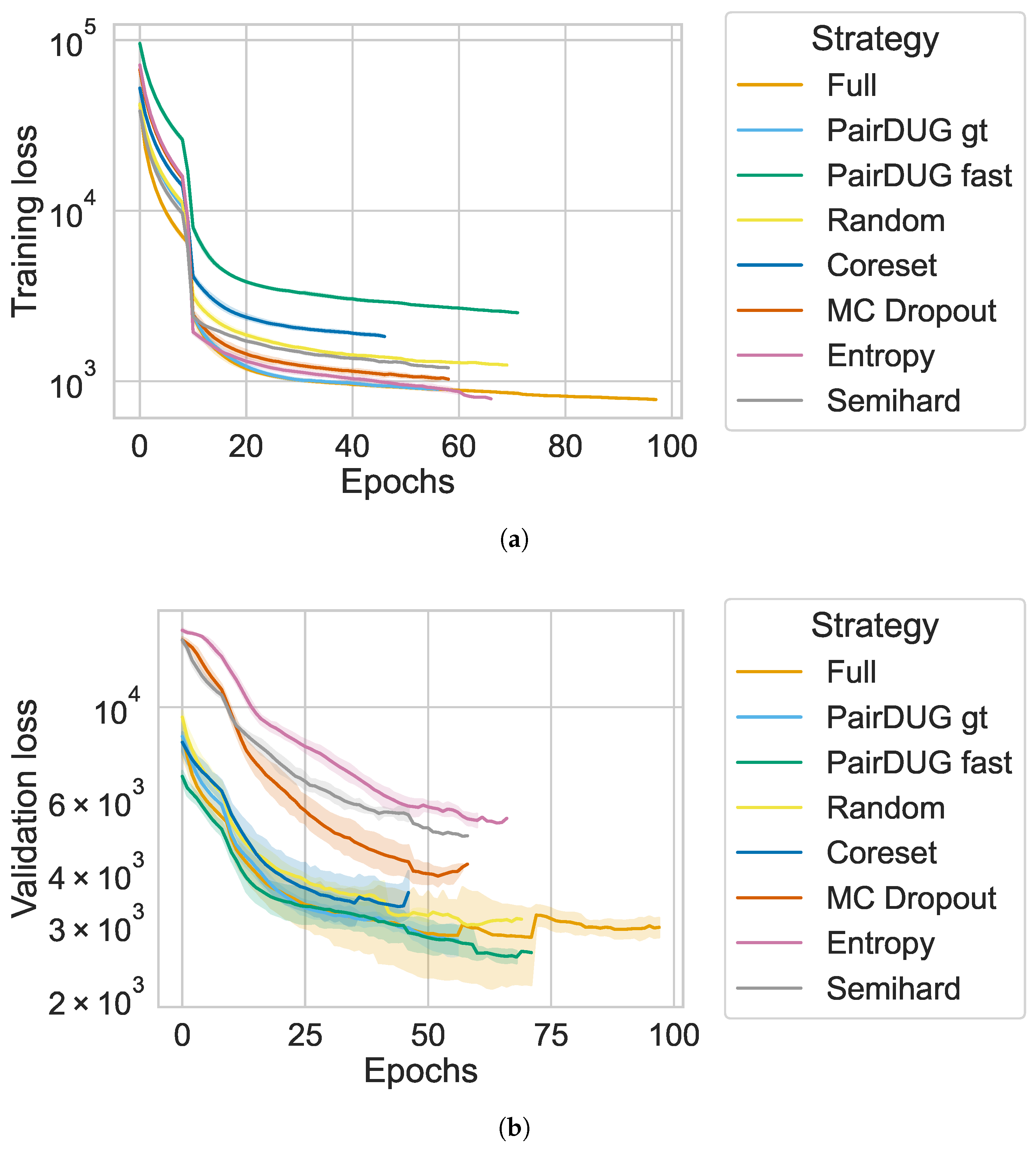

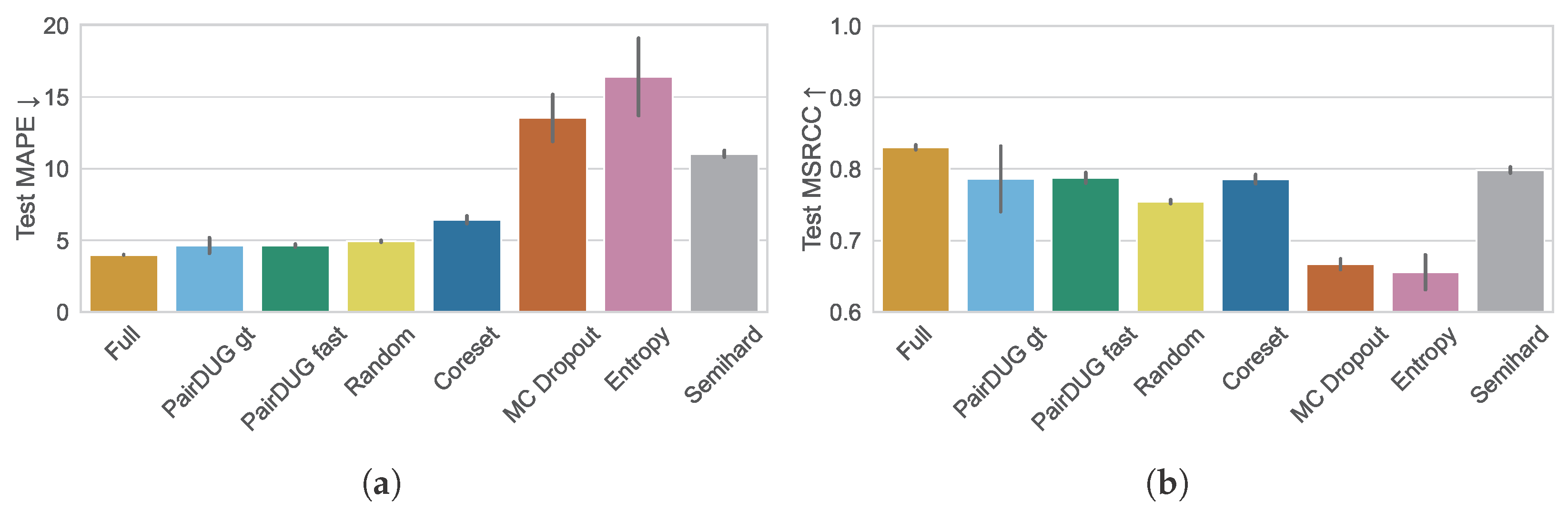

RQ1: Can the required compute time for training a Siamese Network be reduced while maintaining similar retrieval quality?

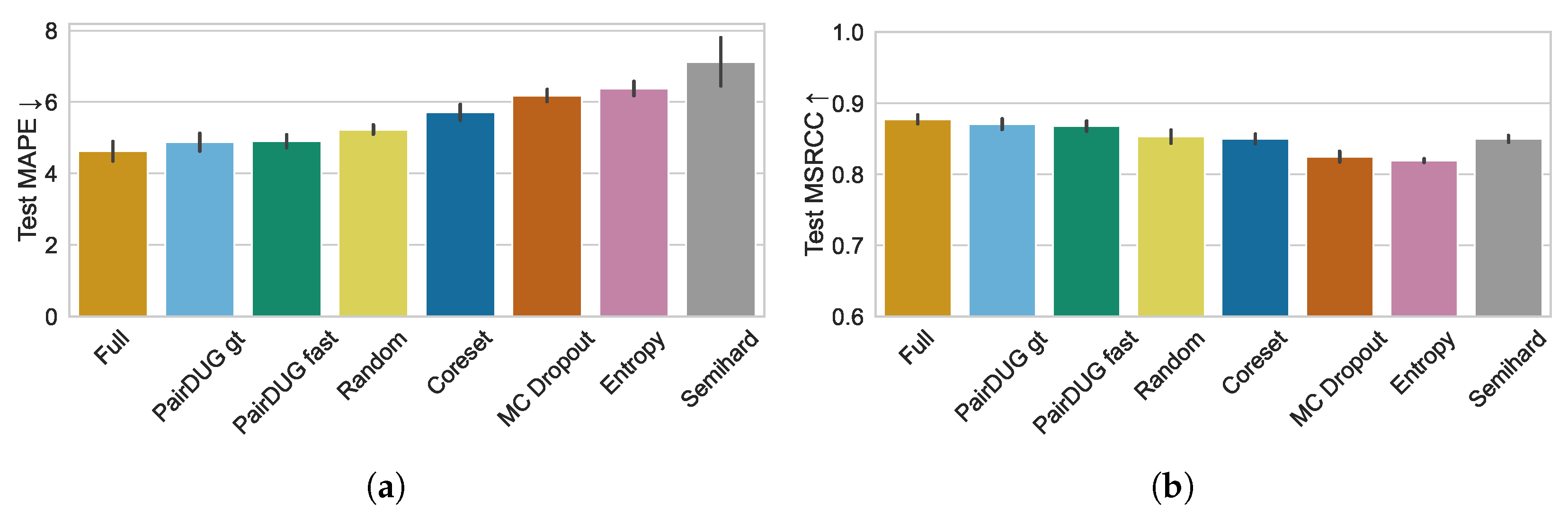

Our evaluation shows that reducing training time is possible. In fact, PairDUG fast cuts training time roughly in half while maintaining high retrieval quality, as shown by MAPE and MSCRR. This property is shown for different pruning rates of the training dataset in the ablation studies, where we evaluate different subset sizes. In contrast, other methods entail significant trade-offs. There may be faster sampling methods. However, they produce worse representations, leading to higher MAPE and lower retrieval quality. In fact, random sampling remains a strong baseline that other samplers besides PairDUG cannot consistently beat.

RQ2: Does active sampling retain a higher retrieval quality compared to other baselines?

Our experiments confirm that active samplers can outperform random sampling in maintaining higher retrieval quality. However, the method must be carefully designed. Our proposed PairDUG sampler combines uncertain and diverse gradients [11] and thus achieves the best scores among all samplers. In comparison, only CoreSet [17] sometimes beats the random baseline by selecting diverse batches to train the Siamese Network. Uncertainty-based methods such as MC Dropout and Entropy fail to beat the semi-hard sampling method and are not suitable active samplers for the sports scene retrieval application.

RQ3: What explanations for the active samplers’ effects on learning are there?

We investigate the batch composition with respect to its gradient signals that are crucial for learning. Our PairDUG samplers specifically construct batches with high gradient magnitudes of diverse nature. Furthermore, the gradient signals are consistent, which enables faster training. The gradients also represent higher information value because the Siamese Network requires fewer samples. In contrast, a randomly sampled dataset has less informative samples and more noisy gradients with regard to consistent signals, which are less well suited for learning from fewer samples. Similarly to our method, but to a lesser extent, Coreset benefits from diverse sampling, but has a lower Signal-to-Noise ratio, i.e., worse gradient consistency, resulting in worse retrieval quality.

9. Conclusions

Search and retrieval of interesting plays from large datasets of unstructured trajectories is a key problem for sports analytics and other fields with large amounts of positional data. The usual training of Siamese Networks to learn a lower-dimensional representation is costly due to the combinatorial nature of pairwise comparisons and thus employs subsampling that sacrifices quality for speed.

This work adapts and extends methods that select an informative subset from the Active Learning literature to reduce the combinatorial complexity of pairwise similarity learning, such as uncertainty-, diversity-, and gradient-based samplers.

Our proposed PairDUG fast sampler retains the retrieval quality of full training but cuts training time in half by sampling diverse and informative pairs using the Neural Network’s gradient signals, thereby beating random subsampling and other heuristics by a large margin. We analyze the PairDUG fast variant’s estimation error due to its keypoint sub-sampling to determine an error bound, which we show to be practically negligible compared with natural gradient variance within a batch. In addition, the experimental results confirm that the fast variant performs as well as the ground-truth version. Furthermore, we analyze the gradient quality of the samples selected by both variants relative to the baselines and demonstrate their high magnitude, diversity, and stability.

Our results show good generalization across the large-scale sports datasets, basketball and American football, and robustness to their hyperparameters. Future work may transfer the sampler to other datasets and domains, as well as to other data mining challenges beyond pairwise distance learning.

Future avenues of research may extend PairDUG with optimized implementations that better utilize GPU-parallelism or make use of alternatives to the Hungarian algorithm, such as the Jonker–Volgenant or Auction algorithms, to increase training efficiency, or develop end-to-end data pruning techniques.