GMG-LDefmamba-YOLO: An Improved YOLOv11 Algorithm Based on Gear-Shaped Convolution and a Linear-Deformable Mamba Model for Small Object Detection in UAV Images

Abstract

1. Introduction

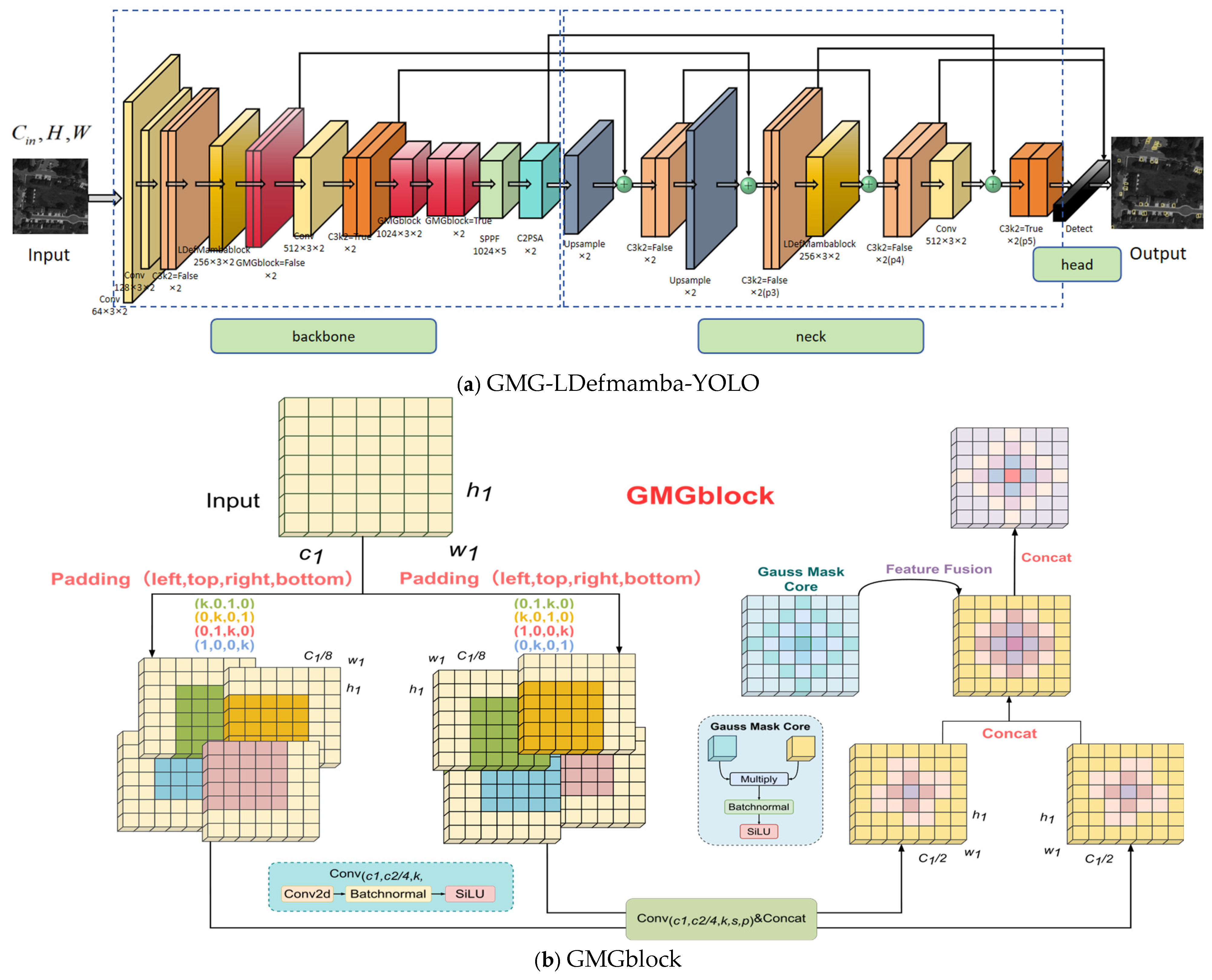

- The Gaussian mask gear convolution module (GMGblock) is proposed. It extracts direction-sensitive features through mirror-symmetric convolutional kernel branches with eight-way padding, concatenates the branch outputs to form a symmetric, gear-shaped receptive field, and applies a dynamically modulated Gaussian mask that strengthens feature extraction at the centers of small targets while suppressing complex background interference.
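
The two mechanisms named in the GMGblock description can be illustrated with a small pure-Python sketch: a gear-shaped receptive field assembled from mirrored strip-kernel branches, and a Gaussian mask that emphasizes the center of a feature map. This is not the authors' implementation. The isotropic Gaussian with a fixed `sigma` (rather than a dynamically modulated one) and the branch offsets in the hypothetical `gear_footprint` helper are assumptions chosen only to visualize the geometry; a real module would apply learned 1 × k and k × 1 convolutions on each branch and concatenate their outputs.

```python
import math


def gaussian_mask(h, w, sigma):
    """Isotropic 2D Gaussian over an h x w map, equal to 1.0 at the centre (assumed form)."""
    cy, cx = (h - 1) / 2, (w - 1) / 2
    return [
        [math.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (2 * sigma**2)) for x in range(w)]
        for y in range(h)
    ]


def apply_mask(feature, mask):
    """Element-wise modulation: central responses are kept, peripheral ones attenuated."""
    return [[f * m for f, m in zip(f_row, m_row)] for f_row, m_row in zip(feature, mask)]


def gear_footprint(k=3):
    """Offsets (dy, dx) covered by eight hypothetical strip-kernel branches.

    Assumed layout: in each quadrant, one horizontal 1 x k strip and one vertical
    k x 1 strip start next to the centre and extend outward; mirroring over both
    axes yields eight branches whose union is symmetric and gear-like.
    """
    cells = {(0, 0)}  # the centre pixel itself (assumption)
    for sy in (-1, 1):
        for sx in (-1, 1):
            cells |= {(sy, sx * i) for i in range(k)}  # horizontal strip in row sy
            cells |= {(sy * i, sx) for i in range(k)}  # vertical strip in column sx
    return cells


def render(cells):
    """ASCII view of a footprint: X = sampled offset, . = not sampled."""
    r = max(max(abs(y), abs(x)) for y, x in cells)
    return "\n".join(
        "".join("X" if (y, x) in cells else "." for x in range(-r, r + 1))
        for y in range(-r, r + 1)
    )


if __name__ == "__main__":
    print(render(gear_footprint(3)))
    # .X.X.
    # XXXXX
    # .XXX.
    # XXXXX
    # .X.X.
```

Multiplying a feature map by `gaussian_mask` leaves the center unchanged and down-weights the border, which is the intuition behind suppressing background around a small target.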

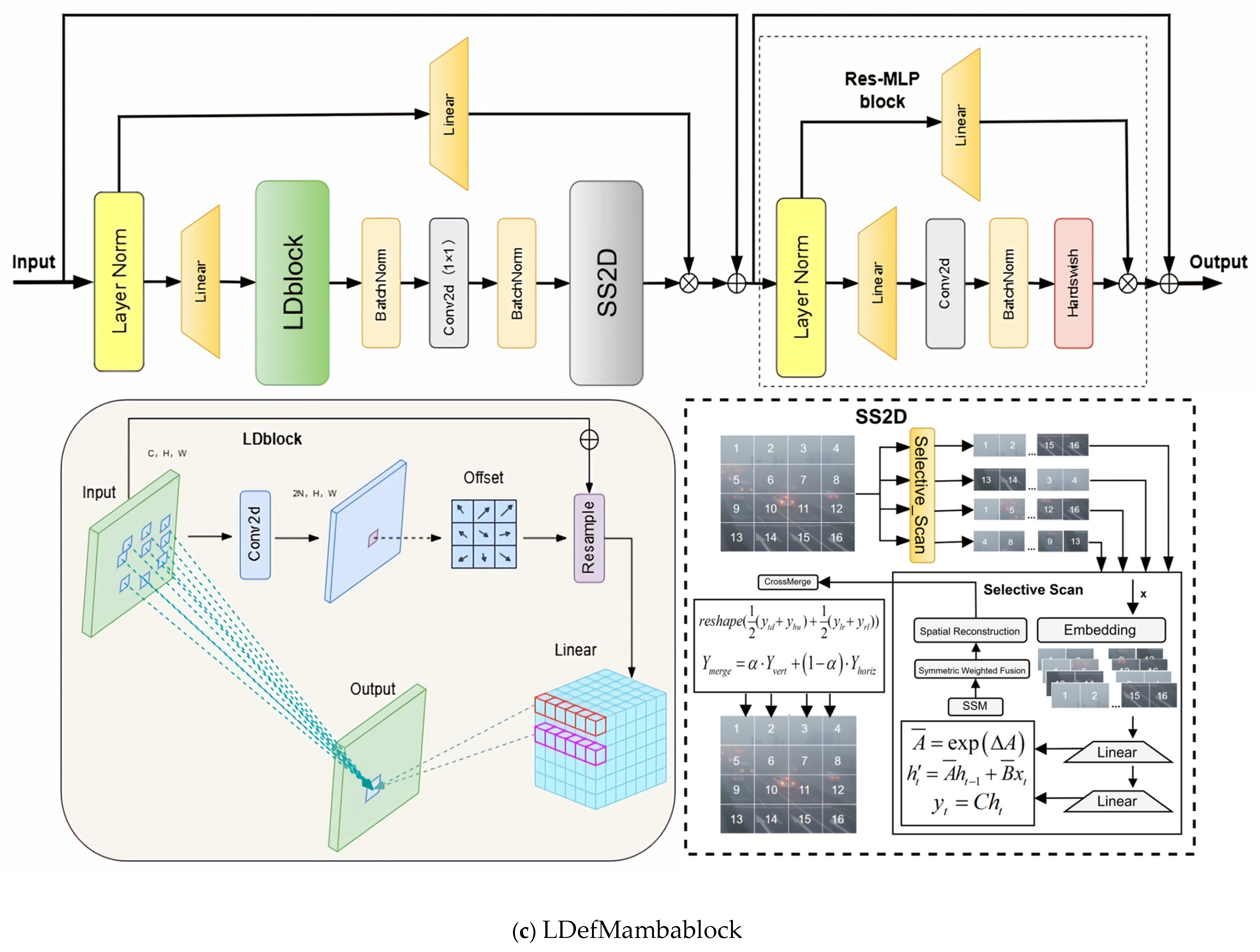

- The linear deformable Mamba block (LDefMambablock) is designed, integrating linear deformable sampling (LDblock), the two-dimensional selective scan module (SS2D), and a residual gated MLP component. It combines the flexible local feature capture of linear deformable convolution with the efficient global dependency modeling of the Mamba architecture, adapting dynamically to the spatial distribution and scale variation of targets. The gating mechanism improves computational efficiency while preserving accuracy, making the block suitable for the limited computing power of edge devices.
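
The LDblock builds on linear deformable convolution (LDConv, Zhang et al.), whose kernel uses an arbitrary number N of sampling points instead of a square k × k grid; each point is displaced by a learned fractional offset and read by bilinear interpolation. The sketch below shows only this sampling step, under stated assumptions: the row-by-row initial layout is modeled on the LDConv description, the offsets are passed in as constants instead of being predicted by a convolution, and the final weighted sum over the sampled values is omitted. All function names are hypothetical.

```python
import math


def initial_points(n):
    """Initial (row, col) positions for n sampling points, laid out row by row.

    Rows hold round(sqrt(n)) points; any remainder goes into one extra, shorter row.
    """
    base = round(math.sqrt(n))
    rows, extra = divmod(n, base)
    points = [(r, c) for r in range(rows) for c in range(base)]
    points += [(rows, c) for c in range(extra)]
    return points


def bilinear(feat, y, x):
    """Bilinearly interpolate a 2D list at fractional (y, x); zero outside the map."""
    h, w = len(feat), len(feat[0])
    y0, x0 = math.floor(y), math.floor(x)
    total = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                total += (1 - abs(y - yy)) * (1 - abs(x - xx)) * feat[yy][xx]
    return total


def deformable_sample(feat, anchor, offsets):
    """One value per sampling point: anchor + initial position + offset, read bilinearly."""
    ay, ax = anchor
    points = initial_points(len(offsets))
    return [
        bilinear(feat, ay + py + dy, ax + px + dx)
        for (py, px), (dy, dx) in zip(points, offsets)
    ]
```

With all offsets at zero the sampler reads a fixed grid; nonzero offsets let the N points drift toward an elongated or irregular target, which is the flexibility the bullet above attributes to linear deformable sampling.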

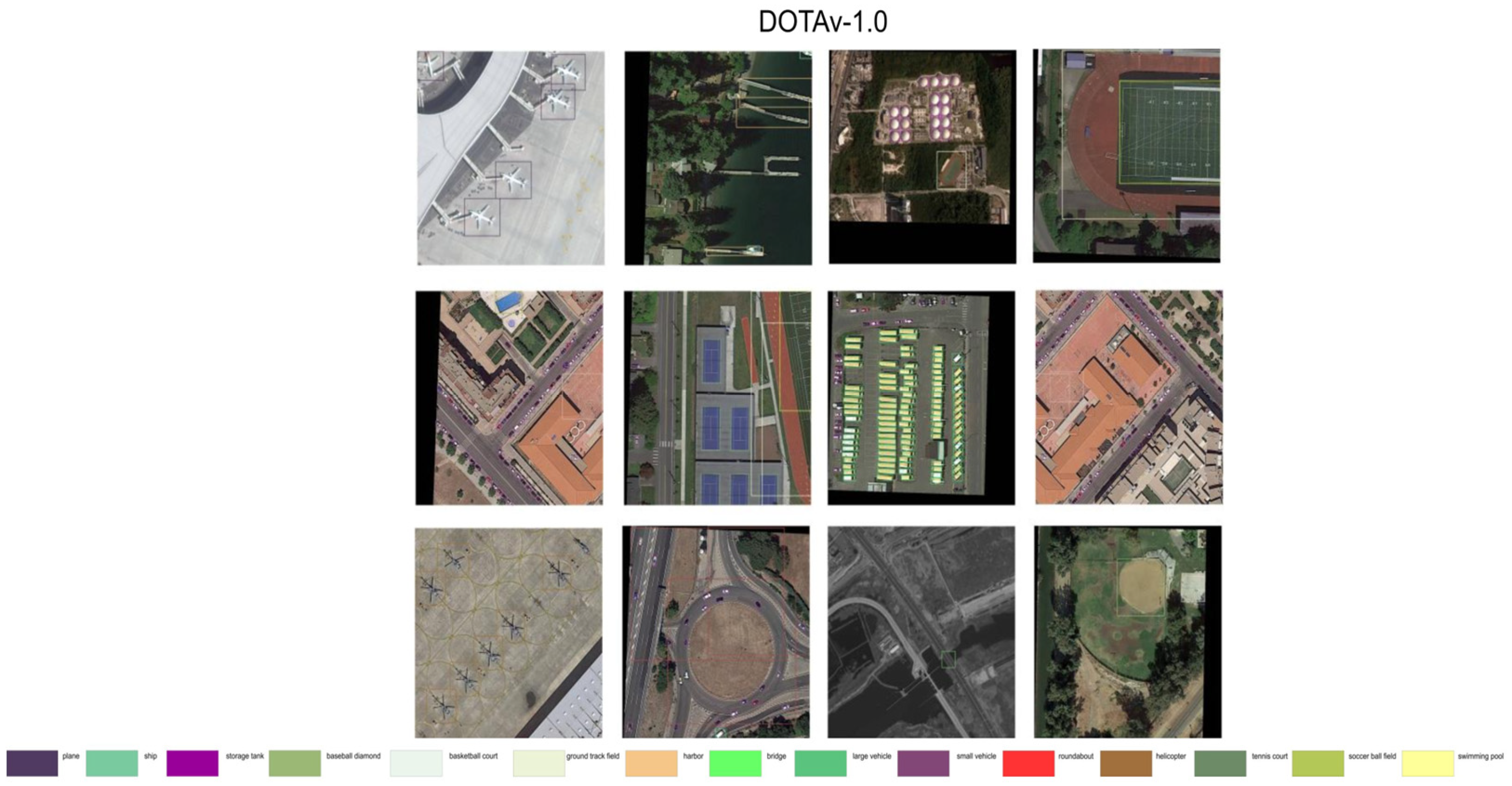

- The proposed GMG-LDefmamba-YOLO architecture achieves state-of-the-art performance in small-target detection for remote sensing imagery, demonstrating excellent detection accuracy and inference efficiency on the DOTA-v1.0, VEDAI, and USOD datasets.

2. Related Works

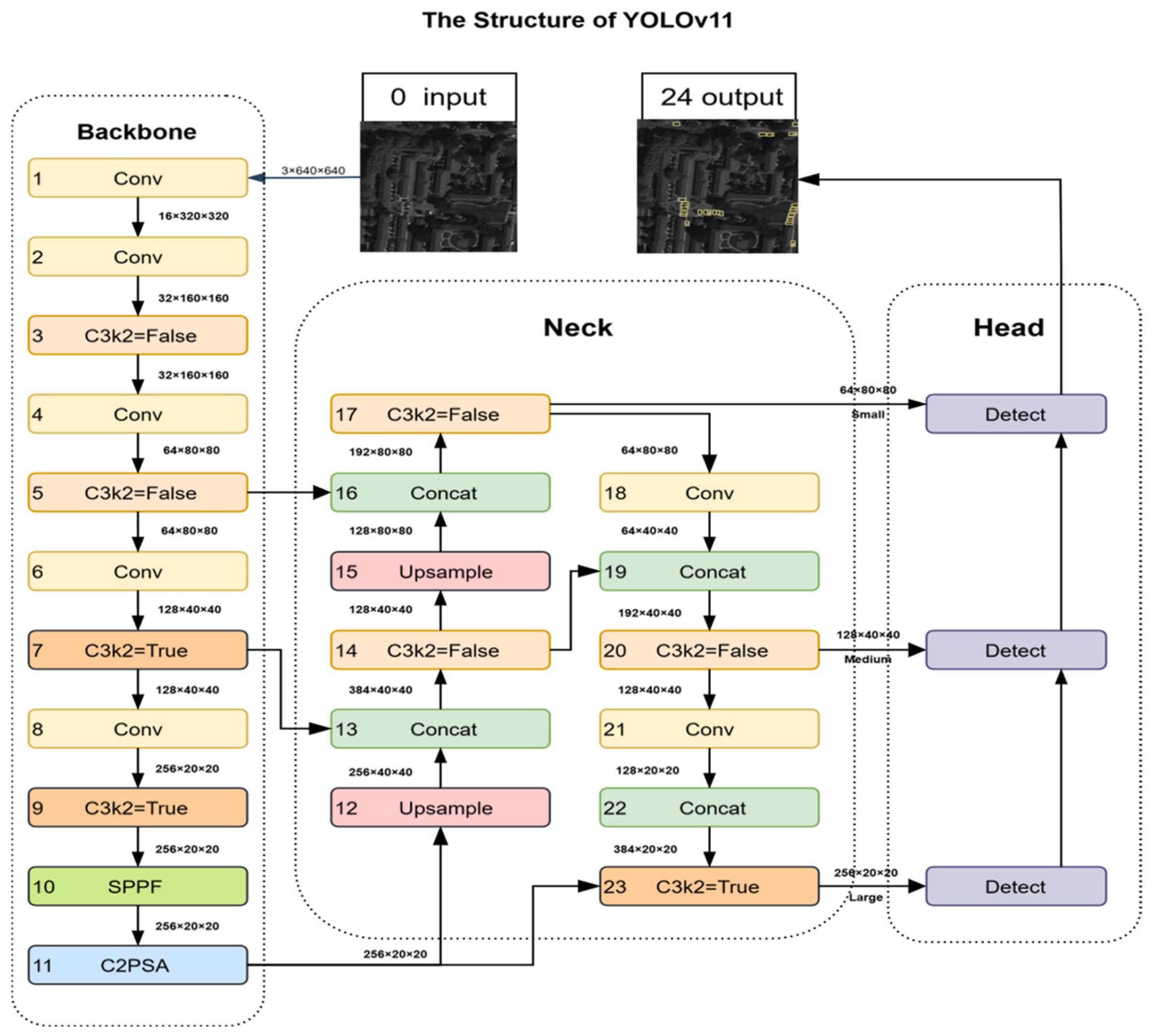

2.1. YOLOv11

2.2. State Space Models

2.3. Deformable Convolution

3. Method

3.1. Overview of GMG-LDefmamba-YOLO

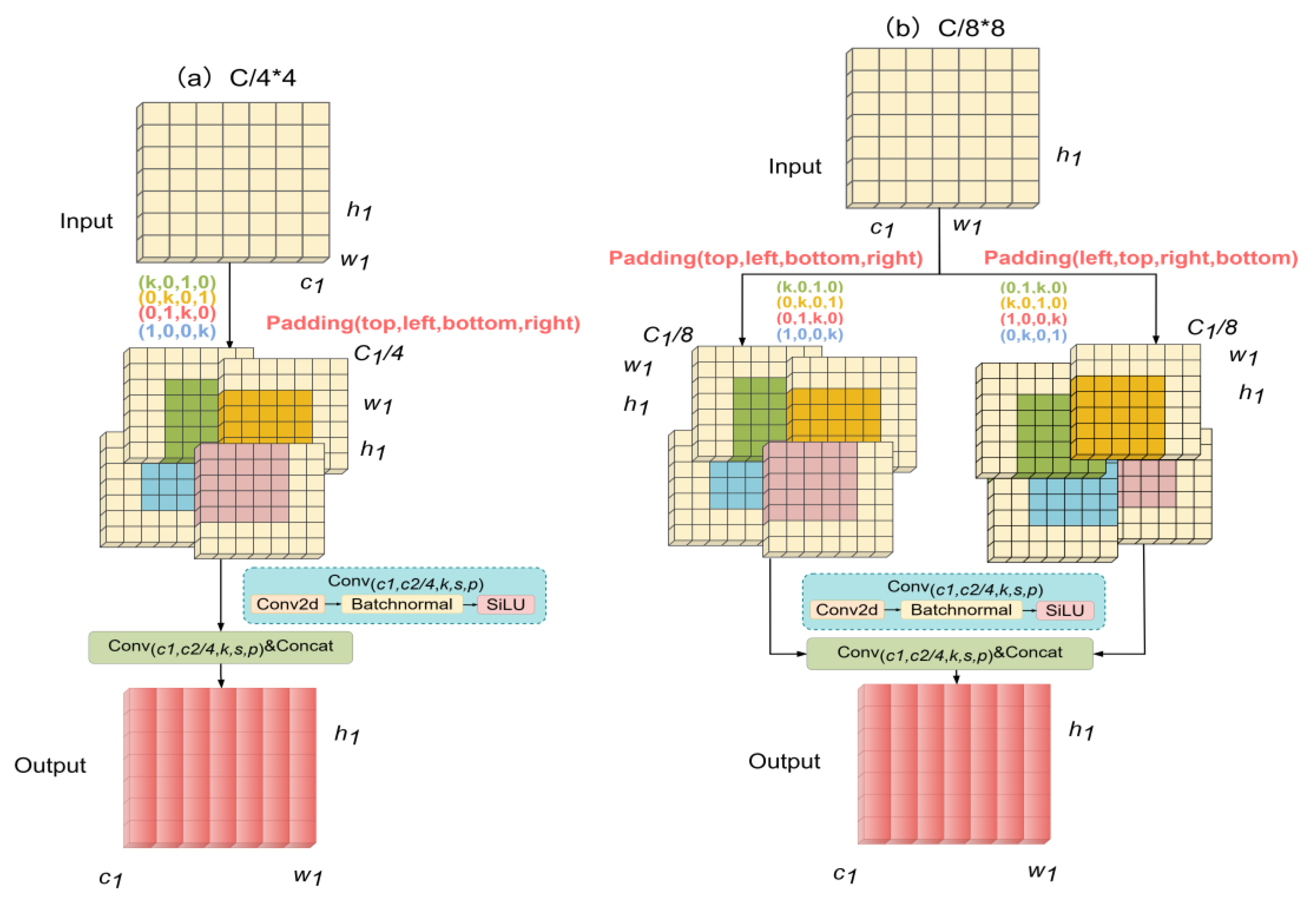

3.2. Gaussian Mask Gear Convolution GMGblock

3.3. Linear Deformable Mamba Block LDefMambablock

3.3.1. LDblock

3.3.2. SS2D Module
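
SS2D follows the two-dimensional selective scan introduced with VMamba (Liu et al.): the feature map is unfolded into 1D sequences along four routes (row-major, column-major, and their reverses), each sequence is processed by a state space recurrence in linear time, and the four outputs are merged back per pixel. The sketch below is a deliberately simplified, non-selective version. The scalar state and the fixed coefficients `a`, `b`, `c` are assumptions standing in for Mamba's input-dependent parameters, and merging by summation is used here for simplicity.

```python
def ssm_scan(seq, a=0.5, b=1.0, c=1.0):
    """Scalar linear state space recurrence: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t."""
    h, out = 0.0, []
    for x in seq:
        h = a * h + b * x
        out.append(c * h)
    return out


def ss2d(grid, **params):
    """Scan an H x W grid along four routes, run the SSM on each, and sum per pixel."""
    h, w = len(grid), len(grid[0])
    row_major = [(i, j) for i in range(h) for j in range(w)]
    col_major = [(i, j) for j in range(w) for i in range(h)]
    routes = [row_major, row_major[::-1], col_major, col_major[::-1]]
    merged = [[0.0] * w for _ in range(h)]
    for route in routes:
        ys = ssm_scan([grid[i][j] for i, j in route], **params)
        for (i, j), y in zip(route, ys):  # scatter each route's output back to its pixel
            merged[i][j] += y
    return merged
```

Because every route visits all pixels, each output position receives decayed context from the whole map, while the cost stays linear in the number of pixels.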

3.3.3. Res-MLP Block

4. Experiments

4.1. Dataset

4.2. Implementation Details and Evaluation Metrics

4.3. Ablation Study

4.3.1. Analysis of the GMGblock on Branch Design and Gaussian Mask

4.3.2. Analysis of the GMGblock on Kernel Sizes

4.3.3. Analysis of the LDefMambablock on Necessity of Each Component

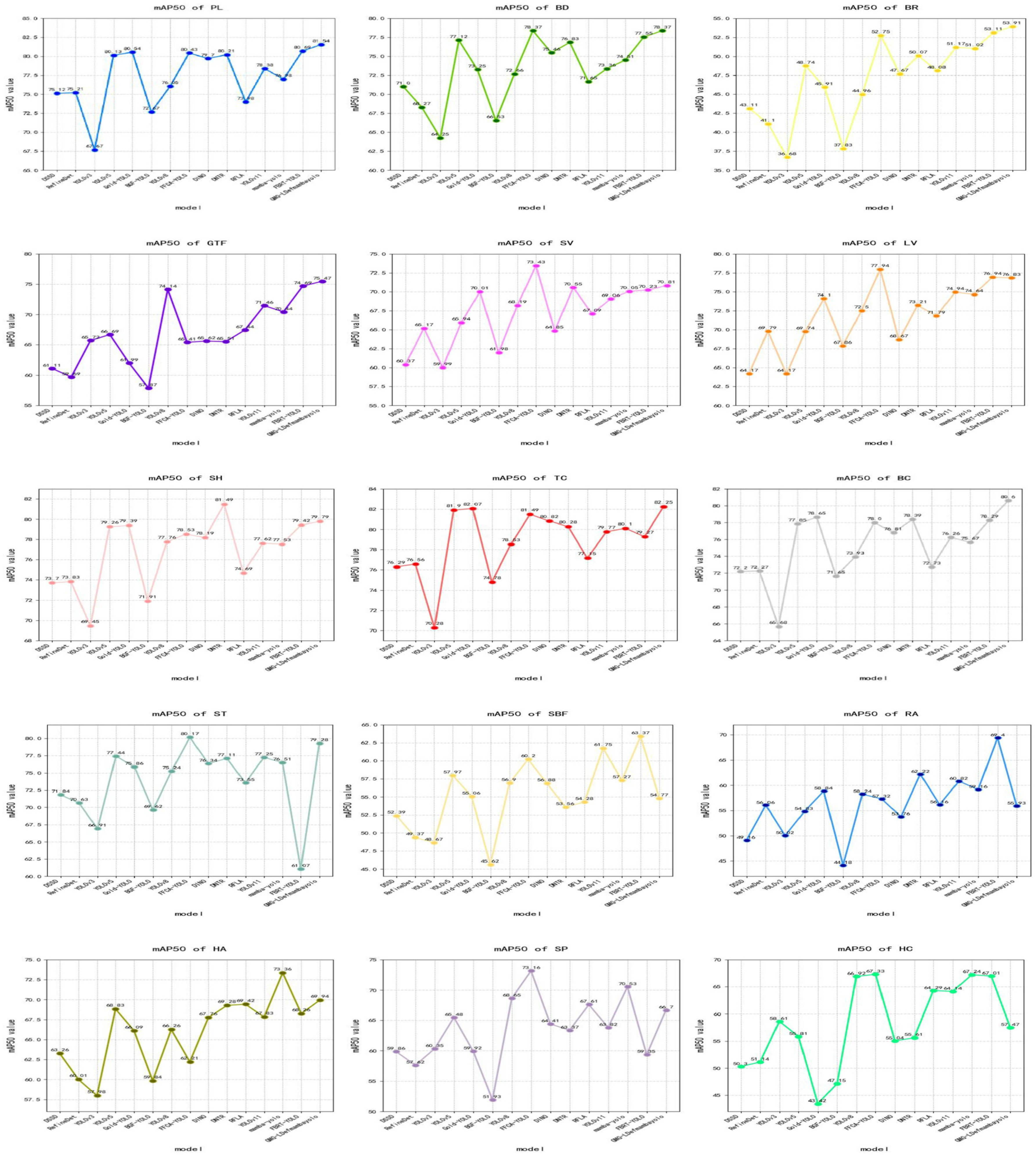

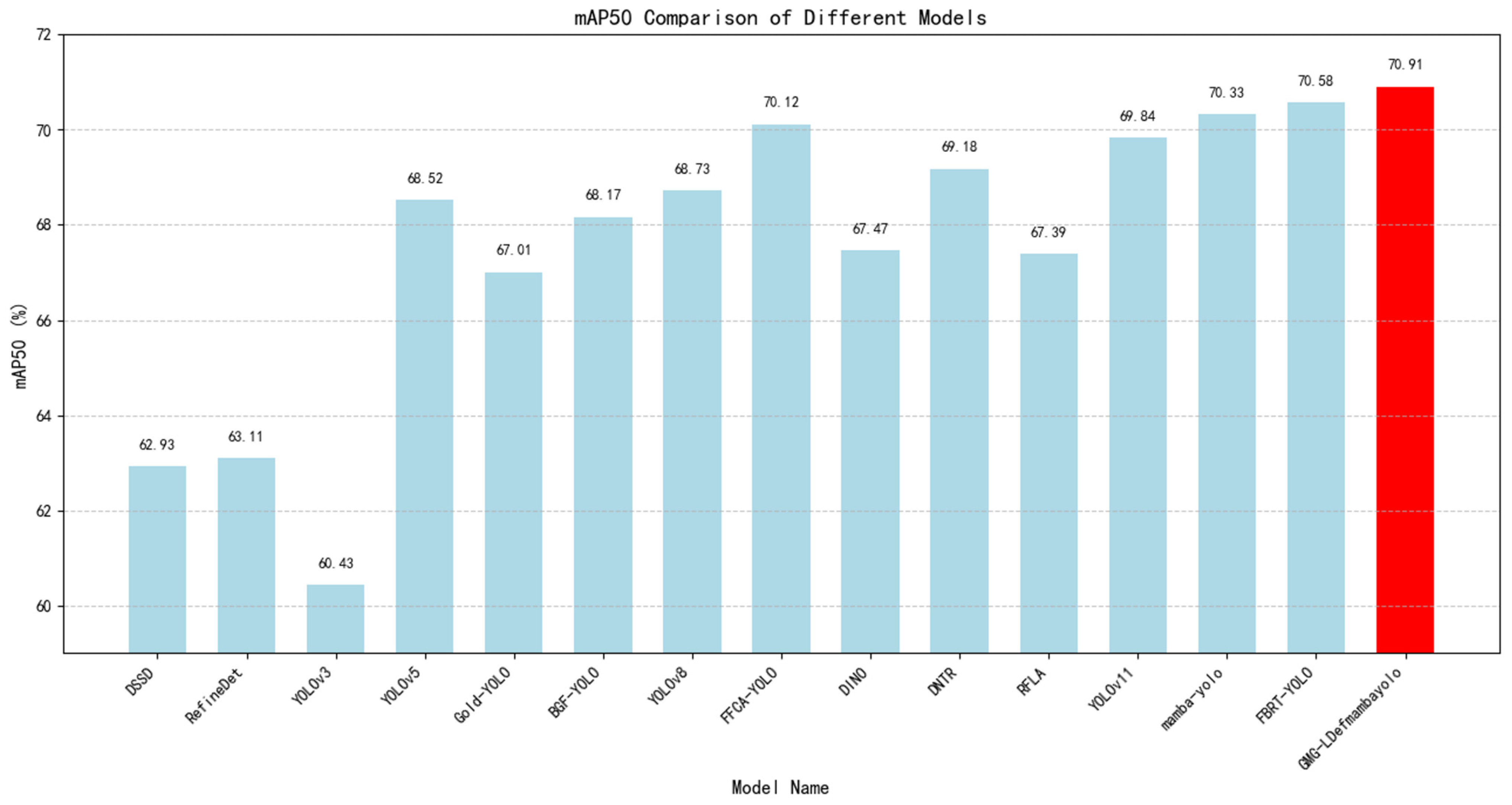

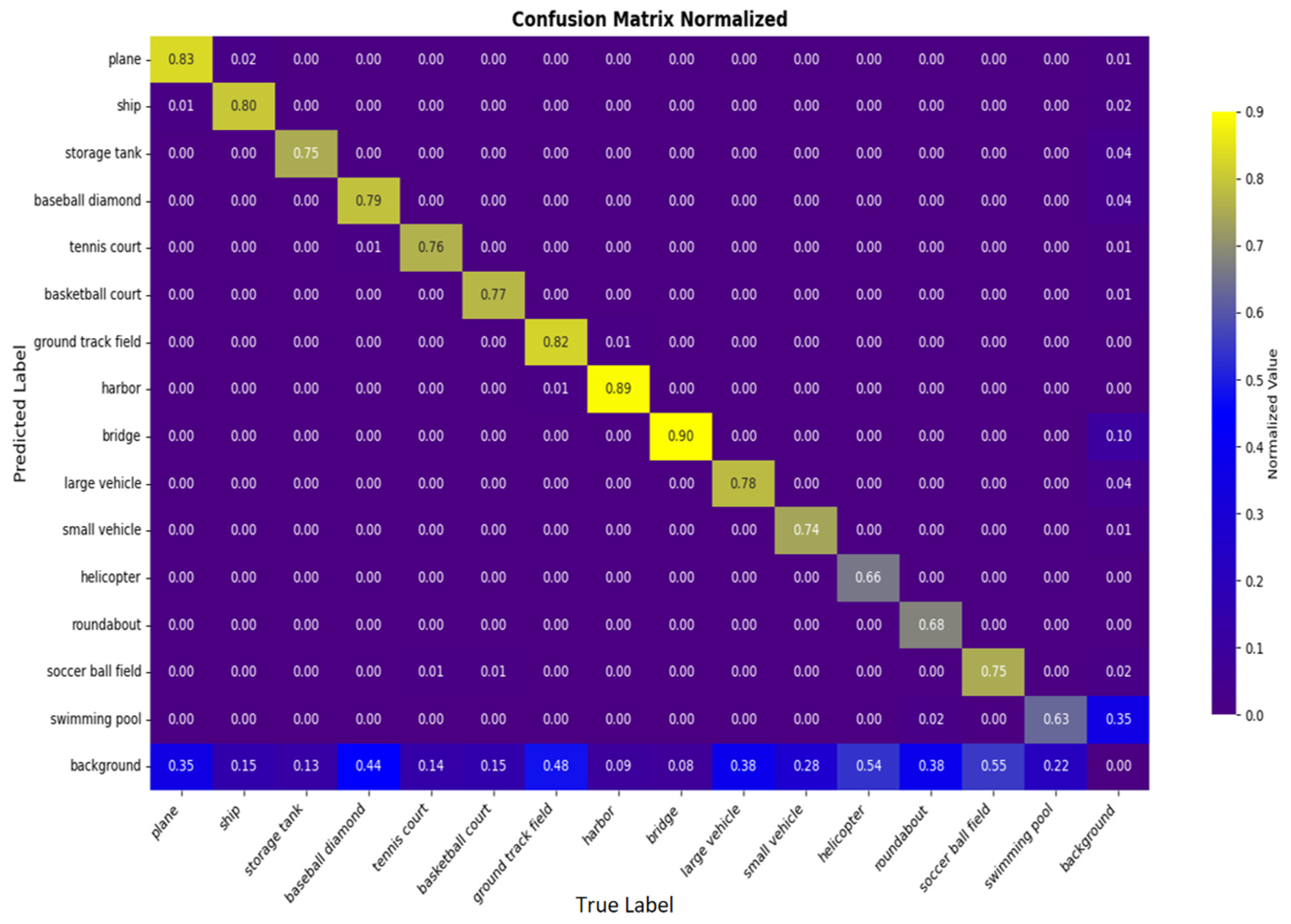

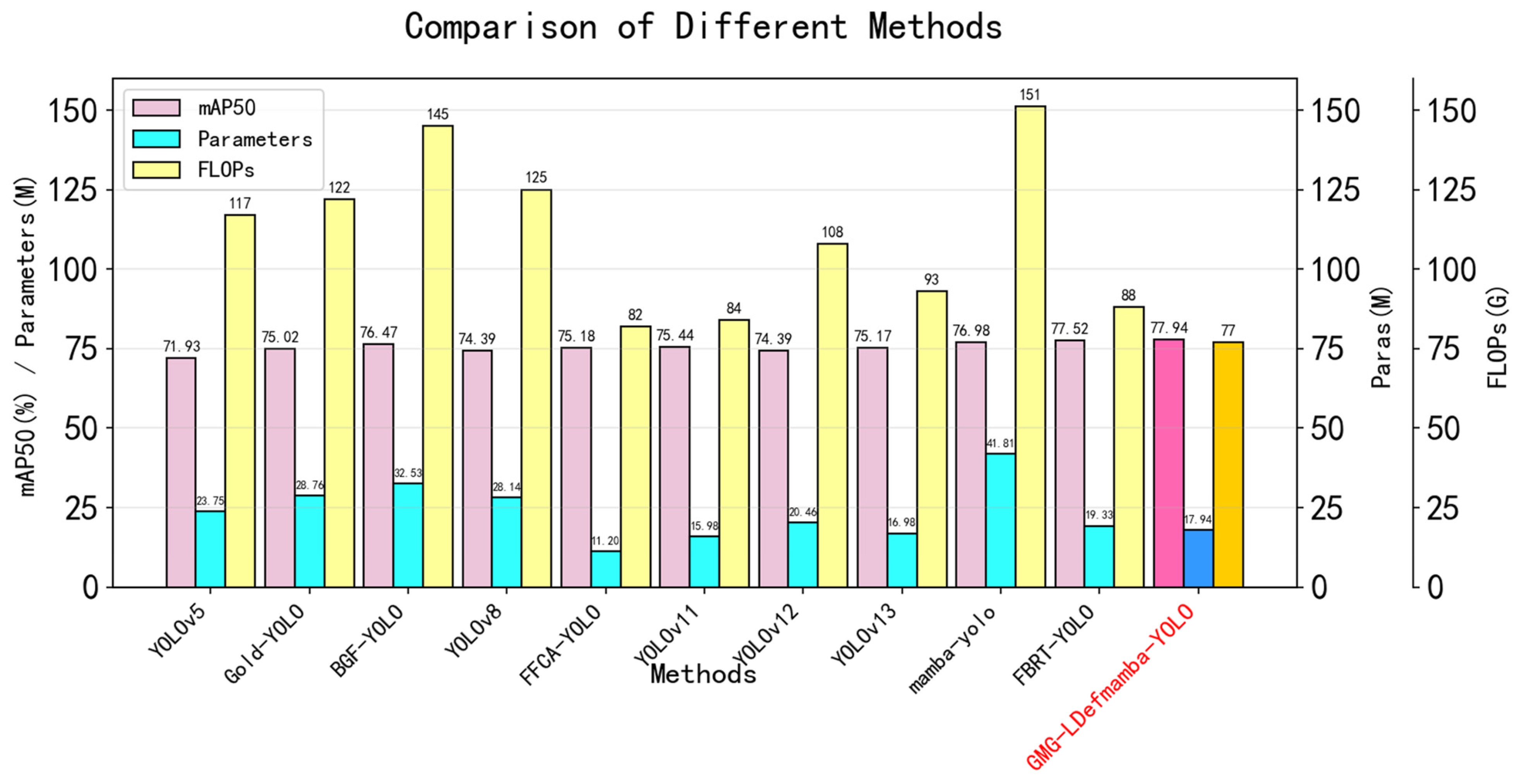

4.4. Comparisons with Previous Methods

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Li, W.; Chen, Y.; Hu, K.; Zhu, J. Oriented reppoints for aerial object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1829–1838.

- Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Vehicle detection from UAV imagery with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6047–6067.

- Zheng, Z.; Yuan, J.; Yao, W.; Kwan, P.; Yao, H.; Liu, Q.; Guo, L. Fusion of UAV-acquired visible images and multispectral data by applying machine-learning methods in crop classification. Agronomy 2024, 14, 2670.

- Monteiro, J.G.; Jiménez, J.L.; Gizzi, F.; Přikryl, P.; Lefcheck, J.S.; Santos, R.S.; Canning-Clode, J. Novel Approach to Enhance Coastal Habitat and Biotope Mapping with Drone Aerial Imagery Analysis. Sci. Rep. 2021, 11, 574.

- Kyrkou, C.; Theocharides, T. EmergencyNet: Efficient Aerial Image Classification for Drone-Based Emergency Monitoring Using Atrous Convolutional Feature Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1687–1699.

- Zong, H.; Pu, H.; Zhang, H.; Wang, X.; Zhong, Z.; Jiao, Z. Small Object Detection in UAV Image Based on Slicing Aided Module. In Proceedings of the 2022 IEEE 4th International Conference on Power, Intelligent Computing and Systems (ICPICS), Shenyang, China, 29–31 July 2022.

- Zhao, X.; Xia, Y.; Zhang, W.; Zheng, C.; Zhang, Z. YOLO-ViT-Based Method for Unmanned Aerial Vehicle Infrared Vehicle Target Detection. Remote Sens. 2023, 15, 3778.

- Chen, J.; Wang, G.; Luo, L.; Gong, W.; Cheng, Z. Building Area Estimation in Drone Aerial Images Based on Mask R-CNN. IEEE Geosci. Remote Sens. Lett. 2021, 18, 891–894.

- Hmidani, O.; Ismaili Alaoui, E.M. A Comprehensive Survey of the R-CNN Family for Object Detection. In Proceedings of the 2022 5th International Conference on Advanced Communication Technologies and Networking (CommNet), Marrakech, Morocco, 12–14 December 2022; pp. 1–6.

- Xu, J.; Ren, H.; Cai, S.; Zhang, X. An Improved Faster R-CNN Algorithm for Assisted Detection of Lung Nodules. Comput. Biol. Med. 2023, 153, 106470.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Mehta, S.; Rastegari, M. MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer. arXiv 2021, arXiv:2110.02178.

- Chen, Z.; Zhong, F.; Luo, Q.; Zhang, X.; Zheng, Y. EdgeViT: Efficient visual modeling for edge computing. In Proceedings of the International Conference on Wireless Algorithms, Systems, and Applications, Nanjing, China, 25–27 June 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 393–405.

- Li, Y.; Hu, J.; Wen, Y.; Evangelidis, G.; Salahi, K.; Wang, Y.; Tulyakov, S.; Ren, J. Rethinking Vision Transformers for MobileNet Size and Speed. In Proceedings of the IEEE International Conference on Computer Vision, Paris, France, 2–3 October 2023.

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

- Yan, L.; He, Z.; Zhang, Z.; Xie, G. LS-MambaNet: Integrating Large Strip Convolution and Mamba Network for Remote Sensing Object Detection. Remote Sens. 2025, 17, 1721.

- Wang, Z.; Li, C.; Xu, H.; Zhu, X.; Li, H. Mamba YOLO: A Simple Baseline for Object Detection with State Space Model. Proc. AAAI Conf. Artif. Intell. 2025, 39, 8205–8213.

- Yang, J.; Liu, S.; Wu, J.; Su, X.; Hai, N.; Huang, X. Pinwheel-shaped Convolution and Scale-based Dynamic Loss for Infrared Small Target Detection. Proc. AAAI Conf. Artif. Intell. 2025, 39, 9202–9210.

- Xiong, Y.; Li, Z.; Chen, Y.; Wang, F.; Zhu, X.; Luo, J.; Dai, J. Efficient deformable convnets: Rethinking dynamic and sparse operator for vision applications. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5652–5661.

- Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Lin, C.; Hu, X.; Zhan, Y.; Hao, X. MobileNetV2 with Spatial Attention module for traffic congestion recognition in surveillance images. Expert Syst. Appl. 2024, 255, 14.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Li, X.; Wang, W.; Wu, L.; Chen, S.; Hu, X.; Li, J.; Tang, J.; Yang, J. Generalized focal loss: Learning qualified and distributed bounding boxes for dense object detection. In Advances in Neural Information Processing Systems 33 (NeurIPS 2020); Neural Information Processing Systems Foundation, Inc. (NeurIPS): La Jolla, CA, USA, 2020.

- Gao, F.; Jin, X.; Zhou, X.; Dong, J.; Du, Q. MSFMamba: Multi-Scale Feature Fusion State Space Model for Multi-Source Remote Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5504116.

- Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision mamba: Efficient visual representation learning with bidirectional state space model. arXiv 2024, arXiv:2401.09417.

- Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. VMamba: Visual State Space Model. arXiv 2024, arXiv:2401.10166.

- Huang, T.; Pei, X.; You, S.; Wang, F.; Qian, C.; Xu, C. LocalMamba: Visual State Space Model with Windowed Selective Scan. arXiv 2024, arXiv:2403.09338.

- Saif, A.F.M.S.; Prabuwono, A.S.; Mahayuddin, Z.R. Moment Feature Based Fast Feature Extraction Algorithm for Moving Object Detection Using Aerial Images. PLoS ONE 2015, 10, e0126212.

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable Convolutional Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 764–773.

- Du, B.; Huang, Y.; Chen, J.; Huang, D. Adaptive Sparse Convolutional Networks with Global Context Enhancement for Faster Object Detection on Drone Images. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 13435–13444.

- Wang, W.; Dai, J.; Chen, Z.; Huang, Z.; Li, Z.; Zhu, X.; Hu, X.; Lu, T.; Lu, L.; Li, H.; et al. InternImage: Exploring Large-Scale Vision Foundation Models with Deformable Convolutions. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14408–14419.

- Qi, Y.; He, Y.; Qi, X.; Zhang, Y.; Yang, G. Dynamic Snake Convolution Based on Topological Geometric Constraints for Tubular Structure Segmentation. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 4–6 October 2023; pp. 6047–6056.

- Zhang, X.; Song, Y.; Song, T.; Yang, D.; Ye, Y.; Zhou, J.; Zhang, L. LDConv: Linear deformable convolution for improving convolutional neural networks. Image Vis. Comput. 2024, 149, 105190.

- Wu, Y.; Mu, X.; Shi, H.; Hou, M. An object detection model AAPW-YOLO for UAV remote sensing images based on adaptive convolution and reconstructed feature fusion. Sci. Rep. 2025, 15, 16214.

- Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983.

- Razakarivony, S.; Jurie, F. Vehicle detection in aerial imagery: A small target detection benchmark. J. Vis. Commun. Image Represent. 2016, 34, 187–203.

- Colin, L. Unified Coincident Optical and Radar for Recognition (UNICORN) 2008 Dataset. 2019. Available online: https://github.com/AFRL-RY/data-unicorn-2008 (accessed on 5 July 2025).

- Gao, T.; Liu, Z.; Zhang, J.; Wu, G.; Chen, T. A task-balanced multiscale adaptive fusion network for object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

- Fu, C.Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. DSSD: Deconvolutional single shot detector. arXiv 2017, arXiv:1701.06659.

- Zhang, S.; Wen, L.; Bian, X.; Lei, Z.; Li, S.Z. Single-shot refinement neural network for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4203–4212.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2778–2788.

- Varghese, R.; Sambath, M. YOLOv8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-centric real-time object detectors. arXiv 2025, arXiv:2502.12524.

- Lei, M.; Li, S.; Wu, Y.; Hu, H.; Zhou, Y.; Zheng, X.; Gao, Y. YOLOv13: Real-Time Object Detection with Hypergraph-Enhanced Adaptive Visual Perception. arXiv 2025, arXiv:2506.17733.

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Wang, Y.; Han, K. Gold-YOLO: Efficient object detector via gather-and-distribute mechanism. Adv. Neural Inf. Process. Syst. 2023, 36, 51094–51112.

- Kang, M.; Ting, C.M.; Ting, F.F.; Phan, R.C.W. BGF-YOLO: Enhanced YOLOv8 with multiscale attentional feature fusion for brain tumor detection. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Daejeon, Republic of Korea, 23–27 September 2024; Springer Nature: Cham, Switzerland, 2024; pp. 35–45.

- Zhang, Y.; Ye, M.; Zhu, G.; Liu, Y.; Guo, P.; Yan, J. FFCA-YOLO for Small Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

- Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging properties in self-supervised vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 9650–9660.

- Xu, C.; Wang, J.; Yang, W.; Yu, H.; Yu, L.; Xia, G.S. RFLA: Gaussian receptive field based label assignment for tiny object detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022; pp. 526–543.

- Xiao, Y.; Xu, T.; Xin, Y.; Li, J. FBRT-YOLO: Faster and Better for Real-Time Aerial Image Detection. Proc. AAAI Conf. Artif. Intell. 2025, 39, 8673–8681.

| Dataset | Objects | Objects per Image | Object Size | Categories |
|---|---|---|---|---|
| DOTA-v1.0 | 263,427 | 3 | 20 × 20–300 × 300 | 15 |
| VEDAI | 3575 | 3 | 12 × 12–24 × 24 | 9 |
| USOD | 50,298 | 14.5 | 8 × 8–16 × 16 | 1 |

| Dataset | Branch Design | Params | FLOPs | mAP50 |
|---|---|---|---|---|
| USOD | C/4\*4 | 17.15 M | 73 | 89.60 |
|  | C/8\*8 | 16.88 M | 68 | 90.35 |
|  | C/4\*8/2 | 19.29 M | 80 | 89.98 |
|  | C/16\*8\*2 | 18.34 M | 76 | 89.77 |
| DOTA-v1.0 | C/4\*4 | 19.01 M | 81 | 70.07 |
|  | C/8\*8 | 18.59 M | 79 | 70.91 |
|  | C/4\*8/2 | 20.97 M | 89 | 69.98 |
|  | C/16\*8\*2 | 20.14 M | 84 | 70.35 |
| VEDAI | C/4\*4 | 18.83 M | 79 | 75.65 |
|  | C/8\*8 | 17.94 M | 77 | 77.94 |
|  | C/4\*8/2 | 19.50 M | 85 | 75.49 |
|  | C/16\*8\*2 | 18.99 M | 81 | 76.33 |

| Dataset | Gaussian Mask | Params | FLOPs | mAP50 |
|---|---|---|---|---|
| USOD | With | 16.88 M | 68 | 90.35 |
|  | Without | 16.81 M | 67 | 89.82 |
| DOTA-v1.0 | With | 18.59 M | 79 | 70.91 |
|  | Without | 18.53 M | 78 | 70.15 |
| VEDAI | With | 17.94 M | 77 | 77.94 |
|  | Without | 17.90 M | 76 | 76.88 |

| Dataset | Kernel Size k (1 × k, k × 1) | Params | FLOPs | mAP50 |
|---|---|---|---|---|
| USOD | 3 | 16.88 M | 68 | 90.35 |
|  | 5 | 17.52 M | 70 | 89.45 |
|  | 7 | 20.15 M | 75 | 89.98 |
|  | 11 | 26.44 M | 83 | 87.62 |
| DOTA-v1.0 | 3 | 18.59 M | 79 | 70.91 |
|  | 5 | 20.68 M | 83 | 69.89 |
|  | 7 | 25.42 M | 88 | 70.32 |
|  | 11 | 30.07 M | 96 | 69.40 |
| VEDAI | 3 | 17.94 M | 77 | 77.94 |
|  | 5 | 19.33 M | 80 | 76.85 |
|  | 7 | 23.82 M | 85 | 77.00 |
|  | 11 | 27.37 M | 91 | 76.13 |
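
The kernel-size ablation varies the length k of the paired 1 × k and k × 1 strip kernels. For one standard convolution the weight count is C_in · C_out · k_h · k_w, so a strip pair grows linearly in k while a square k × k kernel grows quadratically. The sketch below shows this arithmetic with a hypothetical width of 64 channels, which is not taken from the paper; the model-level totals in the table also depend on how many such branches the network contains, which the sketch does not model.

```python
def conv_weights(c_in, c_out, kh, kw, bias=False):
    """Weight count of a standard (ungrouped) 2D convolution layer."""
    return c_in * c_out * kh * kw + (c_out if bias else 0)


def strip_pair_weights(c_in, c_out, k):
    """One 1 x k branch plus one k x 1 branch: grows linearly in k."""
    return conv_weights(c_in, c_out, 1, k) + conv_weights(c_in, c_out, k, 1)


if __name__ == "__main__":
    C = 64  # hypothetical channel width, not taken from the paper
    for k in (3, 5, 7, 11):
        # columns: k, strip-pair weights, square k x k weights
        print(k, strip_pair_weights(C, C, k), conv_weights(C, C, k, k))
```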

| Structure | mAP50 | Speed (ms) | Memory (MB) | LDblock | SS2D | MLP |
|---|---|---|---|---|---|---|
| (a) | 88.87 | 3.5 | 1257 | × | × | × |
| (b) | 89.59 | 3.6 | 1289 | √ | × | × |
| (c) | 89.83 | 3.9 | 1365 | × | √ | √ |
| (d) | 90.35 | 4.2 | 1418 | √ | √ | √ |
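
The component ablation can be read as increments over the baseline structure (a). The short computation below uses only the values in the table and reports the change in mAP50, latency, and memory for each structure.

```python
# (mAP50, speed in ms, memory in MB), copied from the LDefMambablock ablation table
ablation = {
    "a": (88.87, 3.5, 1257),  # no LDblock, SS2D, or MLP
    "b": (89.59, 3.6, 1289),  # LDblock only
    "c": (89.83, 3.9, 1365),  # SS2D + MLP
    "d": (90.35, 4.2, 1418),  # full LDefMambablock
}


def deltas(table, base="a"):
    """Differences of each structure relative to the baseline row."""
    b_map, b_ms, b_mem = table[base]
    return {
        name: (round(m - b_map, 2), round(s - b_ms, 2), mem - b_mem)
        for name, (m, s, mem) in table.items()
    }


if __name__ == "__main__":
    for name, (d_map, d_ms, d_mem) in deltas(ablation).items():
        print(f"({name}) mAP50 {d_map:+.2f}, speed {d_ms:+.1f} ms, memory {d_mem:+d} MB")
```

On these numbers, the full block (d) adds 1.48 mAP50 points for 0.7 ms of latency and 161 MB of memory relative to (a).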

| Method | PL | BD | BR | GTF | SV | LV | SH | TC | BC | ST | SBF | RA | HA | SP | HC | mAP50 | mAP50:95 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DSSD | 75.12 | 71.00 | 43.11 | 61.11 | 60.37 | 64.17 | 73.70 | 76.29 | 72.20 | 71.84 | 52.39 | 49.16 | 63.26 | 59.86 | 50.30 | 62.93 | 45.32 |
| RefineDet | 75.21 | 68.27 | 41.10 | 59.69 | 65.17 | 69.79 | 73.83 | 76.56 | 72.27 | 70.63 | 49.37 | 56.06 | 60.01 | 57.62 | 51.14 | 63.11 | 46.54 |
| YOLOv3 | 67.67 | 64.25 | 36.68 | 65.72 | 59.99 | 64.17 | 69.45 | 70.28 | 65.68 | 66.91 | 48.67 | 50.02 | 57.98 | 60.35 | 58.61 | 60.43 | 44.21 |
| YOLOv5 | 80.13 | 77.12 | 48.74 | 66.69 | 65.94 | 69.74 | 79.26 | 81.90 | 77.85 | 77.44 | 57.97 | 54.83 | 68.83 | 65.48 | 55.81 | 68.52 | 47.58 |
| Gold-YOLO | 80.54 | 73.25 | 45.91 | 61.99 | 70.01 | 74.10 | 79.39 | 82.07 | 78.65 | 75.86 | 55.06 | 58.84 | 66.09 | 59.92 | 43.42 | 67.01 | 47.45 |
| BGF-YOLO | 72.67 | 66.53 | 37.83 | 57.87 | 61.98 | 67.86 | 71.91 | 74.78 | 71.65 | 69.62 | 45.62 | 44.18 | 59.84 | 51.93 | 47.15 | 68.17 | 47.81 |
| YOLOv8 | 76.05 | 72.66 | 44.96 | 74.14 | 68.19 | 72.50 | 77.76 | 78.53 | 73.93 | 75.24 | 56.90 | 58.24 | 66.26 | 68.65 | 66.92 | 68.73 | 47.99 |
| FFCA-YOLO | 80.43 | 78.37 | 52.75 | 65.41 | 73.43 | 77.94 | 78.53 | 81.49 | 78.00 | 80.17 | 60.20 | 57.32 | 62.21 | 73.16 | 67.33 | 70.12 | 49.88 |
| DINO | 79.70 | 75.46 | 47.67 | 65.62 | 64.85 | 68.67 | 78.19 | 80.82 | 76.81 | 76.34 | 56.88 | 53.76 | 67.76 | 64.41 | 55.04 | 67.47 | 47.69 |
| DNTR | 80.21 | 76.83 | 50.07 | 65.51 | 70.55 | 73.21 | 81.49 | 80.28 | 78.39 | 77.11 | 53.56 | 62.22 | 69.28 | 63.37 | 55.61 | 69.18 | 48.17 |
| RFLA | 73.98 | 71.65 | 48.08 | 67.44 | 67.09 | 71.79 | 74.69 | 77.15 | 72.73 | 73.55 | 54.28 | 56.16 | 69.42 | 67.61 | 64.29 | 67.39 | 47.32 |
| YOLOv11 | 78.38 | 73.36 | 51.17 | 71.46 | 69.06 | 74.94 | 77.62 | 79.77 | 76.26 | 77.25 | 61.75 | 60.82 | 67.83 | 63.82 | 64.14 | 69.84 | 48.57 |
| YOLOv12 | 75.94 | 75.08 | 53.42 | 65.99 | 70.21 | 72.92 | 72.10 | 77.33 | 73.28 | 69.91 | 60.86 | 58.62 | 66.10 | 68.29 | 66.33 | 68.43 | 47.78 |
| YOLOv13 | 72.11 | 76.13 | 51.87 | 73.86 | 69.67 | 75.28 | 76.88 | 80.29 | 75.07 | 77.31 | 60.18 | 61.09 | 66.38 | 70.25 | 64.42 | 70.05 | 49.63 |
| Mamba-YOLO | 76.98 | 74.51 | 51.02 | 70.44 | 70.05 | 74.64 | 77.53 | 80.10 | 75.67 | 76.51 | 57.27 | 59.16 | 73.36 | 70.53 | 67.24 | 70.33 | 49.76 |
| FBRT-YOLO | 80.69 | 77.55 | 53.11 | 74.69 | 70.23 | 76.94 | 79.42 | 79.27 | 78.29 | 61.07 | 63.37 | 69.40 | 68.26 | 59.35 | 67.01 | 70.58 | 49.93 |
| GMG-LDefmamba-YOLO | 81.54 | 78.37 | 53.91 | 75.47 | 70.81 | 76.83 | 79.79 | 82.25 | 80.60 | 79.28 | 54.77 | 55.93 | 69.94 | 66.70 | 57.47 | 70.91 | 50.39 |

PL: plane; BD: baseball diamond; BR: bridge; GTF: ground track field; SV: small vehicle; LV: large vehicle; SH: ship; TC: tennis court; BC: basketball court; ST: storage tank; SBF: soccer ball field; RA: roundabout; HA: harbor; SP: swimming pool; HC: helicopter.
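
For the two rows checked below, the mAP50 column of the DOTA-v1.0 table equals the unweighted mean of the 15 per-class AP50 values, which is the usual definition of mAP. Recomputing it from the per-class columns is a quick consistency check when transcribing such tables.

```python
# Per-class AP50 values copied from the DOTA-v1.0 table (column order PL ... HC)
dota_ap50 = {
    "YOLOv11": [78.38, 73.36, 51.17, 71.46, 69.06, 74.94, 77.62, 79.77,
                76.26, 77.25, 61.75, 60.82, 67.83, 63.82, 64.14],
    "GMG-LDefmamba-YOLO": [81.54, 78.37, 53.91, 75.47, 70.81, 76.83, 79.79, 82.25,
                           80.60, 79.28, 54.77, 55.93, 69.94, 66.70, 57.47],
}

# mAP50 = unweighted mean over classes, rounded to two decimals like the table
map50 = {name: round(sum(ap) / len(ap), 2) for name, ap in dota_ap50.items()}
print(map50)
```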

| Method | mAP50 (%) | Parameters (M) | FLOPs |
|---|---|---|---|
| YOLOv5 | 71.93 | 23.75 | 117 |
| Gold-YOLO | 75.02 | 28.76 | 122 |
| BGF-YOLO | 76.47 | 32.53 | 145 |
| YOLOv8 | 74.39 | 28.14 | 125 |
| FFCA-YOLO | 75.18 | 11.20 | 82 |
| YOLOv11 | 75.44 | 15.98 | 84 |
| YOLOv12 | 74.39 | 20.46 | 108 |
| YOLOv13 | 75.17 | 16.98 | 93 |
| Mamba-YOLO [21] | 76.98 | 41.81 | 151 |
| FBRT-YOLO [56] | 77.52 | 19.33 | 88 |
| GMG-LDefmamba-YOLO | 77.94 | 17.94 | 77 |
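
The relative cost of the proposed model in the comparison above can be expressed as percentage reductions in parameters and FLOPs against the two strongest baselines, Mamba-YOLO and FBRT-YOLO. The computation uses only the table values.

```python
def reduction(baseline, ours):
    """Percentage reduction of `ours` relative to `baseline`, rounded to 2 decimals."""
    return round(100 * (1 - ours / baseline), 2)


# (mAP50 %, parameters in M, FLOPs) copied from the comparison table
results = {
    "Mamba-YOLO": (76.98, 41.81, 151),
    "FBRT-YOLO": (77.52, 19.33, 88),
    "GMG-LDefmamba-YOLO": (77.94, 17.94, 77),
}

ours_map, ours_params, ours_flops = results["GMG-LDefmamba-YOLO"]
for name in ("Mamba-YOLO", "FBRT-YOLO"):
    b_map, b_params, b_flops = results[name]
    print(
        name,
        f"mAP50 {ours_map - b_map:+.2f}",
        f"params -{reduction(b_params, ours_params)}%",
        f"FLOPs -{reduction(b_flops, ours_flops)}%",
    )
```

On these numbers, GMG-LDefmamba-YOLO uses about 57% fewer parameters and 49% fewer FLOPs than Mamba-YOLO while scoring 0.96 points higher in mAP50.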

| Method | Precision | Recall | mAP50 | Speed (ms) | Memory (MB) |
|---|---|---|---|---|---|
| YOLOv5 | 85.80 | 79.41 | 83.95 | 5.6 | 1861 |
| Gold-YOLO | 88.77 | 82.10 | 87.95 | 5.2 | 1589 |
| BGF-YOLO | 88.18 | 82.56 | 88.24 | 5.3 | 1751 |
| YOLOv8 | 87.65 | 82.01 | 87.40 | 4.8 | 1668 |
| FFCA-YOLO | 90.04 | 83.98 | 89.80 | 4.6 | 1655 |
| YOLOv11 | 89.50 | 83.25 | 89.06 | 4.3 | 1446 |
| YOLOv12 | 88.77 | 82.01 | 88.52 | 4.7 | 1714 |
| YOLOv13 | 89.38 | 82.53 | 88.89 | 4.5 | 1533 |
| Mamba-YOLO | 90.09 | 83.96 | 89.60 | 4.6 | 1729 |
| FBRT-YOLO | 90.33 | 83.95 | 89.83 | 4.4 | 1509 |
| GMG-LDefmamba-YOLO | 90.35 | 84.03 | 90.28 | 4.2 | 1418 |
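
Precision, recall, and mAP50 all rest on matching predictions to ground-truth boxes at an IoU threshold of 0.5. The sketch below assumes greedy, score-ordered matching in the style of PASCAL VOC evaluation; it is not the evaluation code used by the authors, and it stops at precision and recall (mAP50 would further integrate precision over recall for each class). The example uses 8 × 8 boxes, the lower end of the USOD object sizes listed in the dataset table; the box coordinates are hypothetical.

```python
def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, thr=0.5):
    """Greedy matching: highest-scoring predictions claim the best unmatched GT with IoU >= thr."""
    matched, tp = set(), 0
    for _score, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best, best_iou = None, thr
        for idx, gt in enumerate(gts):
            if idx in matched:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best, best_iou = idx, overlap
        if best is not None:
            matched.add(best)
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


if __name__ == "__main__":
    gts = [(0, 0, 8, 8), (20, 20, 28, 28)]
    preds = [(0.9, (1, 1, 9, 9)), (0.8, (40, 40, 48, 48)), (0.7, (22, 22, 30, 30))]
    print(precision_recall(preds, gts))
```

A 1-pixel shift of an 8 × 8 box still gives IoU ≈ 0.62, but a 2-pixel shift drops it to ≈ 0.39, below the 0.5 threshold, which illustrates why localization precision matters so much for small objects.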

| Model | Small Targets (mAP50, %) | Medium Targets (mAP50, %) | Large Targets (mAP50, %) | Extra-Large Targets (mAP50, %) |
|---|---|---|---|---|
| YOLOv11 | 61.29 | 77.08 | 88.36 | 93.01 |
| Mamba-YOLO | 63.17 | 75.33 | 88.67 | 94.13 |
| YOLOv12 | 58.23 | 74.15 | 87.94 | 93.42 |
| YOLOv13 | 59.05 | 73.82 | 89.51 | 92.76 |
| GMG-LDefmamba-YOLO | 72.58 | 81.34 | 91.29 | 95.05 |
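
The size-stratified table can be summarized by the margin over the strongest baseline in each size bucket, computed below from the table values.

```python
# mAP50 per size bucket (small, medium, large, extra-large), copied from the table
baselines = {
    "YOLOv11": [61.29, 77.08, 88.36, 93.01],
    "Mamba-YOLO": [63.17, 75.33, 88.67, 94.13],
    "YOLOv12": [58.23, 74.15, 87.94, 93.42],
    "YOLOv13": [59.05, 73.82, 89.51, 92.76],
}
ours = [72.58, 81.34, 91.29, 95.05]  # GMG-LDefmamba-YOLO
buckets = ["small", "medium", "large", "extra-large"]

gains = {}
for i, bucket in enumerate(buckets):
    # strongest baseline in this bucket and the margin over it
    best_name, best_score = max(((m, s[i]) for m, s in baselines.items()), key=lambda t: t[1])
    gains[bucket] = (best_name, round(ours[i] - best_score, 2))

for bucket, (name, margin) in gains.items():
    print(f"{bucket}: +{margin} over {name}")
```

The margin narrows from 9.41 points on small targets to 0.92 points on extra-large ones, consistent with the method's focus on small objects.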

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, Y.; Yan, L.; Wang, J.; Liu, J.; Tang, X. GMG-LDefmamba-YOLO: An Improved YOLOv11 Algorithm Based on Gear-Shaped Convolution and a Linear-Deformable Mamba Model for Small Object Detection in UAV Images. Sensors 2025, 25, 6856. https://doi.org/10.3390/s25226856
